FROCC: Fast Random projection-based One-Class Classification

Abstract

We present Fast Random projection-based One-Class Classification (FROCC), an extremely efficient method for one-class classification. Our method is based on a simple idea of transforming the training data by projecting it onto a set of random unit vectors that are chosen uniformly and independently from the unit sphere, and bounding the regions based on separation of the data. FROCC can be naturally extended with kernels. We provide a new theoretical framework to prove that that FROCC generalizes well in the sense that it is stable and has low bias for some parameter settings. FROCC achieves up to 3.1 percent points better AUC ROC, with 1.2–67.8 speedup in training and test times over a range of state-of-the-art benchmarks including the SVM and the deep learning based models for the OCC task.

1 Introduction

One-class classification (OCC) has attracted a lot of attention over the years under various names such as novelty detection [30], anomaly or outlier detection [34, 29], concept learning [15], and so on. Unlike the classical binary (or multi-class) classification problem, the problem addressed in OCC is much simpler: to simply identify all instances of a class by using only a (small) set of examples from that class. Therefore it is applicable in settings where only “normal” data is known at training time, and the model is expected to accurately describe this normal data. After training, any data which deviates from this normality is treated as an anomaly or a novel item. Real-world applications include intrusion detection [11, 5], medical diagnoses [14], fraud detection [37], etc., where the requirement is to offer not only high precision, but also the ability to scale training and testing time as the volume of (training) data increases.

Primary approaches to OCC include one-class variants of support vector machines (SVM) [30], probabilistic density estimators describing the support of normal class [6, 27], and recently, deep-learning based methods such as auto-encoders (AE) and Generative Adversarial Networks (GAN) which try to learn the distribution of normal data samples during training [29, 24, 28]. However, almost all of these methods are either extremely slow to train or require significant hyper-parameter tuning or both.

We present an alternative approach based on the idea of using a collection of random projections onto a number of randomly chosen 1-dimensional subspaces (vectors) resulting in a simple yet surprisingly powerful one-class classifier. Random projections down to a single dimension or to a small number of dimensions have been used in the past to speed up approximate nearest neighbor search [17, 2, 13] and other problems that rely on the distance-preserving property guaranteed by the Johnson-Lindenstrauss Lemma but our problem space is different. We use random projections in an entirely novel fashion based on the following key insight: for the one-class classification problem, the preservation of distances is not important, we only require the class boundary to be preserved. The preservation of distances is a stronger property which implies that the class boundary is preserved, but the converse need not hold. We don’t need to preserve distances. All we need to do is “view” the training data from a “direction” that shows that the outlier is separated from the data.

Formalizing this intuition, we develop an algorithm for one-class classification called FROCC (Fast Random projection-based One-Class Classification) that essentially applies a simplified envelope method to random projections of training data to provide very strong classifier. FROCC also comes with a theoretically provable generalization property: the method is stable and has low bias.

At a high level, FROCC works as follows: given only positively labelled data points, we select a set of random vectors from the unit sphere, and project all the training data points onto these random vectors. We compactly represent all the training data projections along each vector using one (or more) intervals, based on the separation parameter . If a test data point’s projection with the random vector is within the interval spanned by all the training data projections, we say it is part of the normal class, otherwise it is an outlier. The parameter breaks the single interval used to represent the random vector projection into dense clusters with a density lower-bound , resulting in a collection of intervals along each vector.

The simplicity of the proposed model provides us with a massive advantage in terms of computational cost – during both training and testing – over all the existing methods ranging from SVMs to deep neural network models. We achieve 1–16 speedup for non-deep baselines like One Class SVM (OCSVM) [30] and Isolation forests. For state-of-the-art deep learning based approaches like Deep-SVDD and Auto Encoder we achieve a speedup to the tune of 45 – 68. At the same time, the average area under the ROC curve is 0.3 — 3.1 percent points better than the state-of-the-art baseline methods on six of the eleven benchmark datasets we used in our empirical study.

Like SVM our methods can be seamlessly extended to use kernels. These extensions can take advantage of kernels that may be suitable for certain data distributions, without changing the overall one-class decision framework of FROCC.

| Multi-modal Gaussian Data | |||

| ROC = 0.816 | ROC = 0.824 | ROC = 0.968 | ROC = 0.836 |

|

|

|

|

| Moon Data | |||

| ROC = 0.734 | ROC = 0.783 | ROC = 0.823 | ROC = 0.911 |

|

|

|

|

| (a) | (b) | (c) | (d) |

To understand the power of our method consider the one-class decision boundaries for various methods on two toy datasets visualized in Figure 1. We see that FROCC with computes a very desirable decision boundary for Multi-modal Gaussian data, a case which is known to be difficult for SVM to handle. In this case incorporating a standard Gaussian RBF kernel doesn’t improve the ROC for FROCC. In the case of the Moon-shaped data, however, this kernel is able to direct FROCC to better decision boundaries which reflect in better ROC scores.

Through a range of experiments we show that the in most cases FROCC is able to achieve high scores in ROC as well as Precision@n measures with 2–3 orders of improvement in speed.

Our contributions.

-

•

We define a powerful and efficient random-projection based one class classifier: FROCC.

-

•

We show theoretically that FROCC is a stable classification algorithm.

-

•

We perform an extensive experimental evaluation on several data sets that shows that our methods outperform traditional methods in both quality of results and speed, and that our methods are comparable in quality to recent neural methods while outperforming them in terms of speed by three orders of magnitude.

Organization.

We discuss related literature in Sec. 2. Formal definitions of our methods are presented in Sec. 3. In Section 4 we provide analysis of stability of our methods. The experiments setup and results of an extensive experimental comparison with several baselines are discussed in Sec. 5. We make some concluding remarks in Sec. 6.

2 Related Work

One Class Classification.

One class classification has been studied extensively so we don’t attempt to survey the literature in full detail, referring the reader instead to the survey of traditional methods by [16]. A more recent development is the application of deep learning-based methods to this problem. A partial survey of these methods is available in [28].

Unlike deep learning-based methods our work takes a more traditional geometric view of the one class problem in a manner similar to [34] and [30] but we do not rely on optimization as a subroutine like their methods do. This gives us an efficiency benefit over these methods in addition to the improvement we get in terms of quality of results. An additional efficiency advantage our FROCC methods have is that we do not need to preprocess to re-center the data like these two methods do.

Our methods operate in the paradigm where the training data consists of a fully labeled set of examples only from the normal class. We do not use even a small number of examples from the negative class as done in, e.g., [3]. Neither does our method use both labeled and unlabelled data as in the PAC model [35]. Our methods are randomized in nature which put them in the same category as probabilistic methods that attempts to fit a distribution on the positive data. One examples of such a method is learning using Parzen window density estimation [6]. We consider one such method as a baseline: [10] apply Minimum Co-variance Determinant Estimator [27] to find the region whose co-variance matrix has the lowest determinant, effectively creating an elliptical envelope around the positive data.

We also compare our algorithms to deep learning-based methods. Specifically we compare our methods to the Auto-encoder-based one class classification method proposed by [29].

Another baseline we compare against is the deep learning extension of Support Vector Data Description (SVDD) proposed by [28], where a neural network learns a transformation from input space to a useful space. In both cases our results are comparable and often better in terms of ROC while achieving a high speedup.

Random Projection-based Algorithms.

Random projection methods have been productive in nearest-neighbor contexts due to the fact that it is possible to preserve distances between a set of points if the number of random dimensions chosen is large enough, the so-called Johnson-Lindenstrauss property, c.f., e.g. locality sensitive hashing [12, 2]. Our methods do not look to preserve distances, only the boundary, and, besides, we are not necessarily looking for dimensionality reduction, which is key goal of the cited methods. A smaller number of projection dimensions may preserve distances between the training points but it does not make the task of discriminating outliers any easier. It may be possible that for the classifier to have good accuracy the dimensionality of the projection space be higher than the data space. As such our problem space is different from that of the space of problems that look for dimensionality reduction through random projection. [17] uses projections to a collection of randomly chosen vectors to take a simpler approach than locality sensitive hashing but his problem of interest is also approximate nearest neighbor search. Some research has also focused on using random projections to learn mixtures of Gaussians, starting with the work of [7] but again this is a different problem space.

The closest work to ours in flavor is that of [36] who attempts to find -half-spaces whose intersection fully contains a given positively labelled data set. The key difference here is that our methods work with the intersections of hyper-rectangles that are unbounded in one dimension and not half-spaces. While the solution in [36] can be a general convex body in the projection space, ours is specifically limited to cuboids. As such it is not possible to refine Vempala’s method by taking a parameter and subdividing intervals (c.f. Section 3), nor is it clear how it could be regularized for use in real-world applications.

3 Fast Random-Projection based OCC

Definition 1 (FROCC ()).

Assume that we are given a set of training points . Then, given an integer parameter , a real parameter and a kernel function , the -separated Fast Random-projection based One-Class Classifier FROCC , comprises, for each , ,

-

1.

A classifying direction that is a unit vector chosen uniformly at random from , the set of all unit vectors of , independent of all other classifying directions, and

-

2.

a set of intervals defined as follows: Let and is a shifted and scaled version of such that . Assume . Then each interval of has the property that it is of the form for some such that

-

(a)

for all such that , ,

-

(b)

whenever , and

-

(c)

whenever .

-

(a)

Given a query point , FROCC returns YES if for every , , lies within some interval of .

In the simplest setting the kernel function will be just the usual dot product associated with .

We show a small example in Figure 2. The green points are training points. Two classifying directions and are displayed. The test point has the property that lies in but since does not lie in , is said to be outside the class. Noting that the projections along of the two clusters of the training set are well separated, a choice of leads to splitting of the interval into and , thus correctly classifying to be an outlier.

In simple terms the intervals of have the property that the points of are densely scattered within each interval and there are wide gaps between the intervals that are empty of points of . The density of the intervals is lower bounded by and the width between successive intervals is lower bounded by . If we now look at Figure 2 we note that since the interval has length more than , becomes and so the projection of along is also classified as being outside the class.

Computing FROCC.

Algorithm 1 outlines the training procedure of FROCC. The function in Line 1 is any valid kernel, which defaults to inner product . Algorithm 2 shows how the interval tree is created for each classification direction. Unlike many optimization based learning methods, such as neural networks, our algorithm needs a single pass through the data.

The standard method for choosing uniformly random vectors in is to sample from a spherical -dimensional Gaussian centered at the origin and then normalizing it so that its length is 1. Folklore and a quick calculation suggests that independently picking random variables with distribution is good enough for this purpose. The spherical symmetry of the Gaussian distribution ensures that the random vector is uniformly picked from all possible directions.

Time complexity.

The time taken to compute the boundaries of the is for (assuming the kernel is dot product, which takes ). For additional factor of is required to create -separated intervals along each classifying direction.

4 Theoretical Analysis

In this section we state and prove certain properties of FROCC which states that FROCC is a stable classifier whose performance can be reliably tweaked by modifying the number of classification dimensions and separation parameter . In particular, we show that:

-

1.

Bias of FROCC decreases exponentially with the size of the training set if the training set is drawn from a spatially divisible distribution,

-

2.

Precision and model size of FROCC increase monotonically with and decrease monotonically with , and

-

3.

Probability of FROCC making an error decays exponentially with .

4.1 Stability of FROCC

Following the analysis of [4] we prove that FROCC generalizes in a certain sense. Specifically we will show that under some mild assumptions the bias of FROCC with goes to 0 at an exponential rate as the size of the training set increases. We first show a general theorem which should be viewed as a variant of Theorem 17 of [4] which is more suited to our situation since we address a one-class situation and our algorithm is randomized. Our Theorem 4 is of general interest and may have applications beyond the analysis of FROCC.

4.1.1 Terminology and notation

Let denote the set of all finite labelled point sets of where each point is labelled either 0 or 1. We assume we have a training set chosen randomly as follows: We are given an unknown distribution over and comprises samples with label 1 drawn i.i.d. from . For a point we use to denote and to denote .

Let be the set of all finite strings on some finite alphabet, and let us call the elements of decision strings. Further, let be the set of all classification functions from to . Then we say a classification map maps a training set and a decision string to a classification algorithm

With this setup we say that a randomized classification algorithm takes a training as input, picks a random decision string and returns the classification function which we denote . is a randomly chosen element of which we call a classifier. Given an , is fixed but we will use to denote the randomized classifier which has been given its training set but is yet to pick a random decision string. Now, given a , the loss function of a randomized classifier is given by

where the expectation is over the randomness of the decision string . In other words the loss is equal to .

We define the risk of as

where the expectation is over the random choice of and of the point . Note that already contains an expectation on the randomness associated with the decision string which is inherent in

The empirical risk of is defined

We introduce notation for two modifications of the training set . For we say that and where is chosen from independently of all previous choices. We now define a notion of stability.

The following notion of stability is a modification of the definition of stability for classification algorithms given by [4].

Definition 2 (0-1 uniform classification stability).

Suppose we have a randomized classification algorithm and a loss function whose co-domain is . Then is said to have 0-1 uniform classification stability if for all , for every such that , and for every

with probability at least .

If is and is for some constant , we say that is 0-1 uniform classification stable.

We prove that a 0-1 uniform classification stable algorithm has low bias and converges exponentially fast in the size of to its expected behavior. This is a general result that may be applicable to a large class of randomized classification algorithm. We also prove that FROCC is 0-1 uniform classification stable under mild conditions on the unknown distribution.

We also introduce a condition that the unknown distribution has to satisfy in order for us to show the desired stability property.

Definition 3 (Spatial divisibility).

Suppose that is a probability density function. For any set containing points let and be the two half-spaces defined by the hyper-plane containing all the points of . We say that is spatially divisible if for any set randomly chosen points and chosen independently according to density and any such that ,

Note that the set defines a hyper-plane in dimensions with probability 1 for any distribution defined on a non-empty volume of . Most standard distributions have the spatial divisibility property. For example, it is easy to see that a multi-variate Gaussian distribution has this property. If we pick a point uniformly at random from within a convex -polytope then too the spatial divisibility property is satisfied. To see this we note that the only way it could not be satisfied is if the points and are picked from the surface of the -polytope which is an event of probability 0.

4.1.2 Results

With the definition of stability given above we can prove the following theorem which shows that the deviation of the empirical risk from the expected risk tends to 0 exponentially as increases for a class of randomized algorithms that includes FROCC.

Theorem 4.

Suppose we have a randomized classification algorithm which has 0-1 uniform classification stability with , and suppose this algorithm is trained on a set drawn i.i.d. from a hidden distribution in such a way that the random choices made by are independent of , then, for any ,

| (1) |

Moreover if is 0-1 uniform classification stable then the RHS of (1) tends to 0 at a rate exponential in .

Proof.

As in [4] we will use McDiarmid’s inequality [22] to bound the deviation of the empirical risk from the risk. For completeness we state the result:

Lemma 5 ([22]).

Given a set of independent random variables and a function such that whenever , and , then

To use Lemma 5 we have to show that is a -Lipschitz function. However, this property is only true with a certain probability in our case. In particular we will show that for all , , with probability at least . To see this we note that for any and any (including ).

Using the definition of 0-1 uniform classification stability and the triangle inequality, we get that the RHS is bounded by with probability at least . If is the event that the RHS above is bounded by then we want to bound the probability of the event that this is true for all , , i.e., the event . Since , we can say that is at least .

Now, let be the event that and defined above be the event that , which is a function of the vector of elements chosen independently to form is -Lipschitz. We know that

and so we can say that

| (2) |

We have already argued that the second term on the RHS is upper bounded by so we turn to the first term. To apply Lemma 5 to the first term we note that depends on the random selection of which are selected independently of each other and, by assumption, independent of the random choices made by . Therefore the random collection is independent even when conditioned on which is determined purely by the random choices of and is true for every choice of set . Once this is noted then we can use McDiarmid’s inequality to bound the first term of Equation (2). We ignore by upper bounding it by 1. ∎

We now show that Theorem 4 applies to FROCC with if the unknown distribution is spatially divisible.

Proposition 6.

If the unknown distribution is spatially divisible then FROCC with is 0-1 uniform classification stable.

Proof.

For any , , where , we note that when the entire interval spanned by the projections in any direction is said to contain inliers, and so if lies strictly within the convex hull of then the removal of from does not affect the classifier. This is because the boundaries in any direction are determined by the points that are part of the convex hull. Let us denote this set . Therefore, since takes value at most 1, we deduce the following upper bound

To bound the probability that a point lies in the convex hull of we use an argument that Efron [9] attributes to Rényi and Sulanke [25, 26]. Given a region let us denote by the probability that a point drawn from the unknown distribution places a point in . Now given any points, say , we divide into two regions that lies on one side of the hyper-plane defined by and that lies on the other side. Then the probability that all lie in is equal to the probability that all the remaining points of are either in or in , i.e.,

From this, using the union bound we can say that

where is the set of all subsets of of size . This can further be bounded as

Since we have assumed that for all such that and , using the fact that , we can say that there is an such that and a constant such that

almost surely. Since the RHS goes to 0 exponentially fast in with probability 1, we can say that FROCC is 0-1 uniform classification stable when . ∎

Theorem 7.

For a training set chosen i.i.d. from a spatially divisible unknown distribution, if is set to 1 then converges in probability to exponentially fast in .

We feel that it should be possible to use the framework provided by Theorem 4 to show that FROCC is stable even when lies in , however this problem remains open.

4.2 Monotonicity properties of FROCC

We now show that the precision and the model size of FROCC is monotonic in the two parameters and but in opposite directions. The precision and model size increase as increases and decrease as increases. This result is formalized as Proposition 8 below and follows by a coupling argument.

We first introduce some terms. On the -algebra induced by the Borel sets of we define the probability measure induced by FROCC with parameters . Note that , FROCC is a random collection of -dimensional cubes in . These cubes are induced by taking the products of intervals in each of the dimensions. Each dimension has between 1 and intervals when trained on . We define the model size of FROCC to be the sum of the number of intervals, i.e., if there are intervals along dimension , , the model size is . We will use the letter to denote the model size of FROCC with parameters . Note that this is a random variable.

Proposition 8.

Given FROCC trained on a finite set , for , and any we have that

-

1.

whenever

-

(a)

,

-

(b)

, and,

-

(a)

-

2.

whenever

-

(a)

,

-

(b)

.

-

(a)

Proof.

For 1(a) and 1(b) we couple the probability distributions and by choosing random unit vectors, , and then constructing one instance of FROCC with separation and one instance of FROCC with separation using the same random unit vectors.

Now, consider some vector and let the be the projections of the training points on . Let the intervals formed by separated FROCC be and those of separated FROCC be . We know that . Clearly each is contained within some since the distance between subsequent points within is at most which is strictly less than . This proves 1(a) and also implies that which proves 1(b).

For 2(a) and 2(b) we couple the probability distributions and by choosing random unit vectors, , and then constructing two instances of FROCC. Then we choose an additional vectors and compute intervals corresponding to these and add them to the second instance of FROCC. 2(b) follows immediately. For 2(a) we note that any classified as YES by the second model must also be classified as YES by the first model since it is classified as YES along the first dimensions of the second model which are common to both models. ∎

From Proposition 8 it follows that expected model size and false positive probability vary inversely, i.e., the larger the model size the smaller the false positive probability and vice versa.

Proposition 8 is also consistent with the following upper bound that is easy to prove

Fact 9.

For FROCC constructed on training set ,

with probability 1, where .

The first inequality is trivial. The second follows by observing that along any dimension the gaps between intervals must be at least and the length of the interval between the maximum and minimum projection is at mode .

4.3 FROCC’s error decays exponentially with

Definition 10.

Given a finite set of points and any , we say that and . Now, given a point and , we say that the distance of from along the direction is defined as

Further we say that is a discriminator of from if . Finally we denote by the subset of unit vectors of that discriminate from .

FROCC has the property that the probability of misclassifying a point outside the class decreases exponentially with parameter . We state this formally. Given we denote the measure of by .

Proposition 11.

Given a set and a point with a set of non-empty measure such that for any , , the probability that FROCC projection dimension returns a Yes answer is

Hence for the classifier to given an erroneous Yes answer on with probability at most we need

| (3) |

projection dimensions.

5 Experiments and Results

We conducted a comprehensive set of experiments to evaluate the performance of FROCC on synthetic and real world benchmark datasets and compare them with various existing state of the art baselines. In this section we first present the details of the datasets, baselines, and metrics we used in our empirical evaluation, and then present the results of comparison.

5.1 Methods Compared

We compare FROCC with the following state of the art algorithms for OCC:

-

(i)

OC-SVM: One class SVM based on the formulation of Schölkopf et al [30],

-

(ii)

Isolation Forest: Isolation forest algorithm [21],

-

(iii)

AE: An auto encoder based anomaly detection algorithm based on Aggarwal [1],

-

(iv)

Deep SVDD: A deep neural-network based formulation of Ruff et al [28]. We used the implementation and the optimal model parameters provided by the authors111https://github.com/lukasruff/Deep-SVDD-PyTorch.

We implemented FROCC using Python 3.8 with numpy library. The IntervalTree subroutine (Algorithm 2) was optimized by quantizing the interval into discrete bins of sizes based on . This allows for a vectorized implementation of the subroutine. All experiments were conducted on a server with Intel Xeon (2.60GHz), and NVIDIA GeForce GTX 1080 Ti GPU. It is noteworthy that unlike deep learning based methods (i.e., AE and Deep SVDD), FROCC does not require GPU during training as well as testing.

For OCSVM and FROCC, for each dataset we chose the best performing (in ROC) kernel among the following: (a) linear (dot product), (b) radial basis function (RBF), (c) polynomial (degree=3) and, (d) sigmoid .

5.2 Datasets

We evaluate FROCC and the baseline methods on a set of well-known benchmark datasets summarized in Table 1. The set of benchmarks we have chosen covers a large spectrum of data characteristics such as the number of samples (from a few hundreds to millions) as well as the number of features (from a handful to tens of thousands). Thus it broadly covers the range of scenarios where OCC task appears.

To adapt the multi-class datasets for evaluation in OCC setting, we perform the processing similar to Ruff et al [28]: Given a dataset with multiple labels, we assign one class the positive label and all others the negative label. Specifically, we select the positive classes for i. MNIST: randomly from 0, 1 and 4, ii. CIFAR 10: airplane, automobile and deer and iii. CIFAR 100: beaver, motorcycle and fox. We then split the positive class instances into train and test sets. Using labelled data allows us to evaluate the performance using true labels. We do not use any labels for training. We double the test set size by adding equal number of instances from the negatively labeled instances. We train the OCC on the train set which consists of only positive examples. Metrics are calculated on test set which consists of equal number of positive and negative examples.

| ID | Name | Features | Training Samples |

|

||

|---|---|---|---|---|---|---|

| A1 | Diabetes [8] | 8 | 268 | 508 | ||

| A2⋆ | MAGIC Telescope [8] | 10 | 12332 | 20870 | ||

| A3⋆ | MiniBooNE [8] | 50 | 18250 | 30650 | ||

| A4 | Cardiotocography [8] | 35 | 147 | 294 | ||

| B1⋆ | SATLog-Vehicle [31] | 100 | 24623 | 36864 | ||

| B2⋆ | Kitsune Network Attack [23] | 115 | 3018972 | 3006490 | ||

| B3 | MNIST [20] | 784 | 3457 | 5390 | ||

| B4 | CIFAR-10 [18] | 3072 | 3000 | 5896 | ||

| B5 | CIFAR-100 [18] | 3072 | 300 | 594 | ||

| B6 | GTSRB [32] | 3072 | 284 | 562 | ||

| B7 | Omniglot [19] | 33075 | 250 | 400 |

5.3 Metrics

Classification Performance: For measuring the classification performance, we use the following two commonly used metrics:

-

•

ROC AUC to measure the classification performance of all methods, and

-

•

Adjusted Precision@n, that is, fraction of true outliers among the top n points in the test set, normalized across datasets [33].

Computational Scalability: For measuring the computational performance of all the methods, we use the speedup of their training and testing time over the fastest baseline method for the dataset. In other words, for an algorithm its speedup is calculated as the ratio of training (or testing) time of the fastest baseline method to the training (or testing) time of . We found Isolation forests to be the fastest across all scenarios, thus making it the default choice for computing the speedup metric over. All measurements are reported as an average over 5 runs for each method.

5.4 Results and Observations

| ID | OC-SVM | IsoForest | Deep SVDD | Auto Encoder | FROCC |

|---|---|---|---|---|---|

| A1 | 53.35 0.95 | 63.48 0.46 | 73.24 0.65 | 62.43 0.26 | 73.18 0.26 |

| A2⋆ | 68.12 0.85 | 77.09 0.84 | 61.39 0.87 | 75.96 0.44 | 72.30 0.33 |

| A3⋆ | 54.22 0.45 | 81.21 0.14 | 78.21 0.10 | 56.27 0.22 | 69.32 0.30 |

| A4 | 57.93 0.73 | 85.10 0.33 | 56.95 0.53 | 59.43 0.60 | 83.57 0.44 |

| B1⋆ | 73.51 0.41 | 83.19 0.44 | 68.36 0.64 | 76.42 0.76 | 85.14 0.74 |

| B2⋆ | 86.01 0.60 | 80.05 0.43 | 84.73 0.37 | 79.23 0.34 | 86.64 0.51 |

| B3 | 98.99 0.11 | 98.86 0.31 | 97.04 0.08 | 99.38 0.13 | 99.61 0.35 |

| B4 | 52.21 0.39 | 51.47 0.59 | 59.32 0.62 | 56.73 0.47 | 61.93 0.64 |

| B5 | 49.62 0.12 | 54.13 0.17 | 67.81 0.39 | 55.73 0.53 | 71.04 0.37 |

| B6 | 63.55 0.12 | 61.23 0.35 | 71.88 0.36 | 66.73 0.44 | 67.12 0.60 |

| B7 | 90.21 0.63 | 70.83 0.35 | 72.70 0.07 | 62.38 0.12 | 92.46 0.21 |

| ID | OC-SVM | IsoForest | Deep SVDD | Auto Encoder | FROCC |

|---|---|---|---|---|---|

| A1 | 59.72 0.95 | 61.64 0.05 | 72.74 0.13 | 63.54 0.14 | 72.83 0.27 |

| A2⋆ | 78.17 0.15 | 87.09 0.35 | 72.23 0.47 | 78.19 0.32 | 84.31 0.50 |

| A3⋆ | 61.05 0.56 | 79.77 0.73 | 78.32 0.43 | 66.17 0.48 | 71.91 0.36 |

| A4 | 63.21 0.94 | 82.83 0.18 | 60.03 0.35 | 57.73 0.54 | 85.91 0.33 |

| B1⋆ | 74.22 0.44 | 81.94 0.62 | 68.36 0.68 | 76.42 0.60 | 89.21 0.35 |

| B2⋆ | 81.35 0.60 | 87.24 0.65 | 81.21 0.63 | 72.94 0.56 | 83.21 0.65 |

| B3 | 92.34 0.47 | 91.65 0.65 | 93.25 0.45 | 74.32 0.37 | 99.80 0.58 |

| B4 | 50.02 0.75 | 51.42 0.86 | 57.32 0.09 | 51.63 0.18 | 57.92 0.41 |

| B5 | 50.08 0.32 | 50.13 0.43 | 55.21 0.64 | 53.40 0.77 | 55.62 0.38 |

| B6 | 61.63 0.01 | 59.13 0.11 | 69.38 0.30 | 64.71 0.16 | 62.46 0.36 |

| B7 | 88.32 0.10 | 72.83 0.26 | 82.70 0.51 | 65.90 0.47 | 79.34 0.40 |

| ID | OC-SVM | Deep SVDD | Auto Encoder | FROCC | ||||

|---|---|---|---|---|---|---|---|---|

| Train | Test | Train | Test | Train | Test | Train | Test | |

| A1 | 0.055 | 0.862 | 0.034 | 0.038 | 0.089 | 0.090 | 1.111(2) | 1.111 |

| A2⋆ | 0.057 | 0.847 | 0.032 | 0.038 | 0.085 | 0.088 | 1.333(3) | 1.075 |

| A3⋆ | 0.100 | 0.840 | 0.031 | 0.039 | 0.082 | 0.087 | 1.282(3) | 1.075 |

| A4 | 0.064 | 0.870 | 0.038 | 0.038 | 0.091 | 0.091 | 1.190(2) | 1.099 |

| B1⋆ | 0.074 | 0.855 | 0.032 | 0.038 | 0.087 | 0.089 | 1.075(1) | 1.087 |

| B2⋆ | 0.043 | 0.524 | 0.028 | 0.038 | 0.062 | 0.078 | 1.786(1) | 1.176 |

| B3 | 0.099 | 0.794 | 0.043 | 0.039 | 0.050 | 0.080 | 1.299(1) | 1.149 |

| B4 | 0.099 | 0.820 | 0.040 | 0.039 | 0.069 | 0.083 | 1.389(1) | 1.099 |

| B5 | 0.100 | 0.826 | 0.039 | 0.039 | 0.074 | 0.085 | 1.370(1) | 1.087 |

| B6 | 0.098 | 0.833 | 0.039 | 0.039 | 0.079 | 0.086 | 1.351(2) | 1.087 |

| B7 | 0.100 | 0.806 | 0.035 | 0.039 | 0.061 | 0.082 | 1.449(1) | 1.111 |

Table 2 compares the ROC scores of the baselines on the datasets. From the table we note that FROCC outperforms the baselines in six of the eleven datasets. Further, we note that for high dimensional datasets –i.e., datasets with 100 features (B1-B7), FROCC outperforms the baselines in six out of seven datasets while ranking second in the remaining one. This demonstrates the superiority of FROCC for high dimensional data which are known to be challenging for most OCC methods. Table 3 summarizes the Adjusted Precision@n (for a given dataset, is the number of positive examples in test data). Here too we observe that FROCC outperforms the baselines in six datasets. In high dimensional datasets, it is superior in four out of seven datasets, and is the second best in one. And even in datasets where the performance of FROCC is not the highest, it does not drop as low as traditional shallow baselines, which in two occasions fail to learn altogether.

Next, we turn our attention to the computational performance of all the methods summarized in Table 4, where we present the training and testing speedups. These results particularly highlight the scalability of FROCC over all other baselines, including the traditionally efficient shallow methods such as Isolation forest. Against deep learning based methods such as Deep SVDD and Auto Encoder, FROCC shows nearly 1-2 orders magnitude speedup. Overall, we notice that FROCC achieves up to speedup while beating the baselines, and up to speedup while ranking second or third.

5.5 Discussion of Results

Here we discuss the results from two broad perspectives:

ROC and Speed trade-offs:

The speedups show that FROCC is the fastest method. From Table 2, we see that in six datasets, FROCC outperforms the baseline as well. Of the cases it doesn’t outperform the baselines, it ranks second thrice out of five and in each of the these cases (datasets A1, A4 and B7) it is 35, 29 and 1.19 times faster than the best performing method respectively. Thus, depending on the application, the speed vs ROC trade-off may make FROCC the method of choice even when it doesn’t outperform other algorithms.

Scalability:

While we are faster than baselines in all datasets, large datasets like MiniBooNE (A3), SATLog-Vehicle (B1) and especially Kitsune network attack (B2) datasets – where FROCC is 68 times faster than the second best method – can be render the best performing deep baselines infeasible, leaving scalable methods like IsolationForest and FROCC as the only option. Given that FROCC consistently ranks among top three (1: 6 times, 2: 3 times, 3: 2 times), whereas IsolationForest’s rank varies inconsistently and go up to 5 (out of 5), FROCC is the algorithm of choice when scalability is a concern.

5.6 Parameter Sensitivity

In previous section we mentioned that the parameters were chosen based on grid search. In this section we present a study of sensitivity of FROCC to the parameters.

Sensitivity to dimensions.

From the analysis in Section 4, we know that the performance of FROCC improves with increasing number of classification dimensions. Figure 4 shows this variation for a few selected datasets.

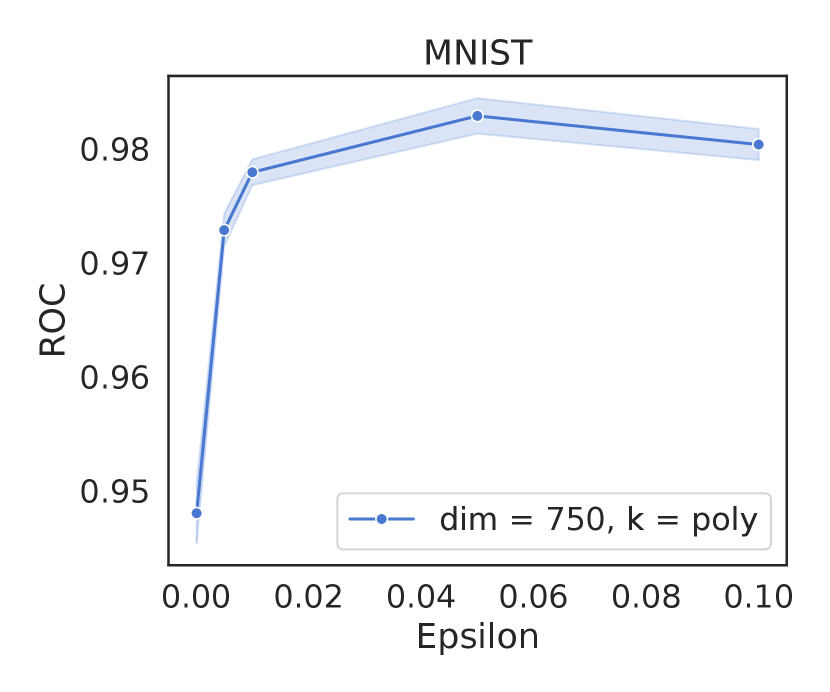

Sensitivity to .

The separation parameter is a dataset specific parameter and relates to the minimum distance of separation between positive examples and potential negative examples. In practice this comes out to be around the order of 0.1 or 0.01, as shown in Figure 5

6 Conclusion

In this paper, we presented a surprisingly simple and highly effective one-class classification strategy based on projecting data onto randomly selected vectors from the unit sphere. By representing these projections as a collections of intervals we developed FROCC. Our experiments over real and synthetic datasets reveal that despite its extreme simplicity –and the resulting computational efficiency– FROCC is not just better than the traditional methods for one-class classification but also better than the highly sophisticated (and expensive) models that use deep neural networks.

As part of our future work, we plan to explore extending the FROCC algorithms to the classification task under highly imbalanced data, and to application settings where high-performance OCC are crucial.

References

- Aggarwal [2015] Aggarwal CC (2015) Outlier analysis. In: Data mining, Springer, pp 237–263

- Ailon and Chazelle [2006] Ailon N, Chazelle B (2006) Approximate nearest neighbors and the fast Johnson-Lindenstrauss transform. In: ACM STOC, pp 557–563

- Borisyak et al [2019] Borisyak M, Ryzhikov A, Ustyuzhanin A, Derkach D, Ratnikov F, Mineeva O (2019) -class classification: an anomaly detection method for highly imbalanced or incomplete data sets, arXiv:1906.06096

- Bousquet and Elisseeff [2002] Bousquet O, Elisseeff A (2002) Stability and generalization. J Mach Learn Res 2:499–526

- Chandola et al [2009] Chandola V, Banerjee A, Kumar V (2009) Anomaly detection: A survey. ACM computing surveys (CSUR) 41(3):1–58

- Cohen et al [2008] Cohen G, Sax H, Geissbuhler A, et al (2008) Novelty detection using one-class parzen density estimator. an application to surveillance of nosocomial infections. In: MIE, pp 21–26

- Dasgupta [1999] Dasgupta S (1999) Learning mixtures of Gaussians. In: Proc. IEEE FOCS, pp 634–644

- Dua and Graff [2017] Dua D, Graff C (2017) UCI machine learning repository. URL http://archive.ics.uci.edu/ml

- Efron [1965] Efron B (1965) The convex hull of a random set of points. Biometrika 52(3-4):331–343

- Fauconnier and Haesbroeck [2009] Fauconnier C, Haesbroeck G (2009) Outliers detection with the minimum covariance determinant estimator in practice. Statistical Methodology 6(4):363–379

- Garcia-Teodoro et al [2009] Garcia-Teodoro P, Díaz-Verdejo JE, Maciá-Fernández G, Vázquez E (2009) Anomaly-based network intrusion detection: Techniques, systems and challenges. Computers & Security 28(1-2):18–28

- Indyk and Motwani [1998] Indyk P, Motwani R (1998) Approximate nearest neighbors: Towards removing the curse of dimensionality. In: Proc. ACM STOC, pp 604–613

- Indyk and Naor [2007] Indyk P, Naor A (2007) Nearest-neighbor-preserving embeddings. ACM Transactions on Algorithms (TALG) 3(3):31–es

- Irigoien et al [2014] Irigoien I, Sierra B, Arenas C (2014) Towards application of one-class classification methods to medical data. In: TheScientificWorldJournal

- Japkowicz et al [1995] Japkowicz N, Myers C, Gluck MA (1995) A novelty detection approach to classification. In: IJCAI, pp 518–523

- Khan and Madden [2009] Khan SS, Madden MG (2009) A survey of recent trends in one class classification. In: Irish conference on artificial intelligence and cognitive science, Springer, pp 188–197

- Kleinberg [1997] Kleinberg JM (1997) Two algorithms for nearest-neighbor search in high dimensions. In: Proc. 29th Annu. ACM STOC, pp 599–608

- Krizhevsky [2009] Krizhevsky A (2009) Learning multiple layers of features from tiny images

- Lake et al [2015] Lake BM, Salakhutdinov R, Tenenbaum JB (2015) Human-level concept learning through probabilistic program induction. Science 350(6266):1332–1338

- LeCun and Cortes [2005] LeCun Y, Cortes C (2005) The mnist database of handwritten digits

- Liu et al [2008] Liu FT, Ting KM, Zhou ZH (2008) Isolation forest. In: 2008 Eighth IEEE International Conference on Data Mining, IEEE, pp 413–422

- McDiarmid [1989] McDiarmid C (1989) On the method of bounded differences. In: Siemons J (ed) Surv. Comb., Cambridge University Press, London Mathematical Society Lecture Note Series

- Mirsky et al [2018] Mirsky Y, Doitshman T, Elovici Y, Shabtai A (2018) Kitsune: an ensemble of autoencoders for online network intrusion detection. Network and Distributed System Security Symposium (NDSS)

- Perera and Patel [2018] Perera P, Patel VM (2018) Learning deep features for one-class classification. IEEE Transactions on Image Processing 28:5450–5463

- Rényi and Sulanke [1963] Rényi A, Sulanke R (1963) Uber die convexe hulle von is zufallig gewahlten punkten i. Z Wahr Verw Geb 2:75–84

- Rényi and Sulanke [1964] Rényi A, Sulanke R (1964) Uber die convexe hulle von is zufallig gewahlten punkten ii. Z Wahr Verw Geb 3:138–147

- Rousseeuw and Driessen [1999] Rousseeuw PJ, Driessen KV (1999) A fast algorithm for the minimum covariance determinant estimator. Technometrics 41(3):212–223, 10.1080/00401706.1999.10485670

- Ruff et al [2018] Ruff L, Vandermeulen R, Goernitz N, Deecke L, Siddiqui SA, Binder A, Müller E, Kloft M (2018) Deep one-class classification. In: International conference on machine learning, pp 4393–4402

- Sakurada and Yairi [2014] Sakurada M, Yairi T (2014) Anomaly detection using autoencoders with nonlinear dimensionality reduction. In: Proceedings of the MLSDA 2014 2Nd Workshop on Machine Learning for Sensory Data Analysis, ACM, MLSDA’14, p 4:4–4:11

- Schölkopf et al [2000] Schölkopf B, Williamson R, Smola A, Shawe-Taylor J, Piatt J (2000) Support vector method for novelty detection. In: Advances in Neural Information Processing Systems, pp 582–588

- Siebert [1987] Siebert JP (1987) Vehicle recognition using rule based methods

- Stallkamp et al [2012] Stallkamp J, Schlipsing M, Salmen J, Igel C (2012) Man vs. computer: Benchmarking machine learning algorithms for traffic sign recognition. Neural Networks

- Swersky et al [2016] Swersky L, Marques HO, Sander J, Campello RJ, Zimek A (2016) On the evaluation of outlier detection and one-class classification methods. In: 2016 IEEE international conference on data science and advanced analytics (DSAA), IEEE, pp 1–10

- Tax and Duin [2004] Tax DM, Duin RP (2004) Support Vector Data Description. Machine Learning 54(1):45–66

- Valiant [1984] Valiant L (1984) Theory of the learnable. Commun ACM 27(11):1134–1142

- Vempala [2010] Vempala SS (2010) A random-sampling-based algorithm for learning intersections of halfspaces. J ACM 57(6):32:1–32:14

- Zheng et al [2018] Zheng P, Yuan S, Wu X, Li JY, Lu A (2018) One-class adversarial nets for fraud detection. In: AAAI