FreeMatch: Self-adaptive Thresholding for Semi-supervised Learning

Abstract

Semi-supervised Learning (SSL) has witnessed great success owing to the impressive performances brought by various methods based on pseudo labeling and consistency regularization. However, we argue that existing methods might fail to utilize the unlabeled data more effectively since they either use a pre-defined / fixed threshold or an ad-hoc threshold adjusting scheme, resulting in inferior performance and slow convergence. We first analyze a motivating example to obtain intuitions on the relationship between the desirable threshold and model’s learning status. Based on the analysis, we hence propose FreeMatch to adjust the confidence threshold in a self-adaptive manner according to the model’s learning status. We further introduce a self-adaptive class fairness regularization penalty to encourage the model for diverse predictions during the early training stage. Extensive experiments indicate the superiority of FreeMatch especially when the labeled data are extremely rare. FreeMatch achieves 5.78%, 13.59%, and 1.28% error rate reduction over the latest state-of-the-art method FlexMatch on CIFAR-10 with 1 label per class, STL-10 with 4 labels per class, and ImageNet with 100 labels per class, respectively. Moreover, FreeMatch can also boost the performance of imbalanced SSL. The codes can be found at https://github.com/microsoft/Semi-supervised-learning.111Note the results of this paper are obtained using TorchSSL (Zhang et al., 2021). We also provide codes and logs in USB (Wang et al., 2022).

1 Introduction

The superior performance of deep learning heavily relies on supervised training with sufficient labeled data (He et al., 2016; Vaswani et al., 2017; Dong et al., 2018). However, it remains laborious and expensive to obtain massive labeled data. To alleviate such reliance, semi-supervised learning (SSL) (Zhu, 2005; Zhu & Goldberg, 2009; Sohn et al., 2020; Rosenberg et al., 2005; Gong et al., 2016; Kervadec et al., 2019; Dai et al., 2017) is developed to improve the model’s generalization performance by exploiting a large volume of unlabeled data. Pseudo labeling (Lee et al., 2013; Xie et al., 2020b; McLachlan, 1975; Rizve et al., 2020) and consistency regularization (Bachman et al., 2014; Samuli & Timo, 2017; Sajjadi et al., 2016) are two popular paradigms designed for modern SSL. Recently, their combinations have shown promising results (Xie et al., 2020a; Sohn et al., 2020; Pham et al., 2021; Xu et al., 2021; Zhang et al., 2021). The key idea is that the model should produce similar predictions or the same pseudo labels for the same unlabeled data under different perturbations following the smoothness and low-density assumptions in SSL (Chapelle et al., 2006).

A potential limitation of these threshold-based methods is that they either need a fixed threshold (Xie et al., 2020a; Sohn et al., 2020; Zhang et al., 2021; Guo & Li, 2022) or an ad-hoc threshold adjusting scheme (Xu et al., 2021) to compute the loss with only confident unlabeled samples. Specifically, UDA (Xie et al., 2020a) and FixMatch (Sohn et al., 2020) retain a fixed high threshold to ensure the quality of pseudo labels. However, a fixed high threshold () could lead to low data utilization in the early training stages and ignore the different learning difficulties of different classes. Dash (Xu et al., 2021) and AdaMatch (Berthelot et al., 2022) propose to gradually grow the fixed global (dataset-specific) threshold as the training progresses. Although the utilization of unlabeled data is improved, their ad-hoc threshold adjusting scheme is arbitrarily controlled by hyper-parameters and thus disconnected from model’s learning process. FlexMatch (Zhang et al., 2021) demonstrates that different classes should have different local (class-specific) thresholds. While the local thresholds take into account the learning difficulties of different classes, they are still mapped from a pre-defined fixed global threshold. Adsh (Guo & Li, 2022) obtains adaptive thresholds from a pre-defined threshold for imbalanced Semi-supervised Learning by optimizing the the number of pseudo labels for each class. In a nutshell, these methods might be incapable or insufficient in terms of adjusting thresholds according to model’s learning progress, thus impeding the training process especially when labeled data is too scarce to provide adequate supervision.

For example, as shown in Figure 1(a), on the “two-moon” dataset with only 1 labeled sample for each class, the decision boundaries obtained by previous methods fail in the low-density assumption. Then, two questions naturally arise: 1) Is it necessary to determine the threshold based on the model learning status? and 2) How to adaptively adjust the threshold for best training efficiency?

In this paper, we first leverage a motivating example to demonstrate that different datasets and classes should determine their global (dataset-specific) and local (class-specific) thresholds based on the model’s learning status. Intuitively, we need a low global threshold to utilize more unlabeled data and speed up convergence at early training stages. As the prediction confidence increases, a higher global threshold is necessary to filter out wrong pseudo labels to alleviate the confirmation bias (Arazo et al., 2020). Besides, a local threshold should be defined on each class based on the model’s confidence about its predictions. The “two-moon” example in Figure 1(a) shows that the decision boundary is more reasonable when adjusting the thresholds based on the model’s learning status.

We then propose FreeMatch to adjust the thresholds in a self-adaptive manner according to learning status of each class (Guo et al., 2017). Specifically, FreeMatch uses the self-adaptive thresholding (SAT) technique to estimate both the global (dataset-specific) and local thresholds (class-specific) via the exponential moving average (EMA) of the unlabeled data confidence. To handle barely supervised settings (Sohn et al., 2020) more effectively, we further propose a class fairness objective to encourage the model to produce fair (i.e., diverse) predictions among all classes (as shown in Figure 1(b)). The overall training objective of FreeMatch maximizes the mutual information between model’s input and output (John Bridle, 1991), producing confident and diverse predictions on unlabeled data. Benchmark results validate its effectiveness. To conclude, our contributions are:

-

Using a motivating example, we discuss why thresholds should reflect the model’s learning status and provide some intuitions for designing a threshold-adjusting scheme.

-

We propose a novel approach, FreeMatch, which consists of Self-Adaptive Thresholding (SAT) and Self-Adaptive class Fairness regularization (SAF). SAT is a threshold-adjusting scheme that is free of setting thresholds manually and SAF encourages diverse predictions.

-

Extensive results demonstrate the superior performance of FreeMatch on various SSL benchmarks, especially when the number of labels is very limited (e.g, an error reduction of 5.78% on CIFAR-10 with 1 labeled sample per class).

2 A Motivating Example

In this section, we introduce a binary classification example to motivate our threshold-adjusting scheme. Despite the simplification of the actual model and training process, the analysis leads to some interesting implications and provides insight into how the thresholds should be set.

We aim to demonstrate the necessity of the self-adaptability and increased granularity in confidence thresholding for SSL. Inspired by (Yang & Xu, 2020), we consider a binary classification problem where the true distribution is an even mixture of two Gaussians (i.e., the label is equally likely to be positive () or negative ()). The input has the following conditional distribution:

| (1) |

We assume without loss of generality. Suppose that our classifier outputs confidence score , where is a positive parameter that reflects the model learning status and it is expected to gradually grow during training as the model becomes more confident. Note that is in fact the Bayes’ optimal linear decision boundary. We consider the scenario where a fixed threshold is used to generate pseudo labels. A sample is assigned pseudo label if and if . The pseudo label is (masked) if .

We then derive the following theorem to show the necessity of self-adaptive threshold:

Theorem 2.1.

For a binary classification problem as mentioned above, the pseudo label has the following probability distribution:

| (2) | ||||

where is the cumulative distribution function of a standard normal distribution. Moreover, increases as gets smaller.

The proof is offered in Appendix B. Theorem 2.1 has the following implications or interpretations:

-

(i)

Trivially, unlabeled data utilization (sampling rate) is directly controlled by threshold . As the confidence threshold gets larger, the unlabeled data utilization gets lower. At early training stages, adopting a high threshold may lead to low sampling rate and slow convergence since is still small.

-

(ii)

More interestingly, if . In fact, the larger is, the more imbalanced the pseudo labels are. This is potentially undesirable in the sense that we aim to tackle a balanced classification problem. Imbalanced pseudo labels may distort the decision boundary and lead to the so-called pseudo label bias. An easy remedy for this is to use class-specific thresholds and to assign pseudo labels.

-

(iii)

The sampling rate decreases as gets smaller. In other words, the more similar the two classes are, the more likely an unlabeled sample will be masked. As the two classes get more similar, there would be more samples mixed in feature space where the model is less confident about its predictions, thus a moderate threshold is needed to balance the sampling rate. Otherwise we may not have enough samples to train the model to classify the already difficult-to-classify classes.

The intuitions provided by Theorem 2.1 is that at the early training stages, should be low to encourage diverse pseudo labels, improve unlabeled data utilization and fasten convergence. However, as training continues and grows larger, a consistently low threshold will lead to unacceptable confirmation bias. Ideally, the threshold should increase along with to maintain a stable sampling rate throughout. Since different classes have different levels of intra-class diversity (different ) and some classes are harder to classify than others ( being small), a fine-grained class-specific threshold is desirable to encourage fair assignment of pseudo labels to different classes. The challenge is how to design a threshold adjusting scheme that takes all implications into account, which is the main contribution of this paper. We demonstrate our algorithm by plotting the average threshold trend and marginal pseudo label probability (i.e. sampling rate) during training in Figure 1(c) and 1(d). To sum up, we should determine global (dataset-specific) and local (class-specific) thresholds by estimating the learning status via predictions from the model. Then, we detail FreeMatch.

3 Preliminaries

In SSL, the training data consists of labeled and unlabeled data. Let and 222. be the labeled and unlabeled data, where and is their number of samples, respectively. The supervised loss for labeled data is:

| (3) |

where is the batch size, refers to cross-entropy loss, means the stochastic data augmentation function, and is the output probability from the model.

For unlabeled data, we focus on pseudo labeling using cross-entropy loss with confidence threshold for entropy minimization. We also adopt the “Weak and Strong Augmentation” strategy introduced by UDA (Xie et al., 2020a). Formally, the unsupervised training objective for unlabeled data is:

| (4) |

We use and to denote abbreviation of and , respectively. is the hard “one-hot” label converted from , is the ratio of unlabeled data batch size to labeled data batch size, and is the indicator function for confidence-based thresholding with being the threshold. The weak augmentation (i.e., random crop and flip) and strong augmentation (i.e., RandAugment Cubuk et al. (2020)) is represented by and respectively.

Besides, a fairness objective is usually introduced to encourage the model to predict each class at the same frequency, which usually has the form of (Andreas Krause, 2010), where is a uniform prior distribution. One may notice that using a uniform prior not only prevents the generalization to non-uniform data distribution but also ignores the fact that the underlying pseudo label distribution for a mini-batch may be imbalanced due to the sampling mechanism. The uniformity across a batch is essential for fair utilization of samples with per-class threshold, especially for early-training stages.

4 FreeMatch

4.1 Self-Adaptive Thresholding

We advocate that the key to determining thresholds for SSL is that thresholds should reflect the learning status. The learning effect can be estimated by the prediction confidence of a well-calibrated model (Guo et al., 2017). Hence, we propose self-adaptive thresholding (SAT) that automatically defines and adaptively adjusts the confidence threshold for each class by leveraging the model predictions during training. SAT first estimates a global threshold as the EMA of the confidence from the model. Then, SAT modulates the global threshold via the local class-specific thresholds estimated as the EMA of the probability for each class from the model. When training starts, the threshold is low to accept more possibly correct samples into training. As the model becomes more confident, the threshold adaptively increases to filter out possibly incorrect samples to reduce the confirmation bias. Thus, as shown in Figure 2, we define SAT as indicating the threshold for class at the -th iteration.

Self-adaptive Global Threshold

We design the global threshold based on the following two principles. First, the global threshold in SAT should be related to the model’s confidence on unlabeled data, reflecting the overall learning status. Moreover, the global threshold should stably increase during training to ensure incorrect pseudo labels are discarded. We set the global threshold as average confidence from the model on unlabeled data, where represents the -th time step (iteration). However, it would be time-consuming to compute the confidence for all unlabeled data at every time step or even every training epoch due to its large volume. Instead, we estimate the global confidence as the exponential moving average (EMA) of the confidence at each training time step. We initialize as where indicates the number of classes. The global threshold is defined and adjusted as:

| (5) |

where is the momentum decay of EMA.

Self-adaptive Local Threshold

The local threshold aims to modulate the global threshold in a class-specific fashion to account for the intra-class diversity and the possible class adjacency. We compute the expectation of the model’s predictions on each class to estimate the class-specific learning status:

| (6) |

where is the list containing all . Integrating the global and local thresholds, we obtain the final self-adaptive threshold as:

| (7) |

where is the Maximum Normalization (i.e., ). Finally, the unsupervised training objective at the -th iteration is:

| (8) |

4.2 Self-Adaptive Fairness

We include the class fairness objective as mentioned in Section 3 into FreeMatch to encourage the model to make diverse predictions for each class and thus produce a meaningful self-adaptive threshold, especially under the settings where labeled data are rare. Instead of using a uniform prior as in (Arazo et al., 2020), we use the EMA of model predictions from Eq. 6 as an estimate of the expectation of prediction distribution over unlabeled data. We optimize the cross-entropy of and over mini-batch as an estimate of . Considering that the underlying pseudo label distribution may not be uniform, we propose to modulate the fairness objective in a self-adaptive way, i.e., normalizing the expectation of probability by the histogram distribution of pseudo labels to counter the negative effect of imbalance as:

| (9) |

Similar to , we compute as:

| (10) |

The self-adaptive fairness (SAF) at the -th iteration is formulated as:

| (11) |

where . SAF encourages the expectation of the output probability for each mini-batch to be close to a marginal class distribution of the model, after normalized by histogram distribution. It helps the model produce diverse predictions especially for barely supervised settings (Sohn et al., 2020), thus converges faster and generalizes better. This is also showed in Figure 1(b).

The overall objective for FreeMatch at -th iteration is:

| (12) |

where and represents the loss weight for and respectively. With and , FreeMatch maximizes the mutual information between its outputs and inputs. We present the procedure of FreeMatch in Algorithm 1 of Appendix.

5 Experiments

5.1 Setup

We evaluate FreeMatch on common benchmarks: CIFAR-10/100 (Krizhevsky et al., 2009), SVHN (Netzer et al., 2011), STL-10 (Coates et al., 2011) and ImageNet (Deng et al., 2009). Following previous work (Sohn et al., 2020; Xu et al., 2021; Zhang et al., 2021; Oliver et al., 2018), we conduct experiments with varying amounts of labeled data. In addition to the commonly-chosen labeled amounts, following (Sohn et al., 2020), we further include the most challenging case of CIFAR-10: each class has only one labeled sample.

For fair comparison, we train and evaluate all methods using the unified codebase TorchSSL (Zhang et al., 2021) with the same backbones and hyperparameters. Concretely, we use Wide ResNet-28-2 (Zagoruyko & Komodakis, 2016) for CIFAR-10, Wide ResNet-28-8 for CIFAR-100, Wide ResNet-37-2 (Zhou et al., 2020) for STL-10, and ResNet-50 (He et al., 2016) for ImageNet. We use SGD with a momentum of as optimizer. The initial learning rate is with a cosine learning rate decay schedule as , where is the initial learning rate, is the current (total) training step and we set for all datasets. At the testing phase, we use an exponential moving average with the momentum of of the training model to conduct inference for all algorithms. The batch size of labeled data is except for ImageNet where we set . We use the same weight decay value, pre-defined threshold , unlabeled batch ratio and loss weights introduced for Pseudo-Label (Lee et al., 2013), model (Rasmus et al., 2015), Mean Teacher (Tarvainen & Valpola, 2017), VAT (Miyato et al., 2018), MixMatch (Berthelot et al., 2019b), ReMixMatch (Berthelot et al., 2019a), UDA (Xie et al., 2020a), FixMatch (Sohn et al., 2020), and FlexMatch (Zhang et al., 2021).

We implement MPL based on UDA as in (Pham et al., 2021), where we set temperature as 0.8 and as 10. We do not fine-tune MPL on labeled data as in (Pham et al., 2021) since we find fine-tuning will make the model overfit the labeled data especially with very few of them. For Dash, we use the same parameters as in (Xu et al., 2021) except we warm-up on labeled data for 2 epochs since too much warm-up will lead to the overfitting (i.e. 2,048 training iterations). For FreeMatch, we set for all experiments. Besides, we set for CIFAR-10 with 10 labels, CIFAR-100 with 400 labels, STL-10 with 40 labels, ImageNet with 100k labels, and all experiments for SVHN. For other settings, we use . For SVHN, we find that using a low threshold at early training stage impedes the model to cluster the unlabeled data, thus we adopt two training techniques for SVHN: (1) warm-up the model on only labeled data for 2 epochs as Dash; and (2) restrict the SAT within the range . The detailed hyperparameters are introduced in Appendix D. We train each algorithm 3 times using different random seeds and report the best error rates of all checkpoints (Zhang et al., 2021).

| Dataset | CIFAR-10 | CIFAR-100 | SVHN | STL-10 | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| # Label | 10 | 40 | 250 | 4000 | 400 | 2500 | 10000 | 40 | 250 | 1000 | 40 | 1000 |

| Model (Rasmus et al., 2015) | 79.181.11 | 74.341.76 | 46.241.29 | 13.130.59 | 86.960.80 | 58.800.66 | 36.650.00 | 67.480.95 | 13.301.12 | 7.160.11 | 74.310.85 | 32.780.40 |

| Pseudo Label (Lee et al., 2013) | 80.210.55 | 74.610.26 | 46.492.20 | 15.080.19 | 87.450.85 | 57.740.28 | 36.550.24 | 64.615.6 | 15.590.95 | 9.400.32 | 74.680.99 | 32.640.71 |

| VAT (Miyato et al., 2018) | 79.811.17 | 74.662.12 | 41.031.79 | 10.510.12 | 85.201.40 | 46.840.79 | 32.140.19 | 74.753.38 | 4.330.12 | 4.110.20 | 74.740.38 | 37.951.12 |

| MeanTeacher (Tarvainen & Valpola, 2017) | 76.370.44 | 70.091.60 | 37.463.30 | 8.100.21 | 81.111.44 | 45.171.06 | 31.750.23 | 36.093.98 | 3.450.03 | 3.270.05 | 71.721.45 | 33.901.37 |

| MixMatch (Berthelot et al., 2019b) | 65.767.06 | 36.196.48 | 13.630.59 | 6.660.26 | 67.590.66 | 39.760.48 | 27.780.29 | 30.608.39 | 4.560.32 | 3.690.37 | 54.930.96 | 21.700.68 |

| ReMixMatch (Berthelot et al., 2019a) | 20.777.48 | 9.881.03 | 6.300.05 | 4.840.01 | 42.751.05 | 26.030.35 | 20.020.27 | 24.049.13 | 6.360.22 | 5.160.31 | 32.126.24 | 6.740.14 |

| UDA (Xie et al., 2020a) | 34.5310.69 | 10.623.75 | 5.160.06 | 4.290.07 | 46.391.59 | 27.730.21 | 22.490.23 | 5.124.27 | 1.920.05 | 1.890.01 | 37.428.44 | 6.640.17 |

| FixMatch (Sohn et al., 2020) | 24.797.65 | 7.470.28 | 4.860.05 | 4.210.08 | 46.420.82 | 28.030.16 | 22.200.12 | 3.811.18 | 2.020.02 | 1.960.03 | 35.974.14 | 6.250.33 |

| Dash (Xu et al., 2021) | 27.2814.09 | 8.933.11 | 5.160.23 | 4.360.11 | 44.820.96 | 27.150.22 | 21.880.07 | 2.190.18 | 2.040.02 | 1.970.01 | 34.524.30 | 6.390.56 |

| MPL (Pham et al., 2021) | 23.556.01 | 6.620.91 | 5.760.24 | 4.550.04 | 46.261.84 | 27.710.19 | 21.740.09 | 9.338.02 | 2.290.04 | 2.280.02 | 35.764.83 | 6.660.00 |

| FlexMatch (Zhang et al., 2021) | 13.8512.04 | 4.970.06 | 4.980.09 | 4.190.01 | 39.941.62 | 26.490.20 | 21.900.15 | 8.193.20 | 6.592.29 | 6.720.30 | 29.154.16 | 5.770.18 |

| FreeMatch | 8.074.24 | 4.900.04 | 4.880.18 | 4.100.02 | 37.980.42 | 26.470.20 | 21.680.03 | 1.970.02 | 1.970.01 | 1.960.03 | 15.560.55 | 5.630.15 |

| Fully-Supervised | 4.620.05 | 19.300.09 | 2.130.01 | - | ||||||||

5.2 Quantitative Results

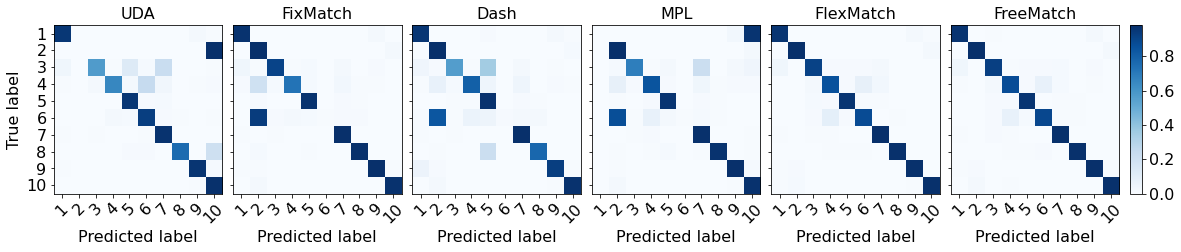

The Top-1 classification error rates of CIFAR-10/100, SVHN, and STL-10 are reported in Table 1. The results on ImageNet with 100 labels per class are in Table 2. We also provide detailed results on precision, recall, F1 score, and confusion matrix in Appendix E.3. These quantitative results demonstrate that FreeMatch achieves the best performance on CIFAR-10, STL-10, and ImageNet datasets, and it produces very close results on SVHN to the best competitor. On CIFAR-100, FreeMatch is better than ReMixMatch when there are 400 labels. The good performances of ReMixMatch on CIFAR-100 (2500) and CIFAR-100 (10000) are probably brought by the mix up (Zhang et al., 2017) technique and the self-supervised learning part. On ImageNet with 100k labels, FreeMatch significantly outperforms the latest counterpart FlexMatch by 1.28%333Following (Zhang et al., 2021), we train ImageNet for iterations like other datasets for a fair comparison. We use 4 Tesla V100 GPUs on ImageNet. . We also notice that FreeMatch exhibits fast computation in ImageNet from Table 2. Note that FlexMatch is much slower than FixMatch and FreeMatch because it needs to maintain a list that records whether each sample is clean, which needs heavy indexing computation budget on large datasets.

| Top-1 | Top-5 |

|

|||

|---|---|---|---|---|---|

| FixMatch | 43.66 | 21.80 | 0.4 | ||

| FlexMatch | 41.85 | 19.48 | 0.6 | ||

| FreeMatch | 40.57 | 18.77 | 0.4 |

Noteworthy is that, FreeMatch consistently outperforms other methods by a large margin on settings with extremely limited labeled data: 5.78% on CIFAR-10 with 10 labels, 1.96% on CIFAR-100 with 400 labels, and surprisingly 13.59% on STL-10 with 40 labels. STL-10 is a more realistic and challenging dataset compared to others, which consists of a large unlabeled set of 100k images. The significant improvements demonstrate the capability and potential of FreeMatch to be deployed in real-world applications.

5.3 Qualitative Analysis

We present some qualitative analysis: Why and how does FreeMatch work? What other benefits does it bring? We evaluate the class average threshold and average sampling rate on STL-10 (40) (i.e., 40 labeled samples on STL-10) of FreeMatch to demonstrate how it works aligning with our theoretical analysis. We record the threshold and compute the sampling rate for each batch during training. The sampling rate is calculated on unlabeled data as . We also plot the convergence speed in terms of accuracy and the confusion matrix to show the proposed component in FreeMatch helps improve performance. From Figure 3(a) and Figure 3(b), one can observe that the threshold and sampling rate change of FreeMatch is mostly consistent with our theoretical analysis. That is, at the early stage of training, the threshold of FreeMatch is relatively lower, compared to FlexMatch and FixMatch, resulting in higher unlabeled data utilization (sampling rate), which fastens the convergence. As the model learns better and becomes more confident, the threshold of FreeMatch increases to a high value to alleviate the confirmation bias, leading to stably high sampling rate. Correspondingly, the accuracy of FreeMatch increases vastly (as shown in Figure 3(c)) and resulting better class-wise accuracy (as shown in Figure 3(d)). Note that Dash fails to learn properly due to the employment of the high sampling rate until 100k iterations.

To further demonstrate the effectiveness of the class-specific threshold in FreeMatch, we present the t-SNE (Van der Maaten & Hinton, 2008) visualization of features of FlexMatch and FreeMatch on STL-10 (40) in Figure 5 of Section E.8. We exhibit the corresponding local threshold for each class. Interestingly, FlexMatch has a high threshold, i.e., pre-defined , for class and class , yet their feature variances are very large and are confused with other classes. This means the class-wise thresholds in FlexMatch cannot accurately reflect the learning status. In contrast, FreeMatch clusters most classes better. Besides, for the similar classes that are confused with each other, FreeMatch retains a higher average threshold than of FlexMatch, enabling to mask more wrong pseudo labels. We also study the pseudo label accuracy in Appendix E.9 and shows FreeMatch can reduce noise during training.

5.4 Ablation Study

Self-adaptive Threshold

We conduct experiments on the components of SAT in FreeMatch and compare to the components in FlexMatch (Zhang et al., 2021), FixMatch (Sohn et al., 2020), Class-Balanced Self-Training (CBST) (Zou et al., 2018), and Relative Threshold (RT) in AdaMatch (Berthelot et al., 2022). The ablation is conducted on CIFAR-10 (40 labels).

| Threshold | CIFAR-10 (40) |

|---|---|

| (FixMatch) | 7.470.28 |

| (FlexMatch) | 4.970.06 |

| 5.130.03 | |

| (Global) | 6.060.65 |

| 8.402.49 | |

| CBST | 16.652.90 |

| RT (AdaMatch) | 6.090.65 |

| SAT (Global and Local) | 4.920.04 |

As shown in Table 3, SAT achieves the best performance among all the threshold schemes. Self-adaptive global threshold and local threshold themselves also achieve comparable results, compared to the fixed threshold , demonstrating both local and global threshold proposed are good learning effect estimators. When using CPL to adjust , the result is worse than the fixed threshold and exhibits larger variance, indicating potential instability of CPL. AdaMatch (Berthelot et al., 2022) uses the RT, which can be viewed as a global threshold at -th iteration computed on the predictions of labeled data without EMA, whereas FreeMatch conducts computation of with EMA on unlabeled data that can better reflect the overall data distribution. For class-wise threshold, CBST (Zou et al., 2018) maintains a pre-defined sampling rate, which could be the reason for its bad performance since the sampling rate should be changed during training as we analyzed in Sec. 2. Note that we did not include in this ablation for a fair comparison. Ablation study in Appendix E.4 and E.5 on FixMatch and FlexMatch with different thresholds shows SAT serves to reduce hyperparameter-tuning computation or overall training time in the event of similar performance for an optimally selected threshold.

| Fairness | CIFAR-10 (10) |

|---|---|

| w/o fairness | 10.377.70 |

| 9.576.67 | |

| 12.075.23 | |

| DA (AdaMatch) | 32.941.83 |

| DA (ReMixMatch) | 11.068.21 |

| SAF | 8.074.24 |

Self-adaptive Fairness

As illustrated in Table 4, we also empirically study the effect of SAF on CIFAR-10 (10 labels). We study the original version of fairness objective as in (Arazo et al., 2020). Based on that, we study the operation of normalization probability by histograms and show that countering the effect of imbalanced underlying distribution indeed helps the model to learn and diverse better. One may notice that adding original fairness regularization alone already helps improve the performance. Whereas adding normalization operation in the log operation hurts the performance, suggesting the underlying batch data are indeed not uniformly distributed. We also evaluate Distribution Alignment (DA) for class fairness in ReMixMatch (Berthelot et al., 2019a) and AdaMatch (Berthelot et al., 2022), showing inferior results than SAF. A possible reason for the worse performance of DA (AdaMatch) is that it only uses labeled batch prediction as the target distribution which cannot reflect the true data distribution especially when labeled data is scarce and changing the target distribution to the ground truth uniform, i.e., DA (ReMixMatch), is better for the case with extremely limited labels. We also proved SAF can be easily plugged into FlexMatch and bring improvements in Appendix E.6. The EMA decay ablation and performances of imbalanced settings are in Appendix E.5 and Appendix E.7.

6 Related Work

To reduce confirmation bias (Arazo et al., 2020) in pseudo labeling, confidence-based thresholding techniques are proposed to ensure the quality of pseudo labels (Xie et al., 2020a; Sohn et al., 2020; Zhang et al., 2021; Xu et al., 2021), where only the unlabeled data whose confidences are higher than the threshold are retained. UDA (Xie et al., 2020a) and FixMatch (Sohn et al., 2020) keep the fixed pre-defined threshold during training. FlexMatch (Zhang et al., 2021) adjusts the pre-defined threshold in a class-specific fashion according to the per-class learning status estimated by the number of confident unlabeled data. A co-current work Adsh (Guo & Li, 2022) explicitly optimizes the number of pseudo labels for each class in the SSL objective to obtain adaptive thresholds for imbalanced Semi-supervised Learning. However, it still needs a user-predefined threshold. Dash (Xu et al., 2021) defines a threshold according to the loss on labeled data and adjusts the threshold according to a fixed mechanism. A more recent work, AdaMatch (Berthelot et al., 2022), aims to unify SSL and domain adaptation using a pre-defined threshold multiplying the average confidence of the labeled data batch to mask noisy pseudo labels. It needs a pre-defined threshold and ignores the unlabeled data distribution especially when labeled data is too rare to reflect the unlabeled data distribution. Besides, distribution alignment (Berthelot et al., 2019a; 2022) is also utilized in Adamatch to encourage fair predictions on unlabeled data. Previous methods might fail to choose meaningful thresholds due to ignorance of the relationship between the model learning status and thresholds. Chen et al. (2020); Kumar et al. (2020) try to understand self-training / thresholding from the theoretical perspective. A motivation example is used to derive implications for adjusting threshold according to learning status.

Except consistency regularization, entropy-based regularization is also used in SSL. Entropy minimization (Grandvalet et al., 2005) encourages the model to make confident predictions for all samples disregarding the actual class predicted. Maximization of expectation of entropy (Andreas Krause, 2010; Arazo et al., 2020) over all samples is also proposed to induce fairness to the model, enforcing the model to predict each class at the same frequency. But previous ones assume a uniform prior for underlying data distribution and also ignore the batch data distribution. Distribution alignment (Berthelot et al., 2019a) adjusts the pseudo labels according to labeled data distribution and the EMA of model predictions.

7 Conclusion

We proposed FreeMatch that utilizes self-adaptive thresholding and class-fairness regularization for SSL. FreeMatch outperforms strong competitors across a variety of SSL benchmarks, especially in the barely-supervised setting. We believe that confidence thresholding has more potential in SSL. A potential limitation is that the adaptiveness still originates from the heuristics of the model prediction, and we hope the efficacy of FreeMatch inspires more research for optimal thresholding.

References

- Andreas Krause (2010) Ryan Gomes Andreas Krause, Pietro Perona. Discriminative clustering by regularized information maximization. In Advances in neural information processing systems, 2010.

- Arazo et al. (2020) Eric Arazo, Diego Ortego, Paul Albert, Noel E O’Connor, and Kevin McGuinness. Pseudo-labeling and confirmation bias in deep semi-supervised learning. In 2020 International Joint Conference on Neural Networks (IJCNN), pp. 1–8. IEEE, 2020.

- Bachman et al. (2014) Philip Bachman, Ouais Alsharif, and Doina Precup. Learning with pseudo-ensembles. Advances in neural information processing systems, 27:3365–3373, 2014.

- Berthelot et al. (2019a) David Berthelot, Nicholas Carlini, Ekin D Cubuk, Alex Kurakin, Kihyuk Sohn, Han Zhang, and Colin Raffel. Remixmatch: Semi-supervised learning with distribution matching and augmentation anchoring. In International Conference on Learning Representations, 2019a.

- Berthelot et al. (2019b) David Berthelot, Nicholas Carlini, Ian Goodfellow, Nicolas Papernot, Avital Oliver, and Colin A Raffel. Mixmatch: A holistic approach to semi-supervised learning. Advances in Neural Information Processing Systems, 32, 2019b.

- Berthelot et al. (2022) David Berthelot, Rebecca Roelofs, Kihyuk Sohn, Nicholas Carlini, and Alex Kurakin. Adamatch: A unified approach to semi-supervised learning and domain adaptation. In International Conference on Learning Representations (ICLR), 2022.

- Carlini et al. (2019) Nicholas Carlini, Ulfar Erlingsson, and Nicolas Papernot. Distribution density, tails, and outliers in machine learning: Metrics and applications. arXiv preprint arXiv:1910.13427, 2019.

- Chapelle et al. (2006) Olivier Chapelle, Bernhard Schölkopf, and Alexander Zien (eds.). Semi-Supervised Learning. The MIT Press, 2006.

- Chen et al. (2020) Yining Chen, Colin Wei, Ananya Kumar, and Tengyu Ma. Self-training avoids using spurious features under domain shift. Advances in Neural Information Processing Systems, 33:21061–21071, 2020.

- Coates et al. (2011) Adam Coates, Andrew Ng, and Honglak Lee. An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the fourteenth international conference on artificial intelligence and statistics, pp. 215–223. JMLR Workshop and Conference Proceedings, 2011.

- Cubuk et al. (2020) Ekin D Cubuk, Barret Zoph, Jonathon Shlens, and Quoc V Le. Randaugment: Practical automated data augmentation with a reduced search space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pp. 702–703, 2020.

- Dai et al. (2017) Zihang Dai, Zhilin Yang, Fan Yang, William W Cohen, and Russ R Salakhutdinov. Good semi-supervised learning that requires a bad gan. Advances in neural information processing systems, 30, 2017.

- Deng et al. (2009) Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition, pp. 248–255. Ieee, 2009.

- Dong et al. (2018) Linhao Dong, Shuang Xu, and Bo Xu. Speech-transformer: a no-recurrence sequence-to-sequence model for speech recognition. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5884–5888. IEEE, 2018.

- Fan et al. (2021) Yue Fan, Dengxin Dai, and Bernt Schiele. Cossl: Co-learning of representation and classifier for imbalanced semi-supervised learning. arXiv preprint arXiv:2112.04564, 2021.

- Gong et al. (2016) Chen Gong, Dacheng Tao, Stephen J Maybank, Wei Liu, Guoliang Kang, and Jie Yang. Multi-modal curriculum learning for semi-supervised image classification. IEEE Transactions on Image Processing, 25(7):3249–3260, 2016.

- Grandvalet et al. (2005) Yves Grandvalet, Yoshua Bengio, et al. Semi-supervised learning by entropy minimization. volume 367, pp. 281–296, 2005.

- Guo et al. (2017) Chuan Guo, Geoff Pleiss, Yu Sun, and Kilian Q Weinberger. On calibration of modern neural networks. In International Conference on Machine Learning, pp. 1321–1330. PMLR, 2017.

- Guo & Li (2022) Lan-Zhe Guo and Yu-Feng Li. Class-imbalanced semi-supervised learning with adaptive thresholding. In International Conference on Machine Learning, pp. 8082–8094. PMLR, 2022.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778, 2016.

- John Bridle (1991) David MacKay John Bridle, Anthony Heading. Unsupervised classifiers, mutual information and ’phantom targets. 1991.

- Kervadec et al. (2019) Hoel Kervadec, Jose Dolz, Éric Granger, and Ismail Ben Ayed. Curriculum semi-supervised segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 568–576. Springer, 2019.

- Kim et al. (2020) Jaehyung Kim, Youngbum Hur, Sejun Park, Eunho Yang, Sung Ju Hwang, and Jinwoo Shin. Distribution aligning refinery of pseudo-label for imbalanced semi-supervised learning. Advances in Neural Information Processing Systems, 33:14567–14579, 2020.

- Kingma & Ba (2014) Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Krizhevsky et al. (2009) Alex Krizhevsky et al. Learning multiple layers of features from tiny images. 2009.

- Kumar et al. (2020) Ananya Kumar, Tengyu Ma, and Percy Liang. Understanding self-training for gradual domain adaptation. In International Conference on Machine Learning, pp. 5468–5479. PMLR, 2020.

- Lee et al. (2013) Dong-Hyun Lee et al. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Workshop on challenges in representation learning, ICML, volume 3, pp. 896, 2013.

- Lee et al. (2021) Hyuck Lee, Seungjae Shin, and Heeyoung Kim. Abc: Auxiliary balanced classifier for class-imbalanced semi-supervised learning. Advances in Neural Information Processing Systems, 34, 2021.

- McLachlan (1975) Geoffrey J McLachlan. Iterative reclassification procedure for constructing an asymptotically optimal rule of allocation in discriminant analysis. Journal of the American Statistical Association, 70(350):365–369, 1975.

- Miyato et al. (2018) Takeru Miyato, Shin-ichi Maeda, Masanori Koyama, and Shin Ishii. Virtual adversarial training: a regularization method for supervised and semi-supervised learning. IEEE transactions on pattern analysis and machine intelligence, 41(8):1979–1993, 2018.

- Netzer et al. (2011) Yuval Netzer, Tao Wang, Adam Coates, Alessandro Bissacco, Bo Wu, and Andrew Y Ng. Reading digits in natural images with unsupervised feature learning. 2011.

- Oliver et al. (2018) Avital Oliver, Augustus Odena, Colin A Raffel, Ekin Dogus Cubuk, and Ian Goodfellow. Realistic evaluation of deep semi-supervised learning algorithms. Advances in neural information processing systems, 31, 2018.

- Pham et al. (2021) Hieu Pham, Zihang Dai, Qizhe Xie, and Quoc V Le. Meta pseudo labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 11557–11568, 2021.

- Rasmus et al. (2015) Antti Rasmus, Mathias Berglund, Mikko Honkala, Harri Valpola, and Tapani Raiko. Semi-supervised learning with ladder networks. Advances in Neural Information Processing Systems, 28:3546–3554, 2015.

- Rizve et al. (2020) Mamshad Nayeem Rizve, Kevin Duarte, Yogesh S Rawat, and Mubarak Shah. In defense of pseudo-labeling: An uncertainty-aware pseudo-label selection framework for semi-supervised learning. In International Conference on Learning Representations, 2020.

- Rosenberg et al. (2005) Chuck Rosenberg, Martial Hebert, and Henry Schneiderman. Semi-supervised self-training of object detection models. 2005.

- Sajjadi et al. (2016) Mehdi Sajjadi, Mehran Javanmardi, and Tolga Tasdizen. Regularization with stochastic transformations and perturbations for deep semi-supervised learning. Advances in neural information processing systems, 29:1163–1171, 2016.

- Samuli & Timo (2017) Laine Samuli and Aila Timo. Temporal ensembling for semi-supervised learning. In International Conference on Learning Representations (ICLR), volume 4, pp. 6, 2017.

- Sohn et al. (2020) Kihyuk Sohn, David Berthelot, Nicholas Carlini, Zizhao Zhang, Han Zhang, Colin A Raffel, Ekin Dogus Cubuk, Alexey Kurakin, and Chun-Liang Li. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. Advances in Neural Information Processing Systems, 33, 2020.

- Tarvainen & Valpola (2017) Antti Tarvainen and Harri Valpola. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the 31st International Conference on Neural Information Processing Systems, pp. 1195–1204, 2017.

- Van der Maaten & Hinton (2008) Laurens Van der Maaten and Geoffrey Hinton. Visualizing data using t-sne. Journal of machine learning research, 9(11), 2008.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- Wang et al. (2022) Yidong Wang, Hao Chen, Yue Fan, SUN Wang, Ran Tao, Wenxin Hou, Renjie Wang, Linyi Yang, Zhi Zhou, Lan-Zhe Guo, et al. Usb: A unified semi-supervised learning benchmark for classification. In Thirty-sixth Conference on Neural Information Processing Systems Datasets and Benchmarks Track, 2022.

- Wei et al. (2021) Chen Wei, Kihyuk Sohn, Clayton Mellina, Alan Yuille, and Fan Yang. Crest: A class-rebalancing self-training framework for imbalanced semi-supervised learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10857–10866, 2021.

- Xie et al. (2020a) Qizhe Xie, Zihang Dai, Eduard Hovy, Thang Luong, and Quoc Le. Unsupervised data augmentation for consistency training. Advances in Neural Information Processing Systems, 33, 2020a.

- Xie et al. (2020b) Qizhe Xie, Minh-Thang Luong, Eduard Hovy, and Quoc V Le. Self-training with noisy student improves imagenet classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10687–10698, 2020b.

- Xu et al. (2021) Yi Xu, Lei Shang, Jinxing Ye, Qi Qian, Yu-Feng Li, Baigui Sun, Hao Li, and Rong Jin. Dash: Semi-supervised learning with dynamic thresholding. In International Conference on Machine Learning, pp. 11525–11536. PMLR, 2021.

- Yang & Xu (2020) Yuzhe Yang and Zhi Xu. Rethinking the value of labels for improving class-imbalanced learning. In NeurIPS, 2020.

- Zagoruyko & Komodakis (2016) Sergey Zagoruyko and Nikos Komodakis. Wide residual networks. In British Machine Vision Conference 2016. British Machine Vision Association, 2016.

- Zhang et al. (2021) Bowen Zhang, Yidong Wang, Wenxin Hou, Hao Wu, Jindong Wang, Manabu Okumura, and Takahiro Shinozaki. Flexmatch: Boosting semi-supervised learning with curriculum pseudo labeling. Advances in Neural Information Processing Systems, 34, 2021.

- Zhang et al. (2017) Hongyi Zhang, Moustapha Cisse, Yann N Dauphin, and David Lopez-Paz. mixup: Beyond empirical risk minimization. arXiv preprint arXiv:1710.09412, 2017.

- Zhou et al. (2020) Tianyi Zhou, Shengjie Wang, and Jeff Bilmes. Time-consistent self-supervision for semi-supervised learning. In International Conference on Machine Learning, pp. 11523–11533. PMLR, 2020.

- Zhu & Goldberg (2009) Xiaojin Zhu and Andrew B Goldberg. Introduction to semi-supervised learning. Synthesis lectures on artificial intelligence and machine learning, 3(1):1–130, 2009.

- Zhu (2005) Xiaojin Jerry Zhu. Semi-supervised learning literature survey. 2005.

- Zou et al. (2018) Yang Zou, Zhiding Yu, BVK Kumar, and Jinsong Wang. Unsupervised domain adaptation for semantic segmentation via class-balanced self-training. In Proceedings of the European conference on computer vision (ECCV), pp. 289–305, 2018.

Appendix A Experimental details of the “two-moon” dataset.

We generate only two labeled data points (one label per each class, denoted by black dot and round circle) and 1,000 unlabeled data points (in gray) in 2-D space. We train a 3-layer MLP with 64 neurons in each layer and ReLU activation for 2,000 iterations. The red samples indicate the different samples whose confidence values are above the threshold of FreeMatch but below that of FixMatch. The sampling rate is computed on unlabeled data as . Results are averaged 5 times.

Appendix B Proof of Theorem 2.1

Theorem 2.1 For a binary classification problem as mentioned above, the pseudo label has the following probability distribution:

| (13) | ||||

where is the cumulative distribution function of a standard normal distribution. Moreover, increases as gets smaller.

Proof.

A sample will be assigned pseudo label 1 if

which is equivalent to

Likewise, will be assigned pseudo label -1 if

which is equivalent to

If we integrate over , we arrive at the following conditional probabilities:

Recall that , therefore

Now, let’s use to denote , to show that increases as gets smaller, we only need to show gets bigger. We write as

where are positive constants. We futher only need to show that is monotone increasing on . Take the derivative of , we have

where is the probability density function of a standard normal distribution. Since , we have , and the proof is complete.

∎

Appendix C Algorithm

We present the pseudo algorithm of FreeMatch. Compared to FixMatch, each training step involves updating the global threshold and local threshold from the unlabeled data batch, and computing corresponding histograms. FreeMatchs introduce a very trivial computation budget compared to FixMatch, demonstrated also in our main paper.

Appendix D Hyperparameter setting

For reproduction, we show the detailed hyperparameter setting for FreeMatch in Table 5 and 6, for algorithm-dependent and algorithm-independent hyperparameters, respectively.

Algorithm FreeMatch Unlabeled Data to Labeled Data Ratio (CIFAR-10/100, STL-10, SVHN) 7 Unlabeled Data to Labeled Data Ratio (ImageNet) 1 Loss weight for all experiments 1 Loss weight for CIFAR-10 (10), CIFAR-100 (400), STL-10 (40), ImageNet (100k), SVHN 0.01 Loss weight for others 0.05 Thresholding EMA decay for all experiments 0.999

Dataset CIFAR-10 CIFAR-100 STL-10 SVHN ImageNet Model WRN-28-2 WRN-28-8 WRN-37-2 WRN-28-2 ResNet-50 Weight decay 5e-4 1e-3 5e-4 5e-4 3e-4 Batch size 64 128 Learning rate 0.03 SGD momentum 0.9 EMA decay 0.999

Note that for ImageNet experiments, we used the same learning rate, optimizer scheme, and training iterations as other experiments, and a batch size of 128 is adopted, whereas, in FixMatch, a large batch size of 1024 and a different optimizer is used. From our experiments, we found that training ImageNet with only is not enough, and the model starts converging at the end of training. Longer training iterations on ImageNet will be explored in the future. Single NVIDIA V100 is used for training on CIFAR-10, CIFAR-100, SVHN and STL-10. It costs about 2 days to train on CIFAR-10 and SVHN. 10 days are needed for the training on CIFAR-100 and STL-10.

Appendix E Extensive Experiment Details and Results

We present extensive experiment details and results as complementary to the experiments in the main paper.

E.1 Significant Tests

We did significance test using the Friedman test. We choose the top 7 algorithms on 4 datasets (i.e., ). Then, we compute the F value as , which is clearly larger than the thresholds and . This test indicates that there are significant differences between all algorithms.

To further show our significance, we report the average error rates for each dataset in Table 7. We can see FreeMatch outperforms most SSL algorithms significantly.

| CIFAR-10 | CIFAR-100 | SVHN | STL-10 | Total Average | |

|---|---|---|---|---|---|

| Model | 53.22 | 60.80 | 29.31 | 53.55 | 49.19 |

| Pseudo Label | 54.10 | 60.58 | 29.87 | 53.66 | 49.59 |

| VAT | 51.50 | 54.73 | 27.73 | 56.35 | 47.17 |

| MeanTeacher | 48.01 | 52.68 | 14.27 | 52.81 | 41.54 |

| MixMatch | 30.56 | 45.04 | 12.95 | 38.32 | 31.07 |

| ReMixMatch | 10.45 | 29.60 | 11.85 | 19.43 | 17.08 |

| UDA | 13.65 | 32.20 | 2.98 | 22.03 | 17.02 |

| FixMatch | 10.33 | 32.22 | 2.60 | 21.11 | 15.67 |

| Dash | 11.43 | 31.28 | 2.07 | 20.46 | 15.56 |

| MPL | 10.12 | 31.90 | 4.63 | 21.21 | 16.04 |

| FlexMatch | 7.00 | 29.44 | 7.17 | 17.46 | 14.40 |

| FreeMatch | 5.49 | 28.71 | 1.97 | 10.60 | 11.26 |

E.2 CIFAR-10 (10) Labeled Data

Following (Sohn et al., 2020), we investigate the limitations of SSL algorithms by giving only one labeled training sample per class. The selected 3 labeled training sets are visualized in Figure 4, which are obtained by (Sohn et al., 2020) using ordering mechanism (Carlini et al., 2019).

E.3 Detailed Results

To comprehensively evaluate the performance of all methods in a classification setting, we further report the precision, recall, f1 score, and AUC (area under curve) results of CIFAR-10 with the same 10 labels, CIFAR-100 with 400 labels, SVHN with 40 labels, and STL-10 with 40 labels. As shown in Table 8 and 9, FreeMatch also has the best performance on precision, recall, F1 score, and AUC in addition to the top1 error rates reported in the main paper.

Datasets CIFAR-10 (10) CIFAR-100 (400) Criteria Precision Recall F1 Score AUC Precision Recall F1 Score AUC UDA 0.5304 0.5121 0.4754 0.8258 0.5813 0.5484 0.5087 0.9475 FixMatch 0.6436 0.6622 0.6110 0.8934 0.5574 0.5430 0.4946 0.9363 Dash 0.6409 0.5410 0.4955 0.8458 0.5833 0.5649 0.5215 0.9456 MPL 0.6286 0.6857 0.6178 0.7993 0.5799 0.5606 0.5193 0.9316 FlexMatch 0.6769 0.6861 0.6780 0.9126 0.6135 0.6193 0.6107 0.9675 FreeMatch 0.8619 0.8593 0.8523 0.9843 0.6243 0.6261 0.6137 0.9692

Datasets SVHN (40) STL-10 (40) Criteria Precision Recall F1 Score AUC Precision Recall F1 Score AUC UDA 0.9783 0.9777 0.9780 0.9977 0.6385 0.5319 0.4765 0.8581 FixMatch 0.9731 0.9706 0.9716 0.9962 0.6590 0.5830 0.5405 0.8862 Dash 0.9782 0.9778 0.9780 0.9978 0.8117 0.6020 0.5448 0.8827 MPL 0.9564 0.9513 0.9512 0.9844 0.6191 0.5740 0.4999 0.8529 FlexMatch 0.9566 0.9691 0.9625 0.9975 0.6403 0.6755 0.6518 0.9249 FreeMatch 0.9783 0.9800 0.9791 0.9979 0.8489 0.8439 0.8354 0.9792

E.4 Ablation of pre-defined thresholds on FixMatch and FlexMatch

As shown in Table 12, the performance of FixMatch and FlexMatch is quite sensitive to the changes of the pre-defined threshold .

| FixMatch | FlexMatch | |

|---|---|---|

| 0.25 | 11.76±0.60 | 18.84±0.36 |

| 0.5 | 16.29±0.31 | 14.16±0.21 |

| 0.75 | 15.61±0.23 | 6.08±0.17 |

| 0.95 | 7.47±0.28 | 4.97±0.06 |

| 0.98 | 8.01±0.91 | 5.40±0.11 |

E.5 Ablation on EMA decay on CIFAR-10 (40)

We provide the ablation study on EMA decay parameter in Equation 5 and Equation 6. One can observe that different decay produces the close results on CIFAR-10 with 40 labels, indicating that FreeMatch is not sensitive to this hyper-parameter. A large is not encouraged since it could impede the update of global / local thresholds.

| Thresholding EMA decay | CIFAR-10 (40) |

|---|---|

| 0.9 | 4.940.06 |

| 0.99 | 4.920.08 |

| 0.999 | 4.900.04 |

| 0.9999 | 5.030.07 |

E.6 Ablation of SAF on FlexMatch and FreeMatch

In Table 13, we present the comparison of different class fairness objectives on CIFAR-10 with 10 labels. FreeMatch is better than FlexMatch in both settings. In addition, SAF is also proved effective when combined with FlexMatch.

| FixMatch | FlexMatch | |

|---|---|---|

| 0.25 | 11.76±0.60 | 18.84±0.36 |

| 0.5 | 16.29±0.31 | 14.16±0.21 |

| 0.75 | 15.61±0.23 | 6.08±0.17 |

| 0.95 | 7.47±0.28 | 4.97±0.06 |

| 0.98 | 8.01±0.91 | 5.40±0.11 |

| Fairness Objective | FlexMatch | FreeMatch |

|---|---|---|

| w/o SAF | 13.85±12.04 | 10.37±7.70 |

| w/ SAF | 12.60±8.16 | 8.07±4.24 |

E.7 Ablation of Imbalanced SSL

| Dataset | CIFAR-10-LT | CIFAR-100-LT | ||

|---|---|---|---|---|

| Imbalance | 50 | 150 | 20 | 100 |

| FixMatch | 18.50.48 | 31.21.08 | 49.10.62 | 62.50.36 |

| FlexMatch | 17.80.24 | 29.50.47 | 48.90.71 | 62.70.08 |

| FreeMatch | 17.70.33 | 28.80.64 | 48.40.91 | 62.50.23 |

| FixMatch w/ ABC | 14.00.22 | 22.31.08 | 46.60.69 | 58.30.41 |

| FlexMatch w/ ABC | 14.20.34 | 23.10.70 | 46.20.47 | 58.90.51 |

| FreeMatch w/ ABC | 13.90.03 | 22.30.26 | 45.60.76 | 58.90.55 |

To further prove the effectiveness of FreeMatch, We evaluate FreeMatch on the imbalanced SSL setting Kim et al. (2020); Wei et al. (2021); Lee et al. (2021); Fan et al. (2021), where the labeled and the unlabeled data are both imbalanced. We conduct experiments on CIFAR-10-LT and CIFAR-100-LT with different imbalance ratios. The imbalance ratio used on CIFAR datasets is defined as where is the number of samples on the head (frequent) class and the tail (rare). Note that the number of samples for class is computed as , where is the number of classes. Following (Lee et al., 2021; Fan et al., 2021), we set for CIFAR-10 and for CIFAR-100, and the number of unlabeled data is twice as many for each class. We use a WRN-28-2 (Zagoruyko & Komodakis, 2016) as the backbone. We use Adam (Kingma & Ba, 2014) as the optimizer. The initial learning rate is with a cosine learning rate decay schedule as , where is the initial learning rate, is the current (total) training step and we set for all datasets. The batch size of labeled and unlabeled data is 64 and 128, respectively. Weight decay is set as 4e-5. Each experiment is run on three different data splits, and we report the average of the best error rates.

The results are summarized in Table 14. Compared with other standard SSL methods, FreeMach achieves the best performance across all settings. Especially on CIFAR-10 at imbalance ratio 150, FreeMatch outperforms the second best by . Moreover, when plugged in the other imbalanced SSL method (Lee et al., 2021), FreeMatch still attains the best performance in most of the settings.

E.8 T–SNE Visualization on STL-10 (40)

We plot the T–SNE visualization of the features on STL-10 with 40 labels from FlexMatch (Zhang et al., 2021) and FreeMatch. FreeMatch shows better feature space than FlexMatch with less confusing clusters.

E.9 Pseudo Label accuracy on CIFAR-10 (10)

We average the pseudo label accuracy with three random seeds and report them in Figure 6. This indicates that mapping thresholds from a high fixed threshold like FlexMatch did can prevent unlabeled samples from being involved in training. In this case, the model can overfit on labeled data and a small amount of unlabeled data. Thus the predictions on unlabeled data will incorporate more noise. Introducing appropriate unlabeled data at training time can avoid overfitting on labeled datasets and a small amount of unlabeled data and bring more accurate pseudo labels.

E.10 CIFAR-10 (10) Confusion Matrix

We plot the confusion matrix of FreeMatch and other SSL methods on CIFAR-10 (10) in Figure 7. It is worth noting that even with the least prototypical labeled data in our setting, FreeMatch still gets good results while other SSL methods fail to separate the unlabeled data into different clusters, showing inconsistency with the low-density assumption in SSL.