placement=top, contents= This paper has been accepted for publication in the IEEE Robotics and Automation Letters. Please cite the paper as: Chen, B. S., Lin, Y. K., Chen, J. Y., Huang, C. W., Chern, J. L., Sun, C. C. (2024). FracGM: A Fast Fractional Programming Technique for Geman-McClure Robust Estimator. IEEE Robotics and Automation Letters, 9(12), 11666-11673. DOI: 10.1109/LRA.2024.3495372. , color=NavyBlue, opacity=1, scale=0.84, vshift=-32,

FracGM: A Fast Fractional Programming Technique for Geman-McClure Robust Estimator

Abstract

Robust estimation is essential in computer vision, robotics, and navigation, aiming to minimize the impact of outlier measurements for improved accuracy. We present a fast algorithm for Geman-McClure robust estimation, FracGM, leveraging fractional programming techniques. This solver reformulates the original non-convex fractional problem to a convex dual problem and a linear equation system, iteratively solving them in an alternating optimization pattern. Compared to graduated non-convexity approaches, this strategy exhibits a faster convergence rate and better outlier rejection capability. In addition, the global optimality of the proposed solver can be guaranteed under given conditions. We demonstrate the proposed FracGM solver with Wahba’s rotation problem and 3-D point-cloud registration along with relaxation pre-processing and projection post-processing. Compared to state-of-the-art algorithms, when the outlier rates increase from 20% to 80%, FracGM shows 53% and 88% lower rotation and translation increases. In real-world scenarios, FracGM achieves better results in 13 out of 18 outcomes, while having a 19.43% improvement in the computation time.

I Introduction

Robust estimation is an essential technique in real-world applications, including computer vision, robotics, and navigation. Its main objective is to mitigate the impact of outlier measurements, thereby improving the accuracy of the estimation. Recent studies have used various types of robust functions in different applications, such as rotation averaging [1, 2, 3, 4], point cloud registration [5, 6, 7, 8, 9], pose graph optimization [10, 11, 12], and satellite navigation [13, 14].

Introducing robust functions into estimation tasks usually generates additional non-convexity to the corresponding optimization problem. This major drawback poses a challenge to the convergence and global optimality guarantees of robust estimators. One strategy aims to iteratively update the auxiliary parameters (mainly known as weights) and solve the corresponding weighted least squares problem [15, 16, 17, 18]. It claims to be fast, accurate, and empirically practical for large-scale applications but lacks theoretical support for convergence and global optimality. In certain cases, trust-region methods are also required to stabilize the optimization process [19, 20]. Another strategy, especially for spatial perception, utilizes relaxation techniques to obtain globally optimal solutions to relevant dual problems. Global optimality is guaranteed by convex optimization for dual problems, as well as the tightness between the original and dual problems [10, 21, 7, 22, 9]. However, most approaches rely on large semidefinite programming (SDP), which results in computational inefficiency for large-scale applications. For example, the binary cloning technique introduced in TEASER [22, 9] will increase the problem dimension of SDP by the number of measurements.

Designing an efficient robust estimator for real-world applications while justifying global optimality becomes essential and challenging. Fortunately, we noticed that robust estimation with Geman-McClure (GM) robust function is a special case of sum-of-ratio problems, in which its convergence and global optimality using fractional programming have been widely discussed [23, 24, 25, 26]. To the best of our knowledge, most studies suppose that the numerators are convex and the denominators are concave. This violates the assumption of the Geman-McClure robust function, as both numerators and denominators are typically convex. To this end, we proposed a fast Fractional programming technique for Geman-McClure robust estimator (FracGM) by extending Jong’s approach [24]. In particular, we prove that the corresponding dual problems of Geman-McClure robust estimation problems are convex during the iterative optimization process. The benefits are threefold. First, it ensures that each iteration step of our solver is well-posed. Second, the final solutions of our solver correspond to the primary Geman-McClure robust estimation problem. Third, it allows us to study the conditions that make the proposed solver’s solution globally optimal. For the sake of completeness, we revisit Jong’s approach [24], and establish theoretical support for the solver proposed in this paper.

We show the robustness of FracGM on Wahba’s rotation problem[27] and 3-D point cloud registration, as shown in Figure 1. Both problems introduce an additional challenge for the non-convexity of the 3-D rotation group . In this work, we simply relax the feasible space from to , and post-process the matrix with the orthogonal Procrustes problem. Despite breaking the statement of global optimality in FracGM, empirically the proposed method demonstrates better robustness and accuracy than most state-of-the-art approaches. As a rotation solver, even with outlier rates above 90%, FracGM achieves rotation errors under 1 degree approximately 80% of the times, outperforming other solvers in a synthetic dataset. As a registration solver, when the outlier rates increase from 20% to 80%, the rotation and translation errors of FracGM increase by 53% and 88% less than those of the TEASER++[9] algorithm. In terms of registration in real-world scenes, FracGM achieves the best results in 13 of 18 outcomes, while having a 19.43% improvement of computation time compared to TEASER++. Overall, our main contributions are summarized as follows:

-

1.

We propose a fast Geman-McClure robust estimator with conditionally global optimality guarantees.

-

2.

We provide FracGM-based rotation and registration solvers, outperforming existing state-of-the-art methods in both accuracy and robustness.

contents=

II Related Work

II-A Geman-McClure Robust Solvers

Robust estimation problems are usually reformulated as iterative weighted least squares problems [15, 28]. In Geman-McClure robust estimations, most approaches rely on Black-Rangarajan duality [29], which is a weighted least squares with an additional regularization term [30, 17, 31]. For example, Graduated Non-Convexity (GNC) [17] proposes a general-purpose robust solver for both truncated least squares (TLS) and Geman-McClure (GM) robust cost in conjunction with such duality technique, and introduces an approximation optimization process from convexity to non-convexity. With recent advances of semidefinite relaxation [32], there are also approaches to recast Geman-McClure robust functions to semidefinite programming (SDP) [21].

Unlike the robust estimators based on the Black-Rangarajan duality and semidefinite relaxation Geman-McClure, FracGM leverages Dinkelbach’s transform [23] and the Lagrangian to reformulate the problem as an underlying convex program with additional auxiliary variables. It was originally introduced in the field of fractional programming optimization [23, 24, 25, 26], while to the best of our knowledge, FracGM is the first approach to apply such technique for Geman-McClure robust estimation.

II-B Robust Estimation for Point Cloud Registration

Point cloud registration is the process of aligning two point clouds by finding a spatial rigid transformation. Horn [33] first proposes a closed-form solution by decoupling the problem to scale, rotation and translation sub-problems. With advances of relaxation techniques in recent decades, Olsson and Eriksson [34] apply Lagrangian duality with semidefinite relaxation. In addition, Briales and Gonzalez-Jimenez [35] introduce orthonormality and determinant constraints such that the relaxation is empirically tight. Those methods are said to be outlier-free approaches, since they assume that all measurement noises are modeled under ordinary Gaussian distributions.

In real-world applications, however, there are outlier measurements which may interfere with estimation accuracy. For the purpose of mitigating the impact of outliers, robust estimation becomes a promising strategy in point cloud registration to identify and isolate bad measurements. Zhou et al. [31] propose the idea to solve robust registration by gradually adjusting the Geman-McClure robust function. Yang et al. [9] develop Truncated least squares Estimation And SEmidefinite Relaxation (TEASER), where they reformulate the problem to large-scale SDP. They also develop a fast implementation TEASER++ by leveraging GNC rotation solver to prevent large computational cost in SDP.

III Fractional Programming for Geman-McClure Robust Estimator

In this section, we provide the proposed fractional programming technique for Geman-McClure robust estimator along with theoretical optimal-guarantees of the solver. In general, a robust estimation problem has the following form [28, 17]:

| (1) |

Here is a robust function with residual functions . Note that least squares problem corresponds to setting the robust function as . Applying the Geman-McClure function,

| (2) |

Problem (1) becomes

| (3) |

where is a threshold that depicts the shape of robust function. We suppose that of all square residual functions are convex and twice continuously differentiable, and also suppose that we have additional convex constraints . In this way, Problem (3) is considered to be the following sum-of-ratio problem [36]:

| (4) | ||||

| s.t. |

in which and are also both convex and twice continuously differentiable.

We remark that each ratio’s numerator and denominator in Jong’s sum-of-ratio programming approach [24] are required to be convex and concave, respectively. However, Problem (4) does not meet the assumption because both numerator and denominator are convex. This discrepancy contradicts the theoretical foundation established in [24] for the existence and global optimality of solutions, which relies on the convexity of the linear combination of and with arbitrary non-negative coefficients. Nevertheless, we claim that it is still possible to solve the program by introducing additional conditions, thanks to the structure of Geman-McClure function. To fill the theoretical gap, we revisit the method in the following sections, and provide conditions to guarantee theoretical optimality.

III-A Sum-of-Ratio Programming

We write an equivalent problem for Problem (4) by introducing upper bound variable :

| (5) | ||||

| s.t. | ||||

With Dinkelbach’s transform [23] and Lagrangian, the following \threflemma_1 derives an underlying convex problem of the equivalent Problem (5). It also claims the relationship between the minimizer of Problem (4) and newly introduced auxiliary variables and .

Lemma 1 (Variant of [24, Lemma 1]).

lemma_1 If is a solution of Problem (5), then there exists such that is a solution of the following problem for and :

| (6) | ||||

| s.t. |

where and . Furthermore, also satisfies the following system of equations for and :

| (7) | ||||

| (8) |

Proof.

We begin by providing a high-level overview of the proof. Firstly, is claimed to be a KKT point of Problem (6). Secondly, Problem (6) is shown to be a convex program. In this way, by the KKT sufficient conditions, is an optimal solution of Problem (6). Please refer to [24] for detailed description of the first part.

For the second part, we point out that is convex in our case instead of concave as in [24]. Hereby we introduce additional auxiliary variable constraints and in \threflemma_1 in order to make the convexity of Problem (6) hold. To obtain the auxiliary variable constraints, we expand the objective of Problem (6):

which implies that the objective of Problem (6) is convex given and . ∎

In this way, the primal problem (5) turns out to be finding a feasible solution of the dual problem (6) as well as auxiliary variables . We resort to alternating minimization that iteratively and separately updates and . Specifically, in the -th iteration, given a fixed that satisfies the auxiliary variable constraints and , solving with Problem (6) becomes a convex programming with fixed variable dimension . As for finding with given , a root finding problem is introduced in the next section. It is same as Jong’s approach [24], whereas we will additionally claim that the auxiliary variable constraints is theoretically preserved during the optimization.

III-B Solving Auxiliary Variables

Let denote the auxiliary variables, let be the solution of problem (6) given an , and are the corresponding residuals. Define a function as follows:

This function is specifically defined by the constraints (7) and (8) such that , where and is the solution of Problem (6). By [24, Corollary 2.1], if is a solution of Problem (4), then there exists such that and

| (9) |

This shows that a solution of the auxiliary variables can be obtained by solving . \threfthm next states that such solution satisfies the KKT conditions in \threflemma_1.

Theorem 2.

Proof.

thm provides an assurance that all such that is a solution candidate for Problem (4) that satisfies necessary optimality conditions, and also guarantees that we can indeed use Jong’s approach [24] to obtain a solution in our case where numerators and denominators of the fractional program are all convex. To this end, given a fixed that satisfies the auxiliary variable constraints, we solve the convex program (6) and obtain . If , by \threfthm, FracGM converges to a local solution of Problem (4). Otherwise, we update by solving , where is the fixed solution of Problem (6) from the previous iteration in the sense of alternating minimization. Clearly, Problem (9) is a linear system with fixed , we write the closed-form solution component-wise as follows:

| (10) |

In summary, we iteratively update by Equation (10) and solve by Problem (6) until the stopping criteria: . Note that during the -th iteration that hasn’t converge yet, is the solution of from the previous iteration, however, not the solution of in the current iteration. This stopping criteria can also be interpreted as finding an such that both Problem (6) and Problem (9) are solved. The complete procedure is depicted in Algorithm 1.

III-C Global Optimality of FracGM

In Section III-A and III-B, we have shown that the solution of FracGM is a KKT point of Problem 6 by \threfthm, which is a local solution of Problem (5) by \threflemma_1. It follows from [24, Corollary 2.1] that if Problem (9) has only one solution, then such unique solution is the optimal solution of Problem (5). Consequently, we derive the following statement from the uniqueness of Problem (9) [24, Theorem 3.1]:

Proposition 3.

prop Suppose that the global solution of Problem (4) exists. If is differentiable and Lipschitz continuous in , then FracGM is a global solver.

An example of FracGM satisfying \threfprop, i.e., having theoretically global optimal guarantees, is given in the supplementary material.

IV Spatial Perception with FracGM

In this section, we demonstrate two examples of applying the proposed FracGM estimator to practical problems, a FracGM-based rotation solver (Section IV-A) and a FracGM-based registration solver (Section IV-B). Although only two examples are showed in this work, we reiterate that the proposed FracGM solver can apply to any problem in which the square of residuals are twice continuously differentiable and convex.

IV-A Robust Rotation Solver with FracGM

We first discuss about the Wahba’s rotation Problem [27], which is basically a registration of two point clouds that only differ by a 3-D rotation. Let be two sets of point clouds with points, source set and target set, where are 3-D points. The goal is to find a rotation matrix between the two point clouds, i.e., , with error terms following the isotropic Gaussian distribution. The maximum likelihood estimation is equivalent to the following least squares problem:

| (11) |

We first rewrite it as a quadratic program:

| (12) |

where , , is the column-wise vectorization of the rotation matrix , and . This vectorization and homogenization technique is commonly used [35, 34]. We also remark that all square of residual functions defined in Problem (12) are twice continuously differentiable and quadratic convex. Next, we apply the GM robust function (2) and form the following optimization problem:

| (13) | ||||

| s.t. |

In order to apply FracGM, we relax the constraints to align with the form of Problem (4). Since the numerators and denominators in the relaxed Problem (13) are quadratic, the derived Problem (6) is a quadratic program with only one equality constraint. Such problem has a closed-form solution by Shur complement and the KKT matrix [37]:

| (14) |

After finding a solution by FracGM, we dehomogenize to , then reshape it to a matrix . Since the solution is on the relaxed convex space and we are agnostic on theoretical conditions of tightness, we forcibly project it back to the special orthogonal space by SVD [38]. This technique is also commonly used after linear relaxation in rotation estimation [35, 34]. In light of an initial guess is needed for FracGM, we simply use the fast closed-form solution [33] for Problem (11). Despite that this closed-form solution does not tolerate outliers, we will show that it is proficient even when there are numerous outliers in Section V. We summarize the FracGM-based rotation solver in Algorithm 2.

IV-B Robust Registration Solver with FracGM

We extend the FracGM-based rotation solver to a registration solver, which is to find the spatial transformation between the two point clouds, i.e., , where is the rotation matrix, is the translation vector, and error terms follow the isotropic Gaussian distribution. In this way, we get the following problem:

| (15) |

We extend Problem (13) to solving spatial transformation and the problem formulation of GM robust point cloud registration becomes:

| (16) | ||||

| s.t. |

where , . The structural consistency between Equation (13) and Equation (16) permits a similar algorithm to solve the registration problem. In particular, the algorithm of FracGM for point cloud registration can be extended from Algorithm 2 by adding an initial guess and solving in Problem (16).

It is worth noting that the introduced FracGM-based registration solver directly estimates the relative transformation between two point clouds, whereas TEASER [9] decouples rotation and translation estimation. This strategy is more computationally efficient, as it avoids the increase in measurement number complexity up to when introducing translation-invariant measurements for rotation solvers. In addition, this computational cost-friendly strategy also improves the accuracy of estimation results.

We acknowledge that the theoretical guarantee of global optimality for FracGM-based rotation and registration solvers remains an open issue. Specifically, the global optimality is subjected to variable space relaxation tightness and \threfprop for the relaxed program, which could be challenging to verify as it depends on the input data. It requires further investigation to address the issue111Empirical studies on global optimality including relaxation tightness and sensitivity to initial guesses are available at the supplementary material..

V Experiments and Applications

We evaluate the proposed FracGM solver in rotation estimation and point cloud registration with both synthetic dataset and realistic 3DMatch dataset [39]. In the synthetic dataset, we experiment the Stanford Bunny point cloud [40], manipulate various proportions of points as outliers, and transform it to the target point cloud randomly. Hereby, point correspondences are predefined but noisy. This dataset validates methods’ robustness to outlier point correspondences at different levels. Meanwhile, the 3DMatch dataset [39] is used to examine the real-world capability of using FracGM in point cloud registration. Point correspondences in this case are obtained by point cloud feature extraction and matching, and therefore the actual noise model and outlier rates are unknown. All experiments are conducted over 40 Monte Carlo runs, and computed on a desktop computer with an Intel i9-13900 CPU and 128GB RAM.

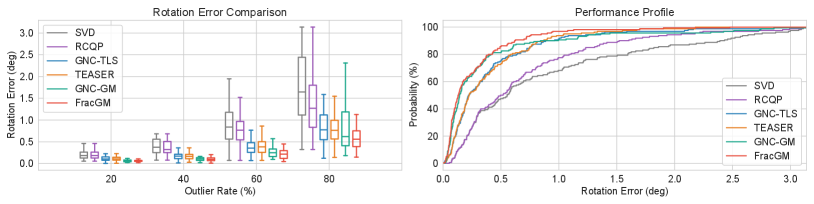

We compare FracGM with various state-of-the-art approaches. In rotation estimation, outlier-free approaches (SVD [33], RCQP [35]) and outlier-awareness approaches (GNC-TLS [17], GNC-GM [17], TEASER [9]) are chosen to study the impact of different outlier distributions on performance. In point cloud registration, Fast Point Feature Histograms (FPFH) [41] are used to extract point features and to perform feature matching with nearest neighbor searching. Outlier-awareness registration solvers (RANSAC [42], FGR [31], TEASER++ [9]) are chosen to evaluate registration accuracy and computational efficiency.

V-A Rotation Estimation with Synthetic Dataset

We evaluate the resistance to outliers in different outlier rates of the proposed FracGM rotation solver. In practice, we down sample the Stanford Bunny point cloud to points for outlier rates between 20% and 80%, and to points for outlier rates higher than 80%. We construct the corresponding point cloud by adding a Gaussian noise with standard deviation of 0.01, and applying random rotation . We generate outliers by replacing a fraction (outlier rate) of points by random sampled 3-D points inside the sphere of radius 2. The threshold in robust functions (TLS and GM) are all set to 1.

Figure 2 shows the box plot of error comparison and performance profile to evaluate rotation solvers. For outlier rates between 20% and 80%, FracGM has the lowest mean rotation error of and the highest probability of being the optimal solver than both outlier-free solvers and outlier-awareness solvers. FracGM stands out in extreme cases where the outlier rate is greater than 90%. In contrast to other solvers that can only limit up to 60% of rotation errors within 1 degree, FracGM confines approximately 80% of rotation errors within this range.

We also compare the error curve with TLS- and GM-based GNC [17] in Figure 3. It reveals that FracGM not only has a better convergence speed than GNC approaches, but also a lower error. We believe that GNC approximates the non-convex objective by some surrogate functions, in which such approximation might be far from the non-convex objective in the early iterations. On the other hand, our solver reformulates the non-convex objective to an equivalent convex problem, thus we are solving the exact same objective in every iteration. Thus, FracGM has a better ability to deal with outliers and can obtain a higher-quality estimation.

V-B Point Cloud Registration with Synthetic Dataset

We evaluate the proposed FracGM registration solver under two different scenarios. In the first scenario, we down sample the Stanford Bunny point cloud to 500 points and manipulate different rates of outlier point correspondences from 20% to 80%, which is the same configuration of Section V-A with additional random translation . We set the noise bound [9] of TEASER++ and FracGM ( in Equation (16)) to 0.1, while RANSAC and FGR does not consider in their problem formulation. In the second scenario, we solve the problem given different noise bound guess to evaluate the impact of it. We believe that specifying the above two configurations are important in the registration task, since the error modeling of outlier in real-world applications is usually unknown and diverse.

We notice that FGR first filters bad correspondence by Fast Point Feature Histogram (FPFH) and nearest neighbors search, while TEASER++ has a Maximum Clique Inlier Selection (MCIS) step to prune outliers before estimating. Both techniques serve as correspondence preprocessing, and since the main idea of robust estimator is to deal with outliers by the solver itself, we exclude both steps in order to demonstrate the robustness of each solver.

Figure 1 demonstrates an example of this experiment. We run RANSAC for 100 times, which is a multi-times non-robust solver, while others are one-time robust solvers. Figure 4 reports that FracGM is the only one that is truly robust to outliers and can really work well with larger outlier rate without any correspondence preprocessing or outlier pruning. As the outlier rate increased from 20% to 80%, the rotation and translation error of TEASER++ escalated by 186% and 900%, while FracGM increased only by 87% and 106%, representing an improvement of 53% and 88%, respectively. As stated in Section IV-B, we also extended a FracGM translation solver to adapt the decoupled structure of TEASER++. Figure 4 shows that the results of rotation estimation in FracGM (decouple) is similar to FracGM, while the accuracy of translation estimation in FracGM (decouple) falls. This is because the measurements for the decoupled translation solver are affected by the prior rotation estimation, and fixing a rotation matrix may cause the translation solver converge to some local solution. Table I reports that the computation time of FracGM (decouple) increases exponentially due to the fact that the measurements for the decoupled rotation solver also increases exponentially, while FracGM can deal with thousands of measurements with milliseconds.

Average Computation Time (sec) 100 500 1000 2000 5000 FracGM (decouple) 0.0108 0.3891 1.6401 6.8731 46.1361 FracGM 0.0003 0.0012 0.0024 0.0053 0.0137

In the above experiments, we choose a noise bound (the upper bound of in Equation (16)) of 0.1 that is larger than the noise standard deviation of the data set at . However, we may not know the actual noise of the measurements in reality, and thus we tend to set a larger noise bound guess. Figure 5 reports the result of setting different noise bounds on a fixed dataset with 50% of outliers, while the other settings are the same as before. It could be expected that the estimation will be more accurate if the noise bound is closer to the actual noise standard deviation. Despite this, the estimation error of TEASER++ increases dramatically. For example, given a noise bound of 1 that is 100 times the noise standard deviation, FracGM has a 66% and 62% improvement over TEASER++ in terms of rotation and translation error.

V-C Point Cloud Registration with 3DMatch Dataset

We test our proposed FracGM registration solver on the realistic 3DMatch dataset [39] to examine the real-world application capability. We downsample point clouds with a voxel size of 5 cm, obtain the correspondence by FPFH, perform a nearest-neighbor search to generate sets of point correspondences and filter them with MCIS [9].

Table II exhibits the registration accuracy and computational time between our solver and other state-of-the-art methods (RANSAC [42], FGR [31] and TEASER++ [9]). FracGM has the lowest average rotation error within and an average translation error less than 1.7 m. Since our solver converges fast and we only solve a fixed low-dimensional quadratic program and a linear equation system during each iteration, FracGM can be executed in real-time with an average of 25 milliseconds each registration. In terms of rotation and translation errors across 9 tested scenes, FracGM achieves the best results in 13 out of 18 outcomes, while having a 19.43% improvement of computation time compared to TEASER++.

Scenes Errors Kitchen Home 1 Home 2 Hotel 1 Hotel 2 Hotel 3 Study MIT Lab Average Time (s) RANSAC-10K Rotation (deg) 1.248 1.163 1.375 1.183 1.525 1.393 1.107 1.027 1.253 0.0151 Translation (m) 2.053 1.723 2.180 1.984 1.870 0.991 1.770 1.753 1.791 FGR [31] Rotation (deg) 1.275 1.206 1.133 1.232 1.555 1.491 1.203 1.065 1.270 0.0373 Translation (m) 2.041 1.767 2.007 2.068 2.031 1.224 1.911 1.888 1.867 TEASER++ [9] Rotation (deg) 1.225 1.146 1.357 1.177 1.525 1.349 1.034 0.960 1.221 0.0313 Translation (m) 2.044 1.691 2.131 1.984 1.864 1.014 1.674 1.575 1.747 FracGM Rotation (deg) 1.219 1.154 1.365 1.168 1.463 1.368 1.021 0.959 1.215 0.0252 Translation (m) 1.989 1.629 2.134 1.935 1.745 0.926 1.566 1.592 1.689

VI Conclusion

In this paper, we introduce FracGM, a fast algorithm for Geman-McClure robust estimation. We revisit and revise the theoretical part of existing fractional programming techniques to accommodate the case of using Geman-McClure robust function. In particular, we prove that the convexity of the dual problem is guaranteed during the iteration process. It not only establishes the validity of our solver, but also allows us to study the global optimality conditions. We demonstrate two FracGM-based solvers for real-world spatial perception problems, and empirical studies indicate that our solvers presents strong outlier rejection capability, good resilience to different error distributions, and fast computation speed. In the future, we would like to investigate theoretical conditions that guarantee global optimality of FracGM-based solvers on real-world applications.

Supplementary Material

Code and complementary documents are available at https://github.com/StephLin/FracGM.

References

- [1] A. Chatterjee and V. M. Govindu, “Efficient and robust large-scale rotation averaging,” in ICCV. IEEE Computer Society, 2013, pp. 521–528.

- [2] ——, “Robust relative rotation averaging,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 40, no. 4, pp. 958–972, 2018.

- [3] R. I. Hartley, K. Aftab, and J. Trumpf, “L1 rotation averaging using the weiszfeld algorithm,” in CVPR. IEEE Computer Society, 2011, pp. 3041–3048.

- [4] L. Wang and A. Singer, “Exact and stable recovery of rotations for robust synchronization,” Information and Inference: A Journal of the IMA, vol. 2, no. 2, pp. 145–193, 2013.

- [5] Z. Deng, Y. Yao, B. Deng, and J. Zhang, “A robust loss for point cloud registration,” in ICCV. IEEE, 2021, pp. 6118–6127.

- [6] A. W. Fitzgibbon, “Robust registration of 2d and 3d point sets,” Image Vis. Comput., vol. 21, no. 13-14, pp. 1145–1153, 2003.

- [7] H. M. Le, T. Do, T. Hoang, and N. Cheung, “SDRSAC: semidefinite-based randomized approach for robust point cloud registration without correspondences,” in CVPR. Computer Vision Foundation / IEEE, 2019, pp. 124–133.

- [8] J. Ma, J. Zhao, J. Tian, Z. Tu, and A. L. Yuille, “Robust estimation of nonrigid transformation for point set registration,” in CVPR. IEEE Computer Society, 2013, pp. 2147–2154.

- [9] H. Yang, J. Shi, and L. Carlone, “TEASER: fast and certifiable point cloud registration,” IEEE Trans. Robotics, vol. 37, no. 2, pp. 314–333, 2021.

- [10] L. Carlone and G. C. Calafiore, “Convex relaxations for pose graph optimization with outliers,” IEEE Robotics Autom. Lett., vol. 3, no. 2, pp. 1160–1167, 2018.

- [11] G. H. Lee, F. Fraundorfer, and M. Pollefeys, “Robust pose-graph loop-closures with expectation-maximization,” in IROS. IEEE, 2013, pp. 556–563.

- [12] N. Sünderhauf and P. Protzel, “Towards a robust back-end for pose graph SLAM,” in ICRA. IEEE, 2012, pp. 1254–1261.

- [13] J. Beuchert, M. Camurri, and M. F. Fallon, “Factor graph fusion of raw GNSS sensing with IMU and lidar for precise robot localization without a base station,” in ICRA. IEEE, 2023, pp. 8415–8421.

- [14] T. Suzuki, “Attitude-estimation-free GNSS and IMU integration,” IEEE Robotics Autom. Lett., vol. 9, no. 2, pp. 1090–1097, 2024.

- [15] M. Bosse, G. Agamennoni, and I. Gilitschenski, “Robust estimation and applications in robotics,” Found. Trends Robotics, vol. 4, no. 4, pp. 225–269, 2016.

- [16] L. Lu, W. Wang, X. Yang, W. Wu, and G. Zhu, “Recursive geman-mcclure estimator for implementing second-order volterra filter,” IEEE Trans. Circuits Syst. II Express Briefs, vol. 66-II, no. 7, pp. 1272–1276, 2019.

- [17] H. Yang, P. Antonante, V. Tzoumas, and L. Carlone, “Graduated non-convexity for robust spatial perception: From non-minimal solvers to global outlier rejection,” IEEE Robotics Autom. Lett., vol. 5, no. 2, pp. 1127–1134, 2020.

- [18] C. Zach, “Robust bundle adjustment revisited,” in ECCV. Springer, 2014, pp. 772–787.

- [19] F. Dellaert and M. Kaess, “Factor graphs for robot perception,” Found. Trends Robotics, vol. 6, no. 1-2, pp. 1–139, 2017.

- [20] D. M. Rosen, M. Kaess, and J. J. Leonard, “RISE: an incremental trust-region method for robust online sparse least-squares estimation,” IEEE Trans. Robotics, vol. 30, no. 5, pp. 1091–1108, 2014.

- [21] M. Castella and J. Pesquet, “Optimization of a geman-mcclure like criterion for sparse signal deconvolution,” in CAMSAP. IEEE, 2015, pp. 309–312.

- [22] H. Yang and L. Carlone, “A quaternion-based certifiably optimal solution to the wahba problem with outliers,” in ICCV. IEEE, 2019, pp. 1665–1674.

- [23] W. Dinkelbach, “On nonlinear fractional programming,” Management Science, vol. 13, no. 7, pp. 492–498, 1967.

- [24] Y. Jong, “An efficient global optimization algorithm for nonlinear sum-of-ratios problem,” Optimization Online, vol. 32, pp. 38–39, 2012.

- [25] I. M. Stancu-Minasian, Fractional programming: theory, methods and applications, 1997, vol. 409.

- [26] S. Schaible and J. Shi, “Fractional programming: The sum-of-ratios case,” Optim. Methods Softw., vol. 18, no. 2, pp. 219–229, 2003.

- [27] G. Wahba, “A least squares estimate of satellite attitude,” SIAM review, vol. 7, no. 3, pp. 409–409, 1965.

- [28] K. MacTavish and T. D. Barfoot, “At all costs: A comparison of robust cost functions for camera correspondence outliers,” in CRV. IEEE Computer Society, 2015, pp. 62–69.

- [29] M. J. Black and A. Rangarajan, “On the unification of line processes, outlier rejection, and robust statistics with applications in early vision,” Int. J. Comput. Vis., vol. 19, no. 1, pp. 57–91, 1996.

- [30] J. T. Barron, “A general and adaptive robust loss function,” in CVPR. Computer Vision Foundation / IEEE, 2019, pp. 4331–4339.

- [31] Q. Zhou, J. Park, and V. Koltun, “Fast global registration,” in ECCV. Springer, 2016, pp. 766–782.

- [32] F. Bugarin, D. Henrion, and J. Lasserre, “Minimizing the sum of many rational functions,” Math. Program. Comput., vol. 8, no. 1, pp. 83–111, 2016.

- [33] B. K. P. Horn, “Closed-form solution of absolute orientation using unit quaternions,” Journal of the Optical Society A, vol. 4, pp. 629–642, 04 1987.

- [34] C. Olsson and A. P. Eriksson, “Solving quadratically constrained geometrical problems using lagrangian duality,” in ICPR. IEEE Computer Society, 2008, pp. 1–5.

- [35] J. Briales and J. G. Jiménez, “Convex global 3d registration with lagrangian duality,” in CVPR. IEEE Computer Society, 2017, pp. 5612–5621.

- [36] R. W. Freund and F. Jarre, “Solving the sum-of-ratios problem by an interior-point method,” J. Glob. Optim., vol. 19, no. 1, pp. 83–102, 2001.

- [37] S. Boyd and L. Vandenberghe, Convex optimization. Cambridge university press, 2004.

- [38] P. H. Schönemann, “A generalized solution of the orthogonal procrustes problem,” Psychometrika, vol. 31, no. 1, pp. 1–10, 1966.

- [39] A. Zeng, S. Song, M. Nießner, M. Fisher, J. Xiao, and T. A. Funkhouser, “3dmatch: Learning local geometric descriptors from RGB-D reconstructions,” in CVPR. IEEE Computer Society, 2017, pp. 199–208.

- [40] G. Turk and M. Levoy, “Zippered polygon meshes from range images,” in SIGGRAPH. ACM, 1994, pp. 311–318.

- [41] R. B. Rusu, N. Blodow, and M. Beetz, “Fast point feature histograms (FPFH) for 3d registration,” in ICRA. IEEE, 2009, pp. 3212–3217.

- [42] M. A. Fischler and R. C. Bolles, “Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography,” Commun. ACM, vol. 24, no. 6, pp. 381–395, 1981.