Foundations of Multivariate Distributional Reinforcement Learning

Abstract

In reinforcement learning (RL), the consideration of multivariate reward signals has led to fundamental advancements in multi-objective decision-making, transfer learning, and representation learning. This work introduces the first oracle-free and computationally-tractable algorithms for provably convergent multivariate distributional dynamic programming and temporal difference learning. Our convergence rates match the familiar rates in the scalar reward setting, and additionally provide new insights into the fidelity of approximate return distribution representations as a function of the reward dimension. Surprisingly, when the reward dimension is larger than , we show that standard analysis of categorical TD learning fails, which we resolve with a novel projection onto the space of mass- signed measures. Finally, with the aid of our technical results and simulations, we identify tradeoffs between distribution representations that influence the performance of multivariate distributional RL in practice.

1 Introduction

Distributional reinforcement learning [MSK+10, BDM17b, BDR23, DRL] focuses on the idea of learning probability distributions of an agent’s random return, rather than the classical approach of learning only its mean. This has been highly effective in combination with deep reinforcement learning [YZL+19, BCC+20, WBK+22], and DRL has found applications in risk-sensitive decision making [LM22, KEF23], neuroscience [DKNU+20], and multi-agent settings [ROH+21, SLL21].

In general, research in distributional reinforcement learning has focused on the classical setting of a scalar reward function. However, prior non-distributional approaches to multi-objective RL [RVWD13, HRB+22] and transfer learning [BDM+17a, BHB+20] model value functions of multivariate cumulants,111Cumulants refer to accumulated quantities in RL (e.g., rewards or multivariate rewards)—not to be confused with statistical cumulants. rather than a scalar reward. Having learnt such a multivariate value function, it is then possible to perform zero-shot evaluation and policy improvement for any scalar reward signal contained in the span of the coordinates of the multivariate cumulants, opening up a variety of applications in transfer learning, and multi-objective and constrained RL.

Multivariate distributional RL combines these two ideas, and aims to learn the full probability distribution of returns given a multivariate cumulant function. Successfully learning the multivariate reward distribution opens up a variety of unique possibilities, such as zero-shot return distribution estimation [WFG+24] and risk-sensitive policy improvement [CZZ+24].

Pioneering works have already proposed algorithms for multivariate distributional RL. While these works all demonstrate benefits from the proposed algorithmic approaches, each suffers from separate drawbacks, such as not modelling the full joint distribution [GBSL21], lacking theoretical guarantees [FSMT19, ZCZ+21], or requiring a maximum-likelihood optimisation oracle for implementation [WUS23]. Concurrently, the work of [LK24] analyzed algorithms for DRL with Banach-space-valued rewards, and provided convergence guarantees for dynamic programming with non-parametric (intractable) distribution models.

Our central contribution in this paper is to propose algorithms for dynamic programming and temporal-difference learning in multivariate DRL which are computationally tractable and theoretically justified, with convergence guarantees. We show that reward dimensions strictly larger than introduce new computational and statistical challenges. To resolve these challenges, we introduce multiple novel algorithmic techniques, including a randomized dynamic programming operator for efficiently approximating projected updates with high probability, and a novel TD-learning algorithm operating on mass- signed measures. These new techniques recover existing bounds even in the scalar reward case, and provide new insights into the behavior of distributional RL algorithms as a function of the reward dimension.

2 Background

We consider a Markov decision process with Polish state space , action space , transition kernel , and discount factor . Unlike the standard RL setting, we consider a vector-valued reward function , as in the literature on successor features [BDM+18]. Given a policy , we write the policy-conditioned transition kernel .

Multi-variate return distributions. We write for a trajectory generated by setting , and for each , . The return obtained along this trajectory is then defined by , and the (multi-)return distribution function is .

Zero-shot evaluation. An intriguing prospect of estimating multivariate return distributions is the ability to predict (scalar) return distributions for any reward function in the span of the cumulants. Indeed, [ZCZ+21, CZZ+24] show that for any reward function for some , for . Likewise, one might consider , in which case corresponds to the per-trajectory discounted state visitation measure, and [WFG+24] demonstrated methods for learning the distribution of to infer the return distribution for any bounded deterministic reward function.

Multivariate distributional Bellman equation. It was shown in [ZCZ+21] that multi-return distributions obey a distributional Bellman equation, similar to the scalar case [MSK+10, BDM17b], and defines the multivariate distributional Bellman operator by

| (1) |

where and is the pushforward of a measure through a measurable function . In particular, [ZCZ+21] showed that satisfies the multi-variate distributional Bellman equation , and that is a -contraction in , where and is the -Wasserstein metric [Vil09]. This suggests a convergent scheme for approximating in by distributional dynamic programming, that is, computing the iterates , following Banach’s fixed point theorem.

Approximating multivariate return distributions. In practice, however, the iterates cannot be computed efficiently, because the size of the support of may increase exponentially with . A variety of alternative approaches that aim to circumvent this computational difficulty have been considered [FSMT19, ZCZ+21, WUS23]. Many of these approaches have proven effective in combination with deep reinforcement learning, though as tabular algorithms, either lack theoretical guarantees, or rely on oracles for solving possibly intractable optimisation problems. A more complete account of multivariate DRL is given in Appendix A. A central motivation of our work is the development of computationally-tractable algorithms for multivariate distributional RL with theoretically guarantees.

Maximum mean discrepancies. A core tool in the development of our proposed algorithms, as well as some prior work [NGV20, ZCZ+21], is the notion of distance over probability distributions given by maximum mean discrepancies [GBR+12, MMD]. A maximum mean discrepancy assigns a notion of distance to pairs of probability distributions, and is parametrised via a choice of kernel , defined by

A useful alternative perspective on MMD is that the choice of kernel induces a reproducing kernel Hilbert space (RKHS) of functions , namely the closure of the span of functions of the form for each , with respect to the norm induced by the inner product . With this interpretation, is equal to , where is the mean embedding of (similarly for ). When is injective, the kernel is called characteristic, and is then a proper metric on [GBR+12]. In the remainder of this work, we will assume that all spaces of measures will be over compact sets ; thus with continuous kernels, we are ensured that distances between probability measures are bounded. When comparing return distributions, this is achieved by asserting that rewards are bounded.

We conclude this section by recalling a particular family of kernels, introduced in [SSGF13], that will be particularly useful for our analysis. These are the kernels induced by semimetrics.

Definition 1.

Let be a nonempty set, and consider a function . Then is called a semimetric if it is symmetric and . Additionally, is said to have strong negative type if whenever with .

Notably, certain semimetrics naturally induce characteristic kernels and probability metrics.

Theorem 1 ([SSGF13, Proposition 29]).

Let be a semimetric on a space have strong negative type, in the sense that whenever are probability measures on a compact set . Moreover, let denote the kernel induced by , that is

for some . Then is characteristic, so is a metric.

3 Multivariate Distributional Dynamic Programming

To warm up, we begin by demonstrating that indeed the (multivariate) distributional Bellman operator is contractive in a supremal form of MMD, given by , for a particular class of kernels . Our first theorem generalizes the analogous results of [NGV20] in the scalar case to multivariate cumulants. The proof of Theorem 3, as well as proofs of all remaining results, are deferred to Appendix B.

[Convergent MMD dynamic programming for the multi-return distribution function]theoremdpmmd Let be a kernel induced by a semimetric on with strong negative type, satisfying

-

1.

Shift-invariance. For any , .

-

2.

Homogeneity. For any , there exists for which .

Consider the sequence given by . Then at a geometric rate of in , as long as .

Notably, the energy distance kernels satisfy the conditions of Theorem 3, and by the homogeneity of the Euclidean norm, so is a -contraction in the energy distances. This generalizes the analogous result of [NGV20] in the one-dimensional case.

While Theorem 3 illustrates a method for approximating in MMD, it leaves a lot to be desired. Firstly, even in tabular MDPs, just as in the case of scalar distributional RL, return distribution functions have infinitely many degrees of freedom, precluding a tractable exact representation. As such, it will be necessary to study approximate, finite parameterizations of the return distribution functions, requiring more careful convergence analysis. Moreover, in RL it is generally assumed that the transition kernel and reward function are not known analytically—we only have access to sampled state transitions and cumulants. Thus, cannot be represented or computed exactly, and instead we must study algorithms for approximating from samples. We provide algorithms for resolving both of these concerns—the former in Section 5 and the latter in Section 6—where we illustrate the difficulties that arise once the cumulant dimension exceeds unity.

4 Particle-Based Multivariate Distributional Dynamic Programming

Our first algorithmic contribution is inspired by the empirically successful equally-weighted particle (EWP) representations of multivariate return distributions employed by [ZCZ+21].

Temporal-difference learning with EWP representations. EWP representations, expressed by the class , are defined by

| (2) |

For simplicity, we consider the case here where at each state , the multi-return distribution is approximated by atoms. We can represent by for . The work of [ZCZ+21] introduced a TD-learning algorithm for learning a representation of , computing iterates of the particles according to

| (3) |

for step sizes and sampled next states , where is a copy of that does not propagate gradients. Despite the empirical success of this method in combination with deep learning, no convergence analysis has been established, owing to the nonconvexity of the MMD objective with respect to the particle locations. In this section we aim to understand to what extent analysis is possible for dynamic programming and temporal-difference learning algorithms based on the EWP representations in Equation (2).

Dynamic programming with EWP representations. As is often the case in approximate distributional dynamic programming [RBD+18, RMA+23], we have ; in words, the distributional Bellman operator does not map EWP representations to themselves. Concretely, as long as there exists a state at which the support of is not a singleton, will consist of more than atoms even when ; and secondly, if is not uniform, will not consist of equally-weighted particles.

Consequently, to obtain a DP algorithm over EWP representations, we must consider a projected operator of the form , for a projection . A natural choice for this projection is the operator that minimizes the MMD of each multi-return distribution in ,

| (4) |

Unfortunately, even in the scalar-reward () case, the operator is problematic; is not uniquely defined, and is not a non-expansion in [LB22, RMA+23]. These pathologies present significant complications when analyzing even the convergence of dynamic programming routines for learning an EWP representation of the multi-return distribution — in particular, it is not even clear that has a fixed point (let alone a unique one). Another complication arises due to the computational difficulty of computing the projection (4): even in the case where has finite support for each state , the projection is very similar to clustering, which can be intractable to compute exactly for large [She21]. Thus, the argmin projection in Equation (4) cannot be used directly to obtain a tractable DP algorithm.

Randomised dynamic programming. Towards this end, we introduce a tractable randomized dynamic programming algorithm for the EWP representation, by using a randomized proxy for , that produces accurate return distribution estimates with high probability. The method produces the following iterates,

| (5) |

A similar algorithm for categorical representations was discussed in concurrent work [LK24] without convergence analysis.

The intuition is that, particularly for large , the Monte Carlo error associated with the sample-based approximation to is small, and we can therefore expect the DP process, though randomised, to be accurate with high probability. This is summarised by a key theoretical result of this section; our proof of this result in the appendix provides a general approach to proving convergence for algorithms using arbitrary accurate approximations to (4) that we expect to be useful in future work.

theoremewpmmddpconvergence Consider a kernel induced by the semimetric with , and suppose rewards are bounded in each dimension within . For any such that , and any , for the sequence defined in Equation (5), with probability at least we have

where and , and where omits logarithmic factors in .

This shows that our novel randomised DP algorithm with EWP representations can tractably compute accurate approximations to the true multivariate return distributions, with only polynomial dependence on the dimension . Appendix C illustrates explicitly how this procedure is more memory efficient than unprojected EWP dynamic programming. However, the guarantees associated with this algorithm hold only in high probability, and are weaker than the pointwise convergence guarantees of one-dimensional distributional DP algorithms [RBD+18, RMA+23, BDR23]. Consequently, these guarantees do not provide a clear understanding of the EWP-TD method described at the beginning of this section. However, in the sequel, we introduce DP and TD algorithms based on categorical representations, for which we derive dynamic programming and TD-learning convergence bounds.

The proof of Theorem 4 is hinges on the following proposition, which demonstrates that convergence of projected EWP dynamic programming is controlled by how far return distributions are transported under the projection map.

[Convergence of EWP Dynamic Programming]propositiondpewp Consider a kernel satisfying the hypotheses of Theorem 3, suppose rewards are globally bounded in each dimension in , and let be a sequence of maps satisfying

| (6) |

Then the iterates given by with converge to a set in , where denotes the closed ball in ,

As an immediate corollary of Proposition 4 and Theorem 4, we can derive an error rate for projected dynamic programming with as well.

corollarydpewporacle For any kernel satisfying the hypotheses of Theorem 4, and for any for which , the iterates converge to a set , where

5 Categorical Multivariate Distributional Dynamic

Programming

Our next contribution is the introduction of a convergent multivariate distributional dynamic programming algorithm based on a categorical representation of return distribution functions, generalizing the algorithms and analysis of [RBD+18] to the multivariate setting.

Categorical representations. As outlined above, to model the multi-return distribution function in practice, it is necessary to restrict each multi-return distribution to a finitely-parameterized class. In this work, we take inspiration from successful distributional RL algorithms [BDM17b, RBD+18] and employ a categorical representation. The work of [WUS23] proposed a categorical representation for multivariate DRL, but their categorical projection was not justified theoretically, and it required a particular choice of fixed support. We propose a novel categorical representation with a finite (possibly state-dependent) support , that models the multi-return distribution function such that for each . The notation simply refers to the th support point at state specified by , and denotes the probability simplex on the finite set . We refer to the mapping as the support map222In many applications, the most natural support map is constant across the state space. and we denote the class of multi-return distribution functions under the corresponding categorical representation as .

Categorical projection. Once again, the distributional Bellman operator is not generally closed over , that is, . As such, we cannot actually employ the procedure described in Theorem 3 – rather, we need to project applications of back onto . Roughly, we need an operator for which . Given such an operator, as in the literature on categorical distributional RL [BDM17b, RBD+18], we will study the convergence of iterates .

Projection operators used in the scalar categorical distributional RL literature are specific to distributions over , so we must introduce a new projection. We propose a projection similar to (4),

| (7) |

We will now verify that is well-defined, and that it satisfies the aforementioned properties. {restatable}lemmacatprojwelldefined Let be a kernel induced by a semimetric on with strong negative type (cf. Theorem 1). Then is well-defined, , and . It is worth noting that beyond simply ensuring the well-posedness of the projection , Lemma 7 also certifies an efficient algorithm for computing the projection — namely, by solving the appropriate quadratic program (QP), as observed by [SZS+08]. We demonstrate pseudocode for computing the projected Bellman operator with a QP solver in Algorithm 1.

lemmaprojorthogonal Under the conditions of Lemma 7, is a nonexpansion in . That is, for any , we have

Categorical multivariate distributional dynamic programming. As an immediate consequence of Lemma 1, it follows that projected dynamic programming under the projection is convergent; this is because is a contraction in and is a nonexpansion in , so the projected operator is a contraction in ; a standard invokation of the Banach fixed point theorem appealing to the completenes of certifies that repeated application of will result in convergence to a unique fixed point.

corollarydpprojfixedpoint Let be a kernel satisfying the conditions of Theorem 3. Then for any , the iterates given by converge geometrically to a unique fixed point.

Beyond the result of Theorem 3, Corollary 1 illustrates an algorithm for estimating in provided knowledge of the transition kernel and the reward function, which is computationally tractable in tabular MDPs. Indeed, the iterates all lie in , having finitely-many degrees of freedom. Algorithm 1 outlines a computationally tractable procedure for learning in this setting.

We note that the work of [WUS23] provided an alternative multivariate categorical algorithm, which was not analyzed theoretically. Moreover, our method provides the additional ability to support state-dependent arbitrary support maps, while theirs requires support maps to be uniform grids.

Accurate approximations. We now provide bounds showing that the fixed point from Corollary 1 can be made arbitrarily accurate by increasing the number of atoms.

To derive a bound on the quality of the fixed point, we provide a reduction via partitioning the space of returns to the covering number of this space. Proceeding, we define a class of partitions , where each satisfies

-

1.

;

-

2.

For any , either or ;

-

3.

;

-

4.

Each element contains exactly one element .

For any partition , we define a notion of mesh size relative to a kernel induced by a semimetric ,

| (8) |

The following result confirms that recovers as the mesh decreases.

theoremdpconsistent Let be a kernel induced by a -homogeneous and shift-invariant semimetric conforming to the conditions of Theorem 3. Then the fixed point of satisfies

| (9) |

Thus, for any sequence of supports for which the maximal space (in ) between any two points in tends to as , the fixed point approximates to arbitrary precision for large enough . The next corollary illustrates this in a familiar setting.

corollarymeshcovering Let , where is a set of uniformly-spaced support points on . For induced by the semimetric for ,

With and , the MMD in Corollary 1 is equivalent to the Cramér metric [SR13], the setting in which categorical (scalar) distributional dynamic programming is well understood. Our rate matches the known rate shown by [RBD+18] in this setting. Thus, our results offer a new perspective on categorical DRL, and naturally generalizes the theory to the multivariate setting.

Theorem 1 relies on the following lemma about the approximation quality of the categorical MMD projection, which may be of independent interest.

lemmammdprojapprox Let be kernel satisfying the conditions of Lemma 7, and for any finite , define via . Then .

At this stage, we have shown definitively that categorical dynamic programming converges in the multivariate case. In the sequel, we build on these results to provide a convergent multivariate categorical TD-learning algorithm.

5.1 Simulation: The Distributional Successor Measure

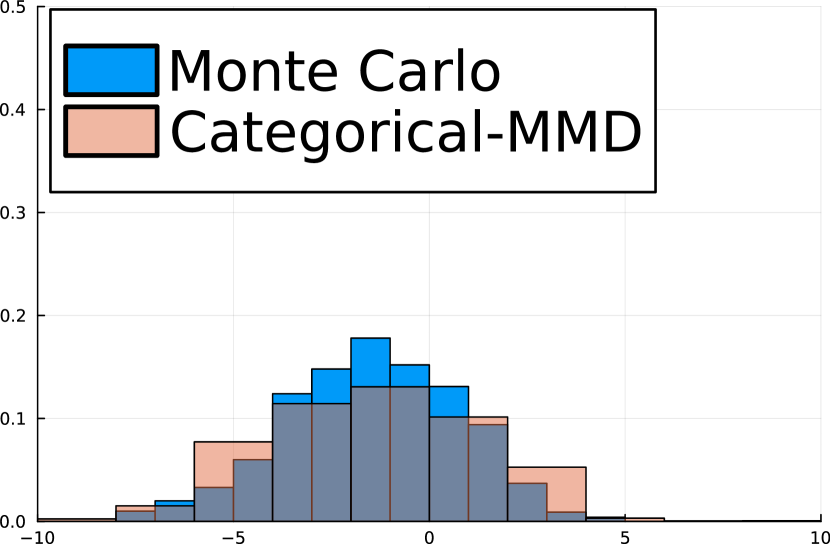

As a preliminary example, we consider 3-state MDPs with the cumulants for the th basis vector. In this setting, encodes the distribution over trajectory-wise discounted state occupancies, which was discussed in the recent work of [WFG+24] and called the distributional successor measure (DSM). Particularly, [WFG+24] showed that for is the return distribution function for any scalar reward function . Figure 1 shows that the projected categorical dynamic programming algorithm accurately approximates the distribution over discounted state occupancies as well as distributions over returns on held-out reward functions.

6 Multivariate Distributional TD-Learning

Next, we devise an algorithm for approximating the multi-return distribution function when the transition kernel and reward function are not known, and are observed only through samples. Indeed, this is a strong motivation for TD-learning algorithms [Sut88], wherein state transition data alone is used to solve the Bellman equation. In this section, we devise a TD-learning algorithm for multivariate DRL, leveraging our results on categorical dynamic programming in .

Relaxation to signed measures. In the setting, the categorical projection presented above is known to be affine [RBD+18], making scalar categorical TD-learning amenable to common techniques in stochastic approximation theory. However, the projection is not affine when ; we give an explicit example in Appendix D. Thus, we relax the categorical representation to include signed measures, which will provide us with an affine projection [BRCM19]—this is crucial for proving our main result, Theorem 6. We denote by the set of all signed measures over with . We begin by noting that the MMD endows with a metric structure.

lemmammdsigned Let be a characteristic kernel over some space . Then given by defines a metric on , where denotes the usual mean embedding of and is the RKHS with kernel .

We define the relaxed projection ,

| (10) |

Note that is very similar to the definition of the categorical MMD projection in (7)—the only difference is that the optimization occurs over the larger class of signed mass-1 measures. It is also worth noting that the distributional Bellman operator can be applied directly to signed measures, which yields the following convenient result.

lemmasmprojlikecatproj Under the conditions of Corollary 1, the projected operator is affine, is contractive with contraction factor , and has a unique fixed point .

While we have “relaxed” the projection, the fixed point is a good approximation of .

Notably, the second statement of Theorem 6 states that projecting back onto the space of multi-return distribution functions yields approximately the same error as when is near .

In the remainder of the section, we assume access to a stream of MDP transitions consisting of elements with the following structure,

| (11) |

where is some probability measure and is the canonical filtration . Based on these transitions, we can define stochastic distributional Bellman backups by

| (12) |

which notably can be computed exactly without knowledge of . Due to the stronger convergence guarantees shown for projected multivariate distributional dynamic programming, we introduce an asynchronous incremental algorithm leveraging the categorical representation, which produces iterates according to

| (13) |

for , where is any sequence of (possibly) random step sizes adapted to the filtration . The iterates of (13) closely resemble those of classic stochastic approximation algorithms [RM51] and particularly asynchronous TD learning algorithms [JJS93, Tsi94, BT96], but with iterates taking values in the space of state-indexed signed measures. Indeed, our next result draws on the techniques from these works to establish convergence of TD-learning on representations.

theoremcattdconvergence For a kernel induced by a semimetric of strong negative type, the sequence given by (11)-(13) converges to with probability 1.

6.1 Simulations: Distributional Successor Features

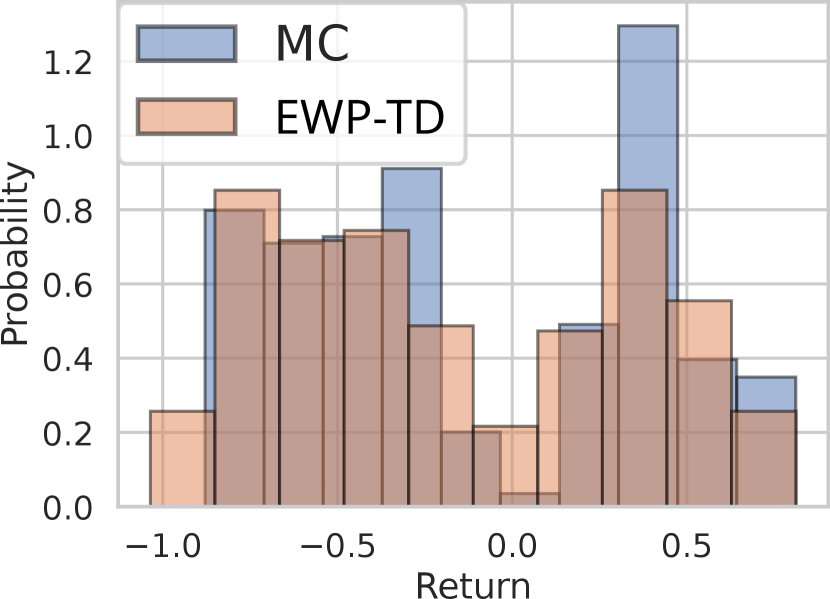

To illustrate the behavior of our categorical TD algorithm, we employ it to learn the multi-return distributions for several tabular MDPs with random cumulants. We focus on the case of - and -dimensional cumulants, which is the setting studied in recent works regarding multivariate distributional RL [ZCZ+21, WUS23]. Interpreting the multi-return distributions as joint distributions over successor features [BDM+18, SFs], we additionally evaluate the return distributions for random reward functions in the span of the cumulants. We compare our categorical TD approach with a tabular implementation of the EWP TD algorithm of [ZCZ+21], for which no convergence bounds are known.

Figure 2 compares TD learning approaches based on their ability to accurately infer (scalar) return distributions on held out reward functions, averaged over 100 random MDPs, with transitions drawn from Dirichlet priors and -dimensional cumulants drawn from uniform priors. The performance of the categorical algorithms sharply increases as the number of atoms increases. On the other hand, the EWP TD algorithm performs well with few atoms, but does not improve very much with higher-resolution representations. We posit this is due to the algorithm getting stuck in local minima, given the non-convexity of the EWP MMD objective. This hypothesis is supported as well by Figure 4, which depicts the learned distributional SFs and return distribution predictions.

Particularly, we observe that the learned particle locations in the EWP TD approach tend to cluster in some areas or get stuck in low-density regimes, which suggests the presence of a local optimum. On the other hand, our provably-convergent categorical TD method learns a high fidelity quantization of the true multi-return distributions.

Naturally, however, the benefits of the bounds for EWP suggested by Theorem 4 become more present as we increase the cumulant dimension. Figure 3 repeats the experiment of Figure 2 with , using randomized support points for the categorical algorithm to avoid a cubic growth in the cardinality of the supports. Notably, our method is the first capable of supporting such unstructured supports. While the categorical TD approach can still outperform EWP, a much larger number of atoms is required. This is unsurprising in light of our theoretical results.

7 Conclusion

We have provided the first provably convergent and computationally tractable algorithms for learning multivariate return distributions in tabular MDPs. Our theoretical results include convergence guarantees that indicate how accuracy scales with the number of particles used in distribution representations, and interestingly motivate the use of signed measures to develop provably convergent TD algorithms.

While it is difficult to scale categorical representations to high-dimensional cumulants, our algorithm is highly performant in the low setting, which has been the focus of recent work in multivariate distributional RL. Notably, even the setting has important applications—indeed, efforts in safe RL depend on distinguishing a cost signal from a reward signal (see, e.g., [YSTS23]), which can be modeled by bivariate distributional RL. In this setting, our method can easily be scaled to large state spaces by approximating the categorical signed measures with neural networks; an illustrated example is given in Appendix F.

On the other hand, the prospect of learning multi-return distributions for high-dimensional cumulants also has many important applications, such as modeling close approximations to distributional successor measures [WFG+24] for zero-shot risk-sensitive policy evaluation. In this setting, we believe EWP-based multivariate DRL will be highly impactful. Our results concerning EWP dynamic programming provide promising evidence that the accuracy of EWP representations scales gracefully with for a fixed number of atoms. Thus, we believe that understanding convergence of EWP TD-learning algorithms is a very interesting and important open problem for future investigation.

Acknowledgements

The authors would like to thank Yunhao Tang, Tyler Kastner, Arnav Jain, Yash Jhaveri, Will Dabney, David Meger, and Marc Bellemare for their helpful feedback, as well as insightful suggestions from anonymous reviewers. This work was supported by the Fonds de Recherche du Québec, the National Sciences and Engineering Research Council of Canada, and the compute resources provided by Mila (mila.quebec).

References

- [BB96] Steven J. Bradtke and Andrew G. Barto. Linear least-squares algorithms for temporal difference learning. Machine Learning, 22(1):33–57, 1996.

- [BBC+21] Mathieu Blondel, Quentin Berthet, Marco Cuturi, Roy Frostig, Stephan Hoyer, Felipe Llinares-López, Fabian Pedregosa, and Jean-Philippe Vert. Efficient and modular implicit differentiation. In Advances in Neural Information Processing Systems, 2021.

- [BCC+20] Marc G. Bellemare, Salvatore Candido, Pablo Samuel Castro, Jun Gong, Marlos C. Machado, Subhodeep Moitra, Sameera S. Ponda, and Ziyu Wang. Autonomous navigation of stratospheric balloons using reinforcement learning. Nature, 588(7836):77–82, December 2020.

- [BDM+17a] André Barreto, Will Dabney, Rémi Munos, Jonathan J. Hunt, Tom Schaul, Hado P. van Hasselt, and David Silver. Successor features for transfer in reinforcement learning. In Advances in Neural Information Processing Systems, 2017.

- [BDM17b] Marc G. Bellemare, Will Dabney, and Rémi Munos. A Distributional Perspective on Reinforcement Learning. In Proceedings of the International Conference on Machine Learning, 2017.

- [BDM+18] André Barreto, Will Dabney, Rémi Munos, Jonathan J. Hunt, Tom Schaul, Hado van Hasselt, and David Silver. Successor Features for Transfer in Reinforcement Learning, April 2018.

- [BDR23] Marc G. Bellemare, Will Dabney, and Mark Rowland. Distributional Reinforcement Learning. The MIT Press, 2023.

- [BEKS17] Jeff Bezanson, Alan Edelman, Stefan Karpinski, and Viral B. Shah. Julia: A fresh approach to numerical computing. SIAM Review, 59(1):65–98, 2017.

- [BFH+18] James Bradbury, Roy Frostig, Peter Hawkins, Matthew James Johnson, Chris Leary, Dougal Maclaurin, George Necula, Adam Paszke, Jake VanderPlas, Skye Wanderman-Milne, and Qiao Zhang. JAX: composable transformations of Python+NumPy programs, 2018.

- [BHB+20] André Barreto, Shaobo Hou, Diana Borsa, David Silver, and Doina Precup. Fast reinforcement learning with generalized policy updates. Proceedings of the National Academy of Sciences (PNAS), 117(48):30079–30087, 2020.

- [BRCM19] Marc G Bellemare, Nicolas Le Roux, Pablo Samuel Castro, and Subhodeep Moitra. Distributional reinforcement learning with linear function approximation. In Proceedings of the International Conference on Artificial Intelligence and Statistics, 2019.

- [BT96] Dimitri P. Bertsekas and John N. Tsitsiklis. Neuro-dynamic programming. Athena Scientific, 1996.

- [CGH+96] Robert M. Corless, Gaston H. Gonnet, David E.G. Hare, David J. Jeffrey, and Donald E. Knuth. On the Lambert W function. Advances in Computational Mathematics, 5:329–359, 1996.

- [CZZ+24] Xin-Qiang Cai, Pushi Zhang, Li Zhao, Jiang Bian, Masashi Sugiyama, and Ashley Llorens. Distributional Pareto-optimal multi-objective reinforcement learning. In Advances in Neural Information Processing Systems, 2024.

- [DKNU+20] Will Dabney, Zeb Kurth-Nelson, Naoshige Uchida, Clara Kwon Starkweather, Demis Hassabis, Rémi Munos, and Matthew Botvinick. A distributional code for value in dopamine-based reinforcement learning. Nature, 577(7792):671–675, 2020.

- [DRBM18] Will Dabney, Mark Rowland, Marc G. Bellemare, and Rémi Munos. Distributional Reinforcement Learning with Quantile Regression. In Proceedings of the AAAI Conference on Artificial Intelligence, 2018.

- [FSMT19] Dror Freirich, Tzahi Shimkin, Ron Meir, and Aviv Tamar. Distributional Multivariate Policy Evaluation and Exploration with the Bellman GAN. In Proceedings of the International Conference on Machine Learning, 2019.

- [GBR+12] Arthur Gretton, Karsten M. Borgwardt, Malte J. Rasch, Bernhard Schölkopf, and Alexander Smola. A Kernel Two-Sample Test. Journal of Machine Learning Research, 13(25):723–773, 2012.

- [GBSL21] Michael Gimelfarb, André Barreto, Scott Sanner, and Chi-Guhn Lee. Risk-Aware Transfer in Reinforcement Learning using Successor Features. In Advances in Neural Information Processing Systems, 2021.

- [HRB+22] Conor F. Hayes, Roxana Radulescu, Eugenio Bargiacchi, Johan Källström, Matthew Macfarlane, Mathieu Reymond, Timothy Verstraeten, Luisa M. Zintgraf, Richard Dazeley, Fredrik Heintz, Enda Howley, Athirai A. Irissappane, Patrick Mannion, Ann Nowé, Gabriel de Oliveira Ramos, Marcello Restelli, Peter Vamplew, and Diederik M. Roijers. A practical guide to multi-objective reinforcement learning and planning. In Proceedings of the International Conference on Autonomous Agents and Multiagent Systems (AAMAS), 2022.

- [JJS93] Tommi Jaakkola, Michael I. Jordan, and Satinder P. Singh. On the Convergence of Stochastic Iterative Dynamic Programming Algorithms. In Advances in Neural Information Processing Systems, 1993.

- [KEF23] Tyler Kastner, Murat A. Erdogdu, and Amir-massoud Farahmand. Distributional Model Equivalence for Risk-Sensitive Reinforcement Learning. In Advances in Neural Information Processing Systems, 2023.

- [Lax02] Peter D. Lax. Functional analysis. John Wiley & Sons, 2002.

- [LB22] Alix Lhéritier and Nicolas Bondoux. A Cramér Distance perspective on Quantile Regression based Distributional Reinforcement Learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics, 2022.

- [LK24] Dong Neuck Lee and Michael R. Kosorok. Off-policy reinforcement learning with high dimensional reward. arXiv preprint arXiv:2408.07660, 2024.

- [LM22] Shiau Hong Lim and Ilyas Malik. Distributional Reinforcement Learning for Risk-Sensitive Policies. In Advances in Neural Information Processing Systems, 2022.

- [MSK+10] Tetsuro Morimura, Masashi Sugiyama, Hisashi Kashima, Hirotaka Hachiya, and Toshiyuki Tanaka. Nonparametric return distribution approximation for reinforcement learning. In Proceedings of the International Conference on Machine Learning, 2010.

- [NGV20] Thanh Tang Nguyen, Sunil Gupta, and Svetha Venkatesh. Distributional Reinforcement Learning via Moment Matching. In AAAI, 2020.

- [RBD+18] Mark Rowland, Marc G. Bellemare, Will Dabney, Remi Munos, and Yee Whye Teh. An Analysis of Categorical Distributional Reinforcement Learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics, 2018.

- [RM51] Herbert Robbins and Sutton Monro. A Stochastic Approximation Method. The Annals of Mathematical Statistics, 22(3):400–407, September 1951.

- [RMA+23] Mark Rowland, Rémi Munos, Mohammad Gheshlaghi Azar, Yunhao Tang, Georg Ostrovski, Anna Harutyunyan, Karl Tuyls, Marc G. Bellemare, and Will Dabney. An Analysis of Quantile Temporal-Difference Learning. arXiv, 2023.

- [ROH+21] Mark Rowland, Shayegan Omidshafiei, Daniel Hennes, Will Dabney, Andrew Jaegle, Paul Muller, Julien Pérolat, and Karl Tuyls. Temporal difference and return optimism in cooperative multi-agent reinforcement learning. In AAMAS ALA Workshop, 2021.

- [RVWD13] Diederik M. Roijers, Peter Vamplew, Shimon Whiteson, and Richard Dazeley. A survey of multi-objective sequential decision-making. Journal of Artificial Intelligence Research (JAIR), 48:67–113, 2013.

- [Sch00] Bernhard Schölkopf. The Kernel Trick for Distances. In Advances in Neural Information Processing Systems, 2000.

- [SFS24] Eiki Shimizu, Kenji Fukumizu, and Dino Sejdinovic. Neural-kernel conditional mean embeddings. arXiv, 2024.

- [She21] Vladimir Shenmaier. On the Complexity of the Geometric Median Problem with Outliers. arXiv, 2021.

- [SLL21] Wei-Fang Sun, Cheng-Kuang Lee, and Chun-Yi Lee. DFAC framework: Factorizing the value function via quantile mixture for multi-agent distributional Q-learning. In Proceedings of the International Conference on Machine Learning, 2021.

- [SR13] Gábor J. Székely and Maria L. Rizzo. Energy statistics: A class of statistics based on distances. Journal of Statistical Planning and Inference, 143(8):1249–1272, August 2013.

- [SSGF13] Dino Sejdinovic, Bharath Sriperumbudur, Arthur Gretton, and Kenji Fukumizu. Equivalence of distance-based and RKHS-based statistics in hypothesis testing. The Annals of Statistics, 41(5), October 2013.

- [Sut88] Richard S. Sutton. Learning to predict by the methods of temporal differences. Machine Learning, 3:9–44, 1988.

- [SZS+08] Le Song, Xinhua Zhang, Alex Smola, Arthur Gretton, and Bernhard Schölkopf. Tailoring density estimation via reproducing kernel moment matching. In Proceedings of the 25th international conference on Machine learning, pages 992–999, 2008.

- [Tsi94] John N. Tsitsiklis. Asynchronous stochastic approximation and Q-learning. Machine learning, 16:185–202, 1994.

- [TSM17] Ilya Tolstikhin, Bharath K. Sriperumbudur, and Krikamol Mu. Minimax estimation of kernel mean embeddings. Journal of Machine Learning Research, 18(86):1–47, 2017.

- [TVR97] J.N. Tsitsiklis and B. Van Roy. An analysis of temporal-difference learning with function approximation. IEEE Transactions on Automatic Control, 42(5):674–690, 1997.

- [Vil09] Cédric Villani. Optimal transport: Old and new, volume 338. Springer, 2009.

- [VN02] Jan Van Neerven. Approximating Bochner integrals by Riemann sums. Indagationes Mathematicae, 13(2):197–208, June 2002.

- [WBK+22] Peter R Wurman, Samuel Barrett, Kenta Kawamoto, James MacGlashan, Kaushik Subramanian, Thomas J Walsh, Roberto Capobianco, Alisa Devlic, Franziska Eckert, Florian Fuchs, et al. Outracing champion gran turismo drivers with deep reinforcement learning. Nature, 602(7896):223–228, 2022.

- [WFG+24] Harley Wiltzer, Jesse Farebrother, Arthur Gretton, Yunhao Tang, André Barreto, Will Dabney, Marc G. Bellemare, and Mark Rowland. A distributional analogue to the successor representation. In Proceedings of the International Conference on Machine Learning, 2024.

- [WUS23] Runzhe Wu, Masatoshi Uehara, and Wen Sun. Distributional Offline Policy Evaluation with Predictive Error Guarantees. In Proceedings of the International Conference on Machine Learning, 2023.

- [YSTS23] Qisong Yang, Thiago D. Simão, Simon H. Tindemans, and Matthijs T. J. Spaan. Safety-constrained reinforcement learning with a distributional safety critic. Machine Learning, 112(3):859–887, 2023.

- [YZL+19] Derek Yang, Li Zhao, Zichuan Lin, Tao Qin, Jiang Bian, and Tie-Yan Liu. Fully parameterized quantile function for distributional reinforcement learning. Advances in neural information processing systems, 32, 2019.

- [ZCZ+21] Pushi Zhang, Xiaoyu Chen, Li Zhao, Wei Xiong, Tao Qin, and Tie-Yan Liu. Distributional Reinforcement Learning for Multi-Dimensional Reward Functions. In Advances in Neural Information Processing Systems, 2021.

[sections] \printcontents[sections]l1

Appendix A In-Depth Summary of Related Work

In Sections 1 and 2, we provided a high-level synopsis of the state of existing work in multivariate distributional RL. In this section, we elaborate further.

Analysis techniques. Our results in this paper mostly drawn on the analysis of one-dimensional distributional RL algorithms such as categorical and quantile dynamic programming, as well as their temporal-difference learning counterparts [RBD+18, DRBM18, RMA+23, BDR23]. The proof techniques in these works themselves are related to contraction-based arguments for reinforcement learning with function approximation [Tsi94, BT96, TVR97].

Multivariate distributional RL algorithms. Several prior works have contributed algorithms for multivariate distributional reinforcement learning, along with empirical demonstrations and theoretical analysis, though as we note in the main paper, the approaches proposed in this paper are the first algorithms with strong theoretical guarantees and efficient tabular implementations. [FSMT19] propose a deep-learning-based approach in which generative adversarial networks are used to model multivariate return distributions, and use these predictions to inform exploration strategies. [ZCZ+21] propose the TD algorithm combing equally-weighted particle representations and an MMD loss that we recall in Equation (3). They demonstrate the effectiveness of this algorithm in combination with deep learning function approximators, though do not analyze it. [WUS23] propose a family of algorithms for multivariate distributional RL termed fitted likelihood evaluation. These methods mirror LSTD algorithms [BB96], iteratively minimising a batch objective function (in this case, a negative log-likelihood, NLL) over a growing dataset. [WUS23] demonstrate that these algorithms are performant in low-dimensional settings empirically, and provide theoretical analysis for FLE algorithms, assuming an oracle which can approximately optimise the NLL objective at each algorithm step. [SFS24] also propose a TD learning algorithm for one-dimensional distributional RL using categorical support and an MMD-based loss. They demonstrate strong performance of this algorithm in classic RL domains such as CartPole and Mountain Car, but do not analyze the algorithm. Our analysis in this paper suggests our novel relaxation to mass-1 signed measures may be crucial to obtaining a straightforwardly analyzable TD algorithm.

Finally, the concurrent work of [LK24] studied distributional Bellman operators for Banach-space-valued reward functions. Their work focuses on analyzing how well the fixed point of a distributional finite-dimensional multivariate Bellman equation can approximate the fixed point of a distributional Banach-space-valued Bellman equation. In contrast, our work only studies finite-dimensional reward functions, but provides explicit convergence rates and approximation bounds when the distribution representations are finite dimensional, unlike [LK24]. Moreover, [LK24] considers a similar algorithm to that discussed in Theorem 4 but for categorical representations, though its convergence is not proved. Furthermore, [LK24] did not prove convergence of any TD-learning algorithms, although they did propose some TD-learning algorithms which achieved interesting results in simulation.

Appendix B Proofs

B.1 Multivariate Distributional Dynamic Programming: Section 3

In this section, we will state some lemmas building up to the proof of Theorem 3. These lemmas generalize corresponding results of [NGV20] that were specific to the scalar reward setting. We begin with a lemma that demonstrates a notion of convexity for the MMD induced by a conditional positive definite kernel.

Lemma 1.

Let and be collections of probability measures indexed by an index set . Suppose . Then for any characteristic kernel , the following holds,

Proof.

It is known from [Sch00] that characteristic kernels generate RKHSs into which probability measures can be embedded. As such, it holds that

where is the norm in the Hilbert space and is the mean embedding of – that is, the unique element of such that for every , and where is the inner product in .

Let and define analogously. We claim that . To see this, let , and observe that

where the third step invokes Fubini’s theorem, and the penultimate steps leverages the linearity of the inner product. Notably, acts as a linear operator on mean embeddings. As a result, we see that

where the penultimate inequality is due to Jensen’s inequality, and the final inequality holds since upper bounds the integrand, and the integral is a monotone operator. ∎

Next, we show how the under the kernels hypothesized in Theorem 3 behave under affine transformations to random variables.

Lemma 2.

Let be a kernel induced by a semimetric of strong negative type defined over a compact subset that is both shift invariant and -homogeneous (cf. Theorem 3). Then for any and ,

Proof.

It is known [GBR+12] that the MMD can be expressed in terms of expected kernel evaluations, according to

where the last step invokes the definition of a kernel induced by a semimetric, and the linearity of expectation. Then, defining as independent samples from and as independent samples from , we have

where the second step is a change of variables, the third step invokes the shift invariance of , and the fourth step invokes the homogeneity of .

Thus, it follows that . ∎

We are now ready to prove the main result of this section.

*

Proof.

We begin by showing that the distributional Bellman operator is contractive in . We have

We apply Lemma 1 with and , yielding

Next, invoking Lemma 2 with the shift-invariance and -homogeneity of , we have

It follows that , since is a fixed point of . Continuing, we see that . Since is a metric on for any characteristic kernel , it follows that approaches at a geometric rate. ∎

B.2 EWP Dynamic Programming: Section 4

In this section, we provide the proof of Theorem 4. To do so, we prove an abstract, general result, regarding any projection mapping that approximates the argmin MMD projection given in Equation (4).

*

Proof.

Let . Then we have

where the first step invokes the identity that is the fixed point of (which is well-defined by Theorem 3), the second step leverages the triangle inequality, and the third step follows by the definition of and the contractivity of established in Theorem 3. Unrolling the recurrence above, we have

As such, as , we have that

proving our claim. ∎

Proposition 4, despite its simplicity, reveals an interesting fact: given a good enough approximate MMD projection in the sense that decays quickly with , the dynamic programming iterates will eventually be contained in a (arbitrarily) small neighborhood of . The next result provides an example consequence of this abstract result, and establishes that is enough for convergence to an arbitrarily small set with projected distributional dynamic programming under the EWP representation.

Finally, we can now prove Theorem 4, which we restate below for convenience. \ewpmmddpconvergence*

Proof.

For each and , denote by the event given by

for a constant to be chosen shortly. Moreover, with , it holds that under , for all . Following the proof of Proposition 4, we have that, conditioned on ,

Now, by [TSM17, Proposition A.1], event holds with probability at least , since each is generated independently by sampling independent draws from the distribution . Therefore, event holds with probability at least . Choosing , we have that, with probability at least ,

As such, there exist universal constants such that

| (14) | ||||

∎

*

Proof.

Proposition 4 shows that projected EWP dynamic programming converges to a set with radius controlled by the quantity that upper bounds the distance between and at the worst state . In the proof of Theorem 4, we saw that with nonzero probability, the randomized projections satisfy . Thus, there exists a projection satisfying this bound. Since the projection is, by definition, the projection with the smallest possible , the claim follows directly by Proposition 4. ∎

B.3 Categorical Dynamic Programming: Section 5

*

Proof.

Firstly, note that is a bounded, finite-dimensional subspace for each . Thus, is compact, and by the continuity of the MMD, the infimum in (7) is attained.

Following the technique of [SZS+08], we establish that can be computed as the solution to a particular quadratic program with convex constraints.

Let denote a matrix where . Since is a positive definite kernel when is characteristic [GBR+12], it follows that is a positive definite matrix. Then, for any , we have

where is independent of , so it does not influence the minimization. Now, since is positive definite (by virtue of being characteristic) and is a closed convex subset of , it is well-known that there is unique optimum, and the infimum above is attained for some . Therefore, is indeed well-defined, and its range is contained in , confirming the first two claims. Finally, since is well-defined and since is nonnegative and separates points, must map elements of to themselves – this is because for the kernels we consider. ∎

*

Proof.

Fix any and denote , where is the RKHS induced by the kernel and denotes the mean embedding of in this RKHS. It is simple to verify that is linear: for any and , for all with we have

which implies that . As a consequence, inherits convexity from .

We claim that is closed as a subset of . Since is an injection [GBR+12], by Lemma 7, since there is a unique minimizing , there is a unique attaining the infimum over . Let . Then there exists such that , and since , it follows that . Since this is true for any , it follows that is open, so is closed.

We will now show that is a nonexpansion in . Let , and denote by the mean embeddings of . We slightly abuse notation and write to denote the mean embedding of . Since is convex, for any and we have

Now, by expanding the squared norms and taking , since is closed we have

where the second inequality follows by applying the same logic to . Choosing and adding these inequalities yields

Expanding, we see that

confirming that is a non-expansion. It follows that

∎

*

Proof.

Combining Theorem 3 and Lemma 1, we see that

for some . Thus, is a contraction on . If is the RKHS induced by , we showed in Lemma 1 that is isometric to a product of closed, convex subsets of . Therefore, by the completeness of , is isometric to a complete subspace, and consequently is a complete subspace under the metric . Invoking the Banach fixed-point theorem, it follows that has a unique fixed point , and geometrically. ∎

B.3.1 Quality of the Categorical Fixed Point

As we saw in our analysis of multivariate DRL with EWP representations, the distance between a distribution and its projection (as a function of ) plays a major role in controlling the approximation error in projected distributional dynamic programming. Before proving the main results of this section, we begin by analyzing this quantity by reducing it to the largest distance between points among certain partitions of the space of returns.

*

Proof.

Our proof proceeds by establishing approximation bounds of Riemann sums to the Bochner integral , similar to [VN02]. Let . Abusing notation to denote by the probability of the th atom of the discrete support under , we have

where for some . Since optimizes the MMD over all probability vectors in , for with for the th element of , we have

It was shown by [SSGF13] that is an isometry from to , where is the RKHS induced by . Thus, we have

Since this is true for any partition , the claim follows by taking the infimum over . ∎

We now move on to the main results.

*

Proof.

The proof begins in a similar manner to [RBD+18, Proposition 3]. Given that is a nonexpansion as shown in Lemma 1, we have

where leverages the fact that is the fixed point of and that is the fixed point of , follows since is a nonexpansion by Lemma 1, and follows by the contractivity of established in Theorem 3. Finally, by Lemma 1, we have

∎

Finally, we explicitly derive a convergence rate for a particular support map under the energy distance kernels.

*

Proof.

We begin bounding . Assume for some . We consider a partition consisting of -dimensional hypercubes with side length . By definition of , it is clear that these hypercubes cover and each contain exactly one support point. Now, for each , we have

where is the vector of all ones, and is any element in . Expanding, we have

Since this bound holds for any , invoking Theorem 1 yields

If instead , we omit all but of the support points, and achieve

Alternatively, we may write

∎

B.4 Categorical TD Learning: Section 6

In this section, we prove results leading up to and including the convergence of the categorical TD-learning algorithm over mass-1 signed measures. First, in Section B.4.1, we show that is in fact a metric on the space of mass-1 signed measures, and establish that the multivariate distributional Bellman operator is contractive under these distribution representations. Subsequently, in Section B.4.2, we analyze the temporal difference learning algorithm leveraging the results from Section B.4.1.

B.4.1 The Signed Measure Relaxation

We begin by establishing that is a metric on for spaces under which is a metric on . \mmdsigned*

Proof.

Naturally, since is given by a norm, it is non-negative, symmetric, and satisfies the triangle inequality. We must show that . Firstly, it is clear that by the positive homogeneity of the norm. It remains to show that .

Let . For the sake of contradiction, assume that . We will show that this implies that for a pair of distinct probability measures, which is a contradiction since with characteristic is known to be a metric on .

By the Hahn decomposition theorem, we may write for non-negative measures . Therefore, for some , we may express

where . Likewise, there exist and probability measure for which . Since by hypothesis, and by linearity of , we have

where and are convex combinations of probability measures, and are therefore probability measures themselves. So, we have that

which contradicts our hypothesis. Therefore, for any , and it follows that is a metric. ∎

Next, we show that the distributional Bellman operator is contractive on the space of mass-1 signed measure return distribution representations.

*

Proof.

Indeed, is, in a sense, a simpler operator than . Since is an affine subspace of , it holds that is simply a Hilbertian projection, which is known to be affine and a nonexpansion [Lax02]. Moreover, since acts identically on as it does on , it immediately follows that is a -contraction on , inheriting the result from Theorem 3. Thus, we have that for any ,

confirming that the projected operator is a contraction. Since is a metric on for each , it follows that is a metric on . The existence and uniqueness of the fixed point follows by the Banach fixed point theorem. ∎

Finally, we show that the fixed point of distributional dynamic programming with signed measure representations is roughly as accurate as .

*

Proof.

Since is a nonexpansion in by Lemma 6, following the procedure of Theorem 1, we have

Note that identifies the closest point (in ) to in and identifies the closest point to in . Since it is clear that , it follows that

The first statement then directly follows since it was shown in Lemma 1 that .

To prove the second statement, we apply the triangle inequality to yield

where the second step leverages the fact that is a nonexpansion in by Lemma 1. Applying the conclusion of the first statement as well as the bound on , we have

∎

B.4.2 Convergence of Categorical TD Learning

Convergence of the proposed categorical TD-learning algorithm will rely on studying the iterates through an isometry to an affine subspace of . This affine subspace is that consisting of the set of state-conditioned “signed probability vectors”. We define as an affine subspace of for any according to

| (15) |

We note that any element of can be encoded in by expresing as the sequence of signed masses associated to each atom of . In Lemma B.4.2, we exhibit an isometry between and .

lemmacatisometry Let be a characteristic kernel. There exists an affine isometric isomorphism between and an affine subspace (cf. (15)).

Proof.

Since is characteristic, it is positive definite [GBR+12]. Thus, for each , define according to

Then each is positive definite since is a positive definite kernel. Let , and define and such that and . Then, we have

Since is positive definite, is a norm on . Therefore, the map given by is a linear isometric isomorphism onto the affine subspace of with entries summing to , which we denote . Moreover, since is a finite dimensional Hilbert space, it is well known that there exists a linear isometric isomorphism with the usual norm. Thus, is a linear isometric isomorphism. Consequently, it follows that given by is a linear isometric isomorphism, where . ∎

lemmadpisometry Define the operator by , where is the isometry of Lemma B.4.2. Let be given by , where are the dynamic programming iterates . Then . Moreover, is contractive whenever is, and in this case, , where is the unique fixed point of .

Proof.

By definition, we have

which proves the first claim. Moreover, for and , we have

where the second step transforms the to since is an isometry between those metric spaces. Therefore, if is contractive with contraction factor , we have

so that has the same contraction factor as . Consequently, by the Banach fixed point theorem, has a unique fixed point whenever is contractive, and at the same rate as . ∎

The main theorem in this section is that temporal difference learning on the finite dimensional representations converges.

[Convergence of categorical temporal difference learning]propositioncattdisomconvergence Let be given by , and let be a kernel satisfying the conditions of Theorem 3. Suppose that, for each , the states and step sizes satisfy the Robbins-Munro conditions

Then, with probability 1, , where is the fixed point of .

The proof of this result as a natural extension of the convergence analysis of Categorical TD Learning given in [BDR23] to the multivariate return setting under the supremal MMD metric. The analysis hinges on the following general lemma.

Lemma 3 ([BDR23, Theorem 6.9]).

Let be a contractive operator with respect to with fixed point , and let be a filtered probability space. Define a map such that

| (16) |

For a stochastic process adapted to with , consider a sequence given by

| (17) |

where is adapted to and satisfies the Robbins-Munro conditions for each ,

Finally, for the processes where , assume the following moment condition holds,

| (18) |

for finite universal constants . Then, with probability 1, .

The operator of Lemma 3 will be substituted with , governing the dynamics of the encoded iterates of the multi-return distribution. The stochastic operator will be substituted with the corresponding stochastic TD operator for , given by

| (19) |

This corresponds to applying a Bellman backup from a stochastic reward and next state , followed by projecting back onto , and applying the isometry back to .

Proof of Proposition B.4.2.

Let . Note that for any , can be isometrically embedded into by zero-padding. Thus, can be isometrically embedded into , so without loss of generality, we will assume that .

Define the map according to

| (20) |

Then, defining with as in (11), we have

Note that, since is a Hilbert projection onto an affine subspace, it is affine [Lax02]. Consequently, is an unbiased estimator of the operator in the following sense,

where the first step invokes the linearity of , the second step invokes the linearity of the isometry established in Lemma B.4.2 and the third step is due to the definition of . As a result, we see that the conditions (16) and (17) of Lemma 3 are satisfied by , the iterates , and the step sizes . Moreover, for defined by

*

Proof.

By Proposition B.4.2, the sequence with converges to a unique fixed point with probability 1. Note that

Therefore, is a fixed point of . Since it was shown in Lemma 6 that has a unique fixed point, it follows that . Since is an isometry, with probability 1, so indeed with probability 1. ∎

Lemma 4.

Proof.

Since is an isometry, we have that

where is the RKHS induced by the kernel . Moreover, since is a nonexpansion in as argued in Lemma 6, we have that

Proceeding, we will bound the terms . To bound , we simply have

where we invoke the contraction of in from Theorem 3. Note that , so it follows that for some constant since the kernel is bounded in compact domains. Expanding the norm of the difference above yields

for a finite constant , again invoking the isometry in the last step.

Our bound for is similar. Choose any . We consider the operator given by

This operator is a contraction in , and correspondingly has a fixed point . To see this, we note that is simply a special case of for the case , so the contractivity and existence of the fixed point are inherited from Theorem 3. Proceeding in a manner similar to the bound on , we have

where the final step mirrors the bound on . Therefore, we have shown that

completing the proof. ∎

Appendix C Memory Efficiency of Randomized EWP Dynamic Programming

In Section 4, we argued for the necessity of considering a projection operator in EWP dynamic programming. While we provided a randomized projection, Theorem 4 requires that we apply only a finite amount of DP iterations. Thus, one might ask if, given that we apply only finitely many iterations, the naive unprojected EWP dynamic programming can produce accurate enough approximations of without costing too much in memory.

In this section, we demonstrate that, in fact, the algorithm described in Theorem 4 can approximate to any desired accuracy with many fewer particles. Suppose our goal is to derive some such that

for some . We will derive bounds on the number of required particles to attain such an approximation with unprojected EWP dynamic programming (denoting the number of particles ) as well as with our algorithm described in Theorem 4 (denoting the number of particles . In both cases, we will compute iterates starting with some with . For simplicity, we will consider the energy distance kernel with .

The remainder of this section will show that the dependence of the number of atoms on both and is substantially worse in the unprojected case (that is, for large state spaces or low error tolerance). We demonstrate this with concrete lower bounds on and upper bounds on below; note that these bounds are not optimized for tightness or generality, and are instead aimed to provide straightforward evidence of our core points above.

We will begin by bounding . In the best case, is supported on particle for each . If any state can be reached from any other state in the MDP with non-zero probability, then applying the distributional Bellman operator to will result in having support on atoms at each state (due to the mixture over successor states in the Bellman backup). Consequently, the iterate will be supported on atoms. Since by Theorem 3, we require

to ensure that . Thus, we have

On the other hand, the following lemma bounds ; we prove the lemma at the end of this section.

lemmamprojbound Let denote the output of the projected EWP algorithm described by Theorem 4 with particles. Then under the assumptions of Theorem 4 and with the energy distance kernel with , is achievable with

| (21) |

For any fixed MDP with and , we have that

since and does not depend on . Meanwhile, we have by Lemma C, indicating a much more graceful dependence on relative to the unprojected algorithm.

On the other hand, for any fixed tolerance , we immediately have

In the worst case, we may have (any larger will induce linearly dependent cumulants). Thus, we have

so the projected algorithm scales much more gracefully with as well.

Proof of Lemma C

Finally, we prove Lemma C, which determines the number of atoms required to achieve an -accurate return distribution estimate with the algorithm of Theorem 4.

*

Proof.

Note that, by Theorem 4, increasing can only decrease the error as long as . Therefore, as shown in (14) in the proof of Theorem 4, there exists a universal constant such that

| (22) |

Now, we write , and , yielding

Then, after isolating the logarithmic term and exponentiating, we see that

We now rearrange this expression and invoke the identity where is a Lambert -function [CGH+96]:

There are two branches of the Lambert -function on the reals, namely and . These two branches satisfy when and when . In our case, we know that is negative, and it is known [CGH+96] that when . Consequently, when , we have , and substituting , we have

| (23) |

On the other hand, when , we have

The upper bound given above will generally not be an integer. Howevever, increasing can only improve the approximation error, as shown in Theorem 4 since decreases monotonically when . So, we can round up to the nearest integer (or round it down when ) incurring a penalty of at most one atom. It follows that the randomized EWP dynamic programming algorithm of Theorem 4 run with given by (21) produces a return distribution function for which . ∎

Appendix D Nonlinearity of the Categorical MMD Projection

In Section 6, we noted that the categorical projection is non-affine. Here, we provide an explicit example certifying this phenomenon.

We consider a single-state MDP, since the nonlinearity issue is independent of the cardinality of the state space (the projection is applied to each state-conditioned distribution independently). We write , and consider the kernel induced by —this resulting MMD is known as the energy distance, which is what we used in our experiments. We consider two distributions, and .

| Support point | ||

|---|---|---|

We consider and compare with , and we note that ; confirming that is not an affine map. The results are tabulated in Table 1, with bolded entries depicting the atoms with non-negligible differences in probability under .

Appendix E Experiment Details

TD-learning experiments were conducted on a NVidia A100 80G GPU to parallelize experiments. Methods were implemented in Jax [BFH+18], particularly with the help of JaxOpt [BBC+21] for vectorizing QP solutions — this was helpful for computing the categorical projections discussed in this work. SGD was used for optimization, using an annealed learning rate schedule with , satisfying the conditions of Lemma 3. Experiments with constant learning rates yielded similar results, but were less stable—this validates that the choice of learning rate schedule did not impede learning.

The dynamic programming experiments were implemented in the Julia programming language [BEKS17].

In all experiments, we used the kernel induced by with reference point for MMD optimization—this corresponds to the energy distance, and satisfies the requisite assumptions for convergent multivariate distributional dynamic programming outlined in Theorem 3.

Appendix F Neural Multivariate Distributional TD-Learning

For the sake of illustration, in this section, we demonstrate that the signed categorical TD learning algorithm presented in Section 6 can be scaled to continuous state spaces with neural networks. We will consider an environment with visual (pixel) observations of a car in a parking lot, an example observation is shown in Figure 5.

Here, we consider 2-dimensional cumulants, where the first dimension tracks the coordinate of the car, and the second dimension is an indicator that is if and only if the car is parked in the parking spot. We learn a multivariate return distribution function with transitions sampled from trajectories that navigate around the obstacle to the parking spot. Notably, the successor features (expectation of multivariate return distribution) will be zero in the first dimension, since the set of trajectories is horizontally symmetric. Thus, from the successor features alone, one cannot distinguish the observed policy from one that traverses straight through the obstacle!

Fortunately, when modeling a distribution over multivariate returns, we should see that the support of the multivariate return distribution does not include points with vanishing first dimension.

To learn the multivariate return distribution function from images, we use a convolutional neural architecture as shown in Figure 6.

Notably, we simply use convolutional networks to model the signed masses for the fixed atoms of the categorical representation. The projection is computed by a QP solver as discussed in Section 5, and is applied only to the target distributions (thus we do not backpropagate through it).

We compared the multi-return distributions learned by our signed categorical TD method with that of [ZCZ+21]. Our results are shown in Figure 7. We see that both TD-learning methods accurately estimate the distribution over multivariate returns, indicating that no multivariate return will have a vanishing lateral component. Quantitatively, we see that the EWP algorithm appears to be stuck in a local optimum, with some particles lying in regions of low probability mass.

Moreover, on the right side of Figure 7, we show predicted return distributions for two randomly sampled reward vectors, and quantitatively evaluate the two methods. The leftmost reward vector incentivizes the agent to take paths conservatively avoiding the obstacle on the left. The rightmost reward vector incentivizes the agent to get to the parking spot as quickly as possible. We see that the EWP TD learning algorithm of [ZCZ+21] more accurately estimates the return distribution function corresponding to the latter reward vector, while our signed categorical TD algorithm more accurately estimates the return distribution function corresponding to the former reward vector. In both cases, both methods produce accurate estimations.