ForestProtector: An IoT Architecture Integrating Machine Vision and Deep Reinforcement Learning for Efficient Wildfire Monitoring

This work was supported by a grant from Google AI, under the program TensorFlow Faculty Award 2021.

Abstract

Early detection of forest fires is crucial to minimizing the environmental and socioeconomic damage they cause. Indeed, a fire’s duration directly correlates with the difficulty and cost of extinguishing it. For instance, a fire burning for 1 minute might require 1 liter of water to extinguish, while a 2-minute fire could demand 100 liters, and a 10-minute fire might necessitate 1,000 liters. On the other hand, existing fire detection systems based on novel technologies (e.g., remote sensing, PTZ cameras, UAVs) are often expensive and require human intervention, making continuous monitoring of large areas impractical. To address this challenge, this work proposes a low-cost forest fire detection system that utilizes a central gateway device with computer vision capabilities to monitor a 360° field of view for smoke at long distances. A deep reinforcement learning agent enhances surveillance by dynamically controlling the camera’s orientation, leveraging real-time sensor data (smoke levels, ambient temperature, and humidity) from distributed IoT devices. This approach enables automated wildfire monitoring across expansive areas while reducing false positives. The full implementation, datasets, and trained models are available at https://github.com/EdwinTSalcedo/ForestProtector.

Index Terms:

Wildfire detection, real-time monitoring, deep reinforcement learning, deep learning, edge computing

I Introduction

In recent years, a surge in forest fires has plagued the globe, fueled by a complex interplay of factors including climate change, deforestation, land use changes, human activities, and prolonged droughts. This escalating crisis has had a particularly devastating impact on Bolivia, where wildfires in 2024 alone have scorched over 10 million hectares (24.7 million acres) of land [1]. This staggering figure surpasses previous years, marking a significant environmental crisis for the country [2]. While developed nations utilize sophisticated early wildfire warning systems like ALERTCalifornia [3], NASA’s FIRMS program [4], and the European Forest Fire Information System [5], their replication in developing countries remains a challenge due to financial constraints.

The longer a forest fire lasts, the more difficult and costly it becomes to extinguish. Therefore, early detection of forest fires is paramount in minimizing the environmental and socioeconomic damage they inflict, which in turn requires a low rate of false alarms. Fortunately, recent advances in embedded systems, artificial intelligence (AI), and the Internet of Things (IoT) are significantly enhancing the automation of wildfire monitoring and management. Novel real-time fire detection systems utilize a combination of sensors, microphones, and cameras to identify early fire indicators like smoke, heat, sound, and gas emissions [6, 7, 8, 9].

While current technologies have significantly improved wildfire monitoring, there is a critical need for more accessible and efficient early detection methods. Consequently, this project explores a novel approach that integrates deep reinforcement learning (DRL), computer vision, and IoT to reduce wildfire time-to-detection. Our proposed system gathers real-time sensor data (temperature, humidity, and smoke) from multiple locations. This data informs a DRL agent located within a central gateway. The agent then prioritizes which areas to survey, and a 3D convolutional neural network (3DCNN) verifies the presence of smoke at long distances. This monitoring strategy, supported by IoT and a Low-Power Wide-Area Network (LPWAN), offers an innovative approach for enhancing wildfire detection over vast areas and reducing false positives.

II Background

Conventional fire detection methods, often relying on human observation from watchtowers or on old tools such as the Osborne fire finder [10], are inherently inefficient and prone to human error. Over time, different wildfire detection technologies have emerged, each with its own advantages and disadvantages. These solutions can be clustered into three main categories: remote sensing-based, optical sensor-based, and IoT sensor-based. Remote sensing (e.g., from Low Earth Orbit (LEO) satellites) is particularly valuable for monitoring large forest areas at a continental or global scale [11]. Although higher resolution and frequency in satellite imagery generally translate to increased costs, ongoing advancements in satellite technology and sensor development are improving revisit times and accessibility for wildfire detection [12]. This trend points towards greater accessibility and wider use of satellite-based fire detection in developing countries in the years to come.

Unlike the optical sensors used in remote sensing, ground-based optical sensors are widely available and have been adopted for a wide range of applications due to their affordability and ease of use. These sensors can be deployed in diverse locations, including ground level, watchtowers, poles, and even unmanned aerial vehicles [13, 14]. Optical sensors fall into two main categories, RGB cameras and infrared (IR) cameras, with IR encompassing short-wave, middle-wave, and long-wave [15]. All of them facilitate the detection of fire traits, such as smoke or flames, during both day and night. Furthermore, 360° cameras (also known as PTZ cameras) are frequently used [16], despite their currently higher cost.

Wireless sensor networks (WSNs), which can be integrated into the Internet of Things (IoT), can collect data on various factors, such as temperature, humidity, pressure, and gas levels (e.g., carbon monoxide and carbon dioxide). Indeed, some investigations have used this approach to continuously calculate fire risk [6, 7]. However, as with optical-based sensing, WSNs face limitations in covering large areas due to the need for multiple nodes to achieve comprehensive monitoring [17]. On the other hand, WSNs can be combined with other sensing modalities for prompt fire detection. For example, Peruzzi et al. [8] embedded convolutional neural networks (CNNs) on low-power IoT devices for audio and image data classification in forest areas. Combining the two CNNs yielded a higher accuracy (96.15%) when classifying scenes as normal or fire.

III Proposed System

The proposed system architecture, shown in Figure 1, consists of two main components: IoT sensor nodes and a central gateway. The sensor nodes, deployed near forests, monitor environmental conditions using various sensors, including those for temperature, humidity, barometric pressure, smoke, and water. The central gateway, based on an NVIDIA Jetson Nano board, collects data from these nodes via the LoRa protocol. The gateway then utilizes a DRL agent to control the gateway camera’s perspective, focusing on potential nearby campfires, and employs a computer vision algorithm to verify the presence of smoke in the high-risk region. These components are further described in the following sections.

| Sensor | Measurement | Range | Resolution |

| BME280 | Temperature | -40 °C to 85 °C | 0.01 °C |

| | Barometric Pressure | 300 hPa to 1100 hPa | 0.18 Pa |

| | Humidity | 0% to 100% RH | 0.008% RH |

| MQ2 | Smoke | 0 to 4095 | 1 |

| T1592 | Water | 0 to 4095 | 1 |

III-A IoT Sensor Nodes

To collect environmental data, we developed three IoT sensor nodes. Each node consists of a BME280 sensor, an MQ2 sensor, a TTGO LoRa32 module, a T1592 sensor, and a power bank (see Table I for sensor specifications). The BME280 sensor measures temperature, humidity, and pressure, while the MQ2 sensor detects smoke particles in the air. T1592 sensors were included to detect precipitation and halt data collection during rain. The TTGO LoRa32 modules continuously transmit sensor data to the central gateway via the LoRaWAN protocol. To protect the IoT nodes in outdoor conditions (e.g., rain and solar radiation), we designed a custom case in SolidWorks and 3D-printed it in PETG (Polyethylene Terephthalate Glycol). The design allows the node to be mounted on top of a 4-inch diameter PVC tube installed at ground level.

III-B Central Gateway and Cloud Server

The gateway uses a Lilygo T3S3 module to gather data from the IoT devices via the LoRaWAN protocol. Within the gateway, this data is transmitted in JSON format to the Jetson Nano, which acts as the central processing unit. An RL agent running on the Jetson Nano, trained on the IoT sensor data provided by the end devices, sets the horizontal orientation of the Raspberry Pi Camera Module v2 (ranging from 0° to 360°). Once positioned, the camera, coupled with a computer vision system, detects smoke, an early indicator of wildfire. If smoke is detected, the gateway sends an alert to a cloud server, which then relays the alert to the designated users.
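As an illustration of the JSON hand-off between the LoRa receiver and the Jetson Nano described above, the following minimal sketch parses one reading into a typed record. The field names (`node_id`, `smoke`, `temperature_c`, `humidity_rh`) and the sample payload are assumptions made for this example; the actual message schema is not specified in this paper.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorReading:
    """One reading forwarded by the LoRa receiver (field names are hypothetical)."""
    node_id: str
    smoke: int            # raw MQ2 value, 0 to 4095 (Table I)
    temperature_c: float  # BME280 temperature in degrees Celsius
    humidity_rh: float    # BME280 relative humidity in percent


def parse_reading(payload: str) -> SensorReading:
    """Decode a JSON string and check the ranges listed in Table I."""
    data = json.loads(payload)
    reading = SensorReading(
        node_id=str(data["node_id"]),
        smoke=int(data["smoke"]),
        temperature_c=float(data["temperature_c"]),
        humidity_rh=float(data["humidity_rh"]),
    )
    if not 0 <= reading.smoke <= 4095:
        raise ValueError(f"smoke value out of range: {reading.smoke}")
    if not 0.0 <= reading.humidity_rh <= 100.0:
        raise ValueError(f"humidity out of range: {reading.humidity_rh}")
    return reading


# Hypothetical payload, as it might arrive from node A.
sample = '{"node_id": "A", "smoke": 812, "temperature_c": 27.4, "humidity_rh": 31.0}'
reading = parse_reading(sample)
print(reading)
```

Validating ranges at the gateway boundary is one possible design choice: it keeps malformed packets from reaching the agent's state vector.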

Additionally, all sensor data are transmitted to the cloud, where they are received by an Express.js backend and stored in a MongoDB database for analysis and monitoring. A user-friendly React.js frontend deployed on Vercel provides real-time data visualizations and creates push notifications using a socket connection. Moreover, the backend, deployed on an AWS EC2 instance, includes an alert system that sends WhatsApp notifications to a designated phone number using the WhatsAppWeb.js API and displays alerts on the dashboard. This system architecture is depicted in Figure 2.

IV Deep Reinforcement Learning Model

IV-A Model design and training

The agent was designed using a Deep Q-Network (DQN) architecture, suitable for environments with discrete action spaces and complex observational patterns. The DQN model’s objective was to learn a policy that maximizes the cumulative reward by selecting the most relevant sector for monitoring based on indicators of potential wildfire risk. The resulting network takes the environment’s state as input and passes it through three fully connected layers: two hidden layers of 24 neurons each with ReLU activations, followed by an output layer that produces the Q-value of each action.
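The layer structure described above can be sketched as a plain forward pass. This is only an illustration under stated assumptions: the state size (three nodes with three features each), the number of actions (one sector per node), and the random, untrained weights are all hypothetical, and a real implementation would normally use a deep learning framework.

```python
import random

STATE_SIZE = 9    # assumption: 3 nodes x (smoke, temperature flag, humidity flag)
HIDDEN_SIZE = 24  # two hidden layers of 24 neurons, as described in the text
NUM_ACTIONS = 3   # assumption: one action (camera sector) per IoT node


def init_layer(n_in, n_out, rng):
    """Return (weights, biases) with small random weights and zero biases."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(x, layer, relu):
    """Apply one fully connected layer, optionally followed by ReLU."""
    weights, biases = layer
    out = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, o) for o in out] if relu else out


def q_values(state, layers):
    """Forward pass: two ReLU hidden layers, then a linear Q-value output."""
    hidden = dense(state, layers[0], relu=True)
    hidden = dense(hidden, layers[1], relu=True)
    return dense(hidden, layers[2], relu=False)


rng = random.Random(0)
layers = [
    init_layer(STATE_SIZE, HIDDEN_SIZE, rng),
    init_layer(HIDDEN_SIZE, HIDDEN_SIZE, rng),
    init_layer(HIDDEN_SIZE, NUM_ACTIONS, rng),
]
state = [0.2, 1.0, 0.0, 0.1, 0.0, 0.0, 0.8, 1.0, 1.0]  # made-up observation
q = q_values(state, layers)
greedy_action = max(range(NUM_ACTIONS), key=lambda a: q[a])
print(q, greedy_action)
```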

The Q-value function was updated using the Bellman equation, which models the expected cumulative reward for taking action $a$ in state $s$, followed by an optimal policy. The update rule is given by:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right] \tag{1}$$

where $Q(s, a)$ is the estimated value of taking action $a$ in state $s$; $\alpha$ is the learning rate, which controls the weight given to new information during the update; $r$ is the reward received after taking action $a$ in state $s$; $\gamma$ is the discount factor, which balances the importance of immediate versus future rewards; and $\max_{a'} Q(s', a')$ represents the maximum expected value of future rewards for the next state $s'$, considering all possible actions $a'$.
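To make the update rule concrete, the sketch below applies one temporal-difference step to a single Q-value. The numeric values are illustrative only and are not the hyperparameters used in this work.

```python
def q_update(q_sa, reward, max_next_q, alpha, gamma):
    """Return the updated Q(s, a) after one temporal-difference step."""
    td_target = reward + gamma * max_next_q  # reward plus discounted best next value
    td_error = td_target - q_sa              # gap between target and current estimate
    return q_sa + alpha * td_error           # move the estimate a fraction alpha


# Illustrative numbers: 0.5 + 0.1 * (1.0 + 0.9 * 2.0 - 0.5)
new_q = q_update(q_sa=0.5, reward=1.0, max_next_q=2.0, alpha=0.1, gamma=0.9)
print(round(new_q, 4))
```

With these inputs the estimate moves from 0.5 to 0.73, that is, one tenth of the way towards the target of 2.8.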

IV-B Decision-making process for detecting wildfires

Each IoT sensor node provides observations on smoke levels, temperature, barometric pressure, humidity, and water presence; however, only temperature, humidity, and smoke levels were considered for the model. The model computes a weighted sum of these observations to prioritize the sector of the IoT node with the strongest indicators of potential wildfire presence. The signal strength $S_i$ for each IoT node $i$ is calculated as follows:

$$S_i = w_s \, s_i + w_T \, T_i + w_H \, H_i \tag{2}$$

where $w_s$, $w_T$, and $w_H$ are the respective weights assigned to smoke, temperature, and humidity. $T_i$ and $H_i$ are binary values (1 or 0) indicating if thresholds for temperature and humidity have been surpassed. $s_i$ represents the smoke level, normalized to a scale from 0 to 1 by dividing by 100.
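The weighted sum described above can be sketched as follows. The weights, the two thresholds, and the sample observations are assumptions chosen purely for illustration, as is the reading of a "surpassed" humidity threshold as humidity falling below a dryness limit.

```python
from dataclasses import dataclass

# Illustrative values only: these weights and thresholds are assumptions.
W_SMOKE, W_TEMP, W_HUM = 0.5, 0.3, 0.2
TEMP_THRESHOLD_C = 35.0     # hypothetical "hot" threshold
HUMIDITY_THRESHOLD = 30.0   # hypothetical "dry" threshold, in percent RH


@dataclass
class NodeObservation:
    node_id: str
    smoke_level: float    # smoke reading on a 0 to 100 scale
    temperature_c: float
    humidity_rh: float


def signal_strength(obs):
    """Weighted sum of normalized smoke and binary temperature/humidity flags."""
    smoke = obs.smoke_level / 100.0
    temp_flag = 1 if obs.temperature_c > TEMP_THRESHOLD_C else 0
    hum_flag = 1 if obs.humidity_rh < HUMIDITY_THRESHOLD else 0
    return W_SMOKE * smoke + W_TEMP * temp_flag + W_HUM * hum_flag


observations = [
    NodeObservation("A", smoke_level=10, temperature_c=25.0, humidity_rh=50.0),
    NodeObservation("B", smoke_level=60, temperature_c=38.0, humidity_rh=20.0),
    NodeObservation("C", smoke_level=20, temperature_c=36.0, humidity_rh=45.0),
]
strongest = max(observations, key=signal_strength)
print(strongest.node_id, round(signal_strength(strongest), 3))
```

In this made-up example node B has the strongest signal (0.8), so its sector is the one a correct action would point the camera at.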

Based on the signal calculated for each IoT node $i$, the agent selects the action $a^*$ with the highest Q-value $Q(s, a)$ in the current state $s$, that is, the action that maximizes the expected reward (Equation 3). This approach allows the agent to focus on IoT nodes with the strongest evidence of wildfire risk, enhancing the model’s responsiveness to critical conditions.

$$a^* = \arg\max_{a} Q(s, a) \tag{3}$$

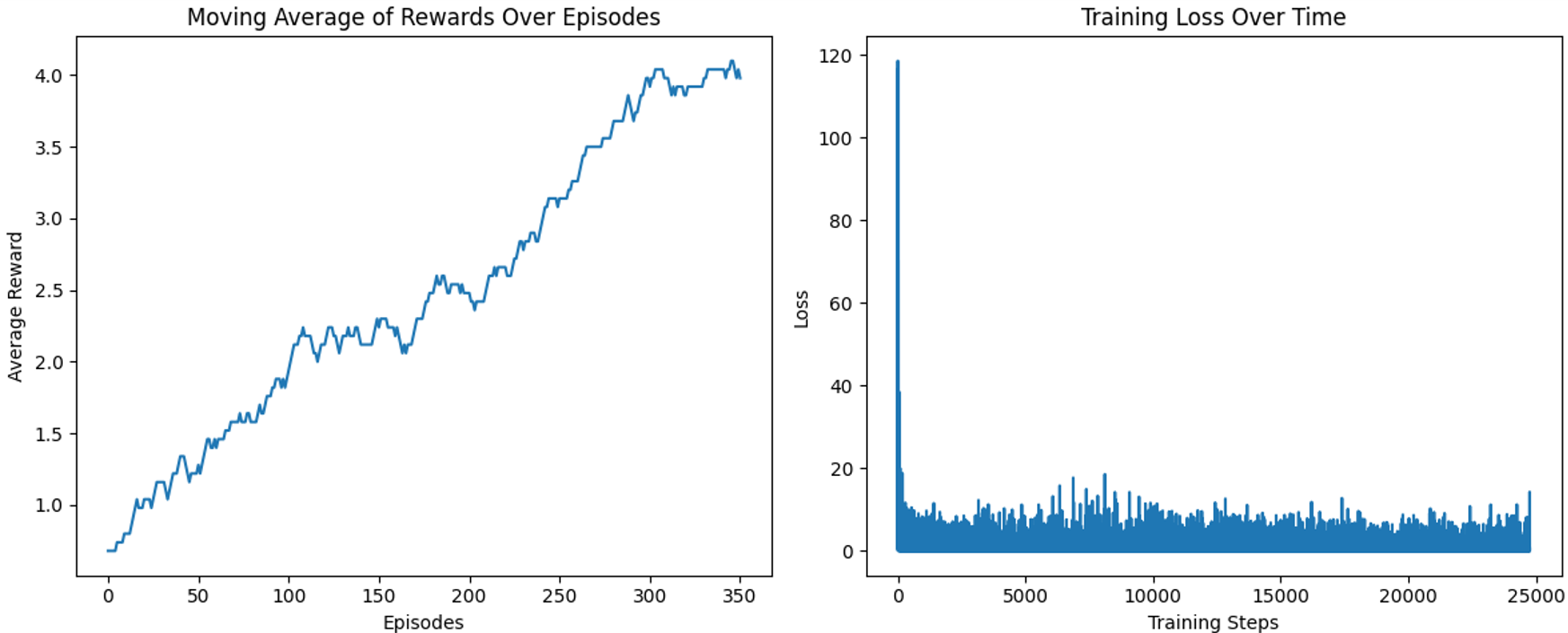

The performance of the DQN agent was evaluated through two primary metrics: the moving average of rewards over episodes and the training loss over time. Together, these metrics validate the DQN agent’s ability to effectively learn and optimize its policy within the given environment. The moving average, calculated over a 100-episode window, provides a smooth indicator of the agent’s learning progress. Equation 4 defines the moving average $\bar{R}_e$ of rewards at a given episode $e$ over a window of $N$ episodes, where $R_k$ denotes the total reward received in episode $k$.

$$\bar{R}_e = \frac{1}{N} \sum_{k=e-N+1}^{e} R_k \tag{4}$$
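A windowed moving average of episode rewards can be computed as in the sketch below. How the first episodes are handled, when fewer rewards than the window size exist, is not stated in the text; averaging over the rewards seen so far is an assumption of this example.

```python
def moving_average(rewards, window=100):
    """Mean of the last `window` episode rewards at each episode.

    For early episodes with fewer than `window` rewards, the average is
    taken over the rewards seen so far (an assumption of this sketch).
    """
    averages = []
    running_sum = 0.0
    for episode, reward in enumerate(rewards):
        running_sum += reward
        if episode >= window:
            running_sum -= rewards[episode - window]  # drop the reward leaving the window
        averages.append(running_sum / min(episode + 1, window))
    return averages


print(moving_average([1, 2, 3, 4], window=2))  # [1.0, 1.5, 2.5, 3.5]
```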

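As for the Q-network, the training loss provides insights into the convergence. It is computed as the mean squared error (MSE) between the predicted Q-value and the target Q-value for each state-action pair in a batch. Given a mini-batch of size $B$, the MSE loss can be defined as:

$$\mathcal{L} = \frac{1}{B} \sum_{j=1}^{B} \left( y_j - Q(s_j, a_j) \right)^2 \tag{5}$$

where $Q(s_j, a_j)$ is the predicted Q-value of the $j$-th transition and $y_j = r_j + \gamma \max_{a'} Q(s'_j, a')$ is its target Q-value.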
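The loss over a mini-batch can be sketched as below. The batch values are made up, and treating terminal transitions as having a target equal to the reward alone is a common DQN convention assumed here rather than something stated in the text.

```python
def dqn_targets(rewards, next_q_values, gamma, dones):
    """Target per transition: r + gamma * max_a' Q(s', a'), or r if terminal."""
    return [
        r if done else r + gamma * max(next_q)
        for r, next_q, done in zip(rewards, next_q_values, dones)
    ]


def mse_loss(predicted, targets):
    """Mean squared error between predicted and target Q-values."""
    return sum((t - p) ** 2 for p, t in zip(predicted, targets)) / len(predicted)


# Made-up mini-batch of two transitions.
targets = dqn_targets(
    rewards=[1.0, 0.0],
    next_q_values=[[0.5, 1.0, 0.0], [2.0, 1.0, 0.0]],
    gamma=0.5,
    dones=[False, True],
)
loss = mse_loss(predicted=[1.0, 1.0], targets=targets)
print(targets, loss)  # [1.5, 0.0] 0.625
```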

V Wildfire Detection Using Computer Vision

The proposed computer vision system utilizes a Raspberry Pi Camera Module v2.1 installed in the gateway, enabling the collection of RGB images with a resolution of 1920 × 1080 pixels at 30 frames per second. During the day, the system prioritizes smoke detection over fire detection, as smoke is a more visible wildfire indicator from long distances. Inspired by our successful results classifying video on embedded devices (Jetson Nano development boards) [18], we implemented 3DCNN models for smoke recognition. These models were trained using a combination of real and synthetic videos of smoke plumes and wildfires captured at various distances.

For nighttime fire detection, the system relies on a modified version of the algorithm proposed by Günay et al. [19]. Our implementation prioritizes bright region detection (BRD), average magnitude difference function (AMDF), background subtraction, and color thresholding. We collected 50 night videos with fire and 50 night videos without fire. Through experimentation, we identified that 180 and 0.2 were suitable threshold values for the BRD and AMDF components, respectively. In the following sections, we describe the development of the 3DCNN-based model for daytime wildfire detection.
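The two thresholded components mentioned above can be illustrated with generic definitions. This is not the modified algorithm itself: it only shows a brightness mask and the textbook AMDF of a one-dimensional signal. The 8-bit intensity scale, the strict "greater than" comparison, and the sample inputs are assumptions, and how the 0.2 threshold is applied to the AMDF output is not reproduced here.

```python
BRD_THRESHOLD = 180   # brightness threshold reported in the text (8-bit scale assumed)
AMDF_THRESHOLD = 0.2  # AMDF threshold reported in the text (signal scale assumed)


def bright_region_mask(gray_frame, threshold=BRD_THRESHOLD):
    """Mark pixels whose intensity exceeds the threshold with 1, others with 0."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in gray_frame]


def amdf(signal, lag):
    """Average magnitude difference function of a 1-D signal at a given lag."""
    n = len(signal) - lag
    if n <= 0:
        raise ValueError("lag must be smaller than the signal length")
    return sum(abs(signal[i] - signal[i + lag]) for i in range(n)) / n


frame = [[100, 200], [180, 181]]          # tiny made-up grayscale frame
print(bright_region_mask(frame))          # [[0, 1], [0, 1]]

flicker = [0, 1, 0, 1]                    # made-up periodic intensity signal
print(amdf(flicker, 1), amdf(flicker, 2)) # 1.0 0.0
```

The AMDF drops to zero at lags matching the period of a signal, which is why it is a natural tool for characterizing periodic or flickering intensity patterns.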

V-A Dataset preparation

To collect the dataset detailed in Table II, we used video editing tools, scraped videos from social media websites, and used generative AI tools. First, we used After Effects to generate smoke effects with varying wind and orientation, which were then fused with videos of forests recorded in La Paz, Bolivia. We also scraped videos from social media using search terms like “wildfire,” “burning forest,” and “wild forest.” Finally, we used the AI tool Haiper [20] to generate wildfire videos from static images.

We used data augmentation to create the final dataset, applying the following transformations: Horizontal Flip, Random Brightness and Contrast, Gaussian Blur, and Gaussian Noise. The transformations were applied sequentially with the Albumentations library, each with a 50% probability. This process resulted in a final dataset of 4,000 videos. For experimentation purposes, all videos were converted to grayscale, cropped to a 1:1 aspect ratio, and resized to 240x240 pixels.
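The sampling and cropping logic described above can be sketched without any image library. The transform labels below are plain strings rather than library calls, and the centered placement of the 1:1 crop is an assumption, since the text does not say where the crop is taken.

```python
import random

# Labels for the four augmentations listed in the text (not library class names).
TRANSFORMS = ["horizontal_flip", "brightness_contrast", "gaussian_blur", "gaussian_noise"]


def sample_transforms(rng, p=0.5):
    """Pick, in order, the transforms applied to one video, each with probability p."""
    return [name for name in TRANSFORMS if rng.random() < p]


def center_square_box(width, height):
    """Return (left, top, right, bottom) of a centered 1:1 crop (assumed placement)."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return left, top, left + side, top + side


rng = random.Random(42)
print(sample_transforms(rng))
print(center_square_box(1920, 1080))  # (420, 0, 1500, 1080)
```

For video data, the same sampled set of transforms would need to be applied to every frame of a clip so that the augmentation stays temporally consistent.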

| Class | Scraped Videos | AI-generated Videos | Videos Generated with After Effects | Total | Aug. |

| With Smoke | 108 | 443 | 184 | 735 | 2,000 |

| Without Smoke | 663 | 0 | 0 | 663 | 2,000 |

| Total | 771 | 443 | 184 | 1,398 | 4,000 |

V-B 3DCNN Architecture

This study developed and evaluated five distinct 3DCNN architectures (S1 to S5) to optimize smoke detection accuracy in video sequences. The first model, S1, used Conv3D layers with MaxPooling3D to capture spatial and temporal features, followed by dense layers for classification. S2 built upon S1 by replacing MaxPooling3D with AveragePooling3D, which smoothed feature extraction and improved accuracy. S3, which maintained the structure of S2 but used grayscale data, matched or exceeded S1 and S2 on every reported metric while reducing computational load. The conversion to grayscale was performed after observing the superior performance of models trained on grayscale data. S4 extended S2 with BatchNormalization to stabilize training and TimeDistributed layers to prepare data for bidirectional LSTM layers, further enhancing temporal feature learning. Finally, S5 replicated S4 but utilized grayscale data. Figure 3 illustrates the architecture of S5, the best-performing model.

VI Experimental Results and Discussion

VI-A Deep Reinforcement Learning Model

We trained the model using a learning rate $\alpha$, a discount factor $\gamma$ (to balance the importance of immediate and future rewards), and a reward scheme that assigns one value for correctly identifying the IoT node with the strongest signal and another otherwise. To enhance stability during training, an experience replay buffer of size 100,000 was employed to store past transitions. At each step, a mini-batch of 64 transitions was randomly sampled. The epsilon-greedy policy enabled a balance between exploration and exploitation, with $\epsilon$ initialized at 1.0 and decaying at a rate of 0.995 per episode, down to a minimum of 0.01. As illustrated in Figure 4, the model demonstrated significant improvement in its decision-making capability over the episodes. Additionally, the sensor data were weighted as follows: the smoke weight received the highest priority, as smoke provides a continuous measure strongly correlated with wildfire risk; the temperature weight came next, since the temperature sensor contributes significantly when combined with humidity; and the humidity sensor received the lowest individual weight.
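The exploration schedule and replay buffer described above can be sketched with the standard library. The buffer size, batch size, and epsilon settings are the ones stated in the text; the transition tuple layout and the helper names are assumptions made for illustration.

```python
import math
import random
from collections import deque

BUFFER_SIZE = 100_000
BATCH_SIZE = 64
EPS_START, EPS_DECAY, EPS_MIN = 1.0, 0.995, 0.01


def epsilon_at(episode):
    """Exploration rate after `episode` multiplicative decays, floored at EPS_MIN."""
    return max(EPS_MIN, EPS_START * EPS_DECAY ** episode)


# Number of per-episode decays needed before epsilon reaches its floor.
episodes_to_floor = math.ceil(math.log(EPS_MIN / EPS_START) / math.log(EPS_DECAY))

# A bounded deque discards the oldest transition once the buffer is full.
replay_buffer = deque(maxlen=BUFFER_SIZE)


def remember(state, action, reward, next_state):
    """Store one transition (tuple layout is a hypothetical choice)."""
    replay_buffer.append((state, action, reward, next_state))


def sample_batch(rng):
    """Draw a uniform random mini-batch; copying to a list keeps the sketch simple."""
    return rng.sample(list(replay_buffer), BATCH_SIZE)


rng = random.Random(0)
for step in range(200):  # made-up transitions
    remember(state=step, action=step % 3, reward=0.0, next_state=step + 1)
batch = sample_batch(rng)
print(len(batch), epsilon_at(0), epsilon_at(100), episodes_to_floor)
```

Under these settings the exploration rate reaches its floor of 0.01 after 919 episodes, so most of a long training run is spent exploiting the learned policy.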

VI-B Smoke Detection

Table III provides a comparative analysis of the five 3DCNN architectures (S1 to S5) evaluated in this project. Our experiments demonstrated the importance of incorporating modules like Average Pooling, bidirectional LSTM layers, and grayscale conversion for enhanced performance. S5 achieved the highest metrics (accuracy = 0.925, F1-score = 0.93), demonstrating superior performance. Figure 5 shows the accuracy and loss over epochs, highlighting S5’s convergence and stability. Furthermore, Figure 6 shows classification examples obtained using S5, where it is worth noting that the model misclassified fog as smoke in the second sample. In the inference stage, the video captured by the camera is divided into an 8x4 grid, and each cell is analyzed individually.
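The grid split used at inference time can be sketched as follows. Reading "8x4" as 8 columns by 4 rows and using the camera's 1920 × 1080 frame size are assumptions of this example.

```python
def grid_cells(width, height, cols=8, rows=4):
    """Return (left, top, right, bottom) boxes for each grid cell, row by row."""
    cell_w, cell_h = width // cols, height // rows
    return [
        (c * cell_w, r * cell_h, (c + 1) * cell_w, (r + 1) * cell_h)
        for r in range(rows)
        for c in range(cols)
    ]


cells = grid_cells(1920, 1080)
print(len(cells), cells[0], cells[-1])
```

Under these assumptions each of the 32 cells measures 240 × 270 pixels, and each cell's frame sequence would be classified independently, which localizes a detection within the field of view.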

| Model | AUC | Recall | F1-score | Accuracy |

| S1 | 0.93 | 0.87 | 0.87 | 0.83 |

| S2 | 0.95 | 0.89 | 0.89 | 0.89 |

| S3 | 0.95 | 0.90 | 0.89 | 0.89 |

| S4 | 0.96 | 0.89 | 0.89 | 0.89 |

| S5 | 0.98 | 0.93 | 0.93 | 0.925 |

VI-C System Performance Results

The final prototypes of the IoT device and gateway are shown in Figures 7a and 7b, respectively. On November 30th, 2024, we conducted a series of outdoor experiments in La Paz, Bolivia, to test the system’s effectiveness in detecting fire features in real scenarios. As shown in Figure 7c, we installed the gateway in the middle and the IoT nodes at a distance of 3 meters from the gateway. We ignited a fire on a wheelbarrow. To simulate wildfire proximity, we moved the wheelbarrow closer to the IoT sensor nodes, keeping the fire facing outwards. We measured the time between moving the wheelbarrow closer to each node and the web application issuing an alert. These tests are shown in Table IV. The last column of this table shows the average detection time per IoT node induced by the fire on the wheelbarrow.

| IoT node | Test 1 | Test 2 | Test 3 | Test 4 | Test 5 | Mean |

| A | 118 | 117 | 118 | 120 | 117 | 118 |

| B | 124 | 120 | 123 | 123 | 122 | 122.4 |

| C | 129 | 131 | 128 | 131 | 130 | 129.8 |
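As a quick arithmetic check, the per-node means in Table IV can be reproduced from the five individual measurements. The values are copied from the table; the dictionary layout is only for illustration.

```python
# Detection times per IoT node, as listed in Table IV.
detection_times = {
    "A": [118, 117, 118, 120, 117],
    "B": [124, 120, 123, 123, 122],
    "C": [129, 131, 128, 131, 130],
}

means = {node: sum(times) / len(times) for node, times in detection_times.items()}
print(means)  # {'A': 118.0, 'B': 122.4, 'C': 129.8}
```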

Our experimental results in open environments demonstrate the effectiveness of the proposed system in detecting fires and generating timely alerts. The DRL agent effectively directed the camera towards the source of the fire, and the 3DCNN model accurately verified the presence of smoke. The DRL agent oriented the camera towards the potential fire source in approximately 0.82 seconds. However, the 3DCNN required significantly longer, over 1.7 minutes on average, to process the multiple frame sequences of 224x224 dimensions, highlighting the potential for optimizing the 3DCNN model’s inference time.

While our system showed promising results, its field deployment revealed several areas for improvement in future research. Firstly, using professional sensors for detecting smoke, CO, CO2, and other fire-related gases is crucial. While MQ2 sensors can detect various gases, including H2, LPG, CH4, CO, alcohol, smoke, and propane, their sensing range is limited. Also, they exhibited high sensitivity only for gases in close proximity to the sensor. Secondly, exploring the integration of solar energy could enhance the system’s autonomy. Finally, further validation requires deploying and evaluating the system in diverse real-world environments and at varying distances.

VII Conclusions

The proposed ForestProtector architecture integrates IoT technology, deep reinforcement learning, and computer vision to monitor large forest areas in real time. By leveraging IoT sensor nodes, a central gateway with a deep reinforcement learning agent, and a 3DCNN model for smoke detection, the system ensures effective risk prioritization and accurate smoke classification. Its modular design and cost-effective components make it scalable and accessible, offering a practical solution for wildfire detection in resource-constrained regions.

References

- [1] Deutsche Welle, “How land clearance is destroying Bolivia’s forests.” DW.com. Accessed: Oct. 23, 2024.

- [2] European Commission, “2023: A year of intense global wildfire activity.” COPERNICUS.eu. Accessed: Oct. 2, 2024.

- [3] University of California, “ALERTCalifornia.” ALERTCALIFORNIA.org. Accessed: May 2, 2024.

- [4] NASA, “Fire Information for Resource Management System.” NASA.gov. Accessed: Oct. 2, 2024.

- [5] European Commission, “European Forest Fire Information System EFFIS.” COPERNICUS.eu. Accessed: Oct. 2, 2024.

- [6] U. Dampage, L. Bandaranayake, R. Wanasinghe, K. Kottahachchi, and B. Jayasanka, “Forest fire detection system using wireless sensor networks and machine learning,” Scientific Reports, vol. 12, no. 1, p. 46, 2022.

- [7] W. Benzekri, A. El Moussati, O. Moussaoui, and M. Berrajaa, “Early forest fire detection system using wireless sensor network and deep learning,” International Journal of Advanced Computer Science and Applications, vol. 11, no. 5, 2020.

- [8] G. Peruzzi, A. Pozzebon, and M. Van Der Meer, “Fight fire with fire: Detecting forest fires with embedded machine learning models dealing with audio and images on low power IoT devices,” Sensors, vol. 23, no. 2, p. 783, 2023.

- [9] X. Li, Y. Liu, L. Zheng, and W. Zhang, “A lightweight convolutional spiking neural network for fires detection based on acoustics,” Electronics, vol. 13, no. 15, p. 2948, 2024.

- [10] OroraTech, “Why earth observation is the future of fire detection.” ORORATECH.com. Accessed: Oct. 30, 2024.

- [11] Y. Chen, D. C. Morton, and J. T. Randerson, “Remote sensing for wildfire monitoring: Insights into burned area, emissions, and fire dynamics,” One Earth, vol. 7, no. 6, pp. 1022–1028, 2024.

- [12] S. Yang, Q. Huang, and M. Yu, “Advancements in remote sensing for active fire detection: a review of datasets and methods,” Science of the Total Environment, p. 173273, 2024.

- [13] M. Mukhiddinov, A. B. Abdusalomov, and J. Cho, “A wildfire smoke detection system using unmanned aerial vehicle images based on the optimized YOLOv5,” Sensors, vol. 22, no. 23, p. 9384, 2022.

- [14] S. N. Saydirasulovich, M. Mukhiddinov, O. Djuraev, A. Abdusalomov, and Y.-I. Cho, “An improved wildfire smoke detection based on YOLOv8 and UAV images,” Sensors, vol. 23, no. 20, p. 8374, 2023.

- [15] J. F. Ciprián-Sánchez, G. Ochoa-Ruiz, M. Gonzalez-Mendoza, and L. Rossi, “Fire-GAN: a novel deep learning-based infrared-visible fusion method for wildfire imagery,” Neural Computing and Applications, pp. 1–13, 2023.

- [16] S. Shah, “Preliminary wildfire detection using state-of-the-art PTZ (pan, tilt, zoom) camera technology and convolutional neural networks,” arXiv preprint arXiv:2109.05083, 2021.

- [17] J. Lloret, M. Garcia, D. Bri, and S. Sendra, “A wireless sensor network deployment for rural and forest fire detection and verification,” Sensors, vol. 9, no. 11, pp. 8722–8747, 2009.

- [18] S. Fernandez-Testa and E. Salcedo, “Distributed intelligent video surveillance for early armed robbery detection based on deep learning,” in 2024 37th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), pp. 1–6, IEEE, 2024.

- [19] O. Günay, K. Taşdemir, B. U. Töreyin, and A. E. Çetin, “Video based wildfire detection at night,” Fire Safety Journal, vol. 44, no. 6, pp. 860–868, 2009.

- [20] Haiper AI, “Haiper.” HAIPER.ai. Accessed: Nov. 1, 2024.