FocusNetv2: Imbalanced Large and Small Organ Segmentation with Adversarial Shape Constraint for Head and Neck CT Images

Abstract

Radiotherapy is a treatment where radiation is used to eliminate cancer cells. The delineation of organs-at-risk (OARs) is a vital step in radiotherapy treatment planning to avoid damage to healthy organs. For nasopharyngeal cancer, more than 20 OARs are needed to be precisely segmented in advance. The challenge of this task lies in complex anatomical structure, low-contrast organ contours, and the extremely imbalanced size between large and small organs. Common segmentation methods that treat them equally would generally lead to inaccurate small-organ labeling. We propose a novel two-stage deep neural network, FocusNetv2, to solve this challenging problem by automatically locating, ROI-pooling, and segmenting small organs with specifically designed small-organ localization and segmentation sub-networks while maintaining the accuracy of large organ segmentation. In addition to our original FocusNet, we employ a novel adversarial shape constraint on small organs to ensure the consistency between estimated small-organ shapes and organ shape prior knowledge. Our proposed framework is extensively tested on both self-collected dataset of 1,164 CT scans and the MICCAI Head and Neck Auto Segmentation Challenge 2015 dataset, which shows superior performance compared with state-of-the-art head and neck OAR segmentation methods.

keywords:

\KWDOrgans-at-risk segmentation , Head and neck CT image, Semantic segmentation1 Introduction

Radiation therapy is an important treatment for cancers. High energy radiation beams are focused on the tumor area to prevent tumor cell division, and ultimately result in tumor cell death. However, radiation is not specific to cancer cells and can also damage healthy cells. For nasopharyngeal carcinoma, more than 20 organs-at-risk (OARs) in the head and neck (HaN) region may be affected during radiotherapy, which may cause side effects including dysphagia, xerostomia, hypopsia, dysacusis radiation-induced lower cranial neuropathy and etc. Therefore, during radiotherapy treatment planning, radiologists need to accurately plan the radiotherapy path to ensure that the radiation dose received by normal organs is within safe limits.

The quality of organs-at-risk delineation is a core factor affecting the efficacy and side effects of radiotherapy. Clinically, radiologists have to spend several hours manually delineating organs. It is usually very time consuming and requires a high level of professionalism for the radiologist. In some underdeveloped areas, qualified radiologists are very scarce resources. Thus designing a high-performance and robust OAR segmentation algorithm can effectively alleviate this dilemma, reduce the workload of doctors, improve the quality of radiotherapy, and reduce the waiting time for patients, which would greatly benefit both patients and doctors.

The main difficulty of this task lies in the following aspects. First, the complex anatomical structure, over 20 OARs in HaN have various structures and complex shapes. For example, the optic chiasm is not a smooth convex shape, but an X-like shape. Second, due to the limitation of CT imaging, the contrast of soft tissue is relatively low. It usually cannot clearly show the boundaries of organs and makes the automatic localization of organs’ boundaries a challenging task. Moreover, the sizes of organs in the head and neck are extremely unbalanced. The ratio of sizes between large and small organs can reach hundreds, e.g., the parotid gland occupies tens of thousands of voxels, while pituitary gland only occupies about one hundred voxels111Small organs are defined as organs that have small sizes. In our collected dataset, small organs include left and right lens, left and right optic nerve, optic chiasm and pituitary..

Over the past decade, there have been many approaches proposed to resolve the challenging problem of HaN OAR segmentation. Atlas-based methods were commonly used where there are only a small number of annotated images available. However, they are based on image registration techniques and might generate incorrect organ delineations, especially when tumors occupy the organs. The atlas-based methods’ time cost can take up to tens of minutes as it is computationally intensive. Recently, convolutional neural networks (CNN), with its powerful feature representation capability, have made revolutionary progress in OAR segmentation [19, 34, 12, 46, 35, 55]. However, existing segmentation CNNs are not optimized for unbalanced organ segmentation tasks. These networks generally produce accurate segmentation maps for large organs, while the accuracy of small organs is often sacrificed. Furthermore, trained with per-pixel loss, such as cross-entropy loss, existing deep learning methods can not guarantee consistency between predict organ shapes and prior knowledge.

In order to solve the problems mentioned above, we observe how professional doctors delineate OARs. For large organs, they usually label them at regular scales. For small organs, they first localize the rough location and zoom in for accurate delineation. For organs with blurry boundaries in CT images, the doctors usually fit a shape on the image based on prior medical knowledge. Take the optic chiasm for an example, doctors usually fit an X-shaped label to the image as much as possible at its rough location. According to this observation, we propose a novel 3D convolutional neural network, FocusNetv2, which is delicately designed for accurate segmentation of both large organs and small organs in HaN CT scans. To better regularize the predicted organ shapes, in addition to our original FocusNet [14], we further propose a novel adversarial autoencoder (AAE) to incorporate shape constraint as extra supervisions for the segmentation network.

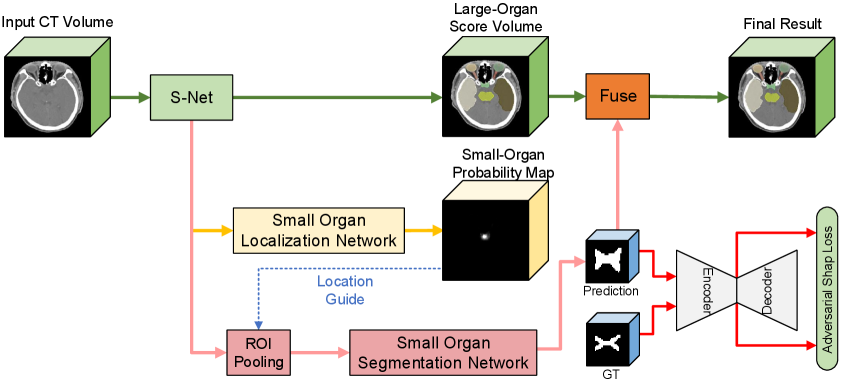

The overall framework of our approach is shown in Fig. 2. In particular, our network has two main components: the segmentation network and the adversarial autoencoder (AAE) for organ shape constraint. The segmentation network solves the extremely unbalanced data in a two-stage framework, which consists of three parts: main segmentation network (S-Net), Small-Organ Localization branch (SOL-Net) and Small-Organ Segmentation branch (SOS-Net). It imitates the process of how doctors delineate medical images. The segmentation network first segments all organs with the main segmentation network (S-Net) and localizes the central locations of a series of pre-defined small organs with the Small-Organ Localization branch (SOL-Net). Multi-scale features and high-resolution images are ROI-pooled by the Small-Organ segmentation branch (SOS-Net) to generate small-organ label maps. After further adding shape constraints with the proposed adversarial autoencoder (AAE), it encourages the predictions of the segmentation network being consistent with the prior shapes of different organs, even if there are no clear boundaries in the CT images. To the best of our knowledge, this is the first segmentation method that leverages both the autoencoder and adversarial learning for shape regularization.

This paper is an extension of our preliminary work, FocusNet [14] (denoted as FocusNetv1 in the following paper). Several modifications were made to both methodology and experiments, including modeling organ shape priors by the newly proposed adversarial autoencoder, additional experiments on larger datasets, and more ablation studies. The rest of this paper is organized as follows. Section 2 reviews related works on semantic segmentation in medical images, OAR segmentation and shape constraints by CNNs. Section 3 describes the proposed OAR segmentation framework and the adversarial shape autoencoder for better organ-shape regularization. Section 4 presents the experimental results. At last, Section 5 concludes the methodology and experiments.

2 Related Work

2.1 CNNs for medical image segmentation

Recently, convolutional neural networks have greatly advanced the field of medical image analysis because of its ability to learn more representative features from data. CNNs demonstrate state-of-the-art performance in many challenging tasks, such as image classification, segmentation, detection, registration, super-resolution and etc.

Long et al. [25] first proposed fully convolutional network (FCN), which uses convolutions with 11 sized filter to replace the fully connected layer, and allows the prediction of multiple pixels at the same time. Ronneberger et al. [37] further built a “U” shaped network (named U-Net) with a contracting path and a symmetric expanding path. Skip connections are also used to propagate features from early layers to later ones. A vast number of works based on variants of FCN and U-Net are applied in the field of 2D medical image segmentation [5, 52, 3, 38, 40].

For 3D images like CT or MR, 2D CNNs can be utilized in a slice-by-slice manner, however, the context information encoded in the volumetric data is ignored. Some 2.5D methods [39, 48] attempted to incorporate 3D spatial information by using three orthogonal slices or adjacent slices. But their representation capabilities are still limited by 2D convolutional kernels. To overcome this weakness, 3D CNN-based algorithms are proposed. For example, the 3D version of U-Net is proposed by Çiçek et al. [6]; Milletari et al. [27] proposed V-Net, which introduces the residual connection [17] between building blocks to mitigate gradient vanishing problem. Several 3D networks were also proposed for different applications, such as Merkow et al. [26], Dou et al. [10], Kamnitsas et al. [20].

Even though 3D CNN based methods can better leverage spatial context for learning better feature representations, the sample imbalanced problem is magnified in 3D tasks, as the training errors are mostly dominated by voxels belong to large organs. Ronneberger et al. [37] proposed to use the weighted cross-entropy loss function, while Milletari et al. [27] proposed the Dice coefficient loss, they can only mitigate the challenge of unbalanced data but are far away from solving it.

2.2 OAR segmentation for head and neck region

There are numerous works of OAR segmentation proposed for radiotherapy treatment planning of different body parts. Atlas-based methods are among the most commonly used traditional approaches. The optimal transformation between the atlas, which has the pre-segmented annotation map, and the image to be segmented is aligned by affine and deformable registration. Then the segmentation for the target image can be obtained by applying this transformation on the annotation map of the reference image. The reference images can be multiple ones with expert annotations or templates generated from the training set. The accuracy of atlas-based approaches is affected by two factors: First, the capability of the registration method, whether it can align the target image and atlas images accurately. Different approaches, such as Demons registration [42, 33], block-matching [32, 15], and B-Spline registration [53], have been proposed. Second, physiologic or pathologic anatomical variations of some organs make it difficult to find the optimal correspondences between target images and reference images, thus some methods are proposed to use atlases that reflect average patient anatomy [8] or the fusion of results from multi-atlas [7, 36]. Some hybrid methods post-process results from atlas-based segmentation using active contours [53] and graph cuts [23, 11]. Although atlas-based methods have the advantages of robustness and can perform segmentation without user interaction, they are based on image registration techniques and might generate incorrect organ maps if the organs are occupied by tumors. The time cost can be up to tens of minutes due to its huge amount of computation.

Recently, convolutional neural networks were adopted to advance OAR delineation accuracy significantly . Ibragimov and Xing [19] proposed the first deep learning-based algorithm. They first detect OARs by the head center point and then train a patch-based CNN to classify voxels in the region of interest. Ren et al. [35] proposed an interleaved 3D-CNN for the joint segmentation of small organs in HaN, where the region of interest is obtained via registration techniques. Zhu et al. [55] proposed a 3D squeeze-and-excitation U-Net for fast segmentation. Tong et al. [43] proposed a fully convolutional neural network with a shape representation model. Tang et al. [41] also proposed a two-stage segmentation method based on detection, where local contrast normalization is applied in the segmentation head for achieving better segmentation performance. There are also methods [28] segment OARs in other modalities, such as MRI.

2.3 Shape regularization in segmentation CNNs

Current CNN-based approaches are usually trained with pixel-wise losses, such as the cross-entropy loss and the dice loss, which consider pixels’ prediction errors separately. Even with large enough receptive fields, they cannot maintain the overall shapes or higher-level structures of the organ of interest. Conditional random field (CRF) and graph cut methods were proposed to enforce spatial contiguity of the output label maps. Several approaches attempted to incorporate shape constraints in CNNs, Mosinska et al. [29] incorporated a pre-trained VGG network to extract higher-order topological features from the network’s predictions and ground truth label maps, and then minimizes the loss between them. However, the ImageNet pre-trained VGG network may not capture various organs’ anatomy in medical images. Oktay et al. [31] used an autoencoder (AE) to learn representations of shapes from ground-truth annotations and minimize the Euclidean loss between the encoded latent codes of the network’s predicted label maps and ground truth label maps. Tong et al. [43] applied similar ideas with Oktay et al. [31] in the segmentation of OARs. Al Arif et al. [1] modified the UNet to generate a signed distance function (SDF) instead of segmentation maps and computes the errors directly in the shape domain using principal component analysis (PCA). Adversarial losses were also introduced as high-order shape regularization [50, 49]. The basic idea of methods mentioned above are similar, i.e., instead of measuring the shape similarity with ground-truth in the pixel domain, they proposed to measure the similarity in a lower dimension manifold by different kinds of projection (i.e., pre-trained VGG network, autoencoder, PCA or discriminator). Therefore, the key is the quality of the shape projection. However, existing adversarial discriminators lack generalization capability. The autoencoder in Oktay et al. [31], Tong et al. [43] was trained with only ground-truth annotation. It cannot well encode various predicted shapes by the segmentation models. To mitigate the problem, we proposed a novel adversarial shape constraint based on autoencoder that incorporates benefits from both autoencoder and adversarial discriminators.

There are three main differences between this work and the previous work. First, we designed a high-performance backbone network with fewer down-samplings but using DenseASPP [51] to retain more detailed high-resolution information as well as learn multi-scale features. Second, we use a two-stage segmentation model for small organs to solve the imbalance problem between large and small organs. The third and most crucial difference is that we use the adversarial autoencoder to apply shape constraints to the network prediction, which allows our network to generate predicted shapes that conform to prior medical knowledge for specific organs with unclear boundaries and low contrast. In practical clinical applications, our model can generate delineation results that are more acceptable by human doctors to improve the efficiency of radiotherapy treatment planning.

3 Method

The overall structure of the proposed FocusNetv2 is illustrated in Fig. 2. The FocusNetv2 contains two main parts, the segmentation network and the adversarial autoencoder for shape regularization. They are trained in an adversarial fashion. For the segmentation network, it imitates how human doctors delineate medical images: labeling large organs at a regular scale, while for the small organs, they first localize them and then zoom in for further accurate delineation. Therefore, the segmentation network first segments all organs with the main segmentation network (S-Net) and localizes the small-organ central locations with the Small-Organ Localization branch (SOL-Net). Multi-scale features and raw CT images are ROI-pooled from small-organ locations by the Small-Organ Segmentation branch (SOS-Net) to generate small-organ label maps. Therefore, the proposed segmentation network can solve the class imbalance for HaN OAR segmentation.

To better regularize the shapes output by the segmentation network, we proposed an adversarial autoencoder (AAE) to constrain the estimated small-organ shapes. The AAE is trained with two types of inputs, ground truth label map and predicted mask from our SOS-Net. The segmentation network and the AAE are alternately trained in an adversarial fashion. The AAE tries to encode better shape representations, while the SOS-Net tries to predict more realistic shapes that are consistent with prior medical knowledge.

3.1 Segmentation Network

3.1.1 Main Segmentation Network (S-Net)

U-Net is a commonly used 2D CNN that has made great progress on medical image segmentation compared to conventional methods. Recently, its 3D variants were shown to tackle the segmentation problem of 3D images by better capturing volumetric contextual information. However, vanilla 3D U-Net has poor performance on head-and-neck OAR segmentation. We observe that its inefficacy mainly derives from two aspects. First, U-Net has a symmetric encoder-decoder structure, the encoder embeds multi-scale information into feature maps by four down-sampling operations, while the decoder gradually reconstructs spatial resolution from high-level feature maps by a series of up-sampling or deconvolution operations. However, too much down-sampling leads to the loss of high-resolution information, which would have catastrophic effects on the small organs that only occupy a few voxels. Although high-resolution features are utilized in the shortcut connections between the encoder and decoder, the fusion of low-level and high-level features can only alleviate the problem to some extent. Second, multi-scale features are learned through multiple times of down-sampling, which makes the scale of high-level features fixed and have limited representation capability.

Our proposed S-Net is designed to solve the problem mentioned above. As shown in Fig. 3, S-Net has a strong backbone, which is a variant of 3D U-Net with residual connections. Squeeze-and-excitation modules [18] are used for channel-wise attention. The S-Net is built upon the SEResBlock, where the detailed structure is exhibited in Fig. 3. To reduce information loss and balance between GPU memory usage and segmentation accuracy, the S-Net only performs 2X down-sampling twice. However, such a structure has the disadvantage of limited receptive fields, making it difficult to integrate global image patterns to learn high-level features. Therefore, dilated convolution and densely connected atrous spatial pyramid pooling (DenseASPP) [51] are also adopted in our S-Net. Densely connected ASPP has the ability to combine arbitrary scales of features via adjusting the dilation rate with better feature reuse. In our model, we use the dilation rates of 3, 6, 12, 18. It should be noted that the dilation is only applied to x and y axes of the convolution kernels, while the dilation rates along z axis are fixed to 1. This is because many small organs only present in several continuous slices. Shortcut connections are also utilized to fuse features of the same scales from the encoder and decoder for learning better features.

3.1.2 Small-Organ Localization Network (SOL-Net)

Although the S-Net uses several components to improve the performance, the class imbalance problem between the large and small organs still prevents the network from accurately segmenting small organs. Doctors usually zoom in to finely delineate small organs, which is similar to ROI-pooling operations in two-stage detection or instance segmentation methods.

For OAR localization, the location, orientation and size of OARs are generally consistent among patients, and would not change as general objects in natural images. Therefore, directly regressing the small-organ keypoint locations is more suitable for OAR localization. Inspired by keypoint detection tasks [30], we propose to design a Small-Organ Localization Network (SOL-Net) to localize the center locations of small organs. As shown in Fig. 2, the feature volumes from the last layer of the decoder of our S-Net is used as the input of SOL-Net. The training targets are the small-organ center location heat maps created as 3D Gaussian distributions centered at the small-organ center locations with a standard deviation of 5 voxels, and each small organ has a separate map. The SOL-Net is trained to predict such center-location heat maps with the loss. It consists of 1 SEResBlock and a final convolution layer with a sigmoid layer to output the small-organ location probability maps. With such location maps, we could further ROI-pool feature volumes from the small organ locations for accurately segmenting them.

3.1.3 Small-Organ Segmentation Network (SOS-Net)

Given the center locations of the small organs from the SOL-Net outputs, we further improve the segmentation accuracy by focusing on each small organ’s surrounding regions. Specifically, given the location probability map of a small organ, we first identify the voxel with the highest location probability value as the small-organ center location, and ROI-pool a 3D feature volume around it. Considering the localization error, and the sizes of small organs in our collected dataset, the size of ROIs are fixed as (mm in physical space) for all small organs. This is different from our original FocusNetv1 [14], where the ROI sizes are set as three-time of the OARs’ sizes, for implementation consideration, as we add adversarial autoencoder for regularizing small organs’ estimated shapes. A Small-Organ Segmentation Network (SOS-Net) is adopted for each small organ’s segmentation.

To add more high-resolution information for the segmentation of small organs, multi-scale feature volumes from the last layer of the S-Net decoder as well as the raw input image are ROI-pooled from the small-organ ROI and concatenated together as the input of SOS-Net. Intuitively, the multi-scale feature volumes from S-Net already encode small organs’ initial segmentation results and the raw image volumes can help refine the segmentation results. The SOS-Net consists of 2 SEResBlocks and a convolution layer with a sigmoid layer to output the binary mask of each small organ. The proposed two-stage localization-refinement strategy for small organ segmentation can simultaneously eliminate information loss from the down-samplings and the class imbalance between large and small organs. To output unified segmentation results of all organs, we overlay the small-organ segmentation results by the SOS-Net into the large-organ segmentation results from the S-Net to obtain the final segmentation map for all organs.

3.2 Segmentation losses

In our task, the ratio between the largest and the smallest organ can reach nearly , which makes the loss dominated by the large number of large-organ voxel samples. In recent literature, focal loss [24] and generalized dice loss are two effective loss functions that try to solve the problem of class imbalance. We propose to use a weighted focal loss for multi-class segmentation,

| (1) |

where is the number of categories, is the probability of class . For training the S-Net, is the weight of each organ, which is inversely proportional to each organ’s average size. is the modulating factor that weights less on easy samples (voxels) with prediction confidence close to and weight larger on challenging samples. The focal loss can adaptively down-weight the loss contributed from easy samples, while suppress lightly the contributions of incorrectly classified hard samples. In our experiment, is empirically set as .

Generalized dice loss is another loss function that directly optimizes towards the evaluation metrics. We adopt the following generalized dice loss,

| (2) |

where and denote the ground-truth labels and predicted probabilities of class . In our experiment, the combination of focal loss and dice loss results in the best segmentation accuracy. The total segmentation loss is therefore defined as

| (3) |

where weights the two losses and we empirically set in our experiments.

3.3 Shape regularization with adversarial autoencoder (AAE)

Due to CT’s imaging principle, some OARs do not show obvious boundaries in the images, such as the optic chiasm. As OARs are normal organs, they usually have relatively consistent shapes among different patients, incorporating high-order shape constraint to the segmentation network can make the prediction more consistent with prior anatomical knowledge. We propose a novel adversarial autoencoder (AAE) to introduce shape regularization into the training of our segmentation framework. To the best of our knowledge, this is the first segmentation method that leverages both the autoencoder and adversarial learning for constraining segmentation masks.

A good design of shape regularization term should have the following two characteristics. First, it needs to be capable of representing shapes in a differentiable way, so that the segmentation network can be trained with back-propagation for shape regularization; Second, it should be able to distinguish subtle differences between shapes, such that as the shape predicted by the segmentation network gets closer to the ground-truth shapes, it still gives a correct penalty.

The shapes represented by label maps are highly structured and high dimensional, making it extremely challenging to measure the similarity between two shapes in such high dimensional space. The high-dimensional shape usually lies in a lower-dimensional shape manifold [44, 31], where each shape would be mapped to a lower-dimensional point (vector) in the subspace. If a shape manifold is successfully discovered, starting from a point on the manifold (corresponding to one specific shape), we can traverse along with different directions on the manifold. The corresponding shape would change smoothly and continuously at the semantic level. Therefore, we measure the similarity between two shapes in a low-dimensional shape manifold. We use a shape autoencoder, which is a neural network trained to reconstruct the input organ shape as much as possible (see Fig. 4). The bottleneck structure enables the autoencoder to transform the input shape into a latent code that captures its salient features while discarding irrelevant features. Therefore, if the autoencoder can well reconstruct the input shape, the latent space is a good approximation of low-dimensional shape manifold [22, 54]. Moreover, the autoencoder is differentiable and can regularize estimated organ shapes by minimizing the distance between the predicted organ shape and ground-truth shape in the latent space.

For the second characteristic, the accurate measurement of the similarity between the predicted organ shape and the ground-truth organ shape is crucial. Therefore, we introduce an adversarial training scheme for training the adversarial autoencoder. The autoencoder is trained with both the predicted shape from the small organ segmentation branch and the corresponding ground-truth shapes. It has two loss terms, where the first one is to reconstruct the input shapes for learning shape representations by minimizing the conventional reconstruction loss,

| (4) |

where is the input image, is its corresponding ground-truth label, is the segmentation network, is the predicted binary organ mask from SOS-Net given the input image, and are the reconstruction results of AAE, given the ground truth label and predicted organ mask .

The other adversarial loss term tries to distinguish the latent codes of predicted shapes and ground-truth shapes by maximizing their distance in the low-dimensional manifold. In this way, we enforce the autoencoder to better encode the two types of shapes and capture their subtle differences, while the segmentation network is encouraged to fool the autoencoder to being unable to capture the subtle differences. Therefore, the proposed adversarial shape loss is formulated as

| (5) |

where or is the latent code and multi-scale decoder features of the ground-truth organ shape and the predicted organ shape , as illustrated in Fig. 4. Intuitively, the segmentation network and the AAE play a min-max game. The segmentation network tries to predict masks that are consistent with shape prior to minimize the distance in low-dimensional shape manifold, while the AAE tries to learn better encoding to maximize the distance.

The overall objective function for segmentation network and with the shape regularization term from the adversarial autoencoder is therefore defined as

| (6) |

In practice, the segmentation network and the adversarial autoencoder are trained in an alternative optimization scheme. The is first optimized by fixing and minimizing the following loss

| (7) |

where is empirically set as 5 to balance the two terms. Optimizing would encourage the segmentation network to output organ shapes that are consistent with the ground-truth shapes. The is then optimized when is fixed by minimizing the following loss,

| (8) |

where is set as 0.001 in our experiment as larger may cause unstable training of the proposed AAE. During the training of segmentation network , the estimated organ shapes by will gradually become closer to the ground-truth organ shapes, therefore, if the encoded latent codes are not distinguishable between the estimated organ shapes and the ground-truth organ shapes, the autoencoder may hardly provide effective supervision for the segmentation network . Therefore, maximizing the distance between and encourages the autoencoder to encode subtle difference between them. Eqs. (7) and (8) are optimized alternatively to gradually improve both the segmentation network and the adversarial autoencoder .

Discussions on segmentation with autoencoder or adversarial learning. Previous segmentation methods have also explored using autoencoder in segmentation. Oktay et al. [31] and Tong et al. [43] only trained the autoencoder with ground-truth organ shapes. The segmentation network is then trained to minimize the distance between the latent features from autoencoder of predicted shapes and ground truth shapes, where the parameters of autoencoder are fixed. However, since the autoencoder has not been trained with the predicted organ shapes by the segmentation network ever, when the estimated organ shapes are close to the ground-truth shapes, the latent codes of autoencoder alone cannot distinguish these two, thus the autoencoder cannot provide effective guidance to further regularize the segmentation network. Moreover, in Tong et al. [43], the autoencoder is trained with whole images. The autoencoder training also faces the extremely unbalanced data, thus making the training of small organs’ shape representations ineffective. In our approach, we adopt the autoencoder in the small-organ branch, thus avoid the imbalanced class problem.

Several previous works [50, 16] adopted adversarial training in segmentation networks using the objective function in the following way,

| (9) |

where is the input image, is its corresponding ground-truth shape, is the segmentation network, is the predicted organ shape, is the discriminator network that tries to distinguish its input to be real or fake (i.e. ground truth label or network predicted mask). However, was previously designed as a classifier while we adopted the shape autoencoder for the min-max game.

| OAR | Multi-Atlas | DeepLabv3+ | AnatomyNet | FocusNetv1 | FocusNetv2 |

|---|---|---|---|---|---|

| Eye L | 78.1711.28 | 88.782.34 | 88.272.59 | 89.281.95 | 89.682.22 |

| Eye R | 78.6210.15 | 87.972.79 | 87.562.40 | 88.952.34 | 89.472.12 |

| Lens L | 30.4518.77 | 72.867.21 | 72.729.86 | 78.068.37 | 81.918.36 |

| Lens R | 28.4416.32 | 74.8268.21 | 74.928.68 | 78.737.56 | 82.476.46 |

| Opt Nerve L | 41.2316.32 | 68.219.88 | 63.9111.27 | 68.769.98 | 71.339.23 |

| Opt Nerve R | 44.1517.45 | 69.909.31 | 65.4110.59 | 73.328.84 | 75.257.59 |

| Opt Chiasm | 40.0715.66 | 57.7014.72 | 54.8914.72 | 61.1512.44 | 61.2213.34 |

| Pituitary | 41.1318.56 | 67.2112.05 | 66.1310.88 | 68.7812.55 | 72.1911.88 |

| Brain Stem | 86.144.61 | 88.913.06 | 88.513.26 | 89.263.17 | 89.093.47 |

| Temporal Lobes L | 86.695.51 | 87.524.00 | 87.314.89 | 87.893.94 | 87.733.98 |

| Temporal Lobes R | 87.395.16 | 87.994.19 | 87.533.91 | 88.223.85 | 88.304.30 |

| Spinal Cord | 78.225.7 | 81.995.59 | 82.795.26 | 82.605.32 | 83.095.04 |

| Parotid Gland L | 80.557.91 | 82.995.56 | 83.895.76 | 85.055.47 | 84.586.06 |

| Parotid Gland R | 83.455.07 | 85.912.96 | 85.883.37 | 86.873.82 | 87.013.80 |

| Inner Ear L | 85.046.75 | 84.885.08 | 86.795.43 | 84.875.48 | 86.424.77 |

| Inner Ear R | 81.916.57 | 84.485.44 | 86.666.34 | 85.045.96 | 85.515.90 |

| Mid Ear L | 82.447.74 | 84.275.97 | 85.716.21 | 86.105.63 | 85.735.90 |

| Mid Ear R | 79.2311.4 | 82.397.92 | 84.028.18 | 84.128.14 | 84.328.23 |

| TMJ L | 74.9810.86 | 76.2210.13 | 77.7610.37 | 76.4310.92 | 76.369.98 |

| TMJ R | 78.159.69 | 79.129.58 | 79.1110.69 | 78.6512.31 | 78.9110.07 |

| Mandible L | 89.283.35 | 90.701.56 | 92.001.61 | 92.011.57 | 92.441.63 |

| Mandible R | 89.613.44 | 90.082.18 | 91.841.46 | 92.211.53 | 92.541.63 |

| Average | 70.24 | 80.70 | 80.62 | 82.10 | 82.98 |

| Small Organ Average | 37.57 | 68.45 | 66.33 | 71.47 | 74.04 |

4 Experiments

4.1 Datasets

The proposed FocusNetv2 was evaluated on two datasets of HaN CT images. The first dataset is a self-collected dataset, denoted as our dataset. Our dataset consists of 1,164 collected CT scans of patients with nasopharyngeal carcinoma. 22 OARs to be considered in HaN radiotherapy treatment planning are delineated in each scan, including (left and right) eyes, (left and right) lens, (left and right) optic nerves, optic chiasm, pituitary, brain stem, (left and right) temporal lobes, spinal cord, (left and right) parotid glands, (left and right) inner ears, (left and right) middle ears, (left and right) temporomandibular joints, and (left and right) mandible. Ground truth annotations of each case were provided by senior doctors with hundreds of cases of annotation experience, with each structure being segmented by the same annotator and reviewed by the other one. The left and right lens, left and right optic nerves, optic chiasm, pituitary are defined as small organs due to their small volume and complex anatomical structures. The CT scans have anisotropic voxel spacing ranging from 0.78mm to 1.25mm and inter-slice thickness ranging from 2.7mm to 3.5mm. All scans are resampled to mm for further processing. We randomly shuffle our dataset and select 1,044 samples for training and 120 samples for testing.

For comparison with state-of-the-art methods on HaN OAR segmentation, we evaluate the proposed FocusNetv2 on a public dataset, the MICCAI Head and Neck Auto Segmentation Challenge 2015 dataset (denoted as MICCAI’15 dataset). This dataset is also known as a Public Domain Database for Computational Anatomy (PDDCA) provided and maintained by Harvard Medical School. The dataset includes multiple image studies from patients with stage III or IV squamous cell carcinoma of the oropharynx, hypopharynx or larynx. It consists of 38 CT scans for training and 10 scans for testing, and has 9 organ annotations: brain stem, mandible, optic chiasm, (left and right) optic nerves, (left and right) parotid glands and (left and right) submandibular glands, where optic chiasm, left and right optic nerves are defined as small organs. The delineation of structures is based on the protocol described by Radiation Therapy Oncology Group (RTOG). We resample all scans to have a voxel size of mm to train our FocusNetv2, while for a fair comparison with other methods, we resampled the predicted segmentation labels by our proposed method back into the original spacing and then calculate the evaluation metrics.

4.2 Implementation details

Our method is implemented with PyTorch and trained on NVIDIA TITAN Xp GPUs. The segmentation networks are trained from scratch with initial weights sampled from a standard Gaussian distribution. We first train the S-Net, and then train the SOL-Net while fixing the trained parameters of S-Net. The SOS-Net is trained afterward, and is updated with the adversarial autoencoder in an alternative way. At last, we finetune the whole network for joint optimization. We use the ADAM optimizer to train the network with a learning rate 0.0005. The batch size is set as 1. For the adversarial autoencoder, it is pretrained with ground truth labels in the dataset to stabilize the adversarial training process.

The original CT image size was around with being the number of slices. Since the majority of each CT image is the background, they are centrally cropped to . It would be better to use whole image volumes to train the network. However, due to GPU memory limitation, we randomly crop 40-slice chunks along the -axis from CT images for each iteration when training the S-Net and the SOL-Net. One problem raised by the sliding slices strategy is that the cropping process might destroy organs’ shape for training AAE and SOS-Net. As the small organs in both datasets are mainly lens, optic nerves, optic chiasm, and pituitary. They lie in only a few adjacent slices along the -axis, which can be fully included in a 40-slice cube with a large margin. Therefore, we adopt a sampling strategy when training AAE and SOS-Net. Although we sample cubes along -axis with random translation, we always ensure that the cube contains all small organs with some margin. Therefore, the shapes of small organs are complete. The CT scans are cropped every 40 slices with a stride of 40 along the -axis, i.e., no overlap between the crops. We then stack the segmentation result of each 40-slice crop together to obtain the final prediction. Random affine transformations (translation within 40 pixels in x and y axes, rotation within 10 degrees, and scale from 0.7 to 1.3 times) are used for data augmentation during training.

4.3 Evaluation metrics

We use two evaluation metrics in this study. Dice score coefficient (DSC) measures the degree of overlap between the predicted segmentation and the ground truth segmentation with the formula, , where and represents the voxel sets of prediction and ground-truth respectively. 95% Hausdorff Distance (95HD) is a variant of Hausdorff distance, which measures the largest distance from points in to its nearest neighbors in . The HD is calculated as the average of two directions, . The 95% Hausdorff Distance can mitigate the sensitivity of outliers by calculating the 95% largest distances.

| OAR | Multi-Atlas | DeepLabv3+ | AnatomyNet | FocusNetv1 | FocusNetv2 |

|---|---|---|---|---|---|

| Eye L | 5.186.45 | 1.670.71 | 1.740.62 | 1.640.80 | 1.510.63 |

| Eye R | 5.226.00 | 1.840.70 | 2.030.71 | 1.810.73 | 1.560.60 |

| Lens L | 5.683.61 | 1.990.88 | 2.040.91 | 1.570.77 | 1.410.68 |

| Lens R | 5.342.52 | 1.940.84 | 1.760.83 | 1.731.05 | 1.591.07 |

| Opt Nerve L | 4.281.58 | 2.770.84 | 3.210.96 | 2.640.81 | 2.520.89 |

| Opt Nerve R | 4.121.44 | 2.890.65 | 3.140.87 | 2.610.89 | 2.550.89 |

| Opt Chiasm | 5.161.58 | 3.770.98 | 3.830.95 | 3.470.80 | 3.580.93 |

| Pituitary | 4.031.40 | 2.490.84 | 2.620.86 | 2.360.87 | 2.160.94 |

| Brain Stem | 2.611.37 | 2.091.17 | 2.151.16 | 2.051.18 | 1.930.96 |

| Temporal Lobes L | 4.443.43 | 4.013.21 | 4.143.16 | 3.953.37 | 3.993.19 |

| Temporal Lobes R | 4.143.22 | 3.783.00 | 4.052.71 | 3.872.96 | 3.852.95 |

| Spinal Cord | 6.267.72 | 1.860.89 | 1.750.92 | 1.750.89 | 1.700.68 |

| Parotid Gland L | 4.093.09 | 3.682.84 | 3.432.35 | 3.262.54 | 3.302.47 |

| Parotid Gland R | 3.441.63 | 2.931.40 | 2.761.05 | 2.581.33 | 2.631.28 |

| Inner Ear L | 2.230.94 | 2.030.88 | 1.810.93 | 2.090.86 | 1.990.85 |

| Inner Ear R | 2.650.86 | 2.010.81 | 1.820.95 | 2.110.87 | 2.090.92 |

| Mid Ear L | 3.091.71 | 3.091.19 | 2.691.35 | 2.531.2 | 2.591.31 |

| Mid Ear R | 4.443.71 | 3.952.49 | 3.312.12 | 3.121.81 | 3.372.74 |

| TMJ L | 3.291.55 | 3.101.25 | 3.021.53 | 3.071.22 | 3.151.29 |

| TMJ R | 2.740.89 | 2.741.00 | 2.671.18 | 2.561.15 | 2.661.07 |

| Mandible L | 2.562.25 | 1.590.67 | 1.380.70 | 1.420.67 | 1.310.66 |

| Mandible R | 2.472.52 | 1.540.64 | 1.280.64 | 1.250.55 | 1.261.26 |

| Average | 3.98 | 2.63 | 2.58 | 2.43 | 2.40 |

| Small Organ Average | 4.76 | 2.64 | 2.76 | 2.39 | 2.30 |

| Study | Brain | Mandible | Optic | Optic | Optic | Parotid | parotid | SMG | SMG | AVG | Small |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Stem | Chiasm | Nerve L | Nerve R | L | R | L | R | AVG | |||

| Raudaschl et al. [34] | 88.0 | 93.0 | 55.0 | 62.0 | 62.0 | 84.0 | 84.0 | 78.0 | 78.0 | 76.0 | 59.7 |

| Ren et al. [35] | - | - | 58.017.0 | 72.08.0 | 70.09.0 | - | - | - | - | - | - |

| Wang et al. [46] | 90.04.0 | 94.01.0 | - | - | - | 83.06.0 | 83.06.0 | - | - | - | - |

| Zhu et al. [55] | 86.72.0 | 92.52.0 | 53.215.0 | 72.16.0 | 70.610.0 | 88.12.0 | 87.34.0 | 81.44.0 | 81.34.0 | 79.3 | 65.3 |

| Tong et al. [43] | 87.03.0 | 93.71.2 | 58.410.3 | 65.35.8 | 68.94.7 | 83.52.3 | 83.21.4 | 75.56.5 | 81.36.5 | 77.4 | 64.2 |

| Tang et al. [41] | 87.52.5 | 95.00.8 | 61.510.2 | 74.87.1 | 72.35.9 | 88.71.9 | 87.55.0 | 82.35.2 | 81.54.5 | 81.2 | 69.5 |

| FocusNetv2 | 88.22.5 | 94.71.1 | 71.317.0 | 79.07.5 | 81.77.3 | 89.81.6 | 88.14.2 | 84.04.6 | 83.84.1 | 84.5 | 77.3 |

4.4 Experiments on our collected dataset

We compare our proposed method with a multi-atlas based method, where Symmetric Normalization (SyN) [2] is used as the registration method, a 3D variant of DeepLabv3+ [4] and a state-of-the-art deep learning method in HaN OAR segmentation named AnatomyNet [55].

For the multi-atlas-based method, we randomly select 9 CT scans from the training set as the atlas due to the tie and computational resouce limitation. Symmetric Normalization (SyN) [2] with its implementation in ANTs software package is used to recover the optimal affine matrix and deformable transformation field between the CT to be segmented and each atlas. 9 label maps are obtained by applying the transformation fields to atlas labels, then the final prediction is obtained by voting. DeepLabv3+ [4] is a well-known segmentation framework originally designed for 2D semantic segmentation. It uses the spatial pyramid pooling and dilated convolution and achieves state-of-the-art performance on natural image segmentation. We extend their network structure to 3D for volumetric segmentation. It was randomly initialized and trained using the same loss function as our proposed FocusNetv2. AnatomyNet [55] is designed for fast segmentation on whole CT images and has good performance compared with traditional atlas-based methods.

4.4.1 Quantitative comparison

Comparative results are shown in Tables 1 and 2. The conventional multi-atlas based method SyN has decent performance on large organs, especially for those with high contrast with surrounding regions, such as mandible. Nevertheless, it results in undesirable segmentation results for organs of small sizes. Deep learning based methods have overwhelming advantages in these circumstances, because small organs have more complex anatomical structures, the multi-atlas based method SyN has limited capability of dealing with complicated and diverse anatomy variations. Among deep learning based methods, even without adversarial autoencoder, our FocusNetv1 performs better in most organs. This is because that our specially designed two-stage framework significantly reduces the extremely unbalanced ratio between background, large organs and small organs. Each small-organ branch can focus on the segmentation of specified organs, where high-resolution detailed information is incorporated for detailed refinement. After incorporating the adversarial shape loss from the proposed adversarial autoencoder, the segmentation accuracy of small organs could be further improved with large margins. Compared with other approaches, our FocusNet has the best Dice scores in 19 out of 22 organs, and has the best 95HD scores in 19 out of 22 organs. In terms of accuracies of small organs, our FocusNetv2 has an improvement of 5.59% in Dice score compared with other deep learning based methods.

4.4.2 Qualitative comparison

As illustrated in Fig. 5, in the first two rows, the multi-atlas based method SyN misses the left lens, and does not perform well on segmenting optic nerves, which is consistent with quantitative results. There are errors in the region where eyeballs are attached to the lens by DeepLabv3+. It might be because DeepLabv3+ processes the images in a low resolution and then up-sample to the origin resolution for final prediction, which results in the loss of detailed information. In the third row, for the optic chiasm, although the result of multi-atlas based method is not accurate enough, its shape conforms to prior medical knowledge. DeepLabv3+ and AnatomyNet are more inclined to predict a smooth shape in its results, which is a common problem of state-of-the-art segmentation deep learning networks. This is because the combination of the low contrast of anatomy contour and the extremely small volume of organs makes the training of small organs ineffective. Moreover, per-pixel losses cannot penalize high-level shape errors. By incorporating the proposed small-organ segmentation branch and adversarial shape autoencoder, our FocusNetv2 generates satisfactory X-shape segmentation masks. This proves that our FocusNetv2 successfully introduces shape constraints into the deep learning framework. It can achieve satisfactory segmentation accuracy and make the segmentation results consistent with prior medical knowledge at the same time.

4.4.3 Processing time

| Method | DeepLabv3+ | AnatomyNet | S-Net | FocusNetv2 |

|---|---|---|---|---|

| Time (s) | 4.52 | 2.28 | 3.33 | 4.36 |

The processing time of deep learning methods are presented in Table 4, all methods are measured using the same computing platform and an NVIDIA TITAN Xp GPU. Our backbone network S-Net takes 3.33s on average to process one CT scan. After adding SOL-Net and SOS-Net, our FocusNetv2 takes 4.36s, which is still faster than DeepLabv3+, but with much higher segmentation accuracy. Our method consumes more computing resources than AnatomyNet, but less than DeepLabv3+. Considering that radiotherapy treatment planning generally takes several hours and is not a time-sensitive task, our method can achieve optimal performance in a reasonable time.

We further test the doctor’s average delineation time with and without using our algorithm’s results. It generally takes about one hour for human doctors to delineate 22 OARs in a head and neck CT scan. If our algorithm’s results are used for assistance, the doctors only need to make small modifications on the automatic delineation results for most patients. The entire delineation time only takes 20-30 minutes, which is 1/3 to 1/2 shorter than before. It could dramatically improve the efficiency of radiologists.

4.5 Experiments on MICCAI’15 dataset

| Organ | Ren et al. [35] | Zhu et al. [55] | Tong et al. [43] | FocusNetv2 |

|---|---|---|---|---|

| Brain Stem | - | 6.422.38 | 4.010.93 | 2.320.70 |

| Optic Chiasm | 2.811.56 | 5.762.49 | 2.171.04 | 2.250.85 |

| Mandible | - | 6.282.21 | 1.500.32 | 1.080.45 |

| Optic Ner. L | 2.330.84 | 4.852.32 | 2.521.04 | 1.920.80 |

| Optic Ner. R | 2.130.96 | 4.774.27 | 2.901.88 | 2.170.74 |

| Parotid L | - | 9.313.32 | 3.972.15 | 1.810.43 |

| Parotid R | - | 10.05.09 | 4.201.27 | 2.432.00 |

| Submand. L | - | 7.014.44 | 5.595.59 | 2.841.20 |

| Submand. R | - | 6.021.78 | 4.844.84 | 2.741.25 |

| Average | - | 6.72 | 3.52 | 2.17 |

4.5.1 Comparison with state-of-the-arts

We also test our FocusNetv2 on MICCAI 2015 Head and Neck dataset. All the settings of the FocusNetv2 are the same as those used in experiments on our collected dataset, except for the number of small organs is set as 3, including left and right optic nerve and optic chiasm.

We compare our method with previously published state-of-the-art results, the Dice scores and 95 HD scores are shown in Tables 3 and 5, our method demonstrates superior overall performance, and outperforms previous state-of-the-art methods by large margins. Especially in terms of small organs, our FocusNetv2 outperforms previous state-of-the-art methods by 7.8%. Ren et al. [35] proposed an interleaved 3D CNNs for jointly segmentation of optic nerves and chiasm. They perform patch-based segmentation in a small target volume obtained from registration. Wang et al. [47] proposed a vertex regression-based method, which has good performance in the brain stem and mandible. However, it has relatively poorer performance in parotid glands, and they did not provide results of other organs. Zhu et al. [55] proposed the AnatomyNet, which claims a similar idea with our backbone S-Net. But they only reduce the number of down-sampling operations, which results in limited receptive fields thus generates outliers in predicted label maps. Our S-Net introduces densely connected ASPP, which not only enlarges receptive field but also learns better multi-scale feature representations. It outputs fewer errors, as shown in 95HD in Table 5.

Tong et al. [43] first introduced shape constraint into HaN OAR segmentation. They used an autoencoder to learn the latent shape representation from the training dataset. The main difference between our method and theirs lies in two-fold. First, we use adversarial training to learn shape representations, not only for ground truth labels, but also for estimated segmentation mask from the segmentation network. Therefore, the autoencoder can better encode subtle differences between estimated and ground-truth organ masks, thus providing more effective gradients to the segmentation network, and makes the prediction of segmentation network more consistent with ground truth shape. Second, they train the shape representation model of all organs in the original scale. However, as the extremely unbalanced ratio between background, large and small organs leads to insufficient training of small organs for the segmentation network, it will also affect the learning of shape representations by the autoencoder. Therefore, we integrate the adversarial shape loss only in our proposed SOS-Net, making the shape representation of small organs more effective. The significant performance improvement of our adversarial shape loss proves the effectiveness of our method.

Tang et al. [41] used a two-stage method, consisting of an OAR detection module and an OAR segmentation module, where the OAR detection module identifies the location and size of OAR and segmentation module is to further segment within OAR region. As the location and size of OARs are relatively consistent among patients, while using object detection module needs to carefully design anchor size and prone to have false positive, therefore, our method localizes OAR by OAR center location regression and the size of ROI is fixed based on statistics of the training set. By specially designed structure, our backbone S-Net has comparable performance with Tang et al. [41], and our final FocusNetv2 outperforms theirs by a large margin after introducing small organ segmentation branch and the proposed adversarial shape loss.

4.5.2 Ablation studies

We also conduct ablation studies of the impact of each component of FocusNetv2 on MICCAI’15 dataset. The results are exhibited in Table 6. We first conduct experiments on the impacts of the number of down-sampling operations in the S-Net. We train SEResUNet with 1, 2, 3 and 4 down-samplings (denoted as “SEResUNet_dX”) with cross-entropy loss respectively. The overall Dice score and Dice score of small organs goes up when the number of down-sampling operation decreases. The performance of SEResUNet_d2 and SEResUNet_d1 is similar, but SEResUNet_d2 has slightly better performance on small organs. Utilizing the focal loss and the Dice loss (denoted as “SEResUNet_d2_FL_DL”) improves the segmentation accuracy greatly. Further combining the ASPP module into S-Net (denoted as “SEResUNet_d2+ASPP”) can slightly boost the performance. Introducing the small-organ localization network and the small-organ segmentation network (denoted as “FocusNetv1”), the class imbalance problem can be solved. After adding shape constraint by adversarial autoencoder, the FocusNetv2 considerably boosts the Dice score of small organs by 5.94%. The “FocusNetv2 no concat” in Table 6 only takes the ROI-pooled features from S-Net as the input of the small organ segmentation network (SOS-Net), where the raw CT image is not concatenated. It also adopts the AAE shape constraint. We observe that the raw CT image has a great effect on the refinement of small organ segmentation.

At last, we conduct an experiment to show the effectiveness of adversarial training of autoencoder, shown by FocusNetv2 w/ origin AE in Table 6. The autoencoder is only trained to reconstruct the inputs of ground-truth shapes from the dataset. The autoencoder’s parameters are fixed to regularize the training of the segmentation network similarly to Tong et al. [43], except that the regularization is only applied to the small organ segmentation branches. As the autoencoder is not trained against the segmentation network, its regularization capability is limited. The performance of FocusNetv2 w/ origin AE is 4.11% less than the proposed FocusNetv2, which proves the effectiveness of our adversarial autoencoder.

| Method | AVG Dice | AVG Small Dice |

|---|---|---|

| SEResUNet_d4 | 68.31 | 38.64 |

| SEResUNet_d3 | 70.08 | 41.03 |

| SEResUNet_d2 | 71.19 | 45.58 |

| SEResUNet_d1 | 71.18 | 44.3 |

| SEResUNet_d2_FL_DL | 80.15 | 66.48 |

| S-Net (SEResUNet_d2+ASPP) | 81.04 | 68.71 |

| FocusNetv1 | 81.90 | 71.40 |

| FocusNetv2 no concat | 82.40 | 72.84 |

| FocusNetv2 w/ origin AE | 83.14 | 73.23 |

| FocusNetv2 | 84.51 | 77.34 |

4.5.3 Robustness of SOL-Net and SOS-Net

| Localization | 3mm | 4mm | 5mm |

| Error | Id. Rate | Id. Rate | Id. Rate |

| 2.7 mm | 63.3% | 83.3% | 100% |

| SOL-Net | 0 mm | 1 mm | 3 mm | 5 mm | 7 mm | 9 mm |

| 77.33 | 76.83 | 77.13 | 76.96 | 76.87 | 75.07 | 73.54 |

The segmentation performance of small organs might be affected by the small-organ localization accuracy. If the organ bounding boxes deviate too far away from the ground-truth location, the SOS-Net would have difficulty on segmenting the small organs accurately. Therefore, we conducted experiments to analyze the robustness of our proposed SOL-Net and SOS-Net. First, we analyzed the localization accuracy of SOL-Net, as shown in Table 7. SOL-Net’s average localization error (average distance between the estimated centroids and ground truth centroids) for all small organs is about 2.7mm. We further measure the small-organ localization rates within different distances. An organ is considered to be correctly localized if its estimated centroid is within a certain distance from the ground truth centroid. 63.3% of small organs are localized within 3mm, 83.3% can be localized within 4mm. If we extend the distance to 5mm, all small organs can be localized correctly.

Although our SOL-Net can localize small organs with small errors, we conducted another experiment to explore the effect of localization error on the following segmentation model. After the segmentation network, including S-Net, SOL-Net, SOS-Net, is trained, all parameters are fixed. Then, instead of using the localization bounding boxes from the SOL-Net, we obtain the small organs’ ground truth boxes, and add random translations to simulate the localization errors. Then the randomly shifted boxes are used to guide ROI-pooling for segmentation by the SOS-Net. The results are shown in Table 8. For localization error within 5mm, there is a slight degradation of segmentation accuracy. Even the localization errors reach up to 9 mm, the performance degradation is still within an acceptable range. Considering that our SOL-Net’s 5mm localization rate reaches 100%, our method is robust against the localization errors of SOL-Net.

5 Limitations and future works

Although our method demonstrates strong performance and can accelerate the radiotherapy planning process in clinical applications, it is still far from perfect. Our shape constraints currently only apply to small organs, as there also exists a sample imbalance problem when training the shape autoencoder. Directly training an autoencoder for all organs would favor large organs while ignoring much of the small organs, making it difficult to constrain the segmentation results of small organs. Considering that the S-Net has good performance for large organs, while there is significant variance among patients for small organs, we only apply shape constraint to small organs with blur boundaries to encourage the network to generate predictions in agreement with shape priors. Besides, our current training has multiple stages, which makes the training process complex. This is a consideration from a performance perspective. In our experiments, the network cannot achieve optimal performance when end-to-end training.

There are several main directions for future improvements. The first is to adopt novel CNN technologies, such as attention mechanisms [45, 13], to increase the capacity and capability of backbone networks. Relative position encoding [9] can be utilized to learn relative position information between and within organs. Besides, the model’s uncertainty estimation [21] can be provided, which is of great significance for clinical applications. It allows the doctor to pay more attention to the regions where the model is uncertain. Furthermore, our proposed adversarial shape constraints can be applied to more organs and body parts to realize the fully automatic whole-body radiotherapy planning.

6 Conclusion

We proposed a novel segmentation framework with adversarial shape constraint, which outperforms state-of-the-art methods on the segmentation of imbalanced OARs in HaN CT images with large margins. The framework contains two parts, a two-stage segmentation network and an adversarial autoencoder as shape regularization. The two-stage segmentation network is specifically designed for unbalanced problem of OARs in HaN CT images. By reducing the number of down-samplings and utilizing multi-scale features learned by DenseASPP, our S-Net can guarantee the accuracy of the segmentation of large organs. Trained to predict small-organ center location maps, our SOL-Net can generate accurate small-organ central locations. SOS-Net can solve the unbalanced class problem, and high-resolution feature volumes can be utilized to accurately segment small organs and thus further boost the performance. With our new adversarial autoencoder, our framework does not only learn the shape representations, but also encodes more discriminative features for organ masks. It is able to provide better supervision for training the segmentation network. Extensive experiments on a large amount of real patient data and the MICCAI 2015 dataset show the effectiveness of our proposed framework.

Acknowledgments

This work has been supported in part by the General Research Fund through the Research Grants Council of Hong Kong under Grants CUHK14208417 and CUHK14239816, in part by the Hong Kong Innovation and Technology Support Programme (No. ITS/312/18FX), in part by NSF grants CCF-1733843.

References

- Al Arif et al. [2018] Al Arif, S.M.R., Knapp, K., Slabaugh, G., 2018. Spnet: Shape prediction using a fully convolutional neural network, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 430–439.

- Avants et al. [2008] Avants, B.B., Epstein, C.L., Grossman, M., Gee, J.C., 2008. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Medical image analysis 12, 26–41.

- Brosch et al. [2016] Brosch, T., Tang, L.Y., Yoo, Y., Li, D.K., Traboulsee, A., Tam, R., 2016. Deep 3d convolutional encoder networks with shortcuts for multiscale feature integration applied to multiple sclerosis lesion segmentation. IEEE transactions on medical imaging 35, 1229–1239.

- Chen et al. [2018] Chen, L.C., Zhu, Y., Papandreou, G., Schroff, F., Adam, H., 2018. Encoder-decoder with atrous separable convolution for semantic image segmentation, in: Proceedings of the European conference on computer vision (ECCV), pp. 801–818.

- Christ et al. [2016] Christ, P.F., Elshaer, M.E.A., Ettlinger, F., Tatavarty, S., Bickel, M., Bilic, P., Rempfler, M., Armbruster, M., Hofmann, F., D’Anastasi, M., et al., 2016. Automatic liver and lesion segmentation in ct using cascaded fully convolutional neural networks and 3d conditional random fields, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 415–423.

- Çiçek et al. [2016] Çiçek, Ö., Abdulkadir, A., Lienkamp, S.S., Brox, T., Ronneberger, O., 2016. 3d u-net: learning dense volumetric segmentation from sparse annotation, in: International conference on medical image computing and computer-assisted intervention, Springer. pp. 424–432.

- Commowick and Malandain [2007] Commowick, O., Malandain, G., 2007. Efficient selection of the most similar image in a database for critical structures segmentation, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 203–210.

- Commowick et al. [2009] Commowick, O., Warfield, S.K., Malandain, G., 2009. Using frankenstein’s creature paradigm to build a patient specific atlas, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 993–1000.

- Dai et al. [2019] Dai, Z., Yang, Z., Yang, Y., Carbonell, J., Le, Q.V., Salakhutdinov, R., 2019. Transformer-xl: Attentive language models beyond a fixed-length context. arXiv preprint arXiv:1901.02860 .

- Dou et al. [2016] Dou, Q., Chen, H., Jin, Y., Yu, L., Qin, J., Heng, P.A., 2016. 3d deeply supervised network for automatic liver segmentation from ct volumes, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 149–157.

- Fortunati et al. [2013] Fortunati, V., Verhaart, R.F., van der Lijn, F., Niessen, W.J., Veenland, J.F., Paulides, M.M., van Walsum, T., 2013. Tissue segmentation of head and neck ct images for treatment planning: a multiatlas approach combined with intensity modeling. Medical physics 40, 071905.

- Fritscher et al. [2016] Fritscher, K., Raudaschl, P., Zaffino, P., Spadea, M.F., Sharp, G.C., Schubert, R., 2016. Deep neural networks for fast segmentation of 3d medical images, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 158–165.

- Fu et al. [2019] Fu, J., Liu, J., Tian, H., Li, Y., Bao, Y., Fang, Z., Lu, H., 2019. Dual attention network for scene segmentation, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3146–3154.

- Gao et al. [2019] Gao, Y., Huang, R., Chen, M., Wang, Z., Deng, J., Chen, Y., Yang, Y., Zhang, J., Tao, C., Li, H., 2019. Focusnet: Imbalanced large and small organ segmentation with an end-to-end deep neural network for head and neck ct images, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 829–838.

- Han et al. [2008] Han, X., Hoogeman, M.S., Levendag, P.C., Hibbard, L.S., Teguh, D.N., Voet, P., Cowen, A.C., Wolf, T.K., 2008. Atlas-based auto-segmentation of head and neck ct images, in: International Conference on Medical Image Computing and Computer-assisted Intervention, Springer. pp. 434–441.

- Han et al. [2018] Han, Z., Wei, B., Mercado, A., Leung, S., Li, S., 2018. Spine-gan: Semantic segmentation of multiple spinal structures. Medical image analysis 50, 23–35.

- He et al. [2016] He, K., Zhang, X., Ren, S., Sun, J., 2016. Deep residual learning for image recognition, in: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778.

- Hu et al. [2018] Hu, J., Shen, L., Sun, G., 2018. Squeeze-and-excitation networks, in: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- Ibragimov and Xing [2017] Ibragimov, B., Xing, L., 2017. Segmentation of organs-at-risks in head and neck ct images using convolutional neural networks. Medical physics 44, 547–557.

- Kamnitsas et al. [2017] Kamnitsas, K., Ledig, C., Newcombe, V.F., Simpson, J.P., Kane, A.D., Menon, D.K., Rueckert, D., Glocker, B., 2017. Efficient multi-scale 3d cnn with fully connected crf for accurate brain lesion segmentation. Medical image analysis 36, 61–78.

- Lakshminarayanan et al. [2017] Lakshminarayanan, B., Pritzel, A., Blundell, C., 2017. Simple and scalable predictive uncertainty estimation using deep ensembles, in: Advances in neural information processing systems, pp. 6402–6413.

- Lei et al. [2020] Lei, N., An, D., Guo, Y., Su, K., Liu, S., Luo, Z., Yau, S.T., Gu, X., 2020. A geometric understanding of deep learning. Engineering .

- Van der Lijn et al. [2011] Van der Lijn, F., De Bruijne, M., Klein, S., Den Heijer, T., Hoogendam, Y.Y., Van der Lugt, A., Breteler, M.M., Niessen, W.J., 2011. Automated brain structure segmentation based on atlas registration and appearance models. IEEE transactions on medical imaging 31, 276–286.

- Lin et al. [2017] Lin, T.Y., Goyal, P., Girshick, R., He, K., Dollár, P., 2017. Focal loss for dense object detection, in: Proceedings of the IEEE international conference on computer vision, pp. 2980–2988.

- Long et al. [2015] Long, J., Shelhamer, E., Darrell, T., 2015. Fully convolutional networks for semantic segmentation, in: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 3431–3440.

- Merkow et al. [2016] Merkow, J., Marsden, A., Kriegman, D., Tu, Z., 2016. Dense volume-to-volume vascular boundary detection, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 371–379.

- Milletari et al. [2016] Milletari, F., Navab, N., Ahmadi, S.A., 2016. V-net: Fully convolutional neural networks for volumetric medical image segmentation, in: 2016 Fourth International Conference on 3D Vision (3DV), IEEE. pp. 565–571.

- Mlynarski et al. [2020] Mlynarski, P., Delingette, H., Alghamdi, H., Bondiau, P.Y., Ayache, N., 2020. Anatomically consistent cnn-based segmentation of organs-at-risk in cranial radiotherapy. Journal of Medical Imaging 7, 014502.

- Mosinska et al. [2018] Mosinska, A., Marquez-Neila, P., Koziński, M., Fua, P., 2018. Beyond the pixel-wise loss for topology-aware delineation, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3136–3145.

- Newell et al. [2016] Newell, A., Yang, K., Deng, J., 2016. Stacked hourglass networks for human pose estimation, in: European conference on computer vision, Springer. pp. 483–499.

- Oktay et al. [2017] Oktay, O., Ferrante, E., Kamnitsas, K., Heinrich, M., Bai, W., Caballero, J., Cook, S.A., De Marvao, A., Dawes, T., O‘Regan, D.P., et al., 2017. Anatomically constrained neural networks (acnns): application to cardiac image enhancement and segmentation. IEEE transactions on medical imaging 37, 384–395.

- Ourselin et al. [2000] Ourselin, S., Roche, A., Prima, S., Ayache, N., 2000. Block matching: A general framework to improve robustness of rigid registration of medical images, in: International Conference on Medical Image Computing And Computer-Assisted Intervention, Springer. pp. 557–566.

- Qazi et al. [2011] Qazi, A.A., Pekar, V., Kim, J., Xie, J., Breen, S.L., Jaffray, D.A., 2011. Auto-segmentation of normal and target structures in head and neck ct images: A feature-driven model-based approach. Medical physics 38, 6160–6170.

- Raudaschl et al. [2017] Raudaschl, P.F., Zaffino, P., Sharp, G.C., Spadea, M.F., Chen, A., Dawant, B.M., Albrecht, T., Gass, T., Langguth, C., Lüthi, M., et al., 2017. Evaluation of segmentation methods on head and neck ct: auto-segmentation challenge 2015. Medical physics 44, 2020–2036.

- Ren et al. [2018] Ren, X., Xiang, L., Nie, D., Shao, Y., Zhang, H., Shen, D., Wang, Q., 2018. Interleaved 3d-cnn s for joint segmentation of small-volume structures in head and neck ct images. Medical physics 45, 2063–2075.

- Rohlfing et al. [2004] Rohlfing, T., Brandt, R., Menzel, R., Maurer Jr, C.R., 2004. Evaluation of atlas selection strategies for atlas-based image segmentation with application to confocal microscopy images of bee brains. NeuroImage 21, 1428–1442.

- Ronneberger et al. [2015] Ronneberger, O., Fischer, P., Brox, T., 2015. U-net: Convolutional networks for biomedical image segmentation, in: International Conference on Medical image computing and computer-assisted intervention, Springer. pp. 234–241.

- Roth et al. [2016] Roth, H.R., Lu, L., Farag, A., Sohn, A., Summers, R.M., 2016. Spatial aggregation of holistically-nested networks for automated pancreas segmentation, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 451–459.

- Roth et al. [2014] Roth, H.R., Lu, L., Seff, A., Cherry, K.M., Hoffman, J., Wang, S., Liu, J., Turkbey, E., Summers, R.M., 2014. A new 2.5 d representation for lymph node detection using random sets of deep convolutional neural network observations, in: International conference on medical image computing and computer-assisted intervention, Springer. pp. 520–527.

- Tan et al. [2018] Tan, C., Zhao, L., Yan, Z., Li, K., Metaxas, D., Zhan, Y., 2018. Deep multi-task and task-specific feature learning network for robust shape preserved organ segmentation, in: 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), IEEE. pp. 1221–1224.

- Tang et al. [2019] Tang, H., Chen, X., Liu, Y., Lu, Z., You, J., Yang, M., Yao, S., Zhao, G., Xu, Y., Chen, T., et al., 2019. Clinically applicable deep learning framework for organs at risk delineation in ct images. Nature Machine Intelligence 1, 480–491.

- Thirion [1998] Thirion, J.P., 1998. Image matching as a diffusion process: an analogy with maxwell’s demons. Medical image analysis 2, 243–260.

- Tong et al. [2018] Tong, N., Gou, S., Yang, S., Ruan, D., Sheng, K., 2018. Fully automatic multi-organ segmentation for head and neck cancer radiotherapy using shape representation model constrained fully convolutional neural networks. Medical physics 45, 4558–4567.

- Wang et al. [2014] Wang, W., Huang, Y., Wang, Y., Wang, L., 2014. Generalized autoencoder: A neural network framework for dimensionality reduction, in: Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pp. 490–497.

- Wang et al. [2018a] Wang, X., Girshick, R., Gupta, A., He, K., 2018a. Non-local neural networks, in: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 7794–7803.

- Wang et al. [2017] Wang, Z., Wei, L., Wang, L., Gao, Y., Chen, W., Shen, D., 2017. Hierarchical vertex regression-based segmentation of head and neck ct images for radiotherapy planning. IEEE Transactions on Image Processing 27, 923–937.

- Wang et al. [2018b] Wang, Z., Wei, L., Wang, L., Gao, Y., Chen, W., Shen, D., 2018b. Hierarchical vertex regression-based segmentation of head and neck ct images for radiotherapy planning. IEEE Transactions on Image Processing 27, 923–937.

- Xu et al. [2017] Xu, Y., Géraud, T., Bloch, I., 2017. From neonatal to adult brain mr image segmentation in a few seconds using 3d-like fully convolutional network and transfer learning, in: 2017 IEEE International Conference on Image Processing (ICIP), IEEE. pp. 4417–4421.

- Xue et al. [2018] Xue, Y., Xu, T., Zhang, H., Long, L.R., Huang, X., 2018. Segan: Adversarial network with multi-scale l 1 loss for medical image segmentation. Neuroinformatics 16, 383–392.

- Yang et al. [2017] Yang, D., Xu, D., Zhou, S.K., Georgescu, B., Chen, M., Grbic, S., Metaxas, D., Comaniciu, D., 2017. Automatic liver segmentation using an adversarial image-to-image network, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 507–515.

- Yang et al. [2018] Yang, M., Yu, K., Zhang, C., Li, Z., Yang, K., 2018. Denseaspp for semantic segmentation in street scenes, in: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- Yi et al. [2019] Yi, J., Wu, P., Jiang, M., Huang, Q., Hoeppner, D.J., Metaxas, D.N., 2019. Attentive neural cell instance segmentation. Medical image analysis 55, 228–240.

- Zhang et al. [2009] Zhang, X., Tian, J., Wu, Y., Zheng, J., Deng, K., 2009. Segmentation of head and neck ct scans using atlas-based level set method, in: Proceedings of Head And Neck Auto-Segmentation Challenge Workshop, p. 56.

- Zhu et al. [2016] Zhu, J.Y., Krähenbühl, P., Shechtman, E., Efros, A.A., 2016. Generative visual manipulation on the natural image manifold, in: Proceedings of European Conference on Computer Vision (ECCV).

- Zhu et al. [2019] Zhu, W., Huang, Y., Zeng, L., Chen, X., Liu, Y., Qian, Z., Du, N., Fan, W., Xie, X., 2019. Anatomynet: Deep learning for fast and fully automated whole-volume segmentation of head and neck anatomy. Medical physics 46, 576–589.

Appendix

6.1 Network details

In this section, we provide more details about our network structure, including the segmentation network and the autoencoder.

The structure of autoencoder is shown in Table 9, which is a convolutional autoencoder. Batch normalization is also used before each ReLU layer except for the FC layer. We use trilinear upsampling layers follows convolution layers instead of transpose convolution layers in the decoder. The output of the first FC layer, which is a 512 dimentional feature vector, is the latent code of the autoencoder.

The structure of segmentation network is shown in Table 10, including S-Net, SOL-Net, and SOS-Net. For SOS-Net, the first maxpooling uses kernel size of (1,2,2) as the spacing of CT images is anisotropy, and is larger in z-axis. In the decoder, SOS-Net uses trilinear upsampling layers follow SEResBlocks to recover the spatial resolution.

| Kernel | Stride/Scale | Channels | None-linear | |

|---|---|---|---|---|

| Conv3D | 5 | 2 | 64 | ReLU |

| Conv3D | 5 | 2 | 128 | ReLU |

| Conv3D | 5 | 1 | 128 | ReLU |

| Conv3D | 5 | 2 | 256 | ReLU |

| FC | - | - | 512 | None |

| FC | - | - | 256 | ReLU |

| Upsample | - | 2 | - | - |

| Conv3D | 5 | 1 | 128 | ReLU |

| Conv3D | 5 | 1 | 128 | ReLU |

| Upsample | - | 2 | - | - |

| Conv3D | 5 | 1 | 64 | ReLU |

| Upsample | - | 2 | - | - |

| Conv3D | 5 | 1 | 1 | ReLU |

| layer name | Channels | |

| S-Net | Conv3D stride=1, kernel size=3 | 32 |

| SEResBlock | 32 | |

| maxpooling kernel size=(1,2,2) | - | |

| SEResBlock | 48 | |

| maxpooling kernel size=(2,2,2) | - | |

| SEResBlock | 64 | |

| SEResBlock , dilation rate=2 | 64 | |

| DenseASPP dilation rate=3,6,12,18 | 320 | |

| Upsample scale=(2,2,2) | - | |

| SEResBlock | 48 | |

| Upsample scale=(1,2,2) | - | |

| SEResBlock | 32 | |

| Conv3D stride=1, kernel size=1 | 1 | |

| SOL-Net | SEResBlock | 32 |

| Conv3D stride=1, kernel size=1 | Num small organs | |

| SOS-Net | SEResBlock | 32 |

| Conv3D stride=1, kernel size=1 | 1 |

6.2 More visualization results