FM-Fusion: Instance-aware Semantic Mapping

Boosted by Vision-Language Foundation Models

Abstract

Semantic mapping based on the supervised object detectors is sensitive to image distribution. In real-world environments, the object detection and segmentation performance can lead to a major drop, preventing the use of semantic mapping in a wider domain. On the other hand, the development of vision-language foundation models demonstrates a strong zero-shot transferability across data distribution. It provides an opportunity to construct generalizable instance-aware semantic maps. Hence, this work explores how to boost instance-aware semantic mapping from object detection generated from foundation models. We propose a probabilistic label fusion method to predict close-set semantic classes from open-set label measurements. An instance refinement module merges the over-segmented instances caused by inconsistent segmentation. We integrate all the modules into a unified semantic mapping system. Reading a sequence of RGB-D input, our work incrementally reconstructs an instance-aware semantic map. We evaluate the zero-shot performance of our method in ScanNet and SceneNN datasets. Our method achieves 40.3 mean average precision (mAP) on the ScanNet semantic instance segmentation task. It outperforms the traditional semantic mapping method significantly. Code is available at https://github.com/HKUST-Aerial-Robotics/FM-Fusion.

Index Terms:

Semantic Scene Understanding; Mapping; RGB-D PerceptionI Introduction

Istance-aware semantic mapping in indoor environments is a key module for an autonomous system to achieve a higher level of intelligence. Based on the semantic map, a mobile robot can detect loop more robust[1] and efficiently[2]. The current methods rely on supervised object detectors like Mask R-CNN [3] to detect semantic instances and fuse them into an instance-level semantic map. However, the supervised object detectors are trained in specific data distribution and lack generalization ability. In deploying them in other real-world scenarios without fine-tune the networks, their performance is seriously degenerated. As a result, the reconstructed semantic map is also of poor quality in the target environment.

On the other hand, foundation models have been developing rapidly in vision-language modality [4] [5]. Multiple foundation models are combined to detect and segment objects. GroundingDINO[6], the latest State-of-the-Arts (SOTA) open-set object detection network, reads a text prompt and performs vision-language modal fusion. It detects objects with bounding boxes and open-set labels. The open-set labels are open vocabulary semantic classes. GroundingDINO has achieved 52.5 mAP on the zero-shot COCO object detection benchmark. It is higher than most of the supervised object detectors. Moreover, the image tagging model recognizes anything (RAM) [7] predicts semantic tags from an image. The tags can be encoded as a text prompt and sent to GroundingDINO. Vision foundation model segment anything (SAM)[4] generates precise zero-shot image segmentation results from geometric prompts, including a bounding box prompt. SAM can generate high-quality masks for detection results from GroundingDINO.

RAM, GroundingDINO, and SAM can be combined to detect objects in open-set labels and high-quality masks. All of these foundation models are trained using large-scale data and demonstrate strong zero-shot generalization ability in various image distributions. They provide a new approach for the autonomous system to reconstruct a generalizable instance-aware semantic map. This paper explores how to fuse object detection from foundation models into an instance-aware semantic map.

To fuse object detection from foundation models, two challenges should be addressed. Firstly, the foundation models generate open-set tags or labels. However, the semantic mapping task requires each constructed instance to be classified in close-set semantic classes. A label fusion method is required to predict an instance’s semantic class from a sequence of observed open-set labels. Secondly, SAM is operating on a single image. In dense indoor environments, SAM frequently generates inconsistent instance masks at changed viewpoints. It results in over-segmented and noisy instance volumes. Refining instance volumes integrated from inconsistent instance segmentation results is the challenge. However, these challenges have not been considered in traditional semantic mapping works. If foundation models are directly used in a traditional semantic mapping system, they reconstruct semantic instances in a less satisfied quality.

To address such challenges, we propose a probabilistic label fusion method following the Bayes filter algorithm. Meanwhile, we refine the instance volume via merging over-segmentation and fuse instance volume with the global volumetric map. The label fusion and instance refinement modules are incrementally run in our system. As shown in Figure 1, reading a sequence of RGB-D frames, FM-Fusion fuses the detections from foundation models and runs simultaneously with a traditional SLAM system. Our main contributions are:

-

•

An approach to fuse the object detections from vision-language foundation models into an instance-aware semantic map. The foundation models are used without fine-tune.

-

•

A probabilistic label fusion method that predicts close-set semantic classes from open-set label measurements.

-

•

Instances are refined to address inconsistent masks at changed viewpoints.

- •

II Related Works

II-A Vision-Language Foundation Models

The image tagging foundation model RAM [7], recognizes the semantic categories in the image and generates related tags. The open-set object detector, such as GLIP [10] and GroundingDINO [6], reads a text prompt to detect the objects. The text prompt can be a sentence or a series of semantic labels. It extracts the regional image embeddings and matches the image embedding to the phrase of the text prompt through a grounding scheme. The network is trained using contrastive learning to align the image embeddings and text embeddings. The detection results contain a bounding box and a set of open-set label measurements. SAM[4] can precisely segment any object with a geometric prompt. It is trained with 11M images and evaluated in zero-shot benchmarks. SAM demonstrates strong generalization ability across data distribution without fine-tune. The combined foundation models read an image and detect objects with open-set labels and masks. We denote them as RAM-Grounded-SAM. 111https://github.com/IDEA-Research/Grounded-Segment-Anything.

The foundation models have been applied in a series of downstream tasks without fine-tuning. Without semantic prediction, SAM3D [11] projects the image-wise segmentation from SAM to a 3D point cloud map. It further merges the segments with geometric segments generated from graph-based segmentation [12]. SAM is also combined with a neural radiance field to generate a novel view of objects [13]. On the other hand, combining the SAM or other foundation models with semantic mapping is still an open area.

II-B Semantic Mapping

SemanticFusion [14] is a pioneer work in semantic mapping. It trains a lightweight CNN-based semantic segmentation network [15] on the NYUv2 dataset. SemanticFusion incrementally fuses the semantic labels, ignoring the instance-level information, into each surfel of the global volumetric map. In Bayesian fusing the label measurement, the semantic probability is directly provided by the object detector. Relying on a pre-trained Mask R-CNN on the COCO dataset, Kimera [16] uses similar methods to fuse semantic labels into a voxel map. It clusters the nearby voxels with identical semantic labels into instances. Kimera further constructs a scene graph, which is a hierarchical map representation. Based on Kimera, Hydra [2] utilizes the scene graph to detect loops more efficiently.

On the other hand, Fusion++ [17] directly detects semantic instances on images and fuses them into instance-wise volumetric maps. It further demonstrates that semantic landmarks can be used in loop detection. Later methods use similar methods to construct semantic instance maps but utilize the semantic landmarks in novel methods to detect loops [1][18].

Rather than a pure dense map such as a surfel map or voxel map, Voxblox++ [19] first generates geometric segments on each depth frame[20]. If the object detection masks the complete region of an instance, it can merge those broken segments generated from geometric segmentation. Then, the merged segments with their labels are fused into a global segment map through a data association strategy.

The main limitation of the current semantic mapping methods is the lack of ability to generalize. The supervised object detection networks are trained with limited source data. Considering the majority of target SLAM scenarios do not provide annotated semantic data, object detection can not be fine-tuned on the target distribution. To avoid the issue of generalization, Kimera has to experiment in a synthetic dataset[16], including some experiments that rely on ground-truth segmentation. Lin etc. [1] sets up an environment with sparsely distributed objects to reconstruct a semantic map. Voxblox++ evaluates a few of the 9 semantic classes in 10 scans. Although they propose novel semantic SLAM methods, the semantic mapping module prevents their methods from being used in other real-world scenes.

To enhance robustness in the distribution shift, our method fuses the object detections from foundation models to reconstruct the instance-aware semantic map. We evaluate its zero-shot performance on the ScanNet semantic instance segmentation benchmark. It involves 20 classes in the NYUv2 label-set and evaluates their average precision(AP) in 3D space. We also show the qualitative results in several SceneNN scans, which have been used by the previous semantic mapping works.

III Fuse Multi-frame Detections

III-A Overview

As shown in Figure 2, FM-Fusion reads an RGB-D sequence and reconstructs a semantic instance map. Each semantic instance is represented as , where is its predicted semantic class and is its voxel grid map. is predicted as a label over the NYUv2 label-set . At each RGB-D frame at frame index , RAM generates a set of possible object tags. The valid tags are encoded into the text prompt . GroundingDINO generates object detections with each of the detection , where is the predicted open-set label, is the corresponding similarity score and is the frame-wise text prompt. For each , SAM generates an object mask .

III-B Prepare the object detector

We first construct open-set labels of our interests . RAM generates various tags. Many of them are not correlated with the pre-defined labels . The labels of interest can be selected by sampling a histogram of measured labels for each semantic class in . In the ScanNet experiment, we select open-set labels to construct . Only the tags belonging to are encoded into the and sent to GroundingDINO. GroundingDINO matches each detected object with the tags in the text prompt. The tags in and label measurements in each are all from the label-set .

In a single image frame, RAM can miss some objects in its generated tags due to occlusion. The missing tags further cause GroundingDINO to detect objects incorrectly. It is a natural limitation of running foundation models on a single image. To address it, we encode the detected labels in adjacent frames into the text prompt. The augmented text prompt , where is the valid tags from RAM and is a set of measured labels in previous adjacent frames. All the tags in and labels in belong to the . The text prompt augmentation can reduce the missing tags generated from a single image. More complete tags improve the detection performance of GroundingDINO.

III-C Data association and integration

In our system, each instance maintains an individual voxel grid map , similar to Fusion++ [17]. Meanwhile, the SLAM module integrates a global TSDF map [21] separately. The advantage of separating semantic mapping and global volumetric mapping is that false or missed object detection can not affect the global volumetric map. So, in each RGB-D frame, all the observed sub-volumes are integrated into the global TSDF map despite the detection variances.

In each detection frame, data association is conducted between detection results and volumes of the existing instances. Specifically, the observed instance voxels are first queried. They can be searched by projecting the depth image into the voxel grid map of all the instances. If an instance is observed, its voxels are projected to the current RGB frame. For a detection and a projected instance , their intersection over union (IoU) can be calculated , where is a detection mask and is the projected mask of an existed instance. If is larger than a threshold, the detection is associated with instance .

After data association, we integrate the voxel grid map of matched instances accordingly. Those unmatched detections initiate new instances. An instance voxel grid map is integrated using the traditional voxel map fusion method [21]. Specifically, we raycast the masked depth of a detected object and update all of its observed voxels.

III-D Probabilistic label fusion

As shown in Figure 3, an object is observed by GroundingDINO across frames. Each generated detection result contains multiple label measurements , the corresponding similarity score and a text prompt , where . Based on the associated detections, we predict a probability distribution , where and is the index of the image frame.

We follow the Bayes filter algorithm [22] to fuse open-set label measurements and propagate them along the image sequence. The input to the Bayesian label fusion is detection result , semantic probability distribution at the last frame , and a uniform control input . And it predicts the latest semantic probability distribution .

| (1) |

| (2) |

| (3) |

| (4) |

| (5) |

| (6) |

The key part in our Bayesian label fusion module is the likelihood function , as shown in equation (5). The score likelihood is given by GroundingDINO, while label likelihood should be statistic summarized. Since GroundingDINO can only detect a label if it is given in the text prompt, the label likelihood can be transmitted as equation (6). denotes the detected label exists in the text prompt .

Here, we further expand the label likelihood in equation (6) into two conditional probabilities,

| (7) |

The first term is a detection likelihood while the second term is a tagging likelihood. They can be statistically summarized using the detection results from GroundingDINO and tagging results from RAM. We follow the equation (7) to construct a label likelihood matrix over and . In the ScanNet training set, we sample image frames with tagging results, detection results, and ground-truth annotation to summarize the statistics. In the Bayesian update step in equation (5), the label likelihood between each pair of can be queried from the constructed label likelihood matrix.

As shown in Figure 4(a), parts of the constructed label likelihood matrix are visualized, while the complete likelihood matrix involves the entire and . For comparison, we construct a manually assigned label likelihood matrix similar to Kimera. As shown in Figure 4, the statistic summarized likelihood matrix is quite different from the manually assigned one. In the statistical label likelihood, each semantic class can be detected by its similar open-set labels at various probabilities. Those cells beyond the diagonal can also have likelihood values, indicating the probability of falsely measured labels. The summarized likelihood matrix following equation (7) describes the probability distribution of label measurements reasonably.

In actual implementation, the multiplicative measurement update in equation (3) frequently generates over-confident probability distribution, which is also reported in Fusion++[17]. It causes can be easily dominated by the latest measurement even if previous label measurements are all different with . As a result, in the measurement update, we propagate the probability distribution by weighted addition.

| (8) |

Then, the predicted semantic class for each instance at frame is .

IV Instance refinement

IV-A Merge over-segmentation

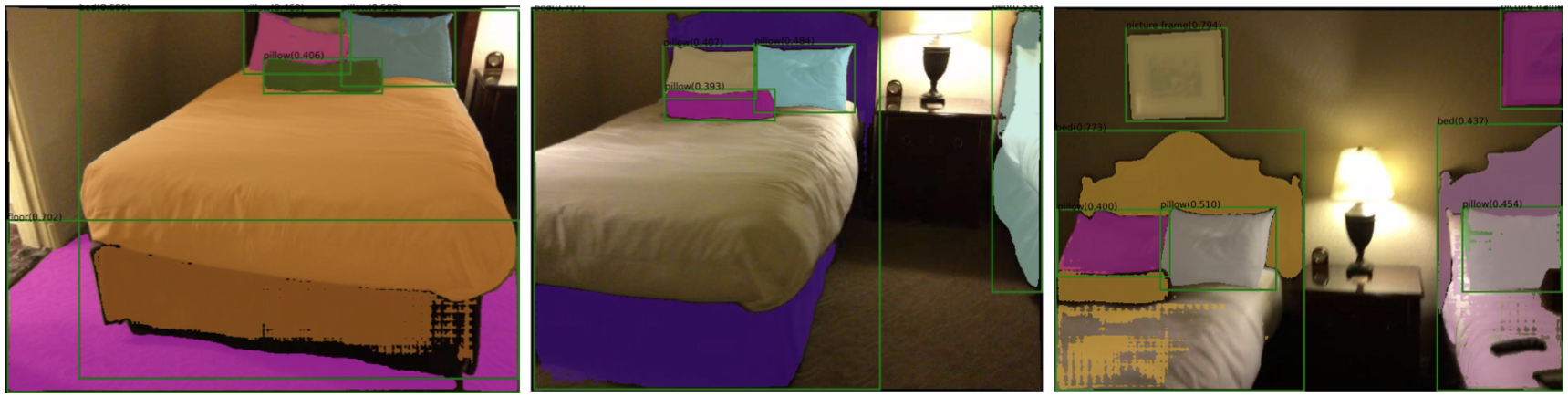

Although SAM has demonstrated promising segmentation on a single image, it generates inconsistent instance masks at changed viewpoints, as shown in Figure 5. The inconsistent masks prevent a correct data association between detections and observed instances. Those mismatched detections are initialized as new instances and cause over-segmentation, as shown in Figure 6(a).

The inconsistent instance mask is a natural limitation for image-based segmentation networks, including SAM and Mask R-CNN. To address it, we utilize spatial overlap information to merge the over-segmentation. For a pair of instances at detection frame , where is volumetric larger than , their semantic similarity and 3D IoU are calculated,

| (9) |

| (10) |

where is the normalized semantic distribution, is an instance voxel grid map and is the inflated voxel grid map. The voxel inflation is designed to enhance 3D IoU for instances with sparse volume. It can be directly generated by scaling the length of each voxel in . If the semantic similarity and 3D IoU are both larger than the corresponding thresholds, is integrated into the voxel grid map of and further cleared from the instance map. As shown in Figure 6(b), over-segmented instances caused by inconsistent object masks are merged.

IV-B Instance-geometry fusion

The instance-wise voxel grid map can contain voxel outliers due to noisy depth images being integrated. On the other hand, the global TSDF map is a precise 3D geometric representation. It is because the global TSDF map integrates all the observed volumes in each RGB-D frame, while instance volume only integrates a masked RGB-D frame if the corresponding instance is correctly detected. To filter voxel outliers, we fuse instance-wise voxel grid map with the point cloud extracted from the global TSDF map. As shown in Figure 7, those voxels in that are not occupied by any point in are outliers and have been removed. The fused voxel grid map represents the instance volume precisely.

V Experiment

We chose the public dataset ScanNet and SceneNN to evaluate the semantic mapping quality. In ScanNet, 30 scans from its validation set are used. We evaluated its semantic instance segmentation by average precision (AP). In another experiment, we selected 5 scans from SceneNN and evaluated the generalization ability of our method. The SceneNN scans are also used by the previous method [19]. In all the experiments, camera poses are provided by the dataset.

We compared our method with Kimera 222https://github.com/MIT-SPARK/Kimera-Semantics and a self-implemented Fusion++. To enable Kimera to read open-set labels , we converted each label in to a semantic class in NYUv2 label-set . The hard association between are decided by an academic ChatGPT 333https://chatgpt.ust.hk. Then, Kimera can reconstruct a point cloud with semantic labels. To further generate instance-aware point cloud, we employed the geometric segmentation method known as ”Cluster-All” [23]. It clusters the nearby points with identical semantic labels into an instance. Cluster-All is applied as a post-processing step on the reconstructed semantic map from Kimera. Notice that Cluster-All is very similar to the post-processing module provided by Kimera. But we use Cluster-All for convenient implementation. Meanwhile, Fusion++ is implemented based on our system modules. Compared with the original Fusion++ method, the main difference is that our implemented version does not maintain a foreground probability for each voxel. Instead, we updated the voxel’s weight and filter background voxels using their weights. In experiments with traditional object detection, we used a Mask R-CNN backbone with FPN101 image backbone. We evaluated a pre-trained Mask R-CNN and a fine-tuned Mask R-CNN. The pre-trained one is trained in COCO instance segmentation dataset, while we also fine-tuned it using ScanNet dataset.

In implementation, we utilized Open3D[24] toolbox to construct the global TSDF map and instance-wise voxel grid map. The global TSDF map is integrated for every RGB-D frame, while our method and all baselines run in every frames to integrate the detected instances. In all the experiments, the RGB-D images are in dimension and the voxel length is set to be cm. The experiment is run on an Intel-i7 computer with Nvidia RTX-3090 GPU in an offline fashion.

| Method | cab. | bed | cha. | sof. | tab. | door | win. | bkf. | pic. | cou. | desk | cur. | ref. | show. | toi. | sink | bath. | oth. | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| M-CNN& Kimera | 0.0 | 6.4 | 10.0 | 25.1 | 17.3 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 24.6 | 0.0 | 10.4 | 4.3 | 0.0 | 0.0 | 5.4 |

| M-CNN& Fusion++ | 0.0 | 27.1 | 3.7 | 14.7 | 4.4 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 23.9 | 0.0 | 46.6 | 20.0 | 0.0 | 0.0 | 7.8 |

| M-CNN∗& Kimera | 27.8 | 55.5 | 18.7 | 0.0 | 0.0 | 16.5 | 33.1 | 31.2 | 12.9 | 23.3 | 3.9 | 26.0 | 0.0 | 75.0 | 60.0 | 22.7 | 60.0 | 0.0 | 25.9 |

| M-CNN∗& Fusion++ | 7.1 | 22.0 | 31.2 | 0.0 | 13.3 | 12.5 | 15.0 | 11.5 | 28.5 | 0.0 | 0.0 | 18.1 | 0.0 | 0.0 | 0.0 | 40.1 | 0.0 | 0.0 | 11.1 |

| G-SAM &Kimera | 32.5 | 21.0 | 16.8 | 54.5 | 21.7 | 26.0 | 31.5 | 45.3 | 21.9 | 8.7 | 3.9 | 24.8 | 29.6 | 0.0 | 50.0 | 9.4 | 46.2 | 0.0 | 24.7 |

| G-SAM & Ours | 4.6 | 46.2 | 49.2 | 39.6 | 37.3 | 19.5 | 12.0 | 50.4 | 44.0 | 3.8 | 8.9 | 15.5 | 66.7 | 82.2 | 100.0 | 41.5 | 75.0 | 30.7 | 40.3 |

V-A ScanNet Evaluation

In the instance segmentation benchmark, as shown in Table I, semantic mapping based on Mask R-CNN can only reconstruct a few of the semantic categories. It is because the pre-trained Mask R-CNN is trained using COCO label-set and those new semantic classes in NYUv2 label-set are predicted with AP. Even for those predictable semantic classes, the pre-trained Mask R-CNN suffers from the issue of generalization and achieve low scores. In experiment with fine-tune Mask R-CNN, although the mean AP is improved, they still reconstruct a few of semantic classes with AP. We also notice that Kimera performs significantly better than the implemented Fusion++. We believe the difference comes from their different map management methods. Unlike Kimera ignores the instance-wise segmentation, Fusion++ maintains instance-wise volumes and requires data association. But the fine-tuned Mask R-CNN still generate detections with noisy instance masks, causing a large amount of false data association. As a result, Fusion++ generates instances with too many over-segmentation and maintains a low AP score.

The results demonstrated that semantic mapping based on supervised object detection can be easily affected by image distribution, label-set distribution and annotation quality. On the other hand, boosted by pre-trained foundational models RAM-Grounded-SAM, both Kimera and our method reconstructed semantic instances in higher quality than semantic mapping methods based on the supervised object detection.

However, simply replacing object detectors with foundation models could not utilize the maximum potential of the foundation models. Compared with Kimera using RAM-Grounded-SAM, our method achieved . The boosted performance comes from two aspects. Firstly, our probabilistic label fusion predicts semantic class in higher accuracy. As shown in Figure 8(a), Kimera predicts semantics of some sub-volumes falsely. Since Kimera updates the label measurements with a manually assigned likelihood probability and ignores the similarity score provided by GroundingDINO, it is easier to be affected by false label measurements. Secondly, Kimera ignores the instance-level segmentation and reconstructs many over-segmented instances. Some of them are predicted with different semantic labels, as shown in Figure 8(a) and 8(c). However, our method is instance-aware. Each instance volume is maintained separately. Our instance refinement module merges over-segmented instances caused by inconsistent instance masks at changed viewpoints. We further fused instance volume with a global volumetric map. Hence, our instances volumes are spatially consistent and relative precise, as shown in Figure 8(d).

| Method | Prm. Aug. | Likelihood | Refine | |

|---|---|---|---|---|

| A | ✓ | Manual Assign | ✓ | 35.9 |

| B | statistic | ✓ | 34.1 | |

| C | ✓ | statistic | 23.4 | |

| Ours | ✓ | statistic | ✓ | 40.3 |

The rest of the ScanNet experiment focus on evaluating each module of our method through an ablation study. As shown in Table.II, the text prompt augmentation, probabilistic label fusion with statistic summarized likelihood, and instance refinement all improve the reconstructed semantic instances.

A visualized example of the Ablation-A is shown in Figure 9. As shown in Figure 9(a), Ablation-A predicts an instance falsely, similar to Kimera. It also predicts overlapped instances with over-confident semantic probability distributions. They can not be merged during refinement due to their low semantic similarity. So, the over-segmented instances can not be merged, as shown in Figure 9(c). On the other hand, our method predicts the corresponding semantic classes correctly. The over-segmented instances are predicted with a similar semantic probability distribution and have been merged successfully, as shown in Figure 9(b) and 9(d).

As shown in Figure 10(a), RAM fails to recognize a table due to the extreme viewpoint, and GroundingDINO cannot detect it either. On the other hand, as illustrated in section III-B, our method maintains a series of labels that has been detected in previous frames. If a label in is not given in RAM tags, the corresponding label is added to the text prompt. As shown in Figure 10(b), our method detects the table correctly. Beyond miss detecting objects, the incomplete tags from RAM cause false label measurements in other frames. Hence, Ablation-B reconstructs a few instances with a false semantic class. More results can be found in our supplementary video.

To sum up, simply replacing traditional object detectors with RAM-Grounded-SAM to construct the semantic map improves the semantic mapping performance significantly. However, false label measurements, inconsistent instance masks, and missed tags in the text prompt still exist in foundation models. They limit the performance of semantic mapping. We consider those limitations of foundation models. Compared with Kimera using RAM-Grounded-SAM, our method further improves by .

V-B SceneNN evaluation

In the SceneNN experiment, we kept using the label likelihood matrix summarized in ScanNet and compare it with Kimera.

As shown in Figure11, Kimera reconstructed some instances with false labels and over-segmentation, similar to its reconstruction in ScanNet. On the other hand, our semantic prediction is more accurate and significantly less over-segmentation. The quantitative results can be found in Table III. Although our statistical label likelihood is summarized using ScanNet data, we have not observed a domain gap in implementing it in SceneNN. One of the reasons is that foundation models preserve strong generalization ability. RAM-Grounded-SAM maintains a similar label likelihood matrix across the image distribution. For example, a door is frequently detected as a cabinet in both ScanNet and SceneNN datasets, which are highlighted in red in Figure9(a) and Figure11(a). Hence, our statistical label likelihood can be used across domains.

| 096 | 206 | 223 | 231 | 255 | All | |

|---|---|---|---|---|---|---|

| Kimera | 52.0 | 29.9 | 28.6 | 34.0 | 37.5 | 41.1 |

| Ours | 63.1 | 69.7 | 37.5 | 38.8 | 25.0 | 49.7 |

V-C Efficiency

| Base | Scaling | ||

| Foundation | RAM | 28.5 ms | - |

| Models | GroundingDINO | 120.7 ms | - |

| SAM | 464.4 ms | - | |

| FM-Fusion | Projection | 307ms | 63.4 ms/obj |

| Data Assoc. | 47.1ms | 9.7 ms/obj | |

| Integration | 71.9ms | 14.9 ms/obj | |

| Total | 1039.6 ms | - | |

So far, the system run offline. As shown in Table. IV, the total time for each frame is ms. Although it is not a real-time system yet, many modules can be optimized in the future. SAM-related variants have been published to generate instance masks faster[25]. In FM-Fusion, a few modules are implemented with Python, the efficiency can be further improved by deploying it with C++. That would be one of our future works.

VI Conclusion

In this work, we explored how to boost instance-aware semantic mapping with zero-shot foundation models. With foundation models, objects are detected in open-set semantic labels at various probabilities. The object masks generated at changed viewpoints are inconsistent and cause over-segmentation. The current semantic mapping methods have not considered such challenges. On the other hand, our method uses a Bayesian label fusion module with statistic summarized likelihood and refines the instance volumes simultaneously. Compared with the baselines, our method performs significantly better in ScanNet and SceneNN benchmarks.

References

- [1] S. Lin, J. Wang, M. Xu, H. Zhao, and Z. Chen, “Topology Aware Object-Level Semantic Mapping towards More Robust Loop Closure,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 7041–7048, 2021.

- [2] N. Hughes, Y. Chang, and L. Carlone, “Hydra: A real-time spatial perception system for 3D scene graph construction and optimization,” in Proc. of Robotics: Science and System (RSS), 2022.

- [3] K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask r-cnn,” in Proc. of the IEEE intl. Conf. on Comp. Vis. (ICCV), 2017, pp. 2961–2969.

- [4] A. Kirillov, E. Mintun, N. Ravi, H. Mao, C. Rolland, L. Gustafson, T. Xiao, S. Whitehead, A. C. Berg, W.-Y. Lo, P. Dollár, and R. Girshick, “Segment anything,” arXiv:2304.02643, 2023.

- [5] A. Radford, J. W. Kim, C. Hallacy, A. Ramesh, G. Goh, S. Agarwal, G. Sastry, A. Askell, P. Mishkin, J. Clark et al., “Learning transferable visual models from natural language supervision,” in International conference on machine learning(ICML). PMLR, 2021, pp. 8748–8763.

- [6] S. Liu, Z. Zeng, T. Ren, F. Li, H. Zhang, J. Yang, C. Li, J. Yang, H. Su, J. Zhu et al., “Grounding dino: Marrying dino with grounded pre-training for open-set object detection,” arXiv preprint:2303.05499, 2023.

- [7] Y. Zhang, X. Huang, J. Ma, Z. Li, Z. Luo, Y. Xie, Y. Qin, T. Luo, Y. Li, S. Liu et al., “Recognize anything: A strong image tagging model,” arXiv preprint:2306.03514, 2023.

- [8] A. Dai, A. X. Chang, M. Savva, M. Halber, T. Funkhouser, and M. Nießner, “Scannet: Richly-annotated 3d reconstructions of indoor scenes,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2017.

- [9] B.-S. Hua, Q.-H. Pham, D. T. Nguyen, M.-K. Tran, L.-F. Yu, and S.-K. Yeung, “Scenenn: A scene meshes dataset with annotations,” in Proc. of the International Conference on 3D Vision (3DV), 2016, pp. 92–101.

- [10] L. H. Li, P. Zhang, H. Zhang, J. Yang, C. Li, Y. Zhong, L. Wang, L. Yuan, L. Zhang, J.-N. Hwang et al., “Grounded language-image pre-training,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 10 965–10 975.

- [11] D. Zhang, D. Liang, H. Yang, Z. Zou, X. Ye, Z. Liu, and X. Bai, “Sam3d: Zero-shot 3d object detection via segment anything model,” arXiv preprint:2306.02245, 2023.

- [12] P. F. Felzenszwalb and D. P. Huttenlocher, “Efficient graph-based image segmentation,” International journal of computer vision (IJCV), vol. 59, no. 2, pp. 167–181, 2004.

- [13] Q. Shen, X. Yang, and X. Wang, “Anything-3d: Towards single-view anything reconstruction in the wild,” arXiv preprint:2304.10261, 2023.

- [14] J. McCormac, A. Handa, A. Davison, and S. Leutenegger, “Semanticfusion: Dense 3d semantic mapping with convolutional neural networks,” in Proc. of the IEEE Intl. Conf. on Robot. and Autom. (ICRA). IEEE, 2017, pp. 4628–4635.

- [15] H. Noh, S. Hong, and B. Han, “Learning deconvolution network for semantic segmentation,” in Proc. of the IEEE intl. Conf. on Comp. Vis. (ICCV), 2015, pp. 1520–1528.

- [16] A. Rosinol, M. Abate, Y. Chang, and L. Carlone, “Kimera: an open-source library for real-time metric-semantic localization and mapping,” in Proc. of the IEEE Intl. Conf. on Robot. and Autom. (ICRA), 2020.

- [17] J. McCormac, R. Clark, M. Bloesch, A. J. Davison, and S. Leutenegger, “Fusion++: Volumetric object-level SLAM,” Proc. of the International Conference on 3D Vision (3DV), pp. 32–41, 2018.

- [18] J. Yu and S. Shen, “Semanticloop: loop closure with 3d semantic graph matching,” IEEE Robotics and Automation Letters (RA-L), vol. 8, no. 2, pp. 568–575, 2022.

- [19] M. Grinvald, F. Furrer, T. Novkovic, J. J. Chung, C. Cadena, R. Siegwart, and J. Nieto, “Volumetric instance-aware semantic mapping and 3D object discovery,” IEEE Robotics and Automation Letters (RA-L), vol. 4, no. 3, pp. 3037–3044, 2019.

- [20] F. Furrer, T. Novkovic, M. Fehr, A. Gawel, M. Grinvald, T. Sattler, R. Siegwart, and J. Nieto, “Incremental object database: Building 3d models from multiple partial observations,” in Proc. of the IEEE/RSJ Intl. Conf. on Intell. Robots and Syst.(IROS). IEEE, 2018, pp. 6835–6842.

- [21] H. Oleynikova, Z. Taylor, M. Fehr, R. Siegwart, and J. Nieto, “Voxblox: Incremental 3D Euclidean Signed Distance Fields for on-board MAV planning,” Proc. of the IEEE/RSJ Intl. Conf. on Intell. Robots and Syst.(IROS), vol. 2017-Sep, pp. 1366–1373, 2017.

- [22] S. Thrun, “Probabilistic robotics,” Communications of the ACM, vol. 45, no. 3, pp. 52–57, 2002.

- [23] B. Douillard, J. Underwood, N. Kuntz, V. Vlaskine, A. Quadros, P. Morton, and A. Frenkel, “On the segmentation of 3d lidar point clouds,” in Proc. of the IEEE Intl. Conf. on Robot. and Autom. (ICRA). IEEE, 2011, pp. 2798–2805.

- [24] Q.-Y. Zhou, J. Park, and V. Koltun, “Open3D: A modern library for 3D data processing,” arXiv:1801.09847, 2018.

- [25] X. Zhao, W. Ding, Y. An, Y. Du, T. Yu, M. Li, M. Tang, and J. Wang, “Fast segment anything,” arXiv preprint:2306.12156, 2023.

Appendix A

Generate hard-associated label set

As shown in Fig. 12(a), we ask ChatGPT to generate a hard association between open-set labels and NYUv2 labels . In experiment with Kimera, we follow the hard association to convert each label.

Appendix B

Summarize Label Likelihood

We summarized the image tagging likelihood matrix and object detection likelihood matrix that are introduced in equation (7). For example, the corresponding likelihood of table can be summarized as follows,

| (11) | |||

is a set of image frames that observed a ground-truth table, while is a set of image frames with table in their predicted tags. Similarly, is a set of observed ground-truth table instances if their image tags contain a table, while is a set of predicted table instances. We summarized the label likelihood matrix using the ScanNet dataset. The summarized image tagging likelihood and object detection likelihood are visualized in Fig. 13.

We follow equation 7 to compute the label likelihood . It is visualized in Fig. 14(a). To compare, the manually assigned label likelihood is visualized in Fig. 14(b). It is generated based on the hard-associated label set given by ChatGPT, and we manually assign a likelihood at . The manually assigned label likelihood is used in experiments with Ablation-A.