FlowFace++: Explicit Semantic Flow-supervised End-to-End Face Swapping

Abstract

This work proposes a novel face-swapping framework FlowFace++, utilizing explicit semantic flow supervision and end-to-end architecture to facilitate shape-aware face-swapping. Specifically, our work pretrains a facial shape discriminator to supervise the face swapping network. The discriminator is shape-aware and relies on a semantic flow-guided operation to explicitly calculate the shape discrepancies between the target and source faces, thus optimizing the face swapping network to generate highly realistic results. The face swapping network is a stack of a pre-trained face-masked autoencoder (MAE), a cross-attention fusion module, and a convolutional decoder. The MAE provides a fine-grained facial image representation space, which is unified for the target and source faces and thus facilitates final realistic results. The cross-attention fusion module carries out the source-to-target face swapping in a fine-grained latent space while preserving other attributes of the target image (e.g. expression, head pose, hair, background, illumination, etc). Lastly, the convolutional decoder further synthesizes the swapping results according to the face-swapping latent embedding from the cross-attention fusion module. Extensive quantitative and qualitative experiments on in-the-wild faces demonstrate that our FlowFace++ outperforms the state-of-the-art significantly, particularly while the source face is obstructed by uneven lighting or angle offset.

Index Terms:

face swapping, image translation, image edit, facial expression, face identity.![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/1c7bc034-4ff1-4b8e-89c8-3b5a1fbd9287/x1.png)

I Introduction

Face swapping transfers identity information from a source face onto a target face while preserving the target attributes, such as expression, pose, hair, lighting, and background. This technique has great research value due to its diverse applications in portrait reenactment, film production, and virtual reality [1]. Figure 1 shows several examples of face swapping.

Recent works [2, 3, 4, 5, 6] have made great improvements in achieving promising face-swapping results. However, many of them focus on transferring inner facial features, while neglecting facial contour reshaping. We are aware that facial contours also play a crucial role in conveying a person’s identity, yet few efforts [7, 6] have been devoted to exploring contour transferring. Reshaping facial contours presents significant challenges as it involves making substantial changes to the pixel values. HifiFace [7] directly utilizes a 3D facial reconstruction model with the coarse perception of face shape. FlowFace [6] adopts a two-stage framework with a specific face-reshaping network and a face-swapping network. It suffers from error accumulation introduced by the two individual stages. In fact, facial shape transferring is still a challenge for authentic face swapping. To further solve the shape transferring problem, we propose an end-to-end framework with the supervision of explicit semantic flow of face contour, dubbed FlowFace++. Unlike existing methods, FlowFace++ is a shape-aware and end-to-end face-swapping network.

Our end-to-end FlowFace++ is composed of three modules. Firstly, a pre-trained masked autoencoder (MAE) is used to transform facial images into a fine-grained representation space shared by the target and source faces, which facilitates the realism of face swapping. Then, a cross-attention fusion module performs fine-grained face swapping in latent space, including the source-to-target identity transferring, the preservation of target facial expression and other attributes (e.g., head pose, illumination, background, etc). Based on the above face-swapping latent features, a convolutional decoder is trained to carry out the synthesis of the swapped facial image. Particularly, to improve the accuracy of face contour shaping, we have developed a novel shape-aware discriminator. This discriminator relies on a semantic flow-guided operation to explicitly calculate the shape discrepancies between the target and source faces, ensuring that the face-swapping network produces an accurate face shape consistent with the given source face. Overall, our end-to-end face-swapping network achieves highly realistic and accurate face-swapping results, which benefits from the MAE encoder, cross-attention fusion module, convolutional decoder, and shape-aware discriminator.

Prior studies[7, 3, 8, 5, 2, 4] commonly utilize a face recognition model to obtain identity embedding of source face. However, given that identity embeddings are typically trained under face recognition tasks, they may not fully align with the requirements of face-swapping tasks, leading to overlooking intra-class variations [8]. In contrast, our MAE encoder is pre-trained using a mask-then-reconstruct training strategy on a large-scale facial dataset. It is capable of utilizing semantic-level features that maintain a higher degree of fine-grained information [29] than those identity-specific features of commonly-used identity embeddings.

Most previous works [5, 2, 7, 4] are inspired by the style transferring method to mapping target faces to the styleGAN2 latent space [13] and employ AdaIN [14] to integrate the identity embedding of a source face into the target face. With AdaIN, the identity embedding of a source face is viewed as image global information to adapt the space of trainable parameters, while then manipulating the latent space of the target image for the local region of face identity. Performing global operations on two distinct latent spaces does not sufficiently capture the critical interaction of local face region features in latent space. Differently, our FlowFace++ makes use of the MAE encoder to encode the source and target faces into a unified latent representation. It introduces the cross-attention fusion module to self-adaptive transfer identity information from source patches to their corresponding target patches.

Furthermore, our facial shape discriminator calculates pixel-level differences in facial shape between source and target faces by modeling the dense motion of facial contour. Compared to previous methods that either ignore facial shape transfer or use shape coefficients of a 3D face reconstruction model [7] as supervision, our facial shape discriminator provides a more finely tuned perception of facial discrepancies and facilitates the facial shape transfer capabilities of our face swapping network.

We conduct extensive quantitative and qualitative experiments to evaluate the effectiveness of our FlowFace++ approach on in-the-wild faces. The results show that FlowFace++ outperforms the current state-of-the-art methods in terms of both objective metrics and subjective visual quality. Overall, our contributions are summerized as follows:

-

•

We propose an end-to-end framework for shape-aware face swapping, namely FlowFace++. It can effectively transfer both the inner facial details and the facial outline of the source face to the target one, thus achieving authentic face-swapping results and robustness even under extreme input conditions (e.g. angle jamming and uneven light exposure).

-

•

We design a facial shape discriminator that explicitly distinguishes the facial outline discrepancies between the given source and target input faces, with generating a shape-aware semantic flow. Our experimental results conclusively demonstrate that the incorporation of the discriminator supervision within the face swap network enables accurate facial shape transfer.

-

•

We propose a pre-trained face-masked autoencoder-based face-swapping encoder (named MAE encoder), as well as a cross-attention fusion module. The MAE encoder provides a unified latent representation for the source and target face inputs.

II Related Work

Previous face-swapping methods can be categorized as either target attribute-guided or source identity-guided approaches.

Target attribute-guided methods involve editing the source face first and then blending it into the target background. Early methods [15, 16, 17] directly warp the source face according to the target facial landmarks, thus failing to address large posture differences and expression differences. 3DMM-based methods [18, 19, 20, 21] swap faces by 3D-fitting and re-rendering. However, these methods often struggle to handle skin color and lighting differences, leading to poor fidelity in the final result. Later, GAN-based methods improve the fidelity of the generated faces. Deepfakes [22] transfers the target attributes to the source face by an encoder-decoder structure while being constrained by two specific identities. FSGAN[23] utilizes the target facial landmarks to animate the source face and introduces a blending network to fuse the generated source face with the target background. However, it struggles to handle significant differences in skin color. AOT [24] later concentrates on face swapping, which involves significant variations in skin color and lighting conditions by formulating appearance mapping as an optimal transport problem. Although these methods have proven effective, they still require a facial mask to blend the generated face with the target background. However, mask-guided blending can restrict the degree of face shape change, which will limit the overall quality of the final result.

Source identity-guided methods typically rely on the use of identity embeddings or the latent representations of StyleGAN2 [13] to represent the source identity. These representations are then injected into the target face to transfer the source identity onto the target. The FaceShifter model [2] incorporates an adaptive attentional denormalization generator that integrates the source identity embedding and the target features to produce highly realistic facial images. SimSwap [3] introduces a weak feature matching loss to effectively retain the target attributes. MegaFS [25], RAFSwap [26] and HighRes [27] leverage pre-trained StyleGAN2 models to facilitate face swapping and can achieve high-resolution face swapping. FaceController [4] exploits the identity embedding with 3D priors to represent the source identity and design a unified framework for identity swapping and attribute editing. InfoSwap [28] applies the information bottleneck principle to effectively disentangle the identity-related and identity-irrelevant information. FaceInpainter [5] also utilizes the identity embedding with 3D priors to implement controllable face in-painting under heterogeneous domains. Smooth-Swap [8] introduces a novel approach to constructing smooth identity embeddings, which significantly improves the training efficiency and stability of face swapping model.

However, Most existing face swapping methods do not take into account the facial outlines during face swapping. Recently, HifiFace [7] attempts to address this issue by introducing a 3D shape-aware identity that can control the face shape during the swapping process. However, it injects the shape representation into the latent feature space, making it difficult for the model to correctly decode the face shape. Moreover, these methods always need a pre-trained face recognition model to extract features of source faces and another encoder for target faces during the inference time, which is not friendly to deployment.

III Proposed Method

Face swapping task aims to generate a facial image with the identity of the source face and the attributes of the target face. As shown in Figures 2 and 3, this paper proposes a novel face-swapping framework, named FlowFace++, to realize shape-aware face swapping. FlowFace++ consists in one face swapping network and one facial shape discriminator . In training, is fed with one face with target attributes and the other one with source identity. is responsible for supervising to perform shape-aware face swapping. Once trained, is able to carry out face swapping from one source image to another target one.

III-A Face Swapping Network

The face swapping network is responsible for fusing identity characteristics (e.g. facial shape and inner details) of a source face and other attributes (e.g., expression, pose, hair, illumination, and others) of a target face to synthesize face-swapping results. Figure 2 shows . Specifically, a shared MAE encoder is used to convert source face () and target face () into patch embeddings and , respectively. Subsequently, a cross-attention fusion module is taken to adaptively fuse the identity information of the source face and the attribute information of the target together to produce a fusion embedding. The fusion embedding is then fed into the convolutional decoder (), which generates the final face-swapping result . More details will be detailed as follows.

III-A1 Shared Face Encoder.

In many previous face-swapping methods, the source face is typically mapped into an ID embedding using a pre-trained face recognition model. The ID embedding is trained on recognition tasks, which leads to indistinguishable intra-class variance for a specific person. This means that the ID embedding may not fully capture the personalized appearances of a face during face swapping. This could potentially result in a loss of important details in the final face-swapping output. Additionally, previous methods use another face encoder to extract the target face attributes. This means that they propose different face representations for the source and the target faces. The distinguishable face representations bring challenges to their fusion, which may damage the fine granularity of the resulting image.

In our work, the above two issues are addressed by a pretrained face-MAE model (MAE encoder) that provides a prior fine granularity of facial representation. The pretrained face-MAE model is built with image reconstruction, instead of ID recognition. It is shared by the source and target faces, so our work provides a unified face representation for the source and target faces.

Specifically, we opt to employ a shared encoder that projects both the source and target face into a unified latent representation. The encoder architecture is based on the MAE [29] framework and was pre-trained on a large-scale face dataset [9, 10, 11, 12] consisting of 2.17 million images, utilizing a masked training strategy. When contrasted with the compact latent code of StyleGAN2 [13] and the identity embedding, the latent representation of MAE has the ability to more effectively capture both facial appearances and identity information. This is due to the use of masked training, which necessitates the reconstruction of masked image patches from visible neighboring patches. As a result, each patch embedding contains rich topology and semantic information. Using the pre-trained MAE encoder , we can project a given facial image into a latent representation, commonly referred to as patch embeddings:

| (1) |

where . and denote the number of patches and the dimension of each embedding, respectively.

III-A2 Cross-Attention Fusion Module.

Through the use of the shared MAE encoder, both the source and target faces are projected into a representational latent space. The next step is to fuse the source identity information with the target attribute within this latent space. It’s assumed that related patches, such as the nose to nose, would convey identity information during the transfer process. To account for this, we developed a cross-attention fusion module (CAFM) that can dynamically aggregate identity information from the source and blend it into the target in an adaptive and patch-wise manner.

As shown in Figure 2, CAFM comprises a cross-attention block and two standard transformer blocks [30]. To begin, we calculate the values for each patch embedding in the source () and target () sets. Then the cross attention is computed by:

| (2) |

In this equation, denotes Cross Attention, are predicted using attention heads, and denotes the dimension of . The cross-attention mechanism characterizes the relationship between each target patch and the source patches. Subsequently, we aggregate the source identity information using the computed and fuse it with the target values through addition:

| (3) |

After that, are normalized through a layer normalization (LN) and processed by multi-layer perceptrons (MLP). Both the Cross Attention and MLP are accompanied by skip connections. The fused embeddings are then fed into two transformer blocks, resulting in the final output .

Finally, a convolutional decoder is utilized to generate the final swapped face image from the output . In contrast to the ViT decoder in MAE, we find that the convolutional decoder produces more realistic results.

III-A3 Training Loss.

To train our face-swapping network , two human face images (i.e. and ) will be used as the source face and target face, respectively, serving as the two inputs of . Generally, there is no ground truth available for the results of face swapping. To further constrain the output distribution of the swapping result , the training data include a portion of (, ) where . This portion of data allows using pixel-wise reconstruction loss, as done in [7, 23, 3, 26, 2]. The other of the training data consists of (, ) where .

We design seven loss functions from the aspects of facial shape, posture, texture, expression, and identity, to constrain the result faces generated by our :

| (4) |

where , , , , are hyperparameters for each term. We set =10, =5, =10, =5000, =2 and =3 in our experiment.

Adversarial Loss. To enhance the realism of the face swapping results, we employ the hinge version adversarial loss[31] for training, denoted by :

| (5) |

where is the discriminator which is trained with:

| (6) |

Reconstruction Loss. As mentioned above, in 25% of the training data, and are identical to each other. It means that is the desired result of . For these data, is supervised by an additional pixel-wise reconstruction loss:

| (7) |

It is noteworthy that the reconstruction loss is not existed when .

Posture Loss. To maintain proper face posture during face swapping, we utilize the landmark loss as a constraint:

| (8) |

where represents the landmarks of . It is worth noting that only the 51 landmarks of the inner-face are included in and , and the facial shape is determined by the facial shape loss (see below) rather than the landmarks of the facial contour.

Perceptual Loss. As high-level feature maps contain semantic information, we utilize the feature maps from the final two convolutional layers of a pre-trained VGG as the representation of facial attributes. The loss is formulated as:

| (9) |

Expression Loss. We adopt a novel fine-grained expression loss [32] that penalizes the distance of two expression embeddings:

| (10) |

Identity Loss. The identity loss is utilized to enhance the identity similarity between and :

| (11) |

where denotes a face recognition model [33] and denotes the cosine similarity.

Facial Shape Loss. The facial shape loss is used to constrain to have a similar facial shape as . The shape-aware semantic flow generated by explicitly quantifies the discrepancies in facial contour between the two input faces by modeling the pixel-level motion trend on facial shape. Consequently, as the face shape of approaches that of , the semantic flow between and tends towards zero:

| (12) |

| (13) |

where denotes the semantic flow between and , denotes a full-zero matrix with the same dimensions as and denotes the euclidean distance.

III-B facial shape discriminator

As mentioned above, a facial shape discriminator, , is built to explicitly evaluate the face shape contour. It is used to constrain the resulting face to have a face contour close to the source face as much as possible. When training , quantifies the discrepancy of the facial contours of the resulting face and the source face. The quantified discrepancy is viewed as the facial shape loss, , which is mentioned above. Specifically, the discrepancy is based on the estimated semantic flow which reflects the pixel-level motion from the resulting contour to the source one.

The building of relies on training a neural network model in a setting of pairs of two images ( and ). The images in a pair are randomly selected from three widely-used face datasets: CelebA-HQ [9], FFHQ [34], and VGGFace2 [35].

In the training of , the semantic flow of face contour shape is estimated from to . Then the flow warps the shape of pixel-wisely to make its contour converge to the . Thus the semantic flow reflects the pixel-level motion of facial contour and then achieves fine modeling and perception of discrepancies in facial shape. To achieve this goal, requires a face shape representation of the two input faces and then estimates a semantic flow according to the differences of two shape representations.

In the training of , is utilized as the facial shape loss. Specifically, and are at the place of and , respectively.

III-B1 Face Shape Representation.

Since our facial shape discriminator needs to warp the face shape pixel-wisely while being trained, we choose the explicit facial landmarks as the shape representation. We use a 3D face reconstruction model (3DMM) [36] to obtain facial landmarks. As shown in Figure 3, the 3D face reconstruction model extracts 3D coefficients of the source and target:

| (14) |

where are the FLAME coefficients [37] representing the facial shape, pose, expression, and camera, respectively. is or , representing the first or the second input face. With these coefficients, the second input face can be modeled as:

| (15) |

where represents the 3D face mesh of the . is a linear blend skinning (LBS) function that is applied to rotate the vertices of around joint . is the blend weights. denotes the template mesh with shape, pose, and expression offsets [37].

Then, we reconstruct similarly, except that the pose and expression coefficients are replaced with the ’s ones. The obtained 3D face mesh is denoted as . Finally, we sample 3D facial landmarks from and and project these 3D points to 2D facial landmarks with the target camera parameter :

| (16) |

where is a vertex in , is an orthographic 3D-2D projection matrix, and and are parameters in , indicating isotropic scale and 2D translation. denotes the 2D facial landmarks. It should be noted that we only use the landmarks at the facial contours as the shape representation since inner facial landmarks contain identity information that may influence the reshaping result.

III-B2 Semantic Flow Estimation.

The relative displacement between and only describes sparse movement. To accurately perceive the discrepancies of the two faces, we need to obtain dense motion between them. Therefore, we propose the semantic flow, which models the semantic correspondences between two faces. To achieve a more shape-aware semantic flow, warps the target face according to the semantic flow during training, and constrains the warped target face to be consistent with the source face in terms of facial shape. We design a semantic guided generator to estimate the semantic flow. Specifically, requires three inputs: , and , where and are the 2D facial landmarks obtained above. is the second face segmentation map that complements the semantic information lost in facial landmarks. The output of is the estimated semantic flow , the formulation is:

| (17) |

Then, a warping module is introduced to generate the warped faces using . We find that an inaccurate flow is likely to produce unnatural images, On the contrary, imposing constraints on the warped images can lead to a more precise semantic flow, and therefore, we design a semantic guided discriminator that ensures to produce a more accurate flow. The warping operation is conducted on :

| (18) |

Where is the warping function in the warping module. We feed the warped face to . Thus, is able to discriminate whether the input is real or fake. It should be noted that and warping module are only used during training of .

III-B3 Training Loss.

We adopt three loss functions for :

| (19) |

where and represent hyperparameters associated with each term. In our experimental setup, we have designated the value of as 800 and that of as 10.

As in the training of , the reconstruction loss between and is used for self-supervision since there is also no ground-truth for face swapping results.

Adversarial Loss. The more realistic the resultant images are, the more accurate the generated shape-aware semantic flows are, therefore we employ the hinge version adversarial loss[31] for training, denoted by :

| (20) |

where is the discriminator which is trained with:

| (21) |

Landmark Loss. Since there is not pixel-wised ground truth for , we rely on the 2D facial landmarks to regulate the shape of . To be specific, we employ a pre-trained facial landmark detector [38] to forecast the facial landmarks of , which are denoted as . Then the loss is computed as:

| (22) |

At this point, our designed facial shape discriminator is able to generate a shape-aware semantic flow which finely perceives discrepancies of facial shape between two input faces. Subsequently, the semantic flow can be utilized to enforce similarity between the facial shape of the face-swapped faces and that of the source faces during training of the .

IV Experiments

| Methods | ID Acc() | Shape | Expr. | Pose. | ||

|---|---|---|---|---|---|---|

| CosFace | SphereFace | Avg | ||||

| Deepfakes | 83.32 | 86.93 | 85.13 | 1.78 | 0.57 | 4.05 |

| FaceSwap | 70.74 | 76.69 | 73.72 | 1.85 | 0.43 | 2.20 |

| FSGAN | 48.88 | 54.09 | 51.49 | 2.18 | 0.30 | 2.20 |

| FaceShifter | 97.38† | 80.64 | 89.01 | 1.68 | 0.36 | 2.28 |

| SimSwap | 93.37 | 96.15 | 94.76 | 1.74 | 0.30 | 1.40 |

| HifiFace | 98.48† | 90.61 | 94.55 | 1.62 | 0.33 | 2.30 |

| FlowFace | 99.20 | 98.87 | 99.04 | 1.17† | 0.24 | 2.40 |

| Ours | 99.51 | 99.03 | 99.27 | 1.43 | 0.23 | 2.20 |

To validate our FlowFace++ method, we perform quantitative and qualitative comparisons with state-of-the-art approaches, as well as a user study. Additionally, we conduct several ablation experiments involving those employed , CAFM, MAE, and convolutional decoder to validate our design.

IV-A Implementation Details

Dataset. We collect the training dataset from three widely-used face datasets: CelebA-HQ [9], FFHQ [34], and VGGFace2 [35]. The faces are first aligned and cropped to . To ensure high-quality training, we filter out low-quality images from the above datasets. The final used dataset consists of 350K high-quality face images, and we randomly select 10K images from the dataset as the validation dataset. For the comparison experiments, we construct the test set by sampling FaceForensics++(FF++) [39], following the methodology used in [2]. The FF++ dataset comprises of 1000 video clips, and we collect the test set by sampling ten frames from each clip, resulting in a total of 10000 images.

Training. Our FlowFace++ is trained in two stages. Specifically, is first trained for 250K steps with a batch size of eight. As for , we first pre-train the MAE encoder following the training strategy of MAE on our face dataset. Then we fix the MAE encoder and train other components of for 640K steps with a batch size of eight. Due to the time-consuming nature of extracting coefficients from the 3D face reconstruction model used in , the facial shape loss is not involved in the training for the first 320K steps in order to accelerate the training speed. We utilize the Adam optimizer [40], with set to 0 and set to 0.99, and a learning rate of 0.0001.

Metrics. We employ four metrics for the quantitative evaluation of our model: identity retrieval accuracy (ID Acc), shape error, expression error (Expr Error), and pose error. We follow the same testing protocol as outlined in [2, 7]. However, since certain pre-trained models used as metrics in their evaluation are not accessible, we utilize other models for evaluation. For ID Acc, we employ two other face recognition models: CosFace (CF) [41] and SphereFace (SF) [42], to perform identity retrieval for a more comprehensive comparison. For expression error, we adopt another expression embedding model [43] to compute the euclidean distance of expression embeddings between the target and swapped faces.

IV-B Comparisons with State-of-the-arts

IV-B1 Quantitative Comparisons.

Our method is compared with seven methods including Deepfakes [22], FaceSwap [20], FlowFace [6], FSGAN [23], FaceShifter [2], SimSwap [3], and HifiFace [7]. For Deepfakes, FaceSwap, FaceShifter, and HifiFace, we use their released face swapping results of the sampled 10,000 images. For FlowFace, FSGAN and SimSwap, the face swapping results are generated with their released codes.

Table I demonstrates that the proposed FlowFace++ outperforms the other methods in most evaluation metrics, including ID Acc, shape error, and expression error (Expr Error). These results validate the superiority of FlowFace++. FlowFace++ produces slightly worse results in terms of pose error compared to other methods, which may be attributed to our manipulation of face shape that poses greater challenges in pose. The employed head pose estimator is sensitive to face shapes, which might have impacted the results.

IV-B2 Qualitative Comparisons.

The qualitative comparisons are conducted on the same FF++ test set collected in the quantitative comparisons. As shown in Figure 4, our FlowFace++ (or FlowFace) maintains the best face shape consistency. Most methods result in face shapes similar to the target ones since they do nothing to transfer the face shape.

Although HifiFace is intentionally designed to manipulate the face shape, our method still outperforms it in terms of evaluation metrics. Compared to HifiFace, Figure 4 illustrates that our generated face shapes are more similar to the source faces. Since HifiFace injects the shape representation into the latent feature space, and directly uses facial landmarks generated by the 3D face reconstruction module as supervision for facial shape, it may be harder to achieve a fine perception of facial shape than our explicit supervision of facial shape differences by the semantic flow. Additionally, our proposed method excels at preserving fine-grained target expressions, as marked indicated by the red boxes in Rows 1 and 4 of Figure 4.

| Method | Shape. () | ID. () | Exp. () | Realism () |

|---|---|---|---|---|

| SimSwap | 21.89 | 24.41 | 44.37 | 24.68 |

| HifiFace | 36.44 | 33.53 | 15.98 | 36.23 |

| Ours | 41.67 | 42.06 | 39.66 | 39.09 |

We further compare our methods with more six SOTA face swapping methods:MegaFS [25], FaceInpainter [5], HighRes [27], SmoothSwap [8], HifiFace [7] and RAFSwap [26]. The source, target faces and results used in this comparative experiment are cropped from the original papers of these methods. Figure 5 demonstrates that our method is capable of better transferring the shape of the source face to the target. Although the results exhibited in the paper of HifiFace demonstrate its effectiveness in transferring facial shape, it suffers from the problem of facial expression leakage from the source face to the result. While SmoothSwap can change the facial shape, it often destroys the target attributes (e.g., hairstyle, and hair color).

The qualitative comparisons above also demonstrate that our results exhibit higher similarity to the source face in terms of inner facial features (e.g., beard), confirming that our MAE encoder is more efficacious in effectively representing facial appearances than the identity embedding used in [7, 5, 8] or the latent code of StyleGAN2 used in [25, 27, 26]. Moreover, our approach demonstrates a higher degree of fidelity in preserving target attributes (e.g., skin color, lighting, and expression), in comparison to other other methods.

IV-B3 User Study.

In order to further validate our FlowFace++, we conduct a subjective comparison study with two of the state-of-the-art face swapping methods, SimSwap and HifiFace, both of which have publicly shared their codes or results. We randomly select 30 instances of swapped faces generated from each of the three aforementioned methods. Participants are instructed to choose the best results in terms of shape consistency, identity consistency, expression consistency, or image realism.

For each image, a maximum of 39 participants are recruited for evaluation. Each participant selects their preferred option. And for a given option, assigns a value of 1 if they select it and 0 if they do not. Under the condition of a confidence threshold of 80%, if the left endpoint of a confidence interval for one given option is greater than 0.5, this image is considered to have completed the evaluation process. Otherwise, the number of participants is increased until the maximum of 39 participants was reached. Table II shows that our method outperforms the two baselines in terms of shape consistency, identity consistency and image realism, validating the superiority of our method. In terms of expression consistency, our FlowFace++ slightly lags behind SimSwap, which could be attributed to the coupling between facial shape and expression, where changes in facial shape may affect the discriminability of expressions.

IV-C Robustness Comparisons.

We compare the performance differences between our FlowFace++ and four other available face swap methods under extreme input conditions. It’s worth noting that FaceShifter relies on an open-source implementation 111https://github.com/mindslab-ai/hififace by others, rather than authors.

IV-C1 Angle jamming.

As shown in Figure 6, FSGAN and FaceSwap struggle to produce convincing results from the large angular deviations of the source faces. On the other hand, FaceShifter and SimSwap generate resulting faces that are not as clear and accurate. Although other results generated by RAFSwap based on StyleGAN2 are still clear, it cannot effectively extract the identity information of source faces, resulting in poor identity similarity between the result and source faces. In contrast, our FlowFace++ is still able to transfer the attributes and facial shape of the source faces to the target faces accurately.

IV-C2 Uneven light exposure.

As shown in Figure 7, FSGAN and FaceSwap mistakenly transfer the lighting of the source faces to the result faces, while SimSwap encounters difficulties in extracting facial features from unevenly lit source faces, resulting in blurred results. And RAFSwap generates some incorrect transferings (note the red box markings). Our FlowFace++ is still capable of generating high-quality face-swapping results even in the presence of uneven illumination.

Our FlowFace++ achieves remarkable performance in robustness testing, primarily because we utilize the MAE encoder which is designed following MAE and pre-trained on a large-scale face dataset using the masked training strategy. Even under extreme input conditions with various interferences, the MAE encoder is able to extract rich features from the input faces. Furthermore, our CAFM module facilitates the adaptive aggregation of identity and facial shape information from the source, allowing us to effectively eliminate the interfering information.

To maintain the expression in the generated image consistent with the target image, we introduce an effective expression embedding [32] which employs a continuous and compact embedding space to represent the fine-grained expressions. The distance between two expression embedding reflects the similarity between them. Therefore, we formulate the expression loss as a L2 loss that computes the expression embedding distance between the generated and target images, so as to ensure that they are consistent in terms of expression.

IV-D Analysis of FlowFace++

Three ablation studies are conducted to validate our end-to-end FlowFace++ framework and several components used in and , respectively.

IV-D1 Ablation Study on FlowFace++.

We conduct ablation experiments to validate the design of end-to-end face-swapping framework . We remove the (w/o ). Figure 8 shows that in the absence of ’s constraints, the network lacks the ability to transfer facial shape (note the cheeks and jaw angles). Hence, ’s constraints are crucial in ensuring our FlowFace++’s proficiency in facial shape warping.

To further validate the effectiveness of , we attempt to warp the input faces using the semantic flows generated by . As shown in Figure 9, after warping with the semantic flow, the facial contours of are changed, becoming closer to , while maintaining the inner-facial appearances. This fully demonstrates that the semantic flow generated by our designed effectively represents the pixel-wise motions in facial contours explicitly.

Our previous work, FlowFace[6], is a two-stage framework. It uses the ’s warped results on the target faces as the first stage, then utilizes the which is trained without ’s supervision to transfer the non-shape identity as the second stage.

As shown in Figure 10, the performance of FlowFace[6] on the target faces via is not entirely perfect and may result in distortions in details (e.g., eyebrow, mouth and low jawbone), as highlighted by the red circle in the image. Such distortions may emerge in the second stage, potentially leading to flaws in the final results. This convincingly underscores the rationale behind our end-to-end network architecture of FlowFace++, which incorporates as a form of supervision.

| Methods | ID Acc() | Shape | Expr. | Pose. | ||

|---|---|---|---|---|---|---|

| CosFace | SphereFace | Avg | ||||

| Sparse Ldmks | 99.01 | 98.16 | 98.59 | 1.49 | 0.23 | 1.86 |

| w/o | 98.43 | 97.01 | 97.72 | 1.55 | 0.22 | 1.78 |

| w Seg | 98.72 | 97.34 | 98.03 | 1.53 | 0.28 | 1.91 |

| w/o Seg | 96.79 | 96.09 | 96.44 | 1.57 | 0.22 | 1.74 |

| 99.51 | 99.03 | 99.27 | 1.43 | 0.23 | 2.20 | |

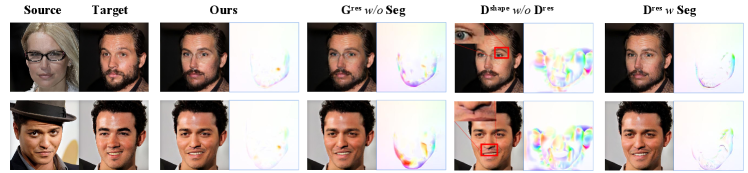

IV-D2 Ablation study on .

We design four ablation experiments to validate the effectiveness of our proposed :

A. Sparse Landmarks vs. Dense Flow. During the training process of , we adopt the dense flow generated by to calculate the shape discrepancies between and . To demonstrate the rationality of dense flow, similar to the approach of HifiFace[7], we replace it with sparse landmarks as the supervision for facial shape, where the sparse landmarks can be obtained by calculating Equation 16. Then the new facial shape loss can be computed by:

| (23) |

The represents the landmarks of the and only 17 landmarks on the contour are involved in the calculation of . As seen in Figure 14, when sparse landmarks are used as supervision, residual ghosts may appear on the facial contour during the process of transferring facial shape. This phenomenon can be attributed to that sparse landmarks cannot represent pixel-wise dense motion.

B. Removing of . We Remove the semantic input of ( w/o Seg) to validate our proposed semantic guided generator . It can be seen from Figure 14 that some inaccurate flow occurs in the generated face, which implies that only facial landmarks cannot guide to produce accurate dense flow due to the lack of semantic information. The results also demonstrate that the semantic information is beneficial for accurate flow estimation and validates .

C. Adding the semantic inputs ( and ) of ( w Seg). FlowFace [6] proposes that adding semantic inputs to the adversarial loss can improve the reconstruction of fine details in the facial region. We attempt to add semantic inputs to as well, however, as shown in the experimental results in Table III suggest that this does not result in any significant accuracy improvements. The implication is that in FlowFace++, does not require semantic inputs and is still capable of effectively discriminating between real and generated faces.

D. Removing (w/o ). As observed in Figure 14, the generated images produced by w/o exhibit a few artifacts, and the estimated semantic flow is also marred by substantial noise.

The aforementioned observations serve to corroborate the efficacy of our proposed .

| Methods | ID Acc | Expr | Pose | Shape | ||

|---|---|---|---|---|---|---|

| CosFace | SphereFace | Avg | ||||

| Addition | 99.11 | 99.31 | 99.21 | 0.35 | 4.29 | 1.30 |

| AdaIN | 33.53 | 25.62 | 29.58 | 0.29 | 2.67 | 2.63 |

| Id Embed. | 96.76 | 95.44 | 96.10 | 0.23 | 1.78 | 1.71 |

| Vit | 99.42 | 98.85 | 99.14 | 0.26 | 2.69 | 1.39 |

| Ours | 99.51 | 99.03 | 99.27 | 0.23 | 2.20 | 1.43 |

IV-D3 Ablation study on .

Three ablation experiments are conducted to evaluate the design of :

A. Choices on CAFM, Addition and AdaIN. To verify the effectiveness of our proposed CAFM, we conduct a comparative analysis between the CAFM and two other methods, namely, Addition (which simply involves adding the source values to the target values) and AdaIN (which first averages source patch embeddings and then injects them into the target feature map using AdaIN residual blocks). As shown in Figure 12 and Table IV, Addition simply blends all information of the source face to the target face, thus resulting in severe pose and expression mismatch. AdaIN, due to its global modulation, impacts not only the facial features but also the non-face parts such as hair. By contrast, with CAFM achieves a high ID Acc and effectively preserves the target attribute. This result demonstrates the highly accurate identity information extraction capabilities of CAFM from the source face and its adaptive infusion into the target counterpart.

To further validate the efficacy of our CAFM, we visualize the cross-attention computed by CAFM. As depicted in Figure 13, when a specific part of the target face is given (marked with red boxes), our proposed CAFM precisely focuses on the corresponding regions of the source face, thus validating its ability to adaptively transfer the identity information from the source patches to the respective target patches.

B. Latent Representation vs. ID Embedding (ID Embed.). To verify the superiority of using the latent representation of MAE, We design an experiment that use a face recognition network [44] to extract the features of the source faces and continue to use the original MAE encoder to extract the features of the target faces, while keeping the other network structures unchanged. As can be seen from Figure 12, ID Embed. misses some fine-grained face appearances, such as eyebrow edge. In contrast, contains richer identity information and achieves higher ID Acc, as shown in Tab IV.

C. Convolutional Decoder vs. ViT Decoder. We try two different decoders to determine the better one. As shown in Table IV, compared to Vit Decoder, Convolutional Decoder exhibits superior performance in terms of ID accuracy, expression error, and pose error, while performing roughly on par in the aspect of shape error. As can be seen in Figure 12, the result of Vit Decoder exhibits partial blurry regions and erroneous leakage of the source face’s hair.

V Conclusion

This work proposes a novel face-swapping framework, FlowFace++, which utilizes explicit semantic flow supervision and an end-to-end architecture to facilitate shape-aware face swapping. Specifically, our work pretrains a shape-aware discriminator to supervise the face swapping network thus optimizing it to generate highly realistic results. The face swapping network is a stack of a pre-trained face-masked autoencoder (MAE), a cross-attention fusion module, and a convolutional decoder. MAE is used to extract facial features that better capture facial appearances and identity information. The cross-attention fusion module is designed to better fuse the source and the target features, thus leading to better identity preservation.

We conduct extensive quantitative and qualitative experiments on in-the-wild faces, demonstrating that FlowFace++ outperforms the state-of-the-art significantly. In the quantitative experiments, our FlowFace++ demonstrates effective performance with four metrics, including identity retrieval accuracy, shape error, expression error, and pose error. In the qualitative experiments, our approach achieves higher similarity with the source faces in terms of facial shape and inner facial features. Furthermore, we compare the performance of our FlowFace++ with other methods under extreme input conditions, and it exhibits higher stability when handling source faces with large angular deviations or non-uniform illumination.

We further conduct comprehensive ablation experiments to validate the rationality of the FlowFace++ design. The experimental results demonstrate the irreplaceable superiority of our facial shape discriminator, MAE encoder, cross-attention fusion module, and convolutional decoder in achieving high-quality transferring of inner-facial appearances and facial shape.

Despite the superior performance of our FlowFace++, there are still some limitations. Our FlowFace++ still faces the challenge of lacking temporal constraints, which has not been explicitly addressed by previous face-swapping methods. Besides, in situations where the source face is wearing sunglasses, it may cause distortion in the eye regions of the resultant face.

References

- [1] Z. Xu, H. Zhou, Z. Hong, Z. Liu, J. Liu, Z. Guo, J. Han, J. Liu, E. Ding, and J. Wang, “Styleswap: Style-based generator empowers robust face swapping,” in ECCV, 2022.

- [2] L. Li, J. Bao, H. Yang, D. Chen, and F. Wen, “Faceshifter: Towards high fidelity and occlusion aware face swapping,” arXiv preprint arXiv:1912.13457, 2019.

- [3] R. Chen, X. Chen, B. Ni, and Y. Ge, “Simswap: An efficient framework for high fidelity face swapping,” in Proceedings of the 28th ACM International Conference on Multimedia, 2020, pp. 2003–2011.

- [4] Z. Xu, X. Yu, Z. Hong, Z. Zhu, J. Han, J. Liu, E. Ding, and X. Bai, “Facecontroller: Controllable attribute editing for face in the wild,” arXiv preprint arXiv:2102.11464, 2021.

- [5] J. Li, Z. Li, J. Cao, X. Song, and R. He, “Faceinpainter: High fidelity face adaptation to heterogeneous domains,” in CVPR, 2021, pp. 5089–5098.

- [6] H. Zeng, W. Zhang, C. Fan, T. Lv, S. Wang, Z. Zhang, B. Ma, L. Li, Y. Ding, and X. Yu, “Flowface: Semantic flow-guided shape-aware face swapping,” arXiv preprint arXiv:2212.02797, 2022.

- [7] Y. Wang, X. Chen, J. Zhu, W. Chu, Y. Tai, C. Wang, J. Li, Y. Wu, F. Huang, and R. Ji, “Hififace: 3d shape and semantic prior guided high fidelity face swapping,” arXiv preprint arXiv:2106.09965, 2021.

- [8] J. Kim, J. Lee, and B.-T. Zhang, “Smooth-swap: A simple enhancement for face-swapping with smoothness,” in CVPR, 2022, pp. 10 779–10 788.

- [9] T. Karras, T. Aila, S. Laine, and J. Lehtinen, “Progressive growing of gans for improved quality, stability, and variation,” arXiv preprint arXiv:1710.10196, 2017.

- [10] A. Mollahosseini, B. Hasani, and M. H. Mahoor, “Affectnet: A database for facial expression, valence, and arousal computing in the wild,” IEEE Transactions on Affective Computing, vol. 10, no. 1, pp. 18–31, 2017.

- [11] D. Yi, Z. Lei, S. Liao, and S. Z. Li, “Learning face representation from scratch,” arXiv preprint arXiv:1411.7923, 2014.

- [12] R. Rothe, R. Timofte, and L. V. Gool, “Dex: Deep expectation of apparent age from a single image,” in IEEE International Conference on Computer Vision Workshops (ICCVW), December 2015.

- [13] T. Karras, S. Laine, M. Aittala, J. Hellsten, J. Lehtinen, and T. Aila, “Analyzing and improving the image quality of stylegan,” in CVPR, 2020, pp. 8110–8119.

- [14] X. Liu, B. Vijaya Kumar, J. You, and P. Jia, “Adaptive deep metric learning for identity-aware facial expression recognition,” in IEEE Conference on CVPR Workshops, 2017, pp. 20–29.

- [15] D. Bitouk, N. Kumar, S. Dhillon, P. Belhumeur, and S. K. Nayar, “Face swapping: automatically replacing faces in photographs,” in ACM SIGGRAPH 2008 papers, 2008, pp. 1–8.

- [16] D. Chen, Q. Chen, J. Wu, X. Yu, and T. Jia, “Face swapping: realistic image synthesis based on facial landmarks alignment,” Mathematical Problems in Engineering, vol. 2019, 2019.

- [17] Y. Lin, Q. Lin, F. Tang, and S. Wang, “Face replacement with large-pose differences,” in ACM international conference on Multimedia, 2012, pp. 1249–1250.

- [18] V. Blanz, K. Scherbaum, T. Vetter, and H.-P. Seidel, “Exchanging faces in images,” in Computer Graphics Forum, vol. 23. Wiley Online Library, 2004, pp. 669–676.

- [19] J. Thies, M. Zollhofer, M. Stamminger, C. Theobalt, and M. Nießner, “Face2face: Real-time face capture and reenactment of rgb videos,” in CVPR, 2016, pp. 2387–2395.

- [20] M. MarekKowalski, “Faceswap,” [EB/OL], 2021, https://github.com/MarekKowalski/FaceSwap Accessed March 1, 2021.

- [21] Y. Nirkin, I. Masi, A. T. Tuan, T. Hassner, and G. Medioni, “On face segmentation, face swapping, and face perception,” in Inter. Conf. on Automatic Face & Gesture Recognition, 2018, pp. 98–105.

- [22] DeepFakes, “Deepfakes,” https://github.com/deepfakes/faceswap, 2019, Online; Accessed March 1, 2021.

- [23] Y. Nirkin, Y. Keller, and T. Hassner, “Fsgan: Subject agnostic face swapping and reenactment,” in ICCV, 2019, pp. 7184–7193.

- [24] H. Zhu, C. Fu, Q. Wu, W. Wu, C. Qian, and R. He, “Aot: Appearance optimal transport based identity swapping for forgery detection,” in Neural Information Processing Systems (NeurIPS), 2020.

- [25] Y. Zhu, Q. Li, J. Wang, C.-Z. Xu, and Z. Sun, “One shot face swapping on megapixels,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 4834–4844.

- [26] C. Xu, J. Zhang, M. Hua, Q. He, Z. Yi, and Y. Liu, “Region-aware face swapping,” in CVPR, 2022, pp. 7632–7641.

- [27] Y. Xu, B. Deng, J. Wang, Y. Jing, J. Pan, and S. He, “High-resolution face swapping via latent semantics disentanglement,” in CVPR, 2022, pp. 7642–7651.

- [28] G. Gao, H. Huang, C. Fu, Z. Li, and R. He, “Information bottleneck disentanglement for identity swapping,” in CVPR, 2021, pp. 3404–3413.

- [29] K. He, X. Chen, S. Xie, Y. Li, P. Dollár, and R. Girshick, “Masked autoencoders are scalable vision learners,” in CVPR, 2022, pp. 16 000–16 009.

- [30] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly et al., “An image is worth 16x16 words: Transformers for image recognition at scale,” arXiv preprint arXiv:2010.11929, 2020.

- [31] J. H. Lim and J. C. Ye, “Geometric gan,” arXiv preprint arXiv:1705.02894, 2017.

- [32] W. Zhang, X. Ji, K. Chen, Y. Ding, and C. Fan, “Learning a facial expression embedding disentangled from identity,” in CVPR, 2021, pp. 6759–6768.

- [33] J. Deng, J. Guo, N. Xue, and S. Zafeiriou, “Arcface: Additive angular margin loss for deep face recognition,” in CVPR, 2019, pp. 4690–4699.

- [34] T. Karras, S. Laine, and T. Aila, “A style-based generator architecture for generative adversarial networks,” in CVPR, 2019, pp. 4401–4410.

- [35] Q. Cao, L. Shen, W. Xie, O. M. Parkhi, and A. Zisserman, “Vggface2: A dataset for recognising faces across pose and age,” in international conference on automatic face & gesture recognition, 2018, pp. 67–74.

- [36] Y. Feng, H. Feng, M. J. Black, and T. Bolkart, “Learning an animatable detailed 3D face model from in-the-wild images,” vol. 40, no. 8, 2021. [Online]. Available: https://doi.org/10.1145/3450626.3459936

- [37] T. Li, T. Bolkart, M. J. Black, H. Li, and J. Romero, “Learning a model of facial shape and expression from 4D scans,” ACM Transactions on Graphics, (Proc. SIGGRAPH Asia), vol. 36, no. 6, pp. 194:1–194:17, 2017. [Online]. Available: https://doi.org/10.1145/3130800.3130813

- [38] K. Sun, Y. Zhao, B. Jiang, T. Cheng, B. Xiao, D. Liu, Y. Mu, X. Wang, W. Liu, and J. Wang, “High-resolution representations for labeling pixels and regions,” arXiv preprint arXiv:1904.04514, 2019.

- [39] A. Rössler, D. Cozzolino, L. Verdoliva, C. Riess, J. Thies, and M. Nießner, “FaceForensics++: Learning to detect manipulated facial images,” in International Conference on Computer Vision (ICCV), 2019.

- [40] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [41] H. Wang, Y. Wang, Z. Zhou, X. Ji, D. Gong, J. Zhou, Z. Li, and W. Liu, “Cosface: Large margin cosine loss for deep face recognition,” in CVPR, 2018, pp. 5265–5274.

- [42] W. Liu, Y. Wen, Z. Yu, M. Li, B. Raj, and L. Song, “Sphereface: Deep hypersphere embedding for face recognition,” in CVPR, 2017, pp. 212–220.

- [43] R. Vemulapalli and A. Agarwala, “A compact embedding for facial expression similarity,” in CVPR, 2019, pp. 5683–5692.

- [44] TreB1eN, “Insightface pytorch,” https://github.com/TreB1eN/InsightFace\_Pytorch, 2018, Online; Accessed March 1, 2021.