Flexible and Robust Counterfactual Explanations with Minimal Satisfiable Perturbations

Abstract.

Counterfactual explanations (CFEs) exemplify how to minimally modify a feature vector to achieve a different prediction for an instance. CFEs can enhance informational fairness and trustworthiness, and provide suggestions for users who receive adverse predictions. However, recent research has shown that multiple CFEs can be offered for the same instance or instances with slight differences. Multiple CFEs provide flexible choices and cover diverse desiderata for user selection. However, individual fairness and model reliability will be damaged if unstable CFEs with different costs are returned. Existing methods fail to exploit flexibility and address the concerns of non-robustness simultaneously. To address these issues, we propose a conceptually simple yet effective solution named Counterfactual Explanations with Minimal Satisfiable Perturbations (CEMSP). Specifically, CEMSP constrains changing values of abnormal features with the help of their semantically meaningful normal ranges. For efficiency, we model the problem as a Boolean satisfiability problem to modify as few features as possible. Additionally, CEMSP is a general framework and can easily accommodate more practical requirements, e.g., casualty and actionability. Compared to existing methods, we conduct comprehensive experiments on both synthetic and real-world datasets to demonstrate that our method provides more robust explanations while preserving flexibility.

1. introduction

Understanding the internal mechanisms behind model predictions is difficult due to the large volume of parameters in machine learning models. This problem is particularly significant in high-stakes domains such as healthcare and finance, where incorrect predictions are disastrous (Daws, 2021). Counterfactual explanation (CFE) (Wachter et al., 2017) aims to identify minimal changes required to modify the input to achieve a desired prediction and provides insights into why a model produces a certain prediction instead of the desired one. CFEs can help understand the underlying logic of certain predictions (Schoeffer et al., 2022), detect the inherent model bias for fairness (Kasirzadeh and Smart, 2021), and provide suggestions to users who receive adverse predictions (Karimi et al., 2021; Ustun et al., 2019). Therefore, CFEs can be adopted in broad applications of healthcare, finance, education, justice, and other domains.

Despite the valuable insights provided by CFEs, recent studies (Wachter et al., 2017; Schoeffer et al., 2022; Sokol and Flach, 2019; Virgolin and Fracaros, 2023; Slack et al., 2021; Laugel et al., 2019) have shown that multiple CFEs can exist with equivalent evaluation metrics (e.g., validity, proximity, sparsity, plausibility), yet significantly differ on feature values for an input or seemingly indifferent inputs. For example, Wachter et al. (Wachter et al., 2017) pointed out “multiple counterfactuals are possible, …” and “multiple outcomes based on changes to multiple variables may be possible”. Laugel et al. (Laugel et al., 2019) demonstrated that instances that are close to each other can have different CFEs. Moreover, Virgolin and Fracaros (Virgolin and Fracaros, 2023) have argued that CFEs lack robustness to adverse perturbations if not deliberately designed. While multiple CFEs can lead to the same desired prediction, each CFE tells a different story to reach the target.

It is important to note that counterfactual multiplicity has both advantages and disadvantages. On one hand, multiple CFEs can be beneficial because they afford more flexibility and freedom to select user-friendly CFEs when a single CFE may be overly restricted for users. Specifically, diverse CFEs (Russell, 2019; Mothilal et al., 2020) are offered to potentially cover broad user preferences; interactive human-computer interfaces (Cheng et al., 2021; Wang et al., 2021) are designed on multiple CFEs to obtain more satisfiable ones. On the other hand, users who have the same feature values or seemingly inconsequential differences may receive inconsistent CFEs (e.g., two different diverse sets) as the CFE method itself does not store historical CFEs and guarantee the optimal solutions either. Such inconsistency inevitably raises fairness issues (Virgolin and Fracaros, 2023; Artelt et al., 2021) and undermines users’ trust (Schoeffer et al., 2022) in CFEs. For example, two financially similar individuals are rejected when they apply for a loan. Yet, CFEs for two people are quite different-one needs to update the salary slightly while the other is required to get a higher education degree and a better job. Another negative example is when users make some efforts towards previous CFEs but receive a significantly different CFE, rendering their previous efforts futile. Therefore, it is crucial to take advantage of the flexibility of multiple CFEs and maintain consistency for users having the same feature values or slight differences.

Recent research (Upadhyay et al., 2021; Black et al., 2022; Pawelczyk et al., 2020b; von Kügelgen et al., 2022; Dutta et al., 2022) on robustness mainly studies CFEs with consistent predictions under slight model updates (by restricting CFEs to preserve causal constraints, or follow data distribution, etc.), rather than generates CFEs with consistent feature values. Therefore, these studies fail to address fairness concerns and ignore the freedom of user selection. Generally, models to explain are highly complex and non-convex, e.g., DNN models. Even constrained by consistent prediction, heuristic search strategies (Wachter et al., 2017; Laugel et al., 2017; Sharma et al., 2020; Tolomei et al., 2017) can still converge to different non-optimal solutions due to the huge search space. Meanwhile, these works do not explicitly exploit flexibility to meet user preferences. As the number of possible CFEs can be huge, existing methods on flexibility or robustness can be explained to be different selection strategies from a solution pool (without optimizing diversity and robustness in advance), i.e., selecting CFEs that are diverse (Mothilal et al., 2020), follow data distribution (Pawelczyk et al., 2020b), or withhold causal constraints (von Kügelgen et al., 2022). Motivated by this, we target to design a novel method that obtains a diverse and robust set of CFEs simultaneously.

To overcome the above limitations, we propose to incorporate task priors (normal ranges, a.k.a. reference intervals) to stabilize valid search regions, while ensuring that counterfactual explanations (CFEs) are diverse to meet various user requirements. It should be noted that robustness measures the differences between two sets of CFEs in different trials while diversity measures the inherent discrepancy within a set of CFEs. Normal ranges in our approach commonly exist in broad domains and are easy to obtain from prior knowledge. For example, the normal range of heart rate per minute is between 60 and 100; the IELTS score should be greater than 6.5 and the minimal GPA is 3.5 for Ph.D. admissions. We assume that the undesired prediction results from certain features outside of normal ranges and thus, we attempt to move abnormal features into normal ranges to generate CFEs with the desired prediction. Specifically, we replace an abnormal feature with the closest endpoint of its normal range. As the endpoints are stationary, CFEs after feature replacement tend to have the same feature value in different trials for the same/similar input. In practice, it may be unnecessary to move all abnormal features into normal ranges for the desired prediction. Therefore, we aim to select minimal subsets of abnormal features to replace where each subset corresponds to a CFE. CFEs determined by all minimal subsets are diverse as an arbitrary minimal subset is not contained by another subset.

As mentioned earlier, the problem of finding CFEs boils down to selecting minimal subsets of abnormal features to replace, which can be formulated as either the Maximally Satisfiable Subsets (MSS) or Minimal Unsatisfiable Subsets (MUS) problem (Liffiton and Malik, 2013; Liffiton and Sakallah, 2005). However, finding all minimal sets for satisfiable CFEs is an NP-Complete problem as an exponential number of subsets should be checked. To enhance efficiency, we covert the enumeration of minimal subsets to the Boolean satisfiability problem (SAT) that finds satisfiable Boolean assignments over a series of Boolean logic formulas, which can be solved with efficient modern solvers. As for commonly mentioned constraints (e.g., actionability, correlation, and causality), we can conveniently write them in Boolean logic formulas, which can be conjugated into current clauses in conjunctive normal form (CNF). Therefore, our framework is flexible to provide feasible counterfactual recommendations.

The main contributions of this paper are summarized as follows.

-

•

We reformulate the counterfactual explanation problem to satisfy both flexibility and robustness by replacing a minimal subset of abnormal features with the closest endpoints of normal ranges.

-

•

We convert this problem by checking the satisfiability of Boolean logic formulas for a Boolean assignment, which can be solved by modern SAT solvers efficiently. In addition, common constraints can be easily incorporated into current Boolean logic formulas and solved together.

-

•

We conduct intensive experiments on both synthetic and real-world datasets to demonstrate that our approach produces more consistent and diverse CFEs than state-of-the-art methods.

2. Related Work

Counterfactual explanations (Wachter et al., 2017) refer to perturbed instances with the minimum cost that result in a different prediction from a pre-trained model given an input instance. These explanations provide ways to comprehend the model’s prediction logic and offer advice to users receiving adverse predictions. Most existing algorithms focus on modeling practical requirements and user preferences with proper constraints. Typical constraints include actionability (Ustun et al., 2019), which freezes immutable features such as race, gender, etc; plausibility (Joshi et al., 2019; Van Looveren and Klaise, 2019), which requires CFEs to follow the data distribution; diversity (Mothilal et al., 2020; Russell, 2019), which generates a diverse set of explanations at a time; sparsity (Dhurandhar et al., 2018), which favors fewer features changed; causality (Joshi et al., 2019; Karimi et al., 2020), which restricts CFEs to meet specific causal relations. However, recent studies (Wachter et al., 2017; Laugel et al., 2019; Russell, 2019; Schoeffer et al., 2022; Sokol and Flach, 2019; Virgolin and Fracaros, 2023) have revealed that there often exist multiple CFEs with equivalent performance but different feature values for an input or seemingly indifferent inputs. Next, we review research that takes advantage of counterfactual multiplicity and addresses concerns regarding non-robustness.

Multiple CFEs provide users with more flexibility to prioritize their preferences without compromising the validity and proximity of CFEs. When a single CFE is inadequate to meet users’ requirements, employing a diverse set of CFEs is an effective and straightforward strategy to overcome this limitation. For example, Wachter et al. (Wachter et al., 2017) generate a diverse set by running multiple times with different initializations; Russell (Russell, 2019) prohibits the transition to previous CFEs in each run; while Mothilal et al. (Mothilal et al., 2020) add a DPP (Determinantal Point Processes) term to ensure that the CFEs are far apart from each other. In addition, multiple CFEs also enable researchers to develop Human-Computer Interaction (HCI) tools for interactively satisfying user requirements (Cheng et al., 2021; Wang et al., 2021). However, such a diverse set can be inconsistent for two inputs with no or slight differences. In our paper, we aim to generate a consistent and diverse set of CFEs for an input or seemingly different inputs, to enhance the robustness and reliability of CFEs.

The non-robustness issue of CFEs has garnered significant attention recently. As introduced in (Slack et al., 2021; Laugel et al., 2019), even a slight perturbation to the input can result in drastically different CFEs. To verify this phenomenon for neural network models, Slack et al. (Slack et al., 2021) train an adversarial model that is sensitive to trivial input changes. Some relevant works (Upadhyay et al., 2021; Black et al., 2022; Pawelczyk et al., 2020b; Dutta et al., 2022) propose to generate CFEs that yield consistent predictions when the model is retrained. For example, (Pawelczyk et al., 2020b) proves that adhering to the data manifold ensures stable predictions for CFEs; (Upadhyay et al., 2021) incorporates adversarial training to produce robust models for generating explanations; Black et al. (2022) states that closeness to the data manifold is insufficient to indicate counterfactual stability, and they propose Stable Neighbor Search (SNS) to find an explanation with the lower model Lipschitz continuity and higher confidence. However, constraining consistent predictions of CFEs does not necessarily ensure CFEs with the same or similar feature values, and still fails to address the unfairness issue. Moreover, these robustness methods lack the flexibility to meet user requirements while our work considers both flexibility and robustness simultaneously.

3. Preliminary

3.1. Counterfactual Explanations

Let us consider a pretrained model , where denotes the feature space and is the prediction space. For simplicity, let , where / denotes unfavorable/favorable prediction, respectively. Given an input instance , which is predicted to be the unfavorable outcome (), a counterfactual explanation (CFE) is a data point that leads to a favorable prediction, i.e., , with minimal perturbations of . Formally, a counterfactual explanation method can be mathematically defined as follows:

| (1) | ||||

where is a distance or cost metric that quantifies the efforts required in order to change from an input to its CFE . In practice, the commonly used cost function includes /MAD (Wachter et al., 2017; Russell, 2019), total log-percentile shift (Ustun et al., 2019), and norm on latent space (Pawelczyk et al., 2020a). To optimize Eqn. (1), it can be further transformed to the Lagrangian form (Wachter et al., 2017), as shown below:

| (2) |

where is a differential function to measure the gap between and the favorable prediction , and is a positive trade-off factor. By optimizing the above objective, a CFE method returns a single CFE or a set of CFEs for an input .

3.2. Robustness of Counterfactual Explanations

Motivated by the formalization in (Artelt et al., 2021), we formally define the robustness of CFEs in more general cases that include slight input perturbations and model changes. However, before presenting technical details, one critical question that needs to answer is “Do we want CFEs to remain consistent after a series of slight changes, or should they vary to reflect such changes?”. The answer depends on practical scenarios. In certain applications, one may expect CFEs to be sensitive to such tiny changes. For example, in the study of the effects of climate change on sea turtles (Blechschmidt et al., 2020), one may expect CFEs to be sensitive to temperature changes. In this paper, we assume that such trivial changes are either irrelevant or less important to the generation of CFEs. As such, we aim to produce CFEs that are robust to trivial changes, such as inputs added with random noise, and model retraining on new data from the same distribution.

Let represent a slightly perturbed sample that is close to , meaning , where is the density estimation of perturbed samples that yield the same prediction as the input . Similarly, let denote a retrained model belonging to the class , which consists of potential models that perform equivalently well as the original one.

Definition 0 (Robustness of Counterfactual Explanations).

Given a function computing the similarity between two sets of CFEs, we quantify the robustness of the explanations by assessing the expected similarity between the current set of CFEs, and a new set of CFEs after potential input perturbations or model changes.

| (3) |

A lower value indicates higher robustness. By minimizing the above expectation, we can generate robust CFEs. However, in real life, and are typically unknown. Intuitively, they can be determined based on specific changes that users desire to be robust against. For instance, one can consider adding Gaussian noise to the input, masking certain features, or retraining the models on data from the same distribution, to decide and .

3.3. Causes of Non-robustness

Here, we explain the root causes of non-robustness. The total loss in Eqn. (2) form is usually non-convex due to the non-convex decision surface of probabilistic models and other constraints. As shown in Figure 1, multiple local minima can be found, but current methods often select a single or CFEs from them. Such selected CFEs can be different in each trial. Next, we discuss several influential factors that result in non-robust CFEs.

-

•

Input perturbations. Input instances can be perturbed by adding some noise or masking random features. Due to the local sensitivity of large models, such trivial perturbations can significantly influence model predictions, leading to different counterfactual explanations (CFEs) (Slack et al., 2021).

-

•

Model updates. The predictive model in Eqn. (1) is typically retrained periodically in deployment. The updated model may exhibit slightly different behavior compared to the previous model and thus may have a great impact on the cost of the desired prediction.

-

•

Random factors. Heuristic search methods for Eq. (2) often involve random factors, e.g., random initial points in gradient descent (Wachter et al., 2017), random samples in Growing Sphere (Lash et al., 2017; Pawelczyk et al., 2020a), and random selection in genetic algorithms (Sharma et al., 2020). Algorithm randomness often causes different solutions in non-convex situations.

-

•

Algorithmic configurations. Different configurations of also affect the search process, e.g., the trade-off factor , the step size in gradient descent, the stop condition, the guide point (Van Looveren and Klaise, 2019), the radius of the sphere (Lash et al., 2017; Pawelczyk et al., 2020a), variational autoencoders used to approximate data distribution (Pawelczyk et al., 2020a; Joshi et al., 2019).

4. Proposed Method

In this section, we propose a method offering a diverse set of CFEs that remain stable when the input or model being explained is changed subtly by incorporating domain knowledge into the search.

4.1. Problem Formulation

Let be the indices of -dimensional input features. denotes the -th feature value of an input instance , and denotes the normal range of -th feature. We assume the normal range of each feature can be acquired from domain knowledge. For example, the normal range of BMI (CDC, 2022) is , and the normal heart rate (Shmerling, 2020) is between 60 to 100. For a given input , then we partition into two disjoint sets by checking whether each feature is in the normal range or not: (with size ) for abnormal features and (with size ) for features within the normal range.

We further assume that undesired predictions are attributed to abnormal features. Our intuition is to bring an abnormal feature within its normal range, which would increase the probability of the desired prediction. To ensure consistency, we replace an abnormal feature with the closest endpoint of the corresponding normal range. For instance, if the BMI of an obese person is , and the suggested BMI is (BMI normal range ). We aim to convert a subset of abnormal features into their respective normal ranges, to achieve lower cost and higher sparsity. Let represent the subset of abnormal features to be replaced. We employ the function to calculate the values after replacement for the abnormal features, where the -th element is computed as follows:

| (4) |

In applications where normal ranges are not available (we set ), we replace the input feature values with the corresponding values of a guide point or prototype that has the desired prediction. Correspondingly, given a guide point or prototype , can be expressed as follows:

| (5) |

We use the binary vector (abbreviated as ) to represent the presence or absence of each feature in subset . Specifically, each element indicates whether the -th feature is included in , i.e., . The feature replacement operation for an input on subset is defined as follows:

| (6) |

where is the Hadamard (element-wise) product of vectors. If the -th feature is not included in , we keep its original value, otherwise, we change it to the closest endpoint of its normal range.

As there could be a large number of subsets that can achieve the desired prediction after feature replacement, we aim to find the minimal subsets . Note that a minimal subset does not necessarily indicate minimal cardinality due to the difficulty of comparing the costs of different features. For example, increases in “academic degree”, “credit score”, and “salary” cannot be measured concisely in a mathematical cost. As such, we treat the subset (on “academic degree”, “credit score”) and the subset (on “salary”) as two different solutions, although the latter subset has the smaller cardinality, same to (Wang et al., 2021). Based on the above discussion, we formulate this problem to generate nondominated sets of CFEs as follows:

Definition 0 (Minimal Satisfiable Counterfactual Explanations).

Given a pre-trained model , input and feature normal ranges, the goal is to find all minimal satisfiable subsets, where for each subset , can achieve the desired prediction and represents a counterfactual explanation .

Diversity Analysis: In (Mothilal et al., 2020), authors propose a diversity metric over a set of CFEs of size , named count-diversity, to measure the inner discrepancy, written by,

| (7) |

Theorem 2.

The lower bound of count-diversity defined in Eqn. (7) over solutions of our problem definition is .

Proof.

Let’s denote the inner loop term as for brevity. We aim to prove that for any two arbitrary CFEs and , the pairwise distance is always greater than or equal to . To demonstrate this, we consider two contradictive cases by assuming .

Case 1 ():

This case implies , which contradicts the fact that two distinct CFEs are from the solution set.

Case 2 ():

In this case, there is only one feature difference. Let and denote the indices of abnormal features of two solutions. Then, or must exist, which also contradicts the minimal set to return in our problem definition. One CFE should be excluded because it costs more than the other.

The above contradictive proof shows that holds. Summing up all pair-wise distance , we can obtain the lower bound . ∎

Robustness Analysis: Let represent the recommended actions for a user. In our method, consistently applies to slightly perturbed instance , except in the following two situations: (1) is no longer valid, which occurs when slight perturbations have a negative impact on the desired prediction. For example, normal features may be turned into abnormal ones. We need more effort than to achieve the desired prediction. (2) Changing fewer abnormal features is sufficient to achieve the desired prediction, indicating that slight perturbations are beneficial. In this case, is omitted as there exist more cost-efficient solutions. As both model continuity and perturbation strategies can influence the , we leave the determination of the maximal bound of perturbation, to which our method remains robust, for future work.

4.2. Problem Solving

The brute-force method that evaluates all possible subsets is exponentially complex with respect to the number of abnormal features. Next, we propose a technique Counterfactual Explanations with Minimal Satisfiable Perturbation (CEMSP) to boost the search process. Our method starts with finding the binary vectors that satisfy the desired prediction after feature replacement. This can be converted into the Boolean satisfiability problem that checks whether there exists a Boolean value assignment on variables (features in abnormal ranges) such that the conjunction of Boolean formulas evaluates to . For better efficiency, we introduce the following proposition from domain knowledge.

Proposition 1 (Monotoncity of ).

The function is monotone, that is, holds for all .

This proposition aligns with common sense in practical applications. It is important to note that the undesired prediction arises from specific abnormal features according to our assumption. Intuitively, moving an abnormal feature into the normal range should never decrease the desired probability. Additionally, we assume that the predictive model has learned the relationship between feature normal ranges and the predicted classes.

Based on the monotonicity of function , we derive the following two theorems for any .

Theorem 3.

If can achieve the desired target, can also achieve the desired target for any superset of .

Theorem 4.

If cannot achieve the desired target, cannot satisfy the desired target either for any subset of .

Proof.

Theorem 3 illustrates that if we can achieve the desired prediction by replacing abnormal features in , there is no need to change more abnormal features. The theorem tends to produce sparser results at a lower cost. Theorem 4 demonstrates that if we cannot achieve the desired prediction by changing abnormal features in , there is no need to check the satisfiability of any subsets of .

To prune as many as subsets at a time with these two theorems, we need to find the minimal satisfiable subset (MSS) and the maximal unsatisfiable subset (MUS), shown as boxes filled with green/red background in Figure 2. Next, we introduce two algorithms to achieve this: Grow() in Algorithm 1 and Shrink() in Algorithm 2. The Grow() algorithm starts with an arbitrary unsatisfiable subset and iteratively attempts to change other abnormal features until a maximal unsatisfiable subset is found. The Shrink() algorithm starts with an arbitrary satisfiable subset and iteratively attempts to reserve some features until a minimal satisfiable subset is found. Note that Grow() and Shrink() algorithms serve two plugins in our method, which can be replaced by any advanced algorithm with the same purpose.

Further, we introduce how to solve it under Boolean satisfiability problem (Liffiton and Malik, 2013; Bendík et al., 2018). In particular, any subset can be converted to a satisfiable Boolean assignment under a set of propositional logic formulas in conjunctive normal form (CNF), i.e., . For example, for a subset in Figure 2, we can write the CNF in the following equation, and is the only solution.

| (8) |

By employing this approach, the explicit materialization of all subsets can be avoided, thereby mitigating the exponential space complexity. The crux lies in devising the appropriate propositional logic formulas.

Our complete algorithm is shown in Algorithm 3. Initially, in line , we merely forbid the changes on normal features and any possible binary assignments on abnormal features can satisfy the CNF. uses an SAT solver to return a solution satisfying the CNF in line . In our paper, we adopt the Z3 package111https://github.com/Z3Prover/z3. In line , we convert the binary vector to indices of subsets. Next, we check whether replacing features in this subset can achieve the desired prediction. If not satisfying the desired prediction, we call the Grow() algorithm to find the maximal unsatisfiable subset and then prune all subsets of it. The prune operation is achieved by the following propositional Boolean formulas which are conjugated into the existing CNF.

| (9) |

Similarly, if we find a subset satisfying the desired prediction, we call Shrink() function to return a minimal satisfiable subset, which can induce a CFE with minimal perturbations of features. Then, we prune all supersets of it with the following logic formula,

| (10) |

If no solution satisfies the CNF in the current iteration, we stop our algorithm in the line and .

Correctness. In our algorithm, each subset is either evaluated to be an MSS/MUS or pruned by an MSS/MUS. Hence, our algorithm will return all minimal CFEs, providing the same solutions as the brute-force search.

Space Complexity. The space complexity depends on how many minimal CFEs are returned. The worst case is , which corresponds to that feature replacements on arbitrary abnormal features of size are satisfiable.

Time Complexity. The runtime of our method primarily depends on two parts: (1) a solver (line 3) that takes a set of constraints and returns a mask . The solver is considerably faster than calling the pretrained model. (2) evaluating the prediction on a subset. Compared with brute-force search, which calls the deep model times to check all possible sets, our method reduces the number of calls on the model by pruning on certain subsets and supersets. Therefore, the empirical running time will decrease.

4.3. Compatibility with Other Constraints

The major theme of recent research is to model various constraints into CFE generation. Here, we show how to write these constraints by propositional logic formulas that can be conjugated into the CNF in line of our Algorithm 3.

Immutable features. Considering some features are immutable (e.g., race, birthplace), CFEs should avoid perturbations on these features. To achieve this, we add the following Boolean logic formula for a set of immutable features ,

| (11) |

Accordingly, these features should be ignored in Grow() algorithm when it searches for the maximal unsatisfiable set. Alternatively, we can directly treat immutable features as normal features to avoid any changes to them.

Conditional immutable features. These features must change in one direction, e.g., education degree. We can examine whether moving a feature value into its normal range follows the valid direction. If violating the valid direction, we treat this feature as an immutable feature, otherwise, we put no restriction on this feature.

Causality. In practice, changing one feature may cause a change in other features. Such causal relations among features are generally written by a set of triplets in the structural causal model (SCM) (Pearl, 2009), that is, , where are exogenous features, are endogenous features, and is a set of functions that describe how endogenous features are quantitatively affected by exogenous features. To adapt causality to our method, we only keep these triplets that normal exogenous features lead to normal endogenous features as our method merely considers discrete feature changes (from abnormal to normal). For example, feature is an exogenous feature that affects two endogenous features and , and and become normal in the consequence of its normal ancestor feature . In this example, we can add two material conditions in the following that restrict the feature change of CFEs to follow the causal relations.

| (12) |

At the same time, Grow() and Shrink() algorithms should be updated to satisfy such causal relations when they attempt to add/remove a feature. This can be easily implemented by storing these causal relations by an inverted index where an entry is an exogenous feature and the inverted list contains all its endogenous features.

Correlation. Correlation can be regarded as bidirectional causal relations. For example, if features and are correlated and in the normal range simultaneously, we can write the correlation between and as,

| (13) |

The great advantage of our framework is that it allows us to insert these constraints gradually and flexibly, as the complete relation graphs (e.g., full causal graph) are often difficult to derive in the beginning.

5. Experiments

In this section, we undertake a quantitative comparison between our proposed method CEMSP and state-of-the-art approaches. Additionally, we demonstrate empirical examples of counterfactual explanations that effectively integrate practical constraints. The source code is available at the GitHub repository222https://github.com/wangyongjie-ntu/CEMSP.

Datasets.

We conducted a comprehensive series of experiments involving a synthetic dataset and two real-world UCI medical datasets. Notably, the medical datasets encompass diagnostic features with well-defined and clinically significant normal ranges.

-

•

Synthetic Dataset is a binary class dataset consisting of samples with features. Each feature is sampled from the normal distribution independently. Regarding label balance, the binary label is assigned a value of when the following equation is satisfied; otherwise, is set to 0:

We set the lower values of normal ranges of four features as for a higher confidence prediction.

-

•

UCI HCV Dataset (Diaconis and Efron, 1983). This dataset contains instances. Following (BHATT, 2021), we convert categories of diagnosis into binary classes. After label conversion, the dataset consists of individuals diagnosed with HCV and individuals labeled as healthy. Next, we remove the “Age” and “Sex” and keep the other medical features with normal ranges. We adopt the tight normal ranges from laboratory tests in (Hoffmann et al., 2016) as certain normal ranges depend on “Sex” and we remove the “Sex” attribute in preprocessing.

-

•

UCI Thyroid Dataset (Quinlan et al., 1987). The raw dataset contains instances where each instance is described by features and labeled as either hypothyroid or normal class. We retain the most discriminative features “FTI”, “TSH”, “T3”, “TT4” that have meaningful normal ranges and remove other features. Subsequently, we drop certain rows with missing values. The final dataset consists of patients and healthy users. Normal ranges of “TSH”, “T3”, “TT4” are from laboratory tests in (Joshi et al., 2011). As we do not find the normal range of “FTI” that matches the “FTI” values in this dataset, we simply choose the 1-sigma interval of “FTI” of the normal group.

Evaluation Metrics.

To comprehensively compare over CFEs across various approaches, we employ the following evaluation metrics.

- •

-

•

Average Percentile Shift (APS) (Pawelczyk et al., 2020a) measures the relative cost of perturbations of CFEs,

(15) where denotes the percentile of the -th feature value relative to all values of the feature in the whole data set. A lower score is favored.

-

•

Sparsity. It measures the percentage of features that remain unchanged and we prefer higher sparsity,

(16) -

•

Diversity. We consider two diversity metrics, named Diversity, which is introduced in (Mothilal et al., 2020), and count-diversity (named C-Diversity for abbreviation), which is defined in Eqn (7) in Section 4.1, to measure the discrepancy within returned solutions.

(17) where represents the and is the number of CFEs.

Baselines.

We compare our method with the following baseline methods.

-

•

GrowingSphere (GS) (Lash et al., 2017). This algorithm searches for CFEs from random samples in the sphere neighborhood of the input. The radius of the sphere grows until a CFE is found. It adopts the postprocessing on returned CFEs to make sparser solutions.

- •

-

•

CFProto (Van Looveren and Klaise, 2019). It adds a prototype term to restrict that CFEs should resemble the prototype of the desired class. In our experiment, we set the prototype as the closest endpoints of normal ranges of these abnormal features, that is, .

-

•

DiCE (Mothilal et al., 2020). Compared with PlainCF, it considers the diversity constraint that is modeled by a term over a set of CFEs.

-

•

SNS (Black et al., 2022). It finds a CFE with higher confidence and lower Lipschitz constant in the neighborhood of a given CFE, to produce consistent prediction under model update.

Experiment configurations.

We first randomly split the datasets into train/test sets at the ratio of and normalize all features by a standard scaler on two UCI datasets (no feature normalization on the synthetic dataset). Then, we train a 3-layer Multilayer perceptron (MLP) model with Adam optimizer. The test accuracies are 99%, 96%, and 98% on three datasets correspondingly. As we intend to convert unhealthy patients to healthy ones, we produce CFEs for all correctly classified patients in test sets for two UCI datasets. For saving time, we only produce CFEs for 100 random true negative samples in the synthetic dataset.

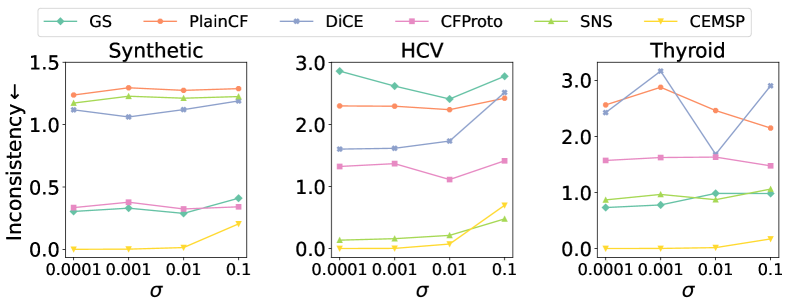

Our method produces a set of CFEs of varied size , while GS, PlainCF, CFProto, and SNS generate one at a time. We run GS, PlainCF, and CFProto times for fair comparisons to generate the same number of CFEs as ours. For DiCE, we directly keep the size of the diverse set as ours. We evaluate all CFE methods under the following two kinds of slight updates to measure the algorithm robustness.

-

•

Inputs are fixed, and we produce two sets of CFEs from two models that are trained on the same dataset with different initializations.

-

•

Model is fixed, and we produce two sets of CFEs from an input and its perturbed instance , where and is the random noise sampling from a Gaussian distribution . In our experiments, .

5.1. Quantitative Evaluation

We first report the quantitative comparison of sparsity, APS, Diversity, and C-Diversity in Figure 5, where the -axis denotes a counterfactual explanation generation method, the -axis represents the average score of each metric of all evaluated instances. We can see that our method achieves the competitive sparsity as GS. GS achieves sparsity through post-preprocessing techniques, whereas our method CEMSP focuses on making minimal modifications to subsets of abnormal features. In contrast, other methods do not explicitly optimize for sparsity and consequently fall behind in this aspect. For the APS, CEMSP is slightly better. This result is grounded in two key considerations: firstly, we substitute an abnormal feature with its closest endpoints and secondly, we aim to change the minimal number of abnormal features. It is worth noting that although PlainCF minimizes the distance in its objective, this does not equate to minimizing the APS since APS is a density-aware metric among the population. Our method achieves at least C-Diversity as expected. In contrast to sparsity, C-Diversity sums up the fraction of features that are different between any two CFEs. Therefore, the method with a higher sparsity often associates with a lower C-Diversity. As a result, our CEMSP appears to have less competitive C-Diversity than methods that make simultaneous changes on many numerous features. However, our CEMSP has a competitive Diversity score defined in (Mothilal et al., 2020).

Figure 5 and 5 report inconsistency scores under model retraining and input perturbations. Our CEMSP exhibits superior performance compared to other baseline methods. Specifically, GS, plainCF, and DiCE yield the poorest results when consistency restrictions are not enforced. CFProto, which incorporates a prototype term, achieves a better inconsistency score than PlainCF by directing all CFEs towards the prototype. As discussed earlier, our findings demonstrate that SNS does not perform well in generating CFEs with consistent feature values, despite having CFEs that yield consistent model predictions. In summary, our CEMSP outperforms the baseline methods across the aforementioned metrics, establishing its overall superiority.

5.2. Use-Case Evaluation

Next, we use the use-case evaluation in Figure 6 to present the compatibility of our method. The input instance is a patient in the HCV dataset who has the undesired prediction. Without any constraint, our method generates 4 CFEs, as illustrated in the table located at the bottom left. By introducing additional constraints, our model can effortlessly generate new CFEs that meet the desired criteria. For example, if we want to keep the original value of , we can easily incorporate the CNF (), leading to CFEs that solely modify the remaining features. Furthermore, domain knowledge reveals that and are correlated (Shen et al., 2015). Consequently, we can incorporate correlation constraints that limit simultaneous changes to both features. This can be achieved by including the CNF in our method, effectively enforcing the desired correlation constraint. Although this use-case evaluation may not yet be fully applicable to real-life scenarios, it offers valuable insights and demonstrates the potential to accommodate more practical considerations.

6. Conclusion

Lacking robustness in counterfactual explanations can undermine both individual fairness and model reliability. In this work, we present a novel framework to generate robust and diverse counterfactual explanations (CFEs). Our work leverages the feature normal ranges from domain knowledge and generates CFEs that replace the minimal number of abnormal features to the closest endpoints of their normal ranges. We convert this problem into the Boolean satisfiability problem and solve it with modern SAT solvers. Experiments on both synthetic and real-life datasets demonstrate that our generated CFEs are more consistent than baselines while preserving flexibility for user preferences.

7. Limitations and Future Work

While our work offers the potential to address the non-robustness issue through the utilization of domain knowledge, such as the normal ranges in healthcare and finance, some limitations hinder its applicability in broader contexts. Firstly, the scalability of the proposed method is underestimated. SAT solvers exhibit exponential complexity in the worst-case scenario. When dealing with a substantial number of features, the time required to find a binary mask from an SAT solver may surpass that of a forward pass in the DNN model. This concern can be addressed through empirical comparisons of the execution time between the SAT solver and DNN model revoking. Secondly, our approach is not directly applicable to scenarios where a portion of normal ranges is unknown. It might be necessary to incorporate additional information to determine the appropriate replacement values for these features. Thirdly, our study is established on binary classification tasks. However, the direct adaptation of our method to multi-class classification or regression tasks remains challenging. Normal ranges are typically contingent upon the target prediction. In the context of multi-class classification or regression, the target predictions can become intricate, rendering the normal ranges unattainable.

In future work, our ultimate goal is to investigate robust and flexible counterfactual explanations in more general situations without any assumption about normal ranges. In addition, we intend to develop a sustainable system that offers users actionable recommendations and gathers valuable feedback to nourish continual enhancements.

Acknowledgements.

This research is supported, in part, by Alibaba Group through Alibaba Innovative Research (AIR) Program and Alibaba-NTU Singapore Joint Research Institute (JRI), Nanyang Technological University, Singapore. This research is also supported, in part, by the National Research Foundation, Prime Minister’s Office, Singapore under its NRF Investigatorship Programme (NRFI Award No. NRF-NRFI05-2019-0002). Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not reflect the views of National Research Foundation, Singapore.References

- (1)

- Artelt et al. (2021) André Artelt, Valerie Vaquet, Riza Velioglu, Fabian Hinder, Johannes Brinkrolf, Malte Schilling, and Barbara Hammer. 2021. Evaluating robustness of counterfactual explanations. In 2021 IEEE Symposium Series on Computational Intelligence (SSCI). IEEE, 01–09.

- Bendík et al. (2018) Jaroslav Bendík, Ivana Černá, and Nikola Beneš. 2018. Recursive online enumeration of all minimal unsatisfiable subsets. In International symposium on automated technology for verification and analysis. Springer, 143–159.

- BHATT (2021) KRISHNA BHATT. 2021. Hepatitis C Predictions. https://www.kaggle.com/code/krishnabhatt4/hepatitis-c-predictions

- Black et al. (2022) Emily Black, Zifan Wang, and Matt Fredrikson. 2022. Consistent Counterfactuals for Deep Models. In International Conference on Learning Representations. https://openreview.net/forum?id=St6eyiTEHnG

- Blechschmidt et al. (2020) Jana Blechschmidt, Meike J Wittmann, and Chantal Blüml. 2020. Climate change and green sea turtle sex ratio—preventing possible extinction. Genes 11, 5 (2020), 588.

- CDC (2022) USA CDC. 2022. Assessing Your Weight. https://www.cdc.gov/healthyweight/assessing/index.html

- Cheng et al. (2021) F. Cheng, Y. Ming, and H. Qu. 2021. DECE: Decision Explorer with Counterfactual Explanations for Machine Learning Models. IEEE Transactions on Visualization & Computer Graphics 27, 02 (feb 2021), 1438–1447. https://doi.org/10.1109/TVCG.2020.3030342

- Daws (2021) Ryan Daws. 2021. Medical chatbot using OpenAI’s GPT-3 told a fake patient to kill themselves. AI News (11 2021). https://www.artificialintelligence-news.com/2020/10/28/medical-chatbot-openai-gpt3-patient-kill-themselves/

- Dhurandhar et al. (2018) Amit Dhurandhar, Pin-Yu Chen, Ronny Luss, Chun-Chen Tu, Paishun Ting, Karthikeyan Shanmugam, and Payel Das. 2018. Explanations based on the missing: Towards contrastive explanations with pertinent negatives. In Advances in Neural Information Processing Systems. 592–603.

- Diaconis and Efron (1983) Persi Diaconis and Bradley Efron. 1983. Computer-intensive methods in statistics. Scientific American 248, 5 (1983), 116–131.

- Dubuisson and Jain (1994) M-P Dubuisson and Anil K Jain. 1994. A modified Hausdorff distance for object matching. In Proceedings of 12th international conference on pattern recognition, Vol. 1. IEEE, 566–568.

- Dutta et al. (2022) Sanghamitra Dutta, Jason Long, Saumitra Mishra, Cecilia Tilli, and Daniele Magazzeni. 2022. Robust counterfactual explanations for tree-based ensembles. In International Conference on Machine Learning. PMLR, 5742–5756.

- Hoffmann et al. (2016) Georg Hoffmann, Ralf Lichtinghagen, and Werner Wosniok. 2016. Simple estimation of reference intervals from routine laboratory data. LaboratoriumsMedizin 39, s1 (2016).

- Jesorsky et al. (2001) Oliver Jesorsky, Klaus J Kirchberg, and Robert W Frischholz. 2001. Robust face detection using the hausdorff distance. In International conference on audio-and video-based biometric person authentication. Springer, 90–95.

- Joshi et al. (2019) Shalmali Joshi, Oluwasanmi Koyejo, Warut Vijitbenjaronk, Been Kim, and Joydeep Ghosh. 2019. Towards realistic individual recourse and actionable explanations in black-box decision making systems. arXiv preprint arXiv:1907.09615 (2019).

- Joshi et al. (2011) Shashank R Joshi et al. 2011. Laboratory evaluation of thyroid function. J Assoc Physicians India 59, Suppl (2011), 14–20.

- Karimi et al. (2021) Amir-Hossein Karimi, Bernhard Schölkopf, and Isabel Valera. 2021. Algorithmic Recourse: from Counterfactual Explanations to Interventions. In FAccT ’21: 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual Event / Toronto, Canada, March 3-10, 2021, Madeleine Clare Elish, William Isaac, and Richard S. Zemel (Eds.). ACM, 353–362. https://doi.org/10.1145/3442188.3445899

- Karimi et al. (2020) Amir-Hossein Karimi, Julius von Kügelgen, Bernhard Schölkopf, and Isabel Valera. 2020. Algorithmic recourse under imperfect causal knowledge: a probabilistic approach. Advances in Neural Information Processing Systems 33 (2020).

- Kasirzadeh and Smart (2021) Atoosa Kasirzadeh and Andrew Smart. 2021. The Use and Misuse of Counterfactuals in Ethical Machine Learning. In FAccT ’21: 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual Event / Toronto, Canada, March 3-10, 2021, Madeleine Clare Elish, William Isaac, and Richard S. Zemel (Eds.). ACM, 228–236. https://doi.org/10.1145/3442188.3445886

- Lash et al. (2017) Michael T Lash, Qihang Lin, Nick Street, Jennifer G Robinson, and Jeffrey Ohlmann. 2017. Generalized inverse classification. In Proceedings of the 2017 SIAM International Conference on Data Mining. SIAM, 162–170.

- Laugel et al. (2019) Thibault Laugel, Marie-Jeanne Lesot, Christophe Marsala, and Marcin Detyniecki. 2019. Issues with post-hoc counterfactual explanations: a discussion. arXiv preprint arXiv:1906.04774 (2019).

- Laugel et al. (2017) Thibault Laugel, Marie-Jeanne Lesot, Christophe Marsala, Xavier Renard, and Marcin Detyniecki. 2017. Inverse classification for comparison-based interpretability in machine learning. arXiv preprint arXiv:1712.08443 (2017).

- Liffiton and Malik (2013) Mark H Liffiton and Ammar Malik. 2013. Enumerating infeasibility: Finding multiple MUSes quickly. In International Conference on Integration of Constraint Programming, Artificial Intelligence, and Operations Research. Springer, 160–175.

- Liffiton and Sakallah (2005) Mark H Liffiton and Karem A Sakallah. 2005. On finding all minimally unsatisfiable subformulas. In International conference on theory and applications of satisfiability testing. Springer, 173–186.

- Mothilal et al. (2020) Ramaravind K Mothilal, Amit Sharma, and Chenhao Tan. 2020. Explaining machine learning classifiers through diverse counterfactual explanations. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency. 607–617.

- Pawelczyk et al. (2020a) Martin Pawelczyk, Klaus Broelemann, and Gjergji Kasneci. 2020a. Learning Model-Agnostic Counterfactual Explanations for Tabular Data. In Proceedings of The Web Conference 2020. 3126–3132.

- Pawelczyk et al. (2020b) Martin Pawelczyk, Klaus Broelemann, and Gjergji Kasneci. 2020b. On counterfactual explanations under predictive multiplicity. In Conference on Uncertainty in Artificial Intelligence. PMLR, 809–818.

- Pearl (2009) Judea Pearl. 2009. Causality (2 ed.). Cambridge University Press. https://doi.org/10.1017/CBO9780511803161

- Quinlan et al. (1987) J. R. Quinlan, P. J. Compton, K. A. Horn, and L. Lazarus. 1987. Inductive Knowledge Acquisition: A Case Study. In Proceedings of the Second Australian Conference on Applications of Expert Systems. Addison-Wesley Longman Publishing Co., Inc., USA, 137–156.

- Russell (2019) Chris Russell. 2019. Efficient search for diverse coherent explanations. In Proceedings of the Conference on Fairness, Accountability, and Transparency. 20–28.

- Schoeffer et al. (2022) Jakob Schoeffer, Niklas Kuehl, and Yvette Machowski. 2022. “There Is Not Enough Information”: On the Effects of Explanations on Perceptions of Informational Fairness and Trustworthiness in Automated Decision-Making. In 2022 ACM Conference on Fairness, Accountability, and Transparency (Seoul, Republic of Korea) (FAccT ’22). Association for Computing Machinery, New York, NY, USA, 1616–1628. https://doi.org/10.1145/3531146.3533218

- Sharma et al. (2020) Shubham Sharma, Jette Henderson, and Joydeep Ghosh. 2020. CERTIFAI: A Common Framework to Provide Explanations and Analyse the Fairness and Robustness of Black-Box Models. Association for Computing Machinery, New York, NY, USA, 166–172. https://doi.org/10.1145/3375627.3375812

- Shen et al. (2015) Jianying Shen, Jingying Zhang, Jing Wen, Qiang Ming, Ji Zhang, and Yawei Xu. 2015. Correlation of serum alanine aminotransferase and aspartate aminotransferase with coronary heart disease. International journal of clinical and experimental medicine 8, 3 (2015), 4399.

- Shmerling (2020) Robert H. Shmerling, MD. 2020. How’s your heart rate and why it matters? Harvard Health (3 2020). https://www.health.harvard.edu/heart-health/hows-your-heart-rate-and-why-it-matters

- Slack et al. (2021) Dylan Slack, Anna Hilgard, Himabindu Lakkaraju, and Sameer Singh. 2021. Counterfactual explanations can be manipulated. Advances in Neural Information Processing Systems 34 (2021), 62–75.

- Sokol and Flach (2019) Kacper Sokol and Peter A Flach. 2019. Counterfactual explanations of machine learning predictions: opportunities and challenges for AI safety. In SafeAI@ AAAI.

- Tolomei et al. (2017) Gabriele Tolomei, Fabrizio Silvestri, Andrew Haines, and Mounia Lalmas. 2017. Interpretable predictions of tree-based ensembles via actionable feature tweaking. In Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining. 465–474.

- Upadhyay et al. (2021) Sohini Upadhyay, Shalmali Joshi, and Himabindu Lakkaraju. 2021. Towards robust and reliable algorithmic recourse. Advances in Neural Information Processing Systems 34 (2021), 16926–16937.

- Ustun et al. (2019) Berk Ustun, Alexander Spangher, and Yang Liu. 2019. Actionable recourse in linear classification. In Proceedings of the Conference on Fairness, Accountability, and Transparency. 10–19.

- Van Looveren and Klaise (2019) Arnaud Van Looveren and Janis Klaise. 2019. Interpretable counterfactual explanations guided by prototypes. arXiv preprint arXiv:1907.02584 (2019).

- Verma et al. (2020) Sahil Verma, John Dickerson, and Keegan Hines. 2020. Counterfactual Explanations for Machine Learning: A Review. arXiv preprint arXiv:2010.10596 (2020).

- Virgolin and Fracaros (2023) Marco Virgolin and Saverio Fracaros. 2023. On the Robustness of Sparse Counterfactual Explanations to Adverse Perturbations. Artif. Intell. 316, C (feb 2023), 30 pages. https://doi.org/10.1016/j.artint.2022.103840

- von Kügelgen et al. (2022) Julius von Kügelgen, Amir-Hossein Karimi, Umang Bhatt, Isabel Valera, Adrian Weller, and Bernhard Schölkopf. 2022. On the fairness of causal algorithmic recourse. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 36. 9584–9594.

- Wachter et al. (2017) Sandra Wachter, Brent Mittelstadt, and Chris Russell. 2017. Counterfactual Explanations without Opening the Black Box: Automated Decisions and the GDPR. arXiv:1711.00399 [cs.AI]

- Wang et al. (2021) Yongjie Wang, Qinxu Ding, Ke Wang, Yue Liu, Xingyu Wu, Jinglong Wang, Yong Liu, and Chunyan Miao. 2021. The Skyline of Counterfactual Explanations for Machine Learning Decision Models. Association for Computing Machinery, New York, NY, USA, 2030–2039. https://doi.org/10.1145/3459637.3482397