FLAVA: Find, Localize, Adjust and Verify to Annotate LiDAR-Based Point Clouds

Abstract

Recent years have witnessed the rapid progress of perception algorithms on top of LiDAR, a widely adopted sensor for autonomous driving systems. These LiDAR-based solutions are typically data hungry, requiring a large amount of data to be labeled for training and evaluation. However, annotating this kind of data is very challenging due to the sparsity and irregularity of point clouds and more complex interaction involved in this procedure. To tackle this problem, we propose FLAVA, a systematic approach to minimizing human interaction in the annotation process. Specifically, we divide the annotation pipeline into four parts: find, localize, adjust and verify. In addition, we carefully design the UI for different stages of the annotation procedure, thus keeping the annotators to focus on the aspects that are most important to each stage. Furthermore, our system also greatly reduces the amount of interaction by introducing a light-weight yet effective mechanism to propagate the annotation results. Experimental results show that our method can remarkably accelerate the procedure and improve the annotation quality.

keywords:

Point Cloud Annotation, LiDAR, Scene Understanding, Autonomous Driving1 Introduction

LiDAR is widely used in today’s autonomous driving systems. It can provide accurate spatial information of the 3D environment, and thus assist the scene understanding and decision-making process of the system. In recent years, a lot of perception algorithms using deep learning have emerged to handle this kind of data [26, 13, 16, 20, 27, 19], which are significantly superior to monocular and stereo approaches in application. The rapid progress of these algorithms is supported by several challenging benchmarks built on multiple open datasets [11, 4, 12, 17]. However, although a decent amount of data has been released, the actual product deployment still needs more data with accurate labels to feed the algorithms. The only publicly accessible tools for annotation like [28] are still very coarse, especially in terms of annotation accuracy, which limits the research progress in this field.

While there are many existing approaches to efficiently annotating RGB images [9, 5, 2, 15], not much work has focused on 3D annotation tasks due to their more complex cases (Figure 2). First of all, it is difficult to identify all the objects of interest correctly in the sparsely and irregularly distributed point cloud. Further, the operation complexity is relatively high considering the larger degree of freedom (DoF) in the procedure, such as the need for annotating height and steering angle of objects, thus requiring customized UI design to achieve accurate annotation. Finally, there exists sequential correlation between consecutive frames, which can be leveraged to reduce the operations of annotators. A few recent works [18, 14, 25] noticed these problems, but they mainly used some algorithm-assisted semi-automatic ways to improve the efficiency of annotation rather than focused on the human-computer interaction in this process. Actually, these algorithms are not much efficient and convenient in practical use considering the equipment provided to annotators. Most of them need GPUs to train models and are not able to run smoothly on an ordinary laptop.

In this work, we target on the human-computer interaction in the process of 3D annotation, especially the annotation used for detection and tracking. We aim at tackling two difficulties in this process from the perspective of annotators: the difficulty of identifying objects correctly in the global scene at the beginning and the difficulty of accurately labeling objects after primarily localizing them. Specifically, we propose FLAVA, a systematic annotation pipeline to minimize the annotator’s operations, which can be divided into four steps: find, localize, adjust and verify. As shown in Figure 3, to label a 3D bounding box, we find the targets in a top-down way at first and then localize it primarily in the top view, where the first difficulty is needed to be tackled. Subsequently, after the height is automatically computed, we adjust the box on the projected view of the local point cloud in a bottom-up way to solve the second problem. Finally, the semantic information of the RGB image and the perspective view of the point cloud can be combined to verify the results.

Apart from the whole constructive pipeline, we also design a UI tailored to these four stages (Figure 1 and 6). The UI has several appealing functions, such as various zoomable views for multimodal data, highlight of local point clouds and length specification, which keep the annotators focusing on the most important tasks at each stage and thus ensure the accuracy of annotated results. Furthermore, we introduce a mechanism to propagate the annotated results between objects and consecutive frames. With this mechanism, most 3D annotation cases can be basically simplified as concise operations of 2D boxes in the top view, which significantly reduces unnecessary repeated operations.

We evaluated the proposed annotation method with several sequences collected from KITTI raw data. Compared with our baseline, it can not only accelerate the annotation speed by 2.5 times, but also further improve the quality of the labels, as measured by 27.50% high 3D average precision, and 9.88% high bounding box IoU.

Our contributions of this work are summarized as follows:

-

•

We start from the human habit of understanding a scene, and propose a systematic annotation pipeline, namely FLAVA, to tackle the two key problems in 3D annotation tasks, identifying objects correctly and annotating them accurately.

-

•

We designed a clear UI and annotation transfer mechanism according to the characteristics of data and tasks, which makes it more convenient for annotators to concentrate on much simpler work at each stage and accomplish it with fewer operations.

-

•

We tested the proposed annotation method on the KITTI dataset, and proved its remarkable effect on the efficiency and quality of labeling. Detailed ablation studies reveal the significance of different functions on this issue.

2 Related Work

2.1 LiDAR-based benchmarks

In recent years, LiDAR has been widely used in various autonomous driving systems. In order to promote the development of this field, many open datasets have been released. Several benchmarks of various tasks are set up on top of them, including 3D object detection, 3D object tracking and point cloud semantic segmentation. One of the pioneers in this aspect is the KITTI dataset [11], which has about 15000 frames of data in 22 scenes for training and testing, including about 200K 3D boxes. Afterwards, two large-scale datasets named nuScenes [4] and Lyft [12] were released. They shared similar data formats and provided point cloud data of consecutive frames. NuScenes dataset is split in 700/150/150 scenes for training/validation/testing respectively. There are overall 1.4M annotated 3D boxes, far more than KITTI’s 200K boxes. Lyft dataset has 180 and 218 scenes for training and testing. Both of them have more data, more object categories and richer scenes. Recently, Waymo open dataset [17] has been released and it is currently the largest dataset along them. In addition, it is worth noting that Waymo uses a mid-range lidar and four short-range lidars, which are different from the 64-line velodyne used by KITTI and the 32-line velodyne used by nuScenes and Lyft.

On the basis of these open datasets, many algorithms have emerged to solve these 3D tasks, such as [6, 26, 13, 16, 27, 19] for 3D detections and [20, 7] for 3D tracking, However, despite these open datasets, the actual product adoption still needs more data support to ensure the stability and security of algorithms. Moreover, when the configuration of the lidar changes, for example, the location is different or the number of lines is different, the model needs new data for training and tuning. All of these show that an efficient annotation method is still an important demand in this field.

2.2 Annotation tools

As data plays an increasingly important role in various fields of computer vision, assisted labeling has gained great popularity. For images and videos annotation, VIA [9] proposed a simple and standalone annotation tool for image, audio and video. Polygon-RNN [5, 2] trained a recurrent CNN to inference the polygonal mask on the image to assist the annotation for semantic segmentation. Curve-GCN [15] further improved the efficiency of generating polygon vertices and achieved real-time interaction. For semi-automatic annotation tailored to autonomous driving applications, BDD100K [23] proposed a set of tools to annotate bounding boxes and semantic masks on RGB images. It also leveraged pretrained detectors to accelerate the annotation for 2D detection. Few works focused on the annotation in LiDAR-based point clouds. [14] presented a method to generate ground truths via selecting spatial seeds assisted by pretrained networks. [10] utilized active learning to train 3D detectors while minimizing human annotation efforts. [25] proposed to autolabel 3D objects from pretrained off-the-shelf 2D detectors and sparse LiDAR data. LATTE [18] used sensor fusion, one-click annotation and tracking to assist point cloud annotation in the bird view. However, although there exist these works investigating how to accelerate this process, most of them tried to use algorithms to achieve it instead of diving into the details of 3D interactions. Furthermore, most of them are not much efficient and practical regarding the equipment deployed to the annotators.

| Method | Data | Task | Characteristics |

|---|---|---|---|

| VIA [9] | Image, audio and video | Multi-task | Simple and standalone |

| Polygon-RNN [5, 2] | Image | Semantic segmentation | Recurrent CNN, polygonal mask |

| Curve-GCN [15] | Image | Semantic segmentation | GCN, predict vertices simultaneously |

| BDD 100K [23] | Image and video | Multi-task | 2D pretrained detectors, the largest dataset |

| GT Generation [14] | Point Cloud | 3D detection | 3D pretrained detectors |

| LiDAR Active Learning [10] | Point Cloud | 3D detection | Active learning |

| Autolabeling [25] | Point Cloud | 3D detection | Signed distance fields (SDF) |

| LATTE [18] | Point Cloud | BEV detection | Mark-RCNN, Clustering, Kalman filter |

2.3 Data generation from LiDAR simulation

Because the annotation of point clouds is challenging and time-consuming, many research efforts aim at building simulation environment to obtain enough data for training neural networks. [24] proposed a framework to produce point clouds with accurate point-level labels on top of a computer game named GTA-V. This kind of simulated data can be combined with the data from the real world to feed algorithms ([21, 22, 24]). CARLA [8] and AutonoVi-Sim [3] also tried to simulate the LiDAR point cloud data from the virtual world. However, their primary target is to provide a platform for testing algorithms of learning and control for autonomous vehicles instead of augmenting specific LiDAR data. Furthermore, due to the difference of spatial distribution between the simulated and real data, the model trained with these platforms performs poorly on the real-world data. Although some researchers have made great progress in this domain adaptation problem, the gap was just reduced but not closed. Therefore, an efficient annotation pipeline used to collect data from the real world is still a critical need.

3 Methodology

Overview Object detection and tracking in LiDAR-based point clouds are very important tasks for the 3D perception system of autonomous driving. Current algorithms need to be trained and tested with manually labeled data to accomplish these tasks. Specifically, in this type of annotation task, the annotator needs to correctly identify the object to be detected in the sparse point cloud first and then accurately label its position, size, orientation, category, and so on. Achieving both of them efficiently is not trivial due to the complex interaction involved in the procedure. Our FLAVA is a systematic approach to addressing this issue. In this section, we will elaborate the four steps as well as the UI designs involved in the annotation pipeline (Figure 3 and 6), where the first two steps aim at identifying and localizing the objects primarily in a global view, the third step is to annotate accurately, and the final step is to ensure all the annotations are confident enough. Finally, we will present the annotation transfer mechanism used in our system that can greatly reduce unnecessary interactions.

3.1 Find

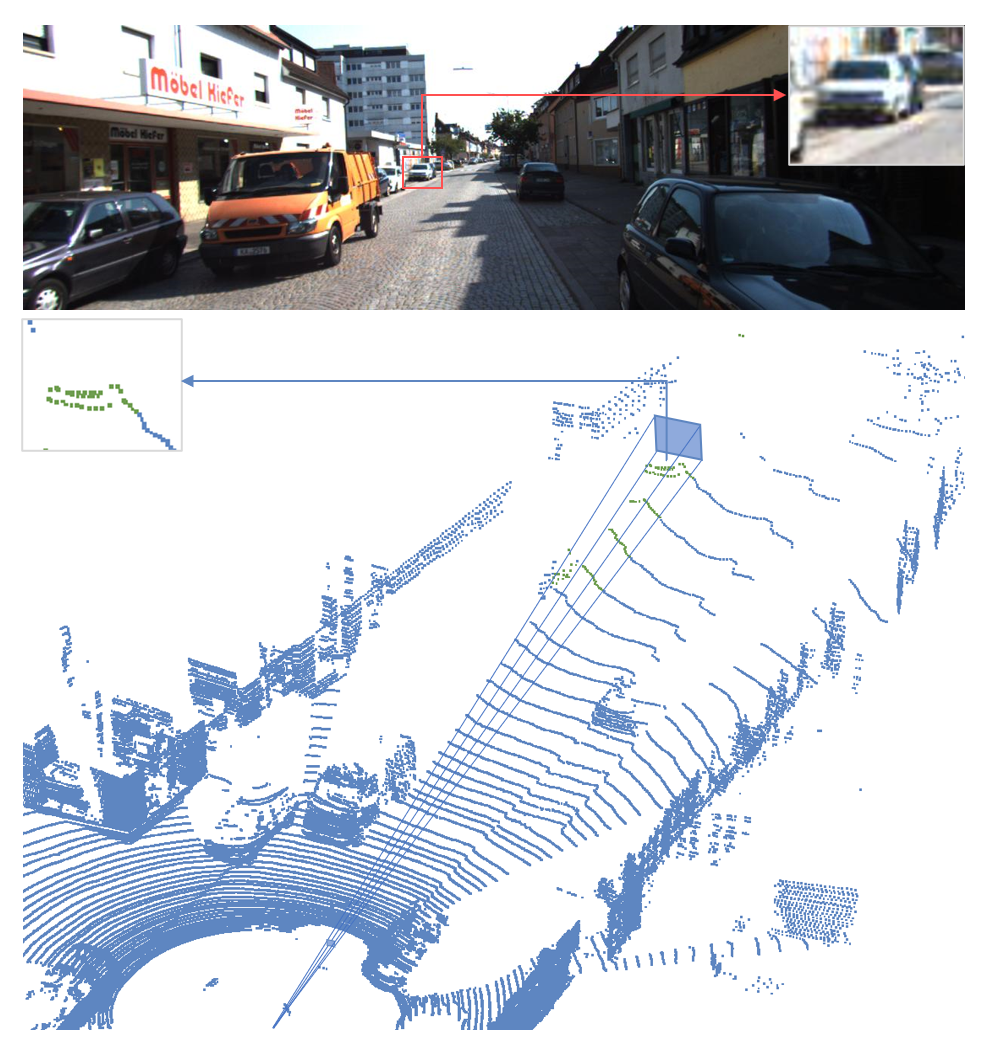

To begin with, we need to find target objects from the entire scene. Point clouds can accurately reflect the 3D physical environment in the real world; while RGB images can provide semantic information for human analysis. How to combine these two modes of data is a key problem. For the point cloud, apart from its perspective view, considering the particularity of the scenario, the objects we need to detect are basically on the ground, so the bird view of the point cloud is also a good starting global view for labeling, which can avoid the occlusion problem between objects that may exist in RGB images. For nearby and large objects, we can easily find them based on these two views of the point cloud, such as the object 3 in the Figure 6. For distant and small objects, it is difficult to identify them directly in the point cloud, and the semantic information of RGB images is needed. As the Figure 4 shows, the object of interest may have only a few points obtained from LiDAR, but it can be found directly in the image. Therefore, we can leverage the corresponding frustum proposal 111The frustum proposal refers to the 3D search space lifted from a 2D bounding box in the image with near and far planes specified by depth sensor range. in 3D space to find the object primarily.

Specifically, we first find the approximate position in the RGB image, and further identify which points are relevant by highlighting those within the generated frustum proposal and estimating its distance in the 3D environment (Figure 3(a)(b) and Figure 4). In the process, we need to use the projection transformation from point cloud to image when constructing the frustum proposal:

| (1) |

where is a 3D point in the velodyne coordinate system, is the projected coordinate in the camera image, is the projection matrix after rectification corresponding to the i-th camera, is the rectifying rotation matrix (expanded by appending a fourth zero-row and column, and setting ), is the rigid body transformation from velodyne coordinates to camera coordinates. After projected onto the image, the points falling into the 2D box in the RGB image will be highlighted for our reference, as shown in Figure 3(a) and Figure 4. With explicitly marking the relevant points, we can basically identify which points belong to the object of our interest by combining the RGB image and the contextual information in the nearby region.

3.2 Localize

Once we have found the object of interest, what we need to do subsequently is to localize it. Primarily "finding" and "localizing" objects share similar characteristics in terms of visual perception. They both aim to correctly identify the targets from a global environment, and thus top-down methods should be more effective. Therefore, we still mainly focus on the bird view of the entire scene, supplemented by the perspective view. In terms of UI design, considering the large scope of the global scene and the importance of point cloud data, we also give it the largest area to display (Figure 6). We divide the whole process into three parts: drawing bounding boxes in the bird view, adjusting their position and orientation, and finally generating height information automatically.

As is shown in the Figure 5, we find the object of our interest at first, draw the bounding box in the top view, and then adjust its position and orientation by shifting and rotating without changing its size. As mentioned later, this will be the most core and simple operation throughout our annotation process, especially when the size and height of the box are initially determined. Note that after we draw the box, the front view and side view of the local point cloud will be updated. The orientation in the side view is very useful when determining whether we have annotated a correct orientation. Regarding the side view derived here is observed from the right side, the object facing right indicates our correct annotation (Figure 3(c)).

Finally, the height and 3D center of the box are automatically generated based on the highest and lowest points within the 2D box in the top view. The box we get here is an incompletely accurate one that tightly covers the point cloud vertically. For example, when a point cloud is swept only over the top half of an object, the position of the box we get may be skewed; when a point cloud is scanned more fully, the points on the ground or some noises may get involved (Figure 7). Therefore, in order to get a more accurate labeling result, we need to finetune the size and position of the box next.

3.3 Adjust

Unlike the previous two steps, when adjusting the box, the analysis of local saliency is more important, which means that it would be better to be done in a bottom-up way. Here, we use the front view and side view of the local point cloud as the main data formats for our operations. As shown in Figure 7, this design is particularly important when distant objects need to be labeled. On the one hand, labeling distant objects are constrained by the 3D interactive environment, which makes it difficult to zoom in and observe them carefully; on the other hand, operating directly in the environment to annotate height information can also result in inadequate flexibility and accuracy. Instead, considering the incompleteness of the scanning and the symmetry of the object outline, the front view and side view of a local point cloud can best help the annotator to imagine the general shape of the object and pay attention to more details such as whether the points on the boundary are involved in, so that the annotator can draw a more accurate box. Note that by borrowing the idea of anchor from detection algorithms222We usually set a reference box, namely anchor, for each category of objects to simplify the inference of box size in the detection algorithms., here we specify the length of each edge of the box in the projected views, which can make it convenient for annotators to compare their annotation with the reference box size and approximate the complete bounding box more reasonably.

To be more specific for the implementation, when finetuning boxes in the front view and side view of a local point cloud, we need to map the adjustment in the 2D view to the 3D box. Taking the case of front view as an example (Figure 9), we split the adjustments in the 2D view into two orthogonal directions and transform the 3D box accordingly. For height adjustment, there is no particular coordinate transformation. For the operation in the horizontal direction, we first turn the box back to the 0° orientation, adjust its vertices coordinates, find a new center, and then rotate it back to the original orientation. Note that this example can be extended to any possible cases like resizing in other ways or shifting the box. Extension to the case in the side view is also straightforward, where we just need to simply apply the changes on the width to the length.

3.4 Verify

After adjusting, we need to verify the annotated box at the end. At this time, we can make full use of all kinds of modal data besides the projected views of the local point cloud for validation, including the various stereo perspectives of the 3D point cloud and RGB images. In this process, various zoomable views, highlight of local point clouds and length specification in the UI design are all important details to assist the annotator to verify (Figure 6).

For the point cloud, we can switch to the perspective view for observation, especially when the point cloud is sparse, we need to further confirm whether the imaginary height of the box is reasonable in the global view. In addition, the projected view of the local point cloud can be used to further confirm whether the boundary and orientation of the labeled object are correct. For the RGB image, we use Eqn. 1 to project eight vertices of the bounding box into the image, and verify the correctness of annotation with semantic information.

After the verification of various perspectives, if we need to adjust the position, orientation and size of the object, considering that the height adjustment in the third step has been very accurate, we specially fix the height information of the object (including the height of the box and its center). This detail will also be covered in the later part, in order to reduce unnecessary repeated operations in height adjustment and improve the stability of height annotation.

3.5 Annotation Transfer

The previous four parts describe the labeling process for a single object or a single frame. In this section, we will describe the most important detail used throughout the labeling procedure, namely annotation transfer. Given the operation complexity of labeling an object, how to rationally use the labeled ground truths to reduce the number of operations is a very important issue. Here we mainly use two kinds of annotation transfer, called inter-object transfer and inter-frame transfer.

First, since objects on the same lane, like car, van and cyclist, usually share similar orientation and height, inter-object transfer can significantly reduce the rotation and height adjustment of such boxes, while also making labeling more reasonable in the regions with sparse point clouds.

As for the inter-frame annotation transfer, when labeling consecutive frames, there are usually only slight shifts and deflections between the annotations of these frames, so the operations involved in height adjustment can be greatly reduced by passing labels. Through this kind of transfer, we can avoid the situation of missing labels due to the sparse local point cloud of individual frames as much as possible. Furthermore, we can achieve a one-to-one correspondence between the annotations of consecutive frames, which enables our labels to be used for both 3D object detection and 3D object tracking.

When implementing the transfer, we just copy and paste the labels to minimize the computational overhead of this function. Actually, real-time hand-crafted algorithms hardly avoid other necessary operations like shifting and resizing in the bird view, but usually introduce additional costs. Table 2 compares the number of basic operations that will be involved in the case with and without the assistance of annotation transfer, and it can be seen that annotation transfer can significantly reduce the number of operations required in step 3, especially the fine-tuning of height. Therefore, the more consecutive frames a sequence contains, the more objects there are in the same lane in a frame, the more efficient the labeling will be.

4 Evaluation

In this section, we will present our evaluation details, including the experimental setup, adopted metrics, and experimental results.

| Operation | w/o transfer | w/ transfer |

|---|---|---|

| Locating | Only shift | |

| Rotating | Little | |

| Adjusting box size (BV) | Little | |

| Adjusting height | Little |

| Multimodal | Inter-object transfer | Inter-frame transfer | Time (s) | BEV IoU (%) | 3D IoU (%) |

| 31.10 | 79.30 | 73.85 | |||

| 25.59 | 85.48 | 81.98 | |||

| 21.90 | 86.62 | 83.26 | |||

| 12.37 | 87.35 | 83.73 |

| Multimodal | Inter-object transfer | Inter-frame transfer | BEV AP (0.7) | BEV AP (0.5) | 3D AP (0.7) | 3D AP (0.5) |

| 71.43 | 99.62 | 59.74 | 89.48 | |||

| 88.49 | 99.75 | 86.33 | 90.51 | |||

| 87.97 | 99.75 | 86.89 | 89.62 | |||

| 89.17 | 99.92 | 87.24 | 99.43 |

4.1 Experimental Setup

Although we can intuitively feel that our method improves the efficiency and accuracy of this annotation task, we still tried to test the productivity advances quantitatively and precisely. In each group of experiments, we assigned the randomly selected data from KITTI raw data to the same number of volunteers, and compared the accuracy and efficiency of their annotation. The KITTI dataset provides data from consecutive frames in different scenes, including RGB images, GPS/IMU data, 3D object tracklet labels and calibration data. These data cover six categories of scenes, including city, residential, road, campus, etc., and eight categories of objects. We randomly selected six sequences of different scenes, and five consecutive frames of data from each sequence as our test data. This test benchmark contains a total amount of 374 instances. More detailed analysis of data distribution is shown in Figure 8.

We set up four experimental groups. First of all, we added the function of annotating 3D bounding boxes on top of the open-source tool [18], which is a point cloud annotation tool only for the 2D annotation on the bird view. With our supplemented functions, annotators can use this tool to adjust the top and bottom of boxes, and thus we take it as the baseline of our experiments. This method can realize the most basic functions of 3D annotation, but due to the lack of effective organization of multimodal data and full use of data characteristics, it cannot fully realize the complete idea of FLAVA. On this basis, we added various functions of multimodal data, inter-object annotation transfer and inter-frame annotation transfer in turn, as the other three experimental groups, to test the contribution of each function to annotation efficiency and accuracy. The functions of using multimodal data include finding and primarily localizing objects by the RGB image, adjusting and verifying the annotated box by the projected view of local point clouds, and finally verifying annotation results by the RGB image.

We invited the same number of different volunteers to label in each experimental group, to ensure that everyone only used the corresponding features to label, and would not get benefit from improved familiarity and proficiency of annotating these data. Volunteers were asked to only annotate the instances for which they felt confident. For instance, for very distant objects, like cars farther than 70 meters away, because the points that can be obtained from LiDAR are very sparse, they will not be labeled. This reduces the uncertainty of the comparison of results that may be produced due to unreasonable samples. We only verify the instances with corresponding ground truths when evaluating. Specifically, we only evaluate the accuracy of annotated boxes that can intersect with a ground truth.

4.2 Metrics

When evaluating the quality of annotation quantitatively, we used different metrics to test the efficiency and accuracy of annotation. For the efficiency of annotation, on the one hand, according to Table 2, we can have a qualitative sense of the operation complexity involved in the annotation process; on the other hand, we used the average time spent when annotating each instance as the metrics to measure the efficiency in practical use.

For the evaluation of accuracy, first of all, we need to note that considering that KITTI’s annotation does not include all instances in a scene, especially the objects behind the drive, we referred to the method of [18], asked an expert annotator to provide high-quality annotation as the ground truth of the given test data. We used two metrics to evaluate the accuracy, which are commonly used in 3D object detection: intersection over union (IoU) and average precision (AP). Among them, IoU is only calculated when the object is labeled and has a ground truth at the same time, which is different from average precision. IoU can effectively evaluate the average accuracy of labels that are relatively correct, while average precision can evaluate whether the annotation can identify those objects of interest correctly. When computing average precision, we set two kinds of difficulties. In the relatively strict case, we take the label with IoU greater than 0.7 for car and van while 0.5 for pedestrian and cyclist as a true positive; and the relatively easy standard is that the label with IoU greater than 0.5 for car and van while 0.25 for pedestrian and cyclist can be regarded as a true positive. We also calculated the IoU and AP of 2D boxes in the bird view in addition to 3D boxes, which can help us to analyze the effect of different functions on the most difficult part in this annotation task — height annotation.

4.3 Results

Quantitative Analysis Since there is no open source tool with similar functions, we supplemented the functions of [18] so that it can have theoretically complete functions in 3D annotation. We regard it as the baseline of FLAVA. On this basis, we add functions in turn, so that the whole process and functions gradually approach our method. It can be seen from Table 3 and 4 that although it takes the longest time, 31.1s, to annotate each instance in the baseline, its label quality of both 3D and bird-view 2D boxes is poorest under multiple metrics of IoU and average precision.

Subsequently, we firstly organize multimodal data effectively, and we can see that not only the average time used to annotate each instance is reduced by about 6s, but also the IoU and average precision are significantly improved. Moreover, it can be seen that since our height adjustment is mainly implemented in the projected view of the local point cloud, the performance improvement of 3D boxes is much greater than that of 2D boxes in the bird view.

Then we add inter-object transfer and inter-frame transfer, which further improve the accuracy and efficiency of annotation. In particular, introducing inter-frame transfer almost doubles the efficiency of annotation and shows a 2.5 times speed-up compared with the baseline. Note that this improvement is achieved on our specific test benchmark, where a sequence only consists of 5 consecutive frames. It is conceivable that the more frames a sequence contains, the greater this improvement will be. Furthermore, annotation transfer also makes the height annotation more stable and accurate. It can be seen that 99.75% of AP(0.5) in the bird view of the 2nd group of experiments is not much different from 99.92% of the 4th group, but 90.51% is much lower than 99.43% in terms of the 3D AP. Similar improvements brought by annotation transfer can also be reflected in other metrics results. Finally, compared to other public annotation tools, the accuracy outperforms [28] (about 20% 3D IoU) by a large margin and the user experience is considered to be smoother from all of our volunteers’ feedback.

Qualitative Analysis To have a more intuitive understanding of the improved label quality, we show some examples to compare the annotations from the baseline and our proposed method (Figure 10). Firstly, it can be seen that from the bird view, there exist some slight but noticeable differences when annotating the front and the back of cars. In the left example, there are some noises behind the car, which are not clear from the bird view. However, our adjustment in the side view can help a lot. Similarly, the bottom of the car in the right example adjusted from the side view is more accurate than that adjusted from the perspective view. Furthermore, due to the annotation transfer adopted in our method, the front of the car is consistent with the more confident annotation in previous frames, which is also more accurate.

In a word, from both quantitative and qualitative results, it can be seen that the performance of baseline based on 3D interaction can be greatly improved by leveraging the multimodal data due to its contribution to the better identification of distant objects and the more accurate annotation of box boundaries. The introduction of annotation transfer fully utilizes the specific characteristics of data. It further improves the efficiency and accuracy of annotation, making the whole annotation procedure more constructive and flexible. An example of our annotation results is shown in Figure 11. See more examples of our annotation process and results in the demo video.

5 Discussion

From the previous discussion and evaluation, it is evident that all the annotated operations and verification should not be performed only on a single modal data. We need to give full consideration to what kind of data is more appropriate for the operation of annotator and what kind of data can highlight the saliency of our interest. A constructive pipeline and the effective organization of multimodal data can greatly improve the efficiency and accuracy of annotation. At the same time, novel algorithms are sometimes not very practical regarding the equipment given to the annotators in application. Instead, combining various simple but efficient techniques may be more effective in improving the user experience of annotators.

Although our FLAVA solves some basic problems in labeling point cloud, there are still challenges in application. First, labeling point cloud is a relatively skilled work. In the actual annotation process, many annotators have received professional training and long-term practice to further improve their efficiency and proficiency. Therefore, it is interesting if there is a way to use our annotation tools to train them pertinently. Maybe it can achieve unexpected results while reducing the training workload. Similarly, we can also use active learning to improve the performance of related algorithms efficiently through the interactions between annotators and tools. These are some possibilities that can be mined in this interaction procedure.

In addition, there are some other engineering problems in application. For example, when the number of points becomes larger, whether it will affect the performance of our annotation tool. The test result is that for the current web-based tool, about 100 thousand of point cloud data can be imported quickly enough. About 1000 thousand of point cloud data takes nearly half a minute to import without affecting the interactive process of annotation. When the resolution of the input point cloud becomes further higher, the time of importing data and the fluency of operation may also become important factors restricting the tool. Another engineering problem is the synchronization of different modal data. Sometimes the image and point cloud data cannot be fully synchronized. How to solve the impact of this deviation on the annotation process is also worth further exploration. Finally, although we propose a systematic annotation process for the task of 3D object detection and tracking, there still exist new difficulties in other annotation tasks like point cloud semantic segmentation, which may also need specific designs tailored to those tasks.

In the process of annotation, we also try to get the inspiration for the current 3D detection algorithms. For example, human beings usually verify the annotation results in RGB images, which has not been well modeled and applied in the detection algorithms. On the other hand, human annotation quality may be regarded as an important goal and performance bottleneck of LiDAR-based object detection algorithms. The current state-of-the-art methods can achieve about 80% of 3D AP (0.7) without considering the efficiency of the algorithm when detecting cars, while our annotation can achieve about 90%. Therefore, the gap between current algorithms and human’s ability can be estimated roughly. How to further reduce this gap is a problem that researchers need to consider at present. At the same time, when the gap is closed, it may also indicate that the point cloud data has been utilized to the greatest extent, and further considering the combination with other data and control algorithms may be a more important task.

6 Conclusion

In this paper, we propose FLAVA, a systematic annotation method to minimize human interaction when annotating LiDAR-based point clouds. It aims at helping annotators solve two key problems, identifying the objects of interest correctly and annotating them accurately. We carefully design a UI tailored to this pipeline and introduce annotation transfer regarding the specific characteristics of data and tasks, which make annotators be able to focus on simpler tasks at each stage and accomplish it with fewer interactions. Detailed ablation studies demonstrate that this annotation approach can effectively reduce unnecessary repeated operations, and significantly improve the efficiency and quality of annotation. At last, we discuss the various thinking and possibilities of the extension of this annotation task. Future work includes designing annotation tools for other tasks upon LiDAR-based point clouds and improving related algorithms based on human’s annotation procedure.

References

- [1]

- [2] David Acuna, Huan Ling, Amlan Kar, and Sanja Fidler. 2018. Efficient Interactive Annotation of Segmentation Datasets with Polygon-RNN++. In IEEE Conference on Computer Vision and Pattern Recognition.

- [3] Andrew Best, Sahil Narang, Lucas Pasqualin, Daniel Barber, and Dinesh Manocha. 2018. AutonoVi-Sim: Autonomous Vehicle Simulation Platform with Weather, Sensing, and Traffic Control. In IEEE Conference on Computer Vision and Pattern Recognition Workshops.

- [4] Holger Caesar, Varun Bankiti, Alex H. Lang, Sourabh Vora, Venice Erin Liong, Qiang Xu, Anush Krishnan, Yu Pan, Giancarlo Baldan, and Oscar Beijbom. 2019. nuScenes: A multimodal dataset for autonomous driving. CoRR abs/1903.11027 (2019). http://arxiv.org/abs/1903.11027

- [5] Lluis Castrejon, Kaustav Kundu, Raquel Urtasun, and Sanja Fidler. 2017. Annotating Object Instances with a Polygon-RNN. In IEEE Conference on Computer Vision and Pattern Recognition.

- [6] Xiaozhi Chen, Huimin Ma, Ji Wan, Bo Li, and Tian Xia. 2017. Multi-View 3D Object Detection Network for Autonomous Driving. In IEEE Conference on Computer Vision and Pattern Recognition.

- [7] Hsu-kuang Chiu, Antonio Prioletti, Jie Li, and Jeannette Bohg. 2020. Probabilistic 3D Multi-Object Tracking for Autonomous Driving. arXiv preprint arXiv:2020 (2020).

- [8] Alexey Dosovitskiy, German Ros, Felipe Codevilla, Antonio Lopez, and Vladlen Koltun. 2017. CARLA: An Open Urban Driving Simulator. In Annual Conference on Robot Learning.

- [9] Abhishek Dutta, Ankush Gupta, and Andrew Zisserman. 2016. VGG Image Annotator (VIA). http://www.robots.ox.ac.uk/~vgg/software/via/. (2016).

- [10] Di Feng, Xiao Wei, Lars Rosenbaum, Atsuto Maki, and Klaus Dietmayer. 2019. Deep Active Learning for Efficient Training of a LiDAR 3D Object Detector. In 30th IEEE Intelligent Vehicles Symposium.

- [11] Andreas Geiger, Philip Lenz, and Raquel Urtasun. 2012. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In IEEE Conference on Computer Vision and Pattern Recognition.

- [12] R. Kesten, M. Usman, J. Houston, T. Pandya, K. Nadhamuni, A. Ferreira, M. Yuan, B. Low, A. Jain, P. Ondruska, S. Omari, S. Shah, A. Kulkarni, A. Kazakova, C. Tao, L. Platinsky, W. Jiang, and V. Shet. 2019. Lyft Level 5 AV Dataset 2019. https://level5.lyft.com/dataset/. (2019).

- [13] Alex H. Lang, Sourabh Vora, Holger Caesar, Lubing Zhou, Jiong Yang, and Oscar Beijbom. 2019. PointPillars: Fast Encoders for Object Detection from Point Clouds. In IEEE Conference on Computer Vision and Pattern Recognition.

- [14] Jungwook Lee, Sean Walsh, Ali Harakeh, and Steven L. Waslander. 2018. Leveraging Pre-Trained 3D Object Detection Models For Fast Ground Truth Generation. In IEEE International Conference on Intelligent Transportation Systems.

- [15] Huan Ling, Jun Gao, Amlan Kar, Wenzheng Chen, and Sanja Fidler. 2019. Fast Interactive Object Annotation with Curve-GCN. In IEEE Conference on Computer Vision and Pattern Recognition.

- [16] Shaoshuai Shi, Xiaogang Wang, and Hongsheng Li. 2019. PointRCNN: 3D Object Proposal Generation and Detection from Point Cloud. In IEEE Conference on Computer Vision and Pattern Recognition.

- [17] Pei Sun, Henrik Kretzschmar, Xerxes Dotiwalla, Aurelien Chouard, Vijaysai Patnaik, Paul Tsui, James Guo, Yin Zhou, Yuning Chai, Benjamin Caine, Vijay Vasudevan, Wei Han, Jiquan Ngiam, Hang Zhao, Aleksei Timofeev, Scott Ettinger, Maxim Krivokon, Amy Gao, Aditya Joshi, Sheng Zhao, Shuyang Cheng, Yu Zhang, Jon Shlens, Zhifeng Chen, and Dragomir Anguelov. 2019. Scalability in Perception for Autonomous Driving: Waymo Open Dataset. arXiv preprint arXiv:2019 (2019).

- [18] Bernie Wang, Virginia Wu, Bichen Wu, and Kurt Keutzer. 2019. LATTE: Accelerating LiDAR Point Cloud Annotation via Sensor Fusion, One-Click Annotation, and Tracking. In IEEE International Conference on Intelligent Transportation Systems.

- [19] Tai Wang, Xinge Zhu, and Dahua Lin. 2020. Reconfigurable Voxels: A New Representation for LiDAR-Based Point Clouds. In Conference on Robot Learning.

- [20] Xinshuo Weng and Kris Kitani. 2019. A Baseline for 3D Multi-Object Tracking. arXiv preprint arXiv:2019 (2019).

- [21] Bichen Wu, Alvin Wan, Xiangyu Yue, and Kurt Keutzer. 2018. SqueezeSeg: Convolutional Neural Nets with Recurrent CRF for Real-Time Road-Object Segmentation from 3D LiDAR Point Cloud. In IEEE International Conference on Robotics and Automation.

- [22] Bichen Wu, Xuanyu Zhou, Sicheng Zhao, Xiangyu Yue, and Kurt Keutzer. 2019. SqueezeSegV2: Improved Model Structure and Unsupervised Domain Adaptation for Road-Object Segmentation from a LiDAR Point Cloud. In IEEE International Conference on Robotics and Automation.

- [23] Fisher Yu, Haofeng Chen, Xin Wang, Wenqi Xian, Yingying Chen, Fangchen Liu, Vashisht Madhavan, and Trevor Darrell. 2020. BDD100K: A Diverse Driving Dataset for Heterogeneous Multitask Learning. In IEEE Conference on Computer Vision and Pattern Recognition.

- [24] Xiangyu Yue, Bichen Wu, Sanjit A. Seshia, Kurt Keutzer, and Alberto L. Sangiovanni-Vincentelli. 2018. A LiDAR Point Cloud Generator: from a Virtual World to Autonomous Driving. In International Conference on Multimedia Retrieval. ACM.

- [25] Sergey Zakharov, Wadim Kehl, Arjun Bhargava, and Adrien Gaidon. 2020. Autolabeling 3D Objects with Differentiable Rendering of SDF Shape Priors. In IEEE Conference on Computer Vision and Pattern Recognition.

- [26] Yin Zhou and Oncel Tuzel. 2018. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In IEEE Conference on Computer Vision and Pattern Recognition.

- [27] Xinge Zhu, Yuexin Ma, Tai Wang, Yan Xu, Jianping Shi, and Dahua Lin. 2020. SSN: Shape Signature Networks for Multi-class Object Detection from Point Clouds. In Proceedings of the European Conference on Computer Vision.

- [28] Walter Zimmer, Akshay Rangesh, and Mohan Trivedi. 2019. 3D BAT: A Semi-Automatic, Web-based 3D Annotation Toolbox for Full-Surround, Multi-Modal Data Streams. In 30th IEEE Intelligent Vehicles Symposium.