Fixed-Budget Best-Arm Identification with Heterogeneous Reward Variances

Abstract

We study the problem of best-arm identification (BAI) in the fixed-budget setting with heterogeneous reward variances. We propose two variance-adaptive BAI algorithms for this setting: for known reward variances and for unknown reward variances. The key idea in our algorithms is to adaptively allocate more budget to arms with higher reward variances. The main algorithmic novelty is in the design of , which allocates budget greedily based on overestimating unknown reward variances. We bound the probabilities of misidentifying best arms in both and . Our analyses rely on novel lower bounds on the number of arm pulls in BAI that do not require closed-form solutions to the budget allocation problem. One of our budget allocation problems is equivalent to the optimal experiment design with unknown variances and thus of a broad interest. We also evaluate our algorithms on synthetic and real-world problems. In most settings, and outperform all prior algorithms.

1 Introduction

The problem of best-arm identification (BAI) in the fixed-budget setting is a pure exploration bandit problem which can be briefly described as follows. An agent interacts with a stochastic multi-armed bandit with arms and its goal is to identify the arm with the highest mean reward within a fixed budget of arm pulls [Bubeck et al., 2009, Audibert et al., 2010]. This problem arises naturally in many applications in practice, such as online advertising, recommender systems, and vaccine tests [Lattimore and Szepesvari, 2019]. It is also common in applications where observations are costly, such as Bayesian optimization [Krause et al., 2008]. Another commonly studied setting is fixed-confidence BAI [Even-Dar et al., 2006, Soare et al., 2014]. Here the goal is to identify the best arm within a prescribed confidence level while minimizing the budget. Some works also studied both settings [Gabillon et al., 2012, Karnin et al., 2013, Kaufmann et al., 2016].

Our work can be motivated by the following example. Consider an A/B test where the goal is to identify a movie with the highest average user rating from a set of movies. This problem can be formulated as BAI by treating the movies as arms and user ratings as stochastic rewards. Some movies get either unanimously good or bad ratings, and thus their ratings have a low variance. Others get a wide range of ratings, because they are rated highly by their target audience and poorly by others; and hence their ratings have a high variance. For this setting, we can design better BAI policies that take the variance into account. Specifically, movies with low-variance ratings can be exposed to fewer users in the A/B test than movies with high-variance ratings.

An analogous synthetic example is presented in Figure 1. In this example, reward variances increase with mean arm rewards for a half of the arms, while the remaining arms have very low variances. The knowledge of the reward variances can be obviously used to reduce the number of pulls of arms with low-variance rewards. However, in practice, the reward variances are rarely known in advance, such as in our motivating A/B testing example, and this makes the design and analysis of variance-adaptive BAI algorithms challenging. We revisit these two examples in our empirical studies in Section 5.

We propose and analyze two variance-adaptive BAI algorithms: and . assumes that the reward variances are known and is a stepping stone for our fully-adaptive BAI algorithm , which estimates them. utilizes high-probability upper confidence bounds on the reward variances. Both algorithms are motivated by sequential halving () of Karnin et al. [2013], a near-optimal solution for fixed-budget BAI with homogeneous reward variances.

Our main contributions are:

-

•

We design two variance-adaptive algorithms for fixed-budget BAI: for known reward variances and for unknown reward variances. is only a third algorithm for this setting [Gabillon et al., 2011, Faella et al., 2020] and only a second that can be implemented as analyzed [Faella et al., 2020]. The key idea in is to solve a budget allocation problem with unknown reward variances by a greedy algorithm that overestimates them. This idea can be applied to other elimination algorithms in the cumulative regret setting [Auer and Ortner, 2010] and is of independent interest to the field of optimal experiment design [Pukelsheim, 1993].

-

•

We prove upper bounds on the probability of misidentifying the best arm for both and . The analysis of extends that of Karnin et al. [2013] to heterogeneous variances. The analysis of relies on a novel lower bound on the number of pulls of an arm that scales linearly with its unknown reward variance. This permits an analysis of sequential halving without requiring a closed form for the number of pulls of each arm.

-

•

We evaluate our methods empirically on Gaussian bandits and the MovieLens dataset [Lam and Herlocker, 2016]. In most settings, and outperform all prior algorithms.

2 Setting

We use the following notation. Random variables are capitalized, except for Greek letters like . For any positive integer , we define . The indicator function is denoted by . The -th entry of vector is . If the vector is already indexed, such as , we write . The big O notation up to logarithmic factors is .

We have a stochastic bandit with arms and denote the set of arms by . When the arm is pulled, its reward is drawn i.i.d. from its reward distribution. The reward distribution of arm is sub-Gaussian with mean and variance proxy . The best arm is the arm with the highest mean reward,

Without loss of generality, we make an assumption that the arms are ordered as . Therefore, arm is a unique best arm. The agent has a budget of observations and the goal is to identify as accurately as possible after pulling all arms times. Specifically, let denote the arm returned by the agent after pulls. Then our objective is to minimize the probability of misidentifying the best arm , which we also call a mistake probability. This setting is known as fixed-budget BAI [Bubeck et al., 2009, Audibert et al., 2010]. When observations are costly, it is natural to limit them by a fixed budget .

Another commonly studied setting is fixed-confidence BAI [Even-Dar et al., 2006, Soare et al., 2014]. Here the agent is given an upper bound on the mistake probability as an input and the goal is to attain at minimum budget . Some works also studied both the fixed-budget and fixed-confidence settings [Gabillon et al., 2012, Karnin et al., 2013, Kaufmann et al., 2016].

3 Algorithms

A near-optimal solution for fixed-budget BAI with homogeneous reward variances is sequential halving [Karnin et al., 2013]. The key idea is to sequentially eliminate suboptimal arms in stages. In each stage, all arms are pulled equally and the worst half of the arms are eliminated at the end of the stage. At the end of the last stage, only one arm remains and that arm is the estimated best arm.

The main algorithmic contribution of our work is that we generalize sequential halving of Karnin et al. [2013] to heterogeneous reward variances. All of our algorithms can be viewed as instances of a meta-algorithm (Algorithm 1), which we describe in detail next. Its inputs are a budget on the number of observations and base algorithm . The meta-algorithm has stages (line 2) and the budget is divided equally across the stages, with a per-stage budget (line 5). In stage , all remaining arms are pulled according to (lines 6–8). At the end of stage , the worst half of the remaining arms, as measured by their estimated mean rewards, is eliminated (lines 9–12). Here is the stochastic reward of arm in round of stage , is the pulled arm in round of stage , is the number of pulls of arm in stage , and is its mean reward estimate from all observations in stage .

The sequential halving of Karnin et al. [2013] is an instance of Algorithm 1 for . The pseudocode of , which pulls all arms in stage equally, is in Algorithm 2. We call the resulting algorithm . This algorithm misidentifies the best arm with probability [Karnin et al., 2013]

| (1) |

where

| (2) |

is a complexity parameter and is the suboptimality gap of arm . The bound in (1) decreases as budget increases and problem complexity decreases.

is near optimal only in the setting of homogeneous reward variances. In this work, we study the general setting where the reward variances of arms vary, potentially as extremely as in our motivating example in Figure 1. In this example, would face arms with both low and high variances in each stage. A variance-adaptive could adapt its budget allocation in each stage to the reward variances and thus eliminate suboptimal arms more effectively.

3.1 Known Heterogeneous Reward Variances

We start with the setting of known reward variances. Let

| (3) |

be a known reward variance of arm . Our proposed algorithm is an instance of Algorithm 1 for . The pseudocode of is in Algorithm 3. The key idea is to pull the arm with the highest variance of its mean reward estimate. The variance of the mean reward estimate of arm in round of stage is , where is the reward variance of arm and is the number of pulls of arm up to round of stage . We call the resulting algorithm .

Note that is an instance of . Specifically, when all for some , pulls all arms equally, as in . can be also viewed as pulling any arm in stage for

| (4) |

times. This is stated formally and proved below.

Lemma 1.

Fix stage and let the ideal number of pulls of arm be

Let all be integers. Then pulls arm in stage exactly times.

Proof.

First, suppose that pulls each arm exactly times. Then the variances of all mean reward estimates at the end of stage are identical, because

Now suppose that this is not true. This implies that there exists an over-pulled arm and an under-pulled arm such that

| (5) |

Since arm is over-pulled and is an integer, there must exist a round such that

Let be the last round where this equality holds, meaning that arm is pulled in round .

Now we combine the second inequality in (5) with , which holds by definition, and get

The last two sets of inequalities lead to a contradiction. On one hand, we know that arm is pulled in round . On the other hand, we have , which means that arm cannot be pulled. This completes the proof. ∎

Lemma 1 says that each arm is pulled times. Since the mean reward estimate of arm at the end of stage has variance , the variances of all estimates at the end of stage are identical, . This relates our problem to the G-optimal design [Pukelsheim, 1993]. Specifically, the -optimal design for independent experiments is an allocation of observations such that and the maximum variance

| (6) |

is minimized. This happens precisely when all are identical, when for in Lemma 1.

3.2 Unknown Heterogeneous Reward Variances

Our second proposal is an algorithm for unknown reward variances. One natural idea, which is expected to be practical but hard to analyze, is to replace in with its empirical estimate from the past rounds in stage ,

where

is the empirical mean reward of arm in round of stage . This design would be hard to analyze because can underestimate , and thus is not an optimistic estimate.

The key idea in our solution is to act optimistically using an upper confidence bound (UCB) on the reward variance. To derive it, we make an assumption that the reward noise is Gaussian. Specifically, the reward of arm in round of stage is distributed as . This allows us to derive the following upper and lower bounds on the unkown variance .

Lemma 2.

Fix stage , round , arm , and failure probability . Let

and suppose that . Then

holds with probability at least . Analogously,

holds with probability at least .

Proof.

The first claim is proved as follows. By Cochran’s theorem, we have that is a random variable with degrees of freedom. Its concentration was analyzed in Laurent and Massart [2000]. More specifically, by (4.4) in Laurent and Massart [2000], an immediate corollary of their Lemma 1, we have

Now we divide both sides in the probability by , multiply by , and rearrange the formula as

When , we can divide both sides by it and get the first claim in Lemma 2.

By Lemma 2, when ,

| (7) |

is a high-probability upper bound on the reward variance of arm in round of stage , which holds with probability at least . This bound decreases as the number of observations increases and confidence decreases. To apply the bound across multiple stages, rounds, and arms, we use a union bound.

The bound in (7) leads to our algorithm that overestimates the variance. The algorithm is an instance of Algorithm 1 for . The pseudocode of is in Algorithm 4. To guarantee , we pull all arms in any stage for times initially. We call the resulting algorithm .

Note that can be viewed as a variant of where replaces . Therefore, it can also be viewed as solving the G-optimal design in (6) without knowing reward variances ; and is of a broader interest to the optimal experiment design community [Pukelsheim, 1993]. We also note that the assumption of Gaussian noise in the design of is limiting. To address this issue, we experiment with non-Gaussian noise in Section 5.2.

4 Analysis

This section comprises three analyses. In Section 4.1, we bound the probability that , an algorithm that knows reward variances, misidentifies the best arm. In Section 4.2, we provide an alternative analysis that does not rely on the closed form in (4). Finally, in Section 4.3, we bound the probability that , an algorithm that learns reward variances, misidentifies the best arm.

All analyses are under the assumption of Gaussian reward noise. Specifically, the reward of arm in round of stage is distributed as .

4.1 Error Bound of

We start with analyzing , which is a stepping stone for analyzing . To simplify the proof, we assume that both and are integers. We also assume that all budget allocations have integral solutions in Lemma 1.

Theorem 3.

misidentifies the best arm with probability

where is the minimum gap.

Proof.

The claim is proved in Appendix A. We follow the outline in Karnin et al. [2013]. The novelty is in extending the proof to heterogeneous reward variances. This requires a non-uniform budget allocation, where arms with higher reward variances are pulled more (Lemma 1). ∎

The bound in Theorem 3 depends on all quantities as expected. It decreases as budget and minimum gap increase, and the number of arms and variances decrease. reduces to in Karnin et al. [2013] when for all arms . The bounds of and become comparable when we apply in (1) and note that in Theorem 3. The extra factor of in the exponent of (1) is due to a different proof, which yields a finer dependence on gaps.

4.2 Alternative Error Bound of

Now we analyze differently. The resulting bound is weaker than that in Theorem 3 but its proof can be easily extended to .

Theorem 4.

misidentifies the best arm with probability

where is the minimum gap and is the maximum reward variance.

Proof.

The claim is proved in Appendix B. The key idea in the proof is to derive a lower bound on the number of pulls of any arm in stage , instead of using the closed form of in (4). The lower bound is

An important property of the bound is that it is , similarly to in (4). Therefore, the rest of the proof is similar to that of Theorem 3. ∎

4.3 Error Bound of

Now we analyze .

Theorem 5.

Suppose that and

Then misidentifies the best arm with probability

where and are defined in Theorem 4, and

Proof.

The claim is proved in Appendix C. The key idea in the proof is to derive a lower bound on the number of pulls of any arm in stage , similarly to that in Theorem 4. The lower bound is

and holds with probability at least . Since the bound is , as in the proof of Theorem 4, the rest of the proof is similar. The main difference from Theorem 4 is in factor , which converges to as . ∎

5 Experiments

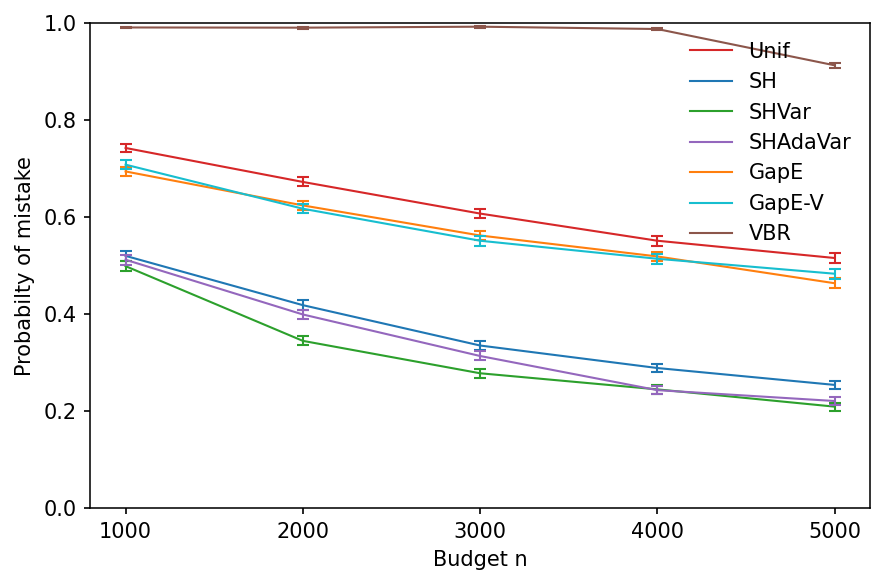

In this section, we empirically evaluate our proposed algorithms, and , and compare them to algorithms from prior works. We choose the following baselines: uniform allocation (), sequential halving () [Karnin et al., 2013], gap-based exploration () [Gabillon et al., 2011], gap-based exploration with variance () [Gabillon et al., 2011], and variance-based rejects () [Faella et al., 2020].

allocates equal budget to all arms and was originally proposed for homogeneous reward variances. Neither nor can adapt to heterogenuous reward variances. , and are variance-adaptive BAI methods from related works (Section 6). In , we use from Theorem 1 of Gabillon et al. [2011]. In , we use from Theorem 2 of Gabillon et al. [2011]. Both and assume bounded reward distributions with support . We choose , since this is a high-probability upper bound on the absolute value of independent observations from . In , we set , and thus our upper bounds on reward variances hold with probability . In , , which means that the mean arm rewards lie between their upper and lower bounds with probability . Faella et al. [2020] showed that performs well with Gaussian noise when . All reported results are averaged over runs.

and have error bounds on the probability of misidentifying the best arm, where is the budget, is the complexity parameter, and for and for . Our error bounds are , where is a comparable complexity parameter and . Even for moderate , . Therefore, when and are implemented as analyzed, they provide stronger guarantees on identifying the best arm than and . To make the algorithms comparable, we set of and to , by increasing their confidence widths. Since is an input to both and , note that they have an advantage over our algorithms that do not require it.

5.1 Synthetic Experiments

Our first experiment is on a Gaussian bandit with arms. The mean reward of arm is . We choose this setting because is known to perform well in it. Specifically, note that the complexity parameter in (2) is minimized when are equal for all . For our , and thus . We set the reward variance as when arm is even and when arm is odd. We additionally perturb and with additive and multiplicative noise, respectively. We visualize the mean rewards and the corresponding variances , for arms, in Figure 1. The variances are chosen so that every stage of sequential halving involves both high-variance and low-variance arms. Therefore, an algorithm that adapts its budget allocation to the reward variances of the remaining arms eliminates the best arm with a lower probability than the algorithm that does not.

In Figure 2, we report the probability of misidentifying the best arm among arms (Figure 1) as budget increases. As expected, the naive algorithm performs the worst. and perform only slightly better. When the algorithms have comparable error guarantees to and , their confidence intervals are too wide to be practical. performs surprisingly well. As observed by Karnin et al. [2013] and confirmed by Li et al. [2018], is a superior algorithm in the fixed-budget setting because it aggressively eliminates a half of the remaining arms in each stage. Therefore, it outperforms and . We note that outperforms all algorithms for all budgets . For smaller budgets, outperforms . However, as the budget increases, outperforms ; and without any additional information about the problem instance approaches the performance of , which knows the reward variances. This shows that our variance upper bounds improve quickly with larger budgets, as is expected based on the algebraic form in (7).

In the next experiment, we take same Gaussian bandit as in Figure 2. The budget is fixed at and we vary the number of arms from to . In Figure 3, we show the probability of misidentifying the best arm as the number of arms increases. We observe two major trends. First, the relative order of the algorithms, as measured by their probability of a mistake, is similar to Figure 2. Second, all algorithms get worse as the number of arms increases because the problem instance becomes harder. This experiment shows that and can perform well for a wide range of , they have the lowest probabilities of a mistake for all . While the other algorithms perform well at , their probability of a mistake is around or below; they perform poorly at , their probability of a mistake is above .

5.2 MovieLens Experiments

Our next experiment is motivated by the A/B testing problem in Section 1. The objective is to identify the movie with the highest mean rating from a pool of movies, where movies are arms and their ratings are rewards. The movies, users, and ratings are simulated using the MovieLens 1M dataset [Lam and Herlocker, 2016]. This dataset contains one million ratings given by users to movies. We complete the missing ratings using low-rank matrix factorization with rank , which is done using alternating least squares [Davenport and Romberg, 2016]. The result is a matrix , where is the estimated rating given by user to movie .

This experiment is averaged over runs. In each run, we randomly choose new movies according to the following procedure. For all arms , we generate mean and variance as described in Section 5.1. Then, for each , we find the closest movie in the MovieLens dataset with mean and variance , the movie that minimizes the distance . The means and variances of movie ratings from two runs are shown in Figure 4. As in Section 5.1, the movies are selected so that sequential elimination with halving is expected to perform well. The variance of movie ratings in Figure 4 is intrinsic to our domain: movies are often made for specific audiences and thus can have a huge variance in their ratings. For instance, a child may not like a horror movie, while a horror enthusiast would enjoy it. Because of this, an algorithm that adapts its budget allocation to the rating variances of the remaining movies can perform better. The last notable difference from Section 5.1 is that movie ratings are realistic. In particular, when arm is pulled, we choose a random user and return as its stochastic reward. Therefore, this experiment showcases the robustness of our algorithms beyond Gaussian noise.

In Figure 5, we report the probability of misidentifying the best movie from as budget increases. and perform the best for most budgets, although the reward distributions are not Gaussian. The relative performance of the algorithms is similar to Section 5.1: is the worst, and and improve upon it. The only exception is : it performs poorly for smaller budgets, and on par with and for larger budgets.

We increase the number of movies next. In Figure 6, we report the probability of misidentifying the best movie from as budget increases. The trends are similar to , except that performs poorly for all budgets. This is because has stages and eliminates one arm per stage even when the number of observations is small. In comparison, our algorithms have stages.

6 Related Work

Best-arm identification has been studied extensively in both fixed-budget [Bubeck et al., 2009, Audibert et al., 2010] and fixed-confidence [Even-Dar et al., 2006] settings. The two closest prior works are Gabillon et al. [2011] and Faella et al. [2020], both of which studied fixed-budget BAI with heterogeneous reward variances. All other works on BAI with heterogeneous reward variances are in the fixed-confidence setting [Lu et al., 2021, Zhou and Tian, 2022, Jourdan et al., 2022].

The first work on variance-adaptive BAI was in the fixed-budget setting [Gabillon et al., 2011]. This paper proposed algorithm and showed that its probability of mistake decreases exponentially with budget . Our error bounds are comparable to Gabillon et al. [2011]. The main shortcoming of the analyses in Gabillon et al. [2011] is that they assume that the complexity parameter is known and used by . Since the complexity parameter depends on unknown gaps and reward variances, it is typically unknown in practice. To address this issue, Gabillon et al. [2011] introduced an adaptive variant of , , where the complexity parameter is estimated. This algorithm does not come with any guarantee.

The only other work that studied variance-adaptive fixed-budget BAI is Faella et al. [2020]. This paper proposed and analyzed a variant of successive rejects algorithm [Audibert et al., 2010]. Since of Karnin et al. [2013] has a comparable error bound to successive rejects of Audibert et al. [2010], our variance-adaptive sequential halving algorithms have comparable error bounds to variance-adaptive successive rejects of Faella et al. [2020]. Roughly speaking, all bounds can be stated as , where is a complexity parameter that depends on the number of arms , their variances, and their gaps.

We propose variance-adaptive sequential halving for fixed-budget BAI. Our algorithms have state-of-the-art performance in our experiments (Section 5). They are conceptually simpler than prior works [Gabillon et al., 2011, Faella et al., 2020] and can be implemented as analyzed, unlike Gabillon et al. [2011].

7 Conclusions

We study best-arm identification in the fixed-budget setting where the reward variances vary across the arms. We propose two variance-adaptive elimination algorithms for this problem: for known reward variances and for unknown reward variances. Both algorithms proceed in stages and pull arms with higher reward variances more often than those with lower variances. While the design and analysis of are of interest, they are a stepping stone for , which adapts to unknown reward variances. The novelty in is in solving an optimal design problem with unknown observation variances. Its analysis relies on a novel lower bound on the number of arm pulls in BAI that does not require closed-form solutions to the budget allocation problem. Our numerical simulations show that and are not only theoretically sound, but also competitive with state-of-the-art baselines.

Our work leaves open several questions of interest. First, the design of is for Gaussian reward noise. The reason for this choice is that our initial experiments showed quick concentration and also robustness to noise misspecification. Concentration of general random variables with unknown variances can be analyzed using empirical Bernstein bounds [Maurer and Pontil, 2009]. This approach was taken by Gabillon et al. [2011] and could also be applied in our setting. For now, to address the issue of Gaussian noise, we experiment with non-Gaussian noise in Section 5.2. Second, while our error bounds depend on all parameters of interest as expected, we do not provide a matching lower bound. When the reward variances are known, we believe that a lower bound can be proved by building on the work of Carpentier and Locatelli [2016]. Finally, our algorithms are not contextual, which limits their application because many bandit problems are contextual [Li et al., 2010, Wen et al., 2015, Zong et al., 2016].

References

- Audibert et al. [2010] Jean-Yves Audibert, Sebastien Bubeck, and Remi Munos. Best arm identification in multi-armed bandits. In Proceeding of the 23rd Annual Conference on Learning Theory, pages 41–53, 2010.

- Auer and Ortner [2010] Peter Auer and Ronald Ortner. UCB revisited: Improved regret bounds for the stochastic multi-armed bandit problem. Periodica Mathematica Hungarica, 61(1-2):55–65, 2010.

- Boucheron et al. [2013] Stephane Boucheron, Gabor Lugosi, and Pascal Massart. Concentration Inequalities: A Nonasymptotic Theory of Independence. Oxford University Press, 2013.

- Bubeck et al. [2009] Sebastien Bubeck, Remi Munos, and Gilles Stoltz. Pure exploration in multi-armed bandits problems. In Proceedings of the 20th International Conference on Algorithmic Learning Theory, pages 23–37, 2009.

- Carpentier and Locatelli [2016] Alexandra Carpentier and Andrea Locatelli. Tight (lower) bounds for the fixed budget best arm identification bandit problem. In Proceeding of the 29th Annual Conference on Learning Theory, pages 590–604, 2016.

- Davenport and Romberg [2016] Mark Davenport and Justin Romberg. An overview of low-rank matrix recovery from incomplete observations. IEEE Journal of Selected Topics in Signal Processing, 10(4):608–622, 2016.

- Even-Dar et al. [2006] Eyal Even-Dar, Shie Mannor, and Yishay Mansour. Action elimination and stopping conditions for the multi-armed bandit and reinforcement learning problems. Journal of Machine Learning Research, 7:1079–1105, 2006.

- Faella et al. [2020] Marco Faella, Alberto Finzi, and Luigi Sauro. Rapidly finding the best arm using variance. In Proceedings of the 24th European Conference on Artificial Intelligence, 2020.

- Gabillon et al. [2011] Victor Gabillon, Mohammad Ghavamzadeh, Alessandro Lazaric, and Sebastien Bubeck. Multi-bandit best arm identification. In Advances in Neural Information Processing Systems 24, 2011.

- Gabillon et al. [2012] Victor Gabillon, Mohammad Ghavamzadeh, and Alessandro Lazaric. Best arm identification: A unified approach to fixed budget and fixed confidence. In Advances in Neural Information Processing Systems 25, 2012.

- Jourdan et al. [2022] Marc Jourdan, Remy Degenne, and Emilie Kaufmann. Dealing with unknown variances in best-arm identification. CoRR, abs/2210.00974, 2022. URL https://arxiv.org/abs/2210.00974.

- Karnin et al. [2013] Zohar Karnin, Tomer Koren, and Oren Somekh. Almost optimal exploration in multi-armed bandits. In Proceedings of the 30th International Conference on Machine Learning, pages 1238–1246, 2013.

- Kaufmann et al. [2016] Emilie Kaufmann, Olivier Cappe, and Aurelien Garivier. On the complexity of best-arm identification in multi-armed bandit models. Journal of Machine Learning Research, 17(1):1–42, 2016.

- Krause et al. [2008] Andreas Krause, Ajit Paul Singh, and Carlos Guestrin. Near-optimal sensor placements in Gaussian processes: Theory, efficient algorithms and empirical studies. Journal of Machine Learning Research, 9:235–284, 2008.

- Lam and Herlocker [2016] Shyong Lam and Jon Herlocker. MovieLens Dataset. http://grouplens.org/datasets/movielens/, 2016.

- Lattimore and Szepesvari [2019] Tor Lattimore and Csaba Szepesvari. Bandit Algorithms. Cambridge University Press, 2019.

- Laurent and Massart [2000] B. Laurent and P. Massart. Adaptive estimation of a quadratic functional by model selection. The Annals of Statistics, 28(5):1302–1338, 2000.

- Li et al. [2010] Lihong Li, Wei Chu, John Langford, and Robert Schapire. A contextual-bandit approach to personalized news article recommendation. In Proceedings of the 19th International Conference on World Wide Web, 2010.

- Li et al. [2018] Lisha Li, Kevin Jamieson, Giulia DeSalvo, Afshin Rostamizadeh, and Ameet Talwalkar. Hyperband: A novel bandit-based approach to hyperparameter optimization. Journal of Machine Learning Research, 18(185):1–52, 2018.

- Lu et al. [2021] Pinyan Lu, Chao Tao, and Xiaojin Zhang. Variance-dependent best arm identification. In Proceedings of the 37th Conference on Uncertainty in Artificial Intelligence, 2021.

- Maurer and Pontil [2009] Andreas Maurer and Massimiliano Pontil. Empirical Bernstein bounds and sample-variance penalization. In Proceedings of the 22nd Annual Conference on Learning Theory, 2009.

- Pukelsheim [1993] Friedrich Pukelsheim. Optimal Design of Experiments. John Wiley & Sons, 1993.

- Soare et al. [2014] Marta Soare, Alessandro Lazaric, and Remi Munos. Best-arm identification in linear bandits. In Advances in Neural Information Processing Systems 27, pages 828–836, 2014.

- Wen et al. [2015] Zheng Wen, Branislav Kveton, and Azin Ashkan. Efficient learning in large-scale combinatorial semi-bandits. In Proceedings of the 32nd International Conference on Machine Learning, 2015.

- Zhou and Tian [2022] Ruida Zhou and Chao Tian. Approximate top- arm identification with heterogeneous reward variances. In Proceedings of the 25th International Conference on Artificial Intelligence and Statistics, 2022.

- Zong et al. [2016] Shi Zong, Hao Ni, Kenny Sung, Nan Rosemary Ke, Zheng Wen, and Branislav Kveton. Cascading bandits for large-scale recommendation problems. In Proceedings of the 32nd Conference on Uncertainty in Artificial Intelligence, 2016.

Appendix A Proof of Theorem 3

First, we decompose the probability of choosing a suboptimal arm. For any , let be the event that the best arm is not eliminated in stage and be its complement. Then by the law of total probability,

We bound based on the observation that the best arm can be eliminated only if the estimated mean rewards of at least a half of the arms in are at least as high as that of the best arm. Specifically, let be the set of all arms in stage but the best arm and

Then by the Markov’s inequality,

The key step in bounding the above expectation is understanding the probability that any arm has a higher estimated mean reward than the best one. We bound this probability next.

Lemma 6.

For any stage with the best arm, , and any suboptimal arm , we have

Proof.

The proof is based on concentration inequalities for sub-Gaussian random variables [Boucheron et al., 2013]. In particular, since and are sub-Gaussian with variance proxies and , respectively; their difference is sub-Gaussian with a variance proxy . It follows that

where the last step follows from the definitions of and in Lemma 1. ∎

The last major step is bounding with the help of Lemma 6. Starting with the union bound, we get

Now we chain all inequalities and get

To get the final claim, we use that

This concludes the proof.

Appendix B Proof of Theorem 4

This proof has the same steps as that in Appendix A. The only difference is that and in Lemma 6 are replaced with their lower bounds, based on the following lemma.

Lemma 7.

Fix stage and arm in . Then

where is the maximum reward noise and is the budget in stage .

Proof.

Let be the most pulled arm in stage and be the round where arm is pulled the last time. By the design of , since arm is pulled in round ,

holds for any arm . This can be further rearranged as

Since arm is the most pulled arm in stage and is the round of its last pull,

Moreover, . Now we combine all inequalities and get

| (8) |

To eliminate dependence on random , we use . This concludes the proof. ∎

Appendix C Proof of Theorem 5

This proof has the same steps as that in Appendix A. The main difference is that and in Lemma 6 are replaced with their lower bounds, based on the following lemma.

Lemma 8.

Fix stage and arm in . Then

where is the maximum reward noise, is the budget in stage , and

is an arm-independent constant.

Proof.

Let be the most pulled arm in stage and be the round where arm is pulled the last time. By the design of , since arm is pulled in round ,

holds for any arm . Analogously to (8), this inequality can be rearranged and loosened as

| (9) |

We bound from below using the fact that holds with probability at least , based on the first claim in Lemma 2. To bound , we apply the second claim in Lemma 2 to bound in , and get that

holds with probability at least . Finally, we plug both bounds into (9) and get

To eliminate dependence on random , we use that and . This yields our claim and concludes the proof of Lemma 8. ∎

Similarly to Lemma 7, this bound is asymptotically tight when all reward variances are identical. Also as . Therefore, the bound has the same shape as that in Lemma 7.

The application of Lemma 8 requires more care. Specifically, it relies on high-probability confidence intervals derived in Lemma 2, which need . This is guaranteed whenever . Moreover, since the confidence intervals need to hold in any stage and round , and for any arm , we need a union bound over events. This leads to the following claim.