FineEHR: Refine Clinical Note Representations to Improve Mortality Prediction

Abstract

Monitoring the health status of patients in the Intensive Care Unit (ICU) is a critical aspect of providing superior care and treatment. The availability of large-scale electronic health records (EHR) provides machine learning models with an abundance of clinical text and vital sign data, enabling them to make highly accurate predictions. Despite the emergence of advanced Natural Language Processing (NLP) algorithms for clinical note analysis, the complex textual structure and noise present in raw clinical data have posed significant challenges. Coarse embedding approaches without domain-specific refinement have limited the accuracy of these algorithms. To address this issue, we propose FineEHR, a system that utilizes two representation learning techniques, namely metric learning and fine-tuning, to refine clinical note embeddings, while leveraging the intrinsic correlations among different health statuses and note categories. We evaluate the performance of FineEHR using two metrics, namely Area Under the Curve (AUC) and AUC-PR, on a real-world MIMIC III dataset. Our experimental results demonstrate that both refinement approaches improve prediction accuracy, and their combination yields the best results. Moreover, our proposed method outperforms prior works, with an AUC improvement of over 10%, achieving an average AUC of 96.04% and an average AUC-PR of 96.48% across various classifiers.

Index Terms:

Electronic Health Record, Mortality Prediction, Representation LearningI Introduction

I-A Background and Motivation

Mortality prediction, which aims to forecast the probability of patient death, is crucial for timely and improved risk assessment in clinical services. Intensive care units (ICUs) produce and record massive structured or unstructured clinical data, increasing the potential for machine learning models to predict and analyze automatically instead of relying on specialists. Many researchers used advanced natural language processing algorithms to learn document embeddings from textual data, then make prediction models for downstream tasks. Clinical notes tend to be fragmented and have different writing styles due to individual differences between recorders, which are very noisy for machine learning models and limit the performance of prediction tasks overall. Our work investigates representation learning techniques to overcome this challenge.

I-B Mortality prediction via Electronic Health Records

Previous works in mortality prediction can be categorized into three approaches: the time series-based approach, the texts-based approach, and the hybrid approach.

I-B1 Time series-based

These works use clinical time series data to analyze and predict the health status of patients, including vital signs (e.g., heart rate, blood pressure) and laboratory test results (e.g., electrolytes, blood glucose). Harutyunyan et al. [1] proposed four prediction tasks on the MIMIC III dataset, including In-hospital Mortality, Decompensation, Length of Stay, and Phenotyping, and also explored the effects of multitask learning using clinical time series data on all tasks. Facing the challenge of missing data, Che et al. [2] used an RNN-based approach to interpolate missing data in multivariate clinical time series data, significantly improving prediction accuracy. Narayan Shukla et al. [3] proposed a multi-attention based mechanism to solve the problem of irregularly sampled clinical time series data, improving prediction accuracy on tasks.

I-B2 Texts-based

Clinical notes are textual data recorded by healthcare providers. This approach models the semantic information of clinical notes to reflect the health status of patients and then make essential predictions like mortality, readmission, and stays-days. Clinical note categories include nursing notes, discharge summaries, and physician notes, which are recorded by healthcare professionals during patient hospital stays. Boag et al. [4] proposed extracting critical information and values from clinical notes to predict mortality. Liu et al. [5] introduced a knowledge-aware deep dual network to utilize external medical knowledge to guide mortality prediction. Huang et al. [6] designed ClinicalBERT, a domain-specific adaptation of the pre-trained BERT model, optimizing semantic embeddings of clinical notes to improve the prediction accuracy of hospital readmissions. Jin et al. [7] using named entities recognition to discover important words in clinical notes to enhance hospital mortality prediction.

I-B3 Hybrid

These researches combine multiple data types like clinical textual, time series, image to profile a patient’s health status, enabling more accurate predictions of clinical tasks. Ghorbani et al. [8] and Khadanga et al. [9] designed a hybrid predictive model combining patient health signs in clinical time series and notes and performed well in mortality prediction on imbalanced datasets. Deznabi et al. [10] combined clinical notes and time series data to create a more comprehensive representation of patients’ health status for accurate mortality prediction. Zheng et al. [11] proposed a novel heterogeneous graph approach to model the correlation between patients and diseases to make better prediction performance. Fan et al. [12] and [13] designed attention neural network to extract tissue topological structures from magnetic resonance imaging (MRI) data for predicting breast cancer.

I-C Representation Generation and Refining

Embedding unstructured data across various modals typically involves two key steps: generating embedding and refining embedding. We first introduce some classic algorithms and advanced application works of embedding generation across various data sources, including text, image, and multisource data. Then we discuss embedding refining approaches for improving downstream tasks’ performance, including fine-tuning, metric method, reweighing and sampling.

I-C1 Embedding Generation

This field targets mapping high-dimensional data into a numerical vector space. In the field of natural language processing (NLP), the generation operation represents words, phrases, and sentences into numerical vectors. Popular methods include Word2Vec [14], GloVe [15], and Transformer [16], which leverage various techniques such as neural networks, co-occurrence statistics, and contextual language modeling to capture the semantic and syntactic properties of textual data.

Image and video data are widely collected in hospitals. Image data are represented to high-dimensional space before make prediction. The most famous work is AlexNet [17], which learns hierarchical feature representations from raw image data. Currently, DeepMTL Pro [18] proposed a novel image-to-image translation framework via image representing and understanding. Many deep learning and machine learning models represent image data to diagnose diseases. Syed Muhammad et al. [19] is a survey to introduce how convolutional neural network (CNN) works for medical image analysis. Yuli et al. [20] utilized probability maps to represent magnetic resonance images (MRIs) for brain disorder analysis. Liangliang et al. [21] proposed a deep reinforcement learning model to detect and track multiple objections in video frames. Xueshen et al. [22] and [23] designed spatial-temporal deep learning algorithms to detect and measure gastric diseases.

Jointly learning from textual, image and time series data (multi-source data) and make representation is challenge and essential for tasks in the real-world environment. Current researches made some breakthroughs. Yuxin et al. [24] designed a multi-modal transformer-based architecture and applied it in e-commerce applications. ALBEF [25] aligned text and image embeddings to do text-image matching. GL-RG [26] can generate text with similar semantic information from visual signals. CellPAD [27] combined temporal signals and local patterns in time series to predict network anomalies. Xiaoling et al. [28] combined time-series signs and video to estimate vehicle traffic. BotShape [29] represented social account behavioral logs into sequence embeddings for bot detection. Shujie et al. [30] combined textual system logs and hard disk time series to predict disk failure in data center.

I-C2 Embedding Refining

This technique improves the quality of initially generated embeddings and represents them as new embeddings. Metric learning and model fine-tuning are two main refining technqiues.

Metric learning was first applied in computer vision by adjusting the distance between positive and negative samples for better prediction task performance. Bertinetto et al. [31] proposed a fully-convolutional siamese network architecture for object tracking and presented accurate performance across various scenarios. Zhuoyi et al. [32] and [33] target to build a robust and general metric-learning solution to deal with open-world problems. Our previous work BotTriNet [34] applied the triplet network to textual embedding representation, vastly improving social bots detection accuracy.

The most efficient application of fine-tuning technique is BERT [35], fine-tuning parameters of the pre-trained model, showing outperformance on various NLP tasks. ULMFiT [36] applied transfer learning method for fine-turning a language model. Statistical re-weighting is a common strategy to solve medical data imbalance problem. However, PriMeD [37] abandoned the solution proposed an intelligent deep learning method via auto-encoder.

II Dataset

| Category | Ratio | Descriptions |

|---|---|---|

| Discharge summary | 97.57% | A summary of a patient diagnosis, treatment during discharge (has no mortality status). |

| ECG | 87.58% | A text that records the electrical activity of the heart to help diagnose heart conditions. |

| Radiology | 82.95% | A medical imaging record such as X-rays, CT scans, and MRI. |

| Nursing / other | 51.39% | Notes of general nursing care or other issues. |

| Echo | 45.82% | A textual record of imaging test using sound waves to create pictures of the heart. |

| Nursing | 19.26% | A note related specific nursing care. |

| Physician | 19.16% | A note written by a physician or health carers. |

MIMIC III is a real-world clinical and health-related dataset, which includes thousands of ICU patient admission records in a medical center between 2001 and 2012. The dataset includes extensive electronic health records (EHR), including medications, laboratory results, vital signs, and diagnosis codes. We focus on the mortality prediction task. The mortality label is positive if a patient dies during their hospital stay. We use clinical notes to extract semantic embeddings as features. Clinical notes refer to text information recorded by healthcare professionals, including different types such as nursing notes, operative notes, progress notes, and others, each containing different kinds of information. Table I provides descriptions of clinical note categories whose proportion is over 15%. The ratio refers to the percentage of the corresponding note category that appears as the total number of hospital admissions.

III Design

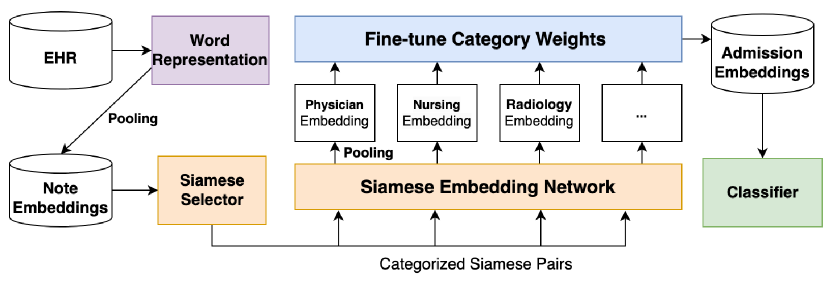

We present FineEHR, a system for refining clinical note representations for accurate mortality prediction. It takes clinical notes from ICU patients’ electronic health records (EHR) as input and predicts whether a patient will die in the future. Figure 1 shows the architecture of our system.

FineEHR first represents the original textual notes in semantic spaces via the word embedding technique and then generates note-level, category-level, and admission-level embeddings through the pooling method. To refine the above embeddings for better mortality prediction, considering the textual structure differences among various note categories, FineEHR utilizes two representation learning techniques, including metric learning and fine-tuning at the middle stages. Specifically, it first uses a Siamese Network [38] to adjust the distances between positive and negative note embeddings for each category. After producing category-level embeddings through average pooling of adjusted note embeddings, FineEHR learns each category’s weights (importance) by fine-tuning on training instances and then aggregating all category-level embeddings into the unique admission-level embedding for each patient via pooling according to corresponding weights. Finally, the tuned admission-level embeddings support various classifiers for mortality prediction.

III-A Word Representation

Word embedding is a fundamental technique in the field of NLP. It transforms words into lower dimensions with a vector representation as the base material for downstream NLP modeling. The widely applied word representation algorithms include Word2Vec [39], GloVe [15], and BERT [16]. FineEHR utilizes Word2Vec to generate clinical word embeddings based on the textual MIMIC III notes. To avoid transforming label information to test samples, we restrictively used only texts in train samples to fit and generate word embeddings. FineEHR selected Word2Vec as the word representation approach because it outperformed GloVe in the performance of our task, and its embedding generation speed was faster than the pre-trained model called BioBERT [40].

The detailed implementation first split texts into sentences, then split each sentence into continuous word sequences (called tokenization). Then, it inputs word sequences into the Word2Vec model, which utilizes the surrounding words to make word presentations. The structure of EHR notes is irregular, and we did extra text preprocessing for original texts before tokenization. In detail, we use a newline as the splitting point, even if it looks incomplete, except for standard ending punctuation between sentences. We also convert all capital letters to lowercase before tokenization to handle multiple versions of the same medical vocabulary.

III-B Refine Note Embedding via Siamese Network

FineEHR applies average pooling to generate note-level embeddings for each clinical note. Average pooling is a simple but effective approach in sentence embedding generation and downstream classification tasks [41]. The dimension size of the note-level embedding and word embedding is the same after directly averaging values from all words in each dimension.

FineEHR chose Siamese Network to refine the original note embedding, inspired by Sentence BERT [42], which used a metric approach to represent sentence embedding to enhance the performance of downstream text tasks and outperformed the original sentence embeddings of BERT model. FineEHR did a domain-specific adaption of the Siamese Network after observing the inner similarity between same-category notes and the extensive content diversity of notes among different categories. We respectively introduce three core modules, including Siamese Selector, Siamese Embedding Network, and Siamese Loss.

III-B1 Siamese Selector

Siamese Selector refers to the algorithm for selecting Siamese Pairs. The overall training objective of the Siamese Network is to bring samples closer if they have the same label and move samples far from each other if their labels are different. In Siamese Networks, the format of an input instance is a pair during iterations. In detail, it randomly selects two samples of note embeddings at each time (called the Anchor and Contrast, collectively called a Siamese Pair). Specifically, if the contrast sample shares the same label as the anchor sample, the Pair is considered a positive instance; otherwise, it is a negative instance. The positive or negative correlation is passed to the Siamese Network to calculate the loss function.

To refine clinical representations better, FineEHR made a domain-specific adjustment to the Siamese Selector setting. Specifically, FineEHR divided samples of note embeddings into various groups based on their category. Pairs are randomly selected only within each group (category), with the correlation of labels determining whether they are positive or negative.

As illustrated in Table I, the content of different clinical note categories varies significantly, resulting in natural differences among note embeddings in the semantic space. Consequently, applying metric learning across different types of notes is deemed meaningless. We discuss the consequences of the two methods of not using a categorized manner. If two notes with the same mortality label but different categories are forced to be closer in the distance, their natural distributions (embeddings) may interfere with each other; if their original mortality statuses differ, pushing them away from each other is superfluous because they already distributed separately in semantic space. In conclusion, the categorized Siamese Selector enhances the precision of the embeddings and decreases the number of iterating epochs required.

III-B2 Siamese Embedding Network

Siamese Embedding Network is a representation learning network that can refine embedding by transforming raw embeddings into tuned embeddings. The network structure can be simple or complex. FineEHR chooses a symmetrical multilayer perceptron structure (MLP) as the representation network, where the input and output layer dimensions are equal, and the dimensions of each layer increase first and decrease then. We did not spend more time designing complex network structures because we found that the effect of MLP is remarkable enough.

III-B3 Siamese Loss

The loss function in a Siamese Network optimizes the embedding space by minimizing the distance between similar samples (positive pairs) and between dissimilar samples (negative pairs). The goal is to improve the model’s ability to differentiate between distinct classes or categories in the latent space.

A standard implementation of the Siamese Network loss function is the contrastive loss. It operates by calculating the Euclidean distance between the embeddings of the anchor and contrast samples. In detail, the contrastive loss function has two parts: one targeting positive and the other optimizing negative Siamese pairs.

Siamese Network adjusts the parameters of the Embedding Network via the gradient descent method to minimize the following contrastive loss function:

With the label of Siamese Pair (positive(Y=1) or negative(Y=0)), the Euclidean distance between the two raw embeddings of anchor and contrast samples in the pair. is a hyper-parameter to ensure that anchor and contrast samples with different labels are separated by a minimum distance in the embedding space.

III-C Refine Admission Embedding via Fine-tuning

As we mentioned in Section II, the definitions and ratios of note categories are different. We naturally consider the contributions of different clinical note categories to be various. For example, the Physician category contains more professional descriptions of diseases and symptoms, while the General category only records unimportant information like meals and schedules. Based on the observation and understanding of clinical notes, FineEHR defined fine-tuning approach based on categories to allocate different embeddings with different importance. Figure 2 shows the model design.

First, our system calculates the category-level embeddings for each note category by average pooling all notes with the corresponding category in one patient’s admission. Then it sets a linear representation model to learn weights for each category and represent multiple category-level embeddings into only one embedding (called refined admission-level embedding). The system optimizes the linear model weights based on the classification accuracy of the mortality prediction task. The system uses another linear MLP as the classifier to fit the correlation between the admission-level embedding and the mortality label. After iterations, the model outputs the parameters in the first linear model as the optimized weights and output layer embedding as the admission-level embedding for various classifiers to make predictions.

FineEHR outputs refined admission-layer embedding for downstream classifiers to fit training data. In other words, its main target is to learn a better representation of clinical notes, but it leaves the flexibility for users to choose different classification algorithms (e.g., Random Forest, Logistic Regression, or Deep Network). The sub section IV-B gives the performance across different classifier based on the refined admission-level embeddings.

IV Evaluation

IV-A Ground Truth and Performance Metrics

| RF | LR | MLP | GBDT | |||||

| Approach | AUC | AUC-PR | AUC | AUC-PR | AUC | AUC-PR | AUC | AUC-PR |

| Baseline | 86.73% | 88.72% | 89.52% | 90.75% | 86.41% | 87.35% | 87.30% | 88.80% |

| Only Metric | 94.10% | 95.01% | 92.69% | 93.84% | 91.05% | 93.15% | 93.53% | 93.59% |

| Only Weight | 95.09% | 95.36% | 94.35% | 95.02% | 94.41% | 93.90% | 96.02% | 96.50% |

| FineEHR | 97.18% | 97.77% | 95.18% | 96.08% | 94.91% | 95.35% | 96.88% | 96.72% |

Ground-truth is a helpful tool for evaluating the prediction performance with labeled instances. We conducted a ground-truth evaluation through the MIMIC III dataset to evaluate FineEHR. In the data, the identification of hospital admission is HADM_ID. The id correlates with an attribute HOSPITAL_EXPIRE_FLAG (called flag), recording the label of mortality and all related clinical notes during this admission. The flag equals one stand for the mortality status is actual, and we set the hospital admission as a positive instance. Otherwise, the admission is a negative instance.

The raw dataset is imbalanced. We equaled the numbers of positive and negative instances for a better performance evaluation via random downsampling on the negative samples. All the negative instances and sampled positive instances were randomly split into two groups called training set and testing set (testing occupying 20 percent).

Performance metrics are essential for comparing different models or approaches. We used AUC and AUC-PR as evaluation metrics. AUC is the area under the Receiver Operating Characteristic (ROC) curve, which plots the True Positive Rate (TPR) against the False Positive Rate (FPR). A higher AUC value indicates that the model performs better in discriminating between the positive and negative classes across various threshold settings. AUC-PR is the area under the precision-recall curve. The higher it is, the more mortality instances would be accurately predicted, and the fewer false alarms would appear.

IV-B Accuracy Improvements via Refining Approaches

To present the effectiveness of two refining approaches, including Siamese Network and Category Weights Fine-tuning, we conducted baseline experiments using raw embeddings without any refining process for better comparison.

Baseline Approach: In subsection III-B, FineEHR obtains raw note-level embeddings via average pooling. The baseline approach then directly computes the admission-level embedding through an average pooling of all related note-level embeddings.

Only Metric: We train a Siamese Network to refine the raw note-level embeddings separately in each category (using only instances in the training set). After refinement, FineEHR directly computes the admission-level embedding by averaging all the refined note-level embeddings.

Only Weight: Without Siamese Network refinement, FineEHR uses raw note-level embeddings to fine-tune the category weights in a linear model, then pools them into an admission-level embedding through learned weights for different categories.

Four settings (including the complete version of FineEHR) generate different admission-level embeddings. We use four well-known classifiers to make mortality predictions and evaluate the predicted labels using the testing set. RF refers to Random Forest. LR refers to Logistic Regression. MLP refers to Multi-layer Perceptron. GBDT refers to Gradient-Boosted Decision Trees. For a fair comparison, all classifiers are under default parameter settings without parameter optimization for the four different settings.

Table II shows that both the Metric and Weight approaches significantly improve the prediction accuracy. Moreover, when combining the two refinement approaches, FineEHR achieves the highest performance. These results demonstrate that the two refining approaches are very effective, independently or combined.

Table II shows the AUC and AUC-PR under different settings in FineEHR. It shows that both the Metric and Weight approaches significantly improve the prediction accuracy. Moreover, when combining the two refinement approaches, FineEHR achieves the highest performance. These results demonstrate that the two approaches are effective, whether independently or combined.

IV-C Compared With Previous Works

| Approaches | Approach | AUC |

|---|---|---|

| Harutyunyan et al. [1] | Time Series | 87.00% |

| Che et al. [2] | Time Series | 85.29% |

| Shukla et al. [3] | Time Series | 85.91% |

| Deznabi et al. [10] | Only Text | 87.50% |

| Deznabi et al. [10] | Hybrid | 89.90% |

| Khadanga et al. [9] | Hybrid | 86.50% |

| Jin et al. [7] | Hybrid | 87.53% |

| Baseline | Only Text | 86.73% |

| FineEHR | Only Text | 97.18% |

In subsection I-B1, we surveyed three mortality prediction approaches on time-series data and clinical notes in EHR. Most of them only presented the AUC result of mortality prediction. Therefore, we only compare their AUC with FineEHR to demonstrate its improvement. Table III shows the references, approach, and AUC (presented in the companion paper), as well as the Baseline Approach and FineEHR using Random Forest Classifier. The Baseline Approach has a lower AUC than most previous works. However, FineEHR demonstrates a state-of-the-art performance and improves the AUC by nearly 10 percent compared to previous works.

V Conclusions

Clinical text understanding and representation play an essential role in clinical prediction tasks. In this paper, we studied approaches to refine clinical embeddings for better prediction performance downstream. We focus on two representation learning techniques: metric learning and fine-tuning.

We designed a system called FineEHR to refine text embeddings based on our domain understanding of clinical texts’ complexity on various note sources (categories). It inputs raw EHR notes and mortality status, uses Siamese Network to represent raw embedding and adjust distances between note-level embedding pairs, and finally fine-tunes a linear model to combine multiple category-level embeddings into unique admission-level embedding with various weights.

Experiment results show that both refining approaches can improve AUC and AUC-PR compared to a baseline setting. Their combination achieves the highest performance, with an average AUC of 96.04% and an average AUC-PR of 96.48% on four famous classifiers. FineEHR also outperforms all previous approaches, with an AUC improvement of over 10%.

References

- [1] H. Harutyunyan, H. Khachatrian, D. C. Kale, G. Ver Steeg, and A. Galstyan, “Multitask learning and benchmarking with clinical time series data,” Scientific data, vol. 6, no. 1, p. 96, 2019.

- [2] Z. Che, S. Purushotham, K. Cho, D. Sontag, and Y. Liu, “Recurrent neural networks for multivariate time series with missing values,” Scientific reports, vol. 8, no. 1, p. 6085, 2018.

- [3] S. N. Shukla and B. M. Marlin, “Multi-time attention networks for irregularly sampled time series,” arXiv preprint arXiv:2101.10318, 2021.

- [4] W. Boag, D. Doss, T. Naumann, and P. Szolovits, “What’s in a note? unpacking predictive value in clinical note representations,” AMIA Summits on Translational Science Proceedings, vol. 2018, p. 26, 2018.

- [5] N. Liu, P. Lu, W. Zhang, and J. Wang, “Knowledge-aware deep dual networks for text-based mortality prediction,” in 2019 IEEE 35th International Conference on Data Engineering (ICDE). IEEE, 2019, pp. 1406–1417.

- [6] K. Huang, J. Altosaar, and R. Ranganath, “Clinicalbert: Modeling clinical notes and predicting hospital readmission,” arXiv preprint arXiv:1904.05342, 2019.

- [7] M. Jin, M. T. Bahadori, A. Colak, P. Bhatia, B. Celikkaya, R. Bhakta, S. Senthivel, M. Khalilia, D. Navarro, B. Zhang et al., “Improving hospital mortality prediction with medical named entities and multimodal learning,” arXiv preprint arXiv:1811.12276, 2018.

- [8] R. Ghorbani, R. Ghousi, A. Makui, and A. Atashi, “A new hybrid predictive model to predict the early mortality risk in intensive care units on a highly imbalanced dataset,” IEEE Access, vol. 8, pp. 141 066–141 079, 2020.

- [9] S. Khadanga, K. Aggarwal, S. Joty, and J. Srivastava, “Using clinical notes with time series data for icu management,” arXiv preprint arXiv:1909.09702, 2019.

- [10] I. Deznabi, M. Iyyer, and M. Fiterau, “Predicting in-hospital mortality by combining clinical notes with time-series data,” in Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, 2021, pp. 4026–4031.

- [11] Z. Liu, X. Li, H. Peng, L. He, and S. Y. Philip, “Heterogeneous similarity graph neural network on electronic health records,” in 2020 IEEE International Conference on Big Data (Big Data). IEEE, 2020, pp. 1196–1205.

- [12] F. Wang, S. Kapse, S. Liu, P. Prasanna, and C. Chen, “Topotxr: A topological biomarker for predicting treatment response in breast cancer,” in Information Processing in Medical Imaging: 27th International Conference, IPMI 2021, Virtual Event, June 28–June 30, 2021, Proceedings. Springer, 2021, pp. 386–397.

- [13] F. Wang, H. Liu, D. Samaras, and C. Chen, “Topogan: A topology-aware generative adversarial network,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part III 16. Springer, 2020, pp. 118–136.

- [14] T. Mikolov, I. Sutskever, K. Chen, G. S. Corrado, and J. Dean, “Distributed representations of words and phrases and their compositionality,” Advances in neural information processing systems, vol. 26, 2013.

- [15] J. Pennington, R. Socher, and C. D. Manning, “Glove: Global vectors for word representation,” in Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), 2014, pp. 1532–1543.

- [16] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.

- [17] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” Communications of the ACM, vol. 60, no. 6, pp. 84–90, 2017.

- [18] C. Zhan, M. Ghaderibaneh, P. Sahu, and H. Gupta, “Deepmtl pro: Deep learning based multiple transmitter localization and power estimation,” Pervasive and Mobile Computing, vol. 82, p. 101582, 2022.

- [19] S. M. Anwar, M. Majid, A. Qayyum, M. Awais, M. Alnowami, and M. K. Khan, “Medical image analysis using convolutional neural networks: a review,” Journal of medical systems, vol. 42, pp. 1–13, 2018.

- [20] Y. Wang, A. Feng, Y. Xue, M. Shao, A. M. Blitz, M. D. Luciano, A. Carass, and J. L. Prince, “Investigation of probability maps in deep-learning-based brain ventricle parcellation,” in Medical Imaging 2023: Image Processing, vol. 12464. SPIE, 2023, pp. 565–570.

- [21] L. Ren, J. Lu, Z. Wang, Q. Tian, and J. Zhou, “Collaborative deep reinforcement learning for multi-object tracking,” in Proceedings of the European conference on computer vision (ECCV), 2018, pp. 586–602.

- [22] X. Li, Y. Gan, D. Duan, and X. Yang, “Detecting and measuring human gastric peristalsis using magnetically controlled capsule endoscope,” arXiv preprint arXiv:2301.10218, 2023.

- [23] Li, Xueshen and Gan, Yu and Duan, David and Yang, Xiao, “Detecting human gastric peristalsis using magnetically controlled capsule endoscope via deep learning,” in Medical Imaging 2023: Image Processing, vol. 12464. SPIE, 2023, pp. 325–329.

- [24] Y. Tian, S. Newsam, and K. Boakye, “Fashion image retrieval with text feedback by additive attention compositional learning,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023, pp. 1011–1021.

- [25] J. Li, R. Selvaraju, A. Gotmare, S. Joty, C. Xiong, and S. C. H. Hoi, “Align before fuse: Vision and language representation learning with momentum distillation,” Advances in neural information processing systems, vol. 34, pp. 9694–9705, 2021.

- [26] L. Yan, Q. Wang, Y. Cui, F. Feng, X. Quan, X. Zhang, and D. Liu, “Gl-rg: Global-local representation granularity for video captioning,” arXiv preprint arXiv:2205.10706, 2022.

- [27] J. Wu, P. P. Lee, Q. Li, L. Pan, and J. Zhang, “Cellpad: Detecting performance anomalies in cellular networks via regression analysis,” in 2018 IFIP Networking Conference (IFIP Networking) and Workshops. IEEE, 2018, pp. 1–9.

- [28] X. Luo, X. Ma, M. Munden, Y.-J. Wu, and Y. Jiang, “A multisource data approach for estimating vehicle queue length at metered on-ramps,” Journal of Transportation Engineering, Part A: Systems, vol. 148, no. 2, p. 04021117, 2022.

- [29] J. Wu, X. Ye, and C. Mou, “Botshape: A novel social bots detection approach via behavioral patterns,” in 12th International Conference on Data Mining & Knowledge Management Process, 2023.

- [30] S. Han, J. Wu, E. Xu, C. He, P. P. Lee, Y. Qiang, Q. Zheng, T. Huang, Z. Huang, and R. Li, “Robust data preprocessing for machine-learning-based disk failure prediction in cloud production environments,” arXiv preprint arXiv:1912.09722, 2019.

- [31] L. Bertinetto, J. Valmadre, J. F. Henriques, A. Vedaldi, and P. H. Torr, “Fully-convolutional siamese networks for object tracking,” in Computer Vision–ECCV 2016 Workshops: Amsterdam, The Netherlands, October 8-10 and 15-16, 2016, Proceedings, Part II 14. Springer, 2016, pp. 850–865.

- [32] Z. Wang, Y. Wang, B. Dong, S. Pracheta, K. Hamlen, and L. Khan, “Adaptive margin based deep adversarial metric learning,” in 2020 IEEE 6th Intl Conference on Big Data Security on Cloud (BigDataSecurity), IEEE Intl Conference on High Performance and Smart Computing,(HPSC) and IEEE Intl Conference on Intelligent Data and Security (IDS). IEEE, 2020, pp. 100–108.

- [33] Y. Gao, Y.-F. Li, B. Dong, Y. Lin, and L. Khan, “Sim: Open-world multi-task stream classifier with integral similarity metrics,” in 2019 IEEE International Conference on Big Data (Big Data). IEEE, 2019, pp. 751–760.

- [34] J. Wu, X. Ye, and M. Y. Yuet, “Bottrinet: A unified and efficient embedding for social bots detection via metric learning,” arXiv preprint arXiv:2303.03144, 2023.

- [35] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “Bert: Pre-training of deep bidirectional transformers for language understanding,” arXiv preprint arXiv:1810.04805, 2018.

- [36] J. Howard and S. Ruder, “Universal language model fine-tuning for text classification,” arXiv preprint arXiv:1801.06146, 2018.

- [37] Z. Liu, X. Li, and P. Yu, “Mitigating health disparities in ehr via deconfounder,” in Proceedings of the 13th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics, 2022, pp. 1–6.

- [38] J. Bromley, I. Guyon, Y. LeCun, E. Säckinger, and R. Shah, “Signature verification using a” siamese” time delay neural network,” Advances in neural information processing systems, vol. 6, 1993.

- [39] T. Mikolov, K. Chen, G. Corrado, and J. Dean, “Efficient estimation of word representations in vector space,” arXiv preprint arXiv:1301.3781, 2013.

- [40] J. Lee, W. Yoon, S. Kim, D. Kim, S. Kim, C. H. So, and J. Kang, “Biobert: a pre-trained biomedical language representation model for biomedical text mining,” Bioinformatics, vol. 36, no. 4, pp. 1234–1240, 2020.

- [41] N. Kalchbrenner, E. Grefenstette, and P. Blunsom, “A convolutional neural network for modelling sentences,” arXiv preprint arXiv:1404.2188, 2014.

- [42] N. Reimers and I. Gurevych, “Sentence-bert: Sentence embeddings using siamese bert-networks,” arXiv preprint arXiv:1908.10084, 2019.