Fine-grained Visual-Text Prompt-Driven Self-Training for Open-Vocabulary Object Detection

Abstract

Inspired by the success of vision-language methods (VLMs) in zero-shot classification, recent works attempt to extend this line of work into object detection by leveraging the localization ability of pre-trained VLMs and generating pseudo labels for unseen classes in a self-training manner. However, since the current VLMs are usually pre-trained with aligning sentence embedding with global image embedding, the direct use of them lacks fine-grained alignment for object instances, which is the core of detection. In this paper, we propose a simple but effective fine-grained Visual-Text Prompt-driven self-training paradigm for Open-Vocabulary Detection (VTP-OVD) that introduces a fine-grained visual-text prompt adapting stage to enhance the current self-training paradigm with a more powerful fine-grained alignment. During the adapting stage, we enable VLM to obtain fine-grained alignment by using learnable text prompts to resolve an auxiliary dense pixel-wise prediction task. Furthermore, we propose a visual prompt module to provide the prior task information (i.e., the categories need to be predicted) for the vision branch to better adapt the pre-trained VLM to the downstream tasks. Experiments show that our method achieves the state-of-the-art performance for open-vocabulary object detection, e.g., 31.5% mAP on unseen classes of COCO.

Index Terms:

Open-vocabulary Object Detection, Self-training, Prompt Learning, Vision-languageI Introduction

The dominant object detection paradigm uses supervised learning to predict limited categories of the object. However, the existing object detection datasets usually contain only a few categories due to the time-consuming labeling procedure, e.g., 20 in PASCAL VOC [1], 80 in COCO [2]. Some methods [3] attempt to expand the categories to a larger scale by adding more labeled datasets. However, it is time-consuming and difficult to guarantee sufficient instances in each class due to the naturally long-tailed distribution. On the other hand, previous methods [4, 5, 6, 7] follow the setting of zero-shot detection and align the visual embeddings with the text embeddings generated from a pre-trained text encoder on base categories, but they still have a significant performance gap compared to the supervised counterpart.

Benefiting from the massive scale of datasets collected from the web [8, 9], recent vision-language pre-training models (VLM), e.g., CLIP [10], have shown a surprising zero-shot classification capability by aligning the image embeddings with corresponding caption. However, it is a challenging research direction to transfer this zero-shot classification ability to object detection in dense prediction framework, because fine-grained pixel-level alignment, which is essential for dense tasks, is missing in the current visual language pre-training models.

There exist some attempts to train an open-vocabulary detector by leveraging the zero-shot classification ability of a pre-trained VLM. The work [11] proposes a basic self-training pipeline, which utilizes the activation map of the noun tokens in the caption from the pre-trained VLM and generates the pseudo bounding-box label. However, existing OVD methods just take advantage of the global text-image alignment ability of VLMs, thus failing to capture dense text-pixel alignment and severely hindering the self-training performance of OVD tasks. Directly using them by activation map cannot fully adapt to downstream detection tasks, which require better dense representations. For example, as shown in the upper part of Fig. 1, directly utilizing the pre-trained VLM can only obtain a low-quality and incomplete dense score map for the dog in the input image, which is harmful for the next pseudo labeling stage. Recent works [12, 13] attempt to build generic object detection frameworks by scaling to larger label spaces, while they are costly required to acquire large-scale annotations from bigger datasets.

To achieve fine-grained alignment over dense pixels and avoid extra data cost, we propose VTP-OVD, a Visual-Text Prompt-driven self-training paradigm for Open-Vocabulary Detection (VTP-OVD), to improve the robustness and generalization capability. Inspired by the recent advance in learning-based prompting methods [14, 15] from Natural Language Processing (NLP) community, we design a novel prompt-driven self-training framework (Fig.2) for better adaption of the pre-trained VLM to new detection tasks. In detail, VTP-OVD designs a fine-grained visual-text prompt adapting stage to obtain more powerful dense alignments for better pseudo label generation by introducing an additional dense prediction task. Specifically in adapting stage, to reduce the domain gap of upstream and downstream tasks and obtain the semantic-aware visual embedding, we introduce the visual and text prompt modules into the learnable image encoder and text encoder of the original VLM respectively. The text prompts provide dense alignment task cues to enhance category embedding, and the visual prompt module aligns enhanced categories information to each pixel.

Furthermore, with the prompt-enhanced VLM after adapting stage, a better pseudo label generation strategy is proposed for novel classes by leveraging the non-base categories’ names as the label input and aligning each connect region of score map for each category. As far as we know, our VTP-OVD is the first work to employ a learnable prompt-driven adapting stage in OVD task to capture fine-grained pixel-wise alignment and therefore to generate better pseudo labels.

We evaluate our VTP-OVD on the popular object detection dataset COCO [2] under the well-known zero-shot setting, and further validate its generalization capability on PASCAL VOC [1], Objects365 [16] and LVIS [3] benchmarks. Experiment results show that our VTP-OVD achieves the state-of-the-art performance on detecting novel classes (without annotations), e.g., 31.5% mAP on the COCO dataset. Besides, VTP-OVD also outperforms other open-vocabulary detection (OVD) methods when directly adapting the model trained on COCO to perform open-vocabulary detection on the three other object detection datasets. To demonstrate the effectiveness of the two learnable prompt modules, further experiments are conducted to show that fine-tuned with these two modules, VTP-OVD can generate a better dense score map (4.3% mIoU higher) compared to directing using the pre-trained CLIP [10] model.

II Related Work

II-A Zero-shot Object Detection

Most zero-shot detection (ZSD) methods [4, 5, 6, 7] align the visual embeddings to the text embeddings of the corresponding base category generated from a pre-trained text encoder. Several methods introduce GCN [17], contrastive learning [18], and a novel ‘polarity loss’ [19] to bridge the gap between the visual embeddings and text embeddings. Inspired by the success of VLMs [10, 20], several methods attempt to perform ZSD by training with image captions or directly leveraging a pre-trained VLM. [21] propose to pre-train the CNN backbone and a Vision to Language Module (V2L) via grounding task on an image caption dataset, and then the whole architecture is fine-tuned with an additional RPN module. However, it still suffers from a large performance gap with the SOTA, and the domain of upstream and downstream datasets must remain similar to maintain performance [11]. Based on a pre-trained VLM, [22] distills the learned image embeddings of the cropped proposal regions to a student detector, [23] further introduces an inter-embedding relationship matching loss to instill inter-embedding relationships. While each proposal needs to be fed forward into the image encoder of VLM. which requires huge computation costs. [11] proposes a basic self-training pipeline based on ALBEF [20], which firstly utilizes Grad-CAM [24] to obtain dense activation region of specific words in the caption and then generate the pseudo bounding-box label by selecting the proposal that has the largest overlap with the activation region. However, most VLMs lack the ability to perform pixel-wise classification since it is pre-trained with the correspondence of text embedding and global visual token instead of pixel embeddings. Besides, the reliance on caption data limits the application of this generalization to some datasets without caption. Inspired by [25], our method can obtain the pseudo annotations with the pixel-alignment patches.

II-B Vision-language Pre-training

The pre-training tasks of Vision-and-Language Pre-training (VLP) models can be divided into two categories: image-text contrastive learning tasks and Language Modeling (LM) based tasks. The first category, e.g., CLIP [10] and ALIGN [8], aims to align the visual feature with textual feature in a cross-modal common semantic space. The other category, e.g., VisualBERT [26], UNITER [9], M6 [27], DALL-E [28], ERNIE-ViLG [29], employs LM-like objects, include both autoregressive LM (e.g., image captioning [30], VQA [31]) and masked LM (e.g., Masked Language/Region Modeling). Different from previous works that only focus on global feature alignment, our proposed model can achieve pixel-aware alignments via prompts and perform better in downstream tasks of pixel-wise dense prediction.

II-C Prompt Tuning

Freezing the pre-trained models with only tuning the soft prompts can benefit from efficient serving and matching the performance of full model tuning. Prompt tuning has been verified as an effective method to mitigate the gap between pre-training and fine-tuning. As a rapidly emerging field in NLP [14], prompt tuning is originally designed for probing knowledge in pre-trained language models [32] and now applied in various NLP tasks, e.g., language understanding [33], emotion detection [34] and generation [35]. Prompt tuning has now been extended to vision-language models. Instead of constructing hand-crafted prompts in CLIP [10], CoOp [15] proposes tuning soft prompts with unified context and class-specific context in downstream classification task. CPT [36] proposes colorful cross-modal prompt tuning to explicitly align natural language to fine-grained image regions. The main differences between our usage of learnable multi-modal prompts with the previous prompt tuning works lie in three aspects: 1) Unaligned upstream and downstream tasks; 2) Multi-modal; 3) Learnable prompts for self-training-based open-vocabulary object detection.

III Method

In this section, we first briefly introduce the VTP-OVD framework. Then we describe the details of different stages in VTP-OVD: a) learnable multi-modal prompts in fine-grained alignment stage, which are used to enable VL model to obtain fine-grained pixel-wise alignment ability; b) better pseudo label generation strategy in the pseudo labeling stage and c) the details of the final self-training stage.

Basic Notations: We construct a combined categories set (denoted as ) via extracting the categories from several large-scale detection datasets. The base (seen) categories, novel (unseen) categories, and non-base categories are denoted as , , (i.e., = ). The input image is represented as . We use and to represent the modified image encoder [37] and text encoder of the original VLM specifically.

III-A VTP-OVD Framework

Fig. 3 illustrates the overall pipeline of the proposed VTP-OVD. We use different colors to indicate the parameter fixing or requiring training as clarified in the upper right corner of Fig. 3. Firstly, at the fine-grained visual-text prompt adapting stage, by employing the learnable text prompt module and visual prompt module into the text encoder and image encoder , we can fine-tune the whole network under dense supervision of pseudo dense score map with other parameters fixed. Secondly, for pseudo labeling stage, we obtain the pseudo labels of non-base classes by leveraging the dense classification ability of the fine-tuned VL model and the location ability of a pre-trained (on base classes) Region Proposal Network (RPN) . Finally, in the final self-training stage, an open-vocabulary detector is further trained with the combination of the ground truth of base classes and the pseudo labels of non-base classes to fulfill the self-training pipeline. The complete training procedure can be found in Algorithm 1.

III-B Adapting Stage Via Multi-Modal Prompts

The crucial part of the self-training pipeline for open-vocabulary detection (OVD) lies in the pseudo labeling stage that determines the final detection performance of novel classes. To generate better pseudo labels for the detection task, our method first aligns each pixel with a category and then adopts a pre-trained RPN for bounding-box localization. Since most vision-language methods (VLM) are trained via the alignment between the whole image and the corresponding caption, they lack the dense alignment ability between pixels and categories. Thus in this section, we focus on modifying the pre-trained vision-language model (VLM) to enhance the current self-training paradigms with fine-grained adapting stage via a newly designed dense alignment loss function and learnable text/visual prompts. Note that to obtain the dense pixel-level visual embeddings instead of the global visual embedding, following [37], we modified the image encoder by removing the query and key embedding layers, which is denoted as in Fig. 3 (a).

III-B1 Dense Alignment Task

As shown in Fig. 3, we introduce a dense alignment task to fine-tune the VLM under the supervision of pseudo dense score map , where denotes the all categories, including multi dataset categories, and represents the number of . The is generated by calculating the similarity of pixel-wise visual embeddings of input image with each category via the original VLM.

The dense score map is calculated by:

| (1) |

where denotes the inner product operation, denotes the normalization operation. and represent the visual embeddings and text embeddings specifically. To reduce the domain gap of upstream and downstream tasks and obtain the semantic-aware visual embedding, we further introduce the visual and text prompt modules into the learnable image encoder and text encoder of the original VLM respectively. Benefit from the same task form as upstream tasks, most previous prompt-based tuning works [14, 38, 15] still performs well on downstream tasks with different data domains. However, our VTP-OVD utilizes learnable multi-modal prompts to obtain the fine-grained feature alignment, which is different from the upstream global alignment in the task domain. The objective of dense alignment stage is:

| (2) |

where represents the cross-entropy loss. Similar to Eq. (1), the model prediction with the image input is calculated as:

| (3) |

where and denote the image feature and text feature integreated with prompt embeddings.

III-B2 Text Prompt

Prompt tuning has been verified as an effective method to probe knowledge in pre-trained language models [32] and applied in various NLP tasks [33, 35]. For example, the hand-crafted text prompts, e.g., ‘a photo of a {}.’, have been adopted to adapt the pre-trained VLM to the different downstream tasks [10, 22]. Inspired by these works, we further introduce a learnable text prompt encoder to provide dense alignment task cues for enhancing categories embeddings since downstream text-pixel alignment task is quite different from the upstream global text-image alignment task.

As shown in Fig. 4 (a), the text prompt encoder maps the learnable prompt tokens into the prompt embeddings . denotes the total number of prompt tokens. We believe that the should depend on each other since the words in a natural sentence always have a strong contextual relationship. Therefore for the structure of the proposed text prompt encoder module, it contains a bidirectional long-short term memory network (LSTM) to get the contextual information followed by a ReLU activated two-layer multilayer perceptron (MLP) [39]:

| (4) |

After that, we concatenate the same text prompt embeddings with each class token to obtain the prompt-enhanced text embedding by:

| (5) |

where denotes the concatenation operation, and denotes the length of prompt tokens added before the category token.

III-B3 Visual Prompt

As shown in Fig. 4 (b), the text prompts employ dense alignment task cues to enhance category embedding. Therefore, to align the enhanced categories information to each pixel, a visual prompt encoder is built upon the cross-attention mechanism to generate semantic-aware visual embedding for each pixel to improve dense alignment. Similar to ActionCLIP [40], which utilizes positional embeddings as visual prompt to provide additional temporal information, ours is also formed as a post-network prompt module [40] to provide additional semantic cues and we only finetune the visual prompt module while fixing the visual encoder. By taking each pixel embedding in visual features as the input of the query and the text embeddings of all categories as the input of key and value, the cross-attention block output the semantic-aware visual prompts for each pixel. Then they’re concatenated with the visual features followed by an 1-layer multilayer perceptron (MLP) to obtain the semantic-aware visual embeddings (see Fig. 4 (b)):

| (6) | ||||

where and represent the concatenation operation and the feature dimension of text embeddings . denotes the 1-layer multi-layer perceptron to reduce back the feature dimension.

III-C Pseudo Label Generation

Based on the more precise dense score map generated by the fine-tuned VLM with learnable text and visual prompts, we can obtain the non-base classes’ pseudo labels by additionally leveraging the location ability of a pre-trained region proposal network (RPN) (shown in Fig. 3(b)). Note that is only trained on base classes, and the experiments in [22] already demonstrate that training only on the base categories can achieve comparable recall to average recall of the overall categories. Previous pseudo labeling generation strategy [11] used the objects of interest in the caption, which not only harms the transfer ability to the detection datasets without the caption but also is limited to the uncompleted description of caption data. For example, such a strategy cannot generate the pseudo labels of classes that are not included in the caption.

To address these issues, We directly use non-base classes’ names as the input of fine-tuned text encoder . Besides, to better distinguish the background classes, instead of directly using the word "background", we treat all the base classes as background since we already have the ground-truth annotation of these classes. Then we obtain the pseudo dense score map of non-base classes from the fine-tuned VLM with the procedure same to Eq. (3). For each image , we firstly compute the connected regions for - non-base category on by setting a similarity threshold , then we adopt the intersection of union (IoU) of - proposal from RPN and as confidence score:

| (7) |

where and the denotes the confidence score that the proposal belongs to the - non-base category for image . Finally, a hard score threshold is adopted on the confidence score to filter out pseudo boxes with less confidence.

Self-training stage. After obtaining the pseudo bounding-box labels for non-base classes, together with the ground-truth annotations of base classes, we are able to train a final open-vocabulary detector to fulfill the self-training pipeline (shown in Fig. 3(c)) via Faster-RCNN [41]. We build the by replacing the last classification layer with the text embeddings generated by the text encoder on all categories . The objective of self-training stage is calculated as:

| (8) |

Where the and denote the cross-entropy loss for classification head and L1-loss for regression head, and represent the model prediction and annotations combined with pseudo labels of non-base classes and the ground-truth of base classes. The trained detector can then transfer to other detection datasets by providing the class names.

IV Experiments

IV-A Open-Vocabulary Object Detection Setups

Benchmark Setting: We benchmark on the widely-used object detection dataset COCO 2017 [2]. Following the well-known settings [4] adopted by many OVD methods, we divide the categories used in COCO into 48 base (seen) classes and 17 novel (unseen) classes. Each method can only be trained on annotations of the base categories and then predicts the novel categories without seeing any annotations of these categories. We report the detection performance of both the base and novel classes during inference, as the generalized settings used in [21]. We also evaluate the generalization ability of the trained detector by directly transferring it to three other object detection datasets, including PASCAL VOC [1], LVIS [3] and Objects365 [16].

Evaluation Metric: We use mean average precision (mAP) with IoU threshold 0.5 as the evaluation metric. We pay more attention to the novel class performance since we aim to build an open-vocabulary detector and the annotations of base classes are already provided.

| Method | VL Model | Using COCO caption | Novel | Base | Overall |

| SB[4] | ✗ | ✗ | 0.31 | 29.2 | 24.9 |

| LAB[4] | ✗ | ✗ | 0.22 | 20.8 | 18.0 |

| DSES[4] | ✗ | ✗ | 0.27 | 26.7 | 22.1 |

| DELO[43] | ✗ | ✗ | 3.41 | 13.8 | 13.0 |

| PL[44] | ✗ | ✗ | 4.12 | 35.9 | 27.9 |

| OVR-CNN [21] | - | ✓ | 22.8 | 46.0 | 39.9 |

| ViLD* [22] | CLIP | ✗ | 27.6 | 59.5 | 51.3 |

| Self-training based open-vocabulary detection methods | |||||

| OVD-ALBEF[11] | ALBEF | ✓ | 30.8 | 46.1 | 42.1 |

| Detic[12] | - | ✗ | 27.8 | 47.1 | 45.0 |

| VTP-OVD (w/o FT) | CLIP | ✗ | 29.8 | 51.8 | 46.1 |

| VTP-OVD (Ours) | CLIP | ✗ | 31.5 | 51.9 | 46.6 |

| Method | VOC | Objects365 | LVIS | COCO |

|---|---|---|---|---|

| OVR-CNN | 52.9 | 4.6 | 5.2 | 39.9 |

| OVD-ALBEF | 59.2 | 6.9 | 8 | 42.1 |

| Ours | 61.1 | 7.4 | 10.6 | 46.6 |

| H.P | T.P | V.P | S.T | mAP(%) | mIoU (%) |

|---|---|---|---|---|---|

| ✗ | ✗ | ✗ | ✗ | 22.5 | 30.9 |

| ✓ | ✗ | ✗ | ✗ | 23.7+1.2 | 31.1+0.2 |

| ✗ | ✓ | ✗ | ✗ | 25.4+2.9 | 31.3+0.4 |

| ✗ | ✗ | ✓ | ✗ | 25.1+2.6 | 33.1+2.2 |

| ✗ | ✓ | ✓ | ✗ | 26.0+3.5 | 35.4+4.5 |

| ✗ | ✓ | ✓ | ✓ | 31.5+9.0 | / |

IV-B Implementation Details

We utilize a pre-trained CLIP (RN5016) [10] model as the VLM. Note that our method is compatible with conventional VL models. For simplicity, we take CLIP as an example. All the detection models are implemented on the mmdetection [45] codebase and follow the default setting as Mask-RCNN (ResNet50) [46], 1X schedule unless otherwise mentioned. At the adapting stage, the prompt-enhanced CLIP is trained for 5 epochs, with the text prompt learning rate set to 1e-1 and the visual prompt set to 1e-5 separately. For the pseudo labeling stage, the RPN is trained only on the base classes in the 2X schedule. The objectness score threshold for RPN is set to 0.98. The similarity threshold and score threshold are set to 0.6 and 0.4. For the self-training stage, following the default setting, we adopt a Mask-RCNN (ResNet50) [46] with the last classification layer replaced by class embeddings output by the text encoder. We train the detector for 12 epochs, with the learning rate decreased by a factor of 0.1 at 8 and 11 epochs with 8 V100 GPUs. The initial learning rate is set to 0.04 with batch size 32, and the weight decay is set to 1e-4. We extract the categories of large-scale object detection dataset (i.e., LVIS [3] and Objects365 [16]) as the combined categories set , which contains about 1k categories. We generate non-base pseudo labels for training, while evaluate only on novel categories and base categories following [21].

| Embedding | LSTM | MLP | mAP(%) | |

|---|---|---|---|---|

| ✗ | ✗ | ✗ | 22.5 | |

| ✓ | ✓ | ✗ | 23.6+1.1 | |

| ✓ | ✗ | ✓ | 24.3+1.8 | |

| ✓ | ✓ | ✓ | 25.4+2.9 |

IV-C Main Results

COCO Dataset. We compare our VTP-OVD with existing open-vocabulary methods on the COCO dataset [2]. As shown in Table I, VTP-OVD achieves the state-of-the-art performance (i.e., 31.5% mAP) on the novel categories of the COCO dataset and 4.5% mAP improvement on overall categories compared to another self-training based method OVD-ALBEF [11]. Besides, by comparing with the VTP-OVD (w/o FT), e.g., 31.5% mAP vs. 29.8% mAP, we further demonstrate the necessity of the fine-grained adapting stage with learnable visual and text prompts for dense alignment tasks. Note that we utilize the basic vision-language model (VLM) CLIP [10] and do not use the caption data, guaranteeing the transfer ability to other VLMs or pre-training datasets without caption information. Clarification should be made that self-training-based methods (e.g., OVD-ALBEF [11] and ours) usually achieve slightly worse on base classes compared to the knowledge-distillation (KD) method (e.g., ViLD [22]). We attribute this to the reason that self-training methods make the model optimize more towards novel classes via generating massive pseudo labels on them, while KD-based methods try to keep the performance of the base class by only distilling the classification ability.

Generalization Abilities. To further demonstrate the open-vocabulary ability of the detector trained through the VTP-OVD pipeline, we directly transfer the final detection model trained on COCO datasets, to other detection datasets, including PASCAL VOC [1], Object365 [16] and LVIS [3]. Benefit from the training on non-base pseudo labels, experimental results in Table II show that our VTP-OVD achieves the best generalization ability even adopted to the datasets with much more categories than the pre-trained dataset, i.e., 81 classes in COCO vs. 1203 classes in LVIS. Note that we do not compare with ViLD [22] since it does not provide either this experiment result or the code.

IV-D Ablation Study

What’s the effect of the learnable prompt modules for pseudo label generation?

We introduce two learnable prompt modules to obtain the dense alignment ability, which is important for pseudo bounding-box label generation. To analyze the effect of proposed visual and text prompts, we conduct the quantitative experiments with different combinations of prompt modules to show the quality of pseudo labels (with mAP) and dense score map (with mIoU on novel classes). As shown in Table III, adopting hand-crafted prompts improves the baseline (without prompt) by 1.2% mAP increment on pseudo labels’ quality, while adding text and visual prompts separately gain additional 1.7% and 1.4% increment, respectively. Combining visual and text prompts makes better performance (+3.5%) and the best dense score map (35.4% mIoU). After adopting the self-training stage, it reaches the state-of-the-art detection performance (31.5% mAP) on COCO novel classes.

| CATEGORY TOKEN POSITION | FRONT | MID | BACK |

|---|---|---|---|

| (, ) | (0,1) | (1,2) | (1,1) |

| mAP (%) | 25.4 | 25.5 | 25.6 |

| PROMPT TOKEN NUMBER | 1 | 3 | 5 |

| (, ) | (1,1) | (3,3) | (5,5) |

| mAP (%) | 25.6 | 25.4 | 25.3 |

What’s the effect of different text prompt structures?

As shown in Table IV, we evaluate the effect of different structures of only the text prompts. Based on the input learned embeddings, separately using LSTM and MLP obtain a relatively small improvement due to the lack of the contextual information of prompt tokens. Adopting both LSTM and MLP for the association of prompt tokens achieves the best performance, i.e., 25.4% mAP for generated pseudo labels on novel classes of COCO. The ablations of the category token position and prompt token number which are decided by and in Eq.4 are shown in Table V.

How the pseudo labeling robust to hyper parameters?

As shown in Fig. 5, we conduct the ablation studies on the two most important hyper parameters, including the similarity threshold for dense score map to compute connected regions and objectness threshold for RPN to filter the low-confident proposals, in fine-tuning stage. Note that when we ablation one parameter, we keep the other fixed as the default value. Observation can be made that our pseudo labeling strategy is robust to this two parameters and achieves the highest when similarity threshold and objectness threshold set to 0.6 and 0.98 respectively. Low similarity and objectness threshold bring much false positives while high similarity and objectness threshold decrease the overall recall.

IV-E Qualitative Results

Qualitative examples. We visualize the final detection performance of VTP-OVD on the novel classes of COCO dataset and the transfer performance on Objects365, LVIS, and Pascal VOC in Fig. 6. We can observe that our VTP-OVD can obtain high-quality bounding-boxes for novel classes on COCO even without any annotation of these categories. Besides, as shown in the third row of Fig. 6, our VTP-OVD can detect rare classes like fireplug and polar bear, demonstrating its open-vocabulary detection ability.

However, we also find our VTP-OVD fails in detecting several novel categories, including skateboard, snowboard, etc, during the pseudo labeling stage and self-training stage. By observing the dense score map and the pseudo labels of skateboard class in Fig. 7, we attribute this phenomenon to the generation of wrong pseudo labels.

To explain the reason, we think that some objects are often related to the environment, such as skateboards, skateboarders, and skateboard playground often appear together, thus trained with the alignment based on global image information and text embeddings, current vision-language model usually cannot distinguish these objects from other objects in same scene.

Effect of prompt modules. In Fig. 8, we visualize the effect of proposed prompt modules on the generation of the pseudo labels of novel classes. By comparing the dense score maps in the third and sixth column, observation can be made that through learnable visual-text prompts, the VLM can achieve a better dense alignment and improve the quality of pseudo labels. Specifically, adopting the visual prompt tends to fill the dense alignment of the object, while adopting the text prompt usually explores the new classes or objects. Combining these two prompts makes the better performance to generate more precise pseudo bounding-boxes.

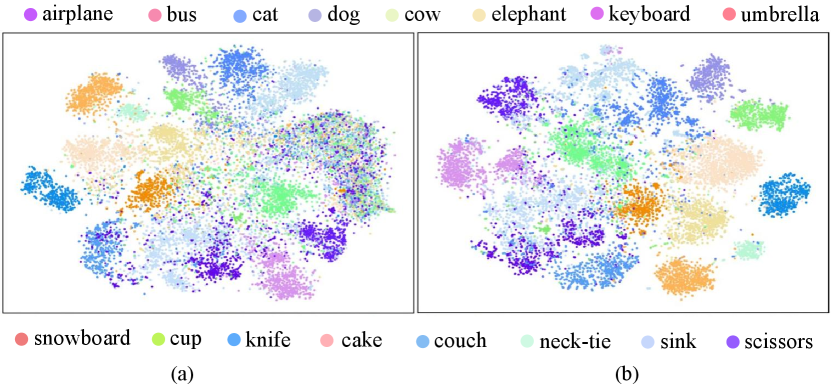

We also visualize the distribution of the pixel embeddings generated by the vision-language model CLIP (with or without learnable prompt modules) via t-SNE [47] on novel classes of COCO dataset in Fig. 9. Observation can be made that adopting learnable prompt modules helps cluster the pixel embeddings in the same class and separate pixel embeddings from different categories, demonstrating their effects on dense alignments.

V Conclusions

In this paper, we propose a novel open-vocabulary pipeline named VTP-OVD. VTP-OVD introduces a new fine-tuning stage to enhance the self-training paradigm with dense alignments by adopting two learnable visual and text prompt modules. Experimental results show that VTP-OVD achieves the state-of-the-art performance on the novel classes of COCO datasets and the best transfer performance when directly adapting the model trained on COCO to PASCAL VOC, Object365, and LVIS datasets. Additional experiments also show that after fine-tuning with two learnable prompt modules, VTP-OVD obtains a more precise dense score map (4.3% higher on mIoU) on novel classes. Nevertheless, VTP-OVD is a general pipeline of adopting the pre-trained vision-image encoder to dense prediction tasks, which can be easily extended to other tasks, e.g., open-vocabulary instance segmentation. We hope VTP-OVD can serve as a strong baseline for future research on different open-vocabulary tasks.

VI Acknowledments

This work was supported in part by National Key R&D Program of China under Grant No. 2020AAA0109700, Guangdong Outstanding Youth Fund (Grant No. 2021B1515020061), Shenzhen Science and Technology Program (Grant No. RCYX20200714114642083), Shenzhen Fundamental Research Program(Grant No. JCYJ20190807154211365), Nansha Key RD Program under Grant No.2022ZD014 and Sun Yat-sen University under Grant No. 22lgqb38 and 76160-12220011. We thank MindSpore for the partial support of this work, which is a new deep learning computing framwork111https://www.mindspore.cn/.

References

- [1] M. Everingham, L. Van Gool, C. K. Williams, J. Winn, and A. Zisserman, “The pascal visual object classes (voc) challenge,” International journal of computer vision, vol. 88, no. 2, pp. 303–338, 2010.

- [2] T. Lin, M. Maire, S. J. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft COCO: common objects in context,” in Computer Vision - ECCV 2014 - 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V, ser. Lecture Notes in Computer Science, D. J. Fleet, T. Pajdla, B. Schiele, and T. Tuytelaars, Eds., vol. 8693. Springer, 2014, pp. 740–755.

- [3] A. Gupta, P. Dollar, and R. Girshick, “Lvis: A dataset for large vocabulary instance segmentation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 5356–5364.

- [4] A. Bansal, K. Sikka, G. Sharma, R. Chellappa, and A. Divakaran, “Zero-shot object detection,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 384–400.

- [5] B. Demirel, R. G. Cinbis, and N. Ikizler-Cinbis, “Zero-shot object detection by hybrid region embedding,” arXiv preprint arXiv:1805.06157, 2018.

- [6] S. Rahman, S. Khan, and N. Barnes, “Transductive learning for zero-shot object detection,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 6082–6091.

- [7] N. Hayat, M. Hayat, S. Rahman, S. Khan, S. W. Zamir, and F. S. Khan, “Synthesizing the unseen for zero-shot object detection,” in Proceedings of the Asian Conference on Computer Vision, 2020.

- [8] C. Jia, Y. Yang, Y. Xia, Y.-T. Chen, Z. Parekh, H. Pham, Q. V. Le, Y. Sung, Z. Li, and T. Duerig, “Scaling up visual and vision-language representation learning with noisy text supervision,” in International Conference on Machine Learning, 2021.

- [9] Y. Chen, L. Li, L. Yu, A. E. Kholy, F. Ahmed, Z. Gan, Y. Cheng, and J. Liu, “UNITER: universal image-text representation learning,” in Computer Vision - ECCV 2020 - 16th European Conference, Glasgow, UK, August 23-28, 2020, Proceedings, Part XXX, ser. Lecture Notes in Computer Science, A. Vedaldi, H. Bischof, T. Brox, and J. Frahm, Eds., vol. 12375. Springer, 2020, pp. 104–120.

- [10] A. Radford, J. W. Kim, C. Hallacy, A. Ramesh, G. Goh, S. Agarwal, G. Sastry, A. Askell, P. Mishkin, J. Clark et al., “Learning transferable visual models from natural language supervision,” arXiv preprint arXiv:2103.00020, 2021.

- [11] M. Gao, C. Xing, J. C. Niebles, J. Li, R. Xu, W. Liu, and C. Xiong, “Towards open vocabulary object detection without human-provided bounding boxes,” arXiv preprint arXiv:2111.09452, 2021.

- [12] X. Zhou, R. Girdhar, A. Joulin, P. Krähenbühl, and I. Misra, “Detecting twenty-thousand classes using image-level supervision,” arXiv preprint arXiv:2201.02605, 2022.

- [13] C. Feng, Y. Zhong, Z. Jie, X. Chu, H. Ren, X. Wei, W. Xie, and L. Ma, “Promptdet: Expand your detector vocabulary with uncurated images,” arXiv preprint arXiv:2203.16513, 2022.

- [14] P. Liu, W. Yuan, J. Fu, Z. Jiang, H. Hayashi, and G. Neubig, “Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing,” arXiv preprint arXiv:2107.13586, 2021.

- [15] K. Zhou, J. Yang, C. C. Loy, and Z. Liu, “Learning to prompt for vision-language models,” arXiv preprint arXiv:2109.01134, 2021.

- [16] S. Shao, Z. Li, T. Zhang, C. Peng, G. Yu, X. Zhang, J. Li, and J. Sun, “Objects365: A large-scale, high-quality dataset for object detection,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 8430–8439.

- [17] C. Yan, Q. Zheng, X. Chang, M. Luo, C.-H. Yeh, and A. G. Hauptman, “Semantics-preserving graph propagation for zero-shot object detection,” IEEE Transactions on Image Processing, vol. 29, pp. 8163–8176, 2020.

- [18] C. Yan, X. Chang, M. Luo, H. Liu, X. Zhang, and Q. Zheng, “Semantics-guided contrastive network for zero-shot object detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- [19] S. Rahman, S. Khan, and N. Barnes, “Polarity loss: Improving visual-semantic alignment for zero-shot detection,” IEEE Transactions on Neural Networks and Learning Systems, pp. 1–13, 2022.

- [20] J. Li, R. Selvaraju, A. Gotmare, S. Joty, C. Xiong, and S. C. H. Hoi, “Align before fuse: Vision and language representation learning with momentum distillation,” Advances in Neural Information Processing Systems, vol. 34, 2021.

- [21] A. Zareian, K. D. Rosa, D. H. Hu, and S.-F. Chang, “Open-vocabulary object detection using captions,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 14 393–14 402.

- [22] X. Gu, T.-Y. Lin, W. Kuo, and Y. Cui, “Open-vocabulary object detection via vision and language knowledge distillation,” arXiv preprint arXiv:2104.13921, vol. 2, 2021.

- [23] H. Bangalath, M. Maaz, M. U. Khattak, S. H. Khan, and F. Shahbaz Khan, “Bridging the gap between object and image-level representations for open-vocabulary detection,” Advances in Neural Information Processing Systems, vol. 35, pp. 33 781–33 794, 2022.

- [24] R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra, “Grad-cam: Visual explanations from deep networks via gradient-based localization,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 618–626.

- [25] Z. Gu, S. Zhou, L. Niu, Z. Zhao, and L. Zhang, “From pixel to patch: Synthesize context-aware features for zero-shot semantic segmentation,” IEEE Transactions on Neural Networks and Learning Systems, pp. 1–15, 2022.

- [26] L. H. Li, M. Yatskar, D. Yin, C.-J. Hsieh, and K.-W. Chang, “Visualbert: A simple and performant baseline for vision and language,” Preprint arXiv:1908.03557, 2019.

- [27] J. Lin, R. Men, A. Yang, C. Zhou, M. Ding, Y. Zhang, P. Wang, A. Wang, L. Jiang, X. Jia et al., “M6: A chinese multimodal pretrainer,” Preprint arXiv:2103.00823, 2021.

- [28] A. Ramesh, M. Pavlov, G. Goh, S. Gray, C. Voss, A. Radford, M. Chen, and I. Sutskever, “Zero-shot text-to-image generation,” Preprint arXiv:2102.12092, 2021.

- [29] H. Zhang, W. Yin, Y. Fang, L. Li, B. Duan, Z. Wu, Y. Sun, H. Tian, H. Wu, and H. Wang, “Ernie-vilg: Unified generative pre-training for bidirectional vision-language generation,” arXiv preprint arXiv:2112.15283, 2021.

- [30] K. Fu, J. Li, J. Jin, and C. Zhang, “Image-text surgery: Efficient concept learning in image captioning by generating pseudopairs,” IEEE Transactions on Neural Networks and Learning Systems, vol. 29, no. 12, pp. 5910–5921, 2018.

- [31] Z. Yu, J. Yu, C. Xiang, J. Fan, and D. Tao, “Beyond bilinear: Generalized multimodal factorized high-order pooling for visual question answering,” IEEE Transactions on Neural Networks and Learning Systems, vol. 29, no. 12, pp. 5947–5959, 2018.

- [32] F. Petroni, T. Rocktäschel, P. Lewis, A. Bakhtin, Y. Wu, A. H. Miller, and S. Riedel, “Language models as knowledge bases?” arXiv preprint arXiv:1909.01066, 2019.

- [33] T. Schick and H. Schütze, “It’s not just size that matters: Small language models are also few-shot learners,” arXiv preprint arXiv:2009.07118, 2020.

- [34] R. Mao, Q. Liu, K. He, W. Li, and E. Cambria, “The biases of pre-trained language models: An empirical study on prompt-based sentiment analysis and emotion detection,” IEEE Transactions on Affective Computing, pp. 1–11, 2022.

- [35] X. L. Li and P. Liang, “Prefix-tuning: Optimizing continuous prompts for generation,” arXiv preprint arXiv:2101.00190, 2021.

- [36] Y. Yao, A. Zhang, Z. Zhang, Z. Liu, T.-S. Chua, and M. Sun, “Cpt: Colorful prompt tuning for pre-trained vision-language models,” 2021.

- [37] C. Zhou, C. C. Loy, and B. Dai, “Denseclip: Extract free dense labels from clip,” arXiv preprint arXiv:2112.01071, 2021.

- [38] P. Gao, S. Geng, R. Zhang, T. Ma, R. Fang, Y. Zhang, H. Li, and Y. Qiao, “Clip-adapter: Better vision-language models with feature adapters,” arXiv preprint arXiv:2110.04544, 2021.

- [39] X. Liu, Y. Zheng, Z. Du, M. Ding, Y. Qian, Z. Yang, and J. Tang, “Gpt understands, too,” arXiv preprint arXiv:2103.10385, 2021.

- [40] M. Wang, J. Xing, and Y. Liu, “Actionclip: A new paradigm for video action recognition,” arXiv preprint arXiv:2109.08472, 2021.

- [41] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” Advances in neural information processing systems, vol. 28, pp. 91–99, 2015.

- [42] G. Ghiasi, Y. Cui, A. Srinivas, R. Qian, T.-Y. Lin, E. D. Cubuk, Q. V. Le, and B. Zoph, “Simple copy-paste is a strong data augmentation method for instance segmentation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 2918–2928.

- [43] P. Zhu, H. Wang, and V. Saligrama, “Don’t even look once: Synthesizing features for zero-shot detection,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 11 693–11 702.

- [44] S. Rahman, S. Khan, and N. Barnes, “Improved visual-semantic alignment for zero-shot object detection,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 07, 2020, pp. 11 932–11 939.

- [45] K. Chen, J. Wang, J. Pang, Y. Cao, Y. Xiong, X. Li, S. Sun, W. Feng, Z. Liu, J. Xu, Z. Zhang, D. Cheng, C. Zhu, T. Cheng, Q. Zhao, B. Li, X. Lu, R. Zhu, Y. Wu, J. Dai, J. Wang, J. Shi, W. Ouyang, C. C. Loy, and D. Lin, “MMDetection: Open mmlab detection toolbox and benchmark,” arXiv preprint arXiv:1906.07155, 2019.

- [46] K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask r-cnn,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 2961–2969.

- [47] L. Van der Maaten and G. Hinton, “Visualizing data using t-sne.” Journal of machine learning research, vol. 9, no. 11, 2008.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7c38905d-c352-47fa-8d7e-026ff0127d93/longyanxin_new.jpg) |

Yanxin Long is a third-year master in the School of Intelligent Systems Engineering, Sun Yat-Sen University. He works at the Human Cyber Physical Intelligence Integration Lab, Guangzhou under the supervision of Prof. Xiaodan Liang. Before that, He received his Bachelor Degree from the Comunication College, Xidian University in 2020. His research interests include 2D detection and vision-and-language understanding. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7c38905d-c352-47fa-8d7e-026ff0127d93/hanjianhua.jpg) |

Jianhua Han received the Bachelor Degree in 2016 and Master Degree in 2019 from Shanghai Jiao Tong University, China. He is currently a re-searcher with the Noahs Ark Laboratory, Huawei Technologies Co ., Ltd. His research interests lie primarily in deep learning and computer vision. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7c38905d-c352-47fa-8d7e-026ff0127d93/huangrunhui.jpg) |

Runhui Huang is a two-year master in the School of Intelligent Systems Engineering, Sun Yat-sen University. He works at the Human Cyber Physical Intelligence Integration Lab, Guangzhou under the supervision of Prof. Xiaodan Liang. Before that, he received the Bachelor Degree from the School of Intelligent Systems Engineering, Sun Yat-sen University in 2021. His reasearch interests include vision-and-language pre-training. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7c38905d-c352-47fa-8d7e-026ff0127d93/xuhang.jpg) |

Hang Xu is currently a senior researcher in Huawei Noah Ark Lab. He received his BSc in Fudan University in 2012 and Ph.D. in Hong Kong University in Statistics in 2018. His research Interest includes machine Learning, vision-language models, object detection and AutoML. He has published 50+ papers in top-tier AI conferences such as NeurIPS, CVPR, ICCV, ICLR and some statistics journals, e.g. CSDA, Statistical Computing. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7c38905d-c352-47fa-8d7e-026ff0127d93/zhuyi.jpg) |

Yi Zhu received the B.S. degree in software engineering from Sun Yat-sen University, Guangzhou, China, in 2013. Since 2015, she has been a Ph.D. student in computer science at the School of Electronic, Electrical, and Communication Engineering, University of Chinese Academy of Sciences, Beijing, China. Her current research interests include object recognition, scene understanding, weakly supervised learning and visual reasoning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7c38905d-c352-47fa-8d7e-026ff0127d93/xuchunjing.png) |

Chunjing Xu received his Bachelor’s degree in Math from Wuhan University 1999, Master degree in Math from Peking University 2002, and Ph.D. from Chinese University of Hong Kong 2009. He was Assistant Professor and then Associate Professor at Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences. He became director of the computer vision lab in Noah’s Ark lab, Central research institute in 2017. His main research interests focus on machine learning and computer vision. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7c38905d-c352-47fa-8d7e-026ff0127d93/x10.png) |

Xiaodan Liang is currently an Associate Professor at Sun Yat-sen University. She was a postdoc researcher in the machine learning department at Carnegie Mellon University, working with Prof. Eric Xing, from 2016 to 2018. She received her PhD degree from Sun Yat-sen University in 2016, advised by Liang Lin. She has published several cutting-edge projects on human-related analysis, including human parsing, pedestrian detection and instance segmentation, 2D/3D human pose estimation and activity recognition. |