Feynman checkers:

towards algorithmic quantum theory

Abstract

We survey and develop the most elementary model of electron motion introduced by R.Feynman. In this game, a checker moves on a checkerboard by simple rules, and we count the turns. Feynman checkers are also known as a one-dimensional quantum walk or an Ising model at imaginary temperature. We solve mathematically a problem by R.Feynman from 1965, which was to prove that the discrete model (for large time, small average velocity, and small lattice step) is consistent with the continuum one. We study asymptotic properties of the model (for small lattice step and large time) improving the results by J.Narlikar from 1972 and by T.Sunada–T.Tate from 2012. For the first time we observe and prove concentration of measure in the small-lattice-step limit. We perform the second quantization of the model.

Keywords and phrases. Feynman checkerboard, quantum walk, Ising model, Young diagram, Dirac equation, stationary phase method

MSC2010: 82B20, 11L03, 68Q12, 81P68, 81T25, 81T40, 05A17, 11P82, 33C45.

1 Introduction

We survey and develop the most elementary model of electron motion introduced by R. Feynman (see Figure 1). In this game, a checker moves on a checkerboard by simple rules, and we count the turns (see Definition 2). Feynman checkers can be viewed as a one-dimensional quantum walk, or an Ising model, or count of Young diagrams of certain type.

|

|

|

1.1 Motivation

The simplest way to understand what is the model about is the classical double-slit experiment (see Figure 2). In this experiment, a (coherent) beam of electrons is directed towards a plate pierced by two parallel slits, and the part of the beam passing through the slits is observed on a screen behind the plate. If one of the slits is closed, then the beam illuminates a spot on the screen. If both slits are open, one would expect a larger spot, but in fact one observes a sequence of bright and dark bands (interferogram).

This shows that electrons behave like a wave: the waves travel through both slits, and the contributions of the two paths either amplify or cancel each other depending on the final phases.

Further, if the electrons are sent through the slits one at time, then single dots appear on the screen, as expected. Remarkably, however, the same interferogram with bright and dark bands emerges when the electrons are allowed to build up one by one. One cannot predict where a particular electron hits the screen; all we can do is to compute the probability to find the electron at a given place.

The Feynman sum-over-paths (or path integral) method of computing such probabilities is to assign phases to all possible paths and to sum up the resulting waves (see [11, 12]). Feynman checkers (or Feynman checkerboard) is a particularly simple combinatorial rule for those phases in the case of an electron freely moving (or better jumping) along a line.

1.2 Background

The beginning. The checkers model was invented by R. Feynman in 1940s [43] and first published in 1965 [12]. In Problem 2.6 there, a function on a lattice of small step was constructed (called kernel; see (2)) and the following task was posed:

If the time interval is very long () and the average velocity is small [], show that the resulting kernel is approximately the same as that for a free particle [given in Eq. (3-3)], except for a factor .

Mathematically, this means that the kernel (divided by ) asymptotically equals free-particle kernel (24) (this is Eq. (3-3) from [12]) in the triple limit when time tends to infinity, whereas the average velocity and the lattice step tend to zero (see Table 1 and Figure 3). Both scaling by the lattice step and tending it to zero were understood, otherwise the mentioned ‘‘exceptional’’ factor would be different (see Example 4). We show that the assertion, although incorrect literally, holds under mild assumptions (see Corollary 5).

Although the Feynman problem might seem self-evident for theoretical physicists, even the first step of a mathematical solution (disproving the assertion as stated) is not found in literature. As usual, the difficulty is to prove the convergence rather than to guess the limit.

| propagator | continuum | lattice | context | references |

| free-particle kernel | (24) | - | quantum mechanics | [12, (3-3)] |

| spin- retarded propagator | (26),(27) | (2) | relativistic | cf. [23, (13)] |

| quantum mechanics | and [12, (2-27)] | |||

| spin- Feynman propagator | (34),(35) | (32) | quantum field theory | cf. [2, §9F] |

In 1972 J. Narlikar discovered that the above kernel reproduces the spin- retarded propagator in the different limit when the lattice step tends to zero but time stays fixed [36] (see Table 1, Figures 4 and 1, Corollary 6). In 1984 T.Jacobson–L.Schulman repeated this derivation, applied stationary phase method among other bright ideas, and found the probability of changing the movement direction [23] (cf. Theorem 6). The remarkable works of that period contain no mathematical proofs, but approximate computations without estimating the error.

Ising model. In 1981 H. Gersch noticed that Feynman checkers can be viewed as a -dimensional Ising model with imaginary temperature or edge weights (see §2.2 and [18], [23, §3]). Imaginary values of these quantities are usual in physics (e.g., in quantum field theory or in alternating current networks). Due to the imaginarity, contributions of most configurations cancel each other, which makes the model highly nontrivial in spite of being -dimensional. In particular, the model exhibits a phase transition (see Figures 1 and 5). Surprisingly, the latter seems to have never been reported before. Phase transitions were studied only in more complicated -dimensional Ising models [25, §III], [35], in spite of a known equivalent result, which we are going to discuss now (see Theorem 1(B) and Corollary 3).

Quantum walks. In 2001 A. Ambainis, E. Bach, A. Nayak, A. Vishwanath, and J. Watrous performed a breakthrough [1]. They studied Feynman checkers under names one-dimensional quantum walk and Hadamard walk; although those cleverly defined models were completely equivalent to Feynman’s simple one. They computed the large-time limit of the model (see Theorem 2). They discovered several striking properties having sharp contrast with both continuum theory and the classical random walks. First, the most probable average electron velocity in the model equals of the speed of light, and the probability of exceeding this value is very small (see Figures 5 and 1 to the left and Theorem 1(B)). Second, if an absorbing boundary is put immediately to the left of the starting position, then the probability that the electron is absorbed is . Third, if an additional absorbing boundary is put at location , the probability that the electron is absorbed to the left actually increases, approaching in the limit . Recall that in the classical case both absorption probabilities are . In addition, they found many combinatorial identities and expressed the above kernel through the values of Jacobi polynomials at a particular point (see Remark 3; cf. [48, §2]).

N. Konno studied a biased quantum walk [29, 30], which is still essentially equivalent to Feynman checkers (see Remark 4). He found the distribution of the electron position in the (weak) large-time limit (see Figure 5 and Theorem 1(B)). This result was proved mathematically by G.R. Grimmett–S. Janson–P.F. Scudo [20]. In their seminal paper [47] from 2012, T. Sunada–T. Tate found and proved a large-time asymptotic formula for the distribution (see Theorems 2–4). This was a powerful result but it still could not solve the Feynman problem because the error estimate was not uniform in the lattice step. In 2018 M. Maeda et al. proved a bound for the maximum of the distribution for large time [33, Theorem 2.1].

Quantum walks were generalized to arbitrary graphs and applied to quantum algorithms (see Figure 6 and [17] for an implementation). We refer to the surveys by M.J. Cantero–F.A. Grünbaum–L. Moral–L. Velázquez, N. Konno, J. Kempe, and S.E. Venegas-Andraca [7, 30, 27, 50, 31] for further details in this direction.

Lattice quantum field theories. In a more general context, this is a direction towards creation of Minkowskian lattice quantum field theory, with both space and time being discrete [2]. In 1970s F. Wegner and K. Wilson introduced lattice gauge theory as a computational tool for gauge theory describing all known interactions (except gravity); see [34] for a popular-science introduction. This culminated in determining the proton mass theoretically with error less than in a sense. This theory is Euclidean in the sense that it involves imaginary time. Likewise, an asymptotic formula for the propagator for the (massless) Euclidean lattice Dirac equation [28, Theorem 4.3] played a crucial role in the continuum limit of the Ising model performed by D. Chelkak–S. Smirnov [8]. Similarly, asymptotic formulae for the Minkowskian one (Theorems 2–5) can be useful for missing Minkowskian lattice quantum field theory. Several authors argue that Feynman checkers have the advantage of no fermion doubling inherent in Euclidean lattice theories and avoid the Nielsen–Ninomiya no-go theorem [4, 15].

Several upgrades of Feynman checkers have been discussed. For instance, around 1990s B. Gaveau–L. Schulman and G. Ord added electromagnetic field to the model [16, 38]. That time they achieved neither exact charge conservation nor generalization to non-Abelian gauge fields; this is fixed in Definition 3. Another example is adding mass matrix by P. Jizba [24].

It was an old dream to incorporate also checker paths turning backwards in time or forming cycles [43, p. 481–483], [22]; this would mean creation of electron-positron pairs, celebrating a passage from quantum mechanics to quantum field theory. One looked for a combinatorial model reproducing the Feynman propagator rather than the retarded one in the continuum limit (see Table 1). Known constructions (such as hopping expansion) did not lead to the Feynman propagator because of certain lattice artifacts (e.g., the title of [39] is misleading: the Feynman propagator is not discussed there). In the massless case, a noncombinatorial construction of Feynman propagator on the lattice was provided by C. Bender–L. Mead–K. Milton–D. Sharp in [2, §9F] and [3, §IV]. In Definition 6 the desired combinatorial construction is finally given.

Another long-standing open problem is to generalize the model to the -dimensional real world. In his Nobel prize lecture, R. Feynman mentioned his own unsuccessful attempts. There are several recent approaches, e.g., by B. Foster–T. Jacobson from 2017 [15]. Those are not yet as simple and beautiful as the original -dimensional model, as it is written in [15, §7.1] itself.

On physical and mathematical works. The physical literature on the subject is quite extensive [50], and we cannot mention all remarkable works in this brief overview. Surprisingly, in the whole literature we have not found the shouting property of concentration of measure for lattice step tending to zero (see Corollary 7). Many papers are well-written, insomuch that the physical theorems and proofs there could be carelessly taken for mathematical ones (see the end of §12). We are aware of just a few mathematical works on the subject, such as [20, 47, 33].

1.3 Contributions

We solve mathematically a problem by R. Feynman from 1965, which was to prove that his model is consistent with the continuum one, namely, reproduces the usual quantum-mechanical free-particle kernel for large time, small average velocity, and small lattice step (see Corollary 5). We compute large-time and small-lattice-step asymptotic formulae for the lattice propagator, uniform in the model parameters (see Theorems 2 and 5). For the first time we observe and prove concentration of measure in the continuum limit: the average velocity of an electron emitted by a point source is close to the speed of light with high probability (see Corollary 7). The results can be interpreted as asymptotic properties of Young diagrams (see Corollary 2) and Jacobi polynomials (see Remark 3).

All these results are proved mathematically for the first time. For their statements, just Definition 2 is sufficient. In Definitions 3 and 6 we perform a coupling to lattice gauge theory and the second quantization of the model, promoting Feynman checkers to a full-fledged lattice quantum field theory.

1.4 Organization of the paper and further directions

First we give the definitions and precise statements of the results, and in the process provide a zero-knowledge examples for basic concepts of quantum theory. These are precisely those examples that R. Feynman presents first in his own books: Feynman checkers (see §3) is the first specific example in the whole book [12]. The thin-film reflection (see §7) is the first example in [11]; see Figures 10–11 there. Thus we hope that these examples could be enlightening to readers unfamiliar with quantum theory.

We start with the simplest (and rough) particular case of the model and upgrade it step by step in each subsequent section. Before each upgrade, we summarize which physical question does it address, which simplifying assumptions does it resolve or impose additionally, and which experimental or theoretical results does it reproduce. Some upgrades (§§7–9) are just announced to be discussed in a subsequent publication. Our aim is (1+1)-dimensional lattice quantum electrodynamics (‘‘QED’’) but the last step on this way (mentioned in §10) is still undone. Open problems are collected in §11. For easier navigation, we present the upgrades-dependence chart:

Hopefully this is a promising path to making quantum field theory rigorous and algorithmic. An algorithmic quantum field theory would be a one which, given an experimentally observable quantity and a number , would provide a precise statement of an algorithm predicting a value for the quantity within accuracy . (Surely, the predicted value needs not to agree with the experiment for less than accuracy of theory itself.) See Algorithm 1 for a toy example. This would be an extension of constructive quantum field theory (currently far from being algorithmic). Application of quantum theory to computer science is in mainstream now, but the opposite direction could provide benefit as well. (Re)thinking algorithmically is a way to make a subject available to nonspecialists, as it is happening with, say, algebraic topology.

The paper is written in a mathematical level of rigor, in the sense that all the definitions, conventions, and theorems (including corollaries, propositions, lemmas) should be understood literally. Theorems remain true, even if cut out from the text. The proofs of theorems use the statements but not the proofs of the other ones. Most statements are much less technical than the proofs; hence the proofs are kept in a separate section (§12) and long computations are kept in [44]. In the process of the proofs, we give a zero-knowledge introduction to the main tools to study the model: combinatorial identities, the Fourier transform, the method of moments, the stationary phase method. Remarks are informal and usually not used elsewhere (hence skippable). Text outside definitions, theorems, proofs is neither used formally.

2 Basic model (Hadamard walk)

Question: what is the probability to find an electron in the square , if it was emitted from ?

Assumptions: no self-interaction, no creation of electron-positron pairs, fixed mass and lattice step, point source; the electron moves in a plane ‘‘drifting uniformly along the -axis’’ or along a line (and then is time).

Results: double-slit experiment (qualitative explanation), charge conservation, large-time limiting distribution.

2.1 Definition and examples

We first give an informal definition of the model in the spirit of [11] and then a precise one.

On an infinite checkerboard, a checker moves to the diagonal-neighboring squares, either upwards-right or upwards-left (see Figure 7 to the left). To each path of the checker, assign a vector as follows (see Figure 7 to the middle). Take a stopwatch that can time the checker as it moves. Initially the stopwatch hand points upwards. While the checker moves straightly, the hand does not rotate, but each time when the checker changes the direction, the hand rotates through clockwise (independently of the direction the checker turns). The final direction of the hand is the direction of the required vector . The length of the vector is set to be , where is the total number of moves (this is just a normalization). For instance, for the path in Figure 7 to the top-middle, the vector .

|

|

|

|

|

|

|

|

|

|

Denote by the sum over all the checker paths from the square to the square , starting with the upwards-right move. For instance, ; see Figure 7 to the right. The length square of the vector is called the probability to find an electron in the square , if it was emitted from (see §2.2 for a discussion of the terminology). The vector itself is called the arrow [11, Figure 6].

Let us summarize this construction rigorously.

Definition 1.

A checker path is a finite sequence of integer points in the plane such that the vector from each point (except the last one) to the next one equals either or . A turn is a point of the path (not the first and not the last one) such that the vectors from the point to the next and to the previous ones are orthogonal. The arrow is the complex number

where the sum over all checker paths from to with the first step to , and is the number of turns in . Hereafter an empty sum is by definition. Denote

Points (or squares) with even and odd are called black and white respectively.

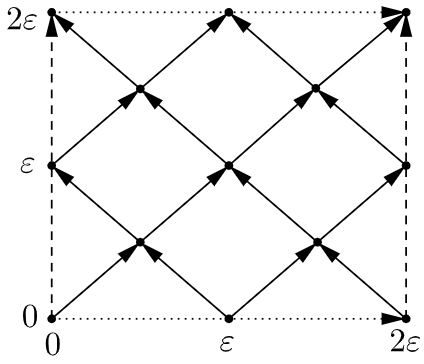

Figure 8 and Table 2 depict the arrows and the probabilities for small . Figure 9 depicts the graphs of , , and as functions in an even number . We see that under variation of the final position at a fixed large time , right after the peak the probability falls to very small although still nonzero values. What is particularly interesting is the unexpected position of the peak, far from . In Figure 1 to the left, the color of a point with even depicts the value . Notice that the sides of the apparent angle are not the lines but the lines (see Theorem 1(A)).

|

|

|

2.2 Physical interpretation

Let us comment on the physical interpretation of the model and ensure that it captures unbelievable behavior of electrons. There are two different interpretations; see Table 3.

| object | standard interpretation | spin-chain interpretation |

| path | configuration of ‘‘’’ and ‘‘’’ in a row | |

| number of turns | half of the configuration energy | |

| time | volume | |

| position | difference between the number of ‘‘’’ and ‘‘’’ | |

| average velocity | magnetization | |

| probability amplitude | partition function up to constant | |

| probability | partition function norm squared | |

| normalized logarithm of amplitude | free energy density | |

| conditional probability amplitude of the last move upwards-right | ‘‘probability’’ of equal signs at the ends of the spin chain |

Standard interpretation. Here the - and -coordinates are interpreted as the electron position and time respectively. Sometimes (e.g., in Example 1) we a bit informally interpret both as position, and assume that the electron performs a ‘‘uniform classical motion’’ along the -axis. We work in the natural system of units, where the speed of light, the Plank and the Boltzmann constants equal . Thus the lines represent motion with the speed of light. Any checker path lies above both lines, i.e. in the light cone, which means agreement with relativity: the speed of electron cannot exceed the speed of light.

To think of as a probability, consider the -coordinate as fixed, and the squares as all the possible outcomes of an experiment. For instance, the -th horizontal might be a screen detecting the electron. We shall see that all the numbers on one horizontal sum up to (Proposition 2), thus indeed can be considered as probabilities. Notice that the probability to find the electron in a set is rather than (cf. [11, Figure 50]).

In reality, one cannot measure the electron position exactly. A fundamental limitation is the electron reduced Compton wavelength meters, where is the electron mass. Physically, the basic model approximates the continuum by a lattice of step exactly . But that is still a rough approximation: one needs even smaller step to prevent accumulation of approximation error at larger distances and times. For instance, Figures 1 and 10 to the left show a finite-lattice-step effect (renormalization of speed of light): the average velocity cannot exceed of the speed of light with high probability. (An explanation in physical terms: lattice regularization cuts off distances smaller than the lattice step, hence small wavelengths, hence large momenta, and hence large velocities.) A more precise model is given in §3: compare the plots in Figure 1.

As we shall see now, the model qualitatively captures unbelievable behavior of electrons. (For correct quantitative results like exact shape of an interferogram, an upgrade involving a coherent source is required; see §6.)

The probability to find an electron in the square subject to absorption in a subset is defined analogously to , only the summation is over checker paths that have no common points with possibly except and . The probability is denoted by . Informally, this means an additional outcome of the experiment: the electron has been absorbed and has not reached the screen. In the following example, we view the two black squares on the horizontal as two slits in a horizontal plate (cf. Figure 2).

Example 1 (Double-slit experiment).

Distinct paths cannot be viewed as ‘‘mutually exclusive’’:

Absorption can increase probabilities at some places: .

The standard interpretation of Feynman checkers is also known as the Hadamard walk, or more generally, the 1-dimensional quantum walk or quantum lattice gas. Those are all equivalent but lead to generalizations in distinct directions [50, 31, 51]. For instance, a unification of the upgrades from §3–7 gives a general inhomogeneous 1-dimensional quantum walk.

The striking properties of quantum walks discussed in §1.2 are stated precisely as follows:

Recently M. Dmitriev [10] has found for a few values (see Problem 6). Similar numbers appear in the simple random walk on [41, Table 2].

Spin-chain interpretation. There is a very different physical interpretation of the same model: a -dimensional Ising model with imaginary temperature and fixed magnetization.

Recall that a configuration in the Ising model is a sequence of of fixed length. The magnetization and the energy of the configuration are and respectively. The probability of the configuration is , where the inverse temperature is a parameter and the partition function is a normalization factor. Additional restrictions on configurations are usually imposed.

Now, moving the checker along a path , write ‘‘’’ for each upwards-right move, and ‘‘’’ for each upwards-left one; see Figure 11 to the left. The resulting sequence of signs is a configuration in the Ising model, the number of turns in is one half of the configuration energy, and the ratio of the final - and -coordinates is the magnetization. Thus coincides (up to a factor not depending on ) with the partition function for the Ising model at the imaginary inverse temperature under the fixed magnetization :

Notice a crucial difference of the resulting spin-chain interpretation from both the usual Ising model and the above standard interpretation. In the latter two models, the magnetization and the average velocity were random variables; now the magnetization (not to be confused with an external magnetic field) is an external condition. The configuration space in the spin-chain interpretation consists of sequences of ‘‘’’ and ‘‘’’ with fixed numbers of ‘‘’’ and ‘‘’’. Summation over configurations with different or would make no sense: e.g., recall that rather than .

Varying the magnetization , viewed as an external condition, we observe a phase transition: (the imaginary part of) the limiting free energy density is non-analytic when passes through (see Figure 5 to the middle and Corollary 3). The phase transition emerges as . It is interesting to study other order parameters, for instance, the ‘‘probability’’ of equal signs at the endpoints of the spin chain (see Figure 10 and Problems 4–5). These quantities are complex just because the temperature is imaginary.

(A comment for specialists: the phase transition is not related to accumulation of zeroes of the partition function in the plane of complex parameter as in [25, §III] and [35]. In our situation, is fixed, the real parameter varies, and the partition function has no zeroes at all [37, Theorem 1].)

Young-diagram interpretation. Our results have also a combinatorial interpretation.

The number of steps (or inner corners) in a Young diagram with columns of heights is the number of elements in the set ; see Figure 11 to the left. Then the value is the difference between the number of Young diagrams with an odd and an even number of steps, having exactly columns and rows.

Interesting behaviour starts already for (see Proposition 4). For even, the difference vanishes. For odd, it is . Such -periodicity roughly remains for close to [45, Theorem 2]. For fixed half-perimeter , the difference slowly oscillates as increases, attains a peak at , and then harshly falls to very small values (see Corollary 2 and Theorems 2–4).

Similarly, is the difference between the number of Young diagrams with an even and an odd number of steps, having exactly columns and less than rows. The behaviour is similar. The upgrade in §3 is related to Stanley character polynomials [48, §2].

Discussion of the definition. Now compare Definition 1 with the ones in the literature.

The notation ‘‘’’ comes from ‘‘arrow’’ and ‘‘probability amplitude’’; other names are ‘‘wavefunction’’, ‘‘kernel’’, ‘‘the Green function’’, ‘‘propagator’’. More traditional notations are ‘‘’’, ‘‘’’, ‘‘’’, ‘‘’’, ‘‘’’ depending on the context. We prefer a neutral one suitable for all contexts.

The factor of and the minus sign in the definition are irrelevant (and absent in the original definition [12, Problem 2.6]). They come from the ordinary starting direction and rotation direction of the stopwatch hand, and reduce the number of minus signs in what follows.

The normalization factor can be explained by analogy to the classical random walk. If the checker were performing just a random walk, choosing one of the two possible directions at each step (after the obligatory first upwards-right move), then would be the probability of a path . This analogy should be taken with a grain of salt: in quantum theory, the ‘‘probability of a path’’ has absolutely no sense (recall Example 1). The reason is that the path is not something one can measure: a measurement of the electron position at one moment strongly affects the motion for all later moments.

Conceptually, one should also fix the direction of the last move of the path (see [12, bottom of p.35]). Luckily, this is not required in the present paper (and thus is not done), but becomes crucial in further upgrades (see §4 for an explanation).

One could ask where does the definition come from. Following Feynman, we do not try to explain or ‘‘derive’’ it physically. This quantum model cannot be obtained from a classical one by the standard Feynman sum-over-paths approach: there is simply no clear classical analogue of a spin particle (cf. §4) and no true understanding of spin. ‘‘Derivations’’ in [1, 4, 36] appeal to much more complicated notions than the model itself. The true motivation for the model is its simplicity, consistency with basic principles (like probability conservation), and agreement with experiment (which here means the correct continuum limit; see Corollary 6).

2.3 Identities and asymptotic formulae

Let us state several well-known basic properties of the model. The proofs are given in §12.1.

First, the arrow coordinates and satisfy the following recurrence relation.

Proposition 1 (Dirac equation).

For each integer and each positive integer we have

This mimics the -dimensional Dirac equation in the Weyl basis [42, (19.4) and (3.31)]

| (1) |

only the derivatives are replaced by finite differences, is set to , and the normalization factor is added. For the upgrade in §3, this analogy becomes transparent (see Remark 2). The Weyl basis is not unique, thus there are several forms of equation (1); cf. [23, (1)].

The Dirac equation implies the conservation of probability.

Proposition 2 (Probability/charge conservation).

For each integer we get .

For and , there is an ‘‘explicit’’ formula (more ones are given in Appendix A).

Proposition 3 (‘‘Explicit’’ formula).

For each integers such that is even we have

The following proposition is a straightforward corollary of the ‘‘explicit’’ formula.

Proposition 4 (Particular values).

For each integer the numbers and are the coefficients at and in the expansion of the polynomial . In particular,

In §3.1 we give more identities. The sequences and are present in the on-line encyclopedia of integer sequences [46, A098593 and A104967].

The following remarkable result was observed in [1, §4] (see Figures 5 and 10), stated precisely and derived heuristically in [29, Theorem 1], and proved mathematically in [20, Theorem 1]. See a short exposition of the latter proof in §12.2, and generalizations in §3.2.

Theorem 1 (Large-time limiting distribution; see Figure 10).

For each we have

We have the following convergence in distribution as :

For each integer we have

Theorem 1(B) demonstrates a phase transition in Feynman checkers, if interpreted as an Ising model at imaginary temperature and fixed magnetization. Recall that then the magnetization is an external condition (rather than a random variable) and is the norm square of the partition function (rather than a probability). The distributional limit of is discontinuous at , reflecting a phase transition (cf. Corollary 3).

3 Mass (biased quantum walk)

Question: what is the probability to find an electron of mass in the square , if it was emitted from ?

Assumptions: the mass and the lattice step are now arbitrary.

Results: analytic expressions for the probability for large time or small lattice step, concentration of measure.

3.1 Identities

Definition 2.

Fix and called lattice step and particle mass respectively. Consider the lattice (see Figure 3). A checker path is a finite sequence of lattice points such that the vector from each point (except the last one) to the next one equals either or . Denote by the number of points in (not the first and not the last one) such that the vectors from the point to the next and to the previous ones are orthogonal. For each , where , denote by

| (2) |

the sum over all checker paths on from to with the first step to . Denote

Remark 1.

In particular, and .

One interprets as the probability to find an electron of mass in the square with the center , if the electron was emitted from the origin. Notice that the value , hence , is dimensionless in the natural units, where . In Figure 1 to the middle, the color of a point depicts the value (if is an integer). Table 4 shows for small and . Recently I. Novikov elegantly proved that the probability vanishes nowhere inside the light cone: for , and even [37, Theorem 1].

Example 2 (Boundary values).

We have and for each , , and for each or .

Example 3 (Massless and heavy particles).

For each , where , we have

Proposition 5 (Dirac equation).

For each , where , we have

| (3) | ||||

| (4) |

Remark 2.

Proposition 6 (Probability conservation).

For each , , we get .

Proposition 7 (Klein–Gordon equation).

For each , where , we have

This equation reproduces the Klein–Gordon equation in the limit .

Proposition 8 (Symmetry).

For each , where , we have

Proposition 9 (Huygens’ principle).

For each , where , we have

Informally, Huygens’ principle means that each black square on one horizontal acts like an independent point source, with the amplitude and phase determined by .

Proposition 10 (Equal-time recurrence relation).

For each , where , we have

| (5) |

This allows to compute and quickly on far horizontals, starting from and respectively (see Example 2).

Proposition 11 (‘‘Explicit’’ formula).

For each with and even,

| (6) | ||||

| (7) |

Remark 3.

For each we have unless , which gives . Beware that the proposition is not applicable for .

By the definition of Gauss hypergeometric function, we can rewrite the formula as follows:

This gives a lot of identities. E.g., Gauss contiguous relations connect the values at any neighboring lattice points; cf. Propositions 5 and 10. In terms of the Jacobi polynomials,

There is a similar expression through Kravchuk polynomials (cf. Proposition 4). In terms of Stanley character polynomials (defined in [48, §2]),

Proposition 12 (Fourier integral).

Set . Then for each and such that and is even we have

Fourier integral represents a wave emitted by a point source as a superposition of waves of wavelength and frequency .

Proposition 13 (Full space-time Fourier transform).

Denote , if , and , if . For each and such that and is even we get

3.2 Asymptotic formulae

Large-time limit. (See Figure 12) For fixed , and large time , the function

We start with discussing regime (A). Let us state our main theorem: an analytic approximation of , which is very accurate between the peaks not too close to them (see Figure 12 to the left).

|

|

|

|

|

|

Theorem 2 (Large-time asymptotic formula between the peaks; see Figure 12 to the left).

For each there is such that for each and each satisfying

| (8) |

we have

| (9) | ||||

| (10) | ||||

| for odd and even respectively, where | ||||

| (11) | ||||

Hereafter the notation means that there is a constant (depending on but not on ) such that for each satisfying the assumptions of the theorem we have .

The main terms in Theorem 2 were first computed in [1, Theorem 2] in the particular case . The error terms were estimated in [47, Proposition 2.2]; that estimate had the same order in but was not uniform in and (and cannot be uniform as it stands, otherwise one would get a contradiction with Corollary 6 as ). Getting uniform estimate (9)–(10) has required a refined version of the stationary phase method and additional Steps 3–4 of the proof (see §12.3). Different approaches (Darboux asymptotic formula for Legendre polynomials and Hardy–Littlewood circle method) were used in [6, Theorem 3] and [45, Theorem 2] to get (9) in the particular case and a rougher approximation for close to respectively.

Theorem 2 has several interesting corollaries. First, it allows to pass to the large-time distributional limit (see Figure 10). Compared to Theorem 1, it provides convergence in a stronger sense, not immediately accessible by the method of moments and by [47, Theorem 1.1].

Corollary 1 (Large-time limiting distribution).

For each and we have

as , , uniformly in .

Recall that all our results can be stated in terms of Young diagrams or Jacobi polynomials.

Corollary 2 (Steps of Young diagrams; see Figure 11).

Denote by and the number of Young diagrams with exactly rows and columns, having an even and an odd number of steps (defined in page 11) respectively. Then for almost every we have

For the regimes (B) and (C) around and outside the peaks, we state two theorems by Sunada–Tate without discussing the proofs in detail. (To be precise, the main terms in literature [1, Theorem 2], [47, Propositions 2.2, 3.1, 4.1] are slightly different from (9), (13), (17); but that difference is within the error term. Practically, the latter three approximations are better by several orders of magnitude.)

Regime (B) around the peaks is described by the Airy function

Theorem 3 (Large-time asymptotic formula around the peaks; see Figure 12 to the middle).

[47, Proposition 3.1] For each and each sequence satisfying

| (12) |

we have

| (13) | ||||

| (14) | ||||

| for odd and even respectively, where the signs agree and | ||||

| (15) | ||||

We write , if there is a constant (depending on , and the whole sequence but not on ) such that for each we have .

Exponential decay outside the peaks was stated without proof in [1, Theorem 1]. A proof appeared only a decade later, when the following asymptotic formula was established.

Theorem 4 (Large-time asymptotic formula outside the peaks; see Figure 12 to the right).

[47, Proposition 4.1] For each , and each sequence satisfying

| (16) |

we have

| (17) | ||||

| (18) | ||||

| for odd and even respectively, where | ||||

| (19) | ||||

Corollary 3 (Limiting free energy density; see Figure 5 to the middle).

For each , , and given by (19) we have

This means phase transition in the Ising model in a strong sense: the limiting free energy density (with the imaginary part proportional to the left side) is nonanalytic in .

Feynman triple limit. Theorem 2 allows to pass to the limit as follows.

Corollary 4 (Simpler and rougher asymptotic formula between the peaks).

Under the assumptions of Theorem 2 we have

| (20) |

Corollary 5 (Feynman triple limit; see Figure 3).

For each and each sequence such that , is even, and

| (21) |

we have the equivalence

| (22) |

Example 4.

Equivalence (22) holds for neither nor nor , where : the limit of the ratio of the left and the right side equals , , and does not exist respectively, rather than equals . (The absence of the latter limit confirms that tending to was understood in the Feynman problem.)

Corollary 5 solves the Feynman problem (and moreover corrects the statement, by revealing the required sharp assumptions). The main difficulty here is that it concerns triple rather than iterated limit. We are not aware of any approach which could solve the problem without proving the whole Theorem 2. E.g., the Darboux asymptotic formula for the Jacobi polynomials (see Remark 3) is suitable for the iterated limit when first , then , giving a (weaker) result already independent on . Neither the Darboux nor Mehler–Heine nor more recent asymptotic formulae [32] are applicable when or is unbounded. The same concerns [47, Proposition 2.2] because the error estimate there is not uniform in and . Conversely, the next theorem is suitable for the iterated limit when first , then , then , but not for the triple limit because the error term blows up as .

Continuum limit. The limit is described by the Bessel functions of the first kind:

Theorem 5 (Asymptotic formula in the continuum limit).

For each and such that even, , and , where , we have

Recall that means that there is a constant (not depending on ) such that for each satisfying the assumptions of the theorem we have .

The main term in Theorem 5 was computed in [36, §1]. Numerical experiment shows that the error term decreases faster than asserted (see Table 5 computed in [44, §14]).

In the next corollary, we approximate a fixed point in the plane by the lattice point (see Figure 4). The factors of make the latter accessible for the checker.

Corollary 6 (Uniform continuum limit; see Figure 4).

For each fixed we have

| (23) |

as uniformly on compact subsets of the angle .

The proof of pointwise convergence is simpler and is presented in Appendix B.

Corollary 7 (Concentration of measure).

For each we have

This result, although expected, is not found in literature. An elementary proof is given in §12.6. We remark a sharp contrast between the continuum and the large-time limit here: by Corollary 1, there is no concentration of measure as for fixed .

| 0.02 | 0.1 | 1.1 |

| 0.002 | 0.04 | 0.06 |

| 0.0002 | 0.009 | 0.006 |

3.3 Physical interpretation

Let us discuss the meaning of the Feynman triple limit and the continuum limit. In this subsection we omit some technical definitions not used in the sequel.

The right side of (22) is up to the factor the free-particle kernel

| (24) |

describing the motion of a non-relativistic particle emitted from the origin along a line.

Limit (23) reproduces the spin- retarded propagator describing motion of an electron along a line. More precisely, the spin- retarded propagator, or the retarded Green function for Dirac equation (1) is a matrix-valued tempered distribution on vanishing for and satisfying

| (25) |

where is the Dirac delta function. The propagator is given by (cf. [23, (13)], [42, (3.117)])

| (26) |

In addition, involves a generalized function supported on the lines , not observed in the limit (23) and not specified here. A more common expression is (cf. Proposition 13)

| (27) |

where the limit is taken in the weak topology of matrix-valued tempered distributions and the integral is understood as the Fourier transform of tempered distributions (cf. [14, (6.47)]).

The propagator ‘‘square’’ is ill-defined (because of the square of the Dirac delta-function supported on the lines involved). Thus the propagator lacks probabilistic interpretation, and global charge conservation (Proposition 6) has no continuum analogue. For instance, paradoxically. A physical explanation: the line carries infinite charge flowing inside the angle . One can interpret the propagator ‘‘square’’ for as a relative probability density or charge density (see Figure 1 to the right). In the spin-chain interpretation, the propagator is the limit of the partition function for one-dimensional Ising model at the inverse temperature . Those are essentially the values of for which phase transition is possible [35].

The normalization factor before ‘‘’’ in (23) can be explained as division by the length associated to one black lattice point on a horizontal line. On a deeper level, it comes from the normalization of arising from (25).

Theorem 5 is a toy result in algorithmic quantum field theory: it determines the lattice step to compute the propagator with given accuracy. So far this is not a big deal, because the propagator has a known analytic expression and is not really experimentally-measurable; neither the efficiency of the algorithm is taken into account. But that is a first step.

Algorithm 1 (Approximation algorithm for spin- retarded propagator (26)).

Input: mass , coordinates , accuracy level .

Output: an approximate value of within distance from the true value (26).

Algorithm: compute by (2) for

Here we used an explicit estimate for the constant understood in the big-O notation in Theorem 5; it is easily extracted from the proof. The theorem and the estimate remain true, if with is replaced by with arbitrary .

4 Spin

Question: what is the probability to find a right electron at , if a right electron was emitted from ?

Assumptions: electron chirality is now taken into account.

Results: the probability of chirality flip.

A feature of the model is that the electron spin emerges naturally rather than is added artificially.

It goes almost without saying to view the electron as being in one of the two states depending on the last-move direction: right-moving or left-moving (or just ‘right’ or ‘left’ for brevity).

The probability to find a right electron in the square , if a right electron was emitted from the square , is the length square of the vector , where the sum is over only those paths from to , which both start and finish with an upwards-right move. The probability to find a left electron is defined analogously, only the sum is taken over paths which start with an upwards-right move but finish with an upwards-left move. Clearly, these probabilities equal and respectively, because the last move is directed upwards-right if and only if the number of turns is even.

These right and left electrons are exactly the -dimensional analogue of chirality states for a spin particle [42, §19.1]. Indeed, it is known that the components and in Dirac equation in the Weyl basis (1) are interpreted as wave functions of right- and left-handed particles respectively. The relation to the movement direction becomes transparent for : then a general solution of (1) is ; thus the maxima of and (if any) move to the right and to the left respectively as increases. Beware that in or more dimensions, spin is not the movement direction and cannot be explained in nonquantum terms.

This gives a more conceptual interpretation of the model: an experiment outcome is a pair (final -coordinate, last-move direction), whereas the final -coordinate is fixed. The probabilities to find a right/left electron are the fundamental ones. In further upgrades, and become complex numbers and should be defined as rather than by the above formula , being a coincidence.

Theorem 6 (Probability of chirality flip).

For integer we get

See Figure 13 for an illustration and comparison with the upgrade from §5. The physical interpretation of the theorem is limited: in continuum theory, the probability of chirality flip (for an electron emitted by a point source) is ill-defined similarly to the propagator ‘‘square’’ (see §3.3). A related more reasonable quantity is studied in [23, p. 381] (cf. Problem 5). Recently I. Bogdanov has generalized the theorem to an arbitrary mass and lattice step (see Definition 2): if then [6, Theorem 2]. This has confirmed a conjecture by I. Gaidai-Turlov–T. Kovalev–A. Lvov.

5 External field (inhomogeneous quantum walk)

Question: what is the probability to find an electron at , if it moves in a given electromagnetic field ?

Assumptions: the electromagnetic field vanishes outside the -plane; it is not affected by the electron.

Results: ‘‘spin precession’’ in a magnetic field (qualitative explanation), charge conservation.

Another feature of the model is that external electromagnetic field emerges naturally rather than is added artificially. We start with an informal definition, then give a precise one, and finally show exact charge conservation.

In the basic model, the stopwatch hand did not rotate while the checker moved straightly. It goes without saying to modify the model, rotating the hand uniformly during the motion. This does not change the model essentially: since all the paths from the initial to the final position have the same length, their vectors are rotated through the same angle, not affecting probabilities. A more interesting modification is when the current rotation angle depends on the checker position. This is exactly what electromagnetic field does. In what follows, the rotation angle assumes only the two values and for simplicity, meaning just multiplication by .

Thus an electromagnetic field is viewed as a fixed assignment of numbers and to all the vertices of the squares. For instance, in Figure 14, the field equals at the top-right vertex of each square with both and even. Modify the definition of the vector by reversing the direction each time when the checker passes through a vertex with the field . Denote by the resulting vector. Define and analogously to and replacing by in the definition. For instance, if identically, then .

Let us slightly rephrase this construction, making the relation to lattice gauge theory more transparent. We introduce an auxiliary grid with the vertices at the centers of black squares (see Figure 11 to the right). It is the graph where the checker actually moves.

Definition 3.

An auxiliary edge is a segment joining nearest-neighbor integer points with even sum of coordinates. Let be a map from the set of all auxiliary edges to . Denote by

the sum over all checker paths with , , and . Set . Define and analogously to , only add the condition and respectively. For half-integers denote by the value of on the auxiliary edge with the midpoint .

Remark 5.

Here the field is a fixed external classical field not affected by the electron.

This definition is analogous to one of the first constructions of gauge theory by Weyl–Fock–London, and gives a coupling of Feynman checkers to the Wegner–Wilson lattice gauge theory. In particular, it reproduces the correct spin for the electromagnetic field: a function defined on the set of edges is a discrete analogue of a vector field, i.e., a spin field. Although this way of coupling is classical, it has never been explicitly applied to Feynman checkers (cf. [16, p. 36]), and is very different from both the approach of [38] and Feynman-diagram intuition [11].

For instance, the field in Figure 14 has form for each auxiliary edge , where is the vector-potential of a constant homogeneous electromagnetic field.

For an arbitrary gauge group, and are defined analogously, only becomes a map from the set of auxiliary edges to a matrix group, e.g., or . Then , where are the entries of a matrix .

Example 5 (‘‘Spin precession’’ in a magnetic field).

The following propositions are proved analogously to Propositions 5–6, only a factor of is added due to the last step of the path passing through the vertex .

Proposition 14 (Dirac equation in electromagnetic field).

For each integers and ,

Proposition 15 (Probability/charge conservation).

For each integer , .

6 Source

Question: what is the probability to find an electron at , if it was emitted by a source of wavelength ?

Assumptions: the source is now realistic.

Results: wave propagation, dispersion relation.

A realistic source produces a wave rather than electrons localized at (as in the basic model). This means solving Dirac equation (3)–(4) with (quasi-)periodic initial conditions.

To state the result, it is convenient to rewrite Dirac equation (3)–(4) using the notation

so that it gets form

| (28) | ||||

| (29) |

The following proposition is proved by direct checking (available in [44, §12]).

Proposition 16 (Wave propagation, dispersion relation).

Remark 6.

The solution of continuum Dirac equation (1) is given by the same expression (30)–(31), only and are redefined by and instead. In both continuum and discrete setup, these are the hypotenuse and the angle in a right triangle with one leg and another leg either or respectively, lying in the plane or a sphere of radius respectively. This spherical-geometry interpretation is new and totally unexpected.

Physically, (30)–(31) describe a wave with the period and the wavelength . The relation between and is called the dispersion relation. Plank and de Broglie relations assert that and are the energy and the momentum of the wave (recall that in our units). The above dispersion relation tends to Einstein formula as and .

A comment for specialists: replacing and by creation and annihilation operators, i.e., the second quantization of the lattice Dirac equation, leads to the model from §9.

For the next upgrades, we just announce results to be discussed in subsequent publications.

7 Medium

Question: which part of light of given color is reflected from a glass plate of given width?

Assumptions: right angle of incidence, no polarization of light; mass now depends on but not on the color.

Results: thin-film reflection (quantitative explanation).

Feynman checkers can be applied to describe propagation of light in transparent media such as glass. Light propagates as if it had acquired some nonzero mass plus potential energy (depending on the refractive index) inside the media, while both remain zero outside. In general the model is inappropriate to describe light; partial reflection is a remarkable exception. Notice that similar classical phenomena are described by quantum models [50, §2.7].

In Feynman checkers, we announce a rigorous derivation of the following well-known formula for the fraction of light of wavelength reflected from a transparent plate of width and refractive index :

This makes Feynman’s popular-science discussion of partial reflection [11] completely rigorous and shows that his model has experimentally-confirmed predictions in the real world, not just a -dimensional one.

8 Identical particles

Question: what is the probability to find electrons at and , if they were emitted from and ?

Assumptions: there are several moving electrons.

Results: exclusion principle, locality, charge conservation.

We announce a simple-to-define upgrade describing the motion of several electrons, respecting exclusion principle, locality, and probability conservation (cf. [51, §4.2]).

Definition 4.

Fix integer points , , , and their diagonal neighbors , , , , where and . Denote

where the first sum is over all pairs consisting of a checker path starting with the move and ending with the move , and a path starting with the move and ending with the move , whereas in the second sum the final moves are interchanged.

The length square is called the probability to find right electrons at and , if they are emitted from and . Define analogously also for , . Here we require , if both signs are the same, and allow arbitrary and , otherwise.

9 Antiparticles

Question: what is the expected charge in the square , if an electron was emitted from the square ?

Assumptions: electron-positron pairs are now created and annihilated, the -axis is time.

Results: spin- Feynman propagator in the continuum limit, an analytic expression for the large-time limit.

9.1 Identities and asymptotic formulae

Finally, we introduce a completely new upgrade (Feynman anti-checkers), allowing creation and annihilation of electron-positron pairs during the motion. The upgrade is defined just by allowing odd in the Fourier integral (Proposition 12), that is, computing the same integral in white checkerboard squares in addition to black ones. This is equivalent to the second quantization of lattice Dirac equation (28)–(29), but we do not need to work out this procedure (cf. [2, §9F] and [3, §IV] for the massless case). Anyway, the true motivation of the upgrade is consistency with the initial model and appearance of spin- Feynman propagator (34) in the continuum limit (see Figure 15). We also give a combinatorial definition (see Definition 6).

Definition 5.

One can show that is purely imaginary for odd. Thus the real and the imaginary parts ‘‘live’’ on the black and white squares respectively, analogously to how discrete analytic functions are defined (see [8]). The sign convention for is dictated by the analogy to continuum theory (see (33) and (35)).

Example 6.

The value is the Gauss constant and is the inverse lemniscate constant, where and are the complete elliptic integrals of the 1st and 2nd kind respectively (cf. [13, §6.1]).

The other values are even more complicated irrationalities (see Table 6).

We announce that the analogues of Propositions 5–8 and 10 remain true literally, if and are replaced by and respectively (the assumption can then be dropped). As a consequence, and for all are rational linear combinations of the Gauss constant and the inverse lemniscate constant . In general, we announce that and can be ‘‘explicitly’’ expressed through Gauss hypergeometric function: for , with odd we get

and is the principal branch of the hypergeometric function. The idea of the proof is induction on : the base is given by [19, 9.112, 9.131.1, 9.134.3, 9.137.15] and the step is given by the analogue of (3)–(4) for and plus [19, 9.137.11,12,18].

Remark 7.

Remark 8.

The number equals times the probability that a planar simple random walk over white squares dies at , if it starts at and dies with the probability before each step. Nothing like that is known for and with .

The following two results are proved almost literally as Proposition 13 and Theorem 2. (The only difference is change of the sign of the summands involving in (41), (46) (50), (52); the analogues of Lemmas 5 and 11 are then obtained by direct checking.)

Proposition 17 (Full space-time Fourier transform).

Denote , if , and , if . For each and we get

| (33) | ||||

9.2 Physical interpretation

One interprets as the expected charge in a square with , in the units of electron charge. The numbers cannot be anymore interpreted as probabilities to find the electron in the square. The reason is that now the outcomes of the experiment are not mutually exclusive: one can detect an electron in two distinct squares simultaneously. There is nothing mysterious about that: Any measurement necessarily influences the electron. This influence might be enough to create an electron-positron pair from the vacuum. Thus one can detect a newborn electron in addition to the initial one; and there is no way to distinguish one from another. (A more formal explanation for specialists: two states in the Fock space representing the electron localized at distant regions are not mutually orthogonal; their scalar product is essentially provided by the Feynman propagator.)

We announce that the model reproduces the Feynman propagator rather than the retarded one in the continuum limit (see Figure 15). The spin- Feynman propagator equals

| (34) |

where and are Bessel functions of the 2nd kind and modified Bessel functions of the 2nd kind, and . In addition, there is a generalized function supported on the lines , which we do not specify. The Feynman propagator satisfies (25). We see that it has additional imaginary part (and an overall factor of ) compared to retarded one (26). In particular, it does not vanish for : annihilation of electron at one point and creation at another one may result in apparent motion faster than light.

Overall, a small correction introduced by the upgrade reflects some fundamental limitations on measurement rather than adds something meaningful to description of the motion. The upgrade should only be viewed as an ingredient for more realistic models with interaction.

9.3 Combinatorial definition

Informally, the combinatorial definition of Feynman anti-checkers (Definition 6) is obtained from the definition of Feynman checkers (Definition 2) by the following four-step modification:

- Step 1:

-

the particle mass acquires small imaginary part which we eventually tend to zero;

- Step 2:

-

just like the real mass is ‘‘responsible’’ for turns in the centers of the squares, the imaginary one allows turns at their vertices (see Figure 16 to the left);

- Step 3:

-

the infinite lattice is replaced by a torus with the size eventually tending to infinity;

- Step 4:

-

the sum over lattice paths is replaced by a ratio of sums over loop configurations.

Here Step 2 is completely new whereas the other ones are standard. It reflects a general principle that the real and the imaginary part of a quantity should be always put on dual lattices.

Definition 6.

(see Figure 16) Fix and called lattice size, lattice step, particle mass, and small imaginary mass respectively. Assume and . The lattice is the quotient set

(This is a finite subset of the torus obtained from the square by an identification of the opposite sides.) A lattice point is even (respectively, odd), if is even (respectively, odd). An edge is a vector starting from a lattice point and ending at the lattice point or .

A generalized checker path is a finite sequence of distinct edges such that the endpoint of each edge is the starting point of the next one. A cycle is defined analogously, only the sequence has the unique repetition: the first and the last edges coincide, and there is at least one edge in between. (In particular, a generalized checker path such that the endpoint of the last edge is the starting point of the first one is not yet a cycle; coincidence of the first and the last edges is required. The first and the last edges of a generalized checker path coincide only if the path has a single edge. Thus in our setup, a path is never a cycle.) Changing the starting edge of a cycle means removal of the first edge from the sequence, then a cyclic permutation, and then adding the last edge of the resulting sequence at the beginning. A loop is a cycle up to changing of the starting edge.

A node of a path or loop is an ordered pair of consecutive edges in (the order of the edges in the pair is the same as in ). A turn is a node such that the two edges are orthogonal. A node or turn is even (respectively, odd), if the endpoint of the first edge in the pair is even (respectively, odd). Denote by , , , the number of even and odd turns and nodes in . The arrow (or weight) of is

where the overall minus sign is taken when is a loop.

A set of checker paths or loops is edge-disjoint, if no two of them have a common edge. An edge-disjoint set of loops is a loop configuration. A loop configuration with a source and a sink is an edge-disjoint set of any number of loops and exactly one generalized checker path starting with the edge and ending with the edge . The arrow of a loop configuration (possibly with a source and a sink) is the product of arrows of all loops and paths in the configuration. An empty product is set to be .

The arrow from an edge to an edge (or finite-lattice propagator) is

Now take a point and set , . Let be the edges starting at , , and ending at , , respectively. The arrow of the point (or infinite-lattice propagator) is the pair of complex numbers

Example 7.

(See Figure 16 to the middle) The lattice of size lies on the square with the opposite sides identified. The lattice has points: the midpoint and the identified vertices of the square. It has edges . The generalized checker paths , , are distinct although they contain the same edges. Their arrows are , , respectively. Those paths are distinct from the cycles , . The two cycles determine the same loop with the arrow . There are totally loop configurations: , , , , , , , , . Their arrows are , , , , , , , , respectively, where .

Informally, the loops form the Dirac sea of electrons filling the whole space, and the edges not in the loops form paths of holes in the sea, that is, antiparticles.

10 Towards -dimensional quantum electrodynamics

Question: what is the probability to find electrons (or an electron and a positron) with momenta and in the far future, if they were emitted with momenta and in the far past?

Assumptions: interaction now switched on; all simplifying assumptions removed except the default ones:

no nuclear forces, no gravitation, electron moves along the -axis only, and the -axis is time.

Results: repulsion of like charges and attraction of opposite charges (qualitative explanation expected).

Construction of the required model is a widely open problem because in particular it requires the missing mathematically rigorous construction of the Minkowskian lattice gauge theory.

11 Open problems

We start with problems relying on Definition 1. The plots suggest that for fixed , the most probable position of the electron is near to (see Figure 9 to the left, Theorems 1(B) and 2). Although this was noticed 20 years ago, the following question is still open.

Problem 1.

(A.Daniyarkhodzhaev–F.Kuyanov; see Figure 9 to the left) Denote by a point where has a maximum for fixed . Is bounded as ?

What makes the problem hard is that the behavior of is only known near to (Theorem 3) and far from (Theorems 2 and 4) but not at intermediate distances.

Problem 2.

(Cf. [47]) Find a asymptotic formula for as uniform in .

Problem 3.

The aim of the next two problems is to study the phase transition by means of various order parameters (see page 11). Specifically, we conjecture that the limiting ‘‘probability’’ of equal signs at the endpoints of the spin-chain, as well as the limiting ‘‘probability’’ of equal signs at the endpoints and the midpoint, are nonanalytic at .

Problem 4.

Problem 5.

(Cf. [23, p. 381].) Find the weak limit .

The next problem is on absorption probabilities; it relies on the definition before Example 1.

Problem 6.

Problem 7.

Problem 8.

(M. Blank–S. Shlosman) Is the number of times the function changes the sign on bounded as for fixed ?

Corollary 6 gives uniform limit on compact subsets of the angle , hence misses the main contribution to the probability. Now we ask for the weak limit detecting the peak.

Problem 9.

Find the weak limits and on the whole . Is the former limit equal to propagator (27) including the generalized function supported on the lines ? What is the physical interpretation of the latter limit (providing a value to the ill-defined square of the propagator)?

The following problem is to construct a continuum analogue of Feynman checkers.

Problem 10.

(M. Lifshits) Consider as a charge on the set of all checker paths from to starting and ending with an upwards-right move. Does the charge converge (weakly or in another sense) to a charge on the space of all continuous functions with boundary values and respectively as ?

The following problem relying on Definition 3 would demonstrate ‘‘spin precession’’.

Problem 11.

Define analogously to and , unifying Definitions 2–3 and Remark 5. The next problem asks if this reproduces Dirac equation in electromagnetic field.

Problem 12.

The next two problems rely on Definition 5.

Problem 13.

(Cf. Corollary 1) Prove that .

The last problem is informal; it stands for half a century.

Problem 15.

(R. Feynman; cf. [15]) Generalize the model to dimensions so that

coincides with the spin- retarded propagator, now in space- and time-dimension.

12 Proofs

Let us present a chart showing the dependence of the above results and further subsections:

Particular proposition numbers are shown above the arrows. Propositions 7, 10, and 13 are not used in the main results.

In the process of the proofs, we give a zero-knowledge introduction to the used methods. Some proofs are simpler than the original ones.

12.1 Identities: elementary combinatorics (Propositions 1–13)

Proof of Propositions 1 and 5.

Let us derive a recurrence for . Take a path on from to with the first step to . Set .

The last move in the path is made either from or from . If it is from , then must be odd, hence does not contribute to . Assume further that the last move in is made from . Denote by the path without the last move. If the directions of the last moves in and coincide, then , otherwise .

Summation over all paths gives the required equation

The recurrence for is proved analogously. ∎

Proof of Propositions 2 and 6.

The proof is by induction on . The base is obvious. The step of induction follows immediately from the following computation using Proposition 5:

∎

Lemma 1 (Adjoint Dirac equation).

For each , where , we have

Proof of Lemma 1.

Proof of Proposition 7.

Proof of Proposition 8.

Let us prove the first identity. For a path denote by the reflection of with respect to the axis, and by the path consisting of the same moves as , but in the opposite order.

Take a path from to with the first move upwards-right such that is odd (the ones with even do not contribute to ). Then the last move in is upwards-left. Therefore, the last move in is upwards-right, hence the first move in is upwards-right. The endpoint of both and is , because reordering of moves does not affect the endpoint. Thus is a bijection between the paths to and to with odd. Thus .

We prove the second identity by induction on (this proof was found and written by E. Kolpakov). The base of induction ( and ) is obvious.

Step of induction: take . Applying the inductive hypothesis for the three points and the identity just proved, we get

Summing up the equations with the coefficients respectively, we get

Here the 3 terms in the 2nd line, as well as the 3 terms in the 3rd line, cancel each other by Lemma 1. Applying the Klein–Gordon equation (Proposition 7) to the expressions in the 1st and 4th line and cancelling the common factor , we get the desired identity

The 1st and the 3rd identities can also be proved simultaneously by induction on using Proposition 5.

Proof of Proposition 9.

Take a checker path from to . Denote by the point where intersects the line . Denote by the part of that joins with . Denote by the part starting at the intersection point of with the line and ending at (see Figure 17). Translate the path so that it starts at . Set . Since , it follows that

In the former case replace the path by the path obtained by the reflection with respect to the line (and starting at the origin). We have . Therefore,

The formula for is proven analogously. ∎

Proof of Proposition 10.

Denote by the difference between the left- and the right-hand side of (5). Introduce the operator

It suffices to prove that

| (36) |

Then (5) will follow by induction on : (36) expresses as a linear combination of with smaller ; it remains to check for , which is done in [44, §11].

To prove (36), write

for certain cubic polynomials (see (5)), and observe the Leibnitz rule

where and are the finite difference operators, and are the shift operators. Since by Proposition 7, each operator decreases , and all the above operators commute, by the Leibnitz rule we get (36). This proves the first identity in the proposition; the second one is proved analogously (the induction base is checked in [44, §11]). ∎

Alternatively, Proposition 10 can be derived by applying the Gauss contiguous relations to the hypergeometric expression from Remark 3 seven times.

Proof of Propositions 3 and 11.

Let us find . Consider a path with an odd number of turns; the other ones do not contribute to . Denote by the number of turns in the path. Denote by and the number of upwards-right and upwards-left moves respectively. Let be the number of upwards-right moves before the first, the third, …, the last turn respectively. Let be the number of upwards-left moves after the first, the third, …, the last turn respectively. Then for and

The problem now reduces to a combinatorial one: the number of paths with turns equals the number of positive integer solutions of the resulting equations. For the first equation, this number is the number of ways to put sticks between coins in a row, that is, . Thus

Thus (6) follows from and . Formula (7) is derived analogously. ∎

Proof of Proposition 12.

The proof is by induction on .

The base is obtained by the change of variable so that the integrals over and cancel for odd and there remains .

The inductive step is the following computation and an analogous one for :

Here the 1st equality is Proposition 5. The 2nd one is the inductive hypothesis. The 3rd one follows from and . ∎

Proof of Proposition 13.

To prove the formula for , we do the -integral:

as uniformly in . Here we assume that and is sufficiently small. Equality is obtained by the change of variables and then the change of the contour direction to the counterclockwise one. To prove , we find the roots of the denominator

where denotes the value of the square root with positive real part. Then follows from the residue formula: the expansion

shows that is inside the unit circle, whereas is outside, for sufficiently small in terms of and . In we denote so that and pass to the limit which is uniform in by the assumption .

The resulting uniform convergence allows to interchange the limit and the -integral, and we arrive at Fourier integral for in Proposition 12. The formula for is proved analogously, with the case considered separately. ∎

12.2 Phase transition: the method of moments (Theorem 1)

In this subsection we give a simple exposition of the proof of Theorem 1 from [20] using the method of moments. The theorem also follows from Corollary 1 obtained by another method in §12.4. We rely on the following well-known result.

Lemma 2.

(See [5, Theorems 30.1–30.2]) Let , where , be piecewise continuous functions such that is finite and for each . If the series has positive radius of convergence and for each , then converges to in distribution.

Proof of Theorem 1.

Let us prove (C); then (A) and (B) will follow from Lemma 2 for and because for , hence .

Rewrite Proposition 12 in a form, valid for each independently on the parity:

| (37) |

where

| (38) | ||||

and . Now (37) holds for each : Indeed, the identity

shows that the contributions of the two summands to integral (37) are equal for even and cancel for odd. The summand contributes by Proposition 12.

By the derivative property of Fourier series and the Parseval theorem, we have

| (39) |

The derivative is estimated as follows:

| (40) |

Indeed, differentiate (38) times using the Leibnitz rule. If we differentiate the exponential factor each time, then we get the main term. If we differentiate a factor rather than the exponential at least once, then we get less than factors of , hence the resulting term is by compactness because it is continuous and -periodic in .

12.3 The main result: the stationary phase method (Theorem 2)

In this subsection we prove Theorem 2. First we outline the plan of the argument, then prove the theorem modulo some technical lemmas, and finally the lemmas themselves.

The plan is to apply the Fourier transform and the stationary phase method to the resulting oscillatory integral. The proof has steps, with the first two known before ([1, §4], [47, §2]):

- Step 1:

-

computing the main term in the asymptotic formula;

- Step 2:

-

estimating approximation error arising from neighborhoods of stationary points;

- Step 3:

-

estimating approximation error arising from a neighborhood of the origin;

- Step 4:

-

estimating error arising from the complements to those neighborhoods.

Proof of Theorem 2 modulo some lemmas.

Derive the asymptotic formula for ; the derivation for is analogous and is discussed at the end of the proof. By Proposition 12 and the identity for odd, we get

| (41) |

where and

| (42) | ||||

| (43) |

Step 1. We estimate oscillatory integral (41) using the following known technical result.

Lemma 3 (Weighted stationary phase integral).

[21, Lemma 5.5.6] Let be a real function, four times continuously differentiable for , and let be a real function, three times continuously differentiable for . Suppose that there are positive parameters , with

and positive constants such that, for ,

for and , and

Suppose also that changes sign from negative to positive at a point with . If is sufficiently large in terms of the constants , then we have

| (44) |

Here the first term involving the values at the stationary point is the main term, and the boundary terms involving the values at the endpoints and are going to cancel out in Step 3.

Lemma 4.

To estimate integral (41), we are going to apply Lemma 3 twice, for the functions in appropriate neighborhoods of their critical points . In the case of , we perform complex conjugation of both sides of (44). Then the total contribution of the two resulting main terms is

| (46) |

A direct but long computation (see [44, §2]) then gives the desired main term in the theorem:

Step 2. To estimate the approximation error, we need to specify the particular values of parameters which Lemma 3 is applied for:

| (47) |

We also need to specify the interval

| (48) |

To estimate the derivative from below, we make sure that we are apart its roots .

The wise choice of the interval provides the following more technical estimate.

This gives for under notation (47). Now all the assumptions of Lemma 3 have been verified ( and are automatic because and by (8)). Apply the lemma to and on (the minus sign before guarantees the inequality and the factor of inside is irrelevant for application of the lemma). We arrive at the following estimate for the approximation error on those intervals.

Although that is only a part of the error, it is already of the same order as in the theorem.

Step 3. To estimate the approximation error outside , we use another known technical result.

Lemma 10 (Weighted first derivative test).

[21, Lemma 5.5.5] Let be a real function, three times continuously differentiable for , and let be a real function, twice continuously differentiable for . Suppose that there are positive parameters , with and positive constants such that, for ,

for and . If and do not change sign on the interval , then

This lemma in particular requires the interval to be sufficiently small. By this reason we decompose the initial interval into a large number of intervals by the points

Here and are given by (48) above. The other points are given by

| (49) |

The indices and are the minimal ones such that and . Thus all the resulting intervals except and its neighbors have the same length . (Although it is more conceptual to decompose using a geometric sequence rather than arithmetic one, this does not affect the final estimate here.)

We have already applied Lemma 3 to and we are going to apply Lemma 10 to each of the remaining intervals in the decomposition for (this time it is not necessary to change the sign of ). After summation of the resulting estimates, all the terms involving the values and at the endpoints, except the leftmost and the rightmost ones, are going to cancel out. The remaining boundary terms give

| (50) |