Affiliations: (1) email: {m.baktashmotlagh, a.eriksson}@uq.edu.au; (2) Mila, University of Montreal; (3) University of Toronto; (4) Samsung AI Research Center, Toronto; (5) Huawei Noah’s Ark Lab, London

Few-Shot Single-View 3-D Object Reconstruction with Compositional Priors

Abstract

The impressive performance of deep convolutional neural networks in single-view 3D reconstruction suggests that these models perform non-trivial reasoning about the 3D structure of the output space. However, recent work has challenged this belief, showing that complex encoder-decoder architectures perform similarly to nearest-neighbor baselines or simple linear decoder models that exploit large amounts of per-category data in standard benchmarks. A more realistic setting, however, involves inferring the 3D shape of objects with few available examples; this requires a model that can successfully generalize to novel object classes. In this work we demonstrate experimentally that naive baselines fail in this few-shot learning setting, where the network must learn informative shape priors for inference of new categories. We propose three ways to learn a class-specific global shape prior directly from data. Using these techniques, our learned prior is able to capture multi-scale information about the 3D shape and to account for intra-class variability by virtue of an implicit compositional structure. Experiments on the popular ShapeNet dataset show that, in the few-shot setting, our method outperforms both a zero-shot baseline and the current state of the art in terms of relative performance.

Keywords:

3D reconstruction, few-shot learning, compositionality, CNN

1 Introduction

Inferring the 3D geometry of an object, or a scene, from its 2D projection on the image plane is a classical computer vision problem with a plethora of applications, including object recognition, scene understanding, medical diagnosis, animation, and more. After decades of research this problem remains challenging as it is inherently ill-posed: there are many valid 3D object shapes that correspond to the same 2D projection.

Traditional multi-view geometry and shape-from-X methods try to resolve this ambiguity by using multiple images of the same object/scene from different viewpoints to find a mathematical solution to the inverse 2D-to-3D reconstruction mapping. Notable examples of such methods include [24, 13, 31, 10, 9].

In contrast to the challenges faced by all these methods, humans can solve this ill-posed problem relatively easily, even using just a single image. Through experience and interaction with objects, people accumulate prior knowledge about their 3D structure, and develop mental models of the world, that allow them to accurately predict how a 2D scene could be “lifted” in 3D, or how an object would look from a different viewpoint.

The question then becomes: “how can we incorporate similar priors into our models?”. Early works use CAD models [12]. Xu et al. [33] use low-level priors and mid-level Gestalt principles such as curvature, symmetry, and parallelism, to regularize the 3D reconstruction of a 2D sketch. However, all these methods require a highly detailed, hand-crafted specification of the model priors and do not easily permit learning about shapes from data; this is impractical and does not capture the intuitive way people reason about the 3D world.

Motivated by the success of deep convolutional networks (CNNs) in multiple domains, the community has recently switched to an alternative paradigm, where more sophisticated priors are directly learned from data. The idea is straightforward: given an appropriate set of paired data, one can train a model that takes as input a 2D image and outputs a 3D shape. Most of these works rely on an encoder-decoder architecture, where the encoder extracts a latent representation of the object depicted in the image, and the decoder maps that representation into a 3D shape [23, 2, 15]. Many works have studied ways to make the 3D decoder more efficient, attempting to improve the shape representation. Looking at the high-quality outputs obtained, it is reasonable to assume that, indeed, these models learn to perform non-trivial reasoning about 3D object structure.

Surprisingly, recent works [16, 28] have shown that this is not the case. Tatarchenko et al. [28] argue that, because of the way current benchmarks are constructed, even the most sophisticated learning methods end up finding shortcuts, and can rely primarily on recognition to address the single-view 3D reconstruction task. Their experiments show that modern CNNs for 3D reconstruction are outperformed by simple nearest neighbor (NN) or classification baselines, both quantitatively and qualitatively. Similarly, [16] showed that simple linear decoder models learned by PCA are sufficient to achieve high performance. There is one caveat though: to achieve good performance with these baselines, having a large dataset is crucial. More importantly, true 3D shape understanding implies good generalization to new object classes. This is trivial for humans (we can reason about the 3D structure of unknown objects, drawing on our inductive bias from similar objects we have seen) but still remains an open computer vision problem.

Based on this observation, we argue that single-view 3D reconstruction is of particular interest in the few-shot learning setting. Our hypothesis is that learning to recover 3D shapes using few examples, while promoting generalization to novel classes, provides a good setup for the development and evaluation of models that go beyond simple categorization and actually learn about shape.

To the best of our knowledge, the first work of this kind is by Wallace and Hariharan [29]. Instead of directly learning a mapping from 2D images to 3D shapes, [29] train a model that uses features extracted from 2D images to refine an input shape prior into a final output. Their framework allows one to easily adapt the shape prior and use it when inferring new classes. However, their approach has several restrictions. First, when presented with multiple example shapes of a new class, the shapes are either averaged or a random one is selected; both approaches lead to a collapse of intra-class variability. Second, the method does not explicitly force inter-class concepts to be learned.

In this work we first demonstrate empirically that the few-shot generalization benchmark is not susceptible to the naive baselines described in [28]. We then address the aforementioned shortcomings of [29] on this task. We investigate three strategies to construct the shape prior, focusing on modelling intra-class variability, compositionality and multi-scale conditioning. More specifically, we first learn a shape prior that captures intra-class variability by solving an optimization problem involving all shapes available for the new class. We then introduce a compositional bias in the shape prior that allows us to build shared concepts across classes that can be transferred to new ones. Finally, we make use of conditional batchnorm to impose class conditioning explicitly at multiple scales of the decoding process.

In summary, we make the following contributions:

- We investigate the few-shot learning setting for 3D shape reconstruction and demonstrate that this set-up constitutes an ideal testbed for the development of methods that reason about shapes.

- We introduce three strategies for shape prior modelling. We notably introduce a compositional approach that successfully exploits similarities across classes.

- Extensive experiments demonstrate that we outperform the state of the art by a significant margin, generalizing to new classes more accurately.

2 Related Work

2.1 Single-view 3D Reconstruction

Recent work on 3D reconstruction from single or multiple views has focused on finding better shape representations and decoder models, as alternatives to the commonly used discretized voxel grid and corresponding 3D CNN decoder [2, 7, 32, 34, 35], that permit more efficient learning and generation. These include point clouds [4], meshes [30], and signed distance transform based representations [19, 16]. Indeed, there is no agreed-upon canonical 3D shape representation and decoder structure for use with deep learning models. However, [28, 16] have shown that, although many of these models improve over each other, they do not beat naive baselines such as nearest neighbors.

2.2 Few-shot Learning

Few-shot learning has become a highly popular research topic in computer vision and machine learning [22, 6]. Typically research focuses on the classification task, with few works investigating more complex problems such as segmentation [26] or object detection. Existing methods comprise two categories: meta-learning/meta-gradient based approaches [5], and metric-learning [21, 27]. The former aims to teach models to adapt quickly, in a few gradient updates, to new unseen classes, while the latter learns a distance metric such that the distance of a query image to the few annotated examples of the same class is minimal.

3 Methods

Let $\mathcal{D}_b = \{(I_i, S_i)\}$ be a set of image-shape pairs, each belonging to one of $N_b$ base object classes. We assume that $\mathcal{D}_b$ is large, i.e., contains enough training examples for our purposes. We also consider a much smaller set $\mathcal{D}_n$, containing examples of $N_n$ novel classes. Each class in $\mathcal{D}_n$ possesses only a small set of $K$ image-shape pairs $\{(I_k, S_k)\}_{k=1}^{K}$, and a large set of test or query images. Given $\mathcal{D}_b$, our objective is to use its abundant data to build a model that takes a 2D image $I$, containing a single object, as input, and outputs a 3D reconstruction of the object, $\hat{S}$. Similar to previous works employing an encoder-decoder architecture, we choose voxels as our 3D shape representation. At the same time, we also want our model to be able to leverage the limited data in $\mathcal{D}_n$ to successfully generalize to novel categories.

We propose three strategies to achieve this. We first introduce our global class embedding approach (GCE) in Section 3.1. GCE models the shape prior of a specific object class as a learned global embedding. In Section 3.2, we describe a compositional extension of GCE, which aims to learn compositional representations across classes and exploit their similarities. Finally, in Section 3.3 we investigate multi-scale conditioning via conditional batch normalization [20].

3.1 Shape Encoding and Global Class Embedding

Consider an encoder-decoder framework involving

- an encoder $f_E^{\theta_E}$ that takes a 2D image, $I$, and outputs its embedding, $z_I = f_E^{\theta_E}(I)$;

- a category-specific shape embedding, $z_c$;

- a decoder $f_D^{\theta_D}$ that takes the image and shape embeddings and outputs the reconstructed 3D shape, $\hat{S}$, in the form of a voxelized grid.

This relationship is formally expressed by

$$\hat{S} = f_D^{\theta_D}\big(f_E^{\theta_E}(I),\, z_c\big). \tag{1}$$

This model can be trained using a binary cross entropy loss on the voxel occupancy confidence $\hat{S}_v$ for each voxel $v$ in the output grid, given the ground-truth occupancy $S_v$:

$$\mathcal{L}(\hat{S}, S) = -\sum_{v} \big[ S_v \log \hat{S}_v + (1 - S_v) \log (1 - \hat{S}_v) \big]. \tag{2}$$

In the rest of the text, we drop $\theta_E$ and $\theta_D$ for notational simplicity. For base class training, $f_E$, $f_D$ and $z_c$ are learned by minimizing (2). For inference on examples of new classes, $z_c$ is computed and fed along with the extracted image embedding $z_I$ as input to the trained network.
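As an illustration of the loss in (2), the following is a minimal NumPy sketch of binary cross entropy over a voxel grid. The $32^3$ grid size, the clipping constant `eps`, and the use of a mean rather than a sum over voxels are assumptions made for the example, not details taken from the text.

```python
import numpy as np

def voxel_bce(pred, target, eps=1e-7):
    """Mean binary cross entropy between predicted occupancy
    probabilities `pred` and a binary ground-truth grid `target`."""
    p = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)))

rng = np.random.default_rng(0)
# Hypothetical 32x32x32 ground-truth occupancy grid.
target = (rng.random((32, 32, 32)) > 0.5).astype(np.float64)

# A prediction of 0.5 everywhere carries no information about the shape.
uninformed = np.full_like(target, 0.5)
# A prediction that leans towards the correct occupancy for every voxel.
confident = np.where(target == 1.0, 0.9, 0.1)

loss_uninformed = voxel_bce(uninformed, target)
loss_confident = voxel_bce(confident, target)
print(loss_uninformed, loss_confident)
```

The uninformed prediction yields a loss of $\log 2$ regardless of the target, which is a convenient reference point when reading training curves.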

In [29] (illustrated in Figure 2 (top)), $z_c$ is computed with a shape encoder $f_S$ that takes a category-specific shape prior $P_c$; $P_c$ is either a randomly selected shape from the set of training shapes associated with class $c$, or the average of all training shapes for the class in voxel space. Providing an explicitly defined single shape or average shape as a shape prior has severe limitations. Such a prior cannot account for intra-class variability and is therefore intrinsically sub-optimal in settings where more than one new example is considered. We propose to address this issue by learning a global class embedding (GCE), $z_c$, that captures the “essence” of object class $c$. The GCE is built using all available shapes for a particular class; we expect a high-dimensional latent representation to be much more successful in capturing nuances (like intra-class variability) than simple shape averaging.

Our framework is illustrated in Figure 2 (bottom). The encoder $f_E$ and decoder $f_D$ are trained jointly with the base class embeddings $z_c$ on the set of base classes, minimizing (2). For a novel class $c$ with a small training set $\mathcal{D}_c = \{(I_k, S_k)\}_{k=1}^{K}$, all model parameters of $f_E$ and $f_D$ are fixed, and the class-specific embedding is obtained by solving

$$z_c^{*} = \arg\min_{z_c} \sum_{(I, S) \in \mathcal{D}_c} \mathcal{L}\big(f_D(f_E(I), z_c),\, S\big)$$

using all of the available training images. We note that, due to our few-shot set-up, this optimization problem can be solved in few iterations since it only involves a small set of parameters ($z_c$) and a small number of new samples. By construction, this model does not lose performance on base classes and can continually add multiple new classes, while at the same time learning an implicit shape prior representation to guide the decoding process.
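The adaptation step described above (freeze the encoder and decoder, optimize only the class embedding on the few available pairs) can be sketched on a toy problem. Everything below is an illustrative assumption rather than the authors' implementation: the "decoder" is a single frozen linear layer with a sigmoid, the encoder outputs are random vectors, the embedding starts at zero, and plain gradient descent is used.

```python
import numpy as np

rng = np.random.default_rng(0)
d_img, d_cls, n_vox, K = 8, 4, 64, 5  # toy sizes (hypothetical)

# Frozen toy decoder: one linear layer + sigmoid on the concatenated embeddings.
W = rng.normal(scale=0.5, size=(n_vox, d_img + d_cls))

def decode(z_img, z_cls):
    logits = W @ np.concatenate([z_img, z_cls])
    return 1.0 / (1.0 + np.exp(-logits))

def bce(p, s, eps=1e-7):
    p = np.clip(p, eps, 1.0 - eps)
    return -np.mean(s * np.log(p) + (1.0 - s) * np.log(1.0 - p))

# Synthetic K-shot support set generated from a hidden "true" class embedding.
z_true = rng.normal(size=d_cls)
z_imgs = rng.normal(size=(K, d_img))  # stand-ins for frozen encoder outputs
shapes = np.stack([(decode(z, z_true) > 0.5).astype(float) for z in z_imgs])

def support_loss(z_cls):
    return float(np.mean([bce(decode(z, z_cls), s) for z, s in zip(z_imgs, shapes)]))

z_cls = np.zeros(d_cls)  # the only trainable variable
loss_before = support_loss(z_cls)

lr = 0.5
for _ in range(200):
    grad = np.zeros(d_cls)
    for z, s in zip(z_imgs, shapes):
        p = decode(z, z_cls)
        # d(mean BCE)/d(logits) = (p - s) / n_vox; chain rule through
        # the decoder columns that multiply the class embedding.
        grad += W[:, d_img:].T @ (p - s) / n_vox
    z_cls -= lr * grad / K

loss_after = support_loss(z_cls)
print(loss_before, loss_after)
```

Because only `z_cls` is updated, the decoder weights `W` are untouched, which mirrors why adding a novel class in this way cannot degrade base-class performance.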

Finally, we note that we use concatenation at the first stage of $f_D$ to combine $z_I$ and $z_c$, instead of the element-wise sum of $z_I$ and $z_c$ used in [29].

3.2 Compositional Global Class Embeddings

GCE allows us to exploit all available class-specific training shapes to learn a representative shape prior. However, the learned global embeddings do not explicitly exploit similarities across different classes, which may result in sub-optimal and potentially redundant representations. Exploiting such similarities would allow increased robustness in the lowest data regimes. We introduce a novel strategy to address this issue, attempting to learn compositional representations between classes, which we call Compositional Global Class Embeddings (CGCE). This model is illustrated in Figure 3.

Our objective is to explicitly encourage the model to discover shared concepts that can be reused across shapes. Taking inspiration from work on compressing word embeddings [25], we propose to decompose our class representation into a linear combination of vectors that are shared across classes. More specifically, we learn a set of $M$ codebooks $\{\mathcal{B}_m\}_{m=1}^{M}$ (or embedding tables), with each codebook comprising $J$ individual embedding vectors (or codes) $b_{m,j}$, where $j \in \{1, \dots, J\}$. Intuitively, each codebook can be interpreted as the representation of an abstract concept which can be shared across multiple classes.

For each class $c$, a learned attention vector $\alpha^c$ selects the most relevant code(s) from each codebook. The codes of all codebooks are then combined to yield a final embedding:

$$z_c = \sum_{m=1}^{M} \sum_{j=1}^{J} \alpha^c_{m,j}\, b_{m,j},$$

where $\alpha^c_{m,j}$ corresponds to the scalar attention on code $j$ of codebook $m$, while $b_{m,j}$ corresponds to code $j$ of codebook $m$. During base class training we learn both the attentions $\alpha^c$ and the codes $b_{m,j}$. We highlight that codebooks are shared across classes and therefore need only be trained on base classes. As a result, $\alpha^c$ constitutes the only class-specific variable to fine-tune on novel classes, using the $K$ training pairs $\mathcal{D}_c$ of the novel class:

$$\alpha^{c*} = \arg\min_{\alpha^c} \sum_{(I, S) \in \mathcal{D}_c} \mathcal{L}\big(f_D(f_E(I), z_c),\, S\big).$$

Since we would like each codebook to assign a discrete attribute to each class, we aim for the model to select few codes in a given codebook. We thus use a form of attention that relies on the sparsemax [14] operator. Specifically, each codebook's attention is given by $\alpha^c_m = \operatorname{sparsemax}(w^c_m)$, where $w^c_m$ is a learned parameter and the sparsemax operator gives an output that sums to $1$, but will typically attend to just a few outputs.
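To make the composition concrete, here is a minimal NumPy sketch of sparsemax attention over codebooks, followed by the weighted sum of codes. The sparsemax routine follows the sorting-based simplex projection of [14]; the codebook sizes (5 codebooks, 6 codes, dimension 128) come from Section 4.2, while the random initial values and the name `scores` for the learned per-class parameters are assumptions for illustration.

```python
import numpy as np

def sparsemax(w):
    """Euclidean projection of a 1-D score vector onto the probability simplex."""
    z = np.sort(w)[::-1]                 # scores in decreasing order
    cumsum = np.cumsum(z)
    ks = np.arange(1, len(w) + 1)
    support = 1 + ks * z > cumsum        # True for the first k(z) sorted entries
    k = ks[support][-1]
    tau = (cumsum[k - 1] - 1) / k        # threshold shared by all entries
    return np.maximum(w - tau, 0.0)

rng = np.random.default_rng(0)
M, J, d = 5, 6, 128                      # codebooks, codes per codebook, code dimension

codebooks = rng.uniform(-1.0, 1.0, size=(M, J, d))   # shared across classes
scores = rng.normal(scale=2.0, size=(M, J))          # class-specific parameters

# One sparse attention vector per codebook, then a weighted sum of all codes.
attention = np.stack([sparsemax(s) for s in scores])
z_class = np.einsum("mj,mjd->d", attention, codebooks)

print(attention.round(2))
print(z_class.shape)
```

Unlike softmax, sparsemax can assign exactly zero weight to a code, so each codebook tends to contribute only one or two codes to the class embedding.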

3.3 Multi-scale Conditional Class Embeddings

Another inductive bias we can add is the use of multi-scale structure in the shape construction. An elegant way to do this is by applying the conditional batchnorm technique [20] to the 3D decoder model. Conditional batch normalization replaces the affine parameters in all batch-norm layers with class-dependent embeddings at each layer. Since 3D decoders have an inherent multi-scale structure that gradually creates more refined areas in the output, each layer’s batch-norm parameters can be seen as defining the class-conditional structure at different scales. As in GCE, when presented with novel classes we learn the conditional batchnorm parameters for the new class, keeping all other weights frozen. We refer to this approach as Multi-scale Conditional Class Embeddings (MCCE).
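The mechanism can be sketched in a few lines of NumPy: features are normalized with batch statistics as usual, but the scale and shift are looked up per sample from class-indexed tables. This is a simplified training-mode sketch (no running statistics); the tensor layout `(batch, channels, depth, height, width)` and the table names `gamma` and `beta` are assumptions for the example.

```python
import numpy as np

def conditional_batchnorm(x, class_ids, gamma, beta, eps=1e-5):
    """x: (B, C, D, H, W) features; class_ids: (B,) integer labels;
    gamma, beta: (num_classes, C) per-class affine parameter tables."""
    mean = x.mean(axis=(0, 2, 3, 4), keepdims=True)
    var = x.var(axis=(0, 2, 3, 4), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    # Look up each sample's class-specific scale and shift, broadcast over space.
    g = gamma[class_ids][:, :, None, None, None]
    b = beta[class_ids][:, :, None, None, None]
    return g * x_hat + b

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8, 4, 4, 4))
class_ids = np.array([0, 0, 1, 1])

gamma = np.ones((2, 8))
beta = np.zeros((2, 8))
beta[1] = 3.0                           # class 1 gets a different shift

out = conditional_batchnorm(x, class_ids, gamma, beta)
plain = conditional_batchnorm(x, class_ids, gamma, np.zeros((2, 8)))
print(out.shape)
```

Adapting to a novel class then amounts to appending and training one new row in each `gamma` and `beta` table per layer, with everything else held fixed.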

3.4 Nearest Neighbor Oracle, Zero-Shot and All-Shot Baselines

In this section, we introduce and discuss three simple baselines that are used in our experiments. First, we consider an oracle nearest neighbor (ONN) [28] baseline. Given a query 3D shape, ONN exhaustively searches a shape database for the most similar entry with respect to a given metric (Intersection-over-Union, IoU, in this case). Although this method cannot be applied in practice, it provides an upper bound on how well a retrieval method can perform on the task. With ONN, we aim to show that, unlike the standard paradigm [28], the few-shot generalization benchmark cannot be solved with such a naive baseline.
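A minimal sketch of the ONN search follows, assuming binary voxel grids stored as NumPy boolean arrays. The function names and the toy $8^3$ database are hypothetical; the key point is that the search uses the ground-truth query shape, which is why it is an oracle and not a usable method.

```python
import numpy as np

def iou(a, b):
    """Intersection over Union of two boolean voxel grids."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return float(inter / union) if union > 0 else 1.0

def oracle_nearest_neighbor(query_gt, database):
    """Return (index, IoU) of the database shape closest to the ground-truth query."""
    scores = [iou(query_gt, s) for s in database]
    best = int(np.argmax(scores))
    return best, scores[best]

rng = np.random.default_rng(0)
database = [rng.random((8, 8, 8)) > 0.5 for _ in range(10)]

# Query: a copy of entry 3 with a single voxel flipped.
query = database[3].copy()
query[0, 0, 0] = not query[0, 0, 0]

idx, score = oracle_nearest_neighbor(query, database)
print(idx, round(score, 3))
```

In the few-shot setting the database contains only the handful of shapes available for the novel class, so the best retrievable match can be far from the query.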

We further consider a zero-shot (ZS) baseline and an all-shot (AS) baseline. For the ZS baseline, we train the encoder-decoder model described in Eq. (1) on the base classes and use it to infer 3D shapes for novel classes, without using the category-specific shape embedding $z_c$. We expect this to give a lower bound on performance, since it does not make any use of shape prior information. For the AS baseline, we merge the base class and novel class datasets, obtaining $\mathcal{D}_b \cup \mathcal{D}_n$. We train the model on this joint dataset and then test on examples from only the novel classes. We expect that this baseline will set an upper bound on the performance of the vanilla encoder-decoder architecture, since the model also has access to the examples from the novel classes in $\mathcal{D}_n$.

4 Experiments

4.1 Dataset and Evaluation Protocol

For our experiments we use the ShapeNetCore_v1.0 [1] dataset and the few-shot generalization benchmark of [29]. As in [29], we use the following 7 categories as our base classes: plane, car, chair, display, phone, speaker, table. We also use the following 10 categories as our novel classes: bench, cabinet, lamp, rifle, sofa, watercraft, knife, bathtub, guitar, laptop. Note that we have added categories to the standard benchmark for a more extensive evaluation. Our data comes in the form of pairs of sRGB images rendered using Blender [3], and voxelized representations obtained using Binvox [18, 17]. Each 3D model has 24 associated images, rendered from random viewpoints. For evaluation we use the standard Intersection over Union (IoU) score to compare predicted shapes $\hat{S}$ to ground truth shapes $S$: $\mathrm{IoU} = |\hat{S} \cap S| \,/\, |\hat{S} \cup S|$.

4.2 Implementation Details

All methods are trained on the 7 base classes except for the AS-baseline which is trained on all 17 categories. All methods share the same 2D encoder and 3D decoder architectures. We use the same 2D encoder as in [23, 29], a ResNet [8] that takes a image as input, and outputs a 128-dimensional embedding. Our 3D decoder consists of 7 convolutional layers, followed by batch-normalization, and ReLU activations. For training, we use the same 80-20 train-test split as in R2N2 [2, 29]. Unless otherwise stated, we use as the learning rate and ADAM [11] as the optimizer. All networks are trained with binary cross entropy on the predicted voxel presence probabilities in the output 3D grid.

ZS-Baseline is trained on the 7 base categories for 25 epochs. We use the trained model to make predictions for novel classes without further adaptations.

AS-baseline is trained on all 17 categories for 25 epochs. Note that we do not use any pre-trained weights, but rather train this baseline model from a random initialization.

Wallace et al. [29]. To ensure a fair comparison in our experiments, we re-implemented this framework, using the exact same settings reported in the respective paper. In the supplementary material we include a comparison only on the subset of classes used in [29], validating that our implementation yields practically identical results.

GCE. We use the same architecture as in the baseline models and in [29]. As mentioned in Section 3.1, we concatenate the 128-dimensional embeddings from the 2D encoder and the conditional branch (as opposed to [29], which uses element-wise addition), and we feed the resulting 256-dimensional embedding into the 3D decoder. The class conditioning vectors are initialized randomly from a normal distribution. After training the GCE on the base classes, we freeze the parameters of the encoder $f_E$ and decoder $f_D$ and initialize the novel class embeddings as the average of the learned base class encodings. We then optimize the novel class embeddings using stochastic gradient descent (SGD) with momentum.

CGCE. The conditional branch is composed of 5 codebooks, each containing 6 codes of dimension 128, and an attention array with one attention value per (class, codebook, code) triplet. The codes and attention values are initialized using a uniform distribution. During training, we push the attention array to focus on meaningful codes by employing sparsemax [14], a modification of the standard softmax function that promotes sparsity of the output. After training the CGCE on the base classes, we freeze the parameters of the encoder $f_E$ and decoder $f_D$, as well as the codebook entries $b_{m,j}$. We initialize the novel class attentions from a uniform distribution. We then optimize the novel class attentions using stochastic gradient descent (SGD) with momentum.

MCCE. We replace all batch normalization (bnorm) layers in the 3D decoder with conditional batch normalization (cond-bnorm) [20]. More precisely, the affine parameters $\gamma$ and $\beta$ are initialized from a normal distribution and conditioned on the class $c$ ($\gamma_c$, $\beta_c$). For novel class adaptation, only the $\gamma_c$ and $\beta_c$ of the new classes are learned. We use SGD as the optimizer, with momentum set to 0.9, for this novel class adaptation.

4.3 Evaluating the Zero-Shot Baseline and Nearest Neighbor Oracle

| cat | ZS-Baseline | AS-baseline | ONN_1 | ONN_2 | ONN_3 | ONN_4 | ONN_5 | ONN_10 | ONN_25 | ONN_full |
|---|---|---|---|---|---|---|---|---|---|---|
| bench | 0.366 | 0.524 | 0.238 | 0.240 | 0.245 | 0.271 | 0.276 | 0.360 | 0.420 | 0.708 |
| cabinet | 0.686 | 0.753 | 0.400 | 0.458 | 0.460 | 0.461 | 0.480 | 0.495 | 0.631 | 0.842 |
| lamp | 0.186 | 0.368 | 0.153 | 0.162 | 0.177 | 0.189 | 0.194 | 0.223 | 0.282 | 0.515 |
| firearm | 0.133 | 0.561 | 0.377 | 0.396 | 0.420 | 0.425 | 0.434 | 0.510 | 0.550 | 0.707 |
| sofa | 0.519 | 0.692 | 0.445 | 0.458 | 0.459 | 0.530 | 0.534 | 0.579 | 0.616 | 0.791 |
| watercraft | 0.283 | 0.560 | 0.259 | 0.286 | 0.317 | 0.354 | 0.372 | 0.479 | 0.527 | 0.697 |
| mean_novel | 0.362 | 0.576 | 0.312 | 0.333 | 0.346 | 0.371 | 0.381 | 0.441 | 0.504 | 0.710 |

We first illustrate that naive baselines perform poorly in the few-shot setting of [29], which requires good generalization about shapes. We do this by comparing against two baseline methods, shown in Table 1. The first is a zero-shot (ZS) baseline, where a model is trained on base classes and used directly to infer shapes of novel classes. The second, the all-shot (AS) baseline, is trained on all classes using the full data (which has 1000-8000 shapes per class). We can expect these two baselines to be lower and upper bounds, respectively, on performance in the few-shot generalization benchmark. We also evaluate the nearest neighbor oracle described in Sec. 3.4 at several shot levels (ONN_1 through ONN_25 in Table 1) as well as with the full data (ONN_full). We observe that the full nearest neighbor oracle outperforms the deep learning model trained on all the data (as observed in [29]), indicating that the full-data task does not require generalization. On the other hand, in the few-shot case the oracle's performance is often below that of even the ZS baseline, indicating that this task is appropriate for evaluating model generalization.

Note that the ZS baseline in Table 1 already obtains relatively high performance on select classes (sofa and cabinet); we hypothesize that this is due to their similarity to base classes. To better evaluate these methods, we compute a similarity metric between each novel class and the base classes, defined as the average IoU of the nearest neighbor from the base classes. Specifically, for each shape $S_n$ in a novel class we compute $\max_{S_b \in \mathcal{D}_b} \mathrm{IoU}(S_n, S_b)$, and we average this value over all shapes in the class to obtain the proximity score. The proximity scores for the different classes are given in Table 2. We observe in Figure 4 that the higher-performing zero-shot cases indeed correspond to close-proximity classes; we thus use the proximity criterion as an additional consideration when comparing methods. Furthermore, to increase the number of classes that are distant from the base class set, we add 4 far-proximity classes from ShapeNet (knife, bathtub, laptop, and guitar) to the original benchmark used in [29]; these are included in Table 2.
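The proximity score can be sketched as follows, assuming shapes are boolean voxel grids. The toy $4^3$ shapes below are constructed by hand so that the result is easy to verify and are not taken from ShapeNet; the function names are hypothetical.

```python
import numpy as np

def iou(a, b):
    """Intersection over Union of two boolean voxel grids."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return float(inter / union) if union > 0 else 1.0

def proximity(novel_shapes, base_shapes):
    """Average, over novel shapes, of the best IoU against any base shape."""
    return float(np.mean([max(iou(n, b) for b in base_shapes) for n in novel_shapes]))

def slab(lo, hi):
    """Toy 4x4x4 shape occupying slices lo..hi-1 along the first axis."""
    g = np.zeros((4, 4, 4), dtype=bool)
    g[lo:hi] = True
    return g

base = [slab(0, 2), slab(2, 4)]
novel = [slab(0, 3), slab(1, 3)]

# slab(0,3): best IoU is 32/48 = 2/3 (against slab(0,2)).
# slab(1,3): best IoU is 16/48 = 1/3 (against either base shape).
score = proximity(novel, base)
print(score)
```

Scoring the base set against itself gives a proximity of 1, which corresponds to the "base" row of Table 2.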

4.4 Evaluating Few-Shot Generalization

| cat | inter-class proximity |
|---|---|
| base | 1 |
| cabinet | 0.794 |
| sofa | 0.671 |
| bench | 0.574 |
| watercraft | 0.539 |
| knife | 0.458 |
| bathtub | 0.436 |
| laptop | 0.432 |
| guitar | 0.429 |
| lamp | 0.347 |
| firearm | 0.290 |

We now evaluate the methods discussed in Sec. 3. We evaluate the three methods in the 1-shot setting in Table 3. We report both the IoU and, in parentheses, the relative improvement over the ZS baseline. Note that, as the ZS baseline provides strong performance for easy classes, the average IoU is dominated by these; relative improvement is thus a more meaningful metric for aggregation across classes. Observe that GCE improves performance over the method of [29], particularly in cases where the classes are distant, obtaining a 44.7% improvement over zero-shot overall, compared to 40.2% for [29]. CGCE and MCCE give a more drastic improvement in performance, obtaining 53.7% and 52.2% respectively, by adding compositional and multi-scale priors to the simple class prior of [29].

| cat | B0 (0-shot) | AS (all-shot) | Wallace (1-shot) | GCE (1-shot) | CGCE (1-shot) | MCCE (1-shot) |
|---|---|---|---|---|---|---|
| cabinet | 0.69 | 0.75 | 0.69 (0.00) | 0.69 (0.01) | 0.71 (0.03) | 0.69 (0.01) |
| sofa | 0.52 | 0.69 | 0.54 (0.04) | 0.52 (0.00) | 0.54 (0.04) | 0.54 (0.03) |
| bench | 0.37 | 0.52 | 0.37 (0.00) | 0.37 (0.00) | 0.37 (0.00) | 0.37 (0.00) |
| watercraft | 0.28 | 0.56 | 0.33 (0.16) | 0.34 (0.19) | 0.39 (0.39) | 0.37 (0.29) |
| knife | 0.12 | 0.60 | 0.30 (1.47) | 0.26 (1.13) | 0.31 (1.50) | 0.27 (1.19) |
| bathtub | 0.24 | 0.46 | 0.26 (0.05) | 0.27 (0.09) | 0.28 (0.13) | 0.27 (0.11) |
| laptop | 0.09 | 0.56 | 0.21 (1.30) | 0.27 (1.85) | 0.29 (2.10) | 0.27 (1.87) |
| guitar | 0.23 | 0.69 | 0.31 (0.38) | 0.30 (0.31) | 0.32 (0.42) | 0.30 (0.31) |
| lamp | 0.19 | 0.37 | 0.20 (0.05) | 0.20 (0.07) | 0.20 (0.05) | 0.22 (0.16) |
| firearm | 0.13 | 0.56 | 0.21 (0.58) | 0.24 (0.83) | 0.23 (0.70) | 0.30 (1.26) |
| mean (relative to B0), % | | | 40.2 | 44.7 | 53.7 | 52.2 |
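The parenthesized values and the final row of the table can be related with a short calculation. The sketch below assumes that relative improvement is defined as (IoU − IoU_ZS) / IoU_ZS, which is consistent with the reported numbers; since the table shows rounded IoUs, recomputed values may differ slightly from the published ones.

```python
def relative_improvement(iou_method, iou_zero_shot):
    """Relative gain of a method over the zero-shot baseline."""
    return (iou_method - iou_zero_shot) / iou_zero_shot

# Watercraft, CGCE vs. the zero-shot baseline (values copied from the table).
watercraft_cgce = relative_improvement(0.39, 0.28)

# Per-class relative improvements reported for Wallace et al. in the 1-shot column.
wallace_rel = [0.00, 0.04, 0.00, 0.16, 1.47, 0.05, 1.30, 0.38, 0.05, 0.58]
wallace_mean_pct = 100.0 * sum(wallace_rel) / len(wallace_rel)

print(round(watercraft_cgce, 2))
print(round(wallace_mean_pct, 1))
```

The recomputed mean lands within rounding error of the 40.2 reported in the last row, which suggests the published figure was averaged from unrounded per-class values.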

As discussed in Sec. 3, our approach based on global class embeddings is able to capture intra-class variability and thus extends more naturally beyond the 1-shot setting. In Table 4 we evaluate the compositional method (which performs best in the 1-shot evaluation) in the 10-shot and 25-shot settings and compare to [29]. We observe that, similar to the 1-shot case, most methods do not improve performance much for close-proximity classes. However, for more distant classes we see substantial performance improvements (sometimes over 200% relative improvement in IoU). Table 4 also shows that the advantage of CGCE over [29] grows with the number of shots: with more observations, the method obtains larger performance gains.

| cat | B0 (0-shot) | AS (all-shot) | Wallace (10-shot) | CGCE (10-shot) | Wallace (25-shot) | CGCE (25-shot) |
|---|---|---|---|---|---|---|
| cabinet | 0.69 | 0.75 | 0.69 (0.00) | 0.71 (0.03) | 0.69 (0.01) | 0.71 (0.04) |
| sofa | 0.52 | 0.69 | 0.54 (0.04) | 0.54 (0.04) | 0.54 (0.04) | 0.55 (0.06) |
| bench | 0.37 | 0.52 | 0.36 (-0.01) | 0.37 (0.03) | 0.36 (-0.01) | 0.38 (0.04) |
| watercraft | 0.28 | 0.56 | 0.36 (0.26) | 0.41 (0.45) | 0.37 (0.29) | 0.43 (0.53) |
| knife | 0.12 | 0.60 | 0.31 (1.52) | 0.32 (1.62) | 0.31 (1.57) | 0.35 (1.87) |
| bathtub | 0.24 | 0.46 | 0.26 (0.05) | 0.28 (0.16) | 0.26 (0.06) | 0.30 (0.23) |
| laptop | 0.09 | 0.56 | 0.24 (1.53) | 0.30 (2.24) | 0.27 (1.85) | 0.32 (2.45) |
| guitar | 0.23 | 0.69 | 0.32 (0.39) | 0.33 (0.47) | 0.32 (0.42) | 0.37 (0.62) |
| lamp | 0.19 | 0.37 | 0.19 (0.04) | 0.20 (0.05) | 0.19 (0.03) | 0.20 (0.07) |
| firearm | 0.13 | 0.56 | 0.24 (0.83) | 0.23 (0.75) | 0.26 (0.95) | 0.28 (1.08) |
| mean (relative to B0), % | | | 46.5 | 58.3 | 51.9 | 69.8 |

| cat | B0 | AS | GCE | GCE_rand |
|---|---|---|---|---|
| plane | 0.580 | 0.572 | 0.582 | 0.198 |
| car | 0.835 | 0.830 | 0.837 | 0.412 |
| chair | 0.504 | 0.500 | 0.510 | 0.284 |
| monitor | 0.516 | 0.508 | 0.520 | 0.346 |
| cellphone | 0.704 | 0.689 | 0.710 | 0.497 |
| speaker | 0.648 | 0.659 | 0.670 | 0.505 |
| table | 0.536 | 0.537 | 0.540 | 0.376 |

4.4.1 Validating class prior

It’s possible that in the GCE framework as well as in [29] the trained model can learn to ignore the conditioning information. In order to validate that the class codes in the GCE framework (on which CGCE and MCCE are based) are learning meaningful priors we perform a simple ablation shown in Table 5. After training on base classes we randomize the class selected at test time (GCE_rand) and observe that performance drops drastically, thus validating that the model is learning to use the class prior.

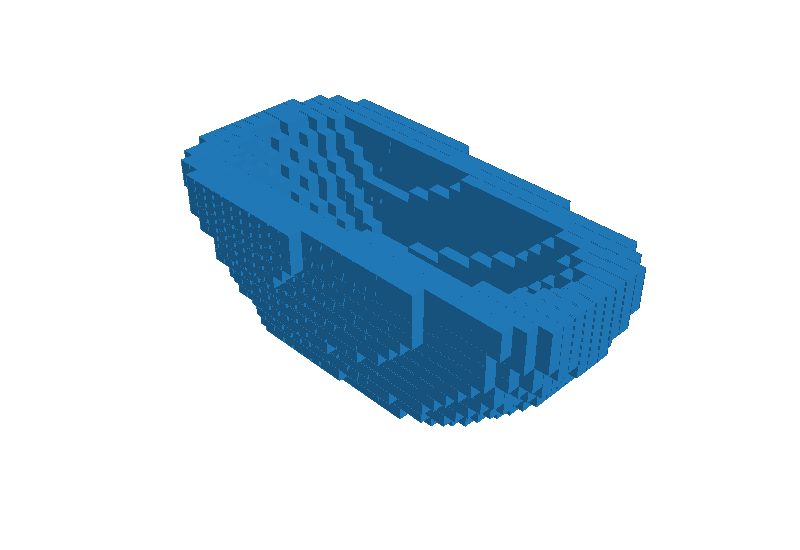

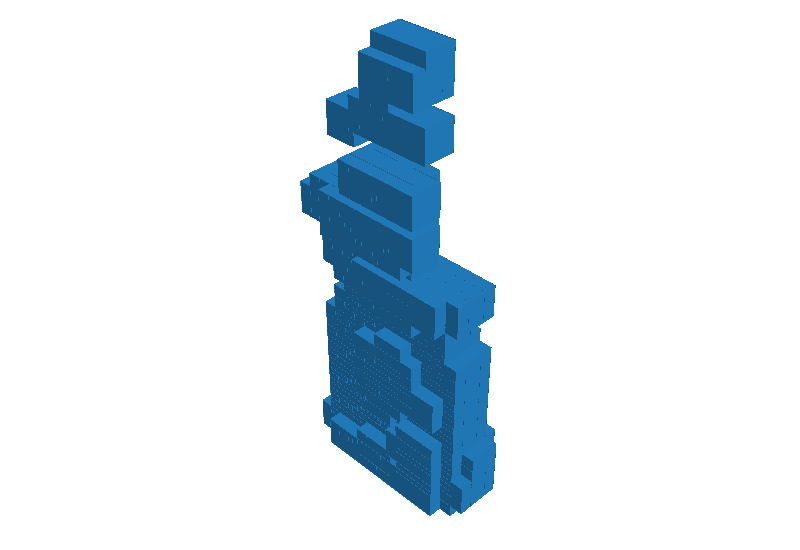

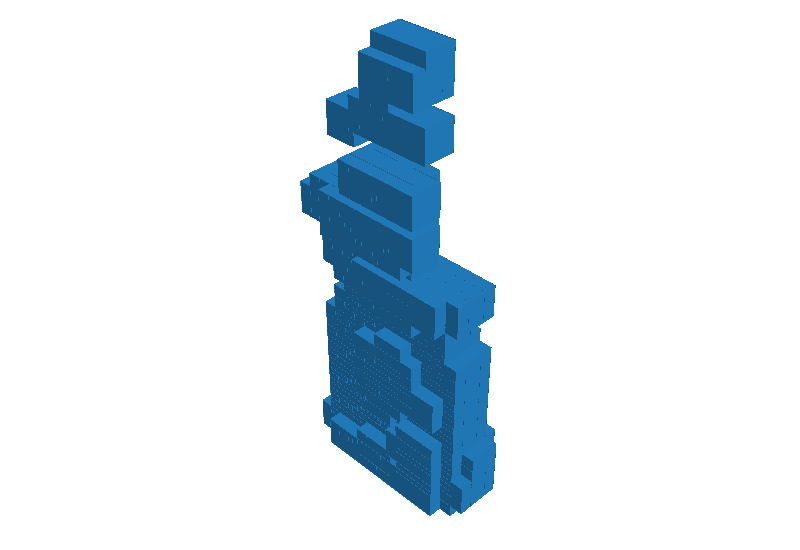

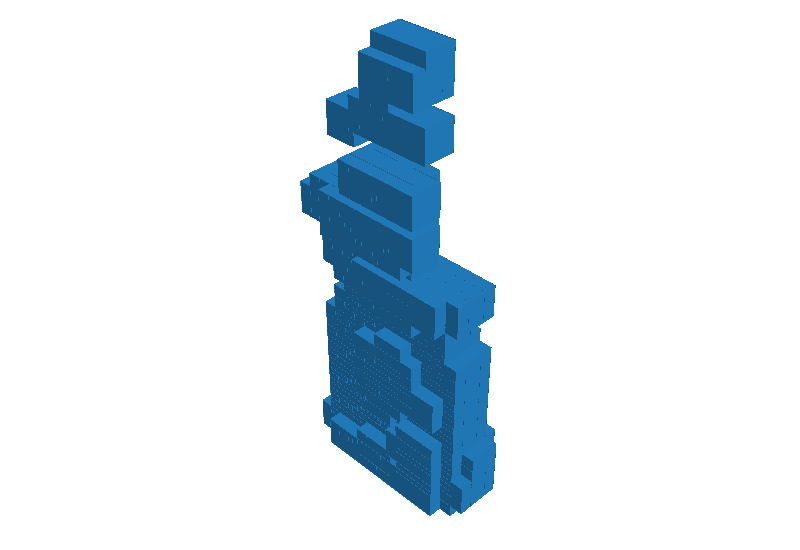

4.4.2 Analysis of the Compositional GCE

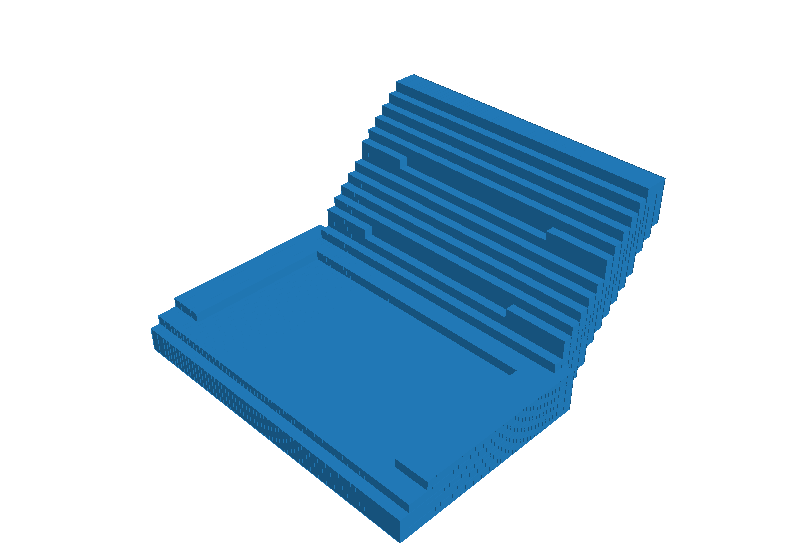

Here we qualitatively analyze the CGCE codes learned by our model. First, we attempt to visualize whether codebook entries are associated with visible concepts or parts. To do so, we generate a reconstruction for an input image and then randomly remove codebook entries. The results of these experiments are illustrated in Figure 6. We observe that removing some code entries can remove semantically meaningful portions of the overall reconstructed shape. For example, tables can be observed to lose their legs, table legs can turn into wheel-like structures, and planes can be observed to lose their wings. Thus, the codes exhibit, to an extent, some association with object parts.

[Figure 6: reconstructions shown in three rows: ground truth (GT), CGCE, and CGCE with codebook entries removed (CGCE-cb).]

We also explicitly analyze the attention learned over the codebook entries. In Figure 7 we use the IoU-based class similarity metric to associate each novel class with its closest base classes. We observe that when a novel class attends to the same code as a base class, this tends to align with our proximity metric, but not for all codebooks. Indeed, one would expect that classes sharing similar structures will have many similar concepts, but differ in some. The full visualization of these experiments is included in the supplementary materials.

4.4.3 Qualitative results

Finally we visualize our reconstructions as compared to those of [29] in the 25-shot case. We observe that the numerical performance gains can also be seen qualitatively in the reconstructions.

[Figure: qualitative reconstructions in the 25-shot case. Columns: 2D view, Zero-Shot, Wallace, CGCE, GT.]

5 Conclusions

We have highlighted that few-shot generalization can be an excellent benchmark for studying 3D deep learning models and their ability to generalize about object shapes. We addressed several key weaknesses of models in this setting, showing in particular that we can deal with intra-class variability and that inducing compositional and multi-scale priors can greatly improve performance on this benchmark. Future work in this area can study how the various shape representations that have been developed for 3D object reconstruction perform on this benchmark, and whether their inductive biases can further improve generalization, particularly for higher-resolution shapes.

References

- [1] Chang, A.X., Funkhouser, T., Guibas, L., Hanrahan, P., Huang, Q., Li, Z., Savarese, S., Savva, M., Song, S., Su, H., et al.: Shapenet: An information-rich 3d model repository. arXiv preprint arXiv:1512.03012 (2015)

- [2] Choy, C.B., Xu, D., Gwak, J., Chen, K., Savarese, S.: 3d-r2n2: A unified approach for single and multi-view 3d object reconstruction. In: Proceedings of the European Conference on Computer Vision (ECCV) (2016)

- [3] Community, B.O.: Blender - a 3D modelling and rendering package. Blender Foundation, Stichting Blender Foundation, Amsterdam (2018), http://www.blender.org

- [4] Fan, H., Su, H., Guibas, L.J.: A point set generation network for 3d object reconstruction from a single image. vol. 2, p. 6 (2017)

- [5] Finn, C., Abbeel, P., Levine, S.: Model-agnostic meta-learning for fast adaptation of deep networks. In: Proceedings of the 34th International Conference on Machine Learning-Volume 70. pp. 1126–1135. JMLR. org (2017)

- [6] Gidaris, S., Komodakis, N.: Dynamic few-shot visual learning without forgetting. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 4367–4375 (2018)

- [7] Girdhar, R., Fouhey, D.F., Rodriguez, M., Gupta, A.: Learning a predictable and generative vector representation for objects. In: European Conference on Computer Vision. pp. 484–499. Springer (2016)

- [8] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (2016)

- [9] Hoiem, D., Efros, A.A., Hebert, M.: Automatic photo pop-up. In: ACM SIGGRAPH 2005 Papers, pp. 577–584 (2005)

- [10] Horn, B.K.: Shape from shading: A method for obtaining the shape of a smooth opaque object from one view. Tech. rep., USA (1970)

- [11] Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

- [12] Kong, C., Lin, C.H., Lucey, S.: Using locally corresponding cad models for dense 3d reconstructions from a single image. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 4857–4865 (2017)

- [13] Kutulakos, K.N., Seitz, S.M.: A theory of shape by space carving. International journal of computer vision 38(3), 199–218 (2000)

- [14] Martins, A., Astudillo, R.: From softmax to sparsemax: A sparse model of attention and multi-label classification. In: International Conference on Machine Learning. pp. 1614–1623 (2016)

- [15] Mescheder, L., Oechsle, M., Niemeyer, M., Nowozin, S., Geiger, A.: Occupancy networks: Learning 3d reconstruction in function space (2018)

- [16] Michalkiewicz, M., Belilovsky, E., Baktashmotlagh, M., Eriksson, A.: A simple and scalable shape representation for 3d reconstruction (2020), https://openreview.net/forum?id=rJgjGxrFPS

- [17] Min, P.: binvox. http://www.patrickmin.com/binvox or https://www.google.com/search?q=binvox (2004 - 2019), accessed: 2020-03-05

- [18] Nooruddin, F.S., Turk, G.: Simplification and repair of polygonal models using volumetric techniques. IEEE Transactions on Visualization and Computer Graphics 9(2), 191–205 (2003)

- [19] Park, J.J., Florence, P., Straub, J., Newcombe, R., Lovegrove, S.: Deepsdf: Learning continuous signed distance functions for shape representation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 165–174 (2019)

- [20] Perez, E., Strub, F., De Vries, H., Dumoulin, V., Courville, A.: Film: Visual reasoning with a general conditioning layer. In: Thirty-Second AAAI Conference on Artificial Intelligence (2018)

- [21] Qi, H., Brown, M., Lowe, D.G.: Low-shot learning with imprinted weights. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 5822–5830 (2018)

- [22] Ravi, S., Larochelle, H.: Optimization as a model for few-shot learning. In: ICLR (2017)

- [23] Richter, S.R., Roth, S.: Matryoshka networks: Predicting 3d geometry via nested shape layers. In: CVPR. pp. 1936–1944. IEEE Computer Society (2018)

- [24] Savarese, S., Andreetto, M., Rushmeier, H., Bernardini, F., Perona, P.: 3d reconstruction by shadow carving: Theory and practical evaluation. International journal of computer vision 71(3), 305–336 (2007)

- [25] Shu, R., Nakayama, H.: Compressing word embeddings via deep compositional code learning. arXiv preprint arXiv:1711.01068 (2017)

- [26] Siam, M., Oreshkin, B., Jagersand, M.: Adaptive masked proxies for few-shot segmentation. arXiv preprint arXiv:1902.11123 (2019)

- [27] Snell, J., Swersky, K., Zemel, R.: Prototypical networks for few-shot learning. In: Advances in neural information processing systems. pp. 4077–4087 (2017)

- [28] Tatarchenko, M., Richter, S.R., Ranftl, R., Li, Z., Koltun, V., Brox, T.: What do single-view 3d reconstruction networks learn? In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 3405–3414 (2019)

- [29] Wallace, B., Hariharan, B.: Few-shot generalization for single-image 3d reconstruction via priors. In: Proceedings of the IEEE International Conference on Computer Vision. pp. 3818–3827 (2019)

- [30] Wang, N., Zhang, Y., Li, Z., Fu, Y., Liu, W., Jiang, Y.G.: Pixel2mesh: Generating 3d mesh models from single rgb images. In: ECCV (2018)

- [31] Witkin, A.P.: Recovering surface shape and orientation from texture. Artificial intelligence 17(1-3), 17–45 (1981)

- [32] Wu, J., Zhang, C., Xue, T., Freeman, B., Tenenbaum, J.: Learning a probabilistic latent space of object shapes via 3d generative-adversarial modeling. In: Advances in neural information processing systems. pp. 82–90 (2016)

- [33] Xu, B., Chang, W., Sheffer, A., Bousseau, A., McCrae, J., Singh, K.: True2form: 3d curve networks from 2d sketches via selective regularization. ACM Transactions on Graphics (TOG) 33(4), 1–13 (2014)

- [34] Yan, X., Yang, J., Yumer, E., Guo, Y., Lee, H.: Perspective transformer nets: Learning single-view 3d object reconstruction without 3d supervision. In: Advances in Neural Information Processing Systems. pp. 1696–1704 (2016)

- [35] Zhu, R., Kiani Galoogahi, H., Wang, C., Lucey, S.: Rethinking reprojection: Closing the loop for pose-aware shape reconstruction from a single image. In: The IEEE International Conference on Computer Vision (ICCV) (Oct 2017)

Appendix 0.A Appendix

We provide additional material to supplement our work. Appendix 0.A.1 verifies the accuracy of our re-implementation of [29], which is, to our knowledge, the only pre-existing work on few-shot 3D reconstruction. In Appendix 0.A.2, we report performance on base classes for our three considered methods and Wallace et al. [29]. Appendix 0.B shows learned attention maps obtained using the CGCE model and analyses similarities across classes. Finally, we provide qualitative examples in Appendix 0.C.

0.A.1 Verifying Implementation of Wallace et al. [29]

In this section we validate that our re-implementation of [29] is correct. In Table 6 we observe performance to be very similar to the numbers reported in [29], with small variations that can be reasonably attributed to random initializations. Base class performance is not reported per class in [29]. Note that in the main paper we report the results obtained using our implementation (Wallace(ours)), including the results on classes not attempted in [29].

| cat | Wallace [29] | Wallace(ours) |
|---|---|---|
| base | | |
| plane | N/A | 0.57 |
| car | N/A | 0.84 |
| chair | N/A | 0.49 |
| monitor | N/A | 0.50 |
| cellphone | N/A | 0.74 |
| speaker | N/A | 0.66 |
| table | N/A | 0.52 |
| mean_base | 0.62 | 0.62 |
| novel | | |
| bench | 0.37 (0%) | 0.37 (0%) |
| cabinet | 0.66 (0%) | 0.69 (0%) |
| lamp | 0.19 (5%) | 0.20 (5%) |
| firearm | 0.19 (58%) | 0.21 (58%) |
| couch | 0.52 (4%) | 0.54 (4%) |
| watercraft | 0.38 (15%) | 0.33 (16%) |
| mean_novel | 0.39 | 0.39 |

0.A.2 Base Class Performance

We report in Table 7 the performance on base classes for all methods. Note that performance is similar across methods. This is consistent with our observation that nearest neighbor can solve the problem when a large number of classes is provided. Thus most learning methods with enough capacity can be expected to obtain similar performance. On the other hand, as shown in the main paper, novel class performance is improved for our proposals (GCE, CGCE, MCCE), demonstrating that they generalize better about shapes.

| cat | Wallace | GCE | CGCE | MCCE |
|---|---|---|---|---|
| base | | | | |
| plane | 0.57 | 0.58 | 0.59 | 0.59 |
| car | 0.84 | 0.84 | 0.84 | 0.84 |
| chair | 0.49 | 0.51 | 0.49 | 0.50 |
| monitor | 0.50 | 0.52 | 0.51 | 0.52 |
| cellphone | 0.74 | 0.71 | 0.69 | 0.71 |
| speaker | 0.66 | 0.67 | 0.66 | 0.66 |
| table | 0.52 | 0.54 | 0.54 | 0.53 |
| mean_base | 0.62 | 0.62 | 0.62 | 0.62 |
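As a quick consistency check on the table above, the `mean_base` row can be recomputed from the per-class entries. The sketch below assumes `mean_base` is the unweighted average of the seven base-class scores (the averaging scheme is not stated here, so this is an assumption); the dictionary name `table7` is introduced only for illustration.

```python
# Per-class base scores copied from the table, in the order:
# plane, car, chair, monitor, cellphone, speaker, table.
table7 = {
    "Wallace": [0.57, 0.84, 0.49, 0.50, 0.74, 0.66, 0.52],
    "GCE": [0.58, 0.84, 0.51, 0.52, 0.71, 0.67, 0.54],
    "CGCE": [0.59, 0.84, 0.49, 0.51, 0.69, 0.66, 0.54],
    "MCCE": [0.59, 0.84, 0.50, 0.52, 0.71, 0.66, 0.53],
}

# ASSUMPTION: mean_base is a plain (unweighted) mean over the seven classes.
mean_base = {
    method: round(sum(scores) / len(scores), 2)
    for method, scores in table7.items()
}

for method, value in mean_base.items():
    print(f"{method}: {value:.2f}")
```

Under this assumption every method rounds to the same two-decimal mean, which matches the reported row even though the individual classes differ by up to a few hundredths.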

Appendix 0.B Compositional GCE Further Analysis: Attention Maps

As described in the main text, a learned attention vector selects the most relevant codes from each of the 5 available codebooks. We visualize these selections for each category as a heat map in Figure 9. Note that we have previously obtained a similarity metric between classes (using nearest neighbor proximity), as shown in Figure 7 in the main text. In Table 8 we further illustrate some pairs that show high similarity according to this proximity metric. We observe in Figure 9 that similar classes often share codes. We further illustrate this by selecting 3 pairs of similar categories and 3 pairs of distant categories (see Table 8 and Figure 10). This indicates that the CGCE model learns to assign general structure to each class, which can be reused in similar classes. As expected, however, not all codebooks for similar classes share exactly the same codes, which allows the model to learn distinctions across classes.

| object | similar object | distant object |
|---|---|---|
| ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/923f7185-4649-4e17-8d47-b883d4a4440f/x7.png) | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/923f7185-4649-4e17-8d47-b883d4a4440f/x8.png) | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/923f7185-4649-4e17-8d47-b883d4a4440f/x9.png) |
| ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/923f7185-4649-4e17-8d47-b883d4a4440f/x10.png) | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/923f7185-4649-4e17-8d47-b883d4a4440f/x11.png) | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/923f7185-4649-4e17-8d47-b883d4a4440f/x12.png) |
| ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/923f7185-4649-4e17-8d47-b883d4a4440f/x13.png) | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/923f7185-4649-4e17-8d47-b883d4a4440f/x14.png) | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/923f7185-4649-4e17-8d47-b883d4a4440f/x15.png) |
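The code sharing discussed in this appendix can be quantified with a small sketch. It assumes each class is summarized by an attention matrix of shape (number of codebooks, codes per codebook) and that the selected code in each codebook is the one with the highest attention weight; taking a hard argmax is an assumption made for illustration, as are the function names `selected_codes` and `shared_codebooks`.

```python
import numpy as np


def selected_codes(attention):
    """Index of the highest-weighted code in each codebook.

    attention: array of shape (num_codebooks, codes_per_codebook).
    ASSUMPTION: the selected code is the argmax of the attention weights.
    """
    return np.asarray(attention).argmax(axis=1)


def shared_codebooks(att_a, att_b):
    """Number of codebooks in which two classes select the same code."""
    return int((selected_codes(att_a) == selected_codes(att_b)).sum())
```

With 5 codebooks, a score of 5 would mean two classes select identical codes everywhere, whereas intermediate values correspond to the partial sharing described above, where similar classes reuse some codes and differ in others.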

Appendix 0.C Additional Qualitative Examples

We provide more visualizations of our reconstructions, compared with those of the Zero-Shot baseline and Wallace, which demonstrate the higher-quality predictions obtained using our proposed method.

[Figure: additional qualitative reconstructions. Columns, left to right: 2D view, Zero-Shot, Wallace, CGCE, GT.]