Federated Social Recommendation with Graph Neural Network

Abstract.

Recommender systems have become prosperous nowadays, designed to predict users’ potential interests in items by learning embeddings. Recent developments of the Graph Neural Networks (GNNs) also provide recommender systems with powerful backbones to learn embeddings from a user-item graph. However, only leveraging the user-item interactions suffers from the cold-start issue due to the difficulty in data collection. Hence, current endeavors propose fusing social information with user-item interactions to alleviate it, which is the social recommendation problem. Existing work employs GNNs to aggregate both social links and user-item interactions simultaneously. However, they all require centralized storage of the social links and item interactions of users, which leads to privacy concerns. Additionally, according to strict privacy protection under General Data Protection Regulation, centralized data storage may not be feasible in the future, urging a decentralized framework of social recommendation.

As a result, we design a federated learning recommender system for the social recommendation task, which is rather challenging because of its heterogeneity, personalization, and privacy protection requirements. To this end, we devise a novel framework Fedrated Social recommendation with Graph neural network (FeSoG). Firstly, FeSoG adopts relational attention and aggregation to handle heterogeneity. Secondly, FeSoG infers user embeddings using local data to retain personalization. Last but not least, the proposed model employs pseudo-labeling techniques with item sampling to protect the privacy and enhance training. Extensive experiments on three real-world datasets justify the effectiveness of FeSoG in completing social recommendation and privacy protection. We are the first work proposing a federated learning framework for social recommendation to the best of our knowledge.

1. Introduction

The developments of Recommender Systems (RSs) (Rendle et al., 2009; Liu et al., 2020c, 2021; Fan et al., 2019; Zhou et al., 2021a) become prosperous nowadays. A well-designed recommender system is able to predict users’ potential interests in items. The core of it is to learn user/item embeddings (Rendle et al., 2009; Zhou et al., 2021b; Liu et al., 2020b) by fitting historical user-item interactions. Recently, the prosperity of graph neural networks (GNNs) (Dou et al., 2020; Liu et al., 2020a; Cao et al., 2021; Peng et al., 2021b) provide powerful frameworks to learn node embeddings, which also motivates the community to design GNN-based RS models (Wang et al., 2019; Liu et al., 2020c, b; Fan et al., 2019; Wang et al., 2021). However, the cold-start issue (Liu et al., 2021; Zhou et al., 2021b), which is associated with users having few records, impairs the performance of learned embeddings.

To cope with this, one can leverage the social information of users (Fan et al., 2019; Liu et al., 2019; Shen and Jin, 2012; Mu et al., 2019). In this way, we assume that users with social links also share similar item interests. Therefore, we could simultaneously aggregate social information and user-item interactions (Yang et al., 2021; Fan et al., 2019, 2020; Wu et al., 2018) to alleviate the cold-start issue. SocialGCN (Wu et al., 2018, 2019a) employs the Graph Convolutional Network (GCN) to enhance user embedding by simulating how the recursive social diffusion process influences users. GraphRec (Fan et al., 2019) and GraphRec+ (Fan et al., 2020) propose to model three types of aggregations upon social graph, user-item graph and item-item graph. Thus, it can comprehensively fuse the social links and item transactions. ConsisRec (Yang et al., 2021) introduces the social inconsistency problem from context-level and relation-level. It solves this problem by using a sampling-based attention mechanism.

Though being effective in fusing the social and user-item information, they all require a centralized storage (Wu et al., 2021b; Chen et al., 2020; Yang et al., 2019) of both the social networks and item transaction history of users. Existing centralized storage methods pose risks of leaking privacy-sensitive data. Additionally, due to the strict privacy protection under General Data Protection Regulation (GDPR)111https://gdpr-info.eu/, centralized data storage may not be the first choice of online platforms in the future. Therefore, a new decentralized model training framework for social recommendation is necessary. According to previous researches in federated learning (McMahan et al., 2017; Yang et al., 2019), the user data can be stored locally in each client while only uploading the necessary gradients for updating the model on a server. As for the federated recommender systems (Chen et al., 2020; Chai et al., 2020; Ammad-Ud-Din et al., 2019; Wu et al., 2021b), the sensitive user-item interactions are stored locally and clients only upload gradients to update user/item embeddings.

However, there is few work discussing how to design a federated learning recommender system to complete the social recommendation task. We address the challenges of a federated social recommender system (FSRS) as follows: (1) Heterogeneity. The current federated recommender system stores user-item interactions locally. However, a FSRS requires both user-user and user-item interactions, as shown in Fig. 1. Therefore, we should store and fuse two types of relations simultaneously. (2) Personalization. Each client has special item interests and social connections, which leads to the non-iid distribution of the local data (Yang et al., 2019). The model should be able to characterize the personalized federated learning process (Fallah et al., 2020) for those clients, which is rather challenging. (3) Privacy Protection. Though user privacy data are stored locally, a federated recommender system yet demands collecting necessary gradients (Chai et al., 2020) from clients for updating embeddings on the server. This uploading process may lead to information leakage of original data (Chai et al., 2020). Therefore, we should design a protection module before uploading any information.

To this end, we devise a novel FSRS framework to address the challenges as mentioned above, which are Fedrated Social recommendation with Graph neural network (FeSoG). Firstly, we propose to use a local graph neural network to learn node embeddings. To tackle the heterogeneity of local data, we employ a relation attention mechanism to distinguish user-user and user-item interactions, which characterize the importance of neighbors by assigning different attention weights with respect to their relations. Secondly, the local graph neural networks on each client are updated only based on the data on devices. As such, models on devices possess personalizing training. Last but not least, FeSoG employs pseudo-labelling technique for the on-device training of local models. This pseudo-labelling can protect the privacy data from leakage when uploading gradients and enhance the robustness of the training process. Extensive experiments on three datasets demonstrate the effectiveness of FeSoG. Compared with other baselines, FeSoG achieves up to 5.26% in RMSE and 5.46% in MAE across all three datasets. In ablation studies, FeSoG also demonstrates the necessity of each component developed in the federated learning framework. The contributions are summarized as follows:

-

•

Novel: We are the first work proposing a federated learning framework to tackle the social recommendation problem to the best of our knowledge.

-

•

Substantial: We address three critical challenges, i.e., heterogeneity, personalization, and privacy protection, by proposing a new model FeSoG.

-

•

Comprehensive: We conduct extensive experiments on three publicly available datasets to verify the effectiveness of FeSoG. Detailed analysis and ablation study further prove the efficacy of our proposed components in FeSoG.

In the following sections, we first introduce the related work in Sec. 2. Then, we present some preliminaries in Sec. 3, including both the definition and formulation. The detailed descriptions of our proposed FeSoG model are in Sec. 4. Experiments are discussed in Sec. 5. Finally, we conclude this paper and open up possible future work in Sec. 6.

2. Related Work

This section presents three relevant areas to this paper: GNN for recommendation, social recommendation, and federated learning for recommendation.

2.1. Graph Neural Network for Recommendation

The recent developments of Graph Neural Networks (GNNs) (Kipf and Welling, 2017; Hamilton et al., 2017; Veličković et al., 2017; Liu et al., 2020a) motivate the community to propose a GNN-based recommender system. The intuition of a GNN model is to aggregate neighbors to recursively learn node embeddings (Peng et al., 2021a). GC-MC (Berg et al., 2017) first employs the GCN (Kipf and Welling, 2017) architecture to complete the user-item rating matrix. It uses the GCN as an encoder to train user/item embeddings, which are input to a fully connected neural network to predict the ratings. PinSAGE (Ying et al., 2018) proposes to use the GraphSAGE (Hamilton et al., 2017) backbone to learn item embeddings over an attributed item graph. It first samples fixed-size nodes from multi-hop neighbors and then uses aggregators to aggregate those sampled nodes to learn the embeddings for center nodes. NGCF (Wang et al., 2019) is proposed later to explicitly model the collaborative signals upon user-item interaction graph by applying the GNN model. DGCF (Liu et al., 2020b) observes the oscillation problem when applying GNN on the bipartite graph and solves it with cross-hop propagation layers. BasConv (Liu et al., 2020c) is a pioneer work that investigates using GNN to complete basket recommendation. These works prove the efficacy of using the GNN framework to learn embeddings in a recommender system. GNN-based models are advantageous as their aggregation can model high-order structural information crucial for learning user/item embeddings from interactions. This paper also adopts the GNN model to embed the local graphs. We employ the graph attention networks (Vaswani et al., 2017) as a backbone.

2.2. Social Recommendation

The social recommendation aims to relieve the data sparsity and cold start problem by inducing information of social links between users (Ma et al., 2008; Wang et al., 2014; Yang et al., 2021). Social recommendation methods can be generally categorized as social matrix factorization based methods and graph neural network based methods. Existing social matrix factorization approaches either jointly factorize the rating and social relationship matrices or regularize the user/item embeddings with constraints of social connections. SoRec (Ma et al., 2008) co-factorizes the user rating matrix and social link matrix. SocialMF (Jamali and Ester, 2010) adds a regularization term to constrain the difference between the user’s taste and his/her trusted friends’ average weighted taste. SoReg (Ma et al., 2011) adds a regularization term to directly minimize the difference in the user latent feature between two trusted users, which can prevent the counteraction of the latent feature of one’s trusted friends. HGMF (Wang et al., 2014) introduces a hierarchical group matrix factorization technique to learn the user-group feature in a social network for recommendation. Unlike matrix factorization methods, graph neural network methods infer node embeddings directly from graphs and demonstrate the effectiveness from recent social recommendation work (Li et al., 2020; Wu et al., 2019b, 2021a). GraphRec (Fan et al., 2019) and GraphRec+ (Fan et al., 2020) uses graph attention networks to learn user and item embeddings for recommendation. (Song et al., 2019) utilizes dynamic graph attention networks to capture the dynamic user’s interest from the social dimension. CUNE (Zhang et al., 2017) assumes that users hold implicit social links from each other. CUNE extracts semantic and reliable social information by graph embedding method. DiffNet (Wu et al., 2019a) and DiffNet++ (Wu et al., 2020) model the social influence diffusion process to enhance the social recommendation. ConsisRec (Yang et al., 2021) examines the inconsistency problems in the social recommendation and introduces a consistent neighbor sampling module in the GNN model. The above studies show the effectiveness of incorporating social information into the recommender system.

2.3. Federated Learning for Recommender System

Google proposed Federated learning in 2016 (McMahan et al., 2017). It calls for data privacy-preserving solutions in machine learning models (Erlingsson et al., 2014), with the raised privacy concerns of existing centralized training based models. The fundamental of federated learning is to design a decentralized training framework, which distributes the data to clients rather than storing it in a server (McMahan et al., 2017; Konečnỳ et al., 2016). User transactions are sensitive information and probably cause identity information leakage if used for malicious purposes. Several recent works (Chai et al., 2020; Wu et al., 2021b; Ammad-Ud-Din et al., 2019) developed federated recommender systems for user information protection while still preserving good enough personalization. Federated Collaborative Filtering (FCF) (Ammad-Ud-Din et al., 2019) and FedMF (Chai et al., 2020) are two pioneering works investigating a novel federated learning framework to learn the user/item embeddings for a recommender system. Both works develop the federated learning on the top of factorization (Koren et al., 2009) of the user-item rating matrix. To achieve federated learning, they propose that the user’s ratings should be stored locally. The user embeddings can be trained locally, and the server only retains the item embeddings. This training framework leads to protecting the privacy data, as there is no transfer of users’ interactions. Ribero et al. (Ribero et al., 2020) argues that the model updates sent to the server may contain sufficient information to uncover raw data, which leaves privacy concerns. They propose to use the differential privacy (McSherry and Mironov, 2009) to limit the exposure of the data in a federated recommender system. FED-MVMF (Flanagan et al., 2020) extends the Multi-View Matrix Factorization (MVMF) (Singh and Gordon, 2008) to a federated learning framework. It simultaneously factorizes both feature matrices and interaction matrices. A-FRS (Chen et al., 2020) proposes a robust federated recommender system against the poisoning attacks of clients. It employs an item similarity model (Kabbur et al., 2013) in learning the user/item embeddings. FedGNN (Wu et al., 2021b) is the most recent work that combines GNN with a federated recommender system, which is also the most relevant work to our paper. However, FedGNN fails to solve social recommendations, and the clients’ models are not personalized (Fallah et al., 2020). We present a comparison of a set of representative social recommender systems and federated learning methods in Table 1.

| Social Information | Multi-relation | Graph Neural Network | Data Storage | |

| RSTE (Ma et al., 2009) | ✓ | centralized | ||

| TrustWalker (Jamali and Ester, 2009) | ✓ | centralized | ||

| SoRec (Ma et al., 2008) | ✓ | centralized | ||

| SoReg (Ma et al., 2011) | ✓ | centralized | ||

| SocialMF (Jamali and Ester, 2010) | ✓ | centralized | ||

| TrustSVD (Guo et al., 2015) | ✓ | centralized | ||

| CUNE (Zhang et al., 2017) | ✓ | ✓ | centralized | |

| GCMC+SN (Berg et al., 2017) | ✓ | ✓ | centralized | |

| DANSER (Wu et al., 2019b) | ✓ | ✓ | ✓ | centralized |

| GraphRec (Fan et al., 2019) | ✓ | ✓ | ✓ | centralized |

| ConsisRec (Yang et al., 2021) | ✓ | ✓ | ✓ | centralized |

| FedMF (Chai et al., 2020) | local | |||

| FedGNN (Wu et al., 2021b) | ✓ | local | ||

| FeSoG | ✓ | ✓ | ✓ | local |

3. Preliminary

In this section, we present the preliminaries and definitions of essential concepts. The glossary of necessary notations are summarized in Table 2.

3.1. Definitions

The target in a social recommendation is to predict the users’ ratings to items, when given social interactions and user-item interactions. Denote the and as the set of users and item, respectively. and are the numbers of users and items, respectively. The social recommendation is to complete the ratings of users to items given both rating matrix and the social connection matrix . We denote the user ’s rating value to an item as . Similarly, the connection between an user and user is denoted as . In a federated learning scenario, the data of each user is stored locally. Hence, both the rating matrix and social connection matrix are not available. The data of each user are stored in the local client, which is defined as:

Definition 1.

(Client). A client is defined as a local device storing the rating data and the social data. Each client is associated with a user , whose rating data and social data are and , respectively.

Definition 2.

(Server). A server is defined as a central device managing the coordination of multiple clients in training a model. It does not exchange raw data from clients but only requests necessary messages for updating the model.

In this paper, we assume clients and server to be honest-but-curious (Kairouz et al., 2019). In other words, they provide correct information and cannot tamper with the training process. The Federated Social Recommender System (FSRS) is to complete the rating matrix given its partially complete rating data and social data, which is defined as follows:

Definition 3.

(FSRS). For clients and a server, given the partially observed rating data and social data as and of each client , respectively, where and , an FSRS can predict the unobserved rating data of the client without access to the raw data in each client.

Note that both the rating and social data are stored locally in the corresponding clients and never be uploaded to the server. Multiple clients collaboratively train an FSRS under the orchestration of the center server (Kairouz et al., 2019).

3.2. Formulation

Our FSRS is designed by formulating the data on clients as multiple local graphs, which is illustrated in Fig. 1. The local graph contains the first order neighbors of the client user, including item ratings and social neighbors. We denote the local graph for client as , which consists of both user nodes and item nodes. is constructed from partially observed privacy data. Moreover, there are two type of edges in , i.e., the user-item edges with rating value as attributes and the user-user edges denoting the social interactions. For each client , we denotes its rated items as and social neighbors as . The FSRS can predict the rating value of an unobserved item . In other words, the FSRS can predict the attribute value of the local graph for the edge between the user and a new item . Thus, the problem can be formulated as follows:

Definition 4.

(Problem Definition): Given the local graphs , can we collaboratively train a model to predict the attribute value for an unobserved edges without access to the raw data of any local graphs?

We specify the social recommendation problem to be a link prediction problem. It indicates that we should learn graph embeddings from local graphs to preserve the structural information. Additionally, it is necessary to tackle the heterogeneity (Liu et al., 2020c; Hu et al., 2020; Yang et al., 2020) of those local graphs.

| Symbol | Definition |

|---|---|

| user set; item set | |

| rating matrix; social connection matrix | |

| total number of users; total number of items | |

| client , which is associated with user | |

| the local observed rating and social data of client | |

| the local observed rated items; the social connected neighbors of client | |

| embedding for users, embedding for items | |

| the local inference embedding of user | |

| attention layer vector for user-user interaction; for item-item interaction; for relation vector | |

| linear mapping matrix for user-user interaction; for item-item interaction | |

| attention weights for neighbor users; for neighbor items | |

| hidden embeddings for neighbor users; hidden embeddings for neighbor items | |

| attention weight for aggregating social relation; for aggregating user-item relation | |

| social relation vector; user-item relation vector | |

| ground truth rating score; predicted rating score | |

| loss for client ; protected loss for client | |

| the gradients for client ; the embedding gradients; the model gradients | |

| the parameters for LDP |

4. Proposed Framework

In this section, we illustrate the FSRS framework of our proposed FeSoG. It has three crucial modules: embeddings layer, local graph neural networks, and gradient protector. The proposed framework is in Fig. 3.

4.1. Embeddings

Node embeddings are crucial components in preserving graph structural information (Wang et al., 2019; Liu et al., 2020c; Yang et al., 2021). The FeSoG has embedding layers for user and item nodes. We denote the embeddings for users and items as and , respectively, which are both maintained by the server. is the dimension size for embeddings. Clients request the embeddings tables from the server. Then, they learn a local user/item embeddings and a local GNN model by using their interaction data. Those embeddings will be updated on the server by aggregating the gradients uploaded from clients.

A client downloads the complete embedding tables and uses the user/item ids in interaction records to infer the corresponding embeddings. To be more specific, for a client , which has rated items as and social neighbors as , its rated item embeddings are and social neighbor embeddings are , where and denote the total number of item neighbors and user neighbors, respectively. Those embeddings are input the local GNN model to learn client user embedding and predict item scores.

4.2. Local Graph Neural Network

The local GNN module is the major component in FeSoG to learn node embeddings and make a prediction. It consists of heterogeneous graph attention layers, relational graph aggregation layers, and rating prediction layers.

4.2.1. Relational Graph Attention

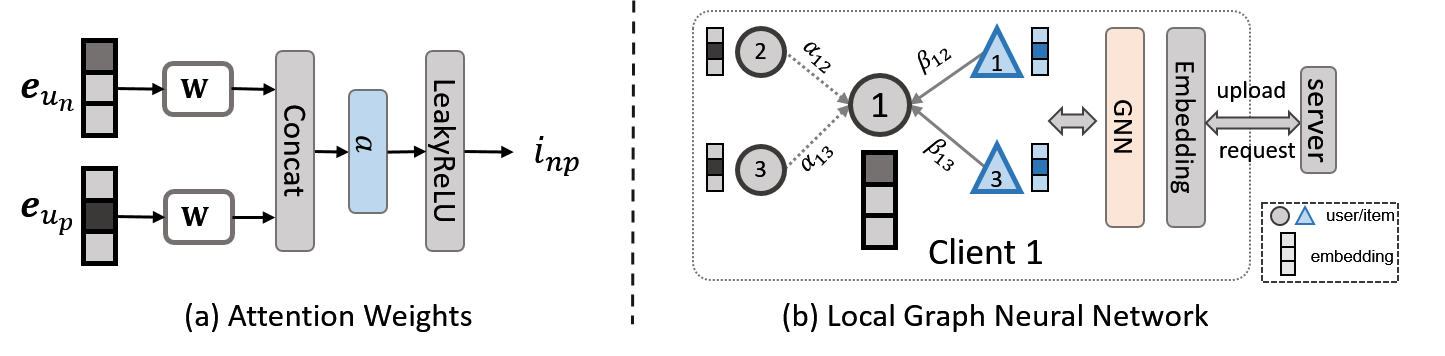

In general, we have no constraints on the local graph neural networks. There can be arbitrary GNN models, such as GCN (Kipf and Welling, 2017), GAT (Veličković et al., 2017), and GraphSAGE (Hamilton et al., 2017), etc. This paper focuses on proposing a new FSRS framework. We directly adopt the GAT layer for learning node embedding and leave other GNN layers for future investigation. The GAT layer is designed by employing the self-attention mechanism (Vaswani et al., 2017). To learn the node embedding of , we aggregate neighbor embeddings of . However, since neighbors contribute unequally to the center node , we should first learn a weight for each neighbor by employing an attention layer. Specifically, for a social pair , their attention scores are formulated as:

| (1) |

where is a scalar denoting the attention weight, is a linear mapping matrix, and Attention is the attention layer. We define the attention layer as a single-layer feed-forward neural network. It is parameterized with a weight vector and employs a LeakyReLU activation (Vaswani et al., 2017), which is formulated as:

| (2) |

where denotes the transpose of the attention layer parameter and denotes the concatenation operation of two vectors. An illustration of the attention weight is in Fig. 2(a). The attention weight should be calculated for all the neighbors of the center node , which forms a probability distribution by employing the softmax function:

| (3) |

where is the final attention weights, exp denotes the exponential function. Note that is calculated as the attention weights for user neighbors. We should learn attention weights for user-item pair in a similar way, which employs another linear mapping as in Eq. (1) and another attention parameters as in Eq. (4), as following:

| (4) |

where is the mapping matrix and is the weights for the user-item interaction attention layer. By employing the softmax to all the item neighbors, we derive the attention weights for item neighbors as:

| (5) |

where denotes the attention weights for items normalized over all neighboring items, next, we present the relational aggregation for both user neighbors and item neighbors.

4.2.2. Relational Graph Aggregation

Inferring the center user embeddings requires aggregating both neighbor user nodes and neighbor item nodes with their associated attention weights, which are formulated as follows:

| (6) |

where is the linear mapping weight matrix, and denotes the hidden embeddings for aggregating user neighbors and item neighbors, respectively. The general aggregation process is illustrated in Figure 2(b). Intuitively, we should aggregate hidden embeddings and the center node embedding to infer the embedding of . However, social relation, user-item interactions and center node embedding are not equally contributed to the learning process (Yang et al., 2021). We should also handle the heterogeneity during the aggregation step. Therefore, we propose to use three relation vectors to preserve their semantics, i.e., preserving the social semantics, user-item semantics and center node itself semantics respectively. To be more specific, we concatenate hidden embeddings with their relation vectors and employing the self-attention mechanism to learn the weights for aggregation:

| (7) |

| (8) |

| (9) |

where , and are the attention weights for hidden user neighbors embedding, hidden item neighbor embedding and center node itself embedding, respectively. is the weight vector for the attention layer. Given that, we infer the node embeddings of as:

| (10) |

where is the local user embedding for prediction. The aggregation is illustrated in Fig. 3. As such, local clients preserve their user embeddings, which tackles the personalization problem. Next, we will use the learned embeddings and downloaded item embeddings to make a prediction.

4.2.3. Prediction

To predict the local item ratings, we adopt the dot-product between the inferred user embedding and item embeddings. For a user and an item with embedding and , respectively, the rating is:

| (11) |

where denotes the dot-product operation. We use the local user-item rating values to optimize the prediction by employing the Root Mean Squared Error (RMSE) between the predicted score and the ground-truth rating score :

| (12) |

where denotes the rated items of user and is the local loss for user . Recall that each user is associated with a client. This loss function will be used to calculate the gradient for clients. Then, the gradients are collected from multiple clients to the server for further updates. However, directly uploading gradients leads the user-item interaction data to be vulnerable (Chai et al., 2020; Wu et al., 2021b). It urges us to design a privacy protection mechanism regarding the gradients, which will be introduced next.

4.3. Privacy Protection

This section introduces two techniques to protect the local user-item interaction data when uploading the gradients: dynamic Local Differential Privacy (LDP) and pseudo-item labelling.

4.3.1. Local Differential Privacy

According to FedMF (Chai et al., 2020), the user’s rating information can be inferred if given the gradients of a user uploaded in two continuous steps. Though our case is more complicated than in FedMF, it is still problematic if directly optimizing the local data and uploading the gradients. Moreover, for embedding gradients, only items with ratings in a local client have non-zero gradients to upload to the server. FedMF (Chai et al., 2020) proposes using encryption for the gradients so that the server cannot inverse the encoding process. However, it requires generating the public key and secret key and introducing additional computation for encryption. Additionally, to solve the zero-gradient for non-rated items, FedGNN (Wu et al., 2021b) proposes to sample pseudo-interacted items and add Gaussian noise with the same mean and variance as the ground-truth items to their gradients. Another technique used broadly is the Local Differential Privacy (LDP) module (Erlingsson et al., 2014; Ribero et al., 2020; Qi et al., 2020). It adopts clipping the local gradients based on their L-norm with a threshold and applies a LDP module with zero-mean Laplacian noise to the unified gradients to achieve privacy protection.

To be more specific, we instantiate the item embedding gradients, user embedding gradients and model gradients from client as , and , respectively. Combining them gives the gradients as , where denotes all trainable parameters. Then, the LDP is formulated as:

| (13) |

where is the randomized gradients, clip denotes limiting with the threshold , and Laplacian is the Laplacian noise with mean and strength. However, a constant noise strength is inappropriate when dealing with gradients at different magnitudes. The gradient magnitude of different parameters varies during training. Hence, we propose to add dynamic noise based on the gradient, which is formulated as follows:

| (14) |

4.3.2. Pseudo-Item Labelling

Based on existing work, we propose a new privacy protection module, which is advantageous as it can protect the training gradients and enhance the model with more robustness. In a local client, before calculating the training loss, we first sample items not in the neighbor items, which are the pseudo-items in Fig. 3, denoting as . Then, we use the local model to predict the ratings for these pseudo items. The predicted ratings are rounded to be the pseudo ratings. Hence, the loss in Eq. (12) is changed to:

| (15) |

Note that compared with Eq.(12), Eq.(15) is calculated from both the true interacted items and pseudo items. The ground-truth ratings for pseudo items are the rounded predicted score. The difference between the predicted score and the rounded one contributes to the gradients for those pseudo items. Here, we assume that , while . The gradients derived from Eq. (15) contain both ground-truth rating information and the pseudo item rating information, which prevents the data leakage problem. Additionally, the pseudo labels of items provide additional rating information, which can alleviate the cold-start issue of the data. Intuitively, this technique works as a data augmentation method (Liu et al., 2021; Xia et al., 2020; Liu et al., 2020d). We sample those pseudo items and view the difference between rounded ratings with a predicted rating as the randomness, which enhances the robustness of the local model.

4.4. Optimization

In this section, we first present the optimization process of the FeSoG framework before we present the pseudo-code of the algorithm.

4.4.1. Gradient Collection for Optimization

The server in FeSoG collects the gradients uploaded from clients to update both the model parameters and embeddings, which collaboratively optimize the model. Recall that the gradients from client is and the parameters is , which includes , and indicating model parameters, item embedding and user embedding, separately. In each round, the server builds a connection with a batch (e.g., 128) of clients, denoted as . It first sends the current model parameters and embeddings to those clients. Then, it aggregates local gradients from those clients as follows:

| (16) |

where is the total number interaction for calculating the gradients, including both the real interactions and pseudo interactions. and indicate interactions involving item and user , separately. Intuitively, , , are weighted average of the gradients from clients. After aggregation, the server updates the parameter with gradient descent as:

| (17) |

where is the learning rate. This learning process is operated multiple rounds until convergence.

4.4.2. Algorithm

The pseudo-code of the algorithm of FeSoG is presented in Algorithm 1. The input are consist of the training hyper-parameters such as the embedding size and learning . Additionally, the client data should also be given, i.e. the clients local graphs . Though the target is to predict item ratings, we output the parameters and the local inferred embeddings , which is sufficient for clients to predict ratings. In the algorithm, the line 1 to the line 1 is the loop operated on the server, which sends parameters to clients and collects their gradients for updating. The function ClientUpdate() is the operation on local devices. It downloads the parameters to infer the local user embeddings (line 1). Then, the pseudo items are sampled (line 1). Pseudo-labelling and LDP are combined (line 1 to line 1) to protect the gradients from privacy leakage. This function returns the gradients and the number of interactions (line 1) for the server to collect.

5. Experiments

In this section, we conduct experiments to evaluate the effectiveness of FeSoG. We will answer the following Research Questions (RQs):

-

•

RQ1: Does FeSoG outperform existing methods in social recommendation?

-

•

RQ2: What is the impact of the hyper-parameters in FeSoG?

-

•

RQ3: Are those components in FeSoG necessary?

5.1. Experimental Setup

5.1.1. Datasets

In this paper, we adopt three commonly used social recommendation datasets to conduct experimental analyses, which are Ciao, Epinions (Tang et al., 2012b, a, 2013) and Filmtrust (Guo et al., 2013). Ciao and Epinions222https://www.cse.msu.edu/~tangjili/datasetcode/truststudy.htm (Tang et al., 2012b, a, 2013) are crawled from shopping website. Both datasets contain user rating scores on items and trust links between users as social relations. Each user can give an integer score in to rate an item, where indicates least like while represents most. Filmtrust333https://guoguibing.github.io/librec/datasets.html (Guo et al., 2013) is built from online film rating website and the trust relationship between users. The rating scale ranges from 1 to 8. Social relations are also the trust links between users in these datasets. Data statistics are shown in Table 3. In our FSRS scenario, each user is treated as a local client, and the user’s interactions are local privacy data on the device. The global graph information is transferred from user embeddings.

| Dataset | Ciao | Epinions | Filmtrust |

|---|---|---|---|

| Users | 7,317 | 18,069 | 874 |

| Items | 104,975 | 261,246 | 1,957 |

| # of ratings | 283,320 | 762,938 | 18,662 |

| Rating density | 0.0369 | 0.0162 | 1.0911 |

| # of social connections | 111,781 | 355,530 | 1,853 |

| Social connection density | 0.2088 | 0.1089 | 0.2426 |

5.1.2. Baselines

We adopt three types of baselines for comparison: traditional Matrix Factorization (MF) based methods for social recommendation, recent GNN-based methods for social recommendation, and federated learning frameworks. MF-based and GNN-based methods are based on centralized learning, which is unable to protect user privacy. Federated learning methods are not able to handle the fusion of local social information and rating information.

MF-based methods

-

•

SoRec (Ma et al., 2008): It co-factorizes user-item rating matrix and user-user social matrix.

-

•

SoReg (Ma et al., 2011): It develops a social regularization with social links to regularize on matrix factorization.

-

•

SocialMF (Jamali and Ester, 2010): Compared with SoReg, social matrix factorization also considers social trust propagation.

-

•

CUNE (Zhang et al., 2017): Collaborative user network embedding assumes users hold implicit social links from each other, and it tries to extract semantic and reliable social information by graph embedding method.

GNN-based methods

-

•

GCMC+SN (Berg et al., 2017): GCMC is a graph neural network based method. User nodes are initialized as vectors learned by node2vec (Grover and Leskovec, 2016) from the social graph to obtain social information. The dense representation learned upon the social graph can include more information than the random initialized feature.

-

•

GraphRec (Fan et al., 2019): Graph recommendation uses graph neural network to learn user embedding and item embedding from their neighbors and uses several fully connected layers as the rating predictor.

-

•

ConsisRec (Yang et al., 2021): It is the state-of-the-art method in social recommendation. ConsisRec modifies graph neural network to mitigate the inconsistency problems in social recommendation.

Federated learning methods

-

•

FedMF (Chai et al., 2020): It separates the matrix factorization computation to different users and uses an encryption method to avoid information leakage.

-

•

FedGNN (Wu et al., 2021b): Federated graph neural network is the state-of-the-art federated recommendation method. It adopts local differential privacy methods to protect user’s interaction with items.

5.1.3. Evaluation Metrics

To evaluate the performance and compare, we adopt Mean Absolute Error (MAE) and Root Mean Square Error (RMSE) to measure the model performance because they are the most commonly used metrics in social recommendation. Smaller values of both two metrics indicate better performance in the test data. The two metrics are calculated as follows:

| (18) |

| (19) |

where and are the true rating value and predicted rating value of user for item , respectively. is the total number of users for testing. denotes the rated items for user . Again, the evaluation is conducted on devices locally since the server has no access to the local privacy data. The lower MAE and RMSR both indicate better performance.

5.1.4. Experimental Setups

The ratings in each dataset are randomly split into training set (), validation set (), and test set (). Hyper-parameters are tuned based on the validation performance. Then, we report the final performance on the test dataset. In all experiments, we initialize the parameters with standard Gaussian distribution. For the LDP technique used in FeSoG, the gradient clipping threshold is set to , and the strength of Laplacian noise is set to 0.1. Other hyperparameters are tuned based on grid searching. The number of pseudo interacted items is search in . Embedding size is tuned from . User batch size in each training round is searched in . Learning rate is searched in . Training is stopped if RMSE on the validation set does not improve for successive validations.

5.2. Overall Comparison (RQ1)

In this section, we conduct the overall comparison of different models. The experimental results are shown in Table 4, which are categorized into three groups. We have the following observations:

-

•

FeSoG significantly outperforms the State-Of-The-Art (SOTA) federated recommender systems in all datasets. Compared with FedGNN, FeSoG achieves on average and relative improvements on RMSE and MAE, respectively. Several advantages of FeSoG support its superiority: (1) social information helps the recommendation, which the improvement can demonstrate over FedMF; (2) the relational graph attention and aggregation can effectively integrate both user-item interactions and social information; (3) the local pseudo-item sampling technique enhances the performance.

-

•

The GNN-based models perform better than those MF-based models. ConsisRec is the SOTA GNN model that employs relation attention and consistent neighbor aggregation, which leads to its best performance. Compared with SocialMF, which is the best MF-based method, ConsisRec achieves in average and relative improvements in RMSE and MAE, respectively. GNN-based models are better as they can directly model structure information and simultaneously aggregate social and user-item interactions. Between the two federated learning baselines, FedGNN also significantly outperforms FedMF, which again supports the claims that GNN-based models are better than MF-based methods. FeSoG is also based on GNN aggregation. Its local GNN aggregation employs relation attention, which leads to its better performance against FedGNN.

-

•

Federated learning impairs the performance compared with centralized learning. Even a simple GCMC+SN model is better than the FedGNN model. There are two reasons: On the one hand, to achieve privacy protection, the federated learning framework has no access to the local data, limiting its capacity to model the global structures. According to (Liu et al., 2020a; Fan et al., 2019), the core of graph embedding is to aggregate high-order neighbors and select informative contexts. On the other hand, the local gradients are protected by adding random noise. Though it theoretically will not hurt the performance in expectation, it still prevents the server from receiving qualitative gradients from clients. We should find a trade-off between performance and privacy protection. This observation also brings opportunities for federated learning research.

| Method | Ciao | Epinions | Filmtrust | |||

|---|---|---|---|---|---|---|

| RMSE | MAE | RMSE | MAE | RMSE | MAE | |

| SoRec | 1.2024 | 0.8693 | 1.3389 | 1.0618 | 1.8094 | 1.4529 |

| SoReg | 1.0066 | 0.7595 | 1.0751 | 0.8309 | 1.7950 | 1.4413 |

| SocialMF | 1.0013 | 0.7535 | 1.0706 | 0.8264 | 1.8077 | 1.4557 |

| GCMC+SN | 1.0301 | 0.7970 | 1.1070 | 0.8480 | 1.8025 | 1.4325 |

| GraphRec | 1.0040 | 0.7591 | 1.0799 | 0.8219 | 1.6775 | 1.3194 |

| CUNE | 1.0002 | 0.7591 | 1.0681 | 0.8284 | 1.7675 | 1.4178 |

| ConsisRec | 0.9722 | 0.7394 | 1.0495 | 0.8046 | 1.7148 | 1.3093 |

| FedMF | 2.4216 | 2.0792 | 2.0685 | 1.5254 | 2.795 | 2.1713 |

| FedGNN | 2.02 | 1.58 | 1.8346 | 1.4238 | 2.13 | 1.65 |

| FeSoG | 1.9136 | 1.4937 | 1.7969 | 1.3847 | 2.0942 | 1.5855 |

| improvement | 5.26% | 5.46% | 2.05% | 2.74% | 1.68% | 3.9% |

5.3. Sensitivity Analysis (RQ2)

In this section, we emphasize on analyzing the impacts of those hyper-parameters involving in FeSoG and some other baselines. We include user batch size as in the line 1 of Algorithm 1, embedding size , learning rate , the number of pseudo items , local differential privacy parameter and .

5.3.1. User Batch Size

In this section, we analyze the impact of user batch size. Intuitively, choosing a small user batch size increases the communication rounds for the server to train a model. However, it is unclear how it affects the prediction performance. We report the performance of FedGNN and FeSoG with respect to RMSE and MAE across three datasets, which is illustrated in Fig. 4 and Fig. 5, respectively. We have the following observations:

-

•

FeSoG performs better than FedGNN. On all three datasets, the RMSE of FeSoG is consistently lower than FedGNN, which results from its powerful embedding ability of relational local GNN.

-

•

The performance of FeSoG becomes better with the increase of user batch size across datasets. With a larger user batch size, the server can obtain a more accurate global information estimation, which leads to a better performance. However, in practice, aggregating more users at each training step would lead to more computational cost and more time to converge.

5.3.2. Number of Pseudo Items

Pseudo items are sampled to protect the gradients from privacy leakage. Sampling more pseudo items requires more computational cost as more ratings should be predicted. However, it is unclear how many samples should be selected to achieve satisfying results. Hence, we conduct experiments on three datasets to study the impacts of the number of sampled pseudo items. We also compare FedGNN, which samples a set of negative items and assigns them with random gradients. The influence of the number of pseudo items on three datasets with respect to RMSE and MAE is reported in Fig. 6 and Fig. 7. Besides the performance value, we also present the computational cost with respect to the number of sampled pseudo items. We have the following observations:

-

•

FeSoG yields better performance compared with FedGNN. On Ciao and Epinions datasets, the performance of FeSoG and FedGNN gets worse with the increase of pseudo items. However, the value of FeSoG is much lower than FedGNN. It suggests that our pseudo item sampling and the pseudo labeling techniques are robust. Therefore, we can conclude that the pseudo item sampling in FeSoG can protect privacy data and enhance the training process.

-

•

If increasing the number of pseudo items, the error value all increases for both FedGNN and FeSoG on Ciao and Epinions datasets, which results from more noise caused by pseudo items. However, on the Filmtrust dataset, the error value of FedGNN first increases and then drops. This is because FedGNN generates gradients of pseudo items from the same Gaussian distribution, and at the same time Filmtrust dataset only has items. So when sampling more than pseudo items, the gradient of pseudo items from different users would counteract with each other to reduce noise impact. FeSoG generates gradients of pseudo items by user-specific labelling, which does not have this characteristic.

-

•

We should find a trade-off between pseudo-item sampling and model performance. As illustrated in Fig. 6 and Fig. 7, if increasing the number of pseudo items, the extra computational cost increases linearly and the server would be harder to infer the true interacted items. At the same time, prediction accuracy would become worse. So we should find a suitable pseudo item number to balance privacy protection and model performance. For example, pseudo items for Ciao dataset would be an appropriate setting because the model performance does not deteriorate much when the number of pseudo items is lower than .

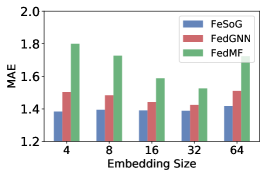

5.3.3. Embedding Size

This section studies the performance with respect to the embedding size. The values of RMSE and MAE on three datasets are reported in Fig. 8 and Fig. 9, respectively. For comparison, we also select two representative baselines, which are FedGNN and FedMF. We have the following observations:

-

•

The proposed FeSoG consistently outperforms other federated recommender system methods across all embedding sizes. This observation suggests that FeSoG can learn informative structures from local graphs. Compared with FedMF, FedGNN demonstrates a much better performance, which justifies the necessity to employ GNN for graph embedding.

-

•

FeSoG has lower fluctuations as embedding sizes change compared with the other two baselines. It indicates that FeSoG is more robust than FedGNN on different embedding sizes, demonstrating the effectiveness of using pseudo-item protection to enhance the training.

-

•

Furthermore, suitable embedding sizes are crucial for different datasets to achieve satisfying performance. All federated learning methods follow the same trend and obtain the best performance in either or . With a smaller embedding size (e.g., ), the model has insufficient representation ability. However, with large embedding sizes (e.g., ), it may cause the overfitting issue due to limited data.

5.3.4. Learning Rate

Learning rate affects the number of communication rounds for the convergence of a federated learning framework. Intuitively, fewer communication steps can decrease the number of communication times between the server and clients, which implies less risks of information leakage. We investigate the impact of learning rate with respect to the training loss of FeSoG and report the results in Fig. 10. The learning rather is selected from . We have the following observations:

-

•

The training of FeSoG is smooth. The loss on three datasets all converges smoothly when increasing the number of communications steps. It suggests that the federated learning framework can effectively transfer informative gradients for the FeSoG to converge.

-

•

Different datasets prefer different learning rates. For example, on the Epinions dataset, the best learning rate . While both Ciao and Filmtrust datasets converge the fastest when the learning rate . Learning rate has a critical influence on different datasets.

5.3.5. Local Differential Privacy parameters and

This section studies the correlations between model performance and the LDP module. It contains two parameters: gradient clip threshold and Laplace noise strength , as shown in Eq. (14). Intuitively, this module alters gradients by injecting noise to gradients to protect the user’s privacy. The injected gradients contribute to the training because the server also aggregates them for updating. Therefore, we conduct experiments to study the model performance with respect to the variations of these two parameters, which is shown in Fig. 11 and Fig. 12 for RMSE and MAE, respectively. We have the following observations:

-

•

With a fixed , FeSoG performs better when increasing . The reason is that a large tends to clip less gradients. Therefore, the aggregated gradient information would be more accurate to reflect the true gradients.

-

•

With fixed , FeSoG performs worse when increasing . It is because a larger injects more substantial Laplace noise to the model gradient. Hence, the gradient learned from data would be overwhelmed by generated noises. Thus, a smaller is preferred.

-

•

There is a trade-off in selecting optimal values. Although larger or smaller lead to better performance, it would increase the risk of privacy leakage. If is infinitely large and is , the server can revert the interactions by checking uploaded gradients from clients. Therefore, we should choose an optimal pair to achieve acceptable performance with a small enough privacy budget.

5.4. Ablation Study (RQ3)

| Variant | Ciao | Epinions | Filmtrust | |||

|---|---|---|---|---|---|---|

| RMSE | MAE | RMSE | MAE | RMSE | MAE | |

| sharing GAT layer | 2.0262 | 1.5863 | 1.8046 | 1.3876 | 2.148 | 1.5939 |

| relative difference | ||||||

| w/o relational vector | 2.1545 | 1.7023 | 1.8044 | 1.3903 | 2.1379 | 1.6441 |

| relative difference | 12.58% | 13.96% | 0.42% | 0.40% | 2.09% | 3.7% |

| w/o pseudo items | 1.9026 | 1.4849 | 1.7053 | 1.3021 | 2.0825 | 1.5798 |

| relative difference | -0.57% | -0.58% | -5.1% | -5.97% | -0.56% | -0.36% |

| FeSoG | 1.9136 | 1.4937 | 1.7969 | 1.3847 | 2.0942 | 1.5855 |

In this section, we conduct an ablation study to analyze those components in FeSoG to validate their effectiveness. We create three other variants of FeSoG:

-

•

sharing GAT layer: FeSoG applies different GAT layers to learn the attention weights for social neighbors and item neighbors. This variant shares the GAT layer for all neighbors. Hence, we only have one GAT layer to learn weight. Note that this variant also employs the relational vector during aggregation.

-

•

w/o relational vectors: this variant ignores the social relation and user-item relation vectors during aggregation. As such, it directly aggregates all the neighbors with their associated attention weights. Since the attention weights are learned separately for user neighbors and item neighbors, we should normalize those weights for all neighbors.

-

•

w/o pseudo items: this variant is a special case of Sec. 5.3.2 where the number of the pseudo item is . It cannot protect the user privacy data from a protection perspective because. Without pseudo items, we only use the true interacted items that have non-zero gradients. However, we also include this variant for a comprehensive study.

The performance comparison of these methods on three datasets is reported in Table 5. We also present the corresponding difference between FeSoG and the variants in a group. We have the following observations:

-

•

We should employ different GAT layers for users neighbors and item neighbors. Compared with FeSoG, sharing GAT layer has worse performance. On the Filmtrust dataset, it has and relative difference on RMSE and MAE, respectively. On the Epinions dataset, it has and relative difference on RMSE and MAE, respectively. The worst performance of this variant is on Ciao, which has and relative difference on RMSE and MAE, respectively.

-

•

We should apply the relational vectors for aggregation. Compared with FeSoG, without relational vectors has worse performance. On the Epinions dataset, it has and relative difference on RMSE and MAE, respectively. On the Filmtrust dataset, it has and relative difference on RMSE and MAE, respectively. The worst performance of this variant is on Ciao, which has and relative difference on RMSE and MAE, respectively.

-

•

Sampling pseudo items always worsen performance. However, compared with FeSoG, without pseudo items is unable to protect the privacy because the server can easily infer the true interacted items by checking which item gradient is not .

6. Conclusion and Future Work

In this paper, we propose a new federated learning framework, FeSoG, for social recommendation. It decentralizes the data storage compared with existing social recommender systems. Moreover, it comprehensively fuses the local user privacy data in clients and uses a server to train an FSRS collaboratively. We address three challenges in designing this model: the heterogeneity of the data, the personalization requirements of the local modeling, and privacy protection for communication. The components in FeSoG jointly tackle these challenges: the relational attention and aggregation of the local graph neural network distinguish social and item neighbors; the local user embedding inference preserves the personalizing information for clients; the pseudo-item labeling, as well as the dynamic LDP technique, protect the gradients from privacy data leakage. To verify the effectiveness of FeSoG, we conduct extensive experiments. The overall comparing experiments demonstrate that FeSoG significantly outperforms SOTA federated learning framework in solving social recommendation problems. Detailed sensitivity analysis regarding the hyper-parameters further justifies the efficacy of FeSoG infusing the social information and user-item interaction and preserving the user privacy data locally. Moreover, the ablation study by dropping the components in FeSoG demonstrates the necessity of our designing.

Though being effective in solving the social recommendation problem, there are still several future directions. Firstly, we randomly sample pseudo items and predict their pseudo labels to protect gradients. However, as the items may have their relations, we may investigate employing an adaptive sampling of items rather than randomly. For example, we may train a local reinforcement learning model to explore the non-interacted item, which can decrease the noise. Secondly, we train the relational graph neural network only by leveraging local data. It is also possible to extend the local graph to be a high-order graph. However, this requires data transferring among clients. To protect privacy, we may design a peer-to-peer communication of clients preserving the decentralized storage characteristics of a federated recommender system. Finally, we can study the efficiency of communication. Since it requires numerous communication rounds to train a federated learning framework, it will be satisfying to decrease the time for communication or increase the bandwidth for communication with large-scale clients.

Acknowledgment

Hao Peng is supported by the National Key R&D Program of China through grant 2021YFB1714800, NSFC through grants 62002007 and U20B2053, S&T Program of Hebei through grant 21340301D, Fundamental Research Funds for the Central Universities. Philip S. Yu is partially supported by NSF under grants III-1763325, III-1909323, III-2106758, and SaTC-1930941.

References

- (1)

- Ammad-Ud-Din et al. (2019) Muhammad Ammad-Ud-Din, Elena Ivannikova, Suleiman A Khan, Were Oyomno, Qiang Fu, Kuan Eeik Tan, and Adrian Flanagan. 2019. Federated collaborative filtering for privacy-preserving personalized recommendation system. arXiv preprint arXiv:1901.09888 (2019).

- Berg et al. (2017) Rianne van den Berg, Thomas N Kipf, and Max Welling. 2017. Graph convolutional matrix completion. arXiv preprint arXiv:1706.02263 (2017).

- Cao et al. (2021) Yuwei Cao, Hao Peng, Jia Wu, Yingtong Dou, Jianxin Li, and Philip S Yu. 2021. Knowledge-Preserving Incremental Social Event Detection via Heterogeneous GNNs. In Proceedings of the Web Conference 2021. 3383–3395.

- Chai et al. (2020) Di Chai, Leye Wang, Kai Chen, and Qiang Yang. 2020. Secure federated matrix factorization. IEEE Intelligent Systems (2020).

- Chen et al. (2020) Chen Chen, Jingfeng Zhang, Anthony KH Tung, Mohan Kankanhalli, and Gang Chen. 2020. Robust federated recommendation system. arXiv preprint arXiv:2006.08259 (2020).

- Dou et al. (2020) Yingtong Dou, Zhiwei Liu, Li Sun, Yutong Deng, Hao Peng, and Philip S Yu. 2020. Enhancing graph neural network-based fraud detectors against camouflaged fraudsters. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management. 315–324.

- Erlingsson et al. (2014) Úlfar Erlingsson, Vasyl Pihur, and Aleksandra Korolova. 2014. Rappor: Randomized aggregatable privacy-preserving ordinal response. In Proceedings of the 2014 ACM SIGSAC conference on computer and communications security. 1054–1067.

- Fallah et al. (2020) Alireza Fallah, Aryan Mokhtari, and Asuman Ozdaglar. 2020. Personalized federated learning: A meta-learning approach. arXiv preprint arXiv:2002.07948 (2020).

- Fan et al. (2019) Wenqi Fan, Yao Ma, Qing Li, Yuan He, Eric Zhao, Jiliang Tang, and Dawei Yin. 2019. Graph neural networks for social recommendation. In The World Wide Web Conference, WWW 2019, San Francisco, CA, USA, May 13-17, 2019. ACM, 417–426.

- Fan et al. (2020) Wenqi Fan, Yao Ma, Qing Li, Jianping Wang, Guoyong Cai, Jiliang Tang, and Dawei Yin. 2020. A Graph Neural Network Framework for Social Recommendations. IEEE Transactions on Knowledge and Data Engineering (2020).

- Flanagan et al. (2020) Adrian Flanagan, Were Oyomno, Alexander Grigorievskiy, Kuan Eeik Tan, Suleiman A Khan, and Muhammad Ammad-Ud-Din. 2020. Federated multi-view matrix factorization for personalized recommendations. arXiv preprint arXiv:2004.04256 (2020).

- Grover and Leskovec (2016) Aditya Grover and Jure Leskovec. 2016. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining. 855–864.

- Guo et al. (2013) G. Guo, J. Zhang, and N. Yorke-Smith. 2013. A Novel Bayesian Similarity Measure for Recommender Systems. In Proceedings of the 23rd International Joint Conference on Artificial Intelligence (IJCAI). 2619–2625.

- Guo et al. (2015) Guibing Guo, Jie Zhang, and Neil Yorke-Smith. 2015. Trustsvd: Collaborative filtering with both the explicit and implicit influence of user trust and of item ratings. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 29.

- Hamilton et al. (2017) William L Hamilton, Rex Ying, and Jure Leskovec. 2017. Inductive representation learning on large graphs. In Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, December 4-9, 2017, Long Beach, CA, USA. 1024–1034.

- Hu et al. (2020) Ziniu Hu, Yuxiao Dong, Kuansan Wang, and Yizhou Sun. 2020. Heterogeneous graph transformer. In Proceedings of The Web Conference 2020. 2704–2710.

- Jamali and Ester (2009) Mohsen Jamali and Martin Ester. 2009. Trustwalker: a random walk model for combining trust-based and item-based recommendation. In Proceedings of the 15th ACM SIGKDD international conference on Knowledge discovery and data mining. 397–406.

- Jamali and Ester (2010) Mohsen Jamali and Martin Ester. 2010. A matrix factorization technique with trust propagation for recommendation in social networks. In Proceedings of the fourth ACM conference on Recommender systems. 135–142.

- Kabbur et al. (2013) Santosh Kabbur, Xia Ning, and George Karypis. 2013. Fism: factored item similarity models for top-n recommender systems. In Proceedings of the 19th ACM SIGKDD international conference on Knowledge discovery and data mining. 659–667.

- Kairouz et al. (2019) Peter Kairouz, H Brendan McMahan, Brendan Avent, Aurélien Bellet, Mehdi Bennis, Arjun Nitin Bhagoji, Keith Bonawitz, Zachary Charles, Graham Cormode, Rachel Cummings, et al. 2019. Advances and open problems in federated learning. arXiv preprint arXiv:1912.04977 (2019).

- Kipf and Welling (2017) Thomas N Kipf and Max Welling. 2017. Semi-supervised classification with graph convolutional networks. In 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings. OpenReview.net.

- Konečnỳ et al. (2016) Jakub Konečnỳ, H Brendan McMahan, Felix X Yu, Peter Richtárik, Ananda Theertha Suresh, and Dave Bacon. 2016. Federated learning: Strategies for improving communication efficiency. arXiv preprint arXiv:1610.05492 (2016).

- Koren et al. (2009) Yehuda Koren, Robert Bell, and Chris Volinsky. 2009. Matrix factorization techniques for recommender systems. Computer 42, 8 (2009), 30–37.

- Li et al. (2020) Munan Li, Kenji Tei, and Yoshiaki Fukazawa. 2020. An Efficient Adaptive Attention Neural Network for Social Recommendation. IEEE Access 8 (2020), 63595–63606.

- Liu et al. (2019) Peng Liu, Lemei Zhang, and Jon Atle Gulla. 2019. Real-time social recommendation based on graph embedding and temporal context. International Journal of Human-Computer Studies 121 (2019), 58–72.

- Liu et al. (2020d) Ye Liu, Yao Wan, Lifang He, Hao Peng, and Philip S Yu. 2020d. KG-BART: Knowledge Graph-Augmented BART for Generative Commonsense Reasoning. arXiv preprint arXiv:2009.12677 (2020).

- Liu et al. (2020a) Zhiwei Liu, Yingtong Dou, Philip S Yu, Yutong Deng, and Hao Peng. 2020a. Alleviating the Inconsistency Problem of Applying Graph Neural Network to Fraud Detection. In Proceedings of the 43rd International ACM SIGIR conference on research and development in Information Retrieval, SIGIR 2020, Virtual Event, China, July 25-30, 2020. ACM, 1569–1572.

- Liu et al. (2021) Zhiwei Liu, Ziwei Fan, Yu Wang, and Philip S. Yu. 2021. Augmenting Sequential Recommendation with Pseudo-PriorItems via Reversely Pre-training Transformer. Proceedings of the 44th international ACM SIGIR conference on Research and development in information retrieval.

- Liu et al. (2020b) Zhiwei Liu, Lin Meng, Jiawei Zhang, and Philip S Yu. 2020b. Deoscillated Graph Collaborative Filtering. arXiv preprint arXiv:2011.02100 (2020).

- Liu et al. (2020c) Zhiwei Liu, Mengting Wan, Stephen Guo, Kannan Achan, and Philip S Yu. 2020c. Basconv: aggregating heterogeneous interactions for basket recommendation with graph convolutional neural network. In Proceedings of the 2020 SIAM International Conference on Data Mining. SIAM, 64–72.

- Ma et al. (2009) Hao Ma, Irwin King, and Michael R Lyu. 2009. Learning to recommend with social trust ensemble. In Proceedings of the 32nd international ACM SIGIR conference on Research and development in information retrieval. 203–210.

- Ma et al. (2008) Hao Ma, Haixuan Yang, Michael R Lyu, and Irwin King. 2008. Sorec: social recommendation using probabilistic matrix factorization. In Proceedings of the 17th ACM conference on Information and knowledge management. 931–940.

- Ma et al. (2011) Hao Ma, Dengyong Zhou, Chao Liu, Michael R Lyu, and Irwin King. 2011. Recommender systems with social regularization. In Proceedings of the fourth ACM international conference on Web search and data mining. 287–296.

- McMahan et al. (2017) Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. 2017. Communication-efficient learning of deep networks from decentralized data. In Artificial Intelligence and Statistics. PMLR, 1273–1282.

- McSherry and Mironov (2009) Frank McSherry and Ilya Mironov. 2009. Differentially private recommender systems: Building privacy into the netflix prize contenders. In Proceedings of the 15th ACM SIGKDD international conference on Knowledge discovery and data mining. 627–636.

- Mu et al. (2019) Nan Mu, Daren Zha, Yuanye He, and Zhihao Tang. 2019. Graph Attention Networks for Neural Social Recommendation. In 31st IEEE International Conference on Tools with Artificial Intelligence, ICTAI 2019, Portland, OR, USA, November 4-6, 2019. IEEE, 1320–1327.

- Peng et al. (2021a) Hao Peng, Jianxin Li, Yangqiu Song, Renyu Yang, Rajiv Ranjan, Philip S Yu, and Lifang He. 2021a. Streaming Social Event Detection and Evolution Discovery in Heterogeneous Information Networks. ACM Transactions on Knowledge Discovery from Data (TKDD) 15, 5 (2021), 1–33.

- Peng et al. (2021b) Hao Peng, Renyu Yang, Zheng Wang, Jianxin Li, Lifang He, Philip Yu, Albert Zomaya, and Raj Ranjan. 2021b. Lime: Low-cost incremental learning for dynamic heterogeneous information networks. IEEE Trans. Comput. (2021).

- Qi et al. (2020) Tao Qi, Fangzhao Wu, Chuhan Wu, Yongfeng Huang, and Xing Xie. 2020. Privacy-Preserving News Recommendation Model Learning. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: Findings. 1423–1432.

- Rendle et al. (2009) Steffen Rendle, Christoph Freudenthaler, Zeno Gantner, and Lars Schmidt-Thieme. 2009. BPR: Bayesian personalized ranking from implicit feedback. In UAI. 452–461.

- Ribero et al. (2020) Mónica Ribero, Jette Henderson, Sinead Williamson, and Haris Vikalo. 2020. Federating recommendations using differentially private prototypes. arXiv preprint arXiv:2003.00602 (2020).

- Shen and Jin (2012) Yelong Shen and Ruoming Jin. 2012. Learning personal+ social latent factor model for social recommendation. In Proceedings of the 18th ACM SIGKDD international conference on Knowledge discovery and data mining. 1303–1311.

- Singh and Gordon (2008) Ajit P Singh and Geoffrey J Gordon. 2008. Relational learning via collective matrix factorization. In Proceedings of the 14th ACM SIGKDD international conference on Knowledge discovery and data mining. 650–658.

- Song et al. (2019) Weiping Song, Zhiping Xiao, Yifan Wang, Laurent Charlin, Ming Zhang, and Jian Tang. 2019. Session-based social recommendation via dynamic graph attention networks. In Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining. 555–563.

- Tang et al. (2013) Jiliang Tang, Huiji Gao, Xia Hu, and Huan Liu. 2013. Exploiting homophily effect for trust prediction. In Sixth ACM International Conference on Web Search and Data Mining, WSDM 2013, Rome, Italy, February 4-8, 2013. ACM, 53–62.

- Tang et al. (2012a) Jiliang Tang, Huiji Gao, and Huan Liu. 2012a. mTrust: Discerning multi-faceted trust in a connected world. In Proceedings of the Fifth International Conference on Web Search and Web Data Mining, WSDM 2012, Seattle, WA, USA, February 8-12, 2012. ACM, 93–102.

- Tang et al. (2012b) Jiliang Tang, Huiji Gao, Huan Liu, and Atish Das Sarma. 2012b. eTrust: Understanding trust evolution in an online world. In The 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’12, Beijing, China, August 12-16, 2012. ACM, 253–261.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Lukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. arXiv preprint arXiv:1706.03762 (2017).

- Veličković et al. (2017) Petar Veličković, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Lio, and Yoshua Bengio. 2017. Graph attention networks. arXiv preprint arXiv:1710.10903 (2017).

- Wang et al. (2021) Chen Wang, Yueqing Liang, Zhiwei Liu, Tao Zhang, and Philip S Yu. 2021. Pre-training Graph Neural Network for Cross Domain Recommendation. arXiv preprint arXiv:2111.08268 (2021).

- Wang et al. (2019) Xiang Wang, Xiangnan He, Meng Wang, Fuli Feng, and Tat-Seng Chua. 2019. Neural Graph Collaborative Filtering. In SIGIR. 165–174.

- Wang et al. (2014) Xin Wang, Weike Pan, and Congfu Xu. 2014. Hgmf: Hierarchical group matrix factorization for collaborative recommendation. In Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management. 769–778.

- Wu et al. (2021b) Chuhan Wu, Fangzhao Wu, Yang Cao, Yongfeng Huang, and Xing Xie. 2021b. Fedgnn: Federated graph neural network for privacy-preserving recommendation. arXiv preprint arXiv:2102.04925 (2021).

- Wu et al. (2020) Le Wu, Junwei Li, Peijie Sun, Richang Hong, Yong Ge, and Meng Wang. 2020. DiffNet++: A Neural Influence and Interest Diffusion Network for Social Recommendation. IEEE Transactions on Knowledge and Data Engineering (2020).

- Wu et al. (2019a) Le Wu, Peijie Sun, Yanjie Fu, Richang Hong, Xiting Wang, and Meng Wang. 2019a. A neural influence diffusion model for social recommendation. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR 2019, Paris, France, July 21-25, 2019. ACM, 235–244.

- Wu et al. (2018) Le Wu, Peijie Sun, Richang Hong, Yanjie Fu, Xiting Wang, and Meng Wang. 2018. SocialGCN: An efficient graph convolutional network based model for social recommendation. arXiv preprint arXiv:1811.02815 (2018).

- Wu et al. (2021a) Le Wu, Peijie Sun, Richang Hong, Yong Ge, and Meng Wang. 2021a. Collaborative neural social recommendation. IEEE Transactions on Systems, Man, and Cybernetics: Systems 51, 1 (2021), 464–476.

- Wu et al. (2019b) Qitian Wu, Hengrui Zhang, Xiaofeng Gao, Peng He, Paul Weng, Han Gao, and Guihai Chen. 2019b. Dual graph attention networks for deep latent representation of multifaceted social effects in recommender systems. In The World Wide Web Conference, WWW 2019, San Francisco, CA, USA, May 13-17, 2019. ACM, 2091–2102.

- Xia et al. (2020) Congying Xia, Caiming Xiong, S Yu Philip, and Richard Socher. 2020. Composed Variational Natural Language Generation for Few-shot Intents. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: Findings. 3379–3388.

- Yang et al. (2020) Chengxu Yang, QiPeng Wang, Mengwei Xu, Shangguang Wang, Kaigui Bian, and Xuanzhe Liu. 2020. Heterogeneity-aware federated learning. arXiv preprint arXiv:2006.06983 (2020).

- Yang et al. (2021) Liangwei Yang, Zhiwei Liu, Yingtong Dou, Jing Ma, and Philip S. Yu. 2021. ConsisRec: Enhancing GNN for Social Recommendation via Consistent Neighbor Aggregation. Proceedings of the 44th international ACM SIGIR conference on Research and development in information retrieval.

- Yang et al. (2019) Qiang Yang, Yang Liu, Tianjian Chen, and Yongxin Tong. 2019. Federated machine learning: Concept and applications. ACM Transactions on Intelligent Systems and Technology (TIST) 10, 2 (2019), 1–19.

- Ying et al. (2018) Rex Ying, Ruining He, Kaifeng Chen, Pong Eksombatchai, William L. Hamilton, and Jure Leskovec. 2018. Graph Convolutional Neural Networks for Web-Scale Recommender Systems. In SIGKDD, Yike Guo and Faisal Farooq (Eds.). 974–983.

- Zhang et al. (2017) Chuxu Zhang, Lu Yu, Yan Wang, Chirag Shah, and Xiangliang Zhang. 2017. Collaborative User Network Embedding for Social Recommender Systems. In Proceedings of the 2017 SIAM International Conference on Data Mining, Houston, Texas, USA, April 27-29, 2017, Nitesh V. Chawla and Wei Wang (Eds.). SIAM, 381–389.

- Zhou et al. (2021a) Yao Zhou, Haonan Wang, Jingrui He, and Haixun Wang. 2021a. From Intrinsic to Counterfactual: On the Explainability of Contextualized Recommender Systems. arXiv preprint arXiv:2110.14844 (2021).

- Zhou et al. (2021b) Yao Zhou, Jianpeng Xu, Jun Wu, Zeinab Taghavi, Evren Korpeoglu, Kannan Achan, and Jingrui He. 2021b. PURE: Positive-Unlabeled Recommendation with Generative Adversarial Network. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining. 2409–2419.