Federated clustering with GAN-based data synthesis

Abstract

Federated clustering (FC) is an extension of centralized clustering in federated settings. The key here is how to construct a global similarity measure without sharing private data, since the local similarity may be insufficient to group local data correctly and the similarity of samples across clients cannot be directly measured due to privacy constraints. Obviously, the most straightforward way to analyze FC is to employ the methods extended from centralized ones, such as K-means (KM) and fuzzy c-means (FCM). However, they are vulnerable to non independent-and-identically-distributed (non-IID) data among clients. To handle this, we propose a new federated clustering framework, named synthetic data aided federated clustering (SDA-FC). It trains generative adversarial network locally in each client and uploads the generated synthetic data to the server, where KM or FCM is performed on the synthetic data. The synthetic data can make the model immune to the non-IID problem and enable us to capture the global similarity characteristics more effectively without sharing private data. Comprehensive experiments reveals the advantages of SDA-FC, including superior performance in addressing the non-IID problem and the device failures.

1 Introduction

In an era where privacy concerns are paramount, federated learning McMahan et al. (2017) has garnered considerable attention and found applications in diverse domains, including autonomous driving Nguyen et al. (2022a); Fu et al. (2023), smart healthcare Nguyen et al. (2022b), smart cities Rasha et al. (2023) and IoT-data Nguyen et al. (2021); He et al. (2023). It aims to train a global model by fusing multiple local models trained on client devices without sharing private data.

| KM-based methods | FCM-based methods | ||||

| KM_central | k-FED | ours | FCM_central | FFCM | ours |

| 1 | 0.7352 | 1 | 1 | 0.3691 | 1 |

While federated learning excels in independent-and-identically-distributed (IID) scenarios, the local data distributions across client devices often deviate substantially from the IID scenarios. This phenomenon, referred to as non-IID data or data heterogeneity, can impede convergence and detrimentally affect model performance Zhu et al. (2021); Ma et al. (2022). In non-IID scenarios, a natural approach involves departing from the conventional single-center framework, which trains only one global model. Instead, one can construct a multi-center framework, harnessing the power of client clustering Ghosh et al. (2020); Zec et al. (2022) or data clustering to enhance collaboration Dennis et al. (2021); Stallmann and Wilbik (2022); Chung et al. (2022). For the client clustering, the basic idea of it is that each client may come from a specific distribution. Thus, we should use clients within the same cluster to collaboratively train a specific global model. However, each sample within a single client may also come from a specific distribution. Hence, the data clustering, also know as federated clustering, can be more beneficial for client collaboration Stallmann and Wilbik (2022). The goal of it is to cluster data based on a global similarity measure while keeping all data local Stallmann and Wilbik (2022).

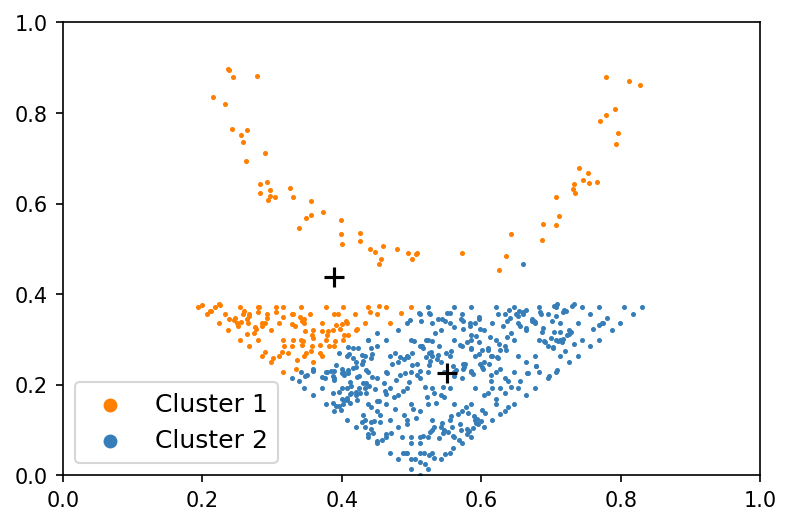

Beyond its role in mitigating the non-IID problem, federated clustering itself presents an intriguing research avenue. As shown in Fig. 1, local similarity alone inadequately recovers accurate local data grouping, while a global perspective excels in this regard. Yet, obtaining the global real dataset is unfeasible due to the confidentiality of local client data. Hence, the key here is how to measure the global similarity without sharing private data. To handle this, previous work introduced adaptations of classic centralized clustering algorithms, such as K-means (KM) MacQueen (1967) and fuzzy c-means (FCM) Bezdek et al. (1984), to the federated setting, resulting in k-FED Dennis et al. (2021) and federated fuzzy c-means (FFCM) Stallmann and Wilbik (2022). The basic ideas of them are similar: alternately estimating the local cluster centroids and the global ones, in other words, mining the local centroids based on local private data and uploading them to the server, where one can run KM to construct global cluster centroids for housing the global similarity information that is then disseminated to clients. However, the resulting global cluster centroids may be delicate and susceptible to varying non-IID levels, thereby impairing the robustness and performance of the model.

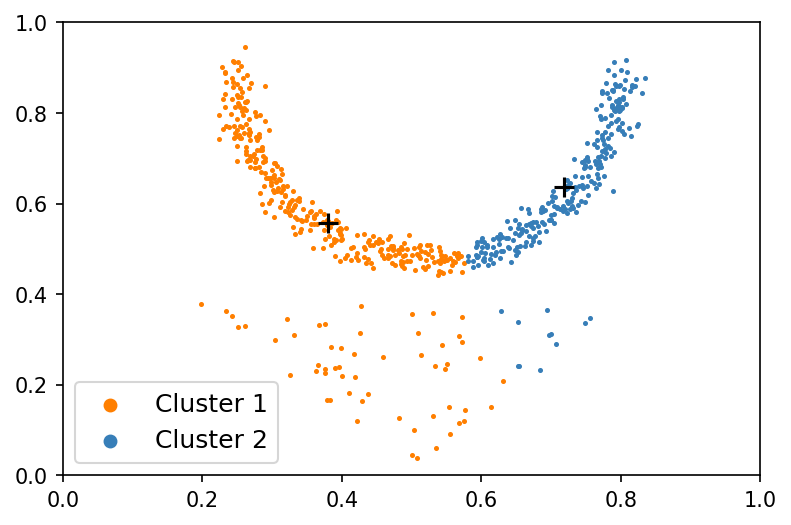

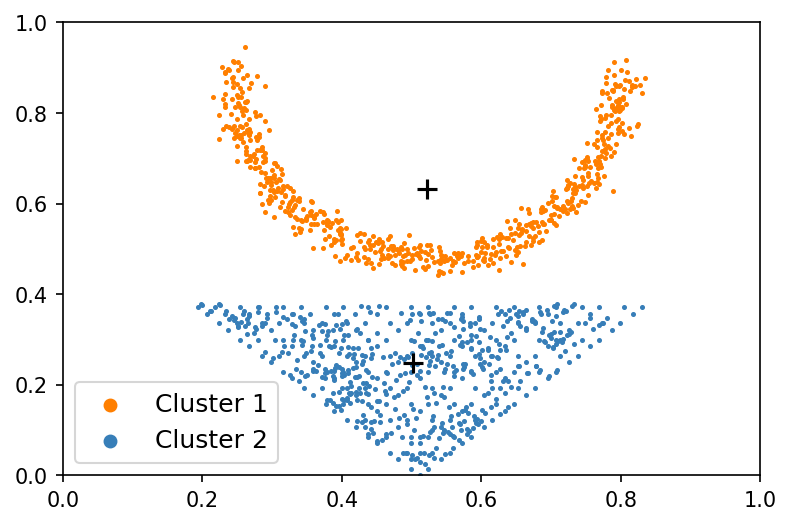

Given a federated dataset, the non-IID level solely hinges on the local data distribution rather than the global one. Consequently, the model performance can exhibit insensitivity to different non-IID levels if a good approximation of the global data can be constructed. Moreover, such an approximation can enhance the capture of global similarity characteristics without compromising data privacy. Motivated by this, we propose a simple but effective federated clustering framework, denominated synthetic data aided federated clustering (SDA-FC). It encompasses two main steps: global synthetic data construction and cluster assignment. In the first step, the central server aims to construct a global synthetic data using multiple local GANs Goodfellow et al. (2014) trained from local data. In the second step, the central server first performs KM or FCM on the global synthetic dataset to get global cluster centroids that is then disseminated to clients. Subsequently, cluster assignment is performed based on the proximity of local data to these centroids. As shown in Fig. 1, the local cluster centroids (Fig. 1 (a) and Fig. 1 (b)) markedly differ from the global ones (Fig. 1. (c)), and the latter cannot be accurately approximated by running KM on the former simply. Notably, the global synthetic data (Fig. 1 (d)) emerges as a good approximation of the global real data, with centroids derived from this synthetic dataset closely mirroring those obtained from the real global data. To further sense the gap between federated clustering and centralized clustering, we performed k-FED and FFCM on the toy example with the federated setting and performed KM and FCM with the centralized setting. As shown in Table 1, there is a gap between them, but it is narrowed by us.

In addition to the non-IID problem, there are also some other challenges in federated learning, such as expensive communication and systems heterogeneity Li et al. (2020). These challenges can lead to low efficiency and device failures. Nevertheless, the proposed framework effectively addresses these concerns, requiring only one communication round between the central server and clients, and can run asynchronously while remaining robust to device failures.

The rest of this paper is organized as follows: Sect.2 first concisely describe four core challenges in federated learning, and then review some representative federated clustering methods. Following that, Sect. 3 first introduce some preliminaries about GAN, and then propose a new federated clustering framework SDA-FC. Sect. 4 reveals the advantages of SDA-FC. Finally, this paper is concluded in Sect. 5.

2 Related Work

2.1 Core challenges in federated learning

In traditional centralized learning, all data is typically stored on a central server. For this learning protocol, one problem is hardware constraints that it places a high demand on the storage and computing capacity of the central server when the dataset is large. And another problem is privacy risks and data leakage Zhu et al. (2021). To handle the first problem, distributed data parallelism Dean et al. (2012) uses multiple devices to train model replicas in parallel using different data subsets. Nevertheless, this method still necessitates access to the entire dataset for division into subsets, thus retaining inherent privacy risks.

Federated learning McMahan et al. (2017), a recent research focal point, offers a promising solution by enabling the collaborative training of a global model through the fusion of local models trained on client devices, all while safeguarding user privacy. Despite its potential to alleviate both hardware and privacy concerns, federated learning presents several challenges, which are outlined as follows Li et al. (2020):

-

•

Expensive Communication. In practice, federated networks may encompass millions of client devices, rendering network communication substantially more costly than local computation Huang et al. (2013); Van Berkel (2009). Crafting communication-efficient models involves minimizing both communication rounds and message volumes.

-

•

Systems Heterogeneity. Client devices often possess diverse communication, computation, and storage capabilities due to variations in wireless network connectivity, energy resources, and hardware limitations. Consequently, only a fraction of devices may effectively participate in training a large model Bonawitz et al. (2019), and device disconnections during training must be anticipated. Thus, a robust federated model should accommodate low device participation and device failures.

-

•

Data Heterogeneity. In federated learning, local data distributions across client devices can differ substantially, influenced by how each client utilizes their local device. This phenomenon, known as non-IID data or data heterogeneity, can impede convergence and degrade model performance Zhu et al. (2021). A natural solution to this challenge involves moving beyond the traditional single-center framework, where only one global model is trained. Instead, constructing a multi-center framework, which simultaneously trains multiple global models based on client clustering Ghosh et al. (2020); Zec et al. (2022) or data clustering Dennis et al. (2021); Stallmann and Wilbik (2022); Chung et al. (2022), may offer a more viable approach.

-

•

Privacy Concerns. Although the classic federated averaging method McMahan et al. (2017) helps mitigate shallow data leakage from raw data, Zhu et al. Zhu et al. (2019) demonstrated its potential to lead to deep data leakage through shared gradients and parameters. This deep data leakage allows the reconstruction of original data from these gradients and parameters. To enhance privacy in federated learning, various works have employed differential privacy Dwork et al. (2006); Dwork (2011); Dwork et al. (2014), a popular privacy protection technique Geyer et al. (2017); Zhao et al. (2020); Wei et al. (2020). However, while improving privacy, differential privacy can significantly degrade model performance.

Numerous centralized algorithms have been adapted for federated settings in recent years, encompassing reinforcement learning Wang et al. (2019, 2020); Zhang et al. (2021), classification algorithms Brisimi et al. (2018); Yu et al. (2020); Chen and Chao (2021), clustering algorithms Dennis et al. (2021); Stallmann and Wilbik (2022); Chung et al. (2022), and more. Nevertheless, these extensions are often discouraged by the challenges of federated learning in terms of model efficiency and model performance. This work specifically addresses the challenges associated with federated clustering.

2.2 Federated clustering

As shown in Fig. 1, the local similarity is insufficient to group local data correctly, while a global perspective excels in this regard. However, the global real dataset cannot be obtained since the local data on client devices are forbidden to share. Hence, the key here is how to measure the global similarity without sharing private data.

To tackle this challenge, k-FED Dennis et al. (2021) and federated fuzzy c-means (FFCM) Stallmann and Wilbik (2022) have extended two classic centralized clustering algorithms, K-means (KM) MacQueen (1967) and fuzzy c-means (FCM) Bezdek et al. (1984), to the federated setting, respectively. Specifically, each client device runs a classic centralized clustering algorithm on the local data to generate several cluster centroids and uploads them to the central server. Then, the central server can construct global cluster centroids by running KM on the uploaded local cluster centroids. The classic centralized clustering algorithm used in k-FED is KM and that used in FFCM is FCM. However, the resulting global cluster centroids often exhibit fragility and sensitivity to varying non-IID levels, leading to suboptimal and non-robust model performance.

Given a federated dataset, the non-IID level quantifies the heterogeneity degree among local data distributions, and it remains independent of the global distribution. Hence, model performance can remain unaffected by different non-IID levels if a good approximation of the global real data can be constructed. Additionally, such an approximation may facilitate the more effective capture of global similarity characteristics without sharing private data. Motivated by this, we introduce a simple but effective federated clustering framework based on GAN-based data synthesis, named synthetic data aided federated clustering (SDA-FC). It requires only one communication round, can run asynchronously, and can handle device failures. It comprises two main steps: global synthetic data construction and cluster assignment. In the former, the central server endeavors to construct a global synthetic dataset using multiple local GANs Goodfellow et al. (2014), trained on local data. For the latter, the central server first applies KM or FCM to the global synthetic dataset, resulting in global cluster centroids. Then, the final clustering result can be obtained based on the proximity of local data points to these centroids.

3 Synthetic Data Aided Federated Clustering (SDA-FC)

In this section, we present the synthetic data aided federated clustering (SDA-FC) framework. First, we introduce some preliminaries about GAN, which form the basis of our approach. Subsequently, we detail the SDA-FC.

3.1 Preliminaries

The vanilla GAN Goodfellow et al. (2014) architecture comprises two networks: the generator and the discriminator. These networks engage in a two-player game wherein they continuously improve each other. The generator aims to produce synthetic samples that closely resemble real data, thereby deceiving the discriminator, whose role is to differentiate between real and generated samples. The game concludes when the discriminator can no longer distinguish between real and generated samples, indicating that the generator has learned the underlying real data distribution and reached the theoretical global optimum.

The objective function of the vanilla GAN is defined as:

| (1) |

where is the generator that inputs a noise and outputs a generated sample, is Gaussian distribution, is the discriminator that inputs a sample and outputs a scalar to tell the generated samples from the real ones, and is the distribution of real data. While GAN have demonstrated remarkable success in various applications, adversarial training is known for its instability and susceptibility to mode collapses Metz et al. (2017), resulting in both high-quality and low-diversity generated samples. Mode collapses imply that the model captures only a portion of the real data’s characteristics. To address this, Mukherjee et al. Mukherjee et al. (2019) introduced an additional categorical variable into the generator’s input, promoting a clearer cluster structure in the latent space and enhancing sample diversity.

To mitigate mode collapses in our SDA-FC framework, we also use a mixture of discrete and continuous variables as the input of the generator by following Mukherjee et al. (2019). The new objective function is defined as:

| (2) |

where is a uniform random distribution with the lowest value 1 and the highest value , and is a one-hot vector with the -th element being 1.

3.2 Synthetic Data Aided Federated Clustering (SDA-FC)

Given a real world dataset , which is distributed among clients, i.e. . The goal of federated clustering is to cluster data based on a global similarity measure while keeping all data local Stallmann and Wilbik (2022). However, direct measurement of global similarity is infeasible without sharing private data. This is why federated clustering is a challenging task to handle.

To handle this, we propose the synthetic data aided federated clustering (SDA-FC) framework, which is designed to require only a single round of communication between clients and the central server. It consists of two main steps: global synthetic data construction and cluster assignment, which are detailed below.

3.2.1 Global synthetic data construction

Firstly, client () downloads an initial GAN model from the central server and trains it with the local data . Then, the trained generator and the local data size of are uploaded to the central server. Finally, the central server use to generate a dataset of the same size as , and the global synthetic dataset can be obtained by merging all generated datasets, i.e. .

3.2.2 Cluster assignment

As a general federated clustering framework, it is simple to combine SDA-FC with some centralized clustering methods, such as K-means (KM) MacQueen (1967) and fuzzy c-means (FCM) Bezdek et al. (1984), leading to two specific methods, SDA-FC-KM and SDA-FC-FCM. Specifically, the central server first performs KM or FCM on the global synthetic dataset to obtain global centroids. Then, each client downloads the centroids and the final clustering result can be obtained by performing cluster assignment according to the cosine distance from local data to the centroids.

4 Experimental results

In this section, we detail the experimental settings, validate the efficacy of our proposed methods and the global synthetic dataset on several datasets with different non-IID scenarios, and perform a sensitivity analysis of clustering methods in response to device failures induced by system heterogeneity. Finally, we summarize the experimental findings.

| Dataset | Type | Size | Image size/Features | Class |

|---|---|---|---|---|

| MNIST | image | 70000 | 10 | |

| Fashion-MNIST | image | 70000 | 10 | |

| CIFAR-10 | image | 60000 | 10 | |

| STL-10 | image | 13000 | 10 | |

| Pendigits | time series | 10992 | 16 | 10 |

4.1 Experimental Settings

Creating a universal non-IID benchmark dataset in the realm of federated learning remains a challenging endeavor due to the inherent complexity of federated learning itself Li et al. (2022); Hu et al. (2022). Here, following ref. Chung et al. (2022), we simulate different federated scenarios by partitioning the real-world dataset into smaller subsets (each one corresponds to a client) and scaling the non-IID level in clients, where is the number of true clusters. For a client with datapoints, the first datapoints are sampled from a single cluster, and the remaining ones are randomly sampled from any cluster. Extremely, means the local data across the clients is IID, and means the local data across the clients is completely non-IID.

As shown in Table LABEL:datasets, we select two grey image datasets MNIST and Fashion-MNIST, two color image datasets CIFAR-10 Krizhevsky et al. (2009) and STL-10 111Note that, to reduce the computational cost of baseline methods and to use the same network structure for CIFAR-10, we performed a preprocessing step to resize the images in STL-10 to 32 32. Coates et al. (2011), and a time series dataset Pendigits Keller et al. (2012) for comprehensive analysis. In SDA-FC, all local GANs are trained with the Adam Optimizer Kingma and Ba (2014). More detailed hyperparameter settings can be found in the Appendix A. Our code will be made publicly available to facilitate reproducibility.

| Dataset | KM-based methods | FCM-based methods | |||||

| KM_central | k-FED | SDA-FC-KM | FCM_central | FFCM | SDA-FC-FCM | ||

| MNIST | 0.0 | 0.5304 | 0.5081 | 0.5133 | 0.5187 | 0.5157 | 0.5141 |

| 0.25 | 0.4879 | 0.5033 | 0.5264 | 0.5063 | |||

| 0.5 | 0.4515 | 0.5118 | 0.4693 | 0.5055 | |||

| 0.75 | 0.4552 | 0.5196 | 0.4855 | 0.5143 | |||

| 1.0 | 0.4142 | 0.5273 | 0.5372 | 0.5140 | |||

| Fashion-MNIST | 0.0 | 0.6070 | 0.5932 | 0.5947 | 0.6026 | 0.5786 | 0.6027 |

| 0.25 | 0.5730 | 0.6052 | 0.5995 | 0.5664 | |||

| 0.5 | 0.6143 | 0.6063 | 0.6173 | 0.6022 | |||

| 0.75 | 0.5237 | 0.6077 | 0.6139 | 0.5791 | |||

| 1.0 | 0.5452 | 0.6065 | 0.5855 | 0.6026 | |||

| CIFAR-10 | 0.0 | 0.0871 | 0.0820 | 0.0823 | 0.0823 | 0.0812 | 0.0819 |

| 0.25 | 0.0866 | 0.0835 | 0.0832 | 0.0818 | |||

| 0.5 | 0.0885 | 0.0838 | 0.0870 | 0.0810 | |||

| 0.75 | 0.0818 | 0.0864 | 0.0842 | 0.0808 | |||

| 1.0 | 0.0881 | 0.0856 | 0.0832 | 0.0858 | |||

| STL-10 | 0.0 | 0.1532 | 0.1468 | 0.1470 | 0.1469 | 0.1436 | 0.1406 |

| 0.25 | 0.1472 | 0.1511 | 0.1493 | 0.1435 | |||

| 0.5 | 0.1495 | 0.1498 | 0.1334 | 0.1424 | |||

| 0.75 | 0.1455 | 0.1441 | 0.1304 | 0.1425 | |||

| 1.0 | 0.1403 | 0.1477 | 0.1565 | 0.1447 | |||

| Pendigits | 0.0 | 0.6877 | 0.7001 | 0.6972 | 0.6862 | 0.6866 | 0.6654 |

| 0.25 | 0.6620 | 0.6796 | 0.6848 | 0.6631 | |||

| 0.5 | 0.6625 | 0.6661 | 0.6798 | 0.6544 | |||

| 0.75 | 0.5521 | 0.6734 | 0.6757 | 0.6846 | |||

| 1.0 | 0.6296 | 0.5172 | 0.7236 | 0.5162 | |||

| count | - | - | 7 | 18 | - | 16 | 9 |

| Dataset | KM-based methods | FCM-based methods | |||||

| KM_central | k-FED | SDA-FC-KM | FCM_central | FFCM | SDA-FC-FCM | ||

| MNIST | 0.0 | 0.4786 | 0.5026 | 0.4977 | 0.5024 | 0.5060 | 0.5109 |

| 0.25 | 0.4000 | 0.4781 | 0.5105 | 0.5027 | |||

| 0.5 | 0.3636 | 0.4884 | 0.3972 | 0.4967 | |||

| 0.75 | 0.3558 | 0.4926 | 0.4543 | 0.5021 | |||

| 1.0 | 0.3386 | 0.5000 | 0.5103 | 0.5060 | |||

| Fashion-MNIST | 0.0 | 0.4778 | 0.4657 | 0.4640 | 0.5212 | 0.4974 | 0.5252 |

| 0.25 | 0.5222 | 0.4763 | 0.5180 | 0.4962 | |||

| 0.5 | 0.4951 | 0.4826 | 0.4974 | 0.5258 | |||

| 0.75 | 0.4240 | 0.4774 | 0.4995 | 0.4955 | |||

| 1.0 | 0.3923 | 0.4825 | 0.4672 | 0.5323 | |||

| CIFAR-10 | 0.0 | 0.1347 | 0.1305 | 0.1275 | 0.1437 | 0.1439 | 0.1283 |

| 0.25 | 0.1366 | 0.1275 | 0.1491 | 0.1376 | |||

| 0.5 | 0.1252 | 0.1307 | 0.1316 | 0.1411 | |||

| 0.75 | 0.1303 | 0.1360 | 0.1197 | 0.1464 | |||

| 1.0 | 0.1147 | 0.1341 | 0.1237 | 0.1494 | |||

| STL-10 | 0.0 | 0.1550 | 0.1390 | 0.1533 | 0.1602 | 0.1514 | 0.1505 |

| 0.25 | 0.1361 | 0.1448 | 0.1479 | 0.1527 | |||

| 0.5 | 0.1505 | 0.1377 | 0.1112 | 0.1620 | |||

| 0.75 | 0.1256 | 0.1513 | 0.1001 | 0.1603 | |||

| 1.0 | 0.1328 | 0.1527 | 0.1351 | 0.1553 | |||

| Pendigits | 0.0 | 0.6523 | 0.7079 | 0.7444 | 0.6521 | 0.6523 | 0.6611 |

| 0.25 | 0.6420 | 0.7304 | 0.6535 | 0.6469 | |||

| 0.5 | 0.6285 | 0.6149 | 0.6823 | 0.6349 | |||

| 0.75 | 0.4493 | 0.6228 | 0.6323 | 0.7364 | |||

| 1.0 | 0.5222 | 0.5063 | 0.6772 | 0.5062 | |||

| count | - | - | 9 | 16 | - | 10 | 15 |

4.2 Effectiveness analysis of SDA-FC

To evaluate the effectiveness of SDA-FC, we employ two state-of-the-art federated clustering methods as baselines: k-FED Dennis et al. (2021) and federated fuzzy c-means (FFCM) Stallmann and Wilbik (2022). Additionally, to contextualize the performance of federated clustering relative to centralized clustering, we report numerical results for K-means (KM) and fuzzy c-means (FCM) in centralized scenarios, denoted as KM_central and FCM_central, respectively. In all experiments, the default fuzzy degree in FCM-based methods is set to 1.1.

The clustering performance based on NMI Strehl and Ghosh (2002) and kappa Liu et al. (2019) is shown in Table 3 and Table 4. One can observe that: 1) For the KM-based methods, both metrics consistently indicate the superiority of our proposed method over k-FED in terms of both effectiveness and robustness. For the FCM-based methods, the two metrics give different ranks. Although NMI is the most commonly used metric, recent researchs highlight its limitations, rendering Kappa a more reliable alternative Liu et al. (2019); Yan et al. (2022). Here, we also contribute some new cases to show that NMI fails to accurately reflect the performance of clustering results. As shown in Fig. 2, SDA-FC provides clustering results more aligned with the ground truth, yet NMI ranks it lower (0.6806 for FFCM and 0.6654 for SDA-FC), which is perplexing. In contrast, Kappa produces a more reasonable ranking (0.6523 for FFCM and 0.6611 for SDA-FC). Moreover, on the CIFAR-10 dataset, we establish an oracle baseline with ground-truth centroids (computed using ground-truth labels) in a centralized scenario, which assigns clusters based on cosine distance from local data to the centroids. It is intuitive that KM-based or FCM-based methods cannot surpass the oracle baseline. However, NMI ranks them inversely (0.1009 for the oracle baseline, 0.1051 for FFCM with a fuzzy degree of 2 when p = 0.75, and 0.1059 for FFCM with a fuzzy degree of 2 when p = 1), while Kappa provides more reasonable values (0.1992 for the oracle baseline, 0.1634 for FFCM with a fuzzy degree of 2 when p = 0.75, and 0.1854 for FFCM with a fuzzy degree of 2 when p = 1). Hence, for FCM-based methods, our proposed approach excels in terms of both effectiveness and robustness. 2) There exists a discernable gap between federated clustering and centralized clustering, but the SDA-FC framework mitigates this gap.

4.3 Effectiveness analysis of the global synthetic dataset

While we have demonstrated the effectiveness of the SDA-FC framework in conjunction with KM or FCM, we expect that SDA-FC can serve as a versatile framework for addressing more intricate challenges in federated clustering, including its integration with deep clustering methods. The crux lies in ensuring that the global synthetic dataset generated by SDA-FC is a good approximation of the real one.

For each image dataset, we generate a global synthetic dataset of the same size as the real dataset. We showcase part of the generated images randomly in Fig. 4 and visualize the global data distribution using t-SNE Van der Maaten and Hinton (2008) in Fig. 5. One can see that: 1) Despite instances where objects in generated images are unrecognizable, SDA-FC effectively captures the fundamental data characteristics. 2) The global synthetic datasets and the global real ones exhibit substantial overlap, affirming that the former serves as a good approximation of the latter. 3) The cluster structure is more evident in grayscale image datasets compared to color image datasets. This elucidates the superior clustering performance observed in grayscale datasets, as outlined in Table 4.

4.4 Sensitivity analysis of clustering performance to device failures

In practice, certain client devices may experience disconnections from the server due to factors such as wireless network fluctuations or energy constraints. Consequently, specific data characteristics from the disconnected devices may be lost, potentially affecting clustering performance. This means that the sensitivity analysis of clustering performance to device failures is worth investigating.

To handle such problem, following Li et al. (2020), we simply ignore the failed devices and continue training with the remaining ones. The rate of disconnection among all devices is denoted as the disconnection rate. Our experiments simulate various disconnected scenarios on MNIST by scaling the disconnection rate and run four federated clustering methods on them. As shown in Fig. 3, one can see that: 1) The proposed methods exhibit superior effectiveness and robustness in all considered scenarios. 2) The sensitivity of clustering performance to device failures is positively correlated with the non-IID level . Larger values result in reduced mutual substitutability of data characteristics across clients (one extreme case is that the data across the clients is completely non-IID when ), exacerbating the adverse impact of device failures on clustering performance.

In summary, our findings reveal that: 1) The proposed methods outperform baselines in terms of both effectiveness and robustness. 2) Kappa emerges as a more reliable metric compared to NMI. 3) The global synthetic dataset generated by local GANs serves as a commendable approximation of the global real dataset. 4) Our proposed method demonstrates heightened resilience to device failures. 5) The sensitivity of clustering performance to device failures intensifies with higher heterogeneity levels, as characterized by .

5 Conclusion

In this study, we have introduced SDA-FC, a simple yet effective federated clustering framework with GAN-based data synthesis. SDA-FC demonstrates outstanding performance in terms of effectiveness and robustness, surpassing existing state-of-the-art baselines. Notably, it runs with minimal communication overhead, requiring just one round and supports asynchronous execution. Furthermore, by leveraging a global synthetic dataset, our proposed methods exhibit superior performance in addressing non-IID data challenges and device failures without sharing private data.

Nonetheless, it is essential to acknowledge that SDA-FC, when paired with shallow clustering methods, may face limitations when dealing with complex data types, such as color images. Future research endeavors could explore the integration of SDA-FC with deep clustering methods to tackle these more intricate scenarios. Lastly, our findings underline the importance of considering appropriate evaluation metrics. Recent studies have cast doubts on the reliability of NMI values as indicators of clustering performance. We advocate for the adoption of the Kappa metric as a more robust and accurate alternative for assessing clustering results.

References

- Bezdek et al. [1984] James C Bezdek, Robert Ehrlich, and William Full. Fcm: The fuzzy c-means clustering algorithm. Computers & geosciences, 10(2-3):191–203, 1984.

- Bonawitz et al. [2019] Keith Bonawitz, Hubert Eichner, Wolfgang Grieskamp, Dzmitry Huba, Alex Ingerman, Vladimir Ivanov, Chloe Kiddon, Jakub Konečnỳ, Stefano Mazzocchi, Brendan McMahan, et al. Towards federated learning at scale: System design. Proceedings of Machine Learning and Systems, 1:374–388, 2019.

- Brisimi et al. [2018] Theodora S Brisimi, Ruidi Chen, Theofanie Mela, Alex Olshevsky, Ioannis Ch Paschalidis, and Wei Shi. Federated learning of predictive models from federated electronic health records. International journal of medical informatics, 112:59–67, 2018.

- Chen and Chao [2021] Hong-You Chen and Wei-Lun Chao. On bridging generic and personalized federated learning for image classification. In International Conference on Learning Representations, 2021.

- Chung et al. [2022] Jichan Chung, Kangwook Lee, and Kannan Ramchandran. Federated unsupervised clustering with generative models. In AAAI 2022 International Workshop on Trustable, Verifiable and Auditable Federated Learning, 2022.

- Coates et al. [2011] Adam Coates, Andrew Ng, and Honglak Lee. An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the fourteenth international conference on artificial intelligence and statistics, pages 215–223. JMLR Workshop and Conference Proceedings, 2011.

- Dean et al. [2012] Jeffrey Dean, Greg Corrado, Rajat Monga, Kai Chen, Matthieu Devin, Mark Mao, Marc’aurelio Ranzato, Andrew Senior, Paul Tucker, Ke Yang, et al. Large scale distributed deep networks. Advances in neural information processing systems, 25, 2012.

- Dennis et al. [2021] Don Kurian Dennis, Tian Li, and Virginia Smith. Heterogeneity for the win: One-shot federated clustering. In International Conference on Machine Learning, pages 2611–2620. PMLR, 2021.

- Dwork et al. [2006] Cynthia Dwork, Frank McSherry, Kobbi Nissim, and Adam Smith. Calibrating noise to sensitivity in private data analysis. In Theory of cryptography conference, pages 265–284. Springer, 2006.

- Dwork et al. [2014] Cynthia Dwork, Aaron Roth, et al. The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4):211–407, 2014.

- Dwork [2011] Cynthia Dwork. A firm foundation for private data analysis. Communications of the ACM, 54(1):86–95, 2011.

- Fu et al. [2023] Yuchuan Fu, Changle Li, F Richard Yu, Tom H Luan, and Pincan Zhao. An incentive mechanism of incorporating supervision game for federated learning in autonomous driving. IEEE Transactions on Intelligent Transportation Systems, 2023.

- Geyer et al. [2017] Robin C Geyer, Tassilo Klein, and Moin Nabi. Differentially private federated learning: A client level perspective. arXiv preprint arXiv:1712.07557, 2017.

- Ghosh et al. [2020] Avishek Ghosh, Jichan Chung, Dong Yin, and Kannan Ramchandran. An efficient framework for clustered federated learning. Advances in Neural Information Processing Systems, 33:19586–19597, 2020.

- Goodfellow et al. [2014] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. Advances in neural information processing systems, 27, 2014.

- He et al. [2023] Zaobo He, Lintao Wang, and Zhipeng Cai. Clustered federated learning with adaptive local differential privacy on heterogeneous iot data. IEEE Internet of Things Journal, 2023.

- Hu et al. [2022] Sixu Hu, Yuan Li, Xu Liu, Qinbin Li, Zhaomin Wu, and Bingsheng He. The oarf benchmark suite: Characterization and implications for federated learning systems. ACM Transactions on Intelligent Systems and Technology (TIST), 13(4):1–32, 2022.

- Huang et al. [2013] Junxian Huang, Feng Qian, Yihua Guo, Yuanyuan Zhou, Qiang Xu, Z Morley Mao, Subhabrata Sen, and Oliver Spatscheck. An in-depth study of lte: Effect of network protocol and application behavior on performance. ACM SIGCOMM Computer Communication Review, 43(4):363–374, 2013.

- Keller et al. [2012] Fabian Keller, Emmanuel Muller, and Klemens Bohm. Hics: High contrast subspaces for density-based outlier ranking. In 2012 IEEE 28th international conference on data engineering, pages 1037–1048. IEEE, 2012.

- Kingma and Ba [2014] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Krizhevsky et al. [2009] Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. 2009.

- Li et al. [2020] Tian Li, Anit Kumar Sahu, Ameet Talwalkar, and Virginia Smith. Federated learning: Challenges, methods, and future directions. IEEE Signal Processing Magazine, 37(3):50–60, 2020.

- Li et al. [2022] Qinbin Li, Yiqun Diao, Quan Chen, and Bingsheng He. Federated learning on non-iid data silos: An experimental study. In 2022 IEEE 38th International Conference on Data Engineering (ICDE), pages 965–978. IEEE, 2022.

- Liu et al. [2019] Xin Liu, Hui-Min Cheng, and Zhong-Yuan Zhang. Evaluation of community detection methods. IEEE Transactions on Knowledge and Data Engineering, 32(9):1736–1746, 2019.

- Ma et al. [2022] Xiaodong Ma, Jia Zhu, Zhihao Lin, Shanxuan Chen, and Yangjie Qin. A state-of-the-art survey on solving non-iid data in federated learning. Future Generation Computer Systems, 135:244–258, 2022.

- MacQueen [1967] J MacQueen. Classification and analysis of multivariate observations. In 5th Berkeley Symp. Math. Statist. Probability, pages 281–297, 1967.

- McMahan et al. [2017] Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. Communication-efficient learning of deep networks from decentralized data. In Artificial intelligence and statistics, pages 1273–1282. PMLR, 2017.

- Metz et al. [2017] Luke Metz, Ben Poole, David Pfau, and Jascha Sohl-Dickstein. Unrolled generative adversarial networks. In ICLR (Poster). OpenReview.net, 2017.

- Mukherjee et al. [2019] Sudipto Mukherjee, Himanshu Asnani, Eugene Lin, and Sreeram Kannan. Clustergan: Latent space clustering in generative adversarial networks. In Proceedings of the AAAI conference on artificial intelligence, volume 33, pages 4610–4617, 2019.

- Nguyen et al. [2021] Dinh C Nguyen, Ming Ding, Pubudu N Pathirana, Aruna Seneviratne, Jun Li, and H Vincent Poor. Federated learning for internet of things: A comprehensive survey. IEEE Communications Surveys & Tutorials, 23(3):1622–1658, 2021.

- Nguyen et al. [2022a] Anh Nguyen, Tuong Do, Minh Tran, Binh X Nguyen, Chien Duong, Tu Phan, Erman Tjiputra, and Quang D Tran. Deep federated learning for autonomous driving. In 2022 IEEE Intelligent Vehicles Symposium (IV), pages 1824–1830. IEEE, 2022.

- Nguyen et al. [2022b] Dinh C Nguyen, Quoc-Viet Pham, Pubudu N Pathirana, Ming Ding, Aruna Seneviratne, Zihuai Lin, Octavia Dobre, and Won-Joo Hwang. Federated learning for smart healthcare: A survey. ACM Computing Surveys (CSUR), 55(3):1–37, 2022.

- Rasha et al. [2023] Al-Huthaifi Rasha, Tianrui Li, Wei Huang, Jin Gu, and Chongshou Li. Federated learning in smart cities: Privacy and security survey. Information Sciences, 2023.

- Stallmann and Wilbik [2022] Morris Stallmann and Anna Wilbik. Towards federated clustering: A federated fuzzy -means algorithm (ffcm). In AAAI 2022 International Workshop on Trustable, Verifiable and Auditable Federated Learning, 2022.

- Strehl and Ghosh [2002] Alexander Strehl and Joydeep Ghosh. Cluster ensembles—a knowledge reuse framework for combining multiple partitions. Journal of machine learning research, 3(Dec):583–617, 2002.

- Van Berkel [2009] CH Van Berkel. Multi-core for mobile phones. In 2009 Design, Automation & Test in Europe Conference & Exhibition, pages 1260–1265. IEEE, 2009.

- Van der Maaten and Hinton [2008] Laurens Van der Maaten and Geoffrey Hinton. Visualizing data using t-sne. Journal of machine learning research, 9(11), 2008.

- Wang et al. [2019] Xiaofei Wang, Yiwen Han, Chenyang Wang, Qiyang Zhao, Xu Chen, and Min Chen. In-edge ai: Intelligentizing mobile edge computing, caching and communication by federated learning. IEEE Network, 33(5):156–165, 2019.

- Wang et al. [2020] Hao Wang, Zakhary Kaplan, Di Niu, and Baochun Li. Optimizing federated learning on non-iid data with reinforcement learning. In IEEE INFOCOM 2020-IEEE Conference on Computer Communications, pages 1698–1707. IEEE, 2020.

- Wei et al. [2020] Kang Wei, Jun Li, Ming Ding, Chuan Ma, Howard H Yang, Farhad Farokhi, Shi Jin, Tony QS Quek, and H Vincent Poor. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Transactions on Information Forensics and Security, 15:3454–3469, 2020.

- Yan et al. [2022] Jie Yan, Xin Liu, Ji Qi, Tao You, and Zhong-Yuan Zhang. Selective clustering ensemble based on kappa and f-score. arXiv preprint arXiv:2204.11062, 2022.

- Yu et al. [2020] Felix Yu, Ankit Singh Rawat, Aditya Menon, and Sanjiv Kumar. Federated learning with only positive labels. In International Conference on Machine Learning, pages 10946–10956. PMLR, 2020.

- Zec et al. [2022] Edvin Listo Zec, Ebba Ekblom, Martin Willbo, Olof Mogren, and Sarunas Girdzijauskas. Decentralized adaptive clustering of deep nets is beneficial for client collaboration. arXiv preprint arXiv:2206.08839, 2022.

- Zhang et al. [2021] Peiying Zhang, Chao Wang, Chunxiao Jiang, and Zhu Han. Deep reinforcement learning assisted federated learning algorithm for data management of iiot. IEEE Transactions on Industrial Informatics, 17(12):8475–8484, 2021.

- Zhao et al. [2020] Yang Zhao, Jun Zhao, Mengmeng Yang, Teng Wang, Ning Wang, Lingjuan Lyu, Dusit Niyato, and Kwok-Yan Lam. Local differential privacy-based federated learning for internet of things. IEEE Internet of Things Journal, 8(11):8836–8853, 2020.

- Zhu et al. [2019] Ligeng Zhu, Zhijian Liu, and Song Han. Deep leakage from gradients. Advances in neural information processing systems, 32, 2019.

- Zhu et al. [2021] Hangyu Zhu, Jinjin Xu, Shiqing Liu, and Yaochu Jin. Federated learning on non-iid data: A survey. Neurocomputing, 465:371–390, 2021.