Feature Whitening via Gradient Transformation for Improved Convergence

Abstract

Feature whitening [16] is a known technique for speeding up the training of deep neural networks (DNNs). Under certain assumptions, whitening the activations [7] reduces the Fisher information matrix to the identity matrix, in which case stochastic gradient descent is equivalent to the faster natural gradient descent. However, the method is not commonly used to date because of the additional complexity of transforming the layer inputs and their corresponding gradients in the forward and backward propagation, and of repeatedly computing the eigenvalue decomposition (EVD).

In this work, we address the complexity drawbacks of feature whitening. Our contribution is twofold. First, we derive an equivalent method, which replaces the sample transformations by a transformation of the weight gradients, applied to every batch of samples. The complexity is reduced by a factor of , where denotes the feature dimension of the layer output. As the batch size increases with distributed training, the benefit of using the proposed method becomes more compelling. Second, motivated by the theoretical relation between the condition number of the sample covariance matrix and the convergence speed, we derive an alternative sub-optimal algorithm which recursively reduces the condition number of the latter matrix. Compared to EVD, complexity is reduced by a factor of the input feature dimension . We demonstrate the proposed algorithms with ResNet-based networks for image classification on the CIFAR and ImageNet datasets. Parallelizing the proposed algorithms is straightforward, and we implement a distributed version of them. Our experiments show improved convergence in terms of both speed and attained accuracy.

1 Introduction

Feature whitening is a known method for speeding up training [15, 19, 18, 16]. It aims to decorrelate the inputs to the network layers, making it possible to match the learning rate in each eigenspace to its optimum value, which is inversely proportional to the corresponding eigenvalue. Due to the additional complexity resulting from transforming the layer inputs and their gradients in the forward- and backward-propagation stages, and from repeatedly computing the EVD, it is rarely used. The celebrated batch norm (BN) method [12] implements a degenerate version of feature whitening, aiming to standardize the features without decorrelating them. Although sub-optimal, BN was found to speed up convergence [5] and has become common practice in designing deep neural networks thanks to its appealingly low complexity. In [7] it was shown that when the features become white, the Fisher information matrix (FIM) reduces to an identity matrix and simple stochastic gradient descent (SGD) optimization coincides with natural gradient descent (NGD) optimization [2], which is more suitable for difficult optimization tasks [17]. Some computations can be saved by assuming that the feature statistics vary slowly during training, which allows the principal component analysis (PCA)-based whitening transformation to be updated once per block of samples, amortizing the complexity of the EVD computation. Combining BN with feature whitening has been found useful for limiting the variation of feature statistics within blocks. Instability of feature whitening was reported in [12] and was attributed to treating the transformation as constant and independent of the data during the back-propagation stage.
In [11] the stability issue was investigated, and it was found that constructing the whitening matrix based on zero-phase component analysis (ZCA) [4, 13] is more stable than constructing it based on PCA, as it maintains a lower distortion compared to the original samples and avoids the stochastic axis swapping problem. Furthermore, the derivatives with respect to the whitening transformation were computed and incorporated in the back-propagation stage, which further improved convergence speed at the expense of increased complexity.

In this work, we consider the feature whitening method [7] using ZCA instead of PCA and derive two reduced complexity methods. The first method is equivalent to the aforementioned method, and yet, computations are saved by transforming the weight gradients on every batch instead of whitening the activations and their gradients at every sample. The second method replaces the EVD computation with an approximate recursive algorithm which conditions the activations’ covariance matrix one subspace at a time. The structure of the paper is as follows: In Sec. 2 we present the feature whitening method of [7]. Then in Secs. 3 and 4 we derive the proposed reduced complexity methods. The complexity of the proposed methods is analyzed in Sec. 5. We evaluate the proposed methods with various datasets and models in Sec. 6 and conclude the work in Sec. 7.

2 Direct feature whitening

We describe the feature whitening method as presented in [7], where the transformation is constructed using ZCA as in [11], and refer to it as the direct feature whitening method. Consider the input of the -th layer of a DNN, denoted by an matrix , where denotes the input index, is the number of columns corresponding to the spatial dimension and is the number of rows, corresponding to the feature dimension. This formulation is generic, since any spatial dimension can be vectorized (using the operator) into a single dimension. The parameters are updated with SGD, which has been shown to be equivalent to the NGD optimizer when the features are white and under certain assumptions [7]. For brevity of notation, we omit the -th layer index hereafter, unless explicitly stated.

Denote the -th spatial element of the input, for , as , and define the input matrix

| (1) |

During the training procedure, as the network adapts, the mean and covariance of vary as well and correspondingly the whitening matrix has to be adapted too. A common method for continuously tracking the moments is to use the empirical sample-mean and sample-covariance-matrix, computed in blocks of batches, each consisting of samples, and recursively average them between blocks. The sample indices of the -th block are . The varying mean and covariance are estimated at block index by:

| (2) | ||||

| (3) |

where is a recursive-averaging factor between blocks. The mean and covariance are initialized in the first block () to:

| (4) | ||||

| (5) |

Assuming that the first and second order moments vary slowly and that they are similar across space, we can reduce the estimation complexity by averaging over a subset of the samples and of spatial elements. Storing the moments requires additional memory elements.
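The block-wise tracking of the moments described above can be sketched as follows. This is a minimal NumPy sketch and not the authors' implementation: the function name `update_moments`, the name `alpha` for the recursive-averaging factor, and the convention that `alpha` weights the previous estimate are all assumptions, and the samples and spatial elements of a block are assumed to be flattened into the columns of one array.

```python
import numpy as np

def update_moments(x_block, mean_prev=None, cov_prev=None, alpha=0.9):
    """Update running mean and covariance from one block of samples.

    x_block: array of shape (M, T) with M features and T columns gathered
             over the samples and spatial positions of the block (assumed layout).
    alpha:   assumed recursive-averaging factor applied to the previous estimate.
    """
    mean_blk = x_block.mean(axis=1)
    centered = x_block - mean_blk[:, None]
    cov_blk = centered @ centered.T / x_block.shape[1]
    if mean_prev is None or cov_prev is None:
        # First block: initialize with the empirical block moments.
        return mean_blk, cov_blk
    # Later blocks: recursive averaging between the old and new estimates.
    mean_new = alpha * mean_prev + (1.0 - alpha) * mean_blk
    cov_new = alpha * cov_prev + (1.0 - alpha) * cov_blk
    return mean_new, cov_new
```

Averaging over only a subset of samples or spatial positions, as suggested above, amounts to passing fewer columns in `x_block`.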

We turn to the construction of the whitening matrix at the -th block. A whitening matrix satisfies the following condition:

| (6) |

where is an identity matrix. The whitening matrix is not unique and can be constructed using several methods [8]. Some methods are based on the EVD of , defined as:

| (7) |

where is a dimensional eigenvectors matrix,

| (8) |

is a diagonal dimensional eigenvalues matrix and denotes a diagonal matrix with the argument vector placed on the diagonal. Without loss of generality we assume that the eigenvalues are sorted in decreasing order, such that . Given the latter decomposition, the ZCA-based whitening matrix can be constructed by:

| (9) |

where , the gains vector is defined as

| (10) |

and limits the minimal denominator for numerical stability.
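As an illustration of the ZCA construction, the following NumPy sketch builds a whitening matrix from the EVD of a covariance estimate and checks the whitening condition on a toy matrix. The function name `zca_whitening_matrix`, the parameter name `eps`, and the specific gain rule (inverse square root of the eigenvalue floored at `eps`) are assumptions made for this example; the exact form of the stabilized gain in (10) may differ.

```python
import numpy as np

def zca_whitening_matrix(cov, eps=1e-5):
    """Build a ZCA whitening matrix from a symmetric covariance estimate."""
    # eigh returns eigenvalues in ascending order; the order does not affect ZCA.
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Assumed gain rule: inverse square root with the eigenvalue floored at eps.
    gains = 1.0 / np.sqrt(np.maximum(eigvals, eps))
    # V diag(g) V^T: scale the eigenvector columns, then rotate back.
    return (eigvecs * gains) @ eigvecs.T

rng = np.random.default_rng(0)
a = rng.standard_normal((5, 5))
cov = a @ a.T + 0.1 * np.eye(5)      # well-conditioned toy covariance
p = zca_whitening_matrix(cov)
whitened_cov = p @ cov @ p.T         # should be close to the identity
```

Because the eigenvectors are applied on both sides, the resulting matrix is symmetric, which is the property that distinguishes ZCA from PCA whitening.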

Denote the output of the direct feature whitening transformation in the forward-propagation stage of the -th block as:

| (11) |

Note that -th block outputs are constructed using the -th transformation. The whitening transformation is initialized as:

| (12) | ||||

| (13) |

In the back propagation stage, propagating the gradient through the whitening transformation from (11) requires computing:

| (14) |

where denotes the loss-function that is being optimized. Note that we adopt the denominator layout notation convention when considering derivatives with respect to vectors or matrices.

Denote the dimensional output of the -th layer as , where and respectively denote the feature and space dimensions. As in [7], we limit the discussion to whitening the input of linear layers. In this case the -th layer can be formulated as

| (15) |

for , where the trained parameters of the layer are dimensional weight matrices , for and , and a dimensional bias vector . Convolution and fully-connected layers can be formulated as special cases of this generic formulation. Substituting the transformed input (11) into (15) yields:

| (16) |

Considering (15), the gradient with respect to the weights and bias in the -th batch is computed by:

| (17) | ||||

| (18) |

for and . The parameters are updated on every batch according to the SGD optimization rule:

| (19) | ||||

| (20) |

where denotes the assignment operator and denotes the learning rate.

3 Gradient-based feature whitening

We propose the gradient-based feature whitening method in Sec. 3.1 and prove its equivalence to the direct feature whitening method from the previous section. In Sec. 3.2 we present some practical considerations and supplements to the basic method.

3.1 Method

The forward propagation stage of the method is given by:

| (21) |

where for and and are the trained parameters. Unlike in [7], is not split into two parts, and is applied as a single transformation, conceptually including both the whitening and trained transformations. The activations and their gradients in the forward and backward propagation are computed normally, and do not require another whitening transformation as in [7]. The whitening matrix is computed as in (9); however, it is only used in the back-propagation stage to construct the weight gradient transformation, such that the weights are updated as in the direct whitening method.

The equivalence is proven by induction. Without loss of generality, let us assume that (21) is equivalent to the forward propagation of the direct whitening in (16), and that the corresponding whitening transformations are identical, i.e.:

| (22) |

for . It follows that:

| (23) | ||||

| (24) |

We consider the relations between the parameter gradients in the direct whitening method, denoted and , and their gradient-based whitening method counterparts, denoted and . Considering (23), (24) and using matrix derivative rules, we obtain the relations:

| (25) | ||||

| (26) |

Finally, following the SGD update rules in (19) and (20) and substituting (23) and (24), we derive the update rules of the gradient-based whitening as:

| (27) | ||||

| (28) | ||||

| (29) | ||||

| (30) |

Note that these update rules satisfy that the updated parameters in the gradient-based whitening method are equal to the direct whitening method counterparts, and therefore the varying networks are identical and the methods are equivalent by construction.
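The equivalence can be checked numerically on a toy linear layer. The sketch below is an illustration under simplifying assumptions, not the authors' code: inputs are treated as zero-mean (so the mean and bias terms are dropped), the loss is a mean squared error, the whitening matrix is held fixed, and all variable names are hypothetical. Under these assumptions the chain rule gives the direct-method weight gradient as the ordinary gradient right-multiplied by the transposed whitening matrix, so the ordinary weight gradient is right-multiplied by the product of the transposed whitening matrix and the whitening matrix.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, batch, lr = 6, 3, 32, 0.05

# Toy data, targets and a fixed symmetric (ZCA-like) whitening matrix p.
x = rng.standard_normal((m, batch))
t = rng.standard_normal((n, batch))
a = rng.standard_normal((m, m))
cov = a @ a.T / m + 0.1 * np.eye(m)
vals, vecs = np.linalg.eigh(cov)
p = (vecs / np.sqrt(vals)) @ vecs.T

w_direct = rng.standard_normal((n, m))   # acts on whitened inputs p @ x
w_grad = w_direct @ p                    # single matrix acting on raw inputs x

for _ in range(10):
    # Direct whitening: whiten the activations, then plain SGD on w_direct.
    xw = p @ x
    err_d = w_direct @ xw - t
    w_direct -= lr * (err_d @ xw.T) / batch

    # Gradient-based whitening: ordinary forward pass, transformed gradient.
    err_g = w_grad @ x - t
    grad = (err_g @ x.T) / batch
    w_grad -= lr * grad @ (p.T @ p)

# Largest difference between the two effective weight matrices.
max_gap = np.abs(w_direct @ p - w_grad).max()
```

In this sketch the activations are transformed once per sample in the direct branch, whereas the gradient-based branch applies a single matrix product to the weight gradient per batch.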

Special treatment is required for every -th block, when the empirical mean is updated from to . In these cases, the bias parameter is updated to compensate for the update and maintain the overall network unchanged after update. Considering (21) and respectively denoting by and the bias prior to and after the update, we would like to maintain the relation . The bias compensation rule is therefore defined as:

| (31) |

This concept is similar to [7]. However, there is no need to compensate for changes in the whitening matrix , as they are only manifested in the gradients and not explicitly applied to the activations.
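The bias compensation can be illustrated with a short NumPy sketch. It assumes that the layer has the form of a weight matrix applied to the mean-subtracted input plus a bias; this form, the function name `compensate_bias`, and the variable names are assumptions for illustration, since the exact expression of (21) is not reproduced here.

```python
import numpy as np

def compensate_bias(weight, bias_old, mean_old, mean_new):
    """Return the bias that keeps W (x - mean) + b unchanged after a mean update."""
    return bias_old + weight @ (mean_new - mean_old)

rng = np.random.default_rng(0)
w = rng.standard_normal((3, 5))
b_old = rng.standard_normal(3)
mu_old = rng.standard_normal(5)
mu_new = mu_old + 0.1 * rng.standard_normal(5)
x = rng.standard_normal(5)

b_new = compensate_bias(w, b_old, mu_old, mu_new)
y_before = w @ (x - mu_old) + b_old      # layer output before the mean update
y_after = w @ (x - mu_new) + b_new       # layer output after update and compensation
```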

3.2 Practical considerations

We present some modifications to the basic method from the previous section, which we found to improve performance and robustness in practice. In the general case the dimensional feature subspace can be split into a signal subspace which corresponds to the largest eigenvalues and holds most of the energy and a noise subspace which corresponds to the smallest eigenvalues. We conjecture that the noise subspace does not contain information that is instrumental to further reduction of the loss function. Consequently, amplifying the noise components might have a destructive effect and hamper the convergence process. We propose to adopt the Akaike information criterion (AIC) [1] for estimating the rank of the signal subspace :

| (32) | ||||

| (33) |

where the latter are the normalized eigenvalues for . Note that in the extreme case of identical eigenvalues, we obtain . In the other extreme case where the samples are completely correlated, i.e., and for , we obtain .

Instead of forcing the variance of the whitened signals to be , we maintain the average variance at the input, and limit the maximal gain to . To summarize, the gain in (10) is replaced by:

| (34) |

for and otherwise. Finally, we suggest using a recursively smoothed version of , denoted as , initialized by and updated every block according to:

| (35) |

where is a recursive averaging factor.

4 Recursive feature whitening

Haykin [9] analyzed the stability and convergence of the gradient descent algorithm for Gaussian signals and a linear system. Like LeCun et al. [16], he concluded that the convergence time is proportional to the condition number of the input covariance matrix, i.e., convergence is faster when the eigenvalue spread is reduced. In the limit, when the condition number equals 1, the convergence time is minimal and the corresponding input covariance matrix is white.
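The dependence of convergence time on the condition number can be seen in a toy deterministic example. The sketch below is not the analysis of [9]; it simply runs fixed-step gradient descent on a quadratic cost with a diagonal covariance matrix, using the classical optimal fixed step size, and counts the iterations needed to reach a tolerance. The function name `gd_iterations` and the chosen eigenvalues are illustrative assumptions.

```python
import numpy as np

def gd_iterations(eigvals, tol=1e-6, max_iter=100_000):
    """Count gradient-descent steps needed to minimize 0.5 * w^T C w.

    C is diagonal with the given eigenvalues; the step size is the classical
    optimum 2 / (lambda_max + lambda_min) for a fixed-step method.
    """
    eigvals = np.asarray(eigvals, dtype=float)
    step = 2.0 / (eigvals.max() + eigvals.min())
    w = np.ones_like(eigvals)
    for k in range(1, max_iter + 1):
        w = w - step * eigvals * w      # gradient of the quadratic is C w
        if np.linalg.norm(w) < tol:
            return k
    return max_iter

iters_white = gd_iterations([1.0, 1.0, 1.0])       # condition number 1
iters_mild = gd_iterations([1.0, 3.0, 10.0])       # condition number 10
iters_poor = gd_iterations([1.0, 30.0, 100.0])     # condition number 100
```

With a white covariance matrix the optimal step reaches the minimum in a single iteration, while the iteration count grows as the eigenvalue spread increases.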

Motivated by the high computational complexity of EVD, and by the relation between the convergence time and the condition number of the input covariance matrix, we propose a recursive approach for reducing the condition number. The idea of the recursive approach is to monitor the covariance matrix of the transformed input , computed similarly to (3), and in every block identify a high power subspace. Then, construct a simple transformation step to reduce its power and append it to the previous whitening transformation, thereby reducing the condition number of in the following block. The method for constructing the transformation is described in Sec. 4.1. For estimating the high power subspace, we refer to [3] and adopt the procedure for estimating the principal eigenvector, which we present in Sec. 4.2.

4.1 Method

Let denote a high power subspace of , containing more power than the average eigenvalue. The vector is normalized, such that . The power contained in its subspace is denoted and is computed by:

| (36) |

Define the recursive average power of the -th input feature as:

| (37) |

and define the average over all input features as:

| (38) |

We define the transformation-step which reduces the power in the subspace of as a summation of two projection matrices:

| (39) | ||||

| (40) | ||||

| (41) |

with being a parameter controlling the power reduction. Note that the transformation step reduces the power of the high power subspace to , whereas all orthogonal subspaces remain unchanged.
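A transformation step of this kind can be sketched in NumPy as a sum of two scaled projections: one onto the complement of the high-power direction, left untouched, and one onto the direction itself, scaled by a gain. The function name `transformation_step`, the choice of the average eigenvalue as target power, and the use of the principal eigenvector as the high-power direction are assumptions for illustration; the exact gain of (39) to (41) depends on the power-reduction parameter and is not reproduced here.

```python
import numpy as np

def transformation_step(cov, u, target_power):
    """Rescale the power along unit vector u to target_power, leaving the
    orthogonal complement untouched."""
    power = float(u @ cov @ u)                 # power contained in the subspace of u
    gain = np.sqrt(target_power / power)
    proj = np.outer(u, u)                      # projection onto span{u}
    eye = np.eye(cov.shape[0])
    return (eye - proj) + gain * proj          # sum of two scaled projections

rng = np.random.default_rng(0)
a = rng.standard_normal((5, 5))
cov = a @ a.T
vals, vecs = np.linalg.eigh(cov)
u = vecs[:, -1]                                # principal eigenvector as the high-power direction
target = vals.mean()                           # assumed target: the average eigenvalue
t_step = transformation_step(cov, u, target)
cov_new = t_step @ cov @ t_step.T              # covariance after applying the step
```

In this example the largest eigenvalue is pulled down to the average while the others are unchanged, so the condition number of the transformed covariance decreases.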

Due to variations of the feature covariance matrix, resulting from variations of the network parameters, it is necessary to introduce a forgetting procedure which reverts the transformation in subspaces which no longer contain high power. The procedure is based on leaking a fraction of the input to the whitened input . Given the previous block whitening transformation and the recursive transformation-step (39), the update rule for the whitening transformation is defined as:

| (42) |

where is the leakage factor and

| (43) |

Considering the transformation step (39) and (42), the matrix from (30) can be efficiently computed as:

| (44) |

requiring multiply and accumulate (MAC) operations instead of . Similarly to (35) in the direct feature whitening method, the weight gradients are transformed by an inter-block smoothed version of (44).
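The source of the saving can be illustrated for a single transformation step in isolation. The sketch below does not reproduce (44): it ignores the leakage term and any previously accumulated steps, and the function and variable names are hypothetical. It only shows that right-multiplying a weight gradient by a matrix of the form identity plus a rank-one term needs one matrix-vector product and one outer product, instead of a full matrix-matrix product.

```python
import numpy as np

def transform_gradient_rank_one(grad, u, gain):
    """Right-multiply grad by T^T T for T = I + (gain - 1) u u^T, with |u| = 1,
    without forming any M x M matrix."""
    # For a unit vector u, T^T T = I + (gain^2 - 1) u u^T.
    coeff = gain ** 2 - 1.0
    return grad + coeff * np.outer(grad @ u, u)

rng = np.random.default_rng(0)
n_out, m_in, gain = 4, 7, 0.3
grad = rng.standard_normal((n_out, m_in))
u = rng.standard_normal(m_in)
u /= np.linalg.norm(u)

t_step = np.eye(m_in) + (gain - 1.0) * np.outer(u, u)
dense = grad @ (t_step.T @ t_step)             # generic matrix-matrix product
fast = transform_gradient_rank_one(grad, u, gain)
```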

4.2 High power subspace estimation

We adopt the procedure from [3] for estimating the principal eigenvector, in the high signal-to-noise-ratio case. The block index is omitted for brevity.

Let be an vector comprised of the norms of the columns of , i.e.:

| (45) |

for where is a selection vector, used to pick the -th column of . Let

| (46) |

be the index of the column with the highest norm. The following method aligns the other columns with the -th column, and the high power subspace is computed as the normalized average of the aligned columns. Define the inner product between the -th column and every column in as

| (47) |

Next, we define a set of column indices . An index is included in the set if the correlation between its corresponding column and the -th column is sufficiently large, i.e.:

| (48) |

where and are relative and absolute coherence thresholds. Finally, we align the columns of whose indices are in , average them and normalize to obtain the high power subspace :

| (49) | ||||

| (50) |
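The steps of this section can be sketched in NumPy as follows. This is an illustrative reading of the procedure rather than the algorithm of [3]: the function name `estimate_high_power_subspace`, the threshold names `rel_thresh` and `abs_thresh`, the exact form of the selection rule, and the use of a sign flip for the alignment are all assumptions.

```python
import numpy as np

def estimate_high_power_subspace(cov, rel_thresh=0.1, abs_thresh=1e-12):
    """Estimate a dominant direction of cov by aligning and averaging columns."""
    norms = np.linalg.norm(cov, axis=0)        # norm of every column
    i_max = int(np.argmax(norms))              # column with the highest norm
    inner = cov.T @ cov[:, i_max]              # inner products with that column
    # Assumed selection rule: keep columns whose inner product is large both
    # relative to the reference column's own energy and in absolute terms.
    keep = (np.abs(inner) >= rel_thresh * inner[i_max]) & (np.abs(inner) >= abs_thresh)
    signs = np.sign(inner[keep])               # flip columns to point the same way
    avg = (cov[:, keep] * signs).mean(axis=1)  # average of the aligned columns
    return avg / np.linalg.norm(avg)

rng = np.random.default_rng(0)
u_true = rng.standard_normal(8)
u_true /= np.linalg.norm(u_true)
cov = 10.0 * np.outer(u_true, u_true) + 0.01 * np.eye(8)   # one dominant direction
u_hat = estimate_high_power_subspace(cov)
alignment = abs(u_hat @ u_true)                # close to 1 when the estimate is good
```

The toy covariance has a single strong direction plus a small white floor, which matches the high signal-to-noise-ratio setting assumed by the procedure.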

5 Complexity analysis

The computational complexity of the direct feature whitening from Sec. 2 is comprised of: 1) Computing the transformation at every block based on EVD, requiring MACs per sample; 2) Transforming the input in the forward propagation stage, requiring MACs per sample; and 3) Transforming the activation gradients in the backward propagation stage, requiring MACs per sample. A total of MACs per sample are required.

The gradient-based feature whitening in Sec. 3 replaces the transformation of the activations and their gradients at each sample with a transformation of the weight gradients at each batch, requiring MACs per sample. The computational complexity in the forward and backward propagation is therefore reduced by a factor of , which becomes lower with the tendency of the batch size to increase when training is performed in parallel over multiple machines. In the case that , one could apply the direct whitening method.

In the recursive gradient-based whitening method in Sec. 4, computing the EVD is replaced by recursively computing a high power subspace and updating the transformation. Also, by leveraging the structure of the recursive transformation, the gradient transformation is efficiently computed by MACs according to (44) instead of in the generic matrix multiplication case. The computational complexity of constructing the whitening transformation is reduced by a factor .

Note that we neglect the complexity of tracking the moments, which is identical in all methods. This is a reasonable assumption given there is a low variability of the statistics over consecutive activations and different space elements.

6 Experimental study

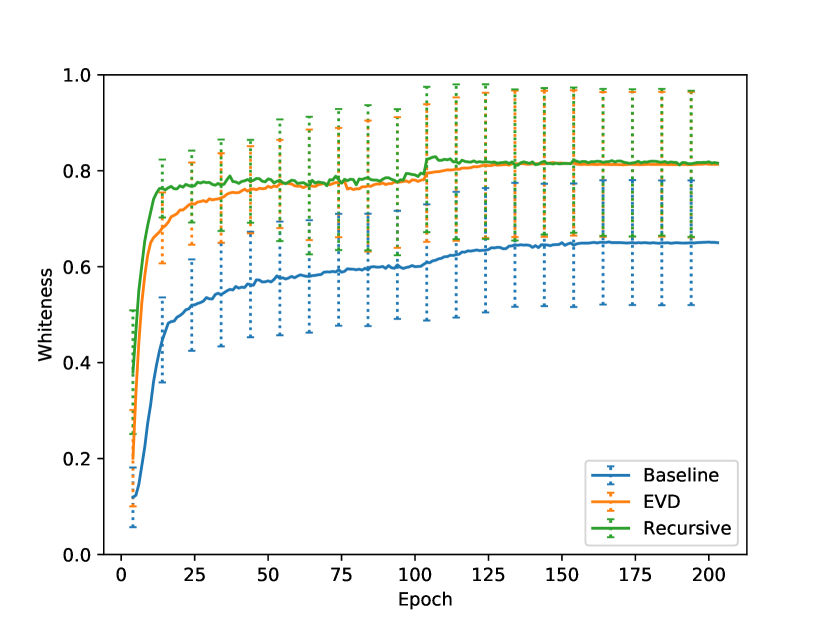

We incorporate the proposed methods into the ResNet-110 and ResNet-50 models [10] and evaluate their convergence when training on the CIFAR [14] and ImageNet [6] datasets with and classes, respectively. For each model we compare three methods: the original model, denoted as baseline; the EVD gradient-based feature whitening method, denoted as EVD; and the recursive feature whitening method, denoted as recursive. In models incorporating feature whitening we place a whitening layer prior to the convolution layers at each basic block (i.e., in ResNet-110 and ResNet-50 we respectively add and whitening layers). Training on CIFAR consists of epochs, where the learning rate is initialized to , and is reduced to and at epochs and , respectively. The SGD rule is applied with a momentum of and weight decay of . Training on ImageNet consists of epochs, where the learning rate is initialized to , and is reduced to and at epochs and , respectively. The SGD rule is applied with a momentum of and weight decay of . The hyperparameters of the EVD-based feature whitening are , , and . The hyperparameters of the recursive feature whitening are , , , , , and . To assess the effectiveness of feature whitening, we evaluate the classification accuracy and examine the normalized rank and whiteness of the covariance matrix of the whitened features. The normalized rank is defined as , where is estimated using AIC. The whiteness of the covariance matrix measures how close it is to being diagonal, and is defined as

| (51) |

where in the extreme case of a diagonal we get , and in the other extreme case of being completely correlated we get . We average the and over all layers. The testing accuracy, the average normalized rank and the average whiteness of the feature covariance for ResNet-110 and ResNet-50 are respectively depicted in Figs. 1(a), 1(b), 1(c) and Figs. 2(a), 2(b), 2(c). We capture the variability of the normalized rank and whiteness across different whitening layers, and also depict its standard-error boundaries. As expected, the normalized rank in Figs. 1(b), 2(b) and the whiteness in Figs. 1(c), 2(c) show that the whitening methods obtain a consistently higher rank and higher whiteness than the baseline. Considering the accuracy figures, the whitening methods converge faster, and are more stable and less noisy. The converged accuracy of the baseline, EVD and recursive whitening methods is respectively , and for ResNet-110 and , and for ResNet-50. That is, the whitening methods are on par with or slightly better than the baseline method.

7 Conclusion

Two novel methods for whitening the features, named EVD and recursive gradient-based feature whitening, have been proposed. The methods offer reduced complexity compared to the direct feature whitening method [7], by applying the transformation to the weight gradients instead of to the activations and their gradients. The recursive method further saves computations by replacing the EVD-based transformation with a recursive transformation, updated in steps, treating only one subspace per step and designed to gradually reduce the condition number of the feature covariance matrix. The proposed methods are applied to ResNet-110 and ResNet-50 and obtain state-of-the-art convergence in terms of speed, stability and accuracy.

References

- [1] Hirotugu Akaike. A new look at the statistical model identification. IEEE transactions on automatic control, 19(6):716–723, 1974.

- [2] Shun-Ichi Amari. Natural gradient works efficiently in learning. Neural computation, 10(2):251–276, 1998.

- [3] Anna Barnov, Vered Bar Bracha, and Shmulik Markovich-Golan. QRD based MVDR beamforming for fast tracking of speech and noise dynamics. In 2017 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), pages 369–373. IEEE, 2017.

- [4] Anthony J Bell and Terrence J Sejnowski. The “independent components” of natural scenes are edge filters. Vision research, 37(23):3327–3338, 1997.

- [5] Nils Bjorck, Carla P Gomes, Bart Selman, and Kilian Q Weinberger. Understanding batch normalization. In Advances in Neural Information Processing Systems, pages 7694–7705, 2018.

- [6] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei. ImageNet: A Large-Scale Hierarchical Image Database. In CVPR09, 2009.

- [7] Guillaume Desjardins, Karen Simonyan, Razvan Pascanu, et al. Natural neural networks. In Advances in Neural Information Processing Systems, pages 2071–2079, 2015.

- [8] Gene H Golub and Charles F Van Loan. Matrix computations, volume 3. JHU press, 2012.

- [9] Simon S Haykin. Adaptive filter theory. Pearson Education India, 2005.

- [10] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [11] Lei Huang, Dawei Yang, Bo Lang, and Jia Deng. Decorrelated batch normalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 791–800, 2018.

- [12] Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167, 2015.

- [13] Agnan Kessy, Alex Lewin, and Korbinian Strimmer. Optimal whitening and decorrelation. The American Statistician, 72(4):309–314, 2018.

- [14] Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. 2009.

- [15] Yann Le Cun, Ido Kanter, and Sara A Solla. Eigenvalues of covariance matrices: Application to neural-network learning. Physical Review Letters, 66(18):2396, 1991.

- [16] Yann A LeCun, Léon Bottou, Genevieve B Orr, and Klaus-Robert Müller. Efficient backprop. In Neural networks: Tricks of the trade, pages 9–48. Springer, 2012.

- [17] Daniel Povey, Xiaohui Zhang, and Sanjeev Khudanpur. Parallel training of deep neural networks with natural gradient and parameter averaging. arXiv preprint arXiv:1410.7455, 2014.

- [18] Tapani Raiko, Harri Valpola, and Yann LeCun. Deep learning made easier by linear transformations in perceptrons. In Artificial intelligence and statistics, pages 924–932, 2012.

- [19] Simon Wiesler and Hermann Ney. A convergence analysis of log-linear training. In Advances in Neural Information Processing Systems, pages 657–665, 2011.