Feature-based Federated Transfer Learning: Communication Efficiency, Robustness and Privacy

Abstract

In this paper, we propose feature-based federated transfer learning as a novel approach to improve communication efficiency by reducing the uplink payload by multiple orders of magnitude compared to that of existing approaches in federated learning and federated transfer learning. Specifically, in the proposed feature-based federated learning, we design the extracted features and outputs to be uploaded instead of parameter updates. For this distributed learning model, we determine the required payload and provide comparisons with the existing schemes. Subsequently, we analyze the robustness of feature-based federated transfer learning against packet loss, data insufficiency, and quantization. Finally, we address privacy considerations by defining and analyzing label privacy leakage and feature privacy leakage, and investigating mitigating approaches. For all aforementioned analyses, we evaluate the performance of the proposed learning scheme via experiments on an image classification task and a natural language processing task to demonstrate its effectiveness. 111Code implementation available at: https://github.com/wfwf10/Feature-based-Federated-Transfer-Learning.

Index Terms:

Federated learning, transfer learning, communication efficiency, robustness, privacyI Introduction

Federated learning (FL) is a form of distributed learning in which only model parameters are exchanged while datasets are kept local with the goal to maintain data privacy [1]. Typically, in FL, a central server, known as the parameter server (PS), coordinates the collaborative training of a deep neural network (DNN) model [2] by aggregating updates on the weights and biases from multiple participating devices/clients. The widespread use of mobile phones and tablets with sufficient computational power and wireless communication capability enables FL in a wide range of applications such as speech recognition and image classification. Internet of Things (IoT) with large number of devices/sensors may generate even larger amount of data while casting further constraints on the computational power and transmission power [3]. With these, FL has gained widespread attention from both academic and industrial communities, resulting in a rapid increase in research on FL techniques, such as federated averaging (FedAvg) [4], federated transfer learning (FTL) [5, 6], and FL with differential privacy (DP) [7]. Among them, model-based FTL stands out as a particularly efficient scheme, because it transfers a well-trained source model into the target task of interest where samples may have different input and output spaces, and thus FTL requires fewer training samples and shortens the training process [8, 9, 10, 11]. Especially, with the availability of open-source big data repositories and deep learning models [12, 13], transfer learning (TL) has become an attractive solution for various applications, such as text sentiment classification [14, 15, 16], image classification [17, 18, 19, 20, 21, 22], human activity classification [23, 24, 25], software defect classification [26, 27], and multi-language text classification [28, 29, 30].

However, when the clients in FL are mobile devices and the training process is performed over a wireless network such as a wireless local area network (WLAN) or cellular system, it is crucial to minimize the data sent during updates of the model parameters. This is due to the limited availability of radio spectrum, and the uncertain nature of the wireless environment caused by factors such as channel fading and interference [31]. In the context of FL applications on IoT devices, the added constraints on computation and power consumption for uploading data make it even more challenging to perform complex tasks that require deep neural networks (DNNs) with many layers and a large number of trainable parameters. While many existing FL studies have focused on shallow DNNs with a few layers, state-of-the-art DNN models used in various applications often have dozens of layers and millions or billions of trainable parameters in order to achieve the highest accuracy in e.g., image segmentation [32, 33], image classification [34, 35], object detection [36, 37], question answering [38], medical image segmentation [39], and speech recognition [40].

I-A Contributions

In response to the above-mentioned constraints on the uplink payload budget and limitations on the local computational power, we develop a novel scheme to perform model-based transfer FL and refer to this scheme as feature-based federated transfer learning (FbFTL). In FbFTL, rather than uploading the gradients, the input and output of the subset of DNN to be trained are uploaded with the goal to reduce the uplink payload. This is one of the key differences from prior FL and FTL strategies. In this paper, after describing the proposed FbFTL scheme, we analyze its overall uplink payload and provide comparisons with prior schemes to quantify the gains. Specifically, we test this approach by transferring the VGG-16 convolutional neural network (CNN) model [41] trained with ImageNet dataset [42] to CIFAR-10 dataset [43], and show that FL, two types of FTL, and FbFTL require uploading 3216 Tb, 949.5 Tb, 599 Tb, and 6.6 Gb of data, respectively, until performance convergence. With this, we demonstrate that the proposed FbFTL outperforms FL and FTL substantially by reducing the upload requirements by at least 5 orders of magnitude. We note that the ITU standard of 5G uplink user experienced data rate is only 50 Mb/s [44], and even the 6G uplink user experienced data rate at Gbit/s level may not sufficiently support such huge uploading requirements of regular FL. Additionally, to our best knowledge, existing works on improving FL efficiency (such as FedAvg [4], sparsification [45, 46] and quantization [47, 48] with or without error feedback [49, 50], federated distillation [51, 52, 53], pruning [54, 55] or partially trainable network [56, 57], and over-the-air computation [58, 59, 60]) still consider the transmission of gradient updates and can achieve a relatively limited reduction in payload and experience degradation in performance. For instance, the payload reduction is of only two orders of magnitude of the original payload on the same CIFAR-10 dataset [61, 62]. Therefore, FbFTL is still a more effective approach in reducing the uplink payload even after the efficiency of FL has been improved via the aforementioned methods. We further show that FbFTL has substantially lower downlink payload, and requires significantly less computational power from the edge devices, and therefore it is much more friendly in terms of facilitating the training tasks on clients with limited power budget.

To validate our approach, we further analyze the robustness of FbFTL against FL and FTL. We show significant reduction on packet loss rate (PLR) with FbFTL with the same block loss rate (BLR), and demonstrate the robustness of FbFTL with insufficient training data. While there have been numerous studies on gradient quantization and sparsification to reduce the uplink payload [63, 64], we illustrate that FbFTL also achieves significant compression rate by quantization while the validation accuracy is barely affected. Furthermore, we analyze the privacy leakage to a potential adversary. We define the label privacy leakage and feature privacy leakage for both feature-based and gradient-based frameworks. To our best knowledge, this is the first work that divides the privacy into different categories, defines each type, and proposes mitigation approaches. Via experimental results, we show that FbFTL has better performance (e.g., classification accuracy) with the same level of privacy protection when each client obtains a small set of samples.

In summary, our main contribution can be listed as follows:

-

•

We propose the FbFTL scheme that uploads extracted features instead of gradients to reduce uplink payload by five orders of magnitude.

-

•

We analyze the robustness of FbFTL, and demonstrate that it maintains the payload advantage when strategies such as sparsification, quantization and error feedback are deployed.

-

•

We study the privacy guarantees in terms of entropy based formulations that quantify the uncertainty and also by utilizing differential privacy mechanisms.

-

•

We show that a small batch size is preferred to protect label privacy of shuffled batches, and FbFTL has better performance (such as in terms of accuracy) given the differential privacy constraint for input privacy in a small batch.

I-B Organization and Notations

| FL Parameters: | Section II-A |

| , | Set of clients, Number of clients |

| Set of local training samples at client | |

| Number of local training samples at client | |

| The th sample at client | |

| Input vector of with attributes | |

| Output vector of with attributes (or classes) | |

| DNN that maps the input x to estimated output | |

| Trainable parameter vector of DNN | |

| Loss function | |

| Sum of updates (or gradients) at client | |

| Learning rate | |

| Number of communication iterations during training process (superscript indicates methods) | |

| Fraction of clients selected in each iteration | |

| FTL Parameters: | Section II-B, III-A, III-B |

| Number of layers without trainable parameters | |

| Number of layers with trainable parameters | |

| Index of the layer partitioning DNN into feature extraction part and task-specific part | |

| Feature extraction sub-model with trainable parameters | |

| Task-specific sub-model with trainable parameters | |

| Extracted features | |

| Number of trainable parameters in the th trainable layer | |

| Number of input nodes at the th trainable layer | |

| Number of output nodes at the th trainable layer | |

| Performance Measurements: | Section II-A, III-B, ,III-C |

| Payload of each float number in bits | |

| Uplink payload (superscript indicates methods) | |

| Downlink payload (superscript indicates methods) | |

| Computation time complexity for training | |

| Robustness Measurements: | Section IV |

| PLR | Packet loss rate |

| BLR | Block loss rate |

| Number of blocks in each packet | |

| Maximum number of packet retransmission allowed | |

| Sparsification function keeping a ratio of the input elements | |

| Quantization function keeping bits of the input elements | |

| Local memory vector for error feedback | |

| Privacy Measurements: | Section V |

| Entropy to quantify the uncertainty | |

| Output dimension (or number of labels) | |

| Set of possible batches | |

| Number of possible batches | |

| Unknown batch type of client as a random variable | |

| Distribution over labels | |

| Uniform label distribution | |

| Count of output label type in a batch of type | |

| Adversary’s presumed probability distribution of batches without prior knowledge | |

| Adversary’s uncertainty of a batch without prior knowledge of the content | |

| True label distribution | |

| Adversary’s presumed probability distribution of batches given the true sample distribution | |

| Adversary’s uncertainty of a batch given the true sample distribution | |

| Label Privacy Leakage with Statistical Information | |

| Count for batches of different types among shuffled batches | |

| Number of each batch type in shuffled batches as a set of random variables | |

| Adversary’s presumed empirical distribution of batches given shuffled batches | |

| Adversary’s uncertainty of a batch given shuffled batches | |

| Label Privacy Leakage with Query | |

| Total Label Privacy Leakage | |

| n | Additive independent Gaussian noise to protect local instance privacy, where |

| d | Training samples for one batch |

| Uploading vector in one batch | |

| Differential privacy level | |

| Maximum relative distance | |

| Additionally: | in subscript () denotes transfer learning updating full model, and in subscript () denotes transfer learning updating task-specific sub-model |

The remainder of the paper is organized as follows. In Section II, we discuss the preliminary concepts regarding FL and FTL, describe the system design via FedAvg, and demonstrate their uplink payload. In Section III, we introduce the proposed FbFTL algorithm, introduce the learning structure and system design, and compare its payload with FL and FTL. In this section, we also evaluate the performance of FbFTL via simulations, and provide comparisons with FL and FTL. In Section IV, we illustrate the robustness of FbFTL in the presence of packet loss, data insufficiency, and quantization. In Section V, we define the label privacy leakage and the feature privacy leakage. For label privacy, we discuss the leakage with statistical information and the leakage with query, and investigate mitigation approaches to avoid these privacy leakages. For feature privacy leakage, we illustrate the mitigation approach via differential privacy. Finally, we conclude the paper in Section VI. The list of all notations in the paper is provided in Table I as a quick reference.

II Preliminaries

In this section, we introduce preliminary concepts related to FL and FTL, and analyze the requirements on their successful uplink payload.

II-A Federated Learning

We address a common FL task in which a PS coordinates a set of clients to cooperatively train a DNN model. Each client possesses a local dataset with samples for training. In this dataset, the th sample is comprised of an input vector and an output vector . The DNN, represented by the function , maps the input x to an output (as an estimate of y) with trainable parameter vector . The goal of the FL training process is to update the parameter vector using the training samples from each client in order to minimize the expected loss on the unseen sample with the same distribution as the training samples. For most DNNs with classification tasks, the output label y is an axis-aligned unit vector with one element equal to 1 indicating the class of this sample, and all others equal to 0. In this case, the loss function is typically the categorical cross-entropy:

| (1) |

In order to minimize the loss and keep the training samples local, the authors in [4] proposed an iterative distributed optimization scheme called FedAvg with the following steps:

-

1.

The PS initiates trainable parameters , and broadcasts the model structure with non-trainable parameters to each client.

-

2.

The PS chooses a subset of clients (with denoting the fraction of clients in this iteration) and broadcasts the trainable parameters .

-

3.

Each client performs stochastic gradient descent (SGD) to obtain the parameter update corresponding to each training sample: .

-

4.

Each client sends the sum of the updates over all local samples and to the PS.

-

5.

The PS updates the parameter with where is the learning rate that controls the training speed.

-

6.

Return to step 2) until convergence.

Let us assume that the number of iterations in FL with FedAvg is (in the absence of any processing and transmission failures). This implies that the total number of times the clients upload is within the training process. Assume further that the DNN includes layers with trainable parameters (such as convolutional layer and fully connected layer, i.e., dense layer) and layers without trainable parameters (such as pooling layer and residue connection). The th trainable layer has trainable parameters. Thus, for each trainable parameter, the client uploads one float number of bits for the corresponding element in the update during each iteration. Therefore, the overall uplink payload to train a DNN via FL with FedAvg is

| (2) |

and we will set this as a benchmark to compare with three other training methods in the remainder of this paper.

II-B Federated Transfer Learning

TL is an effective learning technique that utilizes knowledge from a different domain to enhance the performance in the target domain. FTL incorporates the privacy-preserving distributed learning paradigm into conventional TL in order to address the challenges of spectrum limitations in wireless applications and training data scarcity. In this paper, we consider FTL to be performed in the same fashion as in the previous subsection, where one PS orchestrates clients. Based on the difference between the domains, FTL can be divided into three different categories: instance-based FTL, feature-based FTL, and model-based FTL [6, 65]. The former two types of FTL assume similarity in the distribution of the input and output, and hence we in this paper focus on the model-based FTL which only assumes similarity in the functionality to extract a high-dimensional description from the input data.

As shown in Fig. 1, we consider FTL with a DNN model pre-trained with an open-source dataset that corresponds to a similar but not the same task as the source model. The goal here is to reduce the number of iterations during training. Many DNNs can be partitioned into two sections. The first section generates the high-dimensional features from the sample input data with general information. The second section carries out operations for a particular task, such as classification, and is less likely to be transferable to a new task. To move the knowledge of the source model into a new task before training, the feature extraction section of the source model is directly transferred to the new target model, while the task-specific part is randomly initiated. FTL can be performed by retraining all the parameters with new data. However, there typically exist a large number of parameters to train, and in order to reduce the training time and data requirements, a common approach is to perform FTL by fixing the feature extraction part and simply updating the task-specific part. In particular, we select one fully connected layer (which is Layer 4 in Fig. 1), close to the output without a paralleling path (such as residue connection), and randomly initiate all trainable parameters of layers (which are Layer 4 and Layer 5 in Fig. 1). During the distributed training process, all copied parameters are fixed, and we only update the parameters of layers .

Similar to the previous sub-section on FL, we determine the least requirements on successful uplink payload for FTL until convergence. For FTL that updates all parameters, assuming that FTL with FedAvg is iterated times, the overall uplink payload required for training is

| (3) |

On the other hand, assuming FTL with FedAvg that only updates the task-specific part with iterations and assuming that each sum update consists of trainable parameters, the overall uplink payload for training is

| (4) |

Typically, training from scratch requires more training samples, and thus we have . For most of the models, the majority of the DNN structure is dedicated to the feature extraction, and obviously . Therefore,

| (5) |

II-C Other Recent Transfer and Federated Learning Schemes

| Method | Payload | Computation | Key Assumption |

|---|---|---|---|

| FL | iterative gradient-level | medium, distributed | start with random initialization |

| FTL | iterative gradient-level | low, distributed | start with source model |

| FbFTL (ours) | one-time feature-level | low, mostly centralized | start with source model |

| EWC [66] | iterative gradient-level | high, distributed | evaluating many different tasks |

| SFL [67] | iterative gradient-level | medium, hybrid of distributed and centralized | two servers needed |

| PFL [68] | iterative gradient-level | very high, distributed | large and heterogeneous local batch |

While we in this paper focus on FL schemes that generalize to the majority of use cases, there are also recent works that improve the performance under certain assumptions and can be used in FL. We list these well-known methods, along with FL, FTL, and the proposed FbFTL, in Table II and provide a comparison. Below, we briefly describe the key assumptions of these methods, and highlight the differences of the proposed FbFTL scheme.

Specifically, elastic weight consolidation (EWC) [66] focuses on a large number of different tasks simultaneously and the transfer between them by remembering the importance of each weight. The performance is typically measured on all experienced tasks. EWC is inspired by neuroscience and Bayesian inference, and it aims to quantify the importance of each weight to the tasks the model has previously learned. The fundamental idea is that the weights critical to prior tasks should be altered less when new data is encountered. In this paper, we consider a different problem, where we transfer to a single target task from a single source model on another task whose performance is no longer considered. In this problem setting, there is no clear difference between EWC and plain SGD in [66] in terms of both training performance or training time, but EWC does introduce extra communication payload and computation cost in FL. On the contrary, FbFTL achieves five orders of magnitude payload reduction.

Split federated learning (SFL) [67] partitions the neural network into two segments: the initial part near the input undergoes training in a federated learning manner using a “Fed Server”, and the subsequent part near the output is trained using split learning with a “Main Server,” which receives the smashed data. Compared to FbFTL, the federated learning segment utilizing the Fed Server operates in the same way as FL and requires significant uplink and downlink payload. Hence, this part alone is typically much more communication-expensive compared to FbFTL. The advantage of SFL lies in its similar performance to FL and not needing a pre-trained model as it updates all parameters. We will present the experimental performance comparison in Table III in Section III-D.

Personalized federated learning (PFL) [68] considers clients with large number of heterogeneous local samples, enabling generalization to the heterogeneous local distribution. However, we in this paper consider a large number of clients with a small set of local samples from each. As explained in Section V-A and Section V-C, such a setting better protects label privacy. Therefore, personalized federated learning focuses on a different problem from ours. We cannot apply this approach to FbFTL because personalized FbFTL violates the shuffle-batch assumption, unless we train the global model by FbFTL and then retrain locally. Although PFL has better local performance than FL with large heterogeneous batches, it requires several independent local batches and significantly more local computation. Furthermore, it does not allow quantization and sparsification since the weights instead of gradients are uploaded. Therefore, it is comparatively even less communication-efficient when quantization and sparsification are deployed in other methods, as shown in Section IV-C and Table V.

III Feature-based Federated Transfer Learning

In this section, we describe the proposed FbFTL framework and demonstrate that it requires a substantially smaller uplink payload compared to FL and FTL. This efficiency arises from the fact that the extracted features and outputs are uploaded rather than the parameter updates.

III-A Learning Structure

As depicted in Fig. 2, in FbFTL we consider the model-based TL on a source DNN model pre-trained on a different task. We choose one fully connected layer without paralleling path as the cut layer, and divide the new target model into two parts. Those layers before layer (which is layer 4 in Fig. 2, i.e., ) are regarded as the feature extraction sub-model , and the parameters for the new target model are copied from the pre-trained source model and fixed without any further update. The other layers are regarded as the task-specific sub-model , and all trainable parameters in the new target model are randomly initiated for training with the th client’s th training sample for all and .

The forward pass of the full model maps the input to the estimated output , and we note that the output of the feature extraction sub-model is also the input of the task-specific sub-model . Note that we require only the task-specific sub-model to be trained. In this setting, each client generates the features from input and sends these features to the PS only once. Subsequently, in FbFTL, the PS performs the gradient back-propagation iteratively without sending any feedback to the clients. Contrary to this, in FL and FTL, the parameter update, which is based on the gradients, highly relies on the parameters in the current training iteration, and the same data sample may generate different parameter updates in different iterations within the training process. Therefore, in FL and FTL, we either require many more training samples, or need to upload updates multiple times for the same sample. However in FbFTL, each client only needs to upload the intermediate output and output once, instead of iteratively uploading the gradients. The PS may deem these as the input and the output of the training sample for the task-specific sub-model . Such pairs of samples are not correlated with the model parameters , and therefore they can be used in different training iterations without downloading or uploading anything again. We provide the steps of the FbFTL algorithm in Algorithm 1 below.

As emphasized above, a key distinction of FbFTL is that the training samples of task-specific sub-model are uploaded rather than the direct gradient updates. With this, FbFTL provides additional important benefits that can lead to further improvements in the training performance and efficient management in practice. One of the most important benefits is the significant reduction of packet loss rate given the same batch loss rate since FbFTL has much less data in each batch, as we will illustrate in detail in Section IV-A. One other benefit is the waiving of the requirement of synchronization between clients, since there is only one iteration in FbFTL. The other benefits pertain to hyper-parameter fine-tuning, dataset balancing, and enabling flexible training batch size selection, as detailed below.

Specifically, one of the most important additional benefit is that FbFTL enables iterative fine-tuning of the SGD optimizer hyper-parameters such as the learning rate. To obtain the optimized DNN, one needs to find the optimized hyper-parameters that provide the best convergence performance. In FbFTL, the model can be trained from scratch several times at the PS to identify the best hyper-parameter setting without additional communication with the clients. On the other hand, in FL and FTL, the entire online training process has to be run several times, resulting in a much higher cost compared to the ideal uplink payload .

Another benefit of FbFTL is the dataset balancing. If the overall dataset is imbalanced and hence the samples with certain types of output appears much more frequently than those of other outputs, it is hard for FL and FTL to distinguish this via gradient updates, and such imbalanced data distribution could significantly degrade FL performance [69, 70]. However, FbFTL with direct output information enables techniques, such as re-sampling specific classes or merging near-identical classes, to improve dataset imbalance.

One more benefit of FbFTL is to lift the constraint of and on the training batch size. To avoid over-fitting to the training dataset, one needs to validate the performance on a separate validation set of data to identify the optimal number of training iterations to stop training and conclude the final model. It is straightforward for FbFTL to divide the obtained dataset into training and validation subsets. However, the gradient-based FL frameworks call for extra effort for the communication system to meticulously perform the training process and validation process with the desired order and number of samples. Additionally for the training process, due to the broadcast nature of the downlink in wireless FL and FTL, all selected clients in the same communication iteration receive the same parameters. Hence, each SGD mini-batch has a larger size than , and an overwhelmingly large SGD mini-batch size may delay the training process and require more training iterations. The mini-batches in these gradient-based FL frameworks are also on the order of given clients’ samples. Therefore, when the clients’ sample distribution is biased, we cannot shuffle the samples between iterations and have to accept the loss in the final performance. However, FbFTL may choose any rational size of SGD mini-batch without the constraints of the communication system, and can reshuffle the data in each training iteration.

III-B Payload Analysis

Note that FbFTL has the major benefit of requiring one-time communication between the clients and the PS. In addition to this, FbFTL has much less data in a single upload batch compared to that of each sample in the upload batch of FL and FTL. Let us assume that in the fully connected layer with bias, the number of input nodes and the number of output nodes are denoted by and , respectively Then, the number of trainable parameters in this layer is given by . Compared to the dimension of , the amount of information that we need to represent , i.e., bits, is negligible. Note that in FbFTL, gradients are computed at the PS. Therefore, FedAvg cannot be applied and each sample is required to be uploaded separately. Therefore for FbFTL, the uplink payload for each sample is , and the overall successful uplink payload required for training is

| (6) |

Compared to the uplink payload in (4) of FTL with FedAvg (in which only the task-specific sub-model is updated), the ratio of each upload for single sample between FTL and FbFTL is

| (7) |

Therefore,

| (8) |

The number of extracted features for many state-of-the-art models is larger than , and TL usually requires a relatively deeper task-specific sub-model, and therefore can be large. For the cross-device federated learning, the clients do not obtain huge local datasets, . Therefore, we have . We note that FbFTL and FTL that updates the task-specific sub-model have the same performance at every iteration because the only difference between the two methods is the communication model but not the numerical process to generate the gradient updates. Combining this observation with the conclusion in (5), we have

| (9) |

and therefore we expect extremely smaller uplink payload in FbFTL compared to FTL and FL.

We also note that FbFTL has the smallest downlink broadcast payload and the least local computation compared to FL and FTL. For FL, FTL that updates all parameters, FTL that updates the task-specific sub-model, and FbFTL, the overall downlink broadcast payloads, respectively, are

| (10) |

| (11) |

| (12) |

| (13) |

III-C Time Complexity Analysis

We note that FbFTL consumes less computation time and power for training in total, and also transfers a proportion of computation from the client devices to the PS. In FL and FTL, each client must complete one full forward pass and one back-propagation of the parameters to be trained for each training sample at each iteration. However, in FbFTL, each sample is only processed once by the client, and each client only needs to complete the forward pass of feature extraction sub-model, while all other computations are completed at the PS. Such shift of computational load to the PS is particularly beneficial if the end users and devices are severely limited in their computational capabilities and power resources (for instance, in IoT networks) and the PS is equipped with advanced processors and has access to more resources.

In this subsection, we use time complexity to estimate the computation time and energy consumption. For each fully connected layer with input nodes and output nodes, there are trainable parameters, and each sample requires time complexity for matrix multiplication during forward pass, and the same time complexity for matrix multiplication during back-propagation. Each 2-dimensional convolutional layer with stride 1 in each direction and same padding has the input shape where , kernel shape where , and output shape where . Thus, each sample requires time complexity for matrix multiplication during forward pass, and the same during back-propagation. Comparatively, the time complexity of activation functions, max-pooling layers and dropout layers is negligible. Therefore, we denote the time complexity of each trainable layer for a single training sample as , and the overall time complexity required for all clients as . Specifically, the overall time complexities at clients for the methods of FL, FTL that updates all parameters, FTL that updates the task-specific sub-model, and FbFTL, respectively, are

| (14) |

| (15) |

| (16) |

| (17) |

where stands for “proportional to”. Typically, we have .

III-D Experimental Results

In this section, we consider the application of FL, FTL and FbFTL to the VGG-16 CNN model [41] and transfer the knowledge learned from ImageNet dataset [42] to CIFAR-10 dataset [43].

ImageNet is a vast online database containing over 14 million images, each with hand-annotated labels describing the classification types or intended outputs for training. The pre-trained source model we use for TL was created on the 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC2012, [71]), and the images come from 1000 different categories. As an illustration, we provide 10 samples with their labels in Fig. 3.

CIFAR-10 is a database of images, each with one of distinct labels. Out of these images, images are used for training and images for testing. Fig. 4 showcases the first ten samples with their labels. Note that these samples have simple labels and appear more blurred compared to those in ImageNet due to their lower resolution/dimension.

In Fig. 5, we depict the structure of VGG-16 used for training on CIFAR-10. For TL, we consider the first half of the layers marked blue as the feature extraction sub-model and directly transfer this sub-model from that trained on ImageNet. We deem the latter part marked yellow as the task-specific sub-model and randomly initiate this part. For FbFTL, the dimension of the intermediate output is .

To train the model on CIFAR-10, we utilize Nvidia GeForce GPU with CUDA to run the algorithms with PyTorch [72]. We assume that there are clients in total, each iteration takes a fraction of all clients, and each batch contains samples. In the two most commonly used deep learning tools TensorFlow (including Keras) [73] and PyTorch, the default data type of each number has 32 bits and therefore bits. The learning rate is , the momentum of the optimizer is , and the L2 penalty is .

| FL | SFL | FLlow | FTLf | FTL | FTLc | FbFTL | |

| number of upload batches | 656250 | 656250 | 68750 | 193750 | 25000 | 525000 | 50000 |

| upload parameters per batch | 153144650 | 117483328 | 153144650 | 153144650 | 153144650 | 35665418 | 4096 |

| uplink payload per batch | 4.9 Gb | 3.8 Gb | 4.9 Gb | 4.9 Gb | 4.9 Gb | 1.1 Gb | 131 Kb |

| total uplink payload | 3216 Tb | 2467 Tb | 337 Tb | 949 Tb | 123 Tb | 599 Tb | 6.6 Gb |

| total downlink payload | 402 Tb | 308 Tb | 42 Tb | 253 Tb | 15 Tb | 322 Tb | 3.8 Gb |

| computation time complexity | |||||||

| validation accuracy | 89.42% | 89.42% | 86.64% | 93.75% | 86.45% | 86.51% | 86.51% |

In Table III, we compare the performances of FL, SFL, FTL updating the full model (FTLf), FTL updating the task-specific sub-model (FTLc), and FbFTL. For increased fairness in the comparison of payloads, we further demonstrate the performances FLlow and FTL, which are the FL and FTLf algorithms that terminate training process when the validation accuracy reaches those of FTLc and FbFTL (i.e., ). As we have analyzed, the other algorithms require successfully uploaded batches, while FbFTL only requires batches. Also, FL, SFL, FTLf and FTLc require uploading bits, bits, bits, and bits, respectively, for each batch, while FbFTL only requires uploading bits for each batch. In the third row of the table, we observe that the FbFTL algorithm significantly reduces the uplink payload per batch by four orders of magnitude (i.e. a factor of ) compared to the other algorithms for each client. Additionally, in the fourth row, FbFTL leads to a reduction of five orders of magnitude (i.e. a factor of ) in the total uplink payload during training when compared to the other algorithms. These results demonstrate that FbFTL is apparently the most efficient scheme. FbFTL also results in a substantial decrease in the overall downlink payload. If larger models are trained for more complex tasks or if the size of the training dataset is more limited, the difference in payload could be even greater. In (14) through (17), we have described the overall computation time complexity required for training at clients as . In the second-to-last row of the table, we quantify and provide this time complexity for each method by counting the number of multiplications among floating numbers. We readily observe that FbFTL requires the least computation time and power consumption at the clients, and has two orders of magnitude reduction compared to FL, SFL and FTLf, since it only runs the forward pass over feature extraction sub-model for each sample one time. We further note that for FbFTL, the computation complexity at the PS is also low with . Furthermore, we note that different number of clients in each iteration does not affect the performance of FbFTL, but a large number of clients reduces the performance of FL and FTL, since it defines the minimum “training batch size” . The performance of FL and FTL will decrease with an overwhelmingly large training batch size, which is another benefit of FbFTL. We in the experiment pick a small so that the benchmark schemes (i.e., FL, FTLf, and FTLc) have the best performance (at which point FTLc and FbFTL have the same performance). Moreover, we also observe that FTLc and FbFTL only update a small portion of the parameters and exhibit a slight decrease in validation accuracy, which is the trade-off for the reduced payload. However, we will show in Section IV and Section V that under communication efficiency or privacy constraints, FbFTL may also prevail in terms of validation accuracy in certain situations.

| FTLf | FTLc | FbFTL | FTLc | FbFTL | FTLc | FbFTL | |

| number of trained encoders | 8 | 8 | 8 | 4 | 4 | 2 | 2 |

| number of upload batches | 132588 | 36830 | 7366 | 88392 | 7366 | 103124 | 7366 |

| upload parameters per batch | 109860224 | 60511616 | 1024 | 51070144 | 1024 | 46349504 | 1024 |

| uplink payload per batch | 3.5 Gb | 1.9 Gb | 32.7 Kb | 1.6 Gb | 32.7 Kb | 1.5 Gb | 32.7 Kb |

| total uplink payload | 466.1 Tb | 71.3 Tb | 241.4 Mb | 144.5 Tb | 241.4 Mb | 152.9 Tb | 241.4 Mb |

| total downlink payload | 116.0 Tb | 32.2 Tb | 1.58 Gb | 77.3 Tb | 1.88 Gb | 90.2 Tb | 2.03 Gb |

| validation ROUGE-1 | 45.9249 | 45.4680 | 45.4680 | 45.2827 | 45.2827 | 44.9862 | 44.9862 |

Furthermore, we note that in transfer learning, there is an additional trade-off between privacy protection, performance and payload. When partitioning a model into feature extraction sub-model and task-specific sub-model, choosing the cut layer closer to the output better preserves data privacy, while picking a cut layer closer to the input improves the training performance. In order to demonstrate such a trade-off, we next consider a language model as our application scenario. In FL, FTL, and FbFTL on natural language processing tasks, we consider tasks where the model and the intermediate features are not proprietary or private, and thus we assume that the clients are willing to share them while keeping the local data private As an example, we show the results on a conversation summary task SAMSum [74] with 32128 distinct token types out of 14732 training dialogues and 819 testing dialogues. For instance, dialogue ID 13728867 in SAMSum is “Olivia: Who are you voting for in this election? Oliver: Liberals as always. Olivia: Me too!! Oliver: Great”, and ground truth summary is “Olivia and Olivier are voting for liberals in this election.” For this task, we deploy a language model FLAN-T5-small [75], which is a transformer with 110 million parameters, including 8 encoders and 8 decoders. This model is pre-trained and the dataset is relatively small, so we do not have FL in this case. We assume that there are clients, each has dialogues, and each iteration takes a fraction of all clients. Our experiment is based on HuggingFace [76] with learning rate .

In Table IV, we compare the performances of FTL and FbFTL in terms of ROUGE-1 score, which measures the match between the generated text and reference text. A larger ROUGE-1 indicates better performance. We note that FTLf trains all components including encoders, decoders, embedding and the final linear layer. On the other hand, FTLc and FbFTL freeze embedding, and may or may not freeze several encoders close to the input prompts. The number of trained encoders is given in the second row of Table IV. For instance, the performance results in the third and fourth columns are for FTLc and FbFTL that have trained all encoders (and hence have not frozen any of them), while the other columns provide the performances with 4 or 2 encoders trained (indicating that 4 or 6 encoders close to the input are frozen). We do not need to freeze decoders since label privacy leakage converges to zero with shuffled batches, as will be shown in Section V. In Table IV, we observe that FbFTL reduces the uplink and downlink payload by similar orders of magnitude as in the VGG-16 experiment. Furthermore, we notice that training less layers or encoders leads to a slight reduction in ROUGE-1 score but it does not guarantee lower payload. While FTL may require more iterations to arrive convergence, FbFTL may need to broadcast a larger feature extraction sub-model.

IV Robustness Analysis

In this section, we compare the performance of FbFTL with that of FL and FTL under the same packet loss rate (PLR) for each batch being uploaded, and illustrate the robustness of FbFTL against packet losses, data insufficiency, and compression, including quantization, sparsification and error feedback.

IV-A Packet Loss

As we have demonstrated in the previous section, gradient-based FedAvg FL frameworks including FL and FTL upload the gradient update iteratively, where each batch contains the gradient summation from all samples of the client. In contrast, our proposed FbFTL uploads the data for each sample only once, and each batch contains extracted features and output from one sample.

In (7), we have shown that each batch for gradient-based FL is much larger than each batch for FbFTL (by about larger in our experiments), and we assume that the packets to transmit both types of batches consist of multiple transmission blocks of the same size. For both types of packets, we consider measuring the robustness of all frameworks against packet loss caused by block losses due to network congestion or link outage (e.g., as a result of deep fading in wireless networks). For each learning framework whose packet consists of transmission blocks, we consider the same block loss rate (BLR). The PLR without retransmission is

| (18) |

Obviously, PLR highly depends on the value of . FbFTL has significantly lower packet size and consequently we expect much lower PLR for the given same BLR. We show the significant performance difference among different learning frameworks in our experiments at the end of this section.

If, on the other hand, we allow at most retransmissions for all packets and assume the channel state of each transmission to be independent and identically distributed (i.i.d.), we can lower the PLR and achieve similar packet-level reliability. However, this is realized at the cost of higher total uplink payload. Specifically for each learning framework requiring uplink payload , the expected uplink payload with retransmissions is

| (19) |

We note that is the expected number of retransmissions, which is the same for all different learning frameworks. If the transmission has a fixed bandwidth, also represents the delay factor of the total transmission. We further note that if , then . Otherwise, . Notice that the increase in the payload grows with and . Hence, learning frameworks with higher payload experience a higher increase in payload when retransmissions are introduced.

IV-B Data Insufficiency

At the end of Section III-A, we have discussed the benefit that FbFTL does not require additional online uploads from the clients to test different sets of hyper-parameters, while gradient-based frameworks need to run the entire uploading process multiple times. In practice, in addition to hyper-parameters, another intangible aspect prior to training is whether there exists a sufficient number of participating clients and training samples. If the planned set of samples is insufficient to train the neural network, gradient-based frameworks require rerunning the overall process with more clients, which leads to high consumption and potential waste of both computational and transmission resources. However, FbFTL only requires the new clients to compute and upload their batches, and hence there is no waste of transmission resources.

IV-C Quantization, Sparsification and Error Feedback

To further reduce the uplink payload of gradient-based frameworks, there have been extensive works on gradient compression, including gradient sparsification [77, 78] and gradient quantization [63, 64], especially signSGD, the extreme case in which each element is reduced to be binary valued without scaling [79]. Several recent studies utilize error feedback (or quantization and sparsification with memory) that reduces the error in compression at client’s local device to improve the updates in future iterations [50, 49].

For gradient-based frameworks utilizing gradient update with elements, we denote the sparsification function as , where . This function keeps the value of elements in the vector with the highest absolute values, and set the value of all other elements to 0. We denote the quantization function as where , and this function quantizes each element in the vector to bits within the max/min range. Furthermore, we denote the error memory vector for client at iteration as . Therefore, the process of quantization and sparsification with error feedback at iteration is described as follows:

| (20) |

| (21) |

where is uploaded to the PS, and the local error memory vector is updated to . For initialization, .

Similarly, for FbFTL utilizing extracted feature with elements, we may also apply sparsification and quantization. However, error feedback is not applicable, because there is only one upload iteration in FbFTL, and the extracted feature is not additive unlike the gradient. Therefore, the process of quantization and sparsification for FbFTL is described as follows:

| (22) |

where is uploaded to the PS.

In both cases, the compression rate is close to where is the number of bits for the representation of the original data type. We note that there is also a potential drawback in the practical deployment of sparsification and error feedback. On the one hand, while all other operations including training, communication, inference, quantization and error feedback have time complexity no more than where is the total number of parameters in the neural network, we notice that depending on the sparsification ratio , sparsification requires up to time complexity for gradient-based frameworks and time complexity for FbFTL. Typically, we have , and FbFTL can achieve more significant time complexity reduction in local sparsification computation compared to gradient-based frameworks. On the other hand, by compensating for the compression error in future iterations, the error feedback significantly improves the training performance when the compression rate is high, i.e., and are low. However, the error feedback requires additional memory throughout the entire training process and not just during the local training. Also, we demonstrate below in the experimental results that the gradient-based frameworks do not prevail even with error feedback.

IV-D Experimental Results

In this section, we numerically demonstrate the aforementioned robustness metrics in our experiments with the VGG-16 CNN model on transfer learning from ImageNet dataset to CIFAR-10 dataset.

In Fig. 7, we show the PLR (without retransmissions) of different learning frameworks when the block size equals the batch size of FbFTL (i.e., the BLR equals the PLR of FbFTL). As we have shown in the row of uplink payload per batch of Table III, the batch sizes of other learning frameworks are about times larger than that of FbFTL. Due to the huge difference in the batch sizes, the PLR curves of the other learning frameworks almost reach when the PLR of FbFTL is less than (or equivalently when BLR). The difference is still substantially high if a few rounds of retransmissions are allowed in gradient-based frameworks. Fig. 7 provides the magnified plot of Fig. 7 for BLR, and we note that the curve of FL and the curve of FTL that updates full model overlap since they have the same number of parameters to update and therefore have the same batch size. In this figure, we again observe that the PLR curves of learning mechanisms other than FbFTL quickly approach within the considered range of BLR , while the PLR of FbFTL (being equal to BLR) stays below .

Since FbFTL has significantly lower PLR, we subsequently analyze its performance with different amount of data. In Fig. 8, we see that FbFTL has relatively high validation accuracy even with only 10% of the samples. Indeed, when we have only of the samples (i.e., the case with 50 samples), the accuracy is even with PLR. Furthermore, we observe that as PLR increases, the accuracy curves remain relatively flat, and experience sharp drops only when PLR approaches 1. Therefore, FbFTL is considerably robust against data insufficiency and PLR. We note that such robustness requires significantly more training iterations such that and have the same order of magnitude, where is the original number of training iterations with all participating clients, and is the number of training iterations with limited data from clients. In the case of smaller number of participating clients and PLR , FbFTL requires the same level of computational power consumption and more total downlink payload compared to the original case with clients. However, there is a huge reduction in total uplink payload and total local computational power consumption, because FbFTL only requires uploading once for each participating client. In comparison, gradient-based frameworks require uploading in every training iteration, and therefore there is no such benefit in terms of reduced uplink payload and local computations, while they also suffer from higher downlink payload. Similarly, we have the performance curves for FLAN-T5-small in Fig. 9.

In Table V, we show the validation accuracy of each framework with data from all clients and PLR , but for different values of quantization size bits and sparsification ratio . In the experiment, we pick the same set of values for and as in [77, 78]. Note that the highest reduction in uplink payload is achieved when we set and . However, even in this case, the uplink payload of gradient-based frameworks (i.e., FTL, FTLf, FTLc) is still greater than that of FbFTL with and . Moreover, with such drastic reduction, gradient-based schemes achieve lower accuracies compared to that of FbFTL with and . Therefore, even without sparsification and more restrictive quantization (e.g., or ), FbFTL outperforms gradient-based frameworks in terms of both accuracy and payload reduction. We can further reduce the uplink payload of FbFTL by choosing and . We note that FbFTL in the extreme case of only keeps 4 elements in the extracted features to distinguish among 10 classes. In this setting, the information is severely limited and is insufficient to make an accurate prediction, resulting in accuracy levels of for FbFTL. If, on the other hand, we pick a certain threshold on the validation accuracy, for instance 82%, FbFTL requires the sparsification ratio to be no lower than (which is times more than that of the best gradient-based framework), but still maintains a reduction of more than four orders of magnitude (i.e., reduction of ) in total uplink payload compared to the gradient-based algorithms while achieving the same validation accuracy. Such good performance of FbFTL without error feedback is due to the robustness of extracted features against noise. If we consider the error from compression as random noise, the extracted features from a well-trained source model is typically robust to noise while gradient update does not necessarily have the same level of robustness.

|

FL

FTLf FTLc FbFTL |

||||

|---|---|---|---|---|

|

89.42%

93.75% 86.51% 86.51% |

83.96%

93.46% 85.45% 86.47% |

59.97%

90.98% 84.49% 82.10% |

42.26%

81.98% 82.78% 65.71% |

|

|

88.46%

93.36% 86.16% 86.41% |

82.34%

93.34% 85.44% 86.39% |

57.04%

90.83% 84.41% 81.85% |

39.34%

81.91% 82.53% 65.55% |

|

|

45.96%

88.20% 81.44% 85.50% |

44.67%

88.16% 81.29% 85.48% |

33.63%

86.19% 80.91% 80.99% |

31.91%

80.15% 78.61% 65.18% |

V Privacy Analysis

In previous sections, we have described the FbFTL framework where each client with samples uploads extracted features and output for instead of the gradient update as done in FL and FTL with FedAvg. In this section, we conduct a privacy analysis by studying privacy leakage to a potential adversary, and propose protection strategies. In particular, we consider leakage due to the unveiling of information regarding the outputs/labels (henceforth referred to as label privacy leakage) and the unveiling of intermediate features (henceforth referred to as feature privacy leakage). For example, during the training process of a classifier to distinguish the character in a photo to be a dog or a cat, the identity of the character being labeled as a dog is considered as label privacy, while the feature privacy includes additional information of the certain photo besides its label privacy such as the color of the fur and the furniture in the background.

The label privacy leakage quantifies how much information about the batch of a targeted client is revealed to an adversary through the information on the outputs (or type of label in classification problems). The information on the outputs/labels can be of statistical nature or can be obtained via the unveiling of the outputs to the adversary. The former considers the information leakage via the knowledge of the general sample output distribution, while the latter specifies the leakage when the adversary has access to the output/label values. We analyze the label privacy leakage via the entropy and mutual information from the adversary’s perspective and propose an uploading design that randomly shuffles all batches to conceal the dependency between the client address and the output data.

Feature privacy leakage describes the amount of information that possibly leaks when the adversary obtains uploaded contents from clients. We will analyze the feature privacy leakage via the differential privacy (DP) framework [7] and provide comparisons between FbFTL and the gradient-based frameworks (FL and FTL, in which cases feature privacy is leaked when the adversary obtains the gradient updates) through experiments and numerical results.

V-A Label Privacy Leakage

First, we analyze the label privacy leakage. While FbFTL directly uploads the output in each batch for each sample, we note that FL and FTL with FedAvg that update the final layer also leak the output, and hence label privacy leakage also occurs in these cases. According to [80], the count of each output type in a batch can be numerically solved given the average gradient. Adversaries with certain prior knowledge on the training data are able to gain further knowledge and reconstruct the input from the average gradient, such as deep leakage [81] or gradient inversion [82, 83]. Although the following label privacy analysis applies to both feature-based FbFTL and gradient-based FedAvg frameworks, we in label privacy analysis use the word “batch” to indicate all uploaded information from one client with multiple samples. Compared to the high-dimensional gradient or feature , the output y also potentially reveals clients’ private information but up to a certain degree. In this setting, we analyze the conditions under which the label privacy leakage (with statistical information) vanishes. We also address the role of shuffling the output information from all clients as a way of hiding the client’s address from uploaded content when adversary has access to outputs.

To validate these approaches, we analyze the private output information leakage of a specific batch to a potential adversary without client addresses. According to [84], the privacy loss of a query can be evaluated as the difference in the privacy before and after the query. In our setting, we determine the amount of label privacy via the uncertainty from the adversary’s perspective, and quantify it by utilizing the entropy formulation.

As shown in Fig. 10, we consider a common learning task in which the output is an axis-aligned unit vector with one element having a value of 1 indicating the label associated with the given input, and all others equal to 0. We assume that each sample is independent and identically distributed (i.i.d.). Each client transmits one batch with samples, including the outputs/labels . Therefore for this client, there are different possible ordered batches of outputs in total (where is the number of possible different labels that can be associated with the input).

Assuming that the order in the batch does not contain information, we use to denote the set of possible batches without order from client , and the privacy information of the real batch from client is the sum vector , where . Subsequently, the number of possible batches without order is the combination with the replacement of items taken times and is given by

| (23) |

We denote the index of client ’s batch as a random variable , and the index of the uploaded batch as . We denote the type of the label ’s distribution as . Typically to achieve better performance, in most of the machine learning applications we desire a uniform distribution over different labels, and we denote the uniform distribution over labels as .

To evaluate the adversary’s knowledge on one given batch of a target victim client, we consider the presumed distribution of the batch from the adversary’s perspective to quantify the adversary’s uncertainty through the entropy of the given batch.

V-A1 Label Privacy Leakage with Statistical Information

A weak adversary without any prior knowledge of the sample distribution may presume the sample distribution to be uniform, i.e., . In this case, the distribution of ordered batches from the adversary’s perspective is also uniform with probability . Each batch index without order corresponds to different batch indices with order, and hence the adversary’s presumed probability distribution of batches without order (under the assumption of uniform sample distribution) is , where

| (24) |

Therefore from the weak adversary’s perspective, the uncertainty of the batch from client with known format and size but without prior knowledge about the content can be quantified by the entropy

| (25) |

where the condition in the entropy signifies that this is the entropy under the assumption of uniformly distributed samples.

On the contrary, a stronger adversary with the ability to steal and decode a large amount of uploaded data may learn the structure of the DNN and is able to decode the labels of the samples in a batch, and hence such an adversary is likely to have the prior knowledge of the general distribution of batches from a large number of clients. In the case that the true sample distribution is known, we denote the strong adversary’s presumed distribution of the batch from client as

| (26) |

and the corresponding uncertainty at the adversary in the original setting before query is

| (27) |

By comparing the uncertainty between the weak adversary and the strong adversary, we give the definition of label privacy leakage with statistical information.

Definition 1.

Label Privacy Leakage with Statistical Information: For an adversary without prior knowledge of the sample distribution (and hence that initially assumes uniform sample distribution), the label privacy leakage is the amount of information regarding the target batch that leaks to the adversary when it obtains the true distribution . This privacy leakage can be formulated as follows:

| (28) |

Note that is the uncertainty in under the assumption that the labels are uniformly distributed. is the remaining uncertainty in when the true sample distribution is learned by the adversary. Hence, the difference is the information gained by or equivalently leaked to the adversary.

Machine learning tasks typically require dataset balancing. If the data collection process is sufficiently well designed so that each output type is almost equally likely and we have , then the label privacy leakage . As we have illustrated in section III-A, achieving this goal is more viable in FbFTL.

V-A2 Label Privacy Leakage with Access via Query

Next, we analyze the label privacy leakage when the adversary has access to the shuffled outputs (e.g., via a query). In the worst case, the strongest query is the process that the adversary acquires all batches without clients’ addresses. Specifically, we assume that the adversary has access to randomly shuffled batches. Recall that there are different types of batches. We use to denote the count for type batches among given batches. With this definition, we have . For different set of batches, the counts will be different. We define as a random vector of counts of different types of batches among a total of batches. Hence, for given batches, the realization of this vector is . In the absence of any other statistical information, the adversary can utilize the following empirical distribution of based on the frequency of each batch type among all batches:

| (29) |

With this empirical distribution based on the shuffled batches, the adversary’s uncertainty is given by the entropy

| (30) |

In the case in which the adversary has acquired batches via the query and knows the distribution of the samples, we denote the adversary’s presumed distribution as , and its uncertainty on client ’s batch as .

First, we establish the following result.

Lemma 1.

Assume that the true sample distribution is known. Once batches are revealed to the adversary, the distribution of the batches (from the adversary’s perspective) depends only on the frequency/count of each batch type in the unveiled batches and not on the sample distribution, i.e.,

| (31) |

Proof.

We prove this lemma by utilizing the Bayes’ rule and determining the ratio of two conditional probabilities as follows:

| (32) |

∎

This result indicates that the batch distribution is equal to the empirical distribution in (29). Consequently, we also have the following characterization for the entropies:

| (33) |

Next, we give the definition of the label privacy leakage with query, and identify a condition under which the privacy leakage vanishes.

Definition 2.

Label Privacy Leakage with Query: For an adversary with prior knowledge of the true sample distribution , the label privacy leakage with query is the amount of information regarding the batch of a target client that leaks to the adversary when it obtains the randomly shuffled set of uploaded batches including the target’s batch. This privacy leakage can be formulated as follows:

| (34) |

Lemma 2.

As the number of shuffled batches goes to infinity, the label privacy leakage with query converges to 0, i.e.,

| (35) |

Proof.

As grows without bound, we have by the law of large numbers, and as a result, we have the characterization that . Hence, the label privacy leakage with query converges to 0. ∎

Note that if the adversary does not initially know even the distribution of the samples and assume a uniformly distributed samples, then total label privacy leakage after the unveiling of the batches to the adversary can be defined as

| (36) |

If the shuffled dataset is perfectly balanced and we have , the total label privacy leakage is zero, i.e., .

V-B Feature Privacy Leakage

In this section, we analyze the privacy leakage on the input x via uploaded content. Such privacy leakage includes the information that is not necessarily needed to determine the output y, and we will define it as feature privacy. Different from label privacy where the adversary knows the implication of each type of output (or the meaning of each label), it is hard to define the adversary’s prior knowledge on the gradients and extracted features, and it is unlikely to cancel feature privacy leakage via shuffling. Furthermore, it is hard to quantify and analyze the entropy and mutual information of gradients and extracted features because of the complexity of neural networks. Instead, we will analyze the feature privacy leakage via differential privacy.

For FbFTL, the intermediate output z is also referred to as the smashed data in split learning [85, 86], and cannot be directly transformed back to the input x due to the nonlinearity of the activation functions in each layer. However, it is possible that FbFTL leaks privacy to some extent and partially reveals the input. Since the feature before a fully connected layer from a sample can be analytically solved from its gradient [87], it is possible that gradient-based frameworks also leak the input. The strategy to extract the input from FedAvg gradients includes deep leakage [81, 87, 88] and gradient inversion [82, 83], and is extensively studied for image recognition. However, deep leakage highly depends on the dataset and DNN structure. Therefore, an analysis of the privacy leakage beyond label privacy is needed to identify what may be partially revealed regarding the input x from the uploaded features.

Differential privacy (DP) provides an upper bound on the privacy leakage using a different measuring approach, and we compare the feature privacy preserving performances of FbFTL and other schemes via DP. In the DP analysis of feature privacy, we denote the training samples for each batch as d and the function that generates the upload vector as . More specifically, and for FbFTL, while and for gradient-based FedAvg frameworks. Similarly as in [89, 90, 91], we define the feature privacy through the following condition: satisfies -DP if for any subset of possible outputs it holds that

| (37) |

where d and differ in a single sample with the true distribution .

In [92], it has been shown via moments accountant approach that adding Gaussian noise n to prior to transmission maintains an overall privacy loss of . Typically, the variance of the noise depends on the maximum distance for any two adjacent inputs d and . To provide a fair comparison between different learning frameworks, we consider the maximum relative distance within training set for each framework, where denotes absolute value and denotes the standard deviation. Thus, the additive Gaussian noise to each output should be to mitigate the feature privacy leakage, and the lower bound of the value can be determined via the moments accountant approach.

As a result, according to [92, Theorem 1], there exist constants and such that for any , the training process with is -differentially private for any if we choose , where is the number of iterations ( for FbFTL), and is the fraction of clients selected in each iteration. When the value of and noise factor are fixed, for FedAvg DP decreases as the batch size grows, but increases as training iterations (and potentially, the number of retraining for hyper-parameter tuning) increase. However, for FbFTL DP does not depend on these factors. Furthermore, the final performance also depends on the robustness of each framework against noise. Therefore, we demonstrate the comparisons via experiments in the following subsection.

V-C Experimental Results

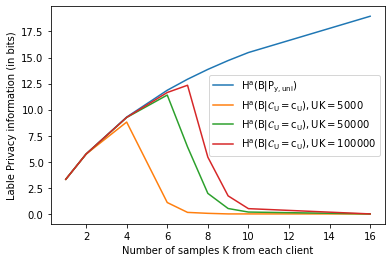

In our experiments, we utilize the dataset CIFAR-10 which has types of labels, and is well-balanced (i.e., ). We generate shuffled batches according to the uniform sample distribution and evaluate the total label privacy leakage in different scenarios in Fig. 11. The blue curve plots the initial uncertainty for different values of (where is the number of samples from each client). Each of the other curves shows the uncertainty given shuffled data with fixed total amount of samples from all clients, and the number of clients is determined by each value of accordingly. By Definition 1 and Definition 2, the total label privacy leakage given shuffled data is and is equal to the difference . We see that when is small, and thus the label privacy is well preserved. However, for given value of the product , diminishes fast once a certain threshold of is exceeded. Therefore, we note that the label privacy leakage becomes high when is large. As addressed before, the label privacy applies in the same way to both FbFTL and gradient-based FedAvg frameworks, and hence a smaller might be preferred in order to preserve the label privacy. However, current FL privacy analyses typically focus on DP that provides a relatively loose upper bound on the total local privacy. Within the DP setting, studies typically consider a small number of clients and assume that each client obtains hundreds of samples, if not thousands of, to achieve improved DP performance. However, as we have observed above, a setting with higher values of can lead to higher label privacy loss (in addition to requiring more training epochs and achieving less validation accuracy). This leads to the important conclusion that the balance between different types of privacy leakage needs to be considered carefully.

To compare the DP performances of feature privacy leakage, we apply different noise levels on upload content of different frameworks to achieve the same level of privacy, and compare the validation accuracy at convergence with different in Fig. 12. In our experiments, we implement the moments accountant via Rényi Divergence-based DP accountant to get a tighter bound. With fixed , each plot shows the validation accuracy at convergence as a function of for given . We note that higher indicates weaker DP protection, lower noise level , and thus higher accuracy (closer to the original performance without noise). As shown in Fig. 11, label privacy is better preserved at , and we show the DP performance within the same setting in Fig. 12(a). While gradient-based FedAvg frameworks totally fail, FbFTL has good performance because it has much smaller data size and is more robust against noise, as it receives constant data instead of varying perturbation in each training iteration and converges to a sub-optimal model. Such advantage of FbFTL remains until increases to 100 as shown in Fig. 12(b), where FTL that updates the task-specific sub-model outperforms FbFTL at as they have the same data size while the noise level of FedAvg decreases as increases. However, the label privacy completely leaks for as we have seen in Fig. 11. FedAvg for only prevails in protecting the subset of feature privacy that is not mitigated via shuffling. In Fig. 12(c), we show the comparison for even larger , where all types of FedAvg perform better than FbFTL.

Note that it is extremely difficult to analytically evaluate the volume of feature privacy leakage that can be mitigated via shuffling. Howeover, we can compare the performances of FbFTL and FedAvg for a given via experimental results as done above. One key conclusion we have is the following. In terms of privacy preservation, FbFTL is preferred when is small (i.e., each client obtains a small set of samples), and gradient-based FedAvg is preferred when is large.

VI Conclusion

In this paper, we have presented a novel communication-efficient federated transfer learning method. In this proposed feature-based federated transfer learning (FbFTL), the features and outputs are uploaded rather than the gradient updates. We have provided a thorough description of the system design and the learning algorithm, and compared its theoretical payload with that of federated learning and federated transfer learning. Our results demonstrate substantial reductions in both uplink and downlink payload when using FbFTL. Via experiments, we have further shown the effectiveness of the proposed FbFTL by showing that FbFTL reduces the uplink payload by up to five orders of magnitude compared to that of existing methods. Subsequently, we have demonstrated that FbFTL with small batch size has significantly less packet loss rate than gradient-based frameworks, and illustrated its robustness against data insufficiency and quantization. Finally, we have considered different types of privacy leakage, and analyzed mitigation approaches. Specifically, we have first analyzed label privacy leakage with both statistical knowledge and query (resulting in access to the label outputs). We have identified a condition under which label privacy vanishes. We have also addressed feature privacy and considered a DP mechanism for preserving this privacy. We have shown that with small and shuffling that eliminates label privacy leakage, FbFTL also attains good differential feature privacy protection. These characterizations and results render FbFTL a communication-efficient, robust, and privacy-preserving novel federated transfer learning scheme.

References

- [1] J. Konečnỳ, H. B. McMahan, D. Ramage, and P. Richtárik, “Federated optimization: Distributed machine learning for on-device intelligence,” arXiv preprint arXiv:1610.02527, 2016.

- [2] P. Kairouz, H. B. McMahan, B. Avent, A. Bellet, M. Bennis, A. N. Bhagoji, K. Bonawitz, Z. Charles, G. Cormode, R. Cummings et al., “Advances and open problems in federated learning,” arXiv preprint arXiv:1912.04977, 2019.

- [3] J. Mills, J. Hu, and G. Min, “Communication-efficient federated learning for wireless edge intelligence in iot,” IEEE Internet of Things Journal, vol. 7, no. 7, pp. 5986–5994, 2019.

- [4] B. McMahan, E. Moore, D. Ramage, S. Hampson, and B. A. y Arcas, “Communication-efficient learning of deep networks from decentralized data,” in Artificial intelligence and statistics. PMLR, 2017, pp. 1273–1282.

- [5] Y. Cheng, J. Lu, D. Niyato, B. Lyu, J. Kang, and S. Zhu, “Federated transfer learning with client selection for intrusion detection in mobile edge computing,” IEEE Communications Letters, vol. 26, no. 3, pp. 552–556, 2022.

- [6] Q. Yang, Y. Liu, Y. Cheng, Y. Kang, T. Chen, and H. Yu, “Federated learning,” Synthesis Lectures on Artificial Intelligence and Machine Learning, vol. 13, no. 3, pp. 1–207, 2019.

- [7] K. Wei, J. Li, M. Ding, C. Ma, H. H. Yang, F. Farokhi, S. Jin, T. Q. Quek, and H. V. Poor, “Federated learning with differential privacy: Algorithms and performance analysis,” IEEE Transactions on Information Forensics and Security, vol. 15, pp. 3454–3469, 2020.

- [8] X. Yin, X. Yu, K. Sohn, X. Liu, and M. Chandraker, “Feature transfer learning for face recognition with under-represented data,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 5704–5713.

- [9] Y. Chen, X. Qin, J. Wang, C. Yu, and W. Gao, “Fedhealth: A federated transfer learning framework for wearable healthcare,” IEEE Intelligent Systems, vol. 35, no. 4, pp. 83–93, 2020.

- [10] C. Ju, D. Gao, R. Mane, B. Tan, Y. Liu, and C. Guan, “Federated transfer learning for eeg signal classification,” in 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC). IEEE, 2020, pp. 3040–3045.

- [11] H. Yang, H. He, W. Zhang, and X. Cao, “Fedsteg: A federated transfer learning framework for secure image steganalysis,” IEEE Transactions on Network Science and Engineering, 2020.

- [12] S. J. Pan and Q. Yang, “A survey on transfer learning,” IEEE Transactions on knowledge and data engineering, vol. 22, no. 10, pp. 1345–1359, 2009.

- [13] K. Weiss, T. M. Khoshgoftaar, and D. Wang, “A survey of transfer learning,” Journal of Big data, vol. 3, no. 1, pp. 1–40, 2016.

- [14] C. Wang and S. Mahadevan, “Heterogeneous domain adaptation using manifold alignment,” in Twenty-second international joint conference on artificial intelligence, 2011.

- [15] M. Kaya, G. Fidan, and I. H. Toroslu, “Transfer learning using twitter data for improving sentiment classification of turkish political news,” in Information sciences and systems 2013. Springer, 2013, pp. 139–148.

- [16] F. H. Khan, U. Qamar, and S. Bashir, “Enhanced cross-domain sentiment classification utilizing a multi-source transfer learning approach,” Soft Computing, vol. 23, no. 14, pp. 5431–5442, 2019.

- [17] L. Duan, D. Xu, and I. Tsang, “Learning with augmented features for heterogeneous domain adaptation,” arXiv preprint arXiv:1206.4660, 2012.

- [18] B. Kulis, K. Saenko, and T. Darrell, “What you saw is not what you get: Domain adaptation using asymmetric kernel transforms,” in CVPR 2011. IEEE, 2011, pp. 1785–1792.

- [19] Y. Zhu, Y. Chen, Z. Lu, S. J. Pan, G.-R. Xue, Y. Yu, and Q. Yang, “Heterogeneous transfer learning for image classification,” in Twenty-Fifth AAAI Conference on Artificial Intelligence, 2011.

- [20] M. Shaha and M. Pawar, “Transfer learning for image classification,” in 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA). IEEE, 2018, pp. 656–660.