Feasibility Analysis and Regularity Characterization

of Distributionally Robust Safe Stabilizing Controllers

Abstract

This paper studies the well-posedness and regularity of safe stabilizing optimization-based controllers for control-affine systems in the presence of model uncertainty. When the system dynamics contain unknown parameters, a finite set of samples can be used to formulate distributionally robust versions of control barrier function and control Lyapunov function constraints. Control synthesis with such distributionally robust constraints can be achieved by solving a (convex) second-order cone program (SOCP). We provide one necessary and two sufficient conditions to check the feasibility of such optimization problems, characterize their computational complexity and numerically show that they are significantly faster to check than direct use of SOCP solvers. Finally, we also analyze the regularity of the resulting control laws.

Safety-critical control, control barrier functions, distributionally robust control synthesis.

1 Introduction

Recent years have seen increasing deployment of control systems and robots to aid transportation, warehouse management, and home automation. In these applications, it is crucial to implement controllers with provable safety and stability guarantees despite uncertainty in the system models and operational conditions. Recent work [1, 2, 3, 4, 5, 6] tackles this when some prior information about the uncertainty is known. Instead, here we rely on a line of work initiated in [7] that circumvents the need for knowledge about the uncertainty distribution and uses only uncertainty samples to formulate distributionally robust constraints for control synthesis. This approach is robust to distributional shift at deployment time and enjoys provable out-of-sample performance. However, it also introduces several challenges, which we focus on here: the characterization of the quality and number of uncertainty samples needed to guarantee the feasibility of the safety and stability constraints, and the study of the regularity properties of the resulting controllers.

Literature Review

Control Lyapunov functions (CLFs) [8] are a well-established tool to design stabilizing controllers for nonlinear systems. More recently, control barrier functions (CBFs) [9] have gained popularity as a tool to render a desired subset of the system state space safe. If the system is control affine, CLF and CBF constraints are linear in the control input and can be incorporated in a quadratic program (QP) [10] that, if feasible, can be solved efficiently to obtain control inputs guaranteeing safety and stability. Recent work has explored alternative optimization formulations when the system model is uncertain. Under the assumption that the uncertainty follows a Gaussian Process (GP) or satisfies worst-case bounds,[2, 11, 12, 1, 3, 5] formulate second-order cone constraints that can be used to design controllers achieving safe stabilization of the true system. The paper [4] gives sufficient conditions for the feasibility of such second-order cone constraints. Our work here is closely related to [7], which leverages ideas from distributionally robust optimization (DRO) [13, 14] to model the uncertainty. The DRO framework constructs an ambiguity set of probability distributions that contains the true (unknown) one with high confidence. Such ambiguity sets are constructed with only finitely many samples and are used to formulate distributionally robust versions of the control design problem.

Statement of Contributions

We study the problem of safe stabilization of control-affine systems under uncertainty. We assume that the distribution of the uncertainty is unknown and formulate a second-order cone program (SOCP) using distributionally robust versions of the CLF and CBF constraints constructed on the basis of uncertainty samples. Our first contribution is the derivation of a necessary condition and two sufficient conditions for the feasibility of the optimization problem. We characterize the computational complexity of these conditions and show that, for a large number of samples, it is significantly smaller than solving the SOCP directly, which makes them useful to efficiently check whether the problem is feasible without having to solve it. Our first sufficient condition is dependent on the quality of the uncertainty samples but is limited to a single control objective. Our second sufficient condition is only dependent on the number of samples but can be used for any number of constraints. Our final contribution shows that the solution of this distributionally robust optimization problem is point-Lipschitz, and hence continuous, which means that solutions of the closed-loop system are guaranteed to exist and the controller obtained from it can be implemented without inducing chattering.

2 Preliminaries

We review distrib. robust chance-constrained programs and control Lyapunov and barrier functions under uncertainty.

2.1 Distributionally Robust Chance Constrained Programs

Given a random vector following distribution supported on set and a closed convex set , let define a probabilistic constraint . We are interested in satisfying this constraint with a prescribed confidence , with , while minimizing a convex objective function . To achieve this111We denote by , and ≥0 the set of positive integers, real, and nonnegative real numbers, resp. We denote by the -dimensional zero vector. We write for the boundary of the set . Given , we denote . Given , denotes the Euclidean norm of . For , we define . A function is of class if , is strictly increasing and . A function is positive definite if and for all , and proper in a set if is compact for any . Given an matrix and two integers such that , denotes the matrix obtained by selecting the rows from to of . A function is point-Lipschitz at a point if there exists a neighborhood of and a constant such that for all ., define the chance-constrained program:

| (1) | ||||

| s.t. |

The feasible set of (1) is not convex in general. Nemirosvski and Shapiro [15, Section 2] propose a convex approximation of the feasible set of (1) by replacing the chance constraint with a conditional value-at-risk () constraint. CVaR of can be formulated as the following convex program:

| (2) |

The resulting problem

| (3) | ||||

| s.t. |

is convex and its feasible set is contained in that of (1).

Both (1) and (3) assume that is known. Instead, suppose that it is unknown and we only have access to samples from . We describe a way of constructing a set of distributions that could have generated the samples. Let be the set of probability measures with finite -th moment supported on . Let be the empirical distribution constructed from the samples . Let be the -Wasserstein distance [14, Definition 3.1] between two probability measures in and let be the ball of radius centered at . We define a distributionally robust chance-constrained program:

| (4) | ||||

| s.t. |

We can use CVaR to obtain a convex conservative approximation of (4):

| (5) | ||||

| s.t. |

If (5) is feasible, then (4) is also feasible [15, Section 2].

We say that a distribution is light-tailed if there exists such that . If is light-tailed, the following observation specifies how the radius of should be selected so that the true distribution lies in the ball with high confidence.

Remark 2.1

(Choice of Wasserstein ball radius): If the true distribution is light-tailed, the choice of given in [14, Theorem 3.5],

| (6) |

where and are positive constants that only depend on , and (cf. [14, Theorem 3.4]), ensures that the ball contains with probability at least . Then, a solution of (5) satisfies the constraint with probability at least . Note that , and can be computed by knowing the class of distributions to which belongs to, without having actual knowledge of . If exact values are not known, but upper and lower bounds are, these can be used instead to compute an upper bound of .

Remark 2.2

(Choice of ): The parameter determines the confidence level for constraint satisfaction. Throughout the paper, we assume , albeit results are valid generally, with explicit expressions becoming more involved.

2.2 Distributionally Robust Safety and Stability

The notions of CLF [8] and CBF [9] can be used to design controllers in uncertainty-free systems that enforce stability and safety, resp. Here we extend these notions for systems with uncertainty in the dynamics. Consider a nominal model and a linear combination of perturbations,

| (7) |

where for , denotes known model perturbations, and denotes the corresponding unknown weight, and . We let . We assume that follows an unknown distribution but a set of samples is available. We are interested in extending the notions of CLF [8] and CBF [9] for systems of the form (7). To do so, note that as shown in [7, Section IV], the CBF condition for a system of the form (7) and a function reads as , where the exact forms of and are given in [7, Section IV] and depend on and its gradient. Now, since follows a distribution , we extend the definition of CBF by requiring that for all in the safe set, there exist such that

| (8) |

The CLF condition for (7) takes a similar form and is written as (cf. [7, Section IV]). As shown in Section 2.1, can be used as a convex approximation of (8) and its analogue with . We use

| (9a) | |||

| (9b) | |||

as the distributionally robust analogues of the CLF and CBF conditions from [8] and [9], resp. The existence of a controller satisfying (9) implies the existence of a controller that makes the CLC (resp. the CBC) condition hold at every point with probability at least , paving the way for the design of controllers that make the system stable (resp. safe) with arbitrarily high probability.

3 Problem Statement

Consider the system model in (7) with distributional uncertainty, meaning that the true distribution of the parameter is unknown. We assume that the system admits a CLF and a CBF, which allow us to formulate the constraints (9). Given a nominal controller specified by a smooth function , we would like to synthesize a controller closest to it that respects safety and stability constraints. Using (2), this problem can be written in general form as

| (10) | ||||

| s.t. |

where and each is an affine function in and , , for smooth functions and . With and constraints corresponding to and , this corresponds to a stable and safe control synthesis problem. The case with the constraint corresponds to a distributionally robust version of a safety filter of .

Although the constraints in (10) are convex, the program is intractable due to the search of suprema over the Wasserstein set. Fortunately, [7, Proposition IV.1] shows that when and , the following SOCP is equivalent to (10):

| (11a) | ||||

| s.t. | (11b) | |||

| (11c) | ||||

| (11d) | ||||

| (11e) | ||||

We refer to (11) as the DRO-SOCP and take and Wasserstein distance throughout the paper.

A critical observation about problem (11) is that, in general, it might be infeasible, leading to controllers that are undefined.

Furthermore, even if the problem is feasible, the controller obtained from it might not be continuous, hence resulting in implementation problems (it might induce chattering behavior when implemented on physical systems) and theoretical problems (lack of existence of solutions of the closed-loop system). Hence, our goal in this paper is twofold. First, we derive conditions to ensure the feasibility of (11). Given the complexity of obtaining characterizations for the feasibility of such problems, we focus on identifying conditions that are easy to evaluate computationally as opposed to directly attempting to solve the optimization problem: either sufficient conditions, to quickly ensure feasibility, or necessary, to quickly discard it. Second, assuming that the problem (11) is feasible, we characterize the regularity properties of the resulting controller.

4 Feasibility Analysis

In this section, we study the feasibility properties of (11). We start by giving a necessary condition for its feasibility.

Proposition 4.1

(Necessary condition for feasibility of DRO-SOCP): Let and . For , let

for and . Let be the minimum eigenvalue of and suppose is invertible for all . If (11) is feasible, then for each , there exists such that is not positive definite and one of the following holds:

-

(i)

,

-

(ii)

and ,

-

(iii)

, and .

Proof 4.2.

Note that (10) (and hence (11)) is equivalent to

| (12) | ||||

for , cf. [7, Proposition IV.1]. For , the function is a piecewise linear function in . Since , it is decreasing for and increasing for . Hence, it achieves its minimum at . Thus, (11) is feasible if and only if for all the following inequalities are simultaneously feasible:

| (13) |

Note that, if for some , the constraint is infeasible for all , then (11) is infeasible. Note that this is only a sufficient, but not necessary, condition for infeasibility (or equivalently, a necessary, but not sufficient, condition for feasibility). The result follows from [2, Theorem 2], which characterizes the feasibility of a single second-order cone constraint.

Next, we state a sufficient condition for the feasibility of (11) in the case .

Proposition 4.3.

(Sufficient condition for feasibility of DRO-SOCP with one constraint): Let , , and . Given , define

Let be the minimum eigenvalue of . Suppose that is invertible, is not positive definite and one of the following holds:

-

(i)

,

-

(ii)

and ,

-

(iii)

and .

Then, (11) is feasible at .

Proof 4.4.

By repeating an argument similar to the one in the proof of Proposition 4.1, (11) is feasible in the case if and only if the following inequality is feasible:

| (14) |

Using the Cauchy-Schwartz inequality, the following inequality being feasible implies that (14) is feasible,

| (15) |

If (15) is feasible, there exists such that for all , and thus satisfies (14). The result follows by [2, Thm. 2].

Remark 4.5.

(More data leads to better feasibility guarantees): For a fixed , the addition of new data points (larger ) implies that there are more chances that either of (i)-(iii) in Proposition 4.1 are satisfied for each . Moreover, if is light-tailed, decreases with . The choice means that for each fixed and , the feasible set of the inequality increases, which from the proof of Proposition 4.1, also means that there are more chances that either of (i)-(iii) are met. Similarly, under the assumption that the norm of additional samples is upper bounded by , the choice also leads to a larger feasible set of (15) and thus the sufficient condition in Proposition 4.3 has more chances of being satisfied.

We next give a sufficient condition for the feasibility of (11) with high probability for an arbitrary number of constraints.

Proposition 4.6.

(Sufficient condition for feasibility of DRO-SOCP): Let , and . Suppose that there exists a controller and non-negative functions for satisfying

| (16) |

Moreover, suppose that is light-tailed and let be defined as in (6). Let be such that for all , and let be an upper bound on the norm of . Then, if

| (17) |

(11) is strictly feasible at with probability at least for any .

Proof 4.7.

Note that by definition, the first component of is for all . Hence, for all so (17) is well-defined. Let be such that

and define . Note that for any ,

| (18) |

where we have used the fact that the operator is Lipschitz with constant 1. Using (18) in [14, Theorem 3.2], we conclude that for any , .

From (17), together with the fact that contains with probability at least , cf. Remark 2.1, and since the maximum Wasserstein distance between two distributions in is , with probability at least ,

| (19) |

for any . By definition of , cf. (2), for any , . Combining this with (19) and (16), we get that with probability at least , for all . This argument holds for , implying that is strictly feasible for (10) (and hence, (11)) with probability at least for any .

Remark 4.8.

(Dependency of sufficient condition on slack terms): Condition (16) on the controller guarantees the satisfaction of the constraints in (10) with a slack term on the righthand side. Larger values of these slack terms mean that fewer samples are needed to satisfy (17). Moreover, for the constraints in (9), [4, Remark 2.3] shows how to obtain such functions , even without knowledge of .

Remark 4.9.

(Applicability of the sufficient condition): Checking condition (17) does not require precise knowledge of , just an upper bound of its norm. In particular, if bounds on the control norm are included as constraints in (11), those can be used to construct . Moreover, unlike Proposition 4.3, condition (17) is agnostic to the samples and instead solely depends on its number . Note that for each with for all , if for all , there exists such that condition (17) holds for all . This is because is decreasing in and . The value is state-dependent, larger for smaller values of , and larger values of .

Remark 4.10.

(Checking for (in)feasibility efficiently): A commonly used algorithm for solving SOCPs is the method in [16]. For an SOCP with constraints and optimization variable of dimension , it requires solving linear systems of dimension , and hence has complexity , cf. [17]. Therefore, (11) has complexity . Instead, since checking the positive definiteness of a symmetric matrix of dimension can be done by checking if its Cholesky factorization exists (which has complexity ), the complexity of checking the condition in Proposition 4.1 is . Hence, for large , it is much more efficient than solving the SOCP (11) directly. We also note that the scaling in for the complexity of the SOCP solver is more favorable than that of checking the necessary condition. On the other hand, the complexity of checking the sufficient condition in Proposition 4.3 reduces to finding a maximum of numbers (which has complexity linear in ) and checking the positive definiteness of two symmetric matrices of dimension and , resp. Hence, its complexity is , which is also more efficient than solving the SOCP. Finally, note that the complexity of checking the conditions in Proposition 4.6 is constant in and , and is linear in due to the minimum in (17). Table 1 summarizes this complexity analysis.

| Method | Necessary/Sufficient | Complexity | |

|---|---|---|---|

| Prop. 4.1 | Necessary | any | |

| Prop. 4.3 | Sufficient | ||

| Prop. 4.6 | Sufficient | any | |

| SOCP solver | Necessary and sufficient | any |

Proposition 4.1 provides necessary conditions for feasibility. If the conditions are not met, it is reasonable to gather more data for verifying feasibility without having to directly solve the program. Moreover, if the conditions in Propositions 4.3 and 4.6 are not met (which does not mean that (11) is not feasible), this might be an indication that more data is needed to certify feasibility, cf. Remarks 4.5 and 4.9.

5 Regularity Analysis

In this section, we show that the controller obtained by solving (11) is point-Lipschitz.

Proposition 5.1.

Proof 5.2.

We first show the result for . Let , note that the set is dependent on , but we omit this dependency to simplify the notation. Note also that since is continuous in and for all , there exists a neighborhood of such that for all , there exists such that . Recall from the proof of Proposition 4.1 that, for any , the function attains its minimum at . Therefore, for , .

For each , let be defined as:

| (20) | ||||

Note that since (10) is strictly feasible at , there exists such that . By continuity of and in , there exists a neighborhood of such that for all and . This implies that (20) is strictly feasible for any . Hence, by [4, Proposition 5.4], is point-Lipschitz at for each . Now, since for all there exists such that , and , it follows for some . Now, by taking , it follows that for all and hence is point-Lipschitz at . The argument if is analogous, defining a set similar to for each .

6 Simulations

In this section, we evaluate our results in a ground-robot navigation example. We model the robot motion using unicycle kinematics and take a small distance off the wheel axis, cf.[18] to obtain a relative-degree-one model:

where , are the linear and angular velocity, and

represent the model perturbations in the drift, angular velocity, and orientation. We consider uncertainty samples: , , and , where , , denote normal, uniform, and beta distributions, resp. The optimization programs are solved using the Embedded Conic Solver in CVXPY [19] with an Intel i7 9700K CPU.

We first consider the problem of stabilizing the uncertain unicycle system to a goal position with initial state , so we take in (10). At the initial state, the robot is assumed to have samples and initial Wasserstein radius with risk tolerance . As the robot moves, each unsuccessful solver attempt prompts the collection of additional samples, and a corresponding reduction in the ambiguity radius as prescribed by (6). In all the figures presented, the -axis represents the simulation timestep, where each timestep is equivalent to seconds, and the -axis denotes the time spent for carrying out the necessary and sufficient condition checks, as well as for running the solver at each timestep.

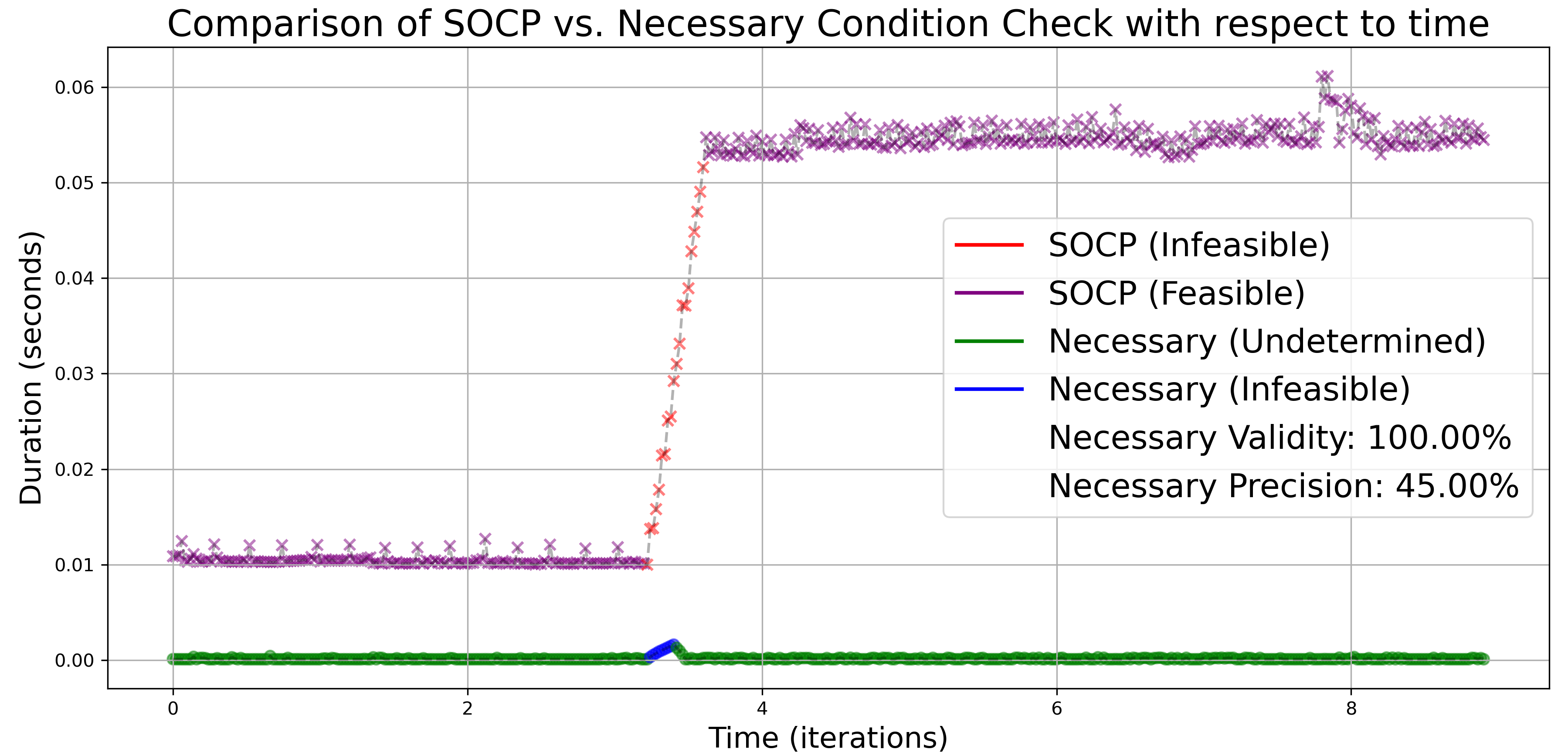

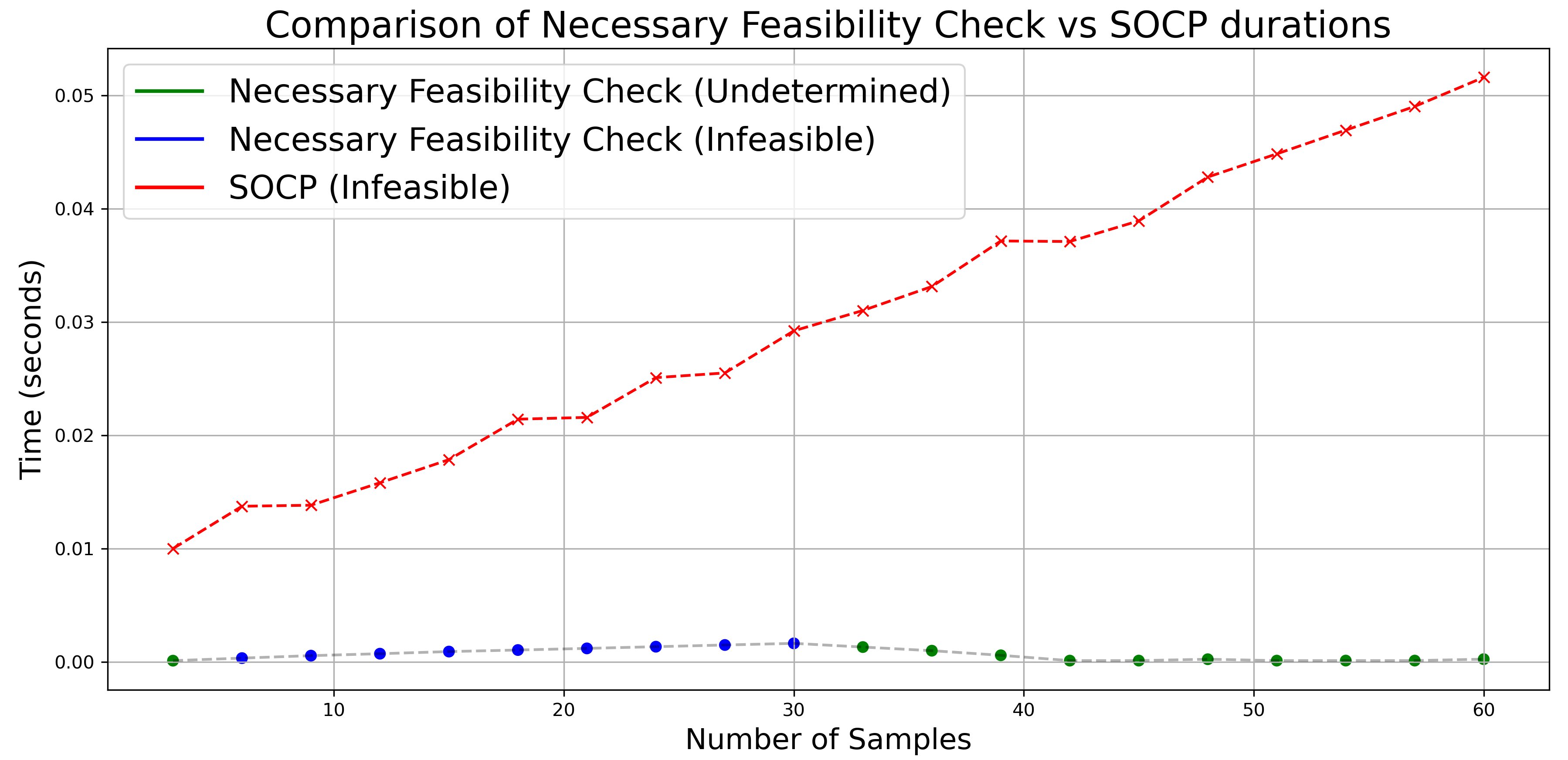

The time complexity, validity, and precision of Proposition 4.1 are explored in Fig. 1(a) and Fig. 1(b). Fig. 1(a) compares the time complexity of checking the necessary condition in Proposition 4.1 and of solving the corresponding SOCP along the whole robot trajectory. Notably, the SOCP becomes infeasible at around s and more uncertainty samples are given until feasibility is regained. As expected, when Proposition 4.1 predicts the program is infeasible, such inference is consistently mirrored by the solver. Fig. 1(b) specifically emphasizes the time complexity during data collection stages. As the number of samples increases, the SOCP’s time complexity escalates at a much faster rate than the necessary condition verification, in agreement with Remark 4.10.

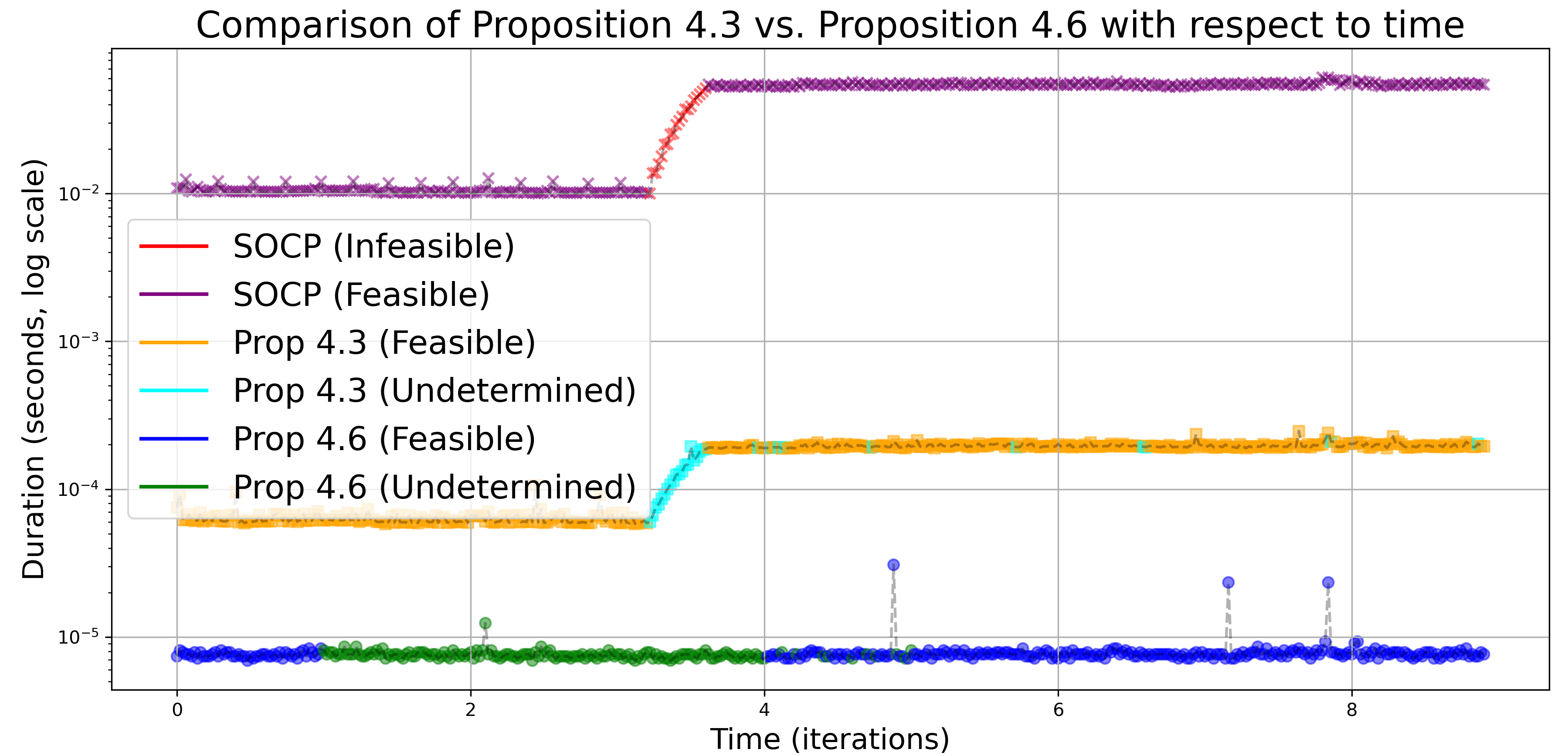

Fig. 1(c) compares the time complexity of solving the SOCP and of checking the sufficient conditions in Propositions 4.3 and 4.6. As expected, feasibility validation by either result ensures the actual feasibility of the program by the solver. Checking Proposition 4.3 is more time-consuming than checking Proposition 4.6, cf. Remark 4.10, but has greater accuracy in validating feasibility. Notably, both checks are significantly more efficient than solving the SOCP problem.

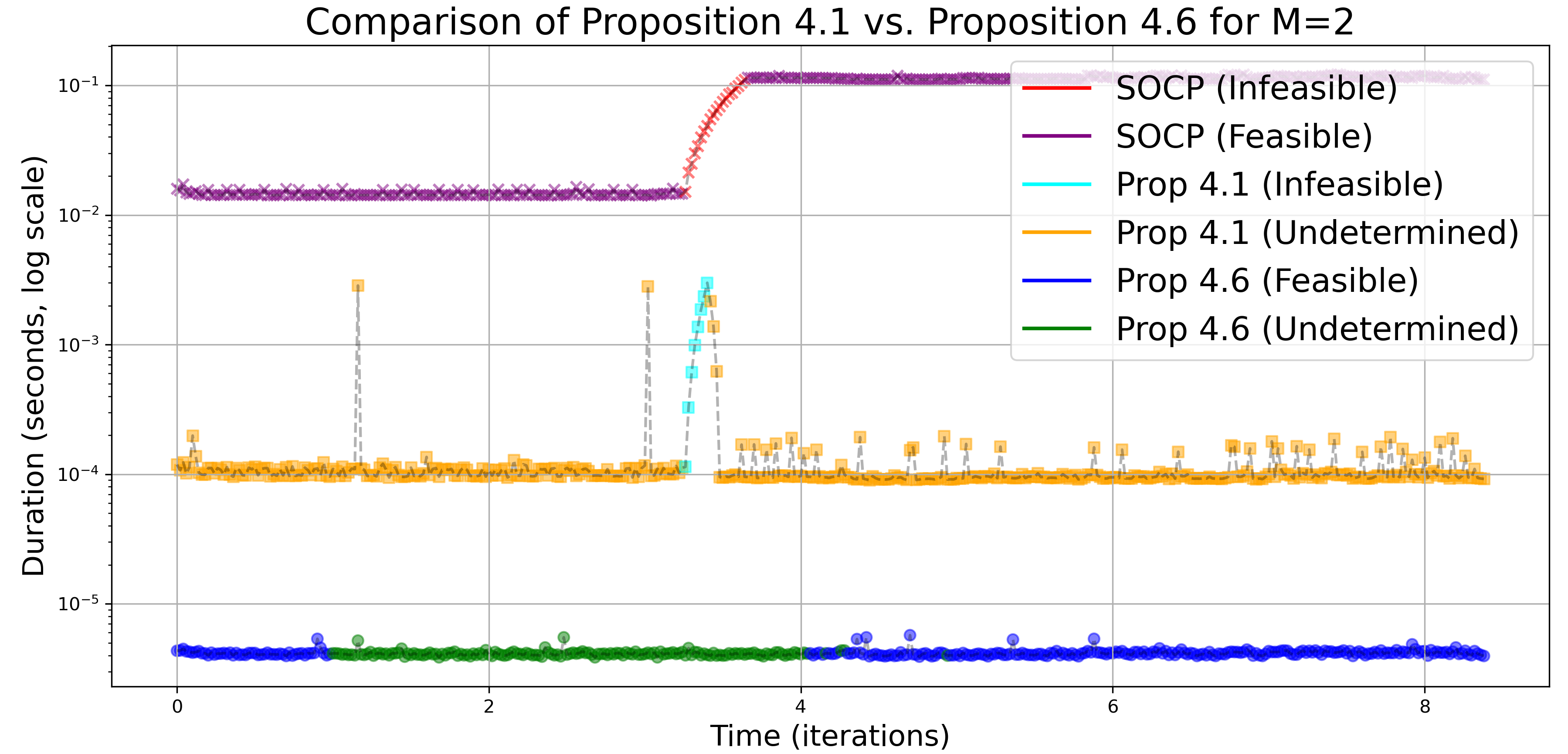

We also consider the safe stabilizing problem of the unicycle system. The stabilization goal is while the safety goal is to avoid a circular obstacle centered at with radius . Fig. 2 compares the time complexity and conservativeness of Proposition 4.1 and Proposition 4.6 for the case in (10). Proposition 4.1 is valid and requires significantly less time than solving the SOCP, while Proposition 4.6 is also valid and even more efficient.

7 Conclusions

We studied the feasibility of SOCP problems whose solution provide safe stabilizing controllers for uncertain systems with no prior knowledge of the uncertainty distribution and only a finite number of samples available. We provided a necessary condition and two sufficient conditions to check feasibility, characterized their computational complexity, and showed through simulations their usefulness in practical scenarios. We also showed that the controller obtained by solving the SOCP is point-Lipschitz under fairly general conditions. Future work will consider leveraging the identified feasibility conditions to guide online data-gathering policies that aim to reduce uncertainty about the system dynamics.

References

- [1] K. Long, V. Dhiman, M. Leok, J. Cortés, and N. Atanasov, “Safe control synthesis with uncertain dynamics and constraints,” IEEE Robotics and Automation Letters, vol. 7, no. 3, pp. 7295–7302, 2022.

- [2] F. Castañeda, J. J. Choi, B. Zhang, C. J. Tomlin, and K. Sreenath, “Pointwise feasibility of Gaussian process-based safety-critical control under model uncertainty,” in IEEE Conference on Decision and Control, Austin, Texas, USA, 2021, pp. 6762–6769.

- [3] A. J. Taylor, V. D. Dorobantu, S. Dean, B. Recht, Y. Yue, and A. D. Ames, “Towards robust data driven control synthesis for nonlinear systems with actuation uncertainty,” in IEEE Conf. on Decision and Control, Austin, Texas, USA, 2021, pp. 6469–6476.

- [4] P. Mestres and J. Cortés, “Feasibility and regularity analysis of safe stabilizing controllers under uncertainty,” Automatica, 2023, submitted.

- [5] A. Lederer, A. Begzadic, N. Das, and S. Hirche, “Safe learning-based control of elastic joint robots via control barrier functions,” arXiv 2212.00478, 2023.

- [6] P. Seiler, M. Jankovic, and E. Hellstrom, “Control barrier functions with unmodeled input dynamics using integral quadratic constraints,” IEEE Control Systems Letters, vol. 6, pp. 1664–1669, 2022.

- [7] K. Long, Y. Yi, J. Cortés, and N. Atanasov, “Safe and stable control synthesis for uncertain system models via distributionally robust optimization,” in American Control Conference, Jun. 2023, pp. 4651–4658.

- [8] E. D. Sontag, Mathematical Control Theory: Deterministic Finite Dimensional Systems, 2nd ed., ser. TAM. Springer, 1998, vol. 6.

- [9] A. D. Ames, S. Coogan, M. Egerstedt, G. Notomista, K. Sreenath, and P. Tabuada, “Control barrier functions: theory and applications,” in European Control Conference, Naples, Italy, Jun. 2019, pp. 3420–3431.

- [10] A. D. Ames, X. Xu, J. W. Grizzle, and P. Tabuada, “Control barrier function based quadratic programs for safety critical systems,” IEEE Transactions on Automatic Control, vol. 62, no. 8, pp. 3861–3876, 2017.

- [11] F. Castañeda, J. J. Choi, B. Zhang, C. J. Tomlin, and K. Sreenath, “Gaussian process-based min-norm stabilizing controller for control-affine systems with uncertain input effects and dynamics,” in American Control Conference, New Orleans, LA, May 2021, pp. 3683–3690.

- [12] K. Long, C. Qian, J. Cortés, and N. Atanasov, “Learning barrier functions with memory for robust safe navigation,” IEEE Robotics and Automation Letters, vol. 6, no. 3, pp. 4931–4938, 2021.

- [13] A. Ben-Tal, L. E. Ghaoui, and A. Nemirovski, Robust Optimization, ser. Applied Mathematics Series. Princeton University Press, 2009.

- [14] P. M. Esfahani and D. Kuhn, “Data-driven distributionally robust optimization using the Wasserstein metric: performance guarantees and tractable reformulations,” Mathematical Programming, vol. 171, no. 1-2, pp. 115–166, 2018.

- [15] A. Nemirovski and A. Shapiro, “Convex approximations of chance constrained programs,” SIAM Journal on Optimization, vol. 17, no. 4, pp. 969–996, 2006.

- [16] M. S. Lobo, L. Vandenberghe, S. Boyd, and H. Levret, “Applications of second-order cone programming,” Linear algebra and its applications, vol. 284, pp. 193–228, 1997.

- [17] A.-L. Cholesky, “Sur la résolution numerique des systèmes d’équations linéaires,” Bulletin de la société des amis de la bibliothèque de l’École polytechnique, vol. 39, 2005.

- [18] J. Cortés and M. Egerstedt, “Coordinated control of multi-robot systems: A survey,” SICE Journal of Control, Measurement, and System Integration, vol. 10, no. 6, pp. 495–503, 2017.

- [19] S. Diamond and S. Boyd, “CVXPY: A Python-embedded modeling language for convex optimization,” Journal of Machine Learning Research, 2016.