FCCDN: Feature Constraint Network for VHR Image Change Detection

Abstract

This is a preprint version of a paper submitted to ISPRS Journal of Photogrammetry and Remote Sensing.

Change detection is the process of identifying pixelwise differences in bitemporal co-registered images. It is of great significance to Earth observations. Recently, with the emergence of deep learning (DL), the power and feasibility of deep convolutional neural network (CNN)-based methods have been shown in the field of change detection. However, there is still a lack of effective supervision for change feature learning. In this work, a feature constraint change detection network (FCCDN) is proposed. We constrain features both in bitemporal feature extraction and feature fusion. More specifically, we propose a dual encoder-decoder network backbone for the change detection task. At the center of the backbone, we design a nonlocal feature pyramid network to extract and fuse multiscale features. To fuse bitemporal features in a robust way, we build a dense connection-based feature fusion module. Moreover, a self-supervised learning-based strategy is proposed to constrain feature learning. Based on FCCDN, we achieve state-of-the-art performance on two building change detection datasets (LEVIR-CD and WHU). On the LEVIR-CD dataset, we achieve an IoU of an 0.8569 and an F1 score of 0.9229. On the WHU dataset, we achieve an IoU of 0.8820 and an F1 score of 0.9373. Moreover, for the first time, the acquisition of accurate bitemporal semantic segmentation results is achieved without using semantic segmentation labels. This is vital for the application of change detection because it saves the cost of labeling.

keywords:

change detection , deep learning , feature constraint1 Introduction

Change detection is the process of identifying differences in the state of an object or phenomenon by observing it at different times [1]. Since the dynamic monitoring of ground objects is crucial for remote sensing applications, change detection has been the focus and a challenge of remote sensing for a long time. The overall workflow of change detection consists of data acquisition, data preprocessing, a change detection algorithm, and an accuracy evaluation. Traditional change detection algorithms can be divided into algebra-based methods, transformation-based methods, classification-based methods, and clustering-based methods [2]. Algebra-based methods, including image differing methods [3], image rationing methods[4], and change vector analysis (CVA) [5], often extract changing information by algebraic operations on the corresponding pixels of bitemporal data. Transformation-based methods detect changes by transforming coregistered images into the feature space. The commonly used transforms include principal component analysis (PCA) [6] and tasseled cap transformation (KT) [7]. Classification-based change detection algorithms obtain a changing area by using the classification results and include postclassification comparisons [8] and the direct classification of bitemporal data[9]. Clustering-based algorithms generate change maps by clustering bitemporal data into a changed area and an unchanged area. Commonly used clustering algorithms include K-means [10] and fuzzy c-means (FCM) [11]. Although most of the traditional methods are simple and very computationally efficient, their robustness is poor, and their accuracy is not guaranteed.

Deep learning (DL) allows computational models that are composed of multiple processing layers to learn representations of data with multiple levels of abstraction [12]. In recent years, with the development of computing power, the accumulation of data, and proposals of algorithms for mining big data, DL has achieved breakthroughs in many fields. Additionally, remote sensing has entered the era of big data [13] and has met the high data requirements of DL. Since massive remote sensing data are available, DL can be used to extract useful features and make correct decisions through a large number of remote sensing images, which allows DL-based methods to outperform traditional methods in many remote sensing applications. For the change detection task, a large number of DL change detection algorithms have also been proposed [14, 15, 16, 17, 2, 18, 19, 20]. Early change detection networks are classification networks that input small image patches and output the corresponding categories [21, 22]. With the emergence of fully convolutional networks [23], fully convolutional change detection networks have become the preferred architecture [24, 14, 16, 18, 19, 20, 25]. Compared with traditional change detection methods, a DL-based algorithm has more hyperparameters, stronger robustness to the input data, and a better generalization ability.

DL architectures make decisions based on the features they learn. Hence, feature learning plays a decisive role in network performance. Currently, Siamese networks with dual encoders and single decoders have been the preferred architecture for very high resolution (VHR) image change detection. They can constrain feature learning by sharing the weights of the dual encoder. Although many Siamese networks have been proposed [24, 14, 16, 26, 20, 25], there is still a lack of work on constrained feature learning. The shortcomings can be summarized as follows: 1) Most existing networks focus on the process of extracting and fusing bitemporal features. They tend to ignore some implicit constraints of the change detection task, such as the relationship between bitemporal features. 2) Most existing methods generate change features by fusing encoder features [[14, 27, 25]. These low-level features may cause noise inference [17]. 3) There is still a lack of an effective solution for the feature misalignment among bitemporal features, especially when the unchanged objects in bitemporal images are very different in the feature space. Therefore, it is still challenging for DL algorithms to extract and fuse bitemporal features correctly.

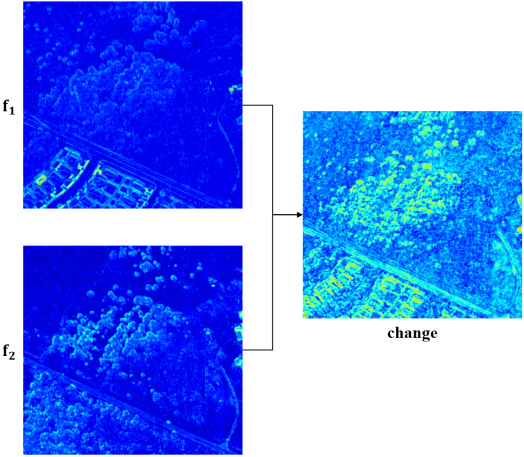

Humans can identify changing areas on the basis of understanding the categories of objects in bitemporal images. We can first extract features and classify them into different categories. The category of objects in bitemporal images can help us identify changes. For change detection tasks, extracting the target object features correctly and maintaining a good separation between the target object features and the background features can help the networks perform better. Ideally, we can obtain change information by directly comparing these features (as shown in Figure 1). However, the task of change detection for remote sensing images is extremely complex. On the one hand, the objects in remote sensing images are complex and diverse. It is not easy to distinguish the target objects from the background objects. On the other hand, there are many unconcerned differences among bitemporal data, such as seasonal changes in vegetation and building offsets caused by different viewing angles. These unconcerned differences may result in pseudochanges in the final results.

In this work, we propose a change detection algorithm based on feature constraints. Our work’s critical point is to extract the target object features correctly, suppress the background object features, and fuse the bitemporal features more reasonably. We carry out our work from four aspects: a network backbone, multiscale feature extraction and fusion, bitemporal feature fusion, and feature constraints based on self-supervised learning (SSL). Our algorithm is very intuitive and effective, and the performance is very satisfactory.

In summary, the contributions of this work are as follows:

-

1.

We propose a dual encoder-decoder (DED) backbone for change detection (Section 3.2). Unlike existing works, features from the decoder instead of the encoder are used to calculate change information. In this way, considerable background noise is suppressed and better change features are generated..

-

2.

We design a nonlocal feature pyramid network (NL-FPN) in the center of the backbone to enhance the extraction and fusion of multiscale features (Section 3.3).

-

3.

We design a dense connection-based feature fusion module (DFM) to fuse bitemporal features (Section 3.4). We use two densely connected branches to fuse bitemporal features by ensembling multistage features.

-

4.

We introduce a novel but straightforward SSL task to constrain feature extraction (Section 3.5). The SSL task works by using additional loss on the changed area and unchanged area.

-

5.

We obtain the semantic segmentation results of bitemporal images without semantic segmentation labels on most change detection tasks. The acquisition of the semantic segmentation results is vital for remote sensing because it saves much work with respect to labeling.

We validate our work on two building change detection datasets: LEVIR-CD [28] and WHU [29]. The experiments show that our algorithm has obvious advantages over the latest methods. Moreover, the acquisition of the bitemporal building extraction results highlights the significance of our work.

The rest of this paper is organized as follows. Section 2 reviews the related work. Section 3 introduces our algorithm in detail. Section 4 shows our experiments and performance. Section 5 discusses the application of the proposed method to multiclass change detection tasks and compares SSL to contrastive loss. Finally, we conclude our work in Section 6.

2 Related work

2.1 Fully convolutional networks for change detection

FCN is an end-to-end architecture and can make full use of semantic information to obtain pixelwise results. It is naturally suitable for change detection on high-resolution optical images. FCN was first used in street view change detection tasks [24]. Rodrigo et al. [14] were some of the first researchers to fit this structure into remote sensing image change detection. They presented three FCNs for change detection tasks and achieved state-of-the-art results on several datasets.

In recent years, many change detection networks based on FCN have been proposed. FCN-based change detection architectures can be roughly divided into single-stream networks [15, 24, 30, 18, 31] and double-stream networks [16, 27, 26, 17, 25, 20].

Single-stream networks are usually semantic segmentation networks that take concatenated or differential images of two bitemporal images as input. Peng et al. [15] use a single-path network to obtain change mask. They first concatenated the coregistered image pairs at the channel dimension. Then, the new multispectral data were fed into a modified UNet++ [32]. Liu et al. [31] inputted concatenated images to a modified UNet [33], which was built with depthwise separable convolution. Zheng et al. [18] proposed a cross-layer convolutional neural network for change detection tasks under the structure of UNet. They designed a cross-layer block to aggregate the multiscale features and multilevel context information.

Double-stream networks are commonly made of two weight-sharing feature extraction streams that directly take bitemporal images as the input. Compared with single-stream backbones, most recent works prefer a double-stream network, owing to the Siamese structure and weight-sharing strategy. Zhang et al. [16] proposed a fully convolutional two-stream network in which bitemporal features are extracted in a Siamese manner. Then, the paired features are sent to a feature fusion subnetwork to reconstruct the change map. Zhang et al. [20] proposed a Siamese network for change detection tasks with a hierarchical fusion strategy. Bitemporal features are hierarchically fused with concatenating options. Fang et al. [25] extracted bitemporal features with a dual encoder. The bitemporal features are fed into UNet++ to generate change masks. Although bitemporal features are generated by a weight-sharing encoder, features of unchanged objects may vary greatly in bitemporal feature maps. Therefore, how to fuse these features effectively remains a problem. Some researchers fused bitemporal features by concatenation or difference [14, 27, 20, 25], while others sought better methods. Chen et al. [34] proposed a dual attention mechanism that can capture long-range dependencies in feature pairs. The dual attention mechanism is applied before feature fusion to obtain more discriminant feature representations. However, the attention module is only applied to the last stage features of the weight-shared backbone. Since the features are downsampled eight times at the last stage, their method may lose much spatial information. Zhang et al. [16] introduced an attention module to effectively fuse features in different domains. The attention module they built is a combination of a channel attention module and a spatial attention module. Diakogiannis et al. [26] designed a new attention module to calculate change features. The module uses the fractal Tanimoto similarity to compare queries with keys inside the attention module. Zhang et al. [17] proposed an object-level change detection network with a dual correlation attention-guided detector. They built a correlation attention-guided feature fusion neck that uses a position-correlated attention module and channel-correlated attention module to guide the generation of change features. These methods use a series of attention modules that fuse bitemporal features hierarchically. Although they can remarkably boost the performance of networks, most attention-based fusion methods introduce a large amount of computation and a large number of parameters. Moreover, there still exist several issues that need to be considered. On the one hand, most existing works fuse shallow features to obtain the change information, which may introduce considerable noise in change maps [17]. On the other hand, existing change detection networks mainly rely on the supervision of labels. They tend to ignore the relationship among bitemporal features. Inspired by the above issues, a feature constraint architecture for change detection tasks is proposed in this literature.

2.2 Self-supervised learning

SSL is a subset unsupervised learning method that can learn general image features without using any human-annotated labels [35]. It is widely used in the computer vision field. Unlike other unsupervised learning methods, SSL trains with annotations, which are obtained by mining the internal information of images through certain pretext tasks. There have been a variety of pretext tasks for SSL, such as colorizing grayscale images [36], image inpainting [37], image rotation [38], and image jigsaw puzzles [39].

Recently, several researchers in the field of remote sensing have carried out their research with SSL. Tao et al. [40] introduced a new instance discrimination-based SSL mechanism to pretrain a feature exactor for remote sensing scene classification tasks. They also investigated the impacts of several factors in the SSL-based classification task. Vincenzi et al. [41] took advantage of SSL and trained a promising feature extractor for the downstream landcover classification task. Their pretext task was to reconstruct the visible colors with high-dimensionality spectral bands. For change detection, Dong et al. [42] an SSL-based change detection algorithm. They built a network to identify different sample patches between two temporal images, namely, temporal prediction. With this pretext task, the network can encode input images to more consistent feature representations, which can be used to generate binary change maps by clustering. Chen et al. [43] proposed an SSL-based change detection approach based on an unlabeled multiview setting. They used SSL by pretraining a Siamese network with a contrastive loss for heterogeneous images and a regression loss for homogeneous images. Although these change detection tasks benefit from the SSL-based strategy, SSL is only used as a pretraining strategy. There is still a lack of research on the introduction of an SSL strategy to change detection tasks.

3 Methodology

In this section, we introduce the architecture of the proposed method in detail. The overall structure of FCCDN is presented in Section 3.1. More specifically, we propose a novel DED backbone for the change detection task (Section 3.2), an NL-FPN module for multiscale feature extraction (Section 3.3), a DFM block for generating change features (Section 3.4), and a feature constraint strategy based on SSL (Section 3.5).

3.1 Overall structure of proposed network architecture

The overall structure of the proposed feature constraint change detection network (FCCDN) is shown in Figure 2. FCCDN uses a DED backbone (Figure 3). At the center of DED, an NL-FPN (Figure 5) is proposed to enhance multiscale features in a nonlocal way. At the decoding stage, a series of DFMs (Figure 6) are applied to fuse bitemporal features and generate change features. Then, the change features are hierarchically fed to a change decoder to obtain the final change map. FCCDN contains three output branches: one change branch and two segmentation branches Figure 8). The change branch produces the change confidence, which is used to calculate the change loss with labels in the training process. The two segmentation branches output segmentation scores and are supervised by the SSL-based strategy.

3.2 Dual encoder-decoder network

Since bitemporal data are usually used in change detection tasks, a fully convolutional Siamese (FCS) network has been a common structure for change detection in recent years. Conventional FCS (also referred to as FCS) generally uses a dual encoder with weight sharing to extract bitemporal features. After the encoding process, a series of feature fusion blocks are applied to obtain change features based on the extracted features. At the decoding stage, a single-stream change decoder is used to combine the change features mentioned above in a similar way as UNet [33].

Figure 3 shows the overall structure of our baseline FCS. We build the dual encoder with a series of SE-ResNet modules (Figure 3) [44], which uses the squeeze and excitation (SE) block (Figure 3) in the residual branch. The SE block uses global average pooling (GAP) to aggregate the feature maps across spatial dimensions and captures channelwise feature responses. After GAP, two fully connected (FC) layers are applied to learn a weight vector for each channel. The vector is then normalized and multiplied by the original feature. The dual encoder is built with four SE-ResNet modules. The output of the four modules are features with sizes of (h/2, w/2), (h/4, w/4), (h/8, w/8), and (h/16, w/16) (h represents the height of the input image and w represents the width of the input image). The encoder features are then fed to four feature fusion modules to fuse bitemporal features and generate four change features. In the decoding process, several change decoder blocks are employed to fuse features generated by bitemporal feature fusion blocks hierarchically. Since the bitemporal feature fusion block and the change decoder block are both aimed at fusing features, we build them with the same module, as shown in Figure 3. This fusion module is a combination of an upsampling layer (if necessary), a concatenate operation, a convolution layer with kernel size = 3, a batch normalization (BN) layer [45], and a rectified linear unit (ReLU) function. The output of the last change decoder block (C-block3) is then fed into a simple segmentation head, which is meant to reduce the channels and resize the change mask to the input size.

The conventional FCS network uses low-level features in the dual encoder to calculate the change features. However, low-level features pay more attention to general information, which contains much information that is useless for change detection [17]. We validate this conjecture with the change detection task on buildings and visualize some features in Figure 4. The feature maps are visualized in the form of heatmaps with Grad-CAM [46]. In the heatmap, the warmer the color is, the more attention the network pays to that area. That is, the degree of warmth represents the contribution of each pixel. As shown in Figure 4, the low-level features ( and ) from the dual encoder contain not only the edges of buildings but also the edges of background objects. In this case, there is much noise in the change features, which is not conducive to the change detection task.

In this paper, we propose a novel DED neural network backbone for change detection tasks (see Figure 3). This architecture has the same dual encoder and change decoder as FCS. Nevertheless, unlike FCS, DED has an extra dual decoder with weight sharing, which is used to filter out invalid information and reconstruct useful information. The dual decoder is also built with the feature fusion block shown in Figure 3. Change features are generated by fusing the corresponding features from the dual decoder. Then, these change features are fed into a change decoder in the same way as in FCS. The bitemporal features reconstructed by the dual decoder are shown in Figure 4. Compared with the bitemporal features from the dual encoder of FCS, the bitemporal features from the dual decoder can better highlight the borders of buildings and suppress background features. Therefore, the change features generated by DED are more accurate.

3.3 Non-local feature pyramid network

It is well known that ground objects vary on different scales. Therefore, it is necessary to extract and fuse features at multiple scales and with large receptive fields. Some researchers try to tackle the above issue with pyramid pooling (PSP) [47, 48]] or atrous spatial pyramid pooling (ASPP) [49]. However, these methods use large-scale pooling or convolution layers with a large dilate rate, resulting in the absence of local information [50]. In addition, there are also some solutions based on a feature pyramid network (FPN) [51, 52], which can effectively fuse multiscale features. However, conventional FPN methods cannot obtain long-range dependencies, which makes it hard to extract large object features.

Here, we propose an NL-FPN module to extract and fuse features in a nonlocal way. The module is added to the center of FCCDN (Figure 2) to augment the feature maps by considering similarities between any pairs of pixels. Because similarities between any two positions is considered, we can further strengthen the intraclass correlations and increase interclass separation. Figure 5 shows the details of our NL-FPN. Nonlocal blocks (NL-blocks) are added to the upsampling stage of FPN, which consists of six convolution layers. We build the NL-block inspired by the spatial self-attention mechanism [53, 54]. The self-attention mechanism uses the dot product between the key vector and the query vector to obtain the similarity between different pixels. Since the features are normalized to unit lengths, the distance between vectors can be computed using the dot product [55]. We can formulate this as

| (1) |

where F represents the feature map and p and q represent different positions on the feature map. For this reason, we directly consider the dot product of the original input and its transpose instead of using a key vector and query vector. In this way, the similarity can also be obtained, and the network parameters can be reduced.

The NL-block we build is shown in Figure 5. The input feature map is fed into three branches: a reshape branch, a reshape and transpose branch, and a convolution branch. The features in the first two branches are fused by a dot product followed by a softmax function. Here, a similarity map is generated. The feature in the convolution branch is fed into a convolution layer. Then, the output of the convolution branch is reshaped and transposed so that we can further fuse it with the similarity map mentioned before by using a dot product. At the end of this block, the fusion result is reshaped and fed into a convolution branch to obtain the final weight map. The weight map is used to augment the features in FPN by a Hadamard product.

3.4 Dense fusion module

For change detection with Siamese networks, bitemporal feature fusion is the most critical part. It is difficult for two reasons: (1) Bitemporal images fed into Siamese networks are often offset in spatial position and color. (2) The background objects are complex and diverse. Conventional methods use direct subtraction or concatenation to fuse features [14]. Unfortunately, although Siamese networks extract features by dual blocks, there is still much misalignment among the bitemporal features. There have also been many researchers who have tried to tackle this problem with attention mechanisms [16, 34, 26, 17]. Nevertheless, most existing attention-based feature modules introduce many calculations and consume considerable memory.

Here, we propose a simple yet effective feature fusion module based on dense connections. We name this module DFM. DFM consists of two branches, the sum branch and the difference branch. The sum branch is used to enhance the edge information, and the difference branch is used to generate change regions. Each branch is built with two densely connected streams with weight sharing. Figure 6 illustrates the details of DFM. All convolution operations use 3×3 kernels. We should note that we do not use BN in each branch until the final convolution layer.

We build DFM for the purpose of ensembling multiple features in each stream and making better decisions. This structure can increase the robustness of the model and prevent pseudochanges caused by feature misalignment. Additionally, owing to the rich residual connections in dense connections, the last two features in each stream can be regarded as the residual of the previous feature, which is to some extent the correction of the previous feature and makes the new feature map more aligned. We validate this module by visualizing features in the difference branch (Figure 7). From the visualization of the feature maps, we can see that DFM indeed reduces feature misalignment and calculates more accurate change features.

3.5 Self-supervised learning-based feature constraint

Change detection is aimed at finding changes of interest in bitemporal images. Currently, most change detection tasks are inter-class change detection, in which the categories of bitemporal images should differ in changed areas, and the categories in the unchanged areas should be the same. That is, the bitemporal features in unchanged areas should be as close as possible, and the bitemporal features in changed areas should be as far away as possible. To this end, we use the novel idea of applying SSL to the task of change detection. With this SSL-based strategy, we can further constrain feature learning.

As shown in Figure 8, we add two auxiliary branches to the dual decoder of the proposed DED backbone. The two auxiliary branches are meant to obtain the semantic segmentation results of target objects. For the learning of the auxiliary branches, we use a novel SSL strategy to generate pseudolabels for each branch. It should be noted that we take the binary change detection task as an example to describe our idea. We discuss the application of the SSL-based feature constraint strategy for multiclass change detection tasks in the discussion section(Section 5). The details of the SSL strategy are as follows: (1) According to the change detection label, we split the semantic segmentation results of the auxiliary branches ( and in Figure 8) into two parts: the changed area and unchanged area. (2) In the unchanged area, the semantic segmentation result of one branch is used as the label of the other branch. (3) In the changed area, the opposite semantic segmentation result of one branch is used as the label of the other branch.

The way we generate pseudolabels can be expressed by the following formulas. We assume that the output of the two auxiliary branches is , . Since and are normalized to [0, 1], we can obtain the semantic segmentation results of the target object ( and ) with:

| (2) |

where i represents the position of each pixel. Due to , , based on the idea of SSL, we use them as pseudolabels to constrain each semantic segmentation branch. For example, we use as the label of . As mentioned earlier, the categories of bitemporal images in the changed area must be different, and the categories in the unchanged area must be the same. We split and into two parts: the changed area C and the unchanged area U. Then, we feed semantic segmentation results and pseudolabels into loss functions. Hence, we obtain:

| (3) | |||

where F denotes the loss function, and denote the auxiliary losses we introduce. In the end, our loss of change detection task consists of three parts: a loss of change mask (), pretemporal semantic segmentation loss (), bitemporal semantic segmentation loss (). The final loss function can be formulated as:

| (4) |

Both the change loss and auxiliary loss are calculated with the same loss function, which is a combination of the binary cross-entropy (BCE) loss and dice coefficient loss functions [56]. The BCE loss can be formulated as follow:

| (5) |

where N represents the total number of pixels in a label patch and denote the predicted change confidence and the label in the corresponding position, respectively. The dice coefficient loss is calculated as:

| (6) |

where X and Y represent the predicted change confidence and the label denotes the intersection of X and Y. Hence, the BCE + dice loss we use can be expressed as:

| (7) |

There is a simple way to validate our SSL-based feature constraint strategy. That is, we can validate the improvement by checking the inference results of the two auxiliary semantic segmentation branches. The two auxiliary branches are meant to guide DED to extract better target object features and suppress background object features. Therefore, the accuracy of the semantic segmentation results directly represents the performance of our SSL-based feature constraint. We show several results in Figure 9. From left to right: pretemporal images, posttemporal images, the pretemporal semantic segmentation results, the posttemporal semantic segmentation results, and the change detection results. Apparently, the two semantic segmentation branches achieve good performance and further improve the change task.

Note that we only test the SSL-based strategy on inter-class change detection tasks since almost all the available change detection datasets are inter-class change detection. It is hard to tell whether this strategy can boost performance on intra-class change detection tasks. So, we can only conclude that the SSL-based strategy can effectively constrain the feature learning of inter-class change detection tasks.

4 Experimental Results

We validate FCCDN on two building change detection datasets: LEVIR-CD and WHU. The experimental results demonstrate that FCCDN outperforms recently proposed change detection methods and achieves state-of-the-art performance on experimental datasets. In this section, we start by introducing the experimental datasets. Then, we describe our implementation details. After that, we introduce the evaluation metrics we use. In the end, we present our results in detail.

4.1 Datasets

We describe the experimental datasets in this subsection. We offer a brief view of the LEVIR-CD dataset and WHU dataset in Table 1. More details are shown in Section 4.1.1 and Section 4.1.2.

| Name | Bands | Image pairs | Resolution(m) | Image size |

|---|---|---|---|---|

| WHU | 3 | 1 | 0.3 | 32207×15354 |

| LEVIR-CD | 3 | 637 | 0.5 | 1024 × 1024 |

4.1.1 LEVIR-CD dataset

The LEVIR-CD dataset consists of 637 VHR image patches collected from Google Earth (GE). The resolution of each image is 0.5 m, and the size is 1024×1024. It is a large-scale change detection dataset and covers different kinds of buildings. The author of LEVIR-CD provided a standard train/validation/test split, which assigns 70% of the samples for training, 10% for validation, and 20% for testing. We follow the standard split provided by the author. Most existing literature crops the samples into 256 × 256 [28, 26, 30]. Theoretically, large training slices contain more context information than small training slices. Owing to the low computational cost of FCCDN, we can feed larger slices into the network. We try two crop strategies: 1) we crop the samples into 256 × 256 with an overlap of 128 on each side (horizontal and vertical); 2) we crop the samples into 512 × 512 with an overlap of 256 on each side.

4.1.2 WHU building change detection dataset

The WHU building change detection dataset consists of two-period aerial images, each with a resolution of 0.3 m. The two-period images were obtained in 2012 and 2016. There are a variety of buildings with large-scale changes in the dataset. There does not exist a standard splitting for this dataset. Different researchers use different data splitting approaches to validate their models. For the convenience of comparison, we use the splitting approach that was used in [30], and several change detection architectures have been tested based on this splitting approach. We crop the dataset into 256 × 256 slices and randomly split them into training/validation/test sets at a ratio of 7:1:2. Note that we do not use any overlap during the splitting.

4.2 Implementation Details

4.2.1 Data preprocessing and augmentation

We generate the training set and validation set in the way mentioned in Section 4.1. For each dataset, the slices are normalized according to Equation 8 before being fed into the network.

| (8) |

where represents the slices before normalization, represents the slices after normalization, and and are the mean value and standard deviation of the images in the datasets, respectively.

To improve the generalization ability of the models, we use several data augmentation strategies in the training stage. This includes random flipping (probability = 0.5), transposing (probability = 0.5), rotating (probability = 0.3, -45° angle 45°), zooming in/out (probability = 0.3, scale 0.1), HSV shifting (probability = 0.3, H-shift 10, S-shift 5, V-shift 10), and adding Gaussian noise (probability = 0.3, mean = 0, 10 variance 50). All the above data augmentation methods are realized with Albumentations [57], which is a Python library for data augmentation. In addition, we randomly exchange the input order of the bitemporal images (probability = 0.5).

4.2.2 Training

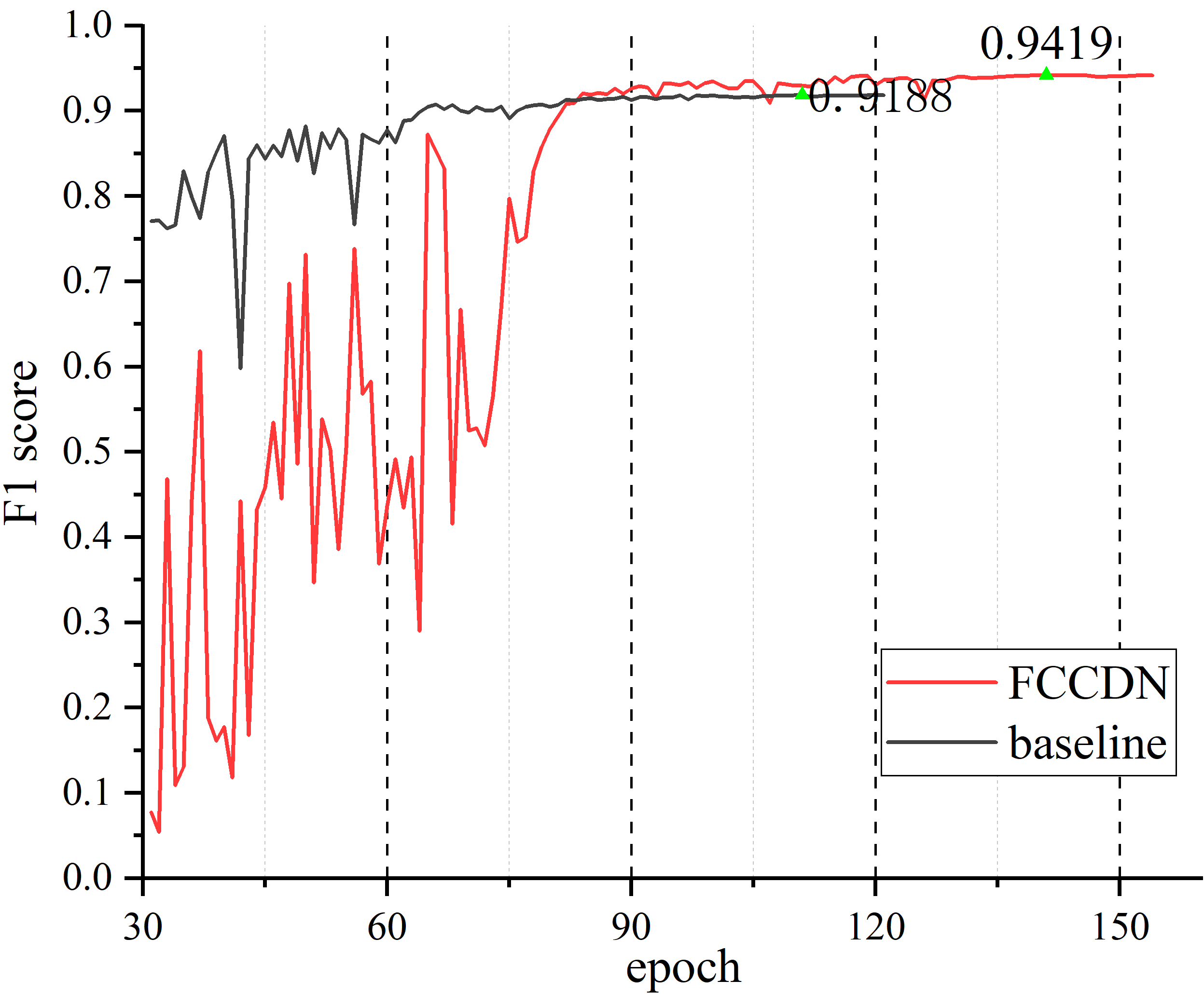

FCCDN is implemented with the PyTorch DL framework [58]. We have open-source our work on GitHub, and here is the link: https://github.com/chenpan0615/FCCDN_pytorch. We trained our networks on 1 RTX TITAN GPU (24 GB memory). For the proposed network architecture, the minibatch size can reach 16 with 512 × 512 slices (LEVIR-CD) and 64 with 256 × 256 slices (WHU). We choose the BCE + dice coefficient loss as the loss function. We use AdamW [59] as the optimizer with an original learning rate = 0.002 and weight decay = 0.001. The learning rate is adjusted by observing whether the F1 score of the validation set increases within ten epochs. If there is no increase, the learning rate is reduced by a factor of 0.3. We find that the models almost converge when the learning rate is adjusted more than three times. Thus, we end the training process when the learning rate is about to adjust a 4th time. Since the models are far from converging in the first 30 epochs, we do not validate the models with the validation sets until the 30th epoch. The pretrained model is vital for improving model robustness and uncertainty [60], especially for training with a small training set. Therefore, in the experiment on the WHU dataset, we initialize the encoder of FCCDN with the weights trained on the LEVIR-CD dataset.

We plot the performance of the baseline (FCS) and FCCDN on the validation sets in Figure 10. We show the curves of FCCDN in red and the curves of the baseline in gray. From Figure 10, we can learn that FCCDN can achieve good performance after no more than 220 epochs. To further validate our models on the test sets, we save the weights with the highest validation accuracy as the checkpoints for testing.

4.2.3 Inference

We use a very simple inference methodology in the testing stage. On the LEVIR-CD dataset, we feed the original testing slices (sizes of 1024 × 1024) into the networks. On the WHU dataset, the slices (sizes of 256 × 256) are already prepared when splitting the dataset. Then, the slices are normalized with the same mean value and standard deviation used in the training stage. The checkpoints with the highest F1 scores on the validation sets are used for testing. The change masks are generated in the way described by Equation 2. We do not use test time augmentation (TTA) or multimodel ensembles.

4.3 Evaluation Metrics

In this paper, we use the intersection over union (IoU) and F1 score as the evaluation metrics. The two metrics are commonly used to quantify the performance of the change detection task. The values of IoU and F1 range from 0 to 1, and the higher the value is, the better the performance. The IoU and F1 score are calculated as:

| (9) |

| (10) |

The precision is calculated as:

| (11) |

The recall is calculated as:

| (12) |

TP denotes true positive, FP denotes false positive, and FN means false negative.

4.4 Results

4.4.1 Ablation study

In this subsection, we present the ablation study of FCCDN on the LEVIR-CD dataset. As is shown in Table 2, we compare each architecture mainly with two evaluation metrics: the IoU and F1 score. The formulas of the above metrics are described in Section 4.3. We test each architecture three times and present the average performance in Table 2.

We start the ablation study by composing two backbone architectures: the FCS network and DED network. FCS achieves the lowest IoU (0.8380) and F1 score (0.9119) in the ablation study. Although the precision of FCS is high (0.9301), its recall is extremely low (0.8944), which means FCS misses many changes. Compared with FCS, DED achieves great improvements (1% in terms of the IoU and 0.6% in terms of the F1 score). The performance of FCS and DED further proves our argument in Section 3.2.

We validate the NL-FPN by adding it to the center of FCS and DED. The performance is shown in the second line and the 6th line of Table 2. FCS + NL-FPN achieves an IoU of 0.8430 and an F1 score of 0.9148. DED + NL-FPN achieves an IoU of 0.8500 and an F1 score of 0.9189. Notably, both FCS and DED benefit from NL-FPN.

We validate DFM by using it as the bitemporal feature fusion module in our architectures. The performance results displayed in the third line and the 7th line of Table 2 demonstrate that DFM can significantly improve the accuracy of change detection networks. The combination of FCS and DFM can boost the F1 score from 0.9119 to 0.9161. The combination of DED and DFM can boost the F1 score from 0.9176 to 0.9192.

For the SSL-based feature constraint strategy, we only test it with the DED backbone. By comparing the accuracy in the 5th line and the 8th line of Table 2, we can see that the SSL-based strategy works as expected. It improves the IoU from 0.8478 to 0.8530 and improves the F1 score from 0.9176 to 0.9206.

We also try other combinations of the proposed architectures and show the experimental results in Table 2. All the combinations can achieve distinct accuracy improvements. The combination of DED + NL-FPN + DFM + SSL achieves the best performance with an IoU of 0.8565 and an F1 score of 0.9227.

| NL-FPN | DFM | SSL | precision(%) | recall(%) | IoU(%) | F1(%) | |

|---|---|---|---|---|---|---|---|

| FCS | 93.01 | 89.44 | 83.80 (±0.1) | 91.19 (±0.06) | |||

| FCS | ✓ | 92.76 | 86.90 | 84.30 (±0.26) | 91.48 (±0.15) | ||

| FCS | ✓ | 92.78 | 87.06 | 84.53 (±0.12) | 91.61 (±0.07) | ||

| FCS | ✓ | ✓ | 92.91 | 90.76 | 84.87 (±0.23) | 91.82 (±0.14) | |

| DED | 92.88 | 90.67 | 84.78 (±0.2) | 91.76 (±0.12) | |||

| DED | ✓ | 92.66 | 91.15 | 85.00 (±0.17) | 91.89 (±0.1) | ||

| DED | ✓ | 92.81 | 91.04 | 85.03 (±0.1) | 91.92 (±0.05) | ||

| DED | ✓ | 92.97 | 91.20 | 85.30 (±0.11) | 92.06 (±0.06) | ||

| DED | ✓ | ✓ | 92.45 | 91.75 | 85.35 (±0.12) | 92.10 (±0.07) | |

| DED | ✓ | ✓ | 92.31 | 91.73 | 84.98 (±0.3) | 92.01 (±0.12) | |

| DED | ✓ | ✓ | 92.81 | 91.47 | 85.42 (±0.2) | 92.14 (±0.11) | |

| DED | ✓ | ✓ | ✓ | 92.95 | 91.61 | 85.65(±0.05) | 92.27 (±0.02) |

4.4.2 LEVIR-CD dataset

In this subsection, we present the comparison results of FCCDN and other change detection architectures on the LEVIR-CD dataset. The methods used for comparison are recently published methods that were tested on the LEVIR-CD dataset.

Most of the architectures are validated on the same test set proposed by [28], except for DDCNN [30]. Although DDCNN used a different data splitting approach, our method outperforms it with respect to the F1 score by 2%. We list the comparison results in Table 3. As shown in the table, our network architecture outperforms all the competitors and achieves new state-of-the-art results on the LEVIR-CD dataset.

| precision(%) | recall(%) | IoU(%) | F1(%) | |

|---|---|---|---|---|

| STANet [28] | 83.80 | 91.00 | - | 87.30 |

| BiT S4 [61] | 89.24 | 89.37 | 80.68 | 89.31 |

| DDCNN [30] | 91.85 | 88.69 | 82,21 | 90.24 |

| FracTAL ResNet [26] | 93.60 | 89.38 | 84.23 | 91.44 |

| CEECNetV1 [26] | 93.73 | 89.93 | 84.82 | 91.79 |

| CEECNetV2 [26] | 93.81 | 89.92 | 84.89 | 91.83 |

| FCCDN (256) | 92.96 | 91.55 | 85.62 | 92.25 |

| FCCDN (512) | 93.07 | 91.52 | 85.69 | 92.29 |

We present several inference results on the test set in Figure 11. Since almost all existing methods are validated on the same test set from this dataset, the values in Table 3 represent the performance of the existing methods. We only plot our results in this figure to show the inference details. The first three columns of the figure are the bitemporal images and ground truths. In the fourth column, we present the results of FCCDN. To better identify the difference between the labels and change masks, we plot the change masks in four colors: white denotes changed areas that are correctly identified (true positive), black denotes unchanged areas that are correctly identified (true negative), red denotes unchanged areas that are wrongly identified as changed areas (false positive), blue denotes changed areas that are missed (false negative). In addition to the change masks generated by FCCDN, we also plot the bitemporal semantic segmentation results of buildings in the fifth and sixth columns, which are generated in an unsupervised way.

As shown in Figure 11, FCCDN performs excellently on the test set. Almost all the changes are accurately found. We need to note that there are still some significant differences at the edges of the buildings, especially in the first row and the fourth row. These differences are mainly caused by the shadows of buildings. It is difficult to identify the exact boundary since some edges are hidden in the shadows.

In the field of observing landcover information, we not only care about the changing information but also care about the information of target objects in bitemporal images. With the above information, we can further obtain advanced results. Therefore, the pixel category in bitemporal images is vital for the application of change detection. As shown in the last two columns of Figure 11, we output accurate bitemporal semantic segmentation results of the buildings in an unsupervised way. This is the first study to generate bitemporal semantic segmentation results only with change labels; existing methods need extra segmentation labels. In conclusion, our architecture can save much labeling work in real applications.

4.4.3 WHU dataset

We present the comparison results of FCCDN and other change detection architectures on the WHU dataset in this subsection. The comparison is mainly based on the work of [30], who tested many change detection methods with their splitting approach. Therefore, we use the values of the comparison methods reported in their work. We also add the experimental result of [61], who split their dataset in the same way as in [30]. We list the comparison results in Table 4. From the table, we can learn that FCCDN outperforms all other architectures with a remarkable advantage. We achieve the highest IoU (0.8820) and F1 score (0.9373) on this dataset.

| precision(%) | recall(%) | IoU(%) | F1(%) | |

|---|---|---|---|---|

| FC-EF [14] | 78.86 | 78.64 | 64.94 | 78.75 |

| FC-Sima-diff [14] | 84.73 | 87.31 | 75.44 | 86.00 |

| FC-Sima-conc [14] | 78.86 | 78.64 | 64.94 | 78.75 |

| BiDataNet [62] | 86.75 | 90.60 | 79.59 | 88.63 |

| BiT S4 [61] | 86.64 | 81.48 | 72.39 | 83.98 |

| CDNet [24] | 91.75 | 86.89 | 80.60 | 89.62 |

| UNet++_MSOF [15] | 91.96 | 89.40 | 82.92 | 90.66 |

| DASNet [34] | 88.23 | 84.62 | 76.04 | 86.39 |

| DDCNN [30] | 93.71 | 89.12 | 84.09 | 91.36 |

| IFN [16] | 91.44 | 89.75 | 82.79 | 90.59 |

| FCCDN (ours) | 96.39 | 91.24 | 88.20 | 93.73 |

We plot several inference results of the test set in Figure 12. In the first three columns, we present the bitemporal images and ground truths. The fourth column and the fifth column are the change masks of DDCNN and FCCDN. We also show the bitemporal semantic segmentation results in the last two columns. The distribution of the slices for visualization is shown in Figure 12. Each green rectangle indicates a testing chip of size 256 × 256.

To better identify the difference between the labels and change masks, we plot the change masks with four colors in the same way as mentioned before. We further highlight some critical areas with green rectangles. As shown in the figure, FCCDN achieves excellent performance on the test set and outperforms the comparison methods. Most of the changes are well recognized except in several areas. At the tops of the second and third rows, both our results and the comparison results contain some incorrect decisions. This is understandable because it is difficult to identify buildings at the edge of images. Inferences with larger slices can perform better. At the center of the last row, there are pseudochanges in the result of FCCDN. However, since the building in the posttemporal image is under construction, building changes do exist in this area. At the bottom right of the last row, both FCCDN and the comparison architecture miss a small change. This seems to be a common problem in this dataset and is also mentioned in [26].

4.4.4 efficiency test

In this subsection, we present the efficiency of FCCDN in terms of four factors: parameters of the model (Params), the training time (Tt), the training batch size with 12 GB memory (Tb/12 GB), and the inference time with batch size = 1 (It). We evaluate our model efficiency on the WHU dataset. To better show the efficiency of FCCDN, we also test the efficiency of several existing change detection methods [14, 34, 24, 15, 30, 16].

Table 5 shows the comparison results of the efficiency test. From the table, we can see that FCCDN achieves the best F1 score with competitive efficiency.

| Params(Mb) | Tt(s) | It(s) | F1(%) | ||

|---|---|---|---|---|---|

| FC-EF [14] | 5.15 | 140 | 35 | 90 | 78.75 |

| FC-Sima-diff [14] | 5.15 | 140 | 35 | 65 | 86.00 |

| FC-Sima-conc [14] | 5.9 | 140 | 35 | 60 | 83.47 |

| BiDataNet [62] | 192 | 140 | 45 | 40 | 88.63 |

| BiT S4 [61] | 7.75 | 145 | 40 | 45 | 89.62 |

| CDNet [24] | 7.75 | 145 | 40 | 45 | 89.62 |

| UNet++_MSOF [15] | 34.6 | 170 | 40 | 14 | 90.66 |

| DDCNN [30] | 178 | 750 | 85 | 5 | 91.36 |

| IFN [16] | 137 | 255 | 75 | 14 | 90.59 |

| FCCDN (ours) | 24.2 | 150 | 60 | 28 | 93.73 |

5 Discussion

5.1 SSL-based strategy for multi-class change tasks

In this subsection, we discuss the application of the proposed SSL-based feature constraint strategy to multiclass change detection tasks. The discussion is addresses three popular multiclass change detection datasets: 1) the season-varying dataset [63], which is used to identify multiclass changes with binary change labels (Figure 14); 2) SECOND [64], which is used to identify multiclass changes with multiclass semantic segmentation labels in the bitemporal changed area (Figure 14); 2) HRSCD [65], which is used to identify multiclass changes with multiclass semantic segmentation labels in each temporal image (Figure 14).

5.1.1 Season-varying dataset

The season-varying dataset is a multiclass change detection dataset with optical satellite images obtained by GE. The resolution of the dataset varies from 3 cm to 100 cm. As shown in Figure 14, seasonal changes are labeled with white color, and the rest are unchanged objects. The author of the dataset released a standard splitting plan, which consists of 10000 training slices, 3000 validation slices, and 3000 test slices. Each slice is an image with a size of 256 × 256.

Since the SSL-based feature constraint strategy relies on the supervision of the segmentation branches, it is essential to know the categories of the changed objects. The pseudolabels can be obtained only by acknowledging the class of objects in the bitemporal images. For the season-varying dataset, there are no clear categories for the changed objects. In this case, our SSL-based strategy will be simplified to a contrastive loss function. The contrastive loss is used to pull closer unchanged bitemporal features and push changed bitemporal features away. The loss function can be formulated as:

| (13) |

where denotes the contrastive loss, denotes the unchanged pixels, denotes the changed pixels, denotes the outputs of the auxiliary branches, and MSE denotes the mean squared error (squared L2 norm), which is calculated as:

| (14) |

where N represents the total number of pixels in a label patch. The contrastive loss is added to the main loss in the following way:

| (15) |

where is the loss of the binary change mask and is calculated with the BCE loss + dice coefficient loss.

We test the contrastive loss strategy on the season-varying dataset by fitting it to the DED backbone (Figure 3). We add two additional branches to DED. The output of the two branches is fed into the auxiliary loss function. We use the same training details as the details shown in Section 4.2. The experimental result is shown in Table 6. From the table, we can see that the contrastive loss strategy can remarkably boost the IoU performance from 0.9081 to 0.9157 and the F1 score from 0.9519 to 0.9560.

| precision(%) | recall(%) | IoU(%) | F1(%) | |

|---|---|---|---|---|

| DED | 97.22 | 93.23 | 90.81 | 95.19 |

| DED + ContraLoss | 97.33 | 97.93 | 91.57 | 95.60 |

We also visualize several test results on the season-varying dataset in Figure 15. The results show that the contrastive loss strategy can help the model better identify seasonal changes and suppress unconcerned changes.

5.1.2 SECOND dataset

The SECOND dataset is a multiclass change detection dataset with six landcover classes, i.e., nonvegetated ground surface, trees, low vegetation, water, buildings, and playgrounds. Changed objects in the bitemporal images are annotated with the above categories, while the unchanged objects are labeled as background. Samples of the SECOND dataset are shown in Figure 14. From left to right are the pretemporal image, pretemporal changed area semantic segmentation label, posttemporal changed area semantic segmentation label, and and posttemporal image. Changed objects are labeled with different values (visualized with different colors), and unchanged objects are masked out (visualized with white). The dataset contains 2968 samples. We randomly split the dataset into a training set, validation set, and testing set based on the ratio train:validation:test=8:1:1.

With the explicit categories of changed objects, we can further constrain feature learning by the additional semantic segmentation tasks on the bitemporal images. To this end, we add two semantic segmentation branches to the DED backbone. Each branch produces a semantic segmentation result. Since only bitemporal semantic segmentation labels in the changed area are available, we utilize a multiclass SSL strategy for the unchanged area. We first identify the unchanged area and the changed area with change detection labels. In the changed area, the output is supervised with a typical semantic segmentation task. In the unchanged area, the semantic segmentation result of one branch is used as the label of the other branch. The SSL-based loss function can be expressed as:

| (16) |

where donates the SSL-based loss in the unchanged area, donates unchanged pixels, donates the outputs of auxiliary branches, donates the semantic segmentation results of auxiliary branches, donates Crossentropy loss + dice coefficient loss. The final loss can be calculated as:

| (17) |

where represents the loss of the binary change mask, represents the semantic segmentation loss in the changed area, and represents the SSL-based loss. All the loss values are calculated with the cross-entropy loss + dice coefficient loss.

We validate the performance of the multiclass SSL strategy by applying it to ordinary DED, which has one change mask branch and two semantic segmentation branches for the changed pixels. Compared with ordinary DED, the SSL-based strategy introduces two additional semantic segmentation branches for the unchanged pixels. We use the mean IoU (mIoU) of the binary change mask and the separated kappa (Sek) coefficient [64] of the multiclass change detection results as the evaluation metrics. The Sek coefficient is a modified kappa coefficient that alleviates the influence of label imbalance. Table 7 shows the comparison results.

| mIoU (%) | Sek(%) | |

|---|---|---|

| DED | 70.34 | 19.17 |

| DED + SSL | 70.79 | 20.1 |

Figure 16 shows several inference results on the testing set. From the figure, we can see that the model performs better with the supervision of pseudolabels in the unchanged area. Unconcerned changes are effectively suppressed in the final results.

5.1.3 HRSCD dataset

The HRSCD dataset is a multiclass change detection dataset with bitemporal semantic segmentation labels. Objects in bitemporal images are divided into several semantic classes, i.e., no information, artificial surfaces, agricultural areas, forests, wetlands, and water. A sample of the HRSCD dataset is shown in Figure 14. Change labels can be easily acquired by comparing the land cover maps of bitemporal images. Since the HRSCD dataset provides complete bitemporal semantic segmentation labels, we can constrain the feature learning of the change detection task with multitask learning [65]. Semantic segmentation of bitemporal images can be introduced as an auxiliary task. In this case, the SSL-based strategy would be unnecessary. Therefore, we will not describe the experiment on this dataset in more detail. Those interested can refer to [65].

5.2 Comparison of the SSL-based strategy and contrastive loss strategy

Since both the SSL-based strategy and contrastive loss strategy are used for constraining feature learning in an unsupervised way, we discuss the differences between these two methods in this subsection. The main difference lies in the fact that the SSL-based strategy uses a stricter constraint. For the contrastive loss strategy, the network needs to learn discriminative features. Unchanged features are pulled together, and changed features are pushed apart. However, ground objects in the bitemporal images are not distinguished. Compared with the contrastive loss strategy, the SSL-based strategy introduces an additional constraint that assigns bitemporal features to different semantics classes. With the supervision of additional semantic segmentation branches, changed features and unchanged features can be easily acquired by comparing bitemporal features.

We test the contrastive loss strategy and SSL-based strategy on the LEVIR-CD dataset and the SECON dataset. The comparison results are shown in Table 8. From the table, we can learn that both the contrastive loss strategy and SSL-based strategy can boost model performance. Compared with the contrastive loss strategy, the SSL-based strategy performs better. On the LEVIR-CD dataset, SSL improves 0.29% in terms of the IoU and 0.16% in terms of the F1 score. On the SECOND dataset, SSL improves 0.2% in terms of the mIoU and 0.24% in terms of the SeK.

| LEVIR-CD | SECOND | |||||

|---|---|---|---|---|---|---|

| DED | 92.88 | 90.67 | 84.78 | 91.76 | 70.34 | 19.17 |

| DED + ContraLoss | 92.84 | 90.98 | 85.01 | 91.90 | 70.77 | 19.86 |

| DED + SSL | 92.95 | 91.20 | 85.30 | 92.06 | 90.97 | 20.10 |

6 Conclusions

In this paper, we propose a new change detection architecture for VHR remote sensing images. We design the algorithm for the purpose of extracting correct bitemporal features and fusing them in an effective way. To this end, we propose a DED backbone and an NL-FPN module to constrain the bitemporal feature extraction, DFM to fuse bitemporal features effectively, and an SSL-based strategy to constrain overall feature learning. Based on the above contributions, we propose FCCDN and validate it on two building change detection tasks. The experimental results show that FCCDN can achieve state-of-the-art performance with relatively high efficiency. Moreover, FCCDN can obtain bitemporal semantic segmentation results in an unsupervised way on the experimental datasets, which is vital for better Earth observations.

Although very promising, FCCDN still has several limitations. Firstly, as a supervised learning algorithm, FCCDN needs many labeled data to train a robust model. As building a change detection dataset is time-consuming, it is significant to seek solutions to change detection tasks with insufficient samples. Secondly, the SSL-based strategy may not work when applied to intra-class change detection tasks. If a ground object changes its appearance without changing its semantic class, the SSL-based strategy may not offer any additional supervision. In addition, since the SSL-based strategy relies on the supervision of the segmentation branches, it is essential to know the semantic categories of objects in bitemporal images. If the semantic categories are unknown, it is challenging to build the semantic segmentation brunches and formulate the auxiliary loss function. Consequently, future work will focus on fitting the proposed architecture to more datasets.

Acknowledgments

The authors thank the editors and anonymous reviewers for their valuable comments, which greatly improved the quality of the paper.

This research was supported by the Strategic Priority Research Program of the Chinese Academy of Sciences under Grant No. XDA19080302.

References

- MAHMOUDZADEH [2007] H. MAHMOUDZADEH, Digital change detection using remotely sensed data for monitoring green space destruction in tabriz (2007).

- Shi et al. [2020] W. Shi, M. Zhang, R. Zhang, S. Chen, Z. Zhan, Change detection based on artificial intelligence: State-of-the-art and challenges, Remote Sensing 12 (2020) 1688.

- Quarmby and Cushnie [1989] N. Quarmby, J. Cushnie, Monitoring urban land cover changes at the urban fringe from spot hrv imagery in south-east england, International Journal of Remote Sensing 10 (1989) 953–963.

- Howarth and Wickware [1981] P. J. Howarth, G. M. Wickware, Procedures for change detection using landsat digital data, International Journal of Remote Sensing 2 (1981) 277–291.

- Liu et al. [2015] S. Liu, L. Bruzzone, F. Bovolo, M. Zanetti, P. Du, Sequential spectral change vector analysis for iteratively discovering and detecting multiple changes in hyperspectral images, IEEE Transactions on Geoscience and Remote Sensing 53 (2015) 4363–4378.

- Richards [1984] J. Richards, Thematic mapping from multitemporal image data using the principal components transformation, Remote Sensing of Environment 16 (1984) 35–46.

- Jin and Sader [2005] S. Jin, S. A. Sader, Comparison of time series tasseled cap wetness and the normalized difference moisture index in detecting forest disturbances, Remote sensing of Environment 94 (2005) 364–372.

- Ghosh et al. [2011] A. Ghosh, N. S. Mishra, S. Ghosh, Fuzzy clustering algorithms for unsupervised change detection in remote sensing images, Information Sciences 181 (2011) 699–715.

- Im and Jensen [2005] J. Im, J. R. Jensen, A change detection model based on neighborhood correlation image analysis and decision tree classification, Remote Sensing of Environment 99 (2005) 326–340.

- Liu et al. [2019] J. Liu, K. Chen, G. Xu, X. Sun, M. Yan, W. Diao, H. Han, Convolutional neural network-based transfer learning for optical aerial images change detection, IEEE Geoscience and Remote Sensing Letters 17 (2019) 127–131.

- Cui et al. [2019] B. Cui, Y. Zhang, L. Yan, J. Wei, H. Wu, et al., An unsupervised sar change detection method based on stochastic subspace ensemble learning, Remote Sensing 11 (2019) 1314.

- LeCun et al. [2015] Y. LeCun, Y. Bengio, G. Hinton, Deep learning, nature 521 (2015) 436–444.

- Zhang et al. [2019] B. Zhang, Z. Chen, D. Peng, J. A. Benediktsson, B. Liu, L. Zou, J. Li, A. Plaza, Remotely sensed big data: Evolution in model development for information extraction [point of view], Proceedings of the IEEE 107 (2019) 2294–2301.

- Daudt et al. [2018] R. C. Daudt, B. Le Saux, A. Boulch, Fully convolutional siamese networks for change detection, in: 2018 25th IEEE International Conference on Image Processing (ICIP), IEEE, 2018, pp. 4063–4067.

- Peng et al. [2019] D. Peng, Y. Zhang, H. Guan, End-to-end change detection for high resolution satellite images using improved unet++, Remote Sensing 11 (2019) 1382.

- Zhang et al. [2020] C. Zhang, P. Yue, D. Tapete, L. Jiang, B. Shangguan, L. Huang, G. Liu, A deeply supervised image fusion network for change detection in high resolution bi-temporal remote sensing images, ISPRS Journal of Photogrammetry and Remote Sensing 166 (2020) 183–200.

- Zhang et al. [2021] L. Zhang, X. Hu, M. Zhang, Z. Shu, H. Zhou, Object-level change detection with a dual correlation attention-guided detector, ISPRS Journal of Photogrammetry and Remote Sensing 177 (2021) 147–160.

- Zheng et al. [2021] Z. Zheng, Y. Wan, Y. Zhang, S. Xiang, D. Peng, B. Zhang, Clnet: Cross-layer convolutional neural network for change detection in optical remote sensing imagery, ISPRS Journal of Photogrammetry and Remote Sensing 175 (2021) 247–267.

- Hou et al. [2021] X. Hou, Y. Bai, Y. Li, C. Shang, Q. Shen, High-resolution triplet network with dynamic multiscale feature for change detection on satellite images, ISPRS Journal of Photogrammetry and Remote Sensing 177 (2021) 103–115.

- Zhang et al. [2021] Y. Zhang, L. Fu, Y. Li, Y. Zhang, Hdfnet: Hierarchical dynamic fusion network for change detection in optical aerial images, Remote Sensing 13 (2021) 1440.

- Zagoruyko and Komodakis [2015] S. Zagoruyko, N. Komodakis, Learning to compare image patches via convolutional neural networks, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 4353–4361.

- Gao et al. [2019] Y. Gao, F. Gao, J. Dong, S. Wang, Change detection from synthetic aperture radar images based on channel weighting-based deep cascade network, IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 12 (2019) 4517–4529.

- Long et al. [2015] J. Long, E. Shelhamer, T. Darrell, Fully convolutional networks for semantic segmentation, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 3431–3440.

- Alcantarilla et al. [2018] P. F. Alcantarilla, S. Stent, G. Ros, R. Arroyo, R. Gherardi, Street-view change detection with deconvolutional networks, Autonomous Robots 42 (2018) 1301–1322.

- Fang et al. [2021] S. Fang, K. Li, J. Shao, Z. Li, Snunet-cd: A densely connected siamese network for change detection of vhr images, IEEE Geoscience and Remote Sensing Letters (2021).

- Diakogiannis et al. [2020] F. I. Diakogiannis, F. Waldner, P. Caccetta, Looking for change? roll the dice and demand attention, arXiv preprint arXiv:2009.02062 (2020).

- Hou et al. [2019] B. Hou, Q. Liu, H. Wang, Y. Wang, From w-net to cdgan: Bitemporal change detection via deep learning techniques, IEEE Transactions on Geoscience and Remote Sensing 58 (2019) 1790–1802.

- Chen and Shi [2020] H. Chen, Z. Shi, A spatial-temporal attention-based method and a new dataset for remote sensing image change detection, Remote Sensing 12 (2020) 1662.

- Ji et al. [2018] S. Ji, S. Wei, M. Lu, Fully convolutional networks for multisource building extraction from an open aerial and satellite imagery data set, IEEE Transactions on Geoscience and Remote Sensing 57 (2018) 574–586.

- Peng et al. [2020] X. Peng, R. Zhong, Z. Li, Q. Li, Optical remote sensing image change detection based on attention mechanism and image difference, IEEE Transactions on Geoscience and Remote Sensing (2020).

- Liu et al. [2020] R. Liu, D. Jiang, L. Zhang, Z. Zhang, Deep depthwise separable convolutional network for change detection in optical aerial images, IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 13 (2020) 1109–1118.

- Zhou et al. [2018] Z. Zhou, M. M. R. Siddiquee, N. Tajbakhsh, J. Liang, Unet++: A nested u-net architecture for medical image segmentation, in: Deep learning in medical image analysis and multimodal learning for clinical decision support, Springer, 2018, pp. 3–11.

- Ronneberger et al. [2015] O. Ronneberger, P. Fischer, T. Brox, U-net: Convolutional networks for biomedical image segmentation, in: International Conference on Medical image computing and computer-assisted intervention, Springer, 2015, pp. 234–241.

- Chen et al. [2020] J. Chen, Z. Yuan, J. Peng, L. Chen, H. Haozhe, J. Zhu, Y. Liu, H. Li, Dasnet: Dual attentive fully convolutional siamese networks for change detection of high resolution satellite images, IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing (2020).

- Jing and Tian [2020] L. Jing, Y. Tian, Self-supervised visual feature learning with deep neural networks: A survey, IEEE Transactions on Pattern Analysis and Machine Intelligence (2020).

- Zhang et al. [2016] R. Zhang, P. Isola, A. A. Efros, Colorful image colorization, in: European conference on computer vision, Springer, 2016, pp. 649–666.

- Pathak et al. [2016] D. Pathak, P. Krahenbuhl, J. Donahue, T. Darrell, A. A. Efros, Context encoders: Feature learning by inpainting, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 2536–2544.

- Feng et al. [2019] Z. Feng, C. Xu, D. Tao, Self-supervised representation learning by rotation feature decoupling, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 10364–10374.

- Noroozi and Favaro [2016] M. Noroozi, P. Favaro, Unsupervised learning of visual representations by solving jigsaw puzzles, in: European conference on computer vision, Springer, 2016, pp. 69–84.

- Tao et al. [2020] C. Tao, J. Qi, W. Lu, H. Wang, H. Li, Remote sensing image scene classification with self-supervised paradigm under limited labeled samples, IEEE Geoscience and Remote Sensing Letters (2020).

- Vincenzi et al. [2020] S. Vincenzi, A. Porrello, P. Buzzega, M. Cipriano, P. Fronte, R. Cuccu, C. Ippoliti, A. Conte, S. Calderara, The color out of space: learning self-supervised representations for earth observation imagery, arXiv preprint arXiv:2006.12119 (2020).

- Dong et al. [2020] H. Dong, W. Ma, Y. Wu, J. Zhang, L. Jiao, Self-supervised representation learning for remote sensing image change detection based on temporal prediction, Remote Sensing 12 (2020) 1868.

- Chen and Bruzzone [2021] Y. Chen, L. Bruzzone, Self-supervised change detection in multi-view remote sensing images, arXiv preprint arXiv:2103.05969 (2021).

- Hu et al. [2018] J. Hu, L. Shen, G. Sun, Squeeze-and-excitation networks, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7132–7141.

- Ioffe and Szegedy [2015] S. Ioffe, C. Szegedy, Batch normalization: Accelerating deep network training by reducing internal covariate shift, in: International conference on machine learning, PMLR, 2015, pp. 448–456.

- Selvaraju et al. [2017] R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, D. Batra, Grad-cam: Visual explanations from deep networks via gradient-based localization, in: Proceedings of the IEEE international conference on computer vision, 2017, pp. 618–626.

- Zhao et al. [2017] H. Zhao, J. Shi, X. Qi, X. Wang, J. Jia, Pyramid scene parsing network, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 2881–2890.

- Diakogiannis et al. [2020] F. I. Diakogiannis, F. Waldner, P. Caccetta, C. Wu, Resunet-a: a deep learning framework for semantic segmentation of remotely sensed data, ISPRS Journal of Photogrammetry and Remote Sensing 162 (2020) 94–114.

- Chen et al. [2017] L.-C. Chen, G. Papandreou, F. Schroff, H. Adam, Rethinking atrous convolution for semantic image segmentation, arXiv preprint arXiv:1706.05587 (2017).

- Li et al. [2018] H. Li, P. Xiong, J. An, L. Wang, Pyramid attention network for semantic segmentation, arXiv preprint arXiv:1805.10180 (2018).

- Lin et al. [2017] T.-Y. Lin, P. Dollár, R. Girshick, K. He, B. Hariharan, S. Belongie, Feature pyramid networks for object detection, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 2117–2125.

- Mi and Chen [2020] L. Mi, Z. Chen, Superpixel-enhanced deep neural forest for remote sensing image semantic segmentation, ISPRS Journal of Photogrammetry and Remote Sensing 159 (2020) 140–152.

- Wang et al. [2018] X. Wang, R. Girshick, A. Gupta, K. He, Non-local neural networks, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7794–7803.

- Yuan and Wang [2018] Y. Yuan, J. Wang, Ocnet: Object context network for scene parsing, arXiv preprint arXiv:1809.00916 (2018).

- Xu et al. [2017] J. Xu, R. Ranftl, V. Koltun, Accurate optical flow via direct cost volume processing, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 1289–1297.

- Zhou et al. [2018] L. Zhou, C. Zhang, M. Wu, D-linknet: Linknet with pretrained encoder and dilated convolution for high resolution satellite imagery road extraction, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2018, pp. 182–186.

- Buslaev et al. [2020] A. Buslaev, V. I. Iglovikov, E. Khvedchenya, A. Parinov, M. Druzhinin, A. A. Kalinin, Albumentations: fast and flexible image augmentations, Information 11 (2020) 125.

- Paszke et al. [2017] A. Paszke, S. Gross, S. Chintala, G. Chanan, E. Yang, Z. DeVito, Z. Lin, A. Desmaison, L. Antiga, A. Lerer, Automatic differentiation in pytorch (2017).

- Kingma and Ba [2014] D. P. Kingma, J. Ba, Adam: A method for stochastic optimization, arXiv preprint arXiv:1412.6980 (2014).

- Hendrycks et al. [2019] D. Hendrycks, K. Lee, M. Mazeika, Using pre-training can improve model robustness and uncertainty, in: International Conference on Machine Learning, PMLR, 2019, pp. 2712–2721.

- Chen et al. [2021] H. Chen, Z. Qi, Z. Shi, Efficient transformer based method for remote sensing image change detection, arXiv preprint arXiv:2103.00208 (2021).

- Papadomanolaki et al. [2019] M. Papadomanolaki, S. Verma, M. Vakalopoulou, S. Gupta, K. Karantzalos, Detecting urban changes with recurrent neural networks from multitemporal sentinel-2 data, in: IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, IEEE, 2019, pp. 214–217.

- Lebedev et al. [2018] M. Lebedev, Y. V. Vizilter, O. Vygolov, V. Knyaz, A. Y. Rubis, Change detection in remote sensing images using conditional adversarial networks., International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences 42 (2018).

- Yang et al. [2020] K. Yang, G.-S. Xia, Z. Liu, B. Du, W. Yang, M. Pelillo, Asymmetric siamese networks for semantic change detection, arXiv preprint arXiv:2010.05687 (2020).

- Daudt et al. [2019] R. C. Daudt, B. Le Saux, A. Boulch, Y. Gousseau, Multitask learning for large-scale semantic change detection, Computer Vision and Image Understanding 187 (2019) 102783.