These authors contributed equally to this work. \equalcontThese authors contributed equally to this work. \equalcontThese authors contributed equally to this work.

1] \orgnameChina Telecom Cloud, \cityGuangzhou \countryChina

2]\orgdivShanghaiTech University, \cityShanghai, \countryChina

FCA-RAC: First Cycle Annotated Repetitive Action Counting

Abstract

Repetitive action counting quantifies the frequency of specific actions performed by individuals. However, existing action-counting datasets have limited action diversity, potentially hampering model performance on unseen actions. To address this issue, we propose a framework called First Cycle Annotated Repetitive Action Counting (FCA-RAC). This framework contains 4 parts: 1) a labeling technique that annotates each training video with the start and end of the first action cycle, along with the total action count. This technique enables the model to capture the correlation between the initial action cycle and subsequent actions; 2) an adaptive sampling strategy that maximizes action information retention by adjusting to the speed of the first annotated action cycle in videos; 3) a Multi-Temporal Granularity Convolution (MTGC) module, that leverages the muli-scale first action as a kernel to convolve across the entire video. This enables the model to capture action variations at different time scales within the video; 4) a strategy called Training Knowledge Augmentation (TKA) that exploits the annotated first action cycle information from the entire dataset. This allows the network to harness shared characteristics across actions effectively, thereby enhancing model performance and generalizability to unseen actions. Experimental results demonstrate that our approach achieves superior outcomes on RepCount-A and related datasets, highlighting the efficacy of our framework in improving model performance on seen and unseen actions. Our paper makes significant contributions to the field of action counting by addressing the limitations of existing datasets and proposing novel techniques for improving model generalizability.

keywords:

Repetitive action counting, Adaptive sampling strategy, Multi-Temporal Granularity Convolution, Training Knowledge Augmentation

1 Introduction

Repetitive Action Counting (RAC) is a technology that utilizes video capture technology to count the number of repetitions of a specific action. It has potential applications in athlete training evaluation, physical condition monitoring[3], fitness tracking, and other video analysis studies such as pedestrian detection and reconstruction [4] [5] [6] [7]. Moreover, RAC’s ability to accurately count repetitions of an exercise renders it a valuable tool in the field of fitness, enabling individuals to track their progress and measure the intensity of their workout, thus making them stay motivated and focus on achieving their fitness goals. To calculate the number of repeated actions in a video, computer vision algorithms such as optical flow or motion estimation can be used to extract features frame by frame and detect the motion of objects in the video[8]. Subsequently, a tracking algorithm can be employed to track the motion of the person and count the number of times the same motion is repeated. These mechanisms enable RAC to provide users with precise and reliable quantitative data on their physical performance, transforming the way we evaluate, train, and optimize human movement.

In previous methods, models were trained on datasets with either coarse-grained annotations (as in [9] [10] [11]) or fine-grained annotations (as in [1],[12]). However, each action-counting dataset only covers a limited range of actions. In real-world scenarios, there will inevitably be actions that are not presented in the dataset. As a result, it is reasonable to assume that the model’s performance will be sub-optimal when applied to unseen actions. To tackle this problem, we propose a framework called First Cycle Annotated Repetitive Action Counting (FCA-RAC), which contains 4 parts.

The first part is a labeling technique where each training video is annotated with the start and end of the first action cycle and the total count of repetitive actions. The first action cycle of the test set is also annotated to improve performance on unseen actions. In comparison, previous methods [1] fully annotate the start and end frames of each action cycle in the training set, without annotation information in the test set. Figure.1a shows the comparison of two methods. This labeling technique allows the model to learn the relationship between the first action cycle and subsequent actions, enhancing its ability to predict unseen actions. We assume that the model trained on the dataset with our labeling technique is trying to learn how to match the pattern of the first cycle with subsequent actions in the video. In other words, our approach seeks to leverage the labeled first action cycle to help the model recognize and predict subsequent actions. In cases where the video contains an action that has not been seen before, the model can still be effective if the first action cycle is annotated. Consequently, this labeling technique significantly enhances the ability of action counting. As long as the training is conducted on a dataset containing several actions, the model can predict any actions, even those that do not appear in the dataset, at the cost of marking the start and end time of the first action. Such an approach has the potential to greatly improve the real-world applicability of action-counting models.

The second part is a novel video sampling technique. Prior methods [12] [1] relied on fixed frame rates for prediction, where 64 frames are captured from each video in [1] for further analysis. However, this approach may not be sufficient when dealing with videos containing an extreme number of actions. Accurate prediction becomes increasingly difficult as the number of actions exceeds the number of frames, while videos with fewer actions may waste computational resources. Another shortcoming of fixed frame extraction is that some actions are very fast (e.g. skipping rope) while others are very slow (e.g. playing tennis). Fixed frame extraction would lead to the degradation of model performance due to variations in action speeds. To address these issues, we propose a dynamic sampling technique that calculates the sampling rate based on the beginning and end of the first action cycle in each video. The video is sampled according to the speed of the first action cycle. This approach enables our model to accurately predict the number of actions in different types of videos, regardless of their length or the frequency of the actions they contain.

Variations in the speed of actions can be observed across different activities as well as within a single video. For instance, individuals performing fitness exercises (e.g. front raises) may start with a fast pace, but gradually slow down due to exhaustion. To tackle this problem, as well as to leverage the benefits of the sampling technique and annotation methodology, we introduce the Multi-Temporal Granularity Convolution (MTGC) module, the third part of our framework. The MTGC module employs the encoded feature of the first action cycle as the kernel for convolution with the remaining video feature. This enables the model to identify and correspond to the pattern of the initial action cycle with the remaining portions of the video. To account for the variations in action speed within the video, the first action cycle kernel is interpolated to different scales. The multi-temporal kernels are then used to convolve across the entire video. This approach facilitates the extraction of action variations across different time scales, enabling the model to capture subtle differences in movement.

To enhance the model performance, previous work focused on adapting the network to the testing examples. Viresh et al. [2] propose a Test Time Adaptation (TTA) strategy, that utilizes a few gradient descent updates to adapt the network with the provided testing example. The procedure of Test Time Adaptation on RAC is illustrated on the left of Figure.1b. However, when it comes to action counting in the video, the extracted feature may vary due to the pose or angle variation of the person. Relying solely on the first action cycle of a video to match the entire video can lead to a decline in model performance. Hence, utilizing the similarities of the first action from other videos for fine-tuning and prediction holds the potential to significantly enhance model performance. Additionally, when dealing with an unseen action, the prediction result can also be improved by utilizing the similarities from other videos.

To this end, we introduced the final part of our framework, called Training Knowledge Augmentation (TKA), as shown in the right of Figure.1b. This strategy utilizes similarities between the first action cycles of videos in the training set for fine-tuning and prediction. After pre-training, the model constructs an embedding vector space to maintain the first annotated training cycle of each video in the training set. During both fine-tuning and inference stages, the top-k nearest embedding vectors are selected based on their similarity to the input video’s first cycle. These vectors are incorporated into convolution kernels to perform MTGC with the input video, improving the prediction accuracy. This strategy attempts to use similar actions within the dataset to enhance prediction accuracy even when dealing with previously unseen actions. This obviates the need for Test Time Adaptation and enhances the model’s performance.

Our proposed method yields satisfactory results on the RepCount-A, CountixAV, and UCFRep datasets. Furthermore, we conducted experiments on the UCFRep and QUVA datasets, with the model pretraining on RepCount-A to evaluate the generalization of our method. These results demonstrated that our method achieves competitive performance when applied to previously seen or unseen data.

Our contributions can be summarized as follows:

-

•

We introduce a method for annotating repetitive actions that capture the relationship between the first and subsequent actions within a video.

-

•

To handle videos with various speeds, we introduce a dynamic sampling technique that adjusts the sampling rate according to the speed of the first action cycle, creating a more robust representation of the video across various speeds.

-

•

We present a network module capable of accommodating various action speeds within a single video, which utilizes the multi-temporal scale of the first action cycle as convolution kernels to capture action variations across different time scales.

-

•

We present an adaptation strategy to utilize the dataset’s annotated first action cycle information. This strategy aims to enhance prediction accuracy by utilizing similarities between actions, regardless of whether the model is predicting previously observed or new actions.

2 Related Work

Temporal Correlation Temporal correlation has been widely utilized in video understanding, encompassing areas such as video action recognization [13, 14], action detection [15], and action localization [16]. Vaswani et al.[17] employed attention mechanisms to draw the correlation within the given sequences, while Junejo et al.[18] propose an approach that extracts temporal correlations between frames using self-similarities.

Counting in Computer Vision The counting problem has been widely studied [19]. Arteta et al. [20] focus on counting crowded objects from images using dot annotation. Ranjan et al. [2] addresses the task of counting all objects in an image and proposes an adaptation strategy for testing with limited labeled examples. Levy et al. [21] proposed a method that can count the number of repetitions in videos online. Zhang et al. [12] tackle the challenge of unknown action cycle lengths by introducing a context-aware and scale-insensitive framework. Hu et al. [1] introduce a large-scale dataset with fine-grained action cycle annotations, considering realistic scenarios of interruptions and occlusions.

Temporal Convolution Network Zheng et al. [22] present a multi-channel convolutional neural network (CNN) architecture for effective analysis of multivariate time series data. Their approach processes individual time series with separate CNNs, extracting relevant features that are then concatenated and fed into a novel CNN architecture for further analysis.

Our proposed Multi-Temporal Granularity Convolution module exploits the strong similarities between the first action cycle and the entire video. This is akin to the work of [23], where a matching mechanism was proposed to address the counting problem. In our proposed method, we utilize the first action cycle as a convolution kernel within the Multi-Temporal Granularity Convolution module, with the aim of matching the pattern of the first action cycle to the entire video. Through this process, we can generate accurate counting numbers for the actions within the video.

Training Knowledge Augmentation Aaron et al.[24] developed a VQ-VAE method that constructs a latent embedding space to facilitate the training of encoder and decoder networks. Inspired by this work, as well as research by Zongwei et al.[25], we introduce our proposed TKA, which constructs embedding vector space using the first annotated training cycle from the dataset. During fine-tuning and inference stage, the model will seek the most informative vectors to enhance the prediction, without requiring test time adaptation.

We compare our approach of labeling, sampling, multi-temporal granularity convolution, and training knowledge augmentation strategies on the RepCount, countixAV, QUVA and UCFRep datasets, and empirically show that it leads to better performance on seen and unseen actions.

3 Method

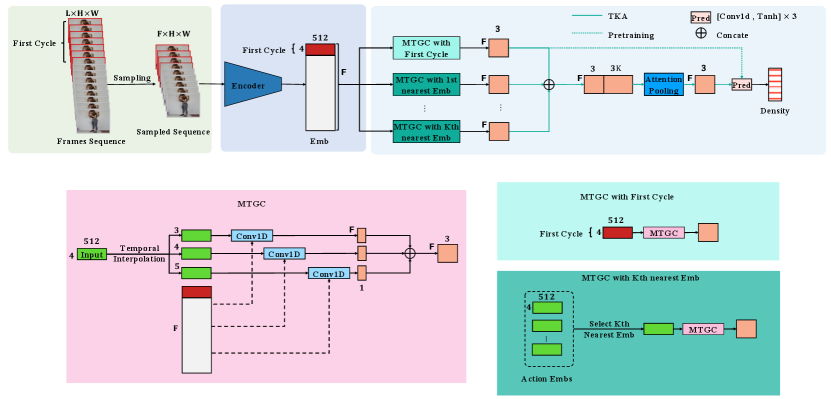

The aim of this study is to develop an accurate method for counting repetitive actions performed in videos. Figure.2 illustrates the structure of our proposed model. We start by sampling the video in the pre-training stage. The sampled video is then processed by an encoder and a Multi-Temporal Granularity Convolution module (MTGC), which uses a multi-scaled first action cycle as a kernel to convolve with the entire video. The resulting feature is passed through a prediction module to obtain the output density map. During the fine-tuning and inference stage, we utilize a Training Knowledge Augmentation strategy (TKA) that leverages similar actions within the dataset to promote the model’s performance.

3.1 Input Sampling

As shown in Figure.2, assuming the input video contains frames , the first frames are labeled as the first action cycle. We sample these N frames to k frames (k = 4 in this article) to represent the action’s start, middle, and end stages. The sampling rate can be calculated as . Consequently, the sampled video ( , ) contain frames. By sampling all videos according to the speed of the first action, we ensure consistency in action speeds across different videos, thereby mitigating the effect of speed variations.

3.2 Encoder

We extract the video sequence with sliding window step size , as in Eq.1.

| (1) |

For feature extraction, we employ the Video-Swin-Transformer[26], which is designed specifically for visual recognition tasks.

Let the video vector pass through the Video-Swin-Transformer. For each clip, the size of the extracted features is , which can then be concatenated to form a tensor of size .

We then apply a conv3D layer with filters and ReLU activation, to enhance the combination of spatial and temporal features. A spatial pooling layer aggregates space information into the channel dimension, resulting in the final temporal context features X as defined below:

| (2) |

3.3 Multi-Temporal Granularity Convolution

To capture the similarities between the first action cycle and the entire video, we introduce the multi-temporal granularity convolution (MTGC) module. The MTGC module leverages the spatio-temporal information contained in the first action cycle to estimate the count for the entire video. We use the first action cycle , as a temporal granularity convolution kernel, to convolve with the entire video feature . To account for the variations in action speed within the video, we interpolate the first action cycle kernel to 3 and 5 in different scales, respectively, resulting in three multi-scaled kernels. Each kernel performs temporal granularity convolution on the entire video with stride 1, and their outputs are concatenated to obtain the temporal granularity feature .

3.4 Prediction Layer

In the pre-training stage, is passed through the prediction layer, which consists of three 1D convolution layers and the Tanh activation function. The output is the final density map representing the action period distribution. By summing up the density, we obtain the action count in the video.

Density Map The advantage of the density map is the strong interpretability [27] [28] [29] [30], with each frame representing the action’s distribution. To construct the density map of the first action cycle, we follow the procedure in [1], using the Gaussian function [31] with a 99% confidence interval. Since a 99% confidence interval corresponds to , we could obtain from . Then the density value can be calculated as follows:

| (3) |

This approach allows us to construct an accurate density map of the first action cycle to train the model.

3.5 Training Knowledge Augmentation

In the proposed Training Knowledge Augmentation (TKA) strategy, we leverage the similarities of the first action cycle between the input video and training set to enhance model performance. After pre-training, we construct an embedding vector space capturing the embedding vectors from the first annotated cycle of each video in the training set, where T is the total number of the training videos, and k is the number of sampled frames of the first cycle (i.e. in this article), and D stands for the dimension of the embedding vectors. Each embedding vector is obtained by passing the sampled first action of the training video through the Encoder described in Sec.3.2. During both fine-tuning and inference stages, the top K closest embedding vectors are selected based on their similarity to the temporal context features of the first cycle from the input video. The Euclid distance between and can be defined as follows:

| (4) |

Then we sort the distances d in ascending order and select the top vector with the smallest distance. Therefore, we can express the process of selecting the closest vector using the formula:

| (5) |

Here represents the th closest vector to after sorting all values in . Subsequently, each embedding vector performs MTGC with the entire video, as described in Sec.3.3. After concatenating the output of the TKA module with the original output of MTGC, we obtain the feature .

To compress the resulting feature, we employ a feature fusion strategy using an attention-pooling layer[32][33] with learnable weights:

| (6) |

| (7) |

Finally, the feature of is passed through the prediction Layer to generate the density map.

3.6 Loss

To incorporate the label of the first cycle, we crop the first action from the output density map , which is denoted as . We use the Gaussian function to derive the ground truth density of the first cycle . Therefore, we can calculate the MSE loss (Mean Squared Error) of the first cycle:

| (8) |

Additionally, we compute the MAE loss (Mean Absolute Error) between the ground truth count and the predicted count obtained by summing the density map values:

| (9) |

The overall loss function is a linear combination of the MSE and MAE losses, weighted by :

| (10) |

3.7 Inference

Given a video clip, since its first action cycle is labeled, we first employed our sampling technique to sample the video. Then the sampled video is fed into the model (with TKA strategy), shown in Figure.2. To apply the TKA strategy, the top K closest embedding vectors from the training set are selected, and each embedding vector serves as a convolution kernel to perform MTGC with the input video. After concatenating, the resulting features are passed through the attention-pooling and prediction layers to generate the density map. After applying a linear sum of the density map, we could obtain the predicted value of the action period.

3.8 Baseline

The setting of our framework is different from the previous one [1][12]. These methods only use the annotation from the training set, and predict the action number in the test set without annotation, while our framework utilizes the annotated first action in the video during prediction. For a fair comparison, we introduce two baseline modules for action counting prediction. Each baseline utilizes temporal context feature as input and generates a video density map by passing the features through different module types.

The first baseline, named First Cycle Video Attention (FC-V), employs an attention mechanism based on the first annotated cycle. Given the temporal context features , we use the first action cycle as the query matrix Q, while the subsequent temporal features are treated as the key matrix K and value matrix V. These matrices are processed through trained linear layers. The cross-attention feature C is calculated by applying the attention equation:

| (11) |

The cross-attention feature is then fed into the prediction module to generate the output density map.

The second baseline, named Video to Video Attention (V-V), employs a self-attention mechanism to compute the similarity matrix from the temporal context features , similar to the approach used in TransRAC [1]. V-V is designed to leverage the entire temporal context of the video, which may provide useful information about the frequency and duration of the repetitive actions. Specifically, the feature is multiplied with two weights matrices to obtain keys matrix K and query matrix Q. Then the similarity matrix is calculated by where denotes the dot product.

Unlike TransRAC[1], we apply a convolution layer to the similarity matrix , yielding the context feature . Afterward, an adaptive average pooling layer modifies the last axis from (the frame length) to 16, resulting in the context feature C. Finally, the context feature is fed into the prediction module to generate the output density map.

Test Time Adaptation. To enhance the performance of both baselines, we incorporate the Test Time Adaptation using the approach from[2] . This adaptation strategy adjusts the network to the input video during test time, further improving the accuracy of the estimated count, as shown in the left of Figure.1b. Specifically, during test time, the model parameters are frozen except for the prediction module. We perform several gradient descent steps on the first action cycle of the testing video to optimize the predicted count with the mean-squared error loss defined in Eq.8.

4 Experiments

| Annotation | Algorithms | RepCount-A | CountixAV | UCFRep | ||||

|---|---|---|---|---|---|---|---|---|

| Train | Test | MAE | OBO | MAE | OBO | MAE | OBO | |

| Count | - | Zhang [11] | - | - | 0.331 | 0.43 | 0.143 | 0.80 |

| All Cycles | - | X3D[34] | 0.910 | 0.11 | 0.933 | 0.08 | 0.982 | 0.33 |

| TANet [35] | 0.662 | 0.10 | 0.811 | 0.18 | 0.892 | 0.13 | ||

| VST [26] | 0.575 | 0.13 | 0.792 | 0.25 | 1.122 | 0.03 | ||

| Huang [36] | 0.526 | 0.16 | - | - | 1.035 | 0.02 | ||

| Zhang [12] | 0.878 | 0.16 | - | - | 0.147 | 0.79 | ||

| TransRAC [1] | 0.443 | 0.29 | - | - | 0.581 | 0.33 | ||

| First Cycle +Count | First Cycle | FC-V | 0.344 | 0.36 | 0.392 | 0.56 | 0.305 | 0.56 |

| V-V | 0.322 | 0.36 | 0.379 | 0.55 | 0.211 | 0.69 | ||

| FCA-RAC | 0.268 | 0.47 | 0.330 | 0.58 | 0.150 | 0.77 | ||

| RepCount-A | CountixAV | UCFRep | QUVA | |

| Num. of Videos | 1041 | 1863 | 526 | 100 |

| Duration(s) | 31927 | 11401 | 3500 | 1754 |

| Duration(s) Min/Max | 4.0/88.0 | 1.8/10.0 | 2.08/33.84 | 2.5/64.2 |

| Num. of Counts. | 15615 | 12722 | 3506 | 1246 |

| Count Min/Max | 1/141 | 2/60 | 3/54 | 1/6 |

4.1 Datasets and Evaluation Matrices

We use 4 repetition counting datasets [12, 1, 11, 37] to evaluate the effectiveness of our framework. The summary of their statistics is shown in Table.2.

RepCount-A The RepCount-A dataset [1] consists of 1041 videos with about 20,000 fine-grained annotations total. It includes videos of varying lengths and multiple anomaly cases, with each video annotated for the beginning and end of each action period. The average video length in the dataset is 39.35 seconds, significantly surpassing the durations of UCFRep[12] and CountixAV[11]. On average, each video clip in the dataset contains about 16 action cycles.

Countix-AV[11], a subset of Countix[9], is a dataset sourced from YouTube. It consists of 1863 videos, with 987, 311, and 565 videos allocated for training, validation, and testing, respectively. Since each video only annotates the count of repeated actions, which does not meet our task requirements, we manually annotate the start and end frames of the first action cycle to align with our framework.

UCFRep[12], a subset of UCF101, is a dataset that selectively includes 23 action categories out of the original 101, focusing on cyclically performed actions. The dataset contains a total of 526 videos, with 421 videos assigned to the training set and 105 videos designated as the validation set. Each video in UCFRep is annotated with the temporal boundaries of each repetitive action, making it directly applicable to our task.

QUVA [37], comprises 100 videos exhibiting various activity ranges. Due to its relatively small scale, the QUVA dataset is commonly employed for evaluation purposes. All videos in the QUVA dataset are annotated with the temporal bounds of each interval, enabling us to assess the generalizability of our method.

To measure the accuracy of our method, we adopted two evaluation metrics following the previous work [9][12]: Mean Absolute Error (MAE) and Off-by-One accuracy (OBO). MAE represents the normalized absolute error between the predicted count and the ground truth count, while OBO measures the accuracy of the repetition count across the entire dataset. Specifically, for OBO, we considered a video to be counted correctly if the predicted count is within one count of the ground truth; otherwise, it was considered a counting error. The definitions of MAE and OBO are as follows:

| (12) |

| (13) |

where and represent the ground truth and predicted counts of the -th video, respectively, and is the total number of videos in the evaluation set.

4.2 Implementation Details

We implement our method using PyTorch with four NVIDIA V100 GPUs. The Video Swin Transformer tiny [26] served as the encoder, which was pre-trained on the Kinetics400. Due to the limitation of GPU memory, the parameter of the pre-trained encoder is frozen. To train our FCA-RAC model, we normalized the first annotated cycle to frames and resized all frames to . Within a batch, we padded the sampled frames to match the longest selected frames for training purposes. The density map in the padded frames was set to 0, so the predicted number of actions would be the sum of the ”real” density map. We used a weighted combination of and for the loss function with weight coefficients . We train the model for 16K steps with a learning rate of and optimized by the Adam optimizer.

After pre-training, we applied TKA to fine-tune the model. Before fine-tuning, we save the encoded feature of the first action cycle of all training videos to construct the embedding vectors. During each step of the fine-tuning, the top K closest vectors with the first action of the input video are selected to perform MTGC and the subsequent modules to generate the density map. The embedding vectors were updated at every epoch to align them with the output of the model. We perform 1.6K steps during fine-tuning with a learning rate of .

4.3 Evaluation and Comparison

To evaluate the performance of our FCA-RAC model, we conducted comparisons with existing methods [34, 35, 26, 36, 12, 1] in Table.1. The results reported for RepCount-A and UCFRep datasets are taken from [1, 38]. Since the countixAV dataset lacks label information for action cycle boundaries, we manually annotated the start and end frames of the first action cycle.

Our FCA-RAC model demonstrates superior performance on both the RepCount-A and countixAV datasets. In RepCount-A, the model achieves an MAE of 0.268 and an OBO of 0.47, which outperforms the TransRAC[1] by 0.175/0.18 on MAE/OBO. Similarly, in countixAV, our model attains an MAE of 0.330 and an OBO of 0.58, slightly surpassing the result in [11]. Notably, the FCA-RAC model’s performance on the UCFRep dataset is also comparable with the state-of-the-art.

Our model’s experimental setting differs from previous methods. While previous methods lack label information in the test set, we utilize annotated first action cycles in testing videos to enhance generalizability. To ensure a fair comparison, we also evaluate the baseline described in Sec.3.8, as shown in Table.1. Our model exhibits MAE/OBO performance gains of 0.076/0.11(0.054/0.11), 0.062/0.02(0.049/0.03), 0.155/0.21(0.061/0.08) over FC-V(V-V) on RepCount-A, CountixAV, and UCFRep dataset, respectively. These results highlight our model’s significant superiority over the baseline across all three datasets. These findings provide strong evidence that our FCA-RAC is an effective model for repetition action counting.

Generalization We evaluated the generalization capabilities of our FCA-RAC model from two perspectives.

First, we conducted experiments on the UCFRep and QUVA datasets using the model pre-trained on the RepCount-A dataset. The results are shown in Table.3. Our methods outperform the TransRAC[1] by 0.339/0.23, and 0.512/0.51 in terms of MAE/OBO on UCFRep and QUVA datasets respectively. These results demonstrate the superior generalization capabilities of our method compared to previous approaches when applied to unseen datasets.

Second, we reorganized the RepCount-A dataset according to the guidelines in [1], creating new training, validation, and test subsets with disjoint action types. This ensured that the actions in the test set did not appear in the training set. We then evaluated our FCA-RAC model on this newly constructed dataset, and the results are presented in Table.4. Our method achieves improvements of 0.175/0.18, and 0.210/0.13 in terms of MAE/OBO in the regular setting and resplit setting respectively, which demonstrates that our model also exhibits strong generalization performance on previously unseen actions. These findings demonstrate the strong generalization performance of our model when faced with diverse and unfamiliar action scenarios.

| Method | UCFRep | QUVA | ||

|---|---|---|---|---|

| MAE | OBO | MAE | OBO | |

| RepNet[9] | 0.999 | 0.01 | - | - |

| TransRAC[1] | 0.640 | 0.32 | 0.684 | 0.13 |

| Ours | 0.301 | 0.55 | 0.172 | 0.64 |

| Method | regular setting | re-split setting | ||

|---|---|---|---|---|

| MAE | OBO | MAE | OBO | |

| TransRAC[1] | 0.443 | 0.29 | 0.625 | 0.20 |

| ours(w/o TKA) | 0.316 | 0.38 | 0.553 | 0.25 |

| ours(with TKA) | 0.268 | 0.47 | 0.415 | 0.33 |

4.4 Ablation Studies

In this section, we conduct some ablation experiments to evaluate the effectiveness of the designing of the FCA-RAC model. We train the model on the training set of RepCount-A, countixAV, and UCFRep datasets, and then evaluate their performance on the corresponding test set.

| Method | RepCount-A | CountixAV | ||

|---|---|---|---|---|

| MAE | OBO | MAE | OBO | |

| Baseline(FC-V) | 0.360 | 0.32 | 0.411 | 0.52 |

| TGC (4) | 0.332 | 0.37 | 0.361 | 0.56 |

| MTGC (3-5) | 0.316 | 0.38 | 0.341 | 0.57 |

| MTGC (2-6) | 0.355 | 0.37 | 0.393 | 0.53 |

Multi-Temporal Granularity Convolution. We investigated the impact of applying temporal granularity convolution to our model to accommodate speed variations within a video. Table.5 demonstrates that MTGC with a kernel scale ranging from 3-5 achieves the best results. By using the multi-scale of the first action cycle as convolution kernels, we improve action counting performance by capturing action variations across different time scales within a video. However, a kernel scale ranging from 2-6 resulted in inferior performance, suggesting that kernels with extreme sizes may not effectively capture the action patterns in the video and disrupt the predicted results. Moreover, compared with the result of the FC-V baseline, it can be seen that the use of MTGC significantly improves the performance of the action counting task.

Training Knowledge Augmentation. Furthermore, we explored the effectiveness of Training Knowledge Augmentation (TKA). Figure.3 demonstrates that fine-tuning and inference with TKA surpass the performance without TKA. Moreover, we observed notable performance improvements by increasing the number of action cycles from 1 to 10 during TKA. Table.4 reveals the performance of TKA and non-TKA scenarios under different data settings. In the regular setting of the RepCount-A dataset, the TKA strategy gains 0.048/0.09 on MAE/OBO. While in the re-split setting, the corresponding values were 0.108/0.08. The results illustrate the strong generalization ability of TKA when dealing with both seen and unseen actions, emphasizing the importance of incorporating multiple cycles in TKA to enhance overall model performance.

Feature Fusion We analyzed the performance variations resulting from different feature fusion strategies on TKA in Table.6. The max pooling method, which selects the closest feature for TKA, is identical to TKA (top1) shown in Figure.3. The result reveals that feature fusion with attention pooling achieves the best performance. This observation indicates that learnable fractions from diverse features within TKA play a role in enhancing the model’s performance.

Loss Term As we use two losses to train the model, we compare the performance with different values of . As shown in Table.7, while training the model exclusively with Mean Absolute Error (MAE) yields effective results, incorporating Mean Square Error (MSE) further enhances performance. Additionally, through experimentation, we determined that the optimal value for is 10.

| Method | RepCount-A | UCFRep | ||

|---|---|---|---|---|

| MAE | OBO | MAE | OBO | |

| Average Pooling | 0.285 | 0.45 | 0.182 | 0.72 |

| Attention Pooling | 0.268 | 0.47 | 0.150 | 0.77 |

| Max Pooling | 0.305 | 0.40 | 0.223 | 0.67 |

| Loss | MAE | OBO | |

|---|---|---|---|

| only | - | 0.327 | 0.37 |

| 1 | 0.293 | 0.42 | |

| 10 | 0.268 | 0.47 | |

| 20 | 0.288 | 0.45 |

4.5 Qualitative Results

In this part, we give some qualitative results to better characterize the ability of our framework.

In Figure.4, we show some nearest actions selected using our proposed TKA strategy, given an input action. For seen actions (Figure.4a), the nearest neighbors belong to the same action type, i.e. front raise and push up. For unseen actions (Figure.4b), the nearest neighbors are selected from the samples with similar actions. For example, given an input action of jumping jack, the selected actions are raise up and squat. Similarly, given an input actions rolling scope, the selected action is front raises. This visualization indicates that the TKA strategy successfully selects the most useful action in the training set to enhance the training and inference performance.

In Figure.5a, we present successful cases achieved by our method. In the first instance, the subject executes a sit-up with relatively stable frequency, allowing our model to precisely predict the density. In the second example, the individual performs a squat with gradually decreasing speed due to fatigue; nonetheless, our model can also accurately predict the density map. While our framework performs well on the majority of data, there are still instances of failure, as depicted in Figure.5b. In the first pair, a girl performs jumping jacks, and the video speed abruptly slows down in the latter half, approximately one-third of the speed compared to the first half. This big change in video speed disrupts our model’s prediction. In the second pair, a boy plays tennis on the ground, and the camera movement is rapid, capturing the boy running and hitting the tennis ball from various angles. These extreme cases can adversely affect our model’s performance.

5 Conclusion

In this paper, we introduced a framework called First Cycle Annotated Repetitive Action Counting, which includes a labeling and sampling technique, as well as the MTGC module and TKA strategy. The proposed new labeling technique aims to capture the relationship between the first and subsequent actions within a video. In addition, our dynamic sampling technique adjusts the sampling rate according to the speed of the first action cycle. To accommodate various action speeds within a single video, we introduced a Multi-Temporal Granularity Convolution module to capture action variations across different time scales. We also propose Training Knowledge Augmentation, which utilizes annotated first action cycle information within the dataset to improve prediction accuracy, regardless of whether the model is predicting previously observed or new actions. Our proposed method achieved satisfactory results on the RepCount-A, CountixAV, UCFRep, and QUVA datasets. The extensive experiment shows that our method achieves competitive generalization performance on unseen data.

References

- \bibcommenthead

- Hu et al. [2022] Hu, H., Dong, S., Zhao, Y., Lian, D., Li, Z., Gao, S.: Transrac: Encoding multi-scale temporal correlation with transformers for repetitive action counting. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 19013–19022 (2022)

- Ranjan et al. [2021] Ranjan, V., Sharma, U., Nguyen, T., Hoai, M.: Learning to count everything. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3394–3403 (2021)

- Soro et al. [2019] Soro, A., Brunner, G., Tanner, S., Wattenhofer, R.: Recognition and repetition counting for complex physical exercises with deep learning. Sensors 19(3), 714 (2019)

- Lima et al. [2021] Lima, J.P., Roberto, R., Figueiredo, L., Simoes, F., Teichrieb, V.: Generalizable multi-camera 3d pedestrian detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 1232–1240 (2021)

- Ran et al. [2007] Ran, Y., Weiss, I., Zheng, Q., Davis, L.S.: Pedestrian detection via periodic motion analysis. International Journal of Computer Vision 71, 143–160 (2007)

- Li et al. [2018] Li, X., Li, H., Joo, H., Liu, Y., Sheikh, Y.: Structure from recurrent motion: From rigidity to recurrency. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3032–3040 (2018). https://doi.org/10.1109/CVPR.2018.00320

- Ribnick and Papanikolopoulos [2010] Ribnick, E., Papanikolopoulos, N.: 3d reconstruction of periodic motion from a single view. International Journal of Computer Vision 90, 28–44 (2010)

- Tokta and Hocaoglu [2018] Tokta, A., Hocaoglu, A.K.: A fast people counting method based on optical flow. In: 2018 International Conference on Artificial Intelligence and Data Processing (IDAP), pp. 1–4 (2018). IEEE

- Dwibedi et al. [2020] Dwibedi, D., Aytar, Y., Tompson, J., Sermanet, P., Zisserman, A.: Counting out time: Class agnostic video repetition counting in the wild. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10387–10396 (2020)

- Zhang et al. [2020] Zhang, H., Xu, X., Han, G., He, S.: Context-aware and scale-insensitive temporal repetition counting. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 670–678 (2020)

- Zhang et al. [2021] Zhang, Y., Shao, L., Snoek, C.G.: Repetitive activity counting by sight and sound. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 14070–14079 (2021)

- Zhang et al. [2020] Zhang, H., Xu, X., Han, G., He, S.: Context-aware and scale-insensitive temporal repetition counting. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 670–678 (2020)

- Carreira and Zisserman [2017] Carreira, J., Zisserman, A.: Quo vadis, action recognition? a new model and the kinetics dataset. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6299–6308 (2017)

- Hara et al. [2017] Hara, K., Kataoka, H., Satoh, Y.: Learning spatio-temporal features with 3d residual networks for action recognition. In: Proceedings of the IEEE International Conference on Computer Vision Workshops, pp. 3154–3160 (2017)

- Chen et al. [2021] Chen, S., Sun, P., Xie, E., Ge, C., Wu, J., Ma, L., Shen, J., Luo, P.: Watch only once: An end-to-end video action detection framework. In: 2021 IEEE/CVF International Conference on Computer Vision (ICCV), pp. 8158–8167 (2021). https://doi.org/10.1109/ICCV48922.2021.00807

- Chao et al. [2018] Chao, Y.-W., Vijayanarasimhan, S., Seybold, B., Ross, D.A., Deng, J., Sukthankar, R.: Rethinking the faster r-cnn architecture for temporal action localization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1130–1139 (2018)

- Vaswani et al. [2017] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. Advances in neural information processing systems 30 (2017)

- Junejo et al. [2010] Junejo, I.N., Dexter, E., Laptev, I., Perez, P.: View-independent action recognition from temporal self-similarities. IEEE transactions on pattern analysis and machine intelligence 33(1), 172–185 (2010)

- Lian et al. [2021] Lian, D., Chen, X., Li, J., Luo, W., Gao, S.: Locating and counting heads in crowds with a depth prior. IEEE Transactions on Pattern Analysis and Machine Intelligence 44, 9056–9072 (2021)

- Arteta et al. [2016] Arteta, C., Lempitsky, V., Zisserman, A.: Counting in the wild. In: Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part VII 14, pp. 483–498 (2016). Springer

- Levy and Wolf [2015] Levy, O., Wolf, L.: Live repetition counting. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 3020–3028 (2015)

- Zheng et al. [2014] Zheng, Y., Liu, Q., Chen, E., Ge, Y., Zhao, J.L.: Time series classification using multi-channels deep convolutional neural networks. In: Web-Age Information Management: 15th International Conference, WAIM 2014, Macau, China, June 16-18, 2014. Proceedings 15, pp. 298–310 (2014). Springer

- Lu et al. [2019] Lu, E., Xie, W., Zisserman, A.: Class-agnostic counting. In: Computer Vision–ACCV 2018: 14th Asian Conference on Computer Vision, Perth, Australia, December 2–6, 2018, Revised Selected Papers, Part III 14, pp. 669–684 (2019). Springer

- Van Den Oord et al. [2017] Van Den Oord, A., Vinyals, O., et al.: Neural discrete representation learning. Advances in neural information processing systems 30 (2017)

- Zhou et al. [2021] Zhou, Z., Shin, J.Y., Gurudu, S.R., Gotway, M.B., Liang, J.: Active, continual fine tuning of convolutional neural networks for reducing annotation efforts. Medical image analysis 71, 101997 (2021)

- Liu et al. [2021] Liu, Z., Ning, J., Cao, Y., Wei, Y., Zhang, Z., Lin, S., Hu, H.: Video swin transformer. CoRR abs/2106.13230 (2021) 2106.13230

- Liu et al. [2019] Liu, W., Salzmann, M., Fua, P.: Context-aware crowd counting. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2019)

- Wan and Chan [2019] Wan, J., Chan, A.: Adaptive density map generation for crowd counting. In: 2019 IEEE/CVF International Conference on Computer Vision (ICCV), pp. 1130–1139 (2019). https://doi.org/10.1109/ICCV.2019.00122

- Tan et al. [2019] Tan, X., Tao, C., Ren, T., Tang, J., Wu, G.: Crowd counting via multi-layer regression. Proceedings of the 27th ACM International Conference on Multimedia (2019)

- Ranjan et al. [2018] Ranjan, V., Le, H., Hoai, M.: Iterative crowd counting. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) Computer Vision – ECCV 2018, pp. 278–293. Springer, Cham (2018)

- Guo [2011] Guo, H.: A simple algorithm for fitting a gaussian function [dsp tips and tricks]. IEEE Signal Processing Magazine 28(5), 134–137 (2011)

- Nadaraya [1964] Nadaraya, E.A.: On estimating regression. Theory of Probability & Its Applications 9(1), 141–142 (1964) https://doi.org/10.1137/1109020 https://doi.org/10.1137/1109020

- Chen et al. [2023] Chen, F., Datta, G., Kundu, S., Beerel, P.A.: Self-attentive pooling for efficient deep learning. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 3974–3983 (2023)

- Feichtenhofer [2020] Feichtenhofer, C.: X3D: expanding architectures for efficient video recognition. CoRR abs/2004.04730 (2020) 2004.04730

- Liu et al. [2020] Liu, Z., Wang, L., Wu, W., Qian, C., Lu, T.: TAM: temporal adaptive module for video recognition. CoRR abs/2005.06803 (2020) 2005.06803

- Huang et al. [2020] Huang, Y., Sugano, Y., Sato, Y.: Improving action segmentation via graph-based temporal reasoning. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, June 13-19, 2020, pp. 14021–14031. Computer Vision Foundation / IEEE, ??? (2020). https://doi.org/10.1109/CVPR42600.2020.01404 . https://openaccess.thecvf.com/content_CVPR_2020/html/Huang_Improving_Action_Segmentation_via_Graph-Based_Temporal_Reasoning_CVPR_2020_paper.html

- Runia et al. [2018] Runia, T.F., Snoek, C.G., Smeulders, A.W.: Real-world repetition estimation by div, grad and curl. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 9009–9017 (2018)

- Yao et al. [2023] Yao, Z., Cheng, X., Zou, Y.: Poserac: Pose saliency transformer for repetitive action counting. arXiv preprint arXiv:2303.08450 (2023)