Faster Optimization-Based Meta-Learning Adaptation Phase

Abstract

Neural networks require a large amount of annotated data to learn. Meta-learning algorithms propose a way to decrease the number of training samples to only a few. One of the most prominent optimization-based meta-learning algorithms is Model-Agnostic Meta-Learning (MAML). However, the key procedure of adaptation to new tasks in MAML is quite slow. In this work we propose an improvement to MAML meta-learning algorithm. We introduce Lambda patterns by which we restrict which weight are updated in the network during the adaptation phase. This makes it possible to skip certain gradient computations. The fastest pattern is selected given an allowed quality degradation threshold parameter. In certain cases, quality improvement is possible by a careful pattern selection. The experiments conducted have shown that via Lambda adaptation pattern selection, it is possible to significantly improve the MAML method in the following areas: adaptation time has been decreased by a factor of 3 with minimal accuracy loss; accuracy for one-step adaptation has been substantially improved.

Keywords: Model-Agnostic Meta-Learning, MAML, Adaptation Time, Adaptation Speed, Few-Shot Learning, Meta-Learning.

Nomenclature

is a number of images per class that are given for the network training.

is a number of classes the network is trained to distinguish between.

is a network input, in our case, images.

is a neural network.

is a matrix of network weights.

is a number of layers in the neural network.

is a distribution of all tasks.

is one of the tasks, consisting of Support Set , Query Set .

is a number of adaptation steps.

is a matrix of adapted weights after iterations that correspond to th task.

is an adaptation step size, .

is a learning rate, .

is an adaptation pattern, which controls which neural network layers should be updated during the adaptation procedure to the current task .

1 Introduction

The neural network accuracy for image classification has significantly improved thanks to deep convolutional neural networks. However, a very large number of images is required for such networks to train successfully. For instance, all of the ResNet [1] neural network configurations from ResNet-18 to ResNet-152 (18 and 152 layers deep correspondingly) are trained on the ImageNet dataset [2], which contains 1,281,167 images and 1,000 classes (about 1,200 samples per class). Obviously, for many of the practically significant tasks it is impossible to collect and label a dataset that large. Thus, learning deep convolutional networks from scratch might yield poor results. Because-of that, on the smaller datasets typically an approach called transfer learning is used instead. That is, an ImageNet pretrained network of a particular architecture is taken and then further finetuned on the target (smaller) dataset [1, 3, 4]. However, training on few examples per class is still a challenge. This contrasts to how we, humans, learn, when even a single example given to a child might be enough. Also, it is hard to estimate the quality of a certain ImageNet pretrained network on the target dataset. Hence, we get a model selection problem: if the model A is better than the model B on ImageNet, will it be better on our small dataset? A promising approach to resolving both of these problems is to use meta-learning or its benchmark known as few-shot learning. Meta-learning trains the network on a set of different tasks, which are randomly sampled from the whole space of tasks. By learning the network in such a way, it is assumed that the network will learn features that are relevant to all of the tasks and not only to the single one, i.e., will learn more general features.

In this work we focus on one of the most prominent optimization-based meta-learning methods, called Model-Agnostic Meta-Learning (MAML) [5]. This method has become a keystone, and as it will be shown in the literature overview section, many of the newer method base on its ideas. Training of the MAML method is split into the so-called adaptation and meta-gradient update phases. It has been shown that the adaptation phase of the MAML is quite slow to perform [6], and in general, slow neural network execution is a major problem for applications [7]. In this work we introduce gradient update patterns, i.e., a selective update of the neural network weights during the adaptation phase.

The purpose of this work is to show that by carefully selecting the newly-proposed gradient update pattern, it is possible to increase the speed of MAML adaptation phase, and to significantly improve MAML performance in case, when only 1 adaptation phase is used. The testing results will be shown on a publicly available few-shot learning dataset CIFAR-FS [8].

2 Problem Statement

The goal behind meta-learning is to train a neural net-work , that is capable of adapting to the new previously unknown tasks given a small number of examples. Meta-learning is also said to be learning to learn problem. The training procedure is defined using a concept of tasks, that are sampled from the whole task space of the problem domain. The task is a tuple , consisting of the so-called Support Set and Query Set [5, 9, 10, 11]. Support Set is used to adapt (or train) the network to the new task. The set is small. are the network inputs, are the expected predictions. The number of examples per class is denoted as and written as -shot. is typically in range from 1 to 20, although no hard upper-bound is defined. are the query inputs and expected outputs correspondingly, which are used to evaluate the network. Number of classes the network should distinguish between is denoted as -way.

Next, we define the training procedure for optimization-based meta-learning, which this paper is focused on. It is defined in 2 steps: 1) adaptation step, which computes adaptation weights in a form of a function , that minimizes task-specific error ; 2) meta-gradient update, which updates the meta-weights . The idea behind such training procedure is that by finding good weights , it will be possible to adapt to new previously unseen tasks with few training examples in the adaptation procedure. For classification, the loss function used is typically cross-entropy:

| (1) |

3 Literature Overview

The meta-learning approaches are mainly divided into 3 broad categories [12]: metric-based, model-based and optimization-based. Representatives of each group differ in the neural network design and training procedure. Applications exist in literally every field of machine learning [5, 13, 14, 15], such as NLP, Reinforcement Learning, Face Verification, etc. In this work we focus on Image Classification.

Typically meta-learning methods are split into the following categories:

-

1.

Metric-based methods, where the goal is to define a neural network architecture that produces an embedding into a metric space and a similarity measure (metric). The distance between embeddings of the same class should be smaller than that of the different classes. Examples of such methods include Siamese Networks [16], Matching Networks [17], Prototypical Networks [9].

-

2.

In model-based methods the network architecture is designed, so that the model has explicit memory cells, which help the network to adapt quickly. Memory-Augmented Neural Networks [18] might serve as an illustrative example of this approach.

-

3.

In contrast to previous categories, optimization-based learning does not involve network architecture change, which means that conventional architectures for image classification can be used.

One of the quintessential optimization-based methods is MAML [5]. It defines the training procedure as a 2nd-order optimization problem. The method applicability has been shown in regression, classification and reinforcement learning. Two popular datasets were considered for image classification: Omniglot [19] and miniImageNet [10, 17], where MAML has beaten many of the previous methods with a margin. After MAML has been introduced, a lot of works have proposed its modifications. Reptile [20] has simplified MAML training scheme, MAML++ [11] has given practical recommendations on improving MAML training stability. In has been noted that while MAML++ has introduced more parameters to the network, total training time has decreased thanks to the performance optimizations proposed. Authors of Meta-SGD [21] note that by learning not only network weights, but also separate update coefficients for each of the weights, it is possible to achieve higher accuracy. However, the network training time and memory consumption has significantly increased as twice the number of the parameters should be optimized.

In contrast to previous works, in this paper we focus on improving the network adaptation and not training time. We assume that after the initial training, the network can be adapted to multiple tasks in an online format. Thus, minimizing adaptation time is an important problem. The results obtained in the paper will be applicable to many of the optimization-based algorithms, including but not limited to the ones mentioned above.

4 Materials and Methods

In this work we propose a modification to the MAML algorithm. This class of algorithms is defined in terms of adaptation and meta-gradient update phases. The algorithm starts by randomly sampling a training task . To sample a task means to 1) randomly select classes from all classes that are available in the dataset split (training, validation or test); 2) randomly select images per each of the classes for the Support Set and images for the Query Set. The first phase of the algorithm is adaptation, where MAML minimizes loss function Eq. 1 on the Support Set by performing several stochastic gradient descent steps. The algorithm iteratively builds model weights via Eq. 2, note that :

| (2) |

Having iteratively built the task specific weights , the algorithm updates the meta-weights using Eq. 3:

| (3) |

In essence, in Eq. 3 the algorithm updates the meta-weights by averaging computed loss function Eq. 1 on the Query Set for the neural network with weights on several tasks , i.e., in this step the algorithm backpropagates through the losses of all the task-specific adaptations. Throughout the paper we use 4 tasks for the meta-update step. Note, that in Eq. 2 task-specific weights are computed on the Support Set, while in Eq. 3 Query Set is used for the loss computation. Also, in contrast to the conventional neural network training procedure the loss function is computed twice: first, to compute the adaptation weights in Eq. 2; second, to compute the resulting adaption loss in Eq. 3. Additionally, in Eq. 2 the gradient is taken by task-specific weights , and in Eq. 3 the gradient is taken by the meta-weights . Thus, as can be seen from Eqs. 2 and 3 the method requires Hessian computation during the meta-gradient update. Hence, this is a second-order optimization method. The whole training procedure is depicted in Algorithm 1. A more detailed description can be found in the original paper [5].

Next, we define our modified adaptation procedure. Given a convolutional neural network that has layers, we define an adaptation pattern Eq. 4, where is an indicative function as defined in Eq. 5, which indicates layers of the network that should be updated during backpropagation.

| (4) |

| (5) |

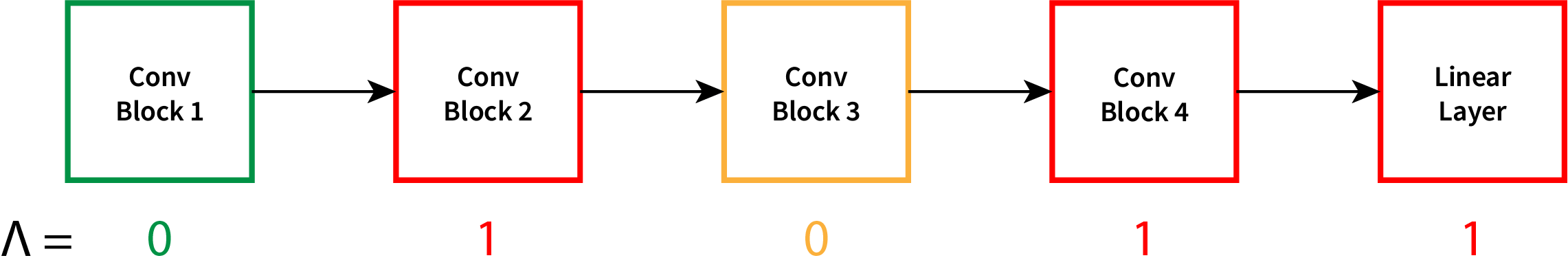

We say that pattern is full if . In this case our adaptation phase will be equivalent to the one proposed in MAML. We consider all possible patterns , except , when no weights can be updated; thus, no adaptation is possible. We assume that updating only certain weights might be useful, because the neural networks tend to learn features that differ in complexity, the closer the layer is to the input the simple the features are [22]. Also, authors of Meta-SGD [21] have shown that by learning weight-specific learning rates the resulting quality was superior to the original MAML algorithm. However, Meta-SGD approach was much slower to train as both weights and learning rates have to be learned during the training procedure. Training time in our approach is intact. In contrast to previous works, we propose to update only certain weights; thus, essentially freezing some layers. This allows us to decrease gradient computations required during the adaptation phase as is shown in Fig. 1 for a convolutional network that contains 4 convolutional and a single fully connected (linear) layer ().

In Fig. 1 the backpropagation pass goes in the direction opposite to arrows. The architecture is taken as an example and can be arbitrary in practice. For the considered pattern :

-

1.

In the convolutional block 4 and the linear layers both the gradient is computed and the weights are updated.

-

2.

In convolutional block 3 the weights are not updated, but the gradients are computed as convolutional block 2 requires weight update.

-

3.

In convolutional block 1 no gradients computation or weight update are performed.

Given the above-described pattern description, the updated adaptation formula will look as follows:

| (6) |

5 Experiments

To conduct the experiments, we have reimplemented the MAML algorithm. The following paragraph describes the details.

The authors of MAML have defined a convolutional neural network architecture and have used it for miniImageNet experiments. This network is commonly referred to as “CNN4” in the literature. It has 4 convolutional blocks, followed by a linear layer. Each of the blocks has a convolutional layer with a kernel size of 3 and a padding of 1, followed by the Batch Normalization [23], ReLU activation and Max Pooling with a kernel size of 2. Number of filters in the convolutional layers is a configurable parameter. The authors have used 32, which we follow. Number of outputs in the linear layer is defined by for -way classification problem. Training is performed via Adam [24] gradient descent method as meta-optimizer with learning rate of , and as the adaptation step size. Each model has been trained for 600 epochs. The authors used meta-batch size of 2 for 5-shot and 4 for 2-shot experiments. Instead, we consistently use meta-batch size of 4 as it leads to slightly better performance on CIFAR-FS [8] dataset during our experiments. Each epoch has 100 randomly sampled tasks . For the gradient update samples are taken for -shot -way classification problem for training and 15 samples per class for evaluation, following [10].

In addition, we have modified the network adaptation procedure, so that it updates only weights defined by pattern as defined in Eqs. 4, 5 and 6.

For the experiments we have used the novel CIFAR-FS [8] dataset. It has been constructed from a well-known CIFAR-100 [25] classification dataset. It has images of different kinds of mammals, reptiles, flowers, man-made things, etc. The images are in color and have a size of . In [8] it has been suggested to split 100 classes into train, validation and test sets. If it has been the non-few-shot neural network training, we would expect all of the 100 classes to be equally represented in each of the sets, only the images themselves would have been split. However, in case of few-shot learning different disjoint classes are taken into each subset. Specifically, 64, 16, and 20 classes have been selected for training, validation, and test set correspondingly. The exact classes that go into each split are important and are defined in [8]. By using different classes for training and testing, the adaptation to the new classes can be better estimated. After such training the model is expected to quickly adapt to the new unseen classes. We have taken the CIFAR-FS dataset for our experiments as it hasn’t been analyzed by the MAML authors and is also faster to compute than miniImageNet.

All of the training procedures and time measurements were done on our own MAML implementation and tested on NVIDIA GTX 1050Ti GPU.

6 Results

Accuracies and timings for our MAML implementation on CIFAR-FS are presented in Table 1.

| 1-shot | 5-shot | 1-shot | 5-shot | |

|---|---|---|---|---|

| 2-way | 2-way | 5-way | 5-way | |

| Accuracy | 77.2 % | 87.6 % | 51.7 % | 70.3 % |

| Time | 38.43 ms | 40.70 ms | 41.67 ms | 45.35 ms |

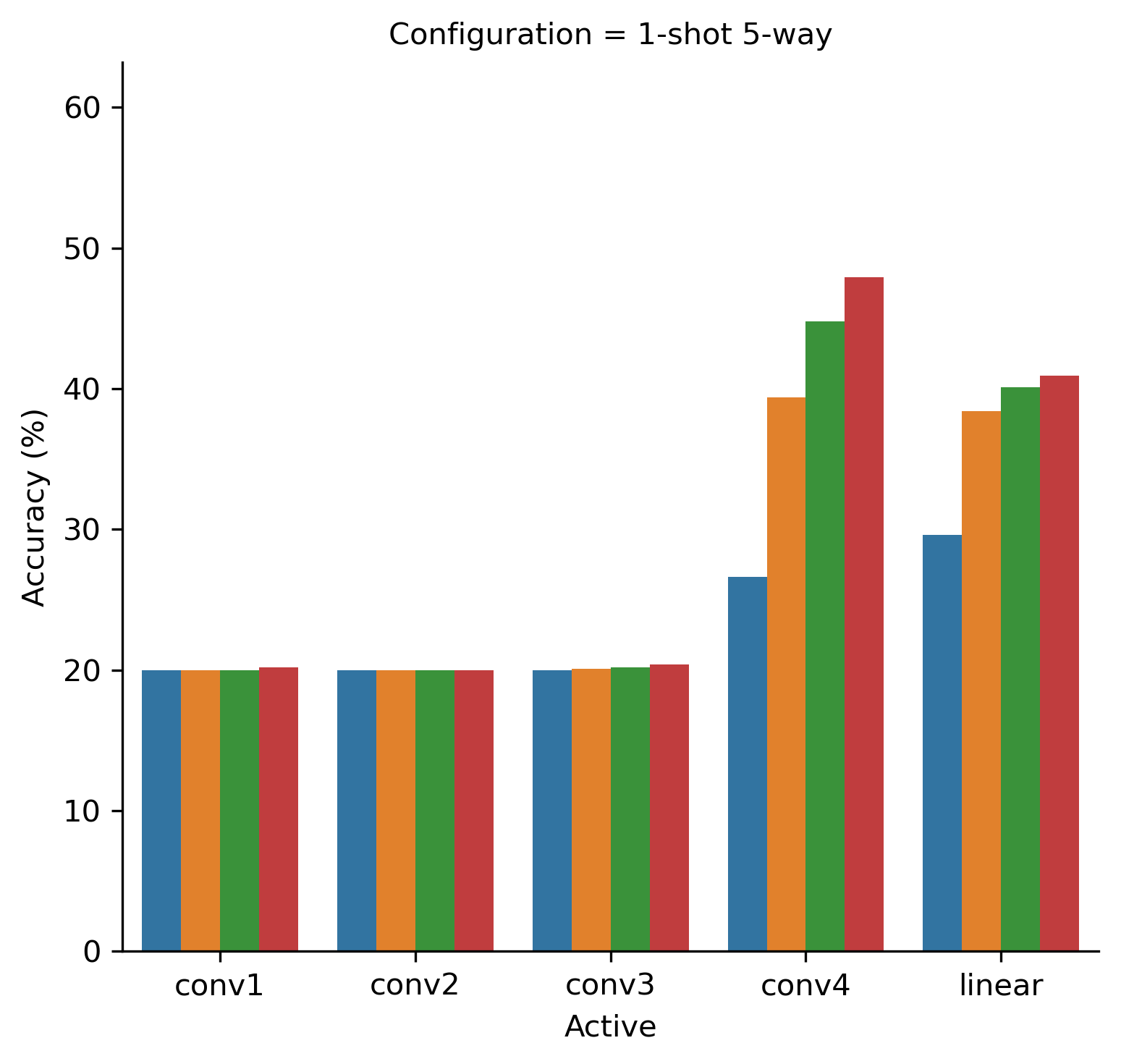

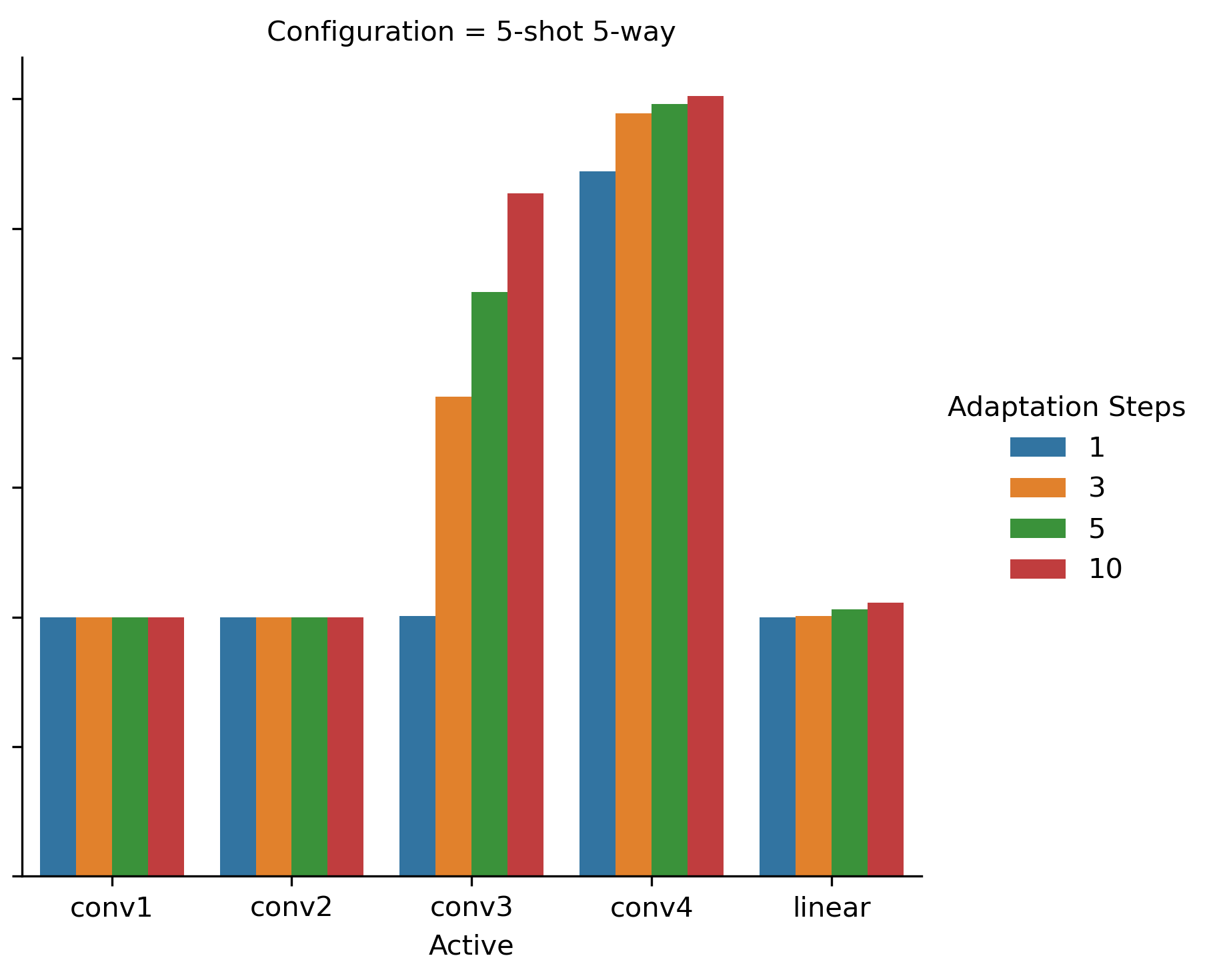

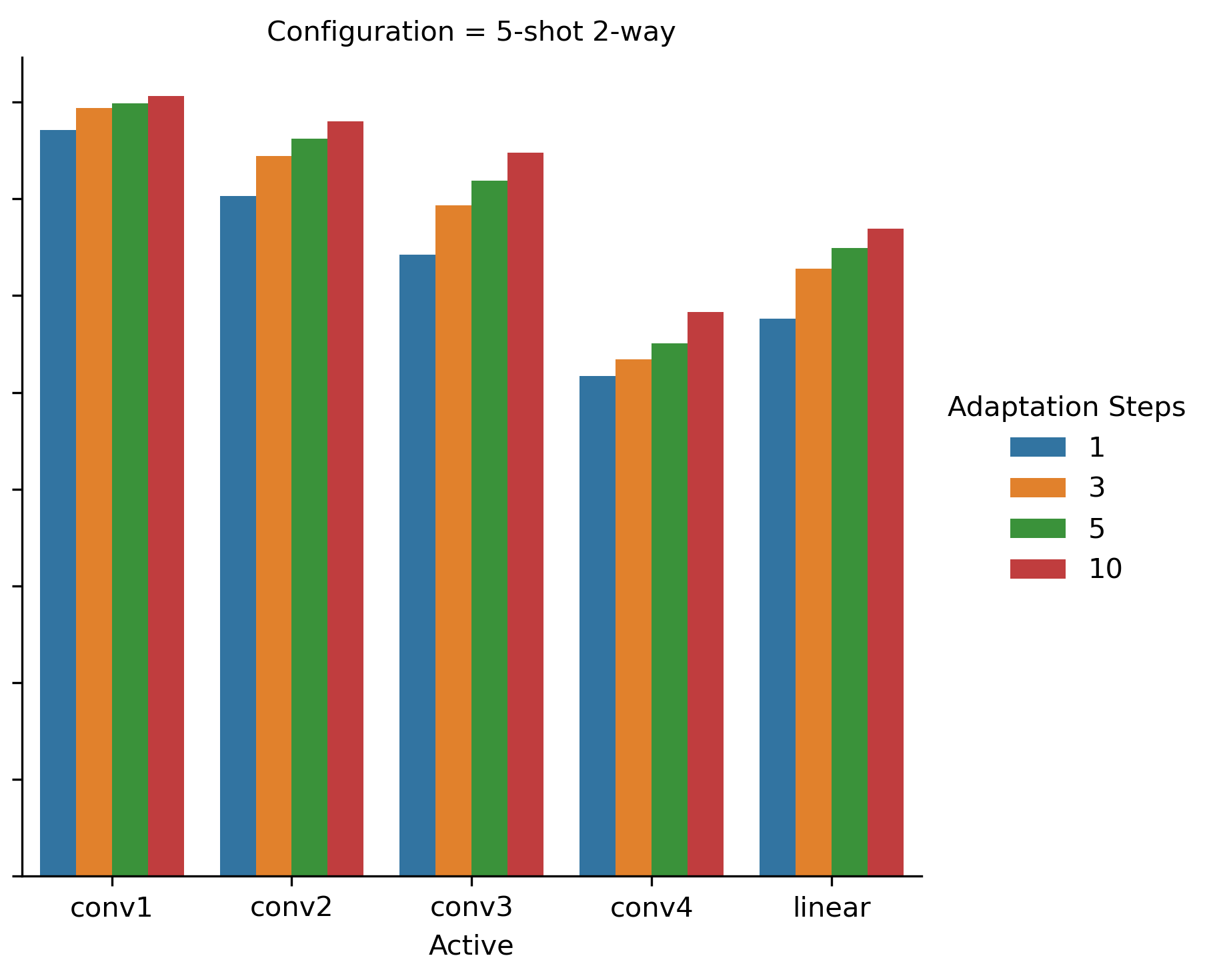

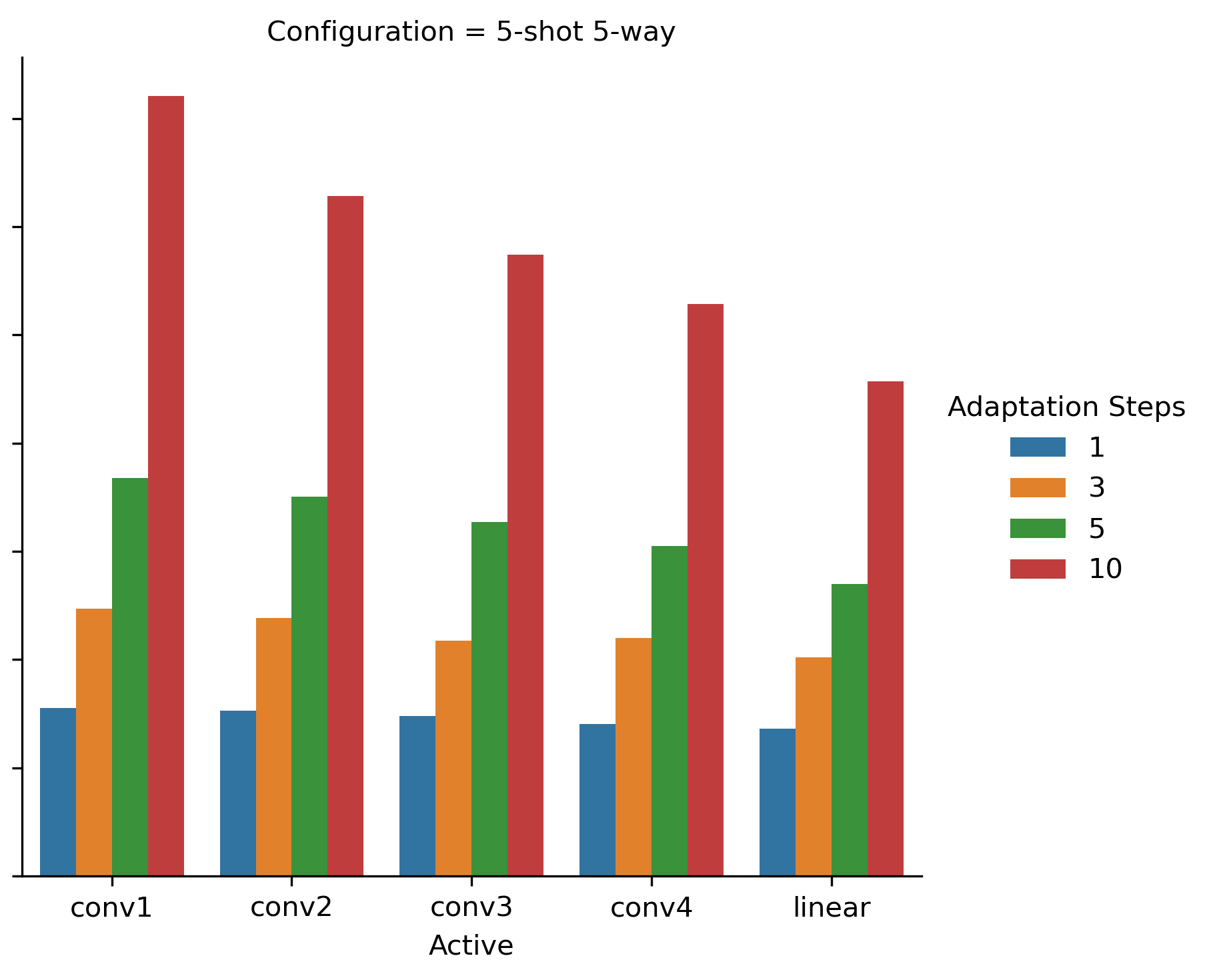

In Eq. 6 we have proposed a modified adaptation formula, where only a part of weights is updated during the adaptation procedure. To begin with, let us consider only trivial patterns , where only one network layer is updated during the adaptation procedure. In Figs. 2(a) and 2(b) we conduct the experiment for 1-shot 5-way and 5-shot 5-way configurations correspondingly. The results are presented on the test set. To see the impact of the number of adaptation steps, we show the accuracies for (default) and 1, 3, 5 adaptation steps. As it can be seen, the model accuracy differs significantly between the configurations.

For 1-shot 5-way, learning one of the first three convolutional layers only has no effect, the accuracy remains on the level of random guessing (20%). However, training either convolutional layer 4 or the last linear layer improves the model accuracy. Note, that the number of parameters in layers differs. In Table 2, we show the number of parameters in each layer. Note, that the final layer has different number of parameters depending on output classes. It can be seen, that the first convolutional layer and the final linear (fully connected) layers have fewer parameter than inner convolutional blocks.

For 5-shot 5-way scenario we see a different picture. Only convolutional layers 3 and 4 have positive impact on the performance if adapted alone. Interestingly, the number of adaptation steps has a significant impact on the performance with only convolutional layer #3 enabled. As we will see later, such an impact is higher, than when all network layers are updated during the adaptation.

| Layer Name | # Parameters |

|---|---|

| Conv Block 1 | 960 |

| Conv Block 2 | 9,312 |

| Conv Block 3 | 9,312 |

| Conv Block 4 | 9,312 |

| Linear | |

| 2-way | 1,602 |

| 5-way | 4,005 |

| Total | |

| 2-way | 30,498 |

| 5-way | 32,901 |

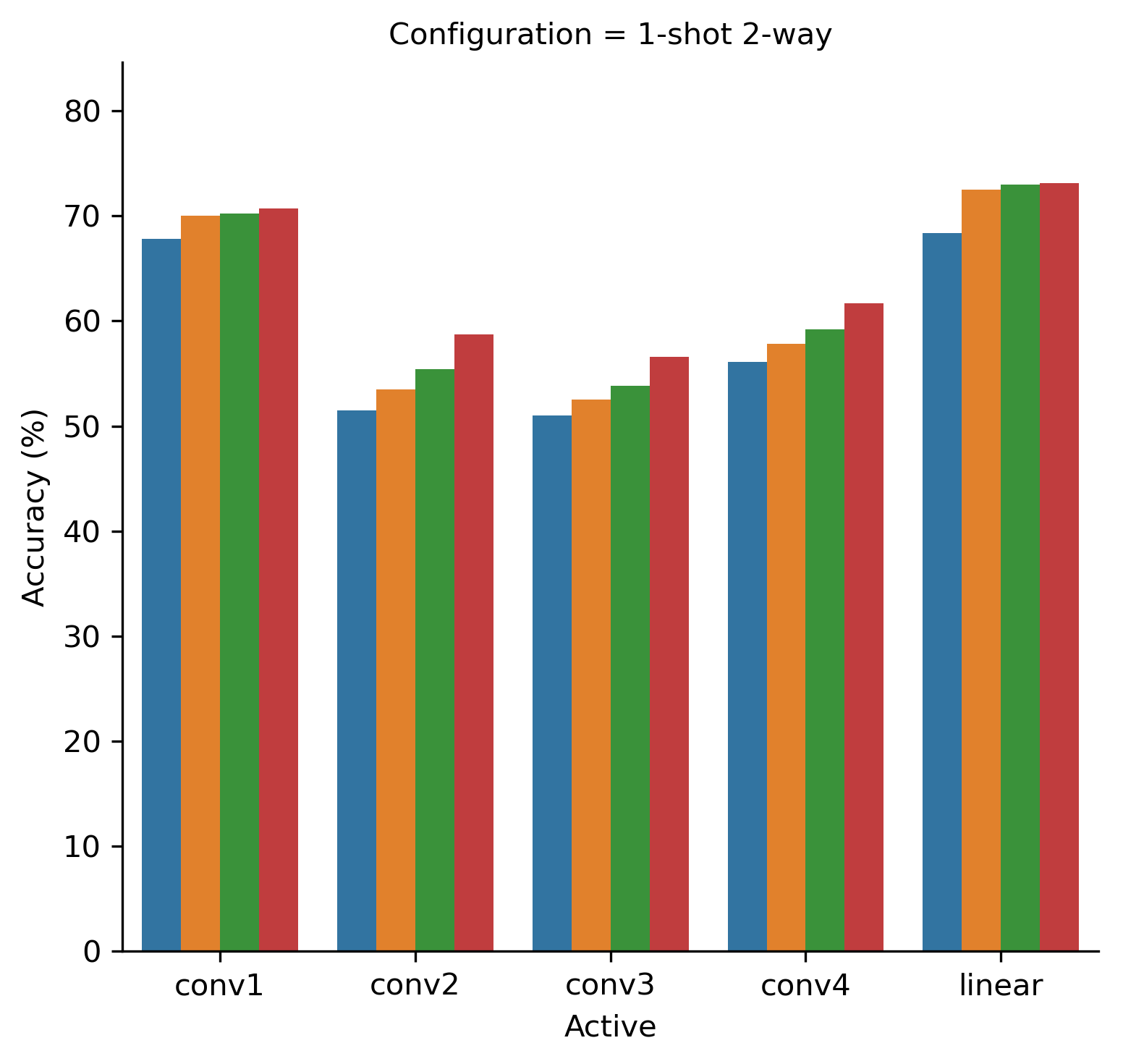

In Figs. 3(a) and 3(b) we depict a similar experiment for 1-shot 2-way and 5-shot 2-way configurations correspondingly. Note, that random guessing baseline for these configurations is now at 50%, so the lower bound for accuracy is now higher than in Fig. 2. Here we see an opposite trend, where updating the first layers also has a positive impact on the resulting accuracy. Contrasting to previous experiment, updating exclusively the convolutional block 4 doesn’t provide the best results in either configuration.

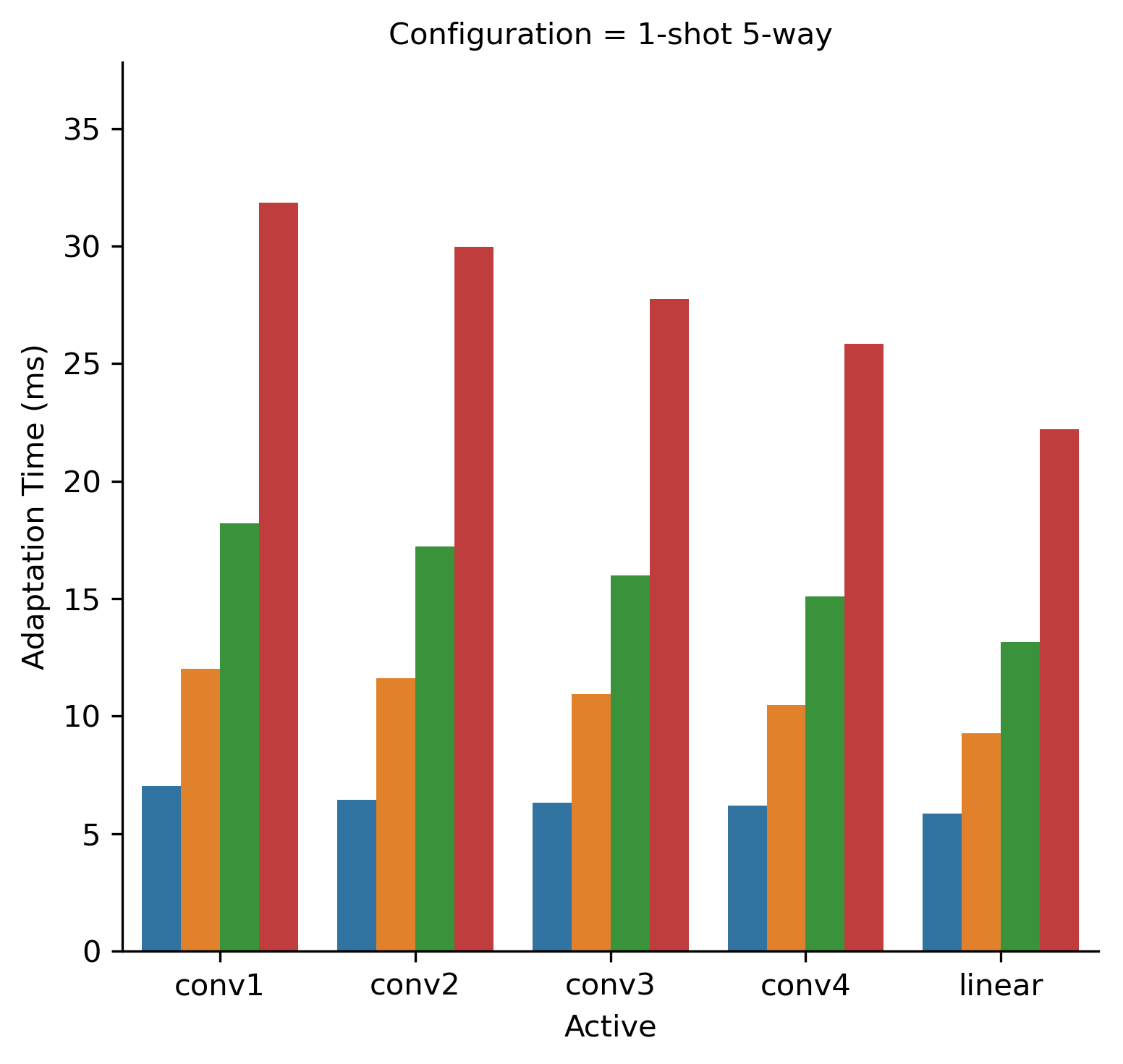

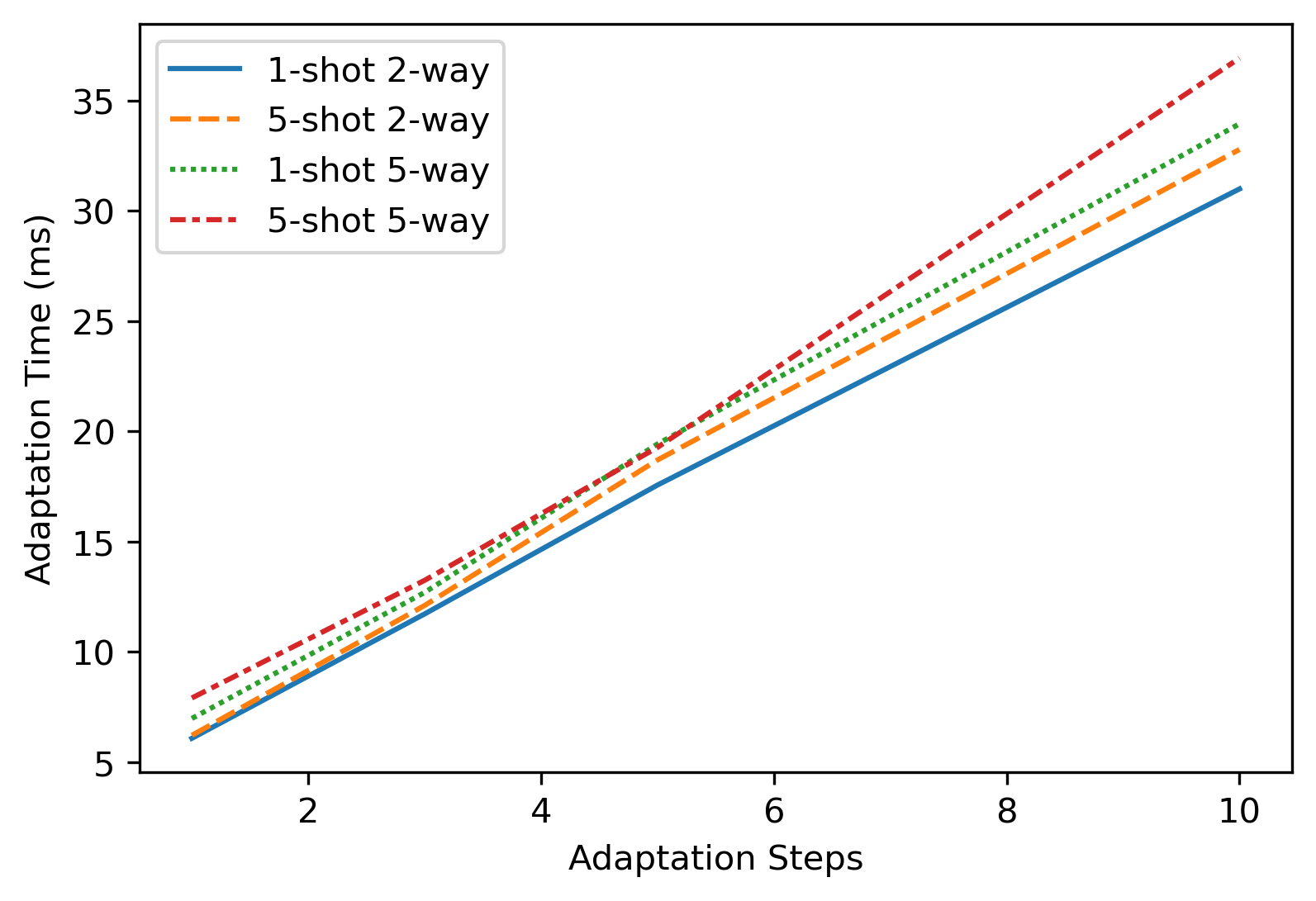

In Fig. 4 we show adaptation time for 1-shot 5-way and 5-shot 5-way configurations for all trivial patterns. As can be seen, selected pattern and the number of adaptation steps have a significant impact on the adaptation speed. A similar trend is observed in 2-way configurations.

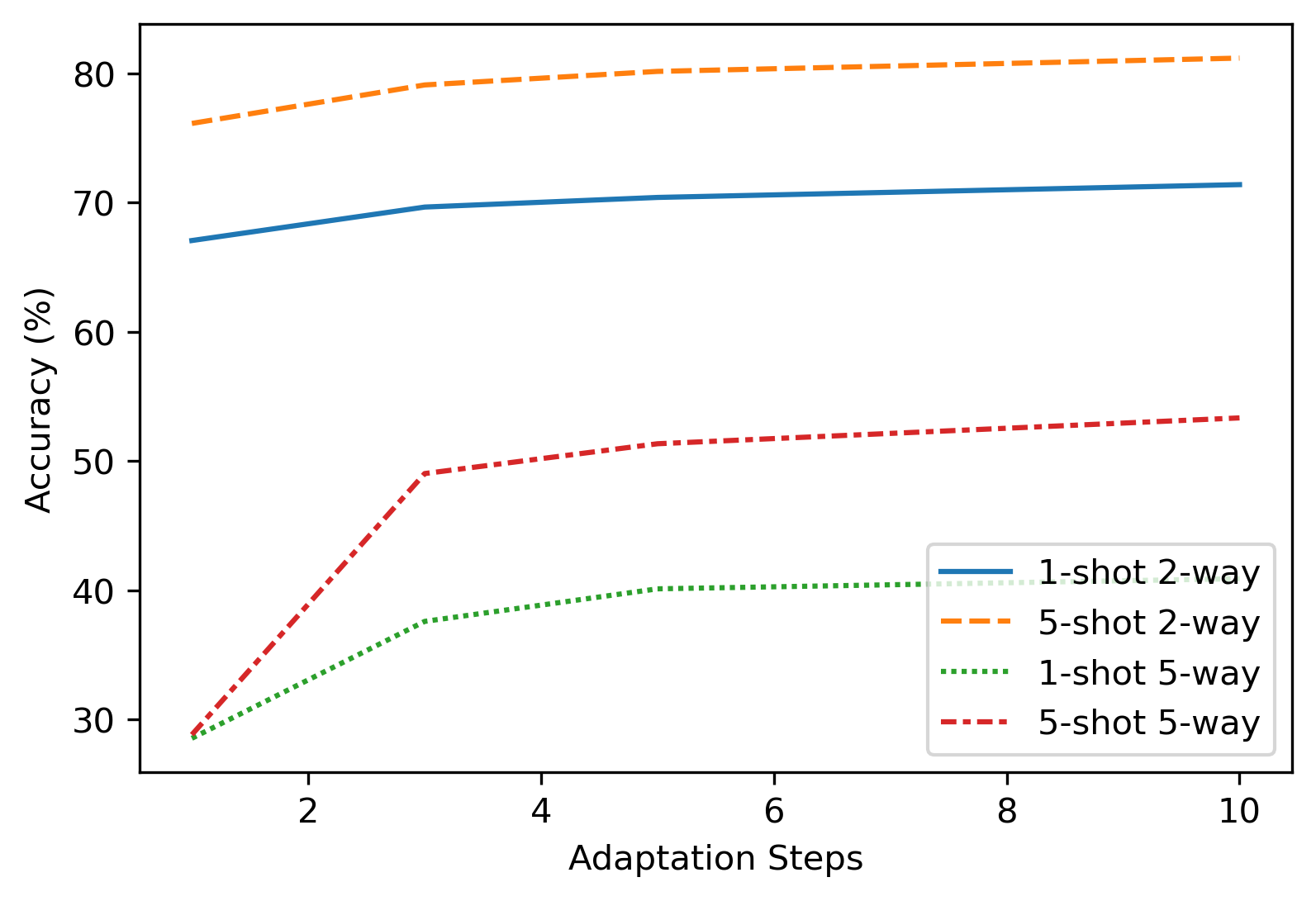

In Fig. 5 we show the model accuracy for each of the four scenarios and in Fig. 6 we depict the corresponding timings, both shown with respect to the number of the adaptation steps. As before, the experiments have been conducted for and 10 adaptation steps. The results between those reference points have been linearly interpolated. The presented accuracies and timings are averaged for all 31 possible patterns. Note, that throughout the article we exclude pattern , as no weights can be changed for such pattern, therefore no adaptation is possible. As can be seen, while the adaptation time grows linearly with the number of adaptation steps, the accuracy growth plateaus at around 5 adaptation steps. Actually, for the full pattern increasing the number of adaptation steps from 5 to 10 has less than 0.3% improvement in accuracy. In typical practical scenarios such an improvement is insignificant. Thus, we suggest that performing 10 adaptation steps is redundant.

Next, we try to search for such a pattern and number of adaptation steps, so that the resulting accuracy drops no more than 0.07 times the full pattern accuracy. We see such a quality degradation threshold reasonable for practical applications. Obviously, the approach we propose can be applied with an arbitrary quality degradation threshold. We search for such patterns and show them in Table 3. Based on this table, we suggest using the , which offers a factor of 3.0 speed improvement with an insignificant quality loss. It can be seen that pattern also suits the specified criteria and has a slightly higher (factor of 3.1) performance improvement. However, it has a significantly lower performance for both of the 2-way configurations, degrading on 2.5% and 3.2% relative to the best selected pattern . We consider such a degradation not worth the additional speed up. The fact that enabling first CNN layer is significant for the 2-way learning accuracy, closely follows the presented above description of Fig. 3. Also, not to be mistaken, in Figs. 2, 3 and 4, we had only one layer updated during the adaptation phase (thus ). However, the best selected pattern has all expect one layer updated.

| Adaptation | Pattern | 1-shot 2-way | 5-shot 2-way | 1-shot 5-way | 5-shot 5-way | Mean Adaptation | Relative |

|---|---|---|---|---|---|---|---|

| Steps | (%) | (%) | (%) | (%) | Time (ms) | Speedup | |

| 3 | 0,1,1,1,1 | 74.7 | 83.2 | 49.3 | 69.7 | 13.3 | 3.1 |

| 3 | 1,0,1,1,1 | 76.6 | 85.9 | 49.3 | 69.8 | 13.9 | 3.0 |

| 3 | 1,1,1,1,1 | 76.6 | 87.2 | 49.3 | 70.0 | 15.0 | 2.8 |

| 5 | 0,1,1,1,1 | 75.2 | 83.9 | 51.5 | 69.9 | 20.0 | 2.1 |

| 5 | 1,0,1,1,1 | 76.9 | 86.2 | 51.4 | 70.1 | 21.1 | 2.0 |

| 5 | 1,1,1,1,1 | 77.0 | 87.4 | 51.6 | 70.2 | 22.6 | 1.8 |

| 10 | 0,1,1,1,1 | 75.4 | 84.6 | 51.7 | 70.1 | 36.1 | 1.2 |

| 10 | 1,0,1,1,1 | 77.1 | 86.6 | 51.7 | 70.1 | 38.6 | 1.1 |

| 10 | 1,1,1,1,1 | 77.2 | 87.6 | 51.7 | 70.3 | 41.5 | 1.0 |

Finally, we pose a question, whether updating only a part of the neural network weights can improve the method accuracy. It turns out, that in an extreme case of learning with a single adaptation step (), significant improvement in 5-way adaptation performance is achieved by updating with a partial pattern . This is shown in Table 4.

| Pattern | 1-shot | 5-shot | 1-shot | 5-shot |

|---|---|---|---|---|

| 2-way | 2-way | 5-way | 5-way | |

| 74.3 % | 86.0 % | 36.8 % | 20.4 % | |

| 74.3 % | 83.1 % | 36.9 % | 53.1 % |

We have also performed a search of all cases, when our approach gives better results than the original with . The results are shown in Table 5.

| 1-shot 2-way | 5-shot 2-way | 1-shot 5-way | 5-shot 5-way | |

|---|---|---|---|---|

| Accuracy on | 74.3 % | 86.0 % | 36.8 % | 20.4 % |

| Accuracy on selected | 74.5 % | 86.2 % | 36.9 % | 54.8 % |

| Selected Pattern | 1,1,1,0,1 | 1,1,1,0,1 | 1,1,0,1,1 | 1,1,0,1,0 |

7 Discussion

In [22] it has been shown that each trained neural network’s convolutional layer has a different meaning. The first layer learns to detect simple features, like edges, lines or color gradients. The second layer increases the complexity and understands simple shapes, e.g., circles, corners or stripes, while the last layers learn high-level features, such as eyes, faces, text-like objects, etc. The exact features learned, obviously, depend on the training dataset, still such logic is retained. In the few-shot classification scenario the tasks differ by the types of objects that the model has to classify. As we have described in the experiments section, train and test sets have disjoint classes included. Thus, it might be reasonable to expect that only the last layers of the network should be changed to adapt to the new tasks and classes. This is exactly what we see in the case of 5-way classification as is shown in Fig. 2. However, such a statement contradicts to the experiment results from Fig. 3.

To understand the contradiction, we examine the original CIFAR-100 dataset. There image labels (classes) are structured to form larger coarse groups. For instance, coarse class (or superclass) “aquatic mammals” contains “beaver”, “dolphin”, “otter”, “seal”, “whale”. Other examples of superclasses include “fish”, “large carnivores”, “household electrical devices”, etc. From the examples we have picked, it becomes obvious that images from different classes have significantly different color gamut. Images of aquatic mammals and fish typically contain blue and gray colors, while large carnivores might have more yellow and green. In case of 2-way classification it is more probable that the network has to classify only between subclasses of the same superclass. Consequently, we assume that if the first layer is updated in a 2-way few-shot learning scenario, the new weights better adjust to features with different color gamut. We see this as an analogy to how a human eye works: it adjusts the amount of light coming to the retina by expanding or contracting the pupil, so that it becomes easier to see the details.

From Table 3 we see that keeping the inner layers stale is the most fruitful way to improve the performance, with little to no quality loss. A substantial increase in adaptation speed has been achieved with a target quality loss set to 7 % relative to the original pattern and adaptation steps. The actual quality loss turns out to be even smaller as we have skipped slightly faster, but worse pattern . Thereby, with the best and adaptation steps, we achieve a factor of 3.0 speed improvement. Our quality losses are the following: 1-shot 2-way is 0.78 %, 5-shot 2-way is 1.97 % 1-shot 5-way is 4.86 % and 5-shot 5-way is 0.71 %. Even smaller quality losses can be achieved by consulting Table 3. Note, that these are relative quality losses. If the losses are computed in absolute terms, they are even more negligible. Thus, we have achieved a significant adaptation time reduction with small-enough quality loss.

We also discuss a way to improve algorithm quality by selecting a pattern . In an extreme case of a single adaptation step, simply avoiding the inner layer update has helped to improve the overall model quality as is shown in Table 4. In addition, we have been able to find such a pattern for each of the few-shot learning configurations, such that it improves the model performance for adaptation step in Table 5. It is curious that no such behavior is observed in cases when . To the best of our knowledge such behavior has not been previously observed and should be further investigated.

8 Conclusion

MAML is an optimization-based few-shot learning method that is able to learn by using only a few samples per class. Many algorithms follow the learning scheme proposed in MAML. In this work we solve its problems of 1) long adaptation time, and 2) poor performance in cases when a single adaptation step is used.

In this work pattern method has been introduced. This method reduces the number of gradient computations in MAML adaptation phase. By selecting an appropriate adaptation pattern, we have significantly improved the method in the following areas: 1) long MAML adaptation time has been decreased by the factor 3 with minimal accuracy loss; 2) accuracy in cases when only a single adaptation step is used has been substantially improved.

The improvement of adaptation time of the widespread MAML algorithm will enable its applicability on less powerful devices and will in general decrease the time needed for the algorithm to adapt to new tasks.

Prospects for further research are to investigate a way of a more robust automatic pattern selection scheme for an arbitrary training dataset and network configuration.

Funding

The work is supported by the state budget scientific research project of Dnipro University of Technology “Development of New Mobile Information Technologies for Person Identification and Object Classification in the Surrounding Environment” (state registration number 0121U109787).

References

- [1] Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun “Deep Residual Learning for Image Recognition” In 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, June 27-30, 2016 IEEE Computer Society, 2016, pp. 770–778 DOI: 10.1109/CVPR.2016.90

- [2] Jia Deng et al. “ImageNet: A large-scale hierarchical image database” In 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2009), 20-25 June 2009, Miami, Florida, USA IEEE Computer Society, 2009, pp. 248–255 DOI: 10.1109/CVPR.2009.5206848

- [3] Gao Huang, Zhuang Liu, Laurens Maaten and Kilian Q. Weinberger “Densely Connected Convolutional Networks” In 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, July 21-26, 2017 IEEE Computer Society, 2017, pp. 2261–2269 DOI: 10.1109/CVPR.2017.243

- [4] Sergey Zagoruyko and Nikos Komodakis “Wide Residual Networks” In Proceedings of the British Machine Vision Conference 2016, BMVC 2016, York, UK, September 19-22, 2016 BMVA Press, 2016 URL: http://www.bmva.org/bmvc/2016/papers/paper087/index.html

- [5] Chelsea Finn, Pieter Abbeel and Sergey Levine “Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks” In Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6-11 August 2017 70, Proceedings of Machine Learning Research PMLR, 2017, pp. 1126–1135 URL: http://proceedings.mlr.press/v70/finn17a.html

- [6] Aravind Rajeswaran, Chelsea Finn, Sham M. Kakade and Sergey Levine “Meta-Learning with Implicit Gradients” In Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, December 8-14, 2019, Vancouver, BC, Canada, 2019, pp. 113–124 URL: https://proceedings.neurips.cc/paper/2019/hash/072b030ba126b2f4b2374f342be9ed44-Abstract.html

- [7] Kostiantyn Khabarlak and Larysa Koriashkina “Fast Facial Landmark Detection and Applications: A Survey” In Journal of Computer Science and Technology 22.1, 2022, pp. 12–41 DOI: 10.24215/16666038.22.e02

- [8] Luca Bertinetto, João F. Henriques, Philip H.. Torr and Andrea Vedaldi “Meta-learning with differentiable closed-form solvers” In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019 OpenReview.net, 2019 URL: https://openreview.net/forum?id=HyxnZh0ct7

- [9] Jake Snell, Kevin Swersky and Richard S. Zemel “Prototypical Networks for Few-shot Learning” In Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, December 4-9, 2017, Long Beach, CA, USA, 2017, pp. 4077–4087 URL: https://proceedings.neurips.cc/paper/2017/hash/cb8da6767461f2812ae4290eac7cbc42-Abstract.html

- [10] Sachin Ravi and Hugo Larochelle “Optimization as a Model for Few-Shot Learning” In 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings OpenReview.net, 2017 URL: https://openreview.net/forum?id=rJY0-Kcll

- [11] Antreas Antoniou, Harrison Edwards and Amos J. Storkey “How to train your MAML” In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019 OpenReview.net, 2019 URL: https://openreview.net/forum?id=HJGven05Y7

- [12] Lilian Weng “Meta-Learning: Learning to Learn Fast” In lilianweng.github.io, 2018 URL: https://lilianweng.github.io/posts/2018-11-30-meta-learning/

- [13] Wenpeng Yin “Meta-learning for Few-shot Natural Language Processing: A Survey” In CoRR abs/2007.09604, 2020 arXiv: https://arxiv.org/abs/2007.09604

- [14] Yaqing Wang, Quanming Yao, James T. Kwok and Lionel M. Ni “Generalizing from a Few Examples: A Survey on Few-shot Learning” In ACM Comput. Surv. 53.3, 2020, pp. 63:1–63:34 DOI: 10.1145/3386252

- [15] Yandong Guo and Lei Zhang “One-shot Face Recognition by Promoting Underrepresented Classes” In CoRR abs/1707.05574, 2017 arXiv: http://arxiv.org/abs/1707.05574

- [16] Gregory Koch, Richard Zemel and Ruslan Salakhutdinov “Siamese Neural Networks for One-Shot Image Recognition” In ICML Deep Learning Workshop 2 Lille, 2015

- [17] Oriol Vinyals et al. “Matching Networks for One Shot Learning” In Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems 2016, December 5-10, 2016, Barcelona, Spain, 2016, pp. 3630–3638 URL: https://proceedings.neurips.cc/paper/2016/hash/90e1357833654983612fb05e3ec9148c-Abstract.html

- [18] Adam Santoro et al. “Meta-Learning with Memory-Augmented Neural Networks” In Proceedings of the 33nd International Conference on Machine Learning, ICML 2016, New York City, NY, USA, June 19-24, 2016 48, JMLR Workshop and Conference Proceedings JMLR.org, 2016, pp. 1842–1850 URL: http://proceedings.mlr.press/v48/santoro16.html

- [19] Brenden M. Lake, Ruslan Salakhutdinov and Joshua B. Tenenbaum “Human-Level Concept Learning through Probabilistic Program Induction” In Science 350.6266, 2015, pp. 1332–1338 DOI: 10.1126/science.aab3050

- [20] Alex Nichol, Joshua Achiam and John Schulman “On First-Order Meta-Learning Algorithms” In CoRR abs/1803.02999, 2018 arXiv: http://arxiv.org/abs/1803.02999

- [21] Zhenguo Li, Fengwei Zhou, Fei Chen and Hang Li “Meta-SGD: Learning to Learn Quickly for Few Shot Learning” In CoRR abs/1707.09835, 2017 arXiv: http://arxiv.org/abs/1707.09835

- [22] Matthew D. Zeiler and Rob Fergus “Visualizing and Understanding Convolutional Networks” In Computer Vision - ECCV 2014 - 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part I 8689, Lecture Notes in Computer Science Springer, 2014, pp. 818–833 DOI: 10.1007/978-3-319-10590-1“˙53

- [23] Sergey Ioffe and Christian Szegedy “Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift” In Proceedings of the 32nd International Conference on Machine Learning, ICML 2015, Lille, France, 6-11 July 2015 37, JMLR Workshop and Conference Proceedings JMLR.org, 2015, pp. 448–456 URL: http://proceedings.mlr.press/v37/ioffe15.html

- [24] Diederik P. Kingma and Jimmy Ba “Adam: A Method for Stochastic Optimization” In 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, 2015 URL: http://arxiv.org/abs/1412.6980

- [25] Alex Krizhevsky “Learning Multiple Layers of Features from Tiny Images”, 2009