Fast Saturating Gate for Learning Long Time Scales with

Recurrent Neural Networks

Abstract

Gate functions in recurrent models, such as an LSTM and GRU, play a central role in learning various time scales in modeling time series data by using a bounded activation function. However, it is difficult to train gates to capture extremely long time scales due to gradient vanishing of the bounded function for large inputs, which is known as the saturation problem. We closely analyze the relation between saturation of the gate function and efficiency of the training. We prove that the gradient vanishing of the gate function can be mitigated by accelerating the convergence of the saturating function, i.e., making the output of the function converge to 0 or 1 faster. Based on the analysis results, we propose a gate function called fast gate that has a doubly exponential convergence rate with respect to inputs by simple function composition. We empirically show that our method outperforms previous methods in accuracy and computational efficiency on benchmark tasks involving extremely long time scales.

1 Introduction

Recurrent neural networks (RNNs) are models suited to processing sequential data in various applications, e.g., speech recognition (Ling et al. 2020) and video analysis (Zhu et al. 2020). The most widely used RNNs are a long short-term memory (LSTM) (Hochreiter and Schmidhuber 1997) and gated recurrent unit (GRU) (Cho et al. 2014), which has a gating mechanism. The gating mechanism controls the information flow in the state of RNNs via multiplication with a gate function bounded to a range . For example, when the forget gate takes a value close to 1 (or 0 for the update gate in the GRU), the state preserves the previous information. On the other hand, when it gets close to the other boundary, the RNN updates the state by the current input. Thus, in order to represent long temporal dependencies of data involving hundreds or thousands of time steps, it is crucial for the forget gate to take values near the boundaries (Tallec and Ollivier 2018; Mahto et al. 2021).

However, it is difficult to train RNNs so that they have the gate values near the boundaries. Previous studies hypothesized that this is due to gradient vanishing for the gate function called saturation (Chandar et al. 2019; Gu et al. 2020b), i.e., the gradient of the gate function near the boundary is too small to effectively update the parameters. To avoid the saturation problem, a previous study used unbounded activation functions (Chandar et al. 2019). However, this makes training unstable due to the gradient explosion (Pascanu, Mikolov, and Bengio 2013). Another study introduced residual connection for a gate function to push the output value toward boundaries, hence mitigating the saturation problem (Gu et al. 2020b). However, it requires additional computational cost due to increasing the number of parameters for another gate function. For broader application of gated RNNs, a more efficient solution is necessary.

To overcome the difficulty of training, we propose a novel activation function for the forget gate based on the usual sigmoid function, which we call the fast gate. Modification of the usual sigmoid gate to the fast gate is simple and easy to implement since it requires only one additional function composition. To this end, we analyze the relation between the saturation and gradient vanishing of the bounded activation function. Specifically, we focus on the convergence rate of the activation function to the boundary, which we call the order of saturation. For example, the sigmoid function has the exponential order of saturation, i.e., (see Fig. 1), and the derivative also decays to 0 exponentially as goes to infinity. When a bounded activation function has a higher order of saturation, the derivative decays much faster as the input grows. Since previous studies have assumed that the decaying derivative on the saturating regime causes the stuck of training (Ioffe and Szegedy 2015), it seems that a higher order of saturation would lead to poor training. Contrarily to this intuition, we prove that a higher order of saturation alleviates the gradient vanishing on the saturating regime through observation on a toy problem for learning long time scales. This result indicates that functions saturating superexponentially are more suitable for the forget gate to learn long time scales than the sigmoid function. On the basis of this observation, we explore a method of realizing such functions by composing functions which increase faster than the identity function (e.g., ) as . We find that the hyperbolic sinusoidal function is suitable for achieving a higher order of saturation in a simple way, and we obtain the fast gate. Since the fast gate has a doubly exponential order of saturation , it improves the trainability of gated RNNs for long time scales of sequential data. We evaluate the computational efficiency and accuracy of a model with the fast gate on several benchmark tasks, including synthetic tasks, pixel-by-pixel image classification, and language modeling, which involve a wide range of time scales. The model with the fast gate empirically outperforms other models including an LSTM with the sigmoid gate and variants recently proposed for tackling the saturation problem (Chandar et al. 2019; Gu et al. 2020b) in terms of accuracy and the convergence speed of training while maintaining stability of training. Further visualization analysis of learning time scales shows that our theory fits the learning dynamics of actual models and that the fast gate can learn extremely long time scales of thousands of time steps.

Our major contributions are as follows:

-

•

We prove that gate functions which saturate faster actually accelerates learning values near boundaries. The result indicates that fast saturation improves learnability of gated RNNs on data with long time scales.

-

•

We propose the fast gate that saturates faster than the sigmoid function. In spite of its simplicity, the fast gate achieves a doubly exponential order of saturation, and thus effectively improves learning of long time scales.

- •

2 Preliminaries

2.1 Time Scales in Gated RNNs

In this section, we review gated RNNs and their time scale interpretation (Tallec and Ollivier 2018). We begin with an LSTM (Hochreiter and Schmidhuber 1997), which is one of the most popular RNNs. An LSTM has a memory cell and hidden state inside, which are updated depending on the sequential input data at each time step by

| (1) | ||||

| (2) | ||||

| (3) | ||||

| (4) | ||||

| (5) | ||||

| (6) |

where and are weight and bias parameters for each . The sigmoid function is defined as

| (7) |

are called forget, input, and output gates, respectively. They were initially motivated as a binary mechanism, i.e., switching on and off, allowing information to pass through (Gers, Schmidhuber, and Cummins 2000). The forget gate has been reinterpreted as the representation for time scales of memory cells (Tallec and Ollivier 2018). Following that study, we simplify Eq. (1) by assuming for an interval . Then, we obtain

| (8) | ||||

| (9) |

where is the (entry-wise) geometric mean of the values of the forget gate. Through Eq. (8), the memory cell loses its information on data up to time exponentially, and the entry of represents its (averaged) decay rate. This indicates that, in order to capture long-term dependencies of the sequential data, the forget gate is desired to take values near 1 on average. We refer the associated time constant111An exponential function of time decreases by a factor of in time , which is called the time constant. as the time scale of units, which has been empirically shown to illustrate well the temporal behavior of LSTMs (Mahto et al. 2021).

The above argument applies not only to an LSTM, but also to general gated RNNs including a GRU (Cho et al. 2014) with state update of the form

| (10) |

where denotes the state, forget gate, and input gate, respectively, and is the activation to represent new information at time . Here again, the forget gate takes a role to control the time scale of each unit of the state.

2.2 Saturation in Gating Activation Functions

The sigmoid function in the gating mechanism requires large to take a value near 1 as the output. On the other hand, the derivative takes exponentially small values for (Fig. 1). Thus, when a gated model needs to learn large gate values such as with gradient methods, parameters in the gate cannot be effectively updated due to gradient vanishing. This is called saturation of bounded activation functions (Gulcehre et al. 2016). The behavior of gate functions on the saturating regime is important for gated RNNs because forget gate values need to be large to represent long time scales as explained in Section 2.1. That is, gated RNNs must face saturation of the forget gate to learn long time scales. Thus, it is hypothesized that saturation causes difficulty in training gated RNNs for data with extremely long time scales (Chandar et al. 2019; Gu et al. 2020b).

3 Related Work

Although there is abundant literature on learning long-term dependencies with RNNs, we outline the most related studies in this sectoin due to the space limitation and provide additional discussion of other studies in Appendix A.

Several studies investigate the time scale representation of the forget gate function to improve learning on data involving long-term dependencies (Tallec and Ollivier 2018; Mahto et al. 2021). For example, performance of LSTM language models can be improved by fixing the bias parameter of the forget gate in accordance with a power law of time scale distribution, which underlies natural language (Mahto et al. 2021). Such techniques require us to know the appropriate time scales of data a priori, which is often difficult. Note that this approach can be combined with our method since it is complementary with our work.

Several modifications of the gate function have been proposed to tackle the saturation problem. The noisy gradient for a piece-wise linear gate function was proposed to prevent the gradient to take zero values (Gulcehre et al. 2016). This training protocol includes hyperparameters controlling noise level, which requires manual tuning. Furthermore, such a stochastic approach can result in unstable training due to gradient estimation bias (Bengio, Léonard, and Courville 2013). The refine gate (Gu et al. 2020b) was proposed as another modification introducing a residual connection to push the gate value to the boundaries. It is rather heuristic and does not provide theoretical justification. It also requires additional parameters for the auxiliary gate, which increases the computational cost for both inference and training. In contrast, our method theoretically improves learnability and does not introduce any additional parameters. Another study suggests that omitting gates other than the forget gate makes training of models for long time scales easier (Van Der Westhuizen and Lasenby 2018). However, such simplification may lose the expressive power of the model and limit its application fields. Chandar et al. (2019) proposed an RNN with a non-saturating activation function to directly avoid the gradient vanishing due to saturation. Since its state and memory vector evolves in unbounded regions, the behavior of the gradient can be unstable depending on tasks. Our method mitigates the gradient vanishing by controlling the order of saturation, while maintaining the bounded state transition.

4 Analysis on Saturation and Learnability

We discuss the learning behavior of the forget gate for long time scales. First, we formulate a problem of learning long time scales in a simplified setting. Next, we relate the efficiency of learning on the problem to the saturation of the gate functions. We conclude that the faster saturation makes learning more efficient. All proofs for mathematical results below are given in Appendix C.

4.1 Problem Setting

Recall Eq. (8), which describes the time scales of the memory cell of an LSTM via exponential decay. Let the memory cell at time be with . Requiring long time scales corresponds to getting close to 1. Therefore, we can consider a long-time-scale learning problem as minimizing a loss function that measures discrepancy of and where is a desired value close to 1. We take as the absolute loss for example. Then, we obtain

| (11) | ||||

| (12) | ||||

| (13) |

using Eq. (8). Let , so that . Since we are interested in the averaged value of , we consider to be time-independent, that is, in the same way as Tallec and Ollivier (2018). The problem is then reduced to a problem to obtain that minimizes

| (14) |

We consider this as the minimal problem to analyze the learnability of the forget gate for long time scales. Note that since the product is taken element-wise, we can consider this as a one-dimensional problem. Furthermore, the global solution can be explicitly written as where is an inverse of .

Next, we consider the learning dynamics of the model on the aforementioned problem Eq. (14). RNNs are usually trained with gradient methods. Learning dynamics with gradient methods can be analyzed considering learning rate limit known as gradient flow (Harold and George 2003). Therefore, we consider the following gradient flow

| (15) |

using the loss function introduced above. Here, denotes a time variable for learning dynamics, which should not be confused with representing the state transition. Our aim is to investigate the convergence rate of a solution of the differential equation Eq. (15) when in the forget gate is replaced with another function .

4.2 Order of Saturation

To investigate the effect of choice of gate functions on the convergence rate, we first define the candidate set of bounded functions for the gate function.

Definition 4.1.

Let be a set of differentiable and strictly increasing surjective functions such that the derivative is monotone on for some .

is a natural class of gating activation functions including . As we explained in Section 2.2, gated RNNs suffer from gradient vanishing due to saturation when learning long time scales. To clarify the issue, we first show that saturation is inevitable regardless of the choice of .

Proposition 4.2.

holds for any .

Nevertheless, choices of significantly affect the efficiency of the training. When the target takes an extreme value near boundaries, the efficiency of training should depend on the asymptotic behavior of for , that is, the rate at which converges as . We call the convergence rate of as as the order of saturation. More precisely, we define the notion as follows222Our definition for asymptotic order is slightly different from the usual one which adopts , since it is more suitable for analyzing training efficiency.:

Definition 4.3.

Let be a decreasing function. has the order of saturation of if for some . For , has a higher order of saturation than if holds for any and is convex for .

Intuitively, the order of saturation of means that the convergence rate of to 1 is bounded by the decay rate of up to constant multiplication of . For example, the sigmoid function satisfies as for any , thus has the exponential order of saturation . The convexity condition for a higher order of saturation is rather technical, but automatically satisfied for typical functions, see Appendix C.2. If has a higher order of saturation (or saturates faster) than another function , then converges faster than as , and becomes smaller than . In this sense, training with seems more efficient than in the above problem. However, this is not the case as we discuss in the next section.

4.3 Efficient Learning via Fast Saturation

To precisely analyze learning behavior, we trace the learning dynamics of the output value since our purpose is to obtain the desired output value rather than the input . We transform the learning dynamics (Eq. (15)) into that of by

| (16) |

To treat Eq. (16) as purely of , we define a function of by so that Eq. (16) becomes

| (17) |

Our interest is in the dynamics of near the boundary, i.e., the limit of . We have the following result:

Theorem 4.4.

Let . If has a higher order of saturation than , then as .

Theorem 4.4 indicates that a higher order of saturation accelerates the move of the output near boundaries in accordance with Eq. (17) since takes larger values. Thus, contrarily to the intuition in Section 4.2, a higher order of saturation leads to more efficient training for target values near boundaries. We demonstrate this effect using two activation functions, the sigmoid function and normalized softsign function where . is the softsign function modified so that and . has a higher order of saturation than since has the order of saturation of and has (see Fig. 1). We plot the learning dynamics of gradient flow for the problem in Fig. 2. Since has a higher order of saturation than , the gate value of converges slower to the boundary. Fig. 2 also shows the dynamics of gradient descent with the learning rate 1. While gradient descent is a discrete approximation of gradient flow, it behaves similar to gradient flow.

Explicit convergence rates. Beyond Theorem 4.4, we can explicitly calculate effective bounds of the convergence rate for the problem when the activation function is the sigmoid function or normalized softsign function .

Proposition 4.5.

Consider the problem in Section 4.1 with the absolute loss with . For the sigmoid function , the convergence rate for the problem is bounded as . Similarly, for the normalized softsign function , the convergence rate is bounded as .

Proposition 4.5 shows the quantitative effect of difference in the order of saturation on the convergence rates. We fit the bounds to the learning curves with the gradient flow in Fig. 2. The convergence rates of the learning are well approximated by the bounds. These asymptotic analysis highlights that choices of the function significantly affects efficiency of training for long time scales.

5 Proposed Method

On the basis of the analysis in Section 4, we construct the fast gate, which is suitable for learning long time scales.

5.1 Desirable Properties for Gate Functions

We consider modification of the usual sigmoid function to another function for the forget gate in a gated RNN. Function should satisfy the following conditions.

-

(i)

has a higher order of saturation than ,

-

(ii)

for ,

-

(iii)

is symmetric in a sense that .

Condition (i) comes from the argument in the previous section that fast saturating functions learn values near boundaries efficiently. Conditions (ii) and (iii) indicate that the function behaves similarly to around . In order to avoid possible harmful effects due to the modification, we do not want to change the behavior of the function away from the saturating regime. Hence, we require these conditions. The requirements are analogous to those by Gu et al. (2020b, Section 3.4) for the gate adjustment. The first condition can be viewed as a theoretical refinement of their heuristic modification.

5.2 Fast Gate

We explore gate functions satisfying the above conditions. Recall that the sigmoid function has the exponential order of saturation. From condition (i) in the previous section, we explore functions saturating superexponentially. Since any superexponential order can be written as with a function satisfying for large , it is enough to consider a function of the form for such . The desirable properties in Section 5.1 are rephrased as follows in terms of : (i) for , (ii) , and (iii) = for . Such functions can be found as examples in the form where is a polynomial consisting of only odd higher degree terms, such as . Since a higher degree term has a larger effect on the order of saturation, it mitigates gradient vanishing of the gate function more in accordance with Theorem 4.4. Thus, we take a limit of the degree to infinity, which leads to a simple function expression

| (18) | ||||

| (19) |

Therefore, we adopt for the alternative function and obtain the fast gate

| (20) |

This simple expression enables us to implement it with only one additional function. Note that the above form is an example of gate functions which satisfy desirable properties in Section 5.1 and there are infinitely many other possible choices. The above particular form is one of the simplest choices, and not a limitation of our method. For discussion of other candidates, see Appendix D.

| Softsign | Sigmoid | Refine | Fast | |

| Saturation order | ||||

| Convergence rate | ||||

| Additional parameters | No | No | Yes | No |

5.3 Comparison with Other Gate Functions

We analyze the fast gate and compare it with other gate functions. First, the order of saturation of the fast gate is since as for any . We briefly describe the method of the refine gate (Gu et al. 2020b), which was proposed to avoid gradient vanishing of the gate function. This method exploits an auxiliary gate to modify the forget gate value to . When a large output value is desired for the forget gate value, the auxiliary gate is expected to take to push to . Therefore, from the asymptotic view point, this method modifies the order of saturation of the gate function from to . Compared with the refine gate, the fast gate has a much higher order of saturation. We also analyze the asymptotic convergence rates of solving the toy problem in Section 4. We summarize the results in Tab. 1. See Appendix C.3 for detailed derivation. Since the fast gate has a doubly exponential order of saturation, the order of convergence rate of learning long time scales is faster than the sigmoid and refine gates which have an exponential order of saturation (see also Fig. 2 for comparison of the convergence rates). In addition, the fast gate does not require additional parameters whereas the refine gate does. Therefore, the fast gate is computationally more efficient than the refine gate.

6 Experiments

6.1 Synthetic Tasks

We evaluate the learnability for long time scales across various methods on two synthetic tasks, adding and copy, following previous studies (Hochreiter and Schmidhuber 1997; Arjovsky, Shah, and Bengio 2016). While these tasks are simple and easy to solve for short sequences, they get extremely difficult for gated RNNs to solve when the sequence length grows to hundreds or thousands.

Setup. We compare the fast gate with the refine gate because Gu et al. (2020b) reported that the refine gate achieved the best performance among other previous gate variants. We also include the normalized softsign function in Section 4 as a referential baseline to test the compatibility of our theory. We use these gate functions in a single-layer LSTM. Since the initialization of the forget gate bias is critical to model performance (Tallec and Ollivier 2018), we set it so that is satisfied for each gate function (with the bias for an additional gate function initialized by 0 for the refine gate), which amounts to in the usual sigmoid case (Gers, Schmidhuber, and Cummins 2000; Greff et al. 2016). We also compare performance of these gate variants to that of chrono-initialization (Tallec and Ollivier 2018), a method to initialize parameters to represent long time scales for the sigmoid gate. In addition to the LSTM, we include JANET (Van Der Westhuizen and Lasenby 2018) and NRU (Chandar et al. 2019) as baselines. JANET is one of the simplest gated RNNs specialized to learn long time scales by omitting gates other than the forget gate and applying chrono-initialization. NRU uses non-saturating activation functions to write or erase to a memory cell. We train and evaluate each model three times by varying the random seed. See Appendix E.3 for detailed setting.

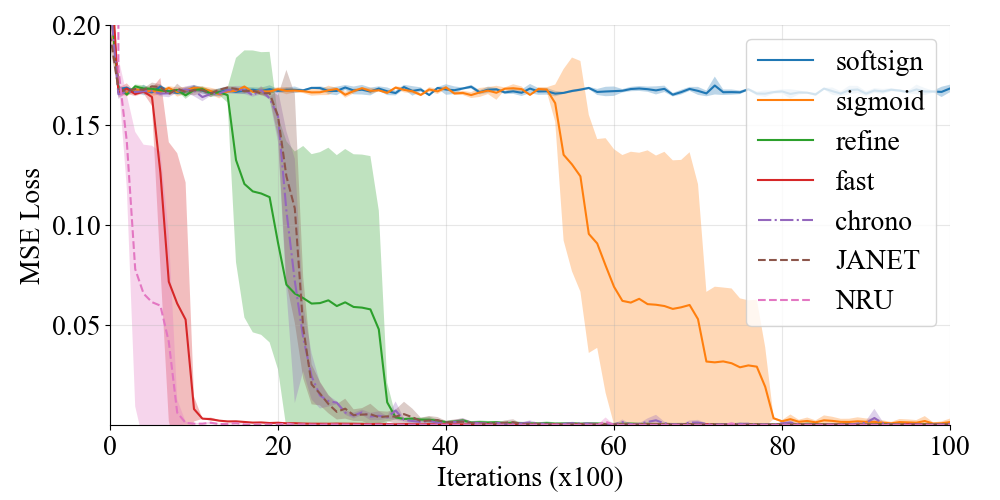

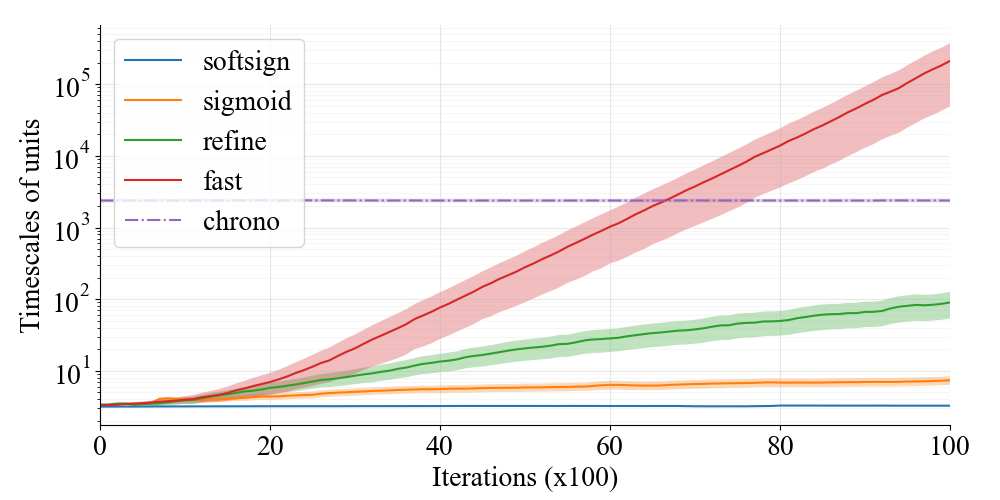

Results. The mean squared error on the adding task of sequence length 5000 and the accuracy on the copy task of sequence length 500 during training are shown in Fig. 3 and 4, respectively. While NRU requires the least number of parameter updates on the adding task, the training diverges on the copy tasks due to gradient explosion (Pascanu, Mikolov, and Bengio 2013). This is because the state in the NRU evolves on an unbounded region; thus, a small parameter update can drastically change the behavior of the model. We could not fix this instability even by reducing the clipping threshold for gradient by a factor of . We hypothesize that the training of the NRU tends to be more unstable on the copy task because this task has higher dimensional nature than the adding task in the sense of input dimension (10 vs 2) and the number of tokens to memorize (10 vs 2). Among the gate functions, the fast gate converges the fastest. This is due to the higher order of saturation: the fast gate has the order of saturation whereas the refine gate has , thus learns long time scales more efficiently. The normalized softsign gate completely fails to learn since it has a lower order of saturation than the sigmoid function. Thus, the performance of the models is well explained by the difference in the order of saturation in Tab. 1. This indicates that our theoretical analysis matches practical settings despite the fact that it builds on a simplified learning problem. The result also shows that modification of the gate function is more effective for learning long-term dependencies than other methods such as chrono-initialization and JANET.

We further observe the growth of time-scale distribution of the memory cell in the LSTM on the adding task. The time scale of -th unit in the memory cell is measured using the bias term of the forget gate by (Tallec and Ollivier 2018; Mahto et al. 2021). We show the statistics of time scales over all 128 units at each iteration of the training in Fig. 5. The fast gate represents much longer time scales than other gate functions after training, which validates our method. While chrono-initialization set time scales so that they are uniformly distributed within the range , they do not change at all during training due to saturation of the usual sigmoid function . Since the fast gate can learn to adapt to even longer time scales than such initialization, it is effective to approximate arbitrary desired time scales which is usually unknown a priori.

6.2 Pixel-by-pixel Image Recognition

| sMNIST | psMNIST | sCIFAR | Time | |

| Softsign | 97.50 0.58 | 91.71 0.33 | 59.21 0.39 | 17.7 min. |

| Sigmoid | 98.88 0.12 | 95.71 0.02 | 69.14 0.39 | 14.3 min. |

| Refine | 98.94 0.03 | 95.93 0.16 | 69.55 0.50 | 22.7 min. |

| Fast | 99.05 0.04 | 96.18 0.14 | 70.06 0.38 | 14.7 min. |

| chrono | 98.83 0.09 | 94.37 0.69 | 60.36 0.51 | 14.3 min. |

| JANET | 98.59 0.03 | 93.85 0.23 | 60.99 0.51 | 10.6 min. |

| NRU | 98.73 0.27 | 94.76 0.35 | 62.32 0.30 | 35.0 min. |

Next, we evaluate the fast gate on the sequential image recognition task, where a pixel value is applied into recurrent models at each time step (Le, Jaitly, and Hinton 2015).

Setup. We use the usual order sequential MNIST (sMNIST) task and permuted order version (psMNIST) to introduce more complex and long-term temporal dependencies. We also use the sequential CIFAR-10 (sCIFAR) task, which involves higher dimensional inputs and longer sequence length (i.e., ) than MNIST. We train the LSTM with the various gates, JANET, and NRU. See Appendix E.4 for training details. We set the hidden dimension as 512 on all models and the dimension of the memory cell in NRU as 144 following the original paper. We evaluate the averaged test accuracy with the standard deviation over three random seeds. We also report the computational time to train each model to compare the computational efficiency of the LSTM with the fast gate to other models.

Results. The results are shown in Tab. 2. The fast gate performs the best among various gates while the normalized softsign gate poorly performs. This is because the order of saturation of activation functions in the forget gate directly affects the learnability for long time scales. The LSTM with the fast gate also outperforms all the other baselines. Note that as chrono-initialization gives too heavy-tailed time scale distribution (Gu et al. 2020b), the chrono-LSTM and JANET performs worse than simply initializing the gate bias as (Sigmoid in the table). Since large model size tends to result in high accuracy on these tasks (Voelker, Kajić, and Eliasmith 2019; Erichson et al. 2021), we also provide results of smaller models in Appendix F.3 for comparison. While the NRU achieves the highest accuracies on the psMNIST task in Tab. 5 in the appendix, the NRU performs worse than the LSTMs in Tab. 2. Therefore, performance of the LSTM seems to scale better than the NRU in model size. The refine gate requires more than 1.5 times longer processing time than the fast gate for training since it involves an auxiliary gate computation with additional parameters. The NRU requires longer processing time than the LSTMs due to its complicated state update rule. The learning curve for the psMNIST task in Fig. 6 shows that the fast gate reaches high accuracy in as few epochs as the refine gate. Combining with the results on the processing time per epoch, we conclude that the fast gate learns complex time scales the most efficiently among the gate functions.

| 10K | 1K-10K | 100-1K | 100 | All tokens | Time | |

| Sigmoid | 7.25 | 27.72 | 170.25 | 2026.87 | 60.22 | 130 sec. |

| Refine | 7.61 | 28.01 | 166.46 | 1936.02 | 60.50 | 185 sec. |

| Fast | 7.43 | 27.70 | 166.68 | 1975.51 | 60.09 | 138 sec. |

6.3 Language Modeling

In natural language processing, performance of language models can suffer from difficulty in learning long time scales because predicting statistically rare words involves long time scales (Mahto et al. 2021). It is expected that using a forget gate function with a higher order of saturation improves learning to predict such rare words. We validate this effect in the following experiment.

Setup. We train and evaluate three-layer LSTM language models following a previous study (Mahto et al. 2021)333https://github.com/HuthLab/multi-timescale-LSTM-LMs. on the Penn Treebank (PTB) dataset, replacing every sigmoid forget gate in the baseline LSTM with the fast gate. We compare the LSTM with the fast gate against the LSTM with the sigmoid and refine gates and also NRU by replacing all LSTM modules with NRU modules under the same experimental setting. To evaluate the model performance on data involving different ranges of time scales, the test dataset is divided into four bins depending on their frequencies in the training dataset: more than 10,000, 1000-10,000, 100-1000, and fewer than 100 occurrences.

Results. The results are shown in Tab. 3. The result for the NRU is not in the table since the training diverges. Both refine and fast gates improve perplexity for less frequently observed words compared to the sigmoid gate. The model with the fast gate also achieves the lowest total perplexity. Since frequently observed words involve short-term dependencies, this result indicates that the fast gate improves model performance by learning a wide range of time scales that appear in practical tasks. Tab. 3 also shows the training time taken for one epoch. We observe that the refine gate has larger computational overhead than the fast gate, although the LSTM with the refine gate has the same number of parameters as other LSTMs by using the gate-tying trick (Appendix E.2). This is due to the two-stage computation for the gate value via the auxiliary gate, which cannot be parallelized, thus leads to slow computation. In summary, the fast gate can improve performance on data involving extremely long time scales without sacrificing the performance on data involving short time scales and with less computational overhead.

7 Conclusion

We analyzed the saturation problem in learning of gate functions in recurrent models. Against the common intuition that saturation of the activation function degrades training, we proved that strengthening the saturating behavior is effective in mitigating gradient vanishing of gate functions. We proposed the fast gate, which has a doubly exponential order of convergence with respect to inputs, by simply composing the hyperbolic sinusoidal function to the usual sigmoid function. We evaluated the trainability of the fast gate on data involving extremely long time scales. We empirically showed that the fast gate improves accuracy on benchmark tasks with little computational overhead. Our analytical approach is applicable to any other bounded activation functions that appear in the core of modules such as an attention mechanism. Thus, we expect that it can improve learnability of other neural networks beyond recurrent models.

References

- Arjovsky, Shah, and Bengio (2016) Arjovsky, M.; Shah, A.; and Bengio, Y. 2016. Unitary evolution recurrent neural networks. In International Conference on Machine Learning, 1120–1128. PMLR.

- Bengio, Léonard, and Courville (2013) Bengio, Y.; Léonard, N.; and Courville, A. 2013. Estimating or propagating gradients through stochastic neurons for conditional computation. arXiv preprint arXiv:1308.3432.

- Bengio, Simard, and Frasconi (1994) Bengio, Y.; Simard, P.; and Frasconi, P. 1994. Learning long-term dependencies with gradient descent is difficult. IEEE Transactions on Neural Networks, 157–166.

- Chandar et al. (2019) Chandar, S.; Sankar, C.; Vorontsov, E.; Kahou, S. E.; and Bengio, Y. 2019. Towards non-saturating recurrent units for modelling long-term dependencies. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, 3280–3287.

- Chang et al. (2019) Chang, B.; Chen, M.; Haber, E.; and Chi, E. H. 2019. Antisymmetricrnn: A dynamical system view on recurrent neural networks. In International Conference on Learning Representations.

- Chen, Pennington, and Schoenholz (2018) Chen, M.; Pennington, J.; and Schoenholz, S. 2018. Dynamical isometry and a mean field theory of RNNs: Gating enables signal propagation in recurrent neural networks. In International Conference on Machine Learning, 873–882. PMLR.

- Cho et al. (2014) Cho, K.; van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; and Bengio, Y. 2014. Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), 1724–1734.

- Collins, Sohl-Dickstein, and Sussillo (2017) Collins, J.; Sohl-Dickstein, J.; and Sussillo, D. 2017. Capacity and trainability in recurrent neural networks. In International Conference on Learning Representations.

- Erichson et al. (2021) Erichson, N. B.; Azencot, O.; Queiruga, A.; Hodgkinson, L.; and Mahoney, M. W. 2021. Lipschitz recurrent neural networks. In International Conference on Learning Representations.

- Gers, Schmidhuber, and Cummins (2000) Gers, F. A.; Schmidhuber, J. A.; and Cummins, F. A. 2000. Learning to Forget: Continual Prediction with LSTM. Neural computation, 12(10): 2451â2471.

- Greff et al. (2016) Greff, K.; Srivastava, R. K.; Koutník, J.; Steunebrink, B. R.; and Schmidhuber, J. 2016. LSTM: A search space odyssey. IEEE Transactions on Neural Networks and Learning Systems, 28(10): 2222–2232.

- Gu et al. (2020a) Gu, A.; Dao, T.; Ermon, S.; Rudra, A.; and Ré, C. 2020a. Hippo: Recurrent memory with optimal polynomial projections. Advances in Neural Information Processing Systems, 33: 1474–1487.

- Gu et al. (2020b) Gu, A.; Gulcehre, C.; Paine, T.; Hoffman, M.; and Pascanu, R. 2020b. Improving the Gating Mechanism of Recurrent Neural Networks. In International Conference on Machine Learning, 3800–3809. PMLR.

- Gu et al. (2021) Gu, A.; Johnson, I.; Goel, K.; Saab, K.; Dao, T.; Rudra, A.; and Ré, C. 2021. Combining Recurrent, Convolutional, and Continuous-time Models with Linear State Space Layers. Advances in Neural Information Processing Systems, 34.

- Gulcehre et al. (2016) Gulcehre, C.; Moczulski, M.; Denil, M.; and Bengio, Y. 2016. Noisy activation functions. In International Conference on Machine Learning, 3059–3068. PMLR.

- Harold and George (2003) Harold, K.; and George, Y. 2003. Stochastic approximation and recursive algorithms and applications, volume 35. Springer Science & Business Media.

- Helfrich and Ye (2020) Helfrich, K.; and Ye, Q. 2020. Eigenvalue Normalized Recurrent Neural Networks for Short Term Memory. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, 4115–4122.

- Hochreiter and Schmidhuber (1997) Hochreiter, S.; and Schmidhuber, J. 1997. Long short-term memory. Neural computation, 9(8): 1735–1780.

- Ioffe and Szegedy (2015) Ioffe, S.; and Szegedy, C. 2015. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International conference on machine learning, 448–456. PMLR.

- Kingma and Ba (2015) Kingma, D. P.; and Ba, J. 2015. Adam: A method for stochastic optimization. In International Conference on Learning Representations.

- Kusupati et al. (2018) Kusupati, A.; Singh, M.; Bhatia, K.; Kumar, A.; Jain, P.; and Varma, M. 2018. Fastgrnn: A fast, accurate, stable and tiny kilobyte sized gated recurrent neural network. In Advances in Neural Information Processing Systems, 9031–9042.

- Le, Jaitly, and Hinton (2015) Le, Q. V.; Jaitly, N.; and Hinton, G. E. 2015. A simple way to initialize recurrent networks of rectified linear units. arXiv preprint arXiv:1504.00941.

- Lechner and Hasani (2020) Lechner, M.; and Hasani, R. 2020. Learning long-term dependencies in irregularly-sampled time series. arXiv preprint arXiv:2006.04418.

- Lezcano-Casado and Martınez-Rubio (2019) Lezcano-Casado, M.; and Martınez-Rubio, D. 2019. Cheap orthogonal constraints in neural networks: A simple parametrization of the orthogonal and unitary group. In International Conference on Machine Learning, 3794–3803. PMLR.

- Ling et al. (2020) Ling, S.; Liu, Y.; Salazar, J.; and Kirchhoff, K. 2020. Deep contextualized acoustic representations for semi-supervised speech recognition. In ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 6429–6433. IEEE.

- Mahto et al. (2021) Mahto, S.; Vo, V. A.; Turek, J. S.; and Huth, A. G. 2021. Multi-timescale representation learning in LSTM Language Models. International Conference on Learning Representations.

- Pascanu, Mikolov, and Bengio (2013) Pascanu, R.; Mikolov, T.; and Bengio, Y. 2013. On the difficulty of training recurrent neural networks. In International Conference on Machine Learning, 1310–1318. PMLR.

- Romero et al. (2022) Romero, D. W.; Kuzina, A.; Bekkers, E. J.; Tomczak, J. M.; and Hoogendoorn, M. 2022. CKConv: Continuous kernel convolution for sequential data. In International Conference on Learning Representations.

- Rusch and Mishra (2021) Rusch, T. K.; and Mishra, S. 2021. UnICORNN: A recurrent model for learning very long time dependencies. In International Conference on Machine Learning, 9168–9178. PMLR.

- Tallec and Ollivier (2018) Tallec, C.; and Ollivier, Y. 2018. Can recurrent neural networks warp time? In International Conference on Learning Representations.

- Van Der Westhuizen and Lasenby (2018) Van Der Westhuizen, J.; and Lasenby, J. 2018. The unreasonable effectiveness of the forget gate. arXiv preprint arXiv:1804.04849.

- Voelker, Kajić, and Eliasmith (2019) Voelker, A.; Kajić, I.; and Eliasmith, C. 2019. Legendre memory units: Continuous-time representation in recurrent neural networks. Advances in neural information processing systems, 32.

- Vorontsov et al. (2017) Vorontsov, E.; Trabelsi, C.; Kadoury, S.; and Pal, C. 2017. On orthogonality and learning recurrent networks with long term dependencies. In International Conference on Machine Learning, 3570–3578. PMLR.

- Zhang, Lei, and Dhillon (2018) Zhang, J.; Lei, Q.; and Dhillon, I. 2018. Stabilizing gradients for deep neural networks via efficient SVD parameterization. In International Conference on Machine Learning, 5806–5814. PMLR.

- Zhu et al. (2020) Zhu, L.; Tran, D.; Sevilla-Lara, L.; Yang, Y.; Feiszli, M.; and Wang, H. 2020. FASTER recurrent networks for efficient video classification. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, 13098–13105.

Appendix A Further discussion of related work

There are plenty of research approaching to learning of long-term dependencies of time series data with RNNs. We discuss relations between our work and other previous studies.

Several methods on properly constraining a weight matrix of a simple RNN without gating mechanism are proposed to alleviate the gradient vanishing problem (Arjovsky, Shah, and Bengio 2016; Zhang, Lei, and Dhillon 2018; Lezcano-Casado and Martınez-Rubio 2019; Helfrich and Ye 2020). These methods limits expressivity of models due to parameter constraint (Vorontsov et al. 2017) and thus are not usually used for practical tasks. Our method takes a different approach for learning long-term dependencies through time scales of models and does not limit the model expressivity.

There is another approach to improve learnability of gated RNNs by reducing redundant parameters (Collins, Sohl-Dickstein, and Sussillo 2017; Chen, Pennington, and Schoenholz 2018; Kusupati et al. 2018). Since the forget gate is the most critical component of gated RNNs, these minimal RNNs are also equipped with the forget gate. Therefore, our method is applicable to those minimal models to further enhance model performances.

Recently, continuous-time models based on differential equations and their discrete counterparts have been attracting attention to deal with extremely long (and possibly irregularly sampled) time series data (Chang et al. 2019; Voelker, Kajić, and Eliasmith 2019; Gu et al. 2020a; Lechner and Hasani 2020; Gu et al. 2021; Rusch and Mishra 2021). Some of these models utilize the sigmoid function to represent variable time steps for discretization. Such use of the sigmoid function essentially has the same effect of the forget gate in LSTM and GRU in terms of time scales of models (Tallec and Ollivier 2018; Gu et al. 2020a). Thus, our method replacing gate function can be applied to the sigmoid function representing time steps to further enhance the learnability for wide range of time scales.

Appendix B Relation to Gradient Vanishing due to Recurrence

When learning long-term dependencies of time series data, recurrent models often suffer from gradient vanishing due to recurrent calculation (Bengio, Simard, and Frasconi 1994; Pascanu, Mikolov, and Bengio 2013). We describe the relation between this gradient vanishing and the saturation problem in this section. First, consider a general recurrent model with state update rule of form , where and are the state and input at time and denotes the parameter vector of the model. During training, the parameter is updated using the gradient

| (21) |

where the right hand side is calculated by back-propagation through time (BPTT)

| (22) |

If the matrix is contractive, the gradient exponentially decays through this backward recurrence. Then, the gradient for does not include information on data at time distant from the last time step, which leads to difficulty in learning long-term dependencies of data.

We now consider a gated model with the state update rule where and are forget and input gates and is some state update function. Then, we have

| (23) |

Assuming that the first term has relatively large effect than other terms, the decay rate of the gradient is largely dominated by the distance of to 1. This corresponds to the time scales of the gated model. Namely, if the forget gate represents only short time scales, then the gradient vanishing due to recurrence tends to occur. On the other hand, if the forget gate learns long time scales, then it mitigates the gradient vanishing and helps the model to learn to extract important features at distant time steps. Thus, learnability of gated models for long time scales is critical to capture long term dependencies. In this work, we focus on learnability for long time scales by considering the gradient vanishing due to saturation of bounded functions. The distinction of two gradient vanishing problems is easy to see through Fig. 2: every gate learns slowly at early stage (until 40 iteration) because of gradient vanishing due to recurrence. After it gets through the plateau, it now faces the gradient vanishing due to saturation to learn long time scales. In this way, the gradient vanishing due to recurrence and the gradient vanishing of bounded function relates closely to each other.

Appendix C Details on Theoretical Results

C.1 Proof of Proposition 4.2

Proof.

Let be an element of . Since is monotone for , exists. Since is increasing, we obtain . If , then . This contradicts the boundedness of . Thus, we have . ∎

C.2 Proof of Theorem 4.4

First, we remark some technical points on the following definition of the order of saturation.

Definition C.1.

For , has higher order of saturation than if is convex for and

| (24) |

holds for any .

The first condition for convexity is automatically satisfied for typical functions such as logarithmic, polynomial, and exponential and required to ensure the growth rates of functions to be well behaved444We have not found any examples of which do not satisfy the convexity of under Eq. (24).. For example, consider the fast gate and sigmoid function . Then is indeed convex for . The second condition is slightly different from the common definition of order that adopts . While the two limit conditions coincides for logarithmic and polynomial classes, there is a difference in the treatment of exponential functions. For example, and are distinguished in the usual sense but are identified in our definition. Note that in terms of learning of neural networks, this amounts to ignoring re-parametrization of parameters with constant multiplication . Since such naive re-parametrization should not drastically change the learning behavior, our definition for the order of saturation makes more sense for considering learning of neural networks. This is well illustrated in Tab. 1 and Fig. 2: the refine gate has the saturation of order but results in the same order of convergence rate of learning as the usual sigmoid gate since is the same as in our definition. The fast gate, however, has a higher order of saturation in the sense of our definition, thus has a faster order of convergence rate.

We now prove Theorem 4.4.

Proof.

Take such that has a higher order of saturation than . Define a function by . Note that is convex for since has a higher order of saturation than . Let be any positive number. Then, we have for since for and is increasing. Thus, for any large , we get for some . By convexity of on , we obtain for . On the other hand, we have

| (25) | ||||

| (26) |

By substituting , we obtain

| (27) |

for . Since is arbitrary, we have as . ∎

C.3 Derivation of Explicit Convergence Rate

In this section, we derive the convergence rates of learning long time scales on the toy problem in Section 4 for the sigmoid and normalized softsign gate, proving Proposition 4.5. Similarly, we also derive the convergence rates for the refine and fast gate. We consider the absolute loss with the gate value . Since the learning dynamics does not depend on when we consider the absolute loss, we may take without loss of generality.

The sigmoid gate case. We first analyze the case with the sigmoid gate . We assume that the initial value of is small so that . Then Eq. (17) becomes

| (28) |

as long as holds. Since monotonically increases over time, we obtain a lower bound for the right hand side after time as with . This gives a lower bound of the dynamics of which is defined by

| (29) |

Solving this, we obtain . Thus, we obtain the upper bound of the difference

| (30) |

which approximates the asymptotic convergence rate for training.

Tightness of bounds. In the above, we only evaluated the upper bound of the difference as the convergence rate. We can easily show that this evaluation is tight and thus the bound gives the exact order of convergence rate. We consider the sigmoid case for example. While the lower bound of gradient is evaluated as , it is also upper bounded by with another since is upper bounded. Thus, we have the same order of upper bound of and thus the bound gives tight order of convergence rate. This argument easily holds in other cases below, but we omit it for simplicity.

The normalized softsign gate case. For the asymptotic analysis, we may assume . Then the normalized softsign function is . Thus, . Then for , Eq. (17) becomes

| (31) |

as long as holds. The lower bound of the right hand side over is given by with constant since increases during learning. Therefore, the dynamics of a lower bound of can be written as

| (32) |

Solving this, we get . Thus, we obtain the upper bound of the difference

| (33) |

The refine gate case. The refine gate (Gu et al. 2020b) is an auxiliary gate that adjust the forget gate value to the effective gate value . We first reformulate the problem as minimizing the loss function

| (34) |

i.e., we replace with . Then, the learning dynamics of is given as

| (35) | ||||

| (36) |

Since the refine gate monotonically increases during learning, we may assume . We claim that and are bounded as follows when :

| (37) | |||

| (38) |

Indeed, we have

| (39) | ||||

| (40) | ||||

| (41) |

and

| (42) | ||||

| (43) |

Thus, we get a bound

| (44) |

with and . With this bound, we can do the exactly same calculation as the sigmoid case to obtain the bound

| (45) |

The fast gate case. Let be the fast gate. By the chain rule, the derivative of the function is given by . Therefore, Eq. (17) for can be written as

| (46) | ||||

| (47) | ||||

| (48) | ||||

| (49) |

with constant . Since we consider the asymptotic convergence rate, we assume is sufficiently close to 1 so that and . Then, by properly replacing the constant , we obtain

| (50) |

This inequality gives the upper bound of the difference , the dynamics of which is given by

| (51) |

Rewriting this equation, we have

| (52) |

By integration, we get

| (53) |

Thus we obtain

| (54) |

or equivalently,

| (55) |

where is an inverse of function . This gives the effective bound of the difference as

| (56) |

with some constant . Note that this order is asymptotically smaller than which is the convergence rate of learning for the sigmoid and refine gate.

C.4 Further Visualization

Fig. 7 shows the increase in the gradient of gate functions with different order of saturation against output values. This illustrates the intuition for Theorem 4.4 that the gradient with respect to the output has different convergence rate to the limit in accordance with the order of saturation.

In addition to the simple gradient descent, we also consider solving the toy problem in Section 4 with other optimizers. RMSprop and Adam optimizer (Kingma and Ba 2015) are widely used for training deep neural networks. We plot the learning dynamics on the toy problem with RMSprop and Adam in Fig. 8. Since RMSprop and Adam solve the problem more efficiently than the gradient descent, we set the learning rate and . Other hyperparameters are set to the default in pytorch library. We observe that the fast gate consistently learns faster than other gate functions. Thus, we conclude that our method also applies to training of gated recurrent networks with practical optimizers.

Appendix D Arbitrariness of Fast Gate

In Section 5, we adopted the particular form as the fast gate. There are infinitely many other choices of gate functions satisfying the desirable properties in Section 5.1. For example, we can construct a function saturating even faster by composing again, i.e., . A natural question is whether such a faster gate function further improves the performance of gated models. We evaluated the LSTM with the iterated fast gate function (iter_fast) on the synthetic and image classification tasks. The training converges even faster on both adding and copy tasks (Fig. 9, 10), which is consistent with our theory. On the other hand, there are almost no difference in test accuracy of the image classification tasks (“Iterated Fast LSTM” in Tab. 4), possibly because the models must learn other complex features in addition to time scales. Note that the processing time increases by the application of another function. Taking the results and the overhead of additional into account, only one composition of (i.e., the fast gate in the main text) seems practically the simplest and most efficient solution for learning long time scales. Further investigation of other effective candidates is left as future work.

Appendix E Experimental Details

E.1 Computational settings

Our computational setup is as follows: CPU is Intel Xeon Silver 4214R 2.40GHz, memory size is 512 GB, and the GPU is NVIDIA Tesla V100S.

E.2 LSTM with Gate Tying and Parameter Count

An LSTM has four affine transform computation in its state update rule as in Eq. (1). Since each affine transform has the same number of parameters, the LSTM has parameters, where is the number of parameters for one linear transform. With a thorough exploration on variants of gated recurrent models, Greff et al. (2016) reported that coupling the forget gate with the input gate by

| (57) |

does not empirically degrade model performance. Here is the hidden dimension and is an -dimensional vector which has 1 for all entries. This trick reduces the model parameters from to . Gu et al. (2020b) proposed the refine gate on the basis of this variant of LSTM. Their method introduces additional parameters to train an auxiliary gate; thus the total number of model parameters matches to the of the original LSTM. In the experiments excluding language modeling, we used the gate-tied variant of LSTM to fairly compare the learnability of the gate functions. Thus, the LSTMs with the normalized softsign, sigmoid, and fast gates have parameters while that with the refine gate LSTM has . In the language-modeling experiment, we applied the fast gate to the forget gate without tying gates to compare the practical performance on an equal number of parameters. Note that the JANET has parameters because it further removes the output gate from the gate-tied LSTM.

E.3 Synthetic Tasks

The adding and copy tasks are defined as follows.

Adding task. The input for this task consist of two sequences of prescribed length . One is a sequence of random numbers sampled from the uniform distribution on . The other is the indicator sequence, which has 1 on two random indices and , and has 0 for other entries. RNNs are required to output a target value after reading all tokens. We trained and evaluated models on this task of sequence length with a mean squared error (MSE).

Copy task. The input sequence of length consists of 10 alphabets. The first ten are taken randomly from eight alphabets , and others are all void token except the -th revoking token . RNNs are required to output the first ten tokens after reading the revoking input. Each alphabet is encoded as a one-hot vector. The models were trained on this task of void sequence length with the cross-entropy loss calculated over the last 10 tokens as in a previous study (Gu et al. 2020b) and evaluated in terms of accuracy.

On both tasks, we generated 64 training data randomly at each training iteration instead of creating a dataset beforehand. We set the hidden dimension to 128 for each model, as in a previous study (Gu et al. 2020b). For NRU, the memory cell dimension was set to 64 following the original paper (Chandar et al. 2019). We used RMSProp with a learning rate and other parameters set as default () to train each model. We used the gradient clipping (Pascanu, Mikolov, and Bengio 2013) with threshold for stable training. For the observation of the growth of time scales of models (Fig. 5), models were trained in the same setting above except that the learning rate was set to to see clearer difference.

E.4 Pixel-by-pixel Image Recognition

We generally follow the way of Gu et al. (2020b) to train models on image recognition tasks. In the psMNIST task, the means of permutation of pixels adopts bit-reversal permutation rather than random permutation. In the sCIFAR task, recurrent models are given a three-dimensional pixel value at each time step, so the sequence length is 1024 time steps. The hidden dimension of each model was set to 512 on all tasks. We used Adam (Kingma and Ba 2015) for training and the learning rate was swept over . The best stable learning rate was for the LSTM with the softsign gate and the NRU and for the other models on every tasks. The gradient clipping (Pascanu, Mikolov, and Bengio 2013) was applied with threshold 1. We trained models with 150 epochs with the batch size 50. The memory size for the NRU is set to 144 as in Chandar et al. (2019).

E.5 Language Modeling

We explain the implementation details of the language modeling experiment. We used the public code of Mahto et al. (2021) to train and evaluate the language models and used the same hyperparameters. However, their code causes an out-of-memory error of GPUs during training in our computational environment. The memory usage increases after switching the optimizer to ASGD, then training breaks after 324 epochs. We completed the training by successively retraining each model twice after a break in training due to the out-of-memory error. Furthermore, each LSTM module was manually re-implemented on the basis of pytorch library without low-level optimization. Although this results in longer runtime for the baseline LSTM than the original pytorch implementation of LSTM, we did this to fairly compare the runtime.

Appendix F Additional Experimental Results

| sMNIST | psMNIST | sCIFAR | Time / Epoch | |

| Softsign LSTM | 21.63 10.15 | 91.71 0.33 | 59.21 0.39 | 17.7 min. |

| Sigmoid LSTM | 98.88 0.12 | 95.71 0.02 | 69.14 0.39 | 14.3 min. |

| Refine LSTM | 98.94 0.03 | 95.93 0.16 | 69.55 0.50 | 22.7 min. |

| UR-LSTM | 98.80 0.14 | 96.11 0.08 | 70.29 0.12 | 21.7 min. |

| Fast LSTM | 99.05 0.04 | 96.18 0.14 | 70.06 0.38 | 14.7 min. |

| UF-LSTM | 99.01 0.01 | 96.11 0.03 | 70.04 0.33 | 14.7 min. |

| Iterated Fast LSTM | 99.02 0.07 | 96.22 0.08 | 69.66 0.41 | 15.8 min. |

| Softsign GRU | 69.71 41.27 | 93.80 0.28 | 61.90 1.33 | 17.5 min. |

| Sigmoid GRU | 98.99 0.06 | 95.23 0.07 | 68.92 0.48 | 14.9 min. |

| Refine GRU | 98.95 0.02 | 95.61 0.02 | 69.54 0.71 | 22.7 min. |

| UR-GRU | 98.86 0.08 | 95.49 0.13 | 70.29 0.35 | 22.8 min. |

| Fast GRU | 98.95 0.05 | 95.54 0.34 | 69.65 0.18 | 15.5 min. |

| UF-GRU | 98.79 0.02 | 95.46 0.30 | 69.59 0.34 | 15.9 min. |

For completeness, we further demonstrated the effectiveness of the fast gate on additional experiments.

F.1 Synthetic Tasks Evaluated on Elapsed Time

To show the efficiency of learning with the fast gate based on the processing time rather than the number of parameter updates, we plot the mean squared error on the adding task to wall clock training time on Fig. 9. We observe that the fast gate achieves the convergence speed comparable to NRU due to less computational overhead on the state update. We also show results on accuracy and cross entropy loss for the copy task based on wall clock time in Fig. 10 and Fig. 11. The learning curve of NRU for the loss is truncated since its training diverges. Training with the fast gate converges the fastest among all baselines. As explained in Appendix D, the iterated fast gate converges even faster than the fast gate on both tasks.

F.2 Fast gate combined with other methods

Since our method is in principle applicable to any gated RNNs, we further validate the fast gate on another popular gated RNN, GRU (Cho et al. 2014). Additionally, we evaluate the fast gate combined with the gate initialization technique, called uniform initialization (Gu et al. 2020b).

In Tab. 4, we see similar (but slightly smaller) improvement of accuracy of the GRU in accuracy as that of the LSTM on the psMNIST and sCIFAR tasks, while keeping the computational cost. There is almost no difference in accuracy on the sMNIST task among the sigmoid, refine and fast gates because sMNIST contains relatively short-term dependencies. For the fast gate, combination of uniform initialization does not contribute to further performance improvement unlike the refine gate. Note that for the refine gate, the uniform initialization is applied to both the original gate and the auxiliary gate (Gu et al. 2020b, Appendix A), which actually provides different time scale distribution between the refine gate and other gates. That is, the time scale distribution of the refine gate with uniform initialization results in skewed distribution different from unlike explained in (Gu et al. 2020b, Proposition 1). We suspect that this skewness leads to better improvements of the refine gate combined the uniform initialization. More sophisticated initialization methods suited also to other gates are to be explored as future work.

F.3 Image classification with limited model size

| sMNIST | psMNIST | sCIFAR | Time | |

| Softsign | 97.20 0.20 | 87.89 0.76 | 47.91 1.13 | 345 sec. |

| Sigmoid | 98.84 0.08 | 92.80 0.37 | 62.73 0.75 | 284 sec. |

| Refine | 98.88 0.12 | 93.28 0.27 | 62.10 0.68 | 486 sec. |

| UR- | 98.69 0.08 | 92.56 0.46 | 62.54 0.85 | 484 sec. |

| Fast | 98.81 0.08 | 93.83 0.15 | 62.98 0.29 | 314 sec. |

| UF- | 98.81 0.07 | 93.00 0.54 | 63.00 0.78 | 315 sec. |

| chrono | 97.96 0.16 | 90.32 0.62 | 54.71 0.50 | 287 sec. |

| JANET | 98.07 0.09 | 87.84 0.50 | 55.82 0.35 | 214 sec. |

| NRU | 98.19 0.22 | 94.89 0.15 | 53.34 1.06 | 802 sec. |

As pointed out in (Voelker, Kajić, and Eliasmith 2019), improvements in learning long term dependencies could be better compared under the limits of model size. Therefore, we also tested a smaller classifier for sMNIST, psMNIST and sCIFAR than that in the main text in terms of the number of the output layer (1 vs 2) and hidden dimension of the recurrent layer (128 vs 512), which is commonly adopted in the literature (Chandar et al. 2019; Chang et al. 2019). The memory size of NRU is set to 64 in this setting as in the original paper.

The results in Tab. 5 are generally consistent with the main text, which shows robustness of the performance improvement of the fast gate. While the NRU shows the highest accuracy in the psMNIST task, it does not scale to larger models in terms of accuracy and the processing time as in the main text. For training, we used RMSprop with the learning rate 0.0005 except the following. On the sMNIST and sCIFAR tasks, learning rate of 0.0002 is applied to the NRU since it suffers from training instability at higher learning rates. The gradient clipping (Pascanu, Mikolov, and Bengio 2013) was applied with threshold 1. We trained models with 150 epochs on these task, and 100 epochs on the sCIFAR task. The learning rate was divided by 5 after 100 epochs on the sMNIST and psMNIST tasks, and after 50 epochs on the sCIFAR task. The batch size was set to 100 on all tasks. The models are trained with four different random seeds.

F.4 Raw speech classification

| Model | Test Accuracy |

| Sigmoid LSTM | 21.5* |

| Refine LSTM | 83.9* |

| UR-LSTM | 77.6* |

| Fast LSTM | 92.3 |

To see effectiveness of the fast gate on much longer sequences, we trained LSTMs with various gates on raw Speech Command dataset (Romero et al. 2022), which consists of sequences of length 16,000. We adopted the public code555https://github.com/HazyResearch/state-spaces to train 1-layer LSTM of hidden dimension 256 for classifying speech data into 10 classes. We search the best learning rate from and gradient clipping threshold from .

The results in Tab. 6 show that gates other than the fast gate suffer from training instability. In particular, the model with the sigmoid gate diverges with few epochs. We see that the refine gate improves learning, but the training diverges before it reaches sufficiently high accuracy. On the other hand, the LSTM with the fast gate can stably learn and achieve the best accuracy. These results indicate that a gate function with higher order saturation enables more stable training on such extremely long sequences.