Fast Conditional Mixing of MCMC Algorithms for Non-log-concave Distributions

Abstract

MCMC algorithms offer empirically efficient tools for sampling from a target distribution . However, on the theory side, MCMC algorithms suffer from slow mixing rate when is non-log-concave. Our work examines this gap and shows that when Poincaré-style inequality holds on a subset of the state space, the conditional distribution of MCMC iterates over mixes fast to the true conditional distribution. This fast mixing guarantee can hold in cases when global mixing is provably slow. We formalize the statement and quantify the conditional mixing rate. We further show that conditional mixing can have interesting implications for sampling from mixtures of Gaussians, parameter estimation for Gaussian mixture models and Gibbs-sampling with well-connected local minima.

1 Introduction

Sampling from a given target distribution of the form plays a central role in many machine learning problems, such as Bayesian inference, optimization, and generative modeling [9, 14, 15]. The Langevin MCMC algorithm in particular has received a lot of recent attention; it makes use of the first-order gradient information , and can be viewed as the sampling analog of gradient descent.

Langevin MCMC has been shown to converge quickly when is log-concave [6, 10]. More recently, similar guarantees have been established for satisfying weaker conditions such as log-Sobolev inequality (LSI) [23], for instance, can have a good LSI constant when is a small perturbation of a convex function. However, few guarantees exist for general non-log-concave distributions. One simple example is when is a well-separated mixture of two Gaussian distributions; in this case, one verifies that Langevin MCMC mixes at a rate proportional to the inverse of the exponential of the separation distance.

Many important modern machine-learning problems are highly non-convex, one prominent class being functions arising from neural networks. Though finding the global minimum of a non-convex function can be difficult, gradient descent can often be shown to converge rather quickly to a local optimum [3]. This raises the important question:

What is the sampling analog of a local minimum? And how can we sample efficiently from such a minimum?

In [3], authors provide a partial answer to this question by adopting the view of Langevin Diffusion as the gradient flow of KL divergence in probability space. Under this perspective, the gradient of KL divergence is given by the Fisher Information (FI). Authors of [3] show that LMC achieves error in FI in steps.

However, one crucial question remains unanswered: how does the local optimality of FI help us sample from non-convex distributions? Intuitively, small FI is useful for characterizing local convergence when is multi-modal. Authors of [3] suggested this connection, but it remains unclear how local mixing is defined and how small FI can quantitatively lead to good local mixing. Thus motivated, one of the goals of this paper is to provide a useful interpretation of the FI bound.

To this end, we propose a rigorous quantitative measure of "local mixing". We also provide a more general notion of "stationary point" for the sampling problem. Under these definitions, we show that LMC can achieve error in our proposed "measure of local mixing", in polynomial time. Finally, we consider discrete-time analog of the aforementioned ideas, and prove local convergence for random walk on a hypercube. Below is a detailed description of our theoretical contributions.

1.1 Main Contributions

-

1.

We define a notion of conditional convergence: Let denote a subset of the state space. We study the convergence of to , where denotes distributions of LMC iterates and denotes the target distribution. This definition of convergence is much weaker than the standard global convergence, but in exchange, LMC can achieve fast conditional convergence in settings where global convergence is known to be exponentially slow.

-

2.

We define local Logarithmic Sobolev Inequality and show how to combine it with existing results on the convergence of Fisher information to derive the conditional convergence of LMC.

-

3.

When local Logarithmic Sobolev Inequality does not hold, we define local Poincaré Inequality and Poincaré Fisher information (which is an analogy of Fisher information). We show the convergence of Poincaré Fisher information assuming strong dissipativity and show the conditional convergence of LMC when local Poincaré Inequality is present.

-

4.

To showcase the applications of our results, we respectively study sampling from Gaussian mixture model with the same covariance and sampling from the power posterior distribution of symmetric two-component Gaussian mixtures. The global isometric constants of these examples are exponential in dimension and may have slow global convergence. We show that the local isoperimetric constants of these examples are polynomial in dimension, and prove fast conditional convergence for these examples.

-

5.

In Theorem 1, we consider an application of our result to Gibbs sampling on discrete state space. We show that fast conditional mixing happens when the spectral gap is not small. We further show in Theorem 2 that a subset has large spectral gap if it contains only one local minimum and is well connected.

2 Related Work

When the target distribution is strongly log-concave, the entropy is known to be strongly convex with respect to , and thus Langevin Dynamics converges exponentially fast [2]. Such a result is later extended to LMC [8, 4, 9, 7, 21]. Several works further loosen the assumption by using isoperimetric inequalities such as Logarithmic Sobolev Inequality and Poincaré Inequality instead of strong log-concavity [24, 5, 25, 11]. However, there are few existing works on general non-log-concave sampling. Recently, Balasubramanian [3] defines convergence in relative Fisher information as a kind of "weak convergence" for sampling and proves a polynomial guarantee in general non-log-concave case only assuming global Lipschitz condition. However, this paper doesn’t give any rigorous statistical interpretation of this weak convergence; Majka et al. [18] and Erdogdu et al. [11] study the cases when the target distribution is non-log-concave but has some good tail growth or curvature; Ma et al. [17] analyze the situation when the target distribution is non-log-concave inside the region but log-concave outside. Although these works give strict proofs of polynomial time guarantee in their setting, their results only hold for a small branch of non-log-concave distributions. It is still hardly possible to obtain a polynomial guarantee in general non-log-concave cases. Multimodality, as a special case of non-log-concavity, has attracted lots of attention due to its prevalence in applied science. Many modified versions of MCMC were proposed to try to tackle the sampling of these distributions, such as Darting MC [1], Wormhole HMC [16], and etc. However, these algorithms require explicit knowledge of the location of the modes.

3 Conditional Mixing for MCMC

In this section, we provide a formal definition of "conditional mixing". Specifically, let denote the distribution of a Markov chain at time . We assume that the Markov chain dynamics is reversible with a unique stationary distribution . Existing analyses mostly focus on understanding the rate of convergence measured by where is some probability distance.

However, unless the stationary distribution satisfies certain restrictive properties (e.g., log-concavity), the rate of convergence can be exponentially slow in the problem dimension or the distribution moments even for simple distributions such as the mixture of Gaussians. For this reason, we consider a weaker notion of convergence below.

Definition 1 (Conditional mixing).

Given a distribution supported on the state space . We say converges conditioned on set with respect to the divergence if

where we have the conditional distribution

For now we can think of the distance as the total variation distance. Later we will discuss stronger divergence such as KL-divergence or Chi-squared divergence.

The focus of our work is on identifying several sufficient conditions for fast convergence, and quantitatively bounding the convergence rate under these conditions. We focus on two MCMC algorithms: the Langevin Monte Carlo in continuous space, and the Gibbs sampling algorithm in discrete space. We further discuss the implications of conditional convergence for the two algorithms.

4 Conditional Mixing for Langevin Monte Carlo

In this section, we study the conditional mixing of the Langevin Monte Carlo (LMC) algorithm. This section is organized as follows: in Subsection 4.1, we first introduce the Langevin Monte Carlo algorithm, Langevin Dynamics, functional inequalities, and the Fisher information; in Subsection 4.2, we provide our main results characterizing the conditional convergence of LMC; finally, in Subsection 4.3, we showcase two applications of our main results.

4.1 Preliminaries

Langevin Monte Carlo. We are interested in the convergence of Langevin Monte Carlo (LMC), which is a standard algorithm employed to sample from a target probability density , where is called the potential function. The pseudocode of LMC is given in Algorithm 1.

Input: Initial parameter , potential function , step size , number of iteration

LMC can be viewed as a time-discretization of Langevin Dynamics (LD), described by the following stochastic differential equation:

| (1) |

We can interpolate LMC following the similar manner of LD as

| (2) |

One can easily observe that in Eq. (2) has the same joint distribution as in Algorithm 1, and thus Eq. (2) is a continuous-time interpolation of Algorithm 1.

Poincaré Inequality & Logarithmic Sobolev Inequalities. The convergence of LMC does not necessarily hold for all potential functions, and quantitative bounds require specific conditions over the potential function . Poincare Inequality (PI) and Logarithmic Sobolev Inequality (LSI) are two commonly used conditions in the analysis of LMC convergence. We present these below:

Definition 2 (Poincaré Inequality).

A probability measure on satisfies the Poincaré inequality with constant > 0 (abbreviated as ), if for all functions ,

| (PI) |

Definition 3 (Logarithmic Sobolev Inequality).

Given a function and a probability measure on , define . We say that a distribution on satisfies the Logarithmic Sobolev Inequality (abbreviated as ) with some constant , if for all functions ,

| (LSI) |

Both PI and LSI can imply the convergence of LMC when the step size is small. Specifically, denote as the distribution of in LMC (Algorithm 1) and as the distribution of in Langevin Dynamics (Eq.(1)). We further denote as the distribution of interpolating LMC. The left-hand-side of Eq.(PI) with is then the derivative (w.r.t. time) of the entropy of , i.e., , and thus we directly establish the exponential convergence of . The convergence of LMC can then be induced by bounding the discretization error between LMC and Langevin Dynamics. The methodology is similar when we have LSI.

Fisher information. It is well-known that if is either strongly convex or convex with bounded support, then it obeys both PI and LSI, and the convergence of LMC follows immediately according to the arguments above. However, real-world sampling problems usually have non-convex potential functions, and for these problems we may no longer have either PI or LSI. If we revisit the above methodology to establish the convergence of LMC under LSI, we find that we still have

and thus . Following this methodology, [3] uses the considers a notion of convergence which is defined using Fisher Information , which they use to analyze LMC convergence. We present the result of [3] for completeness:

Proposition 1 (Theorem 2, [3]).

Assume is -lipschitz. Then, for any step size ,

4.2 Rates of convergence

4.2.1 Conditional mixing under local Logarithmic Sobolev Inequality

We first show that if the target distribution obeys a local Logarithmic Sobolev Inequality (defined below), then convergence of Fisher information implies conditional convergence.

Definition 4 (Local Logarithmic Sobolev Inequality).

We say that a distribution on satisfies the local Logarithmic Sobolev Inequality over a subset with some constant (abbreviated as ), if satisfies .

Definition 4 characterizes the local property of one distribution. It is considerably weaker than global LSI (i.e., Definition 3) when is convex, and recovers LSI when . The intuition is that when the distribution is multi-modal, it is quite possible that LSI does not hold (or hold but with an exponentially small constant). In contrast, we can reasonably expect local LSI hold within each mode. In Subsection 4.3, we will show that a Gaussian mixture model with the same covariance satisfies Local Logarithmic Sobolev Inequality. We show in the following lemma that, whenever we have an estimation on the Fisher information between and , we can turn it to an estimation of the entropy between and given .

Lemma 1.

Let and assume that obeys . For any distribution such that , we have that either , or .

We defer the proof of Lemma 1 to Appendix A.1. Intuitively, Lemma 1 states that if the distribution satisfies local LSI over the region and has small global Fisher information with respect to a distribution , one can expect that either the probability mass of over is minor, or is close to both conditional over . In other words, is close to conditional over whenever has a moderate mass over . Note that Proposition 1 has already guaranteed global FI of LMC with little assumption, which together with Lemma 1 leads to the following corollary:

Corollary 1.

Assume is -lipschitz and satisfies that obeys . Define . Choosing step size , we have that either , or

Corollary 1 is one of our key results. Intuitively, it shows that LMC can achieve local mixing when only local LSI is assumed. As we discussed under Definition 4, we can reasonably expect that local LSI holds within each mode when the distribution is multi-modal, in which case Corollary 1 can be further applied to show local mixing with polynomial rate. Note here the dependence of the local mixing rate is polynomial over the dimension , meaning that considering local mixing helps to get rid of the exponential dependence over the dimension in the global mixing rate when studying multi-modal distributions.

4.2.2 Conditional Convergence under local Poincaré Inequality

We have established the conditional convergence of LMC under local Logarithmic Sobolev Inequality above. However, there are cases where even local LSI fails to hold. To tackle these cases and inspired by Poincaré Inequality, we introduce a weaker notion than local LSI called local Poincaré Inequality:

Definition 5 (Local Poincaré Inequality).

A distribution on satisfies the local Poincaré Inequality over a subset with constant (abbreviated as ), if satisfies .

As Poincaré Inequality is weaker than Logarithmic Sobolev Inequality, one can easily see that local Poincaré Inequality is also weaker than local Logarithmic Sobolev Inequality. Based on local Poincaré Inequality, we have the following Poincaré version of Lemma 1, which converts an estimation of Poincaré Fisher information to an estimation of chi-squared divergence of the conditional distributions.

Lemma 2.

Define Poincaré Fisher information as . Let and assume that obeys . For any distribution such that , we have that either .

To derive the conditional convergence of LMC under local Poincaré inequality, we further need to bound Poincaré Fisher information of LMC. Such a result, however, does not exist in the literature to our best knowledge. In analogy to the analysis in [3], we develop the following lemma to control the Fisher information of LD (recall that is the distribution of Langevin Dynamics in time ).

Proposition 2.

Denote . Then, we have the following estimation of the PFI between and .

The proof can be directly derived by taking the derivative of with respect to . We defer the formal proof to Appendix A.2. Combining Lemma 2, Proposition 2, and recent advances in discrete error analysis of LD [19], we obtain the following result showing conditional convergence under local Poincaré inequality.

Corollary 2.

Assume and is -lipschtiz, satisfies strong dissipativity, i.e., , and satisfies that obeys . Initialize and select . Recall that . Then either , or .

Corollary 2 shows that conditional mixing can be established when local PI holds, which is a weaker assumption than local LSI. However, the assumption of Corollary 2 is stronger than its LSI counterpart, and the mixing rate depends on instead of , which can be considerably large. These drawbacks are mainly due to that the dynamics of chi-square distance are much harder than that of KL divergence, even when global PI holds [12].

Extension to Rényi divergence. In some prior works, e.g. [5, 23], Rényi divergence is considered a natural “measure” of convergence, due to the analytical simplicity of the resulting expressions. One may wonder if our methodology above can be extended to establish a local mixing rate of Rényi divergence. Unfortunately, there are technical difficulties which for now we have not find a way to tackle or bypass, which we list in Appendix A.3.

4.3 Applications

In this subsection, we apply Corollary 1 and Corollary 2 to two concrete examples to demonstrate their usage in obtaining local mixing rates. We start by analyzing the example of sampling from Gaussian Mixture Models with uniform covariance and then turn to the example of sampling from the power posterior distribution of symmetric two-component Gaussian mixtures.

4.3.1 Case Study: Sampling from Gaussian Mixture Model with the uniform covariance

Target distribution and potential function. We are interested in the gaussian mixture model formed by gaussians with the same variance but different means. Specifically, the target distribution is defined as , where , , and . The potential function is then defined as .

Partition of Space. We divide the space according to the sub-level set. Specifically, we define . One can easily verify that . Furthermore, is convex since by the definition of , we have

As is positive definite and symmetric, we can decompose into , where is also positive definite and symmetric. We then obtain

and thus is one region of the Voronoi diagrams generated by , which is convex. As can be obtained by performing linear transformation to , we obtain that is also convex.

Verification of local Logarithmic Sobolev Inequality. We prove the local Logarithmic Sobolev Inequality of over each partition as follows.

Lemma 3.

For all , obeys .

Lemma 3 is proved by first showing obeys through Bakry-Émery criterion due to the convexity of and is strongly convex, and then applying Holley-Stroock perturbation principle by viewing as a perturbation of over . The concrete proof is deferred to Appendix B.1.

Verification of Lipschitz gradient. By direct calculation, we derive the following bound on the Lipschitz constant of .

Lemma 4.

, .

As a conclusion, we obtain the following guarantee of running LMC over this example.

Corollary 3.

Let the stepsize of LMC be . Assume . Then, for every , either , or

Corollary 3 indicates that running LMC over gaussian mixture distribution (with the same covariance) can ensure local mixing with cost polynomial over the dimension and independent of the distance between the means. By contrast, it is a folk-tale that even the global mixing of gaussian mixture with 2 components has a global mixing rate exponential over the distance, which suggests local mixing analysis provide a more concrete analysis of what is going on over each component.

4.3.2 Sampling from the power posterior distribution of symmetric Gaussian mixtures

Target distribution and potential function. The symmetric Gaussian mixture model is given as

Here denotes the multivariate Gaussian distribution with location parameter and covariance matrix . Without loss of generality, we assume , i.e., all coordinates of except the first is zero, and . The power posterior distribution, or the target distribution, is defined as We set for simplicity. When no ambiguity is possible, we abbreviate as . The potential function is then given as .

Partition of Space. With an accuracy budget , we define , , and , where and are -dependent hyperparameter specified latter.

Verification of local Poincaré Inequality over and . We have the following characterization for the local Poincaré Inequality over and :

Lemma 5.

If and , then with probability at least with respect to the sampling of , we have that obeys and .

The lemma is derived by first considering the distribution corresponding to the potential function and proving local Poincaré Inequality of (or ), then bounding the difference between and through concentration inequality, and finally completing the proof by applying Holley-Stroock perturbation principle to pass local Poincaré Inequality from to . A concrete proof is deferred to Appendix B.2.

Verification of strong dissipativity. Similar to local Logarithmic Sobolev Inequality, we can show strong dissipativity of holds in high probability.

Lemma 6.

If , then with probability at least over the sampling of , , and .

Bounding the probability of . Using strong dissipativity, we can bound as follows.

Lemma 7.

If , then with probability at least over the sampling of , we have

All in all, we obtain the following characterization of running LMC over this example.

Corollary 4.

Initialize for and select . Set . If and , then with probability at least over the sampling of , either or for , and .

As a comparison, such a problem was also studied by [20], where they constructed a specific sampling algorithm with prior knowledge of this problem to achieve global convergence. In contrast, we analyze LMC, which does not require any prior knowledge of the problem, and derive the conditional convergence of LMC.

5 Conditional Mixing for Gibbs Sampling on Finite States

In previous sections, we showed that conditional mixing can happen for LMC on a continuous state space. We now show that similar results hold for MCMC algorithms on a finite state space. For simplicity, we consider an energy function defined on the vertices of a hypercube. Denote its corresponding Gibbs measure .

We consider vertices of the hypercube as a d-regular graph where for any , an edge exists if and only if they differ by one coordinate, . Then a lazy Gibbs sampler has the following transition matrix on this finite graph:

Note that the process is lazy because, for any . This is assumed for simplicity of analysis to avoid almost periodic behaviors. This assumption does not make the analysis less general, as a lazy self loop with probability only changes the mixing time by a multiplicative absolute constant (see Corollary 9.5 [22]).

To prove conditional convergence, we need an analogue of conditional Poincaré Inequality. The story for the discrete state space can be more convoluted as the transition matrix, in addition to the stationary distribution, plays a role here. Many of the analyses below are inspired by [13].

First, given a finite number of subsets , we define the conditional probability supported on , and hence for ,

We also need to design a conditioned transition kernel so that

| (3) |

where denotes the complement of , and hence the conditioned kernel simply rejects all outgoing transitions. Then we can easily tell that is reversible with a unique stationary distribution . We are now ready to give an analogue of Corollary 1.

Theorem 1.

Given a sequence of subsets . If for every , defined in (3) as a distribution on has spectral gap at least , then we have that either for some , the distribution has small probability on , , or the conditional mixing happens with respect to Chi-squared divergence,

The proof builds upon the convergence of the Dirichlet form and can be found in Appendix C. The above theorem suggests that if the spectral gap of the Gibbs sampling algorithm is lower bounded on a local subset, then after a polynomial number of iterations, we get either has very small probability on this set, or conditioned on the set, the distribution is close to the stationary distribution.

One thing less clear, in finite-state Gibbs sampling as compared to the LMC, is when would the spectral gap for a Gibbs distribution be large. For the Langevin Monte Carlo algorithm, classical results show that the spectral gap cannot be too small if the stationary distribution is locally near log-concave. Below we provide an analogue of this observation for discrete state space.

5.1 Spectral Gap for Gibbs Sampling

We first define a graph , where and if and only if . Then we define quasi-concavity in this case. Note that Gibbs sampling in each iteration can only move to a neighbor or stay at the current vertex. Then we introduce the counterpart of quasi-convexity on a function defined on vertices of graphs.

Definition 6.

Let be a subgraph, . We say a function is quasi-convex with a radius D on a subgraph , if there exists a local minimum such that for any , any shortest path from is of length at most , and is non-increasing along the path.

Before providing a guarantee for the spectral gap, we give two examples of quasi-convexity.

Example 5.1.

If is a quasi-convex function defined on positive reals. Then for a given any function is a quasi-convex function on the graph where are reals and is the shortest path length from to .

Example 5.2.

If on a subset , for some (i.e. f is a linear function), then is quasi-convex on the graph.

The next theorem states that for such a function, the spectral gap is polynomial in problem dimension and the diameter of the region .

Theorem 2.

If a function is quasi-convex with a radius D on a subset , then the conditional transition of Gibbs sampling defined in (3) has a spectral gap lower bounded by .

The proof studies the conductance of the Markov chain and can be found in Appendix D. The above theorem suggests that although Gibbs sampling can mix very slowly, on any subset with a well connected local minimum, the conditional mixing to the stationary distribution can be fast. This concludes our analysis and we move on to verify our statements with experiments in the next section.

6 Experiments

6.1 Observations on Gaussian Mixture

In this section, we conduct experiments to verify the theoretical results and compare global mixing versus conditional mixing for Gaussian mixture models. We take three Gaussian mixtures: , , and as our target distributions. We use Algorithm 1 as our sampling algorithm, and set step size . The initial distributions are both uniform in a large enough range. We plot the sampling distribution after rounds respectively in Figure 1(a), 1(b), and 1(c), and plot the conditional and global KL divergence in Figure 1(d), 1(e), and 1(f).

We make the following observations: (1) The global KL divergences of the sampling distributions of and decrease fast at first. Then they maintain at a constant level and never converge to 0. It is reasonable since have very bad LSI constants (exponential in the distance between means). Thus, by classic Langevin Danymics analysis results[23], global KL divergence would have an exponentially slow convergence rate. (2) Both the global and conditional divergences of the sampling distribution of converge very fast. This is because dense Gaussian mixtures like have good LSI constant. The KL values are noisy due to the limited calculation precision of KL divergence. (3) The conditional divergence of the sampling distribution of converges faster than the global divergence because the LSI constant bound of is much worse than the conclusion in Corollary 1, which means conditional convergence is faster than global convergence. (4) The conditional KL divergences of the sampling distributions of and converge to 0 very fast (the flat part is due to the limit of calculation precision), which could be seen as a verification of our theoretical result. (5) The sampling distributions of and after iterations contain all of the Gaussian components in target distributions, the only difference between them is weight. Since learning the right weight and component will directly lead to global KL convergence, this observation could be seen as an example of the gap between global convergence and conditional convergence.

6.2 Observations on LMC with restarts

In the LMC analysis, We study the evolution of a distribution that usually is an absolutely continuous distribution. However, in practice, a more common implementation is as below: one first randomly generates an initial point , runs the sampling algorithm for iterations, and then collects samples along a single trajectory. A gap between theory and practice here is that we always assume continuity and smoothness conditions on the initial distribution in theory, while in practice, we only generate a limited number of initial points (sometimes only one point) to run the sampling algorithm.

For log-concave sampling, this gap is usually negligible since the ergodicity of Langevin Dynamics guarantees that we could always capture the features of the target distribution. Thus, it’s reasonable that there are plenty of works about the discretization error on the time scale, while we hardly pay attention to the approximation error of initial distribution. When it comes to non-log-concave sampling, this gap may become crucial. We conduct several experiments in Appendix E to verify this conjecture and show that LMC with restarts could empirically help to eliminate the gap and improve the convergence speed.

7 Conclusions

Our work examines sampling problems where the global mixing of an MCMC algorithm is slow. We show that in such cases, fast conditional mixing can be achieved on subsets where the target distribution has benign local structures. We make the above statements rigorous and provide polynomial-time guarantees for conditional mixing. We give several examples, such as the mixture of Gaussian and the power posterior to show that the benign local structure often exists despite the global mixing rate is exponentially slow.

Much remains to be done. Theoretically, whether faster convergence rates can be achieved or a lower bound exists remain unknown. Instantiating our analyses to more MCMC algorithms may also lead to new observations. More importantly, the implication of being able to sample efficiently from local distributions requires more careful analysis. This may lead to a new theoretical guarantee for problems with symmetry (such as permutation symmetry, sign symmetry, rotation invariance, etc) where all local minima are equally good and sampling from any mode suffices.

Acknowledgments and Disclosure of Funding

Jingzhao Zhang acknowledges support from Tsinghua University Initiative Scientific Research Program.

References

- [1] S. Ahn, Y. Chen, and M. Welling. Distributed and adaptive darting monte carlo through regenerations. In International Conference on Artificial Intelligence and Statistics, 2013.

- [2] D. Bakry and M. Émery. Diffusions hypercontractives. In Séminaire de Probabilités XIX 1983/84: Proceedings, pages 177–206. Springer, 2006.

- [3] K. Balasubramanian, S. Chewi, M. A. Erdogdu, A. Salim, and S. Zhang. Towards a theory of non-log-concave sampling: first-order stationarity guarantees for langevin monte carlo. In Conference on Learning Theory, pages 2896–2923. PMLR, 2022.

- [4] X. Cheng and P. Bartlett. Convergence of langevin mcmc in kl-divergence. In Algorithmic Learning Theory, pages 186–211. PMLR, 2018.

- [5] S. Chewi, M. A. Erdogdu, M. B. Li, R. Shen, and M. Zhang. Analysis of langevin monte carlo from poincaré to log-sobolev, 2021.

- [6] A. S. Dalalyan. Theoretical guarantees for approximate sampling from smooth and log-concave densities. Journal of the Royal Statistical Society. Series B (Statistical Methodology), pages 651–676, 2017.

- [7] A. S. Dalalyan and A. Karagulyan. User-friendly guarantees for the langevin monte carlo with inaccurate gradient. Stochastic Processes and their Applications, 129(12):5278–5311, 2019.

- [8] A. S. Dalalyan and A. B. Tsybakov. Sparse regression learning by aggregation and langevin monte-carlo. Journal of Computer and System Sciences, 78(5):1423–1443, 2012.

- [9] A. Durmus, S. Majewski, and B. Miasojedow. Analysis of langevin monte carlo via convex optimization. The Journal of Machine Learning Research, 20(1):2666–2711, 2019.

- [10] A. Durmus and E. Moulines. Nonasymptotic convergence analysis for the unadjusted langevin algorithm. 2017.

- [11] M. A. Erdogdu and R. Hosseinzadeh. On the convergence of langevin monte carlo: The interplay between tail growth and smoothness, 2020.

- [12] M. A. Erdogdu, R. Hosseinzadeh, and S. Zhang. Convergence of langevin monte carlo in chi-squared and rényi divergence. In International Conference on Artificial Intelligence and Statistics, pages 8151–8175. PMLR, 2022.

- [13] Y. Guan and S. M. Krone. Small-world mcmc and convergence to multi-modal distributions: From slow mixing to fast mixing. 2007.

- [14] A. T. Kalai and S. Vempala. Simulated annealing for convex optimization. Mathematics of Operations Research, 31(2):253–266, 2006.

- [15] D. Kingma, T. Salimans, B. Poole, and J. Ho. Variational diffusion models. Advances in neural information processing systems, 34:21696–21707, 2021.

- [16] S. Lan, J. Streets, and B. Shahbaba. Wormhole hamiltonian monte carlo, 2014.

- [17] Y.-A. Ma, Y. Chen, C. Jin, N. Flammarion, and M. I. Jordan. Sampling can be faster than optimization. Proceedings of the National Academy of Sciences, 116(42):20881–20885, sep 2019.

- [18] M. B. Majka, A. Mijatović, and L. Szpruch. Non-asymptotic bounds for sampling algorithms without log-concavity, 2019.

- [19] W. Mou, N. Flammarion, M. J. Wainwright, and P. L. Bartlett. Improved bounds for discretization of langevin diffusions: Near-optimal rates without convexity. Bernoulli, 28(3):1577–1601, 2022.

- [20] W. Mou, N. Ho, M. J. Wainwright, P. L. Bartlett, and M. I. Jordan. Sampling for bayesian mixture models: Mcmc with polynomial-time mixing. arXiv preprint arXiv:1912.05153, 2019.

- [21] A. Mousavi-Hosseini, T. Farghly, Y. He, K. Balasubramanian, and M. A. Erdogdu. Towards a complete analysis of langevin monte carlo: Beyond poincar’e inequality. arXiv preprint arXiv:2303.03589, 2023.

- [22] Y. Peres and P. Sousi. Mixing times are hitting times of large sets. Journal of Theoretical Probability, 28:488–519, 2015.

- [23] S. Vempala and A. Wibisono. Rapid convergence of the unadjusted langevin algorithm: Isoperimetry suffices. Advances in neural information processing systems, 32, 2019.

- [24] S. S. Vempala. Recent progress and open problems in algorithmic convex geometry. In IARCS Annual Conference on Foundations of Software Technology and Theoretical Computer Science (FSTTCS 2010). Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik, 2010.

- [25] A. Wibisono. Proximal langevin algorithm: Rapid convergence under isoperimetry. arXiv preprint arXiv:1911.01469, 2019.

Appendix A Proofs of results in Section 4.2

A.1 Local LSI: Proof of Lemma 1

Proof.

Since

if , the proof is finished. Otherwise, we have

The proof is completed. ∎

A.2 Local PI: Proof of Proposition 2

To begin with, we provide the proof of Lemma 2.

We then prove Proposition 2.

Proof of Propsition 2.

By applying the definition of LD, we have

Taking integration of both sides of the above equation then gives

The proof is then completed by noting that according to Hölder’s inequality,

The proof is then completed. ∎

We then restate the main result from [19], which provides a tight characterization of the discretization error between LMC and LD.

Proposition 3 (Theorem 2, [19]).

Assume and is -lipschtiz. Initialize according to Gaussian and select . Then for , .

It should be noticed that in the original version of [Theorem 2, [19]], the result is only stated for interger time, i.e., where . However, their proof also applies to non-integer time which leads to the above proposition.

We are now ready to prove Corollary 2.

Proof of Corollary 2.

On the other hand, according to Proposition 3 and Pinsker’s inequality, we obtain that for ,

and thus

Due to the convexity of norm, we then obtain

| (5) |

Let be a positive constant. According to Eq. (4), we have either , or . In the former case, combined with Eq. (5), we have that

In the latter case, we have that

and thus

The proof is completed by selecting , and . ∎

A.3 Difficulty of extension to Rényi divergence

Given two distribution and , Rényi divergence of between them, i.e., is defined as follows:

For simplicity, here we consider Langevin Dynamics defined as Eq. (1) with the distribution of time denoted as . The measure of convergence is then defined as , the derivative of which, according to (Lemma 6, [23]) is given as

where

If we would like to follow the routine of Lemma 1, we need to firstly lower bound by and secondly transfer back to . The second step can be accomplished following the same routine as (Lemma 9,[24]) using local PI. However, we are still unaware of how to implement the first step. This is because although we have

which is similar to the analysis of local LSI, we also have

In other words, when restricting to , both the denominator and the numerator of will get smaller, which makes no longer lower bounded by . We leave how to resolve this challenge as an interesting future work.

Appendix B Proof of Applications

B.1 Proof of gaussian-mixture

To start with, we recall Bakry-Émery criterion and Holley-Stroock perturbation principle.

Lemma 8 (Bakry-Émery criterion).

Let be convex and let be a Hamiltonian with Gibbs measure and assume that for all . Then satisfies .

Lemma 9 (Holley-Stroock perturbation principle).

If satisfies , and satisfies , where . Then, satisfies .

We are now ready to prove Lemma 3.

Proof of Lemma 3.

Since is convex, by applying Lemma 8, we obtain is . Let .

Meanwhile, we have over

Therefore, by Holley-Stroock perturbation principle, we have that satisfies . ∎

Proof of Lemma 4.

Through direct calculation, we obtain

Then, for any with , we have

and

The proof is completed. ∎

B.2 Proof of sampling from the power posterior distribution of symmetric Gaussian mixtures

To begin with, define the expected potential function as

We further define the corresponding distribution as .

The following lemmas will be needed in the proof.

Lemma 10 (Theorem 2, [20]).

If , we have satisfies .

Lemma 11 (Lemma 4, [20]).

If , we have with probability at least ,

Lemma 12.

Suppose obeys strong dissipativity, i.e., is -lipschitz and . Then, running LMC with and , we have

Proof.

Define . Assume wlog that .

Then,

Let denote expectation wrt . Then

Using the fact that variable is sub-exponential,

On the other hand, notice that is a 1-dimensional gaussian random variable. It can be shown that

| (6) |

Combining the above,

Let denote expectation wrt all randomness. Then

Suppose that is drawn from the invariant distribution under LMC. Then we know that . In this case,

Moving things around gives

∎

We are now ready to prove the lemmas in the main text.

Proof of Lemma 5.

Proof of Lemma 6.

To begin with, set , we have

We obtain

following a standard empirical process argument due to (see Lemma 6, [20] as an example).

Meanwhile, denote if is sampled from and else-wise. We have

and thus . As , by concentration inequality of chi-square variable and since , we have with probability at least ,

The claim for can be calculated following the similar routine. The proof is completed. ∎

Appendix C Proof of Theorem 1

Proof.

We consider the variational form of spectral gap. We first define the Dirichlet form and the variance

Then by the fact that is lazy, and hence for some reversible transition , we have

By nonnegativity , we have

| (7) |

By the variational form of spectral gap,

Therefore, applying the Markov chain generated by we have that for any

| (8) |

We then note that

We note by (PI) that for any Therefore, the second term is zero. Hence,

Combining with (7) and (8) we get,

| (9) |

The claim then follows by discussing if for all , , then

| (10) |

∎

Appendix D Proof for Theorem 2

Proof.

Given the subgraph , we have that conditioned transition kernel is

We analyze the conductance on graph induced by ,

For any partition of , without loss of generality assume the local min of is in , . For simplicity, for any , we denote be the last element in its shortest path to . We note that

Denote be the set of such elements in . Then we have

| (11) |

Further denote for some as the set of elements at distance from along the descending shortest paths. We provide an illustrative figure in 2. Then we have that

| (12) |

We note by reversibility that

| (13) |

Further by the fact that the function along the path is descending, we get that

| (14) |

Therefore,

| (15) |

Hence we have

| (16) |

Therefore, when we have

when we have

The theorem then follows by Cheeger’s inequality.

∎

Appendix E Additional Experiments

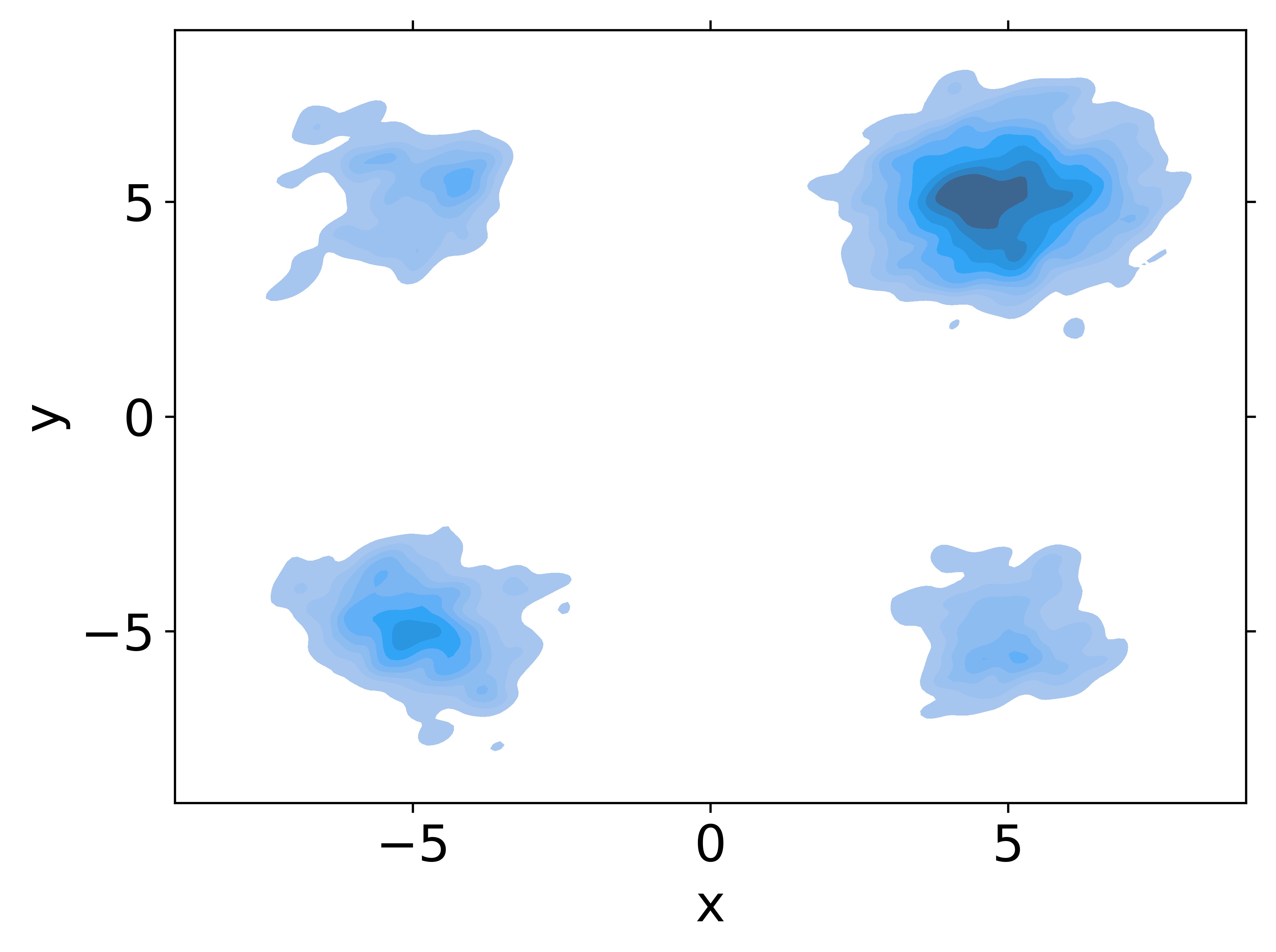

We still use in 6.1 as our target distribution. We initially set particles to run the LMC sampling algorithm respectively. We collect the locations of these particles after iterations as valid samples. The target distribution is shown in Figure 3(a); empirical distributions using particles are shown in Figure 3(b), 3(c), and 3(d).

We highlight two observations from the experiments. First when we use only one particle, it is always trapped in one Gaussian component and we never get samples from other modes. It has very bad global convergence, but still gets good conditional convergence: The convergence result conditioned on the 1st quadrant is good, and the sample after iterations has no probability distributed on the other 3 quadrants. Second, when the number of particles grows, the sample after iterations has non-negligible probabilities distributed on all of the 4 quadrants, which means we could capture all of the Gaussian components. The results indicate that although restarting LMC may not directly lead to global convergence, it may help us capture the features in multi-modal distributions, which further implies we may empirically eliminate the "small probability mass" condition in Corollary 1 and mitigate the gap between sampling distribution and target distribution.

A broader implication could be on ensemble and stochastic averaging in neural network training, where ensemble follows the restart procedure where as stochastic averaging is closer to sampling from a single trajectory. Our theory and experiment suggest that the two can have very different distributions in the end.