Fast Approximation of Similarity Graphs

with Kernel Density Estimation

Abstract

Constructing a similarity graph from a set of data points in is the first step of many modern clustering algorithms. However, typical constructions of a similarity graph have high time complexity, and a quadratic space dependency with respect to . We address this limitation and present a new algorithmic framework that constructs a sparse approximation of the fully connected similarity graph while preserving its cluster structure. Our presented algorithm is based on the kernel density estimation problem, and is applicable for arbitrary kernel functions. We compare our designed algorithm with the well-known implementations from the scikit-learn library and the FAISS library, and find that our method significantly outperforms the implementation from both libraries on a variety of datasets.

1 Introduction

Given a set of data points and a similarity function for any pair of data points and , the objective of clustering is to partition these data points into clusters such that similar points are in the same cluster. As a fundamental data analysis technique, clustering has been extensively studied in different disciplines ranging from algorithms and machine learning, social network analysis, to data science and statistics.

One prominent approach for clustering data points in Euclidean space consists of two simple steps: the first step is to construct a similarity graph from , where every vertex of corresponds to , and different vertices and are connected by an edge with weight if their similarity is positive, or higher than some threshold. Secondly, we apply spectral clustering on , and its output naturally corresponds to some clustering on [19]. Because of its out-performance over traditional clustering algorithms like -means, this approach has become one of the most popular modern clustering algorithms.

On the other side, different constructions of similarity graphs have significant impact on the quality and time complexity of spectral clustering, which is clearly acknowledged and appropriately discussed by von Luxburg [31]. Generally speaking, there are two types of similarity graphs:

-

•

the first one is the -nearest neighbour graph (-NN graph), in which every vertex connects to if is among the -nearest neighbours of . A -NN graph is sparse by construction, but loses some of the structural information in the dataset since is usually small and the added edges are unweighted.

-

•

the second one is the fully connected graph, in which different vertices and are connected with weight . While a fully connected graph maintains most of the distance information about , this graph is dense and storing such graphs requires quadratic memory in .

Taking the pros and cons of the two constructions into account, one would naturally ask the question:

Is it possible to directly construct a sparse graph that preserves the cluster structure of a fully connected similarity graph?

We answer this question affirmatively, and present a fast algorithm that constructs an approximation of the fully connected similarity graph. Our constructed graph consists of only edges111We use to represent for some constant ., and preserves the cluster structure of the fully connected similarity graph.

1.1 Our Result

Given any set and a kernel function , a fully connected similarity graph of consists of vertices, and every corresponds to ; we set for any different and . We introduce an efficient algorithm that constructs a sparse graph directly from , such that and share the same cluster-structure, and the graph matrices for and have approximately the same eigen-gap. This ensures that spectral clustering from and return approximately the same result.

The design of our algorithm is based on a novel reduction from the approximate construction of similarity graphs to the problem of Kernel Density Estimation (KDE). This reduction shows that any algorithm for the KDE can be employed to construct a sparse representation of a fully connected similarity graph, while preserving the cluster-structure of the input data points. This is summarised as follows:

Theorem 1 (Informal Statement of Theorem 2).

Given a set of data points as input, there is a randomised algorithm that constructs a sparse graph of , such that it holds with probability at least that

-

1.

graph has edges,

-

2.

graph has the same cluster structure as the fully connected similarity graph of .

The algorithm uses an approximate KDE algorithm as a black-box, and has running time for , where is the running time of solving the KDE problem for data points up to a -approximation.

This result builds a novel connection between the KDE and the fast construction of similarity graphs, and further represents a state-of-the-art algorithm for constructing similarity graphs. For instance, when employing the fast Gauss transform [10] as the KDE solver, Theorem 1 shows that a sparse representation of the fully connected similarity graph with the Gaussian kernel can be constructed in time when is constant. As such, in the case of low dimensions, spectral clustering runs as fast (up to a poly-logarithmic factor) as the time needed to read the input data points. Moreover, any improved algorithm for the KDE would result in a faster construction of approximate similarity graphs.

To demonstrate the significance of this work in practice, we compare the performance of our algorithm with five competing algorithms from the well-known scikit-learn library [20] and FAISS library [11]: the algorithm that constructs a fully connected Gaussian kernel graph, and four algorithms that construct different variants of -nearest neighbour graphs. We apply spectral clustering on the six constructed similarity graphs, and compare the quality of the resulting clustering. For a typical input dataset of 15,000 points in , our algorithm runs in 4.7 seconds, in comparison with between 16.1 – 128.9 seconds for the five competing algorithms from scikit-learn and FAISS libraries. As shown in Figure 1, all the six algorithms return reasonable output.

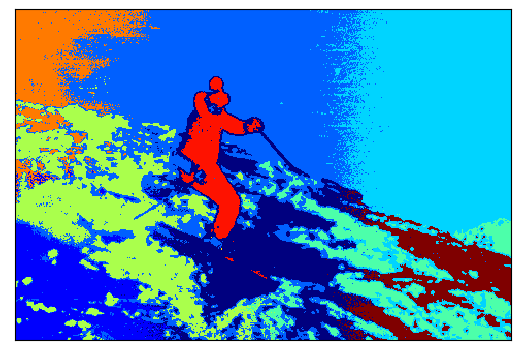

We further compare the quality of the six algorithms on the BSDS image segmentation dataset [2], and our algorithm presents clear improvement over the other five algorithms based on the output’s average Rand Index. In particular, due to its quadratic memory requirement in the input size, one would need to reduce the resolution of every image down to 20,000 pixels in order to construct the fully connected similarity graph with scikit-learn on a typical laptop. In contrast, our algorithm is able to segment the full-resolution image with over 150,000 pixels. Our experimental result on the BSDS dataset is showcased in Figure 2 and demonstrates that, in comparison with SKLearn GK, our algorithm identifies a more detailed pattern on the butterfly’s wing. In contrast, none of the -nearest neighbour based algorithms from the two libraries is able to identify the wings of the butterfly.

1.2 Related Work

There is a number of work on efficient constructions of -neighbourhood graphs and -NN graphs. For instance, Dong et al. [9] presents an algorithm for approximate -NN graph construction, and their algorithm is based on local search. Wang et al. [32] presents an LSH-based algorithm for constructing an approximate -NN graph, and employs several sampling and hashing techniques to reduce the computational and parallelisation cost. These two algorithms [9, 32] have shown to work very well in practice, but lack a theoretical guarantee on the performance.

Our work also relates to a large and growing number of KDE algorithms. Charikar and Siminelakis [7] study the KDE problem through LSH, and present a class of unbiased estimators for kernel density in high dimensions for a variety of commonly used kernels. Their work has been improved through the sketching technique [24], and a revised description and analysis of the original algorithm [4]. Charikar et al. [6] presents a data structure for the KDE problem, and their result essentially matches the query time and space complexity for most studied kernels in the literature. In addition, there are studies on designing efficient KDE algorithms based on interpolation of kernel density estimators [29], and coresets [12].

Our work further relates to efficient constructions of spectral sparsifiers for kernel graphs. Quanrud [22] studies smooth kernel functions, and shows that an explicit -approximate spectral approximation of the geometric graph with edges can be computed in time. Bakshi et al. [5] proves that, under the strong exponential time hypothesis, constructing an -approximate spectral sparsifier with edges for the Gaussian kernel graph requires time, where is a fixed universal constant and is the minimum entry of the kernel matrix. Compared with their results, we show that, when the similarity graph with the Gaussian kernel presents a well-defined structure of clusters, an approximate construction of this similarity graph can be constructed in nearly-linear time.

2 Preliminaries

Let be an undirected graph with weight function , and . The degree of any vertex is defined as , where we write if . For any , the volume of is defined by , and the conductance of is defined by

where . For any , we call subsets of vertices a -way partition if for any , for any , and . Moreover, we define the -way expansion constant by

Note that a lower value of ensures the existence of clusters of low conductance, i.e, has at least clusters.

For any undirected graph , the adjacency matrix of is defined by if , and otherwise. We write as the diagonal matrix defined by , and the normalised Laplacian of is defined by . For any PSD matrix , we write the eigenvalues of as .

It is well-known that, while computing exactly is NP-hard, is closely related to through the higher-order Cheeger inequality [13]: it holds for any that

2.1 Fully Connected Similarity Graphs

We use to represent the set of input data points, where every . Given and some kernel function , we use to represent the fully connected similarity graph from , which is constructed as follows: every corresponds to , and any pair of different and is connected by an edge with weight . One of the most common kernels used for clustering is the Gaussian kernel, which is defined by

for some bandwidth parameter . Other popular kernels include the Laplacian kernel and the exponential kernel which use and in the exponent respectively.

2.2 Kernel Density Estimation

Our work is based on algorithms for kernel density estimation (KDE), which is defined as follows. Given a kernel function with source points and target points , the KDE problem is to compute , where

| (1) |

for . While a direct computation of the values requires operations, there is substantial research to develop faster algorithms approximating these quantities.

In this paper we are interested in the algorithms that approximately compute for all up to a -multiplicative error, and use to denote the asymptotic complexity of such a KDE algorithm. We also require that is superadditive in and ; that is, for and , we have

it is known that such property holds for many KDE algorithms (e.g., [1, 6, 10]).

3 Cluster-Preserving Sparsifiers

A graph sparsifier is a sparse representation of an input graph that inherits certain properties of the original dense graph. The efficient construction of sparsifiers plays an important role in designing a number of nearly-linear time graph algorithms. However, most algorithms for constructing sparsifiers rely on the recursive decomposition of an input graph [26], sampling with respect to effective resistances [15, 25], or fast SDP solvers [14]; all of these need the explicit representation of an input graph, requiring time and space complexity for a fully connected graph.

Sun and Zanetti [27] study a variant of graph sparsifiers that mainly preserve the cluster structure of an input graph, and introduce the notion of cluster-preserving sparsifier defined as follows:

Definition 1 (Cluster-preserving sparsifier).

Let be any graph, and the -way partition of corresponding to . We call a re-weighted subgraph a cluster-preserving sparsifier of if for , and .

Notice that graph has exactly clusters if (i) has disjoint subsets of low conductance, and (ii) any -way partition of would include some of high conductance, which would be implied by a lower bound on due to the higher-order Cheeger inequality. Together with the well-known eigen-gap heuristic [13, 31] and theoretical analysis on spectral clustering [16, 21], the two properties in Definition 1 ensures that spectral clustering returns approximately the same output from and .222The most interesting regime for this definition is and , and we assume this in the rest of the paper.

Now we present the algorithm in [27] for constructing a cluster-preserving sparsifier, and we call it the SZ algorithm for simplicity. Given any input graph with weight function , the algorithm computes

for every edge , where is some constant. Then, the algorithm samples every edge with probability

and sets the weight of every sampled in as . By setting as the set of the sampled edges, the algorithm returns as output. It is shown in [27] that, with high probability, the constructed has edges and is a cluster-preserving sparsifier of .

We remark that a cluster-preserving sparsifier is a much weaker notion than a spectral sparsifier, which approximately preserves all the cut values and the eigenvalues of the graph Laplacian matrices. On the other side, while a cluster-preserving sparsifier is sufficient for the task of graph clustering, the SZ algorithm runs in time for a fully connected input graph, since it’s based on the computation of the vertex degrees as well as the sampling probabilities for every pair of vertices and .

4 Algorithm

This section presents our algorithm that directly constructs an approximation of a fully connected similarity graph from with . As the main theoretical contribution, we demonstrate that neither the quadratic space complexity for directly constructing a fully connected similarity graph nor the quadratic time complexity of the SZ algorithm is necessary when approximately constructing a fully connected similarity graph for the purpose of clustering. The performance of our algorithm is as follows:

Theorem 2 (Main Result).

Given a set of data points as input, there is a randomised algorithm that constructs a sparse graph of , such that it holds with probability at least that

-

1.

graph has edges,

-

2.

graph has the same cluster structure as the fully connected similarity graph of ; that is, if has well-defined clusters, then it holds that and .

The algorithm uses an approximate KDE algorithm as a black-box, and has running time for .

4.1 Algorithm Description

At a very high level, our designed algorithm applies a KDE algorithm as a black-box, and constructs a cluster-preserving sparsifier by simultaneous sampling of the edges from a non-explicitly constructed fully connected graph. To explain our technique, we first claim that, for an arbitrary , a random can be sampled with probability through queries to a KDE algorithm. To see this, notice that we can apply a KDE algorithm to compute the probability that the sampled neighbour is in some set , i.e.,

where we use to denote that the KDE is taken with respect to the set of source points . Based on this, we recursively split the set of possible neighbours in half and choose between the two subsets with the correct probability. The sampling procedure is summarised as follows, and is illustrated in Figure 3. We remark that the method of sampling a random neighbour of a vertex in through KDE and binary search also appears in Backurs et al. [5]

-

1.

Set the feasible neighbours to be .

-

2.

While :

-

•

Split into and with and .

-

•

Compute and ; set with probability , and with probability .

-

•

-

3.

Return the remaining element in as the sampled neighbour.

Next we generalise this idea and show that, instead of sampling a neighbour of one vertex at a time, a KDE algorithm allows us to sample neighbours of every vertex in the graph “simultaneously”. Our designed sampling procedure is formalised in Algorithm 1.

Finally, to construct a cluster-preserving sparsifier, we apply Algorithm 1 to sample neighbours for every vertex , and set the weight of every sampled edge as

| (2) |

where is an estimate of the sampling probability of edge , and

for some constant ; see Algorithm 2 for the formal description.

4.2 Algorithm Analysis

Now we analyse Algorithm 2, and sketch the proof of Theorem 2. We first analyse the running time of Algorithm 2. Since it involves executions of Algorithm 1 in total, it is sufficient to examine the running time of Algorithm 1.

We visualise the recursion of Algorithm 1 with respect to and in Figure 4. Notice that, although the number of nodes doubles at each level of the recursion tree, the total number of samples and data points remain constant for each level of the tree: it holds for any that and . Since the running time of the KDE algorithm is superadditive, the combined running time of all nodes at level of the tree is

Hence, the total running time of Algorithm 2 is as the tree has levels. Moreover, when applying the Fast Gauss Transform (FGT) [10] as the KDE algorithm, the total running time of Algorithm 1 is when and .

Finally, we prove that the output of Algorithm 2 is a cluster preserving sparsifier of a fully connected similarity graph, and has edges. Notice that, comparing with the sampling probabilities and used in the SZ algorithm, Algorithm 2 samples each edge through recursive executions of a KDE algorithm, each of which introduces a -multiplicative error. We carefully examine these -multiplicative errors and prove that the actual sampling probability used in Algorithm 2 is an -approximation of for every . As such the output of Algorithm 2 is a cluster-preserving sparsifier of a fully connected similarity graph, and satisfies the two properties of Theorem 2. The total number of edges in follows from the sampling scheme. We refer the reader to the supplementary material for the complete proof of Theorem 2.

5 Experiments

In this section, we empirically evaluate the performance of spectral clustering with our new algorithm for constructing similarity graphs. We compare our algorithm with the algorithms for similarity graph construction provided by the scikit-learn library [20] and the FAISS library [11] which are commonly used machine learning libraries for Python. In the remainder of this section, we compare the following six spectral clustering methods.

-

1.

SKLearn GK: spectral clustering with the fully connected Gaussian kernel similarity graph constructed with the scikit-learn library.

-

2.

SKLearn -NN: spectral clustering with the -nearest neighbour similarity graph constructed with the scikit-learn library.

-

3.

FAISS Exact: spectral clustering with the exact -nearest neighbour graph constructed with the FAISS library.

-

4.

FAISS HNSW: spectral clustering with the approximate -nearest neighbour graph constructed with the “Hierarchical Navigable Small World” method [18].

-

5.

FAISS IVF: spectral clustering with the approximate -nearest neighbour graph constructed with the “Invertex File Index” method [3].

-

6.

Our Algorithm: spectral clustering with the Gaussian kernel similarity graph constructed by Algorithm 2.

We implement Algorithm 2 in C++, using the implementation of the Fast Gauss Transform provided by Yang et al. [33], and use the Python SciPy [30] and stag [17] libraries for eigenvector computation and graph operations respectively. The scikit-learn and FAISS libraries are well-optimised and use C, C++, and FORTRAN for efficient implementation of core algorithms. We first employ classical synthetic clustering datasets to clearly compare how the running time of different algorithms is affected by the number of data points. This experiment highlights the nearly-linear time complexity of our algorithm. Next we demonstrate the applicability of our new algorithm for image segmentation on the Berkeley Image Segmentation Dataset (BSDS) [2].

For each experiment, we set for the approximate nearest neighbour algorithms. For the synthetic datasets, we set the value of the Gaussian kernel used by SKLearn GK and Our Algorithm to be , and for the BSDS experiment we set . This choice follows the heuristic suggested by von Luxburg [31] to choose to approximate the distance from a point to its th nearest neighbour. All experiments are performed on an HP ZBook laptop with an 11th Gen Intel(R) Core(TM) i7-11800H @ 2.30GHz processor and 32 GB RAM. The code to reproduce our results is available at https://github.com/pmacg/kde-similarity-graph.

5.1 Synthetic Dataset

In this experiment we use the scikit-learn library to generate 15,000 data points in from a variety of classical synthetic clustering datasets. The data is generated with the make_circles, make_moons, and make_blobs methods of the scikit-learn library with a noise parameter of . The linear transformation is applied to the blobs data to create asymmetric clusters. As shown in Figure 1, all of the algorithms return approximately the same clustering, but our algorithm runs much faster than the ones from scikit-learn and FAISS.

We further compare the speedup of our algorithm against the five competitors on the two_moons dataset with an increasing number of data points, and our result is reported in Figure 5. Notice that the running time of the scikit-learn and FAISS algorithms scales roughly quadratically with the size of the input, while the running time of our algorithm is nearly linear. Furthermore, we note that on a laptop with 32 GB RAM, the SKLearn GK algorithm could not scale beyond 20,000 data points due to its quadratic memory requirement, while our new algorithm can cluster 1,000,000 data points in 240 seconds under the same memory constraint.

5.2 BSDS Image Segmentation Dataset

Finally we study the application of spectral clustering for image segmentation on the BSDS dataset. The dataset contains images with several ground-truth segmentations for each image. Given an input image, we consider each pixel to be a point where , , are the red, green, blue pixel values and , are the coordinates of the pixel in the image. Then, we construct a similarity graph based on these points, and apply spectral clustering, for which we set the number of clusters to be the median number of clusters in the provided ground truth segmentations. Our experimental result is reported in Table 1, where the “Downsampled” version is employed to reduce the resolution of the image to 20,000 pixels in order to run the SKLearn GK and the “Full Resolution” one is to apply the original image of over 150,000 pixels as input. This set of experiments demonstrates our algorithm produces better clustering results with repsect to the average Rand Index [23].

| Algorithm | Downsampled | Full Resolution |

|---|---|---|

| SKLearn GK | 0.681 | - |

| SKLearn -NN | 0.675 | 0.682 |

| FAISS Exact | 0.675 | 0.680 |

| FAISS HNSW | 0.679 | 0.677 |

| FAISS IVF | 0.675 | 0.680 |

| Our Algorithm | 0.680 | 0.702 |

6 Conclusion

In this paper we develop a new technique that constructs a similarity graph, and show that an approximation algorithm for the KDE can be employed to construct a similarity graph with proven approximation guarantee. Applying the publicly available implementations of the KDE as a black-box, our algorithm outperforms five competing ones from the well-known scikit-learn and FAISS libraries for the classical datasets of low dimensions. We believe that our work will motivate more research on faster algorithms for the KDE in higher dimensions and their efficient implementation, as well as more efficient constructions of similarity graphs.

Acknowledgements

We would like to thank the anonymous reviewers for their valuable comments on the paper. This work is supported by an EPSRC Early Career Fellowship (EP/T00729X/1).

References

- [1] Josh Alman, Timothy Chu, Aaron Schild, and Zhao Song. Algorithms and hardness for linear algebra on geometric graphs. In 61st Annual IEEE Symposium on Foundations of Computer Science (FOCS’20), pages 541–552, 2020.

- [2] Pablo Arbelaez, Michael Maire, Charless Fowlkes, and Jitendra Malik. Contour detection and hierarchical image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 33(5):898–916, 2011.

- [3] Artem Babenko and Victor Lempitsky. The inverted multi-index. IEEE Transactions on Pattern Analysis and Machine Intelligence, 37(6):1247–1260, 2014.

- [4] Arturs Backurs, Piotr Indyk, and Tal Wagner. Space and time efficient kernel density estimation in high dimensions. In 33rd Advances in Neural Information Processing Systems (NeurIPS’19), pages 15773–15782, 2019.

- [5] Ainesh Bakshi, Piotr Indyk, Praneeth Kacham, Sandeep Silwal, and Samson Zhou. Subquadratic algorithms for kernel matrices via kernel density estimation. In 11th International Conference on Learning Representations (ICLR ’23), 2023.

- [6] Moses Charikar, Michael Kapralov, Navid Nouri, and Paris Siminelakis. Kernel density estimation through density constrained near neighbor search. In 61st Annual IEEE Symposium on Foundations of Computer Science (FOCS’20), pages 172–183, 2020.

- [7] Moses Charikar and Paris Siminelakis. Hashing-based-estimators for kernel density in high dimensions. In 58th Annual IEEE Symposium on Foundations of Computer Science (FOCS’17), pages 1032–1043, 2017.

- [8] Fan Chung and Linyuan Lu. Concentration inequalities and martingale inequalities: a survey. Internet mathematics, 3(1):79–127, 2006.

- [9] Wei Dong, Moses Charikar, and Kai Li. Efficient -nearest neighbor graph construction for generic similarity measures. In 20th International Conference on World Wide Web (WWW ’11), pages 577–586, 2011.

- [10] Leslie Greengard and John Strain. The fast gauss transform. SIAM Journal on Scientific & Statistical Computing, 12(1):79–94, 1991.

- [11] Jeff Johnson, Matthijs Douze, and Hervé Jégou. Billion-scale similarity search with GPUs. IEEE Transactions on Big Data, 7(3):535–547, 2021.

- [12] Zohar Karnin and Edo Liberty. Discrepancy, coresets, and sketches in machine learning. In 32nd Conference on Learning Theory (COLT ’19), pages 1975–1993, 2019.

- [13] James R. Lee, Shayan Oveis Gharan, and Luca Trevisan. Multiway spectral partitioning and higher-order Cheeger inequalities. Journal of the ACM, 61(6):37:1–37:30, 2014.

- [14] Yin Tat Lee and He Sun. An SDP-based algorithm for linear-sized spectral sparsification. In 49th Annual ACM Symposium on Theory of Computing (STOC’17), pages 678–687, 2017.

- [15] Yin Tat Lee and He Sun. Constructing linear-sized spectral sparsification in almost-linear time. SIAM Journal on Computing, 47(6):2315–2336, 2018.

- [16] Peter Macgregor and He Sun. A tighter analysis of spectral clustering, and beyond. In 39th International Conference on Machine Learning (ICML’22), pages 14717–14742, 2022.

- [17] Peter Macgregor and He Sun. Spectral toolkit of algorithms for graphs: Technical report (1). CoRR, abs/2304.03170, 2023.

- [18] Yu A Malkov and Dmitry A Yashunin. Efficient and robust approximate nearest neighbor search using hierarchical navigable small world graphs. IEEE transactions on Pattern Analysis and Machine Intelligence, 42(4):824–836, 2018.

- [19] Andrew Y. Ng, Michael I. Jordan, and Yair Weiss. On spectral clustering: Analysis and an algorithm. In 14th Advances in Neural Information Processing Systems (NeurIPS’01), pages 849–856, 2001.

- [20] F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12:2825–2830, 2011.

- [21] Richard Peng, He Sun, and Luca Zanetti. Partitioning well-clustered graphs: Spectral clustering works! SIAM Journal on Computing, 46(2):710–743, 2017.

- [22] Kent Quanrud. Spectral sparsification of metrics and kernels. In 32nd Annual ACM-SIAM Symposium on Discrete Algorithms (SODA’21), pages 1445–1464, 2021.

- [23] William M. Rand. Objective criteria for the evaluation of clustering methods. Journal of the American Statistical Association, 66(336):846–850, 1971.

- [24] Paris Siminelakis, Kexin Rong, Peter Bailis, Moses Charikar, and Philip Levis. Rehashing kernel evaluation in high dimensions. In 36th International Conference on Machine Learning (ICML’19), Proceedings of Machine Learning Research, pages 5789–5798, 2019.

- [25] Daniel A. Spielman and Nikhil Srivastava. Graph sparsification by effective resistances. SIAM Journal on Computing, 40(6):1913–1926, 2011.

- [26] Daniel A. Spielman and Shang-Hua Teng. Spectral sparsification of graphs. SIAM Journal on Computing, 40(4):981–1025, 2011.

- [27] He Sun and Luca Zanetti. Distributed graph clustering and sparsification. ACM Transactions on Parallel Computing, 6(3):17:1–17:23, 2019.

- [28] Joel A Tropp. User-friendly tail bounds for sums of random matrices. Foundations of Computational Mathematics, 12(4):389–434, 2012.

- [29] Paxton Turner, Jingbo Liu, and Philippe Rigollet. Efficient interpolation of density estimators. In 24th International Conference on Artificial Intelligence and Statistics (AISTATS ’21), pages 2503–2511, 2021.

- [30] Pauli Virtanen, Ralf Gommers, Travis E. Oliphant, Matt Haberland, Tyler Reddy, David Cournapeau, Evgeni Burovski, Pearu Peterson, Warren Weckesser, Jonathan Bright, Stéfan J. van der Walt, Matthew Brett, Joshua Wilson, K. Jarrod Millman, Nikolay Mayorov, Andrew R. J. Nelson, Eric Jones, Robert Kern, Eric Larson, C J Carey, İlhan Polat, Yu Feng, Eric W. Moore, Jake VanderPlas, Denis Laxalde, Josef Perktold, Robert Cimrman, Ian Henriksen, E. A. Quintero, Charles R. Harris, Anne M. Archibald, Antônio H. Ribeiro, Fabian Pedregosa, Paul van Mulbregt, and SciPy 1.0 Contributors. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nature Methods, 17:261–272, 2020.

- [31] Ulrike von Luxburg. A tutorial on spectral clustering. Statistics and Computing, 17(4):395–416, 2007.

- [32] Yiqiu Wang, Anshumali Shrivastava, Jonathan Wang, and Junghee Ryu. Randomized algorithms accelerated over CPU-GPU for ultra-high dimensional similarity search. In 2018 International Conference on Management of Data (SIGMOD ’18), pages 889–903, 2018.

- [33] Changjiang Yang, Ramani Duraiswami, Nail A. Gumerov, and Larry Davis. Improved fast Gauss transform and efficient kernel density estimation. In 9th International Conference on Computer Vision (ICCV’03), pages 664–671, 2003.

Appendix A Proof of Theorem 2

This section presents the complete proof of Theorem 2. Let be random variables which are equal to the indices of the points sampled for . Recall that by the SZ algorithm, the “ideal” sampling probability for from is

We denote the actual sampling probability that is sampled from under Algorithm 2 to be

Finally, for each added edge, Algorithm 2 also computes an estimate of which we denote

Similar with the definition of in Section 3, we define

-

•

,

-

•

, and

-

•

.

Notice that, following the convention of [27], we use to refer to the probability that a given edge is sampled from the vertex and is the probability that the given edge is sampled at all by the algorithm. We use the same convention for and .

We first prove a sequence of lemmas showing that these probabilities are all within a constant factor of each other.

Lemma 1.

For any point , the probability that a given sampled neighbour is equal to is given by

Proof.

Let be the input data points for Algorithm 2, and be the indices of the input data points. Furthermore, let be the set of indices between and . Then, in each recursive call to Algorithm 1, we are given a range of indices as input and assign to one half of it: either or . By Algorithm 1, we have that the probability of assigning to is

By the performance guarantee of the KDE algorithm, we have that , where we define

This gives

| (3) |

Next, notice that we can write for a sequence of intervals that

where each term corresponds to one level of recursion of Algorithm 1 and there are at most terms. Then, by (3) and the fact that the denominator and numerator of adjacent terms cancel out, we have

since and .

For the lower bound, we have that

where the final inequality follows by the condition of that .

For the upper bound, we similarly have

where the first inequality follows since . ∎

The next lemma shows that the probability of sampling each edge is approximately equal to the “ideal” sampling probability .

Lemma 2.

For every and , we have

Proof.

Let be the neighbours of sampled by Algorithm 2, where . Then,

The proof proceeds by case distinction.

Case 1: . In this case, we have

Case 2: . In this case, we have

which completes the proof on the lower bound of .

For the upper bound, we have

from which the statement follows. ∎

An immediate corollary of Lemma 2 is as follows.

Corollary 1.

For all different , it holds that

and

Proof.

For the upper bound of the first statement, we can assume that and , since otherwise we have and the statement holds trivially. Then, by Lemma 2, we have

and

For the second statement, notice that

since is a approximation of and . Similarly,

Then, for the upper bound of the second statement, we can assume that and , since otherwise and the statement holds trivially. This implies that

and

which completes the proof. ∎

We are now ready to prove Theorem 2. It is important to note that, although some of the proofs below are parallel to that of [27], our analysis needs to carefully take into account the approximation ratios introduced by the approximate KDE algorithm, which makes our analysis more involved. The following concentration inequalities will be used in our proof.

Lemma 3 (Bernstein’s Inequality [8]).

Let be independent random variables such that for any . Let , and . Then, it holds that

Lemma 4 (Matrix Chernoff Bound [28]).

Consider a finite sequence of independent, random, PSD matrices of dimension that satisfy . Let and . Then, it holds that

for , and

forr .

Proof of Theorem 2.

We first show that the degrees of all the vertices in the similarity graph are preserved with high probability in the sparsifier . For any vertex , and let be the indices of the neighbours of sampled by Algorithm 2.

For every pair of indices , and for every , we define the random variable to be the weight of the sampled edge if is the neighbour sampled from at iteration , and otherwise:

Then, fixing an arbitrary vertex , we can write

We have

By Lemmas 1 and 2 and Corollary 1, we have

where the last inequality follows by the fact that . Similarly, we have

where the inequality follows by the fact that

In order to prove a concentration bound on this degree estimate, we would like to apply the Bernstein inequality for which we need to bound

where the third inequality follows by the fact that

Then, by applying Bernstein’s inequality we have for any constant that

where we use the fact that

Therefore, by taking to be sufficiently large and by the union bound, it holds with high probability that the degree of all the nodes in are preserved up to a constant factor. For the remainder of the proof, we assume that this is the case. Note in particular that this implies for any subset .

Next, we prove it holds for that for any , where form an optimal clustering in .

By the definition of , it holds for any that

where the last line follows by Corollary 1. By Markov’s inequality and the union bound, with constant probability it holds for all that

Therefore, it holds with constant probability that

Finally, we prove that . Let be the projection of on its top eigenspaces, and notice that can be written

where are the eigenvectors of . Let be the square root of the pseudoinverse of .

We prove that the top eigenvalues of are preserved, which implies that . To prove this, for each data point and sample , we define a random matrix by

where , is the edge indicator vector, and is defined by

Notice that

where

is the Laplacian matrix of normalised with respect to the degrees of the nodes in . We prove that, with high probability, the top eigenvectors of and are approximately the same. Then, we show the same for and which implies that .

We begin by looking at the first moment of , and have that

where the last inequality follows by the fact that

Similarly,

where the last inequality follows by the fact that

Additionally, for any and and , we have that

Now, we apply the matrix Chernoff bound and have that

for some constant . The other side of the matrix Chernoff bound gives us that

Combining these, with probability it holds for any non-zero in the space spanned by that

By setting , we can rewrite this as

Since , we have shown that there exist orthogonal vectors whose Rayleigh quotient with respect to is . By the Courant-Fischer Theorem, we have .

It only remains to show that , which implies that . By the definition of , we have that . Therefore, for any and , it holds that

where the final guarantee follows from the fact that the degrees in are preserved up to a constant factor. The conclusion of the theorem follows by the Courant-Fischer Theorem.

Finally, we bound the running time of Algorithm 2 which is dominated by the recursive calls to Algorithm 1. We note that, although the number of nodes doubles at each level of the recursion tree (visualised in Figure 4), the total number of samples and data points remain constant for each level of the tree. Then, since the running time of the KDE algorithm is superadditive, the total running time of the KDE algorithms at level of the tree is

Since there are levels of the tree, the total running time of Algorithm 1 is . This completes the proof. ∎

Appendix B Additional Experimental Results

In this section, we include in Figures 6 and 7 some additional examples of the performance of the six spectral clustering algorithms on the BSDS image segmentation dataset. Due to its quadratic memory requirement, the SKLearn GK algorithm cannot be used on the full-resolution image. Therefore, we present its results on each image downsampled to 20,000 pixels. For every other algorithm, we show the results on the full-resolution image. In every case, we find that our algorithm is able to identify more refined detail of the image when compared with the alternative algorithms.