Faithful Model Explanations through

Energy-Constrained Conformal Counterfactuals111This is a pre-print.

Abstract

Counterfactual explanations offer an intuitive and straightforward way to explain black-box models and offer algorithmic recourse to individuals. To address the need for plausible explanations, existing work has primarily relied on surrogate models to learn how the input data is distributed. This effectively reallocates the task of learning realistic explanations for the data from the model itself to the surrogate. Consequently, the generated explanations may seem plausible to humans but need not necessarily describe the behaviour of the black-box model faithfully. We formalise this notion of faithfulness through the introduction of a tailored evaluation metric and propose a novel algorithmic framework for generating Energy-Constrained Conformal Counterfactuals that are only as plausible as the model permits. Through extensive empirical studies, we demonstrate that ECCCo reconciles the need for faithfulness and plausibility. In particular, we show that for models with gradient access, it is possible to achieve state-of-the-art performance without the need for surrogate models. To do so, our framework relies solely on properties defining the black-box model itself by leveraging recent advances in energy-based modelling and conformal prediction. To our knowledge, this is the first venture in this direction for generating faithful counterfactual explanations. Thus, we anticipate that ECCCo can serve as a baseline for future research. We believe that our work opens avenues for researchers and practitioners seeking tools to better distinguish trustworthy from unreliable models.

1 Introduction

Counterfactual explanations provide a powerful, flexible and intuitive way to not only explain black-box models but also offer the possibility of algorithmic recourse to affected individuals. Instead of opening the black box, counterfactual explanations work under the premise of strategically perturbing model inputs to understand model behaviour (Wachter, Mittelstadt, and Russell 2017). Intuitively speaking, we generate explanations in this context by asking what-if questions of the following nature: ‘Our credit risk model currently predicts that this individual is not credit-worthy. What if they reduced their monthly expenditures by 10%?’

This is typically implemented by defining a target outcome for some individual described by attributes, for which the model initially predicts a different outcome: . Counterfactuals are then searched by minimizing a loss function that compares the predicted model output to the target outcome: . Since counterfactual explanations work directly with the black-box model, valid counterfactuals always have full local fidelity by construction where fidelity is defined as the degree to which explanations approximate the predictions of a black-box model (Molnar 2022).

In situations where full fidelity is a requirement, counterfactual explanations offer a more appropriate solution to Explainable Artificial Intelligence (XAI) than other popular approaches like LIME (Ribeiro, Singh, and Guestrin 2016) and SHAP (Lundberg and Lee 2017), which involve local surrogate models. But even full fidelity is not a sufficient condition for ensuring that an explanation faithfully describes the behaviour of a model. That is because multiple distinct explanations can lead to the same model prediction, especially when dealing with heavily parameterized models like deep neural networks, which are underspecified by the data (Wilson 2020). In the context of counterfactuals, the idea that no two explanations are the same arises almost naturally. A key focus in the literature has therefore been to identify those explanations that are most appropriate based on a myriad of desiderata such as closeness (Wachter, Mittelstadt, and Russell 2017), sparsity (Schut et al. 2021), actionability (Ustun, Spangher, and Liu 2019) and plausibility (Joshi et al. 2019).

In this work, we draw closer attention to modelling faithfulness rather than fidelity as a desideratum for counterfactuals. We define faithfulness as the degree to which counterfactuals are consistent with what the model has learned about the data. Our key contributions are as follows: first, we show that fidelity is an insufficient evaluation metric for counterfactuals (Section 3) and propose a definition of faithfulness that gives rise to more suitable metrics (Section 4). Next, we introduce a ECCCo: a novel algorithmic approach aimed at generating energy-constrained conformal counterfactuals that faithfully explain model behaviour in Section 5. Finally, we provide extensive empirical evidence demonstrating that ECCCo faithfully explains model behaviour and attains plausibility only when appropriate (Section 6).

To our knowledge, this is the first venture in this direction for generating faithful counterfactuals. Thus, we anticipate that ECCCo can serve as a baseline for future research. We believe that our work opens avenues for researchers and practitioners seeking tools to better distinguish trustworthy from unreliable models.

2 Background

While counterfactual explanations (CE) can also be generated for arbitrary regression models (Spooner et al. 2021), existing work has primarily focused on classification problems. Let denote the one-hot-encoded output domain with classes. Then most counterfactual generators rely on gradient descent to optimize different flavours of the following counterfactual search objective:

| (1) |

Here denotes the primary loss function, is a function that maps from the counterfactual state space to the feature space and is either a single penalty or a collection of penalties that are used to impose constraints through regularization. Equation 1 restates the baseline approach to gradient-based counterfactual search proposed by Wachter, Mittelstadt, and Russell (2017) in general form as introduced by Altmeyer et al. (2023). To explicitly account for the multiplicity of explanations, denotes an -dimensional array of counterfactual states.

The baseline approach, which we will simply refer to as Wachter, searches a single counterfactual directly in the feature space and penalizes its distance to the original factual. In this case, is simply the identity function and corresponds to the feature space itself. Many derivative works of Wachter, Mittelstadt, and Russell (2017) have proposed new flavours of Equation 1, each of them designed to address specific desiderata that counterfactuals ought to meet in order to properly serve both AI practitioners and individuals affected by algorithmic decision-making systems. The list of desiderata includes but is not limited to the following: sparsity, closeness (Wachter, Mittelstadt, and Russell 2017), actionability (Ustun, Spangher, and Liu 2019), diversity (Mothilal, Sharma, and Tan 2020), plausibility (Joshi et al. 2019; Poyiadzi et al. 2020; Schut et al. 2021), robustness (Upadhyay, Joshi, and Lakkaraju 2021; Pawelczyk et al. 2022; Altmeyer et al. 2023) and causality (Karimi, Schölkopf, and Valera 2021). Different counterfactual generators addressing these needs have been extensively surveyed and evaluated in various studies (Verma et al. 2020; Karimi et al. 2020; Pawelczyk et al. 2021; Artelt et al. 2021; Guidotti 2022).

The notion of plausibility is central to all of the desiderata. For example, Artelt et al. (2021) find that plausibility typically also leads to improved robustness. Similarly, plausibility has also been connected to causality in the sense that plausible counterfactuals respect causal relationships (Mahajan, Tan, and Sharma 2019). Consequently, the plausibility of counterfactuals has been among the primary concerns for researchers. Achieving plausibility is equivalent to ensuring that the generated counterfactuals comply with the true and unobserved data-generating process (DGP). We define plausibility formally in this work as follows:

Definition 2.1 (Plausible Counterfactuals).

Let denote the true conditional distribution of samples in the target class . Then for to be considered a plausible counterfactual, we need: .

To generate plausible counterfactuals, we first need to quantify the conditional distribution of samples in the target class (). We can then ensure that we generate counterfactuals that comply with that distribution.

One straightforward way to do this is to use surrogate models for the task. Joshi et al. (2019), for example, suggest that instead of searching counterfactuals in the feature space , we can traverse a latent embedding (Equation 1) that implicitly codifies the DGP. To learn the latent embedding, they propose using a generative model such as a Variational Autoencoder (VAE). Provided the surrogate model is well-specified, their proposed approach REVISE can yield plausible explanations. Others have proposed similar approaches: Dombrowski, Gerken, and Kessel (2021) traverse the base space of a normalizing flow to solve Equation 1; Poyiadzi et al. (2020) use density estimators () to constrain the counterfactuals to dense regions in the feature space; finally, Karimi, Schölkopf, and Valera (2021) assume knowledge about the causal graph that generates the data.

A competing approach towards plausibility that is also closely related to this work instead relies on the black-box model itself. Schut et al. (2021) show that to meet the plausibility objective we need not explicitly model the input distribution. Pointing to the undesirable engineering overhead induced by surrogate models, they propose to rely on the implicit minimization of predictive uncertainty instead. Their proposed methodology, which we will refer to as Schut, solves Equation 1 by greedily applying Jacobian-Based Saliency Map Attacks (JSMA) in the feature space with cross-entropy loss and no penalty at all. The authors demonstrate theoretically and empirically that their approach yields counterfactuals for which the model predicts the target label with high confidence. Provided the model is well-specified, these counterfactuals are plausible. This idea hinges on the assumption that the black-box model provides well-calibrated predictive uncertainty estimates.

3 Why Fidelity is not Enough: A Motivational Example

As discussed in the introduction, any valid counterfactual also has full fidelity by construction: solutions to Equation 1 are considered valid as soon as the label predicted by the model matches the target class. So while fidelity always applies, counterfactuals that address the various desiderata introduced above can look vastly different from each other.

To demonstrate this with an example, we have trained a simple image classifier on the well-known MNIST dataset (LeCun 1998): a Multi-Layer Perceptron (MLP) with test set accuracy . No measures have been taken to improve the model’s adversarial robustness or its capacity for predictive uncertainty quantification. The far left panel of Figure 1 shows a random sample drawn from the dataset. The underlying classifier correctly predicts the label ‘nine’ for this image. For the given factual image and model, we have used Wachter, Schut and REVISE to generate one counterfactual each in the target class ‘seven’. The perturbed images are shown next to the factual image from left to right in Figure 1. Captions on top of the images indicate the generator along with the predicted probability that the image belongs to the target class. In all cases, that probability is very high, while the counterfactuals look very different.

Since Wachter is only concerned with closeness, the generated counterfactual is almost indistinguishable from the factual. Schut expects a well-calibrated model that can generate predictive uncertainty estimates. Since this is not the case, the generated counterfactual looks like an adversarial example. Finally, the counterfactual generated by REVISE looks much more plausible than the other two. But is it also more faithful to the behaviour of our MNIST classifier? That is much less clear because the surrogate used by REVISE introduces friction: explanations no longer depend exclusively on the black-box model itself.

So which of the counterfactuals most faithfully explains the behaviour of our image classifier? Fidelity cannot help us to make that judgement, because all of these counterfactuals have full fidelity. Thus, fidelity is an insufficient evaluation metric to assess the faithfulness of CE.

4 Faithful first, Plausible second

Considering the limitations of fidelity as demonstrated in the previous section, analogous to Definition 2.1, we introduce a new notion of faithfulness in the context of CE:

Definition 4.1 (Faithful Counterfactuals).

Let denote the conditional distribution of in the target class , where denotes the parameters of model . Then for to be considered a faithful counterfactual, we need: .

In doing this, we merge in and nuance the concept of plausibility (Definition 2.1) where the notion of ‘consistent with the data’ becomes ‘consistent with what the model has learned about the data’.

Quantifying the Model’s Generative Property

To assess counterfactuals with respect to Definition 4.1, we need a way to quantify the posterior conditional distribution . To this end, we draw on ideas from energy-based modelling (EBM), a subdomain of machine learning that is concerned with generative or hybrid modelling (Grathwohl et al. 2020; Du and Mordatch 2019). In particular, note that if we fix to our target value , we can conditionally draw from by randomly initializing and then using Stochastic Gradient Langevin Dynamics (SGLD) as follows,

| (2) |

where is the stochastic term and the step-size is typically polynomially decayed (Welling and Teh 2011). The term denotes the model energy conditioned on the target class label which we specify as the negative logit corresponding to . To allow for faster sampling, we follow the common practice of choosing the step-size and the standard deviation of separately. While is only guaranteed to distribute as if and , the bias introduced for a small finite is negligible in practice (Murphy 2023).

Generating multiple samples using SGLD thus yields an empirical distribution that approximates what the model has learned about the input data. While in the context of EBM, this is usually done during training, we propose to repurpose this approach during inference in order to evaluate the faithfulness of model explanations. The appendix provides additional implementation details for any tasks related to energy-based modelling.

Quantifying the Model’s Predictive Uncertainty

Faithful counterfactuals can be expected to also be plausible if the learned conditional distribution (Defintion 4.1) is close to the true conditional distribution (Definition 2.1). We can further improve the plausibility of counterfactuals without the need for surrogate models that may interfere with faithfulness by minimizing predictive uncertainty (Schut et al. 2021). Unfortunately, this idea relies on the assumption that the model itself provides predictive uncertainty estimates, which may be too restrictive in practice.

To relax this assumption, we use conformal prediction (CP), an approach to predictive uncertainty quantification that has recently gained popularity (Angelopoulos and Bates 2021; Manokhin 2022). Crucially for our intended application, CP is model-agnostic and can be applied during inference without placing any restrictions on model training. It works under the premise of turning heuristic notions of uncertainty into rigorous estimates by repeatedly sifting through the training data or a dedicated calibration dataset. Calibration data is used to compute so-called nonconformity scores: where is referred to as score function (see appendix for details).

Conformal classifiers produce prediction sets for individual inputs that include all output labels that can be reasonably attributed to the input. These sets are formed as follows,

| (3) |

where denotes the -quantile of and is a predetermined error rate. These sets tend to be larger for inputs that do not conform with the training data and are characterized by high predictive uncertainty. To leverage this notion of predictive uncertainty in the context of gradient-based counterfactual search, we use a smooth set size penalty introduced by Stutz et al. (2021):

| (4) |

Here, is a hyper-parameter and can be interpreted as the probability of label being included in the prediction set (see appendix for details). In order to compute this penalty for any black-box model, we merely need to perform a single calibration pass through a holdout set . Arguably, data is typically abundant and in most applications, practitioners tend to hold out a test data set anyway. Consequently, CP removes the restriction on the family of predictive models, at the small cost of reserving a subset of the available data for calibration. This particular case of conformal prediction is referred to as split conformal prediction (SCP) as it involves splitting the training data into a proper training dataset and a calibration dataset.

Evaluating Plausibility and Faithfulness

The parallels between our definitions of plausibility and faithfulness imply that we can also use similar evaluation metrics in both cases. Since existing work has focused heavily on plausibility, it offers a useful starting point. In particular, Guidotti (2022) have proposed an implausibility metric that measures the distance of the counterfactual from its nearest neighbour in the target class. As this distance is reduced, counterfactuals get more plausible under the assumption that the nearest neighbour itself is plausible in the sense of Definition 2.1. In this work, we use the following adapted implausibility metric,

| (5) |

where denotes the counterfactual and is a subsample of the training data in the target class . By averaging over multiple samples in this manner, we avoid the risk that the nearest neighbour of itself is not plausible according to Definition 2.1 (e.g. an outlier).

Equation 5 gives rise to a similar evaluation metric for unfaithfulness. We swap out the subsample of observed individuals in the target class for the set of samples generated through SGLD ():

| (6) |

Our default choice for the function in both cases is the Euclidean Norm. Depending on the type of input data other choices may be more adequate (see Section 6).

5 Energy-Constrained Conformal Counterfactuals

Given our proposed notion of faithfulness, we now describe ECCCo, our proposed framework for generating Energy-Constrained Conformal Counterfactuals. It is based on the premise that counterfactuals should first and foremost be faithful. Plausibility, as a secondary concern, is then still attainable to the degree that the black-box model itself has learned plausible explanations for the underlying data.

We begin by substituting the loss function in Equation 1,

| (7) |

where is a hybrid loss function used in joint-energy modelling evaluated at a given counterfactual state for a given model and target outcome:

| (8) |

The first term, , is any standard classification loss function such as cross-entropy loss. The second term, , is used to measure loss with respect to the generative task222In practice, regularization loss is typically also added. We follow this convention but have omitted the term here for simplicity.. In the context of joint-energy training, induces changes in model parameters that decrease the energy of observed samples and increase the energy of samples generated through SGLD (Du and Mordatch 2019).

The key observation in our context is that we can rely solely on decreasing the energy of the counterfactual itself. This is sufficient to capture the generative property of the underlying model since it is implicitly captured by its parameters . Importantly, this means that we do not need to generate conditional samples through SGLD during our counterfactual search at all (see appendix for details).

This observation leads to the following simple objective function for ECCCo:

| (9) | ||||

The first penalty term involving induces closeness like in Wachter, Mittelstadt, and Russell (2017). The second penalty term involving induces faithfulness by constraining the energy of the generated counterfactual. The third and final penalty term involving ensures that the generated counterfactual is associated with low predictive uncertainty. To tune these hyperparameters we have relied on grid search.

Concerning feature autoencoding (), ECCCo does not rely on latent space search to achieve its primary objective of faithfulness. By default, we choose to be the identity function as in Wachter. This is generally also enough to achieve plausibility, provided the model has learned plausible explanations for the data. In some cases, plausibility can be improved further by mapping counterfactuals to a lower-dimensional latent space. In the following, we refer to this approach as ECCCo+: that is, ECCCo plus dimensionality reduction.

Figure 2 illustrates how the different components in Equation 9 affect the counterfactual search for a synthetic dataset. The underlying classifier is a Joint Energy Model (JEM) that was trained to predict the output class (blue or orange) and generate class-conditional samples (Grathwohl et al. 2020). We have used four different generator flavours to produce a counterfactual in the blue class for a sample from the orange class: Wachter, which only uses the first penalty (); ECCCo (no EBM), which does not constrain energy (); ECCCo (no CP), which involves no set size penalty (); and, finally, ECCCo, which involves all penalties defined in Equation 9. Arrows indicate (negative) gradients with respect to the objective function at different points in the feature space.

While Wachter generates a valid counterfactual, it ends up close to the original starting point consistent with its objective. ECCCo (no EBM) avoids regions of high predictive uncertainty near the decision boundary, but the outcome is still not plausible. The counterfactual produced by ECCCo (no CP) is energy-constrained. Since the JEM has learned the conditional input distribution reasonably well in this case, the counterfactual is both faithful and plausible. Finally, the outcome for ECCCo looks similar, but the additional smooth set size penalty leads to somewhat faster convergence.

6 Empirical Analysis

Our goal in this section is to shed light on the following research questions:

Research Question 6.1 (Faithfulness).

To what extent are counterfactuals generated by ECCCo more faithful than those produced by state-of-the-art generators?

Research Question 6.2 (Balancing Desiderata).

Compared to state-of-the-art generators, how does ECCCo balance the two key objectives of faithfulness and plausibility?

The second question is motivated by the intuition that faithfulness and plausibility should coincide for models that have learned plausible explanations of the data.

Experimental Setup

To assess and benchmark the performance of our proposed generator against the state of the art, we generate multiple counterfactuals for different models and datasets. In particular, we compare ECCCo and its variants to the following counterfactual generators that were introduced above: firstly, Schut, which works under the premise of minimizing predictive uncertainty; secondly, REVISE, which is state-of-the-art (SOTA) with respect to plausibility; and, finally, Wachter, which serves as our baseline. In the case of ECCCo+, we use principal component analysis (PCA) for dimensionality reduction: the latent space is spanned by the first principal components where we choose to be equal to the latent dimension of the VAE used by REVISE.

For the predictive modelling tasks, we use multi-layer perceptrons (MLP), deep ensembles, joint energy models (JEM) and convolutional neural networks (LeNet-5 CNN (LeCun et al. 1998)). Both joint-energy modelling and ensembling have been associated with improved generative properties and adversarial robustness (Grathwohl et al. 2020; Lakshminarayanan, Pritzel, and Blundell 2017), so we expect this to be positively correlated with the plausibility of ECCCo. To account for stochasticity, we generate many counterfactuals for each target class, generator, model and dataset over multiple runs.

We perform benchmarks on eight datasets from different domains. From the credit and finance domain we include three tabular datasets: Give Me Some Credit (GMSC) (Kaggle 2011), German Credit (Hoffman 1994) and California Housing (Pace and Barry 1997). All of these are commonly used in the related literature (Karimi et al. 2020; Altmeyer et al. 2023; Pawelczyk et al. 2021). Following related literature (Schut et al. 2021; Dhurandhar et al. 2018) we also include two image datasets: MNIST (LeCun 1998) and Fashion MNIST (Xiao, Rasul, and Vollgraf 2017).

Full details concerning model training as well as detailed descriptions and results for all datasets can be found in the appendix. In the following, we will focus on the most relevant results highlighted in Tables 1 and 2. The tables show sample averages along with standard deviations across multiple runs for our key evaluation metrics for the California Housing and GMSC datasets (Table 1) and the MNIST dataset (Table 2). For each metric, the best outcomes are highlighted in bold. Asterisks indicate that the given value is more than one (*) or two (**) standard deviations away from the baseline (Wachter). For the tabular datasets, we use the default Euclidian distance to measure unfaithfulness and implausibility as defined in Equations 6 and 5, respectively. The third metric presented in Table 1 quantifies the predictive uncertainty of the counterfactual as measured by Equation 4. For the vision datasets, we rely on measuring the structural dissimilarity between images for our unfaithfulness and implausibility metrics (Wang, Simoncelli, and Bovik 2003).

Faithfulness

| California Housing | GMSC | ||||||

| Model | Generator | Unfaithfulness ↓ | Implausibility ↓ | Uncertainty ↓ | Unfaithfulness ↓ | Implausibility ↓ | Uncertainty ↓ |

| ECCCo | 3.69 ± 0.08** | 1.94 ± 0.13 | 0.09 ± 0.01** | 3.84 ± 0.07** | 2.13 ± 0.08 | 0.23 ± 0.01** | |

| ECCCo+ | 3.88 ± 0.07** | 1.20 ± 0.09 | 0.15 ± 0.02 | 3.79 ± 0.05** | 1.81 ± 0.05 | 0.30 ± 0.01* | |

| ECCCo (no CP) | 3.70 ± 0.08** | 1.94 ± 0.13 | 0.10 ± 0.01** | 3.85 ± 0.07** | 2.13 ± 0.08 | 0.23 ± 0.01** | |

| ECCCo (no EBM) | 4.03 ± 0.07 | 1.12 ± 0.12 | 0.14 ± 0.01** | 4.08 ± 0.06 | 0.97 ± 0.08 | 0.31 ± 0.01* | |

| REVISE | 3.96 ± 0.07* | 0.58 ± 0.03** | 0.17 ± 0.03 | 4.09 ± 0.07 | 0.63 ± 0.02** | 0.33 ± 0.06 | |

| Schut | 4.00 ± 0.06 | 1.15 ± 0.12 | 0.10 ± 0.01** | 4.04 ± 0.08 | 1.21 ± 0.08 | 0.30 ± 0.01* | |

| MLP Ensemble | Wachter | 4.04 ± 0.07 | 1.13 ± 0.12 | 0.16 ± 0.01 | 4.10 ± 0.07 | 0.95 ± 0.08 | 0.32 ± 0.01 |

| ECCCo | 1.40 ± 0.08** | 0.69 ± 0.05** | 0.11 ± 0.00** | 1.20 ± 0.06* | 0.78 ± 0.07** | 0.38 ± 0.01 | |

| ECCCo+ | 1.28 ± 0.08** | 0.60 ± 0.04** | 0.11 ± 0.00** | 1.01 ± 0.07** | 0.70 ± 0.07** | 0.37 ± 0.01 | |

| ECCCo (no CP) | 1.39 ± 0.08** | 0.69 ± 0.05** | 0.11 ± 0.00** | 1.21 ± 0.07* | 0.77 ± 0.07** | 0.39 ± 0.01 | |

| ECCCo (no EBM) | 1.70 ± 0.09 | 0.99 ± 0.08 | 0.14 ± 0.00* | 1.31 ± 0.07 | 0.97 ± 0.10 | 0.32 ± 0.01** | |

| REVISE | 1.39 ± 0.15** | 0.59 ± 0.04** | 0.25 ± 0.07 | 1.01 ± 0.07** | 0.63 ± 0.04** | 0.33 ± 0.07 | |

| Schut | 1.59 ± 0.10* | 1.10 ± 0.06 | 0.09 ± 0.00** | 1.34 ± 0.07 | 1.21 ± 0.10 | 0.26 ± 0.01** | |

| JEM Ensemble | Wachter | 1.71 ± 0.09 | 0.99 ± 0.08 | 0.14 ± 0.00 | 1.31 ± 0.08 | 0.95 ± 0.10 | 0.33 ± 0.01 |

Overall, we find strong empirical evidence suggesting that ECCCo consistently achieves state-of-the-art faithfulness. Across all models and datasets highlighted here, different variations of ECCCo consistently outperform other generators with respect to faithfulness, in many cases substantially. This pattern is mostly robust across all other datasets.

In particular, we note that the best results are generally obtained when using the full ECCCo objective (Equation 9). In other words, constraining both energy and predictive uncertainty typically yields the most faithful counterfactuals. We expected the former to play a more significant role in this context and that is typically what we find across all datasets. The results in Table 1 indicate that faithfulness can be improved substantially by relying solely on the energy constraint (ECCCo (no CP)). In most cases, however, the full objective yields the most faithful counterfactuals. This indicates that predictive uncertainty minimization plays an important role in achieving faithfulness.

We also generally find that latent space search does not impede faithfulness for ECCCo. In most cases ECCCo+ is either on par with ECCCo or even outperforms it. There are some notable exceptions though. Cases in which ECCCo achieves substantially better faithfulness without latent space search tend to involve more vulnerable models like the simple MLP for MNIST in Table 2. We explain this finding as follows: even though dimensionality reduction through PCA in the case of ECCCo+ can be considered a relatively mild form of intervention, the first principal components fail to capture some of the variation in the data. More vulnerable models may be particularly sensitive to this residual variation in the data.

Consistent with this finding, we also observe that REVISE ranks higher for faithfulness, if the model itself has learned more plausible representations of the underlying data: REVISE generates more faithful counterfactuals than the baseline for the JEM Ensemble in Table 1 and the LeNet-5 CNN in Table 2. This demonstrates that the two desiderata—faithfulness and plausibility—are not mutually exclusive.

Balancing Desiderata

| MNIST | |||

|---|---|---|---|

| Model | Generator | Unfaithfulness ↓ | Implausibility ↓ |

| ECCCo | 0.243 ± 0.000** | 0.420 ± 0.001 | |

| ECCCo+ | 0.246 ± 0.000* | 0.306 ± 0.001** | |

| REVISE | 0.248 ± 0.000 | 0.301 ± 0.004** | |

| Schut | 0.247 ± 0.001 | 0.303 ± 0.008** | |

| MLP | Wachter | 0.247 ± 0.000 | 0.344 ± 0.002 |

| ECCCo | 0.248 ± 0.000** | 0.387 ± 0.002 | |

| ECCCo+ | 0.248 ± 0.000** | 0.310 ± 0.002** | |

| REVISE | 0.248 ± 0.000** | 0.301 ± 0.002** | |

| Schut | 0.250 ± 0.002 | 0.289 ± 0.024* | |

| LeNet-5 | Wachter | 0.249 ± 0.000 | 0.335 ± 0.002 |

Overall, we find strong empirical evidence suggesting that ECCCo can achieve near state-of-the-art plausibility without sacrificing faithfulness. Figure 3 shows one such example taken from the MNIST benchmark where the objective is to turn the factual ‘three’ (far left) into a ‘five’. The underlying model is a LeNet-5 CNN. The different images show the counterfactuals produced by the generators, of which all but the one produced by Schut are valid. Both variations of ECCCo produce plausible counterfactuals.

Looking at the benchmark results presented in Tables 1 and 2 we firstly note that although REVISE generally performs best, ECCCo and in particular ECCCo+ often approach SOTA performance. Upon visual inspection of the generated images we actually find that ECCCo+ performs much better than REVISE (see appendix). Zooming in on the details we observe that ECCCo and its variations do particularly well, whenever the underlying model has been explicitly trained to learn plausible representations of the data. For both tabular datasets in Table 1, ECCCo improves plausibility substantially compared to the baseline. This broad pattern is mostly consistent for all other datasets, although there are notable exceptions for which ECCCo takes the lead on both plausibility and faithfulness.

While we maintain that generally speaking plausibility should hinge on the quality of the model, our results also indicate that it is possible to balance faithfulness and plausibility if needed: ECCCo+ generally outperforms other variants of ECCCo in this context, occasionally at the small cost of slightly reduced faithfulness. For the vision datasets especially, we find that ECCCo+ is consistently second only to REVISE for all models and regularly substantially better than the baseline. Looking at the California Housing data, latent space search markedly improves plausibility without sacrificing faithfulness: for the JEM Ensemble, ECCCo+ performs substantially better than the baseline and only marginally worse than REVISE. Importantly, ECCCo+ does not attain plausibility at all costs: for the MLP Ensemble, plausibility is still very low but this seems to faithfully represent what the model has learned.

We conclude from the findings presented thus far that ECCCo enables us to reconcile the objectives of faithfulness and plausibility. It produces plausible counterfactuals if and only if the model itself has learned plausible explanations for the data. It thus avoids the risk of generating plausible but potentially misleading explanations for models that are highly susceptible to implausible explanations.

Additional Desiderata

While we have deliberately focused on our key metrics of interest so far, it is worth briefly considering other common desiderata for counterfactuals. With reference to the right-most columns for each dataset in Table 1, we firstly note that ECCCo typically reduces predictive uncertainty as intended. Consistent with its design, Schut performs well on this metric even though it does not explicitly address uncertainty as measured by conformal prediction set sizes.

Another commonly discussed desideratum is closeness (Wachter, Mittelstadt, and Russell 2017): counterfactuals that are closer to their factuals are associated with smaller costs to individuals in the context of algorithmic recourse. As evident from the additional tables in the appendix, the closeness desideratum tends to be negatively correlated with plausibility and faithfulness. Consequently, both REVISE and ECCCo generally yield more costly counterfactuals than the baseline. Nonetheless, ECCCo does not seem to stretch costs unnecessarily: in Figure 3 useful parts of the factual ‘three’ are clearly retained.

7 Limitations

Despite having taken considerable measures to study our methodology carefully, limitations can still be identified.

Firstly, we recognize that our proposed distance-based evaluation metrics for plausibility and faithfulness may not be universally applicable to all types of data. In any case, they depend on choosing a distance metric on a case-by-case basis, as we have done in this work. Arguably, commonly used metrics for measuring other desiderata such as closeness suffer from the same pitfall. We therefore think that future work on counterfactual explanations could benefit from defining universal evaluation metrics.

Relatedly, we note that our proposed metric for measuring faithfulness depends on the availability of samples generated through SGLD, which in turn requires gradient access for models. This means it cannot be used to evaluate non-differentiable classifiers. Consequently, we also have not applied ECCCo to some machine learning models commonly used for classification such as decision trees. Since ECCCo itself does not rely on SGLD, its defining penalty functions are indeed applicable to gradient-free counterfactual generators. This is an interesting avenue for future research.

Next, common challenges associated with energy-based modelling including sensitivity to scale, training instabilities and sensitivity to hyperparameters also apply to ECCCo to some extent. In grid searches for optimal hyperparameters, we have noticed that unless properly regularized, ECCCo is sometimes prone to overshoot for the energy constraint.

Finally, while we have used ablation to understand the roles of the different components of ECCCo, the scope of this work has prevented us from investigating the role of conformal prediction in this context more thoroughly. We have exclusively relied on split conformal prediction and have used fixed values for the predetermined error rate and other hyperparameters. Future work could benefit from more extensive ablation studies that tune hyperparameters and investigate different approaches to conformal prediction.

8 Conclusion

This work leverages ideas from energy-based modelling and conformal prediction in the context of counterfactual explanations. We have proposed a new way to generate counterfactuals that are maximally faithful to the black-box model they aim to explain. Our proposed generator, ECCCo, produces plausible counterfactuals iff the black-box model itself has learned realistic explanations for the data, which we have demonstrated through rigorous empirical analysis. This should enable researchers and practitioners to use counterfactuals in order to discern trustworthy models from unreliable ones. While the scope of this work limits its generalizability, we believe that ECCCo offers a solid base for future work on faithful counterfactual explanations.

Acknowledgements

Some of the members of TU Delft were partially funded by ICAI AI for Fintech Research, an ING—TU Delft collaboration.

Research reported in this work was partially or completely facilitated by computational resources and support of the DelftBlue (Delft High Performance Computing Centre DHPC) and the Delft AI Cluster (DAIC: https://doc.daic.tudelft.nl/) at TU Delft. Detailed information about the utilized computing resources can be found in the appendix. The authors would like to thank Azza Ahmed, in particular, for her tremendous help with running Julia jobs on the cluster. The work remains the sole responsibility of the authors.

We would also like to express our gratitude to the group of students who have recently contributed to the development of CounterfactualExplanations.jl (Altmeyer, van Deursen et al. 2023), the Julia package that was used for this analysis: Rauno Arike, Simon Kasdorp, Lauri Kesküll, Mariusz Kicior, Vincent Pikand.

All code used for the analysis in this paper can be found here: https://github.com/pat-alt/ECCCo.jl.

References

- Altmeyer et al. (2023) Altmeyer, P.; Angela, G.; Buszydlik, A.; Dobiczek, K.; van Deursen, A.; and Liem, C. C. 2023. Endogenous Macrodynamics in Algorithmic Recourse. In 2023 IEEE Conference on Secure and Trustworthy Machine Learning (SaTML), 418–431. IEEE.

- Altmeyer, van Deursen et al. (2023) Altmeyer, P.; van Deursen, A.; et al. 2023. Explaining Black-Box Models through Counterfactuals. In Proceedings of the JuliaCon Conferences, volume 1, 130.

- Angelopoulos and Bates (2021) Angelopoulos, A. N.; and Bates, S. 2021. A gentle introduction to conformal prediction and distribution-free uncertainty quantification. arXiv preprint arXiv:2107.07511.

- Artelt et al. (2021) Artelt, A.; Vaquet, V.; Velioglu, R.; Hinder, F.; Brinkrolf, J.; Schilling, M.; and Hammer, B. 2021. Evaluating robustness of counterfactual explanations. In 2021 IEEE Symposium Series on Computational Intelligence (SSCI), 01–09. IEEE.

- Delft High Performance Computing Centre (DHPC) Delft High Performance Computing Centre (DHPC). 2022. DelftBlue Supercomputer (Phase 1). https://www.tudelft.nl/dhpc/ark:/44463/DelftBluePhase1.

- Dhurandhar et al. (2018) Dhurandhar, A.; Chen, P.-Y.; Luss, R.; Tu, C.-C.; Ting, P.; Shanmugam, K.; and Das, P. 2018. Explanations based on the missing: Towards contrastive explanations with pertinent negatives. Advances in neural information processing systems, 31.

- Dombrowski, Gerken, and Kessel (2021) Dombrowski, A.-K.; Gerken, J. E.; and Kessel, P. 2021. Diffeomorphic Explanations with Normalizing Flows. In ICML Workshop on Invertible Neural Networks, Normalizing Flows, and Explicit Likelihood Models.

- Du and Mordatch (2019) Du, Y.; and Mordatch, I. 2019. Implicit generation and generalization in energy-based models. arXiv preprint arXiv:1903.08689.

- Grathwohl et al. (2020) Grathwohl, W.; Wang, K.-C.; Jacobsen, J.-H.; Duvenaud, D.; Norouzi, M.; and Swersky, K. 2020. Your classifier is secretly an energy based model and you should treat it like one. In International Conference on Learning Representations.

- Guidotti (2022) Guidotti, R. 2022. Counterfactual explanations and how to find them: literature review and benchmarking. Data Mining and Knowledge Discovery, 1–55.

- Hoffman (1994) Hoffman, H. 1994. German Credit Data.

- Joshi et al. (2019) Joshi, S.; Koyejo, O.; Vijitbenjaronk, W.; Kim, B.; and Ghosh, J. 2019. Towards realistic individual recourse and actionable explanations in black-box decision making systems. arXiv preprint arXiv:1907.09615.

- Kaggle (2011) Kaggle. 2011. Give Me Some Credit, Improve on the State of the Art in Credit Scoring by Predicting the Probability That Somebody Will Experience Financial Distress in the next Two Years. https://www.kaggle.com/c/GiveMeSomeCredit. Accessed: 2023-12-14.

- Karimi et al. (2020) Karimi, A.-H.; Barthe, G.; Schölkopf, B.; and Valera, I. 2020. A survey of algorithmic recourse: definitions, formulations, solutions, and prospects. arXiv preprint arXiv:2010.04050.

- Karimi, Schölkopf, and Valera (2021) Karimi, A.-H.; Schölkopf, B.; and Valera, I. 2021. Algorithmic Recourse: From Counterfactual Explanations to Interventions. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 353–362.

- Kingma and Ba (2014) Kingma, D. P.; and Ba, J. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

- Lakshminarayanan, Pritzel, and Blundell (2017) Lakshminarayanan, B.; Pritzel, A.; and Blundell, C. 2017. Simple and scalable predictive uncertainty estimation using deep ensembles. Advances in neural information processing systems, 30.

- LeCun (1998) LeCun, Y. 1998. The MNIST database of handwritten digits. http://yann. lecun. com/exdb/mnist/.

- LeCun et al. (1998) LeCun, Y.; Bottou, L.; Bengio, Y.; and Haffner, P. 1998. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11): 2278–2324.

- Lundberg and Lee (2017) Lundberg, S. M.; and Lee, S.-I. 2017. A Unified Approach to Interpreting Model Predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems, 4768–4777.

- Mahajan, Tan, and Sharma (2019) Mahajan, D.; Tan, C.; and Sharma, A. 2019. Preserving causal constraints in counterfactual explanations for machine learning classifiers. arXiv preprint arXiv:1912.03277.

- Manokhin (2022) Manokhin, V. 2022. Awesome Conformal Prediction. https://doi.org/10.5281/zenodo.6467205. Accessed: 2023-12-24.

- Molnar (2022) Molnar, C. 2022. Interpretable Machine Learning. 2 edition.

- Mothilal, Sharma, and Tan (2020) Mothilal, R. K.; Sharma, A.; and Tan, C. 2020. Explaining Machine Learning Classifiers through Diverse Counterfactual Explanations. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, 607–617.

- Murphy (2023) Murphy, K. P. 2023. Probabilistic machine learning: Advanced topics. MIT press.

- Pace and Barry (1997) Pace, R. K.; and Barry, R. 1997. Sparse Spatial Autoregressions. Statistics & Probability Letters, 33(3): 291–297.

- Pawelczyk et al. (2021) Pawelczyk, M.; Bielawski, S.; Heuvel, J. v. d.; Richter, T.; and Kasneci, G. 2021. Carla: a python library to benchmark algorithmic recourse and counterfactual explanation algorithms. arXiv preprint arXiv:2108.00783.

- Pawelczyk et al. (2022) Pawelczyk, M.; Datta, T.; van-den Heuvel, J.; Kasneci, G.; and Lakkaraju, H. 2022. Probabilistically Robust Recourse: Navigating the Trade-offs between Costs and Robustness in Algorithmic Recourse. arXiv preprint arXiv:2203.06768.

- Poyiadzi et al. (2020) Poyiadzi, R.; Sokol, K.; Santos-Rodriguez, R.; De Bie, T.; and Flach, P. 2020. FACE: Feasible and Actionable Counterfactual Explanations. In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, 344–350.

- Ribeiro, Singh, and Guestrin (2016) Ribeiro, M. T.; Singh, S.; and Guestrin, C. 2016. ”Why Should I Trust You?” Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 1135–1144.

- Schut et al. (2021) Schut, L.; Key, O.; Mc Grath, R.; Costabello, L.; Sacaleanu, B.; Gal, Y.; et al. 2021. Generating Interpretable Counterfactual Explanations By Implicit Minimisation of Epistemic and Aleatoric Uncertainties. In International Conference on Artificial Intelligence and Statistics, 1756–1764. PMLR.

- Slack et al. (2021) Slack, D.; Hilgard, A.; Lakkaraju, H.; and Singh, S. 2021. Counterfactual Explanations Can Be Manipulated. Advances in Neural Information Processing Systems, 34.

- Spooner et al. (2021) Spooner, T.; Dervovic, D.; Long, J.; Shepard, J.; Chen, J.; and Magazzeni, D. 2021. Counterfactual explanations for arbitrary regression models. arXiv preprint arXiv:2106.15212.

- Stutz et al. (2021) Stutz, D.; Cemgil, A. T.; Doucet, A.; et al. 2021. Learning optimal conformal classifiers. arXiv preprint arXiv:2110.09192.

- Upadhyay, Joshi, and Lakkaraju (2021) Upadhyay, S.; Joshi, S.; and Lakkaraju, H. 2021. Towards robust and reliable algorithmic recourse. Advances in Neural Information Processing Systems, 34: 16926–16937.

- Ustun, Spangher, and Liu (2019) Ustun, B.; Spangher, A.; and Liu, Y. 2019. Actionable Recourse in Linear Classification. In Proceedings of the Conference on Fairness, Accountability, and Transparency, 10–19.

- Verma et al. (2020) Verma, S.; Boonsanong, V.; Hoang, M.; Hines, K. E.; Dickerson, J. P.; and Shah, C. 2020. Counterfactual explanations and algorithmic recourses for machine learning: A review. arXiv preprint arXiv:2010.10596.

- Wachter, Mittelstadt, and Russell (2017) Wachter, S.; Mittelstadt, B.; and Russell, C. 2017. Counterfactual Explanations without Opening the Black Box: Automated Decisions and the GDPR. Harv. JL & Tech., 31: 841.

- Wang, Simoncelli, and Bovik (2003) Wang, Z.; Simoncelli, E. P.; and Bovik, A. C. 2003. Multiscale structural similarity for image quality assessment. In The Thrity-Seventh Asilomar Conference on Signals, Systems & Computers, 2003, volume 2, 1398–1402. Ieee.

- Welling and Teh (2011) Welling, M.; and Teh, Y. W. 2011. Bayesian learning via stochastic gradient Langevin dynamics. In Proceedings of the 28th international conference on machine learning (ICML-11), 681–688. Citeseer.

- Wilson (2020) Wilson, A. G. 2020. The case for Bayesian deep learning. arXiv preprint arXiv:2001.10995.

- Xiao, Rasul, and Vollgraf (2017) Xiao, H.; Rasul, K.; and Vollgraf, R. 2017. Fashion-mnist: a novel image dataset for benchmarking machine learning algorithms. arXiv preprint arXiv:1708.07747.

Appendices

The following appendices provide additional details that are relevant to the paper. Appendices Energy-Based Modelling and Conformal Prediction explain any tasks related to Energy-Based Modelling and Predictive Uncertainty Quantification through Conformal Prediction, respectively. Appendix ECCCo provides additional technical and implementation details about our proposed generator, ECCCo, including references to our open-sourced code base. A complete overview of our experimental setup detailing our parameter choices, training procedures and initial black-box model performance can be found in Appendix Experimental Setup. Finally, Appendix Results reports all of our experimental results in more detail.

Energy-Based Modelling

Since we were not able to identify any existing open-source software for Energy-Based Modelling that would be flexible enough to cater to our needs, we have developed a Julia package from scratch. The package has been open-sourced, but to avoid compromising the double-blind review process, we refrain from providing more information at this stage. In our development we have heavily drawn on the existing literature: Du and Mordatch (2019) describe best practices for using EBM for generative modelling; Grathwohl et al. (2020) explain how EBM can be used to train classifiers jointly for the discriminative and generative tasks. We have used the same package for training and inference, but there are some important differences between the two cases that are worth highlighting here.

Training: Joint Energy Models

To train our Joint Energy Models we broadly follow the approach outlined in Grathwohl et al. (2020). Formally, JEMs are defined by the following joint distribution:

| (10) |

Training therefore involves a standard classification loss component (e.g. cross-entropy loss) as well as a generative loss component . Analogous to how we defined the conditional distribution over inputs in Definition 4.1, denotes the unconditional distribution over inputs. The model gradient of this component of the loss function can be expressed as follows:

| (11) |

To draw samples from , we rely exclusively on the conditional sampling approach described in Grathwohl et al. (2020) for both training and inference: we first draw and then sample (Grathwohl et al. 2020) via Equation 2 with energy where returns the linear predictions (logits) of our classifier . While our package also supports unconditional sampling, we found conditional sampling to work well. It is also well aligned with CE, since in this context we are interested in conditioning on the target class.

As mentioned in the body of the paper, we rely on a biased sampler involving separately specified values for the step size and the standard deviation of the stochastic term involving . Formally, our biased sampler performs updates as follows:

| (12) |

Consistent with Grathwohl et al. (2020), we have specified and as the default values for all of our experiments. Here we have deliberately departed slightly from the notation in Equation 2 to emphasize that we use fixed values for and , consistent with the related literature. The number of total SGLD steps varies by dataset (Table LABEL:tab:ebmparams). Following best practices, we initialize randomly in 5% of all cases and sample from a buffer in all other cases. The buffer itself is randomly initialised and gradually grows to a maximum of 10,000 samples during training as is stored in each epoch (Du and Mordatch 2019; Grathwohl et al. 2020).

It is important to realise that sampling is done during each training epoch, which makes training Joint Energy Models significantly harder than conventional neural classifiers. In each epoch the generated (batch of) sample(s) is used as part of the generative loss component, which compares its energy to that of observed samples :

| (13) |

Our full training objective can be summarized as follows,

| (14) |

where is a Ridge penalty (L2 norm) that regularises energy magnitudes for both observed and generated samples (Du and Mordatch 2019). We have used varying degrees of regularization depending on the dataset ( in Table LABEL:tab:ebmparams).

Contrary to existing work, we have not typically used the entire minibatch of training data for the generative loss component but found that using a subset of the minibatch was often sufficient in attaining decent generative performance (Table LABEL:tab:ebmparams). This has helped to reduce the computational burden for our models, which should make it easier for others to reproduce our findings. Figures 4 and 5 show generated samples for our MNIST and Moons data, to provide a sense of their generative property.

Inference: Quantifying Models’ Generative Property

At inference time, we assume no prior knowledge about the model’s generative property. This means that we do not tab into the existing buffer of generated samples for our Joint Energy Models, but instead generate conditional samples from scratch. While we have relied on the default values and also during inference, the number of total SGLD steps was set to in all cases, so significantly higher than during training. For all of our synthetic datasets and models, we generated 50 conditional samples and then formed subsets containing the lowest-energy samples. While in practice it would be sufficient to do this once for each model and dataset, we have chosen to perform sampling separately for each individual counterfactual in our experiments to account for stochasticity. To help reduce the computational burden for our real-world datasets we have generated only 10 conditional samples each time and used all of them in our counterfactual search. Using more samples, as we originally did, had no substantial impact on our results.

Conformal Prediction

In this Appendix Conformal Prediction we provide some more background on CP and explain in some more detail how we have used recent advances in Conformal Training for our purposes.

Background on CP

Intuitively, CP works under the premise of turning heuristic notions of uncertainty into rigorous uncertainty estimates by repeatedly sifting through the data. It can be used to generate prediction intervals for regression models and prediction sets for classification models. Since the literature on CE and AR is typically concerned with classification problems, we focus on the latter. A particular variant of CP called Split Conformal Prediction (SCP) is well-suited for our purposes, because it imposes only minimal restrictions on model training.

Specifically, SCP involves splitting the data into a proper training set and a calibration set . The former is used to train the classifier in any conventional fashion. The latter is then used to compute so-called nonconformity scores: where is referred to as score function. In the context of classification, a common choice for the score function is just , that is one minus the softmax output corresponding to the observed label (Angelopoulos and Bates 2021).

Finally, classification sets are formed as follows,

| (15) |

where denotes the -quantile of and is a predetermined error rate. As the size of the calibration set increases, the probability that the classification set for a newly arrived sample does not cover the true test label approaches (Angelopoulos and Bates 2021).

Observe from Equation 15 that Conformal Prediction works on an instance-level basis, much like CE are local. The prediction set for an individual instance depends only on the characteristics of that sample and the specified error rate. Intuitively, the set is more likely to include multiple labels for samples that are difficult to classify, so the set size is indicative of predictive uncertainty. To see why this effect is exacerbated by small choices for consider the case of , which requires that the true label is covered by the prediction set with probability equal to 1.

Differentiability

The fact that conformal classifiers produce set-valued predictions introduces a challenge: it is not immediately obvious how to use such classifiers in the context of gradient-based counterfactual search. Put differently, it is not clear how to use prediction sets in Equation 1. Fortunately, Stutz et al. (2021) have recently proposed a framework for Conformal Training that also hinges on differentiability. Specifically, they show how Stochastic Gradient Descent can be used to train classifiers not only for the discriminative task but also for additional objectives related to Conformal Prediction. One such objective is efficiency: for a given target error rate , the efficiency of a conformal classifier improves as its average prediction set size decreases. To this end, the authors introduce a smooth set size penalty defined in Equation 4 in the body of this paper. Formally, it is defined as for , where is the sigmoid function and is a hyper-parameter used for temperature scaling (Stutz et al. 2021).

In addition to the smooth set size penalty, Stutz et al. (2021) also propose a configurable classification loss function, that can be used to enforce coverage. For MNIST data, we found that using this function generally improved the visual quality of the generated counterfactuals, so we used it in our experiments involving real-world data. For the synthetic dataset, visual inspection of the counterfactuals showed that using the configurable loss function sometimes led to overshooting: counterfactuals would end up deep inside the target domain but far away from the observed samples. For this reason, we instead relied on standard cross-entropy loss for our synthetic datasets. As we have noted in the body of the paper, more experimental work is certainly needed in this context. Figure 6 shows the prediction set size (left), smooth set size loss (centre) and configurable classification loss (right) for a JEM trained on our Linearly Separable data.

ECCCo

In this section, we explain ECCCo in some more detail, briefly discuss convergence conditions for counterfactual explanations and provide details concerning the actual implementation of our framework in Julia.

Deriving the search objective

The counterfactual search objective for ECCCo was introduced in Equation 9 in the body of the paper. We restate this equation here for reference:

| (16) | ||||

We can make the connection to energy-based modeling more explicit by restating the counterfactual search objective in terms , which we defined in Equation 14. In particular, consider the following counterfactual search objective,

| (17) |

where we have simply used the JEM loss function as .

Now note that aside from the additional penalties in Equation 16, the only key difference between our counterfactual search objective and the joint-energy training objective is the parameter that is being optimized. In joint-energy training we optimize the objective with respect to the network weights . Recall that . Then the partial gradient with respect to the generative loss component of can be expressed as follows:

| (18) |

During the counterfactual search, we take the network parameters as fixed and instead optimize with respect to the counterfactual itself333Here we omit the notion of a latent search space to make the comparison easier.,

| (19) |

where the second term is equal to zero because is invariant with respect to . Since this term has zero gradients, we can remove it from the loss function altogether. For the regularization loss component of we can proceed analogously such that we can rewrite Equation 17 as follows:

| (20) | ||||

Now we notice that Equation 20 is equivalent to Equation 16 for . For the sake of simplicity, we omitted the regularization component from Equation 9 in the main text. Intuitively, taking iterative gradient steps according to Equation 19 has the effect of constraining the energy of the counterfactual until. The generative property of the underlying model implicitly enters this equation through .

The ECCCo algorithm

Algorithm 1 describes how exactly ECCCo works. For the sake of simplicity and without loss of generality, we limit our attention to generating a single counterfactual . The counterfactual state is initialized at the factual . Other forms of initialization are also suitable but not considered here. For example, one may choose at a small random perturbation to all features (Slack et al. 2021). Next, we calibrate the model through split conformal prediction. Finally, we search counterfactuals through gradient descent where denotes our loss function defined in Equation 9. The search terminates once the convergence criterium is met or the maximum number of iterations has been exhausted. Note that the choice of convergence criterium has important implications on the final counterfactual which we explain below.

The ECCCo+ algorithm

Algorithm 2 describes how exactly ECCCo+ works. The only difference to ECCCo is that we encode and decode features using PCA. In particular, we let denote the projection of to its first principal components. Conversely, maps back from the projection to the feature space.

The ECCCo-L1 algorithm

Algorithm 3 describes a variation of ECCCo that we initally considered but ultimately discarded. For the sake of completeness we have included this approach here in the appendix. It generally yields very faithful counterfactuals but it is computationally much more expensive and struggles with plausibility.

Instead of constraining energy directly, this approach works under the premise of penalizing the distance between the counterfactual and samples generated through SGLD. The counterfactual state is initialized as in Algorithm 1. Next, we generate conditional samples using SGLD (Equation 2) and store the instances with the lowest energy. We then calibrate the model through split conformal prediction. Finally, we search counterfactuals through gradient descent where denotes our loss function defined in Equation 9, but instead of constraining energy directly we use Equaqtion 6 (unfaithfulness metric) as a penalty.

A Note on Convergence

Convergence is not typically discussed much in the context of CE, even though it has important implications on outcomes. One intuitive way to specify convergence is in terms of threshold probabilities: once the predicted probability exceeds some user-defined threshold such that the counterfactual is valid, we could consider the search to have converged. In the binary case, for example, convergence could be defined as in this sense. Note, however, how this can be expected to yield counterfactuals in the proximity of the decision boundary, a region characterized by high aleatoric uncertainty. In other words, counterfactuals generated in this way would generally not be plausible. To avoid this from happening, we specify convergence in terms of gradients approaching zero for all our experiments and all of our generators. This is allows us to get a cleaner read on how the different counterfactual search objectives affect counterfactual outcomes.

ECCCo.jl

We have built a small Julia package, ECCCo.jl, that was used to perform the analysis in this paper: https://github.com/pat-alt/ECCCo.jl. The package will be absorbed by CounterfactualExplanations.jl in the future (Altmeyer, van Deursen et al. 2023).

Experimental Setup

In our experiments, we always generate multiple counterfactuals for each model and generator over multiple runs to account for stochasticity. For each generator, model and dataset, we sample a total of factuals for a single benchmark, where each time the factual and target class is drawn randomly. This process is repeated over 50 runs for the synthetic and tabular datasets, which yields a total of around 1.5 million counterfactuals per dataset for the final results. For vision datasets, we relied on 10 runs yielding a total of 300,000 counterfactuals. The final results presented in the paper and this appendix show the sample averages and the standard deviations of samples averages across all runs. For grid search, we used single runs for each parameter specification generating 100 counterfactuals per generator and model for synthetic and tabular datasets. For vision data, we generated 10 counterfactuals for each model, generator and parameter specification.

Table LABEL:tab:params provides an overview of all parameters related to our experiments. The GMSC data were randomly undersampled for balancing purposes and all features were standardized. MNIST data was also randomly undersampled for reasons outlined below. Pixel values were preprocessed to fall in the range of and a small Gaussian noise component () was added to training samples following common practice in the EBM literature. All of our models were trained through mini-batch training using the Adam optimiser (Kingma and Ba (2014)). Table LABEL:tab:perf shows standard evaluation metrics measuring the predictive performance of our different models grouped by dataset. These measures were computed on test data.

Table LABEL:tab:genparams summarises our hyperparameter choices for the counterfactual generators where denotes the learning rate used for Stochastic Gradient Descent (SGD) and , , represent the chosen penalty strengths (Equations 1 and 9). Here also refers to the chosen penalty for the distance from factual values that applies to both Wachter and REVISE, but not Schut which is penalty-free. Schut is also the only generator that uses JSMA instead of SGD for optimization.

Compute

Research reported in this work was partially or completely facilitated by computational resources and support of the DelftBlue (Delft High Performance Computing Centre DHPC) and the Delft AI Cluster (DAIC: https://doc.daic.tudelft.nl/) at TU Delft.

For grid search, we used 300 CPUs for tabular real-world datasets (1.5 hours each), 150 CPUs for synthetic datasets (1 hour each) and 100 CPUs for vision datasets (4 hours each), where we ran a smaller grid search for the latter. To generate the final results reported in the tables we used 300 CPUs for all datasets (1.5 hours each) except the vision datasets. For the latter, we used 50 CPUs for smaller final experiments and at longer run times (5 hours each).

Results

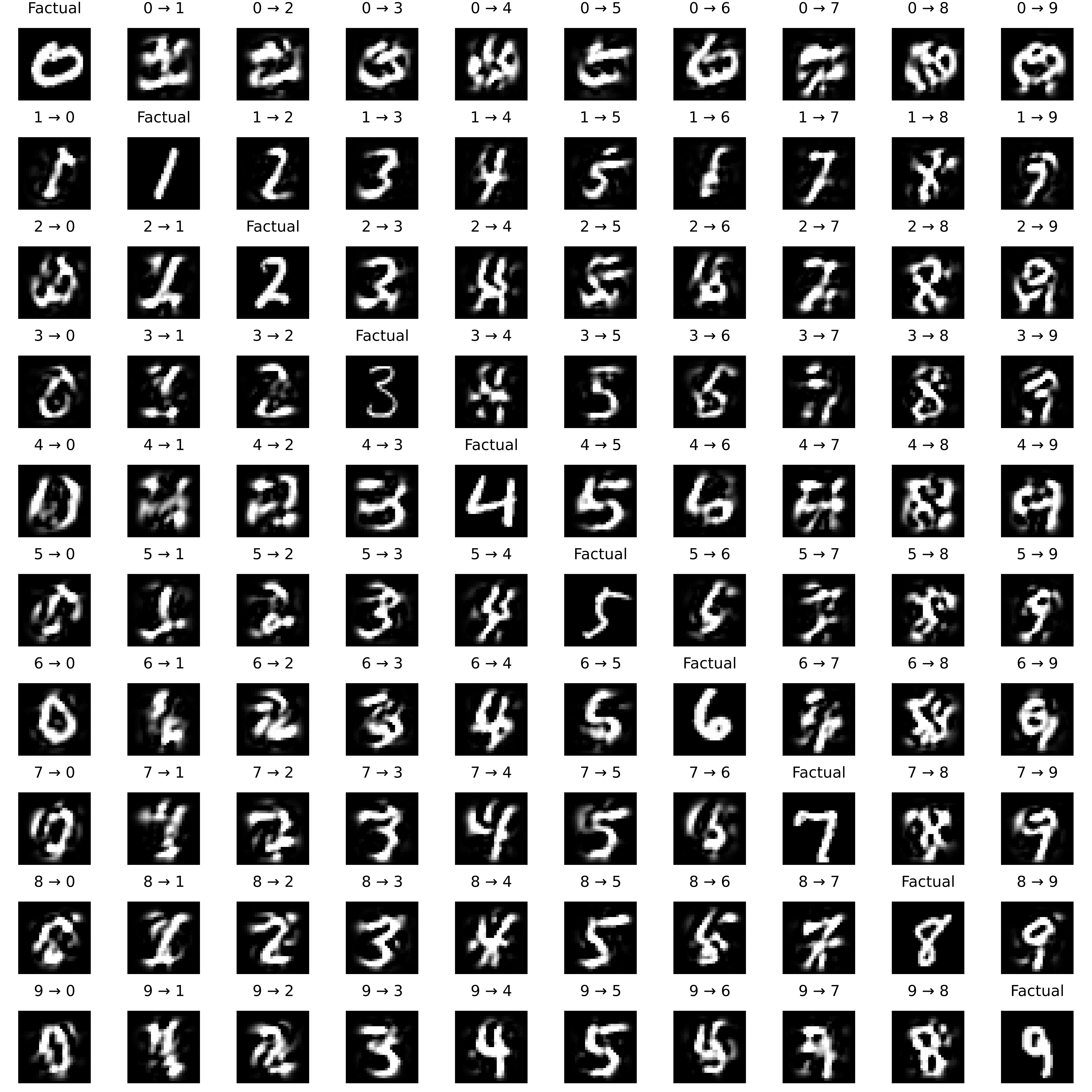

Figures 7 to 11 show examples of counterfactuals for MNIST generated by ECCCo+ for our different models. Original images are shown on the diagonal and the corresponding counterfactuals are plotted across rows. Figures 12 to 16 show the same examples but for REVISE. Both counterfactual generators have access to the same optimizer. While the results for REVISE look fairly poor here, we have observed better results for optizers with higher step sizes. Note that the seemingly poor performance by REVISE upon visual inspection is not driven by a weak surrogate VAE: Figure 17 shows image reconstructions generated by the VAE.

Tables LABEL:tab:results-linearly-separable to LABEL:tab:results-fashion-mnist reports all of the evaluation metrics we have computed. Tables LABEL:tab:results-linearly-separable-valid to LABEL:tab:results-fashion-mnist-valid reports the same metrics for the subset of valid counterfactuals. The ‘Unfaithfulness’ and ‘Implausibility’ metrics have been discussed extensively in the body of the paper. The ‘Cost’ metric relates to the distance between the factual and the counterfactual and is measured using the L1 Norm. The ‘Redundancy’ metric measures sparsity in is defined as the percentage of features that remain unperturbed (higher is better). The ‘Uncertainty’ metric is just the average value of the smooth set size penalty (Equation 4). Finally, ‘Validity’ is the percentage of valid counterfactuals.