Faithful and Consistent Graph Neural Network Explanations with Rationale Alignment

Abstract.

Uncovering rationales behind predictions of graph neural networks (GNNs) has received increasing attention over recent years. Instance-level GNN explanation aims to discover critical input elements, like nodes or edges, that the target GNN relies upon for making predictions. Though various algorithms are proposed, most of them formalize this task by searching the minimal subgraph which can preserve original predictions. However, an inductive bias is deep-rooted in this framework: several subgraphs can result in the same or similar outputs as the original graphs. Consequently, they have the danger of providing spurious explanations and failing to provide consistent explanations. Applying them to explain weakly-performed GNNs would further amplify these issues. To address this problem, we theoretically examine the predictions of GNNs from the causality perspective. Two typical reasons for spurious explanations are identified: confounding effect of latent variables like distribution shift, and causal factors distinct from the original input. Observing that both confounding effects and diverse causal rationales are encoded in internal representations, we propose a new explanation framework with an auxiliary alignment loss, which is theoretically proven to be optimizing a more faithful explanation objective intrinsically. Concretely for this alignment loss, a set of different perspectives are explored: anchor-based alignment, distributional alignment based on Gaussian mixture models, mutual-information-based alignment, etc. A comprehensive study is conducted both on the effectiveness of this new framework in terms of explanation faithfulness/consistency and on the advantages of these variants. For our codes, please refer to the following URL link: https://github.com/TianxiangZhao/GraphNNExplanation.

Graph-structured data is ubiquitous in the real world, such as social networks (Fan2019GraphNN, ; zhao2020semi, ; xu2018enhancing, ), molecular structures (Mansimov2019MolecularGP, ; Chereda2019UtilizingMN, ) and knowledge graphs (Sorokin2018ModelingSW, ; liu2022federated, ). With the growing interest in learning from graphs, graph neural networks (GNNs) are receiving more and more attention over the years. Generally, GNNs adopt message-passing mechanisms, which recursively propagate and fuse messages from neighbor nodes on the graphs. Hence, the learned node representation captures both node attributes and neighborhood information, which facilitates various downstream tasks such as node classification (kipf2016semi, ; velivckovic2017graph, ; hamilton2017inductive, ; ren2017robust, ), graph classification (xu2018powerful, ), and link prediction (zhang2018link, ; lu2022graph, ; ren2018tracking, ).

Despite the success of GNNs for various domains, as with other neural networks, GNNs lack interpretability. Understanding the inner working of GNNs can bring several benefits. First, it enhances practitioners’ trust in the GNN model by enriching their understanding of the model characteristics such as if the model is working as desired. Second, it increases the models’ transparency to enable trusted applications in decision-critical fields sensitive to fairness, privacy and safety challenges (rao2021quantitative, ; ren2022mitigating, ; ren2021cross, ). Thus, studying the explainability of GNNs is attracting increasing attention and many efforts have been taken (ying2019gnnexplainer, ; luo2020parameterized, ; zhao2022consistency, ).

Particularly, we focus on post-hoc instance-level explanations. Given a trained GNN and an input graph, this task seeks to discover the substructures that can explain the prediction behavior of the GNN model. Some solutions have been proposed in existing works (ying2019gnnexplainer, ; huang2020graphlime, ; vu2020pgm, ). For example, in search of important substructures that predictions rely upon, GNNExplainer learns an importance matrix on node attributes and edges via perturbation (ying2019gnnexplainer, ). The identified minimal substructures that preserve original predictions are taken as the explanation. Extending this idea, PGExplainer trains a graph generator to utilize global information in explanation and enable faster inference in the inductive setting (luo2020parameterized, ). SubgraphX constraints explanations as connected subgraphs and conduct Monte Carlo tree search based on Shapley value (yuan2021explainability, ). These methods can be summarized in a label preserving framework, i.e., the candidate explanation is formed as a masked version of the original graph and is identified as the minimal discriminative substructure.

However, due to the complexity of topology and the combinatory number of candidate substructures, existing label preserving methods are insufficient for a faithful and consistent explanation of GNNs. They are unstable and are prone to give spurious correlations as explanations. A failure case is shown in Figure 1, where a GNN is trained on Graph-SST5 (yuan2020explainability, ) for sentiment classification. Each node represents a word and each edge denotes syntactic dependency between nodes. Each graph is labeled based on the sentiment of the sentence. In the figure, the sentence “Sweet home alabama isn’t going to win any academy awards, but this date-night diversion will definitely win some hearts” is labeled positive. In the first run, GNNExplainer (ying2019gnnexplainer, ) identifies the explanation as “definitely win some hearts”, and in the second run, it identifies “win academy awards” to be the explanation instead. Different explanations obtained by GNNExplainer break the criteria of consistency, i.e., the explanation method should be deterministic and consistent with the same input for different runs (nauta2022anecdotal, ). Consequently, explanations provided by existing methods may fail to faithfully reflect the decision mechanism of the to-be-explained GNN.

Inspecting the inference process of target GNNs, we find that the inconsistency problem and spurious explanations can be understood from the causality perspective. Specifically, existing explanation methods may lead to spurious explanations either as a result of different causal factors or due to the confounding effect of distribution shifts (identified subgraphs may be out of distribution). These failure cases originate from a particular inductive bias that predicted labels are sufficiently indicative for extracting critical input components. This underlying assumption is rooted in optimization objectives adopted by existing works (ying2019gnnexplainer, ; luo2020parameterized, ; yuan2021explainability, ). However, our analysis demonstrates that the label information is insufficient to filter out spurious explanations, leading to inconsistent and unfaithful explanations.

Considering the inference of GNNs, both confounding effects and distinct causal relationships can be reflected in the internal representation space. With this observation, we propose a novel objective that encourages alignment of embeddings of raw graph and identified subgraph in internal embedding space to obtain more faithful and consistent GNN explanations. Specifically, to evaluate the semantic similarity between two inputs and incorporate the alignment objective into explanation, we design and compare strategies with various design choices to measure similarity in the embedding space. These strategies enable the alignment between candidate explanations and original inputs and are flexible to be incorporated into various existing GNN explanation methods. Particularly, aside from directly using Euclidean distance, we further propose three distribution-aware strategies. The first one identifies a set of anchoring embeddings and utilizes relative distances against them. The second one assumes a Gaussian mixture model and captures the distribution using the probability of falling into each Gaussian center. The third one learns a deep neural network to estimate mutual information between two inputs, which takes a data-driven approach with little reliance upon prior domain knowledge. Further analysis shows that the proposed method is in fact optimizing a new explanation framework, which is more faithful in design. Our main contributions are:

-

•

We point out the faithfulness and consistency issues in rationales identified by existing GNN explanation models. These issues arise due to the inductive bias in their label-preserving framework, which only uses predictions as the guiding information;

-

•

We propose an effective and easy-to-apply countermeasure by aligning intermediate embeddings. We implement a set of variants with different alignment strategies, which is flexible to be incorporated to various GNN explanation models. We further conduct a theoretical analysis to understand and validate the proposed framework.

-

•

Extensive experiments on real-world and synthetic datasets show that our framework benefits various GNN explanation models to achieve more faithful and consistent explanations.

1. Related Work

In this section, we review related works, including graph neural networks and interpretability of GNNs.

1.1. Graph Neural Networks

Graph neural networks (GNNs) are developing rapidly in recent years, with the increasing need for learning on relational data structures (Fan2019GraphNN, ; dai2022towards, ; zhao2021graphsmote, ; zhou2022graph, ; zhao2023skill, ). Generally, existing GNNs can be categorized into two categories, i.e., spectral-based approaches (bruna2013spectral, ; liang2023abslearn, ; liang2022reasoning, ) based on graph signal processing theory, and spatial-based approaches (duvenaud2015convolutional, ; atwood2016diffusion, ; xiao2021learning, ) relying upon neighborhood aggregation. Despite their differences, most GNN variants can be summarized with the message-passing framework, which is composed of pattern extraction and interaction modeling within each layer (gilmer2017neural, ). Specifically, GNNs model messages from node representations. These messages are then propagated with various message-passing mechanisms to refine node representations, which are then utilized for downstream tasks (hamilton2017inductive, ; zhang2018link, ; zhao2021graphsmote, ; zhao2022topoimb, ). Explorations are made by disentangling the propagation process (wang2020disentangled, ; zhao2022exploring, ; xiao2022decoupled, ) or utilizing external prototypes (lin2022prototypical, ; xu2022hp, ). Research has also been conducted on the expressive power (balcilar2021breaking, ; sato2020survey, ) and potential biases introduced by different kernels (nt2019revisiting, ; balcilar2021analyzing, ) for the design of more effective GNNs. Despite their success in network representation learning, GNNs are uninterpretable black box models. It is challenging to understand their behaviors even if the adopted message passing mechanism and parameters are given. Besides, unlike traditional deep neural networks where instances are identically and independently distributed, GNNs consider node features and graph topology jointly, making the interpretability problem more challenging to handle.

1.2. GNN Interpretation Methods

Recently, some efforts have been taken to interpret GNN models and provide explanations for their predictions (wang2020causal, ). Based on the granularity, existing methods can be generally grouped into two categories: (1) instance-level explanation (ying2019gnnexplainer, ), which provides explanations on the prediction for each instance by identifying important substructures; and (2) model-level explanation (baldassarre2019explainability, ; zhang2021protgnn, ), which aims to understand global decision rules captured by the target GNN. From the methodology perspective, existing methods can be categorized as (1) self-explainable GNNs (zhang2021protgnn, ; dai2021towards, ), where the GNN can simultaneously give prediction and explanations on the prediction; and (2) post-hoc explanations (ying2019gnnexplainer, ; luo2020parameterized, ; yuan2021explainability, ), which adopt another model or strategy to provide explanations of a target GNN. As post-hoc explanations are model-agnostic, i.e., can be applied for various GNNs, in this work, we focus on post-hoc instance-level explanations (ying2019gnnexplainer, ), i.e., given a trained GNN model, identifying instance-wise critical substructures for each input to explain the prediction. A comprehensive survey can be found in (dai2022comprehensive, ).

A variety of strategies for post-hoc instance-level explanations have been explored in the literature, including utilizing signals from gradients based (pope2019explainability, ; baldassarre2019explainability, ), perturbed predictions based (ying2019gnnexplainer, ; luo2020parameterized, ; yuan2021explainability, ; shan2021reinforcement, ), and decomposition based (baldassarre2019explainability, ; schnake2020higher, ). Among these methods, perturbed prediction-based methods are the most popular. The basic idea is to learn a perturbation mask that filters out non-important connections and identifies dominating substructures preserving the original predictions (yuan2020explainability, ). The identified important substructure is used as an explanation for the prediction. For example, GNNExplainer (ying2019gnnexplainer, ) employs two soft mask matrices on node attributes and graph structure, respectively, which are learned end-to-end under the maximizing mutual information (MMI) framework. PGExplainer (luo2020parameterized, ) extends it by incorporating a graph generator to utilize global information. It can be applied in the inductive setting and prevent the onerous task of re-learning from scratch for each to-be-explained instance. SubgraphX (yuan2021explainability, ) expects explanations to be in the form of sub-graphs instead of bag-of-edges and employs Monte Carlo Tree Search (MCTS) to find connected subgraphs that preserve predictions measured by the Shapley value. To promote faithfulness in identified explanations, some works introduced terminologies from the causality analysis domain, via estimating the individual causal effect of each edge (lin2021generative, ) or designing interventions to prevent the discovery of spurious correlations (wu2022discovering, ). Ref. (yu2020graph, ) connects the idea of identifying minimally-predictive parts in explanation with the principle of information bottleneck (tishby2015deep, ) and designs an end-to-end optimization framework for GNN explanation.

Despite the aforementioned progress in interpreting GNNs, most of these methods discover critical substructures merely upon the change of outputs given perturbed inputs. Due to this underlying inductive bias, existing label-preserving methods are heavily affected by spurious correlations caused by confounding factors in the environment. On the other hand, by aligning intermediate embeddings in GNNs, our method alleviates the effects of spurious correlations on interpreting GNNs, leading to faithful and consistent explanations.

1.3. Graph Contrastive Learning

In recent years, contrastive learning (CL) has garnered significant attention as it mitigates the need for manual annotations via unsupervised pretext tasks (LKhc2020ContrastiveRL, ; Khosla2020SupervisedCL, ; chen2020simple, ). In a typical CL framework, the model is trained in a pairwise manner, promoting attraction between positive sample pairs and repulsion between negative sample pairs (Graf2021DissectingSC, ; Wang2020UnderstandingCR, ).

Contrastive Learning (CL) techniques have recently been extended to the graph domain, constructing multiple graph views and maximizing mutual information (MI) among semantically similar instances (chen2020simple, ; feng2022adversarial, ; xia2022hypergraph, ; zhu2021empirical, ; wang2022explanation, ; liang2023knowledge, ). Existing methods primarily vary on graph augmentation techniques and contrastive pretext tasks. Graph augmentations commonly involve node-level attribute masking or perturbation (Hu2019StrategiesFP, ; Opolka2019SpatioTemporalDG, ; Velickovic2018DeepGI, ), edge dropping or rewiring (Zhu2020SelfsupervisedTO, ; You2020GraphCL, ), and graph diffusions(Hassani2020ContrastiveMR, ; Jin2021MultiScaleCS, ). Contrastive pretext tasks can be categorized into two branches, same-scale contrasts between embeddings of node (graph) pairs (Zhu2020DeepGC, ; Hassani2020ContrastiveMR, ; Zeng2022ImGCLRG, ) and cross-scale contrasts between global graph embeddings and local node representations (Velickovic2018DeepGI, ; Opolka2019SpatioTemporalDG, ). Recent works have also explored dynamic contrastive objectives (You2021GraphCL, ; Yin2021AutoGCLAG, ) via learning the augmentation strategies adaptively.

2. Preliminary

2.1. Problem Definition

We use to denote a graph, where is a set of nodes and is the set of edges. Nodes are accompanied by an attribute matrix , and is the -dimensional node attributes of node . is described by an adjacency matrix . if there is an edge between node and ; otherwise, . For graph classification, each graph has a label , and a GNN model is trained to map to its class, i.e., . Similarly, for node classification, each graph denotes a -hop subgraph centered at node and a GNN model is trained to predict the label of based on node representation of learned from . The purpose of explanation is to find a subgraph , marked with binary importance mask on adjacency matrix and on node attributes, respectively, e.g., , where denotes elementwise multiplication. These two masks highlight components of that are important for to predict its label. With the notations, the post-hoc instance-level GNN explanation task is:

Given a trained GNN model , for an arbitrary input graph , find a subgraph that can explain the prediction of on . The obtained explanation is depicted by importance mask on node attributes and importance mask on adjacency matrix.

2.2. MMI-based Explanation Framework

Many approaches have been designed for post-hoc instance-level GNN explanation. Due to the discreteness of edge existence and non-grid graph structures, most works apply a perturbation-based strategy to search for explanations. Generally, they can be summarized as Maximization of Mutual Information (MMI) between predicted label and explanation , i.e.,

| (1) | ||||

where represents mutual information and denotes the perturbations on original input with importance masks . For example, let represent the perturbed . Then, and in GNNExplainer (ying2019gnnexplainer, ), where is sampled from marginal distribution of node attributes . denotes regularization terms on the explanation, imposing prior knowledge into the searching process, like constraints on budgets or connectivity distributions (luo2020parameterized, ). Mutual information quantifies consistency between original predictions and prediction of candidate explanation , which promotes the positiveness of found explanation . Since mutual information measures the predictive power of on , this framework essentially tries to find a subgraph that can best predict the original output . During training, a relaxed version (ying2019gnnexplainer, ) is often adopted as:

| (2) | ||||

where denotes cross-entropy. With this same objective, existing methods mainly differ from each other in optimization and searching strategies.

Different aspects regarding the quality of explanations can be evaluated (nauta2022anecdotal, ). Among them, two most important criteria are faithfulness and consistency. Faithfulness measures the descriptive accuracy of explanations, indicating how truthful they are compared to behaviors of the target model. Consistency considers explanation invariance, which checks that identical input should have identical explanations. However, as shown in Figure 1, the existing MMI-based framework is sub-optimal in terms of these criteria. The cause of this problem is rooted in its learning objective, which uses prediction alone as guidance in search of explanations. Due to the complex graph structure, the prediction alone as a guide could result in spurious explanations. A detailed analysis will be provided in the next section.

3. Analyze Spurious Explanations

With “spurious explanations”, we refer to those explanations lie outside the genuine rationale of prediction on , making the usage of as explanations anecdotal. As examples in Figure 1, despite rapid developments in explaining GNNs, the problem w.r.t faithfulness and consistency of detected explanations remains. To get a deeper understanding of reasons behind this problem, we will examine the behavior of target GNN model from the causality perspective. Figure 2(a) shows the Structural Equation Model (SEM), where variable denotes discriminative causal factors and variable represents confounding environment factors. Two paths between and the predicted label can be found.

-

•

: This path presents the inference of target GNN model, i.e., critical patterns that are informative and discriminative for the prediction would be extracted from input graph, upon which the target model is dependent. Causal variables are determined by both the input graph and learned knowledge by the target GNN model.

-

•

: We denote as the confounding factors, such as depicting the overall distribution of graphs. It is causally related to both the appearance of input graphs and the prediction of target GNN models. A masked version of could create out-of-distribution (OOD) examples, resulting in spurious causality to prediction outputs. For example in the chemical domain, removing edges (bonds) or nodes (atoms) may obtain invalid molecular graphs that never appear during training. In the existence of distribution shifts, model predictions would be less reliable.

Figure 2(a) provides us with a tool to analyze ’s behaviors. From the causal structures, we can observe that spurious explanations may arise as a result of failure in recovering the original causal rationale. learned from Equation 1 may preserve prediction due to confounding effect of distribution shift or different causal variables compared to original . Weakly-trained GNN that are unstable or non-robust towards noises would further amplify this problem as the prediction is unreliable.

To further understand the issue, we build the correspondence from SEM in Figure 2(a) to the inference process of GNN . Specifically, we first decompose as a feature extractor and a classifier . Then, its inference can be summarized as two steps: (1) encoding step with , which takes as input and produce its embedding in the representation space ; (2) classification step with , which predicts output labels on input’s embedding. Connecting these inference steps to SEM in Figure 2(a), we can find that:

-

•

The causal path lies behind the inference process with representation space to encode critical variables ;

-

•

The confounding effect of distribution shift works on the inference process via influencing distribution of graph embedding in . When masked input is OOD, its embedding would fail to reflect its discriminative features and deviate from real distributions, hence deviating the classification step on it.

To summarize, we can observe that spurious explanations are usually obtained due to the following two reasons:

-

(1)

The obtained is OOD graph. During inference of target GNN model, the encoded representation of is distant from those seen in the training set, making the prediction unreliable;

-

(2)

The encoded discriminative representation does not accord with that of the original graph. Different causal factors () are extracted between and , resulting in false explanations.

4. Methodology

Based on the discussion above, in this section, we focus on improving the faithfulness and consistency of GNN explanations and correcting the inductive bias caused by simply relying on prediction outputs. We first provide an intuitive introduction to the proposed countermeasure, which takes the internal inference process into account. We then design four concrete algorithms to align and in the latent space, to promote that they are seen and processed in the same manner. Finally, theoretical analysis is provided to justify our strategies.

4.1. Alleviate Spurious Explanations

Instance-level post-hoc explanation dedicates to finding discriminative substructures that the target model depends upon. The traditional objective in Equation 2 can identify minimal predictive parts of input, however, it is dangerous to directly take them as explanations. Due to diversity in graph topology and combinatory nature of sub-graphs, multiple distinct substructures could be identified leading to the same prediction, as discussed in Section 3.

For an explanation substructure to be faithful, it should follow the same rationale as the original graph inside the internal inference of to-be-explained model . To achieve this goal, the explanation should be aligned to w.r.t the decision mechanism, reflected in Figure 2(a). However, it is non-trivial to extract and compare the critical causal variables and confounding variables due to the black box nature of the target GNN model to be explained.

Following the causal analysis in Section 3, we propose to take an alternative approach by looking into internal embeddings learned by . Causal variables are encoded in representation space extracted by , and out-of-distribution effects can also be reflected by analyzing embedding distributions. An assumption can be safely made: if two graphs are mapped to embeddings near each other by a GNN layer, then these graphs are seen as similar by it and would be processed similarly by following layers. With this assumption, a proxy task can be designed by aligning internal graph embeddings between and . This new task can be incorporated into Framework 1 as an auxiliary optimization objective.

Let be the representation of node at the -th GNN layer with . Generally, the inference process inside GNN layers can be summarized as a message-passing framework:

| (3) | ||||

where and are the message function and update function at -th layer, respectively. is the set of node ’s neighbors. Without loss of generality, the graph pooling layer can also be presented as:

| (4) |

where denotes mapping weight from node in layer to node in layer inside the myriad of GNN for graph classification. We propose to align embedding at each layer, which contains both node and local neighborhood information.

4.2. Distribution-Aware Alignment

Achieving alignment in the embedding space is not straightforward. It has several distinct difficulties. (1) It is difficult to evaluate the distance between and in this embedding space. Different dimensions could encode different features and carry different importance. Furthermore, is a substructure of the original , and a shift on unimportant dimensions would naturally exist. (2) Due to the complexity of graph/node distributions, it is non-trivial to design a measurement of alignments that is both computation-friendly and can correlate well to distance on the distribution manifold.

To address these challenges, we design a strategy to identify explanatory substructures and preserve their alignment with original graphs in a distribution-aware manner. The basic idea is to utilize other graphs to obtain a global view of the distribution density of embeddings, providing a better measurement of alignment. Concretely, we obtain representative node/graph embeddings as anchors and use distances to these anchors as the distribution-wise representation of graphs. Alignment is conducted on obtained representation of graph pairs. Next, we go into details of this strategy.

-

•

First, using graphs from the same dataset, a set of node embeddings can be obtained as for each layer , where denotes embedding of node in graph . For node-level tasks, we set to contain only the center node of graph . For graph-level tasks, contains nodes set after graph pooling layer, and we process them following to get global graph representation.

-

•

Then, a clustering algorithm is applied to the obtained embedding set to get groups. Clustering centers of these groups are set to be anchors, annotated as . In experiments, we select DBSCAN (ester1996density, ) as the clustering algorithm, and tune its hyper-parameters to get around groups.

-

•

At -th layer, is represented in terms of relative distances to those anchors, as with the -th element calculated as .

Alignment between and can be achieved by comparing their representations at each layer. The alignment loss is computed as:

| (5) |

This metric provides a lightweight strategy for evaluating alignments in the embedding distribution manifold, by comparing relative positions w.r.t representative clustering centers. This strategy can naturally encode the varying importance of each dimension. Fig. 2(b) gives an example, where is the graph to be explained and the red stars are anchors. and are both similar to w.r.t absolute distances; while it is easy to see is more similar to w.r.t to the anchors. In other words, the anchors can better measure the alignment to filter out spurious explanations.

This alignment loss is used as an auxiliary task incorporated into MMI-based framework in Equation 2 to get faithful explanation as:

| (6) | ||||

where controls the balance between prediction preservation and embedding alignment. is flexible to be incorporated into various existing explanation methods.

4.3. Direct Alignment

As a simpler and more direct implementation, we also design a variant based on absolute distance. For layers without graph pooling, the objective can be written as . For layers with graph pooling, as the structure could be different, we conduct alignment on global representation , where denotes node set after pooling.

5. Extended Methodology

In this section, we further examine more design choices for the strategy of alignment to obtain faithful and consistent explanations. Instead of using heuristic approaches, we explore two new directions: (1) statistically sound distance measurements based on the Gaussian mixture model, (2) fully utilizing the power of deep neural networks to capture distributions in the latent embedding space. Details of these two alignment strategies will be introduced below.

5.1. Gaussian-Mixture-based Alignment

In this strategy, we model the latent embeddings of nodes (or graphs) using a mixture of Gaussian distributions, with representative node/graph embeddings as anchors (Gaussian centers). The produced embedding of each input can be compared with those prototypical anchors, and the semantic information of inputs taken by the target model would be encoded by relative distances from them.

Concretely, we first obtain prototypical representations, annotated as , by running the clustering algorithm on collected embeddings from graphs , in the same strategy as introduced in Sec. 4.2. Clutering algorithm DBSCAN (ester1996density, ) is adopted and we tune its hyper-parameters to get around groups.

Next, the probability of encoded representation falling into each prototypical Gaussian centers can be computed as:

| (7) |

This distribution probability can serve as a natural tool for depicting the semantics of the input graph learned by the GNN model. Consequently, the distance between and can be directly measured as the KL-divergence w.r.t this distribution probability:

| (8) |

where denotes the distribution probability of candidate explanation embedding, . Using this strategy, the alignment loss between original graph and the candidate explanation is computed as:

| (9) |

which can be incorporated into the revised explanation framework proposed in Eq. 6.

Comparison Compared with the alignment loss based on relative distances against anchors in Eq. 5, this new objective offers a better strategy in taking distribution into consideration. Specifically, we can show the following two advantages:

-

•

In obtaining the distribution-aware representation of each instance, this variant uses a Gaussian distance kernel (Eq. 7) while the other one uses Euclidean distance in Sec. 4.2, which may amplify the influence of distant anchors. We can prove this by examining the gradient of changes in representation w.r.t GNN embeddings . In the -th layer at dimension , the gradient of the previous variant can be computed as:

(10) On the other hand, the gradient of this variant is:

(11) It is easy to see that for the previous variant, the magnitude of gradient would grow linearly w.r.t distances towards corresponding anchors. For this variant, on the other hand, the term would down-weight the importance of those distant anchors while up-weight the importance of similar anchors, which is more desired in obtaining distribution-aware representations.

-

•

In computing the distance between representations of two inputs, this variant adopts the KL divergence as in Eq. 8, which would be scale-agnostic compared to the other one directly using Euclidean distance as in Eq. 5. Again, we can show the gradient of alignment loss towards obtained embeddings that encode distribution information. It can be observed that for the previous variant:

(12) For this variant based on Gaussian mixture model, the gradient can be computed as:

(13) It can be observed that the previous strategy focuses on representation dimensions with a large absolute difference, while would be sensitive towards the scale of each dimension. On the other hand, this strategy uses the summation between the logarithm of relative difference with a constant, which is scale-agnostic towards each dimension.

5.2. MI-based Alignment

In this strategy, we further consider the utilization of deep models to capture the distribution and estimate the semantic similarity of two inputs, and incorporate it into the alignment loss for discovering faithful and consistent explanations. Specifically, we train a deep model to estimate the mutual information (MI) between two input graphs, and use its prediction as a measurement of alignment between original graph and its candidate explanation. This strategy circumvents the reliance on heuristic strategies and is purely data-driven, which can be learned in an end-to-end manner.

To learn the mutual information estimator, we adopt a neural network and train it to be a Jensen-Shannon MI estimator (hjelm2018learning, ). Concretely, we train this JSD-based estimator on top of intermediate embeddings with the learning objective as follows, which offers better stability in optimization:

| (14) | ||||

where denotes expectation. In this equation, is a compatibility estimation function in the -th layer, and we denote as the MI estimator . Activation function is the softplus function, and represents the embedding of augmented node that is a positive pair of in the original graph. On the contrary, denotes the embedding of augmented node that is a negative pair of original input. A positive pair is obtained through randomly dropping intermediate neurons, corresponding to masking out a ratio of original input, and a negative pair is obtained as embeddings of different nodes. This objective can guide to capture the correlation or similarity between two input graphs encoded by the target model. Note that to further improve the learning of MI estimator, more advanced graph augmentation techniques can be incorporated for obtaining positive/negative pairs, following recent progresses in graph contrastive learning (feng2022adversarial, ; xia2022hypergraph, ). In this work, we stick to the strategy adopted in (hjelm2018learning, ) for its popularity, and leave that for future explorations. With this MI estimator learned, alignment loss between and can be readily computed:

| (15) |

which can be incorporated into the revised explanation framework proposed in Eq. 6.

In this strategy, we design a data-driven approach by capturing the mutual information between two inputs, which circumvents the potential biases of using human-crafted heuristics.

6. Theoretical Analysis

With those alignment strategies and our new explanation framework introduced, next, we want to look deeper and provide theoretical justifications for the proposed new loss function in Eq. 6. In this section, we first propose a new explanation objective to prevent spurious explanations based Sec. 3. Then, we theoretically show that it squares with our loss function with mild relaxations.

6.1. New Explanation Objective

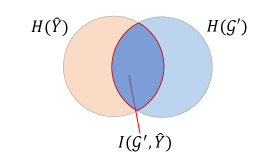

From previous discussions, it is shown that obtained via Equation 1 cannot be safely used as explanations. One main drawback of existing GNN explanation methods lies in the inductive bias that the same outcomes do not guarantee the same causes, leaving existing approaches vulnerable towards spurious explanations. An illustration is given in Figure 3. Objective proposed in Equation 1 optimizes the mutual information between explanation candidate and , corresponding to maximize the overlapping between and in Figure 3(a), or region in Figure 3(b). Here, denotes information entropy. However, this learning target cannot prevent the danger of generating spurious explanations. Provided may fall into the region , which cannot faithfully represent graph . Instead, a more sensible objective should be maximizing region in Figure 3(b). The intuition behind this is that in the search input space that causes the same outcome, identified should account for both representative and discriminative parts of original , to prevent spurious explanations that produce the same outcomes due to different causes. Concretely, finding that maximize can be formalized as:

| (16) |

6.2. Connecting to Our Method

is intractable as the latent generation mechanism of is unknown. In this part, we expand this objective, connect it to Equation 6, and construct its proxy optimizable form as:

Since both and depicts entropy of explanation and are closely related to perturbation budgets, we can neglect these two terms and get a surrogate optimization objective for as .

In , the first term is the same as Eq.(1). Following (ying2019gnnexplainer, ), We relax it as , optimizing to preserve original prediction outputs. The second term, , corresponds to maximizing consistency between and . Although the graph generation process is latent, with the safe assumption that embedding extracted by is representative of , we can construct a proxy objective , improving the consistency in the embedding space. In this work, we optimize this objective by aligning their representations, either optimizing a simplified distance metric or conducting distribution-aware alignment.

7. Experiment

In this section, we conduct a set of experiments to evaluate the benefits of the proposed auxiliary task in providing instance-level post-hoc explanations. Experiments are conducted on datasets, and obtained explanations are evaluated with respect to both faithfulness and consistency. Particularly, we aim to answer the following questions:

-

•

RQ1 Can the proposed framework perform strongly in identifying explanatory sub-structures for interpreting GNNs?

-

•

RQ2 Is the consistency problem severe in existing GNN explanation methods? Could the proposed embedding alignment improve GNN explainers over this criterion?

-

•

RQ3 Can our proposed strategies prevent spurious explanations and be more faithful to the target GNN model?

7.1. Experiment Settings

7.1.1. Datasets

We conduct experiments on five publicly available benchmark datasets for explainability of GNNs. The key statistics of the datasets are summarized in Table 1.

-

•

BA-Shapes(ying2019gnnexplainer, ): A node classification dataset with a Barabasi-Albert (BA) graph of nodes as the base structure. “house” motifs are randomly attached to the base graph. Nodes in the base graph are labeled as and those in the motifs are labeled based on positions. Explanations are conducted on those attached nodes, with edges inside the corresponding motif as ground-truth.

-

•

Tree-Grid (ying2019gnnexplainer, ): A node classification dataset created by attaching grid motifs to a single -layer balanced binary tree. Nodes in the base graph are labeled as and those in the motifs are labeled as . Edges inside the same motif are used as ground-truth explanations for nodes from class .

-

•

Infection (faber2021comparing, ): A single network initialized with an ER random graph. of nodes are labeled as infected, and other nodes are labeled based on their shortest distances to those infected ones. Labels larger than are clipped. Following (faber2021comparing, ), infected nodes and nodes with multiple shortest paths are neglected. For each node, its shortest path is used as the ground-truth explanation.

-

•

Mutag (ying2019gnnexplainer, ): A graph classification dataset. Each graph corresponds to a molecule with nodes for atoms and edges for chemical bonds. Molecules are labeled with consideration of their chemical properties, and discriminative chemical groups are identified using prior domain knowledge. Following PGExplainer (luo2020parameterized, ), chemical groups and are used as ground-truth explanations.

-

•

Graph-SST5 (yuan2020explainability, ): A graph classification dataset constructed from text, with labels from sentiment analysis. Each node represents a word and edges denote word dependencies. In this dataset, there is no ground-truth explanation provided, and heuristic metrics are usually adopted for evaluation.

| BA-Shapes | Tree-Grid | Infection | Mutag | SST-5 | |

|---|---|---|---|---|---|

| Level | Node | Node | Node | Graph | Graph |

| Graphs | |||||

| Avg.Nodes | |||||

| Avg.Edges | |||||

| Classes |

7.1.2. Baselines

To evaluate the effectiveness of the proposed framework, we select a group of representative and state-of-the-art instance-level post-hoc GNN explanation methods as baselines. The details are given as follows:

-

•

GRAD (luo2020parameterized, ): A gradient-based method, which assigns importance weights to edges by computing gradients of GNN’s prediction w.r.t the adjacency matrix.

-

•

ATT (luo2020parameterized, ): It utilizes average attention weights inside self-attention layers to distinguish important edges.

-

•

GNNExplainer (ying2019gnnexplainer, ): A perturbation-based method which learns an importance matrix separately for every instance.

-

•

PGExplaienr (luo2020parameterized, ): A parameterized explainer that learns a GNN to predict important edges for each graph, and is trained via testing different perturbations;

-

•

Gem (lin2021generative, ): Similar to PGExplainer but from the causal view, based on the estimated individual causal effect.

-

•

RG-Explainer (shan2021reinforcement, ): A reinforcement learning (RL) enhanced explainer for GNN, which constructs by sequentially adding nodes with an RL agent.

Our proposed algorithms in Section 4.2 are implemented and incorporated into two representative GNN explanation frameworks, i.e., GNNExplainer (ying2019gnnexplainer, ) and PGExplainer (luo2020parameterized, ).

7.1.3. Configurations

Following existing work (luo2020parameterized, ), a three-layer GCN (kipf2016semi, ) is trained on each dataset as the target model, with the train/validation/test data split as 8:1:1. The latent dimension is set to across all datasets, and we use Relu as activation function. For graph classification, we concatenate the outputs of global max pooling and global mean pooling as the graph representation. All explainers are trained using ADAM optimizer with weight decay set to -. For GNNExplainer, learning rate is initialized to with training epoch being . For PGExplainer, learning rate is initialized to and training epoch is set as . Hyper-parameter , which controls the weight of , is tuned via grid search within the range for different datasets separately. Explanations are tested on all instances.

7.1.4. Evaluation Metrics

To evaluate faithfulness of different methods, following (yuan2020explainability, ), we adopt two metrics: (1) AUROC score on edge importance and (2) Fidelity of explanations. On benchmarks with oracle explanations available, we can compute the AUROC score on identified edges as the well-trained target GNN should follow those predefined explanations. On datasets without ground-truth explanations, we evaluate explanation quality with fidelity measurement following (yuan2020explainability, ). Concretely, we observe prediction changes by sequentially removing edges following assigned importance weight, and a faster performance drop represents stronger fidelity.

To evaluate consistency of explanations, we randomly run each method times, and report average structural hamming distance (SHD) (tsamardinos2006max, ) among obtained explanations. A smaller SHD score indicates stronger consistency.

7.2. Explanation Faithfulness

To answer RQ1, we compare explanation methods in terms of AUROC score and explanation fidelity.

7.2.1. AUROC on Edges

In this subsection, AUROC scores of different methods are reported by comparing assigned edge importance weight with ground-truth explanations. For baseline methods GRAD, ATT, Gem, and RG-Explainer, their performances reported in their original papers are presented. GNNExplainer and PGExplainer are re-implemented, upon which four alignment strategies are instantiated and tested. Each experiment is conducted times, and we summarize the average performance in Table 2. A higher AUROC score indicates more accurate explanations. From the results, we can make the following observations:

-

•

Across all four datasets, with both GNNExplainer or PGExplainer as the base method, incorporating embedding alignment can improve the quality of obtained explanations;

-

•

Among proposed alignment strategies, those distribution-aware approaches, particularly the variant based on Gaussian mixture models, achieve the best performance. In most cases, the variant utilizing latent Gaussian distribution demonstrates stronger improvements, showing the best results on out of datasets;

-

•

On more complex datasets like Mutag, the benefit of introducing embedding alignment is more significant, e.g., the performance of PGExplainer improves from 83.7% to 95.9% with Align_Gaus. This result also indicates that spurious explanations are severer with increased dataset complexity.

7.2.2. Explanation Fidelity

In addition to comparing to ground-truth explanations, we also evaluate the obtained explanations in terms of fidelity. Specifically, we sequentially remove edges from the graph by following importance weight learned by the explanation model and test the classification performance. Generally, the removal of really important edges would significantly degrade the classification performance. Thus, a faster performance drop represents stronger fidelity. We conduct experiments on Tree-Grid and Graph-SST5. Each experiment is conducted times and we report results averaged across all instances on each dataset. PGExplainer and GNNExplainer are used as the backbone method. We plot the curves of prediction accuracy concerning the number of removed edges in Fig. 4. From the figure, we can observe that when the proposed embedding alignment is incorporated, the classification accuracy from edge removal drops much faster, which shows that the proposed embedding alignment can help to identify more important edges used by GNN for classification, hence providing better explanations. Furthermore, distribution-aware alignment strategies like the variant based on Gaussian mixture models demonstrate stronger fidelity in most cases. Besides, it can be noted that on Tree-Grid the fidelity of mutual-information-based alignment is dependent on the number of edges, and achieves better results with edge number within .

| BA-Shapes | Tree-Grid | Infection | Mutag | |

| GRAD | ||||

| ATT | – | |||

| Gem | – | – | ||

| RG-Explainer | – | |||

| GNNExplainer | ||||

| + Align_Emb | ||||

| + Align_Anchor | ||||

| + Align_MI | ||||

| + Align_Gaus | ||||

| PGExplainer | ||||

| + Align_Emb | ||||

| + Align_Anchor | ||||

| + Align_MI | ||||

| + Align_Gaus |

From these two experiments, we can observe that embedding alignment can obtain explanations of better faithfulness and is flexible to be incorporated into various models such as GNNExplainer and PGExplainer, which answers RQ1.

7.3. Explanation Consistency

One problem of spurious explanation is that, due to the randomness in initialization of the explainer, the explanation for the same instance given by a GNN explainer could be different for different runs, which violates the consistency of explanations. To test the severity of this problem and answer RQ2, we evaluate the proposed framework in terms of explanation consistency. We adopt GNNExplainer and PGExplainer as baselines. Specifically, SHD distance among explanatory edges with top- importance weights identified each time is computed. Then, the results are averaged for all instances in the test set. Each experiment is conducted times, and the average consistency on dataset Tree-Grid and Mutag are reported in Table 3 and Table 4, respectively. Larger distances indicate inconsistent explanations. From the table, we can observe that existing method following Equation 1 suffers from the consistency problem. For example, average SHD distance on top- edges is for GNNExplainer. Introducing the auxiliary task of aligning embeddings can significantly improve explainers in terms of this criterion. Of these different alignment strategies, the variant based on Gaussian mixture model shows the strongest performance in most cases. After incorporating Align_Gaus on dataset TreeGrid, SHD distance of top- edges drops from to for GNNExplainer and from to for PGExplainer. After incorporating it on dataset Mutag, SHD distance of top- edges drops from to for GNNExplainer and from to for PGExplainer. These results validate the effectiveness of our proposal in obtaining consistent explanations.

| Top-K Edges | ||||||

|---|---|---|---|---|---|---|

| Methods | 1 | 2 | 3 | 4 | 5 | 6 |

| GNNExplainer | ||||||

| +Align_Emb | ||||||

| +Align_Anchor | ||||||

| +Align_MI | ||||||

| +Align_Gaus | ||||||

| PGExplainer | ||||||

| +Align_Emb | 0.11 | |||||

| +Align_Anchor | 0.13 | |||||

| +Align_MI | ||||||

| +Align_Gaus | 0.05 | 0.08 | 0.10 | 0.19 | 0.13 | |

| Top-K Edges | ||||||

|---|---|---|---|---|---|---|

| Methods | 1 | 2 | 3 | 4 | 5 | 6 |

| GNNExplainer | ||||||

| +Align_Emb | ||||||

| +Align_Anchor | ||||||

| +Align_MI | ||||||

| +Align_Gaus | ||||||

| PGExplainer | ||||||

| +Align_Emb | ||||||

| +Align_Anchor | 0.51 | 0.90 | 1.05 | |||

| +Align_MI | ||||||

| +Align_Gaus | 0.84 | 1.25 | 1.15 | |||

7.4. Ability in Avoiding Spurious Explanations

Existing graph explanation benchmarks are usually designed to be less ambiguous, containing only one oracle cause of labels, and identified explanatory substructures are evaluated via comparing with the ground-truth explanation. However, this result could be misleading, as faithfulness of explanation in more complex scenarios is left untested. Real-world datasets are usually rich in spurious patterns and a trained GNN could contain diverse biases, setting a tighter requirement on explanation methods. Thus, to evaluate if our framework can alleviate the spurious explanation issue and answer RQ3, we create a new graph-classification dataset: MixMotif, which enables us to train a biased GNN model, and test whether explanation methods can successfully expose this bias.

Specifically, inspired by (wu2022discovering, ), we design three types of base graphs, i.e., Tree, Ladder, and Wheel, and three types of motifs, i.e., Cycle, House, and Grid. With a mix ratio , motifs are preferably attached to base graphs. For example, Cycle is attached to Tree with probability , and to others with probability . So are the cases for House to Ladder and Grid to Wheel. Labels of obtained graphs are set as type of the motif. When is set to , each motif has the same probability of being attached to the three base graphs. In other words, there’s no bias on which type of base graph to attach for each type of motif. Thus, we consider the dataset with as clean or bias-free. We would expect GNN trained on data with to focus on the motif structure for motif classification. However, when becomes larger, the spurious correlation between base graph and the label would exist, i.e., a GNN might utilize the base graph structure for motif classification instead of relying on the motif structure. For each setting, the created dataset contains graphs, and train:evaluation:test are split as .

In this experiment, we set to and separately, and train GNN and for each setting. Two models are tested in graph classification performance. Then, explanation methods are applied to and fine-tuned on . Following that, these explanation methods are applied to explain using found hyper-parameters. Results are summarized in Table 5.

| in Training | |||||

| Classification | |||||

| in test | |||||

| Explanation | PGExplainer | +Align | PGExplainer | +Align | |

| AUROC on Motif | |||||

| (Higher is better) | (Lower is better) | ||||

From Table 5, we can observe that (1) achieves almost perfect graph classification performance during testing. This high accuracy indicates that it captures the genuine pattern, relying on motifs to make predictions. Looking at explanation results, it is shown that our proposal offers more faithful explanations, achieving higher AUROC on motifs. (2) fails to predict well with , showing that there are biases in it and it no longer depends solely on the motif structure for prediction. Although ground-truth explanations are unknown in this case, a successful explanation should expose this bias. However, PGExplainer would produce similar explanations as the clean model, still highly in accord with motif structures. Instead, for explanations produced by embedding alignment, AUROC score would drop from to , exposing the change in prediction rationales, hence able to expose biases. (3) In summary, our proposal can provide more faithful explanations for both clean and mixed settings, while PGExplainer would suffer from spurious explanations and fail to faithfully explain GNN’s predictions, especially in the existence of biases.

7.5. Hyperparameter Sensitivity Analysis

In this part, we vary the hyper-parameter to test the sensitivity of the proposed framework toward its values. controls the weight of our proposed embedding alignment task. To keep simplicity, all other configurations are kept unchanged, and is varied within the scale . PGExplainer is adopted as the base method. Experiments are randomly conducted times on dataset Tree-Grid and Mutag. Averaged results are visualized in Figure 5. From the figure, we can make the following observations:

-

•

For all four variants, increasing has a positive effect at first, and the further increase would result in a performance drop. For example on the Tree-Grid dataset, best results of variants based on anchors, latent Gaussian mixture models and mutual information scores are all obtained with around . When is small, the explanation alignment regularization in Eq. 6 will be underweighted. On the other hand, a too-large may underweight the MMI-based explanation framework, which preserves the predictive power of obtained explanations.

-

•

Among these variants, the strategy based on latent Gaussian mixture models shows the strongest performance in most cases. For example, for both datasets Tree-Grid and Mutag, this variant achieves the highest AUROC scores on identified explanatory edges. On the other hand, the variant directly using Euclidean distances shows inferior performances in most cases. We attribute this to their different ability in modeling the distribution and conducting alignment.

8. Conclusion

In this work, we study a novel problem of obtaining faithful and consistent explanations for GNNs, which is largely neglected by existing MMI-based explanation framework. With close analysis on the inference of GNNs, we propose a simple yet effective approach by aligning internal embeddings. Theoretical analysis shows that it is more faithful in design, optimizing an objective that encourages high MI between the original graph, GNN output, and identified explanation. Four different strategies are designed, by directly adopting Euclidean distance, using anchors, KL divergence with Gaussian mixture models, and estimated MI scores. All these algorithms can be incorporated into existing methods with no effort. Experiments validate their effectiveness in promoting the faithfulness and consistency of explanations.

In the future, we will seek more robust explanations. Increased robustness indicates stronger generality, and could provide better class-level interpretation at the same time. Besides, the evaluation of explanation methods also needs further studies. Existing benchmarks are usually clear and unambiguous, failing to simulate complex real-world scenarios.

Acknowledgements.

This material is based upon work supported by, or in part by, the National Science Foundation under grants number IIS-1707548 and IIS-1909702, the Army Research Office under grant number W911NF21-1-0198, and DHS CINA under grant number E205949D. The findings and conclusions in this paper do not necessarily reflect the view of the funding agency.References

- [1] Wenqi Fan, Y. Ma, Qing Li, Yuan He, Y. Zhao, Jiliang Tang, and D. Yin. Graph neural networks for social recommendation. The World Wide Web Conference, 2019.

- [2] Tianxiang Zhao, Xianfeng Tang, Xiang Zhang, and Suhang Wang. Semi-supervised graph-to-graph translation. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, pages 1863–1872, 2020.

- [3] Linli Xu, Wenjun Ouyang, Xiaoying Ren, Yang Wang, and Liang Jiang. Enhancing semantic representations of bilingual word embeddings with syntactic dependencies. In IJCAI, pages 4517–4524, 2018.

- [4] Elman Mansimov, O. Mahmood, Seokho Kang, and Kyunghyun Cho. Molecular geometry prediction using a deep generative graph neural network. Scientific Reports, 9, 2019.

- [5] Hryhorii Chereda, A. Bleckmann, F. Kramer, A. Leha, and T. Beißbarth. Utilizing molecular network information via graph convolutional neural networks to predict metastatic event in breast cancer. Studies in health technology and informatics, 267:181–186, 2019.

- [6] Daniil Sorokin and Iryna Gurevych. Modeling semantics with gated graph neural networks for knowledge base question answering. ArXiv, abs/1808.04126, 2018.

- [7] Zhiwei Liu, Liangwei Yang, Ziwei Fan, Hao Peng, and Philip S Yu. Federated social recommendation with graph neural network. ACM Transactions on Intelligent Systems and Technology (TIST), 13(4):1–24, 2022.

- [8] Thomas N Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907, 2016.

- [9] Petar Veličković, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Lio, and Yoshua Bengio. Graph attention networks. arXiv preprint arXiv:1710.10903, 2017.

- [10] Will Hamilton, Zhitao Ying, and Jure Leskovec. Inductive representation learning on large graphs. Advances in neural information processing systems, 30, 2017.

- [11] Weijieying Ren, Lei Zhang, Bo Jiang, Zhefeng Wang, Guangming Guo, and Guiquan Liu. Robust mapping learning for multi-view multi-label classification with missing labels. In Knowledge Science, Engineering and Management: 10th International Conference, KSEM 2017, Melbourne, VIC, Australia, August 19-20, 2017, Proceedings 10, pages 543–551. Springer, 2017.

- [12] Keyulu Xu, Weihua Hu, Jure Leskovec, and Stefanie Jegelka. How powerful are graph neural networks? arXiv preprint arXiv:1810.00826, 2018.

- [13] Muhan Zhang and Yixin Chen. Link prediction based on graph neural networks. Advances in neural information processing systems, 31, 2018.

- [14] Zhilong Lu, Weifeng Lv, Zhipu Xie, Bowen Du, Guixi Xiong, Leilei Sun, and Haiquan Wang. Graph sequence neural network with an attention mechanism for traffic speed prediction. ACM Transactions on Intelligent Systems and Technology (TIST), 13(2):1–24, 2022.

- [15] Xiaoying Ren, Linli Xu, Tianxiang Zhao, Chen Zhu, Junliang Guo, and Enhong Chen. Tracking and forecasting dynamics in crowdfunding: A basis-synthesis approach. In 2018 IEEE International Conference on Data Mining (ICDM), pages 1212–1217. IEEE, 2018.

- [16] Jiahua Rao, Shuangjia Zheng, and Yuedong Yang. Quantitative evaluation of explainable graph neural networks for molecular property prediction. arXiv preprint arXiv:2107.04119, 2021.

- [17] Weijieying Ren, Lei Wang, Kunpeng Liu, Ruocheng Guo, Lim Ee Peng, and Yanjie Fu. Mitigating popularity bias in recommendation with unbalanced interactions: A gradient perspective. In 2022 IEEE International Conference on Data Mining (ICDM), pages 438–447. IEEE, 2022.

- [18] Weijieying REN, Jing Jiang, Ling Min Serena Khoo, and Hai Leong Chieu. Cross-topic rumor detection using topic-mixtures. 2021.

- [19] Rex Ying, Dylan Bourgeois, Jiaxuan You, Marinka Zitnik, and Jure Leskovec. Gnnexplainer: Generating explanations for graph neural networks. Advances in neural information processing systems, 32:9240, 2019.

- [20] Dongsheng Luo, Wei Cheng, Dongkuan Xu, Wenchao Yu, Bo Zong, Haifeng Chen, and Xiang Zhang. Parameterized explainer for graph neural network. Advances in neural information processing systems, 33:19620–19631, 2020.

- [21] Tianxiang Zhao, Dongsheng Luo, Xiang Zhang, and Suhang Wang. On consistency in graph neural network interpretation. arXiv preprint arXiv:2205.13733, 2022.

- [22] Qiang Huang, Makoto Yamada, Yuan Tian, Dinesh Singh, Dawei Yin, and Yi Chang. Graphlime: Local interpretable model explanations for graph neural networks. arXiv preprint arXiv:2001.06216, 2020.

- [23] Minh N Vu and My T Thai. Pgm-explainer: Probabilistic graphical model explanations for graph neural networks. arXiv preprint arXiv:2010.05788, 2020.

- [24] Hao Yuan, Haiyang Yu, Jie Wang, Kang Li, and Shuiwang Ji. On explainability of graph neural networks via subgraph explorations. In International Conference on Machine Learning, pages 12241–12252. PMLR, 2021.

- [25] Hao Yuan, Haiyang Yu, Shurui Gui, and Shuiwang Ji. Explainability in graph neural networks: A taxonomic survey. arXiv preprint arXiv:2012.15445, 2020.

- [26] Meike Nauta, Jan Trienes, Shreyasi Pathak, Elisa Nguyen, Michelle Peters, Yasmin Schmitt, Jörg Schlötterer, Maurice van Keulen, and Christin Seifert. From anecdotal evidence to quantitative evaluation methods: A systematic review on evaluating explainable ai. arXiv preprint arXiv:2201.08164, 2022.

- [27] Enyan Dai, Wei Jin, Hui Liu, and Suhang Wang. Towards robust graph neural networks for noisy graphs with sparse labels. arXiv preprint arXiv:2201.00232, 2022.

- [28] Tianxiang Zhao, Xiang Zhang, and Suhang Wang. Graphsmote: Imbalanced node classification on graphs with graph neural networks. In Proceedings of the Fourteenth ACM International Conference on Web Search and Data Mining, 2021.

- [29] Yu Zhou, Haixia Zheng, Xin Huang, Shufeng Hao, Dengao Li, and Jumin Zhao. Graph neural networks: Taxonomy, advances, and trends. ACM Transactions on Intelligent Systems and Technology (TIST), 13(1):1–54, 2022.

- [30] Tianxiang Zhao, Wenchao Yu, Suhang Wang, Lu Wang, Xiang Zhang, Yuncong Chen, Yanchi Liu, Wei Cheng, and Haifeng Chen. Skill disentanglement for imitation learning from suboptimal demonstrations. KDD, 2023.

- [31] Joan Bruna, Wojciech Zaremba, Arthur Szlam, and Yann LeCun. Spectral networks and locally connected networks on graphs. arXiv preprint arXiv:1312.6203, 2013.

- [32] Ke Liang, Jim Tan, Dongrui Zeng, Yongzhe Huang, Xiaolei Huang, and Gang Tan. Abslearn: a gnn-based framework for aliasing and buffer-size information retrieval. Pattern Analysis and Applications, pages 1–19, 2023.

- [33] Ke Liang, Lingyuan Meng, Meng Liu, Yue Liu, Wenxuan Tu, Siwei Wang, Sihang Zhou, Xinwang Liu, and Fuchun Sun. Reasoning over different types of knowledge graphs: Static, temporal and multi-modal. arXiv preprint arXiv:2212.05767, 2022.

- [34] David K Duvenaud, Dougal Maclaurin, Jorge Iparraguirre, Rafael Bombarell, Timothy Hirzel, Alán Aspuru-Guzik, and Ryan P Adams. Convolutional networks on graphs for learning molecular fingerprints. In Advances in neural information processing systems, pages 2224–2232, 2015.

- [35] James Atwood and Don Towsley. Diffusion-convolutional neural networks. In Advances in neural information processing systems, pages 1993–2001, 2016.

- [36] Teng Xiao, Zhengyu Chen, Donglin Wang, and Suhang Wang. Learning how to propagate messages in graph neural networks. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pages 1894–1903, 2021.

- [37] Justin Gilmer, Samuel S Schoenholz, Patrick F Riley, Oriol Vinyals, and George E Dahl. Neural message passing for quantum chemistry. In ICML, 2017.

- [38] Tianxiang Zhao, Dongsheng Luo, Xiang Zhang, and Suhang Wang. Topoimb: Toward topology-level imbalance in learning from graphs. In Learning on Graphs Conference, pages 37–1. PMLR, 2022.

- [39] Xiang Wang, Hongye Jin, An Zhang, Xiangnan He, Tong Xu, and Tat-Seng Chua. Disentangled graph collaborative filtering. In Proceedings of the 43rd international ACM SIGIR conference on research and development in information retrieval, pages 1001–1010, 2020.

- [40] Tianxiang Zhao, Xiang Zhang, and Suhang Wang. Exploring edge disentanglement for node classification. In Proceedings of the ACM Web Conference 2022, pages 1028–1036, 2022.

- [41] Teng Xiao, Zhengyu Chen, Zhimeng Guo, Zeyang Zhuang, and Suhang Wang. Decoupled self-supervised learning for non-homophilous graphs. arXiv e-prints, pages arXiv–2206, 2022.

- [42] Shuai Lin, Chen Liu, Pan Zhou, Zi-Yuan Hu, Shuojia Wang, Ruihui Zhao, Yefeng Zheng, Liang Lin, Eric Xing, and Xiaodan Liang. Prototypical graph contrastive learning. IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [43] Junjie Xu, Enyan Dai, Xiang Zhang, and Suhang Wang. Hp-gmn: Graph memory networks for heterophilous graphs. arXiv preprint arXiv:2210.08195, 2022.

- [44] Muhammet Balcilar, Pierre Héroux, Benoit Gauzere, Pascal Vasseur, Sébastien Adam, and Paul Honeine. Breaking the limits of message passing graph neural networks. In International Conference on Machine Learning, pages 599–608. PMLR, 2021.

- [45] Ryoma Sato. A survey on the expressive power of graph neural networks. arXiv preprint arXiv:2003.04078, 2020.

- [46] Hoang Nt and Takanori Maehara. Revisiting graph neural networks: All we have is low-pass filters. arXiv preprint arXiv:1905.09550, 2019.

- [47] Muhammet Balcilar, Renton Guillaume, Pierre Héroux, Benoit Gaüzère, Sébastien Adam, and Paul Honeine. Analyzing the expressive power of graph neural networks in a spectral perspective. In Proceedings of the International Conference on Learning Representations (ICLR), 2021.

- [48] Xiang Wang, Yingxin Wu, An Zhang, Xiangnan He, and Tat-seng Chua. Causal screening to interpret graph neural networks. 2020.

- [49] Federico Baldassarre and Hossein Azizpour. Explainability techniques for graph convolutional networks. arXiv preprint arXiv:1905.13686, 2019.

- [50] Zaixi Zhang, Qi Liu, Hao Wang, Chengqiang Lu, and Cheekong Lee. Protgnn: Towards self-explaining graph neural networks. arXiv preprint arXiv:2112.00911, 2021.

- [51] Enyan Dai and Suhang Wang. Towards self-explainable graph neural network. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, pages 302–311, 2021.

- [52] Enyan Dai, Tianxiang Zhao, Huaisheng Zhu, Junjie Xu, Zhimeng Guo, Hui Liu, Jiliang Tang, and Suhang Wang. A comprehensive survey on trustworthy graph neural networks: Privacy, robustness, fairness, and explainability. arXiv preprint arXiv:2204.08570, 2022.

- [53] Phillip E Pope, Soheil Kolouri, Mohammad Rostami, Charles E Martin, and Heiko Hoffmann. Explainability methods for graph convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10772–10781, 2019.

- [54] Caihua Shan, Yifei Shen, Yao Zhang, Xiang Li, and Dongsheng Li. Reinforcement learning enhanced explainer for graph neural networks. Advances in Neural Information Processing Systems, 34, 2021.

- [55] Thomas Schnake, Oliver Eberle, Jonas Lederer, Shinichi Nakajima, Kristof T Schütt, Klaus-Robert Müller, and Grégoire Montavon. Higher-order explanations of graph neural networks via relevant walks. arXiv preprint arXiv:2006.03589, 2020.

- [56] Wanyu Lin, Hao Lan, and Baochun Li. Generative causal explanations for graph neural networks. In International Conference on Machine Learning, pages 6666–6679. PMLR, 2021.

- [57] Ying-Xin Wu, Xiang Wang, An Zhang, Xiangnan He, and Tat-Seng Chua. Discovering invariant rationales for graph neural networks. arXiv preprint arXiv:2201.12872, 2022.

- [58] Junchi Yu, Tingyang Xu, Yu Rong, Yatao Bian, Junzhou Huang, and Ran He. Graph information bottleneck for subgraph recognition. arXiv preprint arXiv:2010.05563, 2020.

- [59] Naftali Tishby and Noga Zaslavsky. Deep learning and the information bottleneck principle. In 2015 ieee information theory workshop (itw), pages 1–5. IEEE, 2015.

- [60] Phúc H. Lê Khac, Graham Healy, and Alan F. Smeaton. Contrastive representation learning: A framework and review. IEEE Access, 8:193907–193934, 2020.

- [61] Prannay Khosla, Piotr Teterwak, Chen Wang, Aaron Sarna, Yonglong Tian, Phillip Isola, Aaron Maschinot, Ce Liu, and Dilip Krishnan. Supervised contrastive learning. ArXiv, abs/2004.11362, 2020.

- [62] Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. A simple framework for contrastive learning of visual representations. In International conference on machine learning, pages 1597–1607. PMLR, 2020.

- [63] Florian Graf, Christoph Hofer, Marc Niethammer, and Roland Kwitt. Dissecting supervised constrastive learning. ArXiv, abs/2102.08817, 2021.

- [64] Tongzhou Wang and Phillip Isola. Understanding contrastive representation learning through alignment and uniformity on the hypersphere. ArXiv, abs/2005.10242, 2020.

- [65] Shengyu Feng, Baoyu Jing, Yada Zhu, and Hanghang Tong. Adversarial graph contrastive learning with information regularization. In Proceedings of the ACM Web Conference 2022, pages 1362–1371, 2022.

- [66] Lianghao Xia, Chao Huang, Yong Xu, Jiashu Zhao, Dawei Yin, and Jimmy Huang. Hypergraph contrastive collaborative filtering. In Proceedings of the 45th International ACM SIGIR conference on research and development in information retrieval, pages 70–79, 2022.

- [67] Yanqiao Zhu, Yichen Xu, Qiang Liu, and Shu Wu. An empirical study of graph contrastive learning. arXiv preprint arXiv:2109.01116, 2021.

- [68] Lei Wang, Ee-Peng Lim, Zhiwei Liu, and Tianxiang Zhao. Explanation guided contrastive learning for sequential recommendation. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, pages 2017–2027, 2022.

- [69] Ke Liang, Yue Liu, Sihang Zhou, Wenxuan Tu, Yi Wen, Xihong Yang, Xiangjun Dong, and Xinwang Liu. Knowledge graph contrastive learning based on relation-symmetrical structure. IEEE Transactions on Knowledge and Data Engineering, 2023.

- [70] Weihua Hu, Bowen Liu, Joseph Gomes, Marinka Zitnik, Percy Liang, Vijay S. Pande, and Jure Leskovec. Strategies for pre-training graph neural networks. arXiv: Learning, 2019.

- [71] Felix L. Opolka, Aaron Solomon, Cătălina Cangea, Petar Velickovic, Pietro Lio’, and R. Devon Hjelm. Spatio-temporal deep graph infomax. ArXiv, abs/1904.06316, 2019.

- [72] Petar Velickovic, William Fedus, William L. Hamilton, Pietro Lio’, Yoshua Bengio, and R. Devon Hjelm. Deep graph infomax. ArXiv, abs/1809.10341, 2018.

- [73] Qikui Zhu, Bo Du, and Pingkun Yan. Self-supervised training of graph convolutional networks. ArXiv, abs/2006.02380, 2020.

- [74] Yuning You, Tianlong Chen, Yongduo Sui, Ting Chen, Zhangyang Wang, and Yang Shen. Graph contrastive learning with augmentations. ArXiv, abs/2010.13902, 2020.

- [75] Kaveh Hassani and Amir Hosein Khas Ahmadi. Contrastive multi-view representation learning on graphs. ArXiv, abs/2006.05582, 2020.

- [76] Ming Jin, Yizhen Zheng, Yuan-Fang Li, Chen Gong, Chuan Zhou, and Shirui Pan. Multi-scale contrastive siamese networks for self-supervised graph representation learning. ArXiv, abs/2105.05682, 2021.

- [77] Yanqiao Zhu, Yichen Xu, Feng Yu, Q. Liu, Shu Wu, and Liang Wang. Deep graph contrastive representation learning. ArXiv, abs/2006.04131, 2020.

- [78] Liang Zeng, Lanqing Li, Zi-Chao Gao, Peilin Zhao, and Jian Li. Imgcl: Revisiting graph contrastive learning on imbalanced node classification. ArXiv, abs/2205.11332, 2022.

- [79] Yuning You, Tianlong Chen, Yang Shen, and Zhangyang Wang. Graph contrastive learning automated. In International Conference on Machine Learning, 2021.

- [80] Yihang Yin, Qingzhong Wang, Siyu Huang, Haoyi Xiong, and Xiang Zhang. Autogcl: Automated graph contrastive learning via learnable view generators. In AAAI Conference on Artificial Intelligence, 2021.

- [81] Martin Ester, Hans-Peter Kriegel, Jörg Sander, Xiaowei Xu, et al. A density-based algorithm for discovering clusters in large spatial databases with noise. In kdd, volume 96, pages 226–231, 1996.

- [82] R Devon Hjelm, Alex Fedorov, Samuel Lavoie-Marchildon, Karan Grewal, Phil Bachman, Adam Trischler, and Yoshua Bengio. Learning deep representations by mutual information estimation and maximization. In International Conference on Learning Representations, 2018.

- [83] Lukas Faber, Amin K. Moghaddam, and Roger Wattenhofer. When comparing to ground truth is wrong: On evaluating gnn explanation methods. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pages 332–341, 2021.

- [84] Ioannis Tsamardinos, Laura E Brown, and Constantin F Aliferis. The max-min hill-climbing bayesian network structure learning algorithm. Machine learning, 65(1):31–78, 2006.