Failure behavior in a connected configuration model

under a critical loading mechanism

Abstract

We study a cascading edge failure mechanism on a connected random graph with a prescribed degree sequence, sampled using the configuration model. This mechanism prescribes that every edge failure puts an additional strain on the remaining network, possibly triggering more failures. We show that under a critical loading mechanism that depends on the global structure of the network, the number of edge failure exhibits scale-free behavior (up to a certain threshold). Our result is a consequence of the failure mechanism and the graph topology. More specifically, the critical loading mechanism leads to scale-free failure sizes for any network where no disconnections take place. The disintegration of the configuration model ensures that the dominant contribution to the failure size comes from edge failures in the giant component, for which we show that the scale-free property prevails. We prove this rigorously for sublinear thresholds, and we explain intuitively why the analysis follows through for linear thresholds. Moreover, our result holds for other graph structures as well, which we validate with simulation experiments.

1 Introduction

Cascading failures is a term used to describe the phenomenon where an initial disturbance can trigger subsequent failures in a system. A typical way to describe cascading failure models is through a network where every node/edge failure creates an additional strain on the surviving network, possibly leading to knock-on failure effects. It appears in many different application areas, such as communication networks [28, 27, 23], road systems [8, 41], earthquakes [2, 3, 1], material science [30], epidemiology [40, 26], power transmission systems [7, 31, 6, 37], and more.

A macroscopic characteristic that is observed in different natural and engineering systems, is the emergence of scale-free behavior [5, 36, 4, 9, 32]. Although this heavy-tailed property sometimes relates to the nodal degree distribution, there are many physical networks that do not share this feature (e.g. power grids [39]). An intriguing question arises why failure sizes still display scale-free behavior for these types of networks.

In this paper, we introduce a cascading mechanism on a complex network whose nodal distribution does not (necessarily) exhibit scale-free behavior. The cascade is initialized by a small additional loading of the network, and failures occur on edges whenever its load capacity is exceeded. An intrinsic feature in this work is that the propagation of failures occurs non-locally and depends on the global network structure, which continually changes as the failure process advances. This is a novel feature with respect to the existing literature: in order to obtain an analytically tractable model, the cascading mechanisms is often described through local relations, or assumes an independence of the global network structure throughout the cascade. Well-known examples that satisfy at least one of these properties are epidemiology models (where an initially infected node may taint each of its neighbors with a fixed probability), or percolation models (where each edge/node in the network fails with a fixed probability). In this work, we introduce a critical load function that leads to a power-law distributed tail for the failure size.

1.1 Model description

Let denote a graph, where denotes the vertex set with , and denotes the edge set with . Typically, we consider graphs that can be scaled in the number of vertices/edges. Suppose that each edge in the network is subjected to a load demand that is initially exceeded by its edge capacity. The difference between the initial load and the edge capacity is called the surplus capacity of the edge, and we assume the surplus capacities to be independently and uniformly distributed on at the various edges. The failure process is triggered by an initial disturbance: all edges are additionally loaded with for some constant . If the total load increase surpasses the surplus capacity of one or more edges, these edges fail and in turn, cause an additional load increase on all surviving edges that are in the same component upon failure. We call these load increments the load surges. We make two assumptions: edge failures occur subsequently, and all edges in the same component upon failure experience the same load surge. We continue with the failure of edges that have insufficient surplus capacity to handle the load surges till there are no more. We are interested in the number of edge failures, also referred to as the failure size.

We are interested in the setting where the failure size exhibits scale-free behavior. To this purpose, we define the critical load surge function at edge after experiencing load surges to be

| (3) |

where is the number of edges in the component that contains edge after perceiving edge failures in that component.

We observe that as long as two edges remain in the same component during the cascade, this recursive relation implies that the load surges are the same at both edges. Moreover, as long as all edges remain to be in a single component, it holds that at every surviving edge, and hence (3) is solved by

| (4) |

In particular, applying (3) to a star topology with nodes and edges yields load surge function (4) for all surviving edges.

We point out that the load surges defined in (3) are typically non-deterministic, and are only well-defined as long as edge has not yet failed throughout the cascade. Edge failures may cause the network to disintegrate in multiple components, which affects in a probabilistic way that depends on the structure of the graph. In contrast to processes in epidemiology or percolation models, the propagation of failures thus occurs non-locally and depends on the global structure of the graph throughout the cascade.

To provide an intuitive understanding why (3) gives rise to scale-free behavior for the failure size, consider the following. For a scale-free failure size to appear, the cascade propagation should occur in some form of criticality. This happens when the load surges are close to the expected values of the ordered surplus capacities. More specifically, since the surplus capacities are independently and uniformly distributed on , the mean of the -th smallest surplus capacity is . If no disconnections occurred during the cascade, this would imply that the additional load surges at every edge should be close to after every edge failure. This explains intuitively why (4) leads to scale-free behavior for the star-topology, as is shown rigorously in [33]. Yet, whenever the network disintegrates in different components, the consecutive load surges need to be of a size such that the load surge is close the smallest expected surplus capacity of the remaining edges in order for the cascade to remain in the window of criticality. More specifically, suppose that the first disconnection occurs after edge failures and splits the graph in two components of edge size and , respectively. Due to the properties of uniformly distributed random variables, we note that the expectation of the smallest surplus capacity in the first component equals . In other words, in order to stay in a critical failure regime, the additional load surges should be close to after every edge failure until the cascade stops in that component, or another disconnection occurs. This process can be iterated, and gives rise to load surge function (3).

In this paper, our main focus is to apply failure mechanism (3) to the (connected) configuration model on vertices with a prescribed degree sequence . The configuration model is constructed by assigning half-edges to each vertex , after which the half-edges are paired randomly: first we pick two half-edges at random and create an edge out of them, then we pick two half-edges at random from the set of remaining half-edges and pair them into an edge, and we continue this process until all half-edges have been paired. For consistency, we assume that the total degree is even. The construction can give rise to self-loops and multiple edges between vertices, but these events are relatively rare when is large [16, 19, 20]. We point out that the number of edges is thus a function of and , i.e. , where we suppress the dependency on and for the sake of exposition.

Define as the number of vertices of degree , and let denote the degree of a vertex chosen uniformly at random from . We assume the following condition on the degree sequence.

Condition 1.1 (Regularity conditions).

Unless stated otherwise, we assume that satisfies the following conditions:

-

•

There exists a limiting degree variable such that converges in distribution to as ;

-

•

;

-

•

;

-

•

for all for some ;

-

•

.

Under these conditions, we can write as the limiting fraction of degree vertices in the network. Moreover, under these conditions it is known that there is a positive probability for to be connected [13, 24]. Our starting point will be such a configuration model conditioned to be connected, denoted by , on which we apply the edge failure mechanism (3). We are interested in quantifying the resilience of the connected configuration model under (3), measured by the number of edge failures .

Remark 1.2.

In Condition 1.1, we assume to ensure that the resulting graph has a positive probability to be connected. Moreover, as explained in [13], it suffices to pose the condition that for a positive probability of having a connected configuration model. We point that our results do not change when allowing that , but for the sake of exposition, we removed the details in this paper.

1.2 Notation

In Appendix A, we provide an overview of the quantities that are commonly used throughout the paper. Unless stated otherwise, all limits are taken as , or equivalently by Condition 1.1, as . A sequence of events happens with high probability (w.h.p.) if . For random variables , we write and to denote convergence in distribution and in probability, respectively. For real-valued sequences and , we write and if tends to zero or is bounded, respectively. Similarly, we write and if tends to zero or is bounded, respectively. We write if both and hold. We adopt an analogue notation for random variables, e.g. for sequences of random variables and , we denote if . For convenience of notation, we denote if . Finally, always denotes a Poisson distributed random variable with mean , denotes an exponentially distributed random variable with parameter , and denotes a binomial distributed random variable with parameters and .

1.3 Main result

We are interested in the probability that the failure size exceeds a threshold . In this paper, we mainly focus on thresholds satisfying for some , which we refer to as the sublinear case. Our main result shows that the failure size has a power-law distribution.

Theorem 1.3.

Consider the connected configuration model , and suppose such that for some . Then,

| (5) |

To see why (5) holds, we need to understand the typical behavior of the failure process as the cascade continues. A first result we show is that it is likely that the number of edge failures that need to occur for the network to become disconnected is of order . This suggests that as long as the threshold satisfies , the tail is the same as in the case of a star topology. The latter case is known to satisfy (5), as is shown in [33].

As long as the cascade continues, we show that it typically disconnects small components. This suggests that up to a certain point, the cascading failure mechanism creates a network with a single large component that contains almost all vertices and edges, referred to as the giant component, and some small disconnected components with few edges. It turns out that the total number of edges that are outside the giant component is sufficiently small, and hence the dominant contribution to the failure size comes from the number of edges that are contained in the giant component upon failure. Moreover, due to small sizes of the components outside the giant component, the load surge function for the edges in the giant as prescribed by (3) is relatively close to (4). We show that as long as threshold for some , the perturbations are sufficiently small such that the failure size behavior in the giant component satisfies (5).

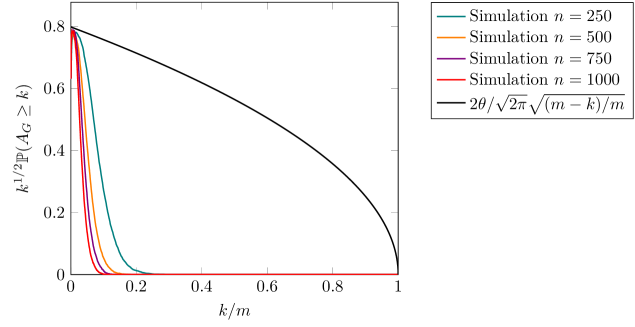

We heuristically explain in Section 5 that the power-law tail property prevails beyond the sublinear case up to a certain critical point. However, the prefactor is affected in this case, i.e. the failure size tail is different from the star topology. Moreover, this notion holds for a broader set of graphs, and we validate this claim by extensive simulation experiments in Section 5.

We would like to remark that the disintegration of the network by the cascading failure mechanism is closely related to percolation, a process where each edge is failed/removed with a corresponding removal probability. In fact, percolation results will be crucial in our analysis to prove Theorem 1.3, combined with first-passage theory of random walks over moving boundaries. Before we lay out a more detailed road map to prove Theorem 1.3, we provide an outline of the remainder of this paper.

1.4 Outline

The proof of Theorem 1.3 requires many different steps, and therefore we provide a road map of the proof in Section 2. We explain that we need to derive novel percolation results for the sublinear case, which we show in Section 3. We rigorously identify the impact of the disintegration of the network on the cascading failure process in Section 4, where we use first-passage theory for random walks over moving boundaries to conclude our main result. Finally, we study the failure size behavior beyond the sublinear case in Section 5, as well as the failure size behavior for other graphs.

2 Proof strategy for Theorem 1.3

Our proof of Theorem 1.3 requires several steps. In this section, we provide a high-level road map of the proof.

2.1 Relation of failure process and sequential removal process

There are two elements of randomness involved in the cascading failure process: the sampling of the surplus capacities of the edges, and the way the network disintegrates as edge failures occur. The second aspect determines the values of the load surges, and only when the surplus capacity of an edge is insufficient to handle the load surge, the cascading failure process continues. Recall that as long as edges remain in the same component, they experience the same load surge. Since the surplus capacities are i.i.d., it follows that every edge in the same component is equally likely to be the next edge to fail as long as the failure process continues in that component. This is a crucial observation, as this provides a relation with the sequential edge-removal process. That is, suppose that we sequentially remove edges uniformly at random from a graph. Given that a new edge removal occurs in a certain component, each edge in that component is equally likely to be removed next, just as in the cascading failure process. Consequently, this observation gives rise to a coupling of the disintegration of the network through the cascading failure process to one that is caused by sequentially removing edges uniformly at random.

More specifically, suppose that sequentially removing edges uniformly at random yields the permutation . For the cascading failure process, sample uniformly distributed random variables and order them so that and assign to edge surplus capacity . In particular, this implies that if the cascading failure process would always continue until all edges have failed, then the edges would fail in the same order as the sequential edge-removal process. Moreover, it is possible to exactly compute the load surge function from the order over the edge set. We illustrate this claim by the example in Figure 1. In this example, we observe that , and we consider the sequential removal process up to five edge removals. We see that the first three edge failures do not cause the graph to disconnect. It holds for every dotted edge ,

and for every dashed edge ,

Recursion (3) yields the corresponding load surge values.

This example illustrates that using our coupling, the sequential removal process gives rise to the load surge values as prescribed in (3). We point out that due to our coupling, if after step an edge for some has sufficient surplus capacity to deal with the load surge, then so do all the other edges in that component. In other words, the cascade stops in that component. To determine the failure size of the cascade, one needs to subsequently compare the load surge values to the surplus capacities in all components until the surplus capacities at all the surviving edges are sufficient to deal with the load surges.

In summary, sequentially removing edges uniformly at random gives rise to the load surge values in every component. This idea decouples the two sources of randomness: first one needs to understand the disintegration of the network through the sequential removal process, leading to the load surge values through (3). Then, we can determine the failure size by comparing the surplus capacities to the load surges up to the point where the cascade stop in every component.

2.2 Disintegration of the network through sequential removal

The next step is to determine the typical behavior of the sequential removal process on the connected configuration model . A first question that needs to be answered is how many edges need to fail, or equivalently, need to be removed uniformly at random from the network to become disconnected. It turns out that this is likely to occur when edges are removed.

Theorem 2.1.

Suppose that we subsequently remove edges uniformly at random from . Define as the smallest number of edges that need to be removed for the network to be disconnected. Then,

where has a Rayleigh distribution with density function

| (6) |

After this point, more disconnections start to occur as more edges are removed uniformly at random from the graph. In Section 3.3, we focus on the typical network structure if edges have been removed uniformly at random for some . Typically, there is a giant component that contains almost all edges and vertices. The components that detach from the giant component are isolated nodes, line components, and possibly isolated cycles. We show that the total number of edges contained in these components are likely to be of order , while the number of edges in more complex component structures are negligible in comparison. This leads to the following result, which is proved in Section 3.6.

Theorem 2.2.

Suppose we remove edges uniformly at random from the connected configuration model with for some . Write for the set of edges in the largest component of this graph, and let denote its cardinality. Then,

| (7) |

We stress that determining the typical behavior of the network disintegration is not enough to prove our main result. In addition, for each of our results, we need to show that it is extremely unlikely to be far from its typical behavior, which we consider in Section 3.4. Moreover, we combine these large deviations results to show the following result in Section 3.6.

Theorem 2.3.

Suppose edges have been removed uniformly at random from the connected configuration model , where for some . Then,

| (8) |

Moreover, for every for which for some ,

| (9) |

2.3 Impact of disintegration on the failure process

The sequential removal process gives rise to the load surge function at every edge, and we need to compare these values to the surplus capacities in every component until the cascade stops. To keep track of the cascading failure process in every component may seem cumbersome at first glance. However, due to the way the connected configuration model is likely to disintegrate, it turns out that it only matters what happens in the giant component.

Intuitively, this can be understood as follows. By Theorem 2.1 and 2.2, removing any sublinear number of edges always leaves edges outside the giant. Therefore, if edges are sequentially removed, then only of these edges were contained outside the giant upon removal. Moreover, even if the cascading failure process struck every edge of the components outside the giant, the contribution to the failure size would be at most . This is negligible with respect to the failures that occur in the giant. The failure size should therefore be well-approximated by the failure size in the giant. We formalize these ideas in Section 4.

More specifically, write

and hence . Moreover, define

| # remaining edges outside the giant when edges are removed uniformly at random, | |||

| # edges removed from giant when edges are removed uniformly at random. |

The main idea is that since the number of edges outside the giant is likely to be when a sublinear number of edges are removed uniformly at random, the contribution of edge failures that occur outside the giant is asymptotically negligible. That is, we bound the probability that to occur due to the cascading failure process by the same event in two related processes. For the upper bound, we consider the scenario where all edges in the small components that disconnect from the giant immediately fail upon disconnection. For the lower bound, we consider the scenario where none of the edges in the small components that disconnect from the giant fail.

To make this rigorous, we first consider the probability that the number of edge failures in the giant exceeds . That is, if we sequentially remove edges uniformly at random, a set of edges were contained in the giant upon failure. We are interested in the probability that the coupled surplus capacities of all these edges are insufficient to deal with the corresponding load surges. By translating this setting to a first-passage problem of a random walk bridge over a moving boundary, we show the following result in Section 4.3.

Proposition 2.4.

If for some , then as ,

Second, we use this result to derive an upper bound for the failure size tail. Trivially, the failure size is bounded by the number of edges that are contained in the giant component upon failure plus all the edges that exist outside the giant after the cascade has stopped. We introduce the stopping time

| (10) |

the minimum number of edges that need to be removed uniformly at random for the number of edges outside the giant to exceed . In other words, after we have removed edges uniformly at random, the sum of the number of edges outside the giant and the number of removed edges exceeds . The number of edge removals in the giant is given by , and hence

We prove that with sufficiently high probability, and hence

Third, we derive a lower bound. We note that , and hence

where

| (11) |

That is, is the number of edges that need to be removed uniformly at random for failures to have occurred in the giant. We show that that with sufficiently high probability, and hence

Since the upper and lower bounds coincide, this yields Theorem 1.3. We formalize this in Section 4.4.

3 Disintegration of the network

As explained in the previous section, the cascading failure process can be decoupled in two elements of randomness. In this section, we study the first element of randomness: the disintegration of the network as we sequentially remove edges uniformly at random. In view of Theorem 1.3, our main focus is the case where we remove only edges with . In particular, our goal is to prove Theorems 2.1-2.3.

This section is structured as follows. First, we show in Section 3.1 that the sequential removal process is well-approximated by a process where we remove each edge independently with a certain probability, also known as percolation. This is a well-studied process in the literature, and particularly in case of the configuration model [18]. In Section 3.2, we provide an alternative way to construct a percolated configuration model by means of an algorithm as developed in [18]. This alternative construction allows for simpler analysis, and is used in Section 3.3 to show that for the percolated (connected) configuration model, typically, the components outside the giant component are either isolated nodes, line components or possibly cycle components. In Section 3.4, we derive large deviations bounds on the number of edges outside the giant. We prove Theorem 2.1 in Section 3.5, and in addition, we provide a large deviations bound for the number of edges that need to be removed for the connected configuration model to become disconnected. We prove Theorems 2.2 and 2.3 in Section 3.6. Although these sections focus on the (connected) configuration model under a sublinear number of edge removals, we briefly recall results known in the literature involving the typical behavior of the random graph beyond the sublinear window in Section 3.7. This will be important to obtain intuition of what happens to the failure size behavior beyond the sublinear case, the topic of interest in Section 5.

3.1 Percolation on the connected configuration model

To prove our results regarding the sequential removal process, we relate this process to another one where each edge is removed independently with a certain removal probability, also known as percolation. This is illustrated by the following lemma.

Lemma 3.1.

Let be a graph, and write as the set of edges that have been removed by percolation with parameter , and as the set of edges when edges are removed uniformly at random. It holds for every , , and such that ,

| (12) |

Moreover, if , then as ,

| (13) |

Lemma 3.1 is a direct consequence of the concentration of the binomial random variable, and the proof is given in Appendix B. This lemma establishes that sequentially removing edges uniformly at random is well-approximated by a percolation process with removal probability . In particular, this holds for the (connected) configuration model. This allows us to study many questions involving the connected configuration model subject to uniformly removing edges in the setting of percolation. The study of percolation processes on finite (deterministic or random) graphs is a well-established research field, which dates back to the work of Gilbert [14] and is still very active these days. In particular, percolation on the configuration model is a fairly well-understood process, as established by Janson in [18].

It is known that there exists a critical parameter such that if , then the largest component of the percolated (connected) configuration model contains a non-vanishing proportion of the vertices and edges [25, 18]. In particular, it is implied by the formula for that in order for a phase transition to appear, it is necessary that . If , then the largest component already has a sublinear size in even before percolation, while if , then there exists a connected component of linear size in the percolated graph for every . Typically, the (w.h.p. unique) component of linear size is referred to as the giant component. All other components are likely to be much smaller, i.e. of size under some regularity conditions on the limiting degree sequence [25]. If , there is no giant component in the percolated graph and all the components have sublinear size [18].

In order to derive Theorem 1.3, these results in the literature are not sufficient. In particular, in view of Theorems 2.1 and 2.2, a more detailed description of the network structure is needed in case that . A critical tool to derive the results in this setting is the explosion algorithm, as we describe next.

3.2 Explosion algorithm

Key to prove Theorems 2.1 and 2.2 is the explosion algorithm, designed by Janson in [18]. This algorithm prescribes that instead of applying percolation on with removal probability , we can run the procedure as described in Algorithm 1.

-

11.

Remove each half-edge independently with probability . Let be the number

Add degree-one vertices to the vertex set. Define the new degree sequence as with

Pair . 4. Remove vertices of degree 1 from uniformly at random.

Janson proved in [18] that the algorithm produces a random graph that is statistically indistinguishable from , the graph obtained by removing every edge in (not necessarily connected) with probability . Yet, the graph obtained by the explosion method is significantly easier to study as it is simply a configuration model with a new degree sequence and a couple of vertices of degree that have been removed, where the latter operation does not significantly change the structure of the graph. Since the graphs obtained from the percolation process and the explosion method are identically distributed, we use the denomination both for the configuration model after percolation and for the graph we obtain via the explosion algorithm.

Remark 3.2.

The observant reader may notice that the explosion algorithm is designed for the configuration model that is not necessarily initially connected. Fortunately, as established in [13], connectivity has a non-vanishing probability to occur under Condition 1.1 as . This is a crucial observation, since it implies that certain events that happen w.h.p. on also happen w.h.p. on . More specifically, for a sequence of events ,

| (14) |

where under Condition 1.1 [13],

In particular, this implies that if for some sequence of random variables it holds that for some constant in , then the same statement holds for the graph . Similarly, if for some sequence in , then this is also true for .

Next, we point out some properties we use extensively in this section. First, we observe that if , then by Taylor expansion, the removal probability in the explosion algorithm satisfies . Therefore, the probability of a vertex of degree to retain half-edges satisfies

| (15) |

if and . Moreover, let represent the number of vertices of degree that retain half-edges. That is, are random variables with distribution . Due to Markov’s inequality, it holds that

| (16) |

Moreover,

| (19) |

due to the Poisson limit theorem and the law of large numbers, respectively. Note that

| (20) |

By Markov’s inequality, it holds for every

| (21) |

Similarly, it follows that for all ,

| (22) |

and hence for every ,

| (23) |

Finally, we observe that for every , by the law of large numbers,

| (24) |

3.3 Typical structure of the percolated configuration model

We recall that our focus is on the case where the number of edges that are removed from the giant is of order . In view of Lemma 3.1, this number is well-approximated by the number of edge removals in a percolation process with removal probability . In particular, in this regime there is a unique giant component w.h.p. and other components are likely to be much smaller, i.e. w.h.p. the number of vertices and edges ourside the giant is of order . Even more can be said about the structure of these components. In this section, we show in that these components are typically isolated nodes, line components or potentially isolated circles. More complex structures are relatively rare.

Remark 3.3.

Remark 3.2 implies that often it suffices to consider to prove an analogous result for . Moreover, the explosion algorithm is used to construct from by the removal of degree-one vertices, and hence we often also focus on in this section. We point out that the operation of removing degree-one vertices does not affect the connectivity of a component. Moreover, the probability that the giant component in is not unique is exponentially small [25]. If , then the probability for not to be of sublinear size is also exponentially small. Therefore, the giant component in remains the giant component in with extremely high probability, i.e. the probability that the complement is true has an exponentially decaying rate. Therefore, the number of edges outside the giant in provides an upper bound (with extremely high probability) for the number of edges outside the giant in . Moreover, since line components outside the giant in either remain line components or become isolated nodes after the removal of degree-one vertices, the number of edges in outside the giant that are not contained in line components is bounded from above (with extremely high probability) by the same quantity in .

First, we explore the degree sequence of in the explosion method from Algorithm 1. Analogously to the notation for the original degree sequence , we write for the number of vertices of degree in and as the limiting fraction.

Lemma 3.4.

Consider the explosion algorithm from Algorithm 1 with initial graph satisfying Condition 1.1 and with . The degree sequence after explosion satisfies the following properties. For the number of vertices of degree zero,

| (28) |

The number of degree-one vertices in satisfies

| (29) |

Finally, the fraction of vertices of degree in converges to the limiting fraction of degree- vertices in , i.e.

| (30) |

Proof.

Recall that if , then

and . Using (19), we obtain

By (20) we know that in both cases, these are the only leading-order contributions to , since with . From (21), we obtain that

if . Similarly, it follows from (19), (23) and (24),

Finally, we note that, since every removal of a half-edge in the first step of the explosion algorithm changes the degree of one vertex,

with and hence for all .

We use the degree sequence to study the structure of the components outside the giant in . Again, we will show that these components are either isolated nodes, line components or possibly isolated cycles. More complex structures are rather unlikely to appear as the network disintegrates.

We begin by proving a bound on the number of edges belonging to components that have a more complex structure, i.e. contain a vertex of degree at least three when for some . We show that this is not the leading-order term for the number of edges outside the giant, since the number of lines and isolated vertices is much larger. For a graph , define as the largest degree of any vertex in and as the edge set of . For every edge and vertex , write and for the connected component that contains or , respectively. Finally, let denote the largest component in a graph .

Proposition 3.5.

Consider the graphs , and with , where for some . For all three graphs, if , then

| (31) |

For both graphs, if , then

| (32) |

Proof.

We prove the result using the explosion algorithm. First, recall Remark 3.2. Applying (14) to the events

implies that to prove (31) and (32) for , it suffices to show (31) and (32) hold for . In addition, recall Remark 3.3, implying that the number of edges in in components containing a node with degree at least three is bounded by the same quantity in with sufficiently high probability. In other words, it suffices to prove that (31) and (32) hold for .

Recall the degree distribution of from Lemma 3.4. It follows from the proof of [25, Lemma 11] that for every supercritical degree sequence (i.e. ) and any , there exists such that in , . Therefore, for large enough,

| (33) |

Consequently, since the number of edges in the giant component is much larger than , it suffices to prove the claims (31) and (32) for

| (34) |

For this purpose, we use the standard exploration algorithm of used in the literature (see e.g. [13, 11] for some similar formulations). At each time , we define the sets of half-edges as the active, dead and neutral sets, and explore them in the following way:

-

1.

At step , pick a vertex with uniformly at random and set all its half-edges as active. All other half-edges are set as neutral, and .

-

2.

At each step , pick a half-edge in uniformly at random, and pair it with another half-edge chosen uniformly at random in . Set as dead. If , then find the vertex incident to and activate all its other half-edges.

-

3.

Terminate the process when .

We observe that when , we have exhausted the exploration of the connected component , and the number of steps performed by the exploration algorithm is the number of edges in In order to prove the claim, we thus need to prove that there is no such that with sufficiently high probability. Let count the number of active half-edges starting from a vertex with . Note that at step the process can only go down if i) and its incident vertex has degree one, causing , or ii) , causing . We denote these events by and , respectively. Since , this counting process needs to decrease by at least three in total for the exploration process to die out. Moreover, the values of the counting process is small at the time steps where the process decreases. More specifically, , where

We can bound the probabilities that these events occur by

Consequently, using the union bound, we obtain that for every that satisfies for some ,

| (35) |

By Markov’s inequality, it follows that

and

The following proposition specifies the number of vertices and edges in lines and the number of isolated nodes which are disconnected from the giant by a percolation process, which constitutes the leading-order term for .

Proposition 3.6.

Consider with with . Define as the number of isolated lines of length and the number of isolated vertices. Then,

| (36) | |||

| (37) |

Instead, if , then

| (38) |

Before moving to the proof of Proposition 3.6, we first consider the higher moments of , the number of isolated lines of length in .

Lemma 3.7.

For any sequence with of positive integer values, it holds as ,

| (39) |

From Lemma 3.7, we can bound the number of edges in isolated line components in .

Corollary 3.8.

For every , as ,

| (40) |

Proof of Proposition 3.6.

Again, note that it follows from Corollary 3.8 that

By Markov’s inequality and Lemma 3.4, if ,

Recall that in the final step of the explosion algorithm, we only remove vertices of degree 1. Therefore, the only way for the number of vertices in line components and isolated vertices to increase is when a component in with a vertex that has a degree at least three turns into a line or an isolated node. By Proposition 3.5, such components appear in with probability , and we conclude that (38) holds.

In order to prove (36) and (37), we also need second moments. By Corollary 3.8,

and thus, by the second moment method

Since by Lemma 3.4, we obtain that

The same arguments can also be used to prove concentration of , since it is dominated by . That is,

We computed the number of vertices and edges that are contained in isolated node components or in line components in . To obtain the corresponding value for we need to subtract the number of degree one vertices that are part of line components and are removed in the last step of the explosion algorithm, and add the number of vertices whose components turn into a line or an isolated vertex by the removal of some degree vertices. By Proposition 3.5, the number of components that can turn into a line or isolated vertex is bounded by , and hence the contribution of these type of events is negligible. Therefore, it suffices to consider the number of vertices and edges that are removed in the final step of the explostion algorithm from the line components in . We observe that there are in total

| (41) |

vertices of degree one in the line components out of the that exist in . We define as the number of vertices removed from line components in the last step of the explosion algorithm. We remove edges of degree one uniformly at random, so the number of the degree-one vertices removed from lines is given by an hypergeometric variable with

| (42) |

A hypergeometric random variable with diverging mean concentrates around the mean, and hence by the law of large numbers,

as claimed.

We do the same computation for the number of edges, this time accounting for the fact that if both vertices of a line of length are removed, only one edge is removed, while all the other vertex and edge removals are in bijection. The number of lines of length which are removed is given by

| (43) |

We conclude that

| (44) |

Proposition 3.6 indicates that the typical number of isolated nodes and line components outside the giant component is of order . Naturally, the isolated nodes do not contribute to the number of edges outside the giant component, and hence we are mostly interested in the total number of edges in the line components, which is likely to be of order due to (37).

Finally, we would like to comment on the number of isolated cycles in . Let denote the number of isolated cycles with edges. In view of Lemma 3.4, if , then the degree distribution satisfies all conditions in [13] (with extremely high probability) except one, namely . However, the proof of Theorem 3.3 in [13] does not use this condition to prove a bound on the number of isolated cycles: this condition was only needed to bound the number of line components. Therefore, it follows from [13, (5.18)],

| (45) |

In other words, the expected number of edges outside the giant that are contained in cycle components is finite. Since is created from by removing vertices of degree one, we observe that all isolated cycles that exist in remain isolated cycles in . Moreover, more isolated cycles can be formed from more complex components in . Using Proposition 3.6 and (45) together with Markov’s inequality, we observe that if with for some , then the number of edges in , outside the giant that are contained in cycle components is . In view of Remark 3.2, the same statement holds for .

3.4 Probabilistic bounds on component sizes outside the giant

In this section, we provide large deviation bounds on the number of edges outside the giant for the percolated connected configuration model with removal probability with for some . Again, in view of Remarks 3.2 and 3.3, it suffices to consider only.

First, we provide a sharper bound on the probability of having a number of edges in these complex components outside the giant that is even of a higher order of magnitude than .

Proposition 3.9.

Consider obtained by removal probability with for some . For every ,

| (46) |

Proof.

We note that, by the proof of [25, Lemma 11], for sufficiently large,

| (47) |

We are left to bound the contribution from components that contain at most edges. We use the method of moments. We observe that

We stress that the vertices in the summation are not necessarily mutually distinct. For the purpose of exposition, write for a vertex ,

Applying the tower property, we obtain

For a graph (or component) , write as the vertex set of that graph. Then,

The first term is trivially bounded by

For the second term, we note that we count the number of vertices outside the giant component that have a degree at least three, while disregarding the set . We note that if we remove the components from , the remaining graph is a configuration model but with a modified degree sequence. In other words, we remove components that have a total of at most edges. This number is too small to change the degree sequence much. That is, it follows from (the proof of) Proposition 3.5 that

Iterating the argument, we obtain

and hence

Finally, using Markov’s inequality, it holds for every ,

Choosing for some , the claim follows.

Next, we provide a result that shows that the probability for the number of edges in line components in to be of a higher order of magnitude than its expectation decays fast.

Lemma 3.10.

Consider obtained by removal probability , where for some . For every ,

| (48) |

Proof.

3.5 First disconnections

In this section, we consider the question of how many edges need to be removed to cause the (initially connected) configuration model to become disconnected. That is, we would like to prove Theorem 2.1, and show that the most likely moment for the first disconnection to occur is after edges have been removed.

To prove Theorem 2.1, we use the equivalence between sequential edge removal and percolation as established in Lemma 3.1. More specifically, in order to prove Theorem 2.1, it follows from Lemma 3.1 that it suffices to show that in a percolation process with a removal probability of the order leads to a positive probability for the configuration model to remain connected.

Proposition 3.11.

Consider the graphs and . If with , then

| (49) |

and

| (50) |

Proof.

We build using the explosion algorithm from Algorithm 1. To obtain the limiting probability that is connected, we first require more detailed information on the degree sequence of . Observe that

Recall (21), (23), (24) and (19), and hence

| (51) | ||||

| (52) |

In addition, we observe that for every , and hence with high probability,

Moreover, we observe that the average degree of converges in probability to .

Secondly, we note that removing vertices of degree one cannot disconnect a component. Therefore, there are only two ways for to be connected after the explosion algorithm. That is, either is already connected, or consists of one (giant) component and other components that are lines of length two (the only component entirely made of vertices of degree one), where all vertices of the line components are removed in the final step of the explosion algorithm. In either case, we observe that one requires that , which occurs with probability

Theorem 2.2 of [13] implies that if , then with high probability the graph consists of a giant component, possible components that are lines, possible components that are cycles, but no other type of components. Recall that represents the number of components that are lines of length in , and the number of components that are cycles of length . We call component with a single vertex of degree two with a self-loop a cycle component of length one, and a component with two vertices with multi-edges between them a cycle of length two. Theorem 2.2 of [13] implies that

and all limits are independent random variables. For to be connected after the explosion algorithm, no cycles should appear in , which occurs with probability

Also, no lines of length more than three should appear, which occurs with probability

Finally, we require that all vertices in the line components are removed in the final step of the explosion algorithm. That is, a set of vertices need to be removed out of the randomly chosen vertices of degree one. Note that the number of vertices that are removed from line components in the last step of the explosion algorithm is hypergeometrically distributed: we remove vertices uniformly at random from vertices of which are in line components. Therefore, conditionally on , and , the probability of all vertices to be removed in the final step of the explosion algorithm is given by

In particular, if , then with probability one all vertices in line components are removed in the last step of the algorithm. Using the tower property, (24), (52) and the observation that converges to a Poisson distribution, we observe that

We conclude that

For the second statement of the proposition, it is known from [24] that

and since , the statement follows.

See 2.1

Proof.

First, connectivity is a monotone property, and thus, once the graph is disconnected it will stay disconnected for the rest of the process. From Lemma 3.1 it follows that sequential removal of edges in is well-approximated by a percolation process with removal probability . Consequently, due to Proposition 3.11,

| (53) |

In other words, converges in distribution to a random variable whose complementary cumulative distribution function is given by the expression on the right-hand side of (53).

Proposition 3.11 implies that the percolated graph with removal probability is disconnected with probability of order . More detailed bounds can be provided for this range, as is illustrated by the next result.

Proposition 3.12.

Consider with for some . Then,

| (54) |

Consequently, if we remove edges uniformly at random from with for some , then the probability of the resulting graph being disconnected is of order .

Proof.

If is disconnected, then there is at least one component outside the giant that is either an isolated node, a line, a cycle or a component that contains a vertex with degree at least three. To show the result, it therefore suffices to prove that each of these events occur with probability .

First, it follows from Proposition 3.6 that in ,

In other words, the event that there is an isolated node or a line component (outside the giant) occurs with probability . Moreover, we can apply Proposition 3.5 to obtain that in ,

Finally, we show that with probability percolation on does not create cycles. We observe that

where denotes the number of newly formed isolated cycles in that do not exist in the initial graph . Since is connected with non-vanishing probability, it is sufficient to show that

Again, we use the explosion method. We stress that running this algorithm on a sampled results in a graph that is not the same as the graph that would have been obtained if percolation had been applied on the same sampled . Instead, sampling a and running the explosion algorithm results in a graph that is statistically indistinguishable from one that is obtained by sampling another and applying percolation on it. Therefore, we need to consider the question how to bound newly formed cycles in . The crucial observation to answer this question is that such cycles contain at least one node whose degree in was at least three.

More specifically, the number of newly formed isolated cycles in is at most the number of cycles in that contain at least one node whose degree in is at least three. Write for the number of vertices whose degree in is two, but has degree at least three in , i.e.

Every isolated cycle in that contains at least one node having an original degree at least three in , either that cycle already exists in , or it was formed from a component in by the removal of degree one vertices. In the second case, this implies that there exists a component in outside the giant with a maximum degree at least three, which occurs with probability by Proposition 3.5. What remains is to analyze the first case.

Write for the number of cycles that exist in and contain at least one node whose original degree in is at least three. For any , a set of (distinct) degree-two vertices in forms a cycle in with probability

if , and if . The number of sets of vertices in that all have degree two of which at least one vertex has degree at least three in is bounded by . Therefore,

We observe that and , where is a binomially distributed random variable with parameters and . Therefore, for every , the probability that occurs has an exponentially decaying tail. On the other hand, since , it holds for every and all sufficiently large,

and hence this sequence converges to zero exponentially fast in . Applying dominated convergence, we obtain

By Markov’s inequality, we conclude that

This proves the first statement of the proposition.

To prove the second statement of the proposition, we need to relate the percolation process to the process of removing edges uniformly at random from . To each edge, attach a uniformly distributed random variable. An alternative description of the percolation process with removal probability is by removing all edges whose values of the random variable are below . Let denote uniformly distributed order statistics. Then, the probability that edge removals disconnects is given by . We note that

The above proof shows that

The second term is negligible, since by the Chernoff bound,

3.6 Number of edges outside the giant component

See 2.2

Proof of Theorem 2.2.

By Lemma 3.1, the number of edges outside the largest component in after failures can be derived by considering percolation on this graph with removal probability . The edges outside the giant can be divided into edges in line components, edges in cyclic components and edges in more complex components (i.e. components which contain a vertex of degree at least three). From Proposition 3.6 it follows that

Next we bound the number of edges outside the giant that are contained in components that are cycles or contained in more complex components. In view of Remarks 3.2 and 3.3, we point out that this is bounded by the same quantity in . Therefore, to conclude the proof, it suffices to show that the total number of edges contained in cycle components or more complex components outside the giant is of order for .

By Proposition 3.5, the number of edges in outside the giant that is contained in a more complex component is of order . To count the number of edges in cycle components, recall that denotes the number of cyclic components with edges in , and that it satisfies (45), i.e. . Using Markov’s inequality, this implies that

Consequently, the number of edges in outside the giant that are not contained in line components is indeed of order .

Theorem 2.2 prescribes the likely number of edges outside the giant component as an effect of sequentially removing edges uniformly at random. The initially connected configuration model is likely to disintegrate, as more edges are removed, in a unique giant and several small components, the majority of which are either lines or isolated nodes as long as the number of edge failures is sublinear.

Next, we show that the number of edges outside the giant component in is unlikely to be of a higher order of magnitude than its typical value during the cascade. We stress that this results concerns the percolation process, which can in turn be used to derive a large deviations bound for the sequential removal process.

Theorem 3.13.

Consider and with , with for some . Then, for every ,

| (55) |

and

| (56) |

Proof.

First, we show (55) by using the explosion algorithm. Again, in view of Remarks 3.2 and 3.3, it suffices to show

| (57) |

We partition the different kinds of contributions to the total number of edges outside the giant of as follows: edges can be contained in a line, a cycle or a more complex component. Due to Proposition 3.10, the number of edges in line components in is bounded by

| (58) |

To bound the edges in cycles, we point out that if follows from [13, (3.7)] that all moments of the number of cycles in converge to a finite constant. That is, convergence of the first factorial moments is shown, which implies convergence of the first moments. Again, this result is shown under the condition that the number of vertices of degree one is of order in [13], but this assumption is not used for the results with respect to the isolated cycles. Consequently, for every , as long as ,

where the Poisson variables in the second term are all independent. Therefore, for every , by applying Markov’s inequality, we obtain

Theorem 3.13 reveals that it is very unlikely for the number of edges outside the giant to be larger than of order when applying the percolation process. We can use this result to show that this also holds under a sequential removal process. That is, we can prove Theorem 2.3, which we recall next.

See 2.3

Proof of Theorem 2.3.

First, we prove (8) for such that for some sufficiently small. We use this partial result to show (9). In the proof of (9), it is implied that (8) also holds for all for some sufficiently small, concluding the proof of Theorem 3.13 follows.

Let denote uniformly distributed order statistics, and note that

Let (sufficiently) small, and note that by the Chernoff bound,

Therefore,

We observe that for every with ,

for all sufficiently small. By Theorem 3.13, we conclude that

To prove (9), note that by Proposition 3.12,

Moreover, if for some , using that , it follows directly from (8),

Therefore, to conclude (9), it suffices to show that for some sufficiently small,

For convenience, write , and consider values of such that for some . Note that

We observe that is the number of edges outside the giant when removing edges uniformly at random. Write as the number of edges that are removed out of this set of edges if we remove another edges uniformly at random. Then, , and hence

Since for every , it follows from the first claim of the corollary that with probability ,

In other words, the probability that an edge out of the set of is chosen to be removed is strictly bounded by with probability . In that case, the probability for more than half of the edges to be removed has an exponentially decaying tail. In particular, this implies that

For the other term, we observe that for every ,

Similarly as in the proof of the first claim of the corollary, we observe that

and hence

It follows from Theorem 3.13 that the second term is also of order for every . We conclude that for every with ,

from which (9) follows by the union bound. Moreover, it implies that (8) holds for all for some as well.

3.7 Linear number of edge removals

For completeness, we provide a brief overview of known results about what looks like when the removal probability is a fixed value , as studied in [18]. It is shown that for any fixed , has a unique giant component and many small components. However, in this phase the giant does not contain vertices, as in the case when .

From [18], it is known that there exists a function defined for such that in

| (59) |

i.e., the proportion of edges in the giant component concentrates for every . The exact formula for comes from [18, Theorem 3.9],

| (60) |

where is defined as the solution of the equation

| (61) |

where is the probability generating function of . The same concentration result, with a different limit function, holds also for the number of vertices in the largest component.

It is worth mentioning that in this case, lines and isolated vertices do not constitute the vast majority of the small components anymore, but there is also a positive density of more complex small components to appear. These are mainly trees.

If , instead, there is no giant component that contains a non-vanishing proportion of the edges or vertices and usually the high-degree vertices determine the size of the largest components, i.e. [17].

4 Cascading failure process

The results in the previous section explain the way the connected configuration model is likely to disintegrate as edge failures occur sequentially and uniformly at random. This yields the load surge values, and hence what remains to be done for proving Thoerem 1.3, is to compare the load surge values to the corresponding surplus capacities at the edges.

To prove our main results as stated in Theorem 1.3, we follow the proof strategy as laid out in Section 2.3. Recall that the connected disintegrates in a giant component and a sublinear number of edges outside the giant as long as no more than edges are removed. We point out that, intuitively, this implies that the only dominant contribution to the total failure size comes from the number of edges that are contained in the giant component upon failure. We make this statement rigorous in this section.

This section is structured as follows. In Section 4.1, we consider the setting where no disconnections takes place yet. Since it follows from Theorem 2.1 that it is unlikely that the connected configuration model becomes disconnected before , we can show that Theorem 2.2 holds if . For larger thresholds, we consider the failure size tail of the giant component. To derive this tail behavior, we translate this problem to a first-passage time problem over a random moving boundary in Section 4.2. In Section 4.3, we derive the behavior of this first-passage time. We conclude Theorem 2.2 in Section 4.4 by using the strategy as laid out in Section 2.3.

4.1 No edge disconnections

Before we move to the tail of the failure size, we first consider the scenario where no disconnections have occurred yet during the cascade, or alternatively, the setting where the failure mechanism is applied to a graph with a star topology. As long as edge failures do not cause (edge) disconnections in the graph, the load surge function remains the same at every surviving edge. Recall that in this case it holds that for all surviving edges , and hence recursion (3) is solved by

| (62) |

In other words, given that no disconnections have occurred after edge failures, the load surge function behaves deterministically until step (at every surviving edge). In this case, the problem reduces to a first-passage time problem, i.e. the event corresponds to the event that the smallest uniformly distributed order statistics are below the linear load surge function. The following result follows by applying Theorem 1 of [33].

Proposition 4.1.

Define as the number of edge failures in a star network with nodes and edges, and load surge function given by (62). For every satisfying and as ,

In particular, if , it holds that

A crucial argument used in the proof of Proposition 4.1 is that as ,

The asymptotic behavior of the latter expression is obtained by observing that the edge failure distribution is quasi-binomial for this particular load surge function, and exploiting the analytic expression for the probability distribution function to derive the tail behavior. Alternatively, this result can be derived by relating this problem to an equivalent setting where one is interested in the first-passage time of a random walk bridge, as is done in [35]. We use such a relation in the more involved case where disconnections occur as well. Before moving to the general case, we briefly recall the translation to the equivalent random walk problem in the much simpler setting where no (edge) disconnections occur yet.

Translation to random walk setting:

Note that

| (63) | ||||

where the exponentially distributed random variables are independent. Define the random walk

| (64) |

where . Then,

Define for every ,

| (65) |

Then, the objective can be written as

Using the main result in [35], we observe that for ,

where the latter equality follows from the memoryless property of exponentials.

A similar random walk construction can be done for the case where disconnections do take place. Before we consider this process, we first show the result of Theorem 1.3 in the phase where it is unlikely to have any disconnections in the connected configuration model. That is, removing edges uniformly at random is unlikely to cause any disconnection by Theorem 2.1. Due to the coupling between the cascading failure process and sequential edge-removal process, Proposition 4.1 prescribes exactly the asymptotic behavior of the edge failure size in that case.

Theorem 4.2.

The probability that the cascading failure process disconnects the network is given by

| (66) |

where denotes the gamma function. Consequently, for any threshold ,

| (67) |

4.2 Random walk formulation

This section is devoted to introducing a related random walk, and to showing that the number of edge failures in the giant asymptotically behaves the same as the first-passage time of a random walk bridge.

Recall that denotes the number of edges in the giant when edges have been removed uniformly at random, and let correspond to the edge corresponding to the ’th order statistic of the surplus capacities. Define the sequence of processes , where , and for ,

| (70) |

Note that this corresponds to the load surge increments in the giant if , rescaled by a factor . We consider a sequence of random walks defined as

| (71) |

with increments

| (72) |

where are independent exponential random variables with unit rate. We note that and by definition, since removing edges leaves only isolated nodes and one component with two nodes connected by a single edge which inherently is the component that contains the largest number of edges. Therefore,

and hence the random walk satisfies the property

| (73) |

Finally, for all define the stopping times

| (74) |

and whenever for all .

In case of the star topology, i.e. no edge disconnections occur, it holds that , causing for all . In that case we observed that the failure size tail could be written as the first-passage tail of the random walk bridge. The above random walk formulation is a generalization that accounts for edges that may possibly no longer be contained in the giant as edge failures occur.

Proposition 4.3.

Suppose for some . If

| (75) |

then

Proof of Proposition 4.3.

Write for the load surge function corresponding to the edges in the giant. Note that by construction,

In other words, whenever an edge is contained in the giant, one checks whether this edge has sufficient capacity to deal with the load surge function. Instead of looking only at those instances, we would like to compare all order statistics to an appropriately chosen function. For this purpose, we introduce the function defined as

We note this function satisfies two important properties:

-

(p1)

if ;

-

(p2)

if .

Moreover, as is non-decreasing for all , this holds as well for . We define the two stopping times,

and

We observe that the first property (p1) implies that , and if . Together with the second property (p2) and the observation that , this implies

| (76) |

Therefore,

| (77) |

To conclude the proof, we relate the random walk to the stopping time , and show that the second contribution in (77) is negligible. For the first claim, we consider the perturbed increments with and

| (80) |

Note that this corresponds to the load surge increments rescaled by a factor . In particular, we observe , and

Note that , and

which is of order with probability by Theorem 2.3. Since

it follows that

Due to our hypothesis (75), it follows that as ,

To conclude the result, it remains to be shown that the second term in (77) is of order . Since we assumed that for some , we observe that there exists an such that both . For all such , it holds that

We note that by our hypothesis and our previous result,

Finally, we observe that by (76),

where the third assertion follows from Theorem 3.13.

4.3 Behavior of the number of edge failures in the giant

We start the analysis by showing that if for some , then Proposition 2.4 holds for the number of failures in the giant. We recap this proposition next.

See 2.4

For , this result is already proven in Theorem 4.2. For the remainder of the proofs in this section, we therefore assume .

To prove Proposition 2.4, we will extensively use the random walk

where . This is related to as defined in (74) through the relation

| (81) |

and if for all . Moreover, for a sequence , let correspond to the first-passage time of the random walk over this sequence, i.e.,

We use the following strategy to prove Proposition 2.4. First, we show that for a particular class of (deterministic) boundary sequences, it holds that

as . Next, we show that the boundary as given in (81) falls in this class of boundary sequences with sufficiently high probability, and hence

as . Finally, we show that conditioning on the event that the random walk returns to zero at time does not affect the tail behavior, i.e. for all for some , it holds that as ,

4.3.1 First-passage time for moving boundaries initially constant for sufficient time

Before moving to the proof of Proposition 2.4, we consider the first-passage time behavior of for a particular class of moving boundaries. The next lemma shows that the first-passage time over a boundary that is monotone non-decreasing, grows slower than , and is initially constant for a sufficiently large time, behaves the same as the first-passage time over the constant boundary.

Lemma 4.4.

Suppose is such that as for some . Define the boundary sequence

with . Then, as ,

Remark 4.5.

We point out that the class of boundary sequences as described in Lemma 4.4 is not covered by the literature. That is, almost all related literature consider moving boundaries that can be described by sequences of the form , i.e. that do not depend on . The exception is [12], but this paper restricts to constant boundaries only.

Still, the literature offers some partial results. First, since ,

For a lower bound, we would like to remark that is a non-decreasing sequence in , where for all . Therefore, due to Proposition 1 in [38] (or Theorem 2 in [15]),

where . Moreover, since

it holds that [15]. In order to prove Lemma 4.4, we therefore need to show that .

Proof.

First, recall that , and hence

Therefore, it suffices to show that the reversed inequality holds asymptotically.

We bound the moving boundary by an appropriate piecewise constant boundary with finitely many jumps. For each of these constant intervals, we use the results in [12] to show that the trajectory of the random walk is asymptotically indistinguishable with respect to the boundary and the piecewise constant one. Finally, we glue the intervals together to conclude the result.

First, note that since , we can assume without loss of generality that is such that with . To define the piecewise constant boundary, let

and note that since and . Choose a fixed sufficiently small such that

-

•

, which implies that ;

-

•

;

-

•

.

Define with and

We point out that corresponds to the number of times the piecewise constant boundary makes a jump, and the values , , correspond to the times where the piecewise constant boundaries jump. Since is chosen sufficiently small, as . Write

and define the boundary sequence as

We point out that by construction,

and hence for all as . Moreover,

Consequently,

and therefore we obtain the lower bound

Next, we provide a lower bound for the tail behavior of . Fix , and note that

Conditioning on the position of the random walk at the times , yields

where we write and for convenience. In other words, we partition the trajectory of the random walk in intervals where the boundary is constant. Recall that for every , it holds that , and for every . Applying Proposition 18 in [12], we obtain uniformly in , ,

where denotes the renewal function corresponding to the decreasing ladder height process of the random walk. The behavior of this function is well-understood: it is non-decreasing and as . Since by construction, for every , we obtain

Similarly, it holds uniformly in [12, Proposition 18],

Then,

Conditioning on staying above the constant boundary and applying the union bound yields

Letting , we find that for every sufficiently small,

from which we conclude that the result holds.

The next lemma shows a similar result as Lemma 4.4, yet with a boundary that is monotone non-increasing and initially constant for a sufficiently large time. The proof is similar to the proof of Lemma 4.4, and therefore given in Appendix C.

Lemma 4.6.

Suppose is such that for some as . Define the boundary sequence

with . Then, as ,

Corollary 4.7.

Suppose is such that both for some , and the boundary sequence satisfies

for some and . Then, as ,

4.3.2 Proof of Proposition 2.4

To prove Proposition 2.4, we first show that the tail of behaves the same as that of , after which we use the relation in Proposition 4.3 to derive the tail of . In view of (81), we need to understand the behavior of the random walk

where . In order to apply Corollary 4.7, we therefore need to show that the random walk is likely to be close to zero for a sufficiently long time , and within for all for some .

Proposition 4.8.

Suppose for some and . Then, as ,

Proof.

From Proposition 3.12, it follows that the probability that there are disconnections when removing less than edges with is of order . Therefore, for every with , it is likely that for every , and hence

Therefore, to prove the proposition, it suffices to show that for every for which for some and ,

where e.g. . Write , and

a random variable representing the probability that edge is in the giant. Let denote a Bernoulli distributed random variable with success probability , and note that

| (82) |

In view of Theorem 2.3, we observe that is likely to be

More precisely, let . Then,

where due to Theorem 2.3, it holds that . Next, we show that the summed probabilities have an exponentially decaying tail. Define the stopping time

We remark that . Due to (82), it holds for every ,

Applying Chernoff’s bound, we obtain for every

Although the random variables are not independent, they are conditioned to be close to one and satisfy a Markovian property. Let , and note that the conditional event implies that for all . Define as the filtration generated by removing edges uniformly at random. Applying the law of total expectation and noting that is a (strictly) decreasing function for all , we observe that

Applying the same argument recursively yields the bound

for every . We point out that the right-hand side corresponds exactly to the Chernoff bound that would have been obtained if we consider the sum of Bernoulli distributed independent random variables with parameter . It follows that

We conclude that

On the other hand, we can use analogous arguments to bound . This would yield

We conclude that

Corollary 4.9.

If for some , then as ,

Proposition 2.4 follows from Corollary 4.9 if we show that conditioning on the event that does not change the tail of the stopping time . Indeed, this turns out to be the case.

Proof of Proposition 2.4.

We show that asymptotically the behavior of the conditioned stopping time is determined solely by what happens for the increments until time . Note that by Proposition 4.3

Fix . We bound the probability terms both from above and below, and show that these bounds asymptotically behave the same as . Denote by the density of the random walk at time . Since the density of the random walk is bounded, we point out that it holds that [29]

| (83) |

where denotes the standard normal density function. Note that

For the first term, we observe

Due to (83),

and

as . This yields the upper bound

For the second term, we show it is negligible. Note that

Applying Chernoff’s bound, it holds for every ,

In particular, this holds for (for large enough). Using this choice of and applying series expansions, we derive

Due to Corollary 4.9,

and hence

We conclude the upper bound

4.4 Proof of main result

As is laid out in the proof strategy described in Section 2.3, it only remains to be shown that the stopping times and , as defined in (10) and (11) respectively, are close to . This follows from the extremely likely events that only a few components disconnect from the giant, and that such components are relatively small. In particular, it is likely that and .

Lemma 4.10.

Suppose for some . Then,

Lemma 4.11.

Suppose for some . Then,

The proofs of these two lemma are fairly straightforward, and given in Appendix C for completeness. Using these lemmas, we can prove our main result.

5 Universality principle

Theorem 2.2 described the tail behavior of the failure size in case of sublinear thresholds. In this section, we consider whether the scale-free behavior prevails if the threshold is of linear size, i.e. the threshold is of the same order as the number of vertices/edges. We conjecture that the scale-free behavior prevails up to a critical point. Also, we provide an explanation why the scale-free behavior can extend to a wide class of other graphs as well. We stress that the arguments that are provided in this section are intuitive in nature, and rigorous proofs of the claims remain to be established.

The proof of Theorem 2.2 relies on the translation to a first-passage time of the random walk bridge over a (moving) boundary that is close to constant . We prove that the difference is small (Proposition 4.8) if is sublinear, causing the random walk to be asymptotically indistinguishable from up to time . That is, the random walk bridge is asymptotically indistinguishable from the one corresponding to the star topology. Since is a random walk with independent identically distributed increments with zero mean and finite variance, it is well-known by Donsker’s theorem that appropriately scaling the random walk bridge yields convergence to a Brownian bridge. Therefore, the probability that exceeds asymptotically behaves the same as the probability that a Brownian bridge stays above zero until time , multiplied by a constant that relates to the translation of the boundary to any other (constant) boundary. We recall that in case of and a boundary (close to) , this constant is given by .

When the threshold is of the same order as , this analysis does not follow through. The (maximum) difference between the two random walks up to time is likely to become of order , an order of magnitude that affects the asymptotic behavior. Moreover, the number of edges outside the giant is also no longer likely to be of size , and hence we need to understand what the failure behavior typically is in these components as well. The natural question that comes to mind is whether the scale-free behavior in the failure size tail prevails or not for linear-sized thresholds. Next, we argue heuristically why this type of behavior prevails up to a certain critical point.