Factorization for the full-line matrix Schrödinger equation and a unitary transformation to the half-line scattering

(Research partially supported by project PAPIIT-DGAPA UNAM IN100321.)

Abstract

The scattering matrix for the full-line matrix Schrödinger equation is analyzed when the corresponding matrix-valued potential is selfadjoint, integrable, and has a finite first moment. The matrix-valued potential is decomposed into a finite number of fragments, and a factorization formula is presented expressing the matrix-valued scattering coefficients in terms of the matrix-valued scattering coefficients for the fragments. Using the factorization formula, some explicit examples are provided illustrating that in general the left and right matrix-valued transmission coefficients are unequal. A unitary transformation is established between the full-line matrix Schrödinger operator and the half-line matrix Schrödinger operator with a particular selfadjoint boundary condition and by relating the full-line and half-line potentials appropriately. Using that unitary transformation, the relations are established between the full-line and the half-line quantities such as the Jost solutions, the physical solutions, and the scattering matrices. Exploiting the connection between the corresponding full-line and half-line scattering matrices, Levinson’s theorem on the full line is proved and is related to Levinson’s theorem on the half line.

Dedicated to Vladimir Aleksandrovich Marchenko to commemorate his 100th birthday

AMS Subject Classification (2020): 34L10, 34L25, 34L40, 47A40, 81U99

Keywords: matrix-valued Schrödinger equation on the line, factorization of scattering data, matrix-valued scattering coefficients, Levinson’s theorem, unitary transformation to the half-line scattering

1 Introduction

In this paper we consider certain aspects of the matrix-valued Schrödinger equation on the full line

$$-\psi''+V(x)\,\psi=k^2\,\psi,\qquad x\in\mathbb{R}, \tag{1.1}$$

where $x$ represents the spacial coordinate, the prime denotes the $x$-derivative, and the wavefunction $\psi$ may be an $n\times n$ matrix or a column vector with $n$ components. Here, $n$ can be chosen as any fixed positive integer, including the special value $n=1$, which corresponds to the scalar case. The potential $V$ is assumed to be an $n\times n$ matrix-valued function of $x$ satisfying the selfadjointness

$$V(x)^\dagger=V(x),\qquad x\in\mathbb{R}, \tag{1.2}$$

with the dagger denoting the matrix adjoint (matrix transpose and complex conjugation), and also belonging to the Faddeev class, i.e. satisfying the condition

$$\int_{-\infty}^{\infty}dx\,(1+|x|)\,|V(x)|<+\infty, \tag{1.3}$$

with $|V(x)|$ denoting the operator norm of the matrix $V(x).$ Since all matrix norms are equivalent for $n\times n$ matrices, any other matrix norm can be used in (1.3). We use the conventions and notations from [5] and refer the reader to that reference for further details.

Let us decompose the potential $V$ into two pieces $V_1$ and $V_2$ as

$$V(x)=V_1(x)+V_2(x),\qquad x\in\mathbb{R}, \tag{1.4}$$

where we have defined

$$V_1(x):=\begin{cases}V(x),&x<0,\\ 0,&x>0,\end{cases}\qquad V_2(x):=\begin{cases}0,&x<0,\\ V(x),&x>0.\end{cases} \tag{1.5}$$

We refer to $V_1$ and $V_2$ as the left and right fragments of $V,$ respectively. We are interested in relating the matrix-valued scattering coefficients corresponding to $V$ to the matrix-valued scattering coefficients corresponding to $V_1$ and $V_2,$ respectively. This is done in Theorem 3.3 by presenting a factorization formula in terms of the transition matrix $\Lambda(k)$ defined in (2.24) and an equivalent factorization formula in terms of $\Sigma(k)$ defined in (2.25). In fact, in Theorem 3.6 the scattering coefficients for $V$ themselves are expressed in terms of the scattering coefficients for the fragments $V_1$ and $V_2.$

The factorization result of Theorem 3.3 corresponds to the case where the potential $V$ is fragmented into two pieces at the fragmentation point $x=0$ as in (1.5). In Theorem 3.4 the factorization result of Theorem 3.3 is generalized by showing that the fragmentation point can be chosen arbitrarily. In Corollary 3.5 the factorization formula is further generalized to the case where the matrix potential is arbitrarily decomposed into any finite number of fragments, and this is done by expressing the transition matrices $\Lambda(k)$ and $\Sigma(k)$ in terms of the respective transition matrices corresponding to the fragments.

Since the potential fragments are either supported on a half line or compactly supported, the corresponding reflection coefficients have meromorphic extensions in $k$ from the real axis to the upper-half or lower-half complex plane or to the whole complex plane, respectively. Thus, it is more efficient to deal with the scattering coefficients of the fragments than the scattering coefficients of the whole potential. Furthermore, it is easier to determine the scattering coefficients when the corresponding potential is compactly supported or supported on a half line. We refer the reader to [2] for a proof of the factorization formula for the full-line scalar Schrödinger equation and to [4] for a generalization of that factorization formula. A composition rule has been presented in [9] to express the factorization of the scattering matrix of a quantum graph in terms of the scattering matrices of its subgraphs.

The factorization formulas are useful in the analysis of direct and inverse scattering problems because they help us to understand the scattering from the whole potential in terms of the scattering from the fragments of that potential. We recall that the direct scattering problem on the half line consists of the determination of the scattering matrix and the bound-state information when the potential and the boundary condition are known. The goal in the inverse scattering problem on the half line is to recover the potential and the boundary condition when the scattering matrix and the bound-state information are available. The direct and inverse scattering problems on the full line are similar to those on the half line except for the absence of a boundary condition. For the direct and inverse scattering theory for the half-line matrix Schrödinger equation, we refer the reader to the seminal monograph [1] of Agranovich and Marchenko when the Dirichlet boundary condition is used and to our recent monograph [6] when the general selfadjoint boundary condition is used.

The factorization formulas yield an efficient method to determine the scattering coefficients for the whole potential by first determining the scattering coefficients for the potential fragments. For example, in Section 4 we provide some explicit examples to illustrate that the matrix-valued transmission coefficients from the left and from the right are not necessarily equal even though the equality holds in the scalar case. In our examples, we determine the left and right transmission coefficients explicitly with the help of the factorization result of Theorem 3.6. Since the resulting explicit expressions for those transmission coefficients are extremely lengthy, we obtain them by using the symbolic software Mathematica on the first author’s personal computer. Even though those transmission coefficients could in principle be determined without using the factorization result, it becomes difficult or impossible to determine them directly and to demonstrate that they are unequal by using Mathematica on the same personal computer.

In Section 2.4 of [6] we have presented a unitary transformation between the half-line matrix Schrödinger operator with a specific selfadjoint boundary condition and the full-line matrix Schrödinger operator with a point interaction at $x=0.$ Using that unitary transformation and by introducing a full-line physical solution with a point interaction, in [13] the relation between the half-line and full-line physical solutions and the relation between the half-line and full-line scattering matrices have been found. In our current paper, we elaborate on such relations in the absence of a point interaction on the full line, and we show how the half-line physical solution and the standard full-line physical solution are related to each other and also show how the half-line and full-line scattering matrices are related to each other. We also show how some other relevant half-line and full-line quantities are related to each other. For example, in Theorem 5.2 we establish the relationship between the determinant of the half-line Jost matrix and the determinant of the full-line transmission coefficient, and in Theorem 5.3 we provide the relationship between the determinant of the half-line scattering matrix and the determinant of the full-line transmission coefficient. Those results help us establish in Section 6 Levinson’s theorem for the full-line matrix Schrödinger operator and compare it with Levinson’s theorem for the corresponding half-line matrix Schrödinger operator.

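We have the following remark on the notation we use. There are many equations in our paper of the form

$$a(k)=b(k),\qquad k\in\mathbb{R}\setminus\{0\}, \tag{1.6}$$

where $a(k)$ and $b(k)$ are continuous in $k\in\mathbb{R}\setminus\{0\},$ the quantity $a(k)$ is also continuous at $k=0,$ but $b(k)$ is not necessarily well defined at $k=0.$ We write (1.6) as

$$a(k)=b(k),\qquad k\in\mathbb{R}, \tag{1.7}$$

with the understanding that we interpret $b(0)$ in the sense that by the continuity of $a(k)$ at $k=0$ the limit of $b(k)$ at $k=0$ exists and we have

$$b(0):=\lim_{k\to 0}b(k)=a(0). \tag{1.8}$$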

Our paper is organized as follows. In Section 2 we provide the relevant results related to the scattering problem for (1.1), and this is done by presenting the Jost solutions, the physical solutions, the scattering coefficients, the scattering matrix for (1.1), and the relevant properties of those quantities. In Section 3 we establish our factorization formula by relating the scattering coefficients for the full-line potential to the scattering coefficients for the fragments of the potential. We also provide an alternate version of the factorization formula. In Section 4 we elaborate on the relation between the matrix-valued left and right transmission coefficients, and we provide some explicit examples to illustrate that they are in general not equal to each other. In Section 5, we elaborate on the unitary transformation connecting the half-line and full-line matrix Schrödinger operators, and we establish the connections between the half-line and full-line scattering matrices, the half-line and full-line Jost solutions, the half-line and full-line physical solutions, the half-line Jost matrix and the full-line transmission coefficients, and the half-line and full-line zero-energy solutions that are bounded. Finally, in Section 6 we present Levinson’s theorem for the full-line matrix Schrödinger operator and compare it with Levinson’s theorem for the half-line matrix Schrödinger operator with a selfadjoint boundary condition.

2 The full-line matrix Schrödinger equation

In this section we provide a summary of the results relevant to the scattering problem for the full-line matrix Schrödinger equation (1.1). In particular, for (1.1) we introduce the pair of Jost solutions and the pair of physical solutions and the four matrix-valued scattering coefficients and the scattering matrix the three relevant matrices and and the pair of transition matrices and For the preliminaries needed in this section, we refer the reader to [5]. For some earlier results on the full-line matrix Schrödinger equation, the reader can consult [10, 11, 12].

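When the potential $V$ in (1.1) satisfies (1.2) and (1.3), there are two particular $n\times n$ matrix-valued solutions to (1.1), known as the left and right Jost solutions and denoted by $f_{\rm l}(k,x)$ and $f_{\rm r}(k,x),$ respectively, satisfying the respective spacial asymptotics

$$f_{\rm l}(k,x)=e^{ikx}\left[I+o(1)\right],\quad f_{\rm l}'(k,x)=ik\,e^{ikx}\left[I+o(1)\right],\qquad x\to+\infty, \tag{2.1}$$

$$f_{\rm r}(k,x)=e^{-ikx}\left[I+o(1)\right],\quad f_{\rm r}'(k,x)=-ik\,e^{-ikx}\left[I+o(1)\right],\qquad x\to-\infty. \tag{2.2}$$

For each $x\in\mathbb{R},$ the Jost solutions have analytic extensions in $k$ from the real axis $\mathbb{R}$ of the complex plane $\mathbb{C}$ to the upper-half complex plane $\mathbb{C}^+,$ and they are continuous in $k\in\overline{\mathbb{C}^+},$ where we have defined $\overline{\mathbb{C}^+}:=\mathbb{C}^+\cup\mathbb{R}.$ As listed in (2.1)–(2.3) of [5], we have the integral representations for $f_{\rm l}(k,x)$ and $f_{\rm r}(k,x),$ which are respectively given by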

| (2.3) |

| (2.4) |

For each fixed the combined columns of and form a fundamental set for (1.1), and any solution to (1.1) can be expressed as a linear combination of those column-vector solutions. The matrix-valued scattering coefficients are defined [5] in terms of the spacial asymptotics of the Jost solutions via

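$$f_{\rm l}(k,x)=e^{ikx}\,T_{\rm l}(k)^{-1}+e^{-ikx}\,L(k)\,T_{\rm l}(k)^{-1}+o(1),\qquad x\to-\infty, \tag{2.5}$$

$$f_{\rm r}(k,x)=e^{-ikx}\,T_{\rm r}(k)^{-1}+e^{ikx}\,R(k)\,T_{\rm r}(k)^{-1}+o(1),\qquad x\to+\infty, \tag{2.6}$$

where $T_{\rm l}(k)$ is the left transmission coefficient, $T_{\rm r}(k)$ is the right transmission coefficient, $L(k)$ is the left reflection coefficient, and $R(k)$ is the right reflection coefficient. With the help of (2.3)–(2.6), it can be shown that

$$f_{\rm l}'(k,x)=ik\,e^{ikx}\,T_{\rm l}(k)^{-1}-ik\,e^{-ikx}\,L(k)\,T_{\rm l}(k)^{-1}+o(1),\qquad x\to-\infty, \tag{2.7}$$

$$f_{\rm r}'(k,x)=-ik\,e^{-ikx}\,T_{\rm r}(k)^{-1}+ik\,e^{ikx}\,R(k)\,T_{\rm r}(k)^{-1}+o(1),\qquad x\to+\infty. \tag{2.8}$$

As a result, the leading asymptotics in (2.7) and (2.8) are obtained by taking the $x$-derivatives of the leading asymptotics in (2.5) and (2.6), respectively. From (2.16) and (2.17) of [5] it follows that the matrices $T_{\rm l}(k)$ and $T_{\rm r}(k)$ are invertible for $k\in\mathbb{R}\setminus\{0\}.$ We remark that (2.5) and (2.6) hold in the limit $k\to 0$ as their left-hand sides are continuous at $k=0$ even though each of the four matrices $T_{\rm l}(k)^{-1},$ $T_{\rm r}(k)^{-1},$ $L(k)\,T_{\rm l}(k)^{-1},$ and $R(k)\,T_{\rm r}(k)^{-1}$ generically behaves as $O(1/k)$ when $k\to 0.$ The scattering matrix for (1.1) is defined as

$$S(k):=\begin{bmatrix}T_{\rm l}(k)&R(k)\\ L(k)&T_{\rm r}(k)\end{bmatrix},\qquad k\in\mathbb{R}. \tag{2.9}$$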

As seen from Theorem 3.1 and Theorem 4.6 of [5], the scattering coefficients can be defined first via (2.5) and (2.6) for $k\in\mathbb{R}\setminus\{0\},$ and then their domain can be extended in a continuous way to include $k=0.$ When the potential $V$ in (1.1) satisfies (1.2) and (1.3), from [11, 12] and the comments below (2.21) in [5] it follows that the matrix-valued transmission coefficients $T_{\rm l}(k)$ and $T_{\rm r}(k)$ have meromorphic extensions in $k$ from $\mathbb{R}$ to $\mathbb{C}^+,$ where any possible poles are simple and can only occur on the positive imaginary axis. On the other hand, the domains of the matrix-valued reflection coefficients $L(k)$ and $R(k)$ cannot be extended from $k\in\mathbb{R}$ unless the potential $V$ in (1.1) satisfies further restrictions besides (1.2) and (1.3).

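The left and right physical solutions to (1.1), denoted by $\Psi_{\rm l}(k,x)$ and $\Psi_{\rm r}(k,x),$ are the two particular $n\times n$ matrix-valued solutions that are related to the Jost solutions $f_{\rm l}(k,x)$ and $f_{\rm r}(k,x),$ respectively, as

$$\Psi_{\rm l}(k,x):=f_{\rm l}(k,x)\,T_{\rm l}(k),\qquad \Psi_{\rm r}(k,x):=f_{\rm r}(k,x)\,T_{\rm r}(k), \tag{2.10}$$

and, as seen from (2.1), (2.2), (2.5), (2.6), and (2.10), they satisfy the spacial asymptotics

$$\Psi_{\rm l}(k,x)=\begin{cases}e^{ikx}\,T_{\rm l}(k)+o(1),&x\to+\infty,\\ e^{ikx}I+e^{-ikx}\,L(k)+o(1),&x\to-\infty,\end{cases} \tag{2.11}$$

$$\Psi_{\rm r}(k,x)=\begin{cases}e^{-ikx}I+e^{ikx}\,R(k)+o(1),&x\to+\infty,\\ e^{-ikx}\,T_{\rm r}(k)+o(1),&x\to-\infty.\end{cases} \tag{2.12}$$

Using (2.11) and (2.12), we can interpret $\Psi_{\rm l}(k,x)$ in terms of the matrix-valued plane wave $e^{ikx}I$ of unit amplitude sent from $x=-\infty,$ the matrix-valued reflected plane wave $e^{-ikx}L(k)$ of amplitude $L(k)$ at $x=-\infty,$ and the matrix-valued transmitted plane wave $e^{ikx}T_{\rm l}(k)$ of amplitude $T_{\rm l}(k)$ at $x=+\infty.$ Similarly, the physical solution $\Psi_{\rm r}(k,x)$ can be interpreted in terms of the matrix-valued plane wave $e^{-ikx}I$ of unit amplitude sent from $x=+\infty,$ the matrix-valued reflected plane wave $e^{ikx}R(k)$ of amplitude $R(k)$ at $x=+\infty,$ and the matrix-valued transmitted plane wave $e^{-ikx}T_{\rm r}(k)$ of amplitude $T_{\rm r}(k)$ at $x=-\infty.$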

The following proposition is a useful consequence of Theorem 7.3 on p. 28 of [7], where we use $\det$ and tr to denote the matrix determinant and the matrix trace, respectively.

Proposition 2.1.

Proof.

The second-order matrix-valued systems (1.1) for and can be expressed as a first-order matrix-valued system as

| (2.14) |

From Theorem 7.3 on p. 28 of [7] we know that (2.14) implies

| (2.15) |

Since the coefficient matrix in (2.14) has zero trace, the right-hand side of (2.15) is zero, and hence the vanishing of the left-hand side of (2.15) shows that the determinant in (2.13) cannot depend on $x.$ ∎
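The mechanism behind the proof can be sketched explicitly. The display below is only an illustrative restatement in notation of our own choosing: $\alpha$ and $\beta$ stand for any two $n\times n$ matrix-valued solutions to (1.1), $Y$ for the $2n\times 2n$ block matrix built from them, and $A$ for the coefficient matrix of the first-order system. These symbols are assumptions made for the sketch and need not coincide with the symbols used in (2.13)–(2.15).

```latex
\[
Y(x):=\begin{bmatrix}\alpha(x)&\beta(x)\\ \alpha'(x)&\beta'(x)\end{bmatrix},
\qquad
Y'(x)=A(x)\,Y(x),
\qquad
A(x):=\begin{bmatrix}0&I\\ V(x)-k^2 I&0\end{bmatrix},
\]
\[
\frac{d}{dx}\det[Y(x)]=\mathrm{tr}[A(x)]\,\det[Y(x)]=0 .
\]
```

The second block row of $Y'=AY$ is just (1.1) rewritten as $\alpha''=[V(x)-k^2I]\,\alpha$ and $\beta''=[V(x)-k^2I]\,\beta,$ and the trace vanishes because both diagonal blocks of $A(x)$ are zero.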

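Since $k$ appears as $k^2$ in (1.1) and we already know that $f_{\rm l}(k,x)$ and $f_{\rm r}(k,x)$ are solutions to (1.1), it follows that $f_{\rm l}(-k,x)$ and $f_{\rm r}(-k,x)$ are also solutions to (1.1). From the known properties of the Jost solutions $f_{\rm l}(k,x)$ and $f_{\rm r}(k,x),$ we conclude that, for each $x\in\mathbb{R},$ the solutions $f_{\rm l}(-k,x)$ and $f_{\rm r}(-k,x)$ have analytic extensions in $k$ from the real axis to the lower-half complex plane $\mathbb{C}^-,$ and they are continuous in $k\in\overline{\mathbb{C}^-},$ where we have defined $\overline{\mathbb{C}^-}:=\mathbb{C}^-\cup\mathbb{R}.$ In terms of the four solutions $f_{\rm l}(k,x),$ $f_{\rm r}(k,x),$ $f_{\rm l}(-k,x),$ and $f_{\rm r}(-k,x)$ to (1.1), we introduce three useful matrices as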

| (2.16) |

| (2.17) |

| (2.18) |

Since the -domains of those four solutions are known, from (2.16) and (2.17) we see that and are defined when and from (2.18) we see that is defined when

In the next proposition, with the help of Proposition 2.1 we show that the determinant of each of the three matrices defined in (2.16), (2.17), and (2.18), respectively, is independent of and in fact we determine the values of those determinants explicitly. We also establish the equivalence of the determinants of the left and right transmission coefficients.

Proposition 2.2.

Assume that the matrix-valued potential in (1.1) satisfies (1.2) and (1.3). We then have the following:

- (a)

-

(b)

The matrix-valued left transmission coefficient and right transmission coefficient have the same determinant, i.e. we have

(2.22) -

(c)

We have

(2.23) where the matrices and are defined in terms of the scattering coefficients in (2.9) as

(2.24) (2.25)

Proof.

As already mentioned, the four quantities are all solutions to (1.1), and hence from Proposition 2.1 it follows that the determinants of and are independent of In their respective -domains, we can evaluate each of those determinants as and and we know that we have the equivalent values. Using (2.1) in (2.16) we get

| (2.26) |

Using an elementary row block operation on the block matrix appearing on the right-hand side of (2.26), i.e. by multiplying the first row block by and subtracting the resulting row block from the second row block, we obtain (2.19). In a similar way, using (2.2) in (2.17), we have

| (2.27) |

and again using the aforementioned elementary row block operation on the matrix appearing on the right-hand side of (2.27), we get (2.20). For using (2.1) and (2.6)–(2.8) in (2.18), we obtain

| (2.28) |

where we have defined

Using the aforementioned elementary row block operation on the matrix we get

| (2.29) |

where denotes the zero matrix. From (2.28) and (2.29) we get (2.21). Thus, the proof of (a) is complete. Let us now turn to the proof of (b). Using (2.2), (2.5), and (2.7) in (2.18), we obtain

| (2.30) |

where we have defined

Using an elementary row block operation on the matrix i.e. by multiplying the first row block by and adding the resulting row block to the second row block, we get

| (2.31) |

Interchanging the first and second row blocks of the matrix appearing on the right-hand side of (2.31), we have

| (2.32) |

Then, from (2.30) and (2.32) we conclude that

| (2.33) |

and by comparing (2.21) and (2.33) we obtain (2.22) for However, for each fixed the quantity is analytic in and continuous in Thus, (2.22) holds for and the proof of (b) is also complete. Using (2.5) and (2.7) in (2.16), and by exploiting the fact that is independent of we evaluate as and we get the equality

| (2.34) |

where we have defined

Using two consecutive elementary row block operations on the matrix on the right-hand side of (2.34), i.e. by multiplying the first row block by and adding the resulting row block to the second row block and then by dividing the resulting second row block by and subtracting the resulting row block from the first row block, we can write (2.34) as

| (2.35) |

By interchanging the first and second row blocks of the matrix appearing on the right-hand side of (2.35) and simplifying the determinant of the resulting matrix, from (2.35) we obtain

| (2.36) |

Comparing (2.19), (2.24), and (2.36), we see that the first equality in (2.23) holds. We remark that the matrix defined in (2.24) behaves as as On the other hand, we observe that the first equality of (2.23) holds at by the continuity argument based on (1.6)–(1.8). In a similar way, using (2.6) and (2.8) in (2.17), and by exploiting the fact that is independent of we evaluate as and we get the equality

| (2.37) |

where we have defined

Using two elementary row block operations on the matrix on the right-hand side of (2.37), i.e. by multiplying the first row block by and adding the resulting row block to the second row block and then by dividing the resulting second row block by and subtracting the resulting row block from the first row block, we can write (2.37) as

| (2.38) |

By interchanging the first and second row blocks of the matrix appearing on the right-hand side of (2.38) and simplifying the determinant of the resulting matrix, from (2.38) we obtain

| (2.39) |

Comparing (2.20), (2.25), and (2.39), we see that the second equality in (2.23) holds. We remark that the matrix defined in (2.25) behaves as as but the second equality of (2.23) holds at by the continuity argument expressed in (1.6)–(1.8). ∎

We note that, in the proof of Proposition 2.2, instead of using elementary row block operations, we could alternatively make use of the matrix factorization formula involving a Schur complement. Such a factorization formula is given by

| (2.40) |

which corresponds to (1.11) on p. 17 of [8]. In the alternative proof of Proposition 2.2, it is sufficient to use (2.40) in the special case where the block matrices have the same size and is invertible.
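As an illustration of how the Schur complement is used in that special case, the following sketch assumes the standard determinant identity for a block matrix with an invertible upper-left block and applies it to the block matrix of plane waves that represents the leading large-$x$ behavior in (2.1). The block names $A,$ $B,$ $C,$ $D$ are our own labels and are not taken from [8].

```latex
\[
\det\begin{bmatrix}A&B\\ C&D\end{bmatrix}
=\det[A]\,\det\!\left[D-C\,A^{-1}B\right],
\qquad A \text{ invertible},
\]
\[
\det\begin{bmatrix}e^{ikx}I&e^{-ikx}I\\ ik\,e^{ikx}I&-ik\,e^{-ikx}I\end{bmatrix}
=\det\!\left[e^{ikx}I\right]\,
 \det\!\left[-ik\,e^{-ikx}I-ik\,e^{-ikx}I\right]
=(-2ik)^n .
\]
```

The exponential factors cancel, so the value does not depend on $x,$ in line with Proposition 2.1, and it vanishes only at $k=0.$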

Let us use to denote the Wronskian of two matrix-valued functions of where we have defined

Given any two matrix-valued solutions and to (1.1), one can directly verify that the Wronskian is independent of where we use an asterisk to denote complex conjugation. Evaluating the Wronskians involving the Jost solutions to (1.1) as and also as respectively, for we obtain

| (2.41) |

| (2.42) |

| (2.43) |

| (2.44) |

| (2.45) |

and for we get

| (2.46) |

| (2.47) |

With the help of (2.41), (2.43), and (2.45), we can prove that

| (2.48) |

where is the scattering matrix defined in (2.9). From (2.48) we conclude that is unitary, and hence we also have

| (2.49) |
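To make the content of the unitarity statement concrete, the block relations it encodes can be written out. The sketch below assumes that the scattering matrix in (2.9) is partitioned with the transmission coefficients on the diagonal, $R(k)$ in the upper right block, and $L(k)$ in the lower left block; if a different block arrangement is used, the relations permute accordingly. The argument $k\in\mathbb{R}$ is suppressed on the second line.

```latex
\[
S(k)=\begin{bmatrix}T_{\rm l}(k)&R(k)\\ L(k)&T_{\rm r}(k)\end{bmatrix},
\qquad
S(k)^\dagger\,S(k)=I,
\]
\[
T_{\rm l}^\dagger T_{\rm l}+L^\dagger L=I,
\qquad
R^\dagger R+T_{\rm r}^\dagger T_{\rm r}=I,
\qquad
T_{\rm l}^\dagger R+L^\dagger T_{\rm r}=0 .
\]
```

Here $I$ denotes the $2n\times 2n$ identity matrix on the first line and the $n\times n$ identity matrix on the second line. In the scalar case $n=1,$ the first relation reduces to the familiar $|T_{\rm l}|^2+|L|^2=1.$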

From (2.42), (2.44), and (2.46) we obtain

| (2.50) |

which can equivalently be expressed as

where is the constant matrix given by

| (2.51) |

We remark that the matrix is equal to its own inverse.

In Proposition 2.2(a) we have evaluated the determinants of the matrices and appearing in (2.16), (2.17), and (2.18), respectively. In the next theorem we determine their matrix inverses explicitly in terms of the Jost solutions and

Theorem 2.3.

Assume that the matrix-valued potential in (1.1) satisfies (1.2) and (1.3). We then have the following:

-

(a)

The matrix defined in (2.16) is invertible when and we have

(2.54) -

(b)

The matrix defined in (2.17) is invertible when and we have

(2.55) -

(c)

The matrix given in (2.18) is invertible for except at the poles of the determinant of the transmission coefficient where such poles can only occur on the positive imaginary axis in the complex -plane, those -values correspond to the bound states of (1.1), and the number of such poles is finite. Furthermore, for we have

(2.56)

Proof.

From (2.19) we see that the determinant of vanishes only at on the real axis. We confirm (2.54) by direct verification. This is done by first postmultiplying the right-hand side of (2.54) with the matrix given in (2.16) and then by simplifying the block entries of the resulting matrix product with the help of (2.41) and (2.42). Thus, the proof of (a) is complete. We prove (b) in a similar manner. From (2.20) we observe that is nonzero when except at Consequently, the matrix is invertible when By postmultiplying the right-hand side of (2.55) by the matrix given in (2.17), we simplify the resulting matrix product with the help of (2.43) and (2.44) and verify that we obtain the identity matrix as the product. Thus, the proof of (b) is also complete. For the proof of (c) we proceed as follows. From (2.21) we observe that is nonzero when except when and when has poles. From (2.22) we know that the determinants of and coincide, and from [5] we know that the poles of correspond to the -values at which the bound states of (1.1) occur. It is also known [5] that the bound-state -values can only occur on the positive imaginary axis in the complex -plane and that the number of such -values is finite. We verify (2.56) directly. That is done by postmultiplying both sides of (2.56) with the matrix defined in (2.18), by simplifying the matrix product by using (2.42), (2.44), (2.46), and (2.47), and by showing that the corresponding product is equal to the identity matrix. ∎

3 The factorization formulas

In this section we provide a factorization formula for the full-line matrix Schrödinger equation (1.1), by relating the matrix-valued scattering coefficients corresponding to the potential appearing in (1.1) to the matrix-valued scattering coefficients corresponding to the fragments of We also present an alternate version of the factorization formula, which is equivalent to the original version.

We already know that and are both matrix-valued solutions to (1.1), and from (2.1) we conclude that their combined columns form a fundamental set of column-vector solutions to (1.1) when Hence, we can express as a linear combination of those columns, and we get

| (3.1) |

where the coefficient matrices are obtained by letting in (3.1) and using (2.1) and (2.6). Note that we have included in (3.1) by using the continuity of at for each fixed Similarly, and are both matrix-valued solutions to (1.1), and from (2.2) we conclude that their combined columns form a fundamental set of column-vector solutions to (1.1) when Thus, we have

| (3.2) |

where we have obtained the coefficient matrices by letting in (3.2) and by using (2.2) and (2.5). Note that we can write (3.1) and (3.2), respectively, as

| (3.3) |

| (3.4) |

Using (3.2) in (2.16) and comparing the result with (2.17), we see that the matrices and defined in (2.16) and (2.17), respectively, are related to each other as

| (3.5) |

where is the matrix defined in (2.24). We remark that, even though has the behavior as by the continuity the equality in (3.5) holds also at In a similar way, by using (3.1) in (2.17) and comparing the result with (2.16), we obtain

| (3.6) |

where is the matrix defined in (2.25). By (2.19) and (2.20), we know that the matrices and are invertible when Thus, from (3.5) and (3.6) we conclude that and are inverses of each other for each i.e. we have

| (3.7) |

where the result in (3.7) holds also at by the continuity. We already know from (2.23) that the determinants of the matrices and are both equal to We remark that (3.7) yields a wealth of relations among the left and right matrix-valued scattering coefficients, which are similar to those given in (2.50), (2.52), and (2.53).

Using (3.3) and (3.4) in (2.18), we get

| (3.8) |

We can write (3.8) in terms of the scattering matrix appearing in (2.9) as

| (3.9) |

where is the constant matrix defined in (2.51) and is the involution matrix defined as

| (3.10) |

Note that is equal to its own inverse. By taking the determinants of both sides of (3.9) and using (2.21) and the fact that and we obtain the determinant of the scattering matrix as

| (3.11) |

Because of (2.22), we can write (3.11) also as

From (2.22) and (2.50) we see that

| (3.12) |

where we recall that an asterisk is used to denote complex conjugation. Hence, (3.11) and (3.12) imply that

| (3.13) |

The next proposition indicates how the relevant quantities related to the full-line Schrödinger equation (1.1) are affected when the potential is shifted by units to the right, i.e. when we replace in (1.1) by defined as

| (3.14) |

We use the superscript to denote the corresponding transformed quantities. The result will be useful in showing that the factorization formulas (3.44) and (3.45) remain unchanged if the potential is decomposed into two pieces at any fragmentation point instead of the fragmentation point used in (1.4).

Proposition 3.1.

Consider the full-line matrix Schrödinger equation (1.1) with the matrix potential satisfying (1.2) and (1.3). Under the transformation described in (3.14), the quantities relevant to (1.1) are transformed as follows:

-

(a)

The Jost solutions and are transformed into and respectively, where we have defined

(3.15) - (b)

- (c)

Proof.

The matrix defined in the first equality of (3.15) is the transformed left Jost solution because it satisfies the transformed matrix-valued Schrödinger equation

| (3.20) |

and is asymptotic to as Similarly, the matrix defined in the second equality of (3.15) is the transformed right Jost solution because it satisfies (3.20) and is asymptotic to as Thus, the proof of (a) is complete. Using in the analog of (2.5), as we have

| (3.21) |

Using the right-hand side of the first equality of (3.15) in (3.21) and comparing the result with (2.5) we get the first equalities of (3.16) and (3.17), respectively. Similarly, using in the analog of (2.6), as we get

| (3.22) |

Using the right-hand side of the second equality of (3.15) in (3.22) and comparing the result with (2.6), we obtain the second equalities of (3.16) and (3.17), respectively. Hence, the proof of (b) is also complete. Using (3.16) and (3.17) in the analogs of (2.24) and (2.25) corresponding to the shifted potential we obtain (3.18) and (3.19). ∎

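When the potential $V$ in (1.1) satisfies (1.2) and (1.3), let us decompose it as in (1.4). For the left fragment $V_1$ and the right fragment $V_2$ defined in (1.5), let us use the subscripts $1$ and $2,$ respectively, to denote the corresponding relevant quantities. Thus, analogous to (2.9) we define the scattering matrices $S_1(k)$ and $S_2(k)$ corresponding to $V_1$ and $V_2,$ respectively, as

$$S_1(k):=\begin{bmatrix}T_{{\rm l}1}(k)&R_1(k)\\ L_1(k)&T_{{\rm r}1}(k)\end{bmatrix},\qquad S_2(k):=\begin{bmatrix}T_{{\rm l}2}(k)&R_2(k)\\ L_2(k)&T_{{\rm r}2}(k)\end{bmatrix}, \tag{3.23}$$

where $T_{{\rm l}1}(k)$ and $T_{{\rm l}2}(k)$ are the respective left transmission coefficients, $T_{{\rm r}1}(k)$ and $T_{{\rm r}2}(k)$ are the respective right transmission coefficients, $L_1(k)$ and $L_2(k)$ are the respective left reflection coefficients, and $R_1(k)$ and $R_2(k)$ are the respective right reflection coefficients. In terms of the scattering coefficients for the respective fragments, we use $\Lambda_1(k)$ and $\Lambda_2(k)$ as in (2.24) and use $\Sigma_1(k)$ and $\Sigma_2(k)$ as in (2.25) to denote the transition matrices corresponding to the left and right potential fragments $V_1$ and $V_2.$ Thus, we have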

| (3.24) |

| (3.25) |

| (3.26) |

| (3.27) |

In preparation for the proof of our factorization formula, in the next proposition we express the value at of the matrix defined in (2.16) in terms of the left scattering coefficients for the right fragment and similarly we express the value at of the matrix defined in (2.17) in terms of the right scattering coefficients for the left fragment

Proposition 3.2.

Consider the full-line matrix Schrödinger equation (1.1), where the potential satisfies (1.2) and (1.3) and is fragmented as in (1.4) into the left fragment and the right fragment defined in (1.5). We then have the following:

-

(a)

For the matrix defined in (2.16) satisfies

(3.28) where and are the left transmission and left reflection coefficients, respectively, for the right fragment

-

(b)

For the matrix defined in (2.17) satisfies

(3.29) where and are the right transmission and right reflection coefficients, respectively, for the left fragment

- (c)

- (d)

Proof.

We remark that, although the matrices and behave as as the equalities in (3.30) and (3.36) hold also at by the continuity. Let us use and to denote the left and right Jost solutions, respectively, corresponding to the potential fragment Similarly, let us use and to denote the left and right Jost solutions, respectively, corresponding to the potential fragment Since and both satisfy (1.1) and the asymptotics (2.1), we have

| (3.40) |

Similarly, since and both satisfy (1.1) and the asymptotics (2.2), we have

| (3.41) |

From (1.5), (2.5), (2.6), (3.40), and (3.41), we get

| (3.42) |

| (3.43) |

Comparing (3.40) and (3.42) at and using the result in (2.16), we establish (3.28), which completes the proof of (a). Similarly, comparing (3.41) and (3.43) at and using the result in (2.17), we establish (3.29). Hence, the proof of (b) is also complete. We can confirm (3.30) directly by evaluating the matrix product on its right-hand side with the help of (3.31)–(3.33). Similarly, (3.34) can directly be confirmed by evaluating the matrix products on the right-hand sides of (3.31)–(3.33). Thus, the proof of (c) is complete. Let us now prove (d). We can verify (3.36) directly by evaluating the matrix product on its right-hand side with the help of (3.34), (3.37), and (3.38). In the same manner, (3.39) can be directly confirmed by evaluating the matrix products on the right-hand sides of (3.37) and (3.38) and by comparing the result with (3.26). Hence, the proof of (d) is also complete. ∎

In the next theorem we present our factorization formula corresponding to the potential fragmentation given in (1.4).

Theorem 3.3.

Consider the full-line matrix Schrödinger equation (1.1) with the potential satisfying (1.2) and (1.3). Let and denote the left and right fragments of described in (1.4) and (1.5). Let and be the transition matrices defined in (2.24), (3.24), and (3.25) corresponding to and respectively. Similarly, let and denote the transition matrices defined in (2.25), (3.26), and (3.27) corresponding to and respectively. Then, we have the following:

- (a) The transition matrix is equal to the ordered matrix product i.e. we have

  | (3.44) |

- (b) The factorization formula (3.44) can also be expressed in terms of the transition matrices and as

  | (3.45) |

Proof.

Evaluating (3.5) at we get

| (3.46) |

Using (3.30) and (3.36) on the left and right-hand sides of (3.46), respectively, we obtain

| (3.47) |

From (3.34) we see that is invertible when Thus, using (3.35) and (3.39) in (3.47), we get

or equivalently

| (3.48) |

From (3.7) we already know that and hence (3.48) yields (3.44). Thus, the proof of (a) is complete. Taking the matrix inverses of both sides of (3.44) and then making use of (3.7), we obtain (3.45). Thus, the proof of (b) is also complete. ∎

The following theorem shows that the factorization formulas (3.44) and (3.45) also hold if the potential in (1.1) is decomposed into and by choosing the fragmentation point anywhere on the real axis, not necessarily at

Theorem 3.4.

Consider the full-line matrix Schrödinger equation (1.1) with the potential satisfying (1.2) and (1.3). Let and denote the left and right fragments of described as in (1.4), but (1.5) replaced with

| (3.49) |

where is a fixed real constant. Let and appearing in (2.24), (3.24), (3.25), respectively, be the transition matrices corresponding to and respectively. Similarly, let and appearing in (2.25), (3.26), (3.27) be the transition matrices corresponding to and respectively. Then, we have the following:

- (a) The transition matrix is equal to the ordered matrix product i.e. we have

  | (3.50) |

- (b) The factorization formula (3.50) can also be expressed in terms of the transition matrices and as

  | (3.51) |

Proof.

Let us translate the potential and its fragments and as in (3.14). The shifted potentials satisfy

where we have defined

Note that the shifted potential fragments and correspond to the fragments of with the fragmentation point i.e. we have

Since the shifted potential is fragmented at we can apply Theorem 3.3 to Let us use and to denote the left and right transmission coefficients, the left and right reflection coefficients, and the transition matrices, respectively, for the shifted potentials with and In analogy with (3.24)–(3.27), for we have

From Theorem 3.3 we have

| (3.52) |

Using (3.18) and (3.19) in (3.52), after some minor simplification we get (3.50) and (3.51). ∎

The result of Theorem 3.4 can easily be extended from two fragments to any finite number of fragments. This is because any existing fragment can be decomposed into further subfragments by applying the factorization formulas (3.50) and (3.51) to each fragment and to its subfragments. Since a proof can be obtained by induction on the number of fragments, we state the result as a corollary without proof.
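The ordered-product structure can be checked numerically for piecewise-constant potentials. The following Python sketch is only an illustration under stated assumptions. The three Hermitian matrices, the fragmentation points, and the names `step`, `left_data`, and `transition` are hypothetical choices that do not come from the paper. The transition matrix is assembled here from the coefficients of the left Jost solution at the two wavenumbers and its block layout is assumed, not verified, to agree with (2.24).

```python
import numpy as np

def step(V, k, d):
    """Propagate (psi, psi') over a step d for -psi'' + V psi = k^2 psi, V constant Hermitian."""
    v, U = np.linalg.eigh(V)                      # diagonalize the constant matrix
    q = np.sqrt((k * k - v).astype(complex))      # channel wavenumbers (assumed nonzero)
    c, s = np.cos(q * d), np.sin(q * d) / q
    Uh = U.conj().T
    return np.block([[(U * c) @ Uh, (U * s) @ Uh],
                     [(U * (-q * q * s)) @ Uh, (U * c) @ Uh]])

def left_data(pieces, k):
    """Coefficients A, B of exp(ikx), exp(-ikx) in the left Jost solution left of the support."""
    n = pieces[0][2].shape[0]
    I = np.eye(n)
    # f_l = exp(ikx) I to the right of the support (assumed normalization of (2.1))
    Y = np.exp(1j * k * pieces[-1][1]) * np.vstack([I, 1j * k * I])
    for a, b, V in reversed(pieces):              # integrate from right to left
        Y = step(V, k, a - b) @ Y
    x0, f, df = pieces[0][0], Y[:n], Y[n:]
    A = 0.5 * np.exp(-1j * k * x0) * (f + df / (1j * k))   # plays the role of T_l^{-1}
    B = 0.5 * np.exp(1j * k * x0) * (f - df / (1j * k))    # plays the role of L T_l^{-1}
    return A, B

def transition(pieces, k):
    """Block matrix [[A(k), B(-k)], [B(k), A(-k)]] (layout is an assumption of this sketch)."""
    A, B = left_data(pieces, k)
    Am, Bm = left_data(pieces, -k)
    return np.block([[A, Bm], [B, Am]])

# Hypothetical 2x2 Hermitian fragments on (-1, -0.3), (-0.3, 0.8), (0.8, 2)
V1 = np.array([[2, 1j], [-1j, -1]])
V2 = np.array([[-1, 1], [1, 1]], dtype=complex)
V3 = np.array([[0.5, -2j], [2j, 0]])
fragments = [[(-1.0, -0.3, V1)], [(-0.3, 0.8, V2)], [(0.8, 2.0, V3)]]
whole = [piece for frag in fragments for piece in frag]

k = 1.3
lam_whole = transition(whole, k)
lam_product = np.eye(4, dtype=complex)
for frag in fragments:                            # left-to-right ordered product
    lam_product = lam_product @ transition(frag, k)
error = np.linalg.norm(lam_whole - lam_product)
print("norm of Lambda - Lambda_1 Lambda_2 Lambda_3:", error)
```

In this sketch each fragment is a single constant piece, but `transition` accepts any contiguous list of pieces, so a fragment can itself be subdivided further without changing the check.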

Corollary 3.5.

Consider the full-line matrix Schrödinger equation (1.1) with the matrix-valued potential satisfying (1.2) and (1.3). Let and defined in (2.9), (2.24), and (2.25), respectively, be the corresponding scattering matrix and the transition matrices, with and denoting the corresponding left and right transmission coefficients and the left and right reflection coefficients, respectively. Assume that is partitioned into fragments at the fragmentation points with as

where is any fixed positive integer and

with and Let be the corresponding left and right transmission coefficients and the left and right reflection coefficients, respectively, for the potential fragment Let and denote the scattering matrix and the transition matrices for the corresponding fragment which are defined as

Then, the transition matrices and for the whole potential are expressed as ordered matrix products of the corresponding transition matrices for the fragments as

In Theorem 3.4 the transition matrix for a potential on the full line is expressed as a matrix product of the transition matrices for the left and right potential fragments. In the next theorem, we express the scattering coefficients of a potential on the full line in terms of the scattering coefficients of the two potential fragments.

Theorem 3.6.

Consider the full-line matrix Schrödinger equation (1.1) with the potential satisfying (1.2) and (1.3). Assume that is fragmented at an arbitrary point into the two pieces and as described in (1.4) and (3.49). Let given in (2.9) be the scattering matrix for the potential and let and given in (3.23) be the scattering matrices corresponding to the potential fragments and respectively. Then, for the scattering coefficients in are related to the right scattering coefficients in and the left scattering coefficients in as

| (3.53) |

| (3.54) |

| (3.55) |

| (3.56) |

where we recall that is the identity matrix and the dagger denotes the matrix adjoint.

Proof.

From the entry in (3.50), for we have

| (3.57) |

From the entry of (2.52) we know that

| (3.58) |

Multiplying both sides of (3.58) by from the left and by from the right, we get

| (3.59) |

In (3.59), after replacing by we obtain

| (3.60) |

Next, using (3.60) and the second equality of (2.50) in (3.57), for we get

| (3.61) |

Using the third equality of (2.50) in the first term on the right-hand side of (3.61), for we have

| (3.62) |

Factoring the right-hand side of (3.62) and then taking the inverses of both sides of the resulting equation, we obtain (3.53). Let us next prove (3.54). From the entry of (3.50), for we have

| (3.63) |

In (3.60) by replacing by and using the resulting equality in (3.63), when we get

| (3.64) |

Next, using the third equality of (2.50) in the second term on the right-hand side of (3.64), for we obtain

which is equivalent to

| (3.65) |

Using the second equality of (2.50) in (3.65), we have

| (3.66) |

Then, multiplying (3.66) from the right by the respective sides of (3.53), we obtain (3.54). Let us now prove (3.55). From the third equality of (2.50) we have Thus, by taking the matrix adjoint of both sides of (3.53), then replacing by in the resulting equality, and then using the first two equalities of (2.50), we get (3.55). Let us finally prove (3.56). From the entry of (2.53), we have

| (3.67) |

Using the first and third equalities of (2.50) in (3.67), we get

| (3.68) |

Then, on the right-hand side of (3.68), we replace by the right-hand side of (3.53) and we also replace by the right-hand side of (3.66) after the substitution After simplifying the resulting modified version of (3.68), we obtain (3.56). ∎
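The composition of the scattering coefficients of two fragments can also be tested numerically. The Python sketch below is an illustration, not the authors' computation. It assumes the standard multiple-reflection form of the composition rules, written with the left transmission coefficient of the left fragment, and it separately checks the real-axis identity that converts that coefficient into the adjoint of the right transmission coefficient at the opposite wavenumber. The matrices, the fragmentation point `b`, and the names `step` and `jost_coeffs` are hypothetical, and whether these expressions coincide symbol by symbol with (3.53)–(3.56) is an assumption.

```python
import numpy as np
from numpy.linalg import inv

def step(V, k, d):
    """Propagate (psi, psi') over a step d for -psi'' + V psi = k^2 psi, V constant Hermitian."""
    v, U = np.linalg.eigh(V)
    q = np.sqrt((k * k - v).astype(complex))      # channel wavenumbers (assumed nonzero)
    c, s = np.cos(q * d), np.sin(q * d) / q
    Uh = U.conj().T
    return np.block([[(U * c) @ Uh, (U * s) @ Uh],
                     [(U * (-q * q * s)) @ Uh, (U * c) @ Uh]])

def jost_coeffs(pieces, k):
    """Return (T_l, L, T_r, R) for a contiguous piecewise-constant potential."""
    n = pieces[0][2].shape[0]
    I = np.eye(n)
    xa, xb = pieces[0][0], pieces[-1][1]
    # left Jost solution: exp(ikx) I for x >= xb, integrated back to xa
    Y = np.exp(1j * k * xb) * np.vstack([I, 1j * k * I])
    for a, b, V in reversed(pieces):
        Y = step(V, k, a - b) @ Y
    f, df = Y[:n], Y[n:]
    A = 0.5 * np.exp(-1j * k * xa) * (f + df / (1j * k))    # T_l^{-1}
    B = 0.5 * np.exp(1j * k * xa) * (f - df / (1j * k))     # L T_l^{-1}
    # right Jost solution: exp(-ikx) I for x <= xa, integrated forward to xb
    Y = np.exp(-1j * k * xa) * np.vstack([I, -1j * k * I])
    for a, b, V in pieces:
        Y = step(V, k, b - a) @ Y
    f, df = Y[:n], Y[n:]
    C = 0.5 * np.exp(1j * k * xb) * (f - df / (1j * k))     # T_r^{-1}
    D = 0.5 * np.exp(-1j * k * xb) * (f + df / (1j * k))    # R T_r^{-1}
    Tl, Tr = inv(A), inv(C)
    return Tl, B @ Tl, Tr, D @ Tr

# Hypothetical Hermitian fragments, split at an arbitrary point b
V1 = np.array([[2, 1j], [-1j, -1]])
V2 = np.array([[-1, 1 + 0.5j], [1 - 0.5j, 1]])
b = 0.4
left, right = [(-1.0, b, V1)], [(b, 1.5, V2)]
k = 0.9

Tl, L, Tr, R = jost_coeffs(left + right, k)
Tl1, L1, Tr1, R1 = jost_coeffs(left, k)
Tl2, L2, Tr2, R2 = jost_coeffs(right, k)
I2 = np.eye(2)

M = inv(I2 - R1 @ L2)                 # sums the reflections between the fragments
N = inv(I2 - L2 @ R1)
Tl_fact = Tl2 @ M @ Tl1
L_fact = L1 + Tr1 @ L2 @ M @ Tl1
Tr_fact = Tr1 @ N @ Tr2
R_fact = R2 + Tl2 @ R1 @ N @ Tr2

# real-axis identity T_l(k) = T_r(-k)^dagger, checked on the left fragment
Tr1_minus = jost_coeffs(left, -k)[2]
print(np.linalg.norm(Tl - Tl_fact), np.linalg.norm(Tl1 - Tr1_minus.conj().T))
```

The inverses `M` and `N` exist for real nonzero wavenumbers in this example because the reflection coefficients of each fragment have norm strictly less than one there.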

4 The matrix-valued scattering coefficients

The choice in (1.1) corresponds to the scalar case. In the scalar case, the potential satisfying (1.2) and (1.3) is real valued and the corresponding left and right transmission coefficients are equal to each other. However, when the matrix-valued transmission coefficients are in general not equal to each other. In the matrix case, as seen from (2.46) we have

| (4.1) |

On the other hand, from (2.22) we know that the determinants of the left and right transmission coefficients are always equal to each other for In this section, we first provide some relevant properties of the scattering coefficients for (1.1), and then we present some explicit examples demonstrating the unequivalence of the matrix-valued left and right transmission coefficients.
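Both statements, the equality of the determinants and the general inequality of the transmission coefficients themselves, can be observed numerically on a small example. The Python sketch below is a hedged illustration: the two noncommuting Hermitian matrices, the intervals, and the names `step` and `jost_coeffs` are hypothetical and are not the data of the examples given later in this section. It also checks the real-axis relation between the right transmission coefficient and the adjoint of the left one at the opposite wavenumber.

```python
import numpy as np
from numpy.linalg import inv, det, norm

def step(V, k, d):
    """Propagate (psi, psi') over a step d for -psi'' + V psi = k^2 psi, V constant Hermitian."""
    v, U = np.linalg.eigh(V)
    q = np.sqrt((k * k - v).astype(complex))      # channel wavenumbers (assumed nonzero)
    c, s = np.cos(q * d), np.sin(q * d) / q
    Uh = U.conj().T
    return np.block([[(U * c) @ Uh, (U * s) @ Uh],
                     [(U * (-q * q * s)) @ Uh, (U * c) @ Uh]])

def jost_coeffs(pieces, k):
    """Return (T_l, L, T_r, R) for a contiguous piecewise-constant potential."""
    n = pieces[0][2].shape[0]
    I = np.eye(n)
    xa, xb = pieces[0][0], pieces[-1][1]
    Y = np.exp(1j * k * xb) * np.vstack([I, 1j * k * I])     # left Jost data at xb
    for a, b, V in reversed(pieces):
        Y = step(V, k, a - b) @ Y
    f, df = Y[:n], Y[n:]
    A = 0.5 * np.exp(-1j * k * xa) * (f + df / (1j * k))     # T_l^{-1}
    B = 0.5 * np.exp(1j * k * xa) * (f - df / (1j * k))      # L T_l^{-1}
    Y = np.exp(-1j * k * xa) * np.vstack([I, -1j * k * I])   # right Jost data at xa
    for a, b, V in pieces:
        Y = step(V, k, b - a) @ Y
    f, df = Y[:n], Y[n:]
    C = 0.5 * np.exp(1j * k * xb) * (f - df / (1j * k))      # T_r^{-1}
    D = 0.5 * np.exp(-1j * k * xb) * (f + df / (1j * k))     # R T_r^{-1}
    Tl, Tr = inv(A), inv(C)
    return Tl, B @ Tl, Tr, D @ Tr

# Hypothetical selfadjoint, non-real, noncommuting fragments on (-1, 0) and (0, 1.5)
V1 = np.array([[2, 1j], [-1j, -1]])
V2 = np.array([[-1, 1 + 0.5j], [1 - 0.5j, 1]])
pot = [(-1.0, 0.0, V1), (0.0, 1.5, V2)]

k = 0.9
Tl, L, Tr, R = jost_coeffs(pot, k)
Tl_minus = jost_coeffs(pot, -k)[0]

gap = norm(Tl - Tr)                       # nonzero in this example
det_gap = abs(det(Tl) - det(Tr))          # vanishes up to roundoff
adj_gap = norm(Tr - Tl_minus.conj().T)    # vanishes up to roundoff
print(gap, det_gap, adj_gap)
```

If the two matrices are replaced by commuting ones, the problem decouples into scalar channels and the first printed number drops to roundoff level, which is consistent with the scalar case discussed above.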

The following theorem summarizes the large -asymptotics of the scattering coefficients for the full-line matrix Schrödinger equation.

Theorem 4.1.

Proof.

By solving (2.3) and (2.4) via the method of successive approximation, for each fixed we obtain the large -asymptotics of the Jost solutions as

| (4.6) |

| (4.7) |

The integral representations involving the scattering coefficients are obtained from (2.3) and (2.4) with the help of (2.5) and (2.6). As listed in (2.10)–(2.13) of [5], we have

| (4.8) |

| (4.9) |

| (4.10) |

| (4.11) |

With the help of (4.6) and (4.7), from (4.8)–(4.11) we obtain (4.2)–(4.5) in their appropriate domains. ∎

We observe that it is impossible to tell the unequivalence of and from the large -limits given in (4.2) and (4.3). However, as the next example shows, the small -asymptotics of and may be used to see their unequivalence in the matrix case with

Example 4.2.

Consider the full-line Schrödinger equation (1.1) when the potential is a matrix and fragmented as in (1.4) and (1.5), where the fragments and are compactly supported and given by

| (4.12) |

with the understanding that vanishes when and that vanishes when Thus, the support of the potential is confined to the interval Since vanishes when as seen from (2.1) the corresponding Jost solution is equal to there. The evaluation of the corresponding scattering coefficients for the potential specified in (4.12) is not trivial. That evaluation can be carried out relatively efficiently as follows. For we construct the Jost solution corresponding to the constant matrix by diagonalizing obtaining the corresponding eigenvalues and eigenvectors of and then constructing the general solution to (1.1) with the help of those eigenvalues and eigenvectors. Next, by using the continuity of and at the point we construct explicitly when Then, by using (3.42) and its derivative at we obtain the corresponding scattering coefficients and By using a similar procedure, we construct the right Jost solution corresponding to the potential as well as the corresponding scattering coefficients and Next, we construct the transmission coefficients and corresponding to the whole potential by using (3.53) and (3.55), respectively. In fact, with the help of the symbolic software Mathematica, we evaluate and explicitly in a closed form. However, the corresponding explicit expressions are extremely lengthy, and hence it is not feasible to display them here. Instead, we present the small -limits of and which show that We have

| (4.13) |

| (4.14) |

where we have defined

| (4.15) |

| (4.16) |

| (4.17) |

| (4.18) |

| (4.19) |

| (4.20) |

| (4.21) |

| (4.22) |

Note that we use an overbar on a digit to denote a roundoff on that digit. From (4.13)–(4.22) we see that and in fact we confirm (4.1) up to as

In Example 4.2, we have illustrated that the matrix-valued left and right transmission coefficients are not equal to each other in general, and that has been done when the potential is selfadjoint but not real. In order to show that the unequivalence of and is not caused by the potential being complex valued, we demonstrate that, in general, when we have even when the matrix potential in (1.1) is real valued. Suppose that, in addition to (1.2) and (1.3), the potential is real valued, i.e. we have

where we recall that we use an asterisk to denote complex conjugation. Then, from (1.1) we see that if is a solution to (1.1) then is also a solution. In particular, using (2.1) and (2.2) we observe that, when the potential is real valued, we have

| (4.23) |

Then, using (4.23), from (2.5) and (2.9) we obtain

| (4.24) |

| (4.25) |

Comparing (4.1) and (4.24), we have

| (4.26) |

and similarly, by comparing the first two equalities in (2.50) with (4.25) we get

where we use the superscript to denote the matrix transpose. We remark that, in the scalar case, since the potential in (1.1) is real valued, the equality of the left and right transmission coefficients directly follows from (4.26). Let us mention that, if the matrix potential is real valued, then (3.53)–(3.56) of Theorem 3.6 yield

| (4.27) |

| (4.28) |

In the next example, we illustrate the unequivalence of the left and right transmission coefficients when the matrix potential in (1.1) is real valued. As in the previous example, we construct and in terms of the scattering coefficients corresponding to the fragments and specified in (1.4) and (1.5), and then we check the unequivalence of the resulting expressions.

Example 4.3.

Consider the full-line Schrödinger equation (1.1) with the matrix potential consisting of the fragments and as in (1.4) and (1.5), where those fragments are real valued and given by

| (4.29) |

| (4.30) |

The parameter appearing in (4.29) is a real constant, and it is understood that the support of is the interval and the support of is The procedure to evaluate the corresponding scattering coefficients is not trivial. As described in Example 4.2, we evaluate the scattering coefficients corresponding to and by diagonalizing each of the constant matrices and and by constructing the corresponding Jost solutions and We then use (4.27) and (4.28) to obtain and As in Example 4.2 we have prepared a Mathematica notebook to evaluate and explicitly in a closed form. The resulting expressions are extremely lengthy, and hence it is not practical to display them in our paper. Because the potential is real valued in this example, in order to check if as seen from (4.26) it is sufficient to check whether the matrix is symmetric or not. We remark that it is also possible to evaluate directly by constructing the Jost solution when and then by using (2.5). However, the evaluations in that case are much more involved, and Mathematica is not capable of carrying out the computations properly unless a more powerful computer is used. This indicates the usefulness of the factorization formula in evaluating the matrix-valued scattering coefficients for the full-line matrix Schrödinger equation. For various values of the parameter by evaluating the difference at any -value we confirm that is not a symmetric matrix. At any particular -value, we have if and only if the matrix norm of is zero. Using Mathematica, we are able to plot that matrix norm as a function of in the interval for any positive We then observe that that norm is strictly positive, and hence we are able to confirm that in general we have when One other reason for us to use the parameter in (4.29) is the following. 
The value of affects the number of eigenvalues for the full-line Schrödinger operator with the specified potential and hence by using various different values of as input we can check the unequivalence of and as the number of eigenvalues changes. The eigenvalues occur at the -values on the positive imaginary axis in the complex plane where the determinant of has poles. Thus, we are able to identify the eigenvalues by locating the zeros of when Let us recall that we use an overbar on a digit to indicate a roundoff. We find that the numerically approximate value corresponds to an exceptional case, where the number of eigenvalues changes by For example, when we observe that there are no eigenvalues and that there is exactly one eigenvalue when When we have an eigenvalue at the eigenvalue shifts to when When we do not have any eigenvalues and the zero of occurs on the negative imaginary axis at We repeat our examination of the unequivalence of and by also changing other entries of the matrices and appearing in (4.29) and (4.30), respectively. For example, by letting

| (4.31) |

| (4.32) |

we evaluate using (4.27). In this case we observe that there are three eigenvalues occurring when

and we still observe that in this case. In fact, using and appearing in (4.31) and (4.32), respectively, as input, we observe that the corresponding transmission coefficients satisfy

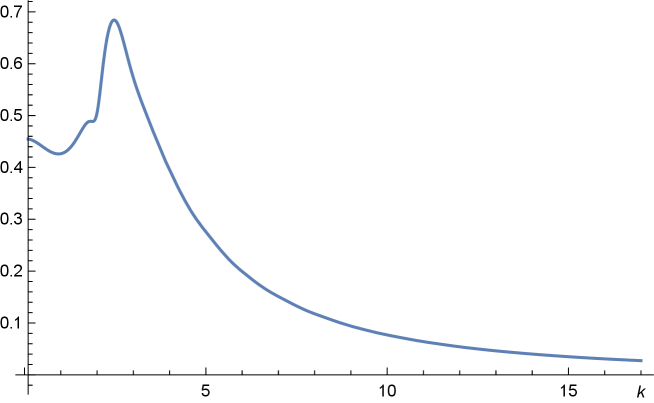

confirming that we cannot have In Figure 4.1 we present the plot of the matrix norm of as a function of which also shows that
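The symmetry test used in Example 4.3 can be reproduced in a few lines for any real piecewise-constant potential. The Python sketch below is an illustration under stated assumptions. The two real symmetric matrices and the intervals are hypothetical and are not the ones in (4.29)–(4.32), the names `step` and `transmission` are introduced only here, and the Frobenius norm is used as the matrix norm, which may differ from the norm plotted in Figure 4.1.

```python
import numpy as np
from numpy.linalg import inv, norm

def step(V, k, d):
    """Propagate (psi, psi') over a step d for -psi'' + V psi = k^2 psi, V constant symmetric."""
    v, U = np.linalg.eigh(V)
    q = np.sqrt((k * k - v).astype(complex))      # channel wavenumbers (assumed nonzero)
    c, s = np.cos(q * d), np.sin(q * d) / q
    Uh = U.conj().T
    return np.block([[(U * c) @ Uh, (U * s) @ Uh],
                     [(U * (-q * q * s)) @ Uh, (U * c) @ Uh]])

def transmission(pieces, k):
    """Return (T_l, T_r) for a contiguous piecewise-constant potential."""
    n = pieces[0][2].shape[0]
    I = np.eye(n)
    xa, xb = pieces[0][0], pieces[-1][1]
    Y = np.exp(1j * k * xb) * np.vstack([I, 1j * k * I])     # left Jost data at xb
    for a, b, V in reversed(pieces):
        Y = step(V, k, a - b) @ Y
    A = 0.5 * np.exp(-1j * k * xa) * (Y[:n] + Y[n:] / (1j * k))   # T_l^{-1}
    Y = np.exp(-1j * k * xa) * np.vstack([I, -1j * k * I])   # right Jost data at xa
    for a, b, V in pieces:
        Y = step(V, k, b - a) @ Y
    C = 0.5 * np.exp(1j * k * xb) * (Y[:n] - Y[n:] / (1j * k))    # T_r^{-1}
    return inv(A), inv(C)

# Hypothetical real symmetric, noncommuting fragments on (-1, 0) and (0, 1)
V1 = np.array([[-2.0, 1.0], [1.0, 1.0]])
V2 = np.array([[1.0, -1.0], [-1.0, -3.0]])
pot = [(-1.0, 0.0, V1), (0.0, 1.0, V2)]

# For a real potential T_r should equal the transpose of T_l
Tl, Tr = transmission(pot, 1.0)
transpose_gap = norm(Tr - Tl.T)
asymmetry_at_1 = norm(Tl - Tl.T)

# Norm of T_l - T_l^T on a grid of wavenumbers
ks = np.linspace(0.2, 5.0, 49)
asymmetry = np.array([norm(T - T.T) for T, _ in (transmission(pot, k) for k in ks)])
print(transpose_gap, asymmetry_at_1, asymmetry.min(), asymmetry.max())
```

Because only matrix exponentials of the constant pieces are needed, the cost is independent of the length of the closed-form expressions, and the same routine can be rerun with other parameter values to see how the asymmetry changes.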

5 The connection to the half-line Schrödinger operator

In this section we explore an important connection between the full-line matrix Schrödinger equation (1.1) and the half-line matrix Schrödinger equation

| (5.1) |

where and the potential is a matrix-valued function of The connection will be made by choosing the potential in terms of the fragments and appearing in (1.4) and (1.5) for the full-line potential in an appropriate way and also by supplementing (5.1) with an appropriate boundary condition. To make a distinction between the quantities relevant to the full-line Schrödinger equation (1.1) and the quantities relevant to the half-line Schrödinger equation (5.1), we use boldface to denote some of the quantities related to (5.1).

Before making the connection between the half-line and full-line Schrödinger operators, we first provide a summary of some basic relevant facts related to (5.1). Since our interest in the half-line Schrödinger operator is restricted to its connection to the full-line Schrödinger operator, we consider (5.1) when the matrix potential has size where is the positive integer related to the matrix size of the potential in (1.1). We refer the reader to [6] for the analysis of (5.1) when the size of the matrix potential is where can be chosen as any positive integer.

We now present some basic relevant facts related to (5.1) by assuming that the half-line matrix potential in (5.1) satisfies

| (5.2) |

| (5.3) |

To construct the half-line matrix Schrödinger operator related to (5.1), we supplement (5.1) with the general selfadjoint boundary condition

| (5.4) |

where and are two constant matrices satisfying

| (5.5) |

We recall that a matrix is positive (also called positive definite) when all its eigenvalues are positive.

It is possible to express the boundary conditions listed in (5.4) in an uncoupled form. We refer the reader to Section 3.4 of [6] for the explicit steps to transform from any pair of boundary matrices appearing in the general selfadjoint boundary condition described in (5.4) and (5.5) to the diagonal boundary matrix pair given by

| (5.6) |

for some appropriate real parameters It can directly be verified that the matrix pair satisfies the two equalities in (5.5) and that (5.4) is equivalent to the uncoupled system of equations given by

| (5.7) |

We remark that the case corresponds to the Dirichlet boundary condition and the case corresponds to the Neumann boundary condition. Let us use and to denote the number of Dirichlet and Neumann boundary conditions in (5.7), and let us use to denote the number of mixed boundary conditions in (5.7), where a mixed boundary condition occurs when or It is clear that we have

In Sections 3.3 and 3.5 of [6] we have constructed a selfadjoint realization of the matrix Schrödinger operator with the boundary condition described in (5.4) and (5.5), and we have used to denote it. In Section 3.6 of [6] we have shown that this selfadjoint realization with the boundary matrices and the selfadjoint realization with the boundary matrices are related to each other as

| (5.8) |

for some unitary matrix

In Section 2.4 of [6] we have established a unitary transformation between the half-line matrix Schrödinger operator and the full-line matrix Schrödinger operator by choosing the boundary matrices and in (5.4) appropriately so that the full-line potential at includes a point interaction. Using that unitary transformation, in [13] the half-line physical solution to (5.1) and the full-line physical solutions to (1.1) are related to each other, and also the corresponding half-line scattering matrix and full-line scattering matrix are related to each other. In this section of our current paper, in the absence of a point interaction, via a unitary transformation we are interested in analyzing the connection between various half-line quantities and the corresponding full-line quantities.

By proceeding as in Section 2.4 of [6], we establish our unitary operator from onto as follows. We decompose any complex-valued, square-integrable column vector with components into two pieces of column vectors and each with components, as

Then, our unitary transformation maps onto the complex-valued, square-integrable column vector with components in such a way that

We use the decomposition of the full-line matrix potential into the potential fragments and as described in (1.4) and (1.5). We choose the half-line matrix potential in terms of and by letting

| (5.9) |

The inverse transformation which is equivalent to maps onto via

Under the action of the unitary transformation the half-line Hamiltonian appearing on the left-hand side of (5.8) is unitarily transformed onto the full-line Hamiltonian where is related to as

| (5.10) |

and its domain is given by

where denotes the domain of The operator specified in (5.10) is a selfadjoint realization in of the formal differential operator where is the full-line potential appearing in (1.4) and satisfying (1.2) and (1.3).
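The gluing map between the half line and the full line is easy to mimic on a grid. The Python sketch below is a discretized illustration under stated assumptions: the upper half of a half-line vector is placed on the positive axis and the lower half is reflected onto the negative axis, and the half-line potential is taken block diagonal with the full-line potential evaluated at the point and at its reflection. Both conventions are assumed to match the displayed definitions, including (5.9), and the names `to_full_line`, `to_half_line`, and `half_line_potential` as well as the sample potential are hypothetical.

```python
import numpy as np

def to_full_line(Y_plus, Y_minus):
    """Glue half-line samples at x_j > 0 into samples on the grid -x_m, ..., -x_1, x_1, ..., x_m."""
    return np.concatenate([Y_minus[::-1], Y_plus], axis=0)

def to_half_line(phi):
    """Inverse map: split full-line samples into the two half-line components."""
    m = phi.shape[0] // 2
    return phi[m:], phi[:m][::-1]

def half_line_potential(V, x):
    """Block-diagonal 2n x 2n potential built from V(x) and V(-x), for x > 0."""
    Vp, Vm = V(x), V(-x)
    Z = np.zeros_like(Vp)
    return np.block([[Vp, Z], [Z, Vm]])

n, m, h = 2, 400, 0.025
x = (np.arange(m) + 0.5) * h                      # midpoint grid on the half line
rng = np.random.default_rng(0)
Y_plus = np.exp(-x)[:, None] * (rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n)))
Y_minus = np.exp(-x)[:, None] * (rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n)))

phi = to_full_line(Y_plus, Y_minus)
norm_half = h * (np.sum(np.abs(Y_plus) ** 2) + np.sum(np.abs(Y_minus) ** 2))
norm_full = h * np.sum(np.abs(phi) ** 2)
back_plus, back_minus = to_half_line(phi)

# Hypothetical selfadjoint full-line potential, used only to show the block structure
V = lambda t: np.exp(-t * t) * np.array([[1.0, t], [t, -1.0]])
W = half_line_potential(V, 0.7)
print(norm_half, norm_full, W.shape)
```

On the grid the map is a permutation of samples, so the discrete norms agree exactly, which mirrors the unitarity of the continuous transformation.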

The boundary condition (5.4) at of satisfied by the functions in implies that the functions in themselves satisfy a transmission condition at of the full line In order to determine that transmission condition, we express the boundary matrices and appearing in (5.4) in terms of block matrices and as

| (5.11) |

Using (5.11) in (5.4) we see that any function in satisfies the transmission condition at given by

| (5.12) |

For example, let us choose the boundary matrices and as

| (5.13) |

where we recall that is the identity matrix and denotes the zero matrix. It can directly be verified that the matrices and appearing in (5.13) satisfy the two matrix equalities in (5.5). Then, the transmission condition at of given in (5.12) is equivalent to the two conditions

which indicate that the functions and are continuous at In this case, is the standard matrix Schrödinger operator on the full line without a point interaction. In the special case when the boundary matrices and are chosen as in (5.13), as shown in Proposition 5.9 of [13], the corresponding transformed boundary matrices and in (5.6) yield precisely Dirichlet and Neumann boundary conditions.
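A pair of boundary matrices that encodes continuity of a full-line function and of its derivative at the origin can be verified directly. The Python sketch below is an illustration under loudly stated assumptions. It takes the boundary condition in the form used in [6], namely that minus the adjoint of the second boundary matrix applied to the value plus the adjoint of the first applied to the derivative vanishes, together with the two matrix conditions of that reference. The specific pair `A`, `B` is one hypothetical choice satisfying these conditions and may differ from the pair displayed in (5.13) by an equivalent normalization.

```python
import numpy as np

n = 2
I, Z = np.eye(n), np.zeros((n, n))

# Hypothetical boundary matrices encoding continuity of phi and phi' at x = 0
A = np.block([[Z, I], [Z, I]])
B = np.block([[-I, Z], [I, Z]])

# Assumed form of the selfadjointness conditions (5.5)
cond_symmetric = -B.conj().T @ A + A.conj().T @ B      # should be the zero matrix
cond_positive = A.conj().T @ A + B.conj().T @ B        # should be positive definite
min_eig = np.linalg.eigvalsh(cond_positive).min()

def boundary_residual(psi0, dpsi0):
    """Left-hand side of the assumed boundary condition -B^dagger psi(0) + A^dagger psi'(0)."""
    return -B.conj().T @ psi0 + A.conj().T @ dpsi0

# A full-line function with value c and slope d at x = 0 corresponds to the half-line
# data psi(0) = (c, c) and psi'(0) = (d, -d), since the lower component is reflected.
c = np.array([1.0 + 2.0j, -0.5])
d = np.array([0.3, 1.0j])
res_continuous = boundary_residual(np.concatenate([c, c]), np.concatenate([d, -d]))

# A jump in the value at x = 0 violates the condition
res_jump = boundary_residual(np.concatenate([c, c + 1.0]), np.concatenate([d, -d]))
print(np.linalg.norm(cond_symmetric), min_eig,
      np.linalg.norm(res_continuous), np.linalg.norm(res_jump))
```

The sign flip in the derivative of the lower component is the only place where the reflection enters, and it is what turns the half-line boundary condition into a transmission condition on the full line.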

We now introduce some relevant quantities for the half-line Schrödinger equation (5.1) with the boundary condition (5.4). We assume that the half-line potential is chosen as in (5.9), where the full-line potential satisfies (1.2) and (1.3). Hence, satisfies (5.2) and (5.3). As already mentioned, we use boldface to denote some of the half-line quantities in order to make a contrast with the corresponding full-line quantities. For example, is the half-line matrix potential whereas is the full-line matrix potential, is the half-line scattering matrix while is the full-line scattering matrix, is the half-line matrix-valued Jost solution whereas and are the full-line matrix-valued Jost solutions, is the half-line matrix-valued regular solution, the quantity denotes the half-line matrix-valued physical solution whereas and are the full-line matrix-valued physical solutions. We use for the identity matrix and use for the identity matrix. We recall that denotes the constant matrix defined in (3.10) and it should not be confused with the half-line Jost matrix

The Jost solution is the solution to (5.1) satisfying the spatial asymptotics

| (5.14) |

In terms of the boundary matrices and appearing in (5.4) and the Jost solution the Jost matrix is defined as

| (5.15) |

where is used because has [6] an analytic extension in from to and is continuous in The half-line scattering matrix is defined in terms of the Jost matrix as

| (5.16) |

When the potential satisfies (5.2) and (5.3), in the so-called exceptional case the matrix has a singularity at even though the limit of the right-hand side of (5.16) exists when Thus, is continuous in including It is known that satisfies

We refer the reader to Theorem 3.8.15 of [6] regarding the small -behavior of and

The matrix-valued physical solution to (5.1) is defined in terms of the Jost solution and the scattering matrix as

| (5.17) |

It is known [6] that has a meromorphic extension from to and it also satisfies the boundary condition (5.4), i.e. we have

| (5.18) |

There is also a particular matrix-valued solution to (5.1) satisfying the initial conditions

Because is entire in for each fixed it is usually called the regular solution. The physical solution and the regular solution are related to each other via the Jost matrix as

In the next theorem, we consider the special case where the half-line Schrödinger operator and the full-line Schrödinger operator are related to each other as in (5.10), with the potentials and being related as in (1.4) and (5.9) and with the boundary matrices and chosen as in (5.13). We show how the corresponding half-line Jost solution, half-line physical solution, half-line scattering matrix, and half-line Jost matrix are related to the appropriate full-line quantities. We remark that the results in (5.21) and (5.22) have already been proved in [13] by using a different method.

Theorem 5.1.

Consider the full-line matrix Schrödinger equation (1.1) with the matrix potential satisfying (1.2) and (1.3). Assume that the corresponding full-line Hamiltonian and the half-line Hamiltonian are unitarily connected as in (5.10) by relating the half-line matrix potential to as in (1.4) and (5.9) and by choosing the boundary matrices and as in (5.13). Then, we have the following:

- (a)

- (b)

- (c)

- (d)

Proof.

The Jost solutions and satisfy (1.1). Since appears as in (1.1), the quantities and also satisfy (1.1). Furthermore, and satisfy the respective spatial asymptotics in (2.1) and (2.2). Then, with the help of (1.5) and (5.9) we see that the half-line Jost solution given in (5.19) satisfies the half-line Schrödinger equation (5.1) and the spatial asymptotics (5.14). Thus, the proof of (a) is complete. Let us now prove (b). We evaluate (5.17) and its -derivative at the point and then we use the result in (5.18), where and are the matrices in (5.13). This yields

| (5.25) |

Using the matrices and defined in (2.18) and (3.10), respectively, we write (5.25) as

which yields

| (5.26) |

We remark that the invertibility of for is assured by Theorem 2.3(c) and that (5.26) holds also at as a consequence of the continuity of in From (3.9) we have

| (5.27) |

where we recall that is the full-line scattering matrix in (2.9) and is the constant matrix in (2.51). Using (5.27) in (5.26) we get

| (5.28) |

Using and in (5.28), we obtain (5.20). Then, we get (5.21) by using (2.9) in (5.20). Thus, the proof of (b) is complete. Let us now prove (c). Using (5.19) and (5.21) in (5.17), we obtain

| (5.29) |

The use of (3.3) and (3.4) in (5.29) yields

| (5.30) |

Then, using (2.10) on the right-hand side of (5.30), we obtain (5.22), which completes the proof of (c). We now turn to the proof of (d). In (5.15) we use (5.13), (5.19), and the -derivative of (5.19). This gives us (5.23). Finally, by postmultiplying both sides of (5.24) with the respective sides of (5.23), one can verify that In the simplification of the left-hand side of the last equality, one uses (2.42), (2.44), (2.46), and (2.47). Thus, the proof of (d) is complete. ∎

We recall that, as (5.10) indicates, the full-line and half-line Hamiltonians and respectively, are unitarily equivalent. However, as seen from (5.20), the corresponding full-line and half-line scattering matrices and respectively, are not unitarily equivalent. For an elaboration on this issue, we refer the reader to [13].

Let us also mention that it is possible to establish (5.24) without the direct verification used in the proof of Theorem 5.1. This can be accomplished as follows. Comparing (2.18) and (5.23), we see that

| (5.31) |

By taking the matrix inverse of both sides of (5.31), we obtain

| (5.32) |

With the help of (2.18), (2.51), and (3.10), we can write (2.56) as

| (5.33) |

From (5.33), for we get

| (5.34) |

Using (4.1) in the last matrix factor on the right-hand side of (5.34), we can write (5.34) as

| (5.35) |

Finally, using (5.35) on the right-hand side of (5.32), we obtain

| (5.36) |

which is equivalent to (5.24).

In the next theorem, we relate the determinant of the half-line Jost matrix to the determinant of the full-line matrix-valued transmission coefficient

Theorem 5.2.

Consider the full-line matrix Schrödinger equation (1.1) with the matrix potential satisfying (1.2) and (1.3). Assume that the corresponding full-line Hamiltonian is unitarily connected to the half-line Hamiltonian as in (5.10) by relating the half-line matrix potential to as in (1.4) and (5.9) and by choosing the boundary matrices and as in (5.13). Then, the determinant of the half-line Jost matrix defined in (5.15) is related to the determinant of the corresponding full-line matrix-valued transmission coefficient appearing in (2.5) as

| (5.37) |

Proof.

When the potential satisfies (5.2) and (5.3), from Theorems 3.11.1 and 3.11.6 of [6] we know that the zeros of in can only occur on the positive imaginary axis and the number of such zeros is finite. Assume that the full-line Hamiltonian and the half-line Hamiltonian are connected to each other through the unitary transformation as described in (5.10), where and are related as in (1.4) and (5.9) and the boundary matrices are chosen as in (5.13). We then have the following important consequence. The number of eigenvalues of coincides with the number of eigenvalues of and the multiplicities of the corresponding eigenvalues also coincide. The eigenvalues of occur at the -values on the positive imaginary axis in the complex -plane where vanishes, and the multiplicity of each of those eigenvalues is equal to the order of the corresponding zero of Thus, as seen from (5.37) the eigenvalues of occur on the positive imaginary axis in the complex -plane where has poles, and the multiplicity of each of those eigenvalues is equal to the order of the corresponding pole of Let us use to denote the number of such poles without counting their multiplicities, and let us assume that those poles occur at for We use to denote the multiplicity of the pole at The nonnegative integer corresponds to the number of distinct eigenvalues of the corresponding full-line Schrödinger operator associated with (1.1). If then there are no eigenvalues. Let us use to denote the number of eigenvalues including the multiplicities. Hence, is related to as

| (5.39) |

From (5.37) it is seen that the zeros of the determinant of the corresponding Jost matrix occur at with multiplicity for It is known [6] that is analytic in and continuous in Thus, from (5.37) we also see that cannot vanish in
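The location of the eigenvalues through the zeros of the determinant of the inverse transmission coefficient on the positive imaginary axis can be illustrated on a case where the answer is known in advance. The Python sketch below is a hedged illustration: the potential is a hypothetical constant real symmetric matrix on the unit interval with eigenvalues minus four and minus one, so that the problem decouples into two scalar square wells, each shallow enough to support exactly one bound state. The names `step` and `inv_Tl` and the grid are choices made only for this sketch, and a sign-change count is used, which would miss zeros of even order.

```python
import numpy as np

def step(V, k, d):
    """Propagate (psi, psi') over a step d for -psi'' + V psi = k^2 psi, V constant symmetric."""
    v, U = np.linalg.eigh(V)
    q = np.sqrt((k * k - v).astype(complex))      # channel wavenumbers (assumed nonzero)
    c, s = np.cos(q * d), np.sin(q * d) / q
    Uh = U.conj().T
    return np.block([[(U * c) @ Uh, (U * s) @ Uh],
                     [(U * (-q * q * s)) @ Uh, (U * c) @ Uh]])

def inv_Tl(pieces, k):
    """Inverse of the left transmission coefficient, valid also for k off the real axis
    because the potential is compactly supported."""
    n = pieces[0][2].shape[0]
    I = np.eye(n)
    Y = np.exp(1j * k * pieces[-1][1]) * np.vstack([I, 1j * k * I])
    for a, b, V in reversed(pieces):
        Y = step(V, k, a - b) @ Y
    x0 = pieces[0][0]
    return 0.5 * np.exp(-1j * k * x0) * (Y[:n] + Y[n:] / (1j * k))

# Hypothetical potential: rotated diag(-4, -1) on (0, 1)
theta = 0.6
Q = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
V = Q @ np.diag([-4.0, -1.0]) @ Q.T
pot = [(0.0, 1.0, V)]

# Scan k = i*kappa and look for sign changes of det T_l(i kappa)^{-1}, which is real here
kappas = np.linspace(0.05, 3.0, 600)
dets = np.array([np.linalg.det(inv_Tl(pot, 1j * kap)).real for kap in kappas])
crossings = np.nonzero(np.sign(dets[1:]) != np.sign(dets[:-1]))[0]
roots = 0.5 * (kappas[crossings] + kappas[crossings + 1])
print("approximate zeros on the positive imaginary axis at kappa =", roots)
```

Each located value of kappa corresponds to an eigenvalue equal to minus kappa squared, and refining the estimate with a bracketing root finder on each sign change is a natural next step.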

The unitary equivalence given in (5.10) between the half-line matrix Schrödinger operator and the full-line matrix Schrödinger operator has other important consequences. Let us comment on the connection between the half-line and full-line cases when From Corollary 3.8.16 of [6] it follows that

| (5.40) |

where is the geometric multiplicity of the zero eigenvalue of and is a nonzero constant. Furthermore, from Proposition 3.8.18 of [6] we know that coincides with the geometric and algebraic multiplicity of the eigenvalue of In fact, the nonnegative integer is related to (5.1) when i.e. related to the zero-energy Schrödinger equation given by

| (5.41) |

When the matrix potential satisfies (5.2) and (5.3), from (3.2.157) and Proposition 3.2.6 of [6] it follows that, among any fundamental set of linearly independent column-vector solutions to (5.41), exactly of them are bounded and are unbounded. In fact, the columns of the zero-energy Jost solution make up the linearly independent bounded solutions to (5.41). Certainly, not all of those column-vector solutions necessarily satisfy the selfadjoint boundary condition (5.4) with the boundary matrices and chosen as in (5.5). From Remark 3.8.10 of [6] it is known that coincides with the number of linearly independent bounded solutions to (5.41) satisfying the boundary condition (5.4) and that we have

| (5.42) |

From (5.37) and (5.40) we obtain

| (5.43) |

We have the following additional remarks. Let us consider (1.1) when i.e. let us consider the full-line zero-energy Schrödinger equation

| (5.44) |

where is the matrix potential appearing in (1.1) and satisfying (1.2) and (1.3). We recall that [4], for the full-line Schrödinger equation in the scalar case, i.e. when the generic case occurs when there are no bounded solutions to (5.44) and that the exceptional case occurs when there exists a bounded solution to (5.44). In the matrix case, let us assume that (5.44) has linearly independent bounded solutions. It is known [5] that

| (5.45) |

We can call the degree of exceptionality. From the unitary connection (5.10) it follows that the number of linearly independent bounded solutions to (5.44) is equal to the number of linearly independent bounded solutions to (5.41). Thus, we have

| (5.46) |

As a result, we can write (5.43) also as

| (5.47) |

From (5.42), (5.45), and (5.46) we conclude that

| (5.48) |

The restriction from (5.42) to (5.48), when we have the unitary equivalence (5.10) between the half-line matrix Schrödinger operator and the full-line matrix Schrödinger operator, can be made plausible as follows. As seen from (5.9), the matrix potential is a block diagonal matrix consisting of the two square matrices and for Thus, we can decompose any column-vector solution to (5.41) with components into two column vectors each having components as

| (5.49) |

Consequently, we can decompose the matrix system (5.41) into the two matrix subsystems

| (5.50) |

in such a way that the two subsystems given in the first and second lines, respectively, in (5.50) are uncoupled from each other. We now look for matrix solutions to each of the two subsystems in (5.50). Let us first solve the first subsystem in (5.50). From (3.2.157) and Proposition 3.2.6 of [6], we know that there are linearly independent bounded solutions to the first subsystem in (5.50). With the help of (5.49) and the boundary matrices and in (5.13), we see that the boundary condition (5.4) is expressed as two systems as

| (5.51) |

Thus, once we have at hand, the initial values for and are uniquely determined from (5.51). Then, we solve the second subsystem in (5.50) with the already determined initial conditions given in (5.51). Since the first subsystem in (5.50) can have at most linearly independent bounded solutions and we have uniquely determined using from the decomposition (5.49) we conclude that we can have at most linearly independent bounded column-vector solutions to the system (5.41).

In (3.13) we have expressed the determinant of the scattering matrix for the full-line Schrödinger equation (1.1) in terms of the determinant of the left transmission coefficient When the full-line Hamiltonian and the half-line Hamiltonian are related to each other unitarily as in (5.10), in the next theorem we relate the determinant of the corresponding half-line scattering matrix to

Theorem 5.3.

Consider the full-line matrix Schrödinger equation (1.1) with the matrix potential satisfying (1.2) and (1.3). Assume that the corresponding full-line Hamiltonian and the half-line Hamiltonian are unitarily connected as in (5.10) by relating the half-line matrix potential to as in (1.4) and (5.9) and by choosing the boundary matrices and as in (5.13). Then, the determinant of the half-line scattering matrix defined in (5.16) is related to the determinant of the full-line scattering matrix defined in (2.9) as

| (5.52) |

and hence we have

| (5.53) |

where we recall that is the matrix-valued left transmission coefficient appearing in (2.5).

Proof.

The scattering matrices and are related to each other as in (5.20), where is the constant matrix defined in (2.51). By interchanging the first and second row blocks in we get the identity matrix, and hence we have Thus, by taking the determinant of both sides of (5.20), we obtain (5.52). Then, using (3.13) on the right-hand side of (5.52), we get (5.53). ∎

6 Levinson’s theorem

The main goal in this section is to prove Levinson’s theorem for the full-line matrix Schrödinger equation (1.1). In the scalar case, we recall that Levinson’s theorem connects the continuous spectrum and the discrete spectrum to each other, and it relates the number of discrete eigenvalues including the multiplicities to the change in the phase of the scattering matrix when the independent variable changes from to In the matrix case, in Levinson’s theorem one needs to use the phase of the determinant of the scattering matrix. In order to prove Levinson’s theorem for (1.1), we exploit the unitary transformation given in (5.10) relating the half-line and full-line matrix Schrödinger operators and we use Levinson’s theorem presented in Theorem 3.12.3 of [6] for the half-line matrix Schrödinger operator. We also provide an independent proof with the help of the argument principle applied to the determinant of the matrix-valued left transmission coefficient appearing in (2.5).

In preparation for the proof of Levinson’s theorem for (1.1), in the next theorem we relate the large -asymptotics of the half-line scattering matrix to the full-line matrix potential in (1.1). Recall that the half-line potential is related to the full-line potential as in (5.9), where and are the fragments of appearing in (1.4) and (1.5). We also recall that the boundary matrices and appearing in (5.13) are used in the boundary condition (5.4) in the formation of the half-line scattering matrix

Theorem 6.1.

Consider the full-line matrix Schrödinger equation (1.1) with the matrix potential satisfying (1.2) and (1.3). Assume that the corresponding full-line Hamiltonian and the half-line Hamiltonian are unitarily connected by relating the half-line matrix potential to as in (1.4) and (5.9) and by choosing the boundary matrices and as in (5.13). Then, the half-line scattering matrix in (5.16) has the large -asymptotics given by

| (6.1) |

where we have

| (6.2) |

with being the constant matrix defined in (2.51).

Proof.

Remark 6.2.

We have the following comments related to the first equality in (6.2). One can directly evaluate the eigenvalues of the matrix defined in (2.51) and confirm that it has the eigenvalue with multiplicity and has the eigenvalue with multiplicity By Theorem 3.10.6 of [6] we know that the number of Dirichlet boundary conditions associated with the boundary matrices and in (5.13) is equal to the (algebraic and geometric) multiplicity of the eigenvalue of the matrix and that the number of non-Dirichlet boundary conditions is equal to the (algebraic and geometric) multiplicity of the eigenvalue of the matrix Hence, the first equality in (6.2) implies that the boundary matrices and in (5.13) correspond to Dirichlet and non-Dirichlet boundary conditions. In fact, it is already proved in Proposition 5.9 of [13] with a different method that the number of Dirichlet boundary conditions is equal to and that the aforementioned non-Dirichlet boundary conditions are actually all Neumann boundary conditions.
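The eigenvalue count in Remark 6.2, and the determinant of the constant matrix used in the proof of Theorem 5.3, can be checked with a short computation. The following Python sketch assumes that the constant matrix in (2.51) is the block matrix with zero diagonal blocks and identity off-diagonal blocks, which is consistent with the statement in the proof of Theorem 5.3 that interchanging its two row blocks yields the identity matrix. The function names are hypothetical and are introduced only for this illustration.

```python
def block_swap_matrix(n):
    """Assumed form of the constant matrix in (2.51): [[0, I], [I, 0]] with n x n blocks."""
    size = 2 * n
    return [[1 if j == (i + n) % size else 0 for j in range(size)]
            for i in range(size)]


def matmul(a, b):
    """Product of two square integer matrices given as lists of rows."""
    size = len(a)
    return [[sum(a[i][m] * b[m][j] for m in range(size)) for j in range(size)]
            for i in range(size)]


def eigenvalue_multiplicities(q):
    """Return (multiplicity of +1, multiplicity of -1) for a symmetric involution q.

    A symmetric matrix with q*q = I is diagonalizable with eigenvalues +1 and -1 only,
    so the two multiplicities add up to the size and their difference is the trace.
    """
    size = len(q)
    identity = [[1 if i == j else 0 for j in range(size)] for i in range(size)]
    assert matmul(q, q) == identity, "q must satisfy q*q = I"
    assert all(q[i][j] == q[j][i] for i in range(size) for j in range(size))
    trace = sum(q[i][i] for i in range(size))
    return (size + trace) // 2, (size - trace) // 2


def determinant_from_multiplicities(minus_count):
    """The determinant is the product of the eigenvalues, i.e. (-1)**minus_count."""
    return (-1) ** minus_count


if __name__ == "__main__":
    for n in range(1, 5):
        plus, minus = eigenvalue_multiplicities(block_swap_matrix(n))
        print(n, plus, minus, determinant_from_multiplicities(minus))
```

Under the stated assumption, each of the two eigenvalues has multiplicity equal to half the size of the matrix, and the determinant alternates in sign with the block size.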

Next, we provide a review of some relevant facts on the small -asymptotics related to the full-line matrix Schrödinger equation (1.1) with the matrix potential satisfying (1.2) and (1.3). We refer the reader to [5] for an elaborate analysis including the proofs and further details.

Let us consider the full-line matrix-valued zero-energy Schrödinger equation given in (5.44) when the matrix potential satisfies (1.2) and (1.3). There are two matrix-valued solutions to (5.44) whose columns form a fundamental set. We use to denote one of those two matrix-valued solutions, where satisfies the asymptotic conditions

Thus, all the columns of correspond to unbounded solutions to (5.44). The other matrix-valued solution to (5.44) is given by where is the left Jost solution to (1.1) appearing in (2.1). As seen from (2.1), the function satisfies the asymptotics

and hence remains bounded as On the other hand, some or all the columns of may be unbounded as In fact, we have [5]

where is the constant matrix defined as

| (6.3) |

with the limit taken from within

From Section 5, we recall that the degree of exceptionality, denoted by is defined as the number of linearly independent bounded column-vector solutions to (5.44), and we know that satisfies (5.45). From (5.45) we see that we have the purely generic case for (1.1) when and we have the purely exceptional case when Hence, corresponds to the degree of genericity for (1.1). Since the value of is uniquely determined by only the potential in (1.1), we can also say that the matrix potential is exceptional with degree

We can characterize the value of in various different ways. For example, corresponds to the geometric multiplicity of the zero eigenvalue of the matrix defined in (6.3). The value of is equal to the nullity of the matrix It is also equal to the number of linearly independent bounded columns of the zero-energy left Jost solution Thus, the remaining columns of are all unbounded solutions to (5.44). Hence, (5.44) has linearly independent unbounded column-vector solutions and it has linearly independent bounded column-vector solutions.

The degree of exceptionality can also be related to the zero-energy right Jost solution to (5.44). As we see from (2.2), the function satisfies the asymptotics

| (6.4) |

and hence remains bounded as On the other hand, we have

| (6.5) |

where is the constant matrix defined as

| (6.6) |

with the limit taken from within From (4.1) it follows that the matrices and satisfy

| (6.7) |

With the help of (6.4)–(6.7) we observe that the degree of exceptionality is equal to the geometric multiplicity of the zero eigenvalue of the matrix to the nullity of and also to the number of linearly independent bounded columns of

One may ask whether the degree of exceptionality for the full-line potential can be determined from the degrees of exceptionality for its fragments. The answer is no unless all the fragments are purely exceptional, in which case the full-line potential must also be exceptional. This answer can be obtained by first considering the scalar case and then by generalizing it to the matrix case by arguing with a diagonal matrix potential. We refer the reader to [3] for an explicit example in the scalar case, where it is demonstrated that the potential may be generic or exceptional if at least one of the fragments is generic. In the next example, we illustrate this fact with a different potential.

Example 6.3.

We recall that, in the full-line scalar case, if the potential in (1.1) is real valued and satisfies (1.3) then generically the transmission coefficient vanishes linearly as and we have in the exceptional case. In the generic case we have and in the exceptional case we have where we use and for the left and right reflection coefficients. Hence, from (3.51) we observe that, in the scalar case, if the two fragments of a full-line potential are both exceptional then the potential itself must be exceptional. By using induction, the result can be proved to hold also in the case where the number of fragments is arbitrary. In this example, we demonstrate that if at least one of the fragments is generic, then the potential itself can be exceptional or generic. In the full-line scalar case, let us consider the square-well potential of width and depth where is a positive parameter and is a nonnegative parameter. Without any loss of generality, because the transmission coefficient is not affected by shifting the potential, we can assume that the potential is given by

| (6.8) |

The transmission coefficient corresponding to the scalar potential in (6.8) is given by

| (6.9) |

where we have defined

From (6.9) we obtain

| (6.10) |

Hence, (6.10) implies that the exceptional case occurs if and only if is an integer multiple of in which case does not vanish at Thus, the potential in (6.8) is exceptional if and only if the depth of the square-well potential is related to the width as

| (6.11) |

for some nonnegative integer For simplicity, let us use and choose our potential fragments and as

where is a parameter in so that the potential in (6.8) is given by

| (6.12) |

We remark that the zero potential is exceptional. With the help of (6.11), we conclude that is exceptional if and only if there exists a nonnegative integer satisfying

| (6.13) |

that is exceptional if and only if there exists a nonnegative integer satisfying

| (6.14) |

and that is exceptional if and only if there exists a nonnegative integer satisfying

| (6.15) |

In fact, (6.15) happens if and only if From (6.13)–(6.15) we have the following conclusions:

(a) If and are both exceptional, then is also exceptional. This follows from the restriction that if and are both nonnegative integers then must also be a nonnegative integer. As argued earlier, if and are both exceptional then cannot be generic.

(b) If the nonnegative integer satisfying (6.13) exists but the nonnegative integer satisfying (6.14) does not exist, then the nonnegative integer satisfying (6.15) cannot exist because of the restriction Thus, we can conclude that if is exceptional then and are either simultaneously exceptional or simultaneously generic. Similarly, we can conclude that if is exceptional then and are either simultaneously exceptional or simultaneously generic.

(c) If both fragments are generic, then the potential can be generic or exceptional. This can be seen easily by arguing that in the equation it may happen that is a nonnegative integer or not a nonnegative integer even though neither nor are nonnegative integers.
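The three conclusions above can be explored numerically. The following Python sketch uses the standard textbook transmission coefficient of an attractive square well, which is assumed here to agree with (6.9) up to notation. The parameter names `depth`, `width`, and `eps`, as well as the function names, are hypothetical. The well of width `width` is split into a left fragment of width `eps * width` and a right fragment of width `(1 - eps) * width`, each of the same depth, and a potential is classified as exceptional when the sine of the square root of the depth times the width vanishes, i.e. when the transmission coefficient does not vanish at zero energy.

```python
import cmath
import math


def transmission(k, depth, width):
    """Transmission coefficient at wavenumber k > 0 for V(x) = -depth on (0, width), zero elsewhere."""
    q = math.sqrt(k * k + depth)  # wavenumber inside the well
    denominator = math.cos(q * width) - 1j * (k * k + q * q) / (2 * k * q) * math.sin(q * width)
    return cmath.exp(-1j * k * width) / denominator


def is_exceptional(depth, width, tol=1e-9):
    """Exceptional when sqrt(depth) * width is an integer multiple of pi (within tol)."""
    return abs(math.sin(math.sqrt(depth) * width)) < tol


def classify(depth, width, eps):
    """Return the exceptionality flags (left fragment, right fragment, full potential)."""
    return (
        is_exceptional(depth, eps * width),
        is_exceptional(depth, (1 - eps) * width),
        is_exceptional(depth, width),
    )


if __name__ == "__main__":
    # Case (a): both fragments exceptional, so the full potential is exceptional.
    print(classify((2 * math.pi) ** 2, 1.0, 0.5))
    # Case (b): exactly one fragment exceptional, so the full potential is generic.
    print(classify((2.5 * math.pi) ** 2, 1.0, 0.4))
    # Case (c): both fragments generic, full potential exceptional or generic.
    print(classify(math.pi ** 2, 1.0, 0.5))
    print(classify(1.0, 1.0, 0.5))
    # The modulus of the transmission coefficient near zero energy reflects the classification.
    print(abs(transmission(1e-6, math.pi ** 2, 1.0)), abs(transmission(1e-6, 1.0, 1.0)))
```

The classification relies on a numerical tolerance, so it is only a sanity check of the integer conditions (6.13)–(6.15) rather than a proof. The near-zero-energy modulus of `transmission` gives an independent indication of the generic or exceptional behavior.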