Factor Analysis, Probabilistic Principal Component Analysis,

Variational Inference, and Variational Autoencoder: Tutorial and Survey

Abstract

This is a tutorial and survey paper on factor analysis, probabilistic Principal Component Analysis (PCA), variational inference, and Variational Autoencoder (VAE). These methods, which are tightly related, are dimensionality reduction and generative models. They assume that every data point is generated from or caused by a low-dimensional latent factor. By learning the parameters of distribution of latent space, the corresponding low-dimensional factors are found for the sake of dimensionality reduction. For their stochastic and generative behaviour, these models can also be used for generation of new data points in the data space. In this paper, we first start with variational inference where we derive the Evidence Lower Bound (ELBO) and Expectation Maximization (EM) for learning the parameters. Then, we introduce factor analysis, derive its joint and marginal distributions, and work out its EM steps. Probabilistic PCA is then explained, as a special case of factor analysis, and its closed-form solutions are derived. Finally, VAE is explained where the encoder, decoder and sampling from the latent space are introduced. Training VAE using both EM and backpropagation are explained.

*\AtPageUpperLeft

1 Introduction

Learning models can be divided into discriminative and generative models (Ng & Jordan, 2002; Bouchard & Triggs, 2004). Discriminative models discriminate the classes of data for better separation of classes while the generative models learn a latent space which generates the data points. The methods introduced in this paper are generative models.

Variational inference is a technique which finds a lower bound on the log-likelihood of data and maximizes this lower bound rather than the log-likelihood in the Maximum Likelihood Estimation (MLE). This lower bound is usually referred to as the Evidence Lower Bound (ELBO). Learning the parameters of latent space can be done using Expectation Maximization (EM) (Bishop, 2006). Variational Autoencoder (VAE) (Kingma & Welling, 2014) implements the variational inference in an autoencoder neural network setup where the encoder and decoder model the E-step and M-step of EM, respectively. Although, VAE is usually trained using backprogatation, in practice (Rezende et al., 2014; Hou et al., 2017). Variational inference and VAE have had many applications in Bayesian analysis; for example, see the application of variational inference in 3D human motion analysis (Sminchisescu & Jepson, 2004) and the application of VAE in forecasting (Walker et al., 2016).

Factor analysis assumes that every data point is generated from a latent factor/variable where some noise may have been added to data in the data space. Using the EM introduced in variational inference, the ELBO is maximized and the parameters of the latent space are learned iteratively. Probabilistic PCA (PPCA), as a special case of factor analysis, restricts the noise of dimensions to be uncorrelated and assumes the variance of noise to be equal in all dimensions. This restriction makes the solution of PPCA closed-form and simpler.

In this paper, we explain the theory and details of factor analysis, PPCA, variational inference, and VAE. The remainder of this paper is organized as follows. Section 2 introduces variational inference. We explain factor analysis and PPCA in Sections 3 and 4, respectively. VAE is explained in Section 5. Finally, Section 6 concludes the paper.

Required Background for the Reader

This paper assumes that the reader has general knowledge of calculus, probability, linear algebra, and basics of optimization.

2 Variational Inference

Consider a dataset . Assume that every data point is generated from a latent variable . This latent variable has a prior distribution . According to Bayes’ rule, we have:

| (1) |

Let be an arbitrary distribution denoted by . Suppose the parameter of conditional distribution of on is denoted by ; hence, . Therefore, we can say:

| (2) |

2.1 Evidence Lower Bound (ELBO)

Consider the Kullback-Leibler (KL) divergence (Kullback & Leibler, 1951) between the prior probability of the latent variable and the posterior of the latent variable:

where is for definition of KL divergence and is because is independent of and comes out of integral and . Hence:

| (3) | ||||

We define the Evidence Lower Bound (ELBO) as:

| (4) |

So:

Therefore:

| (5) |

As the second term is negative with its minus, the ELBO is a lower bound on the log likelihood of data:

| (6) |

The likelihood is also referred to as the evidence. Note that this lower bound gets tight when:

| (7) |

This lower bound is depicted in Fig. 1.

2.2 Expectation Maximization

2.2.1 Background on Expectation Maximization

This part is taken from our previous tutorial paper (Ghojogh et al., 2019a). Sometimes, the data are not fully observable. For example, the data are known to be whether zero or greater than zero. In this case, Maximum Likelihood Expectation (MLE) cannot be directly applied as we do not have access to complete information and some data are missing. In this case, Expectation Maximization (EM) is useful. The main idea of EM can be summarized in this short friendly conversation:

– What shall we do? Some data are missing! The log-likelihood is not known completely so MLE cannot be used.

– Hmm, probably we can replace the missing data with something…

– Aha! Let us replace it with its mean.

– You are right! We can take the mean of log-likelihood over the possible values of the missing data. Then everything in the log-likelihood will be known, and then…

– And then we can do MLE!

EM consists of two steps which are the E-step and the M-step. In the E-step, the expectation of log-likelihood with respect to the missing data is calculated, in order to have a mean estimation of it. In the M-step, the MLE approach is used where the log-likelihood is replaced with its expectation. These two steps are iteratively repeated until convergence of the estimated parameters.

2.2.2 Expectation Maximization in Variational Inference

According to MLE, we want to maximize the log-likelihood of data. According to Eq. (6), maximizing the ELBO will also maximize the log-likelihood. The Eq. (6) holds for any prior distribution . We want to find the best distribution to maximize the lower bound. Hence, EM for variational inference is performed iteratively as:

| (8) | |||

| (9) |

where denotes the iteration index.

E-step in EM for Variational Inference: The E-step is:

The second term is always non-negative; hence, its minimum is zero:

which was already found in Eq. (7). Thus, the E-step assigns:

| (10) |

In other words, in Fig. 1, it pushes the middle line toward the above line by maximizing the ELBO.

M-step in EM for Variational Inference: The M-step is:

where is for definition of KL divergence. The second term is constant w.r.t. . Hence:

where is because of definition of expectation. Thus, the M-step assigns:

| (11) |

In other words, in Fig. 1, it pushes the above line higher. The E-step and M-step together somehow play a game where the E-step tries to reach the middle line (or the ELBO) to the log-likelihood and the M-step tries to increase the above line (or the log-likelihood). This procedure is done repeatedly so the two steps help each other improve to higher values.

To summarize, the EM in variational inference is:

| (12) | |||

| (13) |

It is noteworthy that, in variational inference, sometimes, the parameter is absorbed into the latent variable . According to the chain rule, we have:

Considering the term as one probability term, we have:

where the parameter disappears because of absorption.

3 Factor Analysis

3.1 Background on Marginal Multivariate Gaussian Distribution

Consider two random variables and and let . Assume that and are jointly multivariate Gaussian; hence, the variable has a multivariate Gaussian distribution, i.e., . The mean and covariance can be decomposed as:

| (14) | |||

| (15) |

where , , , , , and .

It can be shown that the marginal distributions for and are Gaussian distributions where and (Ng, 2018). The covariance matrix of the joint distribution can be simplified as (Ng, 2018):

| (16) |

This shows that the marginal distributions are:

| (17) | |||

| (18) |

According to the definition of the multivariate Gaussian distribution, the conditional distribution is also a Gaussian distribution, i.e., where (Ng, 2018):

| (19) | |||

| (20) |

and likewise for :

| (21) | |||

| (22) |

3.2 Main Idea of Factor Analysis

Factor analysis (Fruchter, 1954; Cattell, 1965; Harman, 1976; Child, 1990) is one of the simplest and most fundamental generative models. Although its theoretical derivations are a little complicated but its main idea is very simple. Factor analysis assumes that every data point is generated from a latent variable . The latent variable is also referred to as the latent factor; hence, the name of factor analysis comes from the fact that it analyzes the latent factors.

In factor analysis, we assume that the data point is obtained through the following steps: (1) by linear projection of the -dimensional onto a -dimensional space by projection matrix , then (2) applying some linear translation, and finally (3) adding a Gaussian noise with covariance matrix . Note that as the noises in different dimensions are independent, the covariance matrix is diagonal. Factor analysis can be illustrated as a graphical model (Ghahramani & Hinton, 1996) where the visible data variable is conditioned on the latent variable and the noise random variable.. Figure 2 shows this graphical model.

3.3 The Factor Analysis Model

For simplicity, the prior distribution of the latent variable can be assumed to be a multivariate Gaussian distribution:

| (23) |

where and are the mean and the covariance matrix of and is the determinant of matrix. As was explain in Section 3.2, is obtained through (1) the linear projection of by , (2) applying some linear translation, and (3) adding a Gaussian noise with covariance . Hence, the data point has a conditional multivariate Gaussian distribution given the latent variable; its conditional likelihood is:

| (24) |

where , which is the translation vector, is the mean of data :

| (25) |

The marginal distribution of is:

| (26) | |||

| (27) |

where , , and is because mean is linear and variance is quadratic so the mean and variance of projection are applied linearly and quadratically, respectively.

As the mean and covariance are needed to be learned, we can absorb and into and and assume that and .

In summary, factor analysis assumes every data point is obtained by projecting a latent variable onto a -dimensional space by projection matrix and translating it by and finally adding some Gaussian noise (whose dimensions are independent) as:

| (28) | |||

| (29) | |||

| (30) |

3.4 The Joint and Marginal Distributions in Factor Analysis

The joint distribution of and is:

| (31) |

The expectation of is:

| (32) |

where is because of Eqs. (29) and (30). Hence:

| (33) |

where is because of Eqs. (29) and (32). Consider Eq. (15). According to Eq. (29), we have . According to Eq. (28), we have:

| (34) |

where is because of Eqs. (29) and (30). Moreover, we have:

| (35) |

where is because of Eqs. (28) and (29) and is because and are independent. We also have . Therefore:

| (36) |

Hence, the marginal distribution of data point is:

| (37) |

According to Eqs. (21) and (22), the posterior or the conditional distribution of latent variable given data is:

| (38) | ||||

where:

| (39) | |||

| (40) |

Recall that the conditional distribution of data given the latent variable, i.e. , was introduced in Eq. (24).

3.5 Expectation Maximization in Factor Analysis

3.5.1 Maximization of Joint Likelihood

In factor analysis, the parameter of variational inference is the two parameters and . As we have in Eq. (13), consider the maximization of joint likelihood, which reduces to the likelihood of data, over all data points:

| (43) |

where is because of the chain rule , and is because the second term is zero because of zero mean of prior of (see Eq. (29)).

3.5.2 The E-Step in EM for Factor Analysis

3.5.3 The M-Step in EM for Factor Analysis

We have two variables and so we solve the maximization w.r.t. these variables.

Finding parameter :

where is because of the cyclic property of trace. Setting this derivative to zero gives us the optimum :

| (46) |

Finding parameter : Now, consider maximization w.r.t . We restate Eq. (43) as (Paola Garcia, 2018):

| (47) |

where is a sample covariance matrix defined as:

| (48) |

The maximization results in (Paola Garcia, 2018):

Note that as the dimensions of noise are independent, the covariance matrix of noise, , is a diagonal matrix. Hence:

| (49) | ||||

3.5.4 Summary of Factor Analysis Algorithm

According to the derived Eqs. (44), (45), (46), and (49), the EM algorithm in factor analysis is summarized as follows. The mean of data, , is computed. Then, for every data point , we iteratively solve as:

Note that if data are centered as a pre-processing to factor analysis, i.e. , the algorithm of factor analysis is simplified as:

As it can be seen, factor analysis does not have a closed-form solution and its solution, which are the projection matrix and the noise covariance matrix , are found iteratively until convergence.

4 Probabilistic Principal Component Analysis

4.1 Main Idea of Probabilistic PCA

Probabilistic PCA (PPCA) (Roweis, 1997; Tipping & Bishop, 1999b) is a special case of factor analysis where the variance of noise is equal in all dimensions of data space with covariance between dimensions, i.e.:

| (50) |

In other words, PPCA considers an isotropic noise in its formulation. Therefore, Eq. (30) is simplified to:

| (51) |

Because of having zero covariance of noise between different dimensions, PPCA assumes that the data points are independent of each other given latent variables. As depicted in Figure 3, PPCA can be illustrated as a graphical model, where the visible data variable is conditioned on the latent variable and the isotropic noise random variable.

4.2 MLE for Probabilistic PCA

As PPCA is a special case of factor analysis, it also is solved using EM. Similar to factor analysis, it can be solved iteratively using EM (Roweis, 1997). However, one can also find a closed-form solution to its EM approach (Tipping & Bishop, 1999b). Hence, by restricting the noise covariance to be isotropic, its solution becomes simpler and closed-form. The iterative approach is as we had in factor analysis. Here, we derive the closed-form solution.

Consider the likelihood or the marginal distribution of data points which is Eq. (37). The log-likelihood of data is:

where is the sample covariance matrix of data:

| (52) |

We use MLE where the variables of maximization optimization are the projection matrix and the noise variance :

| (53) |

It is noteworthy that literature usually defines:

| (54) | |||

| (55) |

According to the matrix inversion lemma, we have:

| (56) |

This inversion is interesting because the inverse of a matrix is reduced to inversion of a matrix which is much simpler because we usually have .

4.2.1 MLE for Determining

Taking the derivative of Eq. (53) w.r.t. and setting it to zero is:

whose trivial solutions are and which are not valid. For the non-trivial solution, consider the Singular Value Decomposition (SVD) where and contain the left and right singular vectors, respectively, and is the diagonal matrix containing the singular values denoted by . Moreover, note that because and are orthogonal matrices.

From the previous calculations, we have (Hauskrecht, 2007):

| (57) |

which is an eigenvalue problem (Ghojogh et al., 2019b) for the covariance matrix where the columns of are the eigenvectors of and the eigenvalues are . Recall that is the variance of noise in different dimensions and is the -th singular value of (sorted from largest to smallest). We denote the -th eigenvalue of the covariance matrix by:

| (58) |

We consider only the top singular values and the top eigenvalues ; substituting the singular values in the SVD of the projection matrix results in:

where . However, as Eq. (57) does not include any , we can replace with any arbitrary orthogonal matrix (Tipping & Bishop, 1999b):

| (59) |

The arbitrary orthogonal matrix is a rotation matrix which rotates data in projection. It is arbitrary because rotation is not important in dimensionality reduction (the relative distances of embedded data points do not change if all embedded points are rotated). A simple choice for this rotation matrix is which results in:

| (60) |

4.2.2 MLE for Determining

4.2.3 Summary of MLE Formulas

4.3 Zero Noise Limit: PCA Is a Special Case of Probabilistic PCA

Recall the posterior which is Eq. (38). According to Eqs. (39), (40), and (50), the posterior in PPCA is:

| (65) | ||||

Consider zero noise limit where the variance of noise goes to zero:

| (66) |

In this case, the uncertainty of PPCA almost disappears. In the following, we show that in zero noise limit, PPCA is reduced to PCA (Ghojogh & Crowley, 2019; Jolliffe & Cadima, 2016) and this explains why the PPCA method is a probabilistic approach to PCA.

In the zero noise limit, the posterior becomes:

| (67) | ||||

where is because according to (Roweis, 1997, footnote 4), we have:

| (68) |

On the other hand, according to (Tipping & Bishop, 1999b, Appendix C), PCA minimizes the reconstruction error as:

| (69) |

where denotes the Frobenius norm of matrix. See (Ghojogh & Crowley, 2019) for more details on minimization of reconstruction error by PCA.

Instead of minimizing the reconstruction error, one may minimize the reconstruction error for the mean of posterior (Tipping & Bishop, 1999b, Appendix C):

| (70) |

This is the minimization of reconstruction error after projection by . According to the posterior in the zero noise limit (see Eq. (67)), this is equivalent to PPCA in the zero noise limit model. Hence, PCA is a deterministic special case of PPCA where the variance of noise goes to zero.

4.4 Other Variants of Probabilistic PCA

There exist some other variants of PPCA. We briefly mention them here. PPCA has a hyperparameter which is the dimensionality of latent space . Bayesian PCA (Bishop, 1999) models this hyperparameter as another latent random variable which is learned during the EM training.

According to Eqs. (28), (29), and (51), note that PPCA uses Gaussian distributions. PPCA with Student-t distribution (Zhao & Jiang, 2006) has been proposed which uses t distributions. This change may improve the embedding of PPCA because of the heavier tails of Student-t distribution compared to Gaussian distribution. This avoids the crowding problem which has motivated the proposal of t-SNE (Ghojogh et al., 2020a).

Sparse PPCA (Guan & Dy, 2009; Mattei et al., 2016) has inserted sparsity into PPCA. Supervised PPCA (Yu et al., 2006) makes use of class labels in the formulation of PPCA. Mixture of PPCA (Tipping & Bishop, 1999a) uses mixture of distributions in the formulation PPCA. It trains the parameters of a mixture distribution using EM (Ghojogh et al., 2019a). Generalized PPCA for correlated data is another recent variant of PPCA (Gu & Shen, 2020).

5 Variational Autoencoder

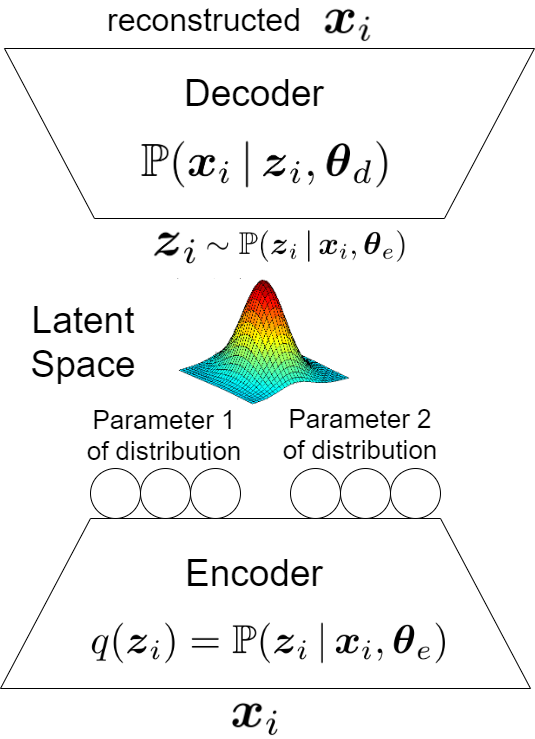

Variational Autoencoder (VAE) (Kingma & Welling, 2014) applies variational inference, i.e., maximizes the ELBO, but in an autoencoder setup and makes it differentiable for the backpropagation training (Rumelhart et al., 1986). As Fig. 4 shows, VAE includes an encoder and a decoder, each of which can have several network layers. A latent space is learned between the encoder and decoder. The latent variable is sampled from the latent space. In the following, we explain the encoder and decoder parts. The input of encoder in VAE is the data point and the output of decoder in VAE is its reconstruction .

5.1 Parts of Variational Autoencoder

5.1.1 Encoder of Variational Autoencoder

The encoder of VAE models the distribution where the parameters of distribution are the weights of encoder layers in VAE. The input and output of encoder are and , respectively. As Fig. 4 depicts, the output neurons of encoder are supposed to determine the parameters of the conditional distribution . If this conditional distribution has number of parameters, we have sets of output neurons from the encoder, denoted by . The dimensionality of these sets may differ depending on the size of the parameters.

For example, let the latent space be -dimensional, i.e., . If the distribution is a multivariate Gaussian distribution, we have two sets of output neurons for encoder where one set has neurons for the mean of this distribution and the other set has neurons for the covariance of this distribution . If the covariance matrix is diagonal, the second set has neurons rather than neurons. In this case, we have . Any distribution with any number of parameters can be chosen for but the multivariate Gaussian with diagonal covariance is very well-used:

| (71) |

Let the network weights for the output sets of encoder, , be denoted by . As the input of encoder is , the -th output set of encoder can be written as . In the case of multivariate Gaussian distribution for the latent space, the parameters are and .

5.1.2 Sampling the Latent Variable

When the data point is fed as input to the encoder, the parameters of the conditional distribution are obtained; hence, the distribution of latent space, which is , is determined corresponding to the data point . Now, in the latent space, we sample the corresponding latent variable from the distribution of latent space:

| (72) |

This latent variable is fed as input to the decoder which is explained in the following.

5.1.3 Decoder of Variational Autoencoder

As Fig. 4 shows, the decoder of VAE models the conditional distribution where are the weights of decoder layers in VAE. The input and output of decoder are and , respectively. The output neurons of decoder are supposed to either generate the reconstructed data point or determine the parameters of the conditional distribution ; the former is more common. In the latter case, if this conditional distribution has number of parameters, we have sets of output neurons from the decoder, denoted by . The dimensionality of these sets may differ depending the size of every parameters. The example of multivariate Gaussian distribution also can be mentioned for the decoder. Let the network weights for the output sets of decoder, , be denoted by . As the input of decoder is , the -th output set of decoder can be written as .

5.2 Training Variational Autoencoder with Expectation Maximization

We use EM for training the VAE. Recall Eqs. (8) and (9) for EM in variational inference. Inspired by that, VAE uses EM for training where the ELBO is a function of encoder weights , decoder weights , and data point :

| (73) | |||

| (74) |

We can simplify this iterative optimization algorithm by alternating optimization (Jain & Kar, 2017) where we take a step of gradient ascent optimization in every iteration. We consider mini-batch stochastic gradient ascent and take training data in batches where denotes the mini-batch size. Hence, the optimization is:

| (75) | |||

| (76) |

where and are the learning rates for and , respectively.

The ELBO is simplified as:

| (77) |

Note that the parameter of is because is generated after the encoder and before the decoder.

There are different ways for approximating the KL divergence in Eq. (77) (Hershey & Olsen, 2007; Duchi, 2007). We can simplify the ELBO in at least two different ways which are explained in the following.

5.2.1 Simplification Type 1

We continue the simplification of ELBO:

| (78) |

This expectation can be approximated using Monte Carlo approximation (Ghojogh et al., 2020b) where we draw samples , corresponding to the -th data point, from the conditional distribution distribution as:

| (79) |

Monte Carlo approximation (Ghojogh et al., 2020b), in general, approximates expectation as:

| (80) |

where is a function of . Here, the approximation is:

| (81) |

5.2.2 Simplification Type 2

We can simplify the ELBO using another approach:

| (82) |

The second term in the above equation can be estimated using Monte Carlo approximation (Ghojogh et al., 2020b) where we draw samples from :

| (83) |

The first term in the above equation can be converted to expectation and then computed using Monte Monte Carlo approximation (Ghojogh et al., 2020b) again, where we draw samples from :

| (84) |

5.2.3 Simplification Type 2 for Special Case of Gaussian Distributions

We can compute the KL divergence in the first term of Eq. (83) analytically for univariate or multivariate Gaussian distributions. For this, we need two following lemmas.

Lemma 1.

The KL divergence between two univariate Gaussian distributions and is:

| (85) |

Proof.

See Appendix A for proof. ∎

Lemma 2.

The KL divergence between two multivariate Gaussian distributions and with dimensionality is:

| (86) |

Proof.

See (Duchi, 2007, Section 9) for proof. ∎

5.2.4 Training Variational Autoencoder with Approximations

5.2.5 Prior Regularization

Some works regularize the ELBO, Eq. (77), with a penalty on the prior distribution . Using this, we guide the learned distribution of latent space to have a specific prior distribution . Some examples for prior regularization in VAE are geodesic priors (Hadjeres et al., 2017) and optimal priors (Takahashi et al., 2019). Note that this regularization can inject domain knowledge to the latent space. It can also be useful for making the latent space more interpretable.

5.3 The Reparametrization Trick

Sampling the samples for the latent variables, i.e. Eq. (72), blocks the gradient flow because computing the derivatives through by chain rule gives a high variance estimate of gradient. In order to overcome this problem, we use the reparameterization technique (Kingma & Welling, 2014; Rezende et al., 2014; Titsias & Lázaro-Gredilla, 2014). In this technique, instead of sampling , we assume is a random variable but is a deterministic function of another random variable as follows:

| (92) |

where is a stochastic variable sampled from a distribution as:

| (93) |

The Eqs. (78) and 82 both contain an expectation of a function . Using this technique, this expectation is replaced as:

| (94) |

Using the reparameterization technique, the encoder, which implemented , is replaced by where in the latent space between encoder and decoder, we have and .

A simple example for the reparameterization technique is when and are univariate Gaussian variables:

For some more advanced reparameterization techniques, the reader can refer to (Figurnov et al., 2018).

5.4 Training Variational Autoencoder with Backpropagation

In practice, VAE is trained by backpropagation (Rezende et al., 2014) where the backpropagation algorithm (Rumelhart et al., 1986) is used for training the weights of network. Recall that in training VAE with EM, the encoder and decoder are trained separately using the E-step and the M-step of EM, respectively. However, in training VAE with backpropagation, the whole network is trained together and not in separate steps. Suppose the whole weights if VAE are denoted by . Backpropagation trains VAE using the mini-batch stochastic gradient descent with the negative ELBO, , as the loss function:

| (95) |

where is the learning rate. Note that we are minimizing here because neural networks usually minimize the loss function.

5.5 The Test Phase in Variational Autoencoder

In the test phase, we feed the test data point to the encoder to determine the parameters of the conditional distribution of latent space, i.e., . Then, from this distribution, we sample the latent variable from the latent space and generate the corresponding reconstructed data point by the decoder. As you see, VAE is a generative model which generates data points (Ng & Jordan, 2002).

5.6 Other Notes and Other Variants of Variational Autoencoder

There exist many improvements on VAE. Here, we briefly review some of these works. One of the problems of VAE is generating blurry images when data points are images. This blurry artifact may be because of several following reasons:

-

•

sampling for the Monte Carlo approximations

-

•

lower bound approximation by ELBO

-

•

restrictions on the family of distributions where usually simple Gaussin distributions are used.

There are some other interpretations for the reason of this problem; for example, see (Zhao et al., 2017). This work also proposed a generalized ELBO. Note that generative adversarial networks (Goodfellow et al., 2014) usually generate clearer images; therefore, some works have combined variational and adversarial inferences (Mescheder et al., 2017) for using the advantages of both models.

Variational discriminant analysis (Yu et al., 2020) has also been proposed for classification and discrimination of classes. Two other tutorials on VAE are (Doersch, 2016) and (Odaibo, 2019). Some more recently published papers on VAE are nearly optimal VAE (Bai et al., 2020), deep VAE (Hou et al., 2017), Hamiltonian VAE (Caterini et al., 2018), and Nouveau VAE (Vahdat & Kautz, 2020) which is a hierarchical VAE. For image data and image and caption modeling, a fusion of VAE and convolutional neural network is also proposed (Pu et al., 2016). The influential factors in VAE are also analyzed in the paper (Liu et al., 2020).

6 Conclusion

This paper was a tutorial and survey on several dimensionality reduction and generative model which are tightly related. Factor analysis, probabilistic PCA, variational inference, and variational autoencoder are covered in this paper. All of these methods assume that every data point is generated from a latent variable or factor where some noise have also been applied on data in the data space.

Acknowledgement

The authors hugely thank the instructors of deep learning course at the Carnegie Mellon University (you can see their YouTube channel) whose lectures partly covered some materials mentioned in this tutorial paper.

Appendix A Proof for Lemma 1

According to integration by parts, we have:

We also have:

We know that:

Hence:

Therefore, finally, we have:

Q.E.D.

References

- Bai et al. (2020) Bai, Jincheng, Song, Qifan, and Cheng, Guang. Nearly optimal variational inference for high dimensional regression with shrinkage priors. arXiv preprint arXiv:2010.12887, 2020.

- Bishop (1999) Bishop, Christopher M. Bayesian PCA. In Advances in neural information processing systems, pp. 382–388, 1999.

- Bishop (2006) Bishop, Christopher M. Pattern recognition and machine learning. Springer, 2006.

- Bouchard & Triggs (2004) Bouchard, Guillaume and Triggs, Bill. The tradeoff between generative and discriminative classifiers. In 16th IASC International Symposium on Computational Statistics, 2004.

- Caterini et al. (2018) Caterini, Anthony L, Doucet, Arnaud, and Sejdinovic, Dino. Hamiltonian variational auto-encoder. Advances in Neural Information Processing Systems, 31:8167–8177, 2018.

- Cattell (1965) Cattell, Raymond B. A biometrics invited paper. factor analysis: An introduction to essentials i. the purpose and underlying models. Biometrics, 21(1):190–215, 1965.

- Child (1990) Child, Dennis. The essentials of factor analysis. Cassell Educational, 1990.

- Doersch (2016) Doersch, Carl. Tutorial on variational autoencoders. arXiv preprint arXiv:1606.05908, 2016.

- Duchi (2007) Duchi, John. Derivations for linear algebra and optimization. Technical report, Berkeley, California, 2007.

- Figurnov et al. (2018) Figurnov, Mikhail, Mohamed, Shakir, and Mnih, Andriy. Implicit reparameterization gradients. Advances in Neural Information Processing Systems, 31:441–452, 2018.

- Fruchter (1954) Fruchter, Benjamin. Introduction to factor analysis. Van Nostrand, 1954.

- Ghahramani & Hinton (1996) Ghahramani, Zoubin and Hinton, Geoffrey E. The EM algorithm for mixtures of factor analyzers. Technical report, Technical Report CRG-TR-96-1, University of Toronto, 1996.

- Ghojogh & Crowley (2019) Ghojogh, Benyamin and Crowley, Mark. Unsupervised and supervised principal component analysis: Tutorial. arXiv preprint arXiv:1906.03148, 2019.

- Ghojogh et al. (2019a) Ghojogh, Benyamin, Ghojogh, Aydin, Crowley, Mark, and Karray, Fakhri. Fitting a mixture distribution to data: tutorial. arXiv preprint arXiv:1901.06708, 2019a.

- Ghojogh et al. (2019b) Ghojogh, Benyamin, Karray, Fakhri, and Crowley, Mark. Eigenvalue and generalized eigenvalue problems: Tutorial. arXiv preprint arXiv:1903.11240, 2019b.

- Ghojogh et al. (2020a) Ghojogh, Benyamin, Ghodsi, Ali, Karray, Fakhri, and Crowley, Mark. Stochastic neighbor embedding with Gaussian and Student-t distributions: Tutorial and survey. arXiv preprint arXiv:2009.10301, 2020a.

- Ghojogh et al. (2020b) Ghojogh, Benyamin, Nekoei, Hadi, Ghojogh, Aydin, Karray, Fakhri, and Crowley, Mark. Sampling algorithms, from survey sampling to Monte Carlo methods: Tutorial and literature review. arXiv preprint arXiv:2011.00901, 2020b.

- Goodfellow et al. (2014) Goodfellow, Ian, Pouget-Abadie, Jean, Mirza, Mehdi, Xu, Bing, Warde-Farley, David, Ozair, Sherjil, Courville, Aaron, and Bengio, Yoshua. Generative adversarial nets. In Advances in neural information processing systems, pp. 2672–2680, 2014.

- Gu & Shen (2020) Gu, Mengyang and Shen, Weining. Generalized probabilistic principal component analysis of correlated data. Journal of Machine Learning Research, 21(13):1–41, 2020.

- Guan & Dy (2009) Guan, Yue and Dy, Jennifer. Sparse probabilistic principal component analysis. In Artificial Intelligence and Statistics, pp. 185–192, 2009.

- Hadjeres et al. (2017) Hadjeres, Gaëtan, Nielsen, Frank, and Pachet, François. GLSR-VAE: Geodesic latent space regularization for variational autoencoder architectures. In 2017 IEEE Symposium Series on Computational Intelligence, pp. 1–7. IEEE, 2017.

- Harman (1976) Harman, Harry H. Modern factor analysis. University of Chicago press, 1976.

- Hauskrecht (2007) Hauskrecht, Milos. CS3750 lecture notes for probabilistic principal component analysis and the E-M algorithm. Technical report, University of Pittsburgh, 2007.

- Hershey & Olsen (2007) Hershey, John R and Olsen, Peder A. Approximating the Kullback Leibler divergence between Gaussian mixture models. In 2007 IEEE International Conference on Acoustics, Speech and Signal Processing, volume 4, pp. IV–317. IEEE, 2007.

- Hou et al. (2017) Hou, Xianxu, Shen, Linlin, Sun, Ke, and Qiu, Guoping. Deep feature consistent variational autoencoder. In 2017 IEEE Winter Conference on Applications of Computer Vision, pp. 1133–1141. IEEE, 2017.

- Jain & Kar (2017) Jain, Prateek and Kar, Purushottam. Non-convex optimization for machine learning. Foundations and Trends® in Machine Learning, 10(3-4):142–336, 2017.

- Jolliffe & Cadima (2016) Jolliffe, Ian T and Cadima, Jorge. Principal component analysis: a review and recent developments. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 374(2065), 2016.

- Kingma & Welling (2014) Kingma, Diederik P and Welling, Max. Auto-encoding variational Bayes. In International Conference on Learning Representations, 2014.

- Kullback & Leibler (1951) Kullback, Solomon and Leibler, Richard A. On information and sufficiency. The annals of mathematical statistics, 22(1):79–86, 1951.

- Liu et al. (2020) Liu, Shiqi, Liu, Jingxin, Zhao, Qian, Cao, Xiangyong, Li, Huibin, Meng, Deyu, Meng, Hongying, and Liu, Sheng. Discovering influential factors in variational autoencoders. Pattern Recognition, 100:107166, 2020.

- Mattei et al. (2016) Mattei, Pierre-Alexandre, Bouveyron, Charles, and Latouche, Pierre. Globally sparse probabilistic pca. In Artificial Intelligence and Statistics, pp. 976–984, 2016.

- Mescheder et al. (2017) Mescheder, Lars, Nowozin, Sebastian, and Geiger, Andreas. Adversarial variational bayes: Unifying variational autoencoders and generative adversarial networks. In International Conference on Machine Learning, 2017.

- Ng (2018) Ng, Andrew. CS229 lecture notes for factor analysis. Technical report, Stanford University, 2018.

- Ng & Jordan (2002) Ng, Andrew Y and Jordan, Michael I. On discriminative vs. generative classifiers: A comparison of logistic regression and naive Bayes. In Advances in neural information processing systems, pp. 841–848, 2002.

- Odaibo (2019) Odaibo, Stephen. Tutorial: Deriving the standard variational autoencoder (VAE) loss function. arXiv preprint arXiv:1907.08956, 2019.

- Paola Garcia (2018) Paola Garcia, Leibny. Lecture notes for factor analysis. Technical report, Carnegie Mellon University, 2018.

- Pu et al. (2016) Pu, Yunchen, Gan, Zhe, Henao, Ricardo, Yuan, Xin, Li, Chunyuan, Stevens, Andrew, and Carin, Lawrence. Variational autoencoder for deep learning of images, labels and captions. In Advances in neural information processing systems, pp. 2352–2360, 2016.

- Rezende et al. (2014) Rezende, Danilo Jimenez, Mohamed, Shakir, and Wierstra, Daan. Stochastic backpropagation and approximate inference in deep generative models. In International Conference on Machine Learning, 2014.

- Roweis (1997) Roweis, Sam. EM algorithms for PCA and SPCA. Advances in neural information processing systems, 10:626–632, 1997.

- Rumelhart et al. (1986) Rumelhart, David E, Hinton, Geoffrey E, and Williams, Ronald J. Learning representations by back-propagating errors. Nature, 323(6088):533–536, 1986.

- Sminchisescu & Jepson (2004) Sminchisescu, Cristian and Jepson, Allan. Generative modeling for continuous non-linearly embedded visual inference. In Proceedings of the twenty-first international conference on Machine learning, pp. 96, 2004.

- Takahashi et al. (2019) Takahashi, Hiroshi, Iwata, Tomoharu, Yamanaka, Yuki, Yamada, Masanori, and Yagi, Satoshi. Variational autoencoder with implicit optimal priors. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, pp. 5066–5073, 2019.

- Tipping & Bishop (1999a) Tipping, Michael E and Bishop, Christopher M. Mixtures of probabilistic principal component analyzers. Neural computation, 11(2):443–482, 1999a.

- Tipping & Bishop (1999b) Tipping, Michael E and Bishop, Christopher M. Probabilistic principal component analysis. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 61(3):611–622, 1999b.

- Titsias & Lázaro-Gredilla (2014) Titsias, Michalis and Lázaro-Gredilla, Miguel. Doubly stochastic variational Bayes for non-conjugate inference. In International conference on machine learning, pp. 1971–1979, 2014.

- Vahdat & Kautz (2020) Vahdat, Arash and Kautz, Jan. NVAE: A deep hierarchical variational autoencoder. Advances in Neural Information Processing Systems, 33, 2020.

- Walker et al. (2016) Walker, Jacob, Doersch, Carl, Gupta, Abhinav, and Hebert, Martial. An uncertain future: Forecasting from static images using variational autoencoders. In European Conference on Computer Vision, pp. 835–851. Springer, 2016.

- Yu et al. (2006) Yu, Shipeng, Yu, Kai, Tresp, Volker, Kriegel, Hans-Peter, and Wu, Mingrui. Supervised probabilistic principal component analysis. In Proceedings of the 12th ACM SIGKDD international conference on Knowledge discovery and data mining, pp. 464–473, 2006.

- Yu et al. (2020) Yu, Weichang, Azizi, Lamiae, and Ormerod, John T. Variational nonparametric discriminant analysis. Computational Statistics & Data Analysis, 142:106817, 2020.

- Zhao & Jiang (2006) Zhao, J and Jiang, Q. Probabilistic PCA for t distributions. Neurocomputing, 69(16-18):2217–2226, 2006.

- Zhao et al. (2017) Zhao, Shengjia, Song, Jiaming, and Ermon, Stefano. Towards deeper understanding of variational autoencoding models. arXiv preprint arXiv:1702.08658, 2017.