Facilitating Self-Guided Mental Health Interventions Through Human-Language Model Interaction: A Case Study of Cognitive Restructuring

Abstract.

Self-guided mental health interventions, such as “do-it-yourself” tools to learn and practice coping strategies, show great promise to improve access to mental health care. However, these interventions are often cognitively demanding and emotionally triggering, creating accessibility barriers that limit their wide-scale implementation and adoption. In this paper, we study how human-language model interaction can support self-guided mental health interventions. We take cognitive restructuring, an evidence-based therapeutic technique to overcome negative thinking, as a case study. In an IRB-approved randomized field study on a large mental health website with 15,531 participants, we design and evaluate a system that uses language models to support people through various steps of cognitive restructuring. Our findings reveal that our system positively impacts emotional intensity for 67% of participants and helps 65% overcome negative thoughts. Although adolescents report relatively worse outcomes, we find that tailored interventions that simplify language model generations improve overall effectiveness and equity.

1. Introduction

As mental health conditions surge worldwide, healthcare systems are struggling to provide accessible mental health care for all (Organization et al., 2022; Olfson, 2016; Sickel et al., 2014). Self-guided mental health interventions, such as tools to journal and reflect on negative thoughts, offer great promise to expand modes of care and help people learn coping strategies (Schleider et al., 2020; Patel et al., 2020; Schleider et al., 2022; Shkel et al., 2023). While not a replacement for formal psychotherapy, these interventions provide immediate on-demand access to resources that can help develop techniques for mental well-being, especially for those who lack access to a trained professional, are on waiting lists, or seek to supplement therapy with other forms of care (Adair et al., 2005).

However, developing interventions that individuals can effectively use without the assistance of a professional therapist is challenging (Garrido et al., 2019). Currently, most self-guided interventions simply transform traditional manual therapeutic worksheets into digital online formats with limited instructions and support (Shkel et al., 2023). Using these worksheets without professional support often leads to cognitively demanding and emotionally triggering experiences, limiting engagement and usage (Garrido et al., 2019; Baumel et al., 2019; Fleming et al., 2018; Torous et al., 2020). For example, a popular self-guided intervention involves independently practicing Cognitive Restructuring of Negative Thoughts, an evidence-based, well-established process that helps people notice and change their negative thinking patterns (Beck, 1976; Burns, 1980). However, the practice includes complex steps like identifying thinking traps (faulty or distorted patterns of thinking like “all-or-nothing thinking”), which pose a significant challenge for many (Beck, 1976; Burns, 1980). Most individuals lack the necessary knowledge or experience to successfully use such interventions independently without explicit training and support. Moreover, analyzing one’s own thoughts, emotions, and behavioral patterns can be emotionally triggering, especially for those actively experiencing distress. Such accessibility barriers inhibit the widespread adoption of self-guided mental health interventions.

Language models may be able to assist individuals in engaging with self-guided mental health interventions to improve intervention accessibility and effectiveness. Specifically, language models can help individuals learn and practice cognitively demanding tasks (e.g., through automatic suggestions on potential thinking traps in a thought). Moreover, the language model support could help users manage emotionally triggering thoughts and potentially enable a reduction in the emotional intensity of negative thoughts.

Previous work exploring self-guided mental health interventions based on human-language model interaction has predominantly been limited to small-scale (Ly et al., 2017) and wizard-of-oz-style research (Smith et al., 2021; Morris et al., 2015; Kornfield et al., 2023; Kumar et al., 2023). These studies were conducted in controlled lab settings and evaluated on online crowdworker platforms, such as MTurk, which may not accurately represent the people who actively seek mental health care or use such an intervention (Mohr et al., 2017). Much less is known about intervention effectiveness in ecologically valid settings with individuals experiencing mental health challenges and seeking care. This limits our understanding of end-user preferences within these emerging forms of intervention (Mohr et al., 2017; Blandford et al., 2018; Borghouts et al., 2021; Poole, 2013). Furthermore, language models can exhibit biases resulting in highly varied performance across people from diverse demographics and populations (Caliskan et al., 2017; Blodgett et al., 2020; Lin et al., 2022). There is a need to investigate and improve the equity of language modeling-based interventions.

In this paper, we study supporting self-guided mental health interventions with human-language model interaction. Specifically, we take Cognitive Restructuring, an evidence-based self-guided intervention, as a case study. Cognitive Restructuring helps individuals to recognize when they are getting stuck in distorted patterns of thinking (identifying thinking traps) and to come up with new ways to think about their situation (writing reframed thoughts).

We design and evaluate a human-language model interaction based tool for cognitive restructuring. We conduct an ecologically valid and large-scale randomized field study on Mental Health America (screening.mhanational.org; MHA; a popular website that hosts mental health tools and resources) with over 15,000 participants (Section 3). We examine the design of our tool, investigate its impact on people seeking mental health care, and evaluate and improve its equity across key subpopulations. Specifically, we address the following research questions:

RQ1 – Design. How can we design a self-guided cognitive restructuring intervention that is supported through human-language model interaction?

RQ2a – Overall Effectiveness. To what extent does human-language model interaction based cognitive restructuring help individuals in alleviating negative emotions and overcoming negative thoughts?

RQ2b – Impact of Design Hypotheses. What is the impact of individual design hypotheses on the intervention effectiveness?

RQ3 – Equity. How equitable is the intervention and what strategies may improve equity?

To address these research questions, we first formulate the design hypotheses for this intervention through qualitative feedback from participants of early prototypes of the system and brainstorming with mental health professionals (Section 4.1). We hypothesize that assisting users in cognitively and emotionally challenging processes, contextualizing thought reframes by reflecting on situations and emotions, integrating psychoeducation, facilitating interactive refinement of reframes, and ensuring safety will result in a more effective human-language model interaction system for cognitive restructuring. Taking these hypotheses into consideration, we design a new system that uses a language model to support people through various steps of cognitive restructuring, including the identification of thinking traps in thoughts and the reframing of negative thoughts. Our language model suggests thinking traps that a given thought may exhibit, as well as possible ways of reframing the negative thought (Section 4.2).

After systematic ethical and safety considerations, active collaboration with mental health professionals, advocates, and clinical psychologists, and IRB review and approval, we deploy this system on MHA. We utilize a mixed-methods study design, combining quantitative and qualitative feedback, to assess the outcomes of this system on the platform visitors. We find that 67.64% of participants experience a positive shift (i.e., reduction) in their emotion intensity and 65.65% report helpfulness in overcoming their negative thoughts through the use of our system. Moreover, participants indicate that the system assists them in alleviating cognitive barriers by simplifying task complexity and emotional barriers by providing a less triggering experience (Section 5). Also, enabling participants to iteratively improve reframes by seeking additional reframing suggestions leads to a 23.73% greater reduction in the intensity of negative emotions. Moreover, participants who choose to make their reframes actionable report superior outcomes compared to those who make them empathic or personalized (Section 6).

To address the needs of individuals from diverse demographics and subpopulations, it is critical to develop equitable solutions. Here, we evaluate the performance of our system across people of different demographics and subpopulations (Section 7). The intervention is found to be more effective for individuals identifying as females, older adults, individuals with higher education levels, and those struggling with issues, such as parenting and work. However, it is found to be less effective for individuals identifying as males, adolescents, those with lower education levels, and those struggling with issues such as hopelessness or loneliness. We investigate the potential benefits of customizing the intervention for adolescents, who we found to have one of the largest disparities in our intervention outcomes. We find that making the language model-generated suggestions simpler and more casual leads to a 14.44% increase in reframe helpfulness for adolescents in the age group of 13 to 14.

We discuss the implications of our study for the use of human-language model interaction in the development of self-guided mental health interventions, emphasizing the need for effective personalization, appropriate levels of interactivity with the language model, equity across subpopulations, and safety (Section 8).

To summarize, our contributions are as follows:

(1) Design of a novel health and wellness technology for human-language model interaction based self-guided mental health intervention.

• We design and evaluate a new system that uses language models to help people through cognitive restructuring of negative thoughts, a core, well-established intervention in Cognitive Behavioral Therapy (Section 4).

• We formulate design hypotheses/decisions that researchers developing self-guided interventions must consider to increase efficacy and ensure safety (Section 4).

(2) Large-scale, randomized, empirical studies in an ecologically informed setting to understand how people with lived experience of mental health interact with this technology and key takeaways for researchers developing self-guided interventions that leverage human-language model interaction.

• There are opportunities to assist users in cognitively challenging and emotionally triggering psychological processes: Effective self-guided mental health interventions could rapidly increase access to care. However, there are many steps in such interventions that pose cognitive and emotional challenges, leading to well-documented, high rates of participant dropout. Our work shows how the use of human-language model interaction can help address these challenges and help people use these interventions more effectively (Section 5).

• Certain types of suggestions are most effective: Our study suggests that users are more likely to seek more actionable suggestions and are more likely to find actionable suggestions more helpful (Section 6).

• Human-language model interaction systems are not necessarily equitable, but it is possible to adapt them to individual subgroups: Our work shows that human-language model interaction systems are likely to have heterogeneous effects across key subpopulations that require further adapting the intervention (Section 7).

2. Related Work

Our work builds upon previous research on digital mental health interventions (Section 2.1), AI for mental health (Section 2.2), and the design of human-language model interaction systems (Section 2.3).

2.1. Digital Mental Health Interventions

The critical gap between the overwhelming need for and limited access to mental health care has prompted clinicians, technologists, and advocates to develop digital interventions that provide accessible care for all. Several efforts have concentrated on facilitating digital, text-based supportive conversations through peer-to-peer support networks, such as TalkLife (talklife.com) and Supportiv (supportiv.com), as well as through on-demand talk therapy platforms like Talkspace (talkspace.com), BetterHelp (betterhelp.com), and Sanvello (sanvello.com). Researchers have conducted randomized controlled trials to study the efficacy of these interventions compared to traditional methods of care, such as in-person counseling and worksheet-based skill practices (Hull and Mahan, 2017; Song et al., 2023; Moberg et al., 2019).

Another key focus in this pursuit has been the development of self-guided mental health interventions (Schleider et al., 2020; Patel et al., 2020; Schleider et al., 2022; Shkel et al., 2023). These interventions are designed in various forms, such as “Do-It-Yourself” apps to improve mental health “in-the-moment” of crisis, self-help tools for learning and practicing therapeutic skills, and more. Popular examples include self-guided meditation such as Headspace (headspace.com) or Calm (calm.com). Researchers have also explored the design of apps to track mood changes (Schueller et al., 2021), emotion regulation (Smith et al., 2022b) and to combat loneliness (Boucher et al., 2021). Kruzan et al. (Kruzan et al., 2022b) studied the process of online self-screening of mental illnesses and its role in help-seeking. Howe et al. (Howe et al., 2022) designed and evaluated a workplace stress-reduction intervention system and found that high-effort interventions reduced the most stress. Also, Cognitive Behavioral Therapy (CBT) is an evidence-based, well-established psychological treatment (Beck, 1976). Researchers have designed digital self-guided interventions that streamline elements of CBT like cognitive restructuring of negative thoughts by transforming traditional manual worksheets into digital online formats (Rennick-Egglestone et al., 2016; Shkel et al., 2023). Other work has focused on digital mental health interventions based on Dialectical Behavioral Therapy (DBT) (Schroeder et al., 2018, 2020).

Our work extends this literature by investigating how to design and evaluate self-guided digital mental health interventions that leverage human-language model interaction. We take CBT-based cognitive restructuring as a case study and design and evaluate a system that assists individuals in restructuring of negative thoughts through language models in a large-scale randomized field study.

2.2. AI for Mental Health

Our work is related to the growing field of research in Artificial Intelligence (AI) and Natural Language Processing (NLP) for mental health and wellbeing.

Prior work has proposed machine learning-based methods to measure key mental health constructs including adaptability and efficacy of counselors (Pérez-Rosas et al., 2019), personalized vs templated counseling language (Althoff et al., 2016), psychological perspective change (Althoff et al., 2016; Wadden et al., 2021), staying on topic (Wadden et al., 2021), therapeutic actions (Lee et al., 2019), empathy (Sharma et al., 2020b), moments of change (Pruksachatkun et al., 2019), counseling strategies (Pérez-Rosas et al., 2022; Shah et al., 2022), and conversational engagement (Sharma et al., 2020a). Research has also been conducted on building virtual assistants and chatbots for behavioral health (Darcy et al., 2021; Nicol et al., 2022; Sinha et al., 2022) and counseling (Srivastava et al., 2023). Further, researchers have designed AI-based systems to assist mental health support providers. Tanana et al. (Tanana et al., 2019) proposed a machine learning system for training counselors. Sharma et al. (Sharma et al., 2021, 2023a) developed a GPT-2 and reinforcement learning-based system for providing empathy-focused feedback to untrained online peer supporters. Our work informs how to support self-guided mental health interventions using AI-based systems.

Prior computational work on cognitive restructuring has often relied on small-scale, wizard-of-oz style studies in controlled lab settings (Smith et al., 2021; Morris et al., 2015). Further, Sharma et al. (Sharma et al., 2023b) and Ziems et al. (Ziems et al., 2022) have developed language models for automating cognitive reframing and positive reframing respectively. Sharma et al. (Sharma et al., 2023b) also investigate what constitutes a “high-quality” reframe and find that people prefer highly empathic or specific reframes, as opposed to reframes that are overly positive. Our work expands on these studies by investigating the design of a system that supports people through various steps of cognitive restructuring of negative thoughts. We evaluate this intervention in an ecologically valid setting with individuals experiencing mental health challenges on a large mental health platform. In addition, through randomized controlled trials, we assess the impact of key design hypotheses associated with this intervention including personalizing the intervention to the participant, facilitating iterative interactivity with the language model, and pursuing equity. Building on Sharma et al. (Sharma et al., 2023b), we also develop mechanisms that allow people to actively seek specific types of reframes that are likely to be helpful (e.g., actionable) and assess its effects on different restructuring outcomes.

In their human-centered study, Kornfield et al. (Kornfield et al., 2022) sought to understand the adoption of automated text messaging tools. The study revealed that participants were interested in making the tools more personalized, favored varying levels of engagement, and wanted to explore a broad range of concepts and experiences. The design of our self-guided intervention builds on these findings, aiming to facilitate personalization through the situations and emotions of participants, to support iterative engagement with the language model, and to improve the equity of the intervention across different participant issues and demographics.

Prior work has also studied mental health bias in language models. Lin et al. (Lin et al., 2022) investigated gendered mental health stigma present in masked language models and showed that models captured social stereotypes, such as the perception that men are less likely to seek treatment for mental illnesses. We study the disparities of our intervention’s effectiveness among individuals from diverse demographics and facing different issues. We also propose a way of improving the interventions for a key subpopulation.

2.3. Design of Human-Language Model Interaction Systems

Broadly, our work relates to the design of human-language model interaction systems that facilitate an interactive setting in which humans can effectively engage with language models to accomplish real-world tasks (Amershi et al., 2019; Lee et al., 2022). Examples include systems for creative writing (Clark et al., 2018), programming (e.g., Copilot (github.com/features/copilot)), and brainstorming ideas (e.g., Jasper (jasper.ai)). Our work studies how such human-language model interaction can support self-guided mental health interventions.

3. Study Overview

Our study was conducted over a nine-month process involving iterative ideation, prototyping, and evaluation in collaboration with mental health experts (some of whom are co-authors).

3.1. A Case Study of Cognitive Restructuring

Cognitive Restructuring is a well-established therapeutic technique that fosters awareness of and methods for changing negative thinking patterns (Beck, 1976; Beck et al., 2015). Cognitive Restructuring has been proven to be an effective treatment strategy for psychological disorders, especially anxiety and depression (Clark, 2013). It is a process that is central to Cognitive Behavioral Therapy (Beck, 1976), a modality of treatment which has been demonstrated to be as effective as, or more effective than, other forms of psychological therapy or psychiatric medications (Hofmann et al., 2012; Butler et al., 2006).

A participant initiates this process by writing the negative thought they are struggling with. Next, they try to identify any potential thinking traps in their thought. Thinking traps, alternatively known as cognitive distortions, are biased or irrational patterns of thinking that lead individuals to perceive reality inaccurately (Beck, 1976; Ding et al., 2022). These typically manifest as exaggerated thoughts, such as making assumptions about what others think (“Mind reading”), thinking in extremes (“All-or-nothing thinking”), or jumping to conclusions based on one experience (“Overgeneralizing”). Finally, participants write a reframed thought, which involves appropriately addressing their underlying thinking traps and coming up with a more balanced and helpful perspective on the situation.

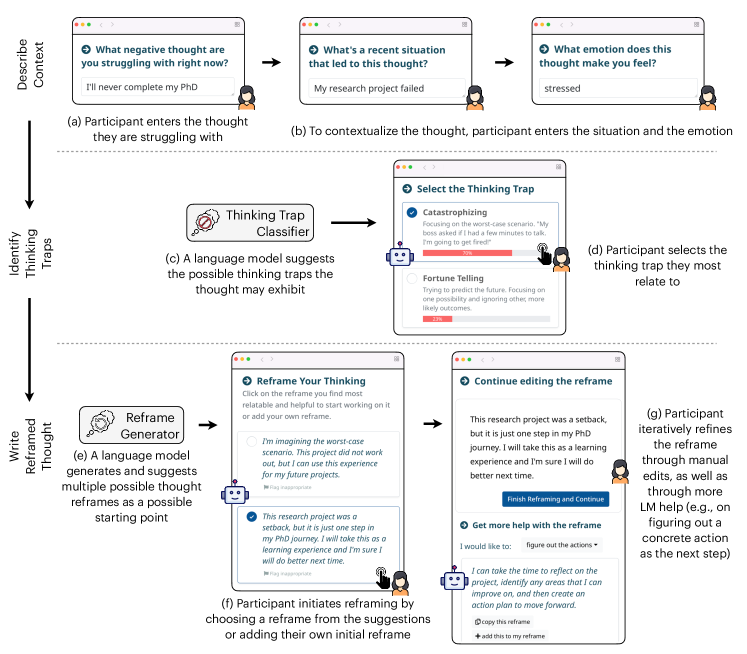

As an example, consider a PhD student who has struggled with their research project and starts to worry, “I’ll never complete my PhD”. Some possible thinking traps that this thought is falling into include Catastrophizing (thinking of the worst-case scenario) and Fortune telling (trying to predict the future). Addressing these thinking traps, a possible way in which the student can reframe the thought could be to say, “I’m imagining the worst-case scenario. This project did not work out, but I was able to formulate meaningful hypotheses for my next research project.” This process, along with our proposed system (Section 4.2), is also illustrated in Figure 1. Here, we study how cognitive restructuring can be supported through language models.

3.2. Study Design

Platform. We used Mental Health America (MHA) for our study, a large mental health website that provides mental health resources and tools to millions of users. We deployed our system on this platform, where it was hosted with appropriate ethical considerations and participant consent process (see Privacy, Ethics, and Safety), along with other existing self-guided mental health tools.

Participants. Many MHA visitors are interested in mental health resources including self-guided systems. Our study participants were visitors to MHA who chose to use our system and provided informed consent to participate in the research study. Our study comprised a total of 15,531 participants. (Footnote: Overall, 43,347 participants initially consented, but 27,816 (64.17%) dropped out before completing the outcome survey. Analyses in the paper include only the 15,531 (35.83%) participants who completed the outcome survey. Note that dropout rates of online self-guided tools are commonly between 70% and 99.5% (Karyotaki et al., 2015; Fleming et al., 2018).) We carried out several experiments in parallel, including randomized trials with independent participants. The number of participants involved in each experiment is represented by “N” values throughout the paper. Participants were 13 years and older (also, see Privacy, Ethics, and Safety below for a discussion on our reasons for including minors).
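The dropout and completion percentages reported above follow directly from the participant counts. The short check below is only an arithmetic sketch; the variable names are illustrative and not part of the study's materials.

```python
# Participant counts as reported in the text.
consented = 43_347
dropped_out = 27_816

# Participants who completed the outcome survey.
completed = consented - dropped_out

# Percentages relative to everyone who initially consented.
dropout_rate = 100 * dropped_out / consented
completion_rate = 100 * completed / consented

print(completed)                   # 15531
print(f"{dropout_rate:.2f}%")      # 64.17%
print(f"{completion_rate:.2f}%")   # 35.83%
```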

Tasks and Procedures. We started our study by formulating design hypotheses for developing self-guided cognitive restructuring through human-language model interaction. This was done through feedback from users of early prototypes of the system, brainstorming with mental health experts, and leveraging pertinent findings from prior work (Section 4.1). Based on these design hypotheses, we developed our final system for human-language model interaction powered cognitive restructuring (Section 4.2).

We evaluated the effectiveness of our system on several psychotherapy based metrics through a field study on the MHA platform (Section 5). In addition, we evaluated the importance of our design hypotheses by conducting randomized trials on the platform, explicitly ablating specific design features, such as removing psychoeducation from the system (Section 6). Finally, we analyzed and improved the equity of our system (Section 7).
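As a rough illustration of how an ablation trial of this kind can be set up, the sketch below assigns each participant to one of two arms with equal probability. The arm names, the seed, and the assignment mechanism are assumptions made for illustration; the text does not specify how assignment was implemented on the platform.

```python
import random
from collections import Counter

# Hypothetical arm names for a single ablation trial (not taken from the study).
ARMS = ("full_system", "without_psychoeducation")


def assign_arm(rng: random.Random) -> str:
    """Assign one participant to an arm with equal probability."""
    return rng.choice(ARMS)


# A fixed seed is used only so that this sketch is reproducible.
rng = random.Random(0)
assignments = [assign_arm(rng) for _ in range(1000)]

# Tally how many simulated participants landed in each arm.
counts = Counter(assignments)
print(counts)
```

Outcomes such as the change in emotion intensity would then be compared between the two arms; with simple randomization, arm sizes are only approximately equal.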

Privacy, Ethics, and Safety. We designed and conducted our field study after carefully reviewing the potential benefits and risks to participants in consultation and collaboration with mental health experts. Our study, including the participation of minors, was approved by our Institutional Review Board. We included minors (those aged between 13 and 17) in this study as they represent a large, key demographic on MHA and were already frequently using similar self-guided interventions outside of our study. Therefore, we concluded that disallowing minors access to some self-guided tools would not decrease risk but potentially make an effective intervention inaccessible to a large fraction of users. We obtained informed consent from adults and informed assent from minors, ensuring they were fully aware of the study’s purpose, risks, and benefits (Appendix Figure 12 and Figure 13). Participants were informed that they would interact with an AI-based model that automatically generates reframed thoughts, without any human supervision. Further, appropriate steps were taken to avoid harmful content generation (Section 4.2), but participants were informed about the possibility that some of the generated content may be upsetting or disturbing. Also, participants were given access to a crisis hotline. Examples in this paper have been anonymized using best privacy practices (Matthews et al., 2017). Also, see Section 8.3 for broader discussion on the frameworks that guided our ethical and safety considerations.

For minors, we requested and received a waiver of parental permission from the IRB. This is because discussing mental health issues with parents may pose additional risks including discomfort in disclosing psychological distress (Smith et al., 2022a; Samargia et al., 2006) and reduced autonomy (Wilson and Deane, 2012). Therefore, obtaining parental permission is generally avoided in similar studies (Schleider et al., 2022). Also, given our online setting, obtaining parental permission is impractical and logistically difficult as we are not directly interacting with the participants. We instead obtained the assent of minors as approved by the IRB.

4. RQ1: How can we design a self-guided cognitive restructuring intervention that is supported through human-language model interaction?

Here, we design a novel system for human-language model interaction based self-guided cognitive restructuring.

4.1. Design Hypotheses

The design of our system was based on hypotheses that were formulated by incorporating qualitative feedback from users of early prototypes of the system, brainstorming on design decisions with mental health experts, and leveraging relevant insights from previous mental health studies. Here, we briefly describe the design hypotheses that surfaced through this process. Our primary contributions include the design (Section 4.2) and evaluation (Section 5) of a system based on these hypotheses. In addition, we evaluate the impact of contextualization (H2), psychoeducation (H3), and interactive refinement (H4) through randomized trials (Section 6). Moreover, we evaluate assistance with challenging processes (H1) and safety (H5) through qualitative analysis of participant feedback (Sections 5 and 6.4 respectively).

Figure 1. The interface for our human-language model interaction based system for self-guided cognitive restructuring of negative thoughts. First row: Through the system, the participant first describes context by entering the thought they are struggling with (e.g., “I’ll never complete my PhD”). Further, to contextualize the thought, the participant enters the situation (“My research project failed”) and the emotion (“stressed”). Second row: A language model (thinking trap classifier) suggests the possible thinking traps the thought may exhibit along with their individual estimated likelihoods (e.g., “Catastrophizing – 70%; Fortune Telling – 23%; Overgeneralizing – 7%”). In order to incorporate psychoeducation, we also provide definitions and examples of these thinking traps. The participant selects the thinking trap they most relate to. Third row: A language model (reframe generator) generates and suggests multiple possible thought reframes as a possible starting point (e.g., “I’m imagining the worst-case scenario. This project did not work out, but I can use this experience for my future projects.” and “This research project was a setback, but it is just one step in my PhD journey. I will take this as a learning experience and I’m sure I will do better next time.”). The participant initiates reframing by choosing a reframe from the suggestions (the second one in this example) or adding their own initial reframe. The participant iteratively refines the reframe through manual edits, as well as through more LM help (e.g., on figuring out a concrete action as the next step).

H1: Assisting participants in processes that are cognitively and emotionally challenging may improve intervention effectiveness. Previous research suggests that restructuring negative thoughts can be cognitively and emotionally challenging (Shkel et al., 2023). The limited availability of mental health professionals and resources often acts as a barrier to accessibility, typically resulting in a lack of knowledge of and exposure to therapeutic processes. Moreover, the entrenched nature of thoughts makes them difficult to overcome in the moment. In fact, many participants during our design exploration phase highlighted these challenges (e.g., a participant wrote, “I struggle with formulating these thought -> situation -> thinking trap -> reframe scenarios by myself”; another participant wrote, “I find it hard to think of these reframes myself in the moment”; another participant wrote, “I have a hard time figuring out with cognitive distortions I’m using”). Therefore, we aim to guide participants through the identification of thinking traps and assist them in writing effective reframes. Here, we leverage language models to achieve this, as detailed in Section 4.2. Section 5 evaluates the effectiveness of this assistance.

H2: Contextualizing thought reframes through situations and emotions may improve intervention effectiveness. Cognitive Behavioral Therapy posits that our thoughts are shaped by our situations, subsequently influencing our beliefs and emotions (Beck, 1976). Therefore, the ability to reflect on situations and emotions and recognize their connection with negative thoughts could be beneficial in accurately assessing thinking traps and writing effective reframes. Our system enabled participants to contextualize their thoughts by answering additional questions related to their situations and emotions (Section 4.2). However, it is well established that introducing additional burdens in the form of questions may lead to increased dropout, a core challenge in digital mental health (Baumel et al., 2019; Torous et al., 2020). Section 6.1 describes the randomized trial that assesses the impact of this contextualization on the tradeoff between intervention effectiveness and overall participant engagement.

H3: Integrating psychoeducation may improve intervention effectiveness. Cognitive Restructuring is a skill people can learn. Learning this skill enables participants to identify their negative thoughts, assess the thinking traps they often fall into, and develop the ability to reframe them into something more hopeful in the moment (Hundt et al., 2013; Strunk et al., 2014). However, acquiring this skill is not straightforward and typically requires comprehensive psychoeducation coupled with practice (e.g., one participant wrote “I don’t think that everyone knows about thinking traps and the types or kinds of thinking traps there is, so I think there should be a description or definition about each thinking traps”). Here, we introduced participants to different thinking traps through definitions and examples, along with strategies to reframe their specific thinking traps (Section 4.2). Section 6.2 details the randomized trial that evaluates the impact of integrating psychoeducation on skill learnability.

: Facilitating interactive refinement of reframes may improve intervention effectiveness. A key component of our design involves the suggestion of automatically generated reframes through a language model (Section 4.2). However, we found that participants of early prototypes of the system desired more varied AI suggestions. One participant said, “[I want] more choices and variation in the reframing”. Another participant said “I wish it included actionable insights.” Moreover, many participants desired the ability to interactively refine the reframes. One participant said, “I need to write the information and revise it as many times as possible.” Another participant said, “It could be more interactive, also go more in depth.”

Therefore, our design included the option for iterative editing and updating of reframes. This enabled participants to seek more specific suggestions from the language model, including “making it more relatable to their situation”, “figuring out the next steps and actions”, and “feeling more supported and validated” (Section 4.2). Section 6.3 evaluates the effects of this interactivity through a randomized trial.

: Mechanisms to avoid unsafe content generation and flag inappropriate content may make the intervention safer. Safety considerations are crucial when intervening in high-stakes settings like mental health (Li et al., 2020; Martinez-Martin et al., 2018). There is a risk that AI might inadvertently harm, rather than help, individuals coping with mental health challenges. Therefore, a key goal was to ensure safety and minimize risks. Our design included mechanisms to avoid unsafe content generation and flag inappropriate content, as described in Section 4.2. We discuss the content that was flagged by participants in Section 6.4.

4.2. System Design

In our system (Figure 1), we guided participants through a five-step process. This included (1) describing the thought, (2) detailing the situation, (3) reflecting on the emotion, (4) identifying the thinking traps, and (5) finally, reframing the thought.

Steps I, II, and III: Participant describes the context. On selecting to use the system and after consenting to participate in our study (Appendix Figure 12 and Figure 13), a participant first articulates the thought they are struggling with (e.g., “I’ll never complete my PhD”; Figure 1a). Next, to contextualize their thought (Figure 1b), the participant describes a recent situation that may have led to this thought (e.g., “My research project failed”). Additionally, the participant reflects on the emotion they are currently experiencing along with its intensity (e.g., “stressed”; 9 out of 10).

Step IV: LLM-assisted selection of thinking traps. The next step is to identify thinking traps. The typical process of identifying thinking traps involves participants navigating through a list to single out possible traps in their thoughts, which we identified as a cognitive and emotional barrier. Here, we use a language model to assist participants in the identification of thinking traps among 13 common thinking traps (see Appendix Table 6).

For this, we rank the thinking traps for the given thought using a language model and show the top-ranked traps to the participant along with their individual estimated likelihoods (e.g., “Catastrophizing – 70%; Fortune Telling – 23%; Overgeneralizing – 7%”; Figure 1c). In order to incorporate psychoeducation, we provide definitions and examples of these thinking traps (Appendix Table 6). We use the GPT-3 model (Brown et al., 2020) finetuned over a dataset of thinking traps by Sharma et al. (2023b). This model achieves a top-1 accuracy of 62.98% on the 13-class thinking trap classification problem.
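As a rough illustration of how such a ranked list could be produced, the sketch below takes per-trap probabilities, keeps the top three, and renormalizes them into percentages. The function name `rank_thinking_traps`, the input format, and the renormalization over the displayed traps are assumptions made for this example; the paper does not specify how the displayed likelihoods are computed.

```python
def rank_thinking_traps(probs, top_k=3):
    """Return the top_k traps as (name, percent) pairs.

    `probs` maps trap name -> classifier probability (hypothetical format).
    Percentages are renormalized over the displayed traps, which is an
    assumption for illustration, not the documented system behavior.
    """
    top = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    total = sum(p for _, p in top)
    if total <= 0:
        return []
    return [(name, round(100 * p / total)) for name, p in top]


# Hypothetical classifier output over a few of the 13 thinking traps.
example_probs = {
    "Catastrophizing": 0.56,
    "Fortune Telling": 0.184,
    "Overgeneralizing": 0.056,
    "Mind Reading": 0.04,
    "Labeling": 0.03,
}

ranked = rank_thinking_traps(example_probs)
print("; ".join(f"{name}: {pct}%" for name, pct in ranked))
```

Renormalizing over only the displayed traps makes the percentages sum to roughly 100, which matches the style of the example in the text, but other display choices are equally plausible.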

The participant selects one or more thinking traps from this ranked list that they most closely identify with based on their thinking pattern (e.g., “Catastrophizing”; Figure 1d).

Step V: LLM-assisted writing of reframes. Finally, the participant writes a reframed thought addressing their thinking traps. Writing reframes to negative thoughts while maintaining composure in the moment is challenging; therefore, we use a language model to assist participants. Our language model is based on Sharma et al. (2023b), who propose a retrieval-enhanced in-context learning method using GPT-3 (Brown et al., 2020). Given a new thought and situation, this model retrieves the most similar examples from a dataset of {(situation, thought, reframe), …} triples collected from mental health experts. Those examples are used as an in-context prompt for the GPT-3 model to generate reframes for the new thought and situation.
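The retrieve-then-prompt pattern described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the bag-of-words cosine similarity stands in for whatever similarity measure the underlying method uses, the three example triples are invented for the demo (they are not from the expert dataset), and the prompt layout is an assumption.

```python
import math
import re
from collections import Counter


def bow(text):
    """Lowercased bag-of-words vector (a simple stand-in for a learned encoder)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(examples, situation, thought, k=2):
    """Return the k examples most similar to the new situation and thought."""
    query = bow(situation + " " + thought)
    return sorted(
        examples,
        key=lambda ex: cosine(query, bow(ex["situation"] + " " + ex["thought"])),
        reverse=True,
    )[:k]


def build_prompt(retrieved, situation, thought):
    """Format retrieved triples as in-context examples, ending with the open query."""
    parts = [
        f"Situation: {ex['situation']}\nThought: {ex['thought']}\nReframe: {ex['reframe']}"
        for ex in retrieved
    ]
    parts.append(f"Situation: {situation}\nThought: {thought}\nReframe:")
    return "\n\n".join(parts)


# Invented examples for illustration only.
examples = [
    {"situation": "My experiment failed again",
     "thought": "I will never finish my degree",
     "reframe": "One failed experiment does not decide my whole degree."},
    {"situation": "My friend did not reply to my message",
     "thought": "Nobody likes me",
     "reframe": "My friend may simply be busy today."},
    {"situation": "I made a mistake at work",
     "thought": "I am going to be fired",
     "reframe": "A single mistake is something I can fix and learn from."},
]

retrieved = retrieve(examples, "My research project failed", "I'll never complete my PhD")
prompt = build_prompt(retrieved, "My research project failed", "I'll never complete my PhD")
print(prompt)
```

The resulting prompt would then be sent to the language model, which completes the final open reframe line.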

Using this language model, we generate multiple suggestions for possible thought reframes and show them to the participant as a potential starting point (Figure 1e). To generate multiple suggestions, we perform top-p sampling (Holtzman et al., 2020) multiple times. We show three suggestions by default, but participants are provided with an option to seek more suggestions if needed. The multiple suggestions are aimed at offering varying perspectives on the participant’s original thought (e.g., “I’m imagining the worst-case scenario. This project did not work out, but I can use this experience for my future projects.”, “This research project was a setback, but it is just one step in my PhD journey. I will take this as a learning experience and I’m sure I will do better next time.”, “I am disappointed that my research project failed, but I can still complete my PhD if I keep working hard and don’t give up.”; the reframing suggestions in this example were generated by our GPT-3 based model). In addition, to incorporate psychoeducation, we provide instructions on ways in which the participant can reframe the specific thinking traps selected by them in the previous step (e.g., “Tips to overcome catastrophizing”; Appendix Table 6).
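For readers unfamiliar with top-p (nucleus) sampling, the sketch below shows the idea on a toy next-token distribution: keep the smallest set of highest-probability tokens whose cumulative mass reaches p, renormalize, and sample from that set. The function names and the toy distribution are ours; in a deployed system this step would normally be handled by the language model API rather than reimplemented.

```python
import random


def top_p_filter(probs, p=0.9):
    """Keep the smallest high-probability set with cumulative mass >= p, renormalized."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    return {token: prob / cumulative for token, prob in kept}


def sample_top_p(probs, p=0.9, rng=random):
    """Draw one token from the nucleus of the distribution."""
    nucleus = top_p_filter(probs, p)
    tokens = list(nucleus)
    weights = [nucleus[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Toy next-token distribution (illustrative values only).
toy_probs = {"setback": 0.5, "failure": 0.3, "disaster": 0.15, "catastrophe": 0.05}

nucleus = top_p_filter(toy_probs, p=0.75)
rng = random.Random(0)
draws = [sample_top_p(toy_probs, p=0.75, rng=rng) for _ in range(5)]
print(nucleus, draws)
```

Repeating the sampling procedure several times, as in the loop above, is what yields multiple distinct suggestions from the same prompt.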

The participant initiates reframing by choosing a reframe from the initial suggestion list or by writing a reframe on their own (Figure 1f). The participant then iteratively refines this reframe through manual edits, as well as through additional, optional help from the language model. For this, we provide participants with an option to seek more specific suggestions from the language model, including “making it more relatable to their situation”, “figuring out the next steps and actions”, and “feeling more supported and validated” (Figure 1g). For the option selected by the participant, we generate additional suggestions using the language model. Participants can copy these additional suggestions, add them to their initial reframe, replace their initial reframe with them, or use them as inspiration. Also, see Appendix Figure 14 for the detailed interface.

Safety considerations. To minimize harmful outputs generated by language models, we combined classification-based content filtering with rule-based content filtering. We use a classification-based content filtering system provided by Azure OpenAI (bit.ly/azure-content-filter), which identifies and filters out content related to “hate”, “sexual”, “violence”, and “self-harm” categories. In addition, we developed a rule-based method to filter out any generated content that contained words or phrases related to suicidal ideation or self-harm. To achieve this, we created a list of 50 regular expressions (e.g., to identify phrases like “feeling suicidal”, “want to die”, and “harm myself”) based on suicidal risk assessment lexicons such as the one by Gaur et al. (2019). A language model-generated reframe suggestion that matched any of the regular expressions was filtered out and not suggested to the participants. Also, participants were given the option to flag inappropriate reframing suggestions through a “Flag inappropriate” button (Figure 1f; Section 6.4).
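The rule-based part of this filter can be illustrated with a short sketch. The three patterns below are our own renderings of the three example phrases quoted above; they are not the actual list of 50 regular expressions, and the function names are hypothetical.

```python
import re

# Illustrative patterns only, written for the three phrases quoted in the text.
UNSAFE_PATTERNS = [
    re.compile(pattern, re.IGNORECASE)
    for pattern in (
        r"\bfeel(?:ing)?\s+suicidal\b",
        r"\bwant\s+to\s+die\b",
        r"\bharm\s+myself\b",
    )
]


def is_safe(text):
    """True if the text matches none of the unsafe patterns."""
    return not any(pattern.search(text) for pattern in UNSAFE_PATTERNS)


def filter_suggestions(suggestions):
    """Drop any generated suggestion that matches an unsafe pattern."""
    return [s for s in suggestions if is_safe(s)]


candidates = [
    "This project was a setback, but it is just one step in my PhD journey.",
    "Even when I Want To Die, things can improve.",
]
shown = filter_suggestions(candidates)
print(shown)
```

Matching is case-insensitive and tolerant of extra whitespace, so a suggestion is dropped even when the phrase appears with unusual capitalization or spacing.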

We deployed this system on the MHA platform and studied its effectiveness with platform visitors (see Section 3.2). We make the code used to design the system publicly available at github.com/behavioral-data/Self-Guided-Cognitive-Restructuring.

5. RQ2a – To what extent does our intervention help individuals in alleviating negative emotions and overcoming negative thoughts?

We used a mixed-method approach to evaluate the effectiveness of our system. Here, we first describe the different quantitative and qualitative measures used in our study, followed by the evaluation of our system on these metrics.

5.1. Quantitative Measures

Drawing from various metrics prevalent in the cognitive behavioral therapy literature, we conducted a comprehensive analysis of our system. We assessed the effects of our system on the participants’ emotions, the efficacy of the reframes they wrote, and learnability of the skill:

(1) Reduction in Emotion Intensity: Intensity of the participant’s emotion before the system use − Intensity of the participant’s emotion after the system use. We collected the emotion associated with the participant’s negative thought before the system use (“What emotion does this thought make you feel?”) and collected its intensity both before and after the system use (“How strong is your emotion? (1 to 10)”).

(2) Reframe Relatability: After the system use, we asked the participant: “How strongly do you agree or disagree with the following statement? – I believe in the reframe I came with” (1 to 5; 1: Strongly Disagree; 5: Strongly Agree).

(3) Reframe Helpfulness: After the system use, we asked the participant: “How strongly do you agree or disagree with the following statement? – The reframe helped me deal with the thoughts I was struggling with” (1 to 5; 1: Strongly Disagree; 5: Strongly Agree).

(4) Reframe Memorability: After the system use, we asked the participant: “How strongly do you agree or disagree with the following statement? – I will remember this reframe the next time I experience this thought” (1 to 5; 1: Strongly Disagree; 5: Strongly Agree).

(5) Skill Learnability: After the system use, we asked the participant: “How strongly do you agree or disagree with the following statement? – By doing this activity, I learned how I can deal with future negative thoughts” (1 to 5; 1: Strongly Disagree; 5: Strongly Agree).

5.2. Qualitative Measures

We also collected subjective feedback from participants. At the end of the system usage, we asked an optional open-ended question “We would love to know your feedback. What did you like or dislike about the tool? What can we do to improve?”

5.3. Results: Quantitative

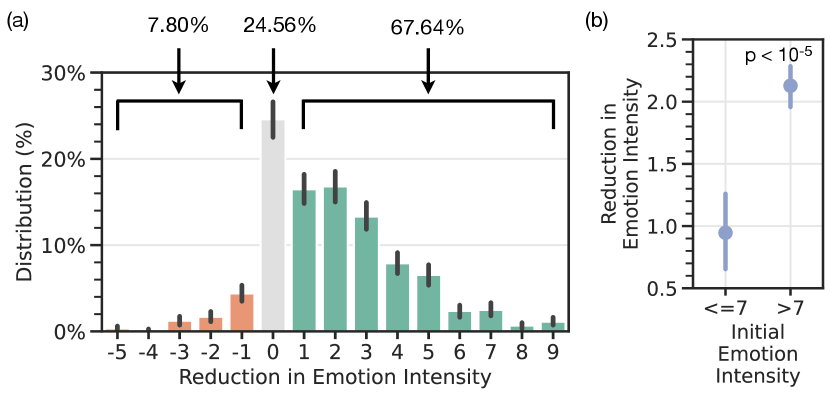

Two plots illustrating the effects of our system on emotion intensity. The first one is a bar plot that shows the reduction in emotion intensity of participants before and after using the system (emotion scale: 1 to 10). The x-axis is ”Reduction in Emotion Intensity” and goes from -5 to 10. And the y-axis is the ”Distribution” in percentage from 0% to 30%. 67% of the participants report having a positive reduction in (negative) emotions, 24.56% report no change in emotions, and 7.80% report a negative change (N=1,922). The second one is a point plot with Initial Emotion Intensity on the x-axis (with two discrete values: ≤7 and >7) and Reduction in Emotion Intensity on the y-axis (from 0.5 to 2.5). Participants with higher emotion intensity before using the system reported a higher reduction in emotion intensity post system usage (N=1,922).

| Outcome Measure | Mean | Std |
|---|---|---|
| Reduction in Emotion Intensity (-10 to 10) | 1.90 | 1.29 |
| Reframe Relatability (1 to 5) | 3.84 | 1.17 |
| Reframe Helpfulness (1 to 5) | 3.33 | 1.35 |
| Reframe Memorability (1 to 5) | 3.52 | 1.36 |
| Skill Learnability (1 to 5) | 3.39 | 1.39 |

| Outcome Measure | Initial Emotion Intensity ≤7 | Initial Emotion Intensity >7 |
|---|---|---|
| Reduction in Emotion Intensity (-10 to 10) | 0.95 | 2.13 |
| Reframe Relatability (1 to 5) | 3.98 | 3.65 |
| Reframe Helpfulness (1 to 5) | 3.49 | 3.09 |
| Reframe Memorability (1 to 5) | 3.67 | 3.33 |
| Skill Learnability (1 to 5) | 3.57 | 3.14 |

67.64% of the participants reported a positive change in emotions. We assessed the difference in the intensity of participants’ self-reported negative emotions before and after utilizing our system (N=1,922). Figure 2a shows the distribution. Our findings revealed a positive emotional shift in 67.64% (1,300) of the participants, while 24.56% (472) of the participants reported no change in their emotion intensity. A small 7.80% (150) of the participants reported a negative shift in their emotions, with the majority (72%; 108) of them experiencing a relatively minor negative shift of -1.
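The shares reported in this paragraph follow directly from the participant counts. The short check below recomputes them; the helper name `share` is ours and is introduced only for illustration.

```python
def share(count, total):
    """Percentage of `total` represented by `count`, rounded to two decimals."""
    return round(100 * count / total, 2)


N = 1922
positive, unchanged, negative = 1300, 472, 150

# The three groups partition the analyzed participants.
assert positive + unchanged + negative == N

print(share(positive, N))    # positive shift in emotions
print(share(unchanged, N))   # no change in emotion intensity
print(share(negative, N))    # negative shift in emotions
print(share(108, negative))  # share of negative shifts that were exactly -1
```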

Participants with higher initial emotion intensity experienced a greater improvement in emotions. We checked the effects of our system on participants with different initial emotion intensities. We found that participants with more intense initial emotions (>7 out of 10) reported 124.21% more substantial positive shifts in their emotional state than those with less intense initial emotions (≤7 out of 10; 2.13 vs. 0.95; N=1,922; Figure 2b). We use a two-sided Student’s t-test for all statistical tests in this paper. Psychotherapy research suggests that a higher intensity of negative moods and depression is associated with stronger negative cognition and maladaptive thoughts (Beevers et al., 2007). Our findings indicate that participants with greater initial emotional intensity could benefit more from a cognitive restructuring intervention like ours, potentially due to the positive effects it has on their cognition, which in turn may positively affect mood and emotion.

The majority of participants found the reframes believable, helpful, and memorable. We evaluated the effectiveness of the reframes that people are able to write using our system (N=1,922). Overall, we found that 80.49% of the participants found the reframes relatable to them, 65.65% of participants found the reframes helpful in overcoming negative thoughts, and 70.49% of participants found the reframes memorable or easy to remember. Further investigating people with different emotional intensities, we found that people with higher initial emotional intensities (>7 out of 10) reported 8.29% lower reframe relatability (3.65 vs. 3.98), 11.46% lower reframe helpfulness (3.09 vs. 3.49), 9.26% lower reframe memorability (3.33 vs. 3.67), and 12.04% lower skill learnability (3.14 vs. 3.57) than those with lower initial emotional intensities (≤7 out of 10; N=1,922; Table 2). This suggests that when individuals are emotionally agitated, it is harder to come up with effective reframes and learn cognitive restructuring.
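The relative differences quoted for the two initial-intensity groups are percentage changes of one group mean relative to the other. The sketch below reproduces them from the reported means; `relative_change` is a helper name introduced here, with the lower-intensity group used as the baseline.

```python
def relative_change(value, baseline):
    """Percentage change of `value` relative to `baseline`, rounded to two decimals."""
    return round(100 * (value - baseline) / baseline, 2)


# (mean for initial intensity >7, mean for initial intensity <=7)
group_means = {
    "Reduction in Emotion Intensity": (2.13, 0.95),
    "Reframe Relatability": (3.65, 3.98),
    "Reframe Helpfulness": (3.09, 3.49),
    "Reframe Memorability": (3.33, 3.67),
    "Skill Learnability": (3.14, 3.57),
}

changes = {name: relative_change(high, low) for name, (high, low) in group_means.items()}
for name, pct in changes.items():
    print(f"{name}: {pct:+.2f}%")
```

A positive value means the higher-intensity group reported a larger mean than the lower-intensity group, and a negative value means a smaller one.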

Most participants found the system helpful in learning cognitive restructuring. We assessed how effectively our system can be used to learn the skill of managing negative thoughts. We found that 67.38% of the participants reported that the system helped them in learning how to deal with negative thoughts.

We also report mean and standard deviations of the quantitative outcome measures in Table 1.

5.4. Results: Qualitative

On analyzing the qualitative feedback from participants, we observed that participants highlighted three key ways in which they found assistance from our system.

First, many participants indicated that the system helped them overcome cognitive barriers, especially when they “feel stuck”, and doing this exercise is “difficult”, “on their own” and “in the moment.” A participant wrote, “My own reframes are difficult, and AI gives multiple other perspectives to consider.” Also, some participants reported that it helped them find “the right words” or “ideas to start with.” A participant wrote, “Thank you for helping me to find the right words to clearly reframe a negative thought and how to apply the thought to my own thinking processes.” Another noted, “I appreciated that the option of having the AI tool walk you through the reframing process step by step (e.g., by choosing the negative thought you may be experiencing + giving possible reframing ideas to start with/add more details to).”

Second, participants expressed how the system enabled a less emotionally triggering experience. One participant wrote, “I felt in control and more comforted that I can handle difficult situations with confidence.” Another participant wrote, “This activity let me calm down…”. Another participant noted, “…this made the process much less daunting…”. This is perhaps consistent with the quantitative findings on reduced emotion intensity (Section 5.3).

Third, participants valued that the system allowed them to explore multiple viewpoints. One participant wrote, “…After reading several reframes and looking over them I realized that there are many options, many positive sides.” Another participant wrote, “I felt reassured to see multiple views, and reflect upon them…”

Overall, these results suggest that there are opportunities to assist participants in cognitively challenging and emotionally triggering psychological processes through human-language model interaction.

6. RQ2b – What is the impact of individual design hypotheses on the effectiveness of the intervention?

Here, we studied the impact of individual design hypotheses (Section 4.1) on the effectiveness of the intervention and overall participant engagement. To facilitate this, we deployed different design variations of our system by ablating specific design features (e.g., one variation that includes psychoeducation and another variation that removes it). For each design ablation, we conducted randomized controlled trials in which incoming participants were randomly assigned one of the two design variations (Appendix Table 5). To measure the impact, we evaluated the difference in outcomes between participants involved in the two design variations. In this section, we report the results from ablating contextualization, psychoeducation, and interactivity.
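The ablation procedure described above amounts to random assignment followed by a between-arm comparison. The sketch below illustrates this under stated assumptions: the arm names, the seeded generator, and the toy outcome values are ours, and the pooled-variance t statistic is the textbook Student's formula rather than the authors' analysis code.

```python
import math
import random
import statistics

ARMS = ("with_feature", "without_feature")  # hypothetical arm names


def assign_arm(rng):
    """Assign an incoming participant to one of the two design variations."""
    return rng.choice(ARMS)


def mean_difference(treated, control):
    """Difference in mean outcome between the two arms."""
    return statistics.mean(treated) - statistics.mean(control)


def student_t(treated, control):
    """Student's t statistic with pooled variance (equal-variance assumption)."""
    n1, n2 = len(treated), len(control)
    pooled_var = (
        (n1 - 1) * statistics.variance(treated)
        + (n2 - 1) * statistics.variance(control)
    ) / (n1 + n2 - 2)
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return mean_difference(treated, control) / standard_error


rng = random.Random(0)
assignments = [assign_arm(rng) for _ in range(1000)]

# Toy outcome values for illustration only (not study data).
treated_outcomes = [3, 4, 5]
control_outcomes = [2, 3, 4]
t_stat = student_t(treated_outcomes, control_outcomes)
print(mean_difference(treated_outcomes, control_outcomes), round(t_stat, 4))
```

A two-sided p-value would then be read from the t distribution with n1 + n2 - 2 degrees of freedom, for example via a statistics library.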

6.1. Contextualizing through Situations Improves Reframe Helpfulness

Reflecting on situations and emotions and understanding their connection with negative thoughts can help in writing more personalized and effective reframes. However, asking participants for additional information, such as descriptions of a relevant situation and emotion, can potentially increase dropout, which could prevent successful outcomes. Therefore, the reflection process trades off potential benefits against higher participant burden and higher dropout rates. Here, we conducted two different randomized trials: one in which we enabled contextualization through situations for a random half of the participants, and another in which we enabled contextualization through emotions for a random half of the participants.

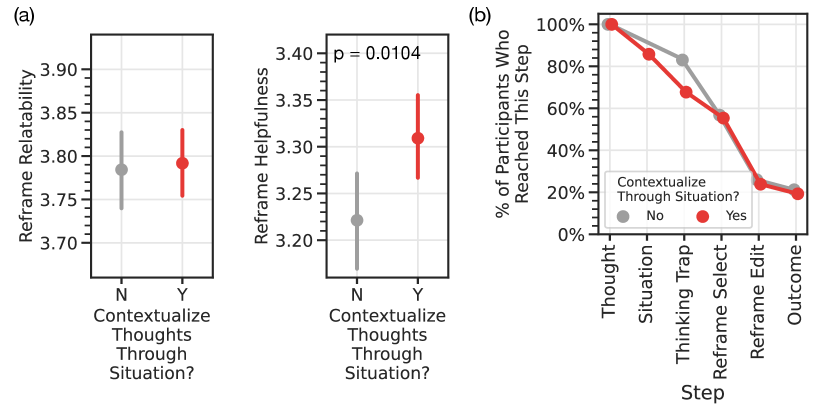

We found that contextualizing participant thoughts through their situations led to 2.80% more helpful reframes (3.31 vs. 3.22; N=1,636; Figure 3a) and similar levels of relatability. This indicates the benefits of increased reflection in self-guided mental health interventions. Also, while an increased information request is typically correlated with a higher dropout rate, we found that a similar number of participants reached the end of the tool, regardless of the additional information requested (Figure 3b).

Two separate point plots and one line plot. Both the point plots have ”Contextualize through Situation?” as their x-axis with two possible values ”N” and ”Y”. The y-axes are Reframe Relatability and Reframe Helpfulness. Plots show that contextualizing participant thoughts through their situations led to 2.80% more helpful reframes (3.31 vs. 3.22) but did not lead to more relatable reframes. The line plot represents the % of participants who reached various steps (y-axis) against different steps of cognitive restructuring (x-axis; Thought, Situation, Thinking Trap, Reframe Select, Reframe Edit, and Outcome). There are two lines, one each for the two possible values of ”Contextualize through Situation?” (”N” or ”Y”). The plot shows that asking for additional context did not lead to a lower completion rate.

Surprisingly, participants who contextualized their thoughts through emotions reported 3.86% lower levels of relatability (3.87 vs. 3.72; N=4,016; Appendix Figure 7). This may be because our language model does not incorporate emotions while identifying thinking traps or suggesting reframes, due to the lack of a relevant cognitive restructuring dataset containing self-reported emotion annotations. Consequently, participants may develop unwarranted expectations that their emotional states will be addressed in the reframing suggestions, even when they are not. Note that this is different for descriptions of situations, which the language model does take into account and typically reflects in the generated reframes.

Qualitative feedback from participants indicated that they desired the inclusion of these steps as it helped them better process their thoughts (e.g., a participant wrote “What made it especially helpful was being able to contextualize my feelings, which I feel allows for a more relatable reframe”).

6.2. Integrating Psychoeducation has Limited Impact on Overall Effectiveness

Providing psychoeducation with the intervention may help people learn the cognitive restructuring skill more effectively. While users of early prototypes expressed interest in integrating psychoeducation (Section 4.1), our randomized trial indicated that it did not lead to significant quantitative improvement in outcomes, including skill learnability as self-reported by participants (at α = 0.05; N=1,850; Appendix Figure 7).

Nevertheless, qualitative feedback from participants indicated that they found the provided definitions, examples, and strategies helpful (e.g. one participant wrote, “I like how the tool provided explanations”; another participant wrote, “I like the simple explanations and examples for each thought trap”).

6.3. Increased Interactivity with the Language Model is Associated with Improved Outcomes

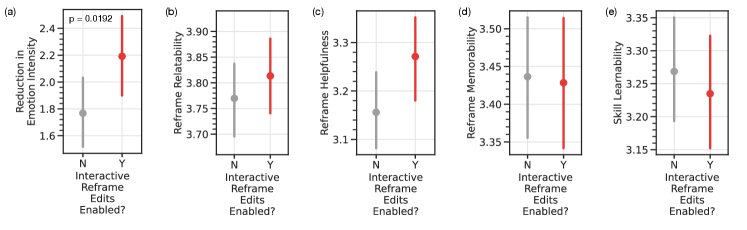

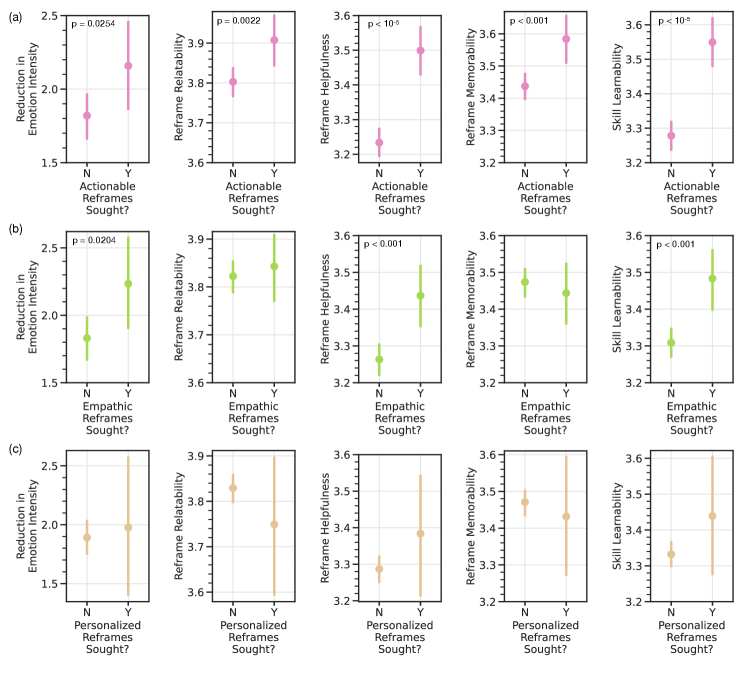

Five different point plots, all with the x-axis of ”Interactive Reframe Edits Enabled?” with two possible values (”N” or ”Y”). The y-axes are Reduction in Emotion Intensity, Reframe Relatability, Reframe Helpfulness, Reframe Memorability, and Skill Learnability. The plots show that having the option of interactive reframe edits available to participants led to a 23.73% greater reduction in emotion intensity (2.19 vs. 1.77). However, it did not lead to significant differences in other outcomes (at alpha = 0.05).

Three rows of five different point plots each. The x-axes for the plots in the three rows are ”Actionable Reframes Sought?” (”N” or ”Y”), ”Empathic Reframes Sought?” (”N” or ”Y”), and ”Personalized Reframes Sought?” (”N” or ”Y”) respectively. The y-axes for the five plots in all rows are Reduction in Emotion Intensity, Reframe Relatability, Reframe Helpfulness, Reframe Memorability, and Skill Learnability. The plots show that those who chose to make their reframes actionable experienced superior effectiveness across all five outcomes. Those who chose to make their reframes empathic reported a 21.86% higher reduction in emotion intensity (2.23 vs. 1.83), 5.52% higher reframe helpfulness (3.44 vs. 3.26), and 5.14% higher skill learnability (3.48 vs. 3.31) and no significant differences based on reframe relatability and reframe memorability (at alpha = 0.05). Those who chose to make their reframes personalized reported no significant differences in outcomes (at alpha = 0.05).

We provided participants with an option to seek more specific suggestions from the language model, including “making the reframe more relatable to their situation”, “figuring out the next steps and actions”, and “feeling more supported and validated” (Figure 1g; Appendix Figure 14).

In our randomized trial, where only half of the participants at random were given this option, we found that having this option available led to a 23.73% greater reduction in emotion intensity (2.19 vs. 1.77; N=2,165) and no significant differences in other outcomes (Figure 4). Also, some participants appreciated having this option. One participant wrote, “I’m glad there was an option to get supported and validated”.

Further, among the study participants who were provided this option, we observed that 38.60% of them made use of it. Those who chose to use it to further interact with the language model to seek additional reframing suggestions of specific types (actionable, empathic, or personalized) reported 5.57% higher reframe helpfulness (3.41 vs. 3.23) and 4.86% higher skill learnability (3.45 vs. 3.29) than participants who did not use it (N=992; Appendix Figure 9). Moreover, those who chose to make their reframes actionable during this step (by choosing the option, “I want to figure out the next steps and actions”) reported significantly superior effectiveness across all five outcomes than those who did not (Figure 5a). Prior work has highlighted the importance of behavioral activation, which involves engaging in behaviors or actions that may help in overcoming negative thoughts (Dimidjian et al., 2011; Burkhardt et al., 2021). Our work shows that participants explicitly seeking out actionable reframed thoughts are more likely to report better outcomes.

Moreover, those who chose to make their reframe empathic reported a 21.86% higher reduction in emotion intensity (2.23 vs. 1.83), 5.52% higher reframe helpfulness (3.44 vs. 3.26), and 5.14% higher skill learnability (3.48 vs. 3.31), with no significant differences in reframe relatability and reframe memorability (at α = 0.05; N=992; Figure 5b). We did not find significant differences based on whether a participant chose to make a reframe personalized or not (at α = 0.05; N=992; Figure 5c).

6.4. Analysis of the Language Model Generated Content that was Flagged Inappropriate

We implemented a feature for participants to report any inappropriate content generated by the language model. This was achieved by including a distinct “Flag inappropriate” button for each generated reframe (Section 4.2). Overall, we found that 0.65% (301 out of 46,593) of the reframing suggestions shown were flagged. After conducting a qualitative review of these flagged reframes, we found a few dozen instances where the model’s suggestions repeated the negative factors/sentiment described by the participant in the original thought which may inadvertently reinforce negative beliefs about oneself (e.g., “I may be a “failure”, but I’m still trying my best.” in response to the thought “I’m a failure”). Note that in most cases repeating parts of the participant’s thought or situation helps to validate their experience and emotional reaction and personalize their reframe. Therefore, this highlights the importance of effectively differentiating which aspects of the participant’s thoughts to re-state in the reframing suggestions. Future work should look more closely at how to facilitate this differentiation.

Nevertheless, for many of the 301 flagged instances, because all participants were shown three reframes as starting points, participants were able to select a different reframing suggestion from the three options presented to them, eventually reporting favorable outcomes. We also compared dropout between users who flagged content and those who did not and found no significant differences. Interestingly, the dropout rate was lower among users who flagged content, at 38.2%, compared to 46.5% for those who did not. Notably, none of the flagged instances had references to suicidal ideation or self-harm, suggesting that the safety mechanisms designed to address these concerns were likely effective (Section 4.2).

7. RQ3 – How equitable is the intervention and what strategies may improve its equity?

Next, we assess how equitable our intervention is across the issues expressed by participants (Section 7.1) and across participant demographics (Section 7.2). Moreover, we work towards improving the equity of our system by improving its effectiveness for adolescents, a subpopulation experiencing one of the worst outcome disparities (Section 7.3).

7.1. Assessing Outcomes across Issues

| Issues | Reduction in Emotion Intensity | Reframe Relatability | Reframe Helpfulness | Reframe Memorability | Skill Learnability | N |
|---|---|---|---|---|---|---|
| Body Image | 1.42 | 3.89 | 3.20 | 3.49 | 3.38 | 71 |
| Dating & Marriage | 2.05 | 3.79 | 3.20 | 3.47 | 3.33 | 328 |
| Family | 1.99 | 3.78 | 3.26 | 3.35 | 3.34 | 170 |
| Fear | 1.63 | 3.53 | 3.07 | 3.26 | 3.20 | 123 |
| Friendship | 1.91 | 3.65 | 3.20 | 3.48 | 3.20 | 159 |
| Habits | 1.72 | 3.98 | 3.50 | 3.52 | 3.57 | 42 |
| Health | 2.36 | 3.91 | 3.45 | 3.77 | 3.47 | 53 |
| Hopelessness | 1.11 | 3.41 | 2.66 | 3.06 | 2.84 | 70 |
| Identity | 2.54 | 4.00 | 3.55 | 3.64 | 3.09 | 11 |
| Loneliness | 1.56 | 3.43 | 2.74 | 3.03 | 2.77 | 146 |
| Money | 1.71 | 3.73 | 2.80 | 3.43 | 3.17 | 30 |
| Parenting | 2.06 | 4.19 | 3.69 | 3.97 | 3.61 | 36 |
| School | 1.94 | 3.79 | 3.20 | 3.34 | 3.13 | 181 |
| Tasks & Achievement | 1.65 | 3.56 | 3.04 | 3.23 | 2.99 | 232 |
| Trauma | 1.42 | 3.33 | 2.58 | 3.00 | 2.50 | 12 |
| Work | 2.31 | 3.89 | 3.54 | 3.77 | 3.58 | 258 |

To better determine the effectiveness of our intervention in various scenarios, we assessed the outcomes across different types of situations and thoughts that individuals might experience. We characterized participants’ situations and thoughts based on the broader issues that they relate to. In collaboration with mental health experts (some of whom are co-authors), we manually labeled 500 thoughts and situations to identify the potential issues that they are associated with. The result of this iterative open-ended coding process was a set of 16 different issues expressed by participants. These include Body Image, Dating & Marriage, Family, Fear, Friendship, Habits, Health, Hopelessness, Identity, Loneliness, Money, Parenting, School, Tasks & Achievement, Trauma, and Work. See Appendix Table 7 for their definitions and examples.

We used this dataset to finetune a GPT-3 model (text-davinci), which achieved an accuracy of 73.00% on a held-out set (random performance 6.25%). We used this model to analyze the outcomes for people experiencing different issues and to identify the issues where our intervention performed better or worse (Table 3).

We found that participants expressing Hopelessness and Loneliness related thoughts reported worse outcomes relative to other issues. Participants with Hopelessness (e.g., “I will never be better”) reported 41.27% lower reduction in emotion intensity (1.11 vs. 1.89), 8.33% lower reframe relatability (3.41 vs. 3.72), 16.61% lower reframe helpfulness (2.66 vs. 3.19), 10.53% lower reframe memorability (3.06 vs. 3.42), and 12.07% lower skill learnability (2.84 vs. 3.23) than the population means. Moreover, participants with Loneliness (e.g., “I feel like no one is with me”) reported 8.45% lower reframe relatability (3.43 vs. 3.72), 14.11% lower reframe helpfulness (2.74 vs. 3.19), 11.40% lower reframe memorability (3.03 vs. 3.42), and 14.24% lower skill learnability (2.77 vs. 3.23) than the population means. These differences could suggest that thoughts related to some issues are more challenging to overcome than others (as also suggested in psychology theory (Hawkley and Cacioppo, 2010; Heinrich and Gullone, 2006; Beck et al., 1975)) or that they represent a different subpopulation. However, we also found a lower reduction in emotion intensity (i.e., an outcome measured pre- and post-intervention), suggesting that our system might have had greater difficulty in assisting with these issues. In fact, some participants commented that the reframing suggestions did not work well for issues that were too complex and nuanced. One participant wrote, “It might be too simple for more complicated problems.” Another participant wrote, “More complex problems need more precise results in my opinion.” Some participants thought that the suggestions to such complex problems were “superficial”, “artificial,” or “hard to relate to.” Future iterations of the system could benefit from designing more sophisticated language modeling solutions for complex issues.

| Demographics | Reduction in Emotion Intensity | Reframe Relatability | Reframe Helpfulness | Reframe Memorability | Skill Learnability | N |
| --- | --- | --- | --- | --- | --- | --- |
| **Age** | | | | | | |
| 13–14 | 1.84 | 3.64 | 2.94 | 3.03 | 2.99 | 146 |
| 15–17 | 1.64 | 3.50 | 2.68 | 3.07 | 2.83 | 149 |
| 18–24 | 1.98 | 3.78 | 3.13 | 3.48 | 3.15 | 247 |
| 25–34 | 2.00 | 3.89 | 3.32 | 3.70 | 3.40 | 179 |
| 35–44 | 2.15 | 3.97 | 3.40 | 3.69 | 3.51 | 109 |
| 45–54 | 1.64 | 3.96 | 3.32 | 3.64 | 3.56 | 71 |
| 55–64 | 1.88 | 3.96 | 3.46 | 3.96 | 3.21 | 32 |
| 65+ | 1.20 | 4.00 | 3.38 | 3.88 | 4.13 | 8 |
| **Gender** | | | | | | |
| Female | 1.92 | 3.78 | 3.21 | 3.44 | 3.26 | 646 |
| Male | 2.19 | 3.74 | 2.95 | 3.38 | 3.04 | 258 |
| Non-Binary | 1.94 | 3.76 | 3.30 | 3.46 | 3.22 | 54 |
| **Race/Ethnicity** | | | | | | |
| AIAN | 2.17 | 2.50 | 2.67 | 3.17 | 2.67 | 6 |
| Asian | 1.91 | 3.79 | 3.08 | 3.43 | 3.12 | 216 |
| Black / African Am. | 2.43 | 3.85 | 3.30 | 3.62 | 3.49 | 47 |
| Hispanic or Latino | 1.97 | 3.91 | 3.43 | 3.59 | 3.47 | 76 |
| MENA | 1.90 | 3.78 | 2.90 | 2.98 | 2.80 | 50 |
| NHPI | 2.00 | 4.40 | 4.00 | 3.60 | 3.20 | 5 |
| White | 2.05 | 3.73 | 3.12 | 3.47 | 3.22 | 438 |
| More than One | 2.83 | 3.84 | 3.29 | 3.24 | 3.16 | 38 |
| Other | 0.78 | 3.75 | 3.06 | 3.29 | 3.02 | 48 |
| **Education** | | | | | | |
| Middle School | 1.80 | 3.58 | 2.89 | 2.96 | 2.79 | 120 |
| High School | 1.80 | 3.65 | 2.97 | 3.31 | 3.09 | 313 |
| Undergraduate | 2.04 | 3.79 | 3.19 | 3.54 | 3.28 | 239 |
| Graduate | 2.30 | 3.98 | 3.47 | 3.69 | 3.52 | 211 |
| Doctorate | 1.46 | 4.21 | 2.96 | 3.93 | 3.07 | 28 |

We also observed Tasks & Achievement related thoughts (e.g., “I can’t finish my work”) to have 4.30% lower reframe relatability (3.56 vs. 3.72), 5.56% lower reframe memorability (3.23 vs. 3.42), and 7.42% lower skill learnability (2.99 vs. 3.23). Qualitative feedback from participants with such thoughts revealed that they often sought concrete actions beyond what the reframe suggestions could offer.

Moreover, those who used our system for Parenting and Work reported significantly better outcomes than the population means. Those with Parenting issues reported 12.63% higher reframe relatability (4.19 vs. 3.72), 15.67% higher reframe helpfulness (3.69 vs. 3.19), and 16.08% higher reframe memorability (3.97 vs. 3.42). Those with Work issues reported 22.22% higher reduction in emotion intensity (2.31 vs. 1.89), 4.57% higher reframe relatability (3.89 vs. 3.72), 10.97% higher reframe helpfulness (3.54 vs. 3.19), 10.23% higher reframe memorability (3.77 vs. 3.42), and 10.84% higher skill learnability (3.58 vs. 3.23).

7.2. Assessing Outcomes across Demographics

Language modeling interventions are known to be biased toward people of specific demographics. For interventions targeting mental health, previous research has found that language models are likely to perpetuate social stereotypes, for example, under-emphasizing men’s mental health (Lin et al., 2022). Broadly, this corresponds to the principle of demographic parity in the fairness in machine learning literature (Caliskan et al., 2017; Mehrabi et al., 2021; Blodgett et al., 2020).

Here, we studied the difference in outcomes of our intervention across participants of different demographics. We asked participants to optionally provide demographic information, including age (in ranges from 13 to 65+), gender (Female, Male, or Non-Binary), race/ethnicity (American Indian or Alaska Native, Asian, Black or African American, Hispanic or Latino, Middle Eastern or North African, Native Hawaiian and Pacific Islander, White, More than One, or Other), and education level (Middle School, High School, Undergraduate, Graduate, or Doctorate).

Table 4 reports the outcome differences. We found that participants aged 17 or younger reported 4.84% lower reframe relatability (3.54 vs. 3.72), 10.03% lower reframe helpfulness (2.87 vs. 3.19), 10.23% lower reframe memorability (3.07 vs. 3.42), and 10.22% lower skill learnability (2.90 vs. 3.23) compared to the population mean. On the other hand, those aged 25 or above reported significantly better outcomes overall. This suggests that our intervention is less effective for adolescents and more effective for adults. Section 7.3 explores improving the effectiveness of our intervention for adolescents.

Moreover, we found that male participants reported 7.52% lower reframe helpfulness (2.95 vs. 3.19) and 5.88% lower skill learnability (3.04 vs. 3.23) than the population mean. This is consistent with prior work showing that language models are likely to under-emphasize men’s mental health (Lin et al., 2022). Race or ethnicity of the participants was not consistently associated with better or worse outcomes. However, those who identified their race as “Other” reported a 58.73% lower reduction in emotion intensity than the population mean (0.78 vs. 1.89). Moreover, those who identified their race as “Middle Eastern or North African” reported 12.87% lower reframe memorability (2.98 vs. 3.42) and 13.31% lower skill learnability (2.80 vs. 3.23).

Finally, based on education levels, participants with a “Middle School” education reported 9.40% lower reframe helpfulness (2.89 vs. 3.19), 13.45% lower reframe memorability (2.96 vs. 3.42), and 13.62% lower skill learnability (2.79 vs. 3.23). On the other hand, those who identified as “Graduate” reported 6.99% higher reframe relatability (3.98 vs. 3.72), 8.78% higher reframe helpfulness (3.47 vs. 3.19), 7.89% higher reframe memorability (3.69 vs. 3.42), and 8.98% higher skill learnability (3.52 vs. 3.23). Note that age and education are strongly correlated (Pearson’s correlation = 0.62), especially for younger participants who have not yet had the time to advance their education, suggesting that the relationship between education and outcomes may be at least partially explained by age.
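For readers less familiar with the statistic, the following is a minimal sketch of how a Pearson correlation between two ordinal variables can be computed. The integer coding of age brackets and education levels and the sample values are hypothetical, chosen only for illustration; they are not the study's data or coding scheme.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical ordinal codes (NOT study data):
# age bracket index (0 = 13-14, 1 = 15-17, ...) and
# education index (0 = Middle School, 1 = High School, ...).
age_codes = [0, 0, 1, 1, 2, 3, 3, 4]
education_codes = [0, 0, 1, 1, 1, 2, 3, 3]
print(f"r = {pearson_r(age_codes, education_codes):.2f}")
```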

7.3. Improving Intervention Equity by Improving the Experience of Adolescents

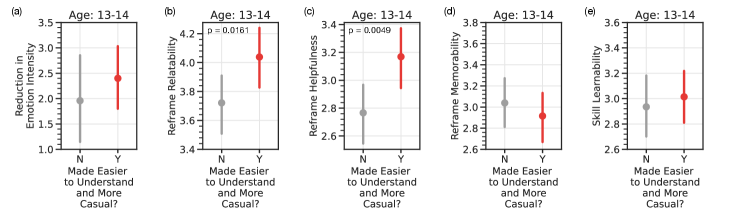

Five different point plots for the age group 13–14, all with an x-axis of “Made Easier to Understand and More Casual?” with two possible values (“N” or “Y”). The y-axes are Reduction in Emotion Intensity, Reframe Relatability, Reframe Helpfulness, Reframe Memorability, and Skill Learnability. The plots show that adolescents in the age group 13 to 14 reported 8.60% higher reframe relatability (4.04 vs. 3.72) and 14.44% higher reframe helpfulness (3.17 vs. 2.77) if they were suggested easier to understand and more casual reframes compared to instances where such reframes were not suggested.

Because intervention effectiveness differs significantly across people’s issues and demographics, it is crucial to identify solutions that improve intervention equity. This may require adapting the intervention to different subpopulations.

Here, we performed one specific experiment to study how our language model-based intervention may be adapted to make it more equitable. We particularly focused on adolescents, for whom we found one of the largest outcome discrepancies. (While we also observed a large outcome discrepancy across educational attainment, this can largely be explained through age, as a 15-year-old has almost certainly not yet had a chance to complete a college education.)

Research suggests that current treatment methods are often structurally incompatible with the ways adolescents engage with, or wish to engage with, mental health care (Kruzan et al., 2022a). Although adolescents are more likely to use self-guided mental health interventions (Schleider et al., 2020), our analysis suggests that our human-language model interaction-based intervention may be less effective for this demographic (Section 7.2). Given the escalating youth mental health crisis (Avenevoli et al., 2015), it is important to develop solutions that bridge this gap. To achieve this, we sought to identify the challenges that uniquely affect adolescents.

We hypothesized that the linguistic complexity of our system may affect its performance among adolescents. Research in sociolinguistics has shown that language use varies with age (Barbieri, 2008). On analyzing the reading complexity of the thoughts and reframes authored by participants of different age groups, we found that those between the ages of 13 and 17 tend to write thoughts and reframes with the lowest levels of reading complexity (based on the Coleman–Liau Index (Coleman and Liau, 1975); Appendix Figure 10). Therefore, we tried to reduce the reading complexity of the reframing suggestions shown to adolescents. For this, given a reframing suggestion, we asked the GPT-3 language model (Brown et al., 2020) to make it easier to understand and more casual (using the prompt, “Revise the following text to make it easy to understand for a 5th grader. Also, make it more casual: {reframe}”), similar to other efforts targeting people of different subpopulations (e.g., for scientific communication (August et al., 2022)). Also, see Appendix Table 8 for examples that illustrate this rewriting process.
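To make the two ingredients of this step concrete, the sketch below computes the Coleman–Liau Index using its standard formula, CLI = 0.0588·L − 0.296·S − 15.8, where L and S are the average numbers of letters and sentences per 100 words, and fills in the prompt template quoted above. The tokenization (whitespace-separated words, sentences counted by terminal punctuation) and the function names are simplifying assumptions of ours; the paper does not specify its implementation, and no model call is shown.

```python
import re

# Prompt template quoted in the text; {reframe} is replaced by the suggestion.
PROMPT_TEMPLATE = (
    "Revise the following text to make it easy to understand for a 5th grader. "
    "Also, make it more casual: {reframe}"
)


def coleman_liau_index(text: str) -> float:
    """Coleman-Liau Index with naive word and sentence splitting (an assumption)."""
    words = text.split()
    if not words:
        raise ValueError("text must contain at least one word")
    letters = sum(ch.isalpha() for ch in text)
    # Count runs of terminal punctuation; treat unpunctuated text as one sentence.
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    letters_per_100_words = letters / len(words) * 100
    sentences_per_100_words = sentences / len(words) * 100
    return 0.0588 * letters_per_100_words - 0.296 * sentences_per_100_words - 15.8


def build_simplification_prompt(reframe: str) -> str:
    """Fill the quoted prompt template with a reframing suggestion."""
    return PROMPT_TEMPLATE.format(reframe=reframe)


# Illustrative sentences (not taken from the study's data).
simple = "I can do it. It is ok."
complex_text = "Catastrophizing frequently exacerbates overwhelming emotional responses."
print(coleman_liau_index(simple) < coleman_liau_index(complex_text))
print(build_simplification_prompt(complex_text))
```

A lower index indicates text that should be readable at a lower grade level, which is the direction the rewriting prompt aims for.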

Figure 6 reports the results of a randomized trial that provided these easier to understand and more casual reframing suggestions to a randomly selected half of the participants. We found that adolescents in the age group 13 to 14 reported 8.60% higher reframe relatability (4.04 vs. 3.72) and 14.44% higher reframe helpfulness (3.17 vs. 2.77) when they were suggested reframes with lower reading complexity (N=148). Moreover, adolescents in the age group 15 to 17 reported 15.58% higher reframe helpfulness (3.19 vs. 2.76) when they were suggested such reframes (N=174). We did not find significant differences for adults through this intervention (N=760; Appendix Figure 11). This suggests that simpler and more casual language might be beneficial to many. However, based on qualitative feedback, certain adult participants expressed a preference for less casual language. Future work could explore how to accommodate such individual preferences.
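As a rough illustration of this trial design, the sketch below assigns each participant to the simplified-reframe condition with probability 0.5 and then averages an outcome rating per condition. The function names, the fixed seed, and the example ratings are hypothetical; the paper does not describe its assignment or analysis code.

```python
import random
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def assign_condition(rng: random.Random) -> str:
    """Return "Y" (simplified, more casual reframes) or "N" with equal probability."""
    return "Y" if rng.random() < 0.5 else "N"


def mean_by_condition(records: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Average an outcome rating separately for each condition."""
    groups: Dict[str, List[float]] = {}
    for condition, rating in records:
        groups.setdefault(condition, []).append(rating)
    return {condition: mean(ratings) for condition, ratings in groups.items()}


# Hypothetical usage: a seeded generator keeps the illustration reproducible.
rng = random.Random(0)
assignments = [assign_condition(rng) for _ in range(10)]
print(assignments)

# Hypothetical (condition, helpfulness rating) pairs, NOT study data.
example_records = [("Y", 4), ("Y", 5), ("N", 3), ("N", 2)]
print(mean_by_condition(example_records))
```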

8. Discussion

8.1. Supporting the Learning and Practice of Self-Guided Interventions

Our work demonstrates how language modeling interventions can support mental health. Approximately 20% of people worldwide are experiencing mental health problems, but less than half receive any treatment (Organization et al., 2022; Olfson, 2016). Due to widespread clinician shortages, lengthy waiting lists, and lack of insurance coverage, many vulnerable individuals have limited access to therapy and counseling. In addition, mental health issues are heavily stigmatized, which frequently prevents individuals from seeking appropriate care (Sickel et al., 2014).

Effective self-guided mental health interventions could rapidly increase access to care (Schleider et al., 2020; Patel et al., 2020; Schleider et al., 2022; Shkel et al., 2023). However, despite their inherent promise, the wide-scale implementation of these interventions remains a challenge owing to the cognitive and emotional demands that they pose (Shkel et al., 2023; Garrido et al., 2019). Most systems that digitally facilitate self-guided interventions simply transform traditional manual therapeutic worksheets into digital online formats (Shkel et al., 2023). These provide limited instructions and support, which affects user engagement and usage (Garrido et al., 2019; Baumel et al., 2019; Fleming et al., 2018; Torous et al., 2020). Other studies have used wizard-of-oz methods to assist users (Ly et al., 2017; Smith et al., 2021; Morris et al., 2015; Kornfield et al., 2023; Kumar et al., 2023). However, the controlled research setting of these studies limits their ecological validity, thereby limiting our understanding of user preferences when systems are deployed in the real world (Mohr et al., 2017; Blandford et al., 2018; Borghouts et al., 2021; Poole, 2013).

Here, we contribute the design of a novel system for human-language model interaction-based self-guided cognitive restructuring of negative thoughts. We conduct a large-scale, randomized, empirical study in an ecologically informed setting to understand how people with lived experience of mental health issues interact with it.

Our findings open up opportunities for improved learning and practicing of key mental health strategies and coping skills. Moreover, these interventions could complement traditional treatment options, e.g., by being accessible to users when they have difficulties finding a therapist, or in between sessions.

8.2. Implications on the Design of Self-Guided Mental Health Intervention

Several of our design hypotheses (Section 4.1) were observed to improve intervention outcomes. These include personalizing the intervention to the participant, facilitating iterative interactivity with the language model, and pursuing equity, all of which may generalize to support other self-guided mental health interventions.