1]\orgdivDepartment of CSE, \orgnameUttara University, \orgaddress\countryBangladesh 2]\orgdivDepartment of CSE, \orgnameManarat International University, \orgaddress\countryBangladesh

FaceGemma: Enhancing Image Captioning with Facial Attributes for Portrait Images

Abstract

Automated image caption generation is essential for improving the accessibility and understanding of visual content. In this study, we introduce FaceGemma, a model that focuses on accurately describing facial attributes such as emotions, expressions, and features. Using FaceAttdb data, we generated descriptions for 2000 faces with the Llama 3 - 70B model and fine-tuned the PaliGemma model with these descriptions. Based on the attributes and captions supplied in FaceAttDB, we created a new description dataset where each description perfectly depicts the human-annotated attributes, including key features like attractiveness, full lips, big nose, blond hair, brown hair, bushy eyebrows, eyeglasses, male, smile, and youth. This detailed approach ensures that the generated descriptions are closely aligned with the nuanced visual details present in the images. Our FaceGemma model leverages an innovative approach to image captioning by using annotated attributes, human-annotated captions, and prompt engineering to result in high quality facial descriptions. Our method significantly improved caption quality, achieving an average BLEU-1 score of 0.364 and a METEOR score of 0.355. These metrics demonstrate the effectiveness of incorporating facial attributes into image captioning, providing more accurate and descriptive captions for portrait images.

keywords:

Facial attributes, Portrait images, Llama3, Gemma, PaliGemma, VGG-Face model, InceptionV3, LSTM model, FaceGemma, BLEU score, METEOR score, Linguistic coherence.1 Introduction

Image captioning [1] represents a captivating and multifaceted interdisciplinary challenge, uniting the realms of Computer Vision [2] and Natural Language Processing (NLP) [3]. Its primary objective is to create meaningful and contextually relevant captions for images by using advanced Deep Learning models [4]. These models are pivotal in extracting important visual features and understanding the semantic context necessary for generating informative and coherent human-readable captions. With far-reaching applications that include aiding the visually impaired and enriching human-computer interactions, image captioning stands at the forefront of AI research.

The landscape of automatic image captioning has witnessed remarkable progress, largely attributed to the advent of Deep Neural Networks and the availability of extensive captioning datasets. These networks are renowned for producing fact-based image descriptions. Recent endeavors in this domain have pushed the boundaries, focusing on detecting emotional and relational facets within images and infusing captions with emotive features to craft more engaging and immersive descriptions. In summation, image captioning remains a pivotal enabler of AI technology, narrowing the gap between visual content and human language to facilitate seamless human-machine interactions.

In the realm of modern Image Captioning, an encoder-decoder paradigm [5, 6] is commonly adopted. This top-down approach begins with a Convolutional Neural Network (CNN) [7] model performing image content encoding, followed by a Long Short-Term Memory (LSTM) [8] model which is responsible for generating the image caption through decoding. Based on a thorough review of the current state-of-the-art, we have decided to develop our Facial Attribute Image Captioning Model, FaceGemma, following this paradigm.

Several image captioning systems have addressed facial expressions and emotions, but facial attribute captioning goes further by capturing and describing specific physical characteristics beyond emotions. To our knowledge, despite the presence of some facial attribute recognition models, there is no image captioning system that can generate captions based on the attributes of a subject’s face from an image. This gap in the literature has motivated our work on building a Facial Attribute Image Captioning Model, named FaceGemma.

In this paper, we also introduce the dataset FaceAttDB 111https://www.kaggle.com/datasets/naimul314/faceattdb carefully curated and generated by our research team. This dataset contains comprehensive descriptions of 40 facial attributes such as attractiveness, full lips, big nose, blond hair, brown hair, bushy eyebrows, eyeglasses, male, smile, and youth, among others. We further used these captions and attributes to generate new descriptions of the images using Llama3 70B parameterized model and prompt engineering. It serves as a foundation for our proposed model, FaceGemma 222https://huggingface.co/naimul011/FaceGemma, which leverages the synergy of Computer Vision and Natural Language Processing to automatically recognize and describe this diverse range of facial attributes within images. Our contributions can be summarized as follows:

-

1.

The FaceGemma model, takes a pioneering approach by generating portrait image descriptions that seamlessly incorporate a wide range of facial attributes. To the best of our knowledge, this represents the first study to apply facial attribute analysis within the realm of image captioning.

-

2.

We evaluate our FaceGemma model through a set of experiments utilizing well-established evaluation metrics, namely BLEU and METEOR. Furthermore, we systematically compare the impact of several image feature extraction techniques on our model’s performance.

-

3.

The ’FaceAttDB’ image caption dataset, comprising portrait images sourced from the CelebA dataset [24], accompanied by captions. We have made this dataset publicly available333https://zenodo.org/record/8144361 to support future research in this field.

The remainder of the paper is structured as follows: Section 2 covers related works, Section 3 introduces our dataset, Section 4 details the facial description generation which is used for the final method, Section 5 presents our proposed methodology, Section 6 presents our results, and finally, Section 7 concludes the paper.

2 Related Works

In the intersection of Computer Vision and Natural Language Processing, the field of image captioning for facial images offers many opportunities. It can enhance human-computer interaction, help understand emotional expressions, and support assistive technologies. Although research on captioning facial attributes is still new, it builds on established areas like general image captioning, facial expression analysis, and face recognition.

In face recognition, Parkhi et al.[9] (2015) developed a deep neural network that learns discriminative features from facial images, achieving high accuracy despite challenges like lighting and pose variations, but requiring extensive training datasets and computational resources. Nezami et al.[10] (2018) introduced SENTI-ATTEND, integrating sentiment analysis and attention mechanisms into image captioning to produce emotionally meaningful and contextually relevant captions. Fuhai Chen et al.[11] (2018) proposed ”GroupCap,” a framework for group-based image captioning that uses structured relevance and diversity constraints to create coherent and diverse captions for groups of images, improving the quality and richness of image descriptions.

In 2019, Nezami et al. introduced Face-Cap[12], a method that combined facial expression analysis with caption generation, achieving better performance than traditional methods. Later in 2019, Hong et al. introduced the Facial Expression Sentence (FES) Generation[13], which associated facial action units with natural language descriptions to capture facial expressions in image captions. In 2020, FACE-CAP and FACE-ATTEND[14] were presented, integrating facial expression features into caption generation to create emotionally resonant captions.

Facial Recognition for Identity Cards [15] Usgan et al. made significant contributions in 2020 by improving facial recognition for electronic identity cards, resulting in enhanced identification accuracy. Moreover, Tran et al Entity-Aware News Image Captioning [16], (2020) demonstrated an innovative approach by integrating named entity recognition with transformer-based caption generation, leading to more informative image descriptions.

In 2021, BORNON[17] improved Bengali image captioning. In 2022, Priya S. enriched captions with emotional cues Emotion-Based Caption Generation [18], while Bisikalo explored emotional attitudes [19]. Object-centric unsupervised Captioning[20], comprehensive facial representation, and fair attribute classification advanced the field.

A 2022 study integrated visual and linguistic cues for comprehensive facial representation, aiding facial attribute prediction and emotion recognition named by General Facial Representation Learning [21] in 2022. Park et al. proposed Fair Contrastive Learning for Facial Attribute Classification [22] addressed biased learning in facial attribute classification, achieving fairer results. In 2023, EDUVI [23] combined Visual Question Answering and Image Captioning to enhance primary-level education through interactive and informative mechanisms.

While these studies contribute significantly to image captioning, a notable gap exists in the exploration of facial attributes in portrait image captioning, presenting an opportunity for future research, such as the proposed FaceGemma model.

3 Dataset

Our dataset comprises 2,000 curated portrait images, sourced from the CelebA dataset [24], which boasts over 200,000 celebrity images with 10,177 unique identities and various facial attributes. CelebA supports numerous computer vision tasks. Each image in our dataset is paired with five captions.

The captions in this dataset are generated based on the 40 attribute annotations available in the CelebA dataset, which covers various aspects such as age, gender, expression, hair color, eye color, nose shape, and skin complexion. These attributes are used to create captions that accurately describe the visual characteristics of the portrait images. We have included a figure displaying examples of our generated captions, in which we emphasize and highlight the various facial attributes Figure 7. This visual representation serves to illustrate the accuracy and effectiveness of our image captioning model in capturing and describing facial features, contributing to a better understanding of its performance

It is important to note that while the FaceAttDB dataset currently comprises 2,000 images, each with five captions, the size of the dataset may be expanded in future work to enhance the diversity and robustness of the models trained on it which ensures its continued usefulness for advancing research in facial attribute captioning.

4 Facial Description Engineering

The captions that are annotated by humans do not contain all or most of the attributes from our attribute vector list. Hence, we had to engineer prompts that effectively generate facial descriptions using human captions, attributes, and prompt engineering. The engineered prompts are used to generate facial descriptions using the Llama3 large language model.

4.1 Llama3

Meta’s Llama3 [32] is an advanced open-source large language model (LLM) available in two sizes: 8 billion and 70 billion parameters. It excels in comprehending and generating intricate text, making it highly suitable for tasks such as image captioning. Although there isn’t an official research paper published yet, benchmarks indicate that Llama3 surpasses most other open-source models of similar scale. This superiority can be attributed to its training on an extensive dataset, which is seven times larger than its predecessor, and advancements in training methodologies. Llama3 offers superior performance for text generation tasks compared to currently available open-source alternatives.

In this study, we employed the Llama 3 70B model which is 3 generation iteration of Meta’s Llama language model [34] to enhance the generation of facial attribute descriptions within the FaceAttDB dataset. This model was instrumental in generating detailed descriptions for 2000 faces, leveraging advanced prompt engineering and attribute annotations. The Llama 3 70B model facilitated the creation of a new dataset where each description meticulously portrays human-annotated attributes such as attractiveness, full lips, big nose, blond hair, brown hair, bushy eyebrows, eyeglasses, male, smile, and youth.

By leveraging the Llama 3 70B model, we ensured that the generated descriptions accurately capture nuanced visual details present in portrait images. These descriptions served as crucial training data for fine-tuning our PaliGemma model, a 2 billion parameter model designed to excel in facial attribute captioning. Figure 2 shows a few of the samples of the generated facial description.

5 Proposed methodology

In this section, we outline the key processes of our FaceGemma model which is our fine-tuned PaliGemma model. We start by explaining the interworkings of PaliGemma and then how this multimodal model inference on the image using prompts.

5.1 PaliGemma

PaliGemma [33] is a vision-language model that processes both image and text inputs, inspired by PaLI-3 [30] and incorporating SigLIP [29] and Gemma [28] components. It answers questions about images, generates captions for visual media, detects objects, and reads embedded text. It offers general-purpose pre-trained models adaptable through fine-tuning for diverse applications, alongside research-oriented models specialized for specific datasets. Fine-tuning optimizes PaliGemma for specific tasks, enhancing accuracy and relevance in both general and research contexts, making it versatile for detailed image analysis and multimodal understanding.

5.2 Prompt Engineering

To generate new descriptions from the dataset , which contains attributes and captions , we employ prompt engineering . Here, represents each sample caption-attribute pair from which can be represented as . The process involves applying prompt engineering to each , using a specific prompt, to produce descriptions that are tailored to the attributes and captions provided in the dataset .

5.3 Description Generation

The equation shows mathematically how we generate facial descriptions for the images, using prompt engineering and language model function:

This formulation ensures that the generated descriptions reflect the nuanced details of the attributes and captions present in the original dataset, thereby enhancing the accuracy and relevance of the generated text outputs using Llama3’s 70B parameterized model.

Our engineered prompt instructs the Llama3 model to expertly analyze attributes and captions, and craft a coherent paragraph description of the face. Key guidelines include combining positive attributes with ”and” for clarity, excluding attributes with a value of 0.0, in the attribute vector, to focus on significant features, and emphasizing attributes labeled at 1.0. The goal is to ensure the descriptions are fluent and natural, resembling human-generated text.

5.4 Method

Our overall method is shown in figure 3 where the first step of facial description generation has been shown which is also explained in the previous subsection section.

Next, the generated description dataset and corresponding image dataset are used for fine-tuning the PaliGemma model to form FaceGemma. This fine-tuning takes center stage in transforming PaliGemma into FaceGemma. Each image, , and description is paired with its corresponding description, and PaliGemma learns to bridge the gap between its own generated descriptions and the human-written ones in . This iterative process involves minimizing a loss function that measures this difference. Finally, FaceGemma’s newfound expertise is evaluated on a separate dataset to ensure it can describe facial features effectively in unseen images. Through this focused training, FaceGemma leverages PaliGemma’s foundation and the rich details in to become a master of facial attribute description.

5.5 Inference

The inference process of the fine-tuned FaceGemma has been depicted in the figure 4. The testing image is first converted into soft tokens using the visual feature extractor model SigLip. Simultaneously the prompt is supplied which is tokenized by Gemma’s tokenization method into word tokens. These soft tokens or image features are concatenated with the word tokens and passed to the fine-tuned Gemma model to get a descriptive response of the supplied portrait image.

5.6 Training FaceGemma Model

Before training, the batch size, number of training examples, and learning rate hyperparameters are tuned to improve the model’s performance. In the experiment you described, a batch size of 4, 512 training examples, and a learning rate of 0.003 were used.

Figure 5 shows a graph of training loss over steps for training PaliGemma, a model for generating descriptions of portrait images. The x-axis represents the number of training steps, while the y-axis represents the training loss. It is calculated by comparing the model’s generated descriptions to the ground truth descriptions. A lower training loss indicates that the model is performing better.

The graph shows that the training loss decreases over time, which means that the model is gradually learning to generate better descriptions of portrait images.

6 Result

We evaluated our model FaceGemma’s predicted captions using BLEU and METEOR scores.

BLEU (Bilingual Evaluation Understudy) [31] is a metric for evaluating machine-generated translations by measuring the similarity between generated and reference text using n-gram overlap. The BLEU-n score is calculated as in Eq. 6.1.

| (6.1) |

BP is the Brevity Penalty, is the maximum n-gram order (typically 4), and is the precision of n-grams. and BP are calculated as in Eq. 6.2 and Eq. 6.3.

| (6.2) |

| (6.3) |

BLEU ranges from 0 to 1, with higher scores indicating better translations. Different BLEU variants (BLEU-1, BLEU-2, BLEU-3, BLEU-4) consider different n-gram orders.

METEOR (Metric for Evaluation of Translation with Explicit Ordering) [35] is another metric for translation quality. It considers precision, recall, and to balance them:

| (6.4) |

Precision and recall involve n-gram matching and typically equals 0.9. METEOR provides a comprehensive evaluation of translation quality, including word order and vocabulary differences.

| Model | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | METEOR |

|---|---|---|---|---|---|

| VGGFace | 0.3357 | 0.0849 | 0.0252 | 0.0064 | 0.2670 |

| VGGFace + Att | 0.3737 | 0.0881 | 0.0222 | 0.0034 | 0.2776 |

| ResNet50 | 0.3408 | 0.0725 | 0.0181 | 0.0034 | 0.2674 |

| ResNet50 + Att | 0.3900 | 0.0849 | 0.0219 | 0.0060 | 0.3048 |

| InceptionV3 | 0.3050 | 0.0601 | 0.0144 | 0.0015 | 0.2083 |

| InceptionV3 + Att | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |

| FaceGemma (our) | 0.3641 | 0.2349 | 0.1514 | 0.0988 | 0.3550 |

Our experiments involved comparing FaceGemma with several baseline models, namely VGGFace, ResNet50, and InceptionV3, each augmented with LSTM language models and facial attribute vectors. Table 1 presents a comparison of BLEU and METEOR scores between FaceGemma and the baseline models. FaceGemma achieved notable improvements, particularly in BLEU-2 (0.2349), BLEU-3 (0.1514), BLEU-4 (0.0988), and METEOR (0.3550) metrics, surpassing all other techniques evaluated.

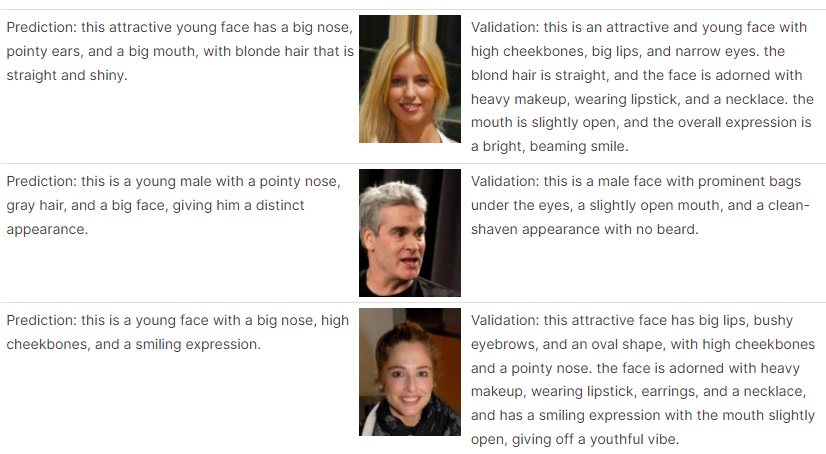

Figure 6 and 7 illustrate examples of predictions generated by FaceGemma, showcasing its capability to produce accurate and contextually relevant captions for facial images. These results demonstrate the effectiveness of FaceGemma in leveraging both visual features and facial attributes for enhanced caption generation.

7 Conclusion

In this research, we introduced the FaceGemma model for generating descriptive and contextually relevant captions for portrait images, focusing on the integration of facial attributes into the captioning process. We conducted extensive experiments, trained our model, and evaluated its performance using BLEU and METEOR scores.

Our results have demonstrated the potential of the FaceGemma model in the field of facial attribute captioning. Additionally, the inclusion of facial attributes significantly improved the model’s performance, underlining the importance of considering attribute information in multilingual captioning tasks.

It is worth noting that our research is unique in its focus on captioning based on facial attributes. To the best of our knowledge, there is no prior work in the literature that directly compares with our approach. This highlights the novelty and pioneering nature of our research, breaking new ground in the intersection of computer vision and natural language processing.

Our FaceGemma model, trained and evaluated rigorously, paves the way for diverse applications in the fields of computer vision, image understanding, and multilingual content generation. Its ability to generate contextually relevant captions for portrait images, taking into account both visual features and facial attributes, holds great promise for applications such as image indexing, content retrieval, and accessibility for visually impaired individuals.

As the field of computer vision and natural language processing continues to evolve, our work contributes a valuable step forward in the development of models that can understand and describe the visual world with nuance and accuracy. We anticipate that our research will inspire further exploration in this exciting and groundbreaking area, opening new avenues for innovation and discovery in the realm of image captioning and beyond.

References

- [1] Sarang, Poornachandra. ”Image Captioning.” Artificial Neural Networks with TensorFlow 2: ANN Architecture Machine Learning Projects. Apress, Berkeley, CA, 2021, pp. 471-522. ISBN: 978-1-4842-6150-7 DOI: 10.1007/978-1-4842-6150-7_10 URL: https://doi.org/10.1007/978-1-4842-6150-7_10

- [2] Srivastava, Rajeev. (2013). Research Developments in Computer Vision and Image Processing: Methodologies and Applications. IGI Global.

- [3] Khurana, Diksha, Aditya Koli, Kiran Khatter, and Sukhdev Singh. ”Natural Language Processing: State of the Art, Current Trends and Challenges.” Multimedia Tools and Applications, vol. 82, no. 3, pp. 3713–3744, 2023. Springer.

- [4] Ian Goodfellow, Yoshua Bengio, and Aaron Courville. Deep Learning. MIT Press, 2016. Available online: http://www.deeplearningbook.org.

- [5] Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhudinov, Rich Zemel, and Yoshua Bengio. Show, attend and tell: Neural image caption generation with visual attention. In International conference on machine learning, pages 2048–2057, 2015. Publisher: PMLR.

- [6] Xinyu Xiao, Lingfeng Wang, Kun Ding, Shiming Xiang, and Chunhong Pan. Deep Hierarchical Encoder–Decoder Network for Image Captioning. IEEE Transactions on Multimedia, volume 21, number 11, pages 2942-2956, 2019. DOI: 10.1109/TMM.2019.2915033.

- [7] Jianxin Wu. Introduction to Convolutional Neural Networks. National Key Lab for Novel Software Technology. Nanjing University. China, volume 5, number 23, page 495, 2017.

- [8] Greg Van Houdt, Carlos Mosquera, and Gonzalo Nápoles. A Review on the Long Short-Term Memory Model. Artificial Intelligence Review, volume 53, pages 5929-5955, 2020. Publisher: Springer.

- [9] Omkar M. Parkhi, Andrea Vedaldi, and Andrew Zisserman. Deep Face Recognition. In Proceedings of the British Machine Vision Conference (BMVC), pages 41.1-41.12, September 2015. Publisher: BMVA Press. DOI: 10.5244/C.29.41. URL: https://dx.doi.org/10.5244/C.29.41.

- [10] Omid Mohamad Nezami, Mark Dras, Stephen Wan, and Cecile Paris. Senti-attend: Image Captioning Using Sentiment and Attention. arXiv preprint arXiv:1811.09789, 2018.

- [11] Fuhai Chen, Rongrong Ji, Xiaoshuai Sun, Yongjian Wu, and Jinsong Su. Groupcap: Group-Based Image Captioning with Structured Relevance and Diversity Constraints. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1345-1353, 2018.

- [12] Omid Mohamad Nezami, Mark Dras, Peter Anderson, and Len Hamey. Face-Cap: Image Captioning using Facial Expression Analysis. CoRR, volume abs/1807.02250, 2018. URL: http://arxiv.org/abs/1807.02250.

- [13] Joanna Hong, Hong Joo Lee, Yelin Kim, and Yong Man Ro. Face Tells Detailed Expression: Generating Comprehensive Facial Expression Sentence Through Facial Action Units. In MultiMedia Modeling: 26th International Conference, MMM 2020, Daejeon, South Korea, January 5–8, 2020, Proceedings, Part II, pages 100-111, 2020. Publisher: Springer.

- [14] Omid Mohamad Nezami, Mark Dras, Stephen Wan, and Cecile Paris. Image Captioning Using Facial Expression and Attention. Journal of Artificial Intelligence Research, volume 68, pages 661-689, 2020.

- [15] M Usgan, R Ferdiana, and I Ardiyanto. Deep Learning Pre-trained Model as Feature Extraction in Facial Recognition for Identification of Electronic Identity Cards by Considering Age Progressing. IOP Conference Series: Materials Science and Engineering, volume 1115, number 1, pages 012009, 2021. Publisher: IOP Publishing. DOI: 10.1088/1757-899X/1115/1/012009. URL: https://dx.doi.org/10.1088/1757-899X/1115/1/012009.

- [16] Alasdair Tran, Alexander Mathews, and Lexing Xie. Transform and Tell: Entity-Aware News Image Captioning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 13035-13045, 2020.

- [17] Faisal Muhammad Shah, Mayeesha Humaira, Md Abidur Rahman Khan Jim, Amit Saha Ami, and Shimul Paul. Bornon: Bengali Image Captioning with Transformer-based Deep Learning Approach. 2021. Eprint: arXiv:2109.05218. Archive Prefix: arXiv. Primary Class: cs.CV.

- [18] Jayakumar Kaliappan, Senthil Kumaran Selvaraj, Baye Molla, and others. Caption Generation Based on Emotions Using CSPDenseNet and BiLSTM with Self-Attention. Applied Computational Intelligence & Soft Computing, 2022.

- [19] Oleg Bisikalo, V Kovenko, I Bogach, and O Chorna. Explaining Emotional Attitude Through the Task of Image-captioning. In Proceedings of the 6th International Conference on Computational Linguistics and Intelligent Systems (COLINS 2022). Volume I: Main Conference, Gliwice, Poland, May 12-13, 2022. Publisher: RWTH Aachen University.

- [20] Zihang Meng, David Yang, Xuefei Cao, Ashish Shah, and Ser-Nam Lim. Object-centric Unsupervised Image Captioning. In European Conference on Computer Vision, pages 219-235, 2022. Publisher: Springer.

- [21] Yinglin Zheng, Hao Yang, Ting Zhang, Jianmin Bao, Dongdong Chen, Yangyu Huang, Lu Yuan, Dong Chen, Ming Zeng, and Fang Wen. General Facial Representation Learning in a Visual-Linguistic Manner. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 18697-18709, 2022.

- [22] Sungho Park, Jewook Lee, Pilhyeon Lee, Sunhee Hwang, Dohyung Kim, and Hyeran Byun. Fair Contrastive Learning for Facial Attribute Classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10389-10398, 2022.

- [23] Manisha Gupta, Priya Asthana, and Preetvanti Singh. EDUVI: An Educational-Based Visual Question Answering and Image Captioning System for Enhancing the Knowledge of Primary Level Students. 2023.

- [24] Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. Deep Learning Face Attributes in the Wild. Proceedings of International Conference on Computer Vision (ICCV), December 2015.

- [25] Karen Simonyan and Andrew Zisserman. Very Deep Convolutional Networks for Large-scale Image Recognition. arXiv preprint arXiv:1409.1556, 2014.

- [26] Luqman Ali, Fady Alnajjar, Hamad Al Jassmi, Munkhjargal Gocho, Wasif Khan, and M Adel Serhani. Performance Evaluation of Deep CNN-Based Crack Detection and Localization Techniques for Concrete Structures. Sensors, volume 21, number 5, pages 1688, 2021. Publisher: MDPI.

- [27] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe, Jon Shlens, and Zbigniew Wojna. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2818-2826, 2016.

- [28] Gemma Team, Thomas Mesnard, Cassidy Hardin, et al. Gemma: Open Models Based on Gemini Research and Technology. Year: 2024. eprint: 2403.08295. archivePrefix: arXiv. primaryClass: cs.CL. url: https://arxiv.org/abs/2403.08295

- [29] Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer. Sigmoid Loss for Language Image Pre-Training. Year: 2023. eprint: 2303.15343. archivePrefix: arXiv. primaryClass: cs.CV. url: https://arxiv.org/abs/2303.15343

- [30] X. Chen et al. PaLI-3 Vision Language Models: Smaller, Faster, Stronger. Year: 2023. eprint: 2310.09199. archivePrefix: arXiv. url: https://arxiv.org/abs/2310.09199

- [31] Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu. Bleu: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th annual meeting of the Association for Computational Linguistics, pages 311-318, 2002.

- [32] Meta AI. (2024). Meta Llama 3 [Online]. Retrieved July 9, 2024, from [llama3](https://llama.meta.com/)

- [33] Google Research. (n.d.). PaliGemma model README. GitHub. Retrieved July 9, 2024, from [PaliGemma](https://github.com/google-research/big_vision/blob/main/big_vision/configs/proj/paligemma/README.md)

- [34] Touvron, H., Lavril, T., Izacard, G., Martinet, X., Lachaux, M.-A., Lacroix, T., … & Joulin, A. (2023). LLaMA: Open and Efficient Foundation Language Models. [arXiv:2302.13971](https://arxiv.org/abs/2302.13971)

- [35] Satanjeev Banerjee and Alon Lavie. METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments. In Proceedings of the acl workshop on intrinsic and extrinsic evaluation measures for machine translation and/or summarization, pages 65-72, 2005.

- [36] Naimul Haque, Iffat Labiba, and Sadia Akter. “FaceAtt: Enhancing Image Captioning with Facial Attributes for Portrait Images.” arXiv preprint arXiv:2309.13601 (2023).

- [37] Naimul Haque and Abida Sultana. “FaceAttDB: A Multilingual Dataset for Facial Attribute Captioning.” Zenodo, 2023. doi: 10.5281/zenodo.8144361.