FA-GANs: Facial Attractiveness Enhancement with Generative Adversarial Networks on Frontal Faces

Abstract

Facial attractiveness enhancement has been an interesting application of computer graphics and vision in recent years. It aims to generate a more attractive face by manipulating the facial image while preserving face identity. In this paper, we propose the first Generative Adversarial Networks (GANs) for enhancing facial attractiveness in both the appearance and geometry aspects, which we call “FA-GANs”. FA-GANs contain two parallel branches, both of which are GANs, used to enhance facial attractiveness from two perspectives: facial appearance and facial geometry. With an embedded ranking module, the proposed FA-GANs are able to evaluate facial attractiveness and extract attractiveness features, which are then imposed on the enhancement results. This also enables us to train the networks without the paired faces that most previous methods require. Benefiting from the parallel structure, the appearance and geometry networks are able to adjust face appearance and face geometry independently. The consistency of the outcomes of the two branches is enforced by a geometry consistency module which links the two branches naturally. To the best of our knowledge, we are the first to enhance facial attractiveness by considering both the appearance and geometry aspects using GANs under a unified framework. Experimental results show that FA-GANs generate compelling results and outperform state-of-the-art methods.

Index Terms:

Facial attractiveness enhancement, generative adversarial networks, geometry and appearance adjustment.

I Introduction

Related research has shown that human faces tend to make a powerful first impression and may thus influence later social behaviors. The advantages brought by attractive faces have been demonstrated in many scientific studies [1]. Consequently, a growing number of celebrities enhance their facial attractiveness in daily life.

Enhancing facial attractiveness is the process of adjusting a given face in the sense of visual aesthetics. Besides its academic interest, it also has a wide market in the entertainment industry as a way to beautify portraits. Facial attractiveness enhancement has thus received considerable attention in the graphics and vision communities.

During the last decade, the proposed facial manipulation methods can be roughly divided into two categories. The first category resorts to traditional Computer Graphics techniques which warp the input face to alter facial appearance. To enhance 2D facial image attractiveness, Leyvand et al. [2] introduce a method by learning the distances among facial key points and adjusting the facial image with multilevel free-form deformation. Li et al. [3] simulate makeup through adaptations of physically-based reflectance models. For the 3D face models with both geometry and texture information, Liao et al. [4] propose a sequence of manipulations concerning symmetry, frontal face proportion, and facial angular profile proportion. The methods in the other category generate the desired face via building the generative models by using deep learning. The representative methods are the deep generative networks which have exhibited a remarkable capability in facial image generation. BeautyGAN [5] adjusts facial appearance by transferring the makeup style from a given reference face to another non-makeup one. Though promising results are generated, this method mainly focuses on style transfer and cannot be generalized to automatic facial attractiveness enhancement easily. It is widely recognized that the geometry structure of the face plays a critical role in facial attractiveness. However, to the best of our knowledge, till now there does not exist a unified framework for improving the attractiveness involving both the appearance and geometry aspects.

The purpose of this paper is to automate facial attractiveness enhancement in a unified deep learning framework which could not only alter the facial appearance but also improve the geometry structure. A key challenge in our task is the lack of paired data which can be used for training. It is obviously very expensive to collect a large quantity of paired faces such that each pair is of the same individual before and after enhancement for supervised learning. Another challenge is that people may define facial attractiveness in different ways. Their answers vary a lot when asked to explain this concept. However, there does exist a general criterion in some ways, such that most actresses or actors are recognized by the majority for their beauty or handsomeness. Therefore, it is possible to explore the relationship between the ordinary and attractive faces to make the enhancement task tractable, even though it might be difficult to build a set of explicit, explainable, and well acknowledged rules to define facial attractiveness.

In this paper, we propose the facial attractiveness enhancement Generative Adversarial Networks, called FA-GANs, to achieve attractiveness enhancement by altering the input face in both the appearance and geometry aspects. Given the difficulty of collecting paired data, FA-GANs are trained on a dataset we collected, which consists of attractive faces and ordinary ones. The faces in the two subsets are, however, uncorrelated. To learn from unpaired faces, we specifically design a novel attractiveness ranking module which extracts attractiveness features. Facial attractiveness can thus be evaluated with implicit rules in a data-driven manner.

FA-GANs consist of two parallel branches used for the enhancement of facial geometry and appearance, respectively. Both of them have the structure of GANs. For the geometry branch, we employ facial landmarks to depict the geometry structure of a face. For the appearance branch, a ranking module with the structure of VGG-16 is pre-trained, and the extracted feature maps are utilized to depict the attractiveness rules. The ranking module determines the attractiveness domain toward which the ordinary faces should be enhanced. Then, the networks of the deep face descriptor [6] are utilized to minimize the distance between the facial images before and after enhancement. This guarantees that the enhanced face and the original input can be recognized as the same individual. A newly designed geometry enhancement consistency module combines these two branches, ensuring that FA-GANs enhance facial attractiveness in the two aspects consistently.

To the best of our knowledge, we are the first to enhance facial attractiveness with GANs by considering not only the appearance aspect but also the facial geometry. Our comprehensive experiments show that FA-GANs generate compelling perceptual results and outperform state-of-the-art methods.

In addition to the general framework, our paper also makes the following technical contributions.

- We propose FA-GANs for facial attractiveness enhancement with a newly designed geometry enhancement consistency module to automatically enhance the input face in both geometry and appearance aspects.

- The pre-trained attractiveness ranking module is embedded in FA-GANs to learn the features of attractive faces via unsupervised adversarial learning, which does not require the training data to be organized as paired faces.

- FA-GANs consist of two branches, each of which is able to work independently to adjust either facial appearance or geometry.

II Related Work

Facial attractiveness has been investigated for a long time in many research areas, such as computer vision and cognitive psychology. Numerous empirical experiments suggest that beauty cognition is shared across educational background, age, and gender. Facial attractiveness enhancement is closely related to facial attractiveness analysis and face generation. In this section, we first review research on altering facial appearance and geometry, and then place special emphasis on recent facial image generation methods based on generative adversarial networks.

II-A Facial Appearance Enhancement

Arakawa et al. propose a system using interactive evolutionary computing which removes undesirable skin components from human facial images to make the face look beautiful [7]. Other works are devoted to enhancement via face makeup methods. Liu et al. propose a fully automatic makeover recommendation and synthesis system named beauty e-Expert [8]. Deep learning has also been used and has shown remarkable performance. Liu et al. propose an end-to-end deep localized makeup transfer network to automatically synthesize makeup for female faces, achieving natural-looking results [9]. Li et al. achieve high-quality makeup transfer from a given reference makeup face to a non-makeup one with their proposed BeautyGAN [5]. Chang et al. [10] propose PairedCycleGAN, which can quickly transfer the style from an arbitrary reference makeup photo to an arbitrary source photo. Most of these deep learning methods focus on style transfer. By contrast, our aim is to automatically enhance a facial image in the aspects of not only facial appearance but also face geometry. Given a target face, we do not require an additional image as the reference.

II-B Facial Geometry Enhancement

The shapes of beautiful faces are defined in different ways by different individual groups, but beautiful faces can always be recognized to be attractive by individuals from other groups [11].

For 2D facial images, Leyvand et al. propose a data-driven approach for facial attractiveness enhancement [2], which adjusts the geometry structure by learning from the distance vectors of the landmark points. Li et al. propose a deep face beautification framework which is able to automatically modify the geometry structure of a face to boost its attractiveness [12]. For 3D face models, Liao et al. propose an enhancement approach that adjusts geometry symmetry, frontal face proportion, and facial angular profile proportion [4]. They apply proportion optimization to the frontal face, restricted to the Neoclassical Canons and golden ratios. Combining asymmetry correction with the adjustment of frontal or profile proportions can achieve better results. However, the research also suggests that asymmetry correction is more effective than adjusting the frontal or profile proportions [11]. Qian et al. propose the additive focal variational auto-encoder (AF-VAE) to arbitrarily manipulate high-resolution face images [13]. Different from these methods, which mainly alter face geometry based on empirical rules, we explore facial attractiveness by learning from unpaired training data in a data-driven manner, considering that unanimous and measurable rules about attractiveness are difficult to define explicitly.

II-C Generative Adversarial Networks

Our facial enhancement architecture is also one kind of deep generative model derived from GANs [14]. GANs work in a simple and efficient way to train both the generator and discriminator via the min-max two-player game, achieving remarkable results on unsupervised learning tasks. Recently, GANs and their variants have shown great success in realistic image generation including super-resolution [15], image translation [16], and image synthesis [17]. We here briefly review the recent applications of GANs on image generation.

Plenty of modified GAN architectures [18, 19, 20, 21, 22, 23, 24, 25] have been proposed for face generation. For the face age progression problem, Yang et al. propose a pyramid architecture of GANs to learn face age progression, achieving remarkable results [26]. Besides, identity-preserved conditional generative adversarial networks are proposed to generate a face with a target age while preserving the identity [18].

In the research area of face synthesis, Song et al. propose the geometry-guided GANs to achieve facial expression removal and synthesis [27]. Shen et al. synthesize faces while preserving identity with proposed three-player GAN called FaceID-GAN [28]. Bao et al. propose a framework based on GANs to disentangle identity and attributes of faces [24], and recombine different identities and attributes for identity preserving face synthesis in open domain. Li et al. propose an effective object completion algorithm using a deep generative model [21]. Cao et al. propose CariGANs for unpaired photo-to-caricature translation which generates the caricature image in two steps of geometry and appearance adjustment [29].

Inspired by these works, we propose our facial attractiveness enhancement framework based on GANs to generate enhanced and identity-preserved facial images automatically. To the best of our knowledge, no existing GAN-based work is specifically devised to fulfill this task.

III Our Method

The original GANs consist of a generator $G$ and a discriminator $D$. $G$ and $D$ are trained alternately and iteratively via an adversarial process, competing with each other in a min-max game formulated as

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big], \tag{1}$$

where $z$ represents the noise sampled from a prior probability distribution $p_z$, and $x$ denotes a data item sampled from the real data distribution $p_{\mathrm{data}}$. The aim is to train $G$ until $D$ cannot distinguish real samples from generated ones.
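As a small numerical illustration of the min-max objective of the original GANs [14], the sketch below estimates the value function from discriminator outputs. The function name `gan_value` and the toy probabilities are assumptions made for illustration; they are not part of FA-GANs.

```python
import math

def gan_value(d_real, d_fake):
    """Monte Carlo estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs on real samples, each in (0, 1)
    d_fake: discriminator outputs on generated samples, each in (0, 1)
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# A confident discriminator scores real samples near 1 and fakes near 0.
confident = gan_value(d_real=[0.9, 0.95], d_fake=[0.1, 0.05])

# A fooled discriminator outputs 0.5 everywhere, which gives V = -2 log 2.
fooled = gan_value(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
```

The discriminator tries to raise this value, while the generator tries to push it down toward the fooled case.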

Let $X$ and $Y$ denote the domains of unattractive and attractive faces, respectively. No paired faces exist between these two domains. Given an unattractive sample $x \in X$, our goal is to learn a mapping from $X$ to $Y$ which can transfer $x$ to an attractive sample $y \in Y$. Unlike previous works that enhance facial attractiveness in either geometry structure or appearance texture, FA-GANs make the enhancement in both aspects. Therefore, FA-GANs consist of two parallel branches: the geometry adjustment network and the appearance adjustment network.

The proposed framework of FA-GANs with two branches is shown in Fig. 2. Each branch has the architecture of a GAN. To enhance facial attractiveness in both the geometry and appearance aspects, the geometry branch is trained to learn a geometry-to-geometry translation from the unattractive domain to the attractive one. The appearance branch is trained with the help of this geometry translation to learn the adjustment in both the appearance and geometry aspects. Similar to the traditional beautification engine [2] for 2D facial images, facial landmarks are used to represent the geometry structure. Two geometry domains are considered, containing the geometry structures of unattractive and attractive faces, respectively. The geometry branch learns the mapping that converts the geometry structure of an unattractive face to an attractive one. The appearance branch learns to convert an input instance to an intermediate instance, which is an appearance-adjusted face with the same geometry structure as the input. The intermediate domain thus combines the geometry structure of the unattractive domain with the appearance texture of the attractive domain, and the appearance branch learns the mapping from the unattractive domain to this intermediate domain.

To combine these two branches and enhance the facial attractiveness in both aspects, we further minimize the energy function to ensure the consistency between the geometry structure of the intermediate face and the geometry structure generated by geometry branch. The consistency energy is defined as:

| (2) |

To achieve this, we further introduce a pre-trained geometry analysis network to extract the geometry structure from the face generated by the appearance branch. FA-GANs combine the geometry mapping and the appearance mapping, and achieve the overall mapping from $X$ to $Y$ by enforcing the consistency between the geometry and appearance branches. The geometry branch, the appearance branch, and the combination of these two branches are described in detail in the following.

III-A Geometry Enhancement Branch

III-A1 Geometry data

We extract 2D facial landmarks for each of the training face images. For each face, landmarks are detected, and the detected landmarks are located on the outlines of left eyebrow, right eyebrow, left eye, right eye, nose bridge, nose tip, top lip, bottom lip, and chin. Fig. 3 shows an example of extracted landmarks. To normalize the scale, all faces are cropped and resized to the size of pixels. Instead of learning from facial landmarks directly, we prefer to enhance facial attractiveness via adjusting the relative distances among important facial components, such as eyes, nose, lip, and chin. Landmarks are more sensitive to small errors than relative distances. Therefore, the detected landmarks are used to construct a distance embedded mesh containing edges through Delaunay triangulation. We further reduce the dimension of the distance vector to by applying principal component analysis (PCA) and reserve of total variants.
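The construction of the distance vector from a landmark mesh can be sketched as follows. This is a minimal illustration under stated assumptions: the triangulation is passed in as a list of index triples (in the text it comes from Delaunay triangulation), the four toy landmarks are invented, the PCA step is omitted, and the helper names `mesh_edges` and `distance_vector` are hypothetical.

```python
import math

def mesh_edges(triangles):
    """Collect the unique undirected edges of a triangle mesh in a stable order."""
    edges = set()
    for a, b, c in triangles:
        for i, j in ((a, b), (b, c), (a, c)):
            edges.add((min(i, j), max(i, j)))
    return sorted(edges)

def distance_vector(landmarks, edges):
    """Euclidean length of every mesh edge, one entry per edge."""
    return [math.dist(landmarks[i], landmarks[j]) for i, j in edges]

# Toy example: four landmarks forming a 4 x 3 rectangle split into two triangles.
landmarks = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]
triangles = [(0, 1, 2), (0, 2, 3)]

edges = mesh_edges(triangles)
d = distance_vector(landmarks, edges)
```

Because the vector stores only relative distances, it is unchanged by translating or rotating the landmark set, which is one reason a distance embedding is less sensitive than raw coordinates.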

III-A2 Geometry enhancement networks

The geometry branch aims to learn the geometry mapping, while no samples in the two geometry domains are paired. We achieve this by constructing the geometry adjustment GANs from only fully connected (FC) and ReLU [30] layers. Unlike traditional GANs, which train the generator until it fools the discriminator, feature maps are also utilized to help learn the geometry mapping. The generator takes a distance vector as input and outputs the adjusted distance vector. Fig. 3 shows the architecture of our geometry adjustment branch. Apart from the last layer, the discriminator has the same architecture as the generator. The details of the architectures are shown in Table I.

| Module | Layer | Activation size |
|---|---|---|
| | Input | |
| | FC-ReLU | |
| | FC-ReLU | |
| | FC-ReLU | |
| | FC-ReLU | |
| Generator | FC | |
| Discriminator | FC | 1 |

Following the classic GANs [14, 31], the process of training discriminator amounts to minimizing the loss:

| (3) | ||||

where and are the discriminator and the generator, respectively. takes both the unattractive samples and generated samples as negative samples and regards the attractive samples as positive samples. The loss of discriminator can be rewritten as:

| (4) | ||||

Considering that samples coming from the same category have similar feature maps, the feature loss is introduced and the loss of generator is defined as:

| (5) | ||||

where is the FC layer. indicates the i-th layer in the generator .

III-A3 Geometry adjustment on faces

Geometry branch converts to with being the distance vector derived from the landmark points . To adjust facial geometry, the enhanced points corresponding to are estimated by minimizing the following energy function for the best fit,

| (6) |

where is the element of the connectivity matrix of our constructed facial mesh. The distance term is the entry in corresponding to . Minimization of the energy function is performed by the Levenberg-Marquardt [32] algorithm. Then the geometry enhanced face is generated by mapping the original face texture from the mesh constructed by to the mesh of .
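The landmark-fitting energy can be illustrated with a short sketch. It assumes the squared-distance residual form used by the beautification engine of [2], summed over the edges of the mesh; this form, the function name `fit_energy`, and the toy triangle are assumptions for illustration. The sketch only evaluates the energy and does not reproduce the Levenberg-Marquardt minimization.

```python
def fit_energy(points, edges, target):
    """Sum over mesh edges (i, j) of (||p_i - p_j||^2 - d_ij^2)^2.

    points: list of (x, y) candidate landmark positions
    edges:  list of (i, j) index pairs connected in the mesh
    target: desired edge lengths d_ij, aligned with `edges`
    """
    total = 0.0
    for (i, j), d in zip(edges, target):
        dx = points[i][0] - points[j][0]
        dy = points[i][1] - points[j][1]
        total += (dx * dx + dy * dy - d * d) ** 2
    return total

# A 3-4-5 right triangle matches its own edge lengths exactly.
points = [(0.0, 0.0), (3.0, 0.0), (0.0, 4.0)]
edges = [(0, 1), (0, 2), (1, 2)]

energy_exact = fit_energy(points, edges, [3.0, 4.0, 5.0])

# Asking for a hypotenuse of 6 leaves a residual of (25 - 36)^2 on that edge.
energy_stretched = fit_energy(points, edges, [3.0, 4.0, 6.0])
```

A minimizer would move the points until this energy is as small as possible for the enhanced distance vector.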

III-B Appearance Enhancement Branch

The outstanding performance of GANs in fitting data distribution has significantly promoted many computer graphics and vision applications such as image-to-image translation [33, 16, 29, 5]. Inspired by these studies, we employ GANs to perform facial appearance enhancement while preserving identity. The appearance adjustment networks and the loss are introduced in the following.

III-B1 Appearance adjustment networks

The process of appearance enhancement only requires a forward pass through the generator . The generator is designed with the U-Net [34]. The discriminator is introduced to output the indicators suggesting the probability that comes from the attractiveness category. Different from the classic discriminator of GANs, we implement our discriminator with the pyramid architecture [26] to estimate high-level attractiveness-related cues in a fine-grained way.

Specifically, a facial attractiveness classifier is pre-trained with the architecture of VGG-16 [35] to classify attractive and unattractive faces. The hierarchical layers of VGG-16 endow our network with the ability to capture image features from the pixel level to semantic level. The generator is optimized until the discriminator is confused about and for all the pixel and semantic level features. Consequently, the feature maps of the 2nd, 4th, 7th, and 10th convolutional layers in VGG-16 are integrated into the discriminator for adversarial learning. The generator not only transfers to but also preserves the identity. We achieve this by leveraging the network of deep face descriptor [6] to measure the identity similarity between and . The deep face descriptor is trained on a large face dataset containing millions of facial images by recognizing unique individuals. We remove the classification layer and take the last FC layer as the identity preserving output, forming an identity descriptor . Both the original face and enhanced face are fed into to generate with small margin between and .

| Layer | Activation size |
|---|---|
| Input | 3 × 224 × 224 |
| stride padding | |
| stride padding | |
| stride padding | |
| stride padding | |
| stride padding | |
| stride padding | |
| stride padding | |
| stride padding | |
| stride padding | |
| FC-ReLU | |
| FC-ReLU | |
| FC | 32 |

III-B2 Loss

The appearance improved face should be recognized as the same individual as the original one. To this end, the loss concerning appearance enhancement should not only take effect in helping improve the facial attractiveness, but also facilitate the preservation of identity. In addition, the difference between the improved face and the input should be constrained. Considering all these aspects, four types of loss for training the appearance branch are defined. They are the adversarial loss, identity loss, pixel loss, and total variation loss.

a) Adversarial loss. Similar to the geometry adjustment branch, both unattractive faces and the generated faces are deemed as negative faces and the attractive faces are deemed as positive ones. We also adopt the adversarial loss of LSGAN [31] to train the appearance adjustment branch. The adversarial loss is defined as:

| (7) | ||||

| (8) |
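The adversarial objective described above can be sketched with the least-squares form of LSGAN [31]. This is a minimal sketch under stated assumptions: target label 1 for attractive faces, target label 0 for both unattractive and generated faces, equal weighting of the three terms, and scalar discriminator scores. The pyramid discriminator and any weighting used by FA-GANs are not reproduced, and the function names are hypothetical.

```python
def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)

def lsgan_d_loss(d_attractive, d_unattractive, d_generated):
    """Discriminator loss: push positives to 1 and both kinds of negatives to 0."""
    return (mean([(s - 1.0) ** 2 for s in d_attractive])
            + mean([s ** 2 for s in d_unattractive])
            + mean([s ** 2 for s in d_generated]))

def lsgan_g_loss(d_generated):
    """Generator loss: push discriminator scores on generated faces to 1."""
    return mean([(s - 1.0) ** 2 for s in d_generated])

# A perfect discriminator incurs zero loss.
perfect = lsgan_d_loss([1.0], [0.0], [0.0])

# Scoring a generated face at 0.5 costs the discriminator 0.25.
partly_fooled = lsgan_d_loss([1.0, 1.0], [0.0], [0.5])

# The generator is penalized when its output is scored 0.
g_worst = lsgan_g_loss([0.0])
```

Treating unattractive inputs as negatives alongside generated faces means the generator cannot satisfy the discriminator by simply copying its input.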

b) Identity loss. To ensure that appearance enhanced face and the original face are recognized as the same individual, the identity loss is introduced and is defined as:

| (9) |

where is the identity descriptor derived from the deep face descriptor [6].

c) Pixel loss. The generated face is more attractive than the input one. However, the gap between the two in pixel space should be constrained. The pixel loss enforces the enhanced face to differ only slightly from the original face in the raw-pixel space. In addition, experience from other image generation tasks suggests that $L_1$ regularization yields less blurring than $L_2$ regularization. The pixel loss is thus formulated as:

| (10) |

where and are the width and height of the image, respectively, and is the number of channels.
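A pixel loss of this kind can be sketched as the mean absolute difference between the enhanced and original images, normalized by width, height, and number of channels. The sketch assumes images stored as nested lists in channel, row, column order; the function name `pixel_l1_loss` and the toy values are illustrative assumptions.

```python
def pixel_l1_loss(enhanced, original):
    """Mean absolute difference over all W x H x C entries.

    Both images are nested lists indexed as image[channel][row][column].
    """
    total, count = 0.0, 0
    for ch_e, ch_o in zip(enhanced, original):
        for row_e, row_o in zip(ch_e, ch_o):
            for e, o in zip(row_e, row_o):
                total += abs(e - o)
                count += 1
    return total / count

# One channel, 2 x 2 pixels: two pixels differ by 0.5, two are unchanged.
original = [[[0.0, 0.5], [1.0, 0.25]]]
enhanced = [[[0.5, 0.5], [1.0, 0.75]]]

loss = pixel_l1_loss(enhanced, original)
```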

d) Total variation loss. Total variation loss is defined as total variation regularizer [36] in order to encourage spatial smoothness of the enhanced face area .
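The smoothness effect of a total variation regularizer can be illustrated with a short sketch. It assumes the anisotropic variant, which sums absolute differences between horizontally and vertically adjacent pixels of one channel; the exact variant of [36] used by FA-GANs is not stated here, so this choice and the function name `total_variation` are assumptions.

```python
def total_variation(channel):
    """Anisotropic total variation of a 2D grid given as a list of rows."""
    height, width = len(channel), len(channel[0])
    tv = 0.0
    for r in range(height):
        for c in range(width):
            if r + 1 < height:  # difference to the pixel below
                tv += abs(channel[r + 1][c] - channel[r][c])
            if c + 1 < width:   # difference to the pixel on the right
                tv += abs(channel[r][c + 1] - channel[r][c])
    return tv

# A constant patch is perfectly smooth.
flat = total_variation([[0.5, 0.5], [0.5, 0.5]])

# A vertical edge contributes one unit jump per row.
edge = total_variation([[0.0, 1.0], [0.0, 1.0]])
```

Penalizing this quantity discourages isolated pixel-level noise in the enhanced face while still allowing a limited number of sharp edges.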

III-C Geometry Enhancement Consistency

Given a face as well as its distance vector , geometry enhancement branch converts to , where lies in the domain of . At the same time, appearance branch converts to , where lies in the domain of . As illustrated in Fig. 2, FA-GANs combine these two branches and output the enhanced face with the generator of the appearance enhancement branch. The geometry consistency exists between and , where is the distance vector of . To extract the distance vector, an extractor is pre-trained on our dataset. The extractor is constructed with convolutional and FC layers, and each convolutional layer is followed by a Batch Normalization [37] layer and a ReLU [30] layer. The detailed architecture of the extractor is shown in Table II.

The extracted vector is the PCA representation of distance vectors derived from landmarks. Thus, the geometry enhancement consistency is defined with the following loss:

| (11) |

In summary, FA-GANs contain the losses in the appearance branch and the loss enforcing geometry enhancement consistency. Therefore, the overall training loss is expressed as,

| (12) | ||||

| (13) |

We train and alternately until learns the desired facial attractiveness transformation and becomes a reliable estimator.

IV Experiments

In this section, we first report the face dataset used in our experiments. We then analyze the performance of the appearance and geometry branches of our FA-GANs, which corresponds to the ablation study of geometry enhancement consistency module. We finally conduct comprehensive experiments to compare FA-GANs with the state-of-the-arts, including the automatic function of facial enhancement provided by prevalent mobile APPs such as Meitu [38]. In addition to the results generated by FA-GANs, we also show the results obtained by applying our geometry branch and appearance branch successively to the testing faces and compare with relevant methods.

IV-A Datasets

Facial attractiveness analysis is fundamental to facial image enhancement. The attractiveness criterion is explored in a data-driven way in our paper. However, few datasets designed specifically for facial attractiveness analysis are publicly available. Fortunately, Liang et al. propose a diverse benchmark dataset, called SCUT-FBP5500, for the prediction of multi-paradigm facial beauty [39]. The SCUT-FBP5500 dataset contains 5500 frontal faces in total, with diverse properties such as gender (male/female), ethnicity (Asian/Caucasian), and age. Specifically, it includes 2000 Asian females, 2000 Asian males, 750 Caucasian females, and 750 Caucasian males, mostly aged from 15 to 60 with neutral expression. The attractiveness score of each facial image is labeled by 60 different people on a five-point holistic scale, where 1 indicates the most unattractive and 5 the most attractive. Our attractiveness learner classifies the faces into two categories, attractive and unattractive. In order to obtain a dataset with unanimous labels, the faces that have conflicting scores are ruled out. Moreover, we enlarge the dataset by further collecting the portraits of famous beautiful actresses as attractive faces and the portraits of some selected ordinary people as unattractive faces. Totally, female facial images are collected for FA-GANs. The attractiveness and unattractiveness categories contain and images, separately.

IV-B Implementation Details

All faces are detected and their landmark points are extracted. The geometric information extracted are fed to the geometry enhancement branch. All faces are cropped and scaled to the size of pixels as the training data of our appearance enhancement branch. To get a more robust attractiveness ranking module, we also add the color jitter, random affine, random rotation, and random horizontal flip to the facial attractiveness network. The trade-off parameters , , , , and in Equation (12) are set to , , , , and , separately. The Adam [41] algorithm with the learning rate of is used to optimize FA-GANs. FA-GANs are trained on a computer with a 4.20GHz 4-core, i7-7700K Intel CPU and a GTX 1080Ti GPU, which costs around hours for iterations with the batch size of to generate the desired results.

IV-C Branch Analysis of FA-GANs

To investigate the performance of the appearance branch and the geometry branch of our FA-GANs, we perform ablation studies and analyze the effect of each branch. Both branches are variations of GANs. In our implementation, the geometry branch is pre-trained before training FA-GANs. Hence, we explore the performance of the appearance branch by training it without the geometry consistency constraint. The adjustment results of the geometry and appearance branches are shown in Figs. 4 and 5. As seen from Fig. 4, the geometry branch enhances facial attractiveness by generating faces with smaller cheeks and bigger eyes. This suggests that people nowadays prefer smaller faces and bigger eyes. The appearance branch, in contrast, adjusts faces mainly in texture rather than geometry structure, as shown in Fig. 5. It tends to generate faces with clean cheeks, red lips, and black eyes, which reflects the popular perception of beauty. We further analyze the attractiveness results and the time consumption in the following subsection.

IV-D Comparison with State-of-the-Arts

We verify the effectiveness of FA-GANs by comparing our generated results with those of existing methods for geometry and appearance adjustment. Specifically, we make comparisons with the geometry adjustment method proposed in [2] and the appearance adjustment method based on photorealistic image style transfer [40]. We implement [2] with its KNN-based beautification engine: the $K$ nearest beautiful faces are searched in the domain of attractive faces, and $K$ is set to 3. The geometry structure is then adjusted as described in subsection III-A3. Photorealistic image style transfer [40] needs a reference face in order to transfer its style to the input face. We choose the reference by selecting the nearest face in the domain of attractive faces, which can be obtained in the implementation of [2] with $K = 1$. With the help of the facial landmarks we detected, the input face and the reference face are further parsed (using https://github.com/zllrunning/face-parsing.PyTorch) for [40], and exemplar parsed faces are shown in Fig. 6. Furthermore, the attractiveness enhancement results involving both aspects are also analyzed. Given a face, we first adjust its geometry structure with [2] or our geometry branch and then adjust its appearance with our appearance branch or [40]. Moreover, we also compare FA-GANs to BeautyGAN [5] with the reference face selected for [40].
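The neighbor search behind the KNN-based baseline can be sketched as follows. This is a simplified illustration: neighbors are ranked by plain Euclidean distance between distance vectors, any additional weighting used by the engine of [2] is not reproduced, and the function name `k_nearest` and the toy vectors are assumptions.

```python
import math

def k_nearest(query, candidates, k=3):
    """Indices of the k candidate vectors closest to the query, nearest first."""
    order = sorted(range(len(candidates)),
                   key=lambda i: math.dist(query, candidates[i]))
    return order[:k]

# Toy distance vectors standing in for attractive faces.
attractive = [[5.0, 5.0], [1.0, 1.2], [0.9, 1.0], [3.0, 3.0], [1.5, 1.0]]
query = [1.0, 1.0]

neighbors = k_nearest(query, attractive, k=3)   # used for geometry adjustment
reference = k_nearest(query, attractive, k=1)   # used to pick a style reference
```

With `k=1` the same routine returns the single nearest attractive face, which corresponds to the way the reference face for [40] is selected.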

| original | FA-GANs | geo | [2] | app | [40] | geo app | geo [40] | [2] app | [2] [40] | Meitu | BeautyGAN |
|---|---|---|---|---|---|---|---|---|---|---|---|

| total | | | | |
|---|---|---|---|---|
| Geo branch | | | | |
| [2] | | | | |

| face parsing | total | | | |
|---|---|---|---|---|
| App branch | | | | |
| [40] | | | | |

| geo app | geo [40] | [2] app | [2] [40] | FA-GANs |
|---|---|---|---|---|

Some mobile APPs are specifically designed for beautifying facial images and the automatic beautification on facial images is supported. We also make a comparison with the APP of Meitu, which is famous for beautifying the faces. Each face is adjusted by the “One-click beauty” in face beautification module of Meitu and with the default settings.

IV-D1 Qualitative evaluation

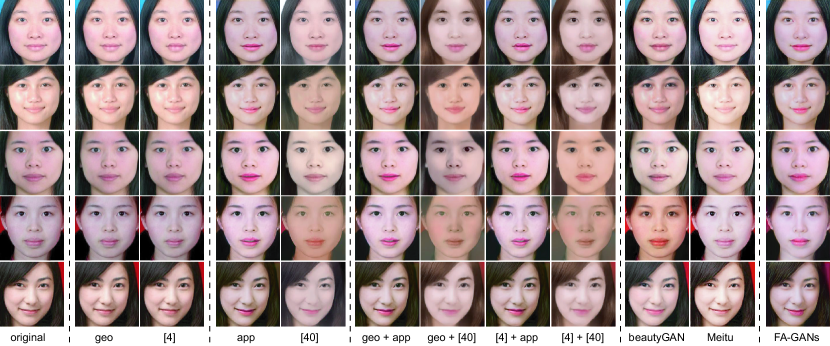

The comparison results are shown in Fig. 7, and are arranged into six groups. They are original, geometry adjustment, appearance adjustment, two-step adjustment, the state-of-the-arts, and FA-GANs, respectively.

In Fig. 7, the method of Leyvand et al. [2] adjusts the geometry structure effectively compared to the original faces, and it also tends to generate portraits with smaller cheeks and bigger eyes. The method of Li et al. [40] adjusts the appearance mainly depending on the reference image, and the generated face looks homogeneous over the face area. BeautyGAN [5] only adjusts the facial image in the appearance aspect and has no effect on the geometry aspect. Moreover, BeautyGAN depends on the reference image and transfers its makeup style to the non-makeup image. Compared to BeautyGAN, “One-click beauty” of Meitu adjusts the face in both aspects. All of the instances generated by Meitu have slightly shrunken cheeks and whiter skin, while the colors of the mouth and eyebrows are diluted. Our appearance branch improves the original faces greatly, generating whiter skin and a makeup style on the mouth, eyes, and eyebrows. The geometry branch adjusts the geometry structure and tends to generate smaller cheeks and bigger eyes in a data-driven manner. FA-GANs adjust the face in both the appearance and geometry aspects. The enhanced faces have the whiter skin and makeup style produced by the appearance branch and the adjusted geometry structure produced by the geometry branch.

IV-D2 Quantitative evaluation

To assess the results of the different methods quantitatively, facial attractiveness is scored with the Face++ API (https://www.faceplusplus.com/beauty/), which provides users with accurate, efficient, and reliable face-based services. It gives two scores, which indicate the percentage of persons that males and females, respectively, generally consider this face more attractive than. groups of the original faces and their results are evaluated. The averaged attractiveness scores for each method are shown in Fig. 8.

All these methods get higher attractiveness scores than the original faces. The statistical results suggest that FA-GANs achieve the best performance among all these methods. FA-GANs promote the attractiveness score from to and to in the female and male views, respectively, which suggests that FA-GANs enhance the attractiveness effectively. The two-step adjustment method, which applies our geometry branch followed by our appearance branch to the input faces, achieves the second best, getting scores of , , respectively and outperforms BeautyGAN (, ) and Meitu (, ). Comparing the geometry adjustment results of our geometry branch and [2], the geometry branch outperforms [2] by a small margin in both the female and male views. On the other hand, the appearance branch performs better than [40], whether the appearance adjustment is applied directly to the original faces or to the intermediate results obtained by a geometry adjustment method. Comparing appearance and geometry adjustment, appearance adjustment always achieves higher scores than geometry adjustment, indicating that people can enhance their attractiveness more by paying attention to makeup than to facelifts.
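The per-method averaging behind a comparison like this can be sketched as follows. This is a minimal illustration, not the authors' evaluation script: the function names, the `(male_score, female_score)` tuple layout, and the ranking rule (mean of the two averaged scores) are assumptions, and the numbers are placeholders rather than results from the paper.

```python
from statistics import mean


def average_scores(per_face_scores):
    """Average the (male_score, female_score) pairs of each method."""
    return {
        method: (mean(m for m, _ in pairs), mean(f for _, f in pairs))
        for method, pairs in per_face_scores.items()
    }


def rank_methods(averages):
    """Sort method names by the mean of their two averaged scores, best first.

    The ranking rule is an assumption made for this sketch.
    """
    return sorted(averages, key=lambda name: sum(averages[name]) / 2, reverse=True)


# Placeholder numbers for illustration only; NOT results from the paper.
toy_scores = {
    "original": [(60.0, 62.0), (70.0, 68.0)],
    "method_a": [(65.0, 66.0), (75.0, 74.0)],
}
averages = average_scores(toy_scores)
ranking = rank_methods(averages)
```

Keeping the male and female averages separate mirrors the two views reported in the text; collapsing them into one number is only used here to order the methods.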

For identity preservation, we measure the cosine similarity between the deep face descriptors of the original face and the adjusted face. The similarity scores are shown in Table III for the methods listed in Fig. 8. As can be seen, all of the methods preserve the identity with the similarity greater than . Meitu preserves the identity best with the similarity of . Our geometry branch achieves the second best with the similarity of , and FA-GANs also perform well with the similarity of .
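The cosine measure used for identity preservation can be sketched as below. The sketch returns a cosine similarity, where larger values mean closer descriptors, which matches how the scores are reported. It assumes descriptors are plain sequences of floats; `describe` is a hypothetical stand-in for the deep face descriptor network, which is not implemented here.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(u) != len(v):
        raise ValueError("descriptors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("descriptors must be non-zero")
    return dot / (norm_u * norm_v)


def identity_similarity(describe, original, adjusted):
    """Compare two faces through a descriptor function.

    `describe` is hypothetical: any callable mapping a face to a vector.
    """
    return cosine_similarity(describe(original), describe(adjusted))
```

A value near 1 means the descriptor barely changed after adjustment; the measure ignores descriptor magnitude, so only the direction of the feature vector matters.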

IV-D3 Runtime analysis

To build an effective and efficient method for enhancing the attractiveness of faces, we further compare the time consumption of these methods. All timing experiments are performed on the aforementioned computer. Geometry adjustment can be divided into three steps: extracting the distance vector from the original face, enhancing the distance vector, and mapping the enhanced distance vector to the enhanced face. The time consumption of these steps is shown in Table IV. As can be seen in Table IV, Leyvand et al. [2] performs faster than our geometry branch in seconds on our geometry adjustment dataset. Both geometry adjustment methods spend most of their time on the mapping to the enhanced face. The appearance branch enhances the attractiveness with only a forward pass through the generator, whereas Li et al. [40] adjust the appearance with an extra reference image and require image parsing to get a better result. The time consumption of appearance adjustment is shown in Table V. It suggests that the appearance branch requires only seconds to make an adjustment on average. Finally, we compare the time consumption of the two-step adjustment method and FA-GANs in Table VI. It demonstrates that FA-GANs are the fastest: an input face can be adjusted in only seconds. Furthermore, we compare FA-GANs with BeautyGAN in adjusting an input facial image. FA-GANs also perform faster than BeautyGAN, which takes seconds on average.
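A per-step timing breakdown of the kind reported for geometry adjustment could be collected with a small harness like the one below. This is a sketch under stated assumptions: `time_stages` is a hypothetical helper, and the three lambdas are trivial stand-ins for the extract, enhance, and map steps, not the actual networks or warping code.

```python
import time


def time_stages(stages, x, repeats=5):
    """Run a staged pipeline `repeats` times.

    Returns the final output and the mean wall-clock seconds per stage.
    `stages` is a list of (name, callable) pairs applied in order.
    """
    totals = {name: 0.0 for name, _ in stages}
    out = x
    for _ in range(repeats):
        out = x
        for name, fn in stages:
            start = time.perf_counter()
            out = fn(out)
            totals[name] += time.perf_counter() - start
    return out, {name: total / repeats for name, total in totals.items()}


# Hypothetical stand-ins for the three geometry-adjustment steps.
stages = [
    ("extract", lambda face: [float(p) for p in face]),
    ("enhance", lambda d: [1.1 * v for v in d]),
    ("map", lambda d: [round(v, 3) for v in d]),
]
result, timings = time_stages(stages, [1, 2, 3])
```

Averaging over several repeats smooths out one-off costs such as cache warm-up; for GPU models, the device would also need to be synchronized before each clock read, which this CPU-only sketch does not cover.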

V Conclusions

We have presented FA-GANs, a deep end-to-end framework for automating facial attractiveness enhancement in both the geometry and appearance aspects. FA-GANs learn the implicit attractiveness rules via the pre-trained facial attractiveness ranking module and avoid training on paired faces, for which a large dataset is extremely difficult to obtain. In this way, FA-GANs enhance facial attractiveness in a data-driven manner. FA-GANs contain two branches, one for geometry adjustment and one for appearance adjustment, and each of them can enhance the attractiveness independently. FA-GANs generate compelling perceptual results and enhance facial attractiveness both effectively and efficiently. These claims are verified by our comprehensive experiments and thorough analysis, which also demonstrate that FA-GANs achieve superior performance over existing geometry and appearance enhancement methods.

Although we have shown the superiority of FA-GANs, several aspects still need to be improved in the future. FA-GANs are limited to frontal faces because our dataset contains few faces in other poses, and such faces are much more difficult to collect than frontal faces. One possible solution is to estimate the facial pose and adjust the facial appearance and geometry in 3D face space, so that the attractiveness of the adjusted faces in other poses can also be evaluated. Another possible solution is to enlarge the facial attractiveness dataset and train facial attractiveness enhancement networks on many faces with varied poses.

References

- [1] D. I. Perrett, K. J. Lee, I. Penton-Voak, D. Rowland, S. Yoshikawa, D. M. Burt, S. Henzi, D. L. Castles, and S. Akamatsu, “Effects of sexual dimorphism on facial attractiveness,” Nature, vol. 394, no. 6696, p. 884, 1998.

- [2] T. Leyvand, D. Cohen-Or, G. Dror, and D. Lischinski, “Data-driven enhancement of facial attractiveness,” in SIGGRAPH, 2008, pp. 38:1–38:9.

- [3] C. Li, K. Zhou, and S. Lin, “Simulating makeup through physics-based manipulation of intrinsic image layers,” in CVPR, June 2015.

- [4] Q. Liao, X. Jin, and W. Zeng, “Enhancing the symmetry and proportion of 3d face geometry,” TVCG, vol. 18, no. 10, pp. 1704–1716, Oct 2012.

- [5] T. Li, R. Qian, C. Dong, S. Liu, Q. Yan, W. Zhu, and L. Lin, “Beautygan: Instance-level facial makeup transfer with deep generative adversarial network,” in Proceedings of the 26th ACM International Conference on Multimedia, ser. MM ’18. New York, NY, USA: ACM, 2018, pp. 645–653.

- [6] O. M. Parkhi, A. Vedaldi, and A. Zisserman, “Deep face recognition,” in BMVC, X. Xie, M. W. Jones, and G. K. L. Tam, Eds., September 2015, pp. 41.1–41.12.

- [7] K. Arakawa and K. Nomoto, “A system for beautifying face images using interactive evolutionary computing,” in ISPACS, Dec 2005, pp. 9–12.

- [8] L. Liu, H. Xu, J. Xing, S. Liu, X. Zhou, and S. Yan, “‘Wow! you are so beautiful today!’,” in Proceedings of the 21st ACM International Conference on Multimedia, ser. MM ’13. New York, NY, USA: ACM, 2013, pp. 3–12.

- [9] S. Liu, X. Ou, R. Qian, W. Wang, and X. Cao, “Makeup like a superstar: Deep localized makeup transfer network,” in IJCAI, 2016, pp. 2568–2575.

- [10] H. Chang, J. Lu, F. Yu, and A. Finkelstein, “Pairedcyclegan: Asymmetric style transfer for applying and removing makeup,” in CVPR, June 2018.

- [11] A. Laurentini and A. Bottino, “Computer analysis of face beauty: A survey,” CVIU, vol. 125, pp. 184–199, 2014.

- [12] J. Li, C. Xiong, L. Liu, X. Shu, and S. Yan, “Deep face beautification,” in MM, 2015, pp. 793–794.

- [13] S. Qian, K.-Y. Lin, W. Wu, Y. Liu, Q. Wang, F. Shen, C. Qian, and R. He, “Make a face: Towards arbitrary high fidelity face manipulation,” in The IEEE International Conference on Computer Vision (ICCV), October 2019.

- [14] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in NIPS, 2014, pp. 2672–2680.

- [15] C. Ledig, L. Theis, F. Huszár, J. Caballero, A. Cunningham, A. Acosta, A. Aitken, A. Tejani, J. Totz, Z. Wang, and W. Shi, “Photo-realistic single image super-resolution using a generative adversarial network,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.

- [16] J. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in 2017 IEEE International Conference on Computer Vision (ICCV), Oct 2017, pp. 2242–2251.

- [17] H. Zhang, T. Xu, H. Li, S. Zhang, X. Wang, X. Huang, and D. Metaxas, “Stackgan: Text to photo-realistic image synthesis with stacked generative adversarial networks,” in 2017 IEEE International Conference on Computer Vision (ICCV), Oct 2017, pp. 5908–5916.

- [18] Z. Wang, X. Tang, W. Luo, and S. Gao, “Face aging with identity-preserved conditional generative adversarial networks,” in CVPR, June 2018.

- [19] A. Bulat and G. Tzimiropoulos, “Super-fan: Integrated facial landmark localization and super-resolution of real-world low resolution faces in arbitrary poses with gans,” in CVPR, June 2018.

- [20] J. Kossaifi, L. Tran, Y. Panagakis, and M. Pantic, “Gagan: Geometry-aware generative adversarial networks,” in CVPR, June 2018.

- [21] Y. Li, S. Liu, J. Yang, and M.-H. Yang, “Generative face completion,” in CVPR, July 2017.

- [22] R. Yi, Y.-J. Liu, Y.-K. Lai, and P. L. Rosin, “Apdrawinggan: Generating artistic portrait drawings from face photos with hierarchical gans,” in CVPR, June 2019.

- [23] T. Karras, S. Laine, and T. Aila, “A style-based generator architecture for generative adversarial networks,” in CVPR, June 2019.

- [24] J. Bao, D. Chen, F. Wen, H. Li, and G. Hua, “Towards open-set identity preserving face synthesis,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018.

- [25] X. Yin, X. Yu, K. Sohn, X. Liu, and M. Chandraker, “Towards large-pose face frontalization in the wild,” in The IEEE International Conference on Computer Vision (ICCV), Oct 2017.

- [26] H. Yang, D. Huang, Y. Wang, and A. K. Jain, “Learning face age progression: A pyramid architecture of gans,” in CVPR, June 2018.

- [27] L. Song, Z. Lu, R. He, Z. Sun, and T. Tan, “Geometry guided adversarial facial expression synthesis,” in MM, 2018, pp. 627–635.

- [28] Y. Shen, P. Luo, J. Yan, X. Wang, and X. Tang, “Faceid-gan: Learning a symmetry three-player gan for identity-preserving face synthesis,” in CVPR, June 2018.

- [29] K. Cao, J. Liao, and L. Yuan, “Carigans: Unpaired photo-to-caricature translation,” TOG, vol. 37, no. 6, pp. 244:1–244:14, Dec. 2018.

- [30] V. Nair and G. E. Hinton, “Rectified linear units improve restricted boltzmann machines,” in ICML, 2010, pp. 807–814.

- [31] X. Mao, Q. Li, H. Xie, R. Y. Lau, Z. Wang, and S. Paul Smolley, “Least squares generative adversarial networks,” in ICCV, Oct 2017.

- [32] J. J. Moré, “The levenberg-marquardt algorithm: implementation and theory,” in Numerical analysis, 1978, pp. 105–116.

- [33] P. Isola, J. Zhu, T. Zhou, and A. A. Efros, “Image-to-image translation with conditional adversarial networks,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.

- [34] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, N. Navab, J. Hornegger, W. M. Wells, and A. F. Frangi, Eds. Cham: Springer International Publishing, 2015, pp. 234–241.

- [35] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” CoRR, 2014.

- [36] H. A. Aly and E. Dubois, “Image up-sampling using total-variation regularization with a new observation model,” TIP, vol. 14, no. 10, pp. 1647–1659, Oct 2005.

- [37] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in ICML, volume 37, ser. ICML’15, 2015, pp. 448–456.

- [38] “Meitu,” https://corp.meitu.com/en/.

- [39] L. Liang, L. Lin, L. Jin, D. Xie, and M. Li, “Scut-fbp5500: A diverse benchmark dataset for multi-paradigm facial beauty prediction,” 2018.

- [40] Y. Li, M.-Y. Liu, X. Li, M.-H. Yang, and J. Kautz, “A closed-form solution to photorealistic image stylization,” in ECCV, September 2018.

- [41] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.