Exploring the Impact of a Transformer’s Latent Space Geometry on Downstream Task Performance

Abstract

It is generally thought that transformer-based large language models benefit from pre-training by learning generic linguistic knowledge that can be focused on a specific task during fine-tuning. However, we propose that much of the benefit from pre-training may be captured by geometric characteristics of the latent space representations, divorced from any specific linguistic knowledge. In this work we explore the relationship between GLUE benchmarking task performance and a variety of measures applied to the latent space resulting from BERT-type contextual language models. We find that there is a strong linear relationship between a measure of quantized cell density and average GLUE performance and that these measures may be predictive of otherwise surprising GLUE performance for several non-standard BERT-type models from the literature Alajrami and Aletras (2022); Sinha et al. (2021); Zhang (2021). These results may be suggestive of a strategy for decreasing pre-training requirements, wherein model initialization can be informed by the geometric characteristics of the model’s latent space.

Exploring the Impact of a Transformer’s Latent Space Geometry on Downstream Task Performance

Anna C. Marbut1,†, John W. Chandler2, Travis J. Wheeler3 1Interdisciplinary Studies, University of Montana, Missoula, MT, USA 2College of Business, University of Montana, Missoula, MT, USA 3R. Ken Coit College of Pharmacy, University of Arizona, Tucson, AZ, USA †Correspondence: [email protected]

1 Introduction

Transformer-based large language models, such as BERT Devlin et al. (2018) and the GPT models Radford et al. (2018), have outperformed other language models on linguistic benchmarking tasks time and again. These models largely rely on a pre-training and fine-tuning training protocol, where the base model is trained with a very large corpus on a generic language task, such as masked language modeling, and then the weights are updated in a second round of training with a more specific task, such as text similarity or entailment. The assumption motivating this process is that the pre-training task provides the model with general linguistic information, which allows it to better learn a specific linguistic task during fine-tuning.

However, this assumption has been challenged in several recent works in which the BERT model training process is altered such that we would expect the model to perform poorly on benchmarking tasks. Examples of these pathological changes include using a non-linguistic training task or shuffling the model’s input embeddings Alajrami and Aletras (2022); Sinha et al. (2021); Zhang (2021). If the outstanding benchmarking performance of the standard models relied on the presence of general linguistic knowledge in the underlying model, then these alterations should reduce that performance to a level similar to an untrained model. However, these works consistently find benchmarking performance that is closer to that of a standard model than to that of an untrained model.

We propose that at least some of the benchmarking performance seen with this pre-training/fine-tuning protocol can be attributed to geometric characteristics of the latent space that result from the transformer architecture, divorced from any generic linguistic knowledge. Many common downstream tasks for language models, such as classification or paraphrase, use measures of similarity or separability in their loss functions. These measures rely on the way that latent representations are organized in this space, so it stands to reason that there could be a measurable characteristic of this organization that would be advantageous for learning these fine-tuning tasks. If the latent space is already organized with data placed in various high-dimensional nooks and crannies, it may be easier for a powerful transformer model to learn how to access the data appropriately during fine-tuning, even if the organization scheme is non-linguistic.

Here we explore a variety of measures that could be used to describe the geometry of a high-dimensional contextual language model latent space, and we examine the relationships between these measures and the GLUE benchmarking task performance on a range of BERT-type contextual language models. We introduce a quantized cell density measure that appears to have a strong linear relationship with GLUE performance when applied to latent space representations. We also find that this measure can be used to predict the surprising GLUE performance of many of the non-standard models presented in the aforementioned works, though the performance of a few of these models remains unexplained.

2 Related Work

When Vaswani et al. (2017) introduced the transformer model, it was as an end-to-end autoencoder that relied on the attention mechanism to provide contextual information for sequential data modeling. That architecture was adapted to be used in large language models by isolating either the encoder component (as in the BERT models from Devlin et al. 2018) or the decoder component (as in the GPT models from Radford et al. 2018) and training with a very large dataset on a generalized linguistic task, such as masked language modeling. These models are intended to be fine-tuned on specific downstream tasks, generally with a much smaller, labeled dataset, by adding an additional layer and jointly training the pre-trained and new layer weights on the new task.

It has been shown that this pre-training/fine-tuning procedure produces improved performance on downstream tasks, both on transformer models Devlin et al. (2018); Radford et al. (2018) and on more traditional neural models Dai and Le (2015); Howard and Ruder (2018). The advantage of this dynamic is generally understood to fall under the theory of transfer learning, whereby the model learns general information during the pre-training task, and this can then be specialized during fine-tuning. This means that many fewer parameters need to be trained from scratch on the fine-tuning tasks, allowing for large models to be effectively applied to these tasks without drastically overfitting to the (often small) supervised training datasets.

Benchmarking datasets provide the research community with the ability to compare performance on the same exact tasks, and generally aim to encapsulate some knowledge or skill that would be useful for the type of model being evaluated. The pre-training/fine-tuning process lends itself nicely to benchmarking measures, as the limited-size benchmarking dataset can be used to fine-tune a very large model. The GLUE benchmarking suite is a collection of tasks for language models, and each of the nine datasets is focused on evaluating a linguistic skill, from sentiment to paraphrase to inference Wang et al. (2018). Although other language model benchmarks have been proposed, GLUE remains a key set of measures for evaluating language model performance, and, at the time of writing this, nine of the ten top performing models are transformer-based models, and all ten follow the pre-training/fine-tuning paradigm NYU-MLL (2024b).

However, several recent works bring into question the source of this impressive benchmarking performance. Sinha et al. (2021) showed that RoBERTa models perform comparably to the standard model when trained with randomly shuffled word order. Zhang (2021) found that using English-trained input embeddings with BERT models trained in another language has surprisingly little effect on the GLUE performance, as does shuffling the indices of trained input embeddings. Alajrami and Aletras (2022) found that RoBERTa models with non-linguistic pre-training tasks, described in Section 3.4, still perform surprisingly well on GLUE tasks.

The unexpected performance on the GLUE tasks found in these papers suggests that the benefit these models get from pre-training may not be due to generalized linguistic knowledge. Sinha et al. (2021) suggest that the models may simply be learning complicated distributional co-occurrence statistics, while Zhang (2021) suggest that the linguistic information may actually be contained largely within the trained input embeddings. We instead propose that the benefit of the pre-training process may be related to the geometric characteristics of a latent space resulting from training a model with the transformer architecture, divorced from any specific content knowledge.

Several works have shown that increasing the isotropy of a language model’s latent space, or causing the resulting representations to be more uniformly spread in all directions, may increase performance on benchmarking tasks Mu et al. (2017); Liang et al. (2021); Kaneko and Bollegala (2020); Gao et al. (2019). The motivation behind this work rests on the finding that the latent spaces of these language models are highly anisotropic, with all of the data concentrated in a narrow “cone”, rather than spread throughout the available space and dimensions Mimno and Thompson (2017); Mu et al. (2017); Ethayarajh (2019). By adjusting the models to have more isotropic latent spaces through training or retrofitting methods, these works have attempted to cause the models to use more of the available space, producing some improvement on selected benchmarking tasks. However, there is a growing body of evidence that an isotropic latent space may not be as beneficial as initially thought. Ding et al. (2021) suggest that the benefits found in earlier work may be isolated to static word embedding models, like Word2Vec and GloVe. Rudman and Eickhoff (2023) find that increasing isotropy is detrimental to contextual model performance when measured with a more reliable measure than used in previous work Marbut et al. (2023); Rudman et al. (2021). Cai et al. (2020) and Rajaee and Pilehvar (2021) find that increasing overall isotropy is not beneficial in contextual latent space models, but that increasing isotropy within local clusters may improve model performance.

Similarly, it has been found that applying quantization methods during model training or fine-tuning may improve benchmarking performance as well Liao et al. (2020); Sablayrolles et al. (2018); Hu et al. (2022). Quantization is a compression method in which high-dimensional data are typically clustered within some number of subspaces that can be recombined to recover the full-dimensional data with minimal loss Gholami et al. (2022). Minimizing this loss during some part of the model training could result in a latent space that is more easily separable, which may prepare data to be more easily partitioned during fine-tuning on downstream tasks.

3 Methods

To explore the question of how the geometric characteristics of a contextual language model’s latent space may be related to benchmarking performance, we produced a series of 170 BERT-type models with varying levels of noise added to the pre-trained weights of all the encoder layers, leaving the pre-trained input embeddings un-noised. We then examined the relationship between the GLUE benchmark performance of these models and the values of measures intended to approximate characteristics of data spread and separability in the models’ latent spaces. Finally, we explored the possibility of using these measures to predict the surprising GLUE performance of non-standard models from the literature.

3.1 BERT Models with Injected Noise

Starting with a pre-trained BERT-type model, we gradually inject noise into all encoder layer weights as shown in Equation 1. Here, is the complete pre-trained network of encoder layer weights, noise vector is a set of random weight modifiers with the same dimensions, and is a hyperparameter describing the fractional amount of noise in model .

| (1) |

This process resulted in a dataset of 125 perturbed BERT-small models (Turc et al. 2019, 5 transformer blocks and 29.1M total parameters) and 8 BERT-base models (Devlin et al. 2018, 12 transformer blocks and 110M total parameters), as well as 38 perturbed RoBERTa-base models (Liu et al. 2019, 12 transformer blocks and 125M total parameters) .

After perturbing the pre-trained weights, we fine-tuned the models on the GLUE datasets using the script and default parameters from the Transformers library Wolf et al. (2020). Following Devlin et al. (2018), we excluded the WNLI inference task from our results due to known issues with the dataset NYU-MLL (2024a).

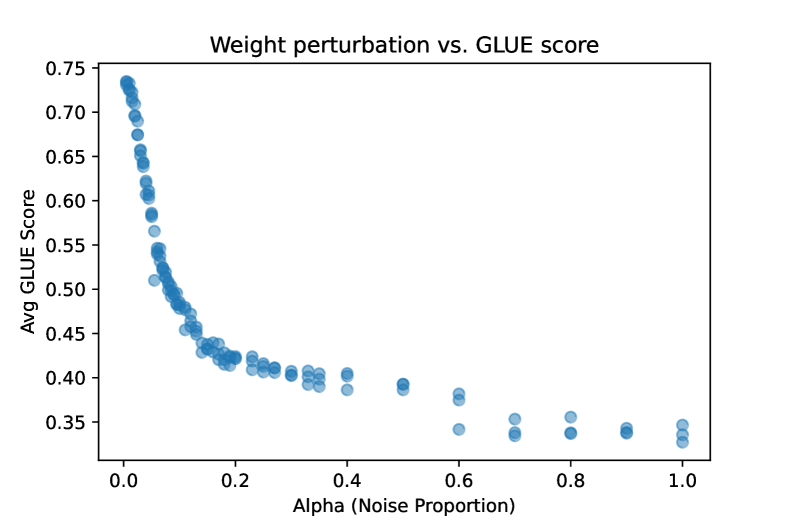

As seen in Figure 1, as increases, or as the weights are transitioned from their trained values to random noise, the GLUE score decreases exponentially. We focused most of our experimentation on lower levels to capture as much signal as possible during that rapid decrease in GLUE performance, with for 70 of our 125 BERT-small models.

3.2 Latent Space Sampling

Much of the existing work that describes the distribution of representations in natural language model latent spaces is centered on static embeddings Mimno and Thompson (2017); Mu et al. (2017); Gong et al. (2018); Gao et al. (2019). Studying the shape of a contextual model’s latent space is complicated by the fact that the latent representation of each token in the vocabulary will depend on the context in which it is seen, presenting a practically infinite number of representations of each individual token. Many works exploring these distributions largely focus on very local statistics or the relationships between the representations of specific tokens Ethayarajh (2019); Bihani and Rayz (2021); Valeriani et al. (2024); Merchant et al. (2020), and although both Cai et al. (2020) and Ferner and Wegenkittl (2021) are focused on an examination of entire latent spaces, to the best of our knowledge, there is not currently a standard way to produce a representative sample of these spaces as a whole.

As such, we opted to sample 5K sequences (roughly 250K tokens) from the complete Penn Tree Bank (PTB) Marcus et al. (1993) and WikiText2 Merity et al. (2016) datasets, including train, validation, and test splits111We use the scikit-learn Pedregosa et al. (2011) unigram tokenizer to only include unique sampled sequences between three and fifty tokens, as a surprising number of the sequences from these datasets are duplicates or empty strings.. To ensure that we produced a reasonably representative latent space sample, we compared the frequency distributions of unigrams, bigrams, and sentence lengths in ten separate samples to the distributions over the entire combined dataset. As seen in Figure 2, the sample distributions closely follow the whole dataset distribution in all three plots.

3.3 Measures

Although distributional statistics are well understood and established in low dimensions, many common measures, and our intuitions about them, fail as the number of dimensions grows above about seven Marbut et al. (2023). As such, we identified a variety of measures that may approximate how data are organized in a high dimensional latent space, in particular considering whether the space is used evenly (data spread) and whether the data are organized in a clustered manner.

3.3.1 Data Spread

As a simple first pass, we apply a measure of data spread, which can quantify whether the data are distributed evenly in all directions and along each dimension. The concept of data spread may not provide much detail about the data distribution (it does not offer any insight into the nature of an uneven/irregular distribution), but it is ultimately simpler and more interpretable than our quantization-based measures (see below). Here, we describe one measure of overall data spread; two others are described in the Appendix.

Eigenvalue Early Enrichment (EEE)

Following Marbut et al. (2023), we consider the cumulative sum of the eigenvalues of a distribution. These eigenvalues, often referred to as the explained variance of the principle components, will all be equal when data is spread evenly. Conversely, almost all of the explained variance will be concentrated in the first few components in a very uneven distribution. Equation 2 calculates the area between the cumulative sum curve, , and the linear sum for a perfectly even distribution, , as a proportion of the total space available above that linear sum line (with as the total variance in the -dimensional distribution). We expect the EEE of a distribution to decrease as data becomes more evenly spread.

| (2) |

3.3.2 Quantization

Another way to assess the organization of a data distribution is to quantify how easily it can be broken into distinct and separable clusters. Quantization can be seen as a clustering method that is particularly attractive when working with large, high-dimensional datasets. Typically, in quantization, a high-dimensional data distribution is broken into subspaces and then the data are clustered within each subspace to create a compressed representation of the original data.

We take advantage of the speed-optimized quantization methods available through the FAISS library Douze et al. (2024). In particular, we examine measures based on the results of an additive quantizer (LSQ++ from Martinez et al. 2018) which iteratively adjusts the assignment of subspaces and cluster centroids to minimize data loss. We describe a few measures based on how data are distributed among or within the quantized bins, which aim to capture the extent to which the quantized points are clustered. Results from several conceptually redundant measures and the full results of using a product quantizer Jegou et al. (2010) can be found in Appendix C.

While calculating measures in the quantized latent space may help to quantify how separable or clustered the space is, we note that the process of quantization is, itself, an imperfect proxy for data separability. In particular, as with traditional clustering methods, hyperparameters such as cluster/bin count can have a dramatic effect on the result, and there is not a single standard method for identifying ideal hyperparamters. Here, we rely on a default bin count of 256, and note the potential benefit of further exploration of this and other hyperparameters.

Point Patchiness (PP)

The distribution of how points are assigned to centroids in a quantized space, or the observed density of those quantized bins, may tell us how uniformly data are distributed. For a uniform latent space, we would expect the cell density to be the same for all centroids, whereas an irregular space would have greater variation in cell density between clusters.

We borrow a measure from ecology Wade et al. (2018) and compute the patchiness index over the quantized latent spaces. This measure is a variance-adjusted average cell density that approximates the cell density as seen from the perspective of an individual datapoint. In Equation 3, is the average cell density and is the variance of cell densities .

| (3) |

The patchiness index is typically applied over equal-sized bins by design, a state not expected to be the case for our quantized clusters. However, our empirical experiments in weighting the cell densities by their volume (approximated as n-dimensional spheres with a radius equal to the distance between the centroid and its furthest assigned point) resulted in almost all of the densities becoming zero. This should not be surprising given the unintuitive nature of high-dimensional geometry, wherein the volume of a hypersphere with a fixed radius quickly goes to zero as the dimensions increase above seven Marbut et al. (2023). Due to this oddity, we do not include any cell weighting in our patchiness calculation.

Reconstruction Skew (RS)

In a uniform distribution, each quantized cell will be about the same size, and there will be limited variation in the distance between an original datapoint and its reconstructed counterpart (the error magnitude, Equation 4). In a very clustered distribution, some bins will be very small (where the data are clustered) while others will be large (where the data are more sparse). We expect the error magnitude to be small for data in tight clusters and to be large for data in sparsely populated bins.

We calculate the skew of this distribution Kokoska and Zwillinger (2000), shown in Equation 5, where is the mean and is the standard deviation. Here we would expect the skew of a uniform distribution to be near zero, whereas an irregular, more clustered distribution would result in a left (negative) skew.

| (4) | ||||

| (5) |

Centroid Distribution (CDvar)

We also consider how the centroids themselves are distributed within the subspaces. In a uniform latent space, we would expect the centroids to be evenly spaced and have very similar nearest-neighbor distances, but in a more irregular space we would expect to see greater variation in these distances. For each centroid we calculate the normalized distance to the nearest centroid () and report the variance of these distances as a measure over the combined subspaces (Equation 6). We use the FAISS L2 nearest neighbor implementation to efficiently find the approximate nearest neighbor Douze et al. (2024).

| (6) |

Cluster Distribution (PDEEE)

It may also be useful to quantify whether the data within each cluster (assigned to each centroid) are distributed irregularly. We compute the EEE (Equation 2) for each centroid and report the combined average over all centroids and subspaces (Equation 7). For a uniform latent space, or locally uniform clusters, we expect the PDEEE to be low, and with more irregularly shaped clusters we expect the PDEEE to be higher.

| (7) |

3.4 Non-Standard Models

We also applied our measures to several models from the literature that had non-linguistic or otherwise challenging alterations made to the training protocol before fine-tuning on the standard (English) GLUE benchmarking tasks. If our measures can explain the surprising GLUE performance of these models, this would further support the notion that there were something about the data organization in the latent space that contributed directly to learning downstream tasks. The models that we included in our experiment, which we term “non-standard” models for simplicity, are briefly described below.

From Zhang (2021):

-

•

Chinese: a standard pre-trained Chinese BERT-base model with the input embeddings swapped out for the pre-trained English BERT embeddings;

-

•

German - a standard pre-trained German BERT-base model with the input embeddings swapped out for the pre-trained English BERT embeddings;

-

•

Shuffle: a standard pre-trained English BERT-base model with the indices of the input embeddings shuffled;

-

•

Untrained: a randomly initialized BERT-base model with the pre-trained English BERT embeddings. Note that the BERT-base model is initialized using a distribution, so the results of this model are expected to differ from a model with 100% weight perturbation pulled from a distribution.

From Alajrami and Aletras (2022):

-

•

MLM: A RoBERTa model pre-trained with the traditional masked language modeling task alone;

-

•

First Char: A RoBERTa model pre-trained with the task of predicting the first character of the masked tokens;

-

•

ASCII : A RoBERTa model pre-trained with the task of predicting the sum of the ASCII characters of the masked tokens;

-

•

Random: A RoBERTa model pre-trained with the task of predicting a random number.

From Sinha et al. (2021):

-

•

RoBERTa: A standard pre-trained RoBERTa model using the same training regimen (datasets, length of training, etc.) as the other models in the paper;

-

•

Sequence: A RoBERTa model trained with words shuffled within each input sequence;

-

•

Corpus: A RoBERTa model trained with sequences sampled from the frequency distribution of the entire corpus (analogous to shuffling words within the whole corpus).

4 Results

4.1 Cell Density Measures

Figure 3 shows the strong linear relationship between GLUE scores and a density measure (patchiness) on BERT-small models (Pearson’s )222We produced triplicate perturbed models for all -levels of the BERT-small models, and on RoBERTa models with , and report the median value on our charts to ease interpretation.. These results support our intuition that a less uniform data distribution is more conducive to learning downstream tasks, since we expect the variation in cell density (patchiness) to increase in a less isotropic data distribution.

A weaker linear relationship is also apparent with the RoBERTa models (), with reduction in predictive value particularly at higher GLUE (lower ) values. This plot also shows that both the RoBERTa and BERT-base model results are consistently shifted to the right (with higher PP values) when compared to the BERT-small results. This shift could be caused by any number of differences between the models, including number of parameters, length of training, size of training dataset, and, for RoBERTa, several differences in the pre-training tasks.

The predictive utility of the patchiness index is shown in Figure 4. Including the model architecture (BERT-small vs. BERT-base vs. RoBERTa) as a variable in a simple linear regression model yields an overall model fit of .

The linear relationship seen for our perturbed model data is less clear for the non-standard models described in Section 3.4. Figure 5 includes these non-standard models in the linear regression plot. Although the model seems predictive for many of the models when we include a model architecture variable, there are still a few models that are clear outliers and remain unexplained by this measure alone.

These results demonstrate that, while there is a suggestive relationship between the patchiness measure and GLUE score, it is not fully explanatory. It is certainly possible that factors other than the latent space data organization contribute to the performance of these unexplained non-standard models, but it is also likely that our measures, which merely approximate some geometric characteristics of the space, do not fully capture a relationship that does exist.

4.2 Other Measures

While the cell density measures are the most promising candidates for linear prediction of GLUE performance, the results in Figure 6 show that other measures have a more complex relationship with the average GLUE score. When looking at the BERT-small results in particular, these relationships are clearly non-linear, and often non-monotonic in the measure value. Several of our measures result in the “zig-zag” shape seen clearly on the EEE plot, and several others have more of the “hook” shape seen on the PD-EEE plot. The full set of measure plots can be found in Appendix C.

| Pearson’s | LM | ||

|---|---|---|---|

| BERT-small | RoBERTa | MSE | |

| PP | 0.902 | 0.640 | 0.089 |

| RS | 0.461 | -0.574 | 0.039 |

| CDvar | 0.537 | -0.292 | 0.053 |

| PDEEE | -0.429 | -0.764 | 0.017 |

| EEE | -0.557 | -0.535 | 0.054 |

Given these non-linear relationships, the results are surprising when we apply linear regression models of the measures to predict the GLUE scores of the non-standard models described in Section 3.4. In the rightmost column of Table 1, we see that the PDEEE model has the lowest MSE, even compared to the PP model after adding the model architecture information. However, the curved shape on the residual plot for this model (Figure 7) illustrates that, even though the non-standard models are fairly well-predicted by the model, a linear model is not a good fit for the data more generally.

We explored the utility of linear regression models with higher order variables, combinations of different measures, and interactions between measures as well. While some of these models showed minimal improvement over the simple models discussed above, we determined that the loss of interpretability in these more complex models outweighed the improvement to model fit.

Although these relationships are interesting and may be informative about the inner workings of the transformer models themselves, the non-monotonicity of these measures in relation to the GLUE average makes them poor candidates for predicting downstream task performance. For example, we cannot say that lowering the EEE or PDEEE of a latent space will necessarily improve benchmarking performance. We offer some discussion of these non-linear relationships in Appendix B, but leave any further exploration of these results to future work.

5 Conclusion

In this work, we address the question of whether there is some measurable geometric characteristic to the latent space of transformer models that could be used to predict performance on downstream tasks, specifically the GLUE benchmarking tasks. We produced models with gradually decreasing GLUE scores by transitioning the weights of pre-trained language models to random noise, and then we applied a set of measures chosen to approximately capture the amount of data spread and separability present in the resulting models’ latent spaces.

In examining the relationship between these measures and GLUE benchmarking performance, we identified a quantized cell density measure, based on the patchiness index Wade et al. (2018), that has a strong linear relationship to the GLUE score. We also considered whether any of these measures could be used to predict the unexpected GLUE performance found in a body of work exploring non-standard training tasks and model manipulations. Although the evidence was less clear, we once again found that the patchiness measure showed promise as a predictive measure for the GLUE score of most of the non-standard models that we examined.

This research is potentially relevant to the growing body of work attempting to reduce the resource requirements for pre-training large language models. If a measure of separability within a model’s utilized latent space could be used to initialize a transformer model before any linguistic training begins, it is possible that the pre-training requirements could be greatly reduced without sacrificing performance on downstream tasks.

Our experiments also uncovered interesting non-linear relationships between many of the measures that we examined and average GLUE score. Further exploration of these non-monotonic patterns could shed light on the inner workings of transformer models, contributing to work on increasing the general interpretability of transformer models.

6 Limitations

Our experiments were limited by two major difficulties: (1) The practically infinite nature of a contextual model’s latent space, and (2) the lack of established measures for describing high dimensional data distributions. To overcome these obstacles, we had to accept approximate solutions to both.

Our measure calculations are based on a sample of the latent space that we showed to be representative of a sizable corpus, but is still necessarily an imperfect approximation of the theoretical complete latent space. Additionally, and perhaps more relevant to our findings, the contextual nature of the latent spaces required manipulation of the transformer model itself to approximate a gradual change in the geometry of the latent space. As briefly discussed in Appendix B, some of our results clearly indicate that adding noise to the model weights is not the same as adding noise to the latent space representations, which would perhaps provide a clearer signal for our intended experiment.

While the data spread measures that we examined are fairly well understood, our use of quantization algorithms to approximate some measure of the clustered nature of the data introduces a bit of a “black box” into the measure calculations. The algorithms are designed to effectively segment the data for optimal reconstruction, and it is unclear how this goal interacts with some of the measures that we apply. For example, the quantized cell density measure (for which we found the most compelling results) is based on the distribution of the number of points assigned to each centroid in the quantized space. However, it may be the case that the quantization algorithms themselves are in direct conflict with this measure, specifically attempting to adjust the location of the centroids and subspaces to even out the distribution of points assigned to each cluster. Also, the number of quantized components may not adequately describe the complexity of space utilization – imposition of too many or too few components may play a role in some of the observed non-monotonic relationship between density measure and GLUE score. We do not believe these to be issues for our experimental results, but it does hinder our understanding of how our measures are related to the latent space geometry.

Additionally, we remain unsatisfied that any of our measures quite captured the concept of separability that we intended, somehow quantifying the extent to which data is located in “nooks and crannies” in the latent space. We point this out not to discredit our work and results, but to again highlight the fact that all of these measures are approximations of complex geometric relationships. It is certainly possible that a different measure exists that would better capture the relationship between GLUE performance and latent space organization, and it is perhaps equally possible that the relationship is too complex to be fully approximated by a single measure.

Finally, with the future goal of using this paper’s results to reduce pre-training requirements for transformer models, we come up against the major issue that existing quantization methods are non-differentiable. This means that none of the quantization-based measures explored here can be used as an additional or initial loss function. However, we do intend to explore the possibility of using differentiable k-means algorithms in place of the quantization methods used here to address this problem in future work.

7 Acknowledgements

We thank Katy McKinney-Bock, Conner Copeland, and Daniel Olson for enlightening conversations during the development of this project. Computational analysis was performed on Jetstream2 resources at Indiana University through allocation BIO220047 from the NSF ACCESS program, which is supported by OAC Grants Nos. 2138259, 2138286, 2138307, 2137603, and 2138296.

References

- Alajrami and Aletras (2022) Ahmed Alajrami and Nikolaos Aletras. 2022. How does the pre-training objective affect what large language models learn about linguistic properties? arXiv preprint arXiv:2203.10415.

- Bihani and Rayz (2021) Geetanjali Bihani and Julia Taylor Rayz. 2021. Low anisotropy sense retrofitting (laser): Towards isotropic and sense enriched representations. arXiv preprint arXiv:2104.10833.

- Cai et al. (2020) Xingyu Cai, Jiaji Huang, Yuchen Bian, and Kenneth Church. 2020. Isotropy in the contextual embedding space: Clusters and manifolds. In International conference on learning representations.

- Dai and Le (2015) Andrew M Dai and Quoc V Le. 2015. Semi-supervised sequence learning. Advances in neural information processing systems, 28.

- Devlin et al. (2018) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2018. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

- Ding et al. (2021) Yue Ding, Karolis Martinkus, Damian Pascual, Simon Clematide, and Roger Wattenhofer. 2021. On isotropy calibration of transformers. arXiv preprint arXiv:2109.13304.

- Douze et al. (2024) Matthijs Douze, Alexandr Guzhva, Chengqi Deng, Jeff Johnson, Gergely Szilvasy, Pierre-Emmanuel Mazaré, Maria Lomeli, Lucas Hosseini, and Hervé Jégou. 2024. The faiss library. arXiv.

- Ethayarajh (2019) Kawin Ethayarajh. 2019. How contextual are contextualized word representations? comparing the geometry of bert, elmo, and gpt-2 embeddings. arXiv preprint arXiv:1909.00512.

- Ferner and Wegenkittl (2021) Cornelia Ferner and Stefan Wegenkittl. 2021. Isotropic contextual representations through variational regularization.

- Gao et al. (2019) Jun Gao, Di He, Xu Tan, Tao Qin, Liwei Wang, and Tie-Yan Liu. 2019. Representation degeneration problem in training natural language generation models. arXiv preprint arXiv:1907.12009.

- Gholami et al. (2022) Amir Gholami, Sehoon Kim, Zhen Dong, Zhewei Yao, Michael W Mahoney, and Kurt Keutzer. 2022. A survey of quantization methods for efficient neural network inference. In Low-Power Computer Vision, pages 291–326. Chapman and Hall/CRC.

- Gong et al. (2018) Chengyue Gong, Di He, Xu Tan, Tao Qin, Liwei Wang, and Tie-Yan Liu. 2018. Frage: Frequency-agnostic word representation. Advances in neural information processing systems, 31.

- Howard and Ruder (2018) Jeremy Howard and Sebastian Ruder. 2018. Universal language model fine-tuning for text classification. arXiv preprint arXiv:1801.06146.

- Hu et al. (2022) Ting Hu, Christoph Meinel, and Haojin Yang. 2022. Empirical evaluation of post-training quantization methods for language tasks. arXiv preprint arXiv:2210.16621.

- Jegou et al. (2010) Herve Jegou, Matthijs Douze, and Cordelia Schmid. 2010. Product quantization for nearest neighbor search. IEEE transactions on pattern analysis and machine intelligence, 33(1):117–128.

- Kaneko and Bollegala (2020) Masahiro Kaneko and Danushka Bollegala. 2020. Autoencoding improves pre-trained word embeddings. arXiv preprint arXiv:2010.13094.

- Kokoska and Zwillinger (2000) S. Kokoska and D. Zwillinger. 2000. CRC Standard Probability and Statistics Tables and Formulae, Student Edition. Mathematics/Probability/Statistics. Taylor & Francis.

- Liang et al. (2021) Yuxin Liang, Rui Cao, Jie Zheng, Jie Ren, and Ling Gao. 2021. Learning to remove: Towards isotropic pre-trained bert embedding. International Conference on Artificial Neural Networks, pages 448–459.

- Liao et al. (2020) Siyu Liao, Jie Chen, Yanzhi Wang, Qinru Qiu, and Bo Yuan. 2020. Embedding compression with isotropic iterative quantization. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, pages 8336–8343.

- Liu et al. (2019) Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. Roberta: A robustly optimized bert pretraining approach. arXiv preprint arXiv:1907.11692.

- Marbut et al. (2023) Anna Marbut, Katy McKinney-Bock, and Travis Wheeler. 2023. Reliable measures of spread in high dimensional latent spaces. In International Conference on Machine Learning, pages 23871–23885. PMLR.

- Marcus et al. (1993) Mitch Marcus, Beatrice Santorini, and Mary Ann Marcinkiewicz. 1993. Building a large annotated corpus of english: The penn treebank. Computational linguistics, 19(2):313–330.

- Martinez et al. (2018) Julieta Martinez, Shobhit Zakhmi, Holger H Hoos, and James J Little. 2018. Lsq++: Lower running time and higher recall in multi-codebook quantization. In Proceedings of the European conference on computer vision (ECCV), pages 491–506.

- Merchant et al. (2020) Amil Merchant, Elahe Rahimtoroghi, Ellie Pavlick, and Ian Tenney. 2020. What happens to bert embeddings during fine-tuning? arXiv preprint arXiv:2004.14448.

- Merity et al. (2016) Stephen Merity, Caiming Xiong, James Bradbury, and Richard Socher. 2016. Pointer sentinel mixture models. arXiv preprint arXiv:1609.07843.

- Mickus et al. (2024) Timothee Mickus, Stig-Arne Grönroos, and Joseph Attieh. 2024. Isotropy, clusters, and classifiers. arXiv preprint arXiv:2402.03191.

- Mimno and Thompson (2017) David Mimno and Laure Thompson. 2017. The strange geometry of skip-gram with negative sampling. Empirical Methods in Natural Language Processing.

- Mu et al. (2017) Jiaqi Mu, Suma Bhat, and Pramod Viswanath. 2017. All-but-the-top: Simple and effective postprocessing for word representations. arXiv preprint arXiv:1702.01417.

- NYU-MLL (2024a) NYU-MLL. 2024a. Glue benchmark faq. https://gluebenchmark.com/faq. Accessed on Jun 10, 2024.

- NYU-MLL (2024b) NYU-MLL. 2024b. Glue benchmark leaderboard. https://gluebenchmark.com/leaderboard(Archived at https://web.archive.org/web/20240419155339/https://gluebenchmark.com/leaderboard/). Accessed on Apr 3, 2024.

- Pedregosa et al. (2011) F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay. 2011. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12:2825–2830.

- Radford et al. (2018) Alec Radford, Karthik Narasimhan, Tim Salimans, Ilya Sutskever, et al. 2018. Improving language understanding by generative pre-training.

- Rajaee and Pilehvar (2021) Sara Rajaee and Mohammad Taher Pilehvar. 2021. A cluster-based approach for improving isotropy in contextual embedding space. arXiv preprint arXiv:2106.01183.

- Rudman and Eickhoff (2023) William Rudman and Carsten Eickhoff. 2023. Stable anisotropic regularization. arXiv preprint arXiv:2305.19358.

- Rudman et al. (2021) William Rudman, Nate Gillman, Taylor Rayne, and Carsten Eickhoff. 2021. Isoscore: Measuring the uniformity of embedding space utilization. arXiv preprint arXiv:2108.07344.

- Sablayrolles et al. (2018) Alexandre Sablayrolles, Matthijs Douze, Cordelia Schmid, and Hervé Jégou. 2018. Spreading vectors for similarity search. arXiv preprint arXiv:1806.03198.

- Sinha et al. (2021) Koustuv Sinha, Robin Jia, Dieuwke Hupkes, Joelle Pineau, Adina Williams, and Douwe Kiela. 2021. Masked language modeling and the distributional hypothesis: Order word matters pre-training for little. arXiv preprint arXiv:2104.06644.

- Turc et al. (2019) Iulia Turc, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. Well-read students learn better: On the importance of pre-training compact models. arXiv preprint arXiv:1908.08962.

- Valeriani et al. (2024) Lucrezia Valeriani, Diego Doimo, Francesca Cuturello, Alessandro Laio, Alessio Ansuini, and Alberto Cazzaniga. 2024. The geometry of hidden representations of large transformer models. Advances in Neural Information Processing Systems, 36.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. Advances in neural information processing systems, 30.

- Wade et al. (2018) Michael J Wade, Courtney L Fitzpatrick, and Curtis M Lively. 2018. 50-year anniversary of lloyd’s “mean crowding”: Ideas on patchy distributions. journal of animal ecology, 87(5):1221–1226.

- Wang et al. (2018) Alex Wang, Amanpreet Singh, Julian Michael, Felix Hill, Omer Levy, and Samuel R Bowman. 2018. Glue: A multi-task benchmark and analysis platform for natural language understanding. arXiv preprint arXiv:1804.07461.

- Wolf et al. (2020) Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pierric Cistac, Tim Rault, Rémi Louf, Morgan Funtowicz, Joe Davison, Sam Shleifer, Patrick von Platen, Clara Ma, Yacine Jernite, Julien Plu, Canwen Xu, Teven Le Scao, Sylvain Gugger, Mariama Drame, Quentin Lhoest, and Alexander M. Rush. 2020. Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, pages 38–45, Online. Association for Computational Linguistics.

- Zhang (2021) Jiacheng Zhang. 2021. General Benefits of Mono-Lingual Pre-Training in Transformers. Ph.D. thesis, The University of Arizona.

- Zhou et al. (2019) Hattie Zhou, Janice Lan, Rosanne Liu, and Jason Yosinski. 2019. Deconstructing lottery tickets: Zeros, signs, and the supermask. Advances in neural information processing systems, 32.

Appendix A Other Measures

Many of the measures that we included in our experiments were highly correlated (see Figure 8). This is not an unexpected result, as we intentionally chose measures that would be conceptually redundant in case one captured the distributional characteristic better than others. The detailed descriptions for the measures not included in the main paper can be found below.

A.1 Data Spread

In addition to the EEE measure, we included two other measures of overall data spread in our experiments, described below. VRM, like EEE, is designed to measure how evenly data are spread in all directions and along each dimension, while IsoScore is only designed to measure isotropy, or the equal use of all directions. EEE and VRM are very highly correlated, with IsoScore only slightly less highly correlated.

Vasicek Ratio Mean Squared Error (VRM)

Following Marbut et al. (2023), we compute a measure based on the Vasicek entropy approximation as presented in Equation 8. This approximation considers pairs of ordered points that are separated by a fixed interval, , where we would expect the distances between these points to be equal in an evenly spread distribution, and highly variant (entropic) in an uneven distribution. is then used in a ratio with the theoretical value for a reference normal distribution, and the mean squared error (MSE) is computed to produce a multi-dimensional measure (Equation 9). We expect the VRM of a distribution to decrease as data become more evenly spread.

| (8) | ||||

| (9) |

IsoScore (IS)

IsoScore, from Rudman et al. (2021), approximates the proportion of dimensions that are isotropically used as shown in Equation 11. This is based on the isotropy defect (Equation 10), which is essentially the distance between the normalized covariance matrix of a latent space, , and the identity matrix. We expect the IS of a distribution to increase as data becomes more isotropically spread.

| (10) | ||||

| (11) |

A.2 Quantized Cell Density

In addition to the Point Patchiness measure, we included two other measures of quantized cell density in our experiments. All three of these measures were highly correlated, so we chose to only include the Point Patchiness measure in our main discussion.

Point Count Distribution (PCvar & PCkl)

As alternative measures of cell density, we also consider the variance (Equation 13) and the KL-divergence compared to a uniform distribution (Equation 14) of the odds-ratio of points assigned to the centroids (Equation 12), as averaged over the subspaces.

| (12) | ||||

| (13) | ||||

| (14) |

Here, is the normalized count of points assigned to centroid , is the mean of the odds ratio distribution, and and are the observed and reference uniform odds ratio distributions, respectively.

A.3 Quantization Reconstruction

In addition to the Reconstruction Skew measure, we included two other reconstruction-based measures in our experiments. Although these were not highly correlated with each other, they were correlated with other measures included in the main discussion.

Reconstruction Error (RE)

We consider Reconstruction Error as a potential measure of separability. We expect that a distribution that is already organized in clusters would result in quantized (compressed) representations that are close to the original data, resulting in lower error than a distribution that is not as easily clustered. We calculate a simple element-wise sum squared error between the original data, , and the reconstructed data, , as shown in Equation 15.

| (15) |

Reconstruction IQR (RI)

We also consider the width of the inner quartile range (IQR) for the distribution of normalized error magnitudes (Equation 4), where the IQR is defined as the range between the 75th and 25th percentiles of the distribution. For a more uniform distribution we expect little variation in the error magnitudes, resulting in a very small IQR. If the data were distributed irregularly, we would expect some points to be very close to their reconstructed counterparts, and some to be relatively far, causing a larger IQR.

Appendix B Non-Linear Relationship Discussion

While it is not central to our work in this paper, the non-linear and non-monotonic relationships apparent between many of our proposed measures and average GLUE score were too intriguing not to address. We leave some ideas and light research notes here in hopes that it may spark further investigation.

One finding of note that comes out of the zig-zag pattern seen in many of our measures is that the addition of noise to the weights of a pre-trained BERT-small model does not equate to the addition of noise in the model’s latent space. This is particularly evident in the results for the data spread measures, which are specifically designed to measure how evenly the data is spread. Results for all three data spread measures are reversed from our expectation, with the measures generally showing less even spread as noise is added to the model weights (with the exception of the central “zig” section of the plots). This also supports the argument against the findings that increasing isotropy improves downstream task performance, as discussed in Mickus et al. (2024) and Rudman and Eickhoff (2023).

In our experiment, we gradually add the same random noise (using triplicate random seeds for each -level on the BERT-small models, and for small -levels in the RoBERTa models) to the same pre-trained model weights. Zhou et al. (2019) explore the idea of “lottery tickets” within trained transformer models, wherein a subset of the model weights can be used to reproduce (or even improve on) the full model’s performance. One possible explanation for the non-monotonic behavior of the measure results is that in gradually adding noise to all of the pre-trained weights, we momentarily isolate or accentuate the influence of weights on one or more of these subnetworks along the way to actual random noise.

It has also been shown that the weights in different layers of transformer models are affected unevenly during training Merchant et al. (2020); Valeriani et al. (2024). Once again, we treat all weights in the model equally during perturbation, so it may be that the signals from certain layers take more or less noise to fully ablate. It is plausible that this could cause a momentary reversal in our latent space measures, resulting in the “zig-zag” shape observed for several of the measures.

Appendix C Full Results

The table and figures on the following pages provide a view into the predictive nature of the expanded set of measures introduced in this Appendix.

| Pearson’s | LM | |||

| BERT-small | RoBERTa | MSE | ||

| Additive Quantizer | PP | 0.902 | 0.640 | 0.089 |

| PCvar | 0.901 | 0.652 | 0.082 | |

| PCkl | 0.890 | 0.228 | 0.058 | |

| RS | 0.461 | -0.574 | 0.039 | |

| RE | 0.334 | 0.227 | 0.023 | |

| RI | 0.695 | -0.051 | 0.116 | |

| CDvar | 0.537 | -0.292 | 0.053 | |

| PDEEE | -0.429 | -0.764 | 0.017 | |

| Product Quantizer | PP | 0.326 | -0.418 | 0.029 |

| PCvar | 0.327 | -0.415 | 0.029 | |

| PCkl | 0.260 | 0.496 | 0.032 | |

| RS | -0.123 | -0.184 | 0.034 | |

| RE | 0.420 | 0.001 | 0.024 | |

| RI | 0.808 | 0.514 | 0.082 | |

| CDvar | 0.342 | -0.422 | 0.037 | |

| PDEEE | -0.456 | -0.705 | 0.026 | |

| Data Spread | EEE | -0.557 | -0.535 | 0.054 |

| VRM | -0.573 | -0.534 | 0.016 | |

| IS | -0.078 | -0.398 | 0.035 | |