Exploring Robustness of Unsupervised Domain Adaptation in Semantic Segmentation

Abstract

Recent studies imply that deep neural networks are vulnerable to adversarial examples—inputs with a slight but intentional perturbation are incorrectly classified by the network. Such vulnerability makes it risky for some security-related applications (e.g., semantic segmentation in autonomous cars) and triggers tremendous concerns on the model reliability. For the first time, we comprehensively evaluate the robustness of existing UDA methods and propose a robust UDA approach. It is rooted in two observations: (i) the robustness of UDA methods in semantic segmentation remains unexplored, which pose a security concern in this field; and (ii) although commonly used self-supervision (e.g., rotation and jigsaw) benefits image tasks such as classification and recognition, they fail to provide the critical supervision signals that could learn discriminative representation for segmentation tasks. These observations motivate us to propose adversarial self-supervision UDA (or ASSUDA) that maximizes the agreement between clean images and their adversarial examples by a contrastive loss in the output space. Extensive empirical studies on commonly used benchmarks demonstrate that ASSUDA is resistant to adversarial attacks.

1 Introduction

Semantic segmentation aims to predict semantic labels of each pixel in the given images, which plays an important role in autonomous driving [18] and medical diagnosis [28]. However, pixel-wise labeling is extremely time-consuming and labor-intensive. For instance, 90 minutes are required to annotate a single image for the Cityscapes dataset [6]. Although synthetic datasets [29, 30] with freely-available labels provide an opportunity for model training, the model trained on synthetic data suffers from dramatic performance degradation when applying it directly to the real data of interest.

Motivated by the success of unsupervised domain adaptation (UDA) in image classification, various UDA methods for semantic segmentation are recently proposed. The key idea of these methods is to learn domain-invariant representations by minimizing marginal distribution distance between the source and target domains [14], adapting structured output space [38, 5], or reducing appearance discrepancy through image-to-image translation [1, 47, 17]. Another alternative is to explicitly explore the supervision signals from the target domain through self-training. The key idea is to alternatively generate pseudo labels on target data and re-train the model with these labels. Most of the existing state-of-the-art UDA methods in semantic segmentation rely on this strategy and demonstrate significant performance improvement. [51, 17, 15, 26, 41, 16, 45, 43, 31]. However, the limitation of self-training-based UDA methods lies in that pseudo labels are noisy and less accurate, giving rise to severe label corruption problems [27, 48]. Recent studies further prove that with high degrees of label corruption, models tend to overfit the misinformation in the corrupted labels [48, 13]. As a consequence, performance improvement cannot be guaranteed by simply increasing the re-training rounds.

Another critical issue of UDA methods in semantic segmentation is that they are possibly vulnerable to adversarial attacks. In other words, the performance of a UDA model may dramatically degrade under an unnoticeable perturbation. Unfortunately, the robustness of UDA methods remains largely unexplored. With the increasing applications of UDA methods in security-related areas, the lack of robustness of these methods leads to massive safety concerns. For instance, even small-magnitude perturbations on traffic signs can potentially cause disastrous consequences to autonomous cars [9, 33], such as life-threatening accidents.

Self-supervised learning (SSL) aims to learn more transferable and generalized features for vision tasks (e.g., classification and recognition) [8, 10, 12, 4]. Key to SSL is the design of pretext tasks, such as rotation prediction, selfie, and jigsaw, to obtain self-derived supervisory signals on unlabeled data. Recent studies reveal that SSL is effective in improving model robustness and uncertainty [13]. However, the commonly used pretext tasks fail to provide critical supervision signals for segmentation tasks where fine-grained features are required [46].

In this paper, we first perform a comprehensive study to evaluate the robustness of existing UDA methods in semantic segmentation. Our results first reveal that these methods can be easily fooled by small perturbations or adversarial attacks. We, therefore introduce a new UDA method known as ASSUDA to robustly adapt domain knowledge in urban-scene semantic segmentation. The key insight of our method is to leverage the regularization power of adversarial examples in self-supervision. Specifically, we propose the adversarial self-supervision that maximizes the agreement between clean images and their adversarial examples by a contrastive loss in the output space. The key to our method is that we use adversarial examples to (i) provide fine-grained supervision signals for unlabeled target data, so that more transferable and generalized features can be learned and (ii) improve the robustness of our model against adversarial attacks by taking advantages of both adversarial training and self-supervision.

Our main contributions can be summarized as: (i) To the best of our knowledge, this paper presents the first systematic study on how existing UDA methods in semantic segmentation are vulnerable to adversarial attacks. We believe this investigation provides new insight into this area. (ii) We propose a new UDA method that takes advantage of adversarial training and self-supervision to improve the model robustness. (iii) Comprehensive empirical studies demonstrate the robustness of our method against adversarial attacks on two benchmark settings, i.e., ”GTA5 to Cityscapes” and ”SYNTHIA to Cityscapes”.

2 Related Work

Unsupervised Domain Adaptation

Unsupervised domain adaptation (UDA) refers to the scenario where no labels are available for the target domain. In the past few years, various UDA methods are proposed for semantic segmentation, which can be mainly summarized as three streams: (i) adapt domain-invariant features by directly minimizing the representation distance between two domains [14, 50], (ii) align pixel space through translating images from the source domain to the target domain [1, 24], and (iii) align structured output space, which is inspired by the fact that source output and target output share substantial similarities in terms of structure layout [38]. However, simply aligning cross-domain distribution has limited capability in transferring pixel-level domain knowledge for semantic segmentation. To address this problem, the most recent studies integrate self-training into existing UDA frameworks and demonstrate the state-of-the-art performance [51, 17, 15, 26, 41, 16, 45, 43, 31]. However, the most obvious drawback of such a strategy is that the generated pseudo labels are noisy and the model tends to overfit the misinformation in the corrupted labels.

Our method instead resorts to self-supervision by integrating contrastive learning into existing UDA methods. This strategy demonstrates two advantages, i.e., (i) provides supervision for the target domain, which is proved to be robust to the label corruption, and (ii) encourages the model to learn more transferable and robust features. Another major difference is that our method mainly focuses on improving its robustness against adversarial attacks, which is overlooked by existing UDA methods.

Self-supervised Learning

Self-supervision aims to make use of massive amounts of unlabeled data through getting free supervision from the data itself. This is typically achieved by training self-supervised tasks (a.k.a., pretext tasks) through two paradigms, i.e., pre-training & fine-tuning or multi-task learning. Specifically, the pre-training & fine-tuning first performs pre-training on the pretext task, then fine-tunes on the downstream task. In contrast, multi-task learning optimizes the pretext task and the downstream task simultaneously. Our method falls into the latter, where the downstream task is to predict the segmentation labels of the target domain. To learn transferable and generalized features through self-supervision, it is essential to design pretext tasks that are tailored to the downstream task. Commonly used pretext tasks include exemplar [8], rotation [10], predicting the relative position between two random patches [7], and jigsaw [25]. Motivated by this, recent UDA methods introduce self-supervision into segmentation adaptation to learn domain invariant feature representations [42, 35]. Although commonly used pretext tasks contribute to cross-domain feature alignment, these tasks have limited ability in learning fine-grained representations that are essential in semantic segmentation.

By contrast, this paper proposes to use adversarial examples to build pretext tasks. Different from [4] that perform contrastive learning in the latent space, we maximize agreement between each sample and its adversarial example via a contrastive loss in the output space. Therefore, (i) our method is encouraged to learn more transferable features which are domain invariant and fine-grained, and (ii) the trained model is more robust to label corruption and adversarial attacks.

Adversarial Attacks

Previous studies reveal that adversarial attacks are commonly observed in machine learning methods such as SVMs [2] and logistic regression [21]. Recent publications suggest that neural networks are also highly vulnerable to adversarial perturbations [36, 11]. Even worse, adversarial attacks are proven to be transferable across different models [37], i.e., the adversarial examples generated to attack a specific model are also harmful to other models. To fully understand adversarial attacks in deep neural networks (DNNs), considerable attention is received in the past few years. Specifically, [11] proposes a fast gradient sign method (FGSM) to efficiently generate adversarial examples with only one gradient step. DeepFool [23] generates minimal perturbations by iteratively linearizing the image classifier. By utilizing the differential evolution, [34] enables us to generate one-pixel adversarial perturbations to accurately attack DNNs.

Unlike the aforementioned studies that focus on effectively creating adversarial attacks, our method uses adversarial examples to build pretext tasks for UDA models, and in turn to improve the model robustness. This is motivated by the fact that a clean image and its adversarial example should have the same segmentation output. Therefore, we can get supervision for free and encourage our method to learn discriminative representation for segmentation tasks.

| Base | GTA5 to City | SYNTHIA to City | |

|---|---|---|---|

| VGG16 | 0.1 | 41.3 30.5 | 39.0 29.3 |

| 0.25 | 41.3 14.6 | 39.0 13.6 | |

| 0.5 | 41.3 7.10 | 39.0 5.90 | |

| ResNet101 | 0.1 | 48.5 36.2 | 51.4 41.2 |

| 0.25 | 48.5 19.9 | 51.4 26.6 | |

| 0.5 | 48.5 6.50 | 51.4 11.0 |

3 Methodology

We first briefly recall the preliminary of UDA, adversarial training, and self-supervision. We then perform the first-of-its-kind empirical study to show that existing UDA methods are vulnerable to adversarial attacks, which arises tremendous concerns for the application of these methods in safety-critical areas. To address this problem, we propose a new domain adaptation method known as ASSUDA to improve the model robustness without satisfying the predictive accuracy. Specifically, our method takes advantage of adversarial training and self-supervision and thus enabling us to generate more robust and generalized features.

3.1 Preliminary

UDA in Semantic Segmentation

Consider the problem of UDA in semantic segmentation, we have the source-domain data and the target-domain data . Our goal is to learn a segmentation model which guarantees accurate prediction on the target domain. Formally, the loss function of a typical UDA model is given by,

| (1) |

where is the typical segmentation objective parameterized by , and measures the domain distance. The most commonly used is the adversarial loss that encourages a discriminative and domain-invariant feature representation through a domain discriminator [14, 1, 38], which is formalized as .

Adversarial Training

Recall that the objective of an vanilla adversarial training is:

| (2) |

where are allowed perturbations, is an adversarial example of with the perturbation . To obtain , the most commonly used attack method is FGSM [11]:

| (3) |

where is the magnitude of the perturbation. The generated adversarial examples are imperceptible to human but can easily fool deep neural networks. Recent studies further prove that training models exclusively on adversarial examples can improve the model robustness [20].

3.2 Robustness of UDA Methods

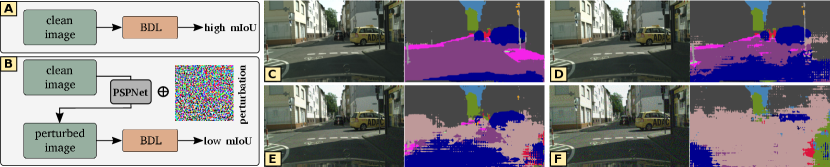

Although existing UDA methods achieve record-breaking predictive accuracy, their robustness against adversarial attacks remains unexplored. We hypothesis that they are also vulnerable to adversarial attacks, which makes it risky to apply those UDA methods in safety-critical environments. To fill this gap and to validate our hypothesis, we perform the black-box attack on BDL [17] by conducting the following two steps: (1) for each clean image in the test data, we first generate its adversarial example by attacking PSPNet [49] with , and , respectively; (2) we then evaluate the pre-trained BDL model on the generated adversarial examples (or perturbed test data) (Figure 1). The rationale behind this setting is that (i) most of existing state-of-the-art UDA methods in semantic segmentation [15, 26, 41, 16, 45, 43, 31] are built upon BDL, so conducting empirical studies on this method would be representative; (ii) a black-box attack assumes that the attacker can only access very limited information of the victim model, which is a common case in the real world. The commonly used attack strategy is: attackers first train a surrogate model to approximate the information of the victim model and then generate adversarial examples to attack the victim model. This strategy is motivated by the fact that adversarial attacks are transferable across different models [11], i.e., the adversarial examples generated to attack a specific model are also harmful to other models. Therefore, a black-box attack would be very dangerous if it can work. We hereby perform the black-box attack to examine the transferability of adversarial examples on UDA models.

As shown in Table 1, despite the remarkable performance of BDL on clean test data, slight and unnoticeable perturbations can result in dramatic performance degradation. For instance, BDL (with VGG16 backbone) only achieves a mean IoU (mIoU) of 30.5% on perturbed test data generated by , compared to 41.3% on clean images. By increasing the perturbation ratio , the performance can drop even further (Figure 1), indicating that BDL can be easily fooled by slight perturbations on the test data, even though the perturbation is generated by a surrogate model. This empirical study suggests that existing UDA methods are also possibly vulnerable to adversarial perturbations, which can make them especially risky for some security-related areas.

3.3 Adversarial Self-Supervision UDA

To address this problem, the most straightforward approach is adversarial training (equation 2) which requires class labels to generate adversarial examples. However, we are unable to access the labels of target data under the scenario of UDA (equation 1). The success of existing UDA methods heavily relies on the self-training strategy that alternatively generates highly confident pseudo labels for the target domain and re-trains the model using these labels [17, 15, 26, 41, 16, 45, 43, 31]. Although pseudo labels provide an opportunity to generate adversarial examples for the target data, these labels are usually noisy and less accurate. Hendrycks et al. prove that self-supervision improves the robustness of deep neural networks for vision tasks [13]. Nevertheless, commonly used pretext tasks (e.g., rotation prediction and jigsaw) in self-supervision fail to provide the critical supervision signals in learning discriminative features for semantic segmentation.

These challenges reach the question: can we take advantage of both adversarial training and self-supervision in improving the robustness of UDA methods in semantic segmentation? To achieve this goal, we propose to build the pretext task by using adversarial examples (Figure 2). Specifically, we consider a clean image and its adversarial example as a positive pair and maximize agreement on their segmentation outputs by a contrastive loss. This is motivated by the fact that a clean image and its adversarial example should share the same segmentation output. Different from [4] (designed for image classification) that uses a contrastive loss in the latent space, our pretext task is performed in the output space to learn discriminative representations for semantic segmentation. To adapt knowledge from the source domain to the target domain, a domain discriminator is applied to the source and target outputs. It is worth mentioning that the domain discriminator minimizes the domain-level difference, while the contrastive loss is performed on the pixel level.

Our model is built upon BDL [17], and follows the same experimental protocol to generate the transformed source images and pseudo labels of . For simplicity, we use to represent in the remaining of this paper, unless otherwise specified. At each training iteration , a minibatch of source-target pairs are randomly sampled from and , resulting in examples: . Their adversarial examples are generated by fixing and that are learned from the previous iteration ,

| (4) |

| (5) |

where is a domain discriminator parameterized by , is an adversarial loss which is designed for encouraging cross-domain knowledge alignment:

| (6) | ||||

Given these data points , each pair of examples is considered as a positive pair ( can be either or to denote a source or a target domain), while the other examples are considered as negative examples. We define the contrastive loss for a positive pair as

| (7) |

where is Gaussian kernel that is used to measure the similarity between two segmentation output tensors and , is the Euclidean distance. The contrastive loss is computed across all positive pairs (see Algorithm 1).

Taken together, our goal is to minimize the following loss function:

| (8) | ||||

Therefore, our model can leverage the regularization power of adversarial examples through a self-supervision manner, and in turn, improve the model robustness against adversarial attacks. The whole training process is detailed in Algorithm 1.

| GTA5 to Cityscapes | ||||||||||||||||||||||

|

road |

sidewalk |

building |

wall |

fence |

pole |

traffic light |

traffic sign |

vegetation |

terrain |

sky |

person |

rider |

car |

truck |

bus |

train |

motorbike |

bicycle |

mIoU |

mIoU drop |

||

| FDA [45] | 73.9 | 18.5 | 69.7 | 7.5 | 6.4 | 18.7 | 23.9 | 21.5 | 76.7 | 12.2 | 66.3 | 45.2 | 18.4 | 70.2 | 18.9 | 13.9 | 14.6 | 9.3 | 22.0 | 32.0 | 10.2 | |

| AdaptSegNet [38] | 71.9 | 22.7 | 70.8 | 7.6 | 7.9 | 16.5 | 15.4 | 8.3 | 71.8 | 12.2 | 52.6 | 33.8 | 0.6 | 65.8 | 15.8 | 7.6 | 0.0 | 0.7 | 0.1 | 25.4 | 9.6 | |

| PCEDA [44] | 90.9 | 25.0 | 73.5 | 6.3 | 7.2 | 14.2 | 24.0 | 27.4 | 76.2 | 23.4 | 70.3 | 45.0 | 19.9 | 70.0 | 16.3 | 20.3 | 0.0 | 9.8 | 25.1 | 33.4 | 11.2 | |

| BDL [17] | 64.0 | 21.9 | 70.0 | 10.0 | 3.9 | 8.4 | 20.5 | 12.8 | 77.4 | 22.3 | 79.2 | 49.8 | 13.8 | 73.2 | 17.8 | 12.1 | 0.0 | 7.8 | 15.2 | 30.5 | 10.8 | |

| Ours | 90.7 | 40.9 | 80.3 | 24.6 | 14.9 | 13.1 | 22.8 | 16.7 | 83.0 | 36.1 | 81.7 | 52.7 | 24.3 | 82.1 | 20.8 | 19.1 | 1.8 | 19.5 | 23.6 | 39.4 | 0.7 | |

| FDA | 25.4 | 3.4 | 24.5 | 0.5 | 1.6 | 2.4 | 7.7 | 6.4 | 58.6 | 1.2 | 44.8 | 6.5 | 1.4 | 14.6 | 4.9 | 0.4 | 0.1 | 0.1 | 1.3 | 10.8 | 31.4 | |

| AdaptSegNet | 5.4 | 5.0 | 43.8 | 1.2 | 2.2 | 3.7 | 6.3 | 2.5 | 31.3 | 3.9 | 22.8 | 6.2 | 0.0 | 11.9 | 4.3 | 0.1 | 0.0 | 0.0 | 0.0 | 7.9 | 27.1 | |

| PCEDA | 34.6 | 1.5 | 40.9 | 0.6 | 1.6 | 2.2 | 9.6 | 11.1 | 56.4 | 0.5 | 43.8 | 12.7 | 2.0 | 28.0 | 7.0 | 3.7 | 0.0 | 1.0 | 5.0 | 13.8 | 30.8 | |

| BDL | 25.4 | 4.7 | 55.1 | 2.8 | 1.5 | 1.3 | 9.1 | 4.3 | 61.3 | 1.5 | 54.1 | 26.7 | 0.1 | 20.7 | 6.5 | 1.5 | 0.0 | 0.7 | 1.0 | 14.6 | 26.7 | |

| Ours | 88.5 | 20.6 | 77.3 | 8.4 | 10.8 | 7.2 | 19.2 | 14.9 | 77.8 | 22.6 | 84.6 | 48.4 | 18.2 | 75.6 | 12.1 | 13.9 | 0.0 | 6.1 | 17.4 | 32.8 | 7.3 | |

| FDA | 22.0 | 0.4 | 3.2 | 0.0 | 1.3 | 0.1 | 1.9 | 0.6 | 33.8 | 1.1 | 22.6 | 0.1 | 0.0 | 0.1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 4.6 | 37.6 | |

| AdaptSegNet | 0.1 | 0.0 | 14.4 | 0.0 | 2.1 | 0.7 | 2.9 | 0.4 | 23.3 | 0.0 | 8.4 | 0.2 | 0.0 | 0.1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 2.8 | 32.2 | |

| PCEDA | 26.8 | 0.1 | 15.0 | 0.1 | 1.3 | 0.1 | 2.5 | 2.3 | 18.1 | 0.0 | 15.4 | 0.1 | 0.0 | 2.0 | 0.2 | 0.0 | 0.0 | 0.0 | 0.0 | 4.4 | 40.2 | |

| BDL | 27.8 | 0.9 | 36.8 | 0.5 | 1.2 | 0.1 | 2.7 | 0.9 | 34.1 | 0.0 | 25.1 | 5.4 | 0.0 | 0.7 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 7.1 | 34.2 | |

| Ours | 54.0 | 2.1 | 66.2 | 0.9 | 3.3 | 1.0 | 13.0 | 8.8 | 62.0 | 3.9 | 73.8 | 29.1 | 1.4 | 35.3 | 3.2 | 2.5 | 0.0 | 0.0 | 2.9 | 19.1 | 21.0 | |

| FDA [45] | 85.8 | 27.8 | 70.2 | 8.6 | 7.4 | 17.9 | 30.7 | 23.4 | 70.8 | 22.4 | 59.7 | 53.8 | 26.5 | 71.6 | 29.2 | 26.8 | 6.3 | 23.1 | 38.3 | 36.9 | 13.5 | |

| FADA [40] | 53.2 | 19.7 | 65.2 | 6.3 | 14.1 | 21.3 | 19.0 | 8.2 | 74.4 | 21.6 | 55.7 | 50.3 | 14.8 | 73.2 | 13.4 | 9.1 | 1.0 | 9.6 | 20.5 | 29.0 | 20.2 | |

| IntraDA [26] | 89.1 | 31.1 | 76.6 | 11.3 | 16.4 | 14.9 | 25.3 | 15.8 | 80.8 | 29.4 | 74.9 | 54.3 | 23.3 | 78.7 | 32.1 | 39.2 | 0.0 | 21.5 | 30.8 | 39.2 | 7.1 | |

| CLAN [19] | 75.8 | 21.3 | 69.8 | 11.9 | 7.3 | 12.7 | 24.6 | 8.8 | 77.1 | 20.4 | 66.9 | 51.0 | 19.6 | 65.4 | 28.7 | 31.3 | 2.5 | 15.2 | 24.8 | 33.4 | 9.8 | |

| MaxSquare [22] | 28.6 | 9.3 | 52.0 | 3.9 | 3.1 | 9.7 | 29.1 | 10.3 | 73.6 | 10.2 | 41.7 | 46.1 | 19.1 | 36.1 | 26.5 | 10.7 | 0.2 | 17.2 | 28.0 | 24.0 | 22.4 | |

| AdaptSegNet [38] | 80.9 | 21.2 | 66.3 | 7.4 | 5.7 | 7.4 | 25.2 | 6.5 | 76.2 | 12.5 | 69.9 | 45.6 | 11.7 | 71.3 | 21.8 | 8.0 | 1.6 | 6.5 | 14.3 | 29.5 | 12.9 | |

| PCEDA [44] | 89.8 | 31.8 | 75.8 | 17.4 | 9.2 | 26.9 | 31.1 | 30.0 | 80.0 | 19.3 | 85.6 | 55.2 | 27.5 | 79.4 | 30.2 | 34.4 | 0.0 | 20.3 | 38.3 | 41.2 | 9.3 | |

| BDL [17] | 75.5 | 31.3 | 75.3 | 8.8 | 8.5 | 17.1 | 29.3 | 23.0 | 76.9 | 22.4 | 80.5 | 51.2 | 25.8 | 51.9 | 24.0 | 33.3 | 1.6 | 20.3 | 31.3 | 36.2 | 12.3 | |

| Ours | 88.8 | 41.3 | 81.8 | 26.0 | 17.4 | 27.8 | 30.2 | 37.9 | 81.6 | 30.5 | 81.2 | 55.0 | 29.4 | 79.7 | 33.0 | 38.4 | 0.0 | 32.7 | 34.8 | 44.6 | 1.8 | |

| FDA | 50.8 | 6.7 | 51.0 | 1.6 | 3.7 | 3.5 | 17.2 | 6.3 | 49.5 | 1.5 | 60.9 | 28.3 | 12.8 | 49.1 | 14.5 | 4.6 | 1.2 | 2.6 | 25.0 | 20.6 | 29.8 | |

| FADA | 54.1 | 14.8 | 50.4 | 2.2 | 8.2 | 6.8 | 4.7 | 0.9 | 59.4 | 7.4 | 32.8 | 29.9 | 3.0 | 53.6 | 4.1 | 0.3 | 1.2 | 0.7 | 5.9 | 17.9 | 31.3 | |

| IntraDA | 26.4 | 3.0 | 46.3 | 0.4 | 4.5 | 0.7 | 8.6 | 0.5 | 30.9 | 0.4 | 43.9 | 21.3 | 1.2 | 47.5 | 8.33 | 7.5 | 0.0 | 0.2 | 6.5 | 13.6 | 32.7 | |

| CLAN | 58.3 | 9.4 | 52.7 | 5.0 | 2.7 | 1.3 | 14.7 | 2.1 | 58.5 | 3.0 | 64.5 | 37.6 | 14.0 | 46.1 | 20.0 | 13.6 | 1.8 | 3.6 | 17.3 | 22.4 | 20.8 | |

| MaxSquare | 15.2 | 2.3 | 37.9 | 2.7 | 1.5 | 1.0 | 15.8 | 1.8 | 54.1 | 1.5 | 30.6 | 14.3 | 7.2 | 31.5 | 11.8 | 1.6 | 0.0 | 0.7 | 13.8 | 12.9 | 33.5 | |

| AdaptSegNet | 66.9 | 4.8 | 32.8 | 1.3 | 2.4 | 0.7 | 13.2 | 1.2 | 60.6 | 2.4 | 65.3 | 19.6 | 1.5 | 49.0 | 8.2 | 1.2 | 0.0 | 0.1 | 0.8 | 17.5 | 24.9 | |

| PCEDA | 76.4 | 3.0 | 50.9 | 1.5 | 3.3 | 11.5 | 18.1 | 10.0 | 59.3 | 0.6 | 59.4 | 37.0 | 16.1 | 49.6 | 11.6 | 5.6 | 0.0 | 2.6 | 25.2 | 23.3 | 27.2 | |

| BDL | 40.7 | 7.2 | 56.6 | 3.1 | 2.0 | 4.0 | 20.3 | 5.5 | 62.7 | 1.5 | 65.8 | 19.4 | 15.3 | 30.2 | 8.0 | 8.4 | 0.0 | 6.4 | 21.2 | 19.9 | 28.6 | |

| Ours | 74.1 | 26.3 | 69.9 | 9.1 | 5.6 | 20.7 | 25.0 | 29.6 | 68.0 | 11.7 | 77.5 | 43.0 | 20.2 | 66.8 | 19.8 | 24.9 | 0.0 | 12.6 | 25.0 | 33.1 | 13.3 | |

| FDA | 14.5 | 0.9 | 23.2 | 1.0 | 5.3 | 1.1 | 7.6 | 0.9 | 28.4 | 0.0 | 57.9 | 3.0 | 0.2 | 8.2 | 3.8 | 0.0 | 0.0 | 0.0 | 1.6 | 8.3 | 42.1 | |

| FADA | 17.4 | 7.6 | 18.1 | 1.2 | 2.1 | 0.4 | 0.5 | 0.1 | 29.2 | 0.0 | 11.8 | 3.8 | 0.2 | 18.5 | 0.0 | 0.1 | 0.0 | 0.0 | 0.0 | 5.8 | 43.4 | |

| IntraDA | 26.4 | 3.0 | 46.3 | 0.4 | 4.5 | 0.7 | 8.6 | 0.5 | 30.9 | 0.4 | 43.9 | 21.3 | 1.2 | 47.5 | 8.3 | 7.5 | 0.0 | 0.2 | 6.5 | 13.6 | 32.7 | |

| CLAN | 33.0 | 0.6 | 39.2 | 2.3 | 1.8 | 0.1 | 8.4 | 0.2 | 36.2 | 0.3 | 38.1 | 21.5 | 3.4 | 38.0 | 9.4 | 3.4 | 0.0 | 0.1 | 4.3 | 12.6 | 30.6 | |

| MaxSquare | 17.0 | 0.3 | 33.6 | 0.6 | 2.2 | 0.4 | 9.9 | 0.4 | 29.5 | 0.0 | 31.2 | 3.5 | 0.4 | 28.8 | 5.7 | 0.4 | 0.0 | 0.0 | 1.3 | 8.7 | 37.7 | |

| AdaptSegNet | 43.0 | 0.2 | 10.1 | 0.7 | 2.8 | 0.2 | 7.3 | 0.1 | 34.8 | 0.0 | 58.1 | 4.9 | 0.0 | 18.6 | 0.8 | 0.3 | 0.0 | 0.0 | 0.0 | 9.6 | 32.8 | |

| PCEDA | 30.4 | 0.0 | 36.6 | 0.2 | 1.7 | 1.5 | 4.0 | 1.2 | 27.1 | 0.0 | 8.1 | 9.7 | 0.4 | 7.4 | 1.2 | 0.0 | 0.0 | 0.0 | 5.3 | 7.1 | 43.4 | |

| BDL | 9.7 | 0.1 | 25.9 | 0.0 | 0.8 | 0.2 | 8.1 | 0.6 | 43.5 | 0.0 | 13.7 | 4.8 | 4.3 | 7.6 | 2.6 | 0.0 | 0.0 | 0.2 | 1.9 | 6.5 | 42.0 | |

| Ours | 40.8 | 11.0 | 47.5 | 2.1 | 1.9 | 10.2 | 16.5 | 13.6 | 45.5 | 0.5 | 72.6 | 14.4 | 6.0 | 23.4 | 13.4 | 4.2 | 0.0 | 0.7 | 9.4 | 17.6 | 28.8 | |

| SYNTHIA to Cityscapes | |||||||||||||||||||

|

road |

sidewalk |

building |

wall |

fence |

pole |

traffic light |

traffic sign |

vegetation |

sky |

person |

rider |

car |

bus |

motorbike |

bicycle |

mIoU |

mIoU drop |

||

| FDA [45] | 68.5 | 28.4 | 72.7 | 0.4 | 0.3 | 22.2 | 5.1 | 19.1 | 57.6 | 75.7 | 45.8 | 18.8 | 55.6 | 18.5 | 5.1 | 31.5 | 32.8 | 7.7 | |

| PCEDA [44] | 80.9 | 25.0 | 73.5 | 6.3 | 7.1 | 14.2 | 24.0 | 27.4 | 76.2 | 70.3 | 45.0 | 19.9 | 70.0 | 20.3 | 9.8 | 25.1 | 37.2 | 3.9 | |

| BDL [17] | 34.9 | 21.2 | 47.8 | 0.0 | 0.2 | 20.5 | 9.2 | 20.2 | 67.2 | 74.3 | 49.0 | 17.5 | 57.2 | 11.9 | 2.5 | 34.6 | 29.3 | 9.7 | |

| Ours | 88.4 | 43.4 | 77.7 | 0.1 | 0.2 | 25.6 | 10.9 | 27.8 | 78.7 | 80.1 | 55.3 | 21.8 | 76.5 | 18.2 | 6.8 | 42.9 | 40.9 | -0.8 | |

| FDA | 46.3 | 16.0 | 38.7 | 0.0 | 0.2 | 4.9 | 2.5 | 8.9 | 31.3 | 38.9 | 8.6 | 5.3 | 17.7 | 6.0 | 1.3 | 5.4 | 14.5 | 26.0 | |

| PCEDA | 75.6 | 11.4 | 59.1 | 0.0 | 0.4 | 9.6 | 5.5 | 12.9 | 63.1 | 45.0 | 30.7 | 13.4 | 34.9 | 8.6 | 2.5 | 24.5 | 24.8 | 16.3 | |

| BDL | 8.0 | 8.9 | 31.1 | 0.0 | 0.14 | 8.7 | 6.9 | 9.8 | 52.0 | 54.1 | 22.9 | 4.9 | 25.6 | 2.5 | 0.8 | 13.3 | 13.6 | 25.4 | |

| Ours | 84.0 | 29.7 | 69.1 | 0.0 | 0.2 | 22.1 | 10.2 | 24.9 | 70.1 | 56.7 | 45.9 | 17.2 | 59.2 | 13.7 | 3.2 | 31.5 | 33.6 | 6.5 | |

| FDA | 42.2 | 4.9 | 14.2 | 0.0 | 0.1 | 0.6 | 1.0 | 1.7 | 26.2 | 1.9 | 0.5 | 0.4 | 1.5 | 0.1 | 0.1 | 0.1 | 6.0 | 34.5 | |

| PCEDA | 66.2 | 1.1 | 47.9 | 0.0 | 0.4 | 3.1 | 2.5 | 5.0 | 47.8 | 18.8 | 10.0 | 1.9 | 8.3 | 3.2 | 1.1 | 10.2 | 14.2 | 26.9 | |

| BDL | 0.6 | 1.0 | 24.8 | 0.0 | 0.0 | 1.6 | 1.9 | 2.3 | 35.8 | 18.6 | 2.2 | 0.1 | 4.1 | 0.1 | 0.0 | 0.5 | 5.9 | 33.1 | |

| Ours | 65.4 | 9.3 | 46.9 | 0.0 | 0.4 | 15.0 | 7.0 | 14.5 | 48.6 | 9.9 | 22.8 | 7.6 | 26.9 | 4.4 | 1.5 | 15.6 | 18.5 | 21.6 | |

| FDA [45] | 83.4 | 32.4 | 73.5 | ✗ | ✗ | ✗ | 13.1 | 18.9 | 71.6 | 79.5 | 56.1 | 24.9 | 77.5 | 27.6 | 18.2 | 42.8 | 47.7 | 4.8 | |

| FADA [40] | 74.0 | 32.5 | 69.8 | ✗ | ✗ | ✗ | 6.8 | 15.8 | 57.0 | 58.3 | 46.7 | 8.6 | 55.1 | 18.0 | 4.5 | 9.8 | 35.1 | 17.4 | |

| DADA [39] | 80.0 | 33.8 | 75.0 | ✗ | ✗ | ✗ | 8.0 | 9.4 | 62.1 | 76.3 | 49.7 | 14.3 | 76.3 | 27.8 | 5.2 | 31.7 | 42.3 | 7.5 | |

| MaxSquare [22] | 70.1 | 23.3 | 72.8 | ✗ | ✗ | ✗ | 6.7 | 7.2 | 60.2 | 77.6 | 48.7 | 13.8 | 63.7 | 17.4 | 3.1 | 20.1 | 37.3 | 10.9 | |

| AdaptSegNet [38] | 79.5 | 34.7 | 76.6 | ✗ | ✗ | ✗ | 4.1 | 5.4 | 61.0 | 80.8 | 49.3 | 18.3 | 72.1 | 26.1 | 7.5 | 29.8 | 41.9 | 4.8 | |

| PCEDA [44] | 64.5 | 33.4 | 77.1 | ✗ | ✗ | ✗ | 17.6 | 16.5 | 50.1 | 81.3 | 48.9 | 24.8 | 71.9 | 25.7 | 13.3 | 41.0 | 43.6 | 10.0 | |

| BDL [17] | 79.2 | 33.7 | 75.3 | ✗ | ✗ | ✗ | 5.6 | 8.7 | 61.1 | 80.6 | 45.0 | 21.7 | 65.7 | 26.7 | 8.5 | 24.5 | 41.2 | 10.2 | |

| Ours | 88.3 | 40.1 | 76.9 | ✗ | ✗ | ✗ | 11.3 | 15.9 | 68.8 | 81.4 | 53.3 | 27.3 | 78.2 | 33.1 | 16.9 | 39.4 | 48.5 | 3.6 | |

| FDA | 8.6 | 9.0 | 40.8 | ✗ | ✗ | ✗ | 3.9 | 7.1 | 21.5 | 51.3 | 14.5 | 6.9 | 35.3 | 5.4 | 0.0 | 14.4 | 16.8 | 35.7 | |

| FADA | 80.8 | 23.5 | 59.3 | ✗ | ✗ | ✗ | 1.7 | 3.7 | 50.6 | 15.6 | 26.2 | 0.8 | 21.2 | 6.2 | 0.3 | 2.1 | 22.5 | 30.0 | |

| DADA | 58.0 | 11.5 | 42.7 | ✗ | ✗ | ✗ | 4.5 | 4.2 | 31.9 | 41.2 | 23.4 | 6.0 | 53.9 | 8.3 | 0.4 | 14.0 | 23.1 | 26.7 | |

| MaxSquare | 70.3 | 4.6 | 53.1 | ✗ | ✗ | ✗ | 8.1 | 6.0 | 37.2 | 61.0 | 11.2 | 3.9 | 42.3 | 6.9 | 0.4 | 3.4 | 23.7 | 24.5 | |

| AdaptSegNet | 28.4 | 7.6 | 56.8 | ✗ | ✗ | ✗ | 4.4 | 2.6 | 26.4 | 62.8 | 22.5 | 9.8 | 44.2 | 8.3 | 1.1 | 10.2 | 21.9 | 24.8 | |

| PCEDA | 15.4 | 7.2 | 64.9 | ✗ | ✗ | ✗ | 9.3 | 9.8 | 27.0 | 71.4 | 35.3 | 13.9 | 52.0 | 12.3 | 2.2 | 25.4 | 26.7 | 26.9 | |

| BDL | 46.9 | 9.1 | 65.5 | ✗ | ✗ | ✗ | 4.0 | 5.9 | 34.7 | 68.5 | 22.7 | 12.5 | 50.7 | 10.8 | 1.2 | 12.8 | 26.6 | 21.3 | |

| Ours | 74.1 | 13.1 | 58.7 | ✗ | ✗ | ✗ | 7.7 | 15.1 | 39.2 | 73.4 | 22.0 | 12.0 | 47.2 | 14.0 | 2.3 | 21.5 | 30.8 | 20.7 | |

| FDA | 0.0 | 0.0 | 7.2 | ✗ | ✗ | ✗ | 1.3 | 0.7 | 17.8 | 13.7 | 0.0 | 0.0 | 2.5 | 0.2 | 0.0 | 0.0 | 3.3 | 49.2 | |

| FADA | 76.0 | 15.9 | 56.3 | ✗ | ✗ | ✗ | 0.2 | 0.6 | 45.0 | 0.2 | 7.6 | 0.0 | 5.2 | 0.9 | 0.0 | 0.1 | 16.0 | 36.5 | |

| DADA | 42.9 | 2.3 | 16.3 | ✗ | ✗ | ✗ | 1.8 | 0.7 | 24.1 | 12.5 | 2.5 | 0.8 | 23.5 | 2.1 | 0.0 | 4.8 | 10.3 | 39.5 | |

| MaxSquare | 42.7 | 0.2 | 25.3 | ✗ | ✗ | ✗ | 5.0 | 2.7 | 24.5 | 18.0 | 0.8 | 0.1 | 15.0 | 1.5 | 0.0 | 0.2 | 10.5 | 37.7 | |

| AdaptSegNet | 2.1 | 0.4 | 24.5 | ✗ | ✗ | ✗ | 2.1 | 0.5 | 19.2 | 21.4 | 1.4 | 2.2 | 11.7 | 1.7 | 0.1 | 2.5 | 6.9 | 39.8 | |

| PCEDA | 0.1 | 0.1 | 40.0 | ✗ | ✗ | ✗ | 2.4 | 1.8 | 21.0 | 37.2 | 13.1 | 1.3 | 9.3 | 2.5 | 0.7 | 1.6 | 10.1 | 43.5 | |

| BDL | 2.8 | 0.7 | 32.1 | ✗ | ✗ | ✗ | 2.0 | 1.8 | 20.3 | 53.7 | 2.7 | 1.3 | 22.3 | 1.4 | 0.4 | 1.7 | 11.0 | 40.4 | |

| Ours | 15.3 | 2.0 | 26.0 | ✗ | ✗ | ✗ | 2.5 | 5.9 | 20.7 | 51.5 | 1.6 | 4.0 | 10.8 | 1.9 | 0.4 | 9.6 | 11.7 | 40.4 | |

4 Experiments

4.1 Datasets

Following the same setting as previous studies, we use GTA5 [29] and SYNTHIA-RAND-CITYSCAPES [30] as the source domain, and use Cityscapes [6] as the target domain in our study. GTA5 is composed of 24,966 images (resolution: 1914 1052) with pixel-accurate semantic labels, which is collected from a photo-realistic open-world game known as Grand Theft Auto V. SYNTHIA-RAND-CITYSCAPES dataset is generated from a virtual city, including 9,400 images (resolution: 1280 760) with precise pixel-level semantic annotations. Cityscapes contains 5,000 images (resolution: 2048 1024), which is a large-scale street scene datasets collected from 50 cities. There are 2,975 images for training, 500 images for validation, and 1,525 images for testing. The training set from Cityscapes is used as target domain images, and we use the validation set for performance evaluation.

4.2 Implementation Details

Network Architecture

We follow the same experimental protocol in this area, which uses two network architectures: DeepLab-v2 [3] with VGG16 [32] backbone, and DeepLab-v2 with ResNet101 backbone. The domain discriminator has 5 convolution layers with kernel 44 and stride of 2, each of which is followed by a leaky ReLU parameterized by 0.2 except the last one. The channel number of each layer is {64, 128, 256, 512, 1}.

Model Training

Adam optimizer with initial learning rate 1e-4 and momentum (0.9, 0.99) is used in DeepLab-VGG16. We apply step decay to the learning rate with step size 30000 and drop factor 0.1. Stochastic Gradient Descent optimizer with momentum 0.9 and weight decay 5e-4 is used in DeepLab-ResNet101. The learning rate of DeepLab-ResNet101 is initialized as 1e-4 and is decreased by the polynomial policy with a power of 0.9. Adam optimizer with momentum (0.9, 0.99) and initial learning rate 1e-6 is used in the domain discriminator. We set in equation 4 and 5.

Perturbed Test Data

To evaluate the robustness of our approach and existing UDA methods against adversarial attacks, we generate the perturbed image for each clean test image from Cityscapes. we use PSPNet [49] as the surrogate model owing to its popularity in the semantic segmentation area. To attack PSPNet, we use FGSM with three different values, i.e., , , and . For each , we generate its corresponding adversarial examples and use them to evaluate the robustness of recently published UDA methods. For a fair comparison, we directly download the pre-trained models from the original paper and evaluate these models on the perturbed test data generated by PSPNet.

4.3 Experimental Results

Since the robustness of existing UDA methods remains unexplored, we first comprehensively evaluate their robustness against adversarial attacks in this section (Table 2 and Table 3). We then perform a comparison of our method on two widely used benchmark settings, i.e., ”GTA5 to Cityscapes” and ”SYNTHIA to Cityscapes”. Two criteria, i.e., mIoU and mIoU drop are used for performance assessment. Specifically, mIoU indicates the mean IoU on perturbed test data, while mIoU drop indicates the performance degradation compared to the mIoU on clean test data. Therefore, the higher the mIoU and the lower the mIoU drop, the more robust the model.

GTA5 to Cityscapes

As shown in Table 2, we achieve the best performance on all three kinds of adversarial attacks. In particular, even slight adversarial perturbations can mislead AdaptSegNet [38] and BDL [17] and dramatically degrade their performance. For instance, when evaluated with VGG16 backbone on perturbed test data from , they only achieve mIoU 7.9 and mIoU 14.6, with mIoU drop 27.1 and 26.7, respectively. By contrast, our method still gets mIoU 32.8 and only has a performance drop of mIoU 7.3. Similarly, two recently proposed UDA methods, i.e., FDA [45] and PCEDA [44] suffer from mIoU drop of 31.4 and 30.8, respectively. The results suggest that existing UDA methods in semantic segmentation are broadly vulnerable to adversarial attacks, even a slight but intentional perturbation leads to dramatic performance degradation. The reason is that although these methods demonstrate remarkable performance on clean test data, none of them, however, take the adversarial attack into account. Instead, we innovatively propose adversarial self-supervision to take advantage of adversarial examples, to improve the robustness of UDA models. Note, different from previous contrastive learning models [4], our method is tailored to UDA environments, which can adapt domain knowledge simultaneously. This is evident in the qualitative comparison in Figure 3, where our method demonstrates accurate predictions on the target domain.

SYNTHIA to Cityscapes

Table 3 shows the performance comparison on ”SYNTHIA to Cityscapes”, where our method again demonstrates significant robustness improvement. In contrast, other UDA methods can be easily fooled by small perturbation on the test data. The only exception is when evaluated with ResNet101 backbone on perturbed test data , where FADA [40] achieves the best accuracy. Much of this interest is attributed to its fine-grained adversarial strategy for class-level alignment. Another interesting observation is that our model achieves better performance on perturbed test data () than on clean test data by using the VGG16 backbone. This can be explained by the fact that training on adversarial examples can regularize the model somewhat, as reported in [11, 36]. We further perform a qualitative study of our method when evaluated on the different magnitude of perturbations as shown in Figure 4.

Ablation Study

We perform the ablation study of the perturbation magnitude (equation 4 and 5). Table 4 reveals that results in more robust UDA model than . The reason is that the adversarial examples generated by are highly perturbed compared to the adversarial examples from , which in turn encourages our model to be more robust against perturbations.

| GTA5 to Cityscapes | SYNTHIA to Cityscapes | |||

| 0.1 | 35.9 | 39.4 | 37.2 | 40.9 |

| 0.25 | 21.2 | 32.8 | 21.5 | 33.6 |

| 0.5 | 8.3 | 19.1 | 8.9 | 18.5 |

| 0.1 | 42.7 | 44.6 | 45.5 | 48.5 |

| 0.25 | 30.2 | 33.1 | 26.2 | 30.8 |

| 0.5 | 14.9 | 17.6 | 10.4 | 11.7 |

5 Conclusion

In this paper, we introduce a new unsupervised domain adaptation framework for semantic segmentation. This is motivated by the observation that the robustness of semantic adaptation methods against adversarial attacks has not been investigated. For the first time, we perform a comprehensive evaluation of their robustness and propose the adversarial self-supervision by maximizing agreement between clean samples and their adversarial examples. Extensive empirical studies are performed to explore the benefits of our method in improving the model’s robustness on adversarial attacks. The superiority of our method is also thoroughly proved on ”GTA5-to-Cityscapes” and ”SYNTHIA-to-Cityscapes”.

References

- [1] Cycada: Cycle consistent adversarial domain adaptation. In International Conference on Machine Learning (ICML), 2018.

- [2] Battista Biggio, Blaine Nelson, and Pavel Laskov. Poisoning attacks against support vector machines. International conference on machine learning (ICML), 2012.

- [3] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L Yuille. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE transactions on pattern analysis and machine intelligence (TPAMI), 40(4):834–848, 2018.

- [4] Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. A simple framework for contrastive learning of visual representations. International Conference on Machine Learning (ICML), 2020.

- [5] Yuhua Chen, Wen Li, and Luc Van Gool. Road: Reality oriented adaptation for semantic segmentation of urban scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 7892–7901, 2018.

- [6] Marius Cordts, Mohamed Omran, Sebastian Ramos, Timo Rehfeld, Markus Enzweiler, Rodrigo Benenson, Uwe Franke, Stefan Roth, and Bernt Schiele. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 3213–3223, 2016.

- [7] Carl Doersch, Abhinav Gupta, and Alexei A Efros. Unsupervised visual representation learning by context prediction. In Proceedings of the IEEE international conference on computer vision (ICCV), 2015.

- [8] Alexey Dosovitskiy, Philipp Fischer, Jost Tobias Springenberg, Martin Riedmiller, and Thomas Brox. Discriminative unsupervised feature learning with exemplar convolutional neural networks. IEEE transactions on pattern analysis and machine intelligence (PAMI), 2015.

- [9] Kevin Eykholt, Ivan Evtimov, Earlence Fernandes, Bo Li, Amir Rahmati, Chaowei Xiao, Atul Prakash, Tadayoshi Kohno, and Dawn Song. Robust physical-world attacks on deep learning visual classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- [10] Spyros Gidaris, Praveer Singh, and Nikos Komodakis. Unsupervised representation learning by predicting image rotations. International Conference on Learning Representations (ICLR), 2018.

- [11] Ian J Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. International Conference on Learning Representations (ICLR), 2015.

- [12] Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross Girshick. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- [13] Dan Hendrycks, Kimin Lee, and Mantas Mazeika. Using pre-training can improve model robustness and uncertainty. International Conference on Machine Learning (ICML), 2019.

- [14] Judy Hoffman, Dequan Wang, Fisher Yu, and Trevor Darrell. Fcns in the wild: Pixel-level adversarial and constraint-based adaptation. arXiv preprint arXiv:1612.02649, 2016.

- [15] Jiaxing Huang, Shijian Lu, Dayan Guan, and Xiaobing Zhang. Contextual-relation consistent domain adaptation for semantic segmentation. Proceedings of the European Conference on Computer Vision (ECCV), 2020.

- [16] Myeongjin Kim and Hyeran Byun. Learning texture invariant representation for domain adaptation of semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 12975–12984, 2020.

- [17] Yunsheng Li, Lu Yuan, and Nuno Vasconcelos. Bidirectional learning for domain adaptation of semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- [18] Pauline Luc, Natalia Neverova, Camille Couprie, Jakob Verbeek, and Yann LeCun. Predicting deeper into the future of semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2017.

- [19] Yawei Luo, Liang Zheng, Tao Guan, Junqing Yu, and Yi Yang. Taking a closer look at domain shift: Category-level adversaries for semantics consistent domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 2507–2516, 2019.

- [20] Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. Towards deep learning models resistant to adversarial attacks. International Conference on Learning Representations (ICLR), 2018.

- [21] Shike Mei and Xiaojin Zhu. Using machine teaching to identify optimal training-set attacks on machine learners. In AAAI Conference on Artificial Intelligence (AAAI), 2015.

- [22] Hongyang Xue Minghao Chen and Deng Cai. Domain adaptation for semantic segmentation with maximum squares loss. In Proceedings of the IEEE international conference on computer vision (ICCV), 2019.

- [23] Seyed-Mohsen Moosavi-Dezfooli, Alhussein Fawzi, and Pascal Frossard. Deepfool: a simple and accurate method to fool deep neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), 2016.

- [24] Zak Murez, Soheil Kolouri, David Kriegman, Ravi Ramamoorthi, and Kyungnam Kim. Image to image translation for domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), volume 13, 2018.

- [25] Mehdi Noroozi and Paolo Favaro. Unsupervised learning of visual representations by solving jigsaw puzzles. In European Conference on Computer Vision (ECCV), 2016.

- [26] Fei Pan, Inkyu Shin, Francois Rameau, Seokju Lee, and In So Kweon. Unsupervised intra-domain adaptation for semantic segmentation through self-supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 3764–3773, 2020.

- [27] Giorgio Patrini, Alessandro Rozza, Aditya Krishna Menon, Richard Nock, and Lizhen Qu. Making deep neural networks robust to label noise: A loss correction approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

- [28] Ashwin Raju, Chi-Tung Cheng, Yunakai Huo, Jinzheng Cai, Junzhou Huang, Jing Xiao, Le Lu, ChienHuang Liao, and Adam P Harrison. Co-heterogeneous and adaptive segmentation from multi-source and multi-phase ct imaging data: A study on pathological liver and lesion segmentation. Proceedings of the European Conference on Computer Vision (ECCV), 2020.

- [29] Stephan R Richter, Vibhav Vineet, Stefan Roth, and Vladlen Koltun. Playing for data: Ground truth from computer games. In Proceedings of the European Conference on Computer Vision (ECCV), pages 102–118, 2016.

- [30] German Ros, Laura Sellart, Joanna Materzynska, David Vazquez, and Antonio M Lopez. The synthia dataset: A large collection of synthetic images for semantic segmentation of urban scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 3234–3243, 2016.

- [31] Inkyu Shin, Sanghyun Woo, Fei Pan, and In So Kweon. Two-phase pseudo label densification for self-training based domain adaptation. Proceedings of the European Conference on Computer Vision (ECCV), 2020.

- [32] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. In International Conference on Learning Representations (ICLR), 2015.

- [33] Chawin Sitawarin, Arjun Nitin Bhagoji, Arsalan Mosenia, Mung Chiang, and Prateek Mittal. Darts: Deceiving autonomous cars with toxic signs. arXiv preprint arXiv:1802.06430, 2018.

- [34] Jiawei Su, Danilo Vasconcellos Vargas, and Kouichi Sakurai. One pixel attack for fooling deep neural networks. IEEE Transactions on Evolutionary Computation, 2019.

- [35] Yu Sun, Eric Tzeng, Trevor Darrell, and Alexei A Efros. Unsupervised domain adaptation through self-supervision. arXiv preprint arXiv:1909.11825, 2019.

- [36] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. Intriguing properties of neural networks. International Conference on Learning Representations (ICLR), 2014.

- [37] Florian Tramèr, Nicolas Papernot, Ian Goodfellow, Dan Boneh, and Patrick McDaniel. The space of transferable adversarial examples. arXiv preprint arXiv:1704.03453, 2017.

- [38] Yi-Hsuan Tsai, Wei-Chih Hung, Samuel Schulter, Kihyuk Sohn, Ming-Hsuan Yang, and Manmohan Chandraker. Learning to adapt structured output space for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- [39] Tuan-Hung Vu, Himalaya Jain, Maxime Bucher, Matthieu Cord, and Patrick Pérez. Dada: Depth-aware domain adaptation in semantic segmentation. In Proceedings of the IEEE international conference on computer vision (ICCV), 2019.

- [40] Haoran Wang, Tong Shen, Wei Zhang, Lingyu Duan, and Tao Mei. Classes matter: A fine-grained adversarial approach to cross-domain semantic segmentation. Proceedings of the European Conference on Computer Vision (ECCV), 2020.

- [41] Zhonghao Wang, Mo Yu, Yunchao Wei, Rogerio Feris, Jinjun Xiong, Wen-mei Hwu, Thomas S Huang, and Honghui Shi. Differential treatment for stuff and things: A simple unsupervised domain adaptation method for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 12635–12644, 2020.

- [42] Jiaolong Xu, Liang Xiao, and Antonio M López. Self-supervised domain adaptation for computer vision tasks. IEEE Access, 2019.

- [43] Jinyu Yang, Weizhi An, Sheng Wang, Xinliang Zhu, Chaochao Yan, and Junzhou Huang. Label-driven reconstruction for domain adaptation in semantic segmentation. Proceedings of the European Conference on Computer Vision (ECCV), 2020.

- [44] Yanchao Yang, Dong Lao, Ganesh Sundaramoorthi, and Stefano Soatto. Phase consistent ecological domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- [45] Yanchao Yang and Stefano Soatto. Fda: Fourier domain adaptation for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 4085–4095, 2020.

- [46] Xiaohang Zhan, Ziwei Liu, Ping Luo, Xiaoou Tang, and Chen Change Loy. Mix-and-match tuning for self-supervised semantic segmentation. AAAI Conference on Artificial Intelligence (AAAI), 2018.

- [47] Yiheng Zhang, Zhaofan Qiu, Ting Yao, Dong Liu, and Tao Mei. Fully convolutional adaptation networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 6810–6818, 2018.

- [48] Zhilu Zhang and Mert Sabuncu. Generalized cross entropy loss for training deep neural networks with noisy labels. In Advances in neural information processing systems (NeurIPS), 2018.

- [49] Hengshuang Zhao, Jianping Shi, Xiaojuan Qi, Xiaogang Wang, and Jiaya Jia. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 2881–2890, 2017.

- [50] Xinge Zhu, Hui Zhou, Ceyuan Yang, Jianping Shi, and Dahua Lin. Penalizing top performers: Conservative loss for semantic segmentation adaptation. In Proceedings of the European Conference on Computer Vision (ECCV), pages 568–583, 2018.

- [51] Yang Zou, Zhiding Yu, BVK Vijaya Kumar, and Jinsong Wang. Unsupervised domain adaptation for semantic segmentation via class-balanced self-training. In Proceedings of the European Conference on Computer Vision (ECCV), pages 297–313. Springer, 2018.