Exploring Complex Dynamical Systems via Nonconvex Optimization

Abstract

Cataloging the complex behaviors of dynamical systems can be challenging, even when they are well-described by a simple mechanistic model. If such a system is of limited analytical tractability, brute force simulation is often the only resort. We present an alternative, optimization-driven approach using tools from machine learning. We apply this approach to a novel, fully-optimizable, reaction-diffusion model which incorporates complex chemical reaction networks (termed “Dense Reaction-Diffusion Network” or “Dense RDN”). This allows us to systematically identify new states and behaviors, including pattern formation, dissipation-maximizing nonequilibrium states, and replication-like dynamical structures.

1 Introduction

Chemical reaction systems driven far from equilibrium can demonstrate striking complexity in both the temporal and spatial variations of the concentration of their constituent chemical species (Figure 1, Appendix B.1, [1]). This complexity can be captured in simple reaction-diffusion (RD) models, and has been appreciated to be relevant for understanding a variety of nonequilibrium phenomena ranging from biological pattern formation [2][3] to the emergence of entropy-producing “dissipative structures” more broadly [4], and has even been speculated to be important for the earliest stages of abiogenesis [5][6][7]. The underlying microscopic physicochemical principles are well understood, and with minimal assumptions simple differential models incorporating these principles agree well with experiment [8][9][10][11].

Despite this mechanistic understanding, a reductionist approach to examining these systems often bears little fruit. Writing down the partial differential equations describing the system does not easily yield a full picture of the allowed behaviors via analytical means, requiring instead a focus on reduced models or specific behaviors [12][13][14][15]. Some analytical approaches may define parameter ranges corresponding to stable or complex dynamics, but cannot in the general case enumerate which complex behaviors and states are attainable [16]. This is further complicated by the fact that many of these systems display chaotic behavior, implying that over time they may visit an infinity of states [17][18][19]. Forward numerical simulation can be carried out with high accuracy, yet for systems of even moderate complexity a brute-force exploration of state and parameter space is at best tedious and at worst prohibitively computationally intensive [3].

One alternative “inverse desig” approach, explored here, is to first choose a specific behavior of interest and then ask whether points in the configuration space of a model can be found which correspond to this behavior. If the behavior of interest can be formulated as an easily evaluable mathematical function of the system state and or dynamics, this allows the exploration of the system’s behavior to be framed as an optimization problem. While this optimization problem is in most cases nonconvex, some tools from modern machine learning (ML) may still be applied. In this way rather than attempting to fully catalog the possible behaviors a chemical reaction system can display, we instead test a hypothesis regarding a particular state or dynamic of interest, side-stepping both the analytical intractability and the prohibitive complexity of brute force search.

The inverse design of RD systems has previously been framed as an optimization problem, albeit without physically realistic chemical models and with applications often aimed at image processing or texture synthesis [20][21][22][23]. Some have designed realistic RD systems, but using heuristics [24]\citesscholes2017three. Other inverse design approaches working with physically realistic RD models avoid optimization and instead aim to produce RD system modules that compartmentalize complexity and allow programmatic design of modular or hierarchical structures [26] or cellular automata-like boolean dynamics [27]. Some methods allow inverse design of CRN dynamics but without a diffusion component or spatial organization [28]. Machine learning approaches have been applied to related realistic models, but in unrelated ways; either as methods to approximate the forward model [29][30][31], or to learn models from real world data [32][33][34]. Here we do not approximate the physical model nor do we use any real world data. Instead we use only the optimization approaches from ML to explore not just steady states but dynamics and thermodynamics of an un-approximated, known, yet flexible, physically realistic forward model.

The approach we present has it’s limitations, not only in the systems and behaviors which can be explored, but also in the conclusions that can be formed from these explorations: The failure of the optimization to converge is an absence of proof that a state or dynamic is possible, not a proof of absence. Still, we aim to demonstrate here that it can complement existing approaches and may find use in investigations not only of driven nonequilibrium chemical reaction systems but in complex dynamical systems more broadly.

2 An Optimization Approach

At a high level, this approach requires that we specify a model which simulates the dynamics of the system of interest, as well as a loss function representing a hypothesis about a dynamical behavior the system may be capable of. The loss is simply a scalar function of the model’s state, dynamics, and parameters which takes on small values if and only if the behavior or state of interest is exhibited. If the model and loss are both differentiable, we can use model construction and optimization approaches from machine learning to minimize the loss and attempt to realize the behavior of interest. Here we focus on a novel ’dense reaction-diffusion network’ model or “Dense RDN”, where an arbitrary number of chemical species interchange via a reaction network while also diffusing in two spatial dimensions. The chemical reactions, their rate constants, the diffusion coefficients, and the initial conditions are all determined by the optimization. The loss function is of the investigator’s choice, and could represent simple behaviors like stability or bi-stability, or more complex behaviors, of which we present several examples in Section 3.

2.1 Preliminaries

More formally, we assume that it is possible to specify a forward model which propagates a state through time, parameterized by . The model should give differentials which can be used in e.g. forward Euler integration:

| (1) | ||||

With a time step size giving a change in state (see Appendix A.1 for details on how we ensure this step size is appropriately small). The initial conditions are generated from a random vector by a model (in this work a neural network) parameterized by . Repeated application of (1) to these initial conditions gives a time evolution . We define for convenience and require that both and be differentiable almost everywhere with respect to both and .

Finally, we assume a scalar loss function can be specified such that as the loss approaches a minimum value the behavior of interest is exhibited in . As a simple example, if we sought to identify steady states we could define . Thus, in general we seek parameters :

| (2) |

Because is fully differentiable, gradient-based optimization techniques such as stochastic gradient descent (SGD) can be applied. If the optimization converges to a sufficiently low value of the loss such that the resulting dynamics meet the investigator’s criteria, then we will have determined initial conditions and transition model parameters which result in a time evolution that exhibits the behavior of interest, thus proving it is within the possible dynamics of the model. In contrast, if the optimization fails to yield such parameters, we cannot conclude that the desired behavior is impossible, only that this procedure failed to parameterize it.

An expectation of success?

At first glance this may seem a doomed endeavor. Our expressed interest is in exploring models with intractably complex, nonlinear dynamics. This makes the relationship between and highly nonlinear and provides no guarantee of convexity in our loss . Nonconvex optimization is difficult (NP-hard), yet we propose to use local optimization methods such as SGD to find . Nonetheless we are encouraged by two observations. First, we do not require that we find a global minimum of the loss, only a ’sufficiently low’ local minimum, such that the resulting structure and/or dynamics meet the investigator’s criteria for the behavior of interest. Second, the entire field of deep learning (DL, a sub-field of machine learning), a field which has seen remarkable success, relies on such optimizations succeeding despite their apparent in-feasibility. The reasons for the empirically observed reliable convergence of such nonconvex optimizations is still an active area of research, and not addressed here. However, one proposed explanation which we conjecture is of relevance here is this: When the parameter space being optimized is of sufficiently high dimensionality, the existence of true local minima with high loss values becomes increasingly unlikely [35]. We do not systematically study the conditions required for convergence. We do however demonstrate that by embedding high-dimensional physicochemical models within yet higher dimensional neural networks and employing optimization approaches used in DL, we are able to achieve convergence with a variety of loss functions. This at least demonstrates empirically that the optimization approach popularized in data driven machine learning can be applied to a data-free, purely forward simulation-driven exploration of complex dynamical models.

2.2 A Dense Reaction-Diffusion Network Model

We chose reaction-diffusion (RD) models as a simple yet accurate model which can display spatiotemporally complex behaviors and which has at least conceptual relevance for physics, chemistry, and biology. Even simple RD systems have been observed experimentally to produce complex and even chaotic behavior which is well described by these models [8][9][10][11]. When driven by the constant influx of reactants, these models represent nonequilibrium systems of interest in thermodynamics and possibly even abiogenesis [5][36]. While much prior work has analyzed hand-designed chemical reaction sets, here we begin with a large, dense network of chemical reactions and allow the appropriate reaction set to be determined during the optimization.

2.2.1 Chemistry: Chemical Reaction Network

In this work the forward model is a reaction-diffusion model. The chemical reactions in this model comprise what we refer to as a “dense” chemical reaction network (CRN), because it contains every possible reaction which matches these three reaction prototypes:

| (3) | ||||

By “reaction prototype” we mean that, while the CRN may contain any number of chemical species , the letters in (3) simply indicate that ,, must be 3 different species, with the specified stoichiometries (See Appendix B). The forward and reverse rates of each reaction are free parameters which, with standard mass action kinetics, yield a net change in state due to chemical reactions . With the reaction types in (3), the resulting dynamics are highly nonlinear, and we can be assured that complex behavior is at least possible (See Appendix B.3), despite much of the kinetic parameter space producing uninteresting behavior (e.g. monotonic relaxation to equilibrium). This reaction network structure also encompasses much of the uni-, bi-, and tri-molecular reactions possible amongst 3 or more chemical species.

While the simultaneous existence of all of these reactions is perhaps not probable in a real-world CRN, note that the optimization can set reaction rates to , and so we are effectively optimizing not only for the rates of reactions but also which reactions to include.

2.2.2 Nonequilibrium: Flow Reactor Drive

We model the system as being within a ’flow reactor’, so it is maintained away from equilibrium by a constant influx of reactants. This influx produces a corresponding outflow, giving a net change in concentration for chemical species due to this drive of [37][1]:

| (4) |

Where again both the per-species feed concentrations and the shared flow rate are determined during the optimization.

2.2.3 Space: Diffusion and Initial Conditions

To allow for spatial organization, the CRN exists within a discretized two dimensional domain such that the concentration of chemical species at position is given by . The initial conditions are generated from random vector by which is instantiated as a neural network, similar to convolutional generator models such as DCGAN [38] (see Appendix A.4 for details).

Each chemical species also undergoes diffusion:

| (5) |

With an optimizable diffusion coefficient . The final combined change in state then is the sum of the contributions from reactions, drive, and diffusion:

| (6) |

Together these terms and their parameters define a space of nonequilibrium physicochemical models which is capable of both mundane and spatiotemporally complex behavior, and is flexible enough to allow the optimization procedure to determine the actual states and dynamics it adopts.

3 Results

All that remains to fully specify an optimization is the loss function encoding a behavior of interest. In effect, this loss function encodes an hypothesis about the possible states and/or dynamics of the model, and we investigate several such hypotheses here.

3.1 Pattern Formation

Pattern formation - emergent structured spatial variation in the concentrations of chemical species - is a hallmark behavior of reaction-diffusion systems [2][39]. Since postulated by Turing in 1952 [40] it has been repeatedly recapitulated experimentally and is understood to be important for biological pattern formation [2][3] and even hypothesized to be relevant for the earliest stages of abiogenesis [5][6][7].

We seek to identify kinetic parameter () regimes which correspond to the ability of the system to support stable patterns of arbitrary structure. As these will be driven nonequilibrium patterns, we choose a fitting arbitrary structure as our ’target’: An Image of Ilya Prigogine (Figure 2a, left) as a tribute to his seminal work on dissipative structures [4][41]. We therefore define a loss term which encourages the concentration distribution of chemical species to match this target, :

| (7) |

Where the expectation is taken both over time and , in practice implemented via random sampling in time and from . Furthermore we aim to identify stable patterns, so we introduce an additional loss term which encourages temporal stability:

| (8) |

This ensures that we are not just optimizing initial conditions which match the target, but also a dynamical model which preserves it over time. Successful convergence to patterns which mimic this target requires many of the optimization heuristics that have become commonplace in deep learning, as well as some task- and model-specific adjustments, detailed in Appendix D.

Nonetheless it is possible, as shown in Figure 2a, where a pattern found in a 4-species CRN shows reasonable fidelity to the target (Average Pearson’s of .88 over the optimized timescale of ), as well as stability over timescales dramatically longer than those employed during the optimization (Figure 2c). In other experiments the target pattern is not as temporally stable, and the optimization has clearly converged to a kinetic regime corresponding to dynamic pattern formation (Figure 2b). We confirm that the optimization of both the initial conditions as well as the reaction network parameters are necessary, by randomizing these and comparing the resulting correlation with the target over time (Figure 2c, see also Appendix D.4).

This therefore confirms that we can de novo identify a combination of initial conditions and reaction network kinetics and topologies (See Appendix B.4) which support the formation of arbitrary patterns purely through optimization.

3.2 Dissipation Maximization

As these are driven nonequilibrium systems it is natural to ask how the behavior of these models varies as the rate of entropy production - the dissipation rate - increases. In a system which is not relaxing towards equilibrium the dissipation rate is the rate at which input drive energy is converted to entropy. The thermodynamics of this Dense RDN system are well defined([37][42], see Appendix C). The entropy production rates are differentiable functions of the time evolution , and so can be used to formulate a loss function, broken down in terms of the diffusion and reaction dissipation rates :

| (9) |

Note that we exponentiate the negative dissipation rates so that this is a minimization problem bounded below by zero; the raw dissipation rates are not bounded and can vary by several orders of magnitude, which can induce numerical instability in the optimization. Finally, we seek states which are stably nonequilibrium, rather than those which are simply undergoing dissipative relaxation to equilibrium, so we introduce a term which encourages solutions for which the dissipation rate is unchanging:

| (10) |

Where the variance is only over time and where the drive flow rate is constrained so that the maximum dissipation rate is finite. Minimization of (10) is dominated by the reaction dissipation rate, which is invariant under permutation of spatial positions and so unsurprisingly does not induce any spatial structure. Still, we can examine the resulting reaction networks as examples of high-entropy generation rate CRNs (see Appendix B.4 for a visualization of the CRN. Note in this example the drive flow rate is fixed to ).

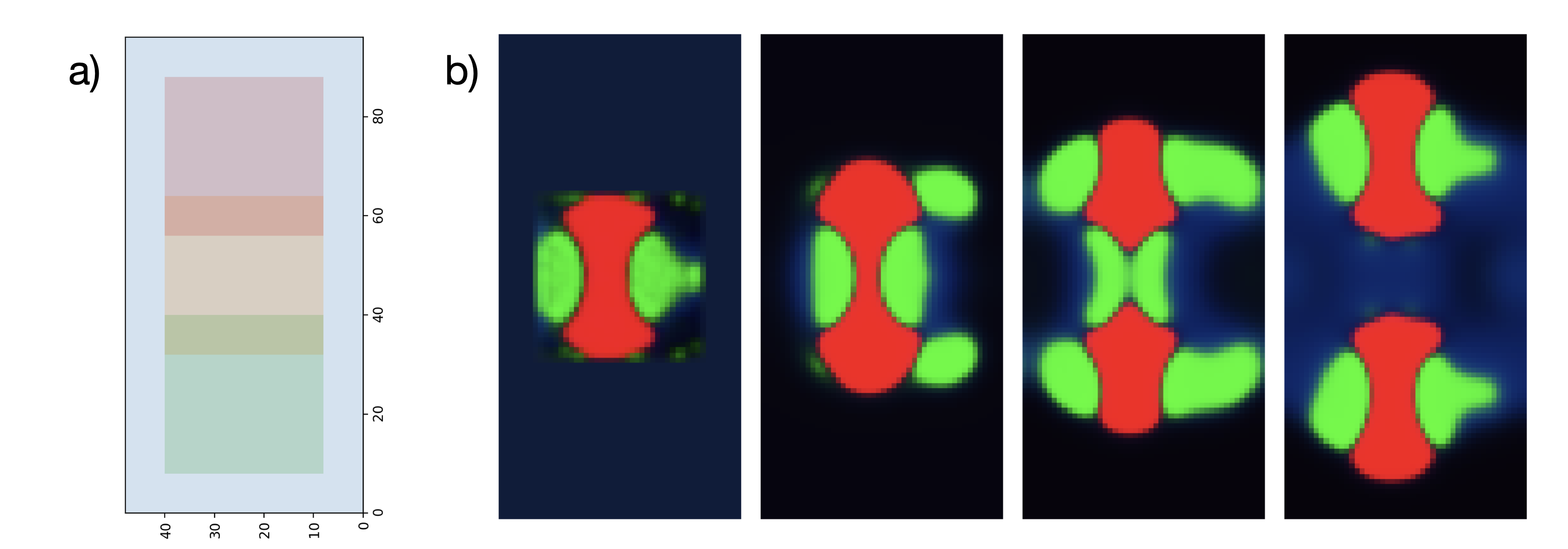

If we instead include only the diffusion dissipation rate term, which requires spatial non-uniformity in concentration to be non-zero, we find interesting structures which stably dissipate drive energy at a rate well above those seen without optimization and over timescales much longer than the 64 seconds used during optimization (Figure 3). It is worth noting that the form of these structures was not in any way pre-specified but rather emerges purely from the interaction between the dissipation-maximization loss function and the physicochemical properties of the model.

3.2.1 Dissipative Distributions

In practice all of the preceding optimizations suffer from ’mode collapse’ in the generative model such that it converges to producing only a single initial condition (meaning that the expectations in (8) (10) are effectively over time only). It may be desirable to instead produce a distribution, not only of but of its time evolution as well. We can enforce this by introducing a decoder model which is optimized to reconstruct the random binary vector used to generate , via an associated loss term:

| (11) |

Where is the cross entropy and is implemented as a convolutional neural network. With a deterministic forward model (11) is trivially satisfiable, so we also introduce a spatiotemporally variable, uniformly distributed noise term which corresponds to stochastic variation in the flow rate:

| (12) |

With (Gaussian low-pass filtered to avoid spatial discontinuities) and indicating the Hadamard product. This serves to ensure some approximate -ball separation between the samples of .

Minimizing a the sum of (10) and (11) yields corresponding to a distribution of states which maintain their uniqueness over time, despite the randomly fluctuating drive (Figure 4). In the example shown the decoder is able to reconstruct the 16-bit vector with 100% accuracy for over 1000 time points, thus implying that this dynamical system is capable of transmitting information through time with a channel capacity proportional to the Shannon entropy of , despite the noisy environment.

3.3 Dynamic Structure

Thus far we have solved for specific states of dynamical systems or for properties of their transitions . It may be interesting instead to optimize directly for properties of the full time evolution . Dissipative structures in reaction-diffusion models have been previously shown to undergo particle-like motion [43][44], and even ‘replication’ of simple spot patterns [1][45][46]. Here we seek structural reorganization and motion without explicitly specifying the dynamical model or any of the states . We do this by optimizing a loss which requires similarity between and two shifted versions of (Figure 5a):

| (13) |

Where indicates a vertical translation of by a distance . There is however a trivial solution to (13) which consists of uniform, time-invariant concentrations of all chemical species. We therefore introduce a loss term which encourages the spatial standard deviation in concentrations at the end of the time series to be close to or above a target value :

| (14) |

Where the variance is over spatial positions . We then minimize the sum of (13) and (14). By requiring this similarity between a central region at the beginning of the time series and two distinct regions of at the end of the time series (Figure 5a) the optimization must converge to not simply translation but something vaguely akin to replication to minimize this loss. This requires optimization over longer timescales, necessitating a modified ‘incremental’ optimization procedure (See Appendix D.3). Nonetheless the optimization converges finally to a combination of a chemical reaction network (Appendix Figure 6b) and initial conditions which roughly matches the desired dynamics (Figure 5b). The replication fidelity is modest, with an average Pearson’s correlation between the ‘parent’ structure at and the two ‘daughter’ structures at of .893.

This is of course not true replication. The two ‘daughter’ patterns on the right of Figure 5b are not capable themselves of dividing, and if they were this loss structure would not handle the inevitable crowding and collisions between their offspring. Nonetheless, this serves as an example of relatively complex dynamical reorganization which emerges completely from the form of the loss and the properties of the physicochemical model.

4 Discussion

We have demonstrated through several examples that if a hypothesis about the possible states and dynamics of a complex system can be appropriately represented mathematically as a loss function, the search through the combinatorially vast space of possible behaviors of the system can be guided by nonconvex optimization.

This approach has a fundamental limitation; failure of the optimization to converge does not constitute falsification of the hypothesis. Rather, this is an absence of proof of the hypothesis, not a proof of absence of the hypothesized behavior.

Still, this approach allowed the optimization-driven identification of diffusion-coupled chemical reaction networks which can stably support predetermined spatial patterns (3.1). While the examples we show here are not emergent but rather dependent on optimized initial conditions, flavors of our approach may nonetheless find use as improvements on previously demonstrated techniques in e.g. micro/nanofabrication [47][48][24][49][26]. Importantly, our approach does not require pre-specification of the specific reactions to include but rather allows the optimization to select them from within an initial dense reaction network.

Rather than optimizing for specific states we can also search for dynamics, and we’ve shown here that we can do this without specifying a priori either the shape or motion (3.3). While the form of the loss we’ve chosen gives “replication-esque” dynamics, in reality the similarity to replication is quite superficial: The replication is low-fidelity and unstable, and likely requires longer timescales, more complex CRNs, losses which specifically encourage stability, or a combination of these, in order to make the analogy to true replication less flimsy. Still, the ability to derive purely from optimization a non-isotropic concentration distribution that can dramatically re-organize in such a manner is at least a step towards demonstrating the feasibility of complex true replicators in a system without compartmentalization but rather only reaction and diffusion.

Perhaps most interestingly, this approach allows us to examine the dissipative structures that emerge when we search for models with extreme thermodynamic properties e.g. maximal entropy production rates (3.2). Here we show only qualitative observations, and note that for emergent spatial structure in this model only the diffusion component of the dissipation rate should be maximized. These structures were found in the presence of a simple, spatiotemporally invariant drive. However it has been hypothesized that the adaptations relevant for the emergence of persistent nonequilibrium phenomena (such as life) are induced while dissipating energy from more complex, difficult-to-exploit drives [50][51]. It is possible that, if combined with appropriate spatiotemporally variable and chemically complex drives, this optimization approach could help shed light on these hypotheses.

Finally, while we focus on a specific reaction-diffusion type model here, the method presented is applicable to any differentiable model and loss function. The intersection of physics, chemistry and biology is littered with systems where reductionism has produced a simple and accurate differential model of a complex system, and yet this model has, as yet, failed to yield a comprehensive understanding of the phenomenon it describes [52]. This knowledge gap is mirrored in an observation made by Turing himself, one of the first to investigate mathematical models of RD systems:

This is the assumption that as soon as a fact is presented to a mind all consequences of that fact spring into the mind simultaneously with it. It is a very useful assumption under many circumstances, but one too easily forgets that it is false [53].

In analogy, when the ’fact’ of the mechanistic model of a complex system is known, and yet existing methods struggle to map the universe of dynamics that are consequences of this fact, we hope that the method described here provides a complementary approach to closing that gap in understanding.

All source code is available at https://github.com/hunterelliott/dense-rdn

References

- [1] John E Pearson “Complex patterns in a simple system” In Science 261.5118 American Association for the Advancement of Science, 1993, pp. 189–192

- [2] Shigeru Kondo and Takashi Miura “Reaction-diffusion model as a framework for understanding biological pattern formation” In science 329.5999 American Association for the Advancement of Science, 2010, pp. 1616–1620

- [3] Amit N Landge, Benjamin M Jordan, Xavier Diego and Patrick Müller “Pattern formation mechanisms of self-organizing reaction-diffusion systems” In Developmental biology 460.1 Elsevier, 2020, pp. 2–11

- [4] Ilya Prigogine and René Lefever “Symmetry breaking instabilities in dissipative systems. II” In The Journal of Chemical Physics 48.4 American Institute of Physics, 1968, pp. 1695–1700

- [5] Ilya Prigogine and Gregoire Nicolis “Biological order, structure and instabilities1” In Quarterly reviews of biophysics 4.2-3 Cambridge University Press, 1971, pp. 107–148

- [6] Péter Szabó, István Scheuring, Tamás Czárán and Eörs Szathmáry “In silico simulations reveal that replicators with limited dispersal evolve towards higher efficiency and fidelity” In Nature 420.6913 Nature Publishing Group, 2002, pp. 340–343

- [7] Paul Adamski et al. “From self-replication to replicator systems en route to de novo life” In Nature Reviews Chemistry 4.8 Nature Publishing Group, 2020, pp. 386–403

- [8] Kyoung J Lee, WD McCormick, Qi Ouyang and Harry L Swinney “Pattern formation by interacting chemical fronts” In Science 261.5118 American Association for the Advancement of Science, 1993, pp. 192–194

- [9] Gavin R Armstrong, Annette F Taylor, Stephen K Scott and Vilmos Gáspár “Modelling wave propagation across a series of gaps” In Physical Chemistry Chemical Physics 6.19 Royal Society of Chemistry, 2004, pp. 4677–4681

- [10] Gerhard Ertl “Oscillatory kinetics and spatio-temporal self-organization in reactions at solid surfaces” In Science 254.5039 American Association for the Advancement of Science, 1991, pp. 1750–1755

- [11] Chad T Hamik and Oliver Steinbock “Excitation waves in reaction-diffusion media with non-monotonic dispersion relations” In New Journal of Physics 5.1 IOP Publishing, 2003, pp. 58

- [12] M Or-Guil, M Bode, CP Schenk and H-G Purwins “Spot bifurcations in three-component reaction-diffusion systems: The onset of propagation” In Physical Review E 57.6 APS, 1998, pp. 6432

- [13] AH Khater, W Malfliet, DK Callebaut and ES Kamel “The tanh method, a simple transformation and exact analytical solutions for nonlinear reaction–diffusion equations” In Chaos, Solitons & Fractals 14.3 Elsevier, 2002, pp. 513–522

- [14] Stephen Smith and Neil Dalchau “Beyond activator-inhibitor networks: the generalised Turing mechanism” In arXiv preprint arXiv:1803.07886, 2018

- [15] Shigeru Kondo “An updated kernel-based Turing model for studying the mechanisms of biological pattern formation” In Journal of Theoretical Biology 414 Elsevier, 2017, pp. 120–127

- [16] Martin Feinberg “Foundations of Chemical Reaction Network Theory” Springer, 2019

- [17] Vitaly Volpert and Sergei Petrovskii “Reaction–diffusion waves in biology” In Physics of life reviews 6.4 Elsevier, 2009, pp. 267–310

- [18] Reuben H Simoyi, Alan Wolf and Harry L Swinney “One-dimensional dynamics in a multicomponent chemical reaction” In Physical Review Letters 49.4 APS, 1982, pp. 245

- [19] Otto E Rössler “Chemical turbulence: chaos in a simple reaction-diffusion system” In Zeitschrift für Naturforschung A 31.10 De Gruyter, 1976, pp. 1168–1172

- [20] Ly Phan and Cindy Grimm “Sketching reaction-diffusion texture” In Proceedings of the Third Eurographics conference on Sketch-Based Interfaces and Modeling, 2006, pp. 107–114

- [21] Ezio Bartocci, Ebru Aydin Gol, Iman Haghighi and Calin Belta “A formal methods approach to pattern recognition and synthesis in reaction diffusion networks” In IEEE Transactions on Control of Network Systems 5.1 IEEE, 2016, pp. 308–320

- [22] Alexander Mordvintsev, Ettore Randazzo and Eyvind Niklasson “Differentiable Programming of Reaction-Diffusion Patterns” In arXiv preprint arXiv:2107.06862, 2021

- [23] Alexander Mordvintsev, Eyvind Niklasson and Ettore Randazzo “Texture Generation with Neural Cellular Automata” In arXiv preprint arXiv:2105.07299, 2021

- [24] Grigory Tikhomirov, Philip Petersen and Lulu Qian “Fractal assembly of micrometre-scale DNA origami arrays with arbitrary patterns” In Nature 552.7683 Nature Publishing Group, 2017, pp. 67–71

- [25] Natalie S Scholes and Mark Isalan “A three-step framework for programming pattern formation” In Current opinion in chemical biology 40 Elsevier, 2017, pp. 1–7

- [26] Dominic Scalise and Rebecca Schulman “Designing modular reaction-diffusion programs for complex pattern formation” In Technology 2.01 World Scientific, 2014, pp. 55–66

- [27] Dominic Scalise and Rebecca Schulman “Emulating cellular automata in chemical reaction–diffusion networks” In Natural Computing 15.2 Springer, 2016, pp. 197–214

- [28] Niall Murphy et al. “Synthesizing and tuning stochastic chemical reaction networks with specified behaviours” In Journal of The Royal Society Interface 15.145 The Royal Society, 2018, pp. 20180283

- [29] Leon O Chua, Martin Hasler, George S Moschytz and Jacques Neirynck “Autonomous cellular neural networks: a unified paradigm for pattern formation and active wave propagation” In IEEE Transactions on Circuits and Systems I: Fundamental theory and applications 42.10 IEEE, 1995, pp. 559–577

- [30] Angran Li, Ruijia Chen, Amir Barati Farimani and Yongjie Jessica Zhang “Reaction diffusion system prediction based on convolutional neural network” In Scientific reports 10.1 Nature Publishing Group, 2020, pp. 1–9

- [31] Jaideep Pathak et al. “Model-free prediction of large spatiotemporally chaotic systems from data: A reservoir computing approach” In Physical review letters 120.2 APS, 2018, pp. 024102

- [32] Jaideep Pathak et al. “Using machine learning to replicate chaotic attractors and calculate Lyapunov exponents from data” In Chaos: An Interdisciplinary Journal of Nonlinear Science 27.12 AIP Publishing LLC, 2017, pp. 121102

- [33] Behzad Zakeri, Amin Karimi Monsefi, Sanaz Samsam and Bahareh Karimi Monsefi “Weakly supervised learning technique for solving partial differential equations; case study of 1-d reaction-diffusion equation” In International Congress on High-Performance Computing and Big Data Analysis, 2019, pp. 367–377 Springer

- [34] Kyongmin Yeo and Igor Melnyk “Deep learning algorithm for data-driven simulation of noisy dynamical system” In Journal of Computational Physics 376 Elsevier, 2019, pp. 1212–1231

- [35] Yann N Dauphin et al. “Identifying and attacking the saddle point problem in high-dimensional non-convex optimization” In Advances in neural information processing systems 27, 2014

- [36] István Scheuring et al. “Spatial models of prebiotic evolution: soup before pizza?” In Origins of life and evolution of the biosphere 33.4 Springer, 2003, pp. 319–355

- [37] Dilip Kondepudi and Ilya Prigogine “Modern thermodynamics: from heat engines to dissipative structures” John Wiley & Sons, 2014

- [38] Alec Radford, Luke Metz and Soumith Chintala “Unsupervised representation learning with deep convolutional generative adversarial networks” In arXiv preprint arXiv:1511.06434, 2015

- [39] Vladimir K Vanag and Irving R Epstein “Pattern formation mechanisms in reaction-diffusion systems” In International Journal of Developmental Biology 53.5-6 UPV/EHU Press, 2009, pp. 673–681

- [40] AM Turing “The Chemical Basis of Morphogenesis” In Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences 237.641, 1952, pp. 37–72

- [41] Ilya Prigogine “Time, structure, and fluctuations” In Science 201.4358 American Association for the Advancement of Science, 1978, pp. 777–785

- [42] Hitoshi Mahara, Tomohiko Yamaguchi and Masatsugu Shimomura “Entropy production in a two-dimensional reversible Gray-Scott system” In Chaos: An Interdisciplinary Journal of Nonlinear Science 15.4 American Institute of Physics, 2005, pp. 047508

- [43] Henry C Tuckwell “Solitons in a reaction-diffusion system” In Science 205.4405 American Association for the Advancement of Science, 1979, pp. 493–495

- [44] Mathias Bode and H-G Purwins “Pattern formation in reaction-diffusion systems-dissipative solitons in physical systems” In Physica D: Nonlinear Phenomena 86.1-2 Elsevier, 1995, pp. 53–63

- [45] Kyoung-Jin Lee, William D McCormick, John E Pearson and Harry L Swinney “Experimental observation of self-replicating spots in a reaction–diffusion system” In Nature 369.6477 Nature Publishing Group, 1994, pp. 215–218

- [46] Nathaniel D Virgo “Thermodynamics and the structure of living systems”, 2011

- [47] Irving R Epstein and Bing Xu “Reaction–diffusion processes at the nano-and microscales” In Nature nanotechnology 11.4 Nature Publishing Group, 2016, pp. 312–319

- [48] Marcin Fialkowski et al. “Principles and implementations of dissipative (dynamic) self-assembly” In The Journal of Physical Chemistry B 110.6 ACS Publications, 2006, pp. 2482–2496

- [49] Gabriele M Coli, Emanuele Boattini, Laura Filion and Marjolein Dijkstra “Inverse design of soft materials via a deep learning–based evolutionary strategy” In Science advances 8.3 American Association for the Advancement of Science, 2022, pp. eabj6731

- [50] Nikolay Perunov, Robert A Marsland and Jeremy L England “Statistical physics of adaptation” In Physical Review X 6.2 APS, 2016, pp. 021036

- [51] Jeremy L England “Dissipative adaptation in driven self-assembly” In Nature nanotechnology 10.11 Nature Publishing Group, 2015, pp. 919–923

- [52] Philip W Anderson “More is different: broken symmetry and the nature of the hierarchical structure of science.” In Science 177.4047 American Association for the Advancement of Science, 1972, pp. 393–396

- [53] A.. Turing “I.—COMPUTING MACHINERY AND INTELLIGENCE” In Mind LIX.236 Oxford Academic, 1950, pp. 433–460

- [54] Lothar Collatz “The numerical treatment of differential equations” In Berlin: Springer, 1966

- [55] Sergey Ioffe and Christian Szegedy “Batch normalization: Accelerating deep network training by reducing internal covariate shift” In International conference on machine learning, 2015, pp. 448–456 PMLR

- [56] P Gray and SK Scott “Autocatalytic reactions in the isothermal, continuous stirred tank reactor: isolas and other forms of multistability” In Chemical Engineering Science 38.1 Elsevier, 1983, pp. 29–43

- [57] David Angeli “A tutorial on Chemical Reaction Networks dynamics” In 2009 European Control Conference (ECC), 2009, pp. 649–657 IEEE

- [58] Hitoshi Mahara and Tomohiko Yamaguchi “Calculation of the entropy balance equation in a non-equilibrium reaction-diffusion system” In Entropy 12.12 Molecular Diversity Preservation International, 2010, pp. 2436–2449

- [59] Diederik P Kingma and Jimmy Ba “Adam: A Method for Stochastic Optimization” In ICLR (Poster), 2015

- [60] François Chollet “Keras”, https://keras.io, 2015

Appendix A Miscellaneous Methods Details

Here we provide further verbal and mathematical description of key aspects of the methods. Additional detail can be found in the source code, available at https://github.com/hunterelliott/dense-rdn.

A.1 Minimizing Numerical Integration Error

With highly nonlinear differential models and a simple Euler integration scheme, we must be careful to ensure that the results of the optimizations are still reflective of the physicochemical model and not simply artifacts of numerical integration error. We minimize the effect of these errors in several ways.

First, we control the step size via penalty terms in the loss. In 3.1 this is a natural part of the loss (Eq. 8) which encourages stable pattern formation, and we set in those experiments. For the remaining experiments we introduce a penalty only for step sizes that exceed a threshold :

| (15) |

Where we used and for all experiments except those in 3.3 which used and . The full loss then is the sum of and the loss described for each experiment.

Additionally, for all results presented we verified that the results were unchanged if we ran forward simulations with the time step decreased by two orders of magnitude (from 1.0 to .01). This decreased time step should dramatically reduce numerical error and so the fact that our results are unchanged indicates they are unlikely to be artifactual.

A.2 Local Concentration Visualizations

When the number of chemical species to be visualized is 3 we first perform a principal component analysis (PCA) projection of the concentration dimensions. We retain only the largest 3 components and map these to the intensities of the red, green and blue channels, respectively, of an RGB image for visualization. In all cases presented here these three components explained 90% of the concentration variance. Note that this approach allows approximate visualization of arbitrarily complex chemical species mixtures, but precludes inclusion of a concentration color scale bar. This is however unimportant, given the fact that both the concentrations and reaction rate constants are determined simultaneously in the optimization; they could both be arbitrarily re-scaled and so we are effectively working in arbitrary concentration units.

A.3 Diffusion Modeling

We used an isotropic discrete approximation of the Laplacian operator [54] to approximate Eq. (5). In all cases diffusion coefficients were constrained to lie between and . The lower bound serves to prevent runaway accumulation of chemical species and spatial discontinuities in concentration. The upper bound was chosen to prevent numerical artifacts (checkerboard patterns, oscillation) given the time step and discrete Laplacian approximation. Using these units for the diffusion coefficients the physical dimension of a single spatial element of (the ‘pixel size’) is , but given that the diffusion coefficients and concentrations are all optimized simultaneously and could be arbitrarily re-scaled, all the units are effectively arbitrary. All spatial domains were pixels except for in 3.3 where the dimensions were .

A.4 Neural Networks

The generator model is a simple architecture consisting of repeated blocks of stride 2 convolution transpose, batch normalization [55] and a activation. The final generated spatial domain is therefore in both width and height, and the number of blocks varies within the presented results accordingly. The output is also filtered with a fixed 2D gaussian kernel ( pixels), to avoid introducing spatial discontinuities and therefore numerical error in diffusion simulations and diffusion entropy rate calculations. Finally, we exclude batch norm from the last layer (as in DCGAN [38]) and instead add only an optimizable scaling that is constrained to prevent negative concentrations and limit the maximum generated concentration to 10.0 (in arbitrary units).

The encoder models used in 3.2.1 use a similar structure, with stride 2 convolutions followed by a activation and batch normalization.

Experimentation was not extensive and constrained by hardware limitations, but in general we saw no obvious improvement in convergence properties or final optimized values from using ReLU activations or higher capacity generator or encoder architectures.

Appendix B Chemical Reaction Networks

B.1 Introductory Example Reaction Network

B.2 Dense Chemical Reaction Networks

The reaction networks used here are “dense” in the sense that they contain all possible reactions matching a particular prototype. That is, if a reaction network contained species then for the first reaction in (3) we would include:

| (16) | ||||

While for the second reaction we would include every possible reversible unimolecular interconversion e.g. , , , , and so on. More formally, we would generate reaction sets matching each of the reaction prototypes given in (3):

| (17) | ||||

Every reaction in these sets is governed by independent forward and reverse reaction rates which are free parameters determined during the optimization. This gives e.g. a total of 40 reactions for a 5-species dense CRN (including forward and reverse), and 24 reactions for a 4 species dense CRN.

To model the dynamics of these chemical reactions we assume standard mass-action kinetics. Using the matrix representations commonly used in chemical reaction network theory, the reaction rate calculations are matrix multiplications [16][57], which are efficiently calculated on the GPUs used for both forward simulation and optimization.

B.3 CRN Motivation

We chose the reaction network described above both for the variety of reactions which comprise it, as well as it’s potential for diverse behavior. This gives the optimization process a broad space of possible reaction sets and dynamics to explore, allowing for the possibility of interesting behavior without pre-specifying it.

The reactions include many if not most stereotypical uni- bi- and tri-molecular reactions. Given sufficient timescale, more complex reaction mechanisms can be approximated with these more elementary reactions. Of more specific interest they include, as a subset, the reactions from well-studied reaction-diffusion models exhibiting complex behavior such as the Gray-Scott reaction system [56] or the Brussellator [4]. It is therefore not surprising that chemical reaction network theory tells us, on the basis of the topology and stoichiometry of these reactions, that complex dynamics are at least possible [16].

B.4 Optimized Reaction Networks

The optimization process effectively chooses a specific reaction network from the many possible networks the model described above can represent. We can visualize this reaction network as a graph, with arrows pointing from reactants to products, and with the style of arrow indicating the ‘strength’ of that reaction: Darker, larger arrows indicate larger reaction rate constants, and the graph is laid out such that strongly reacting species should be closer together (shorter arrows).

This allows us to compare the optimized reaction network from, for example, the dissipation-maximizing loss used in 3.2 (Figure 6a), to that from the replication-like-dynamics-inducing loss used in 3.3 (Figure 6b). It is worth noting that these are just the reaction rate constants, and the net flux through these reaction pathways depends on the state and its time progression.

Appendix C Thermodynamics

The instantaneous entropy production rate of a single volume element due to chemical reactions is given by [37][58]:

| (18) |

Where and are the local forward and reverse reaction rates of reaction in that volume element and and is Boltzmann’s constant, giving units of . In practice we replace with for numerical stability.

The instantaneous local entropy production rate due to diffusion in a volume element is [58]:

| (19) |

Where again is the diffusion coefficient for species and is the concentration of species at position . We add a small numerical stabilizer (0.1) to the denominator of (19) to avoid division by zero.

The total entropy production rate is simply the sum of these two components:

| (20) |

Rather than integrate this rate to produce a net change in entropy we report and maximize the average of the instantaneous rates to avoid explicit dependence on the time and length scale of optimization.

Appendix D Optimization

D.1 Initialization

As is commonly observed in neural network optimization, we found that initialization was important for convergence. Specifically, in our case models initialized with uniform random but low reaction rates ( and similarly low drive flow rates and concentrations failed to converge. Models initialized to more highly dissipative regimes - with higher reaction rates and higher drive flow rates - converged more reliably. Exhaustive exploration of initialization dependence is prohibitively computationally intensive, so we instead mimicked the kinetic parameters used in [1], generalizing it to our dense CRNs: One autocatalytic reaction per chemical species was initialized with a rate constant of 1. All other reaction rates were set to with as this was found to be low enough to prevent large and the associated numerical inaccuracies and/or runaway reaction rates. The small uniform random noise breaks symmetry in the reaction rates.

Feed concentrations for the flow-reactor drive were initialized to where is the number of autocatalytic reactions producing species . This scaling was found to produce a highly dissipative yet somewhat kinetically and numerically stable initial state.

Diffusion coefficients were initialized from , uniformly within the numerically stable regime.

D.2 Heuristics and Hyperparameters

| Figure | lr | lr decrease by at | lr patience | es patience | ADAM | |

|---|---|---|---|---|---|---|

| 2a | 32 | .95 | ||||

| 2b | 64 | .95 | ||||

| 3a | 64 | - | .995 | |||

| 3b | 64 | - | .995 | |||

| 4 | 128 | .995 |

We used the ADAM optimizer [59], with learning rates and moving averages as given in Table 1 ( of .999 was used in all experiments). With these highly nonlinear dynamical models we found that, especially for optimizations with longer timescales, gradient clipping was essential for stability of convergence. We used gradient norm clipping at 0.5 as implemented in Keras [60]. Learning rates were automatically decreased and optimization was automatically terminated via Keras’ callbacks with patience parameters as given in Table 1. In some experiments we used RMSD instead of the L2 norm given in Eq. 7 to simplify changing the size of the spatial domain without altering the magnitude of the loss, and found this gave similar results to the L2 norm as expected.

Calculating gradients with these models requires backpropagation through the entire time series , introducing significant memory overhead. For this reason, and because in most cases we are optimizing for a single , we set the batch size to 1 except for in Sect. 3.2.1, where we used 2. For experiments where dissipation losses were included, was set to 1.0 except for in 3.2.1 where it was 0.04. During optimization the reaction rate constants and feed concentrations were constrained to be non-negative, and the flow rate was constrained to between .01 and 1.0. Diffusion coefficients were constrained to within their initialization range (A.3).

D.3 Incremental Optimization

| Optimization | lr | lr decrease by at | ||

|---|---|---|---|---|

| 1 | 256 | 1.0 | ||

| 2 | 320 | 1.0 | ||

| 3 | 352 | 0.5 |

For the experiments shown in 3.3 we must optimize over timescales long enough for the full replication-like dynamics to occur. Optimization from a random initialization above time points (equivalent to 250 seconds) was highly unstable, in large part due to an inability to balance vanishing and exploding gradients in the highly nonlinear CRN. Convergence was finally achieved via an “incremental” optimization approach, where we first optimize at shorter timescales and then use the parameters from shorter timescales to initialize optimization at longer timescales. Additionally, at the longest timescales the variance target seemed to be at odds with the replication fidelity loss (Eq. 13), and decreasing this value gave better parent-daughter correlations at convergence. It is worth noting that the large number of iterations required resulted in long optimization; the final result presented here required weight updates in total for a wall clock time of more than 26 days. The hyperparameters used for each optimization that produced Figure 5 are given in Table 2. The ADAM optimizer was set to .95 for all optimizations.

D.4 Control Analyses

We performed additional analyses to test the necessity and sufficiency of the optimization of each component of the models. These computational “ablation experiments” correspond to several conditions:

-

•

“Optimized” - All components optimized.

-

•

“Random ” - Optimized initial conditions replaced with normally distributed random noise with the same mean and variance as the optimized initial conditions (truncated to prevent negative concentrations).

-

•

“Initial ” - Optimized reaction network parameters and diffusion coefficients replaced with their values from the start of optimization (as described in D.1).

-

•

“Random ” - Optimized reaction network parameters and diffusion coefficients replaced with normally distributed random noise with the same mean and variance as the optimized parameters (truncated to prevent negative reaction rates or diffusion coefficients outside the numerically stable range).

The results of these experiments are shown in Figure 2c and Figure 3c with some additional shown here and described below. These results confirm that all optimized components are required for persistent correlation with the target and for persistently high dissipation rates.

In Figure 7a we show the control experiments as in the main text but for the second example (from Figure 2b). We then repeat the same analyses for both examples but with the time step now two orders of magnitude shorter, to further reinforce that the stability and fidelity is not a result of the optimization exploiting numerical error (Figure 7b-c).

In Figure 8a we show the same result as the main text but for the second example (from Figure 3b). We also run both examples at a longer timescale to demonstrate that the optimized states are stably dissipative and produce entropy at consistently higher rates than those with non-optimized components (Figure 8b-c). Note that in this case the models with mean and standard deviation-matched random kinetic parameters (“Random ”, shown in red) are highly unstable and display significant numerical integration error (as evidenced by their behavior changing with a smaller time step). This further reinforces both the necessity and effectiveness of the numerical error avoidance methods described in Section A.1.