Exploration of Class Center for Fine-Grained Visual Classification

Abstract

Different from large-scale classification tasks, fine-grained visual classification is a challenging task due to two critical problems: 1) evident intra-class variances and subtle inter-class differences, and 2) overfitting owing to fewer training samples in datasets. Most existing methods extract key features to reduce intra-class variances, but pay no attention to subtle inter-class differences in fine-grained visual classification. To address this issue, we propose a loss function named exploration of class center, which consists of a multiple class-center constraint and a class-center label generation. This loss function fully utilizes the information of the class center from the perspective of features and labels. From the feature perspective, the multiple class-center constraint pulls samples closer to the target class center, and pushes samples away from the most similar nontarget class center. Thus, the constraint reduces intra-class variances and enlarges inter-class differences. From the label perspective, the class-center label generation utilizes class-center distributions to generate soft labels to alleviate overfitting. Our method can be easily integrated with existing fine-grained visual classification approaches as a loss function, to further boost excellent performance with only slight training costs. Extensive experiments are conducted to demonstrate consistent improvements achieved by our method on four widely-used fine-grained visual classification datasets. In particular, our method achieves state-of-the-art performance on the FGVC-Aircraft and CUB-200-2011 datasets.

Index Terms:

Fine-grained visual classification, Exploration of class center, Class center, Soft label1 Introduction

As an extension of generic image classification (e.g. ImageNet classification [1]), fine-grained visual classification (FGVC) aims to recognize different subcategories belonging to a basic-level category (e.g., birds, cars, and aircraft). In FGVC tasks, samples from the same class show evident differences in posture of objects, lighting and backgrounds. Moreover, because of their similar appearances, samples from different classes are easily confused. Thus, FGVC exhibits obvious intra-class variances and subtle inter-class differences. Moreover, there are fewer training samples in each category in datasets, which leads to overfitting when large-scale deep neural networks are trained. Therefore, FGVC is a challenging task.

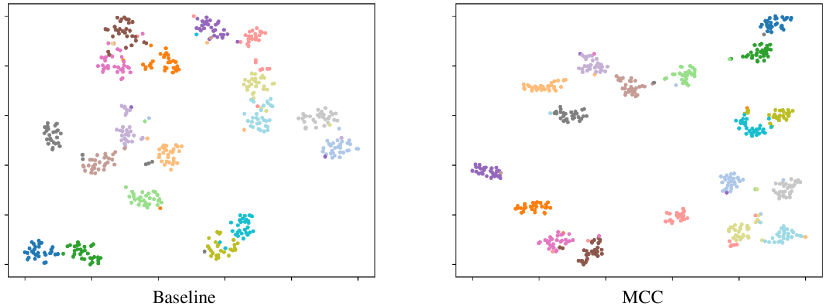

Recent FGVC methods design complex networks to focus on object areas to ignore cluttered backgrounds[2, 3, 4, 5] or extract the features of parts to reduce the impact of posture[6, 7, 8, 9, 10, 11]. Thus, these FGVC methods significantly reduce intra-class variances. However, most of these methods rely only on cross entropy loss to obtain classification boundaries, which is insufficient to handle inter-class differences. In addition, some common visual classification methods introduce class center as representations of whole classes, and reduce the distances between samples and class centers to reduce intra-class variances[12, 13, 14, 15]. In addition, Zhang et al. proposed a feature aggregation scheme to resist intra-class variances[16]. However, these methods do not consider inter-class differences in the FGVC, which limits further improvement in model performance. For example, in Fig.1 (a), there are no clear classification boundaries between some closer class clusters that are masked with boxes. The same issue occurs in the t-SNE results of center loss[12] in Fig.1 (c). The class clusters masked by the blue box are even closer than those in Fig.1 (a). Thus, we should further consider inter-class differences in FGVC.

To simultaneously handle inter-class differences and intra-class variances, some common visual classification methods utilize contrastive learning to constrain the feature distances of positive and negative sample pairs[17, 18, 19, 20, 21]. However, these methods are not suitable for FGVC for two reasons. First, the optimization direction of each positive or negative pair is not consistent, which limit improvement of the FGVC. For example, consider three samples, A1, A2 and A3 which belong to class A, and a sample B1 that belongs to class B. Contrastive learning attempts to optimize the distance between a positive sample pair (A1 and A2) and the distance between a negative sample pair (A3 and B1). A1 and A2 are pulled closer, and A3 is pushed away from B1. However, the distance between A1 and A3 may be greater, and A1 and B1 may be closer. In this case, the optimization directions of A1 and A3 are inconsistent. This method can neither guarantee that A1, A2 and A3 are clustered together nor ensure that the distance between A1 and B1 is larger. If we consider only optimization between samples, optimizing one sample pair may have a negative effect on other samples. As shown by the t-SNE results on 18 categories of warblers in Fig.1 (b), the improvement in the contrastive loss is limited. Class clusters do not pull samples from the same class into compact clusters (such as class clusters masked by blue and black boxes), and distances between some class clusters are not significantly widened (such as class clusters masked by blue and red boxes). Second, in fine-grained image classification, one category is usually very similar to one or two other categories. Therefore only the differences between these similar categories need to be expanded. Because of the absence of prior knowledge concerning inter-class similarity, the above contrastive learning methods treat different nontarget classes equally. Therefore, these methods do not effectively expand the differences between similar categories and are not suitable for handling inter-class differences in the FGVC. How to compress intra-class variances while effectively expanding the distance between similar classes is a problem that needs to be solved.

In addition, there is also the issue of overfitting in FGVC. Soft labels are considered an effective way to address overfitting[22]. Label smoothing (LS) uniformly reassigns partial confidence of the target class to the nontarget categories to generate soft labels [23]. Nevertheless, uniform confidence in nontarget categories ignores rich correlations between fine-grained categories [13, 24]. Compared with other nontarget classes, more similar nontarget classes should be assigned more confidence. For instance, Fig.2 displays some images of birds in (a) and (b) shows samples from the classes that are similar to (a). The soft labels of LS in Fig.2 (c) do not reflect the similarity between (a) and (b). In the soft labels of (a), classes of (b) should be assigned more confidence than other nontarget classes. Label refinery (LR) and guided label refinery (GLR) use the predictions of the trained model as soft labels to reflect similarity[25, 26]. However, because similar categories are difficult to distinguish, model predictions are likely inaccurate and may generate incorrect soft labels. As shown in Fig.2 (d), the trained model incorrectly predicts the images in (a) as classes in (b). Thus, the problem of how to generate reliable and reasonable soft labels remains.

Motivated by the above issue, we propose a simple but effective method named exploration of class center (ECC), which fully mines the information of class center from the perspectives of features and labels. Our ECC consists of 1) a multiple class-center constraint (MCC) and 2) a class-center label generation (CLG). From the feature perspective, MCC constructs class-center features to provide overall representations of the classes. The feature distance between the sample and target class center is constrained to compress intra-class variances. Then the cosine similarity between class-center features is calculated as the similarity between classes. According to the similarity, the most similar nontarget class can be searched, and inter-class differences are enlarged by constraining the feature distance between the sample feature and class-center feature of the most similar nontarget class. By constraining the distances between samples and class centers (the target class and the most similar nontarget class center), we can pull samples close to the target class centers, and push samples away from the most similar nontarget class centers. Thus, we can guarantee consistent sample optimization directions. We can also specifically address the differences between samples and the most similar nontarget categories with similarity between class center features. Fig.1 (d) shows that compared with other methods, our method results in class clusters that are tightly gathered and larger distances between different class clusters. From the label perspective, the CLG employs class-center distributions to generate reliable soft labels, as shown in Fig.2 (e), to alleviate overfitting and further introduce correlations between classes. Finally, ECC and cross entropy (CE) loss are combined to optimize the model. In addition , different from existing methods based on class center, a novel strategy for updating class center is proposed to update class center more stably. Our method addresses FGVC tasks without complex structures or training strategies, thus, allowing our approach to be easily integrated into other FGVC methods to further boost performance. Extensive experiments are conducted on FGVC-Aircrafts (AIR) [27], CUB-200-2011 (CUB) [28], Stanford Cars (CAR) [29] and NABirds (NAB) [30]. The results prove effectiveness of our proposed approach.

2 Related Work

2.1 Fine-grained Image Classification

Benefiting from the development of deep learning [31, 32], there has been significant progress in existing FGVC research in recent years [33, 34, 35]. FGVC methods can be divided into two categories based on whether they employ extra manual annotations: strongly supervised methods and weakly supervised methods. Strongly supervised methods require manually labelled bounding boxes or part annotations, with which informative key parts can be located for extracting discriminative part features [36, 37, 38, 39, 40]. Finally, key part features and object features are integrated for classification. However, these additional manual annotations require extensive expert knowledge. There is limited feasibility and scalability in real-world applications. Therefore, weakly supervised methods without manual annotation have attracted much attention from researchers [34, 35, 41, 42, 43]. M2DRL[44] learns multigranular discriminative region attention and multiscale region-based feature representation for more accurate object region positioning and category recognition. DME-Net[34] introduces a multitasking framework for the low-resolution fine-grained image recognition task, that aims to capture reliable object descriptions from macro- and microperspectives, respectively. SIM-Trans[45] incorporates object structure information into transformer to enhance discriminative representation learning to contain both appearance information and structure information. SIA-Net[35] extracts the low-level image details under the guidance of accurate semantics and makes the details spatially correspond to high-level semantics with complementary content. AA-Trans[11] acquires discriminative parts of the image precisely to better capture local fine-grained information. MA-CNN[6] locates part localization with proposed channel grouping layers in a weakly supervised manner. Then, part-based features and object-based representations are integrated to produce the final classification. MAMC [10] and Cross-X [9] obtain specific part features directly through attention mechanisms with an end-to-end network. In addition, PMG [46], CA-PMG [47] and DCL [48] force models learn specific features from jigsaw patches. Zhang et al.[41] leverages a small and clean meta-set to provide reliable prior knowledge for tackling noisy web images for webly-supervised FGVC. MetaIRNet[42] combines generated images with original images to generate hybrid training images to improve the performance of one-shot FGVC. The above weakly supervised methods employ complicated structures and complex training strategies to extract key part features to reduce intra-class variances. However, there is no efficient way to handle inter-class differences in FGVC.

2.2 Class Center

The concept of class center is introduced to represent the whole class by center loss[12, 49], in which Euclidean distances between sample features and class centers are minimized to enhance the discriminative features in neural networks. Furthermore, Farzaneh et al.[14] argued that not all elements in a class-center feature are relevant to discrimination, and further proposes sparse center loss. Specifically, the sparse center loss is calculated by multiplying the Euclidean distance in the center loss by the weights from an attention network. Li et al. also referred to the idea of class center and proposed single center loss (SCL)[15]. SCL aggregates representations of natural samples around the center point and increases the distance from manipulated samples to the center point, making it greater than from natural samples by a margin. CSDL[13] combines class centers and one-hot labels to generate soft labels. To measure the importance of samples in the same cluster, AdaMG[50] calculates the distances between these samples and their corresponding class centers. Some few-shot methods represent the class as a whole with prototype [51, 52, 53], which is similar to the class center. However, considering intra-class variances, these methods with class center do not address the inter-class differences of FGVC, and do not fully explore for the ability of class centers.

2.3 Soft Labels

One-hot labels are eligible for coarse-grained visual classification because of significant visual differences between coarse categories[13]. However for FGVC, models with hard labels pay attention to irrelevant features (e.g., background) or sample-specific noise to achieve high prediction confidence from these ”hard” labels. LS[23] reduces prediction confidence by uniformly redistributing partial probabilities to nontarget classes to produce smoothed soft labels. Local distributional smoothness (LDS)[54] proposes the local distributional smoothness of model outputs as a regularization term when inputs are perturbed. LR[25] and GLR[26] consider the rich inter-class correlations of FGVC, and optimize models with instance-level soft labels generated from a trained teacher network. However, the soft labels in these methods may be incorrect.

3 Exploration of Class Center

In this section, we present the proposed ECC in detail. Our method handles problems of FGVC from the perspectives of features and labels. It includes: 1) an MCC which handles evident intra-class variances and subtle inter-class differences in the feature space, and 2) a CLG which addresses overfitting of models with reliable and reasonable soft labels. The framework of our ECC is shown in Fig.3.

3.1 Multiple Class-Center Constraint

MCC optimizes feature distances between sample features and multiple class-center features, and aims to handle intra-class variances and inter-class differences. Given that the - image belongs to the - class, a basic neural network is utilized as a backbone to extract the -dim feature .

First, class-center features are initialized and updated as the representation of the - whole class. In most existing loss functions based on class-center [12, 14], class-center features are set as learnable parameters, and are updated backpropagation. Because of the small number of samples in each class of FGVC, it is difficult to stably learn robust class-center features. To address this issue, the MCC updates the class-center feature by averaging all the input sample features of the - class in the training phase. Specifically, we first restore the sum of previously inputted sample features from the current class-center feature of the - class. For this purpose, a counter is maintained to record the number of sample features used for updating . The sum of the previously input sample features of the - class can be obtained by multiplying the current counter by . Then, a new sample feature is added to the sum to update as . The updating of and is formulated as follows:

| (1) | ||||

| (2) |

As the average of features of all samples in the - class, does not need to be updated as learnable parameters in the training phase. Thus the updating is quite stable. The stability and effectiveness of our strategy are demonstrated in ablation studies.

Then we constrain the intra-class variances and inter-class differences with class-center features. To constrain intra-class variances, we reduce the feature distance between sample feature and the corresponding class-center feature directly. However, constraining only intra-class variances is not enough for FGVC. Subtle inter-class differences still cause confusion. Thus, for inter-class differences, we enlarge feature distance between and , which is the most similar class-center feature to . is obtained according to the similarity of class-center features. Specifically, we first construct a cosine similarity matrix , in which the similarity between the - class and the - class is calculated as

| (3) |

denotes the number of classes. With the cosine similarity matrix , the most similar class to the - class is chosen by searching for the maximum value of the - row in :

| (4) |

where is considered the index of the most similar class to the - class.

In practice, the cosine distance is utilized to represent the feature distance. Normally, should be minimized in the trained phase, while should be maximized. In order to integrate these two distances into a loss, cosine distance is replaced with the cosine similarity . Moreover, is multiplied by similarity as a weight to adaptively handle confusing classes. For a mini-batch, the MCC loss function is expressed as

| (5) |

where denotes the number of samples in a mini-batch, is the cosine similarity, and is the index of the sample in the mini-batch.

3.2 Class-Center Label Generation

In this section, CLG is proposed to generate proper soft labels to alleviate overfitting and introduce relationships among classes. In our method, soft labels are generated from class-center distributions. Like class-center features, the class-center distribution of the - class is generated by averaging distributions of all input samples in the - class during training phase. The updating strategy of the CLG can be expressed as follows:

| (6) |

where represents the last fully connected layer, which outputs a -dim vector. Then, the sample probability distribution and the class-center probability distribution are calculated with the activation function.

| (7) | ||||

| (8) |

Consequently, the class-center probability distribution is regarded as the soft label of . The nontarget categories that are more similar to the target category have higher confidence in the soft labels. This nonuniform confidence is more reasonable and realistic.

For a mini-batch, KL divergence is computed between sample probability distributions and soft labels as the CLG loss:

| (9) |

and denote the - elements in and , respectively. With models supervised by soft labels of CLG, overfitting is effectively alleviated. Moreover, rich information among categories is considered to further improve the ability to model objects.

3.3 Exploration of Class Center

Finally, the MCC and CLG are integrated as the ECC, and the two components are multiplied by the hyperparameters and , respectively, to adjust the effects for model training. The entire ECC is formulated as,

| (10) |

In addition, the CE loss is combined with our ECC. The final loss function is expressed as follows:

| (11) |

where denotes the - element in the one-hot label of , and is the - element in the sample distribution .

4 Experiments

4.1 Implementation details

Four widely-used fine-grained datasets are utilized in our experiments, including the AIR[27], CUB[28], CAR[29] and NAB[30] datasets. The details of datasets are shown in Table.I. In addition, we conduct the experiments on a large-scale dataset iNaturalist 2018 (iNat2018)[55]. The experimental results of iNat2018 are displayed and discussed in the supplementary material.

| Dataset | Object | Classes | Train images | Test images |

|---|---|---|---|---|

| AIR[27] | Aircraft | 100 | 6667 | 3333 |

| CUB[28] | Bird | 200 | 5994 | 5794 |

| CAR[29] | Car | 196 | 8144 | 8041 |

| NAB[30] | Bird | 555 | 23,929 | 24,633 |

ResNet50[31] is used as backbone in our experiments unless otherwise stated. For data augmentation, the images are resized to 600×600. Random cropping and center cropping are utilized to crop image to 448×448 during the training and test phases respectively. In addition, we apply random horizontal flipping in training. Stochastic Gradient Descent (SGD) is utilized with a momentum of 0.9. The initial learning rate is 0.01, which decays every 15 epochs at a decay rate of 0.1. The initial learning rate is multiplied by 0.1 for the pretrained backbone on the CUB dataset. The batch size and epochs are set as 32 and 50, respectively. Class-center features and class-center distributions are randomly initialized before training. For the center loss, PC loss [56] and LS, we choose the optimal hyperparameters among the settings from original papers and our experimental best values. Any extra annotations or extra training data are not used and all backbone models are pretrained on the ImageNet dataset.

In ablation studies, same training hyperparameters (including batch size, learning rate and so on) are utilized. And Resnet50 is used as the backbone for all ablation experiments.

4.2 Integration with existing FGVC methods and different backbones

First, to verify the effectiveness of our method, we test it on different backbones including InceptionV3[23], ResNet50, ResNet101[31] and DenseNet121 [57]. According to Table.II, our ECC brings satisfactory improvements on four datasets (0.8%1.9% on AIR, 0.6%3.4% on CUB, 1.0%1.6% on CAR and 1.2%3% on NAB). Compared with the improvements on InceptionV3 (0.8% on AIR, 0.6% on CUB, 1.2% on CAR, 1.2% on NAB), ResNet50, DenseNet121 and ResNet101 have better feature extraction capabilities for constructing better class centers. Thus, there are more improvements on those models (average boost of 1.83% on AIR, 2.8% on CUB, 1.4% on CAR and 2.9% on NAB).

We also show the results of integration with existing FGVC methods, including DCL[48], MGE-CNN[4], WSDAN[2], Swin transformer [58] and CAL[59] in Table.III. Compared with baselines, integrated methods obtain obvious and consistent improvements on these four datasets.

| Model | Loss | AIR | CUB | CAR | NAB |

|---|---|---|---|---|---|

| InceptionV3 | CE loss | 90.7 | 83.9 | 92.8 | 83.3 |

| InceptionV3-ECC | ECC loss | 91.5 | 84.5 | 94.0 | 84.5 |

| ResNet50 | CE loss | 91.1 | 84.7 | 93.1 | 83.4 |

| ResNet50-ECC | ECC loss | 93.0 | 87.3 | 94.7 | 85.5 |

| DenseNet121 | CE loss | 91.6 | 84.6 | 93.0 | 83.8 |

| DenseNet121-ECC | ECC loss | 93.4 | 88.0 | 94.6 | 86.2 |

| ResNet101 | CE loss | 91.5 | 85.6 | 93.7 | 83.5 |

| ResNet101-ECC | ECC loss | 93.3 | 88.0 | 94.7 | 86.5 |

| Method | Backbone | AIR | CUB | CAR | NAB |

|---|---|---|---|---|---|

| DCL[48] | ResNet50 | 93.0 | 87.8 | 94.5 | 86.0 |

| DCL-ECC | ResNet50 | 93.7 | 88.8 | 95.0 | 87.2 |

| MGE-CNN[4] | ResNet50 | - | 88.5 | 93.9 | 86.7 |

| MGE-CNN-ECC | ResNet50 | 93.8 | 88.8 | 94.8 | 86.9 |

| WSDAN[2] | InceptionV3 | 93.0 | 89.4 | 94.5 | 87.9 |

| WSDAN-ECC | InceptionV3 | 94.0 | 89.7 | 94.8 | 88.7 |

| Swin[58] | Swin-base | 92.2 | 91.0 | 94.5 | 90.7 |

| Swin-ECC | Swin-base | 92.8 | 92.3 | 94.7 | 91.4 |

| CAL[59] | ResNet101 | 94.2 | 90.6 | 95.5 | 91.0 |

| CAL-ECC | ResNet101 | 95.2 | 91.0 | 95.9 | 91.3 |

| Backbone | Dataset | Baseline | Ct loss | SC loss | PC loss | LS | Ctt loss | Tlt loss | Ours |

|---|---|---|---|---|---|---|---|---|---|

| InceptionV3 | AIR | 90.7 | 90.8 | 90.5 | 90.7 | 90.7 | 91.3 | 91.3 | 91.5 |

| CUB | 83.9 | 84.2 | 82.1 | 84.7 | 83.6 | 85.1 | 85.1 | 84.5 | |

| CAR | 92.8 | 92.6 | 92.9 | 93.0 | 92.8 | 93.2 | 93.5 | 94.0 | |

| NAB | 83.3 | 83.4 | 83.4 | 83.9 | 83.8 | 83.5 | 84.1 | 84.5 | |

| ResNet50 | AIR | 91.1 | 91.5 | 91.5 | 91.6 | 92.1 | 91.8 | 92.1 | 93.0 |

| CUB | 84.7 | 84.9 | 86.2 | 85.5 | 85.5 | 86.3 | 86.5 | 87.3 | |

| CAR | 93.1 | 93.2 | 93.0 | 93.8 | 94.1 | 93.2 | 93.9 | 94.7 | |

| NAB | 83.4 | 83.4 | 84.5 | 84.2 | 85.3 | 83.6 | 84.3 | 85.5 | |

| DenseNet121 | AIR | 91.6 | 91.8 | 91.6 | 91.6 | 92.2 | 91.8 | 92.5 | 93.4 |

| CUB | 84.6 | 84.5 | 86.4 | 86.0 | 85.3 | 86.5 | 86.3 | 88.0 | |

| CAR | 93.0 | 92.8 | 93.0 | 93.3 | 93.7 | 93.4 | 94.3 | 94.6 | |

| NAB | 83.8 | 84.0 | 85.4 | 84.7 | 85.3 | 84.3 | 85.0 | 86.2 | |

| ResNet101 | AIR | 91.5 | 91.9 | 91.8 | 91.9 | 92.3 | 92.1 | 92.5 | 93.3 |

| CUB | 85.6 | 85.7 | 85.8 | 86.4 | 86.5 | 87.0 | 87.0 | 88.0 | |

| CAR | 93.7 | 93.6 | 93.8 | 94.0 | 94.2 | 93.4 | 94.3 | 94.7 | |

| NAB | 83.5 | 84.6 | 85.2 | 85.5 | 86.4 | 84.8 | 85.9 | 86.5 |

4.3 Comparison with different loss functions

In this section, we compare our approach with different loss functions, including Center loss (Ct loss)[12], Single Center loss (SC loss)[15], Pairwise Confusion loss (PC loss)[56], Label Smoothing (LS)[23], Contrastive loss (Ctt loss)[18] and Triplet loss (Tlt loss)[19] on four datasets. For fairness, experiments are conducted with different hyperparameters (including recommended hyperparameters from original papers and our experimental best values) on ResNet50 to choose the optimal results for comparison with our methods. Moreover, ResNet50 is replaced with different backbones (Inceptionv3, DenseNet121, ResNet101) to further demonstrate the superiority of our method. Results are shown in Table.IV. CE loss is regarded as baseline, which have achieved acceptable results. Existing methods based on class center (Ct loss, SC loss) bring few improvement, due to the unstable updating strategy of class centers and the lack of constraints for inter-class differences. Contrastive loss and Triplet loss (Ctt loss and Tlt loss) achieve better performances than methods based on class center. Compared with the above methods, our method effectively constrains intra-class variances and inter-class differences and achieves optimal overall performance.

An exception is InceptionV3 on CUB dataset, where Ctt loss and Tlt loss are superior to our MCC (85.1% vs 84.5%). In fact, in our method, the quality of the class-center features and class-center distributions depends on the quality of the sample features and sample distributions extracted from the backbones. Compared with other backbones (ResNet50, ResNet101 and DenseNet121), the features and distributions from InceptionV3 are not good enough, which leads to limited improvement. In practice, compared with InceptionV3, ResNet and DenseNet have more extensive applications, and most FGVC methods use ResNet and DenseNet as backbones. With these backbones, our method has more obvious advantages. Therefore, our MCC loss has greater application value in FGVC.

4.4 Comparison with SoTA methods

| Method | AIR | CUB | CAR | NAB |

|---|---|---|---|---|

| B-CNN[60] | 86.9 | 84.0 | 90.6 | - |

| MA-CNN[6] | 89.9 | 86.5 | 92.8 | - |

| M2DRL[44] | - | 87.2 | 93.3 | - |

| NTS-Net[43] | 91.4 | 87.5 | 93.9 | - |

| Cross-X[9] | 92.6 | 87.7 | 94.6 | 86.2 |

| MGE-CNN[4] | - | 88.5 | 93.9 | 86.7 |

| ELP[61] | 92.7 | 88.8 | 94.2 | - |

| DCL[48] | 93.0 | 87.8 | 94.5 | 86.0 |

| WSDAN[2] | 93.0 | 89.4 | 94.5 | 87.9 |

| SFFF[5] | 93.1 | 85.4 | 94.4 | - |

| API-Net[62] | 93.4 | 88.6 | 94.9 | 86.2 |

| PMG[46] | 93.4 | 89.6 | 95.1 | - |

| CDSL-DCL[13] | 93.5 | 88.6 | 94.9 | - |

| CAL[59] | 94.2 | 90.6 | 95.5 | 91.0 |

| SIA-Net[35] | 94.3 | 90.7 | 95.5 | - |

| ALIGN[63] | - | - | 96.1 | - |

| ViT[32] | - | 90.3 | 93.7 | 89.9 |

| AA-Trans[11] | - | 91.4 | - | 90.2 |

| TransFG[17] | - | 91.7 | 94.8 | 90.8 |

| Swin[58] | 92.2 | 91.0 | 94.5 | 90.7 |

| CAMF[64] | 93.3 | 91.2 | 95.3 | - |

| SIM-Trans[45] | - | 91.8 | - | - |

| Dual-TR[49] | - | 92.0 | - | 91.3 |

| DCL-ECC | 93.7 | 88.8 | 95.0 | 87.2 |

| MGE-CNN-ECC | 93.8 | 88.8 | 94.8 | 86.9 |

| WSDAN-ECC | 94.0 | 89.7 | 94.8 | 88.7 |

| CAL-ECC | 95.2 | 91.0 | 95.9 | 91.3 |

| Swin-ECC | 92.8 | 92.3 | 94.7 | 91.4 |

In this section, integrated models are compared with existing state-of-the-art (SoTA) approaches. Extensive experiments are conducted to verify the effectiveness of our ECC. Table.V shows the performances. Compared with other methods, our ECC (Swin-ECC on the CUB dataset, CAL-ECC on the AIR and CAR datasets, and Swin-ECC on the NAB dataset) achieves excellent performances, with SoTA on the CUB and AIR datasets. Although ALIGN outperforms our method on CAR dataset, ALIGN is pretrained on a large-scale noisy image-text dataset (LSNITD)[63], which includes 1.8B image-text pairs. ImageNet-1k and ImageNet-21k are utilized in our integrated models. The size of LSNITD is 120 times larger than ImageNet-21k.

Moreover, we made an interesting observation. Transformer-based methods achieve better results than CNNs on the CUB and NAB datasets, but CNNs achieve better performances on the AIR and CAR datasets. We argue that transformer-based methods are naturally not subject to the local inductive bias of CNNs. Thus, these methods have the ability to model global dependency, which has advantages over CNN-based methods in terms of classifying nonstructural rigid objects (e.g., birds). However, transformer-based methods destroy the structural information of rigid structural objects including cars and aircraft, by preprocessing an image into a sequence of flattened patches [65]. This process leads to inferior performance on the AIR and CAR datasets.

4.5 Ablation studies

In this section, ablation studies are conducted for different components of our ECC. First, hyperparameters of the MCC and CLG are investigated via extensive experiments. Then, each component in our approach is explored. In addition, we discuss different updating strategies of class centers in detail.

4.5.1 Hyperparameter Selection

To determine the proper weights for proposed components, we test the performances of different values on all datasets. The best results with MCC occur at =1.4 on the AIR, =1.7 on CUB, =1.4 on CAR and =0.7 on NAB. With chosen , are chosen for CLG as 0.2 on AIR, 0.6 on CUB, 0.3 on CAR and 0.08 on NAB.

The changes in accuracy are displayed in Fig.4. When increases, the accuracy changes less than for all datasets ( for AIR, for CUB, for CAR, and for NAB). Regardless of what value is used for from 0.1 to 2.0, our MCC is always better than that of the baselines (represented by dashed grey lines in Fig.4), which may indicate that the MCC is not sensitive to and that better parameters can lead to better results. However, there is an obvious change when increases. This change indicates that the CLG is more sensitive to weight than the MCC. In the early stage of training, the class-center features and class-center distributions are both unreliable, which results in incorrect supervision information for the model. However, in the MCC, it is also reasonable to expand the distance between sample features and unreliable similar class-center features. Thus, the impact of the weight of the MCC is relatively small. Unreliable class-center distributions have a greater effect on the CLG than on the MCC. Therefore, the weight of the CLG should be smaller. In addition, the size of the NAB dataset is three to four times greater than that of other datasets. Therefore, there are more erroneous predictions at the early stage of training than with other datasets, and the early class-center distributions are more unreliable. A larger weight leads to a more serious negative impact on the model.

4.5.2 Contribution of Components

Table.VI shows the performance of the proposed components and their combinations. First, the performance of each separate component (MCC and CLG) is demonstrated. Compared with the baseline (CE loss), our method shows obvious advances, namely, 1.6% on AIR, 1.7%2.4% on CUB, 0.9%1.5% on CAR and 1.0%2.0% on NAB. Finally, our ECC obtains excellent results with a combination of two components (93.0% on AIR, 87.3% on CUB, 94.7% on CAR and 85.5% on NAB). The improvements verify the effectiveness of all the components. The MCC, CLG and their combination all boost the performances significantly on all datasets.

| Component | Backbone | AIR | CUB | CAR | NAB |

|---|---|---|---|---|---|

| Baseline | ResNet50 | 91.1 | 84.7 | 93.1 | 83.4 |

| MCC | ResNet50 | 92.7 | 86.4 | 94.0 | 85.4 |

| CLG | ResNet50 | 92.7 | 87.1 | 94.6 | 84.4 |

| ECC | ResNet50 | 93.0 | 87.3 | 94.7 | 85.5 |

Fig.5 shows the results of t-SNE [66] on the CUB dataset. The left figure and right figure show the results before and after adding our MCC, respectively. In the left figure, many points belonging to the same class (with the same colour) are scattered and mixed with points from other classes. The results of adding the MCC are displayed in the right figure and compared with those in the left figure. These scattered points obviously converge after adding the MCC. Moreover, there are no clear boundaries between some extremely confusing classes with CE loss, while clear boundaries appear constrained by the MCC. This finding suggests that our MCC component can compress intra-class variances and expand inter-class differences simultaneously.

The soft labels are displayed in Fig.6. The images in row (a) all belong to orioles, and there are subtle differences. Therefore, the four classes are visually similar, and our soft labels also reflect the similarities between classes in row (b). Rows (c) and (d) also support the reasonableness of our soft labels.

4.5.3 Discussion of the Strategy of Updating the Class Center

| Method | Backbone | AIR | CUB | CAR | NAB |

|---|---|---|---|---|---|

| Baseline | ResNet50 | 91.1 | 84.7 | 93.1 | 83.4 |

| Center | ResNet50 | 91.5 | 84.9 | 93.2 | 83.4 |

| Contrastive center | ResNet50 | 91.3 | 85.9 | 93.5 | 83.4 |

| Deep attentive center | ResNet50 | 91.7 | 86.0 | 93.5 | 84.3 |

| Ours | ResNet50 | 93.0 | 87.3 | 94.7 | 85.5 |

To verify the advantage of our proposed strategy of updating class center, we compare different loss functions based on the class center, including center loss (Ct loss), contrastive center loss (CttCt loss)[67] and deep attentive center loss (DACt loss)[14]. The Euclidean distances of class-center features between each epoch and its next epoch, are utilized to represent changes of class-center features. Fig.7 shows the results on the CUB dataset. There are violent shakes during the training phase with the strategy of center loss. Contrastive center loss further handles inter-class differences, but does not optimize the update strategy. Thus, violent shaking still occurs. The deep attentive center loss utilizes attention to reduce the impact of useless elements in the class center. Although deep attentive center loss further stabilizes the update of class center, it does not actually solve the problem. Compared with the above methods, our strategy always maintains a smooth curve throughout the training phase. This finding indicates that our strategy facilitates the stable updating of the class center. Furthermore, we compare the performances of different updating strategies for class center in Table.VII. Consistent superior performance also demonstrates the advantage of our strategy.

| Model | AIR | CUB | CAR | NAB |

|---|---|---|---|---|

| InceptionV3 | 13.21G | 13.21G | 13.21G | 13.21G |

| InceptionV3-ECC | 13.28G | 13.46G | 13.45G | 15.11G |

| ResNet50 | 16.44G | 16.44G | 16.44G | 16.44G |

| ResNet50-ECC | 16.50G | 16.69G | 16.68G | 18.34G |

| DenseNet121 | 11.46G | 11.46G | 11.46G | 11.46G |

| DenseNet121-ECC | 11.49G | 11.58G | 11.58G | 12.41G |

| ResNet101 | 31.33G | 31.33G | 31.33G | 31.33G |

| ResNet101-ECC | 31.39G | 31.39G | 31.39G | 31.39G |

| Average extra FLOPs | 0.06G | 0.22G | 0.24G | 1.66G |

4.6 Discussion of Time Complexity and Computational Complexity

In this section, we consider the complexity of our ECC. First, the time complexity is discussed. To update the class center feature, the MCC needs to index the class center feature with the corresponding label. The class-center feature is updated according to Eq. (1). The time complexity of updating the class center feature is . To handle inter-class differences, the MCC needs to calculate the similarity between each class according to Eq. (3), whose time complexity is , where is the number of classes. Then, the MCC algorithm searches the maximum in a row of the similarity matrix, with a time complexity of . Finally, the MCC loss is calculated according to Eq. (5) with a time complexity of . In summary, the time complexity of MCC is . Furthermore, the CLG indexes the class center label with the corresponding label to update the class center label according to Eq. (6) and calculates the loss according to Eq. (9). The time complexity of the CLG is . The overall time complexity is determined by the complexity of the MCC and the CLG. Therefore, the overall time complexity of our method is .

Second, we discuss the computational complexity and calculate the FLOPs of the MCC and CLG. Actually, the computational complexity of the CLG is less than that of 0.1 G FLOPs, which is negligible considering that of the FLOPs of the MCC. Therefore, we regard the FLOPs of the MCC as the overall FLOPs. The comparison of the computational complexity before and after using our method is shown in Table.VIII. Our method requires only a few FLOPs (average 0.06 G to 1.66 G on all datasets). Compared with the complexity of the backbone network, this computational complexity is insignificant. Moreover, the costs exist in the training phase only and are not incurred in the practical test phase. Thus, our method is very practical and can result in significant improvement with negligible costs.

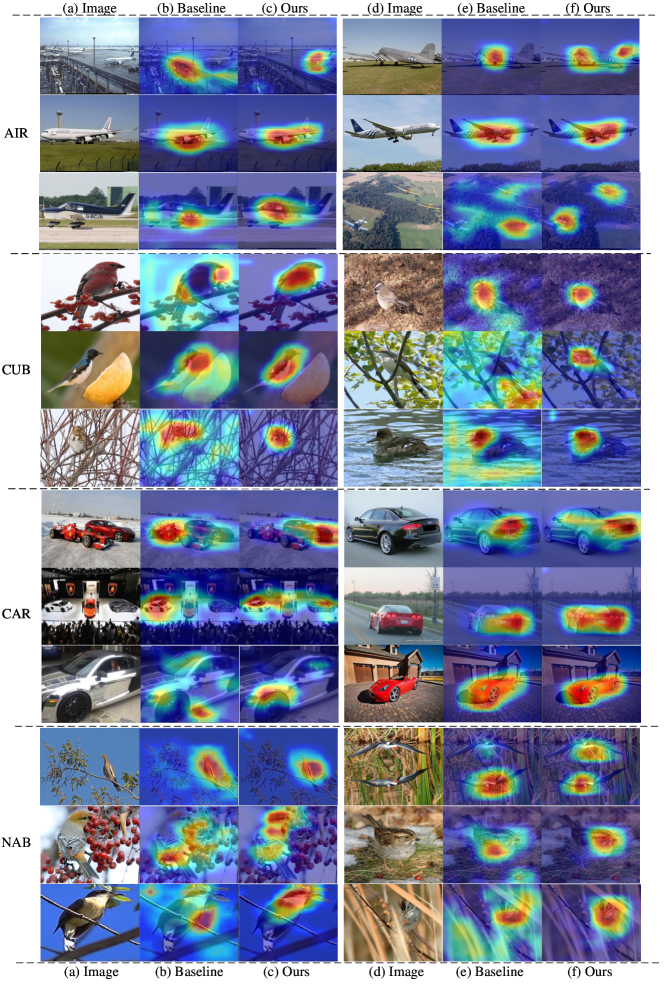

4.7 Visualization

First, we visualize heatmaps with Grad-CAM[68] images, which are shown in Fig.8. Our ECC guides the model to learn discriminative features and alleviates model overfitting. It is evident that the model no longer pays attention to background information, especially in the CUB and NAB datasets, which usually contain complex backgrounds. Moreover, in the first and last heatmaps of the AIR dataset, the results of CE loss incorrectly focus on complex background information, while our method correctly focuses on the objects. Similarly, our method achieves better results on the CAR dataset. Fig.9 displays the t-SNE results of the baseline and our ECC loss on 18 species of visually similar warblers from the CUB dataset. The left image and right image represent the results of CE loss and our ECC loss, respectively. There are more evident margins between different classes in the results of ECC than in those of CE loss. Compression within a class is also observed in the t-SNE results. These results indicate the effectiveness of our method.

5 Conclusion

In this paper, we propose a simple but effective method named ECC to improve the feature extraction capability of the model. ECC explores the role of class centers from the perspectives of features and labels with two components: an MCC and a CLG. From the feature perspective, the MCC reduces intra-class variances by reducing the cosine distance between sample features and target class-center features. Moreover, the MCC decreases the cosine similarity between sample features and the most similar nontarget class-center features to increase intra-class differences. Furthermore, from the label perspective, the CLG converts the class-center distribution of each class as a soft label to supervise the model to alleviate overfitting. Our soft labels are reliable and introduce correlations between categories. Finally, ECC loss and CE loss are combined to optimize the model. Extensive experiments and visualizations demonstrate the effectiveness of our method.

Acknowledgements

The work was jointly supported by the National Science and Technology Major Project under grant No. 2022ZD0117103, the National Natural Science Foundations of China under grant No. 62272364, the provincial Key Research and Development Program of Shaanxi under grant No. 2024GH-ZDXM-47, the Teaching Reform Project of Shaanxi Higher Continuing Education under Grant No. 21XJZ004, the Innovation Fund of Xidian University, High-performance Computing Platform of XiDian University, Natural Science Basic Research Program of Shaanxi (Program No. 2024JC-YBQN-0639).

References

- [1] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A. C. Berg, and L. Fei-Fei, “ImageNet Large Scale Visual Recognition Challenge,” International Journal of Computer Vision, vol. 115, no. 3, pp. 211–252, 2015.

- [2] T. Hu, H. Qi, Q. Huang, and Y. Lu, “See Better Before Looking Closer: Weakly Supervised Data Augmentation Network for Fine-Grained Visual Classification,” 2019. [Online]. Available: http://arxiv.org/abs/1901.09891

- [3] Z. Huang and Y. Li, “Interpretable and accurate fine-grained recognition via region grouping,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2020, pp. 8659–8669.

- [4] L. Zhang, S. Huang, W. Liu, and D. Tao, “Learning a mixture of granularity-specific experts for fine-grained categorization,” in Proceedings of the IEEE International Conference on Computer Vision, vol. 2019-Octob, 2019, pp. 8330–8339.

- [5] M. Wang, P. Zhao, X. Lu, F. Min, and X. Wang, “Fine-grained visual categorization: A spatial–frequency feature fusion perspective,” IEEE Transactions on Circuits and Systems for Video Technology, 2022.

- [6] H. Zheng, J. Fu, T. Mei, and J. Luo, “Learning Multi-attention Convolutional Neural Network for Fine-Grained Image Recognition,” in Proceedings of the IEEE International Conference on Computer Vision, vol. 2017-Octob, 2017, pp. 5219–5227.

- [7] J. Zhang, R. Zhang, Y. Huang, and Q. Zou, “Unsupervised Part Mining for Fine-grained Image Classification,” arXiv preprint arXiv:1902.09941, 2019. [Online]. Available: http://arxiv.org/abs/1902.09941

- [8] R. Ji, L. Wen, L. Zhang, D. Du, Y. Wu, C. Zhao, X. Liu, and F. Huang, “Attention Convolutional Binary Neural Tree for Fine-Grained Visual Categorization,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 10 465–10 474, 2020.

- [9] W. Luo, X. Yang, X. Mo, Y. Lu, L. Davis, J. Li, J. Yang, and S. N. Lim, “Cross-X learning for fine-grained visual categorization,” in Proceedings of the IEEE International Conference on Computer Vision, vol. 2019-Octob. Institute of Electrical and Electronics Engineers Inc., oct 2019, pp. 8241–8250.

- [10] M. Sun, Y. Yuan, F. Zhou, and E. Ding, “Multi-Attention Multi-Class Constraint for Fine-grained Image Recognition,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 11220 LNCS, 2018, pp. 834–850.

- [11] Q. Wang, J. Wang, H. Deng, X. Wu, Y. Wang, and G. Hao, “Aa-trans: Core attention aggregating transformer with information entropy selector for fine-grained visual classification,” Pattern Recognition, vol. 140, p. 109547, 2023.

- [12] Y. Wen, K. Zhang, Z. Li, and Y. Qiao, “A discriminative feature learning approach for deep face recognition,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 9911 LNCS. Springer, 2016, pp. 499–515.

- [13] P. Du, Z. Sun, Y. Yao, and Z. Tang, “Exploiting category similarity-based distributed labeling for fine-grained visual classification,” IEEE Access, vol. 8, pp. 186 679–186 690, 2020.

- [14] A. H. Farzaneh and X. Qi, “Facial expression recognition in the wild via deep attentive center loss,” Proceedings - 2021 IEEE Winter Conference on Applications of Computer Vision, WACV 2021, pp. 2401–2410, 2021.

- [15] J. Li, H. Xie, J. Li, Z. Wang, and Y. Zhang, “Frequency-aware Discriminative Feature Learning Supervised by Single-Center Loss for Face Forgery Detection,” Tech. Rep., 2021.

- [16] Z. Zhang, C. Luo, H. Wu, Y. Chen, N. Wang, and C. Song, “From individual to whole: reducing intra-class variance by feature aggregation,” International Journal of Computer Vision, vol. 130, no. 3, pp. 800–819, 2022.

- [17] J. He, J.-N. Chen, S. Liu, A. Kortylewski, C. Yang, Y. Bai, and C. Wang, “TransFG: A Transformer Architecture for Fine-grained Recognition,” arXiv preprint arXiv:2103.07976, 2021. [Online]. Available: http://arxiv.org/abs/2103.07976

- [18] R. Hadsell, S. Chopra, and Y. LeCun, “Dimensionality reduction by learning an invariant mapping,” in 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), vol. 2. IEEE, 2006, pp. 1735–1742.

- [19] F. Schroff, D. Kalenichenko, and J. Philbin, “Facenet: A unified embedding for face recognition and clustering,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 815–823.

- [20] Y. Zeng, B. Zhao, S. Qiu, T. Dai, and S.-T. Xia, “Towards effective image manipulation detection with proposal contrastive learning,” IEEE Transactions on Circuits and Systems for Video Technology, 2023.

- [21] S. Zhang, J. Bai, T. Li, Z. Yan, and Z. Li, “Modeling intra-class and inter-class constraints for out-of-domain detection,” in International Conference on Database Systems for Advanced Applications. Springer, 2023, pp. 142–158.

- [22] G. Hinton, O. Vinyals, J. Dean et al., “Distilling the knowledge in a neural network,” arXiv preprint arXiv:1503.02531, vol. 2, no. 7, 2015.

- [23] C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna, “Rethinking the Inception Architecture for Computer Vision,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2016-Decem, pp. 2818–2826, 2016.

- [24] C. B. Zhang, P. T. Jiang, Q. Hou, Y. Wei, Q. Han, Z. Li, and M. M. Cheng, “Delving deep into label smoothing,” IEEE Transactions on Image Processing, vol. 30, pp. 5984–5996, 2021.

- [25] H. Bagherinezhad, M. Horton, M. Rastegari, and A. Farhadi, “Label Refinery: Improving ImageNet Classification through Label Progression,” arXiv preprint arXiv:1805.02641, 2018. [Online]. Available: http://arxiv.org/abs/1805.02641

- [26] P. Zhao, H. Yao, X. Liu, R. Liu, and Q. Miao, “Improving image classification through joint guided learning,” IEEE Transactions on Instrumentation and Measurement, 2022.

- [27] S. Maji, E. Rahtu, J. Kannala, M. Blaschko, and A. Vedaldi, “Fine-Grained Visual Classification of Aircraft,” 2013. [Online]. Available: http://arxiv.org/abs/1306.5151

- [28] B. Englert and S. Lam, “The Caltech-UCSD Birds-200-2011 Dataset,” IFAC Proceedings Volumes (IFAC-PapersOnline), vol. 42, no. 15, pp. 50–57, 2009.

- [29] J. Krause, M. Stark, J. Deng, and L. Fei-Fei, “3D object representations for fine-grained categorization,” Proceedings of the IEEE International Conference on Computer Vision, pp. 554–561, 2013.

- [30] G. Van Horn, S. Branson, R. Farrell, S. Haber, J. Barry, P. Ipeirotis, P. Perona, and S. Belongie, “Building a bird recognition app and large scale dataset with citizen scientists: The fine print in fine-grained dataset collection,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 595–604.

- [31] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2016-Decem, 2016, pp. 770–778. [Online]. Available: http://openaccess.thecvf.com/content_cvpr_2016/html/He_Deep_Residual_Learning_CVPR_2016_paper.html

- [32] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, J. Uszkoreit, and N. Houlsby, “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale,” Icml, pp. 1–21, 2020. [Online]. Available: http://arxiv.org/abs/2010.11929

- [33] J. Li, L. Yang, Q. Wang, and Q. Hu, “Wdan: A weighted discriminative adversarial network with dual classifiers for fine-grained open-set domain adaptation,” IEEE Transactions on Circuits and Systems for Video Technology, 2023.

- [34] T. Yan, H. Li, B. Sun, Z. Wang, and Z. Luo, “Discriminative feature mining and enhancement network for low-resolution fine-grained image recognition,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 32, no. 8, pp. 5319–5330, 2022.

- [35] S. Wang, Z. Wang, H. Li, J. Chang, W. Ouyang, and Q. Tian, “Semantic-guided information alignment network for fine-grained image recognition,” IEEE Transactions on Circuits and Systems for Video Technology, 2023.

- [36] X. S. Wei, C. W. Xie, J. Wu, and C. Shen, “Mask-CNN: Localizing parts and selecting descriptors for fine-grained bird species categorization,” Pattern Recognition, vol. 76, pp. 704–714, 2018.

- [37] S. Huang, Z. Xu, D. Tao, and Y. Zhang, “Part-stacked CNN for fine-grained visual categorization,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2016-Decem, pp. 1173–1182, 2016.

- [38] N. Zhang, J. Donahue, R. Girshick, and T. Darrell, “Part-based R-CNNs for fine-grained category detection,” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 8689 LNCS, no. PART 1, pp. 834–849, 2014.

- [39] D. Lin, X. Shen, C. Lu, and J. Jia, “Deep LAC: Deep localization, alignment and classification for fine-grained recognition,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 07-12-June, pp. 1666–1674, 2015.

- [40] S. Branson, G. Van Horn, S. Belongie, and P. Perona, “Bird species categorization using pose normalized deep convolutional nets,” BMVC 2014 - Proceedings of the British Machine Vision Conference 2014, 2014.

- [41] C. Zhang, G. Lin, Q. Wang, F. Shen, Y. Yao, and Z. Tang, “Guided by meta-set: a data-driven method for fine-grained visual recognition,” IEEE Transactions on Multimedia, 2022.

- [42] S. Tsutsui, Y. Fu, and D. Crandall, “Reinforcing generated images via meta-learning for one-shot fine-grained visual recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- [43] Z. Yang, T. Luo, D. Wang, Z. Hu, J. Gao, and L. Wang, “Learning to navigate for fine-grained classification,” in Proceedings of the European conference on computer vision (ECCV), 2018, pp. 420–435.

- [44] X. He, Y. Peng, and J. Zhao, “Which and how many regions to gaze: Focus discriminative regions for fine-grained visual categorization,” International Journal of Computer Vision, vol. 127, pp. 1235–1255, 2019.

- [45] H. Sun, X. He, and Y. Peng, “Sim-trans: Structure information modeling transformer for fine-grained visual categorization,” in Proceedings of the 30th ACM International Conference on Multimedia, 2022, pp. 5853–5861.

- [46] R. Du, D. Chang, A. K. Bhunia, J. Xie, Z. Ma, Y. Z. Song, and J. Guo, “Fine-Grained Visual Classification via Progressive Multi-granularity Training of Jigsaw Patches,” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 12365 LNCS, pp. 153–168, 2020.

- [47] P. Zhao, Q. Miao, H. Yao, X. Liu, R. Liu, and M. Gong, “CA-PMG: Channel attention and progressive multi-granularity training network for fine-grained visual classification,” IET Image Processing, vol. 15, no. 14, pp. 3718–3727, 2021.

- [48] Y. Chen, Y. Bai, W. Zhang, and T. Mei, “Destruction and construction learning for fine-grained image recognition,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2019-June, 2019, pp. 5152–5161.

- [49] R. Ji, J. Li, L. Zhang, J. Liu, and Y. Wu, “Dual transformer with multi-grained assembly for fine-grained visual classification,” IEEE Transactions on Circuits and Systems for Video Technology, 2023.

- [50] J. Peng, G. Jiang, and H. Wang, “Adaptive memorization with group labels for unsupervised person re-identification,” IEEE Transactions on Circuits and Systems for Video Technology, 2023.

- [51] H. Huang, Z. Wu, W. Li, J. Huo, and Y. Gao, “Local descriptor-based multi-prototype network for few-shot learning,” Pattern Recognition, vol. 116, p. 107935, 2021.

- [52] J. Snell, K. Swersky, and R. Zemel, “Prototypical networks for few-shot learning,” in Advances in Neural Information Processing Systems, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, Eds., vol. 30. Curran Associates, Inc., 2017. [Online]. Available: https://proceedings.neurips.cc/paper_files/paper/2017/file/cb8da6767461f2812ae4290eac7cbc42-Paper.pdf

- [53] H. Chen, H. Li, Y. Li, and C. Chen, “Sparse spatial transformers for few-shot learning,” arXiv preprint arXiv:2109.12932, 2021.

- [54] T. Miyato, S. I. Maeda, M. Koyama, K. Nakae, and S. Ishii, “Distributional Smoothing with Virtual Adversarial Training,” 4th International Conference on Learning Representations, ICLR 2016 - Conference Track Proceedings, 2018. [Online]. Available: http://www.shortscience.org/paper?bibtexKey=journals/corr/1507.00677#davidstutz

- [55] “iNaturalist 2018 competition dataset.” https://github.com/visipedia/inat_comp/tree/master/2018, 2018.

- [56] A. Dubey, O. Gupta, P. Guo, R. Raskar, R. Farrell, and N. Naik, “Pairwise confusion for fine-grained visual classification,” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 11216 LNCS, pp. 71–88, 2018.

- [57] G. Huang, Z. Liu, L. Van Der Maaten, and K. Q. Weinberger, “Densely connected convolutional networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 4700–4708.

- [58] Z. Liu, Y. Lin, Y. Cao, H. Hu, Y. Wei, Z. Zhang, S. Lin, and B. Guo, “Swin Transformer: Hierarchical Vision Transformer using Shifted Windows,” 2021 IEEE/CVF International Conference on Computer Vision (ICCV), pp. 9992–10 002, mar 2021. [Online]. Available: http://arxiv.org/abs/2103.14030https://ieeexplore.ieee.org/document/9710580/

- [59] Y. Rao, G. Chen, J. Lu, and J. Zhou, “Counterfactual Attention Learning for Fine-Grained Visual Categorization and Re-identification,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 1025–1034. [Online]. Available: http://arxiv.org/abs/2108.08728

- [60] T. Y. Lin, A. Roychowdhury, and S. Maji, “Bilinear Convolutional Neural Networks for Fine-Grained Visual Recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 40, no. 6, pp. 1309–1322, 2018.

- [61] Y. Liang, L. Zhu, X. Wang, and Y. Yang, “A Simple Episodic Linear Probe Improves Visual Recognition in the Wild,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 9559–9569.

- [62] P. Zhuang, Y. Wang, and Y. Qiao, “Learning attentive pairwise interaction for fine-grained classification,” in AAAI 2020 - 34th AAAI Conference on Artificial Intelligence, 2020, pp. 13 130–13 137.

- [63] C. Jia, Y. Yang, Y. Xia, Y. T. Chen, and T. Duerig, “Scaling up visual and vision-language representation learning with noisy text supervision,” 2021.

- [64] H. Zhu, W. Ke, D. Li, J. Liu, L. Tian, and Y. Shan, “Dual Cross-Attention Learning for Fine-Grained Visual Categorization and Object Re-Identification,” 2022. [Online]. Available: http://arxiv.org/abs/2205.02151

- [65] Z. Miao, X. Zhao, J. Wang, Y. Li, and H. Li, “Complemental Attention Multi-Feature Fusion Network for Fine-Grained Classification,” IEEE Signal Processing Letters, vol. 28, pp. 1983–1987, 2021.

- [66] L. van der Maaten and G. Hinton, “Visualizing data using t-SNE,” Journal of Machine Learning Research, vol. 9, no. 1, pp. 2579–2605, 2008. [Online]. Available: https://www.jmlr.org/papers/volume9/vandermaaten08a/vandermaaten08a.pdf?fbclid=IwA

- [67] C. Qi and F. Su, “Contrastive-center loss for deep neural networks,” Proceedings - International Conference on Image Processing, ICIP, vol. 2017-Septe, pp. 2851–2855, 2018.

- [68] R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra, “Grad-cam: Why did you say that? visual explanations from deep networks via gradient-based localization,” Revista do Hospital das Cl??nicas, vol. 17, pp. 331–336, 2016. [Online]. Available: http://arxiv.org/abs/1610.02391

Appendix A Experiment on the large-scale dataset

To order to verify the effectiveness of the proposed ECC on the large-scale dataset, we conduct experiments on the iNaturalist 2018 dataset (iNat2018), which includes 8142 species, 437513 training images and 24426 validation images. There is a serious long-tail problem: the category with the most samples in the training set has several thousand samples, while the category with the least samples has only a few samples.

In the experiments, we use ResNet50 as backbone. is set as 0.05, and is set as 0.001. The other hyperparameters remain consistent with those of the other datasets (such as batch size is 32 and initial learning is 0.01). In addition, we do not use additional information, including latitude, longitude and date.

We explore the efficiency of the proposed ECC and two components (the MCC and CLG). The results are shown in Table.IX. Although the main challenge of the iNat2018 dataset is the long-tail problem, rather than intra-class variances and inter-classes differences, our approach still brings improvements.

| Components | Backbone | iNat2018 |

|---|---|---|

| Baseline | ResNet50 | 62.4 |

| MCC | ResNet50 | 62.7 |

| CLG | ResNet50 | 62.5 |

| ECC (MCC+CLG) | ResNet50 | 62.8 |