Exploiting homogeneity for the optimal control of discrete-time systems: application to value iteration

Abstract

To investigate solutions of (near-)optimal control problems, we extend and exploit a notion of homogeneity recently proposed in the literature for discrete-time systems. Assuming the plant dynamics is homogeneous, we first derive a scaling property of its solutions along rays provided the sequence of inputs is suitably modified. We then consider homogeneous cost functions and reveal how the optimal value function scales along rays. This result can be used to construct (near-)optimal inputs on the whole state space by only solving the original problem on a given compact manifold of a smaller dimension. Compared to the related works of the literature, we impose no conditions on the homogeneity degrees. We demonstrate the strength of this new result by presenting a new approximate scheme for value iteration, which is one of the pillars of dynamic programming. The new algorithm provides guaranteed lower and upper estimates of the true value function at any iteration and has several appealing features in terms of reduced computation. A numerical case study is provided to illustrate the proposed algorithm.

I Introduction

Optimal control seeks inputs that minimize a given cost function along the trajectories of the considered plant dynamics, see, e.g., [21, 2, 11]. While we have known how to systematically construct optimal inputs for linear systems and quadratic costs since the 1960s [9], the optimal control of general nonlinear systems with general cost functions remains very challenging. In this setting, dynamic programming provides various algorithms to construct near-optimal inputs based on the Bellman equation [2, 3]. When the state and input spaces are continuous, as in this work, dynamic programming algorithms cannot be implemented exactly. A typical procedure consists in restricting the state and input spaces to compact subsets, which are then discretized to make the implementation of the algorithm tractable. A drawback of this approach is that (near-)optimal inputs are only obtained for states in the considered compact set. In addition, it is not always obvious that a solution initialized in this set remains in it for all future times. Also, there is no clear recipe for selecting the compact set, which is typically done by the user on a case-by-case basis.

In this work, we explain how these drawbacks can be addressed when the system and the cost functions satisfy homogeneity properties. The underlying idea is that, in this case, we only have to solve the original optimal control problem on a given well-defined compact set to derive (near-)optimal inputs for any state in the whole, unbounded state space. This approach was investigated for continuous-time systems in, e.g., [17, 5, 7, 16, 13, 18]. On the other hand, the results on discrete-time systems we are aware of are scarcer [8, 18] and only consider homogeneity properties of degree (or depending on the convention), which limits their applicability. This restriction is due to the homogeneity notion used in [8, 18], which does not allow one to derive scaling properties of the plant solutions along homogeneous rays when the degree is different from or . In this paper, we relax this degree constraint by exploiting a homogeneity definition recently proposed in [20, 19]. In that way, we can derive scaling properties of the solutions for any homogeneity degree, which include those featured in [8, Proposition 2] and [18, proof of Corollary 1] as particular cases. We are thus able to consider a much broader class of systems, which includes polynomial systems for instance.

To this end, we first extend the definition in [20, 19] to systems with inputs. We then derive a new scaling property of the system solutions, provided the sequence of inputs is suitably modified. Assuming the stage and terminal costs are also homogeneous, we explain how the optimal value function scales along homogeneous rays. As a result, by solving the original optimal control problem at a given state, we obtain a solution to a different optimal control problem by scaling the obtained sequence of inputs when moving along the corresponding ray. This differs from [17], where continuous-time systems are studied: the results here involve scaled weighting constants, whereas [17] features scaled cost horizons instead. We also deduce optimal sequences of inputs for the same cost function along the considered ray in some specific but important cases, which include minimum-time control and maximum hands-off control [15, 14], thereby covering and generalizing the results of [8].

We then explain how the general scaling property of the optimal value function can be exploited to obtain a new approximation scheme for value iteration (VI), which is one of the core algorithms of dynamic programming. This new scheme has the following appealing features. First, it only requires solving the original VI equation on a given compact manifold whose dimension is strictly smaller than that of the state space. Second, the estimates of the value function are defined on the whole state space. Third, these estimates are guaranteed lower and upper bounds of the value function generated by VI at each iteration. Finally, the proposed algorithm is illustrated on the optimal control of a van der Pol oscillator.

The rest of the paper is organized as follows. The notation is defined in Section II. The main results are given in Section III, where the homogeneous scaling properties are stated. The new approximation scheme for VI is presented in Section IV. Section V provides examples of systems and costs satisfying the standing assumptions. The numerical case study is presented in Section VI, and Section VII concludes the paper.

II Preliminaries

Let be the set of real numbers, , , , be the set of integers, and . Given a matrix with , we write when is positive semidefinite and we denote by , for , the induced norm. For any with , stands for the diagonal matrix whose diagonal components are . The notation stands for , where , and . The Euclidean norm of a vector with is denoted by . We also use the so-called norm, denoted for with , which is the number of components of that are not equal to . For any set , and , the map is defined as when and when . Given vectors with , is the vector space generated by the family . For any and , . We denote a sequence of (possibly infinitely many) elements, with and for any , .

The homogeneity definitions used in this work involve the next maps and sets. Given with , the dilation map is defined as for any and . For convenience, we denote as when the dimension is clear from the context. We say that a dilation map is standard when for some . We call homogeneous ray (induced by dilation map ) passing through , the set . When , we say that and belong to the same homogeneous ray. Given a set and , we also denote by the set . Given with , and , we denote by the map defined as for any and . Again, for convenience, we write instead of when the dimension of is clear from the context.
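As a minimal sketch of these objects, the Python snippet below implements a weighted dilation and a test of whether two points lie on the same homogeneous ray. It assumes the usual weighted dilation $\Lambda_r(\lambda)x = \mathrm{diag}(\lambda^{r_1},\dots,\lambda^{r_n})\,x$ with $r_i > 0$ and $\lambda > 0$, which is our reading of the definition above; the helper names `dilate` and `same_ray` are hypothetical.

```python
import math


def dilate(r, lam, x):
    """Weighted dilation: (lam**r_1 * x_1, ..., lam**r_n * x_n), with lam > 0."""
    if lam <= 0:
        raise ValueError("lam must be positive")
    return [lam ** ri * xi for ri, xi in zip(r, x)]


def same_ray(r, x, y, tol=1e-9):
    """True if y = dilate(r, lam, x) for some lam > 0 (x must be nonzero)."""
    for ri, xi, yi in zip(r, x, y):
        if xi != 0.0:
            ratio = yi / xi
            if ratio <= 0.0:
                return False  # a positive dilation never flips a sign
            lam = ratio ** (1.0 / ri)  # candidate factor from this component
            return all(math.isclose(a, b, rel_tol=tol, abs_tol=tol)
                       for a, b in zip(dilate(r, lam, x), y))
    return False  # x = 0 only "generates" the origin itself


print(dilate([1, 2], 2.0, [1.0, 1.0]))            # [2.0, 4.0]
print(same_ray([1, 2], [1.0, 1.0], [2.0, 4.0]))   # True
print(same_ray([1, 2], [1.0, 1.0], [2.0, 2.0]))   # False
```

The test recovers a candidate $\lambda$ from one nonzero component and then checks all components, which suffices because a dilation acts componentwise.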

III Main results

In this section, we describe the homogeneity assumptions we make on the system and the stage cost, and how these allow us to derive various scaling properties, along homogeneous rays, of the solutions, the stage cost and, finally, the optimal value function.

III-A Homogeneous system

Consider the discrete-time nonlinear system

$$x^{+} = f(x,u) \qquad (1)$$

where is the plant state, is the control input and . We assume throughout the paper that satisfies the homogeneity property stated next.

Standing Assumption 1 (SA1)

There exist , , and such that is homogeneous of degree with respect to the dilation pair in the sense that for any and , , or, equivalently, .

The homogeneity property in SA1 is inspired by [20, Definition 2] and extends it to vector fields with inputs. Some classes of vector fields satisfying SA1 are provided in Section V-A. Homogeneity definitions commonly found in the literature, as in, e.g., [8, 18], require instead of as in SA1. The homogeneity notions in [20, Definition 2] and SA1 are better suited to vector fields arising in discrete-time dynamical systems. Indeed, they allow deriving scaling properties of the solutions as in [20, Lemma 3], which is extended below to systems with inputs. In terms of notation, we use to denote the solution to system (1) with initial condition and input sequence of length at time , with the convention that .

Lemma 1

For any , , , and with ,

| (2) |

Proof: Let , and . We proceed by induction. Considering the definition of , we obtain

| (3) |

which corresponds to (2) at . Furthermore, by the definitions of and , it follows that

| (4) |

We derive from SA1

| (5) |

which corresponds to (2) for .

Suppose now that (2) holds for . We have

| (6) |

By assumption , thus

| (7) |

Noting that and in view of the definition of , we derive that by SA1

| (8) |

which corresponds to the desired property, namely (2) at . We have proved that when (2) holds for some , it also does for . The induction proof is complete.

Lemma 1 provides an explicit relationship between the solutions to system (1) initialized on the same homogeneous ray (as defined in Section II), provided their successive inputs are appropriately scaled by the map .
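To make Lemma 1 concrete, the sketch below checks the scaling of solutions numerically on a hypothetical two-state example (not taken from the paper). It assumes our reading of SA1, namely $f(\Lambda_r(\lambda)x,\Lambda_q(\lambda)u)=\Lambda_r(\lambda^{1+\kappa})f(x,u)$. Iterating this identity suggests that input $k$ must be scaled by $\Lambda_q(\lambda^{(1+\kappa)^k})$, and that the state at time $k$ is then dilated by $\lambda^{(1+\kappa)^k}$. The exact form of (2) is not legible here, so the exponents in the code follow from this assumption only.

```python
# Hypothetical example (not from the paper): x in R^2, u in R, with
# r = (1, 2), q = 2 and kappa = 1.  Each component satisfies
# f_i(L_r(lam) x, L_q(lam) u) = lam**((1 + kappa) * r_i) * f_i(x, u).
R, Q, KAPPA = (1.0, 2.0), 2.0, 1.0


def f(x, u):
    return [x[0] ** 2 + u, x[1] ** 2 + x[0] ** 4]


def dilate(r, lam, x):
    return [lam ** ri * xi for ri, xi in zip(r, x)]


def solution(x0, inputs):
    """States x_0, ..., x_K of x+ = f(x, u) under the given inputs."""
    xs = [list(x0)]
    for u in inputs:
        xs.append(f(xs[-1], u))
    return xs


def scaled_inputs(lam, inputs):
    """Input k is scaled by L_q(lam**((1 + KAPPA)**k))."""
    return [lam ** (Q * (1 + KAPPA) ** k) * u for k, u in enumerate(inputs)]


x0, us, lam = [0.6, -0.3], [0.1, -0.2, 0.05], 1.3
base = solution(x0, us)
moved = solution(dilate(R, lam, x0), scaled_inputs(lam, us))
# Predicted: the k-th state from the dilated point is the k-th base state
# dilated by lam**((1 + KAPPA)**k).
predicted = [dilate(R, lam ** ((1 + KAPPA) ** k), xk) for k, xk in enumerate(base)]
max_err = max(abs(a - b) for xm, xp in zip(moved, predicted) for a, b in zip(xm, xp))
print(max_err)  # expected to be at round-off level
```

The scaled input sequence is what makes the dilated initial state reproduce a dilated copy of the original solution. Without it, the two solutions are unrelated in general.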

Next, we introduce the cost function and the homogeneity properties we assume it satisfies.

III-B Homogeneous cost

We consider the cost function of horizon , for any and any sequence of inputs of length ,

| (9) |

where is the stage cost, is the terminal cost, while and are weighting constants whose role is to capture the scaling effects due to homogeneity properties, as will be clarified in the sequel. When , is the zero function. The stage and terminal costs and are assumed to satisfy the next homogeneity property.

Standing Assumption 2 (SA2)

There exists such that and are homogeneous of degree with respect to dilation pairs and , respectively, in the sense that for any and , and .

Examples of stage and terminal costs ensuring SA2 are given in Section V-B. The next result is a consequence of Lemma 1 and SA2.

Proof: Let , , , and . In view of (2), . Since and , in view of SA2, , which corresponds to the first equality in Lemma 2. We similarly derive the second equality in Lemma 2.

We are ready to analyse the optimal value function associated to (9).

III-C Scaling property of the optimal value function

Given , , and , we define the optimal value function associated to cost at as

| (10) |

which is assumed to exist, per the next assumption.

Standing Assumption 3 (SA3)

For any , , and , there exists a -length sequence of inputs such that .

The next theorem provides an explicit relationship for the optimal value function along homogeneous rays.

Theorem 1

For any , , let denote an optimal sequence of inputs for the cost at ; then, for any ,

| (11) |

where and respectively come from SA1-SA2, and is an optimal sequence of inputs for cost at , i.e., , or, equivalently, , and, for an optimal sequence of inputs for costs at , is an optimal sequence of inputs for cost at , i.e., .

Proof: Let , , be an optimal sequence of inputs for cost at , which exists according to SA3, and let . From the definitions of , , and , we derive that

| (12) |

Hence, in view of Lemma 2 and by definition of , we have

| (13) | |||

On the other hand,

| (14) |

where is an optimal sequence of inputs for cost at initial state , which exists by SA3. We have that

| (15) | |||

from which we derive, in view of Lemma 2 and the definition of ,

| (16) |

We deduce from (13) and (16) that . As a consequence, in view of the equality in (13), is an optimal sequence of inputs for cost at .

We now prove the last part of Theorem 1. Let . In view of the first part, , which, by using the definition of , is equivalent to . We have obtained the desired result.

Theorem 1 provides a scaling-like property of the optimal value function along homogeneous rays. In particular, by computing and an associated sequence of optimal inputs at some , we can deduce a scaled version of the optimal value function and an associated sequence of optimal inputs for any point on the homogeneous ray . However, in Theorem 1, the value function obtained at the scaled point is in general associated with a different cost, with scaled weighting constants. The exceptions are two specific cases formalized in the next corollary, which is a direct application of Theorem 1; to the best of our knowledge, the second case is novel.

Corollary 1

In Corollary 1, the optimal value function is proportional along homogeneous rays under extra conditions compared to Theorem 1. As a result, by computing, for instance, the optimal value function and an associated sequence of optimal inputs on any -sphere of centered at the origin, we can obtain the value of the optimal value function as well as a sequence of optimal inputs for any point in . Note that, by SA1-SA2, and ; hence the optimal sequence at the origin is the zero sequence of appropriate length, and for any , and . The extra condition imposed by Corollary 1 is either on the homogeneity degree of , which needs to be equal to , or on that of and , which needs to be equal to zero. The first case corresponds to [8, Proposition 2], up to the minor difference that here the cost can have an infinite horizon. The second case, namely when the homogeneity degree of and is zero, is new as far as we know. It is important as it covers minimum-time control and maximum hands-off control as particular cases, as explained in more detail in Section V-B.
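A small computation illustrates why the cost generally changes along a ray. The sketch below uses a hypothetical two-state system and a stage cost that is homogeneous of degree $d = 2$; neither is from the paper. It relies on our assumed reading of SA1 and SA2: $f(\Lambda_r(\lambda)x,\Lambda_q(\lambda)u)=\Lambda_r(\lambda^{1+\kappa})f(x,u)$ and $\ell(\Lambda_r(\lambda)x,\Lambda_q(\lambda)u)=\lambda^{d}\ell(x,u)$. Under these assumptions, stage $k$ is multiplied by $\lambda^{d(1+\kappa)^k}$. This factor is independent of $k$ only when $\kappa = 0$ or $d = 0$, which is how we interpret the two cases of Corollary 1.

```python
import math

# Hypothetical example (not from the paper): r = (1, 2), q = 2, kappa = 1.
R, Q, KAPPA, DEG = (1.0, 2.0), 2.0, 1.0, 2.0


def f(x, u):
    return [x[0] ** 2 + u, x[1] ** 2 + x[0] ** 4]


def stage_cost(x, u):
    # Each term scales as lam**2 under (x1, x2, u) -> (lam x1, lam**2 x2, lam**2 u).
    return x[0] ** 2 + abs(x[1]) + abs(u)


def stage_costs(x0, inputs):
    x, costs = list(x0), []
    for u in inputs:
        costs.append(stage_cost(x, u))
        x = f(x, u)
    return costs


def stage_factors(lam, kappa, degree, horizon):
    """Assumed multiplier of stage k: lam**(degree * (1 + kappa)**k)."""
    return [lam ** (degree * (1 + kappa) ** k) for k in range(horizon)]


x0, us, lam = [0.6, -0.3], [0.1, -0.2, 0.05], 1.3
base = stage_costs(x0, us)
moved = stage_costs([lam ** R[0] * x0[0], lam ** R[1] * x0[1]],
                    [lam ** (Q * (1 + KAPPA) ** k) * u for k, u in enumerate(us)])
ratios = [m / b for m, b in zip(moved, base)]
matches = all(math.isclose(a, b, rel_tol=1e-9)
              for a, b in zip(ratios, stage_factors(lam, KAPPA, DEG, len(us))))
print(ratios, matches)                          # ratios grow with k; matches is True
print(stage_factors(lam, 0.0, DEG, len(us)))    # kappa = 0: one common factor
print(stage_factors(lam, KAPPA, 0.0, len(us)))  # degree 0: all ones
```

A single stage-independent factor can be pulled out of the whole sum, which is what makes the value function proportional along rays. Otherwise, the stage-dependent factors have to be absorbed by the weighting constants of the cost.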

Remark 1

Theorem 1 may also be useful when the primary control objective is to constrain the state to a given set . This requirement can be incorporated in the definition of the stage cost using the function , which is zero when its argument is in and infinite elsewhere, as satisfies the desired homogeneity property when is homogeneous, as discussed in more detail in Section V-B. In this case, the sequence of optimal inputs obtained at , once scaled according to Theorem 1, minimizes a different cost function at . Even so, it keeps this cost finite and thus ensures that the solution remains in for all future times. Indeed, if the solution from the scaled state did not remain in , the scaled cost would be infinite because of the term , which is infinite whenever the state is not in . Since the scaled cost is finite, the state always remains in .

IV Approximate VI scheme

We explain in this section how the scaling property of the optimal value function established in Theorem 1 can be exploited to derive a new approximation scheme for VI [2] for homogeneous systems and homogeneous costs satisfying SA1–SA3.

IV-A Value iteration

We recall that VI is an iterative procedure to solve optimal control problems [2]. We consider in this section an infinite-horizon cost, i.e., and in (9), and the goal is to derive a (near-)optimal sequence of inputs for any state . VI works as follows. Given an initial value function denoted , at iteration , an estimate of the optimal value function is obtained by solving, for any ,

| (22) |

assuming the minimum exists. When solving (22), we can retrieve a minimizer for any , which is used for control. Note that VI also covers the finite-horizon case by initializing with and stopping at , but, for convenience, we do not consider this case here.

Solving (22) exactly for any is in general impossible for system (1) and stage cost . Instead, one typically computes an approximation of on a compact set of interest, see, e.g., [4, 6]. The drawbacks of this approach are that (i) the selection of the compact set is not always rigorously justified, (ii) the approximation is only defined on the considered set, (iii) we therefore need to make sure that this set is forward invariant for the system controlled with the obtained policy, which is not true in general, and (iv) the computational cost may still be prohibitive. In the next section, we propose to exploit homogeneity to mitigate these issues.
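The sketch below implements plain grid-based VI on a hypothetical scalar example: $x^+ = 0.9x + u$ with stage cost $x^2 + u^2$ and $V_0 = 0$. It assumes that (22) is the usual undiscounted Bellman recursion $V_{i+1}(x) = \min_u \{\ell(x,u) + V_i(f(x,u))\}$. It exhibits drawbacks (i) to (iii) just listed: the value function is only defined on a bounded grid, and successors that leave the grid must be handled by an ad hoc rule (here, clamping).

```python
import bisect

# Hypothetical scalar example (not from the paper): x+ = A x + B u, l(x, u) = x^2 + u^2.
A, B = 0.9, 1.0
XS = [-2.0 + 0.01 * i for i in range(401)]  # state grid on the compact set [-2, 2]
US = [-2.0 + 0.01 * j for j in range(401)]  # quantized inputs in [-2, 2]


def interp(grid, values, x):
    """Piecewise-linear interpolation; successors outside the grid are clamped,
    an ad hoc choice of the kind discussed in the text."""
    x = min(max(x, grid[0]), grid[-1])
    j = min(bisect.bisect_right(grid, x), len(grid) - 1)
    i = j - 1
    t = (x - grid[i]) / (grid[j] - grid[i])
    return (1.0 - t) * values[i] + t * values[j]


def vi_step(v):
    """One iteration V_{i+1}(x) = min_u { l(x, u) + V_i(A x + B u) } on the grid."""
    return [min(x * x + u * u + interp(XS, v, A * x + B * u) for u in US) for x in XS]


v0 = [0.0] * len(XS)
v1 = vi_step(v0)  # equals x^2 on the grid, attained at u = 0
v2 = vi_step(v1)
# In this linear-quadratic case the exact iterate is V_2(x) = p2 x^2 with
# p2 = 1 + A^2 - (A B)^2 / (1 + B^2) (Riccati recursion started from p1 = 1).
p2 = 1.0 + A ** 2 - (A * B) ** 2 / (1.0 + B ** 2)
print(v2[250], p2 * XS[250] ** 2)  # XS[250] = 0.5; both approximately 0.351
```

The linear-quadratic choice is only there because its exact iterates are known in closed form, which makes the grid error easy to check.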

IV-B Algorithm

The idea is to solve (22) only on a given compact set and to derive the value of elsewhere in the state space thanks to Theorem 1, under SA1-SA3. The proposed algorithm works as follows.

The compact set is selected such that

| (23) |

that is, the union of all dilations of covers . Examples of such a set include the unit -sphere or , where come from SA1 and can take any value. Note that both of these examples are manifolds of dimension while the state space is of dimension . Again, the origin is excluded in (23) since the optimal value function is equal to at the origin, as so are and in view of SA1-SA2.
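For the unit Euclidean sphere, the covering property (23) amounts to writing every $x \neq 0$ as $x = \Lambda_r(\lambda)z$ with $\lambda > 0$ and $|z| = 1$. Below is a minimal sketch of this decomposition, assuming the weighted dilation $\Lambda_r(\lambda)x = \mathrm{diag}(\lambda^{r_1},\dots,\lambda^{r_n})x$. It uses bisection on $\log\lambda$, which works because $\lambda \mapsto |\Lambda_r(\lambda^{-1})x|$ is strictly decreasing for $x \neq 0$. The name `to_sphere` is a hypothetical helper.

```python
import math


def to_sphere(r, x, iters=200):
    """Hypothetical helper: return (lam, z) with x = L_r(lam) z and |z| = 1, for x != 0."""
    if all(xi == 0.0 for xi in x):
        raise ValueError("the origin does not lie on any homogeneous ray through S")

    def norm_at(log_lam):
        # Euclidean norm of L_r(1 / lam) x, strictly decreasing in log_lam.
        return math.sqrt(sum((xi * math.exp(-ri * log_lam)) ** 2 for ri, xi in zip(r, x)))

    lo, hi = -1.0, 1.0
    while norm_at(lo) < 1.0:  # move lo left until the norm is at least 1
        lo *= 2.0
    while norm_at(hi) > 1.0:  # move hi right until the norm is at most 1
        hi *= 2.0
    for _ in range(iters):    # bisection on log(lam)
        mid = 0.5 * (lo + hi)
        if norm_at(mid) > 1.0:
            lo = mid
        else:
            hi = mid
    lam = math.exp(0.5 * (lo + hi))
    z = [xi * lam ** (-ri) for ri, xi in zip(r, x)]
    return lam, z


lam, z = to_sphere([1.0, 2.0], [0.5, -3.0])
print(lam, z, math.hypot(*z))  # the last value is 1 up to round-off
```

With the standard dilation ($r_i = 1$), this reduces to $\lambda = |x|$ and $z = x/|x|$. The bisection is only needed for non-uniform weights.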

We initialize the modified version of VI with where is homogeneous of degree with respect to dilation pair , i.e., for any and any , . We can simply take for instance. At iteration , we generate a lower and an upper bound of in (22), which are respectively denoted and , like in relaxed dynamic programming [12]. In particular, and, for any and ,

| (24) |

and

| (25) |

In both (24) and (25), VI equation (22) is only solved for in . The values of and when do not require solving (22): these are instead derived through the second equation of (24) and (25), respectively. Note that the two equations in (24) and (25) match when as .
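In the simplest setting, obtaining values off $S$ without solving (22) amounts to a homogeneous extension of values computed on $S$. The sketch below makes three assumptions: the standard dilation ($r_i = 1$), $S$ equal to the unit Euclidean sphere, and an extension of the form $V(\lambda z) = \lambda^d V(z)$ for $z \in S$. This mirrors the scaling of Theorem 1 in the case where the value function is proportional along rays. It is not a reproduction of the second lines of (24) and (25), whose exact form is not legible here. `extend_from_sphere` is a hypothetical helper.

```python
import math


def extend_from_sphere(v_on_sphere, degree):
    """Hypothetical helper: extend a function known on the unit sphere to R^n,
    assuming V(lam * z) = lam**degree * V(z) for |z| = 1 and lam > 0."""
    def v(x):
        lam = math.sqrt(sum(xi * xi for xi in x))  # x = lam * z with |z| = 1
        if lam == 0.0:
            return 0.0  # value at the origin, assuming a positive degree
        z = [xi / lam for xi in x]
        return lam ** degree * v_on_sphere(z)
    return v


def v_sphere(z):
    # Stand-in for values that would be computed on S only.
    return z[0] ** 2 + 2.0 * z[1] ** 2


v = extend_from_sphere(v_sphere, degree=2.0)
print(v([3.0, 4.0]))  # 25 * (0.36 + 2 * 0.64) = 41 up to round-off
```

Each evaluation off $S$ costs one projection onto $S$ and one lookup. No minimization is performed outside $S$.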

Remark 2

Compared to classical VI (22), we only have to solve the first line of (24) and (25) on the given set , e.g., on an -sphere centered at the origin. Moreover, an implementation of (22) on a forward-invariant compact set only provides value functions for states in that set. In contrast, the approximation scheme (24) and (25) provides values on the whole state space , in view of the second line of each equation. However, executing the minimization exactly for all states in is still difficult in general; we will study the effect of the induced approximation errors in future work.

IV-C Guarantees

The next proposition ensures that and are indeed lower and upper bounds of on .

Proposition 1

For any and any ,

| (26) |

where is defined by iteration (22) and is initialized with . In addition, when or , for any .

Proof: In view of (24) and (25), . Since SA1-SA2 hold, . Hence, we only need to prove that (26) holds for any , which is equivalent to proving that, for any and ,

| (27) |

in view of the property of in (23). We proceed by induction to prove (27).

. Property (27) holds as .

. We assume that (27) holds for and we aim at proving that this is also satisfied for . Let and . When , in view of (24),

| (28) |

By assumption , thus, in view of (22),

| (29) |

When , from (22),

| (30) | ||||

We derive from SA1-SA2 and the fact that ,

| (31) |

Since by assumption,

| (32) |

We have from (24) that for any , hence

| (33) |

Since and in view of (24),

| (34) |

The proof that follows similar lines as above. Consequently, (27) holds for . The proof by induction is complete: (26) is guaranteed.

When or , for any . Hence, since , we derive from (24) and (25) that for any and . Consequently, for any and in view of (26).

Proposition 1 ensures that the exact value function given by VI at any iteration , i.e., in (22) is lower and upper-bounded by and as defined in (24) and (25), respectively. We can then exploit these bounds to construct a control law by taking, at any iteration and for any ,

| (35) |

where is any function such that . Compared to relaxed dynamic programming [12], where lower and upper bounds on are used to derive near-optimal inputs, and in (24) and (25) do not require solving minimization problems on the whole state space, but only on , which is favourable for practical implementation. Moreover, when or as in Corollary 1, we only need to solve (22) on at each iteration to deduce the value of on the whole state space , in view of the last part of Proposition 1. We investigate numerically the tightness of the bounds of the proposed scheme in Section VI.
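We read (35) as $u \in \arg\min_u \{\ell(x,u) + \hat V(f(x,u))\}$, with $\hat V$ any function lying between the lower and upper bounds; this reading is an assumption, since the displayed equation is not legible. Under it, the control law only needs evaluations of the bounds, which the scheme provides on the whole state space. The sketch below minimizes over a finite input set and takes the midpoint of the two bounds as $\hat V$. The scalar system and both bounds are hypothetical.

```python
def greedy_input(x, inputs, f, stage_cost, v_hat):
    """Return an input minimizing stage_cost(x, u) + v_hat(f(x, u)) over a finite set."""
    return min(inputs, key=lambda u: stage_cost(x, u) + v_hat(f(x, u)))


# Hypothetical scalar example (not from the paper).
def f(x, u):
    return 0.9 * x + u


def stage_cost(x, u):
    return x * x + u * u


def lower(x):  # hypothetical lower bound on the VI iterate
    return 1.0 * x * x


def upper(x):  # hypothetical upper bound on the VI iterate
    return 1.5 * x * x


def v_hat(x):  # any function between the bounds; here the midpoint
    return 0.5 * (lower(x) + upper(x))


US = [-1.0 + 0.1 * j for j in range(21)]  # quantized inputs in [-1, 1]
print(greedy_input(1.0, US, f, stage_cost, v_hat))  # -0.5, the minimizer of u^2 + 1.25 (0.9 + u)^2
```

Choosing the midpoint is arbitrary. Any function between the two bounds fits the same template, and the bounds themselves are what the scheme guarantees.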

V Ensuring the standing assumptions

In this section, we provide examples of vector fields in (1) and stage costs that verify SA1-SA2. SA3, on the other hand, can be analysed using the results in [10], as done in Section VI.

V-A Standing Assumption 1

The next result may be used to ensure the satisfaction of SA1.

Proposition 2

Suppose that there exist , , scalar fields that are homogeneous of degree with respect to a dilation pair and such that, for any and ,

| (36) |

For any , the vector field with

| (37) |

and , satisfies SA1, in particular it is homogeneous of degree with respect to the dilation pair .

Proof: Let and . From the homogeneity property of for any ,

| (38) |

On the other hand, . Hence, is homogeneous of degree with respect to dilation pair , which concludes the proof.

Proposition 2 implies that when the components of , namely the ’s, can be written as sums of (scalar) homogeneous functions with respect to the same dilation pair, SA1 can always be ensured by adding a variable , whose dynamics is . By initializing at , we note that for any and we have . The idea of adding extra dynamics to ensure the desired homogeneity property is common in the literature on homogeneous systems, see for instance [1], where a different homogeneity property is studied for polynomial vector fields. Notice that there is no loss of generality in considering the same integer for all components of in (36), as we can always add zero-valued functions if needed.

Proposition 2 applies to polynomial vector fields as a particular case. Indeed, suppose that is a polynomial vector field of degree ; then can be written as in (36) with , where the are monomials of multiplied by a constant. Proposition 2 also covers the case where is a rational function of the components of , using the same technique as in [1].
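The sketch below shows one way to carry out this homogenization for polynomial components. It assumes our reading of SA1, namely $f(\Lambda_r(\lambda)x,\Lambda_q(\lambda)u)=\Lambda_r(\lambda^{1+\kappa})f(x,u)$. Each monomial of weighted degree $\deg$ in component $i$ is multiplied by $w^{c}$ with $c = ((1+\kappa)r_i - \deg)/r_w$, so that every term has weighted degree $(1+\kappa)r_i$. The extra state is propagated as $w^+ = w^{1+\kappa}$, which keeps $w = 1$ when $w_0 = 1$. The exact form of (37) and the dynamics of the extra variable are not reproduced here: both the exponent rule and the $w$-dynamics are assumptions, and `homogenize` is a hypothetical helper.

```python
import math


def homogenize(components, weights, kappa, r_w=1.0):
    """components[i] lists monomials (coef, exps) with f_i(v) = sum coef * prod(v_k**e_k),
    v = (x, u), weights = (r_1, ..., r_n, q_1, ..., q_m).  Returns F(v, w), defined
    for w > 0, in which every term of component i has weighted degree (1 + kappa) * r_i,
    together with the assumed extra dynamics w+ = w**(1 + kappa)."""
    def F(v, w):
        out = []
        for i, monomials in enumerate(components):
            total = 0.0
            for coef, exps in monomials:
                deg = sum(e * wk for e, wk in zip(exps, weights))
                c = ((1 + kappa) * weights[i] - deg) / r_w  # power of w for this term
                total += coef * w ** c * math.prod(vk ** e for vk, e in zip(v, exps))
            out.append(total)
        return out, w ** (1 + kappa)
    return F


# Hypothetical example: v = (x1, x2, u), f1 = x1 + 0.1 x2, f2 = x2 - 0.1 x1^2 x2 + 0.1 u.
components = [
    [(1.0, (1, 0, 0)), (0.1, (0, 1, 0))],
    [(1.0, (0, 1, 0)), (-0.1, (2, 1, 0)), (0.1, (0, 0, 1))],
]
F = homogenize(components, weights=(1.0, 1.0, 1.0), kappa=2.0)
print(F([0.5, -0.2, 0.3], 1.0))  # with w = 1 this is f itself, and w stays at 1
```

With $w = 1$, every factor $w^c$ equals 1, so the original dynamics are recovered exactly. The dilation of $w$ is what makes the extended map homogeneous.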

Proposition 2 reduces the homogeneity analysis of to those of the components of , which may be derived from the next simple though useful lemma. Its proof directly follows from the homogeneity properties of the considered functions and is therefore omitted.

Lemma 3

Consider homogeneous scalar functions of degree and respectively, then

- (i) for any , is homogeneous of degree ;
- (ii) is homogeneous of degree ;
- (iii) is homogeneous of degree ;
- (iv) is homogeneous of degree .

Examples of functions and as in Lemma 3 include with , with , ReLU functions and with , to name a few. In view of Lemma 3, any vector field that involves compositions, products or linear combinations of such elementary homogeneous functions can be suitably modified using the variable as in Proposition 2 to ensure that SA1 holds.
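Homogeneity degrees of such elementary functions can be sanity-checked numerically before assembling a vector field. The sketch below tests the identity $h(\Lambda_r(\lambda)x) = \lambda^{d}h(x)$ on a few sample points. It covers a ReLU, a power of an absolute value and their product, under a hypothetical weighted dilation with $r = (1, 2)$. It is a numerical check, not a proof; the name `is_homogeneous` and the sample points are our own choices.

```python
import math


def is_homogeneous(h, r, degree, samples, lams=(0.5, 2.0, 3.0), tol=1e-9):
    """Numerically check h(L_r(lam) x) == lam**degree * h(x) on sample points."""
    for x in samples:
        for lam in lams:
            scaled = [lam ** ri * xi for ri, xi in zip(r, x)]
            if not math.isclose(h(scaled), lam ** degree * h(x), rel_tol=tol, abs_tol=tol):
                return False
    return True


r = (1.0, 2.0)  # hypothetical weighted dilation
samples = [[0.3, -1.2], [-0.7, 0.4], [1.1, 0.9]]


def relu(x):     # degree 1 (x1 has weight 1)
    return max(0.0, x[0])


def power(x):    # degree 1.5 * 2 = 3 (x2 has weight 2)
    return abs(x[1]) ** 1.5


def product(x):  # degrees add: 1 + 3 = 4
    return relu(x) * power(x)


def mixed(x):    # terms of degrees 1 and 2: not homogeneous
    return x[0] + x[1]


print(is_homogeneous(relu, r, 1.0, samples), is_homogeneous(power, r, 3.0, samples),
      is_homogeneous(product, r, 4.0, samples), is_homogeneous(mixed, r, 1.0, samples))
# True True True False
```

The last case shows what the extra variable of Proposition 2 is for: a sum of terms with different degrees fails the check until each term is rebalanced by a suitable power of that variable.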

V-B Standing Assumption 2

First, we have the next lemma, which shows that the linear combination of homogeneous stage (terminal) costs of different degrees but with respect to the same dilation pair can be modified by adding an extra dummy variable as in Proposition 2 to ensure the satisfaction of SA2.

Lemma 4

Let and (respectively, ) be homogeneous with respect to a dilation pair (respectively, ) of degree . Let . Then, for any , is homogeneous of degree with respect to the dilation pair (respectively, is homogeneous of degree with respect to ). When , is homogeneous of degree with respect to the dilation pair (respectively, is homogeneous of degree with respect to ).

Proof: Let and . In view of the homogeneity properties of ,

| (39) |

We have proved that is homogeneous of degree with respect to dilation pair . When , we directly derive the desired result from the above development. Finally, the case of similarly follows.

The next lemma provides a condition for the dilation maps from SA1 under which SA2 is verified with quadratic(-like) costs.

Lemma 5

Proof: Let and .

Item (i): From the definitions of , and , we have

| (40) |

from which we derive that is homogeneous of degree with respect to dilation pair .

Item (ii): From the definitions of , and , we have

| (41) |

from which we derive that is homogeneous of degree with respect to dilation pair .

Item (iii): Let , , , , and . Since, for any and , in view of the definition of , we derive

| (42) |

Hence is homogeneous of degree with respect to dilation pair .

Item (i) of Lemma 5 shows that quadratic stage costs satisfy SA2 when SA1 holds with . When the dilation pair does not satisfy this property, the stage cost can always be modified as in item (ii) of Lemma 5 to enforce SA2. This change of cost was proposed in the paragraph before [8, Proposition 1], except that the function is used here instead of the absolute value for generality. Regarding the terminal cost , the results of Lemma 5 apply by setting .
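For a non-standard dilation, a quadratic cost in general fails SA2, which is why a modified cost is needed. The sketch below uses one common construction, $\sum_i |x_i|^{c/r_i}$, which is homogeneous of degree $c$; we do not claim it is the exact cost of item (ii) of Lemma 5. It compares this construction with a quadratic cost for the hypothetical weights $r = (1, 2)$.

```python
def dilate(r, lam, x):
    return [lam ** ri * xi for ri, xi in zip(r, x)]


def quadratic_cost(x):
    return sum(xi * xi for xi in x)


def weighted_cost(x, r, c=2.0):
    """sum_i |x_i|**(c / r_i): homogeneous of degree c w.r.t. the dilation with weights r."""
    return sum(abs(xi) ** (c / ri) for xi, ri in zip(x, r))


r, x, lam = (1.0, 2.0), [0.5, -1.5], 2.0
print(weighted_cost(dilate(r, lam, x), r), lam ** 2 * weighted_cost(x, r))  # 7.0 7.0
print(quadratic_cost(dilate(r, lam, x)), lam ** 2 * quadratic_cost(x))      # 37.0 10.0
```

The exponent $c/r_i$ compensates for the weight of each coordinate, so that every term scales as $\lambda^c$. With equal weights, this construction reduces to a quadratic cost when $c = 2r_i$.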

We have seen at the end of Section III that having a stage cost and a terminal cost of homogeneity degree zero can be exploited to simplify the computation of the optimal value function and of a sequence of optimal inputs for any . However, the examples of stage and terminal costs given above are not homogeneous of degree zero. The next lemma provides relevant examples ensuring homogeneity of degree zero, but before that we need to recall the notion of homogeneous sets.

Definition 1

Let . A set is homogeneous with respect to a dilation when, for any , if and only if .

We write for short that a set is homogeneous when there exists a dilation with respect to which it is homogeneous. Examples of homogeneous sets include and , where and are the standard unit vectors of , for any dilation map. We are now ready to present examples of functions that can be used to define stage and terminal costs ensuring SA2 with homogeneity degree zero.

Lemma 6

Let with and be a homogeneous set with respect to . For any , is homogeneous of degree with respect to dilation .

Proof: Let , and , when , which is equivalent to by homogeneity of , and when for the same reason. Hence , which corresponds to the desired result.

In view of Lemma 6 and the fact that a linear combination of homogeneous functions of degree zero is homogeneous of degree zero by Lemma 4, important optimal control problems correspond to stage and terminal costs of degree zero, including

- minimum-time control to a homogeneous set , in which case , and thus .
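Degree-zero costs make the cost invariant along homogeneous rays. The sketch below encodes minimum-time control with a 0/1 stage cost (1 outside the target, 0 inside), which we assume is the intended cost. The target $\{x : x_1 \ge 0,\ x_2 \ge 0\}$ is homogeneous for any weighted dilation. It uses a hypothetical example system and assumes that SA1 reads $f(\Lambda_r(\lambda)x,\Lambda_q(\lambda)u)=\Lambda_r(\lambda^{1+\kappa})f(x,u)$. With inputs scaled accordingly, the cost from the dilated initial state is the same.

```python
# Hypothetical example (not from the paper): r = (1, 2), q = 2, kappa = 1.
R, Q, KAPPA = (1.0, 2.0), 2.0, 1.0


def f(x, u):
    return [x[0] ** 2 + u, x[1] ** 2 + x[0] ** 4]


def in_target(x):
    # {x1 >= 0, x2 >= 0} is invariant under any positive weighted dilation.
    return x[0] >= 0.0 and x[1] >= 0.0


def time_cost(x0, inputs):
    """Sum of 0/1 stage costs (1 outside the target) plus a 0/1 terminal cost."""
    x, cost = list(x0), 0
    for u in inputs:
        cost += 0 if in_target(x) else 1
        x = f(x, u)
    return cost + (0 if in_target(x) else 1)


x0, us, lam = [0.6, -0.3], [-0.5, -0.1, 0.2], 1.7
base = time_cost(x0, us)
moved = time_cost([lam ** R[0] * x0[0], lam ** R[1] * x0[1]],
                  [lam ** (Q * (1 + KAPPA) ** k) * u for k, u in enumerate(us)])
print(base, moved)  # 3 3: the target is reached after three steps in both cases
```

Because each state along the scaled solution is a positive dilation of the corresponding original state, target membership, and hence every 0/1 stage cost, is unchanged.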

VI Numerical case study

We illustrate the results of Section IV. In particular, we study how conservative the bounds in Proposition 1 are.

VI-A System and cost

We consider the optimal control of a van der Pol oscillator discretized with the forward Euler method, where are the states, is the control input, is a parameter and is the sampling period used for discretization.

To ensure the satisfaction of SA1, we add a variable so that the extended system is of the form of (1) with and . As in Proposition 2, by initializing , the -component of the solutions to (1) with defined above matches the solution to the original system initialized at the same point and, with a careful choice of the stage cost in the sequel, solves the same optimal control problem. The vector field satisfies SA1 with , and . Indeed, for any , and , . We fix in the sequel.
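Below is a minimal sketch of this construction. It assumes the standard controlled van der Pol model $\dot x_1 = x_2$, $\dot x_2 = \mu(1-x_1^2)x_2 - x_1 + u$, discretized with forward Euler. It also makes a hypothetical choice of weights: 1 for $x_1$, $x_2$ and $w$, $q = 1$ and $\kappa = 2$, so that, under our reading of SA1, every term must have total degree $1+\kappa = 3$. The values $\mu = 1$ and $T = 0.05$ are placeholders, since the parameters used in the paper are not legible here. The extra state is propagated as $w^+ = w^3$, which keeps $w = 1$; this choice is also an assumption.

```python
MU, T = 1.0, 0.05  # placeholder parameter and sampling period


def vdp_euler(x, u):
    """Forward-Euler van der Pol step (original, non-homogeneous model)."""
    x1, x2 = x
    return [x1 + T * x2, x2 + T * (MU * (1.0 - x1 ** 2) * x2 - x1 + u)]


def vdp_homogenized(x, u, w):
    """Extended step on (x1, x2, w): every term has total degree 3 in (x1, x2, u, w)."""
    x1, x2 = x
    f1 = w ** 2 * x1 + T * w ** 2 * x2
    f2 = w ** 2 * x2 + T * (MU * w ** 2 * x2 - MU * x1 ** 2 * x2 - w ** 2 * x1 + w ** 2 * u)
    return [f1, f2], w ** 3


# With w0 = 1 the extended model reproduces the original trajectory.
x, xe, w = [1.0, -0.5], [1.0, -0.5], 1.0
for u in [0.2, -0.1, 0.0, 0.3]:
    x = vdp_euler(x, u)
    xe, w = vdp_homogenized(xe, u, w)
print(x, xe, w)  # the first two lists agree up to round-off and w stays 1.0
```

Only the cubic term $\mu x_1^2 x_2$ already has degree 3; all other terms receive a factor $w^2$, which is exactly where the extra variable restores homogeneity.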

We choose a quadratic stage cost. Hence, for any and , let with and . By item (i) of Lemma 5, is homogeneous of degree . Hence, SA2 is verified with . Note that no cost is associated with the component in either the stage or the terminal cost, as we want the cost incurred along solutions of to coincide with that of the original system when . We also need to check that SA3 holds. We apply [10, Theorem 2] for this purpose. Indeed, items a) to c) of [10, Theorem 1] hold. Furthermore, item d) of [10, Theorem 2] is satisfied as ; hence implies for any . The last condition to check is item e) of [10, Theorem 2], i.e., that for any initial condition there is a sequence of inputs which makes the cost finite. For any , we can construct a linearizing feedback that brings the state to the set in at most two steps, while the trivial input has cost for any . Therefore an infinite sequence with finite cost exists, and by invoking [10, Theorem 2] we conclude that exists and SA3 holds.

VI-B Numerical implementation

We first describe the details of the implementation of the new algorithm in Section IV-B. We take the compact set , which verifies (23).

Because we do not know how to solve the minimization steps in (24) and (25) analytically in general, we proceed numerically. We rewrite the problem in spherical coordinates and consider a finite-difference approximation with points equally distributed in , representing the azimuth and elevation angles of the upper hemisphere of , and equally distributed quantized inputs in .

To evaluate the accuracy of the proposed algorithm, we need to compute given by standard VI with . For this purpose, we consider a finite-difference approximation with points equally distributed in for the state space, and equally distributed quantized inputs in centered at . Note that classical VI only produces the value function on , contrary to and , which are defined for any . Moreover, this issue is exacerbated at points where for all . For standard (approximate) VI schemes to be well defined, a somewhat arbitrary yet consequential choice has to be made to assign values to when .

VI-C Results

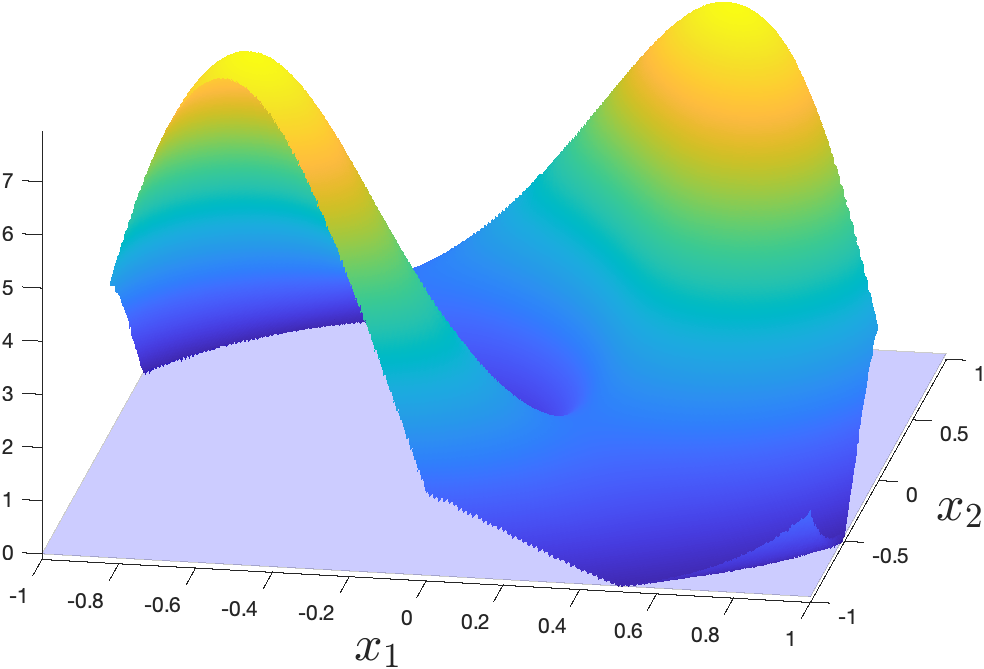

We illustrate the difference between and for in Figure 1; similarly, Figure 2 shows the difference between and . As expected, and respectively underestimate and overestimate . We note from Figure 1 that the error is small near the intersection of and , which is expected as it corresponds to points where the minimization in (24) and (25) coincides with (22). We thus observe a circle of radius where the error is small, and the error grows for points outside this circle. The error near the origin is also small even though the origin does not belong to , which is explained by the fact that , and vanish at the origin. Similar observations can be made for the upper bound in Figure 2, even when varying the radius of the compact set , that is, by taking for different values of . Hence, by exploiting homogeneity, we obtain a VI scheme that is computed only for points in the compact set but has analytical guarantees for any state in , and not just for states in (the forward-invariant subset of) as in the classical VI implementation.

VII Concluding remarks

In this work, we have first adapted the homogeneity notion for discrete-time systems proposed in [20] to systems with inputs. We have then provided several scaling properties of the system, the cost and the optimal value function. The latter is particularly important as it implies that we only need to solve the original optimal control problem on a given compact manifold of dimension strictly smaller than that of the state space to derive (near-)optimal inputs for any state. We have then exploited this observation to derive a new approximation scheme for VI.

Future work will include further investigation of the error of approximation when solving VI on the set in Section IV, as well as other ways to construct upper and lower bounds of the function generated by VI.

References

- [1] J. Baillieul. The geometry of homogeneous polynomial dynamical systems. Nonlinear Anal.-Theor., 4(5):879–900, 1980.

- [2] D. P. Bertsekas. Dynamic Programming and Optimal Control, volume 2. Athena Scientific, Belmont, U.S.A., 4th edition, 2012.

- [3] D.P. Bertsekas. Value and policy iterations in optimal control and adaptive dynamic programming. IEEE Transactions on Neural Networks and Learning Systems, 28(3):500–509, 2015.

- [4] D.P. Bertsekas. Abstract Dynamic Programming. Athena Scientific, Belmont, U.S.A., 2nd edition, 2018.

- [5] S. P. Bhat and D. S. Bernstein. Geometric homogeneity with applications to finite-time stability. Mathematics of Control, Signals and Systems, 17(2):101–127, 2005.

- [6] L. Buşoniu, D. Ernst, B. De Schutter, and R. Babuška. Approximate dynamic programming with a fuzzy parameterization. Automatica, 46(5):804–814, 2010.

- [7] J.-M. Coron, L. Grüne, and K. Worthmann. Model predictive control, cost controllability, and homogeneity. SIAM Journal on Control and Optimization, 58(5):2979–2996, 2020.

- [8] G. Grimm, M.J. Messina, S.E. Tuna, and A.R. Teel. Model predictive control: for want of a local control Lyapunov function, all is not lost. IEEE Transactions on Automatic Control, 50(5):546–558, 2005.

- [9] R. E. Kalman. Contributions to the theory of optimal control. Bol. Soc. Mat. Mexicana, 5(2):102–119, 1960.

- [10] S.S. Keerthi and E.G. Gilbert. An existence theorem for discrete-time infinite-horizon optimal control problems. IEEE Transactions on Automatic Control, 30(9):907–909, 1985.

- [11] D. Liberzon. Calculus of variations and optimal control theory: a concise introduction. Princeton University Press, 2011.

- [12] B. Lincoln and A. Rantzer. Relaxing dynamic programming. IEEE Transactions on Automatic Control, 51(8):1249–1260, 2006.

- [13] K. Mino. On the homogeneity of value function of the optimal control problem. Economics Letters, 11(1-2):149–154, 1983.

- [14] M. Nagahara, J. Østergaard, and D.E. Quevedo. Discrete-time hands-off control by sparse optimization. EURASIP Journal on Advances in Signal Processing, 2016(1):1–8, 2016.

- [15] M. Nagahara, D.E. Quevedo, and D. Nešić. Maximum hands-off control: a paradigm of control effort minimization. IEEE Transactions on Automatic Control, 61(3):735–747, 2015.

- [16] N. Nakamura, H. Nakamura, Y. Yamashita, and H. Nishitani. Homogeneous stabilization for input affine homogeneous systems. IEEE Transactions on Automatic Control, 54(9):2271–2275, 2009.

- [17] A. Polyakov. Generalized homogeneity in systems and control. Springer, 2020.

- [18] M. Rinehart, M. Dahleh, and I. Kolmanovsky. Value iteration for (switched) homogeneous systems. IEEE Transactions on Automatic Control, 54(6):1290–1294, 2009.

- [19] T. Sanchez, D. Efimov, and A. Polyakov. Discrete-time homogeneity: Robustness and approximation. Automatica, 122:109275, 2020.

- [20] T. Sanchez, D. Efimov, A. Polyakov, J.A. Moreno, and W. Perruquetti. A homogeneity property of discrete-time systems: Stability and convergence rates. Int. J. Robust Nonlin., 29(8):2406–2421, 2019.

- [21] E. Trélat. Optimal control and applications to aerospace: some results and challenges. J. Optimiz. Theory App., 154(3):713–758, 2012.