Exploiting Global Contextual Information for Document-level Named Entity Recognition

Abstract

Most existing named entity recognition (NER) approaches are based on sequence labeling models, which focus on capturing the local context dependencies. However, the way of taking one sentence as input prevents the modeling of non-sequential global context, which is useful especially when local context information is limited or ambiguous. To this end, we propose a model called Global Context enhanced Document-level NER (GCDoc) to leverage global contextual information from two levels, i.e., both word and sentence. At word-level, a document graph is constructed to model a wider range of dependencies between words, then obtain an enriched contextual representation for each word via graph neural networks (GNN). To avoid the interference of noise information, we further propose two strategies. First we apply the epistemic uncertainty theory to find out tokens whose representations are less reliable, thereby helping prune the document graph. Then a selective auxiliary classifier is proposed to effectively learn the weight of edges in document graph and reduce the importance of noisy neighbour nodes. At sentence-level, for appropriately modeling wider context beyond single sentence, we employ a cross-sentence module which encodes adjacent sentences and fuses it with the current sentence representation via attention and gating mechanisms. Extensive experiments on two benchmark NER datasets (CoNLL 2003 and Ontonotes 5.0 English dataset) demonstrate the effectiveness of our proposed model. Our model reaches score of 92.220.02 (93.400.02 with BERT) on CoNLL 2003 dataset and 88.320.04 (90.490.09 with BERT) on Ontonotes 5.0 dataset, achieving new state-of-the-art performance.

Index Terms:

Named entity recognition, global Contextual Information, natural language processing.I Introduction

Named Entity Recognition (a.k.a., entity chunking), which aims to identify and classify text spans that mention named entities into the pre-defined categories, e.g., persons, organizations and locations, etc., is one of the fundamental sub-tasks in information extraction (IE). With advances in deep learning, much research effort [13, 17, 23] has been dedicated to enhancing NER systems by utilizing neural networks (NNs) to automatically extract features, yielding state-of-the-art performance.

Most existing approaches address NER by sequence labeling models [24, 3], which process each sentence independently. However, in many scenarios, entities need to be extracted from a document, such as the financial field. The way of taking single sentence as input prevents the utilization of global information from the entire document. Intead of sentence-level NER, we focus on studying the document-level NER problem.

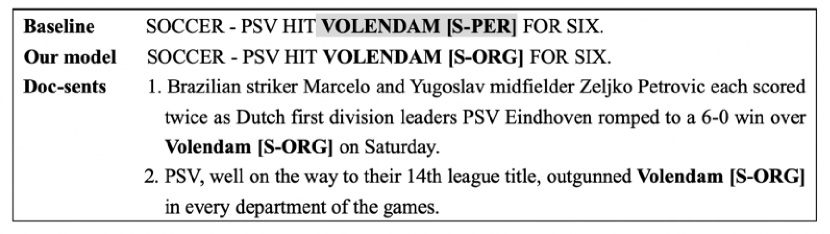

Named entity mentions appearing repeatedly in a document may be naturally topically relevant to the current entity and thus can provide external context for entity understanding, for example, in Figure 1, the baseline model mistakenly tags “VOLENDAM” as a person (PER) instead of organization (ORG) owing to the limited local context, while some text in the two other sentences within the same document in Figure 1 such as “6-0 win over” clearly implies “VOLENDAM” to represent a team that should be tagged as ORG. Additionally, the semantic relevances are naturally distributed into the adjacent sentences. For example, in the case of Figure 1, the baseline model fails to recognize the OOV token “Rottweilers” as a Miscellaneous (MISC) entity, while the previous sentence actually has provided an obvious hint that it specifically refers to a Pet name like “Doberman”. Therefore, in this paper we propose to fully and effectively exploit the rich global contextual information for enhancing the performance of document-level NER.

However, the use of extra information will inevitably introduce noise. Since the entity category of words that repeatedly appear also can be different, and adjacent sentences might bring semantic interference. For example, in Figure 1, the first sentence provides useful information for tagging “Bucharest” as ORG in the query sentence, whereas the second sentence brings noise to some extent. In the end, proper strategies must be adopted to avoid the interference of noise information.

Indeed, there exist several attempts to utilize global information besides single sentence for NER [1, 23, 11, 4]. However, these methods still easily fail to achieve the above task due to the following facts.

First, they [1, 23, 4] don’t provide a sufficiently effective method to address the potential noise problems while introducing global information. Second, they [1, 23, 11] only utilize extra information at the word-level, but ignore the modeling at the sentence level.

In this paper, we propose GCDoc that exploit global contextual information for document-level NER from both word and sentence level perspectives. At the word level, a simple but effective document graph is constructed to model the connection between words that reoccur in document, then the contextualized representation of each word is enriched. To avoid the interference of noise information, we further propose two strategies. We first apply the epistemic uncertainty theory to find out tokens whose representations are less reliable, thereby helping prune the document graph. Then we explore a selective auxiliary classifier for effectively capturing the importance of different neighboring nodes. At the sentence level, we propose a cross-sentence module to appropriately model wider context. Three independent sentence encoders are utilized to encode the current sentence, its previous sentences as well as its next sentences with certain window size to be discussed later. The enhanced sentence representations are assigned to each token at the embedding layer. To verify the effectiveness of our proposed model, we conduct extensive experiments on two benchmark NER datasets. Experimental results suggest that our model can achieve state-of-the-art performance, demonstrating that our model truly exploits useful global contextual information.

The main contributions are as follows.

-

•

We propose a novel model named GCDoc to effectively exploit global contextual information by introducing document graph and cross-sentence module.

-

•

Extensive experiments on two benchmark NER datasets verify the effectiveness of our proposed model.

-

•

We conduct an elaborate analysis with various components in GCDoc and analyze the computation complexity to investigate our model in detail.

II Related Work

Most of conventional high performance NER systems are based on classical statistical machine learning models, such as Hidden Markov Model (HMM) [32], Conditional Random Field (CRF) [16, 25, 12], Support Vector Machine (SVM) [15] and etc. Although great success has been achieved, these methods heavily relying on handcrafted features and external domain-specific knowledge. With the rise of deep learning, many research efforts have been conducted on neural network based approaches to automatically learning feature representations for NER tasks.

LSTM-based NER. Currently, LSTM is widely adpoted as the context encoder for most NER models. Huang et al. [13] initially employ a Bi-directional LSTM (Bi-LSTM) and CRF model for NER for better capturing long-range dependency features and thus achieve excellent performances. Subsequently, the proposed architecture is widely used for various sequence labeling tasks [24, 17]. Several researches [24, 17] further extend such model with an additional LSTM/CNN layer to encode character-level representations. More recently, using contextualized representations from pretrained language models has been adopted to significantly improve performance [30, 8]. However, most of these approaches focus on capturing the local context dependencies, which prevents the modeling of global context.

Exploiting Global Context. Several researches have been carried out to utilize global information besides single sentence for NER. Qian et al. [31] model non-local context dependencies in document level and aggregates information from other sentences via convolution. Akbik et al. [1] dynamically aggregate contextualized embeddings for each unique string in previous sentences, and use a pooling operation to generate a global word representation. All these works ignore the potential noise problems while introducing global information. Luo et al. [22] adopt the attention mechanism to leverage document-level information and enforce label consistency across multiple instances of the same token. Similarly, Zhang et al. [37] propose to retrieve supporting document-level context and dynamically weight their contextual information by attention. More recently, Luo et al. [23] propose a model that adopts memory networks to memorize the word representations in training datasets. Following their work, Gui et al. [11] adopt similar memory networks to record document-level information and explicitly model the document-level label consistency between the same token sequences. Though attention operation is called in these models to compute the weight of document-level representations, it’s not clear enough which context information should be more reliable, thus the ability of these methods to filtering noise information is limited. Additionally, all the above work exploit extra information at the word-level, ignoring the modeling at the sentence level. Chen et al. [4] propose to capture interactions between sentences within the same document via a multi-head self attention network, while ignoring exploiting global information at the word level. Different from their work, we propose a more comprehensive approach to exploit global context information, which has been demonstrated more effective on NER task.

III Preliminary

In this section, we introduce the problem statement and then briefly describe a widely-adopted and well-known method for NER, i.e., Bi-LSTM-CRF, which is also a baseline in our experiment. And then we briefly present the theories of representing model uncertainty, which we apply for pruning the document graph module.

III-A Problem Formulation

Let be a sequence composed of tokens that represents a document and be its corresponding sequence of tags over . Specifically, each sentence in is denoted by , where is the length of and indicates the -th token of . Formally, given a document , the problem of document-level name entity recognition (NER) is to learn a parameterized () prediction function, i.e., from input tokens to NER labels over the entire document .

III-B Baseline: Bi-LSTM-CRF Model

In Bi-LSTM-CRF Model, an input sequence is typically encoded into a sequence of low-dimensional distributed dense vectors, in which each element is formulated as a concatenation of pre-trained and character-level word embeddings . Then, a context encoder is employed (i.e., Bi-directional LSTM) to encode the embedded into a sequence of hidden states for capturing the local context dependencies within sequence, namely,

| (1) | |||

| (2) | |||

| (3) |

where and are the hidden states incorporating past and future contexts of , respectively.

Subsequently, conditional random field (CRF [16]), which is commonly used as a tag decoder, is employed for jointly modeling and predicting the final tags based on the output (i.e., ) of the context encoder, which can be transformed to the probability of label sequence being the correct tags to the given , namely,

| (4) | |||

| (5) |

where is the score function; is the set of possible label sequences for ; and indicate the weight matrix and bias corresponding to . Then, a likelihood function is employed to maximize the negative log probability of ground-truth sentences for training,

| (6) |

where denotes the set of training instances, and indicates the corresponding tag set.

III-C Representing Model Uncertainty

Epistemic (model) uncertainty explains the uncertainty in the model parameters. In this paper, we adopt the epistemic uncertainty to find out tokens whose labels are likely to be incorrectly predicted by the model, thereby pruning the document graph to effectively prevent the further spread of noise information.

Bayesian probability theory provides mathematical tools to estimate model uncertainty, but these usually lead to high computational cost. Recent advances in variational inference have introduced new techniques into this field, which are used to obtain new approximations for Bayesian neural networks. Among these, Monte Carlo dropout [9] is one of the simplest and most effective methods, which requires little modification to the original model and is applicable to any network architecture that makes use of dropout.

Given the dataset with training inputs and their corresponding labels , the objective of Bayesian inference is estimating , i.e., the posterior distribution of the model parameters given the dataset . Then the prediction for a new input is obtained as follows:

| (7) |

Since the posterior distribution is intractable, Monte Carlo dropout adopts the variational inference approach to use , a distribution over matrices whose columns are randomly set to zero, to approximate the intractable posterior. Then the minimisation objective is the Kullback-Leibler (KL) divergence between the approximate posterior and the true posterior .

With Monte Carlo sampling strategy, the integral of Eq. 7 can be approximated as follows:

| (8) |

where and is the number of sampling. In fact, this is equivalent to performing stochastic forward passes through the network and averaging the results. In contrast to standard dropout that only works during training stage, the Monte Carlo dropouts are also activated at test time. And the predictive uncertainty can be estimated by collecting the results of stochastic forward passes through the model and summarizing the predictive variance.

IV Proposed Model

IV-A Overview

In this section, we focus on fully exploiting the document-level global contexts for enhancing the word-level representation capability of tokens, and thus propose a novel NER model named GCDoc, and then we proceed to structure the following sections with more details about each submodule of GCDoc, which is under the Bi-LSTM-CRF framework (rf. Section III-B) and shown in Figure 2.

Token Representation. Given a document composed of a set of tokens, i.e., , the embedding layer is formulated as a concatenation of three different types of embeddings for obtaining the word-level (pre-trained and character-level ) and the sentence-level (i.e., ) simultaneously,

| (9) |

Generally, the adjacent sentences may be naturally topically relevant to the current sentence within a same document, and thus we propose a novel cross-sentence contextual embedding module (bottom part in Figure 2, rf. Section IV-B) for encoding the global-level context information at sentence-level to enhance the representations of tokens within the current sentence.

Context Encoder. Next, the concatenated token embeddings (instead of ) is fed into a Bi-directional LSTM model to capture the local context dependencies within sequence, and then we obtain a sequence hidden states .

For enriching the contextual representation of each token, we develop a document graph module based on gated graph neural networks to capture the non-local dependencies between tokens across sentences, namely,

IV-B Cross-Sentence Contextual Embedding Module

Generally, the adjacent sentences may be topically relevant to the current sentence within a same document naturally, and thus we propose a novel cross-sentence contextual embedding module. To this end, in this section we propose a cross-sentence module, which treats the adjacent sentences as auxiliary context at sentence-level to enhance the representation capability of the given sentence. Similar to [26], we only take account of the cross-sentence context information within a certain range (e.g., ) before and after the current sentence, namely, we concatenate the sentences before/after the current sentence for capturing cross-sentence context information.

Without loss of generality, suppose the representations of the current sentence, the past context representation of sentences and the future context representation of sentences are denoted by , and , respectively. Furthermore, we also introduce an attention mechanism for measuring the relevance of such two auxiliary representations to the current one, and thus a weighted sum () of such two representations is calculated for the representation of the current sentence, namely,

| (11) |

where and are the learned attentions of the past and the future context information to the current sentence, which are used to control the contributions of such two context information and computed based on softmax,

| (12) | |||

| (13) |

where is a compatibility function to calculate the pair-wise similarity between two sentences, which is computed by

| (14) |

where is an activation function; , are the learnable weight matrices; is a weight vector, and denotes the bias vector.

Finally, a gating mechanism is employed to decide the amount of cross-sentence context information () that should be incorporated and fused with the initial sentence representation :

| (15) |

where represents elementwise multiplication, and is the trade-off parameter, which is calculated by

| (16) |

where are trainable weight matrices.

Remark. The enhanced sentence-level representation is then concatenated with the word-level embeddings of tokens (rf. Eq (9)) and fed into the context encoder. In particular, For each sentence, we adopt Bi-LSTM as the basic architecture for sentence embedding. Specifically, for each sentence , a sequence of hidden states are generated by Bi-LSTM model according to Eq (1)-(3), and then the sentence representation is computed by mean pooling over all hidden states , that is,

| (17) |

where is the length of the sequence.

IV-C Gated Graph Neural Networks based Context Encoder

As mentioned, an intuition is that the entity mentions appearing repeatedly in a document are more likely to be of same entity category. To this end, in this section, we develop a simple but effective graph layer based on a word-level graph, which is built over the re-occurrent tokens to capture the non-local dependencies among them.

Graph Construction. Given a document , a recurrent graph is defined as an undirected graph , where each node denotes a recurrent token, each edge is a connection of every two same case-insensitive tokens (rf. Figure 3), which is used to model the re-occurrent context information of tokens across sentences, as the local context of a token in a sentence may be ambiguous or limited.

Node Representation Learning. To learn the node representations of tokens, we adopt graph neural networks to generate the local permutation-invariant aggregation on the neighborhood of a node in a graph, and thus the non-local context information of tokens can be efficiently captured.

Specifically, for each node , the information propagation process between different nodes can be formalized as:

| (18) |

where denotes the set of neighbors of , which does not include itself; indict the weight matrices and denotes the bias vector; is the hidden state of the neighbor node .

Through Eq (18), we can easily aggregate the neighbours’ information to enrich the context representation of node via the constrained edges (). Inspired by the gated graph neural networks, the enhanced representation is combined with its initial embedding () via a gating mechanism, which is employed for deciding the amount of aggregated context information to be incorporated, namely,

| (19) | |||

| (20) | |||

| (21) | |||

| (22) |

where are learnable weight matrices, denotes the logistic sigmoid function and represents elementwise multiplication. and are update gate and reset gate, respectively. The output of Eq (19) is the final output of our document graph module (rf. Eq (10)), which will be fed into the tag decoder for generating the tag sequence.

Furthermore, directly aggregating the contextual information may contain noise, since not all re-occurrent tokens can provide reliable context information for disambiguating others. As such, we propose two strategies to avoid introducing noise information in the document graph module. First we apply the epistemic uncertainty to find out tokens whose labels are likely to be incorrectly predicted, thereby pruning the document graph. Then we adopt a selective auxiliary classifier for distinguishing the entity categories of two different nodes, thus guiding the process of calculating edge weights.

(1) Document Graph Pruning. We suppose that tokens whose labels are more likely to be correctly predicted by the model will have more reliable contextual representations, and will be more helpful in the graph module for disambiguating other tokens. In this paper, we use Monte Carlo dropout to estimate the model uncertainty for indicating whether model predictions are likely to be correct. Specifically, we obtain the uncertainty value of each token through an independent sub-module. Then we set a threshold , and put tokens whose uncertainty value is greater than the threshold into a set . Since the context information of these tokens should be less reliable, we prune the graph module by ignoring the impact of these tokens on their neighboring nodes. Thus Eq 18 is rewritten as follows,

| (23) |

where denotes the the number of neighbor nodes of node that actually participating in the operation.

As for the model uncertainty prediction, we apply the Monte Carlo dropout and adopt a independent sub-module that predicts labels of each token by a simple architecture composed of a Bi-LSTM and dense layer. The forward pass of the Bi-LSTM is run times with the same inputs by using the approximated posterior in Eq 8. Then given the output representation of the Bi-LSTM layer, the probability distribution of the -th word’s label can be obtained by a fully connected layer and a final softmax function,

| (24) |

where , , and is the number of all possible labels. Then, the uncertainty value of the token can be represented by the uncertainty of its corresponding probability vector , which can be summarized using the entropy of the probability vector:

| (25) |

(2) Edge Weights Calculation. Since not all of repeated entity mentions in document belong to the same categories, which is shown in Figure 3, we propose a selective auxiliary classifier for distinguishing the entity categories of two different nodes, which is defined as a binary classifier that takes the representation of two nodes as input and outputs whether it should be the same entity category, which is denoted by the relation score for any edge and is computed by

| (26) |

where is a learnable weight matrix, represents the bias and denotes the logistic sigmoid function.

In specific, the loss function of the selective auxiliary classifier is defined as:

| (27) |

where is the ground truth label whose value is assigned when nodes and belong to the same entity category, and for otherwise. As a result, Eq (23) can be transformed as follows via the computed relation scores, namely,

| (28) |

After obtaining the enhanced representation that encodes the neighbours’ information, Eq (19)-(22) are applied to generate the final output of our document graph module .

The employed auxiliary classifier can be regarded as a special regularization term, which explicitly injects the supervision information into the process of calculating edge weights and helps the model select useful information in the process of aggregating neighbors.

V Experiments

V-A Data Sets

We use two benchmark NER datasets for evaluation, i.e., CoNLL 2003 dataset (CoNLL03) and OntoNotes 5.0 English datasets (OntoNotes 5.0). The details about corporas are shown in Table I.

-

•

CoNLL03 [33] is a collection of news wire articles from the Reuters corpus, which includes four different types of named entities: persons (PER), locations (LOC), organizations (ORG), and miscellaneous (MISC). We use the standard dataset split [7] and follow BIOES tagging scheme (B, I, E represent the beginning, middle and end of an entity, S means a single word entity and O means non-entity).

-

•

OntoNotes 5.0 is much larger than CoNLL 2003 dataset, and consists of text from a wide variety of sources (broadcast conversation, newswire, magazine and Web text, etc..) It is tagged with eighteen entity types, such as persons (PERSON), organizations (ORG), geopolitical entity (GPE), and law (LAW), etc.. Following previous works [5], we adopt the portion of the dataset with gold-standard named entity annotations, in which the New Testaments portion is excluded.

V-B Network Training

In this section, we show the details of network training. Related hyper-parameter settings are presented including initialization, optimization, and network structure. We also report the training time of our model on the two datasets.

Initialization. We initialize word embedding with -dimensional GloVe [28] and randomly initialize -dimensional character embedding. We adopt fine-tuning strategy and modify initial word embedding during training. All weight matrices in our model are initialized by Glorot Initialization [10], and the bias parameters are initialized with .

Optimization. We train the model parameters by the mini-batch stochastic gradient descent (SGD) with batch size and learning rate . The regularization parameter is -. We adopt the dropout strategy to overcome the over-fitting on the input and the output of Bi-LSTM with a fixed ratio of . We also use a gradient clipping [27] to avoid gradient explosion problem. The threshold of the clip norm is set to . Early stopping [18] is applied for training models according to their performances on development sets.

Network Structure. We use Bi-LSTM to learn character-level representation of words, which will form the distributed representation for input together with the pre-trained word embedding and sentence-level representation. The size of hidden state of character and word-level Bi-LSTM are set to and , respectively. And we fix the depth of these layers as 1 in our neural architecture. The hidden dim of sentence-level representation is set to and the window size in cross-sentence module is . The threshold in uncertainty prediction sub-module is set to . The hyper-parameter in Eq 29 is set to . In the auxiliary experiments, the output hidden states of BERT [8] are taken as additional contextualized embeddings, which are combined with other three types of word representations (rf. Eq 9). We still adopts Bi-LSTM to encode the context of words instead of using BERT as encoder and fine-tune it.

Training Time. We implement our model based on the PyTorch library and the training process has been conducted on one GeForce GTX 1080 Ti GPU. The model training completes in about 1.6 hours on the CoNLL03 dataset and about 6.7 hours on the OntoNotes 5.0 dataset.

| Corpus | Type | Train | Dev | Test |

|---|---|---|---|---|

| CoNLL03 | Sentences | 14,987 | 3,466 | 3,684 |

| Tokens | 204,567 | 51,578 | 46,666 | |

| OntoNotes 5.0 | Sentences | 59,924 | 8,528 | 8,262 |

| Tokens | 1,088,503 | 147,724 | 152,728 |

V-C Evaluation Results and Analysis

| Category | Index & Model | -score | |

|---|---|---|---|

| Type | Value() | ||

| Sentence-level | Lample et al., 2016 [17] | reported | 90.94 |

| Ma and Hovy, 2016 [24] | reported | 91.21 | |

| Yang et al., 2017 [35] | reported | 91.26 | |

| Liu et al., 2018 [20] | avg | 91.240.12 | |

| max | 91.35 | ||

| Ye and Ling, 2018 [36] | reported | 91.38 | |

| Document-level | Zhang et al., 2018 [37] | avg | 91.64 |

| max | 91.81 | ||

| Qian et al., 2019 [31] | reported | 91.74 | |

| Luo et al., 2020 [23] | reported | 91.960.03 | |

| Gui et al., 2020 [11] | reported | 92.13 | |

| [17] | avg | 91.010.21 | |

| max | 91.27 | ||

| GCDoc | avg | 92.220.02 | |

| max | 92.26 | ||

| + Language Models / External knowledge | |||

| Sentence-level | Peters et al., 2017 [29] | reported | 91.930.19 |

| Peters et al., 2018 [30] | reported | 92.220.10 | |

| Akbik et al., 2018 [2] | reported | 92.610.09 | |

| Devlin et al., 2018 [8] | reported | 92.80 | |

| Document-level | Akbik et al., 2019 [1] | reported | 93.180.09 |

| Chen et al., 2020 [4] | reported | 92.68 | |

| Luo et al., 2020 [23] | reported | 93.370.04 | |

| Gui et al., 2020 [11] | reported | 93.05 | |

| GCDoc+ | avg | 93.400.02 | |

| max | 93.42 | ||

-

means Standard Deviation.

-

2 Here we re-implement the classical Bi-LSTM-CRF model using the same model setting and optimization method with our model.

V-C1 Over Performance

This experiment is to evaluate the effectiveness of our approach on different datasets. Specifically, we report standard -score for evaluation. In order to enhance the fairness of the comparisons and verify the solidity of our improvement, we rerun times with different random initialization and report both average and max results of our proposed model. As for the previous methods, we will show their reported results. Note that these models use the same methods of dataset split and evaluation metrics calculation as ours, which can ensure the accuracy and fairness of the comparison. The results are given in Table II and Table III, respectively.

On CoNLL03 dataset, we compare our model with the state-of-the-art models which can be categorized into sentence-level models and document-level models. The listed sentence-level models are usually popular baselines for most subsequent work in this field [17, 24, 35, 20, 36]. And the document-level models are recent work that also utilize global information besides single sentence for NER as well [37, 31, 23, 11, 1, 4]. Besides, we leverage the pre-trained language model BERT (large version) as an external resource for fair comparison with other models that also use external knowledge [29, 30, 2] On CoNLL03 dataset, our model achieves -score without external knowledge and with BERT. Even compared with the more recent top-performance baseline [11], our model achieves a better result with an improvement of and . Considering that the CoNLL03 dataset is relatively small, we further conduct experiment on a much more large OntoNotes 5.0 dataset. We compare our model with previous methods that also reported results on it [3, 23, 1]. As shown in Table III, our model shows a significant advantage on this dataset, which achieves score without external knowledge and with BERT, consistently outperforming all previous baseline results substantially. Besides, the std (Standard Deviation) value of our model is smaller than the one of Bi-LSTM-CRF, which demonstrates our proposed method is more stable and robust. In Tabel IV, we show the comparison results of the detailed metrics (including precision, recall and ) of GCDoc and Bi-LSTM-CRF on the two datasets. We can indicate from the table that compared with Bi-LSTM-CRF, the evaluation results of GCDoc under all metrics are significantly improved. And the score of recall is improved even more compared with precision.

Overall, the comparisons on these two benchmark datasets well demonstrate that our model can truly leverage the document-level contextual information to enhance NER tasks without the support from external knowledge.

| Category | Index & Model | -score | |

| Type | Value( std) | ||

| Sentence-level | Chiu and Nichols, 2016 [5] | reported | 86.280.26 |

| Strubell et al., 2017 [34] | reported | 86.840.19 | |

| Li et al., 2017 [19] | reported | 87.21 | |

| Chen et al., 2019 [3] | reported | 87.670.17 | |

| Document-level | Qian et al., 2019 [31] | reported | 87.43 |

| Luo et al., 2020 [23] | reported | 87.980.05 | |

| Bi-LSTM-CRF [17] | avg | 87.640.23 | |

| max | 87.80 | ||

| GCDoc | avg | 88.320.04 | |

| max | 88.35 | ||

| + Language Models / External knowledge | |||

| Sentence-level | Clark et al., 2018 [6] | reported | 88.810.09 |

| Liu et al., 2019 [21] | reported | 89.940.16 | |

| Jie and Lu, 2019 [14] | reported | 89.88 | |

| Document-level | Luo et al., 2020 [23] | reported | 90.30 |

| GCDoc+ | avg | 90.490.09 | |

| max | 90.56 | ||

| Model | CoNLL03 | OntoNotes 5.0 | ||||

|---|---|---|---|---|---|---|

| Precision | Recall | -score | Precision | Recall | -score | |

| Bi-LSTM-CRF | 90.96 | 91.07 | 91.01 | 87.58 | 87.70 | 87.64 |

| GCDoc | 92.12 | 92.33 | 92.22 | 87.90 | 88.74 | 88.32 |

| +1.16 | +1.26 | +1.21 | +0.32 | +1.04 | +0.68 | |

V-C2 Ablation Study

In this section, we run experiments to dissect the relative impact of each modeling decision by ablation studies.

For better understanding the effectiveness of two key components in our model, i.e., document graph module and cross-sentence module, we conduct ablation tests where one of the two components is individually adopted. The results are reported in Table V. The experimental results show that by adding any of the two modules, the model’s performance on both datasets is significantly improved. We discover that just adopting the document graph module can get a large gain of %/% over baseline. And by combining with the cross-sentence module, we achieve the final state-of-the-arts results, indicating the effectiveness of our proposed approach to exploit document-level contexual information at both the word and sentence level.

| No | Model | CoNLL03 | OntoNotes 5.0 |

|---|---|---|---|

| 1 | base model | 91.010.21 | 87.640.23 |

| 2 | + Cross-Sentence Module | 91.530.09 | 87.910.21 |

| 3 | + Document Graph Module | 91.860.02 | 88.200.11 |

| 4 | + ALL | 92.220.02 | 88.320.04 |

In order to analyze the working mechanism of the document graph module in our porposed model, we conduct additional experiments on CoNLL03 dataset. We introduce 3 baselines as shown in Table VI: (1) base model only with cross-sentence module; (2) add document graph module but without the two strategies for avoiding noise, which means that Eq 18 is adopted to aggregate neighbor information, i.e., takes the average of all neighbors’ representations; (3) adopt document graph pruning strategy with uncertainty prediction sub-module, i.e., using Eq 23 to aggregate neighbor information; The last one is GCDoc, which applys a selective auxiliary classifier to guide the edge weights calculation progress and further helps the model select useful information. By comparing Model 1 and Model 2, we can find that just adding a simple document graph can get a significant improvement of , which shows that modeling the connection between words that reoccur in document indeed brings much useful global contextual information. The experiment that comparing Model 2 and Model 3 indicates that adding document graph pruning strategy will bring further improvement, since noise information is prevented from introducing by ignoring the impact of less reliable tokens on their neighboring nodes. And the result of comparing Model 3 and Model 4 demonstrates that the designed selective auxiliary classifier provides additional improvements, which proves its importance for capturing the weights of different neighbor nodes and filtering noise information.

In order to appropriately model a wider context beyond single sentence, we encode both the previous and next sentences to enhance the semantic information of the current sentence. In this experiment, one of the two parts is removed from model each time and the results are shown in Table VII. In the first baseline we only encode the current sentence and utilize it as the sentence-level representation. We can find that including either previous or next sentences contributes to an improvement over the base model, which verifies the effectiveness of modeling wider context and encoding neighbour sentences to improve NER performance.

| No | Strategies | -scorestd |

|---|---|---|

| 1 | Base | 91.530.09 |

| 2 | +Doc Graph | 92.000.07 |

| 3 | +Pruning | 92.100.03 |

| 4 | +Edge Weights | 92.220.02 |

| Previous Sentences | Next Sentences | -scorestd |

|---|---|---|

| 91.920.14 | ||

| ✓ | 92.040.13 | |

| ✓ | 91.960.18 | |

| ✓ | ✓ | 92.220.02 |

V-C3 Complexity Analysis

In this section, we analyze in detail the computation complexity of the proposed GCDoc for a better comparison with the baseline model. Compared with Bi-LSTM-CRF, GCDoc mainly adds two modules, i.e., the cross-sentence module, and the document graph module. Therefore, we first analyze the complexity of these two modules separately. Then we compare the overall complexity of GCDoc and Bi-LSTM-CRF.

The cross-sentence module includes a sentence encoder based on Bi-LSTM (rf. Eq (1)-(3), Eq (17)) and an attention mechanism for aggregating cross-sentence context (rf. Eq (11)-(16)). The time complexity of the two can be expressed as and respectively, where denotes the sentence length and is the dimension of the sentence embeddings. Therefore, the overall time complexity of the cross-sentence module is .

The document graph module. The computation complexity of this module mainly comes from two parts: one is to use GNN to aggregate the neighbours’ information of each node (rf. Eq (26), (28)), and the other is to combine the enhanced representation with the initial embedding via a gating mechanism (rf. Eq (19)-(22)). Note that the size of each node’s neighbors varies and some may be very large. Therefore, we sample a fixed-size (i.e., ) neighborhood of each node as the receptive field during the data preprocessing stage, which can facilitate parallel computing in batches and improve efficiency. In this way, the computational complexity of aggregating the neighbours’ information can be expressed as . And the gating mechanism for combining representations requires computation complexity. Therefore, the overall time complexity of the document graph module is .

As for the Bi-LSTM-CRF model, its time complexity can be expressed as . GCDoc adds the above two modules on its basis, thus its overall time complexity is . Since here is actually a constant (set to in our experiment), the time complexity of GCDoc is also . Compared with the Bi-LSTM-CRF baseline, our model’s performance on both datasets is significantly improved without leading to a higher computation complexity.

V-C4 Impact of Window Size in Cross-Sentence Module

The window size (rf. Section IV-B) is clearly a hyperparameter which must be optimized for, thus we investigate the influence of the value of on the CoNLL2003 NER task. The plot in Figure 4 shows that when assigning the value of to the model achieves the best results. When is less than , the larger may incorporate more useful contextualized representation by encoding neighboring sentences and improve the results accordingly, when is larger than , our model drops slightly since more irrelevant context information is involved.

V-C5 Case Study

In this subsection, we present an in-depth analysis of results given by our proposed model. Figure 5 shows four cases that our model predicts correctly but Bi-LSTM-CRF model doesn’t. All examples are selected from the CoNLL 2003 test datasets. We also list sentences in the same document that contain words mistakenly tagged by baseline model (Doc-sents in the figure) for better understanding the influence of utilizing document-level context in our model.

In the first case, the word “Charlton” is a person name while baseline recognizes it as an organization (ORG). In the listed Doc-sents, PER related contextual information, such as “said” in the first sentence and “ENGLISHMAN” in the second sentence indicate “Charlton” to be a PER. Our model correctly tags “Charlton” as PER by leveraging document-level contextual information. In the second case, “Barcelona” is a polysemous word that can represent a city in spain, or represent an organization as a football club name. Without obvious ORG related context information, “Barcelona” is mistakenly labeled as S-LOC by the baseline model. However, our model successfully labels it as S-ORG by utilizing useful sentences in the document whose context inforamtion strongly indicates “Barcelona” to be a football club. Similar situation is shown in the third case. We can infer from “’s eldest daughter” that the word “Suharto” represents the name of a person in the sentence, rather than a part of an organization “City Group of Suharto”. And our model assigns correct label S-PER to it with the help of another sentence in the same document but the baseline model fails.

In the fourth case, we show situation where the document-level context information contains noise. The word “Bucharest” is part of the orgnazation “National Bucharest” while baseline mistakenly recognizes it as LOC. In the listed Doc-sents, in addition to useful contextual information brought by the first and third sentences, “Bucharest” in the second sentence represents LOC, i.e. a city in Romania, which will inevitibaly introduce noise. Our model makes a correct prediction which demenstrates the effectiveness of our model to avoid the interference of noise information.

V-C6 Performance on Different Types of Entities

We further compare the performance of our model and Bi-LSTM-CRF baseline with respect to different types of entities. We are interested in the proportion of each type of entity being correctly labeled by different models. Therefore, in Table VIII, we show the corresponding recall scores of the two models with respect to different types of entities on CoNLL03 dataset.

Compared with the Bi-LSTM-CRF model, the recall score of GCDoc drops slightly (0.47%) on the LOC type, while it’s significantly improved on the other three entity types. Among them, for PER and ORG types, GCDoc brings a great improvement of more than 2%. We speculate that this is because the document graph module of GCDoc can introduce more useful global information for these two types of entities, because in the CoNLL03 test dataset, there are more scenarios where entity tokens of these two types appear repeatedly in the same document.

| Model | PER | LOC | ORG | MISC |

|---|---|---|---|---|

| Bi-LSTM-CRF | 95.18 | 94.12 | 88.44 | 80.91 |

| GCDoc | 97.34 | 93.65 | 90.79 | 81.91 |

| +2.16 | -0.47 | +2.35 | +1.00 |

VI Conclusions

This paper proposes a model that exploits document-level contextual information for NER at both word-level and sentence-level. A document graph is constructed to model wider range of dependencies between words, then obtain an enriched contextual representation via graph neural networks (GNN), we further propose two strategies to avoid introducing noise information in the document graph module. A cross-sentence module is also designed to encode adjacent sentences for enriching the contexts of the current sentence. Extensive experiments are conducted on two benchmark NER datasets (CoNLL 2003 and Ontonotes 5.0 English datasets) and the results show that our proposed model achieves new state-of-the-art performance.

References

- [1] Alan Akbik, Tanja Bergmann, and Roland Vollgraf. Pooled contextualized embeddings for named entity recognition. In NAACL, pages 724–728, 2019.

- [2] Alan Akbik, Duncan Blythe, and Roland Vollgraf. Contextual string embeddings for sequence labeling. In COLING, pages 1638–1649, 2018.

- [3] Hui Chen, Zijia Lin, Guiguang Ding, Jianguang Lou, Yusen Zhang, and Borje Karlsson. Grn: Gated relation network to enhance convolutional neural network for named entity recognition. AAAI, 2019.

- [4] Yubo Chen, Chuhan Wu, Tao Qi, Zhigang Yuan, and Yongfeng Huang. Named entity recognition in multi-level contexts. Proceedings of the 1st Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 10th International Joint Conference on Natural Language Processing, 2020.

- [5] Jason PC Chiu and Eric Nichols. Named entity recognition with bidirectional lstm-cnns. TACL, 4:357–370, 2016.

- [6] Kevin Clark, Minh-Thang Luong, Christopher D Manning, and Quoc V Le. Semi-supervised sequence modeling with cross-view training. arXiv preprint arXiv:1809.08370, 2018.

- [7] Ronan Collobert, Koray Kavukcuoglu, Jason Weston, Leon Bottou, Pavel Kuksa, and Michael Karlen. Natural language processing (almost) from scratch. JMLR, 12(1):2493–2537, 2011.

- [8] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- [9] Yarin Gal and Zoubin Ghahramani. Dropout as a bayesian approximation: Representing model uncertainty in deep learning. ICML, 2016.

- [10] Xavier Glorot and Yoshua Bengio. Understanding the difficulty of training deep feedforward neural networks. In AISTATS, pages 249–256, 2010.

- [11] Tao Gui, Jiacheng Ye, Qi Zhang, Yaqian Zhou, and Xuanjing Huang. Leveraging document-level label consistency for named entity recognition. In Twenty-Ninth International Joint Conference on Artificial Intelligence and Seventeenth Pacific Rim International Conference on Artificial Intelligence IJCAI-PRICAI-20, 2020.

- [12] Aaron L. F. Han, Derek F. Wong, and Lidia S. Chao. Chinese Named Entity Recognition with Conditional Random Fields in the Light of Chinese Characteristics. Language Processing and Intelligent Information Systems, 2013.

- [13] Zhiheng Huang, Wei Xu, and Kai Yu. Bidirectional lstm-crf models for sequence tagging. Computer Science, 2015.

- [14] Zhanming Jie and Wei Lu. Dependency-guided lstm-crf for named entity recognition. EMNLP, 2019.

- [15] Taku Kudoh and Yuji Matsumoto. Use of support vector learning for chunk identification. In CoNLL, 2000.

- [16] John Lafferty, Andrew McCallum, and Fernando CN Pereira. Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In ICML, 2001.

- [17] Guillaume Lample, Miguel Ballesteros, Sandeep Subramanian, Kazuya Kawakami, and Chris Dyer. Neural architectures for named entity recognition. In NAACL, 2016.

- [18] C. Lee, Giles Overfitting, Rich Caruana, Steve Lawrence, and Lee Giles. Overfitting in neural nets: Backpropagation, conjugate gradient, and early stopping. In NIPS, 2000.

- [19] Peng-Hsuan Li, Ruo-Ping Dong, Yu-Siang Wang, Ju-Chieh Chou, and Wei-Yun Ma. Leveraging linguistic structures for named entity recognition with bidirectional recursive neural networks. In EMNLP, pages 2664–2669, 2017.

- [20] Liyuan Liu, Jingbo Shang, Xiang Ren, Frank Fangzheng Xu, Huan Gui, Jian Peng, and Jiawei Han. Empower sequence labeling with task-aware neural language model. In AAAI, 2018.

- [21] Tianyu Liu, Jin-Ge Yao, and Chin-Yew Lin. Towards improving neural named entity recognition with gazetteers. In ACL, pages 5301–5307, 2019.

- [22] Ling Luo, Zhihao Yang, Pei Yang, Yin Zhang, Lei Wang, HongfeiH Lin, and Jian Wang. An attention-based bilstm-crf approach to document-level chemical named entity recognition. Bioinformatics, (8):8, 2017.

- [23] Ying Luo, Fengshun Xiao, and Hai Zhao. Hierarchical contextualized representation for named entity recognition. AAAI, 2020.

- [24] Xuezhe Ma and Eduard Hovy. End-to-end sequence labeling via bi-directional lstm-cnns-crf. In ACL, 2016.

- [25] Andrew McCallum and Wei Li. Early results for named entity recognition with conditional random fields, feature induction and web-enhanced lexicons. In CoNLL@NAACL, pages 188–191. Association for Computational Linguistics, 2003.

- [26] Tomas Mikolov, Kai Chen, Greg Corrado, and Jeffrey Dean. Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781, 2013.

- [27] Razvan Pascanu, Tomas Mikolov, and Yoshua Bengio. On the difficulty of training recurrent neural networks. In ICML, 2013.

- [28] Jeffrey Pennington, Richard Socher, and Christopher Manning. Glove: Global vectors for word representation. In EMNLP, pages 1532–1543, 2014.

- [29] Matthew E Peters, Waleed Ammar, Chandra Bhagavatula, and Russell Power. Semi-supervised sequence tagging with bidirectional language models. In ACL, 2017.

- [30] Matthew E. Peters, Mark Neumann, Mohit Iyyer, Matt Gardner, Christopher Clark, Kenton Lee, and Luke Zettlemoyer. Deep contextualized word representations. NAACL, 2018.

- [31] Yujie Qian, Enrico Santus, Zhijing Jin, Jiang Guo, and Regina Barzilay. Graphie: A graph-based framework for information extraction. NAACL, 2019.

- [32] Lawrence R Rabiner. A tutorial on hidden markov models and selected applications in speech recognition. Proceedings of the IEEE, 77(2):257–286, 1989.

- [33] Erik F. Tjong Kim Sang and Fien De Meulder. Introduction to the conll-2003 shared task: Language-independent named entity recognition. NAACL-HLT, pages 142–147, 2003.

- [34] Emma Strubell, Patrick Verga, David Belanger, and Andrew McCallum. Fast and accurate entity recognition with iterated dilated convolutions. EMNLP, 2017.

- [35] Zhilin Yang, Ruslan Salakhutdinov, and William W Cohen. Transfer learning for sequence tagging with hierarchical recurrent networks. In ICLR, 2017.

- [36] Zhi-Xiu Ye and Zhen-Hua Ling. Hybrid semi-markov crf for neural sequence labeling. ACL, 2018.

- [37] Boliang Zhang, Spencer Whitehead, Lifu Huang, and Heng Ji. Global attention for name tagging. CoNLL, 2018.