Explicit Use of Fourier Spectrum in Generative Adversarial Networks

Electrical Engineering & Computer Science \masterofApplied Science \degreenameMasters \committeememberslist

-

1.

Hui Jiang

-

2.

Mokhtar Aboelaze

abstract.tex \acknowledgementsfileacknowledgements.tex \abbreviationsfileabbreviations.tex \makefrontmatter

Chapter 1 Introduction

Generative adversarial networks (GANs) proposed by [1] have had a significant effect on the computer vision literature. They have been used in a wide range of applications, such as generating realistic images [2, 3, 4], image-to-image mappings [5], text-to-image translation [6, 7, 8, 9], transfer learning [10], photograph editing [11, 12, 13, 14], 3D object generation [15, 16] and many more that are still coming up. Image synthesis state-of-the-art works successfully generated photo-realistic images almost indistinguishable for human eyes. This rapid progress gave birth to the applications such as deepfake [17] in which the central idea is to fool the human eyes into believing the machine-generated images as real ones. Naturally, it raised security concerns on the problem challenging photo-forensics experts on the subject [18]. On the flip side, recent works [19] have shown that having a "large" amount of data, one can detect fake images with relative ease using simple classifiers powered by neural networks. [20] attributes the machine’s power for classifying to the dissimilarities between the authentic and artificial images in their spectrum. It further discusses the roots of dissimilarities in the high-frequency residuals of the image synthesis in convolutional neural networks. It also discusses that the checkerboard effect, one of the most disputed phenomenons in the GANs, is one of the consequences of the frequency discrepancies.

The Frequency domain is a well-established tool to process and analyze the images in the signal processing literature. It represents images without losing any information due to the bijective property of the FFT. This representation gives us the advantage when dealing with the rate of change within the neighborhood of the pixels, intuitively making it related to the problem of the checkerboard patterns and any other harsh changes regarding the details of an image.

The main objective of the current research is to find a deep learning based method to reduce the existing gap between the frequency spectrum of the real images and the ones produced by the generative adversarial networks. We also ask the question of how we can find a way to incorporate frequency information of the images in the deep learning driven computer vision tasks. To be precise, to build a deep learning layer for processing frequency information along with spatial information.

Imitating human responses and behaviors has always been a topic of utmost interest for artificial intelligence researchers. Spatial frequency theory [21] is an acclaimed construct showing the weight of the spatial frequencies in the brain’s neural response. Recent research [22] also reveals that the mechanism of the human primary visual cortex (V1) is highly related to the second-order spatial frequencies received from our visual sensory inputs. These spatial frequencies stand out in the spectrum representation of an image. Useful filters such as the Gabor filter applied on the spectrum of the images are also considered to be similar to the actions of cells in the mammalian visual cortex for texture discrimination [23, 24, 25]. Knowing how vital the frequency domain is in the ultimate baseline of the AI, meaning humans, motivates us even more to use models consist of frequency-related elements.

To get an image realistic to the finest detail, generative models need to learn the distribution of the images accurately enough to imitate the spectrum of the generated images, which is not the case for the current approaches. Identifying this problem, in this work, we are trying to propose a new add-on to the current architectures of the CNN-based GANs in order to incorporate the frequency representation with more emphasis in the training process.

The rest of this work is organized as follows: In chapter 2 the necessary information for understanding the ideas are provided. Chapter 3 introduces the current major advances in the literature of generative adversarial networks and frequency-driven efforts in the area of neural networks. Next, in chapter 4 we discuss the idea of the geometric deep learning, which is the main building block for our contribution. Chapter 5 formalizes the problem at hand and gives theoretical methodologies for our contributions. Chapter 6 assess the performance of the idea in spatial and frequency domain as well as a stability analysis of the implementations. Finally, chapter 7 summarizes the contributions of the paper and discusses the future steps for this research.

Chapter 2 Backgrounds

In this section, we first present the concept of neural networks, and with a simple example of MLP, we explain the procedure behind their ability to learn. Then we discuss Convolutional Neural Networks as the most successful neural network structure in vision tasks. Then we move to Generative Adversarial Networks, which are the main neural network architecture examined in the current work. We explain their structure, the basic mathematical idea behind them and intuitively explain how they are supposed to work. In section 2.3 we talk about the methods for GAN’s quality evaluation in the spatial domain we used in our work. For evaluation in the frequency domain, we present three metrics later in 6.4. Section 2.4 gives a brief description of the frequency domain and its importance, plus some of its applications in natural language processing and computer vision. It also contains the fundamental mathematics of discrete Fourier transform in 1D and 2D.

2.1 Neural Networks

Neural Networks, also known as Artificial Neural Networks (ANNs), are a set of algorithms in machine learning. The recent deep learning revolution in computer science owes its existence to these frameworks of algorithms. They got their name on their design to mimic the structure of human neurons in the brain. The most simple structure of neural networks, known as Multi-Layer Perceptron (MLP), consists of a layer of nodes (neurons) as the input layer, one or more hidden layers, and an output layer connected with a designed architecture in a layer-wise manner. Values of the nodes in each layer can be seen as a vector and determined by three elements. The first element is the vector of the value of the previous layer. Second, a weight function linearly transforms the previous layer’s outputs, represented as a matrix. Finally, a non-linear activation function adding non-linearity to the structure. Figure 2.1 shows the architecture a simple MLP with two hidden layers, and activation function .

NNs function upon training over a large amount of data, changing their wight matrices gradually with a process called backpropagation to improve their accuracy. The popularity of the NNs is owing to their quick performance and superb accuracy over data-driven tasks such as prediction, classification, and regression.

Convolutional Neural Networks (CNNs)

CNNs are a subset of deep learning algorithms introduced in [26]. They are best for learning grid-like data such as Images and time series. They have achieved remarkable accuracy and became an inseparable part of image-related and video-related tasks such as object segmentation [27, 28, 29], image classification [30, 31, 32], object detection [33, 34, 35] and image reconstruction [36, 37, 38]. Moreover, they have shown capabilities in natural language processing such as Sentiment Analysis and Topic Categorization tasks [39, 40], semantic clustering [41] and relation extraction [42, 43, 44]

In a naive two-dimensional convolutional layer, the input should be in a grid form with vector define over it. An image can be shown as signal in this space . A convolutional filter (whose weights are subject to learning) slides over the image. We can write the convolution step with filter size using the linear combination of generators . In here lets use the unit impulse function .

and are D-matrices flattened into a vector here. Figure 2.2 shows the operation .

In coordinates, it also corresponds to the standard notation of D-convolution

When we have more than one channel, e.g., RGB photos or in the later layers when we have several feature maps, a convolutional tensor is used instead of a convolutional filter. And, in coordinates, we have:

where M and N are respectively the number of input and output channels.

After the convolutional step, the output, known as the feature map, is subjected to a pooling operation which down-samples the feature map to reduce the redundancy and add robustness against over-fitting. Convolutional layers can be cascaded together to form a deep CNN, and they can also be a component of a neural network with linear layers haven their outputs flatten to a vector.

Deep-learning-powered computer vision has been thriving in virtue of the abilities CNNs unlock over the normal perceptron. The foremost power in CNNs is their ability to extract features such as patterns, texture, object edges, etc., automatically with convolutional kernels. Some other benefits of using CNNs are as follow: 1) avoiding parameter growth with increasing the number of inputs (let us say pixels for the case of images); this is on account of two facts, the weight sharing over layers, and having local connection which means each node is connected only to some local nodes in the previous layer not all of them. 2) Robustness against translation, in other words, when the input image is slightly shifted, CNN does not treat it as a completely different input. This characteristic, known as shift-equivariency, is explained in detail in chapter 4

2.2 Generative Adversarial Networks

Generative models

In general, machine learning algorithms can be categorized into supervised and unsupervised learning. In the former, a labeled dataset is necessary. The goal is to predict an accurate function, mapping the features of the dataset to the target labels. Some examples of it are classification and regression. While in unsupervised learning, there is no labeled dataset; all the data are in the same category. Generative modeling is an unsupervised task in machine learning aiming to learn the data distribution well enough so that the model can produce new data points with all the attributes of the input data. Intuitively, designing a generative model requires a deep understanding of the semantics of the data and the field. However, Generative Adversarial Networks (GANs) are a subcategory of generative models capturing the significant features of the dataset automatically.

Generative Adversarial Networks

Goodfellow et al. [1] introduced the concept of Generative Adversarial Networks as an algorithmic architecture that uses two neural networks competing against each other. The goal is to generate new data points imitating the distribution of the original training dataset. The skeleton consists of two separate neural networks. A generator that tries to capture training data distribution. Plus, a discriminator whose goal is to predict the probability that its input is a generated fake sample or an original data point. The two networks will train simultaneously to satisfy the following two-player minimax game:

| (2.1) |

is a defined prior over input noise variable , is a mapping from noise latent space to the data space implemented with a neural network with parameters . is another mapping from data space to a single scalar with parameters . shows the probability that came from data distribution rather than generator. The first term of the right-hand side of the equation (2.1) is an expectation representing the quality of the model performance on detecting the authentic images. While the second term representing the quality of the model on generated samples. Together they build up the loss function , where the discriminator tries to maximize and generator tries to minimize. Training them simultaneously with precise hyperparameter tuning results in both networks getting better at their works in detecting or generating data points. At the final step, the stand-alone generator module will produce indistinguishable data points.

2.3 Methods for Measuring GAN’s Quality

To measure the performance of the neural network models on supervised tasks, researchers use various metrics. For the classification, we have precision, recall, accuracy, F1-score, etc.; for regression tasks, we have mean square error, mean absolute deviation, etc. However, all of them need a labeled dataset out of hand for any unsupervised task, including GANs. Defining a metric for unsupervised tasks is more difficult. Clustering tasks, for example, are usually evaluated using the Silhouette Coefficient. It measures how similar an item in the cluster is to the other items in the same cluster. GANs case is different and even trickier; there is no label in hand, and there is no ground truth other than some samples from the real dataset. The loss for each neural network is not in any way an indicator of how successful the model is in generating a distribution close to the original sampled distribution. They only show how good is each module (Generator or Discriminator) in fooling the other one. Finding an excellent metric to evaluate the GANs performance has been a topic of controversy for a while now [46]. Nonetheless, for quantitative measurement of their success, there are two widely adopted metrics, the Inception Score (IS) [47] and Fréchet Inception Distance (FID) [48].

Inception Score (IS)

IS takes two main high-level concepts into account:

-

•

each image decidedly belong to a class (image of a cat belongs to the cat class, and there is no confusion about putting it in another class)

-

•

The images are diverse (the model can generate images of a wide variety of classes)

To mathematically talk about the concepts as mentioned earlier, using the notion of entropy is useful. The higher the entropy of the data, the less predictable its behaviors. Accordingly, if we get random variable as the given images and as the image class, to satisfy the first concept, we want the conditional probability to have low entropy. In other words, predicting the class, given an image, should be a highly predictable task. For the second concept, we want the marginal probability to have a high entropy; namely, we want the model to give us different classes as uniform as possible. Bringing together the two concepts, we have the final form of inception score using KL-divergence as the following:

| (2.2) |

For the computation of posterior distributions, the classifier, Inception Net, [49] is being used over the data (hence the name Inception Score). Then, the marginal probabilities are calculated by the following integral:

Fréchet Inception Distance (FID)

FID introduced by [48] embeds a set of generated samples and also actual samples into a feature space. It uses an intermediate layer of Inception Net (usually after the third pooling layer). It deals with the embedded space like a multivariate Gaussian, and it estimates the mean vector and covariance matrix for both real and generated data distributions, , , and respectively. The Fréchet Inception Distance is then calculated between the two Gaussians.

| (2.3) |

The trace function is the sum of all the diagonal elements. Since it calculates how alike the authentic and generated distributions are, the lower the distance, the better the model; it is unlike the Inception Score, where the higher score is better. FID is usually a better metric than IS Since it is sensitive to GAN failures such as mode collapse. We explain failure modes in GAN’s in section 6.2.

2.4 Frequency Domain

Decomposing an image into different size scales with a basis function is a prominent idea in mathematics and engineering. The idea is to write any spatial signal as a sum of orthogonal basis functions to get a new representation based on the different resolutions of the original signal. This representation is known as frequency representation. Most of the decomposition techniques, including the ones described in this thesis, are bijective mappings, i.e., there is a unique representation in the frequency domain for each spatial signal. Arguably frequency domain’s theoretical significance comes from the Convolution Theorem for standard Linear Time Invariant systems. The interactions of such systems with their input and output signals formulate by the convolution operator. The Fourier basis diagonalizes convolution, hence replace it with a simple element-wise multiplication in the frequency domain. The importance does not only summarize in theoretical works. The frequency-domain has opened up various tools for engineers. For audio signals applying filters to remove noise, detect different voices, or equalize the voices of different musical instruments are a small portion of many applications of frequency representations. In the case of the image spectrum, although the information is not apparent by the look of the spectrum, it contains valuable data related to the rate of change in moving to neighbor pixels, the lines and curves and edges of the objects, and noise in the images. It can also tell us how much information there is in a particular resolution, which is important when an image contains chaotic structures. There are helpful filters in noise reduction, edge detection, and many others operating on the images’ frequency domain.

A few series and transforms have been introduced to change the representation of spatial periodic and non-periodic signals to a frequency space, such as Fourier transforms and series, Laplace transforms, and Z-transform. In this work, we adopted the arguably most famous decomposition technique, Discrete Fourier Transform (DFT).

2.4.1 Power Spectrum

A power spectrum is, in essence, a measure of the strength of the different features at different resolutions. Basically, We decompose an image into different size scales, for example, a coarser version like , and each new superpixel contains the dominant value of the corresponding pixels within the original image. If we subtract this dominant color from the original image, we are left with a version of the image without these largest structures. Repeating this procedure for less and less coarse versions of the original image give us a set of images (measurements on images) between the very coarse and the original image. Every image in between contains a measurement of how influential features in the original image are at that specific resolution, and the size scale associated with that resolution tells us what the size scale of those features is. For each resolution, the power spectrum is defined as the variance of the features on that resolution, i.e., how much total spread there is in values. A large spread means a strong signal and hence much variation on this scale.

To perform the size scale decomposition, we use DFT, which we will explain its detail in the next section. Basically, the time signal of each resolution corresponds to a sine and cosine pair with a period of . We know as the frequency. Since we are performing a 2D transform, vector consists of two elements as well and each of the elements has two amplitudes for cosine and sine. As we discussed earlier, the power spectrum is the variance of the features in size scales. However, since different combination of elements can contribute to the same resolution (e.g. ), the standard procedure is to bin them all together. The appropriate measure for the power for a specific value is the average power within the corresponding bin, multiplied with the volume of that bin in space. Equation (2.4) shows the mathematical definition, and Fig 2.4 shows an example of the spectrum, power spectrum pair, indicated by two different bins and their corresponding location on both spectrum and power spectrum.

| (2.4) |

where and are coming from the standard change from Cartesian to polar coordinate change:

2.4.2 Discrete Fourier Transform (DFT) and Fast Fourier Transform (FFT)

Fourier Transform presumably is the most common signal decomposition in the literature, and it is the foundation of the harmonic analysis. DFT is a sampled version of the Fourier transform, so it does not contain all the frequencies in the spatial function. however, it contains enough frequency elements to describe one and only one spatial function without losing any information. 1D-DFT of the the function with points is calculated as follow:

| (2.5) |

To get back to the spatial domain the inverse DFT is as follow:

| (2.6) |

The exponential terms are the basis function corresponding to each point in the Fourier domain representation . The value of each point in is calculated by multiplying each point in the spatial domain with the corresponding Fourier basis and then summing them all up. In a more formal fashion, DFT expresses the spatial function as a linear combination of orthogonal oscillating basis functions , indexed by their rate of oscillation (frequency).

Dealing with the images, we need to extend the 1D-DFT to 2D-DFT. Given an square image , we can extend the definition of DFT and iDFT to two dimensions as follow:

| (2.7) |

| (2.8) |

Note that the regularization constant only appears in the inverse function. The N is usually a power of 2, and when the original function does not satisfy the size, it is usually zero-padded to get to the desired size. DFT output is a complex function, and they can be shown with two different images, as real and imaginary or magnitude and phase. Neither part can be ignored as both of them contain information about the original image.

The complexity of the DFT computation is ; however, with a computational trick, it can be reduced to , the algorithms calculating the DFT with reduced complexity are known as Fast Fourier Transform (FFT). We use the terms DFT and FFT interchangeably in this document as the output to them is the same, only the procedure to get the output is different.

Chapter 3 Literature Review

Since [1] introduced GANs in 2014, there has been a considerable body of work on improving and stabilizing this category of neural networks. [2] proposed a set of constraints, making GAN training with convolutional layers more stable. However, they could not overcome the mode collapse problem in training. They also showed the vector arithmetic properties of the latent input space of the generator similar to the known relations in the word embedding. Their other contribution was showing that adversarial network pairs in GANs can learn meaningful representation from objects to scenes. [51, 52] brought up a new distance to measure the difference between original data and generated data distributions, leading to the most noteworthy GAN success due to robustness to architectural choices. They were the first to address the mode collapse problem significantly. It is also worthy of mentioning that their new distance (estimated EM distance) provided meaningful learning curves for debugging and hyperparameter tuning. [sngan] applied spectral normalization over the weight matrix, which not only helped the stability of the discriminator network but also, due to its significant computational benefits over the previous methods, made generating high-quality class conditional ImageNet [53] scale training possible. [sagan] used self-attention in both generator and discriminator, leading to a significant increase to the inception score of the GANs as well as delivering high-quality unconditional full-ImageNet samples. Their method involved a self-attention procedure allowing both generator and discriminator to have access to non-local regions of images when trying to detect or generate images. [54] continued the efforts to scale up the dataset with a new variant of orthogonal regularization and truncation trick. Their model allows increasing the batch size and model width, which not only allows for a significant scale-up but also increases the performance in the Inception Score. [55, 56] modified the discriminator by adding an extra path for the encoded generated images to decide not only based on the data space but also by another representation of the latent space. They introduced a novel representation learning approach to train GANs, which was one of our motivations in the current work.

StyleGAN based GANs [3, 4] at the time of writing this document is holding the state-of-the-art in the literature. Their main contribution was the new architecture for the generator where it does not feed noise directly to the generator. instead, it passes through an MLP to get a style vector and feed in the generator architecture at different layers.

Although GANs have succeeded in delivering high-quality images almost impossible for human eyes to detect, recent studies [20, 19, 57, 50] showed that it is not an arduous task to detect GAN-generated images. In the core of the forensic analysis of [20, 57, 50], lies the discrepancies between the spectrum of the generated images vs. the real ones. To further relate the frequency notion to the current state of neural network training in the literature [58] found evidence of a spectral bias on neural networks, following this idea [59] came up with band-limited training of the CNNs. Another frequency evidence lies in [60] which provided theoretical proof on having band-limited model as a condition for perfect learning in neural networks.

Learning in the frequency domain is not well-investigated in the literature. However, as we explained above, the clues are further pushing us to take the frequency domain More seriously. currently, the models are using fully connected [61] or CNN-based architectures[62] in the input. However, the recent CVPR paper [63] proposed a model based on DCT transformation, which aims to retain more original picture information through DCT transformation and reduce the communication bandwidth between CPU and GPU. In this work, we use geometric deep learning [45] to introduce a new model designed to perform specifically on the frequency domain of the image. We will explain the related literature review on geometric deep learning in more detail in the chapter 4.

Chapter 4 Geometric Deep Learning

In this chapter, we give a brief overview of geometric deep learning [45] and how it brings together a large class of neural networks working on different data types such as unstructured sets, grids, graphs, and manifolds to interpret under unified principles. Then we describe symmetries in the image data form and how geometric deep learning uses them to add inductive bias to the neural network framework, reducing the search space in the hypothesis class for more suitable functions. In section 5.4.2 we use the idea of equivariant functions to devise a suitable deep neural network architecture for the frequency domain. Our proposed architecture searches the frequency while keeps the essential property of shift invariancy of the image in mind. To the best of the author’s knowledge, a systematic architectural design for the frequency domain has never been done in the literature. We consider it one of our main contributions.

4.1 Inductive bias

In machine learning theory, supervised learning is usually formalized by assuming that labels are generated by an unknown function , such that . Also, let us assume that the data points and labels come from an i.i.d sampling of the underlying data. , where here is the number of observations. The learning objective here is to estimate the function as best as possible from a set of parameterized functions we have, known as a hypothesis class, . Neural network architecture can be seen as an instance of such parameterized hypothesis classes with parameters as the networks’ weights. The ideal method to evaluate the chosen function from the hypothesis class is the expected loss defined as follow:

is the chosen function, and is the underlying distribution of the data. is the suitable loss for our task; some examples can be square loss and binary cross-entropy. Thus, to have successful learning, one needs to consider a notion of regularity in choosing functions . This idea of regularization is known as the inductive bias, which can be applied via various methods, explained further in this section.

To have a successful learning scheme, the other important and even more prominent property is that the hypothesis class should be dense enough to have the capacity to approximate functions over current large-scale, high-quality datasets. However, in the case of neural networks, it has never been a problem. Even a simple two-layer perceptron, shown to be dense in the space of continuous functions on .

To formalize the regularity notion into the learning scheme, we can get help from complexity measure , now the problem can be seen as:

The realizability assumption is considered for this formulation for convenience. In other words, now we are looking for the least complex (more regular) function among our hypothesis class. For the complexity measure, different norms of the working space are a well-known option used in learning approaches such as logistic regression or SVMs. In neural networks, network weights are a proper choice for complexity measurements. i.e. . While explicitly applying regularities to the empirical loss is a common, well-defined approach known as "Structural Risk Minimization," the Implicit approaches are getting more and more attention of researchers [64, 65].

As the dimension of the space goes higher, finding suitable regular functions will get more challenging as well. In the case of the long-established regularity notion, 1-Lipschitz functions. The number of observations needed for the desired loss will grow exponentially. The good news is that real-world applications such as computer vision tend to work with functions that inherently possess some spatial structures in their physical domains. The geometric deep learning objective is to exploit structures as a notion of regularity further to reduce the search space for the most proper function.

4.2 Symmetries as Regularities

Symmetries on a domain or a signal are transformations that leave certain properties of the domain of the signal, or the signal itself, unchanged. Real-world applications are full of these operations. In computer vision’s object detection tasks, shift or rotation are examples of transformations that leave the type of object invariant. If there is an image of a rhinoceros, the shifted or rotated rhinoceros should still be classified as a rhinoceros.

The symmetries of the domain , and signal operating on it present a constraint on the function operating on those signals. It turns out that these symmetries are powerful prior to consider as inductive bias. They will reduce the search space to only the functions satisfying the invariancy. Here we explain the two groups of functions we use their symmetric properties in this thesis, invariants and equivariants.

Invariant functions:

A function is invariant with respect to transformation if and , in other words, if its output is unchanged after the transformation.

Assuming the transformation is linear i.e , is the matrix equivalent of the the transformation .

The function for the rhinoceros classification example is categorized under shift invariant functions, which is very common in pattern recognition literature.

Classic perceptron networks hypothesis’ class consists of functions not having the shift invariant property. It is making them an unseemly choice for vision tasks where the object’s position is not relevant in the final result. However, as we explain later in section 4.3, applying a geometric prior for the shift would result in an architecture known as Convolutional Neural Networks (CNNs) (for further reading on CNNs please refer to section 2.1). That being said, shift geometric prior in the CNNs is not shift invariancy. In fact, it is shift equivariancy. That is to say, a particular amount of shift in the input would result in the same amount of shift in the output. Formally we can define an equivariant function as follow:

Equivariant functions:

A function is equivariant with respect to transformation if and . In other words, If the output react in the exact same way input reacted to the transformation.

A computer vision example of such tasks is image segmentation. If the segmented input moved, we expect the output to shift the same amount.

Another noteworthy property of the invariant and equivariant function arises in their combinations. If and are equivariant and and are invariant, then by definition, , and are equivariant, invariant and equivariant respectively. We also know the following properties for symmetric transformation, by the axioms of symmetric transformations.

Associativity: For all the functions belong to the same symmetry group . Although invariants and equivariant are not from the same symmetry group, by definition they are also have this property together.

Inverse: for every function belong to the same symmetry group there is a unique inverse in the same symmetry group, e.g.

Geometric deep learning provides a blueprint for constructing specific architectures satisfying the explained conditions. For the specific case of equivariant functions. the blueprint’s building blocks are as follow:

Linear equivariant layer :

Non-linearity : applied element-wise as .

Local pooling (coarsening) :

Invariant global pooling layer :

By using the mentioned building blocks in cascade, we can construct the equivariant function f as follow:

The building blocks are designed such that the output of each block matches the input space of the next layer.

4.3 Designing CNNs with GDL:

This section describes how we can apply the blueprint to a homogeneous grid space, where images are defined as signals over this space. Understanding this design is essential for the cause of this thesis since designing the Fourier layer for the discriminator of the GAN uses the same method with a similar approach.

Let’s assume that we have a one dimensional grid for simplicity. in this grid each element has a right and a left neighbor . Using the blueprint we can design the equivariant function with a local aggregation function operating over a grid element and its neighbors . Choosing yields the function as the matrix product:

In the machine learning, using this form of diagonal matrix is known as weight sharing. This matrix in an example of a circulant matrix, which is essentially a vector append to itself after circular shifts. . product of a vector with a circulant matrix is equivalent with cyclic discrete convolution.

In machine learning and signal processing, the above is known as the filter, and its weights/values are subject to the learning process. Circulant matrices have commutativity property, which means their product is commutative, and it is also circulant. More mathematically If we choose the resulted circulant matrix , shift vectors one position right (circularly) and we call it the shift matrix. From the properties of the convolution, and circulant matrices, we get the desired shift equivariance:

An interesting fact is that the other way around is also true. A matrix is circulant if it commutes with the shift. In other words, convolutions are the only linear operators keeping the equivariancy.

Chapter 5 Methodology

5.1 Frequency in the Neural Networks

Recent state-of-the-art GAN architectures used for vision tasks utilized the convolutional neural networks (CNN) structure for their generator and discriminator modules [3, 4, 54]. Regardless of the network architecture and the loss function difference, they all need to map from a low dimensional latent space to a high dimensional high-resolution image space. To this end different GANs used different upsampling approaches like bi-linear [3, 4, 54], transposed convolution [2, 66, 67] or nearest neighbor [68, 69]. In the simple case, when the up-sampler scales the image by a factor of ; it inserts a zero between all the pixels in each row/column. Then it applies the convolution to interpolates the inserted zeros with suitable values. A simple formulation of the last two is shown in Figure 5.1.

[20] first analyzed the frequency consequences of the GAN’s up-sampling modules. Properties of the DFT (Discrete Fourier Transform) reveal that zero-insertion in an image will affect the spectrum in a way that looks like multiple copies of the original spectrum attached together. The proof for the one-dimensional case is as follows, and it is easily extendable to the two-dimensional case. Given as the input signal to the up-sampler and as its DFT. By inserting the zeros we’ll get where and for . Now the DFT of the will be . For ,

| (5.1) |

for , let , thus then,

| (5.2) |

The above shows that there will be two copies of the spectrum of the in first at and second at . This transformation introduces high-frequency components to the spectrum. [70] discussed that these high-frequency components play a significant role in a checkerboard artifact phenomenon seen in the outputs of generative convolutional networks. Figure 5.2 demonstrate the high-frequency components in the spectrum as well as the checkerboard artifacts. In the next step of the up-sampling, when the convolution occurs, we have neither a guarantee for the learned convolution kernel to be a suitable low-pass filter nor a sampling strategy to avoid such high-frequency artifact appear in the subsequent layer output. Thus, it can not fade out the newly introduced high-frequency components.

5.2 DFT and Principles of Symmetry

Maybe the most famous property of the Fourier domain in signal processing arises from the convolution theorem. That is to say, the convolution operation in the spatial domain is equivalent to element-wise multiplication in the Fourier domain. It is important since the interactions of linear time invariant (LTI) signals and systems can be described with the convolution operation. This property is derivable with properties of symmetry, and circulant matrices explained in section 4.3. To that end, we need to remember that diagonalizable matrices share common eigenvectors (with different eigenvalues) iff they mutually commute. Since circulant matrices satisfy these conditions, we can calculate the eigenvectors of one of them. Shift matrix is a convenient choice here, whose eigenvectors luckily happens to be the DFT basis:

Arranging the vectors into a matrix gives us . Matrix multiplication with yield the inverse DFT and with its complex conjugate the DFT. All circulant matrices share this eigenvectors, so the Fourier transform of the filter is the eigenvector matrix for the circulant matrix . Thus, we can write in diagonalized form to obtain the convolution theorem:

where is element-wise multiplication. we use the above mentioned characteristic of circulant matrices to provide a proof of work for our frequency domain architecture in the next section.

5.3 Problem Definition

[20] shed light on the dissimilarities between authentic image spectrum and the images generated by convolutional networks. These disparities mostly show themselves in the forms of high-frequency patches in the generated images spectrum, discussed by [70] as responsible for the checkerboard artifacts showed in them. Knowing that Fourier is a bijective mapping, any apparent difference in the spectrum demonstrates a flaw in learning the original distribution, which is the main objective of training GANs as a generative model. In this work, we are developing a new architecture for the discriminator to address the spectral difference where the discriminator gets the images in the Fourier and the spatial domains. We then experimentally demonstrate that our model can enhance the learned distribution, emphasizing the importance of the spectrum of images on the learning process.

5.4 Methodology

The most straightforward way for solving dissimilarities in the spectrum is to add frequency information to the discriminator. [5, 55, 56] have already proved that feeding information to the discriminator can boost the performance of the GANs for other applications. In this case, the network would better detect high-frequency components’ differences in the generated images by providing the discriminator with frequency information. Thus, it pushes the generator to produce images matching the actual image distribution’s spatial and frequency representation. Equation (5.3) shows the new loss function for the GAN incorporating the Fourier transform of the images directly in the loss.

| (5.3) |

For preprocessing the Fourier information [50] discusses the following 1D representation of Fourier transform via azimuthal integration over radial frequencies, practical enough to point out the dissimilarities of the spectrum and spatial domain. Assuming that images are .

| (5.4) |

Equation (5.4) can be seen as a coordinate conversion from Cartesian to polar and then getting the mean intensity over the radial distance. It is also used in [71] as the input to the frequency module. Despite its popularity due to more straightforward computation in 1D, performing average operation will cause loss of information in FFT amplitudes and completely disregard the phase information, which proved to be an essential part of the spectrum [72, 73]. In this work, we use both amplitude and phase of the image as the input to our frequency module to fully exploit the spectrum information.

At a high level, our work consists of two sections. First, we introduce a high-level architecture with unary losses (Figure 5.3) to incorporate frequency information into the GAN architecture in a guided manner. It will result in a discriminator more sensitive to frequency discrepancies, which pushes the generator to produce images more realistic in the frequency domain. Second, we devise a Frequency module to use in our architecture. This module’s task is to get the frequency spectrum and output a realness score. The frequency module uses a base layer we named EV-Freq which is designed based on the blueprint provided by geometric deep learning.

5.4.1 The High-Level Architecture

Importing the spectrum to the network has shown degradation in the performance of the model [71]. This phenomenon is a consequence of losing too much image information in the spectrum, hence, not having enough gradient for the adversarial training. In order to overcome this problem, we adopt the three unary terms proposed by [56] to guide the optimization.

Figure 5.3 shows a high-level view of our model’s architecture. The generator and discriminator loss in our model can be described as follow:

in the sixth line refers to the loss we chose to apply on the instances. [56] chose hinge loss for this, and we intend to do the same here. The discriminator has three modules. takes the images in the data only in the spatial domain while ’s input is the data in the frequency domain . With this model, we get two separate scores for the realness of the image’s spectrum and images in the spatial domain. Each module calculates a realness scalar score based on its input. The scalar scores respectively are and calculated with their parameters and . The desirable output should get a high score both in frequency and spatial domains. Thus, the last module gets the output of the other two modules, and , as its inputs, and the output is the joint score which defines how real the image is concerning both discussed domains. The generator’s parameters and discriminator’s are optimized to minimize the losses and respectively. The Discriminator loss trains the discriminator to identify the joint representations of its input coming from real data, , or the generator, with predicting, respectively, positive and negative values for them.

The discriminator Loss consists of three losses coming from spatial, frequency, and joint modules. Each of them is responsible for of the total loss. We recognize the equal weights for each module as a limitation for our work, and we planed to design an architecture with adaptive weights in our future works.

The generator loss, optimize the generator to misguide the discriminator to predict incorrectly, stirring the generator to create images matching both representations (in spatial and frequency domain) of the real data.

5.4.2 Frequency Module

Deep learning researchers developed various deep neural network architectures for different spaces and signals defined over them, such as sets, graphs, images, and text. However, there is no particular design for the frequency spectrum. Current frequency-driven neural networks use fully connected or convolutional neural networks. The former results in a vast search space for optimization. Therefore, a slight chance to exploit the approximation function over frequency. The latter, without any modification, is designed for spatial feature extraction, which is by itself not a helpful feature in the spectrum data. Geometric deep learning establishes a framework for unifying some of the well-defined architectures. Moreover, it provides a blueprint for systematically design a new architecture for new domains and signals. In this section, by following the GDL blueprint, we propose a new architecture EV-Freq for frequency-domain signals.

In section 4.3, shift equivariancy of the image’s spatial domain provided the inductive bias needed for designing CNNs. We use the same idea here. Our functions in the frequency domain should not disturb the equivariancy of their corresponding spatial images. Let’s denote out input image with , our chosen function operating over frequency domain from our designed hypothesis class as , and also . In other words, is the Fourier inverse of the output. Figure 5.4-a shows the relationship.

As we discussed in section 5.2, we can make the Fourier transfer of a function by matrix multiplication with matrix . We use this notation in the following proof whenever we perform a Fourier transform on a signal. Aimed at keeping the equivariance in the spatial domain should satisfy the following equation: (Figure 5.4-b)

| (5.5) |

In other words, the exact shift on input should be applied to the spatial domain image corresponding to the output Fourier spectrum. From figure 5.4-a we also have:

| (5.6) |

Fourier matrix is Unitary orthogonal hence, , Thus from equation (5.6) we get:

| (5.7) |

By putting equation (5.7) in equation (5.5):

| (5.8) |

From properties of circulant matrices discussed in section 5.2, contains the eigenvectors of in each row. Hence, whera is a diagonal matrix, consisting of ’s eigenvalues on its diagonal. Knowing that we can rewrite equation (5.8) as:

Finally we get to our desired constraint on the function :

| (5.9) |

is non-symmetric orthogonal, hence its eigenvalues are complex and roots of with absolute value of and they are located and the diagonal of . Thus, if we define any function that keeps the phase intact, the equation (5.9) will be satisfied.

Now that we found a set of functions operating on the spectrum, keeping the spatial equivarincy, our next step is to design an architecture satisfying the resulted constraint. To that end, we first perform the Fourier transform and separate amplitude and phase. While we keep the phase intact, we pass the amplitude from a residual block with spectrum normalization. The spectral normalization is an attempt to stabilize GAN training introduced by [sngan]. Performing max-pooling will change the dimension of the amplitude. We get the pooling indices and apply them to the phase to preserve the phase while sustaining its dimensions compatible with the amplitude. Since it is an element-wise operation over the phase matrix, it does not jeopardize the equivarincy. After all the computation on amplitude, we append the phase to get a complete spectrum representation. In the end, we perform a Fourier inverse to get back to the spatial domain and input to the next layer. Figure 5.5 shows the different elements of the architecture and their relationship together.

Chapter 6 Experiments

6.1 Experimental Setup

6.1.1 Systems and Baseline

The systems used for the experiments are provided by the Laboratory for Neural Computing for Machine Learning, the specifications of the systems are listed in the table 6.1

| System List | |||

|---|---|---|---|

| System | CPU | Memory | GPUs |

| Speech 8 | 4-core CPU (Xeon W-2125) | 32GB | 1 GTX 1080, 8GB memory |

| Text | 6-core CPU (i7-5820K) | 64GB | 4 TITAN X, 12GB memory |

| Video | 6-core CPU (Xeon E5-1650) | 64GB | 3 TITAN X, 12GB memory |

| Image | 6-core CPU (i7-6800K) | 128GB | 4 GTX 1080, 8GB memory |

The experiments are all conducted with the python version 3.7, PyTorch version 1.7.1 utilized with CUDA 10.1, with torchvision version 0.8.2, and torchaudio version 0.7.2.

SNGAN [sngan] is the baseline chosen for the GAN experiments. It uses spectral normalization (normalization of the weight matrices by the largest singular value) in its convolutional layers. It is a fairly stable version of GANs, and it is used in other frequency-driven efforts for GAN improvements such as [71], so it makes our work comparable with others. The implementation is done based on Mimicry [74] which is a lightweight PyTorch library aimed towards the reproducibility of GAN research. It is introduced in the CVPR 2020 workshops to resolve (in their words): "(a) Standardized implementations of popular GANs that closely reproduce reported scores; (b) Baseline scores of GANs trained and evaluated under the same conditions; (c) A framework for researchers to focus on the implementation of GANs without rewriting most of GAN training boilerplate code, with support for multiple GAN evaluation metrics."

6.1.2 Datasets

We used two datasets in this work, CIFAR100 [75] and stl-10 [76]. The first one consists of 3232 images in 100 classes developed by the University of Toronto. It has 600 images in each class, which makes it a total of 60000 images. There are 500 training and 100 testing images in each class. Since in the unsupervised problem of GAN, we do not need a separate testing class, we used all 600 images for the training purpose. This dataset provides us with images with different features due to its variety in classes. It is important for us since our objective is to see the improvement of the GANs for the general problem of creating synthetic images, not as a class conditional problem. Some examples of the classes are animals, insects, fruits and vegetables, plants, household devices and furniture, people, plants, outdoor and indoor scenes with different lightings and vehicles. Stl-10 contains 10 classes of images: airplane, bird, car, cat, deer, dog, horse, monkey, ship, and truck. in each 500 training and 800 testing images which we used both for training. The resolution of the original images is 9696. However, we resized the images to 4848 for memory-related and comparison reasons. The images in this dataset are acquired from rescaling of the ImageNet [53] dataset.

6.1.3 Optimization and GAN Hyperparameters Settings

We used Adam optimizer for both generator and discriminator with the setting: . The learning rate is set to with a linear decay throughout the training. The batch size is set to and we adopt Xavier initialization for the all neural network weights. We update discriminator 5 times for each generator update to keep the training balance. The architecure for generator for each dataset is reported in the table 6.2 and 6.3, for the discriminator spatial path in table 6.4 and 6.5, for the discriminator frequency path in table 6.6 and 6.7 and finally for the last unitary module of the discriminator in tables 6.8 and 6.9.

The FFT input to the frequency path consists of a double-sided FFT of the gray-scaled images. We chose to make our images monochrome since color frequencies possess less importance than brightness frequencies. We needed our module to focus on the brightness frequencies for this step. The addition of the color frequencies to the path is in our plans for future works.

Notations for reading the tables are: ResBlock: A residual block with a compatible shortcut whether it has down or up a sample or not, CONV: a convolutional layer with the written specifications, SNCONV: a convolutional layer with spectral normalization, K: kernel size, S: stride size, P: padding size, N: number of output channels, FC: fully connected layer with number of input and output neurons, Up: an upsampling procedure, PhaseAttach: procedure of attaching the phase which is pooled the same as amplitude in parallel, BN: batch normalization

| Layer | Input Output Shape |

|---|---|

| FC-(128,2048), Reshape | (128) (256,4,4) |

| ResBlock: SNCONV-(N256,K3,S1,P1), BN, ReLU, Up | (256,4,4) (256,8,8) |

| ResBlock: SNCONV-(N256,K3,S1,P1), BN, ReLU | (256,8,8) (256,8,8) |

| ResBlock: SNCONV-(N256,K3,S1,P1), BN, ReLU, Up | (256,8,8) (256,16,16) |

| ResBlock: SNCONV-(N256,K3,S1,P1), BN, ReLU | (256,16,16) (256,16,16) |

| ResBlock: SNCONV-(N256,K3,S1,P1), BN, ReLU, Up | (256,16,16) (256,32,32) |

| ResBlock: SNCONV-(N256,K3,S1,P1), BN, ReLU | (256,32,32) (256,32,32) |

| ResBlock: SNCONV-(N256,K3,S1,P1), BN, ReLU | (256,32,32) (256,32,32) |

| ResBlock: SNCONV-(N256,K3,S1,P1), BN, ReLU | (256,32,32) (256,32,32) |

| BN, ReLU, CONV-(N3,K3,S1,P1), Tanh | (256,32,32) (3,32,32) |

| Layer | Input Output Shape |

|---|---|

| FC-(128,18432), Reshape | (128)(512,6,6) |

| ResBlock: SNCONV-(N256,K3,S1,P1), BN, ReLU, Up | (512,6,6) (256,12,12) |

| ResBlock: SNCONV-(N256,K3,S1,P1), BN, ReLU | (256,12,12) (256,12,12) |

| ResBlock: SNCONV-(N128,K3,S1,P1), BN, ReLU, Up | (256,12,12) (128,24,24) |

| ResBlock: SNCONV-(N128,K3,S1,P1), BN, ReLU | (128,24,24) (128,24,24) |

| ResBlock: SNCONV-(N64,K3,S1,P1), BN, ReLU, Up | (128,24,24) (64,48,48) |

| ResBlock: SNCONV-(N64,K3,S1,P1), BN, ReLU | (64,48,48) (64,48,48) |

| ResBlock: SNCONV-(N64,K3,S1,P1), BN, ReLU | (64,48,48) (64,48,48) |

| ResBlock: SNCONV-(N64,K3,S1,P1), BN, ReLU | (64,48,48) (64,48,48) |

| BN, ReLU, CONV-(N3,K3,S1,P1), Tanh | (64,48,48) (3,48,48) |

| Layer | Input Output Shape |

|---|---|

| ResBlock: SNCONV-(N128,K3,S1,P1), ReLU | (3,32,32)(128,32,32) |

| ResBlock: SNCONV-(N128,K3,S1,P1), ReLU, AvgPool-(K2,S2) | (128,32,32)(128,16,16) |

| ReLU, ResBlock: SNCONV-(N128,K3,S1,P1) | (128,16,16)(128,16,16) |

| ReLU, ResBlock: SNCONV-(N128,K3,S1,P1), AvgPool-(K2,S2) | (128,16,16)(128,8,8) |

| ReLU, ResBlock: SNCONV-(N128,K3,S1,P1) | (128,8,8)(128,8,8) |

| ReLU, ResBlock: SNCONV-(N128,K3,S1,P1) | (128,8,8)(128,8,8) |

| ReLU, ResBlock: SNCONV-(N128,K3,S1,P1) | (128,8,8)(128,8,8) |

| ReLU, ResBlock: SNCONV-(N128,K3,S1,P1) | (128,8,8)(128,8,8) |

| ReLU, GlobalSumPool | (128,8,8) (128) |

| Layer | Input Output Shape |

|---|---|

| ResBlock: SNCONV-(N64,K3,S1,P1), ReLU | (3,48,48)(64,48,48) |

| ResBlock: SNCONV-(N64,K3,S1,P1), ReLU, AvgPool-(K2,S2) | (64,48,48)(64,24,24) |

| ReLU, ResBlock: SNCONV-(N128,K3,S1,P1) | (64,24,24)(128,24,24) |

| ReLU, ResBlock: SNCONV-(N128,K3,S1,P1), AvgPool-(K2,S2) | (128,24,24)(128,12,12) |

| ReLU, ResBlock: SNCONV-(N256,K3,S1,P1) | (128,12,12)(256,12,12) |

| ReLU, ResBlock: SNCONV-(N256,K3,S1,P1), AvgPool-(K2,S2) | (256,12,12)(256,6,6) |

| ReLU, ResBlock: SNCONV-(N512,K3,S1,P1) | (256,6,6)(512,6,6) |

| ReLU, ResBlock: SNCONV-(N512,K3,S1,P1), AvgPool-(K2,S2) | (512,6,6)(512,3,3) |

| ReLU, ResBlock: SNCONV-(N1024,K3,S1,P1) | (512,3,3)(1024,3,3) |

| ReLU, ResBlock: SNCONV-(N1024,K3,S1,P1) | (1024,3,3)(1023,3,3) |

| ReLU, GlobalSumPool | (1024,3,3) (1024) |

| Layer | Input Output Shape |

|---|---|

| ResBlock: SNCONV-(N128,K3,S1,P1), ReLU | (1,32,32)(128,32,32) |

| ResBlock: SNCONV-(N128,K3,S1,P1), ReLU, MaxPool-(K2,S2) | (128,32,32)(128,16,16) |

| PhaseAttach, IFFT, GlobalSumPool | (128,16,16)(128) |

| Layer | Input Output Shape |

|---|---|

| ResBlock: SNCONV-(N256,K3,S1,P1), ReLU | (1,48,48)(256,48,48) |

| ResBlock: SNCONV-(N1024,K3,S1,P1), ReLU, MaxPool-(K2,S2) | (256,48,48)(1024,24,24) |

| PhaseAttach, IFFT, GlobalSumPool | (1024,24,24)(1024) |

| Layer | Input Output Shape |

|---|---|

| FC-(128,1) | (128) spatial (1) s-score |

| FC-(128,1) | (128) frequency (1) f-score |

| Concat | (128) spatial, (128) frequency (256) |

| FC-(256,1) | (256) (1) j-score |

| Layer | Input Output Shape |

|---|---|

| FC-(1024,1) | (1024) spatial (1) s-score |

| FC-(1024,1) | (1024) frequency (1) f-score |

| Concat | (1024) spatial, (1024) frequency (2048) |

| FC-(2048,1) | (2048) (1) j-score |

6.2 Stability of The Training

Before evaluating any result, we need to ensure that the model converges. Otherwise, the results can not serve as an indication of the superiority of our model. In GAN tasks, unlike conventional tasks, the objective in training is not improving variables such as decreasing a loss. We care about a gradual and continuous improvement in both agents involved in the process, meaning generator and discriminator. If any agents get too strong with respect to the other, the balance is disturbed, and the model will fail. This multi-agent scheme made GANs more susceptible to the instability of training. In fact, GANs are famous for being hard to train due to instability, and there have been several works to make them more stable. In this section, we provide empirical substantiations that our extension to the GAN framework will not jeopardize the stability of the baseline. We further discuss how each of the spatial and frequency modules acts separately convergence-wise and how they contribute to the convergence of the discriminator in total.

When networks are out of balance, we see a fast convergence in one of them, showing that the converged network has won the competition. It can happen when one network is inherently more potent. To address this kind of problem, we update the weaker network more frequently. In our cases, the baseline updates the discriminator 5 times for each generator update, and our extension uses the same ratio without instability. The other cause of failure in GANs is mode collapse. A mode collapse refers to a generator model that can generate one or a small subset of different outcomes or modes. It happens when the generator finds a way to produce plausible outcomes for the discriminator without imitating the distribution. Mode collapse is easily detectable by looking at the generated images since they come from a small set of images and are not diverse. In the learning curve, it shows itself as an oscillatory behavior in the generator loss (and, in some cases, discriminator loss).

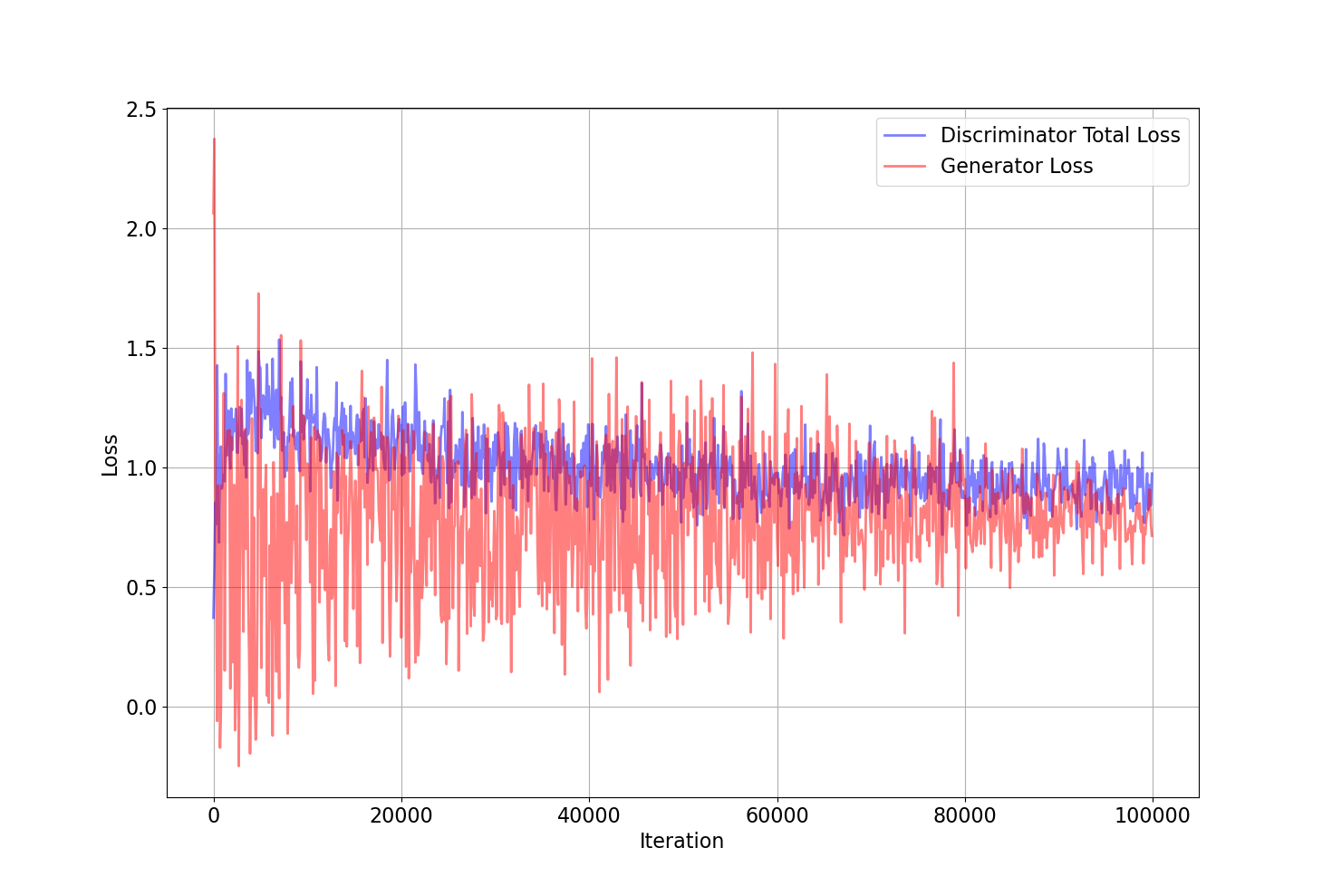

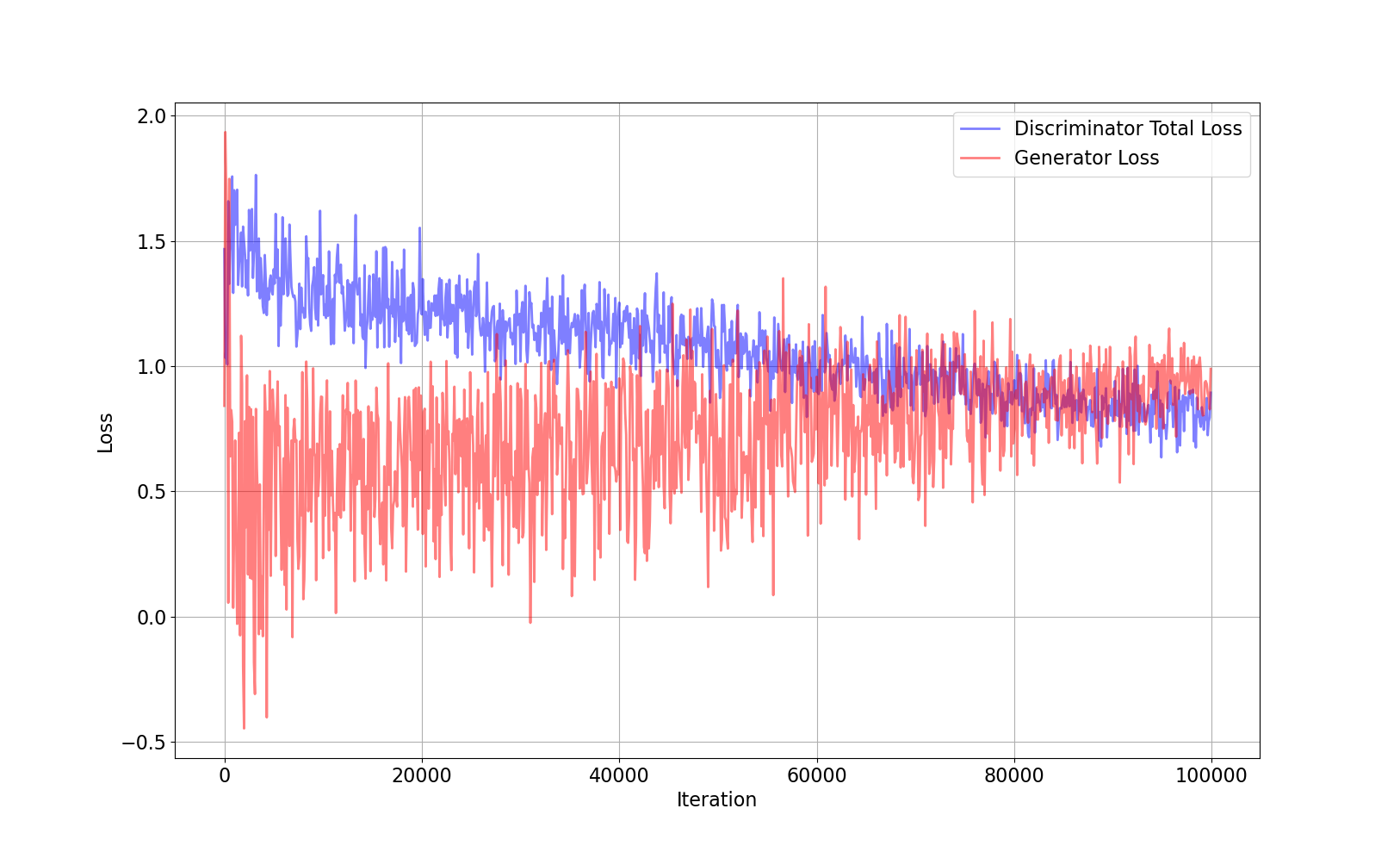

Figures 6.1 and 6.2 show the Discriminator’s total loss, sum of the discriminator’s fake and real losses, and generator’s loss together for CIFAR100 and stl10 datasets. The curves are in a balanced manner showing fair competition while converging to the same loss. There is no instability in the curves, whether a fast convergence or an oscillatory behavior. For a deeper look, each discriminator loss consists of 3 other losses coming from the spatial and frequency modules and one joint loss from the J module. Figures 6.3 and 6.4 show the derailed losses for the mentioned datasets. Frequency loss has a lower value compared to the spatial loss in CIFAR100 and greater in the stl10. It shows that the frequency domain is as useful as the spatial domain to detect the fake images for the discriminator. The joint loss trains to gives most of its attention to the more functional loss. The power of unitary modules emerges here. While spatial and frequency losses equally contribute to the of the discriminator loss, the joint loss which is responsible for the last of the total loss, automatically learns to adopts its weights for better use of each domain.

Figures 6.5 and 6.6 show the raw scores used by discriminator for classification. The real scores are expected to be above zero and fake scores below zero. Although joint score mostly follows the spatial scores, frequency scores show fewer fluctuations and well above and below the 0 threshold, making it a valid feature for classification.

To recapitulate, frequency features showed to be a good representative for fake and real images in the discriminator and made the discriminator more powerful. The Generator also shows the capacity to be pushed along with the frequency extension in the discriminator without losing stability.

6.3 Evaluation in Spatial Domain

We use the conventional methods, Frechet Inception Distance and Inception Score explained in the section 2.3 for quantitative comparisons in the spatial domain. FID is calculated over 50000 samples of fake and real images. Inception score is computed over 50000 samples from the fake images with 10 splits. The evaluation is against the baseline (SNGAN) [sngan] and the SSDGAN [71] model. SSDGAN, the same as our model, uses frequency domain to enhance the discriminator. They use the azimuthal Integral of the amplitude as an input for their frequency module, which is a fully connected layer. Their model disregards the phase information of the FFT completely. While the amplitude of the FFT carries the intensity of each frequency, Phase information contains the location of these frequencies. To a human eyes phase information looks more important; hence we have not set them aside in our model. The other drawback of their model is using the azimuthal integration over FFT. By binning the same frequency resolutions, their model will lose the amplitude location information of the FFT. On the other hand, Ours takes the FFT as it is without any information loss as input.

table 6.10 and 6.11 demonstrate the quantitative comparison. Our model outperforms all other models except the inception score for stl-10, which is less but comparable to SSDGAN. However, SSDGAN has some major flaws, which we fully describe in the next section. Note that for the FID, the lower, the better, and for inception score, the higher, the better.

| Model | FID | Inception Score |

|---|---|---|

| SNGAN | 22.61 | 7.57 |

| SSDGAN | 20.90 | 7.71 |

| FreqGAN | 19.63 | 7.82 |

| Model | FID | Inception Score |

|---|---|---|

| SNGAN | 39.56 | 8.04 |

| SSDGAN | 36.41 | 8.47 |

| FreqGAN | 35.89 | 8.31 |

Figure 6.7 and 6.8 show some examples of the images created based on CIFAR100 and stl10 datasets respectively. We have not cherry picked the images and they are random images generated by the generator of our model.

6.4 Evaluation in Frequency Domain

Unlike the spatial domain, Evaluation in the frequency domain does not have a well-established method. To illustrate how generated images are similar to the real ones in the frequency-domain power spectrum is a good candidate. To further show the difference, we use the power spectrum distance between real images and those produced by the GAN models, normalized by the spectra of real images. (used in [77])

We also introduce the 2D frequency amplitude gap, which uses the average distance between the expected 2D-frequency gap between the amplitude of the authentic images and synthetic images normalized by those of authentic images.

Figure 6.9 shows the power spectrum of our model with real images and the other two models in the stl10 dataset. FreqGAN has the closest distance from the real image. It is more clear looking at the normalized power spectrum distance in figure 6.10. The ideal generator should have a constant zero distance throughout all the frequency bins. To our surprise, despite getting the azimuthal integration of the magnitude, which is essentially a variation of the power spectrum itself, SSDGAN has even poorer performance than the baseline SNGAN in the power spectrum. We believe this is owing to fully connected architecture. The fully connected neural networks have a dense hypothesis class in the frequency domain; therefore, estimating the best function even if the neurons are not many is hard for the neural network. In addition, the lack of unitary modules will cause an uncontrolled effect of these not-well-trained elements in the training process. FreqGAN, contrastingly, shows an almost flat curve over all frequency bins. addressing the high-frequency discrepancies in the SNGAN.

Figure 6.11 measures the same idea in a more direct way with the normalized 2d frequency spectrum gap. The color map shows the difference; the darker the pixel, the more significant the difference between the fake and authentic images. FreqGAN here shows the best performance overall again, while SSDGAN shows inferior performance than the baseline.

For further discussion, we believe the difference of our work will show itself for higher resolution photos more significantly. The high-resolution photos suffer more from high-frequency discrepancies, and our model shows a spectacular result in addressing them. In our plans for future work, we have in mind to further analyze the relation of resolution with the performance of our model.

6.5 Frequency Architecture Investigation

We used the powerful tool of geometric deep learning to design our architecture for the frequency domain. In this section, we discuss its performance over the fully connected architecture and regular CNN. We trained 3 trails of neural networks for each architecture. The fully connected version is the same as the work done by SSDGAN [71]. The CNN version is equipped with spectral normalization as well to keep the comparison fair. Tabel 6.12 shows that GDL based architecture outperforms other models by a significant margin.

| Model | FID |

|---|---|

| SNGAN (Baseline) | 22.61 |

| Fully Connected | 21.57 |

| Unmodified CNN | 22.01 |

| GDL inspired CNN | 19.63 |

Chapter 7 Conclusion

7.1 Conclusion

This thesis brought up current flaws in the CNN-based image generation techniques (such as generative adversarial networks), which made them easily distinguishable from authentic images. Then we investigate the possible justification for such flaws both mathematically and empirically. We found the main reason in high-frequency discrepancies between the spectrum of synthetic and authentic images. We showed that this disparity has its roots in the transconvolution layers of CNNs. This work proposed a solution for the GANs. An additional path for the discriminator, processing the spectrum of the images. Knowing the frequency representation of the images, the discriminator is equipped with direct access to detect problematic high-frequency elements. On the other side of the story, the generator adopts its weight to reduce the high-frequency elements, resulting in more realistic, hard to distinguish images.

Different datasets exhibit different distributions in their spatial and frequency features. Some are easier to detect in frequency, some in spatial. The proposed unitary modules in this work adjust the amount of attention the model gives to frequency or spatial domains based on their importance in the data. This scheme in leading the optimization not only resulted in better outputs but also stabilized the training.

This work empirically investigates different architectural designs for the frequency path of the discriminator, including fully connected and CNN schemes, and comes up with a brand new architecture EV-Freq. EV-Freq has systematically designed based on the geometric deep learning blueprint. It utilizes the physical constraints of shift invariancy in the images as an inductive bias to reduce the search space and improve the neural network’s estimation ability on the spectrum of the corresponding images. This thesis provides complete theoretical analysis and proofs for the newly design architecture.

We evaluate our extension to the GANs framework over two datasets, CIFAR100 and stl10, in spatial and frequency domains. In the conventional spatial domain, it outperformed the baseline by a significant margin. Our model also outperformed the other frequency-driven effort in improving GANs, SSDGAN. FreqGAN achieved an outstanding performance in the frequency domain, generating images almost identical to real images concerning the power spectrum. GANs are notorious for training instability. A section of this work focused on empirical substantiations that the proposed extension would not jeopardize the stability of the baseline.

7.2 Future Works

The future steps for the current research are listed as follow:

-

•

This work improves the quality spectrum characteristics of the latent distribution of the generator by improving the discriminator power in frequency. Yet, it does not propose any direct change in the generator’s architecture. Investigating new designs for the generator to facilitate adopting with the frequency features is one of our prominent future lines of research

-

•

EV-freq introduced a shift equivariant function operating on frequency domain; however, these classes of functions keep the phase information intact, in the future steps, we continue our search to find functions operating on the phase of FFT spectrum to utilize them in the detection process of the discriminator.

-

•

Current work uses the FFT of the grayscaled version of the images. In other words, it just takes advantage of the brightness frequency in the images. For future steps, we intend to extend our work to a colored version of the image utilizing the color frequency information in our model

-

•

New PyTorch update introduces complex autograd. Since back-propagation is now available for complex numbers, we can readily work with complex losses. It promises a new architectural design with no need for IFFT at the end of the block, which can improve our performance in the spectral domain and improve the memory usage and computation complexity.

References

- [1] Ian Goodfellow et al. “Generative Adversarial Nets” In Advances in Neural Information Processing Systems 27 Curran Associates, Inc., 2014 URL: https://proceedings.neurips.cc/paper/2014/file/5ca3e9b122f61f8f06494c97b1afccf3-Paper.pdf

- [2] Alec Radford, Luke Metz and Soumith Chintala “Unsupervised representation learning with deep convolutional generative adversarial networks” In arXiv preprint arXiv:1511.06434, 2015

- [3] Tero Karras, Samuli Laine and Timo Aila “A style-based generator architecture for generative adversarial networks” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 4401–4410

- [4] Tero Karras et al. “Analyzing and improving the image quality of stylegan” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 8110–8119

- [5] Phillip Isola, Jun-Yan Zhu, Tinghui Zhou and Alexei A. Efros “Image-to-Image Translation with Conditional Adversarial Networks” In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) IEEE, 2017 DOI: 10.1109/cvpr.2017.632

- [6] Han Zhang et al. “StackGAN: Text to Photo-Realistic Image Synthesis with Stacked Generative Adversarial Networks” In 2017 IEEE International Conference on Computer Vision (ICCV) IEEE, 2017 DOI: 10.1109/iccv.2017.629

- [7] Scott Reed et al. “Generative Adversarial Text to Image Synthesis”, 2016 arXiv:1605.05396 [cs.NE]

- [8] Ayushman Dash et al. “TAC-GAN - Text Conditioned Auxiliary Classifier Generative Adversarial Network”, 2017 arXiv:1703.06412 [cs.CV]

- [9] Scott Reed et al. “Learning What and Where to Draw”, 2016 arXiv:1610.02454 [cs.CV]

- [10] Sergey Bartunov and Dmitry Vetrov “Few-shot generative modelling with generative matching networks” In International Conference on Artificial Intelligence and Statistics, 2018, pp. 670–678 PMLR

- [11] He Zhang, Vishwanath Sindagi and Vishal M. Patel “Image De-Raining Using a Conditional Generative Adversarial Network” In IEEE Transactions on Circuits and Systems for Video Technology 30.11 Institute of ElectricalElectronics Engineers (IEEE), 2020, pp. 3943–3956 DOI: 10.1109/tcsvt.2019.2920407

- [12] Andrew Brock, Theodore Lim, J.. Ritchie and Nick Weston “Neural Photo Editing with Introspective Adversarial Networks”, 2016 arXiv:1609.07093 [cs.LG]

- [13] Ming-Yu Liu and Oncel Tuzel “Coupled Generative Adversarial Networks”, 2016 arXiv:1606.07536 [cs.CV]

- [14] Guim Perarnau, Joost Weijer, Bogdan Raducanu and Jose M. Álvarez “Invertible Conditional GANs for image editing”, 2016 arXiv:1611.06355 [cs.CV]

- [15] Jiajun Wu et al. “Learning a Probabilistic Latent Space of Object Shapes via 3D Generative-Adversarial Modeling”, 2016 arXiv:1610.07584 [cs.CV]

- [16] Matheus Gadelha, Subhransu Maji and Rui Wang “3D Shape Induction from 2D Views of Multiple Objects” In 2017 International Conference on 3D Vision (3DV) IEEE, 2017 DOI: 10.1109/3dv.2017.00053

- [17] Momina Masood et al. “Deepfakes Generation and Detection: State-of-the-art, open challenges, countermeasures, and way forward”, 2021 arXiv:2103.00484 [cs.CR]

- [18] Hany Farid “Photo Forensics” The MIT Press, 2016

- [19] Sheng-Yu Wang et al. “CNN-Generated Images Are Surprisingly Easy to Spot… for Now” In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) IEEE, 2020 DOI: 10.1109/cvpr42600.2020.00872

- [20] Xu Zhang, Svebor Karaman and Shih-Fu Chang “Detecting and Simulating Artifacts in GAN Fake Images” In 2019 IEEE International Workshop on Information Forensics and Security (WIFS) IEEE, 2019 DOI: 10.1109/wifs47025.2019.9035107

- [21] Louise Kauffmann, Stephen Ramanoël and Carole Peyrin “The neural bases of spatial frequency processing during scene perception” In Frontiers in Integrative Neuroscience 8, 2014, pp. 37 DOI: 10.3389/fnint.2014.00037

- [22] Luke E. Hallum, Michael S. Landy and David J. Heeger “Human primary visual cortex (V1) is selective for second-order spatial frequency” PMID: 21346207 In Journal of Neurophysiology 105.5, 2011, pp. 2121–2131 DOI: 10.1152/jn.01007.2010

- [23] S. Marĉelja “Mathematical description of the responses of simple cortical cells” In J. Opt. Soc. Am. 70.11 OSA, 1980, pp. 1297–1300 DOI: 10.1364/JOSA.70.001297

- [24] John G. Daugman “Uncertainty relation for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters” In Journal of the Optical Society of America A 2.7, 1985, pp. 1160–1169 DOI: 10.1364/JOSAA.2.001160

- [25] I. Fogel and D. Sagi “Gabor Filters as Texture Discriminator” In Biol. Cybern. 61.2 Berlin, Heidelberg: Springer-Verlag, 1989, pp. 103–113 DOI: 10.1007/BF00204594

- [26] Y. Lecun, L. Bottou, Y. Bengio and P. Haffner “Gradient-based learning applied to document recognition” In Proceedings of the IEEE 86.11, 1998, pp. 2278–2324 DOI: 10.1109/5.726791

- [27] Bharath Hariharan, Pablo Arbeláez, Ross Girshick and Jitendra Malik “Simultaneous Detection and Segmentation” In Lecture Notes in Computer Science Springer International Publishing, 2014, pp. 297–312 DOI: 10.1007/978-3-319-10584-0_20

- [28] Vijay Badrinarayanan, Alex Kendall and Roberto Cipolla “SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation” In IEEE Transactions on Pattern Analysis and Machine Intelligence 39.12, 2017, pp. 2481–2495 DOI: 10.1109/TPAMI.2016.2644615

- [29] Kaiming He, Georgia Gkioxari, Piotr Dollar and Ross Girshick “Mask R-CNN” In 2017 IEEE International Conference on Computer Vision (ICCV) IEEE, 2017 DOI: 10.1109/iccv.2017.322

- [30] Alex Krizhevsky, Ilya Sutskever and Geoffrey E Hinton “ImageNet Classification with Deep Convolutional Neural Networks” In Advances in Neural Information Processing Systems 25 Curran Associates, Inc., 2012 URL: https://proceedings.neurips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

- [31] Karen Simonyan and Andrew Zisserman “Very Deep Convolutional Networks for Large-Scale Image Recognition”, 2014 arXiv:1409.1556 [cs.CV]

- [32] Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun “Deep Residual Learning for Image Recognition” In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) IEEE, 2016 DOI: 10.1109/cvpr.2016.90

- [33] Pierre Sermanet et al. “OverFeat: Integrated Recognition, Localization and Detection using Convolutional Networks”, 2013 arXiv:1312.6229 [cs.CV]

- [34] Shaoqing Ren, Kaiming He, Ross Girshick and Jian Sun “Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks” In IEEE Transactions on Pattern Analysis and Machine Intelligence 39.6 Institute of ElectricalElectronics Engineers (IEEE), 2017, pp. 1137–1149 DOI: 10.1109/tpami.2016.2577031

- [35] Joseph Redmon, Santosh Divvala, Ross Girshick and Ali Farhadi “You Only Look Once: Unified, Real-Time Object Detection” In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) IEEE, 2016 DOI: 10.1109/cvpr.2016.91

- [36] Aaron Oord, Nal Kalchbrenner and Koray Kavukcuoglu “Pixel Recurrent Neural Networks”, 2016 arXiv:1601.06759 [cs.CV]

- [37] Guilin Liu et al. “Image Inpainting for Irregular Holes Using Partial Convolutions” In Lecture Notes in Computer Science Springer International Publishing, 2018, pp. 89–105 DOI: 10.1007/978-3-030-01252-6_6

- [38] Joseph Y. Cheng et al. “Highly Scalable Image Reconstruction using Deep Neural Networks with Bandpass Filtering”, 2018 arXiv:1805.03300 [cs.CV]

- [39] Yoon Kim “Convolutional Neural Networks for Sentence Classification” In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) Association for Computational Linguistics, 2014 DOI: 10.3115/v1/d14-1181

- [40] Nal Kalchbrenner, Edward Grefenstette and Phil Blunsom “A Convolutional Neural Network for Modelling Sentences” In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) Association for Computational Linguistics, 2014 DOI: 10.3115/v1/p14-1062