Explainable Online Validation of Machine Learning Models for Practical Applications

Abstract

We present a reformulation of the regression and classification, which aims to validate the result of a machine learning algorithm. Our reformulation simplifies the original problem and validates the result of the machine learning algorithm using the training data. Since the validation of machine learning algorithms must always be explainable, we perform our experiments with the kNN algorithm as well as with an algorithm based on conditional probabilities, which is proposed in this work. For the evaluation of our approach, three publicly available data sets were used and three classification and two regression problems were evaluated. The presented algorithm based on conditional probabilities is also online capable and requires only a fraction of memory compared to the kNN algorithm.

I Introduction

Neural networks, decision trees or support vector machines are used in many industrial areas today. In sensor technology and data analysis these machine learning methods are used to convert a multitude of complex measured values and data into useful information [68, 1, 53, 65, 34, 35, 20, 18, 19, 2]. This includes position and orientation [78, 62, 74, 58], monitoring of biometric data [65, 52, 21, 11, 3, 22], control of robots [58, 74, 17, 56], malware analysis [72, 13, 54], credit rating [75, 55, 61, 63], visualization [36, 38, 48, 39], eye movement evaluation [40, 46, 24, 25, 33, 23, 26], and much more. The ever-increasing number of applications for machine learning methods is due to the fact that they are very economical to use compared to the classical algorithms which usually demand for a more detailed knowledge about the modeled system and are less generic. Another advantage of machine learning methods is the steadily improving performance compared to classical algorithms. As a result, new areas where automation through machine learning methods is gaining ground are constantly growing.

A disadvantage of machine learning methods is that they cannot be easily explained and validated [69, 12]. In many areas, where living beings are involved and safety standards must be guaranteed, the application of complex procedures is not possible because their reliable function cannot be proven. Of course, there are exceptions such as the k Nearest Neighbour method (kNN) or probabilistic models such as the Gaussian Mixture Models (GMM). Unfortunately, these methods lack flexibility to work well for all problems and for example in case of the kNN, huge training datasets result in a computationally expensive classifier.

The range of application of an automatic online validation for machine learning algorithms [34] is manifold since classical algorithms are already replaced by machine learning algorithms [47, 37, 32, 30, 45, 28, 31, 41, 27, 43, 42, 44, 29]. An important point here is of course the validation of the output of the machine learning model. The most common areas of application are online measurement systems [77, 16] where data is collected via sensors from the areas of environmental [15, 5], biological [4, 73] or technical systems [67, 71]. Thus, these measuring systems are used for monitoring, control and long-term data acquisition, although in most cases they are not safety-critical applications. Nevertheless, a validation of the result is desirable, because this way a new run can be carried out or the user can be informed that the result is probably wrong and can thus exclude it for a data analysis. Also, the online validation can indicate defective sensors, because the output is certainly no longer valid for defective sensors. In the case of safety-critical applications it is even mandatory to validate the model and in case of very high safety requirements like a nuclear plant it is even mandatory to prove the functionality of the model.

In this work, we address the validation and explainability of the results of complex machine learning algorithms. For this purpose, we present an additional reformulation of the general problem definition for machine learning methods, which is described in detail in Section III.

Our contributions to the state of the art are as follows:

- 1

-

Reformulation of the general problem definition for machine learning methods.

- 2

-

Provable and explainable validation for machine learning algorithms in general.

- 3

-

Realization of our proposed validation approach using conditional probabilities and a kNN model with feature-based qualitative metrics.

- 4

-

Empirical evaluation of our approach to classification and regression problems on public data sets.

II Related Work

The first approach for Neural Network validation was published in [6, 7]. As with our approach, the data is considered as a distribution and a manually defined threshold is used to decide whether the data is valid or not. The biggest difference to our approach is that we do not have to set thresholds, we formulate an additional quality metric per data input value, and we formulate the classes and quantified regression steps as conditional probability. In [57] the authors used the difference in the responses of different neural network architectures trained on different subsets of the training data. If the difference between the model responses was too large, an invalid response can be assumed. A disadvantage of this approach in practical application is that it does not trace back to a cause of the error regarding the input data. A list of restrictions for neural networks and machine learning algorithms in general is given in [59, 60]. One of the main problems that neural networks face is that they often lack a kind of white-box view of behaviour [59, 60]. A layered validation and verification of online adaptive neural networks was presented in [70]. Here, the input and output of the neural network are monitored and confidence intervals are calculated using input data. If the input data differs too much from the previous seen input data, the output is considered uncertain. Since this work is in progress, as stated by the authors, the exact calculation was not explained in detail.

The latest approaches to the validation of neural networks attempt to validate every single neuron in a network. This is done by reformulating it into a linear programming formalism which is then checked using box constraints [9, 10]. Theoretically, it is the best approach but since it cannot be used online, only given input and output conditions are tested, and not all neurons can be validated in general, a lot of research is needed before these approaches can be applied in practice. In addition, [9, 10] are limited to neural networks.

III Method

In this work, we deal with the validation and explainability of the results of complex machine learning algorithms. For this purpose, we present an additional reformulation of the general problem definition for machine learning methods. In the case of classification, this problem is given by , where is the function which is calculated by the machine learning method, is the -dimensional data or sensor values and is the class output. To validate the output class , we suggest a ”backprojection”, which is a binary classification. Thus the ”backprojection” can be represented as . This classification indicates whether the output class represents a valid solution for the given input data . The advantage of this formulation is that the complex multiclass problem can be mapped to a simpler binary classification problem. Therefore, simple machine learning methods for can be used without having to expect large losses in the accuracy of the prediction method .

In the case of regression, the underlying problem is . To make our procedure applicable to such a problem, we use quantization on the real output to form a multiclass classification problem. The formula is where is the quantization and each can have a different division. With this quantization, the ”backprojection” can be defined as . This means that each output value of the machine learning model is considered either as valid or invalid .

Since in real or industrial applications the pure class assignment is usually not sufficient, a quality signal can be determined by the distance to the individual classes () and this can also be mapped to the value range . In the case of a kNN for the backprojection, the distance of the k selected neighbors to the input data would be exactly this distance. Mapped to the value range , this would allow the statement how similar the input data is to already known data. Thus, the result of the machine learning process can be easily explained using the training data. Since a kNN requires a lot of memory for the individual training samples and is expensive to calculate with increasing amounts of training data, we describe the backprojection by means of a conditional probability in the following. In addition, the individual input data streams are mapped to distributions and thus allow to evaluate the quality of individual data streams in general.

Figure 1 shows the process of our novel approach for the online validation of the results from machine learning approaches. In Figure 1, we selected a neural network as an instance. The upper part (orange) is the simple execution. Here the network is applied to sensor data and delivers a result.

The lower part (green) is our approach to validate the result. In case of a regression, the results of the neural network are quantified to form conditional probability distributions. This is already given for the classification. Each quantification level or class forms an index to probability distributions calculated on the sensor data. Since these form a high-dimensional vector in most cases, either a dimension reduction must be applied or each individual sensor value must be represented as a probability distribution. Using the quantified result of the network, it is now possible to calculate from the distributions how certain one can be that the input data matches the data already seen (training data) with respect to the result. This gives you the information whether you have already evaluated the net on similar data and the result is the same or not. It makes it possible to validate online whether the sensor values match the data that was used for the approval of the algorithm. Another advantage of the distributions on the sensor data is the quality of the distribution. In high-dimensional input vectors, there may be single values that do not contribute to certain results. Therefore the distribution has no significance or a high degree of uncertainty for the result. This can be measured by the difference of the integrals of the raw data and the distribution. This measure of uncertainty in combination with the assignment to quantified results allows to weight the contribution of individual distributions to the validity differently.

In the following, the whole procedure is described mathematically. During the operational mode, we aim to compute a conditional probability

| (1) |

which is the probability of given the output at time of the machine learning approach. Since the input raw values and the output are vectors, we consider each index of the input vector and each position of the output vector separately. contains the discretized sensor values where the index labels the intervals. The validity of the output is judged by comparison of the distribution in Equation 1 with a second distribution which was fitted beforehand against the training dataset:

| (2) |

Equation 2, represents the calculated distribution for , which was fitted using the input data and the ground truth labels together with the network output. Therefore, this conditional probability distribution can be understood as a reliability function of the network. This can be done either as histogram or using a fitting algorithm to an formally defined function like a gauss distribution. The formally defined function itself is freely selectable, but care must be taken that the difference between the output of the formally defined distribution and the raw distribution is as small as possible. We continue with the calculation of the normalized intersection of Equations 1 and 2:

| (3) |

Equation 3 determines the quality of the distribution and corresponds to the Jaccard Index for area comparisons. This means that the more similar the two distributions are, when superimposed, the higher is the quality. For the comparison of distributions the Kullback-Leibler divergence is normally used, and is characterized by an asymmetry . As we have no preferred ordering and store the distributions as histograms, it makes sense to use the symmetric Jaccard Index for our implementation. The normalization leads to a maximum value of 1 and a minimum value equal to 0. is needed to prevent a division by 0. An advantage of this formulation is that it is very sensitive to gaps in the distribution and results in a poorly rated quality if the data base is small. With these three equations we can formulate the overall evaluation of new input data with an output value at time .

| (4) |

Equation 4 describes the computation of the validity of each th response of the machine learning algorithm separately, which is illustrated as neural network in Figure 1. The computation consist of the quality of the features () and the reliability of the network output per feature (). For normalization and to ensure numerical stability, the sum between the product of the quality of the features and the reliability of the network output per feature is divided by the maximum values of the reliability of the network output per feature () since those values are the maximal outcomes of the product ().

For a large validity , the scalar product has to be large, which is the case for a precomputed distribution that coincides well with the one based on the model data alone . Then, and are similar, which maximizes the numerator in Equation 4. The normalization is done using the sum of maxima of all the included distributions which leads to a preference of homogeneous distributions with small maxima. The validity has a maximum value of 1 and a minimum value of 0. This quality signal can be used to measure whether the input data follows the data used to validate the algorithm or not. Thus, it is possible to make online statements whether the response of the neural network is reliable and has been tested and is therefore explainable and comprehensible. Alternative formulations for Equation 4 can use the median of all computed values or predefine a minimum of quality, by using a threshold.

The formulation of Equation 4 is limited by the need for sufficiently many data points which correspond to the assigned output and provide a high quality according to Equation 3. If those data is not given, no statement can be made with regard to the input data, since these cannot be represented as a distribution either.

To circumvent this limitation we follow the principle of divide and conquer. We determine a large number of distributions that fit as well as possible to a local value of the output. This way we simplify the complexity of the whole distribution to many smaller distributions. In addition, we also divide the nonlinear output into ranges (quantization) or use the predefined classes. Since the individual outputs of the machine learning algorithm are also considered separately, the validity can be evaluated separately for each output, but also in total for the whole network (Equation 5).

| (5) |

Equation 5 describes the evaluation of the whole network over all outputs where the total number of outputs is . This is a simple average where each output can be weighted to obtain a weighted average validity ( with as weight per output).

IV Evaluation

In this part of the work, different non-linear machine learning approaches (neural networks, gradient boosted decision trees, and bagged decision trees) are applied to three public data sets. For each machine learning approach we evaluated different model sizes, but only a small part is shown here. The remaining evaluations are in the supplementary material. For the evaluation, we used three classification challenges and two regression challenges. For the validation, we show the results of the both approaches discussed in the last section: The probabilistic approach, and the kNN approach based on the reformulation and histograms as distributions. First, we describe the public data sets used.

Beach Water Quality 111https://data.cityofchicago.org/Parks-Recreation/Beach-Water-Quality-Automated-Sensors/qmqz-2xku: This data set contains the sensor data from the water quality of the Chicago Park District along Chicago’s Lake Michigan lakefront. It is recorded on six different beaches which are our target classes for the classification experiment. For regression, we used the wave height sensor response. In total it has 39.469 entries but we omitted all records where at least one value was missing. This was done to make all machine learning based approaches like neural networks applicable to the data. Therefore, we used 10.034 records for our evaluation.

Classify Gestures by Reading Muscle Activity 222https://www.kaggle.com/kyr7plus/emg-4: This data set was recorded using a MYO armband which records the signals from eight EMG (Electromyography) sensors. Eight of such recordings are connected (64 features per class) and linked to a muscle activity (Gesture classes were : rock - 0, scissors - 1, paper - 2, ok - 3). These gestures are the targets for our classification experiment. We did not use this data set for regression since the sensor values would not make much sense as targets. In total the data set has 11.678 records which we all used in our experiment.

Red Wine Quality 333https://www.kaggle.com/uciml/red-wine-quality-cortez-et-al-2009: This data set was published in [14]. It is related to red variants of the Portuguese ”Vinho Verde” wine and contains eleven features like fixed acidity, volatile acidity, citric acid, residual sugar, chlorides, free sulfur dioxide, etc., as well as a numerical quality measure. For classification, we used the numeric quality measure as target. For our regression experiment, we selected citric acid as target value. It is a highly unbalanced data set with 1.599 records. We used all of the records for our evaluation.

IV-A Evaluated Machine Learning Approaches

To test our approach extensively, we evaluated three machine learning algorithms in four different configurations each. The selected machine learning algorithms are neural networks, bagged ensemble of decision trees, and gradient boosted ensemble of decision trees. We selected those because all of these approaches are known to perform very well on nearly all data sets. For the neural network, we used 20, 30, 40, and 50 neurons as one hidden layer before the final output neuron. For the regression we used Levenberg Marquart backpropagation [49] for training. For the classification we used Scaled Conjugated gradients [66]. The bagged and boosted ensembles were used with 5, 10, 15, and 20 decision stumps. For the classification with bagging, we used the standard randomized approach form random forests [8] and quantile regression forests [64] for the regression. The classification with boosting was done using totally corrective boosting [76], and for the regression we used least-squares boosting [51].

The validity signal was estimated using a kNN [50]. As an alternative using our proposed approach without explicit distribution fitting for the features, we used the estimated distribution from the data as histogram. This means that our quality of the distribution is always one (Equation 3). For the kNN we set K as the number of features in the data set and used euclidean as distance metric. In addition, we compared the distance to all known entries instead of an approximation with a tree structure. In addition, we compare our results with the previous work [6] to show the advantage of our algorithm.

The train and test split was done using a 50% to 50% split. This means we used 50% for training of the classifier and for the validation of them. This data was also used to train the validation algorithms. The other 50% are for evaluation only.

Since showing the complete evaluation of all the models for three classifications and two regression problems would exceed the content of this work, only the results of the neural network with 50 neurons in the hidden layer were included. All other results are found in the supplementary material.

IV-B Results

Figure 2, 3 and 4 show the classification results as well as the validation signal. The classification results are displayed as a confusion matrix for each data set (top plot). The three central plots show the validation signal on the correctly recognized classes per algorithm (left kNN, center Probability, and right re-implementation of [6]). Here it is desirable that the validation signal is as high as possible. As can be seen in all plots, the signals are high for both the kNN and for our conditional probability approach, but not for the [6] in Figure 2 and 4. Here the validation signal of the re-implementation has a significant impact on the classification accuracy.

Figure 2 shows that for some correctly recognized classes the validation signal of the kNN and Probability approach slips below a validity of 60%. This reduces the accuracy of the classifier. In contrast to the correctly recognized classes are the incorrectly recognized classes, which are shown in the three plots on the bottom. Here it is desirable that the validation signal is as low as possible. As can be seen in Figure 2, this is the case for all wrongly detected classes and the validation signal of the two proposed methods, and worse for the re-implementation of [6].

In Figure 3 this looks a little different. Here we are trying to classify the beach based on the water sensor values. If we look at the validation signal in the bottom three plots, we see that for the kNN three entries and for the conditional probability approach two entries are wrongly considered valid. This is due to the similarity of the data, as it is possible that on some days the water quality on the beaches does not differ. The reason kNN scores worse is that the data are very unbalanced, as can be seen from the confusion matrix in Figure 3. In the case of correctly classified data, only one entry is considered invalid by our conditional probability approach and three entries by the kNN. This has the same reasons as for the incorrectly classified data. [6] provides worse results in both cases.

For the Wine Quality Classification in Figure 4, the confusion matrix again shows very unbalanced data. The validation, however, works very well for the wrongly classified data, where all three approaches do not consider a wrongly classified entry as valid. In the case of correctly classified data, four entries are considered invalid by the conditional probability approach and three entries are marked as invalid by the kNN approach.

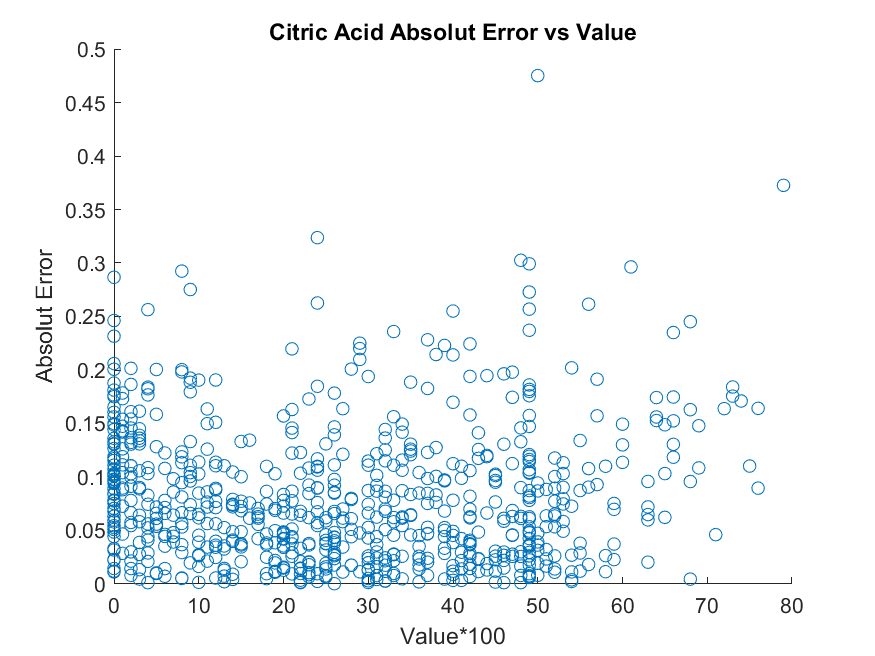

For the evaluation of the regression, we have decided to quantify to 10 bins with an enlargement of the target value by a factor of 10. The maximum target value after enlargement is assumed to be 10. This applies to the regression of the wave height as well as to the citric acid. We used the value of 10 for quantification since the wave height are fixed values between 1 to 10 and the ciric acid value range was from 4,6 to 15,9. The enlargement factor of 10 was selected since the numerical accuracy for the ciric acid value is . The results are shown in Figures 5 and 6. The first plot shows the error distribution (y-axis) regarding the true magnitude on the x-axis (Figure 5 the wave height and Figure 6 the citric acid content). The three subsequent plots show the validation signal of the kNN, the conditional probabilities, and the re-implementation of [6]. As can be seen, the validation signal decreases with increasing error, which shows the desired behaviour of our approach. To express this numerically we accept an error of 0.1 (corresponding to 100 in Figure 5 and Figure 6 of the second, third, and fourth plots) since we have quantized the values to 10 and take 10 as the maximum value. If we take this error as a threshold value, we get that for citric acid kNN recognized 6.7% of all correct results as invalid and interpreted 1.1% of all wrong values as valid. For the conditional probability approach, 14.2% of the correct values are marked as invalid and 0% of the incorrect values as valid. In comparison to this the re-implementation of [6] marked 42.7% of the correct values as invalid and 13.2% of the incorrect values as valid. For the wave height with the same threshold value of 0.1, kNN marks 4.2% of the correctly recognized heights as invalid and 1.2% of the incorrectly recognized heights as valid. The conditional probability approach marks 4.3% of the correctly detected heights as invalid and 1.2% of the incorrectly detected heights as valid. The re-implementation of [6] marked 4.5% of the correct values as invalid and 4.2% of the incorrect values as valid.

V Conclusion

In this work we have shown how to transform a multi class and regression problem into a two class problem with the classes valid or invalid. This two class problem is easier to solve and can be used to validate the result of a machine learning algorithm. For this purpose, an explanatory algorithm is necessary why we have evaluated a KNN for this purpose in this work. Since a KNN with a constantly growing amount of training data requires more and more memory and the computing time is increasing because more comparisons have to be made, we presented an alternative solution using probabilities. Both methods were evaluated on three public datasets where two regression problems and three classification problems were considered for a variety of machine learning algorithms and compared against a re-implementation of a state-of-the-art algorithm [6]. Our results show that our approach provides a high quality validity signal and works on a variety of problems. Both presented approaches can be used online and the probabilistic approach can be executed on a microcontroller even with large data sets. Together with the explanatory power of validation based on the training data, our approach represents a step towards machine learning algorithms in critical areas of application. Future work will go in the direction of sensor data acquisition regarding critical areas to further evaluate and validate our method for a real application.

References

- [1] Alsheikh, M. A., Lin, S., Niyato, D., and Tan, H.-P. Machine learning in wireless sensor networks: Algorithms, strategies, and applications. IEEE Communications Surveys & Tutorials 16, 4 (2014).

- [2] Bahmani, H., Fuhl, W., Gutierrez, E., Kasneci, G., Kasneci, E., and Wahl, S. Feature-based attentional influences on the accommodation response. In Vision Sciences Society Annual Meeting Abstract (2016).

- [3] Beam, A. L., and Kohane, I. S. Big data and machine learning in health care. Jama 319, 13 (2018), 1317–1318.

- [4] Bell, R. P., Reed, S. K., Schoonover, M. J., Whitfield, C. T., Yonezawa, Y., Maki, H., Pai, P. F., and Keegan, K. G. Associations of force plate and body-mounted inertial sensor measurements for identification of hind limb lameness in horses. American Journal of Veterinary Research 77, 4 (2016), 337–345.

- [5] Bibri, S. E. The iot for smart sustainable cities of the future: An analytical framework for sensor-based big data applications for environmental sustainability. Sustainable cities and society 38 (2018), 230–253.

- [6] Bishop, C. M. Neural network validation: an illustration from the monitoring of multi-phase flows. In 1993 Third International Conference on Artificial Neural Networks (1993), IET, pp. 41–45.

- [7] Bishop, C. M. Novelty detection and neural network validation. IEEE Proceedings-Vision, Image and Signal processing 141, 4 (1994), 217–222.

- [8] Breiman, L. Random forests. Machine learning 45, 1 (2001), 5–32.

- [9] Bunel, R., Lu, J., Turkaslan, I., Kohli, P., Torr, P., and Mudigonda, P. Branch and bound for piecewise linear neural network verification. Journal of Machine Learning Research 21, 2020 (2020).

- [10] Bunel, R. R., Turkaslan, I., Torr, P., Kohli, P., and Mudigonda, P. K. A unified view of piecewise linear neural network verification. In Advances in Neural Information Processing Systems (2018), pp. 4790–4799.

- [11] Char, D. S., Shah, N. H., and Magnus, D. Implementing machine learning in health care—addressing ethical challenges. The New England journal of medicine 378, 11 (2018), 981.

- [12] Cheng, C.-H., Diehl, F., Hinz, G., Hamza, Y., Nührenberg, G., Rickert, M., Ruess, H., and Truong-Le, M. Neural networks for safety-critical applications—challenges, experiments and perspectives. In 2018 Design, Automation & Test in Europe Conference & Exhibition (DATE) (2018), IEEE, pp. 1005–1006.

- [13] Chowdhury, M., Rahman, A., and Islam, R. Protecting data from malware threats using machine learning technique. In 2017 12th IEEE Conference on Industrial Electronics and Applications (ICIEA) (2017), IEEE, pp. 1691–1694.

- [14] Cortez, P., Cerdeira, A., Almeida, F., Matos, T., and Reis, J. Modeling wine preferences by data mining from physicochemical properties. Decision Support Systems 47, 4 (2009), 547–553.

- [15] Cross, E. S., Williams, L. R., Lewis, D. K., Magoon, G. R., Onasch, T. B., Kaminsky, M. L., Worsnop, D. R., and Jayne, J. T. Use of electrochemical sensors for measurement of air pollution: correcting interference response and validating measurements. Atmospheric Measurement Techniques 10, 9 (2017), 3575.

- [16] Czichos, H. Measurement, Testing and Sensor Technology. Springer, 2018.

- [17] Dillmann, R., Rogalla, O., Ehrenmann, M., Zöliner, R., and Bordegoni, M. Learning robot behaviour and skills based on human demonstration and advice: the machine learning paradigm. In Robotics Research. Springer, 2000, pp. 229–238.

- [18] Eivazi, S., Fuhl, W., and Kasneci, E. Towards intelligent surgical microscopes: Surgeons gaze and instrument tracking. In Proceedings of the 22st International Conference on Intelligent User Interfaces, IUI (2017).

- [19] Eivazi, S., Hafez, A., Fuhl, W., Afkari, H., Kasneci, E., Lehecka, M., and Bednarik, R. Optimal eye movement strategies: a comparison of neurosurgeons gaze patterns when using a surgical microscope. Acta Neurochirurgica (2017).

- [20] Eivazi, S., Slupina, M., Fuhl, W., Afkari, H., Hafez, A., and Kasneci, E. Towards automatic skill evaluation in microsurgery. In Proceedings of the 22st International Conference on Intelligent User Interfaces, IUI 2017 (03 2017), ACM.

- [21] Farrar, C. R., and Worden, K. Structural health monitoring: a machine learning perspective. John Wiley & Sons, 2012.

- [22] Fuhl, W. From perception to action using observed actions to learn gestures. User Modeling and User-Adapted Interaction (08 2020), 1–18.

- [23] Fuhl, W., Bozkir, E., Hosp, B., Castner, N., Geisler, D., Santini, T. C., and Kasneci, E. Encodji: encoding gaze data into emoji space for an amusing scanpath classification approach. In Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications (2019), pp. 1–4.

- [24] Fuhl, W., Castner, N., and Kasneci, E. Histogram of oriented velocities for eye movement detection. In International Conference on Multimodal Interaction Workshops, ICMIW (2018).

- [25] Fuhl, W., Castner, N., and Kasneci, E. Rule based learning for eye movement type detection. In International Conference on Multimodal Interaction Workshops, ICMIW (2018).

- [26] Fuhl, W., Castner, N., Kübler, T. C., Lotz, A., Rosenstiel, W., and Kasneci, E. Ferns for area of interest free scanpath classification. In Proceedings of the 2019 ACM Symposium on Eye Tracking Research & Applications (ETRA) (06 2019).

- [27] Fuhl, W., Castner, N., Zhuang, L., Holzer, M., Rosenstiel, W., and Kasneci, E. Mam: Transfer learning for fully automatic video annotation and specialized detector creation. In International Conference on Computer Vision Workshops, ICCVW (2018).

- [28] Fuhl, W., Eivazi, S., Hosp, B., Eivazi, A., Rosenstiel, W., and Kasneci, E. Bore: Boosted-oriented edge optimization for robust, real time remote pupil center detection. In Eye Tracking Research and Applications, ETRA (2018).

- [29] Fuhl, W., Gao, H., and Kasneci, E. Neural networks for optical vector and eye ball parameter estimation. In ACM Symposium on Eye Tracking Research & Applications, ETRA 2020 (01 2020), ACM.

- [30] Fuhl, W., Gao, H., and Kasneci, E. Tiny convolution, decision tree, and binary neuronal networks for robust and real time pupil outline estimation. In ACM Symposium on Eye Tracking Research & Applications, ETRA 2020 (01 2020), ACM.

- [31] Fuhl, W., Geisler, D., Rosenstiel, W., and Kasneci, E. The applicability of cycle gans for pupil and eyelid segmentation, data generation and image refinement. In International Conference on Computer Vision Workshops, ICCVW (11 2019).

- [32] Fuhl, W., Geisler, D., Santini, T., Appel, T., Rosenstiel, W., and Kasneci, E. Cbf:circular binary features for robust and real-time pupil center detection. In ACM Symposium on Eye Tracking Research & Applications (06 2018).

- [33] Fuhl, W., and Kasneci, E. Eye movement velocity and gaze data generator for evaluation, robustness testing and assess of eye tracking software and visualization tools. In Poster at Egocentric Perception, Interaction and Computing, EPIC (2018).

- [34] Fuhl, W., and Kasneci, E. Learning to validate the quality of detected landmarks. In International Conference on Machine Vision, ICMV (11 2019).

- [35] Fuhl, W., Kasneci, G., Rosenstiel, W., and Kasneci, E. Training decision trees as replacement for convolution layers. In Conference on Artificial Intelligence, AAAI (02 2020).

- [36] Fuhl, W., Kübler, T. C., Brinkmann, H., Rosenberg, R., Rosenstiel, W., and Kasneci, E. Region of interest generation algorithms for eye tracking data. In Third Workshop on Eye Tracking and Visualization (ETVIS), in conjunction with ACM ETRA (06 2018).

- [37] Fuhl, W., Kübler, T. C., Hospach, D., Bringmann, O., Rosenstiel, W., and Kasneci, E. Ways of improving the precision of eye tracking data: Controlling the influence of dirt and dust on pupil detection. Journal of Eye Movement Research 10, 3 (05 2017).

- [38] Fuhl, W., Kübler, T. C., Sippel, K., Rosenstiel, W., and Kasneci, E. Arbitrarily shaped areas of interest based on gaze density gradient. In European Conference on Eye Movements, ECEM 2015 (08 2015).

- [39] Fuhl, W., Kübler, T. C., Santini, T., and Kasneci, E. Automatic generation of saliency-based areas of interest for the visualization and analysis of eye-tracking data. In VMV (2018), pp. 47–54.

- [40] Fuhl, W., Rong, Y., and Enkelejda, K. Fully convolutional neural networks for raw eye tracking data segmentation, generation, and reconstruction. In Proceedings of the International Conference on Pattern Recognition (2020), pp. 0–0.

- [41] Fuhl, W., Rosenstiel, W., and Kasneci, E. 500,000 images closer to eyelid and pupil segmentation. In Computer Analysis of Images and Patterns, CAIP (11 2019).

- [42] Fuhl, W., Santini, T., Geisler, D., Kübler, T. C., and Kasneci, E. Eyelad: Remote eye tracking image labeling tool. In 12th Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2017) (02 2017).

- [43] Fuhl, W., Santini, T., Geisler, D., Kübler, T. C., Rosenstiel, W., and Kasneci, E. Eyes wide open? eyelid location and eye aperture estimation for pervasive eye tracking in real-world scenarios. In ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct publication – PETMEI 2016 (09 2016).

- [44] Fuhl, W., Santini, T., and Kasneci, E. Fast and robust eyelid outline and aperture detection in real-world scenarios. In IEEE Winter Conference on Applications of Computer Vision (WACV 2017) (03 2017).

- [45] Fuhl, W., Santini, T., and Kasneci, E. Fast camera focus estimation for gaze-based focus control. In CoRR (2017).

- [46] Fuhl, W., Santini, T., Kuebler, T., Castner, N., Rosenstiel, W., and Kasneci, E. Eye movement simulation and detector creation to reduce laborious parameter adjustments. arXiv preprint arXiv:1804.00970 (2018).

- [47] Fuhl, W., Santini, T., Reichert, C., Claus, D., Herkommer, A., Bahmani, H., Rifai, K., Wahl, S., and Kasneci, E. Non-intrusive practitioner pupil detection for unmodified microscope oculars. Elsevier Computers in Biology and Medicine 79 (12 2016), 36–44.

- [48] Geisler, D., Fuhl, W., Santini, T., and Kasneci, E. Saliency sandbox: Bottom-up saliency framework. In 12th Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2017) (02 2017).

- [49] Hagan, M. T., and Menhaj, M. B. Training feedforward networks with the marquardt algorithm. IEEE transactions on Neural Networks 5, 6 (1994), 989–993.

- [50] Hart, P. The condensed nearest neighbor rule (corresp.). IEEE transactions on information theory 14, 3 (1968), 515–516.

- [51] Hastie, T., Tibshirani, R., and Friedman, J. The elements of statistical learning: data mining, inference, and prediction. Springer Science & Business Media, 2009.

- [52] Holzinger, A. Interactive machine learning for health informatics: when do we need the human-in-the-loop? Brain Informatics 3, 2 (2016), 119–131.

- [53] Hu, T., and Fei, Y. Qelar: A machine-learning-based adaptive routing protocol for energy-efficient and lifetime-extended underwater sensor networks. IEEE Transactions on Mobile Computing 9, 6 (2010), 796–809.

- [54] Hu, X., Shin, K. G., Bhatkar, S., and Griffin, K. Mutantx-s: Scalable malware clustering based on static features. In Presented as part of the 2013 USENIX Annual Technical Conference (USENIXATC 13) (2013), pp. 187–198.

- [55] Huang, Z., Chen, H., Hsu, C.-J., Chen, W.-H., and Wu, S. Credit rating analysis with support vector machines and neural networks: a market comparative study. Decision support systems 37, 4 (2004), 543–558.

- [56] Klingspor, V., Demiris, J., and Kaiser, M. Human-robot communication and machine learning. Applied Artificial Intelligence 11, 7 (1997), 719–746.

- [57] Krogh, A., and Vedelsby, J. Neural network ensembles, cross validation, and active learning. In Advances in neural information processing systems (1995), pp. 231–238.

- [58] Kuleshov, A., Bernstein, A., and Burnaev, E. Mobile robot localization via machine learning. In International Conference on Machine Learning and Data Mining in Pattern Recognition (2017), Springer, pp. 276–290.

- [59] Kurd, Z., and Kelly, T. Establishing safety criteria for artificial neural networks. In International Conference on Knowledge-Based and Intelligent Information and Engineering Systems (2003), Springer, pp. 163–169.

- [60] Kurd, Z., Kelly, T., and Austin, J. Developing artificial neural networks for safety critical systems. Neural Computing and Applications 16, 1 (2007), 11–19.

- [61] Lee, Y.-C. Application of support vector machines to corporate credit rating prediction. Expert Systems with Applications 33, 1 (2007), 67–74.

- [62] Liao, Z., Yu, Y., and Chen, B. Anomaly detection in gps data based on visual analytics. In 2010 IEEE Symposium on Visual Analytics Science and Technology (2010), IEEE, pp. 51–58.

- [63] Luo, C., Wu, D., and Wu, D. A deep learning approach for credit scoring using credit default swaps. Engineering Applications of Artificial Intelligence 65 (2017), 465–470.

- [64] Meinshausen, N. Quantile regression forests. Journal of Machine Learning Research 7, Jun (2006), 983–999.

- [65] Mohr, D. C., Zhang, M., and Schueller, S. M. Personal sensing: understanding mental health using ubiquitous sensors and machine learning. Annual review of clinical psychology 13 (2017), 23–47.

- [66] Møller, M. F. A scaled conjugate gradient algorithm for fast supervised learning. Aarhus University, Computer Science Department, 1990.

- [67] Müller-Schloer, C., and Tomforde, S. Organic Computing-Technical Systems for Survival in the Real World. Springer, 2017.

- [68] Naghibi, S. A., Pourghasemi, H. R., and Dixon, B. Gis-based groundwater potential mapping using boosted regression tree, classification and regression tree, and random forest machine learning models in iran. Environmental monitoring and assessment 188, 1 (2016), 44.

- [69] Rodvold, D. M. A software development process model for artificial neural networks in critical applications. In IJCNN’99. International Joint Conference on Neural Networks. Proceedings (Cat. No. 99CH36339) (1999), vol. 5, IEEE, pp. 3317–3322.

- [70] Schumann, J., Gupta, P., and Nelson, S. On verification & validation of neural network based controllers. EANN’03 (2003).

- [71] Serov, A. Cognitive sensor technology for structural health monitoring. Procedia Structural Integrity 5 (2017), 1160–1167.

- [72] Shamili, A. S., Bauckhage, C., and Alpcan, T. Malware detection on mobile devices using distributed machine learning. In 2010 20th International Conference on Pattern Recognition (2010), IEEE, pp. 4348–4351.

- [73] Shcherbina, A., Mattsson, C. M., Waggott, D., Salisbury, H., Christle, J. W., Hastie, T., Wheeler, M. T., and Ashley, E. A. Accuracy in wrist-worn, sensor-based measurements of heart rate and energy expenditure in a diverse cohort. Journal of personalized medicine 7, 2 (2017), 3.

- [74] Sng, H., Gupta, G. S., and Messom, C. H. Strategy for collaboration in robot soccer. In Proceedings First IEEE International Workshop on Electronic Design, Test and Applications’ 2002 (2002), IEEE.

- [75] Tsai, C.-F., and Chen, M.-L. Credit rating by hybrid machine learning techniques. Applied soft computing 10, 2 (2010), 374–380.

- [76] Warmuth, M. K., Liao, J., and Rätsch, G. Totally corrective boosting algorithms that maximize the margin. In Proceedings of the 23rd international conference on Machine learning (2006), pp. 1001–1008.

- [77] Webster, J. G., and Eren, H. Measurement, Instrumentation, and Sensors Handbook: Two-Volume Set. CRC press, 2018.

- [78] Wu, C.-H., Su, W.-H., and Ho, Y.-W. A study on gps gdop approximation using support-vector machines. IEEE Transactions on Instrumentation and Measurement 60, 1 (2010), 137–145.