Excavation Learning for Rigid Objects in Clutter

Abstract

Autonomous excavation for hard or compact materials, especially irregular rigid objects, is challenging due to high variance of geometric and physical properties of objects, and large resistive force during excavation. In this paper, we propose a novel learning-based excavation planning method for rigid objects in clutter. Our method consists of a convolutional neural network to predict the excavation success and a sampling-based optimization method for planning high-quality excavation trajectories leveraging the learned prediction model. To reduce the sim2real gap for excavation learning, we propose a voxel-based representation of the excavation scene. We perform excavation experiments in both simulation and real world to evaluate the learning-based excavation planners. We further compare with two heuristic baseline excavation planners and one data-driven scene-independent planner. The experimental results show that our method can plan high-quality excavations for rigid objects in clutter and outperforms the baseline methods by large margins. As far as we know, our work presents the first learning-based excavation planner for cluttered and irregular rigid objects.

Index Terms:

Deep learning in grasping and manipulation, perception for grasping and manipulation, manipulation planningI Introduction

Excavators are widely used in various applications, including construction, material loading, and mining. Excavators need to operate in extreme environments or weather conditions, which are challenging for human operators. Operating excavators requires special and costly training to ensure safe operations of equipment [1]. Moreover, occupational machine-related fatalities and injuries occur each year [2]. Automating the excavator operation has been an active area of research because of its potential to increase safety, reduce cost and improve the work efficiency [3, 4, 5].

In terms of developing autonomous excavator systems, there have been many efforts that focus on particular aspects [6, 7], including perception [8], planning [9, 10], control [5], teleoperation [11, 12], and system integration and applications [4]. Despite these advances, autonomous excavation for hard or compact materials, especially irregular rigid objects, remains challenging and relatively few works have looked at this problem [13, 14].

Rock excavations are typical scenarios in mining job sites [15]. As compared to granular material, rocks are rigid and often in clutter. It is more challenging, more time consuming, and much more expensive to excavate [15] rocks. Excavation of rocks can result in large resistive forces to the bucket [16]. Furthermore, unlike granular materials composited by uniform particles, rigid objects often have high variance of geometrical shapes (e.g., concave and convex), appearances, and physics properties (e.g., mass), which largely increases the challenges for robotic perception and manipulation.

In this paper, we focus on excavation learning and planning for irregular rigid objects in clutter. We employ deep learning to tackle the challenges of excavation for rigid objects in clutter. Given the visual representation of the excavation scene, our goal is to plan a high-quality trajectory to excavate objects with large total volume per excavation. We first propose novel RGBD and voxel-based convolutional neural network (CNN) models to predict the excavation success, which we train by collecting a large set of training excavation samples in simulation. We then formulate the excavation planning as an optimization problem leveraging the learned prediction models. We perform excavation experiments in both simulation and real world to evaluate our learning-based excavation methods. Our excavation experiments in simulation and real world show that our learning-based planners are able to generate excavations with high success rates. The experimental results also demonstrate the advantages of the learning-based excavation planners over two heuristic planners.

In summary, the main contribution of this work includes:

-

1.

We propose two CNN models for success prediction of a new task, excavation for rigid objects in clutter, and solve the excavation planning as an optimization problem leveraging the learned models.

-

2.

Our excavation experiments in simulation and real world show that our learning-based planner is able to generate excavation trajectories with a high success rate.

-

3.

We represent the excavation trajectories in task space, which allows the transfer of the learned excavation prediction models across different hardware platforms.

-

4.

We demonstrate the voxel-grid representation of the excavation scene reduces the sim2real gap for excavation learning, compared with the RGBD image representation.

-

5.

We collected and released an excavation dataset for cluttered rigid objects.

We summarize the related work in Section II. In Section III, we define the excavation planning problem for cluttered rigid objects. We follow this in Section IV with an overview of our approach to excavation learning and planning. We then give a thorough account of our simulated and real-robot experiments in Section V. We conclude with a brief discussion in Section VI. In the Appendix (i.e., Section VI), we present the excavation data collection, model training, offline validation, further results analysis, and ablation study.

II Related Work

In this section, we summarize the literature of autonomous excavators, manipulation learning, and voxel-based planning.

II-A Autonomous Excavators

Prior work on developing autonomous excavators mainly focuses on soil excavation and granular material handling. In the seminal work [4], a prototype system for autonomous material loading to dump trucks is proposed. Recently, a system for autonomous trenching [17] is presented and validated on a real excavator. Yang and colleagues [10] propose a trajectory optimization method for for granular material excavation. Recently, various prototypes and experiments have been carried out on the task planning for large-scale excavation tasks, e.g., soil pile removals [9]. A novel real-time panoramic telepresence system for construction machines is presented in [11]. Tanzini and others discuss a novel approach for interactive operation of working machines in [12]. A reinforcement learning approach is proposed for automated arm control of a hydraulic excavator in [12].

Different control approaches have been proposed for excavation automation. Maeda et al. propose a new control structure with explicit disturbance compensation for soil excavation in [18]. In [5], a force control method is presented and the resulting bucket motions can be adaptive to different terrain. In [19], a straight-line motion tracking control scheme is proposed for hydraulic excavator system. In [20], a model-free extremum-seeking approach using power maximization is presented.

There are relatively few work related to rigid objects excavation [13, 14, 21]. Fernando and others develop an iterative learning-based admittance control algorithm for autonomous excavation in fragmented rock using robotic wheel loaders. An admittance-based Autonomous Loading Controller for fragmented rock excavation is discussed in [14]. Compared with the low-level excavation control work in [13, 14], our work focuses on learning-based excavation trajectory planning that considers the visual scene representation of cluttered rigid objects. In [21], Sotiropoulos and Asada integrate a Gaussian process rock motion model and an Unscented Kalman Filter for rock excavation. However, they only focus on excavation of a single rock in isolation and use the OptiTrack motion capture system to track the motion of the rock. In comparison, we focus on excavation for rigid objects in clutter using a RGBD camera.

II-B Deep Learning for Manipulation

In recent years, researchers have looked to capitalize on the success of deep learning to improve robotic manipulation, including non-prehensile manipulation [22], grasping [23, 24, 25], and granular material scooping [26, 27]. For example, deep learning has been shown to generalize well to previously unseen objects where only partial-view visual information is available for grasping [28]. In [29], Halbach and others train an end-to-end Neural Network controller for automated pile loading of granular media using human demonstration data. In [30], a statistical model is learned to predict the behavior of soil excavation, which is used for controlling the amount of excavated soil. In our work, we apply deep learning to tackle the perception and manipulation challenges of excavation for cluttered rigid objects and generate high-quality excavations.

Various planning approaches have been developed to leverage deep neural network predictive models. In [25], Lenz and colleagues proposes cascaded deep networks to efficiently evaluate a large number of candidate grasps. In [26], a highly-tailored CNN model is developed to learn the dynamics of the granular material scooping task and the cross entropy method (CEM) leveraging the learned prediction model is used for scoop planning. In [24], the grasping planning is formulated and solved as a gradient-based optimization over the grasp configuration leveraging the grasp predication network. In this paper, we model excavation planning as an optimization problem which maximizes the probability of excavation success predicted by our excavation prediction network and solve the optimization using CEM.

II-C Voxel-based Planning

In [28], a voxel-based object representation and two 3D CNNs are presented for multi-fingered grasp learning and planning. Jetchev and Toussaint model environments with voxel-grids and present a novel way for faster movement planning in such environments by predicting good path initializations [31]. To overcome the sim2real issue, we propose a 3D voxel-grid representation of the excavation scene.

III Problem Definition

In this section, we define the excavation task for rigid objects and the excavation trajectory representation.

III-A Task Overview

This work focuses on rigid objects excavation in clutter. Given the visual representation (i.e., the RGBD image or voxel-grid in this work) of the current excavation scene, our goal is to plan a trajectory that excavates rigid objects (e.g., stones or wood blocks) with the maximum total volume . An excavation instance/sample is defined to be the pair of the scene visual representation and the excavation trajectory . We focus on maximizing the excavated objects volume of the current excavation greedily without considering the future excavations.

In this work, we emulate a standard 4 DOF excavator model using a Franka Panda 7 DOF robot arm mounted with a 3D printed excavation bucket. Figure 1(b) shows our excavation task and scene setup, where rigid wood blocks are put in an excavation tray and the task is to excavate these blocks and dump to a dumping tray after each excavation.

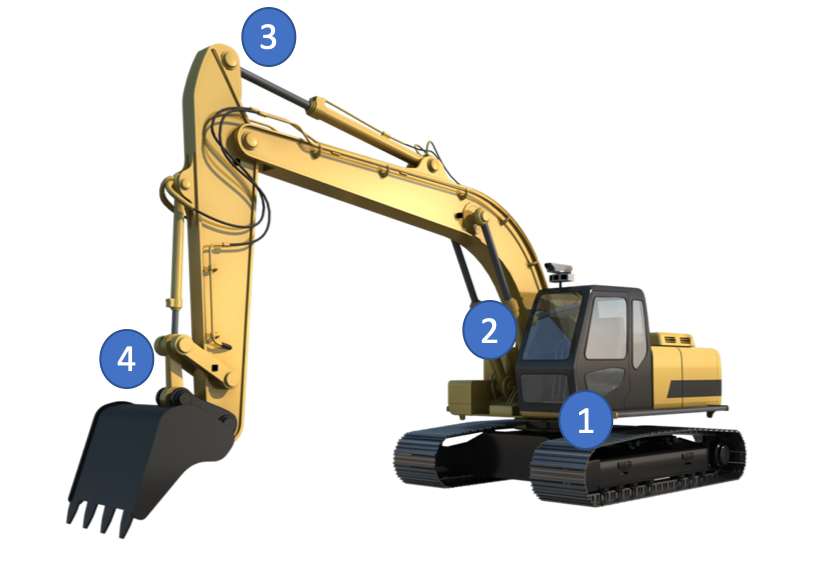

III-B Excavation Trajectory Representation in Task Space

As shown in Figure 1, an excavator arm usually has 4 DoF, including the base swinging, boom, stick, and bucket joint. The D excavation pose of the bucket in task (Cartesian) space is composed of the D excavation position and the D excavation angle . The excavation angle determines the bucket orientation, which also equals to the sum of the joint angles of the last three excavation joints. We define the excavation angle to be zero degree when the bucket orientation is horizontal and points away from the robot. The excavation angle is degree when the bucket orientation is vertical and points down. One example of the excavation angle visualization can be seen from the closing excavation angle in Figure 2.

In general, an excavation trajectory can be divided into multiple phases [3]. Figure 2 illustrates one scheme, where the trajectory is divided into 5 phases: attacking, penetration, dragging, closing, and lifting. In the attacking phase, the excavator arm moves the bucket from the starting pose to its D target attacking pose . In the penetration phase, the bucket penetrates into objects with a specified depth along the gravity direction. Then the bucket drags horizontally towards the excavator base in the excavation plane for a given length . Dragging allows the excavator arm to push and accumulate more objects into the bucket along the way. In the closing phase, the excavator arm decreases the angle between the bucket and the horizontal plane to degree in order to close the bucket and manipulate objects into the bucket. Finally, the excavator arm lifts the bucket to a certain height .

We assume the attacking point is always on the surface of the objects clutter. Given the coordinate of the attacking pose , its coordinate value on the objects clutter surface is computed as the height of the grid/height map of the objects clutter at . More details and examples of the grid map can be found in Section VII-C of the Appendix. We fix the lifting height to lift the bucket to the height of the robot base. Therefore, we can represent a task space excavation trajectory using parameters . The points of attack are learned and planned in the objects tray frame. The object tray frame definition for simulation and real world is explained in more detail in Section V-A and VII-B of the Appendix respectively.

Having the D task trajectory parameters, we interpolate the excavation trajectory and generate its corresponding joint space trajectory by applying inverse kinematics (IK) of the excavator arm [32]. Then the interpolated joint trajectory waypoints are sent to a position controller in both simulation and real world. Though the robotic arm is used for excavation in this paper, the task trajectory representation and excavator IK can also be translated directly to hydraulically actuated excavator arms.

IV Objects Excavation Learning and Planning

In this section we present the design of our deep network models to predict the excavation success for rigid objects in clutter. We then propose an excavation planner leveraging the learned prediction excavation model.

IV-A Excavation Scene Representation

We consider two visual representations for the excavation scene, RGBD image and voxel-grid. RGB and depth images are captured using the corresponding RGBD camera in simulation or real world. It turns out the RGBD image representation suffers from a large sim2real gap when transferring the learned excavation knowledge from simulation into real world, because (1) our simulated excavation environment (e.g., the geometry and color of the excavation tray and the color of the floor) differs from the real-world excavation environment; (2) the RGBD image depends on the camera intrinsics and extrinsics. Figure 7(a) and Figure 7(b) show the RGB image of the simulation and real world respectively.

To overcome the sim2real issue, we propose a 3D voxel-grid representation of the excavation scene. The pointcloud (i.e., depth) of the excavation scene obtained from the same RGBD camera is first transformed into the objects tray frame. Then we filter the transformed pointcloud according to the specific excavation cuboid space and voxelize the filtered pointcloud to generate its voxel-grid. More details of the excavation space specification for simulation and real world can be found in Section VII-A and VII-B of the Appendix. The voxel-grid has a dimension of with a resolution of m. An example of the voxel-grid visualization and its source pointcloud are shown in Figure 3. The voxel-grid dimension and resolution are empirically designed to cover the excavation space and maintain a reasonable level of visual details, similar to the voxelization for grasping in [28].

Since our voxelization only focuses the specified excavation space, the voxel-grid representation is not affected by the environment surroundings. Moreover, our voxel-grid representation is agnostic to the camera intrinsics and extrinsics, because the voxelization is applied in the tray frame instead of the camera frame. Our experimental results in Section V demonstrate the sim2real benefits of the voxel-grid representation over the RGBD one.

IV-B Excavation Prediction Models

We model the excavation prediction as a binary classification problem. The excavation classifier predicts the probability of excavation success (i.e., bucket filling success), , as a function of an excavation instance. We propose two CNN models to predict the excavation success probability, namely “excavation-RGBD-net” and “excavation-voxel-net”. Each model takes an excavation instance composed of a task trajectory and a RGBD image/voxel-grid as input and predicts the excavation success probability as output.

ResNet [33] provides one of the state of the art CNN architectures for various computer vision tasks such as image classification and object detection. We choose ResNet- as the backbone architecture of excavation-RGBD-net and naturally extend ResNet- to 3D CNN as the backbone of excavation-voxel-net. The offline validation results in Section VII-E of the Appendix and the experiments in Section V empirically show the effectiveness of both models, especially excavation-voxel-net. We believe other alternative network structures such as scoop & dump-net in [26] for the 2D CNN RGBD model and voxel-config-net in [28] or the shape completion CNN in [34] for the 3D voxel model could also potentially work well.

Figure 3 shows the architecture of our excavation-voxel-net using the ResNet- backbone. We tile each trajectory parameter point-wise across the voxel-grid dimension (cf. [23]). We then concatenate the tiled trajectory parameter voxel-grids with the scene voxel-grid to generate the final input voxel-grid of the given excavation instance. The input voxel-grid has channels (i.e., the dimension of each voxel) in total, including for the scene voxel-grid and for the tiled trajectory parameters.

The 2D convolution filters of the raw ResNet-18 are replaced with 3D convolution filters to build the ResNet3D-18 backbone. We feed the input voxel-grid into ResNet3D-18 to generate a -dimensional feature vector. The ResNet3D feature is then processed by fully-connected layers followed by a sigmoid output layer to generate the excavation success probability. These fully-connected layers have , , and ReLu neurons, respectively. The design of the fully-connected layers is inspired from the voxel-config-net in [28], which is further tuned empirically during training. We apply batch normalization for all fully-connected layers except the output layer. We train our the excavation classifier using the cross entropy loss.

The excavation-RGBD-net shares a similar architecture with the excavation-voxel-net, except we use the raw ResNet-18 backbone with 2D convolution and tile the trajectory parameters in the image space instead of voxel-grid space.

To compare with classification, we also model the excavation prediction as a regression problem. Excavation-RGBD-net and excavation-voxel-net are adapted to “excavation-RGBD-reg-net” and “excavation-voxel-reg-net” respectively by replacing the sigmoid output layer with the fully-connected layer. The regression models are trained using the smooth L1 loss (i.e., Huber loss)111https://pytorch.org/docs/stable/generated/torch.nn.SmoothL1Loss.html.

In order to show the importance of the scene dependency for excavation learning and provide a data-driven baseline for experiments, we also develop a fully-connected excavation classification network “excavation-traj-net”. The scene-independent excavation-traj-net only takes the task trajectory without the visual scene representation as input. It has fully-connected layers with , , and ReLu neurons respectively. Its final sigmoid layer outputs the excavation success probability.

In summary, five excavation prediction models are presented: excavation-RGBD-net, excavation-voxel-net, excavation-traj-net, excavation-RGBD-reg-net, and excavation-voxel-reg-net.

IV-C Learning-based Excavation Planning

Given the excavation scene visual representation , our goal is to plan an excavation trajectory that maximizes the the probability of excavation success, . Similar with the grasp planner in [24], we formulate the excavation planning as an optimization problem:

| (1) |

In Eq. 1 defines a neural network classifier with logistic output trained to predict the excavation success probability as a Bernoulli distribution over . The parameters define the neural network parameters.

We use CEM [35] leveraging the learned excavation prediction model to solve the excavation optimization problem, similar with [26]. As a sampling-based optimization approach, CEM iteratively samples from the current distribution and selects the top samples using a scoring function to update the distribution. We aim to optimize a Gaussian distribution of the D task trajectory parameters, given the visual representation of the current excavation scene. heuristic excavation trajectories are generated using the random-heu excavation planner to initialize the Gaussian distribution. More details of the random-heu planner can be seen from Section VII-C1 of the Appendix. We have iterations for the CEM excavation planning. At each iteration, we first sample excavation trajectory samples from the current distribution. We predict the success probability of each sample using the learned excavation prediction network. Then we select the top samples with higher success probabilities to update the Gaussian distribution. To summarize, CEM uses the learned prediction model as a quality metric to iteratively improves the distribution of the task trajectory parameters through sampling and distribution updating. We sample excavation trajectories from the final CEM distribution, evaluate each one using the learned prediction model, and pick the one with the highest success probability, valid IK solution, and valid attacking point range as the planned task trajectory.

V Excavation Experiments

In this section, we first describe the excavation experiment setup and results in simulation. Then we introduce the experiment setup and results in real world. Our learning-based planners are compared with two heuristic planners and a data-driven baseline planner in simulation and real world. Our experimental results demonstrate the learning-based planners are able to plan high-quality excavations and significantly outperform the baseline methods. The data collection, model training, offline validation, more detailed results analysis, and ablation study are provided in the Appendix.

V-A Experiment Setup in Simulation

We collect the training data and perform simulated experiments in PyBullet222https://pybullet.org/wordpress/. A UR5 robot arm is used for excavation data collection. The UR5 arm has 6 DoF in total. We control the shoulder panning, shoulder lifting, elbow, and the first wrist joints of the UR5 arm and disable the other two wrist joints by fixing their joint angles in simulation. A 3D designed bucket is used as the end-effector of UR5 in simulation. The full volume of the bucket is cm3.

The RGB and depth image of each excavation trial are generated by the built-in simulated camera in PyBullet. Figure 4(a) shows the camera setup in simulation. One example of the RGB image generated by the simulated camera can be seen from Figure 7(a) in the Appendix. More details of the camera and excavation scene setup for real-robot experiments are discussed in Section VII-A of the Appendix.

For each experiment trial of a certain excavation planner, the joint space trajectory is interpolated and computed from its planned task trajectory using IK, and sent the joint space waypoints to a joint position controller of the UR5 arm in simulation.

V-B Experiments in Simulation

We perform simulated experiments to evaluate the learning-based planner of excavation-voxel-net, excavation-RGBD-net, excavation-voxel-reg-net, excavation-RGBD-reg-net, and excavation-traj-net. We name them “CEM-voxel”, “CEM-RGBD”, “CEM-voxel-reg”, “CEM-RGBD-reg”, and “CEM-traj” respectively. CEM-traj serves as a data-driven baseline planner without visual scene representation input. In addition, these five learning-based planners are compared with two heuristic planners: random-heu and highest-heu. More details of these two heuristic planners can be found in Section VII-C1 of the Appendix. excavation episodes are experimented for each method. excavation trials are sequentially performed for each excavation episode. That gives us excavation experimented trials in total for each method.

The simulated results of all seven methods are presented in Table I. We benchmark the excavations of each planner using three metrics: the volume of excavated objects (excavation volume), the excavated objects number, and the excavation success rate. Same as the model training in Section VII-D of the Appendix, if the total volume of a sample’s successfully excavated objects is above cm3 (i.e., bucket filling rate), it is counted as a success, otherwise a failure. We also report the computation time of each planner.

The mean with standard deviation in parentheses are listed for all metrics except the success rate. The mean and standard deviation for each method are computed across its experimented excavation trials. As shown in Table I, CEM-voxel achieves the best excavation performance in terms of the excavation volume, excavated object number, and success rate. CEM-voxel excavates objects of per excavation in average, which is of the full bucket volume (i.e. bucket volume filling rate). CEM-voxel, CEM-RGBD, and CEM-voxel-reg outperform the two heuristic planners and CEM-traj by relatively large margins in terms of these 3 excavation metrics, which shows the effectiveness of the scene-dependent excavation learning.

Classification-based CEM-voxel and CEM-RGBD perform better than regression-based CEM-voxel-reg and CEM-RGBD-reg respectively. Since classification is about predicting a label and regression is about predicting a continuous quantity, we believe excavation regression is more complex and needs a lot more training data to perform as well as or better than excavation classification.

The fact that scene-dependent planner CEM-voxel, CEM-RGBD, and CEM-voxel-reg significantly outperform the scene-independent CEM-traj planner demonstrates that it is important to learn to plan excavation trajectories based on the visual scene information.

The five learning-based planners all have higher standard deviations in terms of excavation volume and objects number than the two heuristic planners. CEM-voxel has the highest standard deviation. The experiment results of heuristic planners are dominated by failure excavations with low excavation volumes. Learning-based planners, especially CEM-voxel, generate excavations with relatively higher excavation volumes. This makes the excavation volume distribution of learning-based planners more uniform and have larger standard deviations, which is shown by the volume histogram of different planners in Figure 9 of the Appendix.

In terms of computation speed, heuristic planners spend second to plan one excavation trajectory. CEM-voxel, CEM-RGBD, CEM-voxel-reg, and CEM-RGBD-reg takes more than seconds to generate one excavation trajectory. It costs CEM-traj seconds to plan a trajectory. Finally, Figure 5 visualizes high-quality excavation examples planned by the CEM-voxel planner in simulation.

| Method | Volume (cm3) | Number | Success Rate | Time (s) |

|---|---|---|---|---|

| CEM-voxel | ||||

| CEM-RGBD | ||||

| CEM-voxel-reg | ||||

| CEM-RGBD-reg | ||||

| CEM-traj | ||||

| random-heu | ||||

| highest-heu |

V-C Experiment Setup in Real World

Real-robot excavation experiments are performed using a Franka Panda robotic arm. The Franka Panda arm has 7 DOF in total. We control the shoulder panning, shoulder lifting, elbow lifting, and the wrist lifting joint of the Franka arm as the excavation joints and disable the other three joints (i.e. the elbow panning and the last two wrist joints) by fixing their joint angles. The same bucket model used in simulation is 3D printed as the Franka arm end-effector. The Azure Kinect camera generates the RGBD image and pointcloud of the excavation scene. Figure 4(b) shows the camera setup in real world. An example of the Azure RGB image showing the excavation setup can be seen from Figure 7(b) in the Appendix. More details of the camera, robot, and excavation scene setup for real-robot experiments are introduced in Section VII-B of the Appendix.

For each experiment trial of a certain planner, we compute the joint space trajectory from the planned task trajectory using IK and send the joint space trajectory to the built-in joint position controller of the Franka arm. Compared with hydraulic excavator arms, the Franka arm can only produce a limited amount of force and torque. For example, Franka’s force and torque range along (i.e., gravity direction) are and respectively. Considering the large resistive force of rigid objects, this makes it hard for the bucket to penetrate into the rigid objects. During penetration, the robot is automatically commanded to alternatively shift the bucket back or forth by cm horizontally per waypoint, which helps to prevent the robot getting stuck.

V-D Real Robot Experiments

The excavation model learned on UR5 in simulation is transferred to Franka in real world for rigid objects excavation experiments. The representation of the excavation trajectory in task space allows us to transfer the excavation prediction model from one hardware platform to another with similar kinematic reachability. The reachability of UR5 and Franka arm are mm and mm respectively. In addition to the task trajectory representation, we represent excavation poses in the tray frame to make excavation learning and planning agnostic to different tray poses across simulation and real world.

Excavation experiments are performed to evaluate our learning-based planners CEM-voxel and CEM-RGBD, which achieves the best performance in simulation experiments. We also compare our learning-based planners with these two heuristic planners random-heu and highest-heu. We experiment excavation episodes for each method in real world. We randomly reset the rigid objects for each excavation episode. We perform excavation trials for each excavation episode. That gives us excavation experimented trials in total for each method.

Details of these two heuristic planners for simulation are described in Section VII-C1 of the Appendix. Random parameter ranges of heuristic planners in real world are smaller than that in simulation. Because experiments with large heuristic ranges can be unsafe for human or robot. For example, relatively long dragging lengths cause collision with the tray. Moreover, the Franka arm can only produce a limited amount of force and torque, which makes it difficult to penetrate into the rigid objects with a depth larger than cm. Specifically, we randomly generate the attacking excavation angle and the closing angle in the range of and degree respectively in real world. We randomly generate the penetration depth and the dragging length in the range of m and m respectively. The trajectory parameter range of heuristic planners also affect our learning-based planners, since we generate heuristic excavation trajectories to initialize CEM, as described in Section IV-C of the Appendix.

The real-robot experiment results of all four methods are presented in Table II. We evaluate the excavation performance in terms of the volume of excavated objects and the excavation success rate. The mean with standard deviation in parentheses are reported for the volume of excavated objects. The success threshold of the volume of excavated objects is cm3, same as simulation.

We also show the valid rate of each planner in the table. An excavation trial is treated as valid if the trajectory can be planned and executed successfully. Invalid excavation trials are mostly caused by limit exceeding of the robot force/torque. Large resistive force during excavation, especially penetration, and collision with the tray can both lead to the force/torque limit exceeding. Examples of the Franka arm getting stuck due to force/torque limit exceeding are shown in Figure 8 of the Appendix. In the future, we are interested to examine force control for excavation trajectory execution in order to mitigate force/torque limit exceeding. We also count the excavation trials without valid trajectory IK as invalid.

As shown in Table II, the CEM-voxel planner significantly outperforms these other 3 planners in terms of the volume of excavated objects and success rate in real world. CEM-voxel excavates objects of cm3 per excavation in average, which is of the full bucket volume. CEM-voxel significantly outperforms these two heuristic planners, which demonstrates the effectiveness of excavation learning in real world. The fact that CEM-voxel outperforms CEM-RGBD shows that the voxel-based visual representation handles the sim2real gap better than the RGBD representation. The computation time of each planner in the real world is similar with simulation.

The CEM-RGBD planner performs poorly in the real world, worse than random-heu and roughly on par with highest-heu. The attacking poses of the trajectories planned by CEM-RGBD are mostly close to the edge of the tray, which leads to invalid excavation trials with collision. This is because the RGBD image representation suffers from a large sim2real gap when transferring the excavation knowledge gained in simulation into real world. In addition to the poor excavation performance, another evidence of the RGBD sim2real gap is the predicted success probabilities of the CEM-RGBD trajectories are close to zero in real world. More details of the excavation scene visual representation are discussed in Section IV-A.

In Figure 6, we show high-quality excavation examples planned by the CEM-voxel planner on the real robot. We also annotate the volume of excavated objects of each example.

| Method | Volume (cm3) | Success Rate | Valid Rate |

|---|---|---|---|

| CEM-voxel | |||

| CEM-RGBD | |||

| random-heu | |||

| highest-heu |

VI Conclusion

In conclusion, we propose multiple deep networks for success prediction of a new task, rigid objects excavation in clutter. We solve excavation planning as an optimization problem leveraging the learned prediction models. Our excavation experiments in simulation and real world show that our learning-based planner is able to generate high-quality excavations. The experimental results also demonstrate the advantage of the learning-based excavation planner over two heuristic planners and one data-driven scene-independent planner.

We plan an excavation trajectory greedily by maximizing the excavation volumes of the current excavation. In the future, we would like to consider the long-term expected excavation reward of sequential excavations and investigate deep reinforcement learning for rigid objects excavation. We also plan to use force control instead of position control to make the excavation trajectory execution smoother and more robust to large resistive forces. Finally, we want to transfer the learning-based planner from robotic arms to real excavators.

References

- [1] X. Wang and P. S. Dunston, “Design, strategies, and issues towards an augmented reality-based construction training platform,” Journal of information technology in construction (ITcon), vol. 12, no. 25, pp. 363–380, 2007.

- [2] S. Marsh and D. Fosbroke, “Trends of occupational fatalities involving machines, United States, 1992-2010,” Am J Ind Med, 2015.

- [3] S. Sing, “Synthesis of tactical plans for robotic excavation,” Ph.D. dissertation, Carnegie Mellon University, 1995.

- [4] A. Stentz, J. Bares, S. Singh, and P. Rowe, “A robotic excavator for autonomous truck loading,” Autonomous Robots, vol. 7, no. 2, pp. 175–186, 1999.

- [5] D. Jud, G. Hottiger, P. Leemann, and M. Hutter, “Planning and control for autonomous excavation,” IEEE Robotics and Automation Letters, vol. 2, no. 4, pp. 2151–2158, 2017.

- [6] A. Hemami and F. Hassani, “An overview of autonomous loading of bulk material,” in 26th International Symposium on Automation and Robotics in Construction. Citeseer, 2009, pp. 405–411.

- [7] S. Dadhich, U. Bodin, and U. Andersson, “Key challenges in automation of earth-moving machines,” Automation in Construction, vol. 68, pp. 212–222, 2016.

- [8] R. Mascaro, M. Wermelinger, M. Hutter, and M. Chli, “Towards automating construction tasks: Large-scale object mapping, segmentation, and manipulation,” Journal of Field Robotics, 2020.

- [9] J. Seo, S. Lee, J. Kim, and S.-K. Kim, “Task planner design for an automated excavation system,” Automation in Construction, vol. 20, no. 7, pp. 954–966, 2011.

- [10] Y. Yang, P. Long, X. Song, J. Pan, and L. Zhang, “Optimization-based framework for excavation trajectory generation,” IEEE Robotics and Automation Letters, vol. Accepted, 2021.

- [11] P. Tripicchio, E. Ruffaldi, P. Gasparello, S. Eguchi, J. Kusuno, K. Kitano, M. Yamada, A. Argiolas, M. Niccolini, M. Ragaglia et al., “A stereo-panoramic telepresence system for construction machines,” Procedia Manufacturing, vol. 11, pp. 1552–1559, 2017.

- [12] M. Tanzini, P. Tripicchio, E. Ruffaldi, G. Galgani, G. Lutzemberger, and C. A. Avizzano, “A novel human-machine interface for working machines operation,” in 2013 IEEE RO-MAN. IEEE, 2013, pp. 744–750.

- [13] H. Fernando, J. A. Marshall, and J. Larsson, “Iterative learning-based admittance control for autonomous excavation,” Journal of Intelligent & Robotic Systems, vol. 96, no. 3, pp. 493–500, 2019.

- [14] A. A. Dobson, J. A. Marshall, and J. Larsson, “Admittance control for robotic loading: Design and experiments with a 1-tonne loader and a 14-tonne load-haul-dump machine,” Journal of Field Robotics, vol. 34, no. 1, pp. 123–150, 2017.

- [15] S. Dessureault, “Lecture notes in rock excavation,” University of Arizona Mining and Geological Engineering, 2003.

- [16] J. A. Marshall, P. F. Murphy, and L. K. Daneshmend, “Toward autonomous excavation of fragmented rock: full-scale experiments,” IEEE Transactions on Automation Science and Engineering, vol. 5, no. 3, pp. 562–566, 2008.

- [17] D. Jud, P. Leemann, S. Kerscher, and M. Hutter, “Autonomous free-form trenching using a walking excavator,” IEEE Robotics and Automation Letters, vol. 4, no. 4, pp. 3208–3215, 2019.

- [18] G. J. Maeda, I. R. Manchester, and D. C. Rye, “Combined ilc and disturbance observer for the rejection of near-repetitive disturbances, with application to excavation,” IEEE Transactions on Control Systems Technology, vol. 23, no. 5, pp. 1754–1769, 2015.

- [19] P. H. Chang and S.-J. Lee, “A straight-line motion tracking control of hydraulic excavator system,” Mechatronics, vol. 12, no. 1, pp. 119–138, 2002.

- [20] F. E. Sotiropoulos and H. H. Asada, “A model-free extremum-seeking approach to autonomous excavator control based on output power maximization,” IEEE Robotics and Automation Letters, vol. 4, no. 2, pp. 1005–1012, 2019.

- [21] Sotiropoulos, Filippos E and Asada, H Harry, “Autonomous excavation of rocks using a gaussian process model and unscented kalman filter,” IEEE Robotics & Automation Letters, vol. 5, no. 2, pp. 2491–2497, 2020.

- [22] C. Finn and S. Levine, “Deep visual foresight for planning robot motion,” in IEEE Intl. Conf. on Robotics and Automation. IEEE, 2017, pp. 2786–2793.

- [23] S. Levine, P. Pastor, A. Krizhevsky, J. Ibarz, and D. Quillen, “Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection,” The International Journal of Robotics Research, vol. 37, no. 4-5, pp. 421–436, 2018.

- [24] Q. Lu, K. Chenna, B. Sundaralingam, and T. Hermans, “Planning Multi-Fingered Grasps as Probabilistic Inference in a Learned Deep Network,” in Intl. Symp. on Robotics Research, 2017.

- [25] I. Lenz, H. Lee, and A. Saxena, “Deep learning for detecting robotic grasps,” The International Journal of Robotics Research, vol. 34, no. 4-5, pp. 705–724, 2015.

- [26] C. Schenck, J. Tompson, S. Levine, and D. Fox, “Learning robotic manipulation of granular media,” in Conference on Robot Learning, 2017, pp. 239–248.

- [27] S. Clarke, T. Rhodes, C. G. Atkeson, and O. Kroemer, “Learning audio feedback for estimating amount and flow of granular material,” Proceedings of Machine Learning Research, vol. 87, 2018.

- [28] Q. Lu, M. Van der Merwe, B. Sundaralingam, and T. Hermans, “Multi-fingered grasp planning via inference in deep neural networks,” IEEE Robotics & Automation Magazine, 2020.

- [29] E. Halbach, J. Kämäräinen, and R. Ghabcheloo, “Neural network pile loading controller trained by demonstration,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 980–986.

- [30] R. J. Sandzimier and H. H. Asada, “A data-driven approach to prediction and optimal bucket-filling control for autonomous excavators,” IEEE Robotics and Automation Letters, vol. 5, no. 2, pp. 2682–2689, 2020.

- [31] N. Jetchev and M. Toussaint, “Trajectory prediction in cluttered voxel environments,” in 2010 IEEE International Conference on Robotics and Automation. IEEE, 2010, pp. 2523–2528.

- [32] Y. Yang, L. Zhang, X. Cheng, J. Pan, and R. Yang, “Compact reachability map for excavator motion planning,” in IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems. IEEE, 2019, pp. 2308–2313.

- [33] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [34] J. Varley, C. DeChant, A. Richardson, J. Ruales, and P. Allen, “Shape completion enabled robotic grasping,” in 2017 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, 2017, pp. 2442–2447.

- [35] P.-T. De Boer, D. P. Kroese, S. Mannor, and R. Y. Rubinstein, “A tutorial on the cross-entropy method,” Annals of operations research, vol. 134, no. 1, pp. 19–67, 2005.

- [36] P. Fankhauser and M. Hutter, “A Universal Grid Map Library: Implementation and Use Case for Rough Terrain Navigation,” in Robot Operating System (ROS) – The Complete Reference (Volume 1), A. Koubaa, Ed. Springer, 2016, ch. 5.

VII APPENDIX

In this Appendix section, we first introduce the excavation scene setup in simulation and real world. Then we describe the data collection and training of the excavation prediction model, before presenting the offline evaluation of the learned model results. We also show the excavation volume histograms, trajectory analysis, and ablation experiments.

As defined in Section III-B, a task space excavation trajectory contains parameters . We name and of a task trajectory as “Point of Attack” (PoA) and “Geometric Trajectory Parameters” (GTP) respectively for the ablation study.

VII-A Camera and Excavation Scene Setup in Simulation

The camera is located at m, m, m in the robot base frame in simulation. The robot frame and the camera location for simulation are shown in Figure 4(a). Both the transformation between the camera and the robot base frame and the transformation between the robot base frame and the tray frame are known. With these two transformations, the pointcloud obtained from the PyBullet RGBD camera can be transformed into the tray frame for grid map and voxel-grid generation.

We sample the number of objects uniformly in the range of for each excavation scene. We then spawn testing objects with random poses into the tray for the current excavation scene. The testing object meshes are unseen from training as described in Section VII-C of the Appendix. The m3 cuboid range is specified to filter the pointcloud of the excavation scene in the tray frame, which is then used for grid map and voxel-grid generation. This cuboid range covers the excavation space of rigid objects in the tray in simulation.

VII-B Camera and Excavation Scene Setup in Real World

The Azure camera is located at m, m, m in the robot frame in real world. The robot frame and camera location in real world can be seen in Figure 4(b). The transformation between the camera and the robot base frame is manually calibrated using an ArUco marker 333https://docs.opencv.org/master/d5/dae/tutorial_aruco_detection.html. We manually define the tray frame with respect to the robot base frame according to the excavation range, which gives the transformation between the robot and the tray frame. The tray frame has the same orientation with the robot base frame. Its origin is defined to be the center of the excavation cuboid range. Knowing these two transformations, the pointcloud obtained from the Azure camera can be transformed into the tray frame for grid map and voxel-grid generation.

rigid wooden objects with various geometrical shapes and colors are used for real robot experiments, including “Melissa & Doug wood blocks” and “Biubee wooden stone balancing blocks”. For example, there are objects with both convex and concave shapes. The density of the wooden rigid objects is estimated to be kgcm3. All of these rigid objects are unseen from the training.

A layer of rocks with heavy mass is first put into the excavation tray, which stabilizes the tray during excavation. Then we lay a layer of red mulch on top of rocks. Finally rigid wooden objects are put on top of the red mulch in front of the Franka arm for excavation. We use the relatively deformable red mulch as the excavation surface for safety reasons. The m3 cuboid range is specified to filter the pointcloud of the excavation scene in the tray frame, which is used for grid map and voxel-grid generation. This cuboid space covers the excavation space of the real-robot experiments. Roughly only the half of the tray space that is closer to the robot base is used for excavation experiments.

An excavation episode is created by shaking these rigid objects in a box, and then pouring them into the excavation area of the tray. The robot dumps the excavated objects into a dumping tray after excavation for each trial. A certain amount of red mulch under the rigid objects can be excavated and dumped sometimes. On average the amount of excavated red mulch is relatively small across all experimented trials.

The desired dumping pose of the bucket end-effector is specified to be the center of the dumping tray. The robot first moves to the desired dumping pose, then pour the objects into the dumping tray by controlling the bucket to point down vertically. We use a kitchen scale under the dumping tray to weigh the objects dumped into the tray for each trial. Having the mass of the excavated objects and the objects density, we can compute the volume of excavated objects.

VII-C Data Collection in Simulation

We use an UR5 arm with a 3D designed bucket to perform excavation experiments in simulation. The full bucket volume of the bucket is cm3. The data collection setup is the same with the simulated experiment setup in Section V-A. The camera and excavation scene setup for data collection is described in Section VII-A of the Appendix. Rigid object meshes with random geometry for simulated excavation are generated using trimesh444https://github.com/mikedh/trimesh. The number of vertices for each object mesh are randomly selected in the range of to . The maximum value of each coordinate is uniformly sampled from cm to cm for the object mesh. The 3D coordinates of all vertices of the object mesh are randomly generated from the range of to its maximum coordinate values. We then compute the convex hull of the original mesh and use that as the final object mesh. We assume the object density to be gcm3 in simulation. We separately generate k training and testing candidate object meshes. The training object mesh dataset is used for training data collection in simulation. The testing object mesh dataset are used for excavation prediction model offline evaluation and experiments in simulation.

A certain number of object meshes are randomly selected from the training objects set for each excavation episode of the data collection. We then spawn each selected object into the excavation tray with a random generated pose. The objects number of each scene is randomly and uniformly generated in the range of to . excavation trials (i.e., samples) are sequentially executed for each excavation episode. We randomly select one of our two heuristic planners to use and compute the total volume of the objects excavated successfully at each trial. The excavated objects are dumped into the dumping tray for each excavation trial. We collected training excavation samples and testing samples for offline validation of the excavation prediction network.

VII-C1 Heuristic Excavation Planners

We design two heuristic excavation planners for data collection, namely “heu-random” and “heu-highest”. For the heu-random planner, we randomly select a grid map cell of the excavation scene and use its center as the 2D coordinate of the attacking excavation pose. For the heu-highest planner, we generate the 2D coordinate of the attacking excavation pose as the center of the grid map cell with the maximum height. We assume the attacking point is on the object clutter surface. Under this assumption, the coordinate value of the attacking excavation pose is computed as the height of the corresponding grid map cell.

The excavation grid map is generated from the point cloud of the excavation space using the grid map library in [36] in both simulation and real world. We randomly generate the attacking excavation angle and the closing angle in the range of and degree respectively. We randomly generate the penetration depth and the dragging length in the range of m and m respectively. The same random parameter ranges are used for data collection and experiments in simulation.

VII-D Excavation Prediction Model Training

We collected training excavation samples in simulation. training samples are used for training and these other training samples are used as the validation set. For the excavation binary classification, if the total volume of a sample’s successfully excavated objects is above cm3 (i.e., bucket filling rate), the excavation sample is treated as a success, otherwise a failure. Excavation samples without valid task trajectory IK are labeled as failure excavations, using which we aim to learn to plan excavation trajectories with valid IK. out of these () training samples are successful excavations.

We train all five excavation prediction models, including excavation-RGBD-net, excavation-voxel-net, excavation-RGBD-reg-net, excavation-voxel-reg-net, and excavation-traj-net, using the same specifications. In order to overcome the class imbalance (i.e., i.e. low percentage of successful excavation samples), the successful samples are oversampled to make the number of positive and negative samples roughly the same in each training epoch for all five models. We compare training the excavation-RGBD-net from scratch and fine-tuning ResNet-. We find training from scratch has significantly better performance, which we believe is because our excavation task is significantly different from the ResNet ImageNet classification. In addition to excavation-RGBD-net, we also train all other four models from scratch.

All networks are trained using the Adam optimizer with mini-batches of size for epochs. The learning rate starts at and decreases by every epochs. The training of excavation-RGBD-net and excavation-RGBD-reg-net take around minutes on an Alienware desktop computer with an Intel i7-6800K processors, 32GB RAM, and a Nvidia GeForce GTX TITAN Z graphics card. Excavation-voxel-net and excavation-voxel-reg-net take around minutes to train on the same machine. It takes excavation-traj-net around minutes to train on the same machine. We implement all excavation prediction networks in PyTorch. We have released the data and the trained models in this link: https://drive.google.com/drive/folders/1X54doBlf3QBjjNFTAZA48igO9VInY0k2?usp=sharing

VII-E Excavation Prediction Model Offline Evaluation

We collected testing samples using the testing objects dataset in simulation for offline validation of the excavation prediction models. Among these testing samples, samples are successful excavations.

Table III shows the accuracy, precision, recall, and F score of three methods. The second, third, and forth row show the offline testing result of the excavation-voxel-net, excavation-RGBD-net, and excavation-traj-net respectively. The “random-” method in the fifth row refers to random guessing with a probability of for positive prediction. The “random-” method in the last row means random guessing with a probability of for positive prediction. The prediction metrics of random guessing show the classification challenges due to the low percentage of successful excavation samples. Excavation-voxel-net and excavation-RGBD-net perform reasonably well and significantly outperform random guessing in terms of these offline evaluation metrics. Excavation-voxel-net achieves the best offline evaluation performance. Excavation-traj-net performs worse than excavation-voxel-net and excavation-RGBD-net for the offline evaluation, but significantly better than random guessing.

| Method | Accuracy | Precision | Recall | F |

|---|---|---|---|---|

| excavation-voxel-net | ||||

| excavation-RGBD-net | ||||

| excavation-traj-net | ||||

| random- | ||||

| random- |

The excavation regression model excavation-voxel-reg-net and excavation-RGBD-net are also offline evaluated on the testing set using the L1-norm error. The mean and standard deviation of the testing L1-norm error of excavation-voxel-reg-net are cm3 and cm3 respectively. The testing L1-norm error of excavation-RGBD-reg-net has a mean of cm3 and a standard deviation of cm3. Both regression models achieve reasonably good testing performance.

VII-F Excavation Volume Histograms of Experiments in Simulation

The histograms of the excavation volume for the simulated experimented excavations of seven planners are visualized in Figure 9(a)-9(g). In addition to the excavation volume means and the excavation rates in Table I, the histograms further shows the learning-based planners excavate objects with larger volumes than heuristic planners. The histograms also show the distributions of learning-based planners are more uniform and have larger standard deviations than heuristic planners. Only the excavation histograms of simulation experiments are shown here, since the number of excavations in real world experiments is relatively small.

The excavation volume histogram of the training data is plotted in Figure 9(h). We consider an excavation as a success if its excavation volume is above cm3. The red vertical line in Figure 9(h) show where the excavation volume is cm3. With cm3 as the excavation success threshold, out of these () training samples are successful.

Since there are a lot less successful training excavation samples than failure ones, we oversample the successful samples overcome this class imbalance issue in the excavation training, as described in Section VII-D of the Appendix. Increasing the threshold to be larger than cm3 will lead to even less successful excavation training samples, which would make the excavation training harder due to more severe class imbalance. On the other hand, if we decrease the success threshold to be smaller than cm3, the learning-based planners would be more likely to generate excavation trajectories whose bucket filling rates are below . This would hurt the excavation performance of the learning-based planners. Therefore, we believe cm3 is a reasonable success threshold for our excavation learning. Moreover, it is shown in Section V-B that classification-based CEM-voxel and CEM-RGBD outperform regression-based CEM-voxel-reg and CEM-RGBD-reg respectively, which empirically justify the choice of the excavation threshold.

VII-G Experimental Excavation Trajectory Analysis

The trajectory parameter mean and standard deviation of the simulated experimented excavations for each of the seven planners are presented in Table IV and V respectively. In Figure 10, we plot the PoA distributions of the simulated experiment results of all seven planners. The coordinate origin is at the center of the tray in each PoA plot. The robot base locates at in the 2D tray frame.

The GTP means and standard deviations of different planners are mostly similar, which shows learning-based planners generate excavation trajectories with large GTP diversity. The PoA standard deviations of the learning-based planners are smaller than heuristic planners due to the randomness of heuristic planners. In terms of the PoA mean, CEM-voxel, CEM-RGBD, and CEM-voxel-reg are similar and they are relatively different from CEM-RGBD-reg, CEM-traj-opt, random-heu, and highest-heu.

As can be seen from both the trajectory means in Table IV and the PoA distribution plots in Figure 10, learning-based planners prefer to generate PoA in the top (i.e., positive direction) right (i.e., positive direction) area. Highest-heu generates a lot of PoA close to the left edge of the tray (i.e., m). Objects tend to be pushed to the left wall of the tray during excavations. So highest points are more likely to occur close to the left wall of the tray.

We randomly select excavation training excavation samples and plot the PoA of the successful and failure excavations separately in Figure 11. As described in Section VII-C, the training data contains half random-heu and half highest-heu excavations statistically. As shown in Figure 11(a), there are more successful PoA in the top half of the tray, which explains the learning-based planners prefers PoA in the top area. The UR5 excavator swings around the swing center m, m in the tray 2D coordinate. When the PoA gets closer to the bottom of the tray (i.e., m), the robot would have relatively less space to drag and close due to collision with the tray. Moreover, there are more failure excavations close to the left edge of the tray in Figure 11(b), which pushes the learning-based planners to plan PoA away from the left edge.

It has been reported in Section V-B that learning-based scene-dependent planners such as CEM-voxel significantly outperform CEM-traj, which shows that it is important to learn to plan scene-dependent excavation trajectories using the visual representation of the excavation scene. However, the trajectory and PoA distributions can not reflect the benefits of the learning-based scene-dependent planning. In the future, we would like to further investigate and understand how learning-based planners use the scene representation to generate high-quality excavation trajectories.

| Method | Trajectory Mean |

|---|---|

| CEM-voxel | |

| CEM-RGBD | |

| CEM-voxel-reg | |

| CEM-RGBD-reg | |

| CEM-traj-opt | |

| random-heu | |

| highest-heu |

| Method | Trajectory Std |

|---|---|

| CEM-voxel | |

| CEM-RGBD | |

| CEM-voxel-reg | |

| CEM-RGBD-reg | |

| CEM-traj-opt | |

| random-heu | |

| highest-heu |

VII-H Ablation Experiments

Ablation experiments are performed to show insights on how the learning-based planners improve excavation for cluttered rigid objects. The ablation study is focused on the CEM-voxel planner, since it achieves the best excavation performance in the simulated and real-robot experiments. Two ablation experiments are performed by replacing the PoA and GTP of each CEM-voxel trajectory with random parameters respectively. Random PoA and GTP parameters are uniformly sampled from the same range as the heuristic planners introduced in Section VII-C1. excavation trials are experimented for both ablation experiments in simulation using the same experiment setup and protocol as Section V-A.

The excavation volumes, the excavated objects numbers, and the excavation success rates of both ablation experiments are presented in Table VI. We list the mean with standard deviation in parentheses for excavation volumes and objects numbers. The original CEM-voxel experiment results in simulation are shown in Table I. CEM-voxel with random PoA and random GTP both performs worse than the original CEM-voxel in terms the three excavation metrics. This demonstrates CEM-voxel learns about how to generate both good PoA and GTP parameters for excavation. CEM-voxel with random PoA gets worse excavation performance than CEM-voxel with random GTP. This implies the learning of PoA matters more than the learning of GTP for CEM-voxel.

| Method | Volume (cm3) | Number | Success Rate |

|---|---|---|---|

| CEM-voxel with random PoA | |||

| CEM-voxel with random GTP |