Examining Machine Learning for 5G and Beyond through an Adversarial Lens

Abstract

Spurred by the recent advances in deep learning to harness rich information hidden in large volumes of data and to tackle problems that are hard to model/solve (e.g., resource allocation problems), there is currently tremendous excitement in the mobile networks domain around the transformative potential of data-driven AI/ML-based network automation, control, and analytics for 5G and beyond. In this article, we present a cautionary perspective on the use of AI/ML in the 5G context by highlighting the adversarial dimension spanning multiple types of ML (supervised/unsupervised/RL), and we support this through three case studies. We also discuss approaches to mitigate this adversarial ML risk, offer guidelines for evaluating the robustness of ML models, and call attention to issues surrounding ML-oriented research in 5G more generally.

Index Terms:

5G and Beyond 5G Mobile Networks, Adversarial Machine Learning, Security

I Introduction

Considerable industry and academic research and development efforts are currently paving the way toward 5G and Beyond 5G (B5G) networks. Unlike their 4G predecessors, 5G networks are foreseen to be the underpinning infrastructure for a diverse set of future cellular services that go well beyond mobile broadband and span multiple vertical industries. To support diverse use cases flexibly and cost-effectively, and to enable complex network functions at scale, 5G network design embraces several innovations and technologies that are new to the domain of mobile telecommunications, such as artificial intelligence (AI), software-defined networking (SDN), network function virtualization (NFV), multi-access edge computing (MEC), and cloud-native architecture.

Technical developments toward 5G and B5G mobile networks are quickly embracing a variety of deep learning (DL) algorithms as a de facto approach to tackling the growing complexity of network problems. However, the well-known vulnerability of DL models to adversarial machine learning (ML) attacks can significantly broaden the overall attack surface of 5G and beyond networks. This observation motivates us to deviate from the ongoing trend of developing yet another ML model for a 5G network problem and instead examine the robustness of existing ML models for 5G networks under adversarial ML attacks. In particular, we focus on representative use cases of deep neural network (DNN)-driven supervised learning (SL), unsupervised learning (UL), and reinforcement learning (RL) techniques in the 5G setting and highlight their brittleness when subjected to adversarial ML attacks.

Through this article, we would like to draw the attention of the research community and all stakeholders of 5G and beyond mobile networks to the security risks that emerge from the rapid, unvetted adoption of DL algorithms across the wide spectrum of network operations, control, and automation, and we urge that the robustness of ML models be made a criterion before they are integrated into deployed systems. Overall, we make the following two contributions.

1. We highlight that, despite the well-known vulnerability of DL models to adversarial ML attacks, there is a dearth of critical scrutiny of how the wide-scale adoption of ML techniques affects the security attack surface of 5G and B5G networks.

2. We bridge this gap through a vulnerability study of DL models in all their major incarnations (SL, UL, and deep RL) from an adversarial ML perspective in the context of 5G and B5G networks.

II Background

II-A Primer on 5G Architecture

A schematic diagram of the 5G network architecture is depicted in Figure 1. Apart from the user equipment (UE), the 5G system features a cloud-native core network, a flexible and disaggregated radio access network (RAN), and a provision for a multi-access edge computing (MEC) cloud for reduced latency. The gNodeB (gNB) of the RAN comprises splittable access nodes, namely distributed and centralized units (DU and CU), to efficiently handle evolved network requirements. The gNB connects to the MEC to significantly reduce network latency for selected applications by using edge servers in the MEC cloud, which is close to the radio service cells. For instance, to cater to the ultra-reliable low-latency communication (URLLC) use case of industrial automation, the RAN radio unit, along with the DU, CU, and MEC, can be installed on site. Thus, the 5G network architecture enables applications to be deployed remotely (App 3 and App 4) or near the edge (App 1 and App 2) where low latency is a requirement. The MEC also reduces the aggregated traffic load on the transport network that connects the RAN to the core network. The 5G core network (5G-CN) is a cloud-native network that stores subscriber databases and hosts essential virtualized network functions for network operations and management. Although the network management and control functions are shown co-located with the core in the figure, they can be flexibly deployed at the edge as needed.

II-B ML in 5G and B5G Networks

A wide spectrum of DL algorithms is being developed for wireless communications and 5G networking to deal with problems that are either hard to solve or hard to model [1]. For instance, optimal physical network resource allocation for NFV is an NP-hard problem, so exact solutions require computational effort that grows exponentially with system size [2]. DRL-based solutions have been proposed to address such resource allocation problems efficiently (e.g., [3]). Channel estimation for efficient beamforming is a problem that is hard to model, and DNN-based SL solutions are well accepted for tackling it [4]. Moreover, in certain use cases, conventional expert systems become inappropriate due to real-world constraints, such as limited power availability, where AI can perform effectively. For instance, deep autoencoder-based systems can replace power-hungry RF chain hardware with small embedded sensor systems, enabling them to run longer on onboard power supplies [5]. DL algorithms generally outperform conventional approaches in solving mobile network prediction problems (e.g., physical layer channel prediction by SL), signal detection problems (e.g., recovering transmitted signals from noisy received signals by UL), and optimization problems (e.g., resource allocation by RL).

III Widened Attack Surface in ML-Driven 5G and B5G Networks

The security of 5G networks is well explored (e.g., [6]), but little attention has been paid to the security of 5G and B5G networks in the face of the adversarial ML threat [8]. In this section, we briefly introduce adversarial ML in general and then outline the adversarial ML risks in 5G and B5G networks.

III-A Overview of Security Attacks on ML

The vulnerability of ML algorithms, especially DL models, to adversarial attacks is now well established. Adversarial inputs are test inputs to which small, carefully crafted perturbations have been added to fool the underlying ML model into making wrong decisions. An adversary can often successfully attack an ML model with no knowledge (black-box attack), some knowledge (grey-box attack), or full knowledge (white-box attack) of the target model. An adversary can attack the model during its training phase as well as its testing phase. Training-phase attacks are known as “poisoning attacks” and test-time attacks as “evasion attacks”. Evasion attacks are commonly referred to simply as adversarial attacks in the literature [9].

More formally, an adversarial example x* is crafted by adding a small, indistinguishable perturbation δ to a test example x of a trained ML classifier f, where x* is approximated by the nonlinear optimization problem in Equation (1) and t is the class label assigned to the perturbed example:

$$x^{*} = x + \arg\min_{\delta} \left\{ \|\delta\| : f(x + \delta) = t \right\} \qquad (1)$$

In 2013, Szegedy et al. [10] observed discontinuities in the input-output mapping of DNNs and reported that DNNs are not resilient to small changes in their input. Building on this observation, Goodfellow et al. [11] proposed a gradient-based method for crafting adversarial examples, known as the fast gradient sign method (FGSM). Papernot et al. [12] crafted adversarial perturbations using a saliency map built from the forward derivatives of a DNN; this approach is known as the Jacobian-based saliency map attack (JSMA). Carlini and Wagner [13] crafted three different adversarial attacks using three different distance metrics (L0, L2, and L∞). More details about adversarial ML attacks are given in [9, 7].
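FGSM perturbs an input by a single step of size eps along the sign of the loss gradient with respect to the input. The following is a minimal sketch, assuming a toy binary logistic-regression model rather than a DNN, so that the input gradient, (p - y)·w, can be written in closed form; the weights, bias, input, and eps are hypothetical values, not taken from the case studies in §IV.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def logistic_loss(w, b, x, y):
    """Binary cross-entropy of a logistic-regression model for one example (y in {0, 1})."""
    p = sigmoid(w @ x + b)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))


def fgsm(w, b, x, y, eps):
    """FGSM: x_adv = x + eps * sign(grad_x loss).

    For logistic regression the input gradient is analytic: (p - y) * w.
    """
    p = sigmoid(w @ x + b)
    grad_x = (p - y) * w
    return x + eps * np.sign(grad_x)


# Hypothetical toy model and input.
w = np.array([1.5, -2.0, 0.5])
b = 0.1
x = np.array([0.4, -0.3, 0.2])
y = 1
x_adv = fgsm(w, b, x, y, eps=0.1)
print(logistic_loss(w, b, x, y), logistic_loss(w, b, x_adv, y))
```

Every feature moves by exactly eps (an L∞-bounded step), and the loss on the true label increases; for a DNN the same update is applied with the gradient obtained by backpropagation.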

III-B Added Threat from Adversarial ML for 5G and Beyond

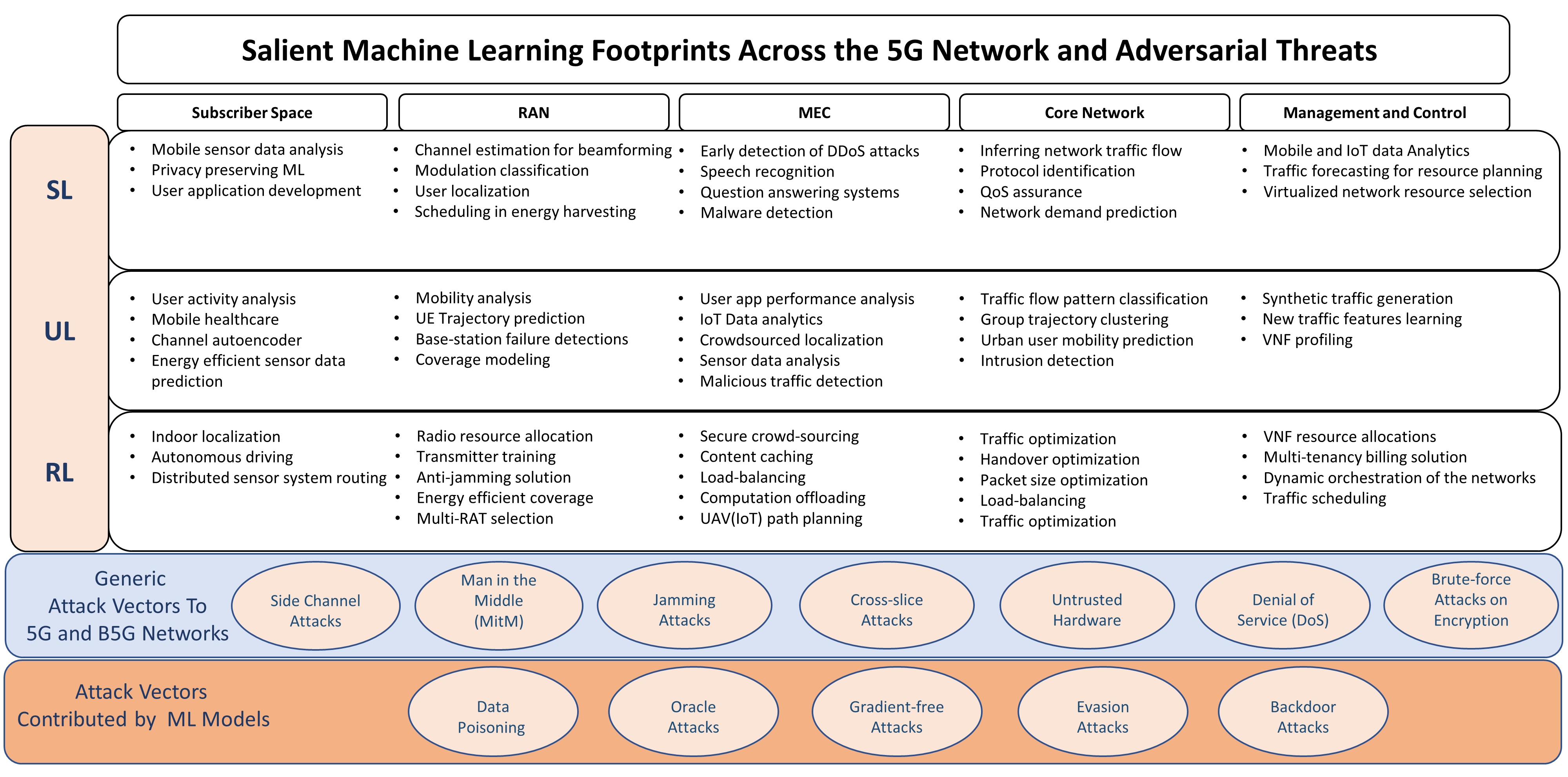

5G and B5G networks embrace ML-driven solutions for improved accuracy and smarter network operations at scale. Figure 2 illustrates problems from different 5G network segments, namely user devices, the RAN, the MEC, the core network, and the network management and control layer, that have recently attracted ML-based solutions from all three categories of ML. However, in light of the discussion in §III-A, the DL models gaining popularity in 5G and B5G networks are vulnerable to adversarial attacks, which further aggravates the security risks of future generations of mobile networks.

To show the feasibility of adversarial ML attacks on 5G systems, we take three well-known ML models, one from each of the three ML families (UL, SL, and DRL), from wireless physical layer operations relevant to the 5G and B5G context, and show the vulnerability that naive use of ML brings to future mobile networks. We choose all three models from physical layer operations because of the maturity of ML research in this area of AI-driven 5G networking and the availability of open-source ML models backed by accessible datasets (see https://mlc.committees.comsoc.org/research-library/).

IV Highlighting Adversarial ML Risk for 5G and Beyond: Three Case Studies

In this section, we critically evaluate the security threats posed by DL models from the three canonical ML families (UL, SL, and RL) in 5G and B5G networking and present our work as three case studies.

IV-A Attacking Supervised ML-based 5G Applications

Automatic modulation classification is a critical task for intelligent radio receivers, for which the signal amplitude, carrier frequency, phase offsets, and noise power distribution are unknown variables, and the signals are subject to real-world frequency-selective, time-varying channels perturbed by multipath fading and shadowing. Conventional maximum-likelihood and feature-based solutions are often infeasible due to their high computational overhead and the domain expertise involved. To make modulation classifiers more common in modern 5G and B5G networked devices, current approaches use DL to build end-to-end modulation classification systems capable of automatically extracting signal features in the wild [14].

In this case study, we pick a convolutional neural network (CNN)-driven SL-based modulation classification model to illustrate the additional dimension of vulnerability it introduces into the network. We use the well-known GNU Radio ML RML2016.10a dataset, which consists of 220,000 input examples of 11 digital and analog modulation schemes (AM-DSB, AM-SSB, WBFM, PAM4, BPSK, QPSK, 8PSK, QAM16, QAM64, CPFSK, and GFSK) at signal-to-noise ratios (SNRs) ranging from -20 dB to 18 dB [15]. However, we exclude the analog modulation schemes from our study and consider only the eight digital modulations in the dataset, because all mobile wireless standards from 2G onward use digital communications. Figure 3 depicts the multi-class modulation classification performance of the CNN model for signals with SNRs between -20 dB and 18 dB.
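The filtering step described above can be sketched as follows, assuming the dataset has been loaded into a Python dict keyed by (modulation, SNR) pairs, each holding an array of I/Q examples shaped (examples, 2, 128), which is how we understand the public RML2016.10a pickle to be organized. To keep the sketch self-contained, a small random stand-in replaces the real data; `make_standin`, `digital_subset`, and `to_arrays` are hypothetical helper names.

```python
import numpy as np

# Modulation names as listed for RML2016.10a; the analog ones are excluded in this case study.
MODS = ["8PSK", "AM-DSB", "AM-SSB", "BPSK", "CPFSK", "GFSK",
        "PAM4", "QAM16", "QAM64", "QPSK", "WBFM"]
ANALOG = {"AM-DSB", "AM-SSB", "WBFM"}
SNRS = list(range(-20, 20, 2))  # -20, -18, ..., 18 dB -> 20 SNR levels


def make_standin(per_key=4, seed=0):
    """Hypothetical stand-in for the real dataset: {(mod, snr): array of shape (per_key, 2, 128)}."""
    rng = np.random.default_rng(seed)
    return {(m, s): rng.standard_normal((per_key, 2, 128)).astype(np.float32)
            for m in MODS for s in SNRS}


def digital_subset(dataset):
    """Keep only the digital modulation schemes."""
    return {key: iq for key, iq in dataset.items() if key[0] not in ANALOG}


def to_arrays(subset):
    """Stack the subset into X of shape (N, 2, 128) and integer labels y of shape (N,)."""
    mods = sorted({mod for mod, _ in subset})
    keys = sorted(subset)
    X = np.concatenate([subset[k] for k in keys])
    y = np.concatenate([np.full(len(subset[k]), mods.index(k[0])) for k in keys])
    return X, y, mods


data = make_standin()
X, y, mods = to_arrays(digital_subset(data))
print(X.shape, len(mods))  # (640, 2, 128) 8, i.e. 8 modulations x 20 SNRs x 4 stand-in examples
```

With the dataset's 220,000 examples spread over 11 modulations and 20 SNR levels (1,000 per pair), the same filter keeps 8 × 20 × 1,000 = 160,000 examples.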

To show the feasibility of an adversarial ML attack on the CNN-based modulation classifier, we make the following assumptions:

- We consider a white-box attack model, in which the adversary has complete knowledge of the deployed modulation classifier.
- The goal of the adversary is to compromise the integrity of the CNN classifier, causing a significant decay in classification accuracy; this decay is the measure of the adversary's success.

To craft adversarial examples that fool the CNN classifier, we use the Carlini & Wagner (C&W) attack [13] for each modulation class, minimizing the norm of the perturbation δ such that, when δ is added to the input x and the result is fed to the CNN-based modulation classifier, the classifier misclassifies the input. More details on the C&W attack are available in [13]. The performance of the CNN-based modulation classifier before and during the adversarial attack is depicted in Figure 3. The distinct drop in modulation classification accuracy under attack indicates the brittleness of deep supervised ML in 5G and B5G applications. Moreover, our results show that adopting unsafe DL models in the physical layer operations of 5G and B5G networks can make the air interface of future networks vulnerable to adversarial ML attacks.
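The C&W attack trades off the size of the perturbation against a hinge on the logit margin between the true class and the strongest competing class, i.e. it minimizes ||δ||₂² + c·max(z_y - max_{j≠y} z_j, -κ). The following is a deliberately simplified, untargeted L2 sketch on a hypothetical linear classifier with logits z = W(x + δ) + b. It uses plain gradient descent with a fixed constant c and confidence κ, and omits the change of variables, the binary search over c, and the Adam optimizer used in [13]; it is not the CNN attack behind Figure 3.

```python
import numpy as np


def cw_l2_linear(W, b, x, y, c=10.0, kappa=0.1, lr=0.01, steps=1000):
    """Simplified untargeted C&W-style L2 attack on logits z = W @ (x + delta) + b.

    Minimizes ||delta||_2^2 + c * max(z_y - max_{j != y} z_j, -kappa) by plain gradient
    descent and returns the smallest misclassifying perturbation found (or None).
    """
    delta = np.zeros_like(x)
    best = None
    others = np.delete(np.arange(W.shape[0]), y)      # all classes except the true one
    for _ in range(steps):
        z = W @ (x + delta) + b
        j = others[np.argmax(z[others])]              # strongest competing class
        margin = z[y] - z[j]
        if margin < 0 and (best is None or np.linalg.norm(delta) < np.linalg.norm(best)):
            best = delta.copy()                       # current delta already misclassifies x
        grad = 2.0 * delta                            # gradient of ||delta||_2^2
        if margin > -kappa:                           # hinge term is active
            grad = grad + c * (W[y] - W[j])
        delta = delta - lr * grad
    return best


# Hypothetical 3-class, 2-feature linear model; x is classified as class 0.
W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
b = np.zeros(3)
x = np.array([2.0, 0.0])
delta = cw_l2_linear(W, b, x, y=0)
print(np.argmax(W @ (x + delta) + b), np.linalg.norm(delta))
```

The hinge stops pushing once the margin passes -κ, so the squared-norm term pulls δ back toward the decision boundary; this is what keeps C&W perturbations small compared with a fixed-step attack.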

IV-B Attacking Unsupervised ML-based 5G Applications

In 2016, O’Shea et al. proposed the idea of channel autoencoders, an abstraction of how an end-to-end radio communication module functions in real-world wireless systems [17]. The deep autoencoder-based communication model has rapidly gained popularity and is seen as a viable alternative to dedicated radio hardware in future 5G and beyond networks [5]. Figure 4(a) depicts the conceptual design of the channel autoencoder that we choose as the deep UL model for this case study. We assume the model is subject to an additive white Gaussian noise (AWGN) channel and apply the parameter configurations provided in [16]. To perform the adversarial ML attack on the channel autoencoder, we consider the following threat model and compare the performance of the model with and without the attack.

- We assume a white-box setting, in which the adversary has complete knowledge of the deployed ML model. We further assume that the autoencoder learns a broadcast channel. The proposed adversarial attack on the channel autoencoder can be converted into a black-box attack, in which the adversary has zero knowledge of the target ML model, by following the surrogate model approach provided in [18].
- The goal of the adversary is to compromise the integrity of the channel autoencoder, and the adversary's success is measured by an elevated block error rate (BLER) across increasing SNR per bit (Eb/N0).
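BLER as a function of Eb/N0, the success metric above, is typically estimated by Monte Carlo simulation. The following sketch assumes the AWGN convention we understand [16] to use (each codeword normalized to energy n, noise variance 1/(2R·Eb/N0) per real dimension, with code rate R = k/n) and substitutes a fixed QPSK-like codebook with a nearest-codeword decoder for the learned encoder and decoder. The functions `awgn` and `bler` and all parameter values are illustrative assumptions.

```python
import numpy as np


def awgn(x, ebno_db, k, n, rng):
    """Add white Gaussian noise with variance 1 / (2 * R * Eb/N0) per real dimension, R = k / n."""
    rate = k / n
    ebno = 10.0 ** (ebno_db / 10.0)
    sigma = np.sqrt(1.0 / (2.0 * rate * ebno))
    return x + sigma * rng.standard_normal(x.shape)


def bler(codebook, ebno_db, k, n, num_blocks=20000, seed=0, perturbation=None):
    """Monte Carlo BLER; `perturbation` (optional, shape (n,)) models an additive signal at the receiver."""
    rng = np.random.default_rng(seed)
    msgs = rng.integers(0, codebook.shape[0], size=num_blocks)   # transmitted messages
    y = awgn(codebook[msgs], ebno_db, k, n, rng)                  # received blocks
    if perturbation is not None:
        y = y + perturbation
    # Nearest-codeword decoding stands in for the learned decoder.
    dists = ((y[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return float(np.mean(dists.argmin(axis=1) != msgs))


# Hypothetical (n, k) = (2, 2) code: four QPSK-like codewords, each with energy ||x||^2 = n = 2.
codebook = np.array([[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]])
for ebno_db in (-2.0, 4.0, 8.0):
    print(ebno_db, bler(codebook, ebno_db, k=2, n=2))
```

Passing, for example, `perturbation=np.array([0.9, 0.9])` shows where an additive signal enters and how it keeps BLER high even at good Eb/N0; such a fixed vector is a crude jamming-like stand-in, not the adversarial perturbation crafted in this case study.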

We take the following two-step, data-independent approach to craft adversarial examples for the channel autoencoder:

1. Randomly sample from a Gaussian distribution (chosen because the channel is AWGN) and use the sample as the initial adversarial perturbation δ;
2. Maximize the mean activations of the decoder model when the input of the decoder is the perturbation δ.

This produces maximal spurious activations at each decoder layer and results in a loss of integrity of the channel autoencoder. Figure 4(b) shows the performance of the model before and under the adversarial attack; the figure also suggests that the adversarial ML attack often outperforms traditional jamming attacks.
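The two-step, data-independent procedure above can be sketched as projected gradient ascent on the sum of per-layer mean ReLU activations of the decoder, started from a Gaussian sample. This is a minimal sketch under assumptions that are ours, not the paper's: the decoder is a hypothetical two-layer ReLU network with random weights standing in for the trained decoder of [16], its input has n = 7 dimensions, and the perturbation is kept within an assumed L2 budget `eps` by projection. The gradient is written out by hand so that only NumPy is needed.

```python
import numpy as np


def forward(delta, layers):
    """Return pre-activations and ReLU outputs of every decoder layer for input delta."""
    pres, hs = [], [delta]
    for W, b in layers:
        z = W @ hs[-1] + b
        pres.append(z)
        hs.append(np.maximum(0.0, z))
    return pres, hs


def objective(delta, layers):
    """Sum over layers of the mean ReLU activation (the quantity to maximize)."""
    _, hs = forward(delta, layers)
    return sum(h.mean() for h in hs[1:])


def grad_objective(delta, layers):
    """Backpropagate the objective to the decoder input."""
    pres, hs = forward(delta, layers)
    g = np.zeros_like(hs[-1])
    for i in reversed(range(len(layers))):
        W, _ = layers[i]
        g = g + 1.0 / hs[i + 1].size        # d(mean of this layer's output) / d(output)
        g = (g * (pres[i] > 0)) @ W         # through the ReLU, then W^T: gradient w.r.t. layer input
    return g


def craft_perturbation(layers, dim, eps=1.0, steps=200, lr=0.05, seed=0):
    rng = np.random.default_rng(seed)
    delta = rng.standard_normal(dim)        # step 1: Gaussian initialization (AWGN channel)
    delta *= eps / np.linalg.norm(delta)    # place it on the assumed L2 budget
    best, best_val = delta.copy(), objective(delta, layers)
    for _ in range(steps):                  # step 2: projected gradient ascent
        delta = delta + lr * grad_objective(delta, layers)
        norm = np.linalg.norm(delta)
        if norm > eps:
            delta *= eps / norm
        val = objective(delta, layers)
        if val > best_val:
            best, best_val = delta.copy(), val
    return best, best_val


# Hypothetical decoder: 7 -> 16 -> 16 ReLU layers with random weights.
rng = np.random.default_rng(42)
layers = [(rng.standard_normal((16, 7)), np.zeros(16)),
          (rng.standard_normal((16, 16)) / 4.0, np.zeros(16))]
delta, value = craft_perturbation(layers, dim=7)
print(round(value, 3), round(float(np.linalg.norm(delta)), 3))
```

Because the objective never looks at transmitted data, the resulting δ can be added to any received block, which is what makes the approach data-independent.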

Since the idea of a channel autoencoder in a wireless device is to model the on-board communication system as an end-to-end optimizable operation, the adversarial ML attacks on the channel autoencoder show that applying unsupervised ML in 5G mobile networks increases their vulnerability to adversarial examples. Hence, we argue that deep UL-based 5G networked systems and applications need to be revisited for robustness before being integrated into 5G IoT and related systems.

IV-C Attacking DRL-based 5G Applications

In the final case study, we perform adversarial ML attacks on an end-to-end DRL autoencoder with a noisy channel feedback system [19]. Before detailing the attack on this DRL-based communication system, we briefly describe the DRL-based channel autoencoder. Goutay et al. [19] take the same architecture considered in the previous case study (§IV-B) and add a noisy feedback mechanism to it, as shown in Figure 5(a). The end-to-end training procedure involves:

1. RL-based transmitter training using the policy gradient theorem [20], so that the transmitter learns from the noisy feedback after each round of communication;
2. supervised receiver training, in which the receiver is trained as a classifier.
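Step 1 relies on the fact that the transmitter can be trained from scalar loss feedback alone, without differentiating through the channel. A minimal sketch of the underlying score-function (REINFORCE) estimator, assuming a simple Gaussian exploration policy in which the transmitted signal is the encoder output x plus noise w ~ N(0, σ²I): the gradient of the expected loss is estimated as the average of (loss - baseline)·w/σ². The policy parametrization in [19] may differ; `quadratic_loss` is a hypothetical stand-in for the receiver's fed-back loss, chosen because its true gradient, 2(x - target), is known and lets us check the estimate.

```python
import numpy as np


def score_function_grad(x, loss_fn, sigma=0.1, num_samples=200_000, seed=0):
    """REINFORCE estimate of d/dx E[loss(x + w)], w ~ N(0, sigma^2 I).

    Only scalar loss values are used (as if fed back by the receiver);
    loss_fn itself is never differentiated.
    """
    rng = np.random.default_rng(seed)
    w = sigma * rng.standard_normal((num_samples, x.size))   # Gaussian exploration noise
    losses = loss_fn(x + w)                                    # per-sample feedback, shape (num_samples,)
    baseline = losses.mean()                                   # baseline for variance reduction
    # The gradient of log N(x + w; x, sigma^2 I) with respect to x is w / sigma^2.
    return ((losses - baseline)[:, None] * w).mean(axis=0) / sigma**2


target = np.array([0.5, 1.0])


def quadratic_loss(xp):
    """Hypothetical per-sample loss ||xp - target||^2 (one value per row of xp)."""
    return ((xp - target) ** 2).sum(axis=1)


x = np.array([1.5, 0.5])
print(score_function_grad(x, quadratic_loss))  # close to the true gradient 2 * (x - target) = [2, -1]
```

With noisy feedback, the fed-back losses are themselves corrupted, which adds variance to this estimate but, under zero-mean feedback noise, does not bias it.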

Both the transmitter (encoder) and the receiver (decoder) are implemented as separate parametric functions (differentiable DNN architectures) that can be optimized jointly. The communication channel is modeled as a stochastic system described by the conditional distribution of the decoder's input given the encoder's output. More details on the design and training procedure are available in [19]. The threat model considered for this case study is as follows:

- We choose a black-box setting, in which the adversary does not know the target model. This setting is realistic because the attacker has no information about the deployed encoder and decoder models and can only attack by adding perturbations to the broadcast channel. We also assume that the adversary can perform the adversarial ML attack over a limited number of time steps.
- The goal of the adversary is to compromise the performance of the DRL autoencoder with noisy feedback for a specific time interval. The adversary's success is measured by the degradation of the decoder's performance during the attack interval.

To evaluate the robustness of the DRL model, we exploit the transferability property of adversarial examples: adversarial examples that compromise one ML model are likely to compromise other ML models trained on the same underlying data distribution. We therefore opt for the surrogate model approach and transfer the adversarial examples crafted in the previous case study (§IV-B), measuring the average accuracy of the receiver. We run the DRL autoencoder with noisy feedback for 600 time steps (one time step equals one communication round) and perform the adversarial attack in the window between time steps 200 and 400. We transfer 200 successful perturbations from the previous case study (§IV-B) and report the performance of the DRL system. Figure 5(b) shows the performance of the receiver (decoder) of the DRL autoencoder: the receiver's performance degrades from 95% to nearly 80% during the adversarial attack window. The recovery of performance after the attack window indicates that the end-to-end DRL autoencoder communication system can recover, owing to the noisy feedback and the adaptive learning behavior of DRL.
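The attack protocol above (600 communication rounds, with the 200 transferred perturbations applied between time steps 200 and 400) can be expressed as a schedule plus a per-phase average. The sketch assumes that each attacked round uses a distinct transferred perturbation, which is our reading of the setup rather than a stated detail; `attack_schedule` and `phase_means` are hypothetical helpers, and the per-step accuracy values in the usage lines are placeholders, not results from Figure 5(b).

```python
import numpy as np


def attack_schedule(num_steps=600, start=200, end=400):
    """Index of the transferred perturbation used at each round, or -1 when no attack is applied."""
    schedule = np.full(num_steps, -1)
    schedule[start:end] = np.arange(end - start)   # one perturbation per attacked round
    return schedule


def phase_means(per_step_accuracy, start=200, end=400):
    """Mean receiver accuracy before, during, and after the attack window."""
    acc = np.asarray(per_step_accuracy, dtype=float)
    return acc[:start].mean(), acc[start:end].mean(), acc[end:].mean()


schedule = attack_schedule()
placeholder_acc = np.where(schedule >= 0, 0.0, 1.0)   # placeholder values only
print(int((schedule >= 0).sum()), phase_means(placeholder_acc))
```

Reporting the three phase averages separately, rather than one average over all 600 rounds, keeps both the degradation and the post-attack recovery visible.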

Our results, as presented in this section, confirm the feasibility of adversarial ML attacks on DL-based applications from all three types of ML algorithms prevalent in 5G network systems, and they highlight the additional threat landscape that emerges from integrating vulnerable DL models into 5G and B5G networks.

V Discussion

In this section, we discuss the inadequacies of ML models in addressing the comprehensive technology needs of 5G networks and recommend measures toward robust ML models suitable for adoption in 5G and B5G networks.

V-A Towards Robust ML-Driven 5G and Beyond Networks

Robustness against adversarial ML attacks is a very challenging problem; to date, no defense ensures complete protection against them. In our previous works [9, 21], we extensively surveyed the adversarial ML literature on robustness against adversarial examples and showed that nearly all defensive measures proposed in the literature fall into three categories:

1. modifying data approaches (adversarial training, feature squeezing, input masking, etc.);
2. auxiliary model addition approaches (generative model addition, ensemble defenses, etc.);
3. modifying model approaches (defensive distillation, model masking, gradient regularization, etc.).
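As an example of the first category (modifying data), adversarial training replaces or augments the training inputs with adversarial examples crafted against the current model at each step. A minimal sketch, assuming a toy binary logistic-regression model, FGSM as the inner attack with an L∞ budget of 0.5, and synthetic two-cluster data; all of these are hypothetical choices, not the 5G models of §IV.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm_batch(w, b, X, y, eps):
    """FGSM for logistic regression: the input gradient of the loss is (p - y) * w."""
    p = sigmoid(X @ w + b)
    return X + eps * np.sign((p - y)[:, None] * w[None, :])


def train_logreg(X, y, eps=0.0, lr=0.1, epochs=500):
    """Full-batch gradient descent; eps > 0 trains on FGSM examples (adversarial training)."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        Xt = fgsm_batch(w, b, X, y, eps) if eps > 0 else X   # attack the current model
        p = sigmoid(Xt @ w + b)
        w -= lr * Xt.T @ (p - y) / len(y)
        b -= lr * np.mean(p - y)
    return w, b


def accuracy(w, b, X, y):
    return float(np.mean((X @ w + b > 0) == y))


# Hypothetical, well-separated two-class data.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2.0, 0.5, (200, 2)), rng.normal(2.0, 0.5, (200, 2))])
y = np.concatenate([np.zeros(200), np.ones(200)])

w, b = train_logreg(X, y, eps=0.5)
print(accuracy(w, b, X, y), accuracy(w, b, fgsm_batch(w, b, X, y, 0.5), y))
```

For a DNN-based 5G model the inner attack would normally be stronger than FGSM, and, as the guidelines below stress, robustness measured against the training-time attack says little about robustness against an adaptive adversary.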

Our results in §IV indicate that ML-based 5G applications are highly vulnerable to adversarial ML attacks. Little work exists on recommendations and guidelines for evaluating the robustness of ML in 5G applications. Below, we provide a few important guidelines for evaluating ML-based 5G applications against adversarial ML attacks, drawn from Carlini et al. [22] and our previous works [23, 9].

- Many defenses against adversarial attacks are available in the literature, but they are limited by the design of the application. Using them without considering the threat model of the ML-based 5G application can create a false sense of security. Threat models for ML-based 5G applications must therefore clearly state the assumptions made, the type of adversary, and the metrics used to evaluate the defense.
- Always test the defense against the strongest known attack and use it as a baseline. Evaluating against an adaptive adversary is also necessary.
- Evaluate the defense against gradient-based, gradient-free, and random noise-based attacks (see https://www.robust-ml.org/).
- Clearly state the evaluation metrics (accuracy, recall, precision, F1 score, ROC, etc.) used to validate the defense, and always check for changes in false positives and false negatives.
- Evaluate the defense against out-of-distribution examples and transferability-based adversarial attacks.
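Several of these guidelines (comparing a random noise baseline with a gradient-based attack at the same budget, and tracking false positives and false negatives rather than accuracy alone) can be illustrated with a small evaluation harness. This sketch assumes a hypothetical binary linear classifier and synthetic data; it omits gradient-free (query-based) attacks, adaptive adversaries, and transfer attacks, which a real evaluation of an ML-based 5G application should include.

```python
import numpy as np


def predict(w, b, X):
    return (X @ w + b > 0).astype(int)


def fp_fn(y_true, y_pred):
    """False positives and false negatives for binary labels in {0, 1}."""
    fp = int(np.sum((y_pred == 1) & (y_true == 0)))
    fn = int(np.sum((y_pred == 0) & (y_true == 1)))
    return fp, fn


def evaluate(w, b, X, y, eps, seed=0):
    """Accuracy, FP, and FN with no attack, random sign noise, and FGSM, all within an L-inf budget eps."""
    rng = np.random.default_rng(seed)
    p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
    inputs = {
        "clean": X,
        "random_noise": X + eps * rng.choice([-1.0, 1.0], size=X.shape),
        "fgsm": X + eps * np.sign((p - y)[:, None] * w[None, :]),
    }
    report = {}
    for name, Xa in inputs.items():
        y_pred = predict(w, b, Xa)
        report[name] = (float(np.mean(y_pred == y)),) + fp_fn(y, y_pred)
    return report


# Hypothetical model and labelled data.
rng = np.random.default_rng(1)
X = rng.normal(0.0, 1.0, (500, 2))
y = (X.sum(axis=1) + rng.normal(0.0, 0.5, 500) > 0).astype(int)
w, b = np.array([1.0, 1.0]), 0.0
for name, (acc, fp, fn) in evaluate(w, b, X, y, eps=0.3).items():
    print(f"{name}: accuracy={acc:.3f} FP={fp} FN={fn}")
```

A defense that looks good only against the random noise row, or that trades false negatives for false positives, would be flagged by this kind of side-by-side report.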

Although these recommendations and many others in [23, 9, 21, 22] can help in designing a suitable defense against adversarial examples, this remains an open research problem in adversarial ML and is ripe for investigation in ML-based 5G applications.

V-B Beyond Vulnerability to Adversarial ML Attacks

Apart from the vulnerability of ML models to adversarial ML attacks, we underline the following drawbacks, which call into question the viability of integrating ML-driven solutions into real-world 5G networks any time soon.

V-B1 Lack of real-world datasets

The availability of large volumes of real-world data is the fuel of ML models; the advancement of modern DL research, in particular, depends critically on easy access to a variety of datasets in the research community. However, diverse real-world datasets in telecommunications and mobile networking are not readily available due to privacy issues and the stringent data-sharing policies adopted by telecom operators worldwide. Hence, much ML research in the telecom domain still depends on synthetic data, which often falls short of representing real-world randomness and variations. Thus, current state-of-the-art ML models for telecommunication applications often cannot replace the domain-knowledge-based expert systems currently in operation.

V-B2 Lack of explainability

In ML, model accuracy often comes at the cost of explainability. DL models provide highly accurate outputs but lack an explanation of why a particular output is produced. Explaining a decision often becomes a critical requirement in 5G networks, especially because many critical services, such as transport signaling, connected vehicles, and URLLC, depend on the 5G infrastructure.

V-B3 Lack of operational success of ML in real-world mobile networks

A plethora of ML models exists in the mobile networking literature, yet there is a dearth of ML models in currently operational mobile networks. We attacked ML models running in ideal environments, simulated under favorable lab conditions, and still the victim models could not withstand the adversaries. In real-world mobile networks, ML models must be deployed and function in unforeseen, random environments, which leaves them even more vulnerable to cyber-attacks. Moreover, the computational overhead, hardware enhancement requirements, and run-time delay introduced by ML models become critical factors for the operational success of ML in real-world, large-scale mobile networks such as 5G.

VI Conclusions

Security and privacy are uncompromising necessities for modern and future global network standards such as 5G and Beyond 5G (B5G), and accordingly there is interest in fortifying these networks to thwart attacks and withstand the rapidly evolving landscape of future security threats. This article specifically highlights that the adoption of a large number of DL-driven solutions in 5G and B5G networking gives rise to security concerns that remain unaddressed by 5G standardization bodies such as 3GPP. We argue that this is the right time for cross-disciplinary research endeavors spanning ML and cybersecurity to gain momentum, enabling secure and trusted 5G and B5G mobile networks for all future stakeholders. We hope that our work will motivate further research toward a “telecom-grade ML” that is safe and trustworthy enough to be incorporated into 5G and beyond 5G networks, thereby powering intelligent and robust mobile networks that support diverse services, including mission-critical systems.

References

- [1] Rubayet Shafin, Lingjia Liu, Vikram Chandrasekhar, Hao Chen, Jeffrey Reed, and Jianzhong Charlie Zhang. Artificial intelligence-enabled cellular networks: A critical path to beyond-5G and 6G. IEEE Wireless Communications, 27(2):212–217, 2020.

- [2] Aun Haider, Richard Potter, and Akihiro Nakao. Challenges in resource allocation in network virtualization. In 20th ITC specialist seminar, volume 18. ITC, 2009.

- [3] Xenofon Foukas, Mahesh K Marina, and Kimon Kontovasilis. Iris: Deep reinforcement learning driven shared spectrum access architecture for indoor neutral-host small cells. IEEE Journal on Selected Areas in Communications, 37(8):1820–1837, 2019.

- [4] Hongji Huang, Jie Yang, Hao Huang, Yiwei Song, and Guan Gui. Deep learning for super-resolution channel estimation and DOA estimation based massive MIMO system. IEEE Transactions on Vehicular Technology, 67(9):8549–8560, 2018.

- [5] Ben Hilburn, Timothy J. O’Shea, Tamoghna Roy, and Nathan West. DeepSig: Deep learning for wireless communications, 2018. Retrieved July 2020 from https://developer.nvidia.com/blog/deepsig-deep-learning-wireless-communications/.

- [6] Ijaz Ahmad, Shahriar Shahabuddin, Tanesh Kumar, Jude Okwuibe, Andrei Gurtov, and Mika Ylianttila. Security for 5G and beyond. IEEE Communications Surveys & Tutorials, 21(4):3682–3722, 2019.

- [7] Battista Biggio and Fabio Roli. Wild patterns: Ten years after the rise of adversarial machine learning. Pattern Recognition, 84:317–331, 2018.

- [8] Yalin E Sagduyu, Yi Shi, Tugba Erpek, William Headley, Bryse Flowers, George Stantchev, and Zhuo Lu. When wireless security meets machine learning: Motivation, challenges, and research directions. arXiv preprint arXiv:2001.08883, 2020.

- [9] A. Qayyum, M. Usama, J. Qadir, and A. Al-Fuqaha. Securing connected autonomous vehicles: Challenges posed by adversarial machine learning and the way forward. IEEE Communications Surveys & Tutorials, 22(2):998–1026, 2020.

- [10] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. Intriguing properties of neural networks. arXiv preprint arXiv:1312.6199, 2013.

- [11] Ian J Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572, 2014.

- [12] Nicolas Papernot, Patrick McDaniel, Somesh Jha, Matt Fredrikson, Z Berkay Celik, and Ananthram Swami. The limitations of deep learning in adversarial settings. In Security and Privacy (EuroS&P), 2016 IEEE European Symposium on, pages 372–387. IEEE, 2016.

- [13] Nicholas Carlini and David Wagner. Towards evaluating the robustness of neural networks. In Security and Privacy (SP), 2017 IEEE Symposium on, pages 39–57. IEEE, May 2017.

- [14] Fan Meng, Peng Chen, Lenan Wu, and Xianbin Wang. Automatic modulation classification: A deep learning enabled approach. IEEE Transactions on Vehicular Technology, 67(11):10760–10772, 2018.

- [15] Timothy J O’Shea and Nathan West. Radio machine learning dataset generation with GNU radio. In Proceedings of the GNU Radio Conference, volume 1, 2016.

- [16] Timothy O’Shea and Jakob Hoydis. An introduction to deep learning for the physical layer. IEEE Transactions on Cognitive Communications and Networking, 3(4):563–575, 2017.

- [17] Timothy J O’Shea, Kiran Karra, and T Charles Clancy. Learning to communicate: Channel auto-encoders, domain specific regularizers, and attention. In 2016 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), pages 223–228. IEEE, 2016.

- [18] M. Usama, A. Qayyum, J. Qadir, and A. Al-Fuqaha. Black-box adversarial machine learning attack on network traffic classification. In 2019 15th International Wireless Communications & Mobile Computing Conference (IWCMC), pages 84–89, 2019.

- [19] Mathieu Goutay, Fayçal Ait Aoudia, and Jakob Hoydis. Deep reinforcement learning autoencoder with noisy feedback. In 2019 17th International Symposium on Modeling and Optimization in Mobile, Ad Hoc, and Wireless Networks, (WIOPT), 2019.

- [20] Richard S Sutton, David A McAllester, Satinder P Singh, and Yishay Mansour. Policy gradient methods for reinforcement learning with function approximation. In Advances in neural information processing systems, pages 1057–1063, 2000.

- [21] Inaam Ilahi, Muhammad Usama, Junaid Qadir, Muhammad Umar Janjua, Ala Al-Fuqaha, Dinh Thai Hoang, and Dusit Niyato. Challenges and countermeasures for adversarial attacks on deep reinforcement learning. arXiv preprint arXiv:2001.09684, 2020.

- [22] Nicholas Carlini, Anish Athalye, Nicolas Papernot, Wieland Brendel, Jonas Rauber, Dimitris Tsipras, Ian Goodfellow, Aleksander Madry, and Alexey Kurakin. On evaluating adversarial robustness. arXiv preprint arXiv:1902.06705, 2019.

- [23] M. Usama, J. Qadir, A. Al-Fuqaha, and M. Hamdi. The adversarial machine learning conundrum: Can the insecurity of ML become the achilles’ heel of cognitive networks? IEEE Network, 34(1):196–203, 2020.