Exact Solutions of a Deep Linear Network

Abstract

This work finds the analytical expression of the global minima of a deep linear network with weight decay and stochastic neurons, a fundamental model for understanding the landscape of neural networks. Our result implies that the origin is a special point in deep neural network loss landscape where highly nonlinear phenomenon emerges. We show that weight decay strongly interacts with the model architecture and can create bad minima at zero in a network with more than hidden layer, qualitatively different from a network with only hidden layer. Practically, our result implies that common deep learning initialization methods are insufficient to ease the optimization of neural networks in general.

1 Introduction

Applications of neural networks have achieved great success in various fields. One central open question is why neural networks, being nonlinear and containing many saddle points and local minima, can sometimes be optimized easily (Choromanska et al., 2015a, ) while becoming difficult and requiring many tricks to train in some other scenarios (Glorot and Bengio,, 2010; Gotmare et al.,, 2018). One established approach is to study the landscape of deep linear nets (Choromanska et al., 2015b, ), which are believed to approximate the landscape of a nonlinear net well. A series of works proved the famous results that for a deep linear net, all local minima are global (Kawaguchi,, 2016; Lu and Kawaguchi,, 2017; Laurent and Brecht,, 2018), which is regarded to have successfully explained why deep neural networks are so easy to train because it implies that initialization in any attractive basin can reach the global minimum without much effort (Kawaguchi,, 2016). However, the theoretical problem of when and why neural networks can be hard to train is understudied.

In this work, we theoretically study a deep linear net with weight decay and stochastic neurons, whose loss function takes the following form in general:

| (1) |

where denotes the expectation over the training set, and are the model parameters, is the depth of the network,111In this work, we use “depth” to refer to the number of hidden layers. For example, a linear regressor has depth . is the noise in the hidden layer (e.g., due to dropout), is the width of the -th layer, and is the strength of the weight decay. Previous works have studied special cases of this loss function. For example, Kawaguchi, (2016) and Lu and Kawaguchi, (2017) study the landscape of when is a constant (namely, when there is no noise). Mehta et al., (2021) studies with (a more complicated type of) weight decay but without stochasticity and proved that all the stationary points are isolated. Another line of works studies when the noise is caused by dropout (Mianjy and Arora,, 2019; Cavazza et al.,, 2018). Our setting is more general than the previous works in two respects. First, apart from the mean square error (MSE) loss , an regularization term (weight decay) with arbitrary strength is included; second, the noise is arbitrary. Thus, our setting is arguably closer to the actual deep learning practice, where the injection of noises to latent layers is common, and the use of weight decay is virtually ubiquitous (Krogh and Hertz,, 1992; Loshchilov and Hutter,, 2017). One major limitation of our work is that we assume the label to be -dimensional, and it can be an important future problem to prove whether an exact solution exists or not when is high-dimensional.

Our foremost contribution is to prove that all the global minimum of an arbitrarily deep and wide linear net takes a simple analytical form. In other words, we identify in closed form the global minima of Eq. (1) up to a single scalar, whose analytical expression does not exist in general. We then show that it has nontrivial properties that can explain many phenomena in deep learning. In particular, the implications of our result include (but are not limited to):

-

1.

Weight decay makes the landscape of neural nets more complicated;

-

•

we show that bad minima222Unless otherwise specified, we use the word “bad minimum” to mean a local minimum that is not a global minimum. emerge as weight decay is applied, whereas there is no bad minimum when there is no weight decay. This highlights the need to escape bad local minima in deep learning with weight decay.

-

•

-

2.

Deeper nets are harder to optimize than shallower ones;

-

•

we show that a linear net contains a bad minimum at zero, whereas a net does not. This partially explains why deep networks are much harder to optimize than shallower ones in deep learning practice.

-

•

-

3.

Depending on the task, the common initialization methods (such as the Kaiming init.) can initialize a deep model in the basin of attraction of the bad minimum at zero;

-

•

common initialization methods initialize the models at a radius of roughly around the origin; however, we show that the width of the bad minimum is task-dependent and can be larger than the initialization radius for tasks with a small margin ();

-

•

-

4.

Thus, the use of (effective) weight decay is a major cause of various types of collapses in deep learning (for example, see Figure 1).

Organization: In the next section, we discuss the related works. In Section 3, we derive the exact solution for a two-layer net. Section 4 extends the result to an arbitrary depth. In Section 5, we study and discuss the relevance of our results to many commonly encountered problems in deep learning. The last section concludes the work and discusses unresolved open problems. All proofs are delayed to Section B. Moreover, additional theoretical results on the effect of including a bias term is considered in Section D.

Notation. For a matrix , we use to denote the -th row vector of . denotes the norm if is a vector and the Frobenius norm if is a matrix. The notation signals an optimized quantity. Additionally, we use the superscript ∗ and subscript ∗ interchangeably, whichever leads to a simpler expression. For example, and denote the same quantity, while the former is “simpler."

2 Related Works

In many ways, linear networks have been used to help understand nonlinear networks. For example, even at depth , where the linear net is just a linear regressor, linear nets are shown to be relevant for understanding the generalization behavior of modern overparametrized networks (Hastie et al.,, 2019). Saxe et al., (2013) studies the training dynamics of a depth- network and uses it to understand the dynamics of learning of nonlinear networks. These networks are the same as a linear regression model in terms of expressivity. However, the loss landscape is highly complicated due to the existence of more than one layer, and linear nets are widely believed to approximate the loss landscape of a nonlinear net (Kawaguchi,, 2016; Hardt and Ma,, 2016; Laurent and Brecht,, 2018). In particular, the landscape of linear nets has been studied as early as 1989 in Baldi and Hornik, (1989), which proposed the well-known conjecture that all local minima of a deep linear net are global. This conjecture is first proved in Kawaguchi, (2016), and extended to other loss functions and deeper depths in Lu and Kawaguchi, (2017) and Laurent and Brecht, (2018). Many relevant contemporary deep learning problems can be understood with deep linear models. For example, two-layer linear VAE models are used to understand the cause of the posterior collapse problem (Lucas et al.,, 2019; Wang and Ziyin,, 2022). Deep linear nets are also used to understand the neural collapse problem in contrastive learning (Tian,, 2022). We also provide more empirical evidence in Section 5.

3 Two-layer Linear Net

This section finds the global minima of a two-layer linear net. The data point is a -dimensional vector drawn from an arbitrary distribution, and the labels are generated through an arbitrary function . For generality, we let different layers have different strengths of weight decay even though they often take the same value in practice. We want to minimize the following objective:

| (2) |

where is the width of the hidden layer and are independent random variables. and are the weight decay parameters. Here, we consider a general type of noise with and where is the Kronecker’s delta, and .333While we formally require and to nonzero, one can show that the solutions we provided remain global minimizers in the zero limit by applying Theorem 2 from Ziyin and Ueda, (2022). For shorthand, we use the notation , and the largest and the smallest eigenvalues of are denoted as and respectively. denotes the -th eigenvalue of viewed in any order. For now, it is sufficient for us to assume that the global minimum of Eq. (2) always exists. We will prove a more general result in Proposition 1, when we deal with multilayer nets.

3.1 Main Result

We first present two lemmas showing that the global minimum can only lie on a rather restrictive subspace of all possible parameter settings due to invariances in the objective.

Lemma 1.

At the global minimum of Eq. (2), for all .

Proof Sketch. We use the fact that the first term of Eq. (2) is invariant to a simultaneous rescaling of rows of the weight matrix to find the optimal rescaling, which implies the lemma statement.

This lemma implies that for all , must be proportional to the norm of its corresponding row vector in . This lemma means that using weight decay makes all layers of a deep neural network balanced. This lemma has been referred to as the “weight balancing" condition in recent works (Tanaka et al.,, 2020), and, in some sense, is a unique and potentially essential feature of neural networks that encourages a sparse solution (Ziyin and Wang,, 2023). The following lemma further shows that, at the global minimum, all elements of must be equal.

Lemma 2.

At the global minimum, for all and , we have

| (3) |

Proof Sketch. We show that if the condition is not satisfied, then an “averaging" transformation will strictly decrease the objective.

This lemma can be seen as a formalization of the intuition suggested in the original dropout paper (Srivastava et al.,, 2014). Namely, using dropout encourages the neurons to be independent of one another and results in an averaging effect. The second lemma imposes strong conditions on the solution of the problem, and the essence of this lemma is the reduction of the original problem to a lower dimension. We are now ready to prove our first main result.

Theorem 1.

The global minimum and of Eq. (2) is and if and only if

| (4) |

When , the global minima are

| (5) |

where is an arbitrary vertex of a -dimensional hypercube, and satisfies:

| (6) |

Apparently, is the trivial solution that has not learned any feature due to overregularization. Henceforth, we refer to this solution (and similar solutions for deeper nets) as the “trivial" solution. We now analyze the properties of the nontrivial solution when it exists.

The condition for the solution to become nontrivial is interesting: . The term can be seen as the effective strength of the signal, and is the strength of regularization. This precise condition means that the learning of a two-layer can be divided into two qualitatively different regimes: an “overregularized regime" where the global minimum is trivial, and a “feature learning regime" where the global minimum involves actual learning. Lastly, note that our main result does not specify the exact value of . This is because must satisfy the condition in Eq. (6), which is equivalent to a high-order polynomial in with coefficients being general functions of the eigenvalues of , whose solutions are generally not analytical by Galois theory. One special case where an analytical formula exists for is when . See Section C for more discussion.

3.2 Bounding the General Solution

While the solution to does not admit an analytical form for a general , one can find meaningful lower and upper bounds to such that we can perform an asymptotic analysis of . At the global minimum, the following inequality holds:

| (7) |

where and are the smallest and largest eigenvalue of , respectively. The middle term is equal to by the global minimum condition in (33), and so, assuming , this inequality is equivalent to the following inequality of :

| (8) |

Namely, the general solution should scale similarly to the homogeneous solution in Eq. (104) if we treat the eigenvalues of as constants.

4 Exact Solution for An Arbitrary-Depth Linear Net

This section extends our result to multiple layers. We first derive the analytical formula for the global minimum of a general arbitrary-depth model. We then show that the landscape for a deeper network is highly nontrivial.

4.1 General Solution

The loss function is

| (9) |

where all the noises are independent, and for all and , and . We first show that for general , the global minimum exists for this objective.

Proposition 1.

For and strictly positive , the global minimum for Eq.(9) exists.

Note that the positivity of the regularization strength is crucial. If one of the is zero, the global minimum may not exist. The following theorem is our second main result.

Theorem 2.

Any global minimum of Eq. (9) is of the form

| (10) |

where , , and , and is an arbitrary vertex of a -dimensional hypercube for all . Furthermore, let and , satisfies

| (11) |

Proof Sketch. We prove by induction on the depth . The base case is proved in Theorem 1. We then show that for a general depth, the objective involves optimizing subproblems, one of which is a layer problem that follows by the induction assumption, and the other is a two-layer problem that has been solved in Theorem 1. Putting these two subproblems together, one obtains Eq. (10).

Remark.

We deal with the technical case of having a bias term for each layer in Appendix D. For example, we will show that if one has preprocessed the data such that and , our main results remain precisely unchanged.

The condition in Eq. (11) shows that the scaling factor for all is not independent of one another. This automatic balancing of the norm of all layers is a consequence of the rescaling invariance of the multilayer architecture and the use of weight decay. It is well-known that this rescaling invariance also exists in a neural network with the ReLU activation, and so this balancing condition is also directly relevant for ReLU networks.

Condition (11) implies that all the can be written in terms of one of the :

| (12) |

where and . Consider the first layer (), Eq (11) shows that the global minimum must satisfy the following equation, which is equivalent to a high-order polynomial in that does not have an analytical solution in general:

| (13) |

Thus, this condition is an extension of the condition (6) for two-layer networks.

At this point, it pays to clearly define the word “solution," especially given that it has a special meaning in this work because it now becomes highly nontrivial to differentiate between the two types of solutions.

Definition 1.

We say that a non-negative real is a solution if it satisfies Eq. (13). A solution is trivial if and nontrivial otherwise.

Namely, a global minimum must be a solution, but a solution is not necessarily a global minimum. We have seen that even in the two-layer case, the global minimum can be the trivial one when the strength of the signal is too weak or when the strength of regularization is too strong. It is thus natural to expect to be the global minimum under a similar condition, and one is interested in whether the condition becomes stronger or weaker as the depth of the model is increased. However, it turns out this naive expectation is not true. In fact, when the depth of the model is larger than , the condition for the trivial global minimum becomes highly nontrivial.

The following proposition shows why the problem becomes more complicated. In particular, we have seen that in the case of a two-layer net, some elementary argument has helped us show that the trivial solution is either a saddle or the global minimum. However, the proposition below shows that with , the landscape becomes more complicated in the sense that the trivial solution is always a local minimum, and it becomes difficult to compare the loss value of the trivial solution with the nontrivial solution because the value of is unknown in general.

Proposition 2.

Let in Eq. (9). Then, the solution , , …, is a local minimum with a diagonal positive-definite Hessian .

Comparing the Hessian of and , one notices a qualitative difference: for , the Hessian is always diagonal (at ); for , in sharp contrast, the off-diagonal terms are nonzero in general, and it is these off-diagonal terms that can break the positive-definiteness of the Hessian. This offers a different perspective on why there is a qualitative difference between and .

Lastly, note that, unlike the depth- case, one can no longer find a precise condition such that a solution exists for a general . The reason is that the condition for the existence of the solution is now a high-order polynomial with quite arbitrary intermediate terms. The following proposition gives a sufficient but stronger-than-necessary condition for the existence of a nontrivial solution, when all the , intermediate width and regularization strength are the same.444This is equivalent to setting . The result is qualitatively similar but involves additional factors of if , , and all take different values. We thus only present the case when , , and are the same for notational concision and for emphasizing the most relevant terms. Also, note that this proposition gives a sufficient and necessary condition if is proportional to the identity.

Proposition 3.

Let , and for all . Assuming , the only solution is trivial if

| (14) |

Nontrivial solutions exist if

| (15) |

Moreover, the nontrivial solutions are both lower and upper-bounded:555For , we define the lower-bound as , which equal to zero if , and if . With this definition, this proposition applies to a two-layer net as well.

| (16) |

Proof Sketch. The proof follows from the observation that the l.h.s. of Eq. (13) is a continuous function and must cross the r.h.s. under certain sufficient conditions.

One should compare the general condition here with the special condition for . One sees that for , many other factors (such as the width, the depth, and the spectrum of the data covariance ) come into play to determine the existence of a solution apart from the signal strength and the regularization strength .

4.2 Which Solution is the Global Minimum?

Again, we set , and for all for notational concision. Using this condition and applying Lemma 3 to Theorem 2, the solution now takes the following form, where ,

| (17) |

The following theorem gives a sufficient condition for the global minimum to be nontrivial. It also shows that the landscape of the linear net becomes complicated and can contain more than local minimum.

Theorem 3.

Let , and for all and assuming . Then, if

| (18) |

the global minimum of Eq. (9) is one of the nontrivial solutions.

While there are various ways this bound can be improved, it is general enough for our purpose. In particular, one sees that, for a general depth, the condition for having a nontrivial global minimum depends not only on the and but also on the model architecture in general. For a more general architecture with different widths etc., the architectural constant from Eq. (13) will also enter the equation. In the limit of , relation (18) reduces to

| (19) |

which is the condition derived for the 2-layer case.

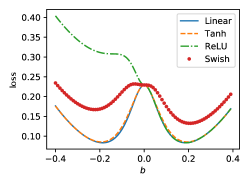

5 Implications

Relevance to nonlinear models. We first caution the readers that the following discussion should be taken with a caveat and is based on the philosophy that deep linear nets can approximate the nonlinear ones. This approximation certainly holds for fully connected models with differentiable activation functions such as tanh or Swish because they are, up to first-order Taylor expansion, a deep linear net around zero, which is the region for which our theory is the most relevant. We empirically demonstrate that close to the origin, the landscape of linear nets can indeed approximate that of nonlinear nets quite well. To compare, we plug in the solution in Theorem 4 to both linear and nonlinear models of the same architecture and compare the loss values at different values of around . For simplicity, we only consider the case . The activation functions we consider are ReLU, Tanh, and Swish (Ramachandran et al.,, 2017), a modern and differentiable variant of ReLU. See Fig. 2.

The regressor is sampled as Gaussian random vectors. We consider two methods of generating ; the first one (left) is . The second one (right) is , where the weight is obtained as a Gaussian random vector. Fig. 2 shows that the landscape consisting of Tanh is always close to the linear landscape. Swish is not as good as Tanh, but the Swish landscape shows a similar tendency to the linear landscape. The ReLU landscape is not so close to the linear landscape either for or , but it agrees completely with the linear landscape on the other side, as expected. Besides the quantitative closeness, it is also important to note that all the landscapes agree qualitatively, containing the same number of local minima at similar values of .

Landscape of multi-layer neural networks. The combination of Theorem 3 and Proposition 2 shows that the landscape of a deep neural network can become highly nontrivial when there is a weight decay and when the depth of the model is larger than . This gives an incomplete but meaningful picture of a network’s complicated but interesting landscape beyond two layers (see Figure 1 for an incomplete summary of our results). In particular, even when the nontrivial solution is the global minimum, the trivial solution is still a local minimum that needs to be escaped. Our result suggests the previous understanding that all local minima of a deep linear net are global cannot generalize to many practical settings where deep learning is found to work well. For example, a series of works attribute the existence of bad (non-global) minima to the use of nonlinearities (Kawaguchi,, 2016) or the use of a non-regular (non-differentiable) loss function (Laurent and Brecht,, 2018). Our result, in contrast, shows that the use of a simple weight decay is sufficient to create a bad minimum.666Some previous works do suggest the existence of bad minima when weight decay is present, but no direct proof exists yet. For example, Taghvaei et al., (2017) shows that when the model is approximated by a linear dynamical system, regularization can cause bad local minima. Mehta et al., (2021) shows the existence of bad local minima in deep linear networks with weight decay through numerical simulations. Moreover, the problem with such a minimum is two-fold: (1) (optimization) it is not global and so needs to be “overcome" and (2) (generalization) it is a minimum that has not learned any feature at all because the model constantly outputs zero. To the best of our knowledge, previous to our work, there has not been any proof that a bad minimum can generically exist in a rather arbitrary network without any restriction on the data.777In the case of nonlinear networks without regularization, a few works proved the existence of bad minima. However, the previous results strongly depend on the data and are rather independent of architecture. For example, one major assumption is that the data cannot be perfected and fitted by a linear model (Yun et al.,, 2018; Liu,, 2021; He et al.,, 2020). Some other works explicitly construct data distribution (Safran and Shamir,, 2018; Venturi et al.,, 2019). Our result, in contrast, is independent of the data. Thus, our result offers direct theoretical justification for the widely believed importance of escaping local minima in the field of deep learning (Kleinberg et al.,, 2018; Liu et al.,, 2021; Mori et al.,, 2022). In particular, previous works on escaping local minima often hypothesize landscapes that are of unknown relevance to an actual neural network. With our result, this line of research can now be established with respect to landscapes that are actually deep-learning-relevant.

Previous works also argue that having a deeper depth does not create a bad minimum (Lu and Kawaguchi,, 2017). While this remains true, its generality and applicability to practical settings now also seem low. Our result shows that as long as weight decay is used, and as long as , there is indeed a bad local minimum at . In contrast, there is no bad minimum at for a depth- network: the point is either a saddle or the global minimum.888Of course, in practice, the model trained with SGD can still converge to the trivial solution even if it is a saddle point (Ziyin et al.,, 2021) because minibatch SGD is, in general, not a good estimator of the local minima. Having a deeper depth thus alters the qualitative nature of the landscape, and our results agree better with the common observation that a deeper network is harder, if not impossible, to optimize.

We note that our result can also be relevant for more modern architectures such as the ResNet. Using ResNet, one needs to change the dimension of the hidden layer after every bottleneck, and a learnable linear transformation is applied here. Thus, the “effective depth” of a ResNet would be roughly between the number of its bottlenecks and its total number of blocks. For example, a ResNet18 applied to CIFAR10 often has five bottlenecks and 18 layers in total. We thus expect it to have qualitatively similar behavior to a deep linear net with a depth in between. See Figure 1. The experimental details are given in Section A.

Learnability of a neural network. Now we analyze the solution when tends to infinity. We first note that the existence condition bound in (15) becomes exponentially harder to satisfy as becomes large:

| (20) |

When this bound is not satisfied, the given neural network cannot learn the data. Recall that for a two-layer net, the existence condition is nothing but , independent of the depth, width, or stochasticity in the model. For a deeper network, however, every factor comes into play, and the architecture of the model has a strong (and dominant) influence on the condition. In particular, a factor that increases polynomially in the model width and exponentially in the model depth appears.

A practical implication is that the use of weight decay may be too strong for deep networks. If one increases the depth or width of the model, one should also roughly decrease according to Eq. (20).

Insufficiency of the existing initialization schemes. We have shown that is often a bad local minimum for deep learning. Our result further implies that escaping this local minimum can be highly practically relevant because standard initialization schemes are trapped in this local minimum for tasks where the signal is weak. See Inequality (16): any nontrivial global minimum is lower-bounded by a factor proportional to , which can be seen as an approximation of the radius of the local minimum at the origin. In comparison, standard deep learning initialization schemes such as Kaiming init. initialize at a radius roughly . Thus, for tasks , these initialization methods are likely to initialize the model in the basin of attraction of the trivial regime, which can cause a serious failure in learning. To demonstrate, we perform a numerical simulation shown in the right panel of Figure 3, where we train nonlinear networks with width with SGD on tasks with varying . For sufficiently small , the model clearly is stuck at the origin.999There are many natural problems where the signal is extremely weak. One well-known example is the problem of future price prediction in finance, where the fundamental theorem of finance forbids a large (Fama,, 1970). In contrast, linear regression is never stuck at the origin. Our result thus suggests that it may be desirable to devise initialization methods that are functions of the data distribution.

Prediction variance of stochastic nets. A major extension of the standard neural networks is to make them stochastic, namely, to make the output a random function of the input. In a broad sense, stochastic neural networks include neural networks trained with dropout (Srivastava et al.,, 2014; Gal and Ghahramani,, 2016), Bayesian networks (Mackay,, 1992), variational autoencoders (VAE) (Kingma and Welling,, 2013), and generative adversarial networks (Goodfellow et al.,, 2014). Stochastic networks are thus of both practical and theoretical importance to study. Our result can also be used for studying the theoretical properties of stochastic neural networks. Here, we present a simple application of our general solution to analyze the properties of a stochastic net. The following theorem summarizes our technical results.

Theorem 4.

Let , and for all . Let . Then, at any global minimum of Eq. (9), holding other parameters fixed,

-

1.

in the limit of large ,

-

2.

in the limit of large , ;

-

3.

In the limit of large , .

Interestingly, the scaling of prediction variance in asymptotic is different for different widths. The third result shows that the prediction variance decreases exponentially fast in . In particular, this result answers a question recently proposed in Ziyin et al., (2022): does a stochastic net trained on MSE have a prediction variance that scales towards ? We improve on their result in the case of a deep linear net by (a) showing that the is tight in general, independent of the depth or other factors of the model, and (b) proving a bound showing that the variance also scales towards zero as depth increases, which is a novel result of our work. Our result also offers an important insight into the cause of the vanishing prediction variance. Previous works (Alemi et al.,, 2018) often attribute the cause to the fact that a wide neural network is too expressive. However, our result implies that this is not always the case because a linear network with limited expressivity can also have a vanishing variance as the model tends to an infinite width.

Collapses in deep learning. Lastly, we comment briefly on the apparent similarity between different types of collapses that occur in deep learning. For neural collapse, our result agrees with the recent works that identify weight decay as a main cause (Rangamani and Banburski-Fahey,, 2022). For Bayesian deep learning, Wang and Ziyin, (2022) identified the cause of the posterior collapse in a two-layer VAE structure to be that the regularization of the mean of the latent variable is too strong. More recently, the origin and its stability have also been discussed as the dimensional collapse in self-supervised learning (Ziyin et al.,, 2023). Although appearing in different contexts of deep learning, the three types of collapses share the same phenomenology that the model converges to a “collapsed" regime where the learned representation becomes low-rank or constant, which agrees with the behavior of the trivial regime we identified. We refer the readers to Ziyin and Ueda, (2022) for a study of how the second-order phase transition framework of statistical physics can offer a possible unified explanation of these phenomena.

6 Conclusion

In this work, we derived the exact solution of a deep linear net with arbitrary depth and width and with stochasticity. Our work sheds light on the highly complicated landscape of a deep neural network. Compared to the previous works that mostly focus on the qualitative understanding of the linear net, our result offers a more precise quantitative understanding of deep linear nets. Quantitative understanding is one major benefit of knowing the exact solution, whose usefulness we have also demonstrated with the various implications. The results, although derived for linear models, are also empirically shown to be relevant for networks with nonlinear activations. Lastly, our results strengthen the line of thought that analytical approaches to deep linear models can be used to understand deep neural networks, and it is the sincere hope of the authors to attract more attention to this promising field.

Acknowledgement

Ziyin is financially supported by the GSS scholarship of the University of Tokyo and the JSPS fellowship. Li is financially supported by CNRS. X. Meng is supported by JST CREST Grant Number JPMJCR1912, Japan.

References

- Alemi et al., (2018) Alemi, A., Poole, B., Fischer, I., Dillon, J., Saurous, R. A., and Murphy, K. (2018). Fixing a broken ELBO. In International Conference on Machine Learning, pages 159–168. PMLR.

- Baldi and Hornik, (1989) Baldi, P. and Hornik, K. (1989). Neural networks and principal component analysis: Learning from examples without local minima. Neural networks, 2(1):53–58.

- Cavazza et al., (2018) Cavazza, J., Morerio, P., Haeffele, B., Lane, C., Murino, V., and Vidal, R. (2018). Dropout as a low-rank regularizer for matrix factorization. In International Conference on Artificial Intelligence and Statistics, pages 435–444. PMLR.

- (4) Choromanska, A., Henaff, M., Mathieu, M., Arous, G. B., and LeCun, Y. (2015a). The loss surfaces of multilayer networks. In Artificial Intelligence and Statistics, pages 192–204.

- (5) Choromanska, A., LeCun, Y., and Arous, G. B. (2015b). Open problem: The landscape of the loss surfaces of multilayer networks. In Conference on Learning Theory, pages 1756–1760. PMLR.

- Fama, (1970) Fama, E. F. (1970). Efficient capital markets: A review of theory and empirical work. The journal of Finance, 25(2):383–417.

- Gal and Ghahramani, (2016) Gal, Y. and Ghahramani, Z. (2016). Dropout as a bayesian approximation: Representing model uncertainty in deep learning. In international conference on machine learning, pages 1050–1059. PMLR.

- Glorot and Bengio, (2010) Glorot, X. and Bengio, Y. (2010). Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the thirteenth international conference on artificial intelligence and statistics, pages 249–256. JMLR Workshop and Conference Proceedings.

- Goodfellow et al., (2014) Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. (2014). Generative adversarial nets. Advances in neural information processing systems, 27.

- Gotmare et al., (2018) Gotmare, A., Keskar, N. S., Xiong, C., and Socher, R. (2018). A closer look at deep learning heuristics: Learning rate restarts, warmup and distillation. arXiv preprint arXiv:1810.13243.

- Hardt and Ma, (2016) Hardt, M. and Ma, T. (2016). Identity matters in deep learning. arXiv preprint arXiv:1611.04231.

- Hastie et al., (2019) Hastie, T., Montanari, A., Rosset, S., and Tibshirani, R. J. (2019). Surprises in high-dimensional ridgeless least squares interpolation. arXiv preprint arXiv:1903.08560.

- He et al., (2020) He, F., Wang, B., and Tao, D. (2020). Piecewise linear activations substantially shape the loss surfaces of neural networks. arXiv preprint arXiv:2003.12236.

- Kawaguchi, (2016) Kawaguchi, K. (2016). Deep learning without poor local minima. Advances in Neural Information Processing Systems, 29:586–594.

- Kingma and Welling, (2013) Kingma, D. P. and Welling, M. (2013). Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114.

- Kleinberg et al., (2018) Kleinberg, B., Li, Y., and Yuan, Y. (2018). An alternative view: When does sgd escape local minima? In International Conference on Machine Learning, pages 2698–2707. PMLR.

- Krogh and Hertz, (1992) Krogh, A. and Hertz, J. A. (1992). A simple weight decay can improve generalization. In Advances in neural information processing systems, pages 950–957.

- Laurent and Brecht, (2018) Laurent, T. and Brecht, J. (2018). Deep linear networks with arbitrary loss: All local minima are global. In International conference on machine learning, pages 2902–2907. PMLR.

- Liu, (2021) Liu, B. (2021). Spurious local minima are common for deep neural networks with piecewise linear activations. arXiv preprint arXiv:2102.13233.

- Liu et al., (2021) Liu, K., Ziyin, L., and Ueda, M. (2021). Noise and fluctuation of finite learning rate stochastic gradient descent. In International Conference on Machine Learning, pages 7045–7056. PMLR.

- Loshchilov and Hutter, (2017) Loshchilov, I. and Hutter, F. (2017). Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101.

- Lu and Kawaguchi, (2017) Lu, H. and Kawaguchi, K. (2017). Depth creates no bad local minima. arXiv preprint arXiv:1702.08580.

- Lucas et al., (2019) Lucas, J., Tucker, G., Grosse, R., and Norouzi, M. (2019). Don’t Blame the ELBO! A Linear VAE Perspective on Posterior Collapse.

- Mackay, (1992) Mackay, D. J. C. (1992). Bayesian methods for adaptive models. PhD thesis, California Institute of Technology.

- Mehta et al., (2021) Mehta, D., Chen, T., Tang, T., and Hauenstein, J. (2021). The loss surface of deep linear networks viewed through the algebraic geometry lens. IEEE Transactions on Pattern Analysis and Machine Intelligence.

- Mianjy and Arora, (2019) Mianjy, P. and Arora, R. (2019). On dropout and nuclear norm regularization. In International Conference on Machine Learning, pages 4575–4584. PMLR.

- Mori et al., (2022) Mori, T., Ziyin, L., Liu, K., and Ueda, M. (2022). Power-law escape rate of sgd. In International Conference on Machine Learning, pages 15959–15975. PMLR.

- Ramachandran et al., (2017) Ramachandran, P., Zoph, B., and Le, Q. V. (2017). Searching for activation functions.

- Rangamani and Banburski-Fahey, (2022) Rangamani, A. and Banburski-Fahey, A. (2022). Neural collapse in deep homogeneous classifiers and the role of weight decay. In ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 4243–4247. IEEE.

- Safran and Shamir, (2018) Safran, I. and Shamir, O. (2018). Spurious local minima are common in two-layer relu neural networks. In International conference on machine learning, pages 4433–4441. PMLR.

- Saxe et al., (2013) Saxe, A. M., McClelland, J. L., and Ganguli, S. (2013). Exact solutions to the nonlinear dynamics of learning in deep linear neural networks. arXiv preprint arXiv:1312.6120.

- Srivastava et al., (2014) Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., and Salakhutdinov, R. (2014). Dropout: a simple way to prevent neural networks from overfitting. The journal of machine learning research, 15(1):1929–1958.

- Taghvaei et al., (2017) Taghvaei, A., Kim, J. W., and Mehta, P. (2017). How regularization affects the critical points in linear networks. Advances in neural information processing systems, 30.

- Tanaka et al., (2020) Tanaka, H., Kunin, D., Yamins, D. L., and Ganguli, S. (2020). Pruning neural networks without any data by iteratively conserving synaptic flow. Advances in Neural Information Processing Systems, 33:6377–6389.

- Tian, (2022) Tian, Y. (2022). Deep contrastive learning is provably (almost) principal component analysis. arXiv preprint arXiv:2201.12680.

- Venturi et al., (2019) Venturi, L., Bandeira, A. S., and Bruna, J. (2019). Spurious valleys in one-hidden-layer neural network optimization landscapes. Journal of Machine Learning Research, 20:133.

- Wang and Ziyin, (2022) Wang, Z. and Ziyin, L. (2022). Posterior collapse of a linear latent variable model. In Advances in Neural Information Processing Systems.

- Yun et al., (2018) Yun, C., Sra, S., and Jadbabaie, A. (2018). Small nonlinearities in activation functions create bad local minima in neural networks. arXiv preprint arXiv:1802.03487.

- Ziyin et al., (2021) Ziyin, L., Li, B., Simon, J. B., and Ueda, M. (2021). Sgd can converge to local maxima. In International Conference on Learning Representations.

- Ziyin et al., (2023) Ziyin, L., Lubana, E. S., Ueda, M., and Tanaka, H. (2023). What shapes the loss landscape of self supervised learning? In The Eleventh International Conference on Learning Representations.

- Ziyin and Ueda, (2022) Ziyin, L. and Ueda, M. (2022). Exact phase transitions in deep learning. arXiv preprint arXiv:2205.12510.

- Ziyin and Wang, (2023) Ziyin, L. and Wang, Z. (2023). spred: Solving L1 Penalty with SGD. In International Conference on Machine Learning.

- Ziyin et al., (2022) Ziyin, L., Zhang, H., Meng, X., Lu, Y., Xing, E., and Ueda, M. (2022). Stochastic neural networks with infinite width are deterministic.

Checklist

-

1.

For all authors…

-

(a)

Do the main claims made in the abstract and introduction accurately reflect the paper’s contributions and scope? [Yes]

-

(b)

Did you describe the limitations of your work? [Yes]

-

(c)

Did you discuss any potential negative societal impacts of your work? [N/A]

-

(d)

Have you read the ethics review guidelines and ensured that your paper conforms to them? [Yes]

-

(a)

-

2.

If you are including theoretical results…

-

(a)

Did you state the full set of assumptions of all theoretical results? [Yes]

-

(b)

Did you include complete proofs of all theoretical results? [Yes] See Appendix.

-

(a)

-

3.

If you ran experiments…

-

(a)

Did you include the code, data, and instructions needed to reproduce the main experimental results (either in the supplemental material or as a URL)? [No] The experiments are only for demonstration and are straightforward to reproduce following the theory.

-

(b)

Did you specify all the training details (e.g., data splits, hyperparameters, how they were chosen)? [Yes]

-

(c)

Did you report error bars (e.g., with respect to the random seed after running experiments multiple times)? [No] The fluctuations are visually negligible.

-

(d)

Did you include the total amount of compute and the type of resources used (e.g., type of GPUs, internal cluster, or cloud provider)? [Yes] They are done on a single 3080Ti GPU.

-

(a)

-

4.

If you are using existing assets (e.g., code, data, models) or curating/releasing new assets…

-

(a)

If your work uses existing assets, did you cite the creators? [N/A]

-

(b)

Did you mention the license of the assets? [N/A]

-

(c)

Did you include any new assets either in the supplemental material or as a URL? [N/A]

-

(d)

Did you discuss whether and how consent was obtained from people whose data you’re using/curating? [N/A]

-

(e)

Did you discuss whether the data you are using/curating contains personally identifiable information or offensive content? [N/A]

-

(a)

-

5.

If you used crowdsourcing or conducted research with human subjects…

-

(a)

Did you include the full text of instructions given to participants and screenshots, if applicable? [N/A]

-

(b)

Did you describe any potential participant risks, with links to Institutional Review Board (IRB) approvals, if applicable? [N/A]

-

(c)

Did you include the estimated hourly wage paid to participants and the total amount spent on participant compensation? [N/A]

-

(a)

Appendix A Experimental Details

For the experiment in Figure 3, the input data consists of data points sampled from a multivariate Gaussian distribution: . The target is generated by a linear transformation , where the norm of is rescaled to obtain different values of as the control parameter of the simulation. The models are with neural networks with bias terms and with hidden width for both hidden layers. The training proceeds with gradient descent with a learning rate of for iterations when the training loss has stopped decreasing for all the experiments.

For the CIFAR10 experiments, we train a standard ResNet18 with roughly parameters under the standard procedure, with a batch size of for epochs.101010Specifically, we use the implementation and training procedure of https://github.com/kuangliu/pytorch-cifar, with standard augmentations such as random crop, etc. For the linear models, we use a hidden width of without any bias term. The training proceeds with SGD with batch size for epochs with a momentum of . The learning rate is , chosen as the best learning rate from a grid search over .

Appendix B Proofs

B.1 Proof of Lemma 1

Proof. Note that the first term in the loss function is invariant to the following rescaling for any :

| (21) |

meanwhile, the regularization term changes as changes. Therefore, the global minimum must have a minimized with respect to any and .

One can easily find the solution:

| (22) |

Therefore, at the global minimum, we must have , so that

| (23) |

which completes the proof.

B.2 Proof of Lemma 2

Proof. By Lemma 1, we can write as and as where is a unit vector, and finding the global minimizer of Eq. (2) is equivalent to finding the minimizer of the following objective,

| (24) | |||

| (25) |

The lemma statement is equivalent to for all and .

We prove this by contradiction. Suppose there exist and such that , we can choose to be the index of with maximum , and let be the index of with minimum . Now, we can construct a different solution by the following replacement of and :

| (26) |

where is a positive scalar and is a unit vector such that . Note that, by the triangular inequality, . Meanwhile, all the other terms, for and , are left unchanged. This transformation leaves the first term in the loss function (25) unchanged, and we now show that it decreases the other terms.

The change in the second term is

| (27) |

By the inequality , we see that the left-hand side is larger than the right-hand side.

We now consider the regularization term. The change is

| (28) |

and the left-hand side is again larger than the right-hand side by the inequality mentioned above: . Therefore, we have constructed a solution whose loss is strictly smaller than that of the global minimum: a contradiction. Thus, the global minimum must satisfy

| (29) |

for all and .

Likewise, we can show that for all and . This is because the triangular inequality is only an equality if . If , following the same argument above, we arrive at another contradiction.

B.3 Proof of Theorem 1

Proof. By Lemma 2, at any global minimum, we can write for some . We can also write for a general vector . Without loss of generality, we assume that (because the sign of can be absorbed into ).

The original problem in Eq. (2) is now equivalently reduced following problem because :

| (30) |

For any fixed , the global minimum of is well known:111111Namely, it is the solution of a ridge linear regression problem.

| (31) |

By Lemma 1, at a global minimum, also satisfies the following condition:

| (32) |

One solution to this equation is , and we are interested in whether solutions with exist. If there is no other solution, then must be the unique global minimum; otherwise, we need to identify which of the solutions are actual global minima. When ,

| (33) |

Note that the left-hand side is monotonically decreasing in , and is equal to when . When , the left-hand side tends to . Because the left-hand side is a continuous and monotonic function of , a unique solution that satisfies Eq. (33) exists if and only if , or,

| (34) |

Therefore, at most, three candidates for global minima of the loss function exist:

| (35) |

where .

In the second case, one needs to discern the saddle points from the global minima. Using the expression of , one finds the expression of the loss function as a function of

| (36) |

where such that is a diagonal matrix. We now show that condition (34) is sufficient to guarantee that is not the global minimum.

At , the first nonvanishing derivative of is the second-order derivative. The second order derivative at is

| (37) |

which is negative if and only if . If the second derivative at is negative, cannot be a minimum. It then follows that for , , are the two global minimum (because the loss is invariant to the sign flip of ). For the same reason, when , gives the unique global minimum. This finishes the proof.

B.4 Proof of Proposition 1

Proof. We first show that there exists a constant such that the global minimum must be confined within a (closed) -Ball around the origin. The objective (9) can be upper-bounded by

| (38) |

where . Now, let denote be the union of all the parameters () and viewed as a vector. We see that the above inequality is equivalent to

| (39) |

Now, note that the loss value at the origin is , which means that for any , whose norm , the loss value must be larger than the loss value of the origin. Therefore, let , we have proved that the global minimum must lie in a closed -Ball around the origin.

As the last step, because the objective is a continuous function of and the -Ball is a compact set, the minimum of the objective in this -Ball is achievable. This completes the proof.

B.5 Proof of Theorem 2

We divide the proof into the proof of a proposition and a lemma, and combining the following proposition and lemma obtains the theorem statement.

B.5.1 Proposition 4

Proposition 4.

Any global minimum of Eq. (9) is of the form

| (40) |

where , , and , and is an arbitrary vertex of a -dimensional hypercube for all .

Proof. Note that the trivial solution is also a special case of this solution with . We thus focus on deriving the form of the nontrivial solution.

We prove by induction on . The base case with depth is proved in Theorem 1. We now assume that the same holds for depth and prove that it also holds for depth .

For any fixed , the loss function can be equivalently written as

| (41) |

where . Namely, we have reduced the problem to a problem involving only a depth linear net with a transformed input .

By the induction assumption, the global minimum of this problem takes the form of Eq. (10), which means that the loss function can be written in the following form:

| (42) |

for an arbitrary optimizable vector . The term can now be regarded as a single random variable such that and . Computing the expectation over all the noises except for , one finds

| (43) | |||

| (44) |

where we have ignored the constant term because it does not affect the minimizer of the loss. Namely, we have reduced the original problem to a two-layer linear net problem where the label becomes effectively rescaled for a deep network.

For any fixed , we can define , and obtain the following problem, whose global minimum we have already derived:

| (45) |

By Theorem 1, the global minimum is identically if , or, . When , the solution can be non-trivial:

| (46) |

for some . This proves the theorem.

B.6 Lemma 3

Lemma 3.

At any global minimum of Eq. (9), let and ,

| (47) |

Proof. It is sufficient to show that for all and ,

| (48) |

We prove by contradiction. Let be the global minimum of the loss function. Assuming that for an arbitrary ,

| (49) |

Now, we introduce such that and . The loss without regularization is invariant under the transformation of , namely

| (50) |

In the regularization, all the terms remain invariant except two terms:

| (51) |

It could be shown that, the sum of and reaches its minimum when . If , one can choose to minimize the regularization terms in the loss function such that , indicating is not the global minimum. Thus, cannot be true.

B.7 Proof of Proposition 2

Proof. Let

| (52) |

is a polynomial containing th order, th order, and th order terms in terms of parameters and . The second order derivative of is thus a polynomial containing -th order and -th order terms; however, other orders are not possible. For , there are no constant terms in the Hessian of , and there is at least a parameter in each of the terms.

The Hessian of the full loss function with regularization is

| (53) | ||||

| (54) | ||||

| (55) |

For , , the Hessian of is , since each term in contains at least a or a . The Hessian of becomes

| (56) | ||||

| (57) | ||||

| (58) |

The Hessian of is a positive-definite matrix. Thus, , is always a local minimum of the loss function .

B.8 Proof of Proposition 3

We first apply Lemma 3 to determine the condition for the nontrivial solution to exist. In particular, the Lemma must hold for and , which leads to the following condition:

| (59) |

Note that the left-hand side is a continuous function that tends to as . Therefore, it is sufficient to find the condition that guarantees that there exists such that the l.h.s. is larger than . For any , the l.h.s. is a monotonically decreasing function of any eigenvalue of , and so the following two inequalities hold:

| (60) |

The second inequality implies that if

| (61) |

a nontrivial solution must exist. This condition is equivalent to the existence of a such that

| (62) |

which is a polynomial inequality that does not admit an explicit condition for for a general . Since the l.h.s is a continuous function that increases to infinity as , one sufficient condition for (62) to hold is that the minimizer of the l.h.s. is smaller than .

Note that the left-hand side of Eq. (62) diverges to as and tends to zero as . Moreover, Eq. (62) is lower-bounded and must have a nontrivial minimizer for some because the coefficient of the term is strictly negative. One can thus find its minimizer by taking derivative. In particular, the left-hand side is minimized when

| (63) |

and we can obtain the following sufficient condition for (62) to be satisfiable, which, in turn, implies that (59) is satisfiable:

| (64) |

which is identical to the proposition statement in (15).

Now, we come back to condition (60) to derive a sufficient condition for the trivial solution to be the only solution. The first inequality in Condition (60) implies that if

| (65) |

the only possible solution is the trivial one, and the condition for this to hold can be found using the same procedure as above to be

| (66) |

which is identical to (14).

We now prove the upper bound in (16). Because for any , the first condition in (60) gives an upper bound, and so any that makes the upper bound less than cannot be a solution. This means that any for which

| (67) |

cannot be a solution. This condition holds if and only if

| (68) |

Because , one sufficient condition to ensure this is that there exists such that

| (69) |

which is equivalent to

| (70) |

Namely, any solution satisfies

| (71) |

We can also find a lower bound for all possible solutions. When , another sufficient condition for Eq. (68) to hold is that there exists such that

| (72) |

because the term is always positive. This condition then implies that any solution must satisfy:

| (73) |

For , we have by Theorem 1 that

| (74) |

if and only if . This means that

| (75) |

This finishes the proof.

B.9 Proof of Theorem 3

Proof. When nontrivial solutions exist, we are interested in identifying when is not the global minimum. To achieve this, we compare the loss of with the other solutions. Plug the trivial solution into the loss function in Eq. (9), the loss is easily identified to be .

For the nontrivial minimum, defining to be the model,

| (76) | ||||

| (77) |

where, similar to the previous proof, we have defined such that and . With this notation, The loss function becomes

| (78) | |||

| (79) | |||

| (80) |

The last equation is obtained by rotating using a orthogonal matrix such that and denoting the rotated as . With , The term takes the form of

| (81) |

Combining the expressions of (81) and (80), we obtain that the difference between the loss at the non-trivial solution and the loss at is

| (82) |

Satisfaction of the following relation thus guarantees that the global minimum is nontrivial:

| (83) |

This relation is satisfied if

| (84) | ||||

| (85) |

The derivative or l.h.s. with respect to is

| (86) |

For , the derivative dives below , indicating the l.h.s. of (85) has a global maximum at a strictly positive . The value of is found when setting the derivative to , namely

| (87) | ||||

| (88) | ||||

| (89) |

The maximum value then takes the form

| (90) |

The following condition thus guarantees that the global minimum is non-trivial

| (91) | ||||

| (92) |

This finishes the proof.

B.10 Proof of Theorem 4

Proof. The model prediction is:

| (93) | ||||

| (94) |

One can find the expectation value and variance of a model prediction:

| (95) |

For the trivial solution, the theorem is trivially true. We thus focus on the case when the global minimum is nontrivial.

The variance of the model is

| (96) | ||||

| (97) | ||||

| (98) | ||||

| (99) |

where the last equation follows from Eq. (13). The variance can be upper-bounded by applying (16),

| (100) |

We first consider the limit with fixed :

| (101) |

For the limit with fixed, we have

| (102) |

Additionally, we can consider the limit when as we fix both and :

| (103) |

which is an exponential decay.

Appendix C Exact Form of for

Note that our main result does not specify the exact value of . This is because must satisfy the condition in Eq. (6), which is equivalent to a high-order polynomial in with coefficients being general functions of the eigenvalues of , whose solutions are generally not analytical by Galois theory. One special case where an analytical formula exists for is when . Practically, this can be achieved for any (full-rank) dataset if we disentangle and rescale the data by the whitening transformation: . In this case, we have

| (104) |

and

| (105) |

where .

Appendix D Effect of Bias

This section studies a deep linear network with biases for every layer and compares it with the no-bias networks. We first study a general case when the data does not receive any preprocessing. We then show that the problem reduces to the setting we considered in the main text under the common data preprocessing schemes that centers the input and output data: , and .

D.1 Two-layer network

The two-layer linear network with bias is defined as

| (106) |

where is the bias in the hidden layer, and is the bias at the output layer. The loss function is

| (107) | ||||

| (108) |

It is helpful to concatenate and into a single vector and concatenate and into a single matrix such that , , , and , are related via the following equation

| (109) |

Using and , the model can be written as

| (110) |

The loss function simplifies to

| (111) |

Note that (111) contains similar rescaling invariance between and and the invariance of aligning and . One can thus obtain the following two propositions that mirror Lemma 1 and 2.

Proposition 5.

At the global minimum of (107), .

Proposition 6.

At the global minimum, for all and , we have

| (112) |

The proofs are omitted because they are the same as those of Lemma 1 and 2, substituting by . Following a procedure similar to finding the solution for a no-bias network, one can prove the following theorem.

Theorem 5.

Proof. First of all, we derive a handy relation satisfied by and at all the stationary points. The zero-gradient condition of the stationary points gives

| (115) |

leading to

| (116) | |||

| (117) |

Proposition 5 and proposition 6 implies that we can define and . Consequently, , and the loss function can be written as

| (118) | ||||

The respective zero-gradient condition for and implies that for all stationary points,

| (119) |

To shorten the expressions, we introduce

| (120) |

With , we have

| (121) |

The inner product of and can be solved as

| (122) |

Inserting the expression of into the expression of one obtains

| (123) |

The global minimum must thus satisfy

| (124) | ||||

| (125) |

This completes the proof.

Remark.

As in the no-bias case, we have reduced the original problem to a one-dimensional problem. However, the condition for becomes so complicated that it is almost impossible to understand. That being said, the numerical simulations we have done all carry the bias terms, suggesting that even with the bias term, the mechanisms are qualitatively similar, and so the approach in the main text is justified.

When , the solution can be simplified a little:

| (126) |

where the value of is either or determined by

| (127) |

In this case, the expression of is identical to the no-bias model. The bias of both layers is proportional to . The equation determining the value of is also similar to the no-bias case. The only difference is the term proportional to .

Lastly, the solution becomes significantly simplified when both and . When this is the case, the solution reverts to the case when there is no bias. In practice, it is a common and usually recommended practice to subtract the average of and from the data and achieve precisely and . We generalize this result to deeper networks in the next section.

D.2 Deep linear network

Let be a -dimensional vector concatenating all , and denoting the collection of all the weights , , …, by , the model of a deep linear network with bias is defined as

| (128) | ||||

| (129) | ||||

| (130) | ||||

| (131) |

where

| (132) | ||||

and denotes Hadamard product. The loss function is

| (133) |

Proposition 5 and Proposition 6 can be generated to deep linear network. Similar to the no-bias case, we can reduce the landscape to a -dimensional problem by performing induction on and using the -dimensional case as the base step. However, we do not solve this case explicitly here because the involved expressions now become too long and complicated even to write down, nor can they directly offer too much insight. We thus only focus on the case when the data has been properly preprocessed. Namely, and .

For simplicity, we assume that the regularization strength for all the layers are identically . The following theorem shows that When and , the biases vanish for an arbitrarily deep linear network:

Theorem 6.

Let and . The global minima of Eq. (133) have .

Proof. At the global minimum, the gradient of the loss function vanishes. In particular, the derivatives with respect to vanish:

| (134) | ||||

| (135) | ||||

| (136) | ||||

| (137) |

where is the th element of . The last equation is obtained since is a linear function of . Using the condition and , Equation (137) becomes

| (138) |

is a linear combination of . Consequently, does not depend on , and is a linear combination of . Furthermore, the linearity of indicates that . The solution to the equation (138) also takes the form of

| (139) |

The only choice of that minimizes both the first term and the second term in (139) is, regardless of the value of ,

| (140) |

This finishes the proof.

Thus, for a deep linear network, a model without bias is good enough to describe data satisfying and , which could be achieved by subtracting the mean of the data.