Exact Phase Transitions in Deep Learning

Abstract

This work reports deep-learning-unique first-order and second-order phase transitions, whose phenomenology closely follows that in statistical physics. In particular, we prove that the competition between prediction error and model complexity in the training loss leads to the second-order phase transition for nets with one hidden layer and the first-order phase transition for nets with more than one hidden layer. The proposed theory is directly relevant to the optimization of neural networks and points to an origin of the posterior collapse problem in Bayesian deep learning.

Understanding neural networks is a fundamental problem in both theoretical deep learning and neuroscience. In deep learning, learning proceeds as the parameters of different layers become correlated so that the model responds to an input in a meaningful way. This is reminiscent of an ordered phase in physics, where the microscopic degrees of freedom behave collectively and coherently. Meanwhile, regularization effectively prevents the overfitting of the model by reducing the correlation between model parameters in a manner similar to the effect of an entropic force in physics. One thus expects a phase transition in the model behavior from the regime where the regularization is negligible to the regime where it is dominant. In the long history of statistical physics of learning Hopfield, (1982); Watkin et al., (1993); Martin and Mahoney, (2017); Bahri et al., (2020), a series of works studied the under-to-overparametrization (UO) phase transition in the context of linear regression Krogh and Hertz, (1992a); Krogh and Hertz, (1992b); Watkin et al., (1993); Haussler et al., (1996). Recently, this type of phase transition has seen a resurgence of interest Hastie et al., (2019); Liao et al., (2020). One recent work by Li and Sompolinsky, (2021) deals with the UO transition in a deep linear model. However, the UO phase transition is not unique to deep learning because it appears in both shallow and deep models and also in non-neural-network models Belkin et al., (2020). To understand deep learning, we need to identify what is unique about deep neural networks.

In this work, we address the fundamental problem of the loss landscape of a deep neural network and prove that there exist phase transitions in deep learning that can be described precisely as the first- and second-order phase transitions with a striking similarity to physics. We argue that these phase transitions can have profound implications for deep learning, such as the importance of symmetry breaking for learning and the qualitative difference between shallow and deep architectures. We also show that these phase transitions are unique to machine learning and deep learning. They are unique to machine learning because they are caused by the competition between the need to make predictions more accurate and the need to make the model simpler. These phase transitions are also deep-learning unique because they only appear in “deeper” models. For a multilayer linear net with stochastic neurons and trained with regularization,

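1. we identify an order parameter and effective landscape that describe the phase transition between a trivial phase and a feature learning phase as the regularization hyperparameter is changed (Theorem 3);

2. we show that finite-depth networks cannot have the zeroth-order phase transition (Theorem 2);

3. we prove that:
   - linear regression (a net with no hidden layer) has no phase transition (Theorem 1);
   - nets with one hidden layer have the second-order phase transition (Theorem 4);
   - nets with more than one hidden layer have the first-order phase transition (Theorem 5);
   - infinite-depth nets have the zeroth-order phase transition (Theorem 6).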

The theorem statements and proofs are presented in the Supplementary Section B. To the best of our knowledge, we are the first to identify second-order and first-order phase transitions in the context of deep learning. Our result implies that one can precisely classify the landscape of deep neural models according to the Ehrenfest classification of phase transitions.

Results

Formal framework. Let $\ell(w, a)$ be a differentiable loss function that depends on the model parameter $w$ and a hyperparameter $a$. The loss function can be decomposed into a data-dependent feature learning term $\ell_0(w)$ and a data-independent term $R(w)$ that regularizes the model at strength $a$:

$$\ell(w, a) = \ell_0(w) + a R(w). \tag{1}$$

Learning amounts to finding the global minimizer of the loss:

$$L(a) := \min_w \ell(w, a). \tag{2}$$

Naively, one expects $L(a)$ to change smoothly as we change $a$. If $L(a)$ changes drastically or even discontinuously when one perturbs $a$, it becomes hard to tune $a$ to optimize the model performance. Thus, a well-behaved $L(a)$ is equivalent to $a$ being an easy-to-tune hyperparameter. We are thus interested in the case where the tuning of $a$ is difficult, which occurs when a phase transition comes into play.

It is standard to treat the first term in Eq. (1) as an energy. To formally identify the regularization term as an entropy, its coefficient must be proportional to the temperature:

| (3) |

where controls the fluctuation of at zero temperature. We note that this identification is consistent with many previous works, where the term that encourages a lower model complexity is identified as an “entropy” Haussler et al., (1996); Vapnik, (2006); Benedek and Itai, (1991); Friston, (2009); Li and Sompolinsky, (2021). In this view, learning is a balancing process between the learning error and the model complexity. Intuitively, one expects phase transitions to happen when one term starts to dominate the other, just like thermodynamic phase transitions that take place when the entropy term starts to dominate the energy.

In this setting, the partition function is . We consider a special limit of the partition function, where both and are made to vanish with their ratio held fixed at . In this limit, one can find the free energy with the saddle point approximation, which is exact in the zero-temperature limit:

| (4) |

We thus treat as the free energy.

Definition 1.

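The minimal loss $L(a) := \min_w \ell(w, a)$ is said to have the $n$th-order phase transition in $a$ at $a = a^*$ if $n$ is the smallest integer such that $\frac{d^n}{da^n} L(a)\big|_{a = a^*}$ is discontinuous.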

We formally define the order parameter and effective loss as follows.

Definition 2.

$b = b(w) \in \mathbb{R}$ is said to be an order parameter of the loss $\ell(w, a)$ if there exists a function $\bar{\ell}$ such that for all $a$, $\min_b \bar{\ell}(b, a) = \min_w \ell(w, a)$, where $\bar{\ell}$ is said to be an effective loss function of $\ell$.

In other words, an order parameter is a one-dimensional quantity whose minimization on the effective loss $\bar{\ell}$ gives the minimal loss. The existence of an order parameter suggests that the original problem can effectively be reduced to a low-dimensional problem that is much easier to understand. Physical examples are the average magnetization in the Ising model and the average density of molecules in a water-to-vapor phase transition. A dictionary of the corresponding concepts between physics and deep learning is given in Table 1.

Our theory deals with deep linear nets, the primary minimal model for deep learning. It is well-established that the landscape of a deep linear net can be used to understand that of nonlinear networks Kawaguchi, (2016); Hardt and Ma, (2016); Laurent and Brecht, (2018). The most general type of deep linear nets, with regularization and stochastic neurons, has the following loss:

| (5) |

where is the input data, the label, and the model parameters, the network depth, the noise in the hidden layer (e.g., dropout), the width of the model, and the weight decay strength. We build on the recent results established in Ziyin et al., (2022). Let . Ref. Ziyin et al., (2022) shows that all the global minima of Eq. (5) must take the form and , where and are explicit functions of the hyperparameters. Ref. Ziyin et al., (2022) further shows that there are two regimes of learning, where, for some range of , the global minimum is uniquely given by , and for some other range of , some gives the global minimum. When , the model outputs a constant , and so this regime is called the “trivial regime,” and the regime where is not the global minimum is called the “feature learning regime.” In this work, we prove that the transition between these two regimes corresponds to a phase transition in the Ehrenfest sense (Definition 1), and therefore one can indeed refer to these two regimes as two different phases.

Table 1: Dictionary of corresponding concepts between machine learning and statistical physics.

| machine learning | statistical physics |
| --- | --- |
| training loss | free energy |
| prediction error | internal energy |
| regularization | negative entropy |
| learning process | symmetry breaking |
| norm of model | order parameter |
| feature learning regime | ordered phase |
| trivial regime | disordered phase |
| noise required for learning | latent heat |

No-phase-transition theorems. The first result we prove is that there is no phase transition in any hyperparameter () for a simple linear regression problem. In our terminology, this corresponds to the case of . The fact that there is no phase transition in any of these hyperparameters means that the model’s behavior is predictable as one tunes the hyperparameters. In the parlance of physics, a linear regressor operates within the linear-response regime.

Theorem 2 shows that a finite-depth net cannot have zeroth-order phase transitions. This theorem can be seen as a worst-case guarantee: the training loss needs to change continuously as one changes the hyperparameter. We also stress that this general theorem applies to standard nonlinear networks as well. Indeed, if we only consider the global minimum of the training loss, the training loss cannot jump. However, in practice, one can often observe jumps because the gradient-based algorithms can be trapped in local minima. The following theory offers a direct explanation for this phenomenon.

Phase Transitions in Deeper Networks. Theorem 3 shows that the quantity is an order parameter describing any phase transition induced by the weight decay parameter in Eq. (5). Let , , and be the -th eigenvalue of . The effective loss landscape is

| (6) |

where is a rotation of . See Figure 1 for an illustration. The complicated landscape for implies that neural networks are susceptible to initialization schemes and entrapment in meta-stable states is common (see Supplementary Section A.1).

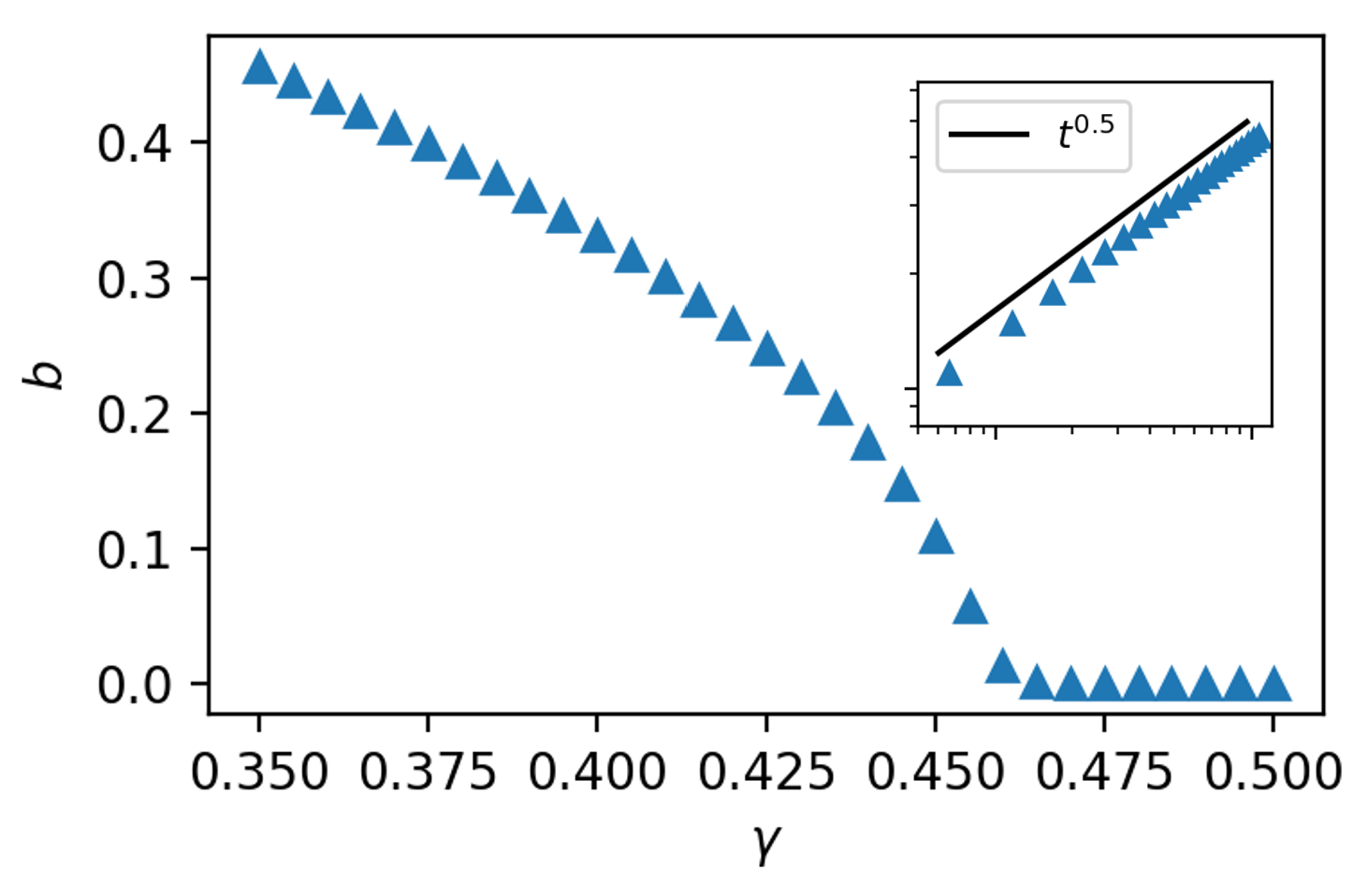

Theorem 4 shows that when in Eq. (5), there is a second-order phase transition precisely at

| (7) |

In machine learning language, is the regularization strength and is the signal. The phase transition occurs precisely when the regularization dominates the signal. In physics, and are proportional to the temperature and energy, respectively. The phase transition occurs exactly when the entropy dominates the energy. Also, the phase transition for a depth- linear net is independent of the number of parameters of the model. For , the size of the model does play a role in influencing the phase transition. However, remains the dominant variable controlling this phase transition. This independence of the model size is an advantage of the proposed theory because our result becomes directly relevant for all model sizes, not just the infinitely large ones that the previous works often adopt.

For , we show that there is a first-order phase transition between the two phases at some . However, an analytical expression for the critical point is not known. In physics, first-order phase transitions are accompanied by latent heat. Our theory implies that this heat is equivalent to the amount of random noise we have to inject into the model parameters to escape from a local minimum to the global minimum for a deep model. We illustrate the phase transitions studied in Figure 2. We also experimentally demonstrate that the same phase transitions take place in deep nonlinear networks with the corresponding depths (Supplementary Section A.3). While infinite-depth networks are not used in practice, they are important from a theoretical point of view Sonoda and Murata, (2019) because they can be used for understanding the (very) deep networks that often appear in deep learning practice. Our result shows that the limiting landscape has a zeroth-order phase transition at . In fact, zeroth-order phase transitions do not occur in physics, and they are a unique feature of deep learning.

Relevance of symmetry breaking. The phase transitions we studied also involve symmetry breaking. This can be seen directly from the effective landscape in Eq. (6). The loss is unaltered as one flips the sign of , and therefore the loss is symmetric in . Figure 3 illustrates the effect and importance of symmetry breaking on the gradient descent dynamics. Additionally, this observation may also provide an alternative avenue for studying general symmetry-breaking dynamics because the computation with neural networks is both accurate and efficient.

Mean-Field Analysis. The crux of our theory can be understood by applying a simplified “mean-field” analysis of the loss function in Eq. (5). Let each weight matrix be approximated by a scalar , , ignore the stochasticity due to , and let be one-dimensional. One then obtains a simplified mean-field loss:

| (8) |

where ’s are constants. The first term can be interpreted as a special type of -body interaction. We now perform a second mean-field approximation, where all the take the same value :

| (9) |

Here, , and are structural constants, only depending on the model (depth, width, etc). The first and the third terms monotonically increase in , encouraging a smaller . The second term monotonically decreases in , encouraging a positive correlation between and the feature . The leading and lowest-order terms regularize the model, while the intermediate term characterizes learning. For , the loss is quadratic and has no transition. For , the loss is identical to the Landau free energy, and a phase transition occurs when the second-order term flips sign: . For , the origin is always a local minimum, dominated by the quadratic term. This leads to a first-order phase transition. When , the leading terms become discontinuous in , and one obtains a zeroth-order phase transition. This simple analysis highlights one important distinction between physics and machine learning: in physics, the most common type of interaction is a two-body interaction, whereas, for machine learning, the common interaction is many-body and tends to infinite-body as increases.
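The qualitative picture in this paragraph can be checked numerically. The following is a minimal sketch, assuming the mean-field loss of Eq. (9) takes the form c1·w^(2(D+1)) − c0·w^(D+1) + γ·w² (with any constant multiplying the quadratic term absorbed into γ) and using the illustrative choice c0 = c1 = 1. The function names and the grid search are hypothetical helpers, not part of the original analysis.

```python
import numpy as np

def mean_field_loss(w, depth, gamma, c0=1.0, c1=1.0):
    """Assumed mean-field loss: c1*w^(2(D+1)) - c0*w^(D+1) + gamma*w^2."""
    p = depth + 1
    return c1 * w ** (2 * p) - c0 * w ** p + gamma * w ** 2

def mean_field_minimizer(depth, gamma, w_max=2.0, n_grid=20001):
    """Grid search for the global minimizer over w in [0, w_max]."""
    w = np.linspace(0.0, w_max, n_grid)
    loss = mean_field_loss(w, depth, gamma)
    i = int(np.argmin(loss))  # the first index wins ties, so w = 0 is preferred
    return w[i], loss[i]

if __name__ == "__main__":
    # Scan the regularization strength for three depths (illustrative values).
    for depth in (0, 1, 2):
        for gamma in (0.30, 0.47, 0.48, 0.99, 1.01):
            w_star, l_star = mean_field_minimizer(depth, gamma)
            print(f"D={depth} gamma={gamma:.2f} w*={w_star:.4f} L={l_star:.6f}")
```

With these constants, the minimizer for D = 1 shrinks continuously to zero as γ approaches 1, whereas for D = 2 it drops abruptly from about 0.63 to zero between γ = 0.47 and γ = 0.48. For D = 0 it stays nonzero for every finite γ.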

One implication is that regularization may be too strong for deep learning because it creates a trivial phase. Our result also suggests a way to avoid the trivial phase. Instead of regularizing by , one might consider , which is the lowest-order regularization that does not lead to a trivial phase. The effectiveness of this suggested method is confirmed in Supplementary Section A.2.

Posterior Collapse in Bayesian Deep Learning. Our results also identify an origin of the well-known posterior collapse problem in Bayesian deep learning. Posterior collapse refers to the learning failure where the learned posterior distribution coincides with the prior, and so no learning has happened even after training Dai and Wipf, (2019); Alemi et al., (2018); Lucas et al., (2019). Our results offer a direct explanation for this problem. In the Bayesian interpretation, the training loss in Eq. (5) is the exact negative log posterior, and the trivial phase exactly corresponds to the posterior collapse: the global minimum of the loss is identical to the global maximum of the prior term. Our results thus imply that (1) posterior collapse is a unique problem of deep learning because it does not occur in shallow models, and (2) posterior collapse happens as a direct consequence of the competition between the prior and the likelihood. This means that it is not a good idea to assume a Gaussian prior for the deep neural network models. The suggested fix also leads to a clean and Bayesian-principled solution to the posterior collapse problem by using a prior .

Discussion

The striking similarity between phase transitions in neural networks and statistical-physics phase transitions lends a great impetus to a more thorough investigation of deep learning through the lens of thermodynamics and statistical physics. We now outline a few major future steps:

1. Instead of classification by analyticity, can we classify neural networks by symmetry and topological invariants?

2. What are other possible phases for a nonlinear network? Does a new phase emerge?

3. Can we find any analogy of other thermodynamic quantities such as volume and pressure? More broadly, can we establish thermodynamics for deep learning?

4. Can we utilize the latent heat picture to devise better algorithms for escaping local minima in deep learning?

This work shows that the Ehrenfest classification of phase transitions aligns precisely with the number of layers in deep neural networks. We believe that the statistical-physics approach to deep learning will bring about fruitful developments in both fields of statistical physics and deep learning.

References

- Alemi et al., [2018] Alemi, A., Poole, B., Fischer, I., Dillon, J., Saurous, R. A., and Murphy, K. (2018). Fixing a broken elbo. In International Conference on Machine Learning, pages 159–168. PMLR.

- Bahri et al., [2020] Bahri, Y., Kadmon, J., Pennington, J., Schoenholz, S. S., Sohl-Dickstein, J., and Ganguli, S. (2020). Statistical mechanics of deep learning. Annual Review of Condensed Matter Physics, 11:501–528.

- Belkin et al., [2020] Belkin, M., Hsu, D., and Xu, J. (2020). Two models of double descent for weak features. SIAM Journal on Mathematics of Data Science, 2(4):1167–1180.

- Benedek and Itai, [1991] Benedek, G. M. and Itai, A. (1991). Learnability with respect to fixed distributions. Theoretical Computer Science, 86(2):377–389.

- Dai and Wipf, [2019] Dai, B. and Wipf, D. (2019). Diagnosing and enhancing vae models. arXiv preprint arXiv:1903.05789.

- Friston, [2009] Friston, K. (2009). The free-energy principle: a rough guide to the brain? Trends in cognitive sciences, 13(7):293–301.

- Hardt and Ma, [2016] Hardt, M. and Ma, T. (2016). Identity matters in deep learning. arXiv preprint arXiv:1611.04231.

- Hastie et al., [2019] Hastie, T., Montanari, A., Rosset, S., and Tibshirani, R. J. (2019). Surprises in high-dimensional ridgeless least squares interpolation. arXiv preprint arXiv:1903.08560.

- Haussler et al., [1996] Haussler, D., Kearns, M., Seung, H. S., and Tishby, N. (1996). Rigorous learning curve bounds from statistical mechanics. Machine Learning, 25(2):195–236.

- Hopfield, [1982] Hopfield, J. J. (1982). Neural networks and physical systems with emergent collective computational abilities. Proceedings of the national academy of sciences, 79(8):2554–2558.

- Kawaguchi, [2016] Kawaguchi, K. (2016). Deep learning without poor local minima. Advances in Neural Information Processing Systems, 29:586–594.

- Krogh and Hertz, [1992a] Krogh, A. and Hertz, J. A. (1992a). Generalization in a linear perceptron in the presence of noise. Journal of Physics A: Mathematical and General, 25(5):1135.

- Krogh and Hertz, [1992b] Krogh, A. and Hertz, J. A. (1992b). A simple weight decay can improve generalization. In Advances in neural information processing systems, pages 950–957.

- Laurent and Brecht, [2018] Laurent, T. and Brecht, J. (2018). Deep linear networks with arbitrary loss: All local minima are global. In International conference on machine learning, pages 2902–2907. PMLR.

- Li and Sompolinsky, [2021] Li, Q. and Sompolinsky, H. (2021). Statistical mechanics of deep linear neural networks: The backpropagating kernel renormalization. Physical Review X, 11(3):031059.

- Liao et al., [2020] Liao, Z., Couillet, R., and Mahoney, M. W. (2020). A random matrix analysis of random fourier features: beyond the gaussian kernel, a precise phase transition, and the corresponding double descent. Advances in Neural Information Processing Systems, 33:13939–13950.

- Lucas et al., [2019] Lucas, J., Tucker, G., Grosse, R., and Norouzi, M. (2019). Don’t blame the elbo! a linear vae perspective on posterior collapse.

- Martin and Mahoney, [2017] Martin, C. H. and Mahoney, M. W. (2017). Rethinking generalization requires revisiting old ideas: statistical mechanics approaches and complex learning behavior. arXiv preprint arXiv:1710.09553.

- Mianjy and Arora, [2019] Mianjy, P. and Arora, R. (2019). On dropout and nuclear norm regularization. In International Conference on Machine Learning, pages 4575–4584. PMLR.

- Sonoda and Murata, [2019] Sonoda, S. and Murata, N. (2019). Transport analysis of infinitely deep neural network. The Journal of Machine Learning Research, 20(1):31–82.

- Tanaka and Kunin, [2021] Tanaka, H. and Kunin, D. (2021). Noether’s learning dynamics: Role of symmetry breaking in neural networks. Advances in Neural Information Processing Systems, 34.

- Vapnik, [2006] Vapnik, V. (2006). Estimation of dependences based on empirical data. Springer Science & Business Media.

- Watkin et al., [1993] Watkin, T. L., Rau, A., and Biehl, M. (1993). The statistical mechanics of learning a rule. Reviews of Modern Physics, 65(2):499.

- Ziyin et al., [2022] Ziyin, L., Li, B., and Meng, X. (2022). Exact solutions of a deep linear network. arXiv preprint arXiv:2202.04777.

Appendix A Additional Experiments

A.1 Sensitivity to the Initial Condition

Our result suggests that the learning of a deeper network is quite sensitive to the initialization schemes we use. In particular, for , some initialization schemes converge to the trivial solutions more easily, while others converge to the nontrivial solution more easily. Figure 4 plots the converged loss of a model for two types of initialization: (a) larger initialization, where the parameters are initialized around zero with the standard deviation and (b) small initialization with . The value of is thus equal to the expected norm of the model at initialization, and a small means that it is initialized closer to the trivial phase and a larger means that it is initialized closer to the learning phase. We see that across a wide range of , one of the initialization schemes gets stuck in a local minimum and does not converge to the global minimum. In light of the latent heat picture, the reason for the sensitivity to initial states is clear: one needs to inject additional energy into the system to leave the meta-stable state; otherwise, the system may become stuck for a very long time. The existing initialization methods are predominantly data-independent. However, our result (also see [24]) suggests that the size of the trivial minimum is data-dependent, and our result thus highlights the importance of designing data-dependent initialization methods in deep learning.
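The sensitivity to initialization can be reproduced in a one-dimensional caricature. The sketch below runs plain gradient descent on an assumed mean-field loss of the form c1·w^(2(D+1)) − c0·w^(D+1) + γ·w² with D = 2. The constants c0 = 2, c1 = 1, γ = 0.3, the step size, and the function names are illustrative assumptions, and this is not the experiment behind Figure 4.

```python
def mean_field_loss(w, gamma, depth=2, c0=2.0, c1=1.0):
    """Assumed scalar loss: c1*w^(2(D+1)) - c0*w^(D+1) + gamma*w^2."""
    p = depth + 1
    return c1 * w ** (2 * p) - c0 * w ** p + gamma * w ** 2

def mean_field_grad(w, gamma, depth=2, c0=2.0, c1=1.0):
    """Derivative of mean_field_loss with respect to w."""
    p = depth + 1
    return 2 * p * c1 * w ** (2 * p - 1) - p * c0 * w ** (p - 1) + 2 * gamma * w

def gradient_descent(w_init, gamma, lr=0.01, n_steps=5000):
    """Plain gradient descent without noise, starting from w_init."""
    w = float(w_init)
    for _ in range(n_steps):
        w -= lr * mean_field_grad(w, gamma)
    return w

if __name__ == "__main__":
    gamma = 0.3
    for w_init in (0.01, 1.0):  # small versus large initialization
        w_final = gradient_descent(w_init, gamma)
        print(f"init={w_init:.2f} -> w={w_final:.4f}, "
              f"loss={mean_field_loss(w_final, gamma):.4f}")
```

With these constants, the small initialization decays to the trivial solution w = 0, while the large one settles near w ≈ 0.96, where the loss is lower. Without injected noise, the run that starts near the origin never reaches the global minimum.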

A.2 Removing the Trivial Phase

We also explore our suggested fix to the trivial learning problem. Here, instead of regularizing the model by , we regularize the model by . The training loss and the model norm are plotted in Figure 5. We find that the trivial phase now completely disappears even if we go to very high . However, we note that this fix only removes the local minimum at zero, but zero remains a saddle point from which it takes the system a long time to escape.
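The effect of a higher-order penalty can be illustrated in the mean-field picture. The sketch below assumes the learning terms w^(2(D+1)) − w^(D+1) and compares a quadratic penalty γ·w² with a penalty γ·w^(D+2). The exponent D + 2 is an assumption made here for illustration: it is the lowest integer power that exceeds the power D + 1 of the learning term. The function name is hypothetical.

```python
import numpy as np

def minimizer_with_penalty(depth, gamma, power, w_max=2.0, n_grid=20001):
    """Grid-search minimizer of w^(2(D+1)) - w^(D+1) + gamma*w^power on [0, w_max]."""
    w = np.linspace(0.0, w_max, n_grid)
    p = depth + 1
    loss = w ** (2 * p) - w ** p + gamma * w ** power
    i = int(np.argmin(loss))  # the first index wins ties, so w = 0 is preferred
    return w[i], loss[i]

if __name__ == "__main__":
    depth = 2
    for gamma in (1.0, 10.0, 100.0):
        w_quad, _ = minimizer_with_penalty(depth, gamma, power=2)
        w_high, _ = minimizer_with_penalty(depth, gamma, power=depth + 2)
        print(f"gamma={gamma:6.1f}  quadratic: w*={w_quad:.4f}  "
              f"order D+2: w*={w_high:.4f}")
```

In this toy setting, the quadratic penalty yields w* = 0 for all three values of γ, while the higher-order penalty keeps w* strictly positive because the learning term dominates the penalty close to the origin.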

A.3 Nonlinear Networks

We expect our theory to also apply to deep nonlinear networks that can be locally approximated by a linear net at the origin, e.g., a network with tanh activations. As shown in Figure 6, a tanh net also features a second-order phase transition for and a first-order phase transition for .

One notable exception to which our theory may not apply is networks with the ReLU activation, because these networks are not differentiable at zero (i.e., in the trivial phase). However, there are smoother (and empirically better) alternatives to ReLU, such as the swish activation function, to which the present theory should also be relevant.

Appendix B Main Results

B.1 Theorem Statements

For a simple ridge linear regression, the minimization objective is

| (10) |

Theorem 1.

There is no phase transition in any hyperparameter () in a simple ridge linear regression for any .

The following result shows that for a finite depth, must be continuous in .

Theorem 2.

For any finite and , has no zeroth-order phase transition with respect to .

Note that this theorem allows the weight decay parameter to be , and so our results also extend to the case when there is no weight decay.

The following theorem shows that there exist order parameters describing any phase transition induced by the weight decay parameter in Eq. (5).

Theorem 3.

Here, the norm of the last layer is referred to as the order parameter. The meaning of this choice should be clear. The norm of the last layer is zero if and only if all weights of the last layer are zero, in which case the model is a trivial model. The model can only learn something when the order parameter is nonzero. Additionally, we note that the choice of the order parameter is not unique and there are other choices for the order parameter (e.g., the norm of any other layer, or the sum of the norms of all layers).

The following theorem shows that when in Eq. (5), there is a second-order phase transition with respect to .

Theorem 4.

Equation (5) has the second-order phase transition between the trivial and feature learning phases at

| (12) |

(Footnote 1: When the two layers have different regularization strengths and , one can show that the phase transition occurs precisely at .)

Now, we show that for , there is a first-order phase transition.

Theorem 5.

Let . There exists a such that the loss function Eq. (5) has the first-order phase transition between the trivial and feature learning phases at .

Theorem 6.

Let denote the loss function for a fixed depth as a function of . Then, for and some constant ,

| (13) |

The constant is, in general, not equal to . For example, in the limit , converges to the loss value of a simple linear regression, which is not equal to as long as .

B.2 Proof of Theorem 1

Proof. The global minimum of Eq. (10) is

| (14) |

The loss of the global minimum is thus

| (15) | ||||

| (16) | ||||

| (17) | ||||

| (18) |

which is infinitely differentiable for any (note that is always positive semi-definite by definition).
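As a numerical companion to this proof, the sketch below assumes that the objective of Eq. (10) is the expected squared error plus γ‖w‖², written in terms of the moments A = E[xxᵀ], b = E[xy], and c = E[y²]. Under that assumption the minimal loss is c − bᵀ(A + γI)⁻¹b, a rational function of γ with no poles for γ > 0. The function names and the synthetic data are hypothetical.

```python
import numpy as np

def ridge_minimum(A, b, c, gamma):
    """Global minimum of  w^T A w - 2 b^T w + c + gamma * ||w||^2.

    A = E[x x^T] (positive semi-definite), b = E[x y], c = E[y^2].
    """
    d = A.shape[0]
    w_star = np.linalg.solve(A + gamma * np.eye(d), b)
    return w_star, c - b @ w_star

def ridge_objective(w, A, b, c, gamma):
    """Direct evaluation of the assumed ridge objective at w."""
    return w @ A @ w - 2 * b @ w + c + gamma * (w @ w)

def make_moments(n=200, d=5, seed=0):
    """Empirical moments of a synthetic linear-regression data set."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, d))
    y = X @ rng.normal(size=d) + 0.1 * rng.normal(size=n)
    return X.T @ X / n, X.T @ y / n, y @ y / n

if __name__ == "__main__":
    A, b, c = make_moments()
    for gamma in (0.1, 1.0, 10.0, 100.0):
        w_star, loss = ridge_minimum(A, b, c, gamma)
        print(f"gamma={gamma:6.1f}  L={loss:.4f}  |w*|={np.linalg.norm(w_star):.4f}")
```

In this sketch the norm of the minimizer shrinks as γ grows but never reaches zero at finite γ, so no trivial phase appears.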

B.3 Proof of Theorem 2

Proof. For any fixed and bounded , is continuous in . Moreover, is a monotonically increasing function of . This implies that is also an increasing function of (but may not be strictly increasing).

We now prove by contradiction. We first show that is left-continuous. Suppose that for some , is not left-continuous in at some . By definition, we have

| (19) |

where is one of the (potentially many) global minima of . Since is not left-continuous by assumption, there exists such that for any ,

| (20) |

which implies that

| (21) |

Namely, the left-discontinuity implies that for all ,

| (22) |

However, by definition of , we have

| (23) |

Thus, by choosing , the relation in (21) is violated. Thus, must be left-continuous.

In a similar manner, we can prove that is right-continuous. Suppose that for some , is not right-continuous in at some . Let . By definition, we have

| (24) |

where is one of the (potentially many) global minima of . Since is not right-continuous by assumption, there exists such that for any ,

| (25) |

which implies that

| (26) |

Namely, the right-discontinuity implies that for all ,

| (27) |

However, by definition of , we have

| (28) |

Thus, by choosing , the relation in (26) is violated. Thus, must be right-continuous.

Therefore, is continuous for all . By definition, this means that there is no zeroth-order phase transition in for . Additionally, note that the above proof does not require , and so we have also shown that is right-continuous at .

B.4 Proof of Theorem 3

Proof. By Theorem 3 of Ref. [24], any global minimum of Eq. (5) is given by the following set of equations for some :

| (29) |

where is an arbitrary vertex of a -dimensional hypercube for all . Therefore, the global minimum must lie on a one-dimensional space indexed by . Let specify the model as

| (30) |

and let denote the set of all random noises .

Substituting Eq. (29) in Eq. (5), one finds that within this subspace, the loss function can be written as

| (31) | ||||

| (32) | ||||

| (33) |

where the term is

| (34) |

Combining terms, we can simplify the expression for the loss function to be

| (35) |

We can now define the effective loss by

| (36) |

Then, the above argument shows that, for all ,

| (37) |

By Definition 2, is an order parameter of with respect to the effective loss . This completes the proof.

B.5 Two Useful Lemmas

Before continuing the proofs, we first prove two lemmas that will simplify the following proofs significantly.

Lemma 1.

If is differentiable, then for at least one of the global minima ,

| (38) |

Proof. Because is differentiable in , one can find the derivative for at least one of the global minima

| (39) | ||||

| (40) | ||||

| (41) | ||||

| (42) |

where we have used the optimality condition in the second equality.
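The lemma can be sanity-checked on a case with a closed-form solution. The sketch below assumes that Lemma 1 is the envelope-type statement that the derivative of the minimal loss with respect to the hyperparameter equals the partial derivative of the loss evaluated at a global minimum. For a ridge objective wᵀAw − 2bᵀw + c + γ‖w‖², which is used here only as a stand-in, this predicts dL/dγ = ‖w*‖². All names and numbers are illustrative.

```python
import numpy as np

def min_loss_and_norm(A, b, c, gamma):
    """Minimal ridge loss and squared norm of the minimizer."""
    w_star = np.linalg.solve(A + gamma * np.eye(len(b)), b)
    return c - b @ w_star, w_star @ w_star

def finite_difference(A, b, c, gamma, h=1e-5):
    """Central finite-difference estimate of dL/dgamma."""
    lower, _ = min_loss_and_norm(A, b, c, gamma - h)
    upper, _ = min_loss_and_norm(A, b, c, gamma + h)
    return (upper - lower) / (2 * h)

if __name__ == "__main__":
    A = np.array([[2.0, 0.5], [0.5, 1.0]])  # illustrative positive-definite matrix
    b = np.array([1.0, -0.5])
    c = 3.0
    gamma = 0.7
    _, norm_sq = min_loss_and_norm(A, b, c, gamma)
    print("finite difference:", finite_difference(A, b, c, gamma))
    print("squared norm     :", norm_sq)
```

The two printed numbers should agree up to finite-difference error, because the term that involves the derivative of w* with respect to γ vanishes by the optimality condition.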

B.6 Proof of Theorem 4

Proof. By Definition 1, it suffices to prove the existence of phase transitions on the effective loss. For , the effective loss is

| (43) |

By Theorem 1 of Ref. [24], the phase transition, if it exists, must occur precisely at . To prove that has a second-order phase transition, we must check both its first derivative and second derivative.

When from the right, we find that all derivatives of are zero because the loss is identically equal to . We now consider the derivative of when from the left.

We first need to find the minimizer of Eq. (43). Because Eq. (43) is differentiable, its derivative in must be equal to at the global minimum

| (44) |

Finding the minimizer is thus equivalent to finding the real roots of a high-order polynomial in . When , the solution is unique [24]:

| (45) |

where we labeled the solution with the subscript to emphasize that this solution is also the zeroth-order term of the solution in a perturbatively small neighborhood of . From this point, we define a shifted regularization strength: . When , the condition (44) simplifies to

| (46) |

Because the polynomial is not singular in , one can Taylor expand the (squared) solution in :

| (47) |

We first substitute (47) in (44) to find

| (48) |

(Footnote 2: Note that, alternatively, is implied by the no-zeroth-order transition theorem.)

One can then again substitute Eq. (47) in Eq. (44) to find . To the first order in , Eq. (44) reads

| (49) | |||

| (50) | |||

| (51) |

Substituting this first-order solution into Lemma 1, we obtain that

| (52) |

Thus, the first-order derivative of is continuous at the phase transition point.

We now find the second-order derivative of . To achieve this, we also need to find the second-order term of in . We expand as

| (53) |

To the second order in , (44) reads

| (54) | |||

| (55) | |||

| (56) | |||

| (57) |

where, from the third line, we have used the shorthand , , and . Substituting in , one finds that

| (58) |

This allows us to find the second derivative of . Substituting and into Eq. (43) and expanding to the second order in , we obtain that

| (59) | ||||

| (60) |

At the critical point,

| (61) | ||||

| (62) | ||||

| (63) | ||||

| (64) |

Notably, the second derivative of from the left is only dependent on and not on .

| (65) |

Thus, the second derivative of is discontinuous at . This completes the proof.

Remark.

Note that the proof suggests that close to the critical point, , in agreement with the Landau theory.
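For intuition, the same square-root scaling can be worked out in closed form for a simplified mean-field loss with one hidden layer. The Landau form below, with positive constants $c_0$ and $c_1$, is an illustrative assumption and not the exact effective loss of Eq. (43).

```latex
\begin{align}
\bar{\ell}(w) &= c_1 w^4 + (\gamma - c_0)\, w^2, \\
\frac{d\bar{\ell}}{dw} &= 2w\left[2 c_1 w^2 + (\gamma - c_0)\right] = 0
\;\Longrightarrow\;
w_*^2 =
\begin{cases}
\dfrac{c_0 - \gamma}{2 c_1}, & \gamma < c_0, \\[2mm]
0, & \gamma \ge c_0,
\end{cases} \\
L(\gamma) = \bar{\ell}(w_*) &=
\begin{cases}
-\dfrac{(c_0 - \gamma)^2}{4 c_1}, & \gamma < c_0, \\[2mm]
0, & \gamma \ge c_0.
\end{cases}
\end{align}
```

In this toy model the critical point is $\gamma_c = c_0$ and $|w_*| \propto \sqrt{\gamma_c - \gamma}$ just below it. Both $L$ and $dL/d\gamma$ are continuous at $\gamma_c$, while $d^2L/d\gamma^2$ jumps from $-1/(2c_1)$ to $0$, which is the signature of a second-order transition.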

B.7 Proof of Theorem 5

Proof. By definition, it suffices to show that is not continuous. We prove by contradiction. Suppose that is everywhere continuous on . Then, by Lemma 1, one can find the derivative for at least one of the global minima

| (66) |

Both terms in the last line are nonnegative, and so one necessary condition for to be continuous is that both of these two terms are continuous in .

In particular, one necessary condition is that is continuous in . By Proposition 3 of Ref. [24], there exist constants , such that , and

| (67) |

Additionally, if , must be lower-bounded by some nonzero value [24]:

| (68) |

Therefore, for any , must have a discontinuous jump from to a value larger than , and cannot be continuous. This, in turn, implies that jumps from zero to a nonzero value and cannot be continuous. This completes the proof.

B.8 Proof of Theorem 6

Proof. It suffices to show that a nonzero global minimum cannot exist at a sufficiently large , when one fixes . By Proposition 3 of Ref. [24], when , any nonzero global minimum must obey the following two inequalities:

| (69) |

where is the largest eigenvalue of . In the limit , the lower bound becomes

| (70) |

The upper bound becomes

| (71) |

But for any , . Thus, the set of such is empty.

On the other hand, when , the global minimizer has been found in Ref. [19] and is nonzero, which implies that . This means that is not continuous at . This completes the proof.