Evaluation of Saliency-based Explainability Methods

Abstract

A particular class of Explainable AI (XAI) methods provide saliency maps to highlight part of the image a Convolutional Neural Network (CNN) model looks at to classify the image as a way to explain its working. These methods provide an intuitive way for users to understand predictions made by CNNs. Other than quantitative computational tests, the vast majority of evidence to highlight that the methods are valuable is anecdotal. Given that humans would be the end-users of such methods, we devise three human subject experiments through which we gauge the effectiveness of these saliency-based explainability methods.

1 Introduction

Saliency-based explainability methods provide the image region that CNN models pay attention to make predictions. But there are no specific qualitative metrics in the literature to evaluate the different approaches. This lack of evaluation measure warrants the devising of a system to rate explainability methods.

There have been works to evaluate the different saliency methods. Sanity checks were performed to see if methods were affected by randomization of the model parameters or data labels (Adebayo et al., 2018). Sixt et al. (Sixt et al., 2020) advance a theoretical justification for why many modified backpropagation attributions fail to produce class sensitive saliency maps. Tjoa and Guan (Tjoa & Guan, 2020) perform quantitative analysis of different saliency-based XAI methods, which are shown to be helpful depending on the context. While all quantitative analyses need consideration, a tantamount quality of these XAI methods is to satisfy the cognitive rationales of its users (Li et al., 2020). A user study has also shown that XAI methods are effective in helping users understand and predict a models output better (Alqaraawi et al., 2020).

There are several desirable qualities of a method that can serve as potential evaluation measures. We can consider the degree of interpretablity of the explanation. The reliability of explanations is also an important factor to measure the efficacy. The users of the method should also be able to trust the generated explanations. Considering these desired qualities, we propose three human subject experiments to evaluate the saliency-based explainability methods. We want to evaluate XAI methods against traits such as predictability, reliability, and consistency. These characteristics may not be feasibly measured by quantitative tests, as they are qualitative in nature. Therefore, we conduct human subject experiments to evaluate these methods.

In our first experiment, which effectively evaluated the predictability of the explanations generated by the methods along with its class-discriminativeness, GradCAM performed best. In the other two experiments, which measured how well the explanations from the methods highlight the misclassifications and the consistency in the explanations, FullGrad (Srinivas & Fleuret, 2019), and GradientSHAP (Lundberg & Lee, 2017) performed well. FullGrad was also deemed consistent in our experiments.

2 Materials and Methods

We propose three human subject experiments to evaluate the efficacy of saliency-based explainability methods. The first two experiments use a subset of Animals with Attributes 2 (AWA2) dataset (Xian et al., 2018) having 20 animal classes. The third experiment uses Caltech-UCSD Birds (CUB) dataset (Wah et al., 2011) having 200 species of birds. We use VGG-16 (Simonyan & Zisserman, 2014) as baseline architecture to train our models for the AWA2 and CUB-200 datasets to an accuracy of 96.6% and 73.0%, respectively. The experiments are conducted in the form of surveys. The screenshots for the experiments are provided in Figure 1. Each survey had 24 questions. For each survey we received around 20-25 responses. The participants of the experiment were familiar with Artificial Intelligence and Machine Learning but had no prior experience in XAI.

2.1 Saliency-based XAI methods

The saliency-based XAI methods (along with their abbreviated form) considered for the experiments are the following:

-

1.

Saliency (Simonyan et al., 2013): Generates saliency map on the basis of gradients at the input layer resulting in a heat map. The heat map is at a fine-grained pixel-level as we have gradients for each pixel.

-

2.

GradCAM (Selvaraju et al., 2017): Converts the gradients at the final convolutional layer of the CNN into a heat map highlighting important regions. The method saliency maps are at a broad region-level.

-

3.

Contrastive Excitation Backprop (c-EBP) (Zhang et al., 2018): It results in region-level saliency maps. Attributions are backpropagated in place of gradients. Attribution for the desired class is taken as positive, whereas for all the other classes is taken as negative, resulting in a contrastive explanation.

-

4.

FullGrad (Srinivas & Fleuret, 2019): The approach also utilizes the gradients for the bias at each intermediate convolutional layer resulting in region-level heat maps that also have granular focus.

-

5.

Integrated Gradients (IG) (Sundararajan et al., 2017): The approach uses an axiomatic formulation to satisfy completeness, a desirable quality for the generated explanations. Gradients at the input layer result in pixel-level saliency maps.

-

6.

DeepLIFT (Shrikumar et al., 2017): A contribution score based on the difference between the output of the original input and a reference point is backpropagated to each neuron. The approach also considers the positive and negative influences of neurons separately. DeepLIFT generates pixel-level saliency maps.

-

7.

Gradient SHAP (GradSHAP) (Lundberg & Lee, 2017): Inspired from game-theoretic principles, this approach uses Shapley values to gauge importance of each pixel.

-

8.

SmoothGrad (SG) (Smilkov et al., 2017): This variant also provides pixel-level saliency maps by random sampling the inputs by adding the Gaussian noise to the original input. Multiple saliency maps are generated each random-sampled input, and the average of these maps results in the final SmoothGrad saliency map. The vanilla SmoothGrad considers Saliency (Simonyan et al., 2013) as its base XAI method.

-

9.

Integrated Gradients - SmoothGrad (IG-SG): SmoothGrad applied using Integrated Gradients as the base XAI method to provide pixel-level saliency maps.

2.2 Experiment #1-Predictability

The first experiment’s goal is to measure the predictability of the explanation methods. This measure also captures the class-discriminativeness of the generated explanations. Participants are presented with the explanations for the top-4 predicted class for an image, including the explanation for the ground truth class. Of the four saliency maps, the participants are asked to select the saliency map corresponding to the ground truth class. Ideally, the saliency map of the ground truth class would either demarcate the object of interest in the image from the background or highlight obvious discriminating regions. The average accuracy of the subjects correctly selecting the true class’s saliency map would be the explanation method’s score. A higher score in this experiment means that the participants can predict the method’s outcome among the few options. The measure also incorporates class-discriminativeness as the subjects would only easily select the true class’s saliency map if the maps are discriminative.

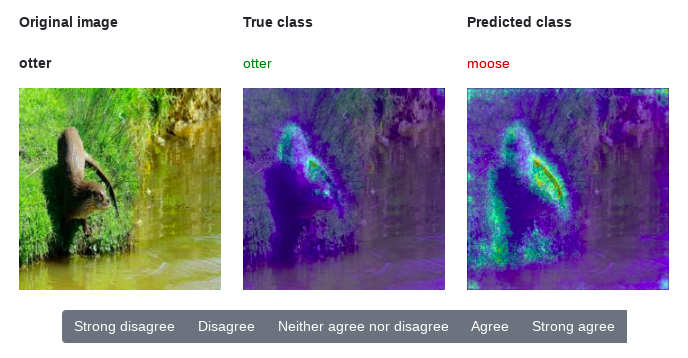

2.3 Experiment #2-Reliability

The second experiment measures the reliability of the methods. Justifiable explanations even for misclassifications make the method reliable. For this experiment, we show the participants the explanations for misclassified images, i.e., misclassified (predicted) class vs true class (ground truth) saliency maps. The subjects rate on a Likert scale the degree to which they agree that the explanations are justified for the misclassified image. The Likert scale (and corresponding values in parenthesis) that users could select are: Strongly disagree (1), Disagree (2), Neither agree nor disagree (3), Agree (4) and Strongly agree (5). The average rating by the subjects reflects the reliability of each method.

2.4 Experiment #3-Consistency

The third experiment aims to evaluate the consistency of the saliency-based explainability methods. By consistency, we mean that the explanation method for similar images would highlight similar regions (having same patterns) of the image. In the third experiment, we show the user several saliency maps of correctly predicted images belonging to the same class. We ask the participant to rate on a Likert scale (similar to one in experiment 2) the degree to which they agree that the saliency maps of different images of the bird (from the CUB dataset) focuses on the same or similar part(s) of the bird. This experiment is particularly beneficial in the case of the fine-grained image classification task.

3 Results

We show the accuracies for the task outlined in experiment #1 in Figure 2. GradCAM has the highest accuracy of 43.9% among all the XAI methods. Excitation Backprop and Saliency follow this with accuracies of 35.8% and 31.7%, respectively. There is a substantial difference in the score of the top-2 performing methods. Integrated-Gradients and FullGrad are the worst-performing XAI methods for the task, with accuracies of 22.2% and 23.7%.

Table 1 shows the ranks of the XAI methods based on the mean values on the Likert scale (Boone & Boone, 2012) (Sullivan & Artino Jr, 2013) for experiment #2. GradSHAP and FullGrad have the highest mean, 3.38 and 3.36, respectively. Saliency and GradCAM again feature in the top four with means of 3.26 and 3.22, respectively.

FullGrad has a Likert value mean of 4.51 in experiment #3 as reflected in Table 1. This implies that there is a near-unanimous consensus that the explanations provided by FullGrad are consistent for the VGG-16 model trained on the CUB-200 dataset. Other methods are also closely behind except GradCAM and DeepLIFT, which have mean values of 3.22 and 3.36, respectively. The frequency for “Strongly agree” in the case of FullGrad defies trends to stand out as the highest among the Likert items of the method. It covers 61.28% of the total responses for the method. The second highest was for c-EBP having 29.6%.

| XAI Method | MeanSDRank | |

|---|---|---|

| Experiment #2 Reliability | Experiment #3 Consistency | |

| FullGrad | 3.36 | 4.51 |

| c-EBP | 3.15 | 3.92 |

| GradSHAP | 3.38 | 3.90 |

| IG | 3.11 | 3.82 |

| SG | 3.18 | 3.80 |

| Saliency | 3.26 | 3.79 |

| IG-SG | 3.19 | 3.71 |

| DeepLIFT | 3.13 | 3.36 |

| GradCAM | 3.22 | 3.22 |

4 Discussion

We considered the AWA2 dataset for the first two tasks as it did not have too much variability considering that the backgrounds and even animals in many cases were similar, like bobcat and tiger, but the classes were also not too similar. The use of the CUB-200 dataset is also significant for the third experiment. The images capture the birds in very few ways, like either perched or flying. This helps us to look at the consistency of the saliency maps better, as, in the case of the AWA2 dataset, the animals are captured from various angles, distances, environments, and various positions.

Experiment #1 shows that although saliency maps are predictable, GradCAM scoring 43.9% accuracy despite no context being provided, the utility of its predictability cannot be ascertained. Among the methods, GradCAM and Excitation Backprop having the highest accuracy can also be attributed to the fact that GradCAM has highlights large regions of the image where boundaries may not be well-demarcated (Srinivas & Fleuret, 2019). Excitation Backprop is also helped by the same fact and also that we are using the contrastive variant, which gives well discriminative saliency maps. All the other methods provide pixel-level saliency maps except FullGrad, although it also provides a finely detailed saliency map. The fineness of the details of the saliency maps may have had counterproductive effects. Added by the fact that the model itself tries to discriminate between classes by specific features like object parts, textures, colours, even backgrounds (Gonzalez-Garcia et al., 2018) (Bau et al., 2017) and not strictly focuses entirely on the object of interest.

The effectiveness of FullGrad is also not surprising in the second and third experiment due to the same reasons. It has a mix of finely detailed and broad region covering saliency maps like GradCAM and other pixel-level methods. FullGrad also has a theoretical foundation that credits both local and global attribution.GadientSHAP uses Shapley values, to which its success can be attributed.

Fig. 3 shows the heatmap of the mean accuracies in Experiment 1 for the methods against each class. We want to bring attention to the fact that some classes exhibit better accuracy overall, indicated by a lighter tone in its column relative to other classes, like giant panda, gorilla and tiger. The opposite can be seen in the case of humpback whale, polar bear rhinoceros, which have relatively darker columns. This similar phenomenon is observed in Fig. 4, which shows the heatmap of the mean Likert values in Experiment 2 for the methods against each class. The classes gorilla and tiger are relatively lighter contrasted with the classes humpback whale, pig and rhinoceros, which are relatively darker, as seen by their corresponding columns. This phenomenon suggests that explainability differ among individual classes.

5 Conclusion

We conducted human subject experiments to gauge the effectiveness of saliency-based XAI methods, which provide saliency maps as explanations for predictions made by CNN models. The experiments were designed to capture whether the XAI methods had desirable qualities like predictability, reliability, and consistency. Our work has found the majority of these methods to possess the desirable qualities, but not to a high degree of proficiency. Some methods have come out on top, like GradientSHAP and FullGrad. FullGrad was also rated highly consistent nearly unanimously by the human subjects. The benefits of XAI methods to humans can only be quantified by how well it satisfies its human users. As suggested by the experiments, a human-centric approach to XAI might assist to bridge the vast gap that exists between the explanations and their utility.

References

- Adebayo et al. (2018) Adebayo, J., Gilmer, J., Muelly, M., Goodfellow, I., Hardt, M., and Kim, B. Sanity checks for saliency maps. Advances in neural information processing systems, 31:9505–9515, 2018.

- Alqaraawi et al. (2020) Alqaraawi, A., Schuessler, M., Weiß, P., Costanza, E., and Berthouze, N. Evaluating saliency map explanations for convolutional neural networks: a user study. In Proceedings of the 25th International Conference on Intelligent User Interfaces, pp. 275–285, 2020.

- Bau et al. (2017) Bau, D., Zhou, B., Khosla, A., Oliva, A., and Torralba, A. Network dissection: Quantifying interpretability of deep visual representations. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 6541–6549, 2017.

- Boone & Boone (2012) Boone, H. N. and Boone, D. A. Analyzing likert data. Journal of extension, 50(2):1–5, 2012.

- Gonzalez-Garcia et al. (2018) Gonzalez-Garcia, A., Modolo, D., and Ferrari, V. Do semantic parts emerge in convolutional neural networks? International Journal of Computer Vision, 126(5):476–494, 2018.

- Li et al. (2020) Li, X.-H., Shi, Y., Li, H., Bai, W., Song, Y., Cao, C. C., and Chen, L. Quantitative evaluations on saliency methods: An experimental study. arXiv preprint arXiv:2012.15616, 2020.

- Lundberg & Lee (2017) Lundberg, S. and Lee, S.-I. A unified approach to interpreting model predictions. arXiv preprint arXiv:1705.07874, 2017.

- Selvaraju et al. (2017) Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., and Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, pp. 618–626, 2017.

- Shrikumar et al. (2017) Shrikumar, A., Greenside, P., and Kundaje, A. Learning important features through propagating activation differences. In International Conference on Machine Learning, pp. 3145–3153. PMLR, 2017.

- Simonyan & Zisserman (2014) Simonyan, K. and Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- Simonyan et al. (2013) Simonyan, K., Vedaldi, A., and Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034, 2013.

- Sixt et al. (2020) Sixt, L., Granz, M., and Landgraf, T. When explanations lie: Why many modified bp attributions fail. In International Conference on Machine Learning, pp. 9046–9057. PMLR, 2020.

- Smilkov et al. (2017) Smilkov, D., Thorat, N., Kim, B., Viégas, F., and Wattenberg, M. Smoothgrad: removing noise by adding noise. arXiv preprint arXiv:1706.03825, 2017.

- Srinivas & Fleuret (2019) Srinivas, S. and Fleuret, F. Full-gradient representation for neural network visualization. In Advances in Neural Information Processing Systems, pp. 4124–4133, 2019.

- Sullivan & Artino Jr (2013) Sullivan, G. M. and Artino Jr, A. R. Analyzing and interpreting data from likert-type scales. Journal of graduate medical education, 5(4):541, 2013.

- Sundararajan et al. (2017) Sundararajan, M., Taly, A., and Yan, Q. Axiomatic attribution for deep networks. In International Conference on Machine Learning, pp. 3319–3328. PMLR, 2017.

- Tjoa & Guan (2020) Tjoa, E. and Guan, C. Quantifying explainability of saliency methods in deep neural networks. arXiv preprint arXiv:2009.02899, 2020.

- Wah et al. (2011) Wah, C., Branson, S., Welinder, P., Perona, P., and Belongie, S. The Caltech-UCSD Birds-200-2011 Dataset. Technical Report CNS-TR-2011-001, California Institute of Technology, 2011.

- Xian et al. (2018) Xian, Y., Lampert, C. H., Schiele, B., and Akata, Z. Zero-shot learning—a comprehensive evaluation of the good, the bad and the ugly. IEEE transactions on pattern analysis and machine intelligence, 41(9):2251–2265, 2018.

- Zhang et al. (2018) Zhang, J., Bargal, S. A., Lin, Z., Brandt, J., Shen, X., and Sclaroff, S. Top-down neural attention by excitation backprop. International Journal of Computer Vision, 126(10):1084–1102, 2018.