Evaluation and Optimization of Gradient Compression for Distributed Deep Learning

Abstract

To accelerate distributed training, many gradient compression methods have been proposed to alleviate the communication bottleneck in synchronous stochastic gradient descent (S-SGD), but their efficacy in real-world applications still remains unclear. In this work, we first evaluate the efficiency of three representative compression methods (quantization with Sign-SGD, sparsification with Top-k SGD, and low-rank with Power-SGD) on a 32-GPU cluster. The results show that they cannot always outperform well-optimized S-SGD or even worse due to their incompatibility with three key system optimization techniques (all-reduce, pipelining, and tensor fusion) in S-SGD. To this end, we propose a novel gradient compression method, called alternate compressed Power-SGD (ACP-SGD), which alternately compresses and communicates low-rank matrices. ACP-SGD not only significantly reduces the communication volume, but also enjoys the three system optimizations like S-SGD. Compared with Power-SGD, the optimized ACP-SGD can largely reduce the compression and communication overheads, while achieving similar model accuracy. In our experiments, ACP-SGD achieves an average of and speedups over S-SGD and Power-SGD, respectively, and it consistently outperforms other baselines across different setups (from 8 GPUs to 64 GPUs and from 1Gb/s Ethernet to 100Gb/s InfiniBand).

Index Terms:

Distributed Deep Learning; Gradient Compression; Power-SGD; System OptimizationI Introduction

Training deep neural networks (DNNs) with synchronous stochastic gradient descent (S-SGD) with data parallelism is one of the most popular approaches for distributed deep learning (DL) [1, 2]. The S-SGD mainly consists of two phases: gradient computation and gradient aggregation. During the aggregation phase, it exchanges the locally calculated gradients through the network. With the increase of model size of DNNs (millions to billions of parameters), distributed training has caused high communication overheads, which becomes the performance bottleneck in S-SGD [3, 4, 5].

To mitigate the communication overheads, on one hand, several system optimization techniques have been proposed to improve the performance of S-SGD, such as the ring all-reduce primitive [6], wait-free back-propagation (WFBP) [7], and tensor fusion [8, 9]. The ring all-reduce is known to be bandwidth optimal, so it is suitable for distributed training to transfer long messages [10]. In the all-reduce based architecture, due to the deep structure of DNNs, wait-free back-propagation and tensor fusion help to better overlap communication overheads with computation overheads of different layers [11, 12]. For example, in our experiments (see Fig. 9), the optimized S-SGD (with WFBP and tensor fusion) can achieve almost performance improvement over the naive implementation when training a ResNet-152 model [13].

On the other hand, gradient compression methods have received much attention to reduce the communication volume of transferred data in different manners, including quantization [14, 15, 16, 17, 18], sparsification [19, 20, 21, 22], and low-rank decomposition [23, 24]. For example, Sign-SGD [17] communicates only the signs of gradients, and Top- SGD [21] exchanges a fraction (e.g., ) of selected gradients. Compared to S-SGD, they are able to reduce the communication traffic by and even times for gradient aggregation, with a negligible impact on model accuracy [19].

To study the efficiency of current gradient compression methods, we choose three representative compression methods: Sign-SGD [17], Top- SGD [21], and Power-SGD [24], and compare their practical training performance against the well-optimized S-SGD (i.e. implemented with aforementioned system optimization techniques), in a typical data-center setting with a 32-GPU cluster connected with 10Gb/s Ethernet (10GbE). However, we find that three gradient compression methods fail to provide performance improvements over S-SGD in many cases, while they can achieve high compression ratios. For example, it is unexpected that S-SGD runs 21%-70% faster than compression counterparts in training ResNet-50 (see Fig. 2). This surprising observation motivates us to optimize gradient compression from the system perspective.

However, current gradient compression methods are not compatible with common system optimizations, as they do not incur either additive or non-blocking communications. To be precise, Sign-SGD and Top- SGD do not support gradient summation after quantization/sparsification, thus they are unable to utilize ring all-reduce for gradient aggregation. Meanwhile, the communication of Power-SGD will block the subsequent operations, causing trouble for wait-free back-propagation. Due to these limitations, it is non-trivial to integrate existing system optimizations into compression.

In this work, we propose a novel gradient compression method, called alternate compressed Power-SGD (ACP-SGD)111We provide open-source code in https://github.com/lzhangbv/acpsgd., to enable common system optimizations like S-SGD. The idea of ACP-SGD is derived from Power-SGD, which compresses each large gradient matrix into two low-rank matrices ( and ) using power iteration. Unlike Power-SGD, we do not calculate and aggregate and in one iteration, but compress the gradient into either and alternately. In other words, ACP-SGD only needs to compress and then aggregate the gradient once in each iteration, which implies that the gradient communication operations are additive and non-blocking like S-SGD. Another benefit of ACP-SGD is to reduce half gradient compression and aggregation costs compared to Power-SGD. In addition, we apply reuse and error feedback mechanisms to improve the approximation quality and incorporate the approximation error. By doing so, ACP-SGD can achieve model accuracy on par with S-SGD.

To optimize ACP-SGD, we use 1) ring all-reduce to aggregate or in each iteration, 2) wait-free back-propagation to overlap these all-reduce communication tasks with gradient computation and compression tasks, and 3) tensor fusion to merge small tensors to be communicated together to reduce the start-up cost. For tensor fusion, we use compressed buffer size to determine the fusion results on and , which has shown to be adaptive to the choice of ranks (compression ratios).

We conduct extensive experiments to validate the efficiency of our ACP-SGD with system optimizations, on 32 GPUs connected with 10GbE. The experimental results show that (1) ACP-SGD consistently outperforms S-SGD and Power-SGD in many setups, e.g., different models, batch sizes, compression ratios, and network bandwidths, (2) ACP-SGD achieves an average of and (up to and ) speedups over S-SGD, and Power-SGD, respectively, and (3) system optimization techniques integrated in ACP-SGD help achieve performance improvement over the naive implementation.

II Background and Related Work

In this section, we present the background and some related work of distributed S-SGD and gradient compression methods in DL training.

II-A S-SGD with System Optimizations

S-SGD. The S-SGD is one of the most popular algorithms used to accelerate DNN training with data parallelism [1, 2]. Each iteration of S-SGD consists of two main phases: gradient computation and gradient aggregation. During the gradient computation phase, the local gradient at each worker is computed via feed-forward and back-propagation (FF&BP) process, followed by the gradient aggregation. As shown in Fig. 1(a), local gradients are synchronously communicated (summed up) among all workers, so that each worker has the aggregated global gradient for the model update. Due to the large size of deep models, millions to billions of parameters are communicated among workers, leading to significant performance bottlenecks [3, 4, 5].

System Optimizations. To alleviate the communication overheads, on one hand, many system level optimization techniques [11] have been proposed to improve the training efficiency of S-SGD. In this paper, we introduce three commonly used system advances : ring all-reduce primitive [6], wait-free back-propagation [7], and tensor fusion [8, 25].

II-A1 Ring All-reduce

by building a ring-based topology for simultaneously communicating gradient chunks between any neighbor workers, ring all-reduce enjoys a very high bandwidth, and its communication complexity is linear to the number of parameters, no matter of the cluster size (as shown in Table II). On top of efficient implementations such as NCCL, ring all-reduce primitive has successfully become the major communication protocol for high performance distributed training in recent years [26, 27].

II-A2 Wait-free Back-propagation

due to the deep structure of DNNs, the gradients are calculated layer-wisely during the back-propagation. Instead of waiting for the completion of back-propagation to calculate all gradients, existing training frameworks support wait-free back-propagation (WFBP) [7], where gradient communication can start immediately when the gradient is ready. This enables gradient communication to be overlapped with gradient computation tasks of previous layers, hiding the time spent in communication. For example, in Fig. 1(b), when the gradient of layer is ready, it starts to aggregate immediately (i.e., ), so that communication task of can be overlapped with the gradient computation task of of layer .

II-A3 Tensor Fusion

naive WFBP by calling ring all-reduce operations layer-wisely can however lead to heavy communication overheads. This is because each all-reduce also has a start-up cost, which is unfortunately linear to the number of workers [10], making all-reducing small tensors separately very inefficient. For instance, on our 10GbE platform, all-reducing two KB tensors takes about ms, while all-reducing one KB tensor only requires ms. Based on this observation, existing training frameworks [8, 25] have equipped WFBP with tensor fusion (TF) [9, 12, 28], to merge small gradient tensors of nearby layers into one large tensor. With TF, fewer all-reduce operations are required to aggregate these merged large gradient tensors, which amortizes the start-up costs.

In this paper, we implement S-SGD with aforementioned system optimization techniques as the baseline.

II-B Gradient Compression Methods

Another line of work has proposed gradient compression methods to mitigate gradient communication costs [29]. Gradient compression methods aim to reduce the communication traffic by compressing the gradients, as demonstrated in Fig 1(c), in the following three categories.

II-B1 Quantization

Quantization methods reduce the number of bits of each element of the gradients. 1-bit SGD [14], Sign-SGD [17, 30], 1-bit Adam [5] quantize each float32 element of the gradient to 1-bit sign, by mapping the negative components to and the others to . TernGrad [15] and QSGD [16] quantize each element into three or more values via randomized rounding. However, quantization can at most reduce the communication volume by 32.

II-B2 Sparsification

To reduce communication traffic in a more aggressive way, sparsification methods select only a small subset of gradient elements (e.g., ), resulting in a sparse communication [19, 20, 21, 31]. Let be the number of selected gradients, Random- and Top- are two representatives to choose the random coordinates and largest coordinates (in magnitude), respectively, where Top- tends to achieve better convergence performance than Random- in practise [32]. For Top- SGD, one needs to communicate selected gradients and their indices [19, 33, 34].

II-B3 Low-rank Decomposition

Given a large gradient matrix , low-rank decomposition methods factorize into two low-rank matrices , where and . As the rank is smaller than and , communicating and is more efficient than communicating by a factor of . ATOMO [23] uses compute-expensive SVD to achieve low-rank decomposition, and Power-SGD [24] utilizes power iteration to calculate low-rank matrices with much cheaper decomposition costs, which makes Power-SGD relatively practical in real-world applications [35]. The procedure of Power-SGD is given in Algorithm 1. It involves two matrix multiplications, one orthogonalization, and two all-reduce operations to compute and aggregate and .

While gradient compression methods can dramatically reduce the communication traffic for gradient aggregation, they are non-trivial to be compatible with existing system optimization techniques that are oft-used in S-SGD, which adversely affects the practical scalability of gradient compression methods, as discussed in the next section.

Other Related Work. [29, 36] present quantitative evaluation of gradient compression methods, observing the unsatisfying performance of current gradient compression methods. To improve their scaling efficiency, HiPress [18] splits gradient aggregation into composable and pipelined computing and communication primitives, and ByteComp [37] attempts to search the optimal compression strategy with a decision tree. However, these works require much effort to develop new tools, without maximizing the potential of combining current system optimizations into gradient compression. For accelerating Top- SGD, sparse all-reduce algorithms [33, 22] and statistical-based top-k selection algorithms [38, 31] are specifically developed to alleviate the sparse communication and compression overheads, respectively, but unfortunately they are not compatible to each other [22].

III Characterizing Gradient Compression

In this section, we characterize the performance of three representative gradient compression methods, including Sign-SGD, Top- SGD, and Power-SGD.

III-A Experimental Settings

Methods: we compare the performance of different gradient compression methods with S-SGD which is well optimized atop off-the-shelf implementation of PyTorch-DDP [25]. Following the prior work [36], we choose three most scalable gradient compression methods in each category: Sign-SGD with majority vote [17], Top- SGD with multiple sampling [21] 222The exact Top- selection is very computationally inefficient in GPUs, instead, multiple sampling uses binary search to find a close top-k threshold. , and Power-SGD [24]. For Power-SGD, we use the all-reduce collective, while for Sign-SGD and Top- SGD, we use the all-gather collective [34]. The gradients are packed together to be compressed and communicated for better performance [36].

| Model | #Param. | Sign-SGD | Top- SGD | Power-SGD |

|---|---|---|---|---|

| ResNet-50 | 25.6 | 32 | 1000 | 67 (r=4) |

| ResNet-152 | 60.2 | 32 | 1000 | 53 (r=4) |

| BERT-Base | 110.1 | 32 | 1000 | 16 (r=32) |

| BERT-Large | 336.2 | 32 | 1000 | 21 (r=32) |

Models: following the work [36], we choose four popular DNN models: ResNet-50 [13], ResNet-152 [13], BERT-Base [39], and BERT-Large [39], with per-GPU batch size of 64, 32, 32, and 8, respectively. The input image size is set as for ResNets, and the input sequence length is set as for BERTs. The model statistics and compression ratios are given in Table I. We are optimistic to use compression ratio in Top- SGD. For Power-SGD, we use a rank for ResNets, and a higher rank for BERTs to achieve competitive results as suggested in [24].

Testbed: we conduct our experiments on a 32-GPU cluster of 8 nodes. Each node has 4 Nvidia RTX2080Ti GPUs (11GB RAM) connected by PCIe3.0x16. The interconnect between nodes is 10Gb/s Ethernet (10GbE). The common software includes PyTorch-1.12.1, CUDA-11.3, cuDNN-8.3.2, and NCCL-2.10.3.

Metric: we use the metric of average iteration time in running 120 iterations (exclude the first 20 iterations). Each gradient compression method mainly consists of gradient computation (i.e., FF&BP), gradient compression and decompression, and gradient communication costs. For the communication cost, we only measure its non-overlapped overhead.

III-B Performance Comparison

We report iteration time of S-SGD, Sign-SGD, Top- SGD, and Power-SGD in Fig. 2. It shows that Sign-SGD and Top- SGD usually perform worse than S-SGD, while they are able to compress the gradients by and times, respectively. For example, Sign-SGD and Top- SGD take and higher iteration time than S-SGD for training a ResNet-50 model. For training the largest BERT-Large model, the Top- SGD runs faster than S-SGD, while Sign-SGD runs out of memory due to its increased memory requirement. Among three gradient compression methods, Power-SGD has achieved the best performance over all four models. However, Power-SGD outperforms S-SGD only on large models (BERT-Base and BERT-Large), and it performs worse or closely than S-SGD on small models (ResNet-50 and ResNet-152). Overall, it is disappointing to find that three gradient compression methods, with to high compression ratios, fail to achieve speedup over S-SGD in many cases on 10GbE bandwidth, which is very common in public cloud clusters [40, 21].

To understand the performance bottleneck, we dive into time breakdowns of gradient compression methods. Specifically, we measure the FF&BP computation time, compression and decompress time, and non-overlapped communication time. The time breakdowns of ResNet-50 and BERT-Base are shown in Fig. 3. The results of ResNet-152 and BERT-Large are similar to ResNet-50 and BERT-Base, respectively.

First, the communication overhead of S-SGD on BERT-Base is much larger than that on ResNet-50, because BERT-Base has more parameters, as well as a higher communication-to-computation ratio than ResNet-50. Thus, the communication overhead cannot be well hidden on BERT-Base, leaving more speedup space for gradient compression methods. Second, Sign-SGD has cheaper compression cost than Top- SGD and Power-SGD, however, its communication cost is even higher than uncompressed S-SGD. We contribute it to the fact that Sign-SGD has at most compression ratio, but it requires all-gather for communication, which is less efficient than all-reduce used in S-SGD. On the other hand, even though Top- SGD uses all-gather for communication, its communication overhead is very low due to its up to compression ratio. However, Top- SGD suffers from the computation bottleneck of Top- operations, for example, its takes compression time to achieve communication speedup than Sign-SGD when training BERT-Base. It is partly because our multiple sampling top-k selection implemented atop PyTorch is less efficient than its highly-optimized CUDA version [21], which however is not publicly available. Third, Power-SGD achieves competitive communication performance by using all-reduce, while having a mild compression cost. For large model BERT-Base, Power-SGD is faster than S-SGD, showing it is one of the most promising gradient compression methods to outperform S-SGD.

In summary, existing gradient compression methods require non-negligible compression and/or communication overheads, resulting in poor scalability. It is worth noting that the S-SGD baseline has been well optimized in the system-level, while gradient compression methods are not. We will discuss the limitations of current gradient compression methods to integrate these techniques.

III-C Limitations of Gradient Compression

For S-SGD, the ring all-reduce primitive, wait-free back-propagation, and tensor fusion optimization techniques have been widely applied to accelerate distributed training, such as in Horovod [8] and PyTorch-DDP [25]. However, these techniques are not readily applied into aforementioned gradient compression methods.

We first summarize two good properties of S-SGD to enable these system optimization techniques as follows:

-

•

additive communication: the gradient communication operation in each DNN layer is to sum up the local gradient tensor from each workers. The gradient addition enables efficient aggregation with ring all-reduce primitive to distribute the reduced gradient result to all workers.

-

•

non-blocking communication: the gradient communication operation in each DNN layer is independent of backward operations, without blocking the subsequent back-propagation. It enables wait-free back-propagation and tensor fusion techniques very friendly to overlap communication with computation tasks.

However, these two properties are not both satisfied in current gradient compression methods. For Sign-SGD and Top- SGD, each DNN layer requires gradient calculation and gradient quantitation/sparsification tasks, followed by communicating the compressed gradient, so the communication will not block the subsequent back-propagation [41]. However, two quantized gradients cannot be simply added, e.g., the result of adding two values is out of the range in Sign-SGD. Two sparsified gradient tensors are not additive as well, because their selected gradient elements (i.e., Top- elements) have different coordinates. As a compromise, Top- SGD and Sign-SGD typically utilize the all-gather primitive [34] to collect quantized and/or sparsified gradient results. Specifically, the communication complexity comparison is given in Table II, showing that the communication complexity of Sign-SGD and Top- SGD (with all-gather) is linear to the number of workers, which is system inefficient compared to S-SGD (with all-reduce) [21, 36]. Sign-SGD lacks compatibility with all-reduce, as studied in Fig. 3, so its actual communication overhead (with compression ratio) is however higher than S-SGD when training BERT-Base.

| Complexity | S-SGD | Sign-SGD | Top- SGD | Power-SGD |

|---|---|---|---|---|

| Compress | – | |||

| Communicate |

On the other hand, the compressed gradients of Power-SGD are still dense matrices, which allows Power-SGD to use efficient ring all-reduce primitive to aggregate these smaller matrices (i.e., as shown in Table II). However, in Power-SGD, each DNN layer needs to calculate the gradient and low-rank , followed by aggregating , and then it needs to calculate based on the result of aggregated , followed by aggregating , as shown in Fig. 4(a). In other words, the communication of aggregating will block the subsequent operations of computing and aggregating , and causes trouble for overlapping communication tasks (i.e., aggregating and ) with back-propagation. As demonstrated in Fig. 4(b), Power-SGD with WFBP will overlap the whole gradient compression and communication process with back-propagation. By doing so, gradient compression and communication tasks (e.g., , , ) are performed in parallel with gradient computation tasks (e.g., ). However, as gradient compression and gradient computation are both compute intensive tasks (see Table II, the compress complexity of Power-SGD), they will compete for compute resources on the GPU, leading to performance slowdown [36], i.e., affecting computing tasks of and in Fig. 4(b). For example, Power-SGD with WFBP causes an overall of slowdown than Power-SGD without WFBP, when training ResNet-50 on one GPU (with only computation tasks).

Motivated by these limitations, we propose a novel gradient compression algorithm that can satisfy two good properties of S-SGD, providing opportunities for system optimizations.

IV Optimizing Gradient Compression

In this section, we present our proposed gradient compression algorithm, namely ACP-SGD, equipped with system optimization techniques, to improve the throughput of distributed DL training. ACP-SGD is an alternate compressed Power-SGD that enjoys the good property to use all-reduce to aggregate compressed gradients and it is able to utilize WFBP and TF as like in S-SGD to further improve its performance.

IV-A ACP-SGD: Alternate Compressed Power-SGD

Power-SGD. The Power-SGD algorithm [24] uses a single step of power iteration to decompose the large gradient matrix into two low-rank matrices as . As shown in Algorithm 1, it requires one right multiplication, one left multiplication, and an orthogonalization operation to compute low-rank matrices and . Besides, it calls two all-reduce operations to aggregate and , respectively.

In each power iteration, the previous result at the last step (i.e., ) will be re-used to update the low-rank matrices at the current step (i.e., and ). This query reuse trick helps Power-SGD approximate the stochastic gradient matrix at each step more accurately [24]. In the very beginning, is initialized from an i.i.d. standard normal distribution.

However, Power-SGD involves interleaving computing and communication tasks (i.e., compute aggregate compute aggregate ) in each DNN layer. That is, the communication tasks will block the subsequent back-propagation operations. And if we simply pipeline the gradient compression and aggregation tasks with gradient computing tasks, gradient compression and gradient computation will compete for compute resources on the GPU, leading to the performance interference and slowdown [36].

Alternate compression. To avoid this, we propose an alternate compressed Power-SGD (ACP-SGD) algorithm (Algorithm 1), which compresses the gradient into and alternately. It only requires computing (or ), followed by aggregating (or ) in each iteration. By doing so, the communication of ACP-SGD is not blocking anymore, leading to the benefits of overlapping computing and communication tasks like S-SGD. We defer the details of system optimization to the next subsection.

In each power iteration, ACP-SGD reuses the previous low-rank results ( or ) to approximate the stochastic gradient. Consider two consecutive iterations with gradients and , alternate compression is equal to performing a complete step of power iteration, except that it computes with but computes with . Under a small update stepsize, one can expect that is close to [24]. Thus, query reuse is helpful to ensure the approximation quality.

In addition, another benefit of ACP-SGD is that it can halve the gradient compression and communication costs compared to Power-SGD. That is, ACP-SGD performs one orthogonalization and one matrix multiplication with the computation complexity of , and one all-reduce primitive with the communication volume of .

Error-feedback. The error-feedback mechanism computes the difference between one worker’s gradient and the compressed gradient (i.e., error), and adds it back to the next gradient (i.e., feedback) [14, 16, 42, 24]. It has been shown to be crucial to improve the convergence performance of biased compression methods (i.e., compressed gradient is not equal to the original one in expectation), such as Sign-SGD, Top- SGD, and Power-SGD. Our ACP-SGD compression (derived from Power-SGD) is biased, therefore, it requires error-feedback to achieve good performance as shown in Algorithm 2.

Take computing and aggregating as an example, we apply the error-feedback into ACP-SGD as follows: 1) incorporate the previous local error into the local gradient before compression, i.e., , and 2) update the local error via before aggregation. ACP-SGD with error-feedback performs the layer-wise computation (including compute , orthogonalize , compute , and update ), followed by communication of aggregating , whose communication operation is still non-blocking. In the very beginning, is initialized as zeros, and low-rank matrices and are initialized randomly from standard normal distribution.

IV-B System Optimization of ACP-SGD

Wait-free Back-propagation (WFBP). Apart from using the ring all-reduce primitive for aggregating compressed tensors, the good property of non-blocking gradient communication allows ACP-SGD to apply WFBP to overlap communication tasks with computing tasks. As shown in Fig. 4(c), one can aggregate the compressed tensor of layer 2 (i.e., ) immediately after the tasks of calculating and compressing the gradient (i.e., and ) have been finished. By doing WFBP, the communication task of can be overlapped with the computing tasks of layer 1 (i.e., ). In the next iteration, WFBP can be equally applied to overlap the computing and communication tasks for . Meanwhile, ACP-SGD with WFBP only overlap all-reduce operations that are communication intensive and do not compete for the compute resources.

Tensor Fusion (TF). If the compressed tensors and are layer-wisely aggregated via ring all-reduce operations, the communication overheads are easily dominated by start-up costs [9]. This is because the compressed tensors in ACP-SGD are much smaller than uncompressed tensors in S-SGD. For example, in Fig. 5, we report the cumulative distribution functions (CDFs) of the number of parameters in two models. After low-rank decomposing the gradients of ResNet-50 (or BERT-Base), we observe that there is a increase in the proportion of small tensors having less than (or ) parameters.

Thus, all-reducing these small and compressed tensors separately can be very communication inefficient. To reduce start-up costs, one shall apply the TF technique to merge several small tensors to be communicated together via one all-reduce operation. For example, on our 10GbE platform, all-reducing uncompressed gradients of ResNet-50 takes ms, while all-reducing them together takes ms (with speedup). However, in ACP-SGD, all-reducing compressed tensors separately takes ms, and all-reducing them together only takes ms (with speedup). Although TF is very helpful to reduce start-up costs in ACP-SGD, fusing all tensors for one communication requires waiting the completion of gradient computation of BP, which will lose the opportunity of WFBP to hide communication overheads.

To enable both WFBP and TF, it is suggested to merge a subset of compressed tensors of nearby layers. For example, given a three-layer DNN, during the BP from layer to , one can merge and communicate the compressed gradients of layer and immediately when they are available, so that the fused communication task can be overlapped with the subsequent gradient computation task of layer .

Buffer Size. It is non-trivial to determine which tensors to be merged in order to minimize the communication overhead for different model and hardware configurations. In this work, we choose to allocate the buffer with a pre-defined buffer size, select the available tensors to fit in the buffer, and execute the all-reduce operation on the buffer. This has shown to be very effective in S-SGD [8, 25]. For example, the default buffer size in PyTorch-DDP is 25MB [25], and it will batch the gradient tensors of a ResNet-50 model (with MB parameters) into buffers. The selected tensors are copied to one buffer, and the buffer is aggregated as a whole when it is ready.

The buffer size is critical to control the trade-off between WFBP and TF. When the buffer size is too small, each tensor is communicated immediately to maximize the overlap (optimal WFBP), but it loses any opportunity of fusing tensors (no TF). When the buffer size is too large, all tensors will be merged together to be communicated at the end of BP (optimal TF), losing any change for overlapping (no WFBP).

However, the compressed tensors ( and ) to be communicated in ACP-SGD are smaller than the gradient tensors (), as shown in Fig. 5. This means the default buffer size (i.e., 25MB) used in S-SGD is not suitable for ACP-SGD anymore. For example, a ResNet-50 involves only MB and MB parameters in and , respectively, for ACP-SGD with a rank of . To reduce the trouble of tuning the hyper-parameter of buffer size for different models and ranks, we choose to configure the compressed buffer size by scaling the default buffer size with the compression rate. For instance, the compression rates of ACP-SGD in ResNet-50 are and for and , giving compressed buffer sizes of MB and MB, respectively. Thus, it will batch tensors into 4 buffers, and batch tensors into another 4 buffers for aggregation. Note that fusion results for and are different to each other. In this work, we use the default buffer size of 25MB, and the compressed buffer sizes are derived based on the compression rates. We notice that buffer size can be automatically tuned using e.g. Bayesian optimization technique [43], but we do not adopt it in this work as the default buffer size can generally provide nearly optimal performance as studied later in Fig. 10.

IV-C Implementation of ACP-SGD

We implement our ACP-SGD prototype atop PyTorch. It wraps the SGD optimizer to cope with the underling gradient compression and communication operations. We use native APIs, i.e., torch.linalg.qr and torch.distributed.all_reduce, to perform reduced QR decomposition for efficient orthogonalization (which is required for compression), and ring all-reduce for compressed gradient aggregation. The vector-shaped parameters (e.g., biases) requires no compression, while other parameters are reshaped into matrices for compression.

To support WFBP, we register a hook function for each learnable parameter tensor during the back-propagation, and the registered hook function will be called when each gradient is ready. In the hook function, we implement the ACP-SGD with EF to compress the gradient into and alternately. To support TF, the compressed tensors are copied into one of the buffers, and the ready buffer will be aggregated asynchronously via the all-reduce operation. At the end of back-propagation, we synchronize the all-reduce operations on the (or ) buffers.

V Evaluation

V-A Experimental Settings

For convergence experiments, we compare the performance of ACP-SGD with S-SGD and Power-SGD. We choose VGG-16 [44] and ResNet-18 [13] models on the Cifar-10 [45] dataset. We run each algorithm for epochs on GPUs. We use per-GPU batch size of and learning rate of , with a gradual warmup in the first epochs, and multiple learning rate decays (by a factor of ) at and epochs [1]. Each algorithm uses a momentum of , and Power-SGD and ACP-SGD use a rank of .

For time efficiency related experiments, we compare the iteration time of ACP-SGD with S-SGD and two versions of Power-SGD. The Power-SGD is the original implementation of [24], which packs gradients after back-propagation for compression and communication. The Power-SGD with WFBP and TF optimizations (i.e., Power-SGD*) is implemented on PyTorch’s communication hook [46], which overlaps gradient compression and communication tasks with back-propagation. For a fair comparison, two Power-SGD baselines use reduced QR decomposition for orthogonalization like ACP-SGD. Note that S-SGD, Power-SGD*, and ACP-SGD are all optimized in the system-level with WFBP and TF, using a buffer size of MB. We do not compare with other gradient compression methods, as we have shown that Power-SGD performed better than them in all cases (see §III). For other model and testbed settings, we follow the same configurations in §III-A.

V-B Convergence Verification

We first verify the convergence performance of our ACP-SGD on training two different models VGG-16 and ResNet-18 on the Cifar-10 dataset. The results are given in Fig. 6, showing that ACP-SGD can achieve very close accuracy results (i.e., for VGG-16, and for ResNet-18) compared with S-SGD and Power-SGD. We see that gradient compression methods Power-SGD and ACP-SGD converge slightly slower than S-SGD in the early training stage, which however does not affect their final achieved model accuracy.

We contribute the good convergence performance of ACP-SGD to the usage of EF and query reuse mechanisms. To validate it, we perform an ablation study by disabling one of them, and report their results in Fig. 7. It demonstrates that ACP-SGD without EF and reuse perform poorly, verifying that both of them are key components to ACP-SGD.

V-C Wall-clock Iteration Time

We compare the performance of our ACP-SGD with S-SGD [25], Power-SGD [24], and Power-SGD* (i.e., Power-SGD optimized with WFBP and TF) [46]. We report the iteration time (meanstd) in Table III, where the best results are in bold.

| Model | S-SGD | Power-SGD | Power-SGD* | ACP-SGD |

|---|---|---|---|---|

| ResNet-50 | ||||

| ResNet-152 | ||||

| BERT-Base | ||||

| BERT-Large |

From Table III, it is observed that ACP-SGD consistently outperforms other methods in all tested cases. Specifically, ACP-SGD achieves an average of , , and speedups over S-SGD, Power-SGD, and Power-SGD* methods, respectively. And ACP-SGD can provide a significant speedup over S-SGD, e.g., training BERT-Large up to faster than S-SGD. However, two gradient compression baselines (Power-SGD and Power-SGD*) do not guarantee improved performance over S-SGD, for example, they run about slower than S-SGD in training ResNet-50.

For Power-SGD* with system optimizations, it performs better than Power-SGD in small models (ResNet-50 and ResNet-152), but performs worse than Power-SGD in large models (BERT-Base and BERT-Large). This is because larger models typically require more compute-intensive gradient computation and compression tasks, and hence cause more severe performance interference for Power-SGD*. In contrast, ACP-SGD with system optimizations will not lead to resource competition, which in turns can achieve speedup than Power-SGD when training the BERT-Large model. The effects of system optimizations for Power-SGD and ACP-SGD are studied later in Fig. 9.

To further investigate the performance of ACP-SGD, we give the time breakdowns in Fig. 8 for two representative models (ResNet-50 and BERT-Base). It shows that ACP-SGD has very low gradient compression and communication overheads, and it achieves almost a linear scalability. Meanwhile, S-SGD can hide communication overhead very well for the small ResNet-50 model, but requires very high non-overlapped communication overhead for the large BERT-Base model. Power-SGD and Power-SGD* are useful to improve the training performance in large models, and our ACP-SGD moves a further step to improve the performance significantly.

V-D Benefits of System Optimizations

Next, we study the benefits of system optimizations (WFBP and TF) step-by-step for S-SGD, Power-SGD, and ACP-SGD. In the rest of the paper, Power-SGD refers to the implementation of Power-SGD* if it is not specified. We provide three variants for each method: Naive implementation without WFBP and TF, the one with only WFBP, and the one with WFBP and TF. We conduct experiments on two relatively large models ResNet-152 and BERT-Large, as they involve more communication optimizations than ResNet-50 and BERT-Base, respectively. The results are given in Fig. 9.

For S-SGD and ACP-SGD, it is observed that WFBP achieves about improvement over their Naive implementations. However, Power-SGD is not friendly to WFBP, since overlapping gradient computation and gradient compression causes compute resource competition, leading to an average of slowdown over its Naive implementation. Besides, we find that TF is able to provide a significant speedup for any method with WFBP. Specifically, WFBP with TF achieves an average of , and speedups than WFBP (without TF) for S-SGD, Power-SGD, and ACP-SGD, respectively. In particular, TF for Power-SGD achieves the most significant improvement among three methods, due to the collective effect of reduced start-up cost and alleviated performance interference. As for ACP-SGD, with the help of WFBP and TF, it can achieve up to speedup over its Naive implementation, which validates the importance of two system optimizations for our gradient compression algorithm.

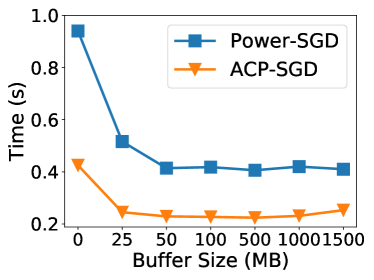

Sensitivity study of buffer size. When using two system optimizations, buffer size is critical to control the trade-off between WFBP and TF. To study the effect of buffer size, we change the buffer size from to MB when training the BERT-Large model (with MB parameters), that is, from optimal WFBP without TF to optimal TF without WFBP. We perform Power-SGD and ACP-SGD with two different ranks: 32 and 256, where rank 256 (with compression ratio) is suggested to avoid possible performance degradation in [35].

From Fig. 10, we see that ACP-SGD can consistently outperform Power-SGD under different buffer size and rank settings. And ACP-SGD is very robust to the value of buffer size under different ranks, for example, the default buffer size of MB remains a good candidate for ACP-SGD under different ranks (i.e., compression ratios). In particular, for rank=256, ACP-SGD with a buffer size of 25MB significantly outperforms two extreme cases: buffer size of 0MB (no TF) and buffer size of 1500MB (full TF), providing about improvement than both cases. We contribute it to that ACP-SGD can adaptively adjust the compressed buffer size for TF with different ranks, which can largely reduce the trouble for hyper-parameter tuning.

V-E Effect of Hyper-parameters

Effect of batch size. Here we compare ACP-SGD with S-SGD and Power-SGD on different batch sizes for training the ResNet-152 model. We vary the per-GPU batch size from 16 to 32 to fully utilize the GPU memory, and keep other configurations the same. We report results in Fig. 11(a). We find that ACP-SGD consistently outperforms S-SGD and Power-SGD under different batch sizes. For example, when using batch size 16, ACP-SGD provides and speedups than S-SGD and Power-SGD, respectively. Using batch size 32, it gives and speedups over S-SGD and Power-SGD, respectively. ACP-SGD achieves the best performance since it always has very small compression and communication costs for different batch sizes. Besides, for the three methods, using large batch sizes often provides performance improvement in terms of throughput. For instance, S-SGD, Power-SGD, and ACP-SGD achieve , , speedups on throughput by increasing batch size from to . Especially for S-SGD (with the heaviest communication), we observe that its non-overlapped communication overhead drops as the batch size increases. This is because increasing batch size leads to an increase in the computation-to-communication ratio, which in turns benefits S-SGD to overlap more communications with computations. Using larger batch sizes may further reduce the performance gap between S-SGD and ACP-SGD, but it is impractical due to the limited GPU memory capacity.

Effect of rank. The parameter of rank is used to control the compression ratio of Power-SGD and ACP-SGD. The lower the rank, the stronger the compression. To show the effect of rank, we vary the rank from 32 to 256 by a factor of 2 for training BERT-Large (with model dimension of 1024). We report the performance under different ranks in Fig. 11(b), showing that using large ranks leads to an increase in the compression and communication overheads for both Power-SGD and ACP-SGD. Therefore, Power-SGD and ACP-SGD have and higher total iteration time, respectively, when varying the rank from 32 to 256. However, compared to Power-SGD, ACP-SGD can overlap more communication overheads with gradient computation and compression overheads as the rank increases. For example, ACP-SGD provides speedup than Power-SGD when training using rank 32, and this speedup becomes for rank 256. In particular, when using rank 256, ACP-SGD has almost reduction in the non-overlapped communication time over Power-SGD. In addition, for training BERT-Large, ACP-SGD with rank 256 (i.e., with compression ratio) can still achieve about improvement over S-SGD.

V-F Effect of Cluster Settings

Effect of the number of GPUs. To study the scalability of ACP-SGD, we vary number of GPUs from 8 GPUs (2 nodes) to 64 GPUs (16 nodes). The interconnect between nodes is 10GbE. We use ring all-reduce for gradient aggregation.

We report the effect of the number of GPUs in Fig. 12. It shows that all three methods have high scalability as the number of GPUs increases. For instance, it is observed that S-SGD, Power-SGD, and ACP-SGD have only an average of , , and increase in per iteration time, respectively, when scaling from GPUs to GPUs. They can scale well because of using 1) ring all-reduce primitive with optimal bandwidth, whose communication complexity stays almost constant with increase in number of GPUs, and 2) tensor fusion to amortize start-up cost. While our testbed is up to 64 GPUs, the high scalability of ACP-SGD implies that it can always outperform S-SGD and Power-SGD using more GPUs.

Effect of network bandwidth. Here we validate the scalability of ACP-SGD on 32 GPUs, connected by different levels of networks, including inexpensive commodity 1Gb/s Ethernet (1GbE), ubiquitous data-center 10Gb/s Ethernet (10GbE), and expensive high-bandwidth 100Gb/s Infiniband (100GbIB).

The results are given in Fig. 13, showing that ACP-SGD performs better than S-SGD and Power-SGD under different networks. For slow 1GbE network, gradient compression methods Power-SGD and ACP-SGD can largely outperform S-SGD. In ResNet-50, Power-SGD and ACP-SGD achieves and speedups over S-SGD, and the speedups increase up to and in BERT-Base. For fast 100GbIB network, while the communication overhead of S-SGD can be well alleviated, ACP-SGD can provide about improvement over S-SGD when training BERT-Base.

VI Conclusion

In this paper, we studied gradient compression methods that mitigate communication bottleneck in distributed deep learning. We first evaluated the efficacy of three representative gradient compression methods, and it was observed that current compression methods performed poorly in many cases. To optimize the performance of gradient compression, we then proposed the alternate compressed Power-SGD (ACP-SGD) algorithm, that can 1) achieve model accuracy on par with S-SGD using error feedback and reuse mechanisms, and 2) outperform the efficiency of S-SGD and Power-SGD with several system optimization techniques. Finally, we conducted extensive experiments to show that our ACP-SGD can consistently outperform other popular baselines across many setups.

Acknowledgments

The research was supported in part by a RGC RIF grant under the contract R6021-20, RGC GRF grants under the contracts 16209120, 16200221 and 16207922, and the National Natural Science Foundation of China (NSFC) (Grant No. 62272122).

References

- [1] P. Goyal, P. Dollár, R. Girshick, P. Noordhuis, L. Wesolowski, A. Kyrola, A. Tulloch, Y. Jia, and K. He, “Accurate, large minibatch SGD: Training ImageNet in 1 hour,” arXiv preprint arXiv:1706.02677, 2017.

- [2] X. Jia, S. Song, W. He, Y. Wang, H. Rong, F. Zhou, L. Xie, Z. Guo, Y. Yang, L. Yu et al., “Highly scalable deep learning training system with mixed-precision: Training imagenet in four minutes,” arXiv preprint arXiv:1807.11205, 2018.

- [3] Y. Peng, Y. Zhu, Y. Chen, Y. Bao, B. Yi, C. Lan, C. Wu, and C. Guo, “A generic communication scheduler for distributed DNN training acceleration,” in Proc. of SOSP, 2019, pp. 16–29.

- [4] Z. Zhang, C. Chang, H. Lin, Y. Wang, R. Arora, and X. Jin, “Is network the bottleneck of distributed training?” Proceedings of the Workshop on Network Meets AI & ML, 2020.

- [5] H. Tang, S. Gan, A. A. Awan, S. Rajbhandari, C. Li, X. Lian, J. Liu, C. Zhang, and Y. He, “1-bit adam: Communication efficient large-scale training with adam’s convergence speed,” in Proc. of ICML, 2021.

- [6] Baidu, Baidu Ring All-Reduce, 2017. [Online]. Available: https://github.com/baidu-research/baidu-allreduce

- [7] H. Zhang, Z. Zheng, S. Xu, W. Dai, Q. Ho, X. Liang, Z. Hu, J. Wei, P. Xie, and E. P. Xing, “Poseidon: An efficient communication architecture for distributed deep learning on GPU clusters,” in Proc. of USENIX ATC, 2017, pp. 181–193.

- [8] A. Sergeev and M. Del Balso, “Horovod: fast and easy distributed deep learning in tensorflow,” arXiv preprint arXiv:1802.05799, 2018.

- [9] S. Shi, X. Chu, and B. Li, “MG-WFBP: Efficient data communication for distributed synchronous SGD algorithms,” in Proc. of INFOCOM, 2019, pp. 172–180.

- [10] R. Thakur, R. Rabenseifner, and W. Gropp, “Optimization of collective communication operations in MPICH,” The International Journal of High Performance Computing Applications, vol. 19, no. 1, pp. 49–66, 2005.

- [11] S. Shi, Z. Tang, X. Chu, C. Liu, W. Wang, and B. Li, “A quantitative survey of communication optimizations in distributed deep learning,” IEEE Network, pp. 1–8, 2020.

- [12] S. Shi, X. Chu, and B. Li, “MG-WFBP: Merging gradients wisely for efficient communication in distributed deep learning,” IEEE Transactions on Parallel and Distributed Systems, vol. 32, no. 8, pp. 1903–1917, 2021.

- [13] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proc. of CVPR, 2016, pp. 770–778.

- [14] F. Seide, H. Fu, J. Droppo, G. Li, and D. Yu, “1-bit stochastic gradient descent and its application to data-parallel distributed training of speech dnns,” in INTERSPEECH, 2014.

- [15] W. Wen, C. Xu, F. Yan, C. Wu, Y. Wang, Y. Chen, and H. Li, “Terngrad: Ternary gradients to reduce communication in distributed deep learning,” in Proc. of NeurIPS, 2017, pp. 1509–1519.

- [16] D. Alistarh, D. Grubic, J. Li, R. Tomioka, and M. Vojnović, “Qsgd: Communication-efficient sgd via gradient quantization and encoding,” in Proc. of NeurIPS, 2017, pp. 1709–1720.

- [17] J. Bernstein, Y.-X. Wang, K. Azizzadenesheli, and A. Anandkumar, “signsgd: compressed optimisation for non-convex problems,” in Proc. of ICML, vol. 80, 2018, pp. 559–568.

- [18] Y. Bai, C. Li, Q. Zhou, J. Yi, P. Gong, F. Yan, R. Chen, and Y. Xu, “Gradient compression supercharged high-performance data parallel dnn training,” Proc. of SOSP, 2021.

- [19] Y. Lin, S. Han, H. Mao, Y. Wang, and B. Dally, “Deep gradient compression: Reducing the communication bandwidth for distributed training,” in Proc. of ICLR, 2018.

- [20] P. Han, S. Wang, and K. K. Leung, “Adaptive gradient sparsification for efficient federated learning: An online learning approach,” Proc. of ICDCS, pp. 300–310, 2020.

- [21] S. Shi, X. Zhou, S. Song, X. Wang, Z. Zhu, X. Huang, X. Jiang, F. Zhou, Z. Guo, L. Xie et al., “Towards scalable distributed training of deep learning on public cloud clusters,” Proc. of MLSys, vol. 3, 2021.

- [22] S. Li and T. Hoefler, “Near-optimal sparse allreduce for distributed deep learning,” in Proc. of PPoPP, 2022, pp. 135–149.

- [23] H. Wang, S. Sievert, S. Liu, Z. Charles, D. S. Papailiopoulos, and S. J. Wright, “ATOMO: communication-efficient learning via atomic sparsification,” in Proc. of NeurIPS, 2018, pp. 9872–9883.

- [24] T. Vogels, S. P. Karimireddy, and M. Jaggi, “Powersgd: Practical low-rank gradient compression for distributed optimization,” Proc. of NeurIPS, vol. 32, 2019.

- [25] S. Li, Y. Zhao, R. Varma, O. Salpekar, P. Noordhuis, T. Li, A. Paszke, J. Smith, B. Vaughan, P. Damania, and S. Chintala, “Pytorch distributed,” Proc. of the VLDB Endowment, vol. 13, pp. 3005 – 3018, 2020.

- [26] Y. You, Z. Zhang, C.-J. Hsieh, J. Demmel, and K. Keutzer, “ImageNet training in minutes,” in Proc. of ICPP, 2018, pp. 1–10.

- [27] Y. You, J. Li, S. Reddi, J. Hseu, S. Kumar, S. Bhojanapalli, X. Song, J. Demmel, K. Keutzer, and C.-J. Hsieh, “Large batch optimization for deep learning: Training BERT in 76 minutes,” in Proc. of ICLR, 2020.

- [28] S. Shi, X. Chu, and B. Li, “Exploiting simultaneous communications to accelerate data parallel distributed deep learning,” in Proc. of INFOCOM, 2021.

- [29] H. Xu, C.-Y. Ho, A. M. Abdelmoniem, A. Dutta, E. H. Bergou, K. Karatsenidis, M. Canini, and P. Kalnis, “Grace: A compressed communication framework for distributed machine learning,” Proc. of ICDCS, pp. 561–572, 2021.

- [30] S. P. Karimireddy, Q. Rebjock, S. U. Stich, and M. Jaggi, “Error feedback fixes signsgd and other gradient compression schemes,” in Proc. of ICML, 2019.

- [31] A. M Abdelmoniem, A. Elzanaty, M.-S. Alouini, and M. Canini, “An efficient statistical-based gradient compression technique for distributed training systems,” Proc. of MLSys, vol. 3, pp. 297–322, 2021.

- [32] S. U. Stich, J.-B. Cordonnier, and M. Jaggi, “Sparsified sgd with memory,” in Proc. of NeurIPS, 2018.

- [33] S. Shi, Q. Wang, K. Zhao, Z. Tang, Y. Wang, X. Huang, and X. Chu, “A distributed synchronous SGD algorithm with global top-k sparsification for low bandwidth networks,” in Proc. of ICDCS, 2019, pp. 2238–2247.

- [34] C. Renggli, S. Ashkboos, M. Aghagolzadeh, D. Alistarh, and T. Hoefler, “SparCML: High-performance sparse communication for machine learning,” in Proc. of SC, 2019, pp. 1–15.

- [35] A. Ramesh, M. Pavlov, G. Goh, S. Gray, C. Voss, A. Radford, M. Chen, and I. Sutskever, “Zero-shot text-to-image generation,” in Proc. of ICML. PMLR, 2021, pp. 8821–8831.

- [36] S. Agarwal, H. Wang, S. Venkataraman, and D. S. Papailiopoulos, “On the utility of gradient compression in distributed training systems,” in Proc. of MLSys, 2022.

- [37] Z. Wang, H. Lin, Y. Zhu, and T. S. E. Ng, “Bytecomp: Revisiting gradient compression in distributed training,” in Arxiv abs/2205.14465, 2022.

- [38] S. Shi, X. Chu, K. C. Cheung, and S. See, “Understanding top-k sparsification in distributed deep learning,” arXiv preprint arXiv:1911.08772, 2019.

- [39] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “BERT: Pre-training of deep bidirectional transformers for language understanding,” in NAACL-HLT, 2019, pp. 4171–4186.

- [40] M. Cho, U. Finkler, D. S. Kung, and H. C. Hunter, “BlueConnect: Decomposing all-reduce for deep learning on heterogeneous network hierarchy,” in Proc. of MLSys, 2019.

- [41] S. Shi, Q. Wang, X. Chu, B. Li, Y. Qin, R. Liu, and X. Zhao, “Communication-efficient distributed deep learning with merged gradient sparsification on GPUs,” in Proc. of INFOCOM, 2020.

- [42] S. P. Karimireddy, Q. Rebjock, S. Stich, and M. Jaggi, “Error feedback fixes SignSGD and other gradient compression schemes,” in Proc. of ICML, 2019, pp. 3252–3261.

- [43] L. Zhang, S. Shi, X. Chu, W. Wang, B. Li, and C. Liu, “Decoupling the all-reduce primitive for accelerating distributed deep learning,” arXiv preprint arXiv:2302.12445, 2023.

- [44] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” in Proc. of ICLR, 2015.

- [45] A. Krizhevsky, “Learning multiple layers of features from tiny images,” Citeseer, 2009.

- [46] Y. Wang, A. Iankoulski, P. Damania, and S. Ranganathan, “Accelerating pytorch ddp by 10x with powersgd,” 2021.