Evaluate Geometry of Radiance Fields with Low-frequency Color Prior

Abstract

A radiance field is an effective representation of 3D scenes, which has been widely adopted in novel-view synthesis and 3D reconstruction. It is still an open and challenging problem to evaluate the geometry, i.e., the density field, as the ground-truth is almost impossible to obtain. One alternative indirect solution is to transform the density field into a point-cloud and compute its Chamfer Distance with the scanned ground-truth. However, many widely-used datasets have no point-cloud ground-truth since the scanning process along with the equipment is expensive and complicated. To this end, we propose a novel metric, named Inverse Mean Residual Color (IMRC), which can evaluate the geometry only with the observation images. Our key insight is that the better the geometry, the lower-frequency the computed color field. From this insight, given a reconstructed density field and observation images, we design a closed-form method to approximate the color field with low-frequency spherical harmonics, and compute the inverse mean residual color. Then the higher the IMRC, the better the geometry. Qualitative and quantitative experimental results verify the effectiveness of our proposed IMRC metric. We also benchmark several state-of-the-art methods using IMRC to promote future related research. Our code is available at https://github.com/qihangGH/IMRC.

Introduction

A radiance field has been popular for representing 3D objects or scenes since the Neural Radiance Field (NeRF) (Mildenhall et al. 2020) demonstrated that the performance of novel-view synthesis could be dramatically improved benefiting from it. From this path-breaking work, many works aimed at improving NeRF from several aspects, such as various challenging scenarios (Zhang et al. 2020; Martin-Brualla et al. 2020; Peng et al. 2021; Barron et al. 2021; Mildenhall et al. 2022), inference speed (Yu et al. 2021a; Hedman et al. 2021; Reiser et al. 2021; Garbin et al. 2021; Chen et al. 2022b), training efficiency (Deng, Barron, and Srinivasan 2020; Hu et al. 2022; Yu et al. 2022; Sun, Sun, and Chen 2022a, b; Müller et al. 2022; Chen et al. 2022a; Wang et al. 2023b, a), generalization ability (Yu et al. 2021b; Wang et al. 2021b; Chen et al. 2021), and so on. Some other works proposed restoring the surfaces via reconstructing the latent radiance field by inverse volume rendering (Wang et al. 2021a; Long et al. 2022; Oechsle, Peng, and Geiger 2021; Sun et al. 2022; Zhang et al. 2021; Wang et al. 2022; Wu et al. 2022a, 2023).

As the ground-truth of a radiance field is hard to obtain, we could not directly evaluate the reconstructed result. Alternatively, novel-view synthesis methods usually measure the similarity between the rendered image and its observed counterpart using image quality metrics such as peak signal-to-noise ratio (PSNR). As shown in the top two rows of Fig. 1, this metric may be enough to evaluate the synthetic images but not appropriate to evaluate the geometry.

Besides novel-view synthesis methods, some algorithms aim at improving the reconstructed geometry, e.g., distortion loss (Barron et al. 2022). And a lot of works exploit radiance fields for 3D reconstruction (Wang et al. 2021a; Long et al. 2022; Oechsle, Peng, and Geiger 2021; Sun et al. 2022; Zhang et al. 2021, 2022; Wu et al. 2022b; Wang et al. 2022; Wu et al. 2022a, 2023). These methods need a proper metric to quantitatively evaluate the geometry result. To this end, surface reconstruction methods, e.g., (Wang et al. 2021a; Long et al. 2022), usually transform the density field into a point-cloud using the marching cubes algorithm and compute Chamfer Distance (CD) with the scanned ground-truth. However, the scanning process along with the equipment is expensive and complicated. Therefore, few datasets have this ground-truth, and many widely-used ones do not.

To alleviate difficulties above, our key observation is that the color of any point in an ideal radiance field tends to be low-frequency if it is on the ground-truth surface of an object. This phenomenon is named as low-frequency color prior. Besides this, we adopt a closed-form method to compute the color field given the observation images and density field of a scene. We name the result as the computed color field to distinguish it from the reconstructed one given by the radiance field reconstruction method. Via exploring the computed color field, we find that the low-frequency color prior may be invalid for the points with inaccurate density values. Therefore, the density field could be evaluated by the mean frequency of a computed color field.

However, it is difficult to directly evaluate the color frequency even for a single point, not to mention the whole field. To this end, we approximate the color with low-frequency spherical harmonics and compute the residual color. Then, smaller mean residual color (MRC) implies lower color frequency, which indicates better geometry. Moreover, as the MRC is usually very small, it is not convenient for presentation and comparison. Thus, we transform it into decibel (dB) as PSNR does and name it as inverse mean residual color (IMRC). Then the higher the IMRC, the better the geometry. As demonstrated in Fig. 1, our IMRC metric could quantitatively evaluate the geometry correctly.

Our main contributions are concluded as follows: 1) We present the low-frequency color prior via analysing the ideal radiance field. We further find that this prior may be invalid for the computed color field if the density field is inaccurate. 2) To quantitatively evaluate the prior, we propose to approximate the color with low-frequency spherical harmonics and design the inverse mean residual color as a new metric. Qualitative and quantitative experimental results verify its effectiveness. 3) We further benchmark several state-of-the-art radiance field reconstruction methods using inverse mean residual color to promote future related research.

Related Work

We firstly review two types of radiance field reconstruction works, and then discuss the geometry metrics.

Novel View Synthesis. Mildenhall et al. (Mildenhall et al. 2020) proposed NeRF to synthesize novel-view images from posed images and dramatically improved the performance. They adopted a multilayer perceptron (MLP) to present the radiance field, which contains two components, i.e., the density field and color field. Each point in the density field has a scalar controlling how much the color is accumulated. Each point in the color field encodes the view-dependent color. The image can be rendered using the volume rendering algorithm (Max 1995). From this path-breaking work, there have been many works aiming at improving NeRF from several aspects, such as various challenging scenarios (Zhang et al. 2020; Martin-Brualla et al. 2020; Barron et al. 2021; Peng et al. 2021; Jain, Tancik, and Abbeel 2021; Mildenhall et al. 2022; Tancik et al. 2022; Weng et al. 2022; Zhao et al. 2022; Shao et al. 2022; Niemeyer et al. 2022), inference speed (Yu et al. 2021a; Hedman et al. 2021; Reiser et al. 2021; Garbin et al. 2021; Chen et al. 2022b), training efficiency (Deng, Barron, and Srinivasan 2020; Hu et al. 2022; Yu et al. 2022; Sun, Sun, and Chen 2022a, b; Müller et al. 2022; Chen et al. 2022a; Wang et al. 2023b, a), generalization ability (Yu et al. 2021b; Wang et al. 2021b; Chen et al. 2021), and so on.

Since these works focused on novel view synthesis tasks, to evaluate the results, they usually adopted image quality metrics, such as PSNR, SSIM, and LPIPS. These metrics are suitable for the task. However, it remains questionable how to evaluate the radiance field itself and whether these metrics are adequate. Evaluating the radiance field directly is challenging due to the difficulty in obtaining its ground-truth. Existing works have not well explored this problem.

Surface Reconstruction. Some other works proposed to restore the surfaces via reconstructing the latent radiance field by inverse volume rendering (Wang et al. 2021a; Oechsle, Peng, and Geiger 2021; Sun et al. 2022; Wu et al. 2022b; Zhang et al. 2022; Wu et al. 2022a, 2023). These methods usually obtain the surfaces via transforming the latent density field into an occupancy field or signed distance field (SDF). Then the Chamfer Distance between the points on the surfaces and the ground-truth can be computed to evaluate the results. However, it is expensive and complicated to set up the hardware environment and scan the ground-truth point-cloud. Moreover, during the transformation process, some information would be discarded as the transformed result only contains the iso-surface. Therefore, the CD metric may not be suitable to evaluate a density field. In contrast, IMRC is not suitable to evaluate the surfaces, but it could evaluate the density field.

Geometry Metrics. Due to the unavailable density field’s ground-truth, we can not use direct evaluation metrics, such as mean squared error or mean absolute error. Therefore, only indirect metrics could be considered. In previous Structure-from-Motion (SfM) systems, e.g., (Hartley and Zisserman 2004; Snavely, Seitz, and Szeliski 2006, 2008; Agarwal et al. 2009), the mean re-projection error is well-known and widely adopted as a metric and optimization objective to evaluate the reconstructed structures and motions. The mean re-projection error is defined as the mean distance between each observed image feature point and the re-projection point of its reconstructed 3D point. In other words, it could evaluate the reconstructed geometry without the corresponding ground-truth. Inspired by this, we argue that the geometry of a radiance field also could be evaluated only with observation images.

Low-frequency Color Prior

According to NeRF (Mildenhall et al. 2020), a radiance field is defined on a 3D space , which has two components, i.e., the density field and the color field . For a 3D point in the space , the density is a scalar that controls how much color of this point is accumulated. And the color encodes all view-dependent color information. For a specific 3D direction , the color vector is denoted as . To render the color observed from a ray with near and far bounds , we can follow the function

| (1) |

where is the camera original point, is the direction from to the corresponding pixel center, and the transmittance

| (2) |

To numerically estimate this continuous integral, NeRF resorts to quadrature (Max 1995). Then rendering equation is

| (3) |

where is the number of sample points along the ray,

| (4) |

and is the distance between the point and its previous neighbour . Specifically, .

To illustrated the low-frequency color prior, we construct an example scene in Fig. 2 (a), which consists of a cube. For better understanding, we also refer to the well-known related conception, i.e., light field, which describes the amount of light flowing in every direction through every point in space (Ng 2005). The difference between a light field and the color field of a radiance field is that, if a point has a zero density, its color in the color field could be arbitrary, but its color must be determined in the light field. If a point has a non-zero density, the colors in these two fields would be identical. Besides, a light field has no density information. As illustrated in Fig. 2 (b), the color frequency of a point tends to be lower when it approaches a surface. As the ground-truth density field should be the surface of the cube, and we do not care about the density values inside the surface as they do not affect the shape, a straight-forward hypothesis is that, for an ideal radiance field, the transmittance-density-weighted mean of color frequency should be small. This prior widely exists for most types of surface whether it is rough or glossy.

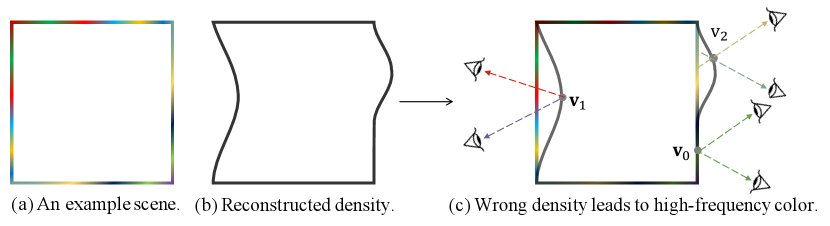

Moreover, we find that a wrong shape will break the low-frequency color prior. To illustrate this, we present an example scene in Fig. 3 (a), its reconstructed density field in Fig. 3 (b), and combine the scene and the reconstructed density field in Fig. 3 (c). Intuitively, as demonstrated in Fig. 3 (c), the observation color of a point from a ray should be identical with the color at the intersection of this ray and ground-truth surface. The color frequency of the points on wrong surfaces that lie inside () or outside () the ground-truth tends to be higher than that of the ground-truth surface (). Note that we use a 2D graph here for simplicity. All analyses and conclusions are applicable for 3D cases.

From these observations, if we have the color frequency field computed from a density field and observation images, the transmittance-density-weighted mean color frequency should be small for a good reconstructed density field. However, it is not easy to figure out the color frequency of even a single point, not to mention the frequency field. To alleviate this difficulty, we resort to the conjugate problem. According to the frequency domain transformation theory, if a signal is low-frequency, we could well approximate the signal with a group of low-frequency basis, and the residual would be quite small. Otherwise, the residual would be large. Therefore, the residual could be adopted to replace the frequency. Suppose that the residual color of a point at a direction is , and the transmittance of along is , the transmittance-density-weighted mean residual color

| (5) |

should be small for a good reconstructed density field. We use the product of transmittance and density, i.e., , as the residual color’s weight, which is the same as the color weight used in the rendering equation (1). In this way, only the points near the reconstructed surface are of the interest. The high color frequency of a point inside the reconstructed surface is not considered, as it does not affect the shape.

Inverse Mean Residual Color

In this section, we present the algorithm to compute the inverse mean residual color. The difficulty is that we could not use the color field reconstructed by the radiance field reconstruction method. If so, the geometry is not evaluated individually. To overcome this, we propose to utilize the observation images. For a 3D point, its projection points on all images are its observations. We can approximate its observations with a group of low-frequency basis, and the residual color could be obtained. We illustrate the whole pipeline in Fig. 4 and first introduce the observation acquisition process, then transform them into the low-frequency domain, and compute the IMRC at last.

Observation Acquisition

As illustrated in Fig. 4 (a), for any 3D point in the scene, given the reconstructed density field and cameras, we could obtain at most observations corresponding to the observed images. For a specific camera, we denote its projection matrix and original point as and respectively. Then the point could be projected on the image plane following the projection equation

| (6) |

where is the coordinate on an image. Its observed color as illustrated in Fig. 4 (b) could be calculated using bi-linear interpolation. Besides the color, we should also record the observation direction since the view-dependent color is a function of it. The observation direction is calculated as

| (7) |

where is the normalization operator.

It is important to note that, due to the occlusion, observations from different cameras should not be regarded equally. As illustrated in Fig. 4 (c), it is easy to understand that, if the accumulated density between the 3D point and camera original point is small, the corresponding observation confidence should be high, and vice versa. Coincidentally, this measurement is actually the transmittance between the 3D point and the camera. Therefore, we directly take the transmittance to measure the confidence of each observation, which could be calculated as

| (8) |

where . However, this continuous integral is not easy to be computed for a computer. Therefore, we numerically estimate it following NeRF (Mildenhall et al. 2020) as

| (9) |

where is the number of sample points, and is the -th point.

Overall, for any 3D point, we will have observations where each has three cells, i.e., color, direction, and confidence, which is denoted as . Specially, if the point is outside the viewing cone of the -th camera, the confidence should be set as .

Frequency Domain Transformation

In this subsection, we approximate the observations with a group of low-frequency basis. A natural choice is to use spherical harmonics (SH), since they have been widely adopted in computer graphics to represent low-frequency colors (Basri and Jacobs 2003; Ramamoorthi and Hanrahan 2001) and also have been successfully applied to radiance field reconstruction (Yu et al. 2021a, 2022). More details about SH could be found in (Green 2003). In the following, we briefly review the frequency coefficient estimation process following the well-known Monte Carlo method and present the difference under our situation. Note that, partial contents of this subsection have been presented in our previous work (Fang et al. 2023).

From the frequency domain transformation theory, given a signal defined on the unit sphere , we can obtain its coefficient of the basis function as

| (10) |

where is the degree and order of the SH basis, is the sampling probability of direction , is the expectation. As is sampled evenly on the unit sphere , . Based on this, given observations which are obtained in the previous subsection, we can estimate the coefficient as

| (11) |

However, this equation treats all observations equally, ignoring their varying confidence levels. To account for confidence , the equation can be revised as

| (12) |

Equation (12) above still has one problem. It implicitly assumes that the direction distributes uniformly on the unit sphere . Therefore, the estimation process of each is independent. In computer graphics, this could be guaranteed via uniformly sampling the direction. However, this is not true under our settings as we cannot control the observation directions at all. In practice, the observation directions usually are not uniformly distributed.

To overcome this, we should estimate the coefficients in turn and eliminate the influence of previous estimated coefficients. Then Eq. (12) could be further updated as

| (13) |

where

| (14) |

where if , else . In practice, the implementation involves incrementally increasing the degree and order while estimating the coefficients of the corresponding SH basis. Before each estimation, we remove the color encoded by previous basis functions and only use the residual color .

Note that, when the direction is uniformly distributed, Eq. 13 is equivalent to Eq. 11 since the basis functions are orthogonal to each other. Actually, Eq. 13 is the general equation to transform a discrete signal from the original domain to another one. If with the uniform distribution and orthogonal basis, we have , and Eq. 13 could be transformed to Eq. 11 because

| (15) |

and

| (16) |

However, in our case, the directions are not uniformly distributed and weighted, therefore Eq. 13 should be adopted. The detailed proof and comparison results could be found in our previous work (Fang et al. 2023).

Final Metric Computation

With the calculated residual color at all locations, the MRC could be obtained following Eq. 5. As it is a continuous integral, to numerically estimate it, we resort to quadrature (Max 1995) once again. Specifically, we discretize the 3D space into a volume, and denote all voxel vertexes as . Then the mean residual color could be estimated as

| (17) |

where is the final residual color at vertex as defined in Eq. (14), is the transmittance along the ray that connects and the -th camera center as defined in Eq. (4), and is the half voxel size.

Finally, we found that the MRC would be very small, which makes the value not convenient to be presented and compared. To this end, we further transform it into decibel as the PSNR metric does. As the theoretically maximal residual color is , the final metric could be obtained as

| (18) |

As the transformation includes an inverse operation, we denote it as IMRC shorted for Inverse Mean Residual Color. Then, the larger the IMRC, the better the geometry.

Experiments

In this section, we first verify the effectiveness of IMRC metric, then explore the influence of different settings. Finally, we benchmark several state-of-the-art methods.

Validation of IMRC

To exploit the effectiveness of the proposed IMRC metric, we conduct a series of experiments on the DTU dataset (Jensen et al. 2014) as it provides the point-cloud ground-truth. Though the point-cloud is essentially different from the radiance field, it still could be taken as a reference. As previous surface reconstruction works did, e.g., (Yariv et al. 2020; Wang et al. 2021a), we use the selected scenes. Each scene consists of or calibrated images and scanned point-cloud ground-truth. We select or images from the total or images respectively as testing ones, and use the remaining as training ones. The background of each image has been removed in advance.

For radiance field reconstruction methods, we select well-known methods, including JaxNeRF (Mildenhall et al. 2020; Deng, Barron, and Srinivasan 2020), Plenoxels (Yu et al. 2022), DVGO (Sun, Sun, and Chen 2022a), and TensoRF (Chen et al. 2022a), which have good performance. We adopt the code released by the authors to optimize each scene. Additionally, we add a transmittance loss to the JaxNeRF to supervise the transmittance of a ray according to the foreground mask. After the optimization process, we only export the density field obtained by each method. Notably, JaxNeRF (Mildenhall et al. 2020; Deng, Barron, and Srinivasan 2020) models a continuous density field while other methods model discrete ones. For a fair comparison, we discrete the density field of each method via sampling a density volume. Then the IMRC metric could be calculated. The experimental results are presented in Table 1, where the PSNR on testing images and the CD calculated with the point-cloud ground-truth are also reported. Specifically, we search the optimal threshold needed by the marching cubes algorithm for each method on each scene and report the lowest CD. Because the ground-truth of the density field is not available, we perform a user study inspired by previous work (Li et al. 2015). Specifically, experts evaluate and rank the scene geometry produced by the methods from (best) to (worst) based on the depth images, residual colors, and meshes. They are unknown about the metric values and the method that produces the results. We report the mean rank results in Table 1.

| Method | JaxNeRF | Plenoxels | DVGO | TensoRF |

|---|---|---|---|---|

| Scan24 | 29.19/1.415/20.11/1 | 27.72/1.592/14.96/3 | 27.96/2.028/17.45/2 | 28.76/2.144/11.73/4 |

| Scan37 | 26.77/1.502/15.43/1 | 26.22/1.813/12.99/3 | 26.47/1.466/14.05/2 | 26.00/1.778/ 8.86/4 |

| Scan40 | 29.43/1.495/20.74/1 | 28.72/2.030/15.75/3 | 28.38/2.017/17.43/2 | 28.85/2.381/13.39/4 |

| Scan55 | 31.06/0.637/19.55/1 | 30.50/0.892/15.55/3 | 31.66/1.247/19.37/2 | 29.57/1.765/ 9.78/4 |

| Scan63 | 34.43/1.689/20.68/1 | 34.09/1.931/17.63/3 | 35.42/1.574/19.94/2 | 34.69/2.216/10.92/4 |

| Scan65 | 29.90/1.212/17.59/1.3 | 31.16/1.548/14.55/3 | 30.63/1.482/17.32/1.7 | 30.63/1.947/10.78/4 |

| Scan69 | 29.25/1.306/19.07/1 | 30.15/2.346/15.40/3 | 29.67/1.489/17.81/2 | 29.43/2.254/ 7.91/4 |

| Scan83 | 35.28/1.478/15.98/1 | 37.00/2.245/16.63/2.7 | 36.14/1.610/17.95/2.3 | 35.96/2.712/ 7.95/4 |

| Scan97 | 28.02/1.600/17.10/1 | 29.56/2.809/15.29/3 | 29.11/1.629/15.85/2 | 29.25/2.278/ 9.06/4 |

| Scan105 | 31.86/1.136/19.99/1 | 33.36/1.907/16.67/3 | 33.07/1.352/18.85/2 | 32.92/2.219/ 9.19/4 |

| Scan106 | 33.97/0.903/19.70/1 | 33.82/2.317/16.37/3 | 34.70/1.595/19.29/2 | 33.61/2.524/ 9.01/4 |

| Scan110 | 32.96/1.842/16.00/1.7 | 32.49/3.349/14.86/3 | 34.00/1.770/16.13/1.3 | 33.67/3.396/ 7.99/4 |

| Scan114 | 29.44/1.091/18.89/1 | 30.52/1.657/16.53/3 | 30.47/1.481/18.18/2 | 29.79/2.024/ 9.54/4 |

| Scan118 | 36.68/0.998/21.77/1.7 | 36.10/2.719/17.82/3 | 37.58/1.297/22.57/1.3 | 35.76/2.436/11.34/4 |

| Scan122 | 35.06/0.761/18.00/2 | 36.42/2.349/17.15 /3 | 37.05/1.244/20.62/1 | 36.18/2.258/ 9.60/4 |

| Average | 31.55/1.271/18.71/1.18 | 31.86/2.100/15.88/2.98 | 32.15/1.552/18.19/1.84 | 31.67/2.289/ 9.80/4 |

In most cases, IMRC, CD, and UserRank agree with each other. The average results (last row) in Table 1 show that they rank methods consistently. We showcase three CD/IMRC/UserRank consistent cases in Fig. 5. They also indicate that PSNR cannot well evaluate a density field. Besides, there are 2 IMRC/UserRank conflict pairs and 11 CD/UserRank conflict pairs out of all pairs, respectively. In the left panel of Fig. 6, one IMRC/UserRank conflict case is shown. The density field of the JaxNeRF is not sharp, and its surface is surrounded by many low density floaters. In contrast, the DVGO has a distinct floater as highlighted by the residual color. Although the IMRC of DVGO is better, such a distinct floater may lead to worse UserRank. The CD/UserRank conflicts mainly stem from two reasons. First, a NeRF model does not guarantee that its surface points all have the same density value. Therefore, by extracting one iso-surface at a typical density level with the marching cubes algorithm, some surface information and near surface floaters that have different density values are inevitably discarded. As a result, the CD for such a surface may not well reflect real geometry. As shown by the middle two columns of Fig. 6. The JaxNeRF produces many low density floaters around the surface, as highlighted by its residual color, while these floaters are missing after applying the marching cubes algorithm. On the other hand, the iso-surface of DVGO is inferior because some of its surface points that have different density values are discarded, which leads to poor CD. Another reason is that an object mask is applied in CD calculation, so points that lie outside the mask are neglected. As shown in the last two columns of Fig. 6, the TensoRF’s mesh is qualitatively worse than Plenoxels’, but lots of floaters in it are out of the mask. As a result, the CD of TensoRF is erroneously better than that of Plenoxels. The IMRC metric successfully evaluate the last two scenes and is consistent with UserRank. We visualize all the rest conflict cases in the Appendix. They can be analyzed similarly.

Overall, the qualitative and quantitative results verify the effectiveness of IMRC to evaluate a density field. On one hand, PSNR could only evaluate the radiance field as a whole. On the other hand, the calculation of CD suffers from a loss of information that only an iso-surface at one specific density level is considered. The IMRC metric, in contrast, is more suitable to evaluate the whole field, rather than only a surface, as it can deal with density values at all locations. It is also more consistent with UserRank. Moreover, the CD metric needs the point-cloud ground-truth, which also limits its application as this data is complicated and expensive to obtain and usually is not available.

Different Settings of IMRC

In this subsection, we exploit the influences of the parameters defined during IMRC computation. There are only parameters that are needed to be configured manually, i.e., the SH degree and the volume resolution.

| SH Degree | ||||

|---|---|---|---|---|

| SH Basis # | ||||

| JaxNeRF | 15.93 | 17.18 | 18.71 | 19.57 |

| Plenoxels | 13.81 | 14.78 | 15.88 | 16.52 |

| DVGO | 15.66 | 16.80 | 18.19 | 18.97 |

| TensoRF | 9.00 | 9.40 | 9.80 | 10.06 |

| Average | 13.60 | 14.54 | 15.64 | 16.28 |

The first and foremost parameter is the degree of SH used to compute the residual color for each point. In Table 2, we present the IMRC results of the selected methods on the DTU dataset with different SH degrees. We can see that a higher SH degree leads to a better IMRC result. This is reasonable since the observations could be approximated better with more SH basis functions. However, we could not set a very large SH degree, because, if so, the residual color will be very small at all positions in the field, which makes the metric indistinct to evaluate the geometry. We also observe that the IMRC increases quickly when the SH degree is less than . And the increased margin becomes smaller starting from degree . Therefore, we set the SH degree as in all other experiments. This setting is also usually used in previous works such as (Yu et al. 2022; Chen et al. 2022a). Notably, the relative ranks of all methods remain unchanged with different SH degrees. This indicates that the IMRC is somehow stable with different SH degrees.

Notably, when the number of observations is small, we should decrease the SH degree. This will happen for the methods aiming at reconstructing the radiance field from sparse-views, such as (Niemeyer et al. 2022). According to the frequency domain transformation theory, the SH degree should be smaller than the number of observations.

The second parameter is the density volume’s resolution. As presented in Table 3, with the increasing resolution, the IMRC metric is slightly better. The increase may come from the more accurate density field with higher space resolution. We also observe that the relative ranks of all methods remain unchanged at different resolutions. This indicates that the IMRC is also somehow stable with different resolutions. As the average IMRC does not change from resolution to , we adopt in other experiments.

| Resolution | |||||

|---|---|---|---|---|---|

| JaxNeRF | 17.92 | 18.42 | 18.61 | 18.71 | 18.54 |

| Plenoxels | 15.96 | 16.04 | 16.04 | 15.88 | 16.00 |

| DVGO | 17.05 | 17.72 | 18.14 | 18.19 | 18.27 |

| TensoRF | 9.45 | 9.62 | 9.75 | 9.80 | 9.88 |

| Average | 15.09 | 15.45 | 15.64 | 15.64 | 15.67 |

Benchmarking State-of-the-arts

Besides the DTU dataset, we also benchmark the state-of-the-art methods on NeRF Synthetic (Mildenhall et al. 2020) and LLFF (Mildenhall et al. 2019) datasets. For the NeRF Synthetic dataset, we use the released code and train on the black background as done in the DTU dataset. For the LLFF dataset, we directly use the models released by the authors. As there is no point-cloud ground-truth on NeRF Synthetic and LLFF datasets, we could not compute the CD metric and only present the PSNR and IMRC results in Table 4 and Table 5, respectively. We also visualize some results in Fig. 7.

| Method | JaxNeRF | Plenoxels | DVGO | TensoRF |

|---|---|---|---|---|

| Chair | 30.28 / 19.21 | 30.66 / 17.60 | 33.79 / 21.59 | 30.46 / 19.10 |

| Drums | 24.16 / 13.41 | 24.26 / 12.65 | 25.69 / 13.38 | 24.51 / 14.32 |

| Ficus | 27.99 / 14.58 | 28.31 / 14.57 | 33.27 / 18.29 | 28.56 / 11.59 |

| Hotdog | 35.49 / 4.59 | 35.11 / 20.02 | 36.90 / 21.16 | 35.43 / 22.41 |

| Lego | 31.41 / 3.73 | 30.87 / 17.54 | 34.83 / 19.27 | 31.83 / 19.21 |

| Materials | 29.67 / 16.09 | 28.39 / 15.15 | 29.94 / 17.16 | 28.91 / 12.63 |

| Mic | 31.22 / 2.15 | 32.50 / 16.47 | 28.41 / 8.09 | 32.88 / 14.41 |

| Ship | 28.76 / 18.77 | 28.52 / 18.37 | 29.82 / 19.08 | 28.68 / 19.24 |

| Average | 29.87 / 11.57 | 29.83 / 16.55 | 31.58 / 17.25 | 30.16 / 16.61 |

| Method | JaxNeRF | Plenoxels | DVGO | TensoRF |

|---|---|---|---|---|

| Fern | 24.83 / 21.58 | 25.47 / 19.07 | 25.07 / 20.04 | 25.31 / 19.68 |

| Flower | 28.07 / 23.03 | 27.83 / 19.46 | 27.61 / 21.42 | 28.22 / 21.15 |

| Fortress | 31.76 / 26.97 | 31.09 / 22.32 | 30.38 / 24.82 | 31.14 / 24.57 |

| Horns | 28.10 / 23.58 | 27.60 / 19.09 | 27.55 / 21.20 | 27.64 / 21.80 |

| Leaves | 21.23 / 17.53 | 21.43 / 16.32 | 21.04 / 16.43 | 21.34 / 16.58 |

| Orchids | 20.27 / 18.06 | 20.26 / 16.03 | 20.38 / 16.59 | 20.02 / 16.37 |

| Room | 33.04 / 26.08 | 30.22 / 21.64 | 31.45 / 24.64 | 31.80 / 25.19 |

| Trex | 27.42 / 24.22 | 26.49 / 19.23 | 27.17 / 20.90 | 26.61 / 21.23 |

| Average | 26.84 / 22.63 | 26.30 / 19.15 | 26.33 / 20.76 | 26.51 / 20.82 |

Benefiting from the IMRC metric, we could quantitatively but not only qualitatively evaluate how a regularization loss improves the density field. Specifically, we train DVGO (Sun, Sun, and Chen 2022a) with distortion loss (Barron et al. 2022), which could improve the density field qualitatively by observations. The results are reported in Table 6. With distortion loss, the better IMRC for all the three datasets verified the effectiveness of both the distortion loss and the metric itself.

| Dataset | DTU | NeRF Synthetic | LLFF |

|---|---|---|---|

| DVGO | 32.15 / 18.19 | 31.58 / 17.25 | 26.23 / 18.87 |

| DVGO + Distortion Loss | 32.20 / 18.47 | 31.50 / 17.94 | 26.33 / 20.76 |

Discussion and Conclusion

We first discuss the limitations of IMRC and then conclude this paper in the following.

Limitations. There are also some degrade situations where the proposed IMRC metric may fail. For example, the low-frequency color prior no longer holds for surfaces that are purely specular or exhibit high levels of specular reflection, such as mirrors, leading to the failure of IMRC. Another degrade situation is that, if the whole density field is empty except for a few points that have been observed only by several cameras, the IMRC metric will be high. However, this density field is really bad. This type of degrade situations also exist for other geometry metrics, such as the re-projection error. Therefore, when using the proposed IMRC metric, it should be combined with other metrics, such as PSNR and SSIM. Only in this way, we can evaluate the results with less bias.

Conclusion. In this paper, we aim at evaluating the geometry information of a radiance field without ground-truth. This problem is important as the radiance field has been widely used not only on novel view synthesis but also 3D reconstruction tasks. For 3D reconstruction tasks, the geometry information is essential. However, due to the unavailable ground-truth and unsuitable metrics, there is no proper metric to quantitatively evaluate the geometry. To alleviate this dilemma, we propose the Inverse Mean Residual Color (IMRC) metric based on our insights on the properties of the radiance field. Qualitative and quantitative experimental results verify the effectiveness of the IMRC metric. We also benchmark state-of-the-art methods on datasets and hope this work could promote future related research.

Acknowledgments

This work was supported in part by the National Key Research and Development Program of China under Grant 2021YFB3301504, in part by the National Natural Science Foundation of China under Grant U19B2029, Grant U1909204, and Grant 92267103, in part by the Guangdong Basic and Applied Basic Research Foundation under Grant 2021B1515140034, and in part by the CAS STS Dongguan Joint Project under Grant 20211600200022.

References

- Agarwal et al. (2009) Agarwal, S.; Snavely, N.; Simon, I.; Seitz, S. M.; and Szeliski, R. 2009. Building Rome in a Day. In ICCV, 72–79.

- Barron et al. (2021) Barron, J. T.; Mildenhall, B.; Tancik, M.; Hedman, P.; Martin-Brualla, R.; and Srinivasan, P. P. 2021. Mip-nerf: A multiscale representation for anti-aliasing neural radiance fields. In ICCV, 5855–5864.

- Barron et al. (2022) Barron, J. T.; Mildenhall, B.; Verbin, D.; Srinivasan, P. P.; and Hedman, P. 2022. Mip-nerf 360: Unbounded anti-aliased neural radiance fields. In CVPR, 5470–5479.

- Basri and Jacobs (2003) Basri, R.; and Jacobs, D. W. 2003. Lambertian Reflectance and Linear Subspaces. IEEE TPAMI, 25(2): 218–233.

- Chen et al. (2022a) Chen, A.; Xu, Z.; Geiger, A.; Yu, J.; and Su, H. 2022a. TensoRF: Tensorial Radiance Fields. In ECCV.

- Chen et al. (2021) Chen, A.; Xu, Z.; Zhao, F.; Zhang, X.; Xiang, F.; Yu, J.; and Su, H. 2021. MVSNeRF: Fast Generalizable Radiance Field Reconstruction From Multi-view Stereo. In ICCV, 14124–14133.

- Chen et al. (2022b) Chen, Z.; Funkhouser, T.; Hedman, P.; and Tagliasacchi, A. 2022b. MobileNeRF: Exploiting the Polygon Rasterization Pipeline for Efficient Neural Field Rendering on Mobile Architectures. arXiv preprint arXiv:2208.00277.

- Deng, Barron, and Srinivasan (2020) Deng, B.; Barron, J. T.; and Srinivasan, P. P. 2020. JaxNeRF: an efficient JAX implementation of NeRF.

- Fang et al. (2023) Fang, Q.; Song, Y.; Li, K.; and Bo, L. 2023. Reducing Shape-Radiance Ambiguity in Radiance Fields with a Closed-Form Color Estimation Method. In NIPS.

- Garbin et al. (2021) Garbin, S. J.; Kowalski, M.; Johnson, M.; Shotton, J.; and Valentin, J. 2021. FastNeRF: High-fidelity Neural Rendering at 200fps. In ICCV, 14346–14355.

- Green (2003) Green, R. 2003. Spherical harmonic lighting: The gritty details. In Archives of the game developers conference, volume 56, 4.

- Hartley and Zisserman (2004) Hartley, R. I.; and Zisserman, A. 2004. Multiple View Geometry in Computer Vision. Cambridge University Press, ISBN: 0521540518, second edition.

- Hedman et al. (2021) Hedman, P.; Srinivasan, P. P.; Mildenhall, B.; Barron, J. T.; and Debevec, P. 2021. Baking Neural Radiance Fields for Real-Time View Synthesis. In ICCV.

- Hu et al. (2022) Hu, T.; Liu, S.; Chen, Y.; Shen, T.; and Jia, J. 2022. EfficientNeRF - Efficient Neural Radiance Fields. In CVPR, 12892–12901.

- Jain, Tancik, and Abbeel (2021) Jain, A.; Tancik, M.; and Abbeel, P. 2021. Putting Nerf on A Diet: Semantically Consistent Few-shot View Synthesis. In ICCV, 5885–5894.

- Jensen et al. (2014) Jensen, R.; Dahl, A.; Vogiatzis, G.; Tola, E.; and Aanæs, H. 2014. Large scale multi-view stereopsis evaluation. In CVPR, 406–413. IEEE.

- Li et al. (2015) Li, J.; Xia, C.; Song, Y.; Fang, S.; and Chen, X. 2015. A Data-Driven Metric for Comprehensive Evaluation of Saliency Models. In ICCV.

- Long et al. (2022) Long, X.; Lin, C.; Wang, P.; Komura, T.; and Wang, W. 2022. SparseNeuS: Fast Generalizable Neural Surface Reconstruction from Sparse views. In ECCV.

- Martin-Brualla et al. (2020) Martin-Brualla, R.; Radwan, N.; Sajjadi, M. S. M.; Barron, J. T.; Dosovitskiy, A.; and Duckworth, D. 2020. NeRF in the Wild: Neural Radiance Fields for Unconstrained Photo Collections. In CVPR.

- Max (1995) Max, N. 1995. Optical Models for Direct Volume Rendering. IEEE TVCG, 1(2): 99–108.

- Mildenhall et al. (2022) Mildenhall, B.; Hedman, P.; Martin-Brualla, R.; Srinivasan, P. P.; and Barron, J. T. 2022. Nerf in the dark: High dynamic range view synthesis from noisy raw images. In CVPR, 16190–16199.

- Mildenhall et al. (2019) Mildenhall, B.; Srinivasan, P. P.; Ortiz-Cayon, R.; Kalantari, N. K.; Ramamoorthi, R.; Ng, R.; and Kar, A. 2019. Local Light Field Fusion: Practical View Synthesis with Prescriptive Sampling Guidelines. ACM Trans. Graph., 38(4).

- Mildenhall et al. (2020) Mildenhall, B.; Srinivasan, P. P.; Tancik, M.; Barron, J. T.; Ramamoorthi, R.; and Ng, R. 2020. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. In ECCV.

- Müller et al. (2022) Müller, T.; Evans, A.; Schied, C.; and Keller, A. 2022. Instant Neural Graphics Primitives With A Multiresolution Hash Encoding. arXiv preprint arXiv:2201.05989.

- Ng (2005) Ng, R. 2005. Fourier slice photography. In SIGGRAPH, 735–744.

- Niemeyer et al. (2022) Niemeyer, M.; Barron, J. T.; Mildenhall, B.; Sajjadi, M. S. M.; Geiger, A.; and Radwan, N. 2022. RegNeRF: Regularizing Neural Radiance Fields for View Synthesis from Sparse Inputs. In CVPR.

- Oechsle, Peng, and Geiger (2021) Oechsle, M.; Peng, S.; and Geiger, A. 2021. UNISURF: Unifying Neural Implicit Surfaces and Radiance Fields for Multi-View Reconstruction. In ICCV, 5569–5579.

- Peng et al. (2021) Peng, S.; Dong, J.; Wang, Q.; Zhang, S.; Shuai, Q.; Zhou, X.; and Bao, H. 2021. Animatable Neural Radiance Fields for Modeling Dynamic Human Bodies. In ICCV, 14314–14323.

- Ramamoorthi and Hanrahan (2001) Ramamoorthi, R.; and Hanrahan, P. 2001. On the Relationship Between Radiance and Irradiance: Determining the Illumination From Images of a Convex Lambertian Object. JOSA A, 18(10): 2448–2459.

- Reiser et al. (2021) Reiser, C.; Peng, S.; Liao, Y.; and Geiger, A. 2021. KiloNeRF: Speeding up Neural Radiance Fields with Thousands of Tiny MLPs. In ICCV.

- Shao et al. (2022) Shao, R.; Zhang, H.; Zhang, H.; Chen, M.; Cao, Y.; Yu, T.; and Liu, Y. 2022. DoubleField: Bridging the Neural Surface and Radiance Fields for High-fidelity Human Reconstruction and Rendering. In CVPR.

- Snavely, Seitz, and Szeliski (2006) Snavely, N.; Seitz, S. M.; and Szeliski, R. 2006. Photo Tourism: Exploring Photo Collections in 3D. ACM TOG, 25(3): 835–846.

- Snavely, Seitz, and Szeliski (2008) Snavely, N.; Seitz, S. M.; and Szeliski, R. 2008. Modeling the World from Internet Photo Collections. IJCV, 80(2): 189–210.

- Sun, Sun, and Chen (2022a) Sun, C.; Sun, M.; and Chen, H.-T. 2022a. Direct Voxel Grid Optimization: Super-fast Convergence for Radiance Fields Reconstruction. In CVPR.

- Sun, Sun, and Chen (2022b) Sun, C.; Sun, M.; and Chen, H.-T. 2022b. Improved Direct Voxel Grid Optimization for Radiance Fields Reconstruction. arXiv preprint arXiv:2206.05085.

- Sun et al. (2022) Sun, J.; Chen, X.; Wang, Q.; Li, Z.; Averbuch-Elor, H.; Zhou, X.; and Snavely, N. 2022. Neural 3D Reconstruction in the Wild. In SIGGRAPH.

- Tancik et al. (2022) Tancik, M.; Casser, V.; Yan, X.; Pradhan, S.; Mildenhall, B.; Srinivasan, P. P.; Barron, J. T.; and Kretzschmar, H. 2022. Block-NeRF: Scalable Large Scene Neural View Synthesis. In CVPR.

- Wang et al. (2023a) Wang, F.; Chen, Z.; Wang, G.; Song, Y.; and Liu, H. 2023a. Masked Space-Time Hash Encoding for Efficient Dynamic Scene Reconstruction. In NIPS.

- Wang et al. (2023b) Wang, F.; Tan, S.; Li, X.; Tian, Z.; Song, Y.; and Liu, H. 2023b. Mixed Neural Voxels for Fast Multi-view Video Synthesis. In ICCV, 19706–19716.

- Wang et al. (2021a) Wang, P.; Liu, L.; Liu, Y.; Theobalt, C.; Komura, T.; and Wang, W. 2021a. NeuS: Learning Neural Implicit Surfaces by Volume Rendering for Multi-view Reconstruction. In NeurIPS.

- Wang et al. (2021b) Wang, Q.; Wang, Z.; Genova, K.; Srinivasan, P. P.; Zhou, H.; Barron, J. T.; Martin-Brualla, R.; Snavely, N.; and Funkhouser, T. 2021b. IBRNet: Learning Multi-view Image-based Rendering. In CVPR, 4690–4699.

- Wang et al. (2022) Wang, Y.; Han, Q.; Habermann, M.; Daniilidis, K.; Theobalt, C.; and Liu, L. 2022. NeuS2: Fast Learning of Neural Implicit Surfaces for Multi-view Reconstruction. arXiv.

- Weng et al. (2022) Weng, C.-Y.; Curless, B.; Srinivasan, P. P.; Barron, J. T.; and Kemelmacher-Shlizerman, I. 2022. HumanNeRF: Free-viewpoint Rendering of Moving People from Monocular Video. In CVPR.

- Wu et al. (2022a) Wu, Q.; Liu, X.; Chen, Y.; Li, K.; Zheng, C.; Cai, J.; and Zheng, J. 2022a. Object-Compositional Neural Implicit Surfaces. In ECCV.

- Wu et al. (2023) Wu, Q.; Wang, K.; Li, K.; Zheng, J.; and Cai, J. 2023. ObjectSDF++: Improved Object-Compositional Neural Implicit Surfaces. In ICCV.

- Wu et al. (2022b) Wu, T.; Wang, J.; Pan, X.; Xu, X.; Theobalt, C.; Liu, Z.; and Lin, D. 2022b. Voxurf: Voxel-based Efficient and Accurate Neural Surface Reconstruction. arXiv:2208.12697.

- Yariv et al. (2020) Yariv, L.; Kasten, Y.; Moran, D.; Galun, M.; Atzmon, M.; Ronen, B.; and Lipman, Y. 2020. Multiview Neural Surface Reconstruction by Disentangling Geometry and Appearance. In Larochelle, H.; Ranzato, M.; Hadsell, R.; Balcan, M.; and Lin, H., eds., NeurIPS, volume 33, 2492–2502. Curran Associates, Inc.

- Yu et al. (2022) Yu, A.; Fridovich-Keil, S.; Tancik, M.; Chen, Q.; Recht, B.; and Kanazawa, A. 2022. Plenoxels: Radiance Fields Without Neural Networks. In CVPR.

- Yu et al. (2021a) Yu, A.; Li, R.; Tancik, M.; Li, H.; Ng, R.; and Kanazawa, A. 2021a. PlenOctrees for Real-time Rendering of Neural Radiance Fields. In ICCV.

- Yu et al. (2021b) Yu, A.; Ye, V.; Tancik, M.; and Kanazawa, A. 2021b. PixelNeRF: Neural Radiance Fields From One or Few Images. In CVPR, 4578–4587.

- Zhang et al. (2021) Zhang, J. Y.; Yang, G.; Tulsiani, S.; and Ramanan, D. 2021. NeRS: Neural Reflectance Surfaces for Sparse-view 3D Reconstruction in the Wild. In NeurIPS.

- Zhang et al. (2022) Zhang, K.; Luan, F.; Li, Z.; and Snavely, N. 2022. IRON: Inverse Rendering by Optimizing Neural SDFs and Materials from Photometric Images. In CVPR.

- Zhang et al. (2020) Zhang, K.; Riegler, G.; Snavely, N.; and Koltun, V. 2020. NeRF++: Analyzing and Improving Neural Radiance Fields. arXiv preprint arXiv:2010.07492.

- Zhao et al. (2022) Zhao, F.; Yang, W.; Zhang, J.; Lin, P.; Zhang, Y.; Yu, J.; and Xu, L. 2022. HumanNeRF: Efficiently Generated Human Radiance Field from Sparse Inputs. In CVPR.

Appendix

Appendix A More Experimental Details

Search for the Best Chamfer Distance

To calculate the Chamfer Distance (CD) metric on the DTU dataset, we first transform the density volume into a point-cloud using the marching cubes algorithm. The algorithm searches for iso-surfaces from the volume, where a hyper-parameter about the density level needs to be manually set. The hyper-parameter usually significantly affects the resulting CD value. Therefore, we vary this hyper-parameter and search for the best CD. Specifically, we adopt the golden section search algorithm, which effectively finds the local minimum, or probably the global minimum, of the CD in our case. The searching process is ended when the distance between the last two searched CD values is no greater than .

As in Fig. 8, we showcase the searched results of each scene reconstructed by JaxNeRF (Mildenhall et al. 2020; Deng, Barron, and Srinivasan 2020), which are based on density volume. The searched results first decrease and then increase. The ones of other methods, i.e., DVGO (Sun, Sun, and Chen 2022a), Plenoxels (Yu et al. 2022), and TensoRF (Chen et al. 2022a), have the similar trend. It is noteworthy that such a searching process is time-consuming, which costs tens of minutes or more, whereas the calculation of our Inverse Mean Residual Color (IMRC) metric is more efficient as reported in the next subsection.

Computational Cost of the IMRC

As in Table 7, we report the average time cost of calculating the IMRC metric on the DTU dataset’s 15 scenes with density volume resolution and SH degree . All the experiments are run on a single NVIDIA A100 GPU. The average computation costs of the methods are different since the sparsity of the density volume varies with different methods. More sparse the density volume is, less time the computation process costs. Compared with the CD metric, the calculation of IMRC is much more efficient as there is no searching process.

| Method | JaxNeRF | Plenoxels | DVGO | TensoRF |

|---|---|---|---|---|

| Time cost (seconds) | 17.80 | 16.98 | 10.66 | 16.50 |

Appendix B More Experimental Results

Further Validation of IMRC by Depth Supervision and Comparison with Other Geometry Metrics

Depth Supervison

To provide empirical evidence that the IMRC metric aligns with ground-truth density fields, a comparison between NeRF models trained with and without ground-truth depth supervision is conducted. It is expected that the models trained with depth supervision have better IMRC results. Specifically, we conduct an experiment on the NeRF synthetic dataset. Since only the 200 test images of each scene have the depth ground-truth, we further evenly split them into 2 subsets for training and testing. The results of DVGO trained with and without depth supervision are reported in Tab. 8. With depth supervision, the scenes should have better geometry. Accordingly, Tab. 8 shows that the IMRC (the higher the better) of all scenes increases, which means that it aligns with ground-truth density fields.

| With depth supervision | Without depth supervision | |||||||

|---|---|---|---|---|---|---|---|---|

| PSNR | IMRC | MSE | MAE° | PSNR | IMRC | MSE | MAE° | |

| Chair | 34.88 | 21.77 | 0.0188 | 55.47 | 34.89 | 21.62 | 0.0196 | 55.72 |

| Drums | 28.42 | 14.86 | 0.0786 | 64.58 | 28.40 | 13.82 | 0.1153 | 67.08 |

| Ficus | 37.01 | 19.34 | 0.1139 | 75.05 | 37.00 | 18.96 | 0.1501 | 75.38 |

| Hotdog | 39.25 | 23.34 | 0.0125 | 47.40 | 39.16 | 22.15 | 0.0259 | 48.54 |

| Lego | 37.92 | 21.00 | 0.0253 | 60.17 | 37.95 | 19.83 | 0.0785 | 61.16 |

| Materials | 35.12 | 17.40 | 0.0334 | 67.03 | 35.11 | 17.37 | 0.0394 | 68.45 |

| Mic | 35.35 | 18.38 | 0.0507 | 61.26 | 29.12 | 10.34 | 0.3760 | 69.72 |

| Ship | 31.52 | 19.85 | 0.0531 | 64.18 | 31.21 | 19.59 | 0.0611 | 64.79 |

| Average | 34.93 | 19.49 | 0.0483 | 61.89 | 34.11 | 17.96 | 0.1082 | 63.86 |

Comparison with Other Geometry Metrics

To bolster the metric’s credibility, we also demonstrate its correlation with commonly used geometry metrics, including the mean squared error (MSE) of depth images and the mean angular error (MAE) of normals, in Tab. 8. It shows that the variation tendency of IMRC is consistent with MSE and MAE. Moreover, IMRC does not need any geometric ground-truth which is hard to obtain especially for real-world data. Besides the synthetic data, for real-world data, the comparisons with the CD metric on the DTU dataset (please refer to Tab. 1 in the paper) also verify the metric’s credibility.

Scene-independent Nature of IMRC

A desirable metric should be independent of the scenes to be evaluated. For instance, it is better that the best values of a metric for different scenes are the same. Given the ground-truth density fields of different scenes, their IMRC can be different. However, IMRC may not vary significantly. Due to the absence of ground-truth density fields, we resort to the “practical” upper-bound of each scene, which is defined as the best IMRC of a scene obtained by all currently available methods. In this way, the biases of different methods are eliminated as far as possible. In Tab. 9, we present the average, standard deviation (SD), and coefficient of variation (CV), defined as SD / Average, of IMRC’s practical upper-bounds across scenes. For comparison, we also present the corresponding results of PSNR. We can see that, on all the 3 datasets, all the SDs of IMRC are lower than those of PSNR. And only on the NeRF synthetic dataset, IMRC has a higher CV than PSNR, since IMRC has an obviously lower average value. This experiment demonstrates that the variation of IMRC’s practical upper-bounds across different scenes is acceptable compared with the common metric PSNR. In other words, scene-dependent nature has a limited influence on IMRC.

| Practical upper-bound of IMRC | Practical upper-bound of PSNR | |||||

|---|---|---|---|---|---|---|

| Average | SD | CV | Average | SD | CV | |

| DTU | 19.07 | 1.92 | 10.07% | 32.50 | 3.35 | 10.31% |

| NeRF Synthetic | 18.59 | 2.65 | 14.25% | 32.14 | 3.51 | 10.92% |

| LLFF | 22.63 | 3.43 | 15.16% | 26.98 | 4.46 | 16.53% |

| Mean | 20.10 | 2.67 | 13.16% | 30.54 | 3.77 | 12.59% |

Analysis on Reflective Objects and Typical Geometry Artifacts

Reflective Object

The IMRC metric is based on the low-frequency color prior. We use SH of degree to approximate the view-dependent colors from different observation directions. The degree , rather than , ensures that we can deal with non-Lambertian surfaces. The higher the SH degree, the better the non-Lambertian surfaces be approximated. With degree , the highly reflective surface may have a high residual color. However, we are not intended to get a perfect approximation, which is not necessary. The IMRC makes sense if it can correctly rank the geometry of the same scene produced by different methods. We demonstrate this by a reflective object as shown in Fig. 9. The surface of the scissors is highly reflective, which leads to relatively high residual colors for a visually good geometry produced by JaxNeRF. Notice that because degree is applied to all methods, it is a fair treatment that all method has high residual colors on such a surface. More importantly, if one method produces worse geometry than that of JaxNeRF, its residual color will be much higher. This makes IMRC successfully distinguish and rank scene geometry even for a reflective case.

Thick Surface

The thick surface is a typical geometry artifact that would generate inaccurate disparity maps viewed from different locations. Because CD is only based on an iso-surface at a typical density level, it may not be aware about the thickness of a surface. In contrast, IMRC can well recognize such an artifact, because points on the thick surface always have higher residual colors than those that lie nearer to the true surface. We showcase two cases that the IMRC metric penalties thick surfaces in Fig. 10. A sharper surface is also presented for comparison.

Floating Surface

The floating surface is a common artifact in NeRF models. The floaters violate the low-frequency color prior, and so will have high residual colors. We showcase two cases that the IMRC metric penalties floating surfaces in Fig. 11. The better geometry without floating surfaces is also presented for comparison.

Remaining IMRC/UserRank and CD/UserRank Conflict Results

We showcase one remaining IMRC/UserRank conflict case in Fig. 12. On the whole, JaxNeRF produces a thicker surface than Plenoxels as its residual color is always higher surrounding the contour. The Plenoxels produces some floating surfaces and perform worse on certain local details. Overall, the density fields of both methods are not good. After applying the marching cubes algorithm, the floating surface of JaxNeRF’s density field near the right hand of the doll is missing. In contrast, some low density parts of Plenoxels’ density field are discarded, resulting in a poor mesh. Comprehensively considering the depth, residual color, and mesh, the users rank JaxNeRF as better, although the calculation results show that the Plenoxels has a lower residual color, or higher IMRC.

We showcase remaining CD/UserRank conflict cases in Fig. 13 to Fig. 15. We have analysed the two main reasons that cause conflicts in Sec. 5.1. of the main paper. On one hand, because of the marching cubes algorithm, the CD metric fails to recognize some thick surfaces, and some low density surface points are discarded. On the other hand, the object mask used in the calculation neglects some floating meshes and leads to an unfair comparison.

More CD/IMRC/UserRank Consistent Results

On the DTU dataset, except for the IMRC/UserRank and CD/UserRank conflict cases, the remaining pairs all have consistent CD/IMRC/UserRank. We illustrate more consistent cases in Fig. 16 to Fig. 20. We find that both CD and IMRC successfully reflect the quality of the density field in these cases.