Estimation and inference for the Wasserstein distance between mixing measures in topic models

Abstract

The Wasserstein distance between mixing measures has come to occupy a central place in the statistical analysis of mixture models. This work proposes a new canonical interpretation of this distance and provides tools to perform inference on the Wasserstein distance between mixing measures in topic models. We consider the general setting of an identifiable mixture model consisting of mixtures of distributions from a set equipped with an arbitrary metric , and show that the Wasserstein distance between mixing measures is uniquely characterized as the most discriminative convex extension of the metric to the set of mixtures of elements of . The Wasserstein distance between mixing measures has been widely used in the study of such models, but without axiomatic justification. Our results establish this metric to be a canonical choice. Specializing our results to topic models, we consider estimation and inference of this distance. Although upper bounds for its estimation have been recently established elsewhere, we prove the first minimax lower bounds for the estimation of the Wasserstein distance between mixing measures, in topic models, when both the mixing weights and the mixture components need to be estimated. Our second main contribution is the provision of fully data-driven inferential tools for estimators of the Wasserstein distance between potentially sparse mixing measures of high-dimensional discrete probability distributions on points, in the topic model context. These results allow us to obtain the first asymptotically valid, ready to use, confidence intervals for the Wasserstein distance in (sparse) topic models with potentially growing ambient dimension .

keywords:

1 Introduction

This work is devoted to estimation and inference for the Wasserstein distance between the mixing measures associated with mixture distributions, in topic models.

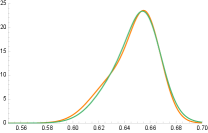

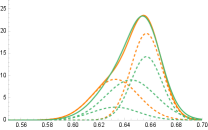

Our motivation stems from the general observation that standard distances (Hellinger, Total Variation, Wasserstein) between mixture distributions do not capture the possibility that similar distributions may arise from mixing completely different mixture components, and have therefore different mixing measures. Figure 1 illustrates this possibility.

In applications of mixture models in sciences and data analysis, discovering differences between mixture components is crucial for model interpretability. As a result, the statistical literature on finite mixture models largely focuses on the underlying mixing measure as the main object of interest, and compares mixtures by measuring distances between their corresponding mixing measures. This perspective has been adopted in the analysis of estimators for mixture models [42, 49, 31, 17, 48], in Bayesian mixture modeling [45, 44], and in the design of model selection procedures for finite mixtures [47]. The Wasserstein distance is a popular choice for this comparison [49, 31], as it is ideally suited for comparing discrete measures supported on different points of the common space on which they are defined.

Our first main contribution is to give an axiomatic justification for this distance choice, for any identifiable mixture models.

Our main statistical contribution consists in providing fully data driven inferential tools for the Wasserstein distance between mixing measures, in topic models. Optimal estimation of mixing measures in particular mixture models is an area of active interest (see, e.g., [42, 49, 31, 17, 48, 35, 69]).

In parallel, estimation and inference of distances between discrete distributions has begun to receive increasing attention [30, 65].

This work complements both strands of literature and we provide solutions to the following open problems:

(i) Minimax lower bounds for the estimation of the Wasserstein distance between mixing measures in topic models, when both the mixture weights and the mixture components are estimated from the data;

(ii) Fully data driven inferential tools for the Wasserstein distance between topic-model based, potentially sparse, mixing measures.

Our solution to (i) complements recently derived upper bounds on this distance [8], thereby confirming that near-minimax optimal estimation of the distance is possible, while the inference problem (ii), for topic models, has not been studied elsewhere, to the best of our knowledge. We give below specific necessary background and discuss our contributions, as well as the organization of the paper.

2 Our contributions

2.1 The Sketched Wasserstein Distance (SWD) between mixture distributions equals the Wasserstein distance between their mixing measures

Given a set of probability distributions on a Polish space , consider the set of finite mixtures of elements of . As long as mixtures consisting of elements of are identifiable, we may associate any with a unique mixing measure , which is a probability measure on .

We assume that is equipped with a metric . This may be one of the classic distances on probability measures, such as the Total Variation (TV) or Hellinger distance, or it may be a more exotic distance, for instance, one computed via a machine learning procedure or tailored to a particular data analysis task. As mentioned above, given mixing measures and , corresponding to mixture distributions and , respectively, it has become common in the literature to compare them by computing their Wasserstein distance with respect to the distance (see, e.g., [49, 31]). It is intuitively clear that computing a distance between the mixing measures will indicate, in broad terms, the similarity or dissimilarity between the mixture distributions; however, it is not clear whether using the Wasserstein distance between the mixing measures best serves this goal, nor whether such a comparison has any connection to a bona fide distance between the mixture distributions themselves.

As our first main contribution, we show that, indeed, the Wassersetin distance between mixing measures is a fundamental quantity to consider and study, but we arrive at it from a different perspective. In Section 3 we introduce a new distance, the Sketched Wasserstein Distance () on , between mixture distributions which is defined and uniquely characterized as the largest—that is, most discriminative—jointly convex metric on for which the inclusion is an isometry. Despite the abstractness of this definition, we show, in Theorem 1 below that

This fact justifies our name Sketched Wasserstein Distance for the new metric—it is a distance on mixture distributions that reduces to a Wasserstein distance between mixing measures, which may be viewed as “sketches” of the mixtures. We use the results of Section 3 as further motivation for developing inferential tools for the Wasserstein distance between mixing measures, in the specific setting provided by topic models. We present this below.

2.2 Background on topic models

To state our results, we begin by introducing the topic model, as well as notation that will be used throughout the paper. We also give in this section relevant existing estimation results for the model. They serve as partial motivation for the results of this paper, summarized in the following sub-sections.

Topic models are widely used modeling tools for linking discrete, typically high dimensional, probability vectors. Their motivation and name stems from text analysis, where they were first introduced [13], but their applications go beyond that, for instance to biology [15, 18]. Adopting a familiar jargon, topic models are used to model a corpus of documents, each assumed to have been generated from a maximum of topics. Each document is modeled as a set of words drawn from a discrete distribution supported on the set of words , where is the dictionary size. One observes independent, -dimensional word counts for and assumes that

| (1) |

For future reference, we let denote the associated word frequency vector.

The topic model assumption is that the matrix , with columns , the expected word frequencies of each document, can be factorized as , where the matrix has columns in the probability simplex , corresponding to the conditional probabilities of word occurrences, given a topic. The matrix collects probability vectors with entries corresponding to the probability with which each topic is covered by document , for each . The only assumption made in this factorization is that is common to the corpus, and therefore document independent: otherwise the matrix factorization above always holds, by a standard application of Bayes’s theorem. Writing out the matrix factorization one column at a time

| (2) |

one recognizes that each (word) probability vector is a discrete mixture of the (conditional) probability vectors , with potentially sparse mixture weights , as not all topics are expected to be covered by a single document . Each probability vector can be written uniquely as in (2) if uniqueness of the topic model factorization holds. The latter has been the subject of extensive study and is closely connected to the uniqueness of non-negative matrix factorization. Perhaps the most popular identifiability assumption for topic models, owing in part to its interpretability and constructive nature, is the so called anchor word assumption [25, 4], coupled with the assumption that the matrix has rank . We state these assumptions formally in Section 4.1. For the remainder of the paper, we assume that we can write uniquely as in (2), and refer to as the true mixture weights, and to , , as the mixture components. Then the collection of mixture components defined in the previous section becomes and the mixing measure giving rise to is

which we view as a probability measure on the metric space obtained by equipping with a metric .

Computationally tractable estimators of the mixture components collected in have been proposed by [4, 3, 61, 54, 9, 10, 67, 38], with finite sample performance, including minimax adaptive rates relative to and obtained by [61, 9, 10]. In Section 4.1 below we give further details on these results.

In contrast, minimax optimal, sparsity-adaptive estimation of the potentially sparse mixture weights has only been studied very recently in [8]. Given an estimator with for all , this work advocates the usage of an MLE-type estimator ,

| (3) |

If were treated as known, and replaced by , the estimator in (3) reduces to , the MLE of under the multinomial model (1).

Despite being of MLE-type, the analysis of , for , is non-standard, and [8] conducted it under the general regime that we will also adopt in this work. We state it below, to facilitate contrast with the classical set-up.

| Classical Regime | General Regime | |

|---|---|---|

| (a) is known | (a’) is estimated from samples of size each | |

| (b) is independent of | (b’) can grow with (and ). | |

| (c) , for all | (c’) can be sparse. | |

| (d) , for all | (d’) can be sparse. |

The General Regime stated above is natural in the topic model context. The fact that must be estimated from the data is embedded in the definition of the topic model. Also, although one can work with a fixed dictionary size, and fixed number of topics, one can expect both to grow as more documents are added to the corpus. As already mentioned, each document in a corpus will typically cover only a few topics, so is typically sparse. When is also sparse, as it is generally the case [10], then can also be sparse. To see this, take and write . If document covers topic 1 (), but not topic 2 (), and the probability of using word 1, given that topic 1 is covered, is zero (), then .

Under the General Regime above, [8, Theorem 10] shows that, whenever is sparse, the estimator is also sparse, with high probability, and derive the sparsity adaptive finite-sample rates for summarized in Section 4.1 below. Furthermore, [8] suggests estimating by , with

| (4) |

for given estimators , and of their population-level counterparts, and . Upper bounds for these distance estimates are derived [8], and re-stated in a slightly more general form in Theorem 2 of Section 4.2. The work of [8], however, does not study the optimality of their upper bound, and motivates our next contribution.

2.3 Lower bounds for the estimation of the Wasserstein distance between mixing measures in topic models

In Theorem 3 of Section 4.3, we establish a minimax optimal bound for estimating , for any pair , from a collection of independent multinomial vectors whose collective cell probabilities satisfy the topic model assumption. Concretely, for any on that is bi-Lipschitz equivalent to the TV distance (c.f. Eq. 26), we have

where , and denotes the norm of a vector, counting the number of its non-zero elements, For simplicity of presentation we assume that , although this is not needed for either theory or practice. The two terms in the above lower bound quantify, respectively, the smallest error of estimating the distance when the mixture components are known, and the smallest error when the mixture weights are known.

We also obtain a slightly sharper lower bound for the estimation of the mixing measures and themselves in the Wasserstein distance:

As above, these two terms reflect the inherent difficulty of estimating the mixture weights and mixture components, respectively. As we discuss in Section 4.3, a version of this lower bound with additional logarithmic factors follows directly from the lower bound presented above for the estimation of . For completeness, we give the simple argument leading to this improved bound in Appendix D of [7].

The upper bounds obtained in [8] match the above lower bounds, up to possibly sub-optimal logarithmic terms. Together, these results show that the plug-in approach to estimating the Wasserstein distance is nearly optimal, and complement a rich literature on the performance of plug-in estimators for non-smooth functional estimation tasks [41, 16]. Our lower bounds complement those recently obtained for the minimax-optimal estimation of the TV distance for discrete measures [36], but apply to a wider class of distance measures and differ by an additional logarithmic factor; whether this logarithmic factor is necessary is an interesting open question. The results we present are the first nearly tight lower bounds for estimating the class of distances we consider, and our proofs require developing new techniques beyond those used for the total variation case. More details can be found in Section 4.3. The results of this section are valid for any . In particular, the ambient dimension , and the number of mixture components are allowed to grow with the sample sizes .

2.4 Inference for the Wasserstein distance between sparse mixing measures in topic models

To the best of our knowledge, despite an active interest in inference for the Wasserstein distance in general [46, 22, 23, 19, 20, 21] and the Wasserstein distance between discrete measures, in particular [57, 59], inference for the Wasserstein between sparse discrete mixing measures, in general, and in particular in topic models, has not been studied.

Our main statistical contribution is the provision of inferential tools for , for any pair , in topic models. To this end:

-

(1)

In Section 5.2, Theorem 5, we derive a distributional limit for an appropriate estimator of the distance.

-

(2)

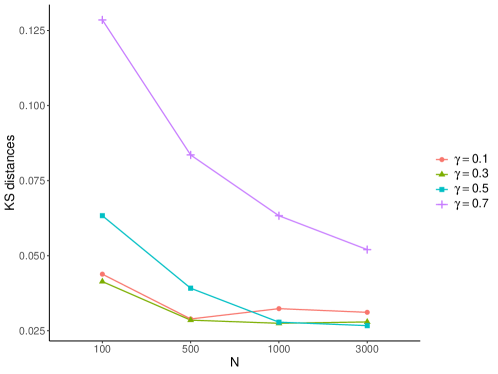

In Section 5.3, Theorem 6, we estimate consistently the parameters of the limit, thereby providing theoretically justified, fully data driven, estimates for inference. We also contrast our suggested approach with a number of possible bootstrap schemes, in our simulation study of Section F.4 in [7], revealing the benefits of our method.

Our approach to (1) is given in Section 5. In Proposition 1 we show that, as soon as that error in estimating can be appropriately controlled in probability, for instance by employing the estimator given in Section 4.1, the desired limit of a distance estimator hinges on inference for the potentially sparse mixture weights . Perhaps surprisingly, this problem has not been studied elsewhere, under the General Regime introduced in Section 2.2 above. Its detailed treatment is of interest in its own right, and constitutes one of our main contributions to the general problem of inference for the Wasserstein distance between mixing measures in topic models. Since our asymptotic distribution results for the distance estimates rest on determining the distributional limit of -dimensional mixture weight estimators, the asymptotic analysis will be conducted for fixed. However, we do allow the ambient dimension to depend on the sample sizes and .

2.4.1 Asymptotically normal estimators for sparse mixture weights in topic models, under general conditions

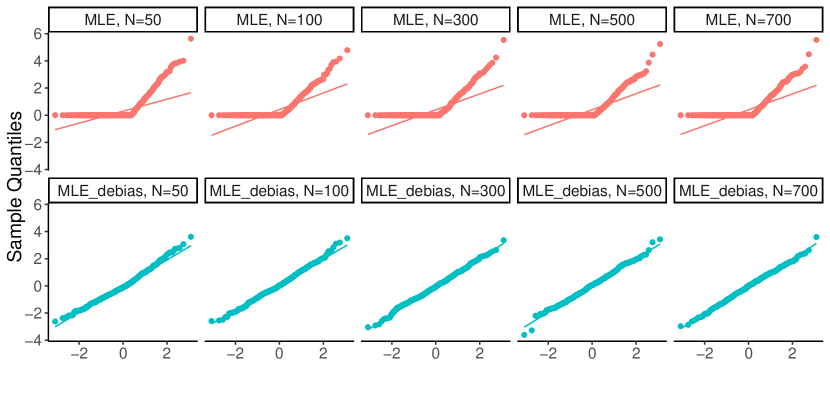

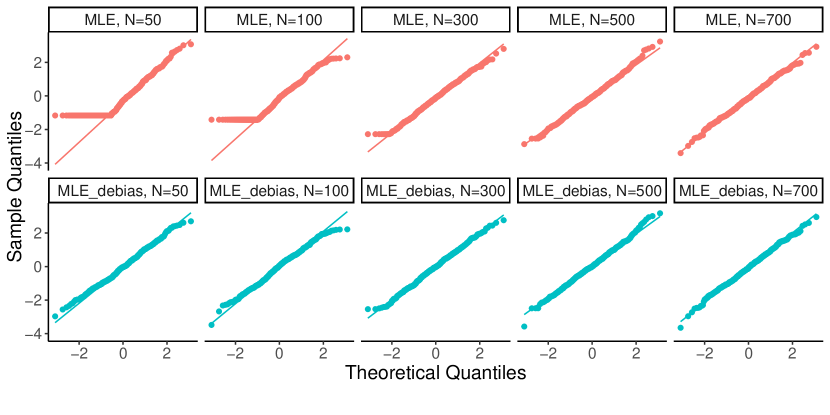

We construct estimators of the sparse mixture weights that admit a Gaussian limit under the General Regime, and are asymptotically efficient under the Classical Regime. The challenges associated with this problem under the General Regime inform our estimation strategy, and can be best seen in contrast to the existing solutions offered in the Classical Regime. In what follows we concentrate on inference of for some arbitrarily picked .

In the Classical Regime, the estimation and inference for the MLE of , in a low dimensional parametrization of a multinomial probability vector , when is a known function and lies in an open set of , with , have been studied for over eighty years. Early results go back to [52, 53, 11], and are reproduced in updated forms in standard text books, for instance Section 16.2 in [1]. Appealing to these results for , with known, the asymptotic distribution of the MLE of under the Classical Regime is given by

| (5) |

where the asymptotic covariance matrix is

| (6) |

a matrix of rank . By standard MLE theory, under the Classical Regime, any sub-vector of is asymptotically efficient, for any interior point of .

However, even if conditions (a) and (b) of the Classical Regime hold, but conditions (c) and (d) are violated, in that the model parameters are on the boundary of their respective simplices, the standard MLE theory, which is devoted to the analysis of estimators of interior points, no longer applies. In general, an estimator , restricted to lie in , cannot be expected to have a Gaussian limit around a boundary point (see, e.g., [2] for a review of inference for boundary points).

Since inference for the sparse mixture weights is only an intermediate step towards inference on the Wasserstein distance between mixing distributions, we aim at estimators of the weights that have the simplest possible limiting distribution, Gaussian in this case. Our solution is given in Theorem 4 of Section 5.1. We show, in the General Regime, that an appropriate one-step update , that removes the asymptotic bias of the (consistent) MLE-type estimator given in (3) above, is indeed asymptotically normal:

| (7) |

where the asymptotic covariance matrix is

| (8) |

with } and being the square root of its generalized inverse. We note although the dimension of is fixed, its entries are allowed to grow with and , under the General Regime.

We benchmark the behavior of our proposed estimator against that of the MLE, in the Classical Regime. We observe that, under the Classical Regime, we have , and reduces to , the limiting covariance matrix of the MLE, given by (6). This shows that, in this regime, our proposed estimator enjoys the same asymptotic efficiency properties as the MLE.

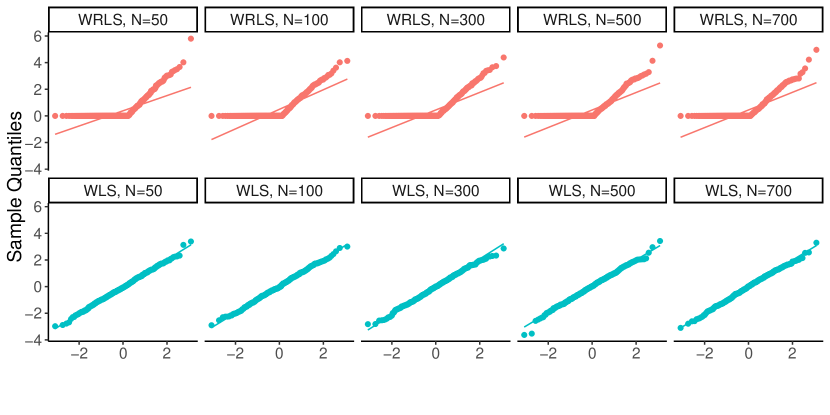

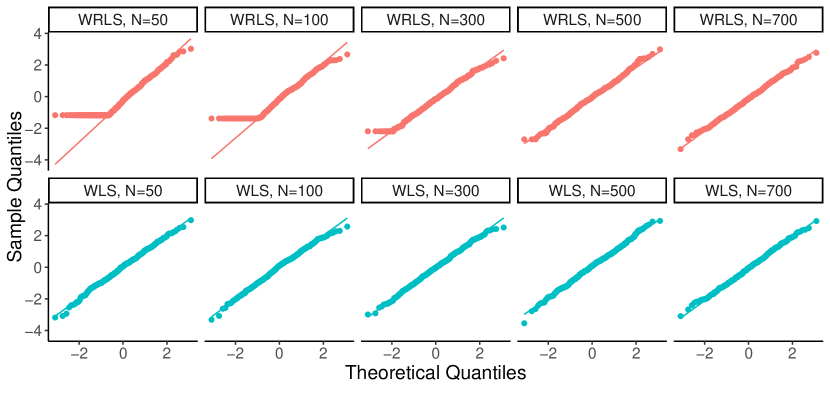

In Remark 5 of Section 5.1 we discuss other natural estimation possibilities. It turns out that a weighted least squares estimator of the mixture weights is also asymptotically normal, however it is not asymptotically efficient in the Classical Regime (cf. Theorem 15 in Appendix H, where we show that the covariance matrix of the limiting distribution of the weighted least squares does not equal ). As a consequence, the simulation results in Section F.3 show that the resulting distance confidence intervals are slightly wider than those corresponding to our proposed estimator. For completeness, we do however include a full theoretical treatment of this natural estimator in Appendix H.

2.4.2 The limiting distribution of the distance estimates and consistent quantile estimation

Having constructed estimators of the mixture weights admitting Gaussian limits, we obtain as a consequence the limiting distribution of our proposed estimator for . The limiting distribution is non-Gaussian and involves an auxiliary optimization problem based on the Kantorovich–Rubinstein dual formulation of the Wasserstein distance: namely, under suitable conditions we have

where is a Gaussian vector and is a certain polytope in . The covariance of and the polytope depend on the parameters of the problem.

To use this theorem for practical inference, we provide a consistent method of estimating the limit on the right-hand side, and thereby obtain the first theoretically justified, fully data-driven confidence intervals for . We refer to Theorem 6 and Section 5.3.2 for details.

Furthermore, a detailed simulation study presented in Appendix F of [7], shows that, numerically, (i) our method compares favorably with the -out-of- bootstrap [26], especially in small samples, while also avoiding the delicate choice of ; (ii) our method has comparable performance to the much more computationally expensive derivative-based bootstrap [28]. This investigation, combined with our theoretical guarantees, provides strong support in favor of the potential of our proposal for inference problems involving the Wasserstein distance between potentially sparse mixing measures in large topic models.

3 A new distance for mixture distributions

In this section, we begin by focusing on a given subset of probability measures on , and consider mixtures relative to this subset. The following assumption guarantees that, for a mixture , the mixing weights are identifiable.

Assumption 1.

The set is affinely independent.

As discussed in Section 2.2 above, in the context of topic models, it is necessary to simultaneously identify the mixing weights and the mixture components ; for this reason, the conditions for identifiability of topic models (3 in Section 4.1) is necessarily stronger than 1. However, the considerations in this section apply more generally to finite mixtures of distributions on an arbitrary Polish space. In the following sections, we will apply the general theory developed here to the particular case of topic models, where the set is unknown and has to be estimated along with the mixing weights.

We assume that is equipped with a metric . Our goal is to define a metric based on and adapted to on the space , the set of mixtures arising from the mixing components . That is to say, the metric should retain geometric features of the original metric , while also measuring distances between elements of relative to their unique representation as convex combinations of elements of . Specifically, to define a new distance between elements of , we propose that it satisfies two desiderata:

-

R1:

The function should be a jointly convex function of its two arguments.

-

R2:

The function should agree with the original distance on the mixture components i.e., for .

We do not specifically impose the requirement that be a metric, since many useful measure of distance between probability distributions, such as the Kullback–Leibler divergence, do not possess this property; nevertheless, in Corollary 1, we show that our construction does indeed give rise to a metric on .

R1 is motivated by both mathematical and practical considerations. Mathematically speaking, this property is enjoyed by a wide class of well behaved metrics, such as those arising from norms on vector spaces, and, in the specific context of probability distributions, holds for any -divergence. Metric spaces with jointly convex metrics are known as Busemann spaces, and possess many useful analytical and geometrical properties [37, 58]. Practically speaking, convexity is crucial for both computational and statistical purposes, as it can imply, for instance, that optimization problems involving are computationally tractable and that minimum-distance estimators using exist almost surely. R2 says that the distance should accurately reproduce the original distance when restricted to the original mixture components, and therefore that should capture the geometric structure induced by .

To identify a unique function satisfying R1 and R2, note that the set of such functions is closed under taking pointwise suprema. Indeed, the supremum of convex functions is convex [see, e.g., 32, Proposition IV.2.1.2], and given any set of functions satisfying R2, their supremum clearly satisfies R2 as well. For , we therefore define by

| (9) |

This function is the largest—most discriminative—quantity satisfying both R1 and R2.

Under 1, we can uniquely associate a mixture with the mixing measure , which is a probability measure on the metric space . Our first main result shows that agrees precisely with the Wasserstein distance on this space.

Theorem 1.

Under Assumption 1, any all ,

where denotes the Wasserstein distance between the mixing measures corresponding to , respectively.

Proof.

The proof can be found in Section B.1.1. ∎

Theorem 1 reveals a surprising characterization of the Wasserstein distances for mixture models. On a convex set of measures, the Wasserstein distance is uniquely specified as the largest jointly convex function taking prescribed values at the extreme points. This characterization gives an axiomatic justification of the Wasserstein distance for statistical applications involving mixtures.

The study of the relationship between the Wasserstein distance on the mixing measures and the classical probability distances on the mixtures themselves was inaugurated by [49].

Clearly, if the Wasserstein distance between the mixing measures is zero, then the mixture distributions agree as well, but the converse is not generally true.

This line of research has therefore sought conditions under which the Wasserstein distance on the mixing measures is comparable with a classical probability distance on the mixtures.

In the context of mixtures of continuous densities on , [49] showed that a strong identifiability condition guarantees that the total variation distance between the mixtures is bounded below by the squared Wasserstein distance between the mixing measures, as long as the number of atoms in the mixing measures is bounded.

Subsequent refinements of this result (e.g., [33, 34]) obtained related comparison inequalities under weaker identifiability conditions.

This work adopts a different approach, viewing the Wasserstein distance between mixing measures as a bona fide metric, the Sketched Wasserstein Distance, on the mixture distributions themselves.

Our Theorem 1 can also be viewed as an extension of a similar result in the nonlinear Banach space literature: Weaver’s theorem [66, Theorem 3.3 and Lemma 3.5] identifies the norm defining the Arens–Eells space, a Banach space into which the Wasserstein space isometrically embeds, as the largest seminorm on the space of finitely supported signed measures which reproduces the Wasserstein distance on pairs of Dirac measures.

Corollary 1.

Under 1, is a complete metric space.

The proof appears in Appendix B.1.2.

4 Near-Optimal estimation of the Wasserstein distance between mixing measures in topic models

Finite sample upper bounds for estimators of , when is either the total variation distance or the Wasserstein distance on discrete probability distributions supported on points have been recently established in [8]. In this section we investigate the optimality of these bounds, by deriving minimax lower bounds for estimators of this distance, a problem not previously studied in the literature.

For clarity of exposition, we begin with a review on estimation in topic models in Section 4.1, state existing upper bounds on estimation in Section 4.2, then prove the lower bound in Section 4.3.

4.1 Review of topic model identifiability conditions and of related estimation results

In this section, we begin by reviewing (i) model identifiability and the minimax optimal estimation of the mixing components , and (ii) estimation and finite sample error bounds for the mixture weights for . Necessary notation, used in the remainder of the paper, is also introduced here.

4.1.1 Identifiability and estimation of

Model identifiability in topic models is a sub-problem of the larger class devoted to the study of the existence and uniqueness of non-negative matrix factorization. The latter has been studied extensively in the past two decades. One popular set of identifiability conditions involve the following assumption on :

Assumption 3.

For each , there exists at least one such that and for all .

It is informally referred to as the anchor word assumption, as it postulates that every topic has at least one word solely associated with it. 3 in conjunction with ensures that both and are identifiable [25], therefore guaranteeing the full model identifiability. In particular, 3 implies 1. 3 is sufficient, but not necessary, for model identifiability, see, e.g., [29]. Nevertheless, all known provably fast algorithms make constructive usage of it. Furthermore, 3 improves the interpretability of the matrix factorization, and the extensive empirical study in [24] shows that it is supported by a wide range of data sets where topic models can be employed.

We therefore adopt this condition in our work. Since the paper [13], estimation of has been studied extensively from the Bayesian perspective (see, [12], for a comprehensive review of this class of techniques). A recent list of papers [4, 3, 6, 61, 9, 10, 38, 67] have proposed computationally fast algorithms of estimating , from a frequentist point of view, under 3. Furthermore, minimax optimal estimation of under various metrics has also been well understood [61, 9, 10, 38, 67]. In particular, under 3, by letting denote the number of words that satisfy 3, the work of [9] has established the following minimax lower bound

| (11) |

for all estimators , over the parameter space

| (12) |

with , being some absolute constant. Above for all is assumed for convenience of notation, and will be adopted in this paper as well, while noting that all the related theoretical results continue to hold in general.

The estimator of proposed in [9] is shown to be minimax-rate adaptive, and computationally efficient. Under the conditions collected in [8, Appendix K.1]), this estimator satisfies

| (13) |

and is therefore minimax optimal up to a logarithmic factor of . This factor comes from a union bound over the whole corpus and all words. Under the same set of conditions, we also have the following normalized sup-norm control (see, [8, Theorem K.1])

| (14) |

We will use this estimator of as our running example for the remainder of this paper. For the convenience of the reader, Section A of [7] gives its detailed construction. All our theoretical results are still applicable when is replaced by any other minimax-optimal estimator such as [61, 10, 38, 67] coupled with a sup-norm control as in (14).

4.1.2 Mixture weight estimation

We review existing finite sample error bounds for the MLE-type estimator defined above in (3), for , arbitrary fixed. Recall that . The estimator is valid and analyzed for a generic in [8], and the results pertain, in particular, to all estimators mentioned in Section 4.1. For brevity, we only state below the results for an estimator calculated relative to the estimator in [9].

The conditions under which is analyzed are lengthy, and fully explained in [8]. To improve readability, while keeping this paper self-contained, we opted for stating them in Appendix G, and we introduce here only the minimal amount of notation that will be used first in Section 4.3, and also in the remainder of the paper.

Let be any integer and consider the following parameter space associated with the sparse mixture weights,

| (15) |

For with any , let denote the vector of observed frequencies of , with

Define

| (16) |

These sets are chosen such that (see, for instance, [8, Lemma I.1])

Let and define

| (17) |

Another important quantity appearing in the results of [8] is the restricted condition number of defined, for any integer , as

| (18) |

with Let

| (19) |

For future reference, we have

| (20) |

Under conditions in Appendix G for and , Theorems 9 & 10 in [8] show that

| (21) |

The statement of the above result, included in Appendix G, of [8, Theorems 9 & 10] is valid for any generic estimator of that is minimax adaptive, up to a logarithmic factor of .

We note that the first term (21) reflects the error of estimating the mixture weights, if were known, whereas the second one is the error in estimating .

4.2 Review on finite sample upper bounds for

For any estimators and , plug-in estimators of with and defined in (4) can be analyzed using the fact that

| (22) |

with , whenever the following hold

| (23) |

and

| (24) |

for some deterministic sequences and . The notation is mnemonic of the fact that the estimation of mixture weights depends on the estimation of .

Inequality (22) follows by several appropriate applications of the triangle inequality and, for completeness, we give the details in Section B.2.1. We also note that (22) holds for based on any estimator .

When and are the MLE-type estimators given by (3), [8] used this strategy to bound the left hand side in (22) for any estimator , and then for a minimax-optimal estimator of , when is either the total variation distance or the Wasserstein distance on .

We reproduce the result [8, Corollary 13] below, but we state it in the slightly more general framework in which the distance on only needs to satisfy

| (25) |

for some . This holds, for instance, if is an norm with on (with ), or the Wasserstein distance on (with ). Recall that and are defined in (12) and (15). Further recall from (19) and .

Theorem 2 (Corollary 13, [8]).

Let and be obtained from (3) by using the estimator in [9]. Grant conditions in Appendix G for and . Then for any satisfying (25) with , we have

In the next section we investigate the optimality of this upper bound by establishing a matching minimax lower bound on estimating .

4.3 Minimax lower bounds for the Wasserstein distance between topic model mixing measures

Theorem 3 below establishes the first lower bound on estimating , the Wasserstein distance between two mixing measures, under topic models. Our lower bounds are valid for any distance on satisfying

| (26) |

with some .

Theorem 3.

Grant topic model assumptions and assume and for some universal constants . Then, for any satisfying (26) with some , we have

Here the infimum is taken over all distance estimators.

Proof.

The proof can be found in Section B.2.2. ∎

The lower bound in Theorem 3 consists of two terms that quantify, respectively, the estimation error of estimating the distance when the mixture components, , are known, and that when the mixture weights, , are known. Combined with Theorem 2, this lower bound establishes that the simple plug-in procedure can lead to near-minimax optimal estimators of the Wasserstein distance between mixing measures in topic models, up to the condition numbers of , the factor and multiplicative logarithmic factors of .

Remark 2 (Optimal estimation of the mixing measure in Wasserstein distance).

The proof of Theorem 2 actually shows that the same upper bound holds for ; that is, that the rate in Theorem 2 is also achievable for the problem of estimating the mixing measure in Wasserstein distance. Our lower bound in Theorem 3 indicates that the plug-in estimator is also nearly minimax optimal for this task. Indeed, if we let and be arbitrary estimators of and , respectively, then

Therefore, the lower bound in Theorem 3 also implies a lower bound for the problem of estimating the measures and in Wasserstein distance. In fact, a slightly stronger lower bound, without logarithmic factors, holds for the latter problem. We give a simple argument establishing this improved lower bound in Appendix D.

Remark 3 (New minimax lower bounds for estimating a general metric on discrete probability measures).

The proof of Theorem 3 relies on a new result, stated in Theorem 10 of Appendix C, which is of interest on its own. It establishes minimax lower bounds for estimating a distance between two probability measures on a finite set based on i.i.d. samples from and . Our results are valid for any distance satisfying

| (27) |

with some , including the Wasserstein distance as a particular case.

Prior to our work, no such bound even for estimating the Wasserstein distance was known except in the special case where the metric space associated with the Wasserstein distance is a tree [5, 65]. Proving lower bounds on the rate of estimation of the Wasserstein distance for more general metrics is a long standing problem, and the first nearly-tight bounds in the continuous case were given only recently [50, 43].

More generally, the lower bound in Theorem 10 adds to the growing literature on minimax rates of functional estimation for discrete probability measures (see, e.g., [36, 68]). The rate we prove is smaller by a factor of than the optimal rate of estimation of the total variation distance [36]; on the other hand, our lower bounds apply to any metric which is bi-Lipschitz equivalent to the total variation distance. We therefore do not know whether this additional factor of is spurious or whether there in fact exist metrics in this class for which the estimation problem is strictly easier. At a technical level, our proof follows a strategy of [50] based on reduction to estimation in total variation, for which we prove a minimax lower bound based on the method of “fuzzy hypotheses” [62]. The novelty of our bounds involves the fact that we must design priors which exhibit a large multiplicative gap in the functional of interest, whereas prior techniques [36, 68] are only able to control the magnitude of the additive gap between the values of the functionals. Though additive control is enough to prove a lower bound for estimating total variation distance, this additional multiplicative control is necessary if we are to prove a bound for any metric satisfying (27).

5 Inference for the Wasserstein distance between mixing measures in topic models, under the General Regime

We follow the program outlined in the Introduction and begin by giving our general strategy towards obtaining a asymptotic limit for a distance estimate. Throughout this section is considered fixed, and does not grow with or . However, the dictionary size is allowed to depend on both and . As in the Introduction, we let purely for notational convenience. We let and be (pseudo) estimators of and , that we will motivate below, and give formally in (41). Using the Kantorovich-Rubinstein dual formulation of (see, Eq. 61), we propose distance estimates of the type

| (28) |

for

| (29) |

constructed, for concreteness, relative to the estimator described in Section 4.1. Proposition 1, stated below, and proved in Section B.3.1, gives our general approach to inference for based on .

Proposition 1.

Assume access to:

-

(i)

Estimators that satisfy

(30) for , with some positive semi-definite covariance matrix .

-

(ii)

Estimators of such that defined in (24) satisfies

(31)

Then, we have the following convergence in distribution as ,

| (32) |

where

| (33) |

with

| (34) |

Proposition 1 shows that in order to obtain the desired asymptotic limit (32) it is sufficient to control, separately, the estimation of the mixture components, in probability, and that of the mixture weight estimators, in distribution. Since estimation of is based on all documents, each of size , all the limits in Proposition 1 are taken over both and to ensure that the error in estimating becomes negligible in the distributional limit. For our running example of , constructed in Section A of [7],

for , when is either the Total Variation distance, or the Wasserstein distance, as shown in [8].

The proof of Proposition 1 shows that, when (31) holds, as soon as (30) is established, the desired (32) follows by an application of the functional -method, recognizing (see, Proposition 2 in Section B.3.1) that the function defined as is Hadamard-directionally differentiable at with derivative defined as

| (35) |

The last step of the proof has also been advocated in prior work [56, 26, 28], and closest to ours is [57]. This work estimates the Wasserstein distance between two discrete probability vectors of fixed dimension by the Wasserstein distance between their observed frequencies. In [57], the equivalent of (30) is the basic central limit theorem for the empirical estimates of the cell probabilities of a multinomial distribution. In topic models, and in the Classical Regime, that in particular makes, in the topic model context, the unrealistic assumption that is known, one could take , the MLE of . Then, invoking classical results, for instance, [1, Section 16.2], the convergence in (30) holds, with given above in (6).

In contrast, constructing estimators of the potentially sparse mixture weights for which (30) holds, under the General Regime, in the context of topic models, requires special care, is a novel contribution to the literature, and is treated in the following section. Their analysis, coupled with Proposition 1, will be the basis of Theorem 5 of Section 5.2 in which we establish the asymptotic distribution of our proposed distance estimator in topic models.

5.1 Asymptotically normal estimators of sparse mixture weights in topic models, under the General Regime

We concentrate on one sample, and drop the superscript from all relevant quantities. To avoid any possibility of confusion, in this section we also let denote the true population quantity.

As discussed in Section 2.2 of the Introduction, under the Classical Regime, the MLE estimator of is asymptotically normal and efficient, a fact which cannot be expected to hold under General Regime for an estimator restricted to , especially when is on the boundary of . We exploit the KKT conditions satisfied by , defined in (3), to motivate the need for its correction, leading to a final estimator that will be a “de-biased” version of , in a sense that will be made precise shortly.

Recall that is the maximizer, over , of , with , for the observed frequency vector . Let

| (36) |

We have with probability equal to one. To see this, note that if for some we would have , and then would not be a maximizer.

Observe that the KKT conditions associated with , for dual variables satisfying , for all , and arising from the non-negativity constraints on in the primal problem, imply that

| (37) |

By adding and subtracting terms in (37) (see also the details of the proof of Theorem 9), we see that satisfies the following equality

| (38) |

where

| (39) |

On the basis of (38), standard asymptotic principles dictate that the asymptotic normality of would follow by establishing that converges in probability to a matrix that will contribute to the asymptotic covariance matrix, the term converges to a Gaussian limit, and vanishes, in probability. The latter, however, cannot be expected to happen, in general, and it is this term that creates the first difficulty in the analysis. To see this, writing the right hand side in (37) as

we obtain from (37) that

| (40) |

We note that, under the Classical Regime, , which implies that, with probability tending to one, thus and . Consequently, Eq. 40 yields , which is expected to vanish asymptotically. This is indeed in line with classical analyses, in which the asymptotic bias term, , is of order .

However, in the General Regime, we do not expect this to happen as we allow . Since we show in Lemma 3 of Section B.3.4 that, with high probability, , we therefore have . Thus, one cannot ensure that vanishes asymptotically, because the last term in (40) is non-zero, and we do not have direct control on the dual variables either. It is worth mentioning that the usage of is needed as the naive estimator of is not consistent in general when , whereas is; see, Lemma 3 of Section B.3.4.

The next natural step is to construct a new estimator, by removing the bias of . We let be a matrix that will be defined shortly, and denote by its generalized inverse. Define

| (41) |

and observe that it satisfies

| (42) |

a decomposition that no longer contains the possibly non-vanishing bias term in (38). The (lengthy) proof of Theorem 9 stated in [7] shows that, indeed, after appropriate scaling, the first term in the right hand side of (42) vanishes asymptotically, and the second one has a Gaussian limit, as soon as is appropriately chosen.

As mentioned above, the choice of is crucial for obtaining the desired asymptotic limit, and is given by

| (43) |

This choice is motivated by using defined in (8) as a benchmark for the asymptotic covariance matrix of the limit, as we would like that the new estimator not only be valid in the General Regime, but also not lose the asymptotic efficiency of the MLE, in the Classical Regime. Indeed, the proof of Theorem 9 shows that the asymptotic covariance matrix of is nothing but . Notably, from the decomposition in (42), it would be tempting to use , given by (39), with replaced by , instead of . However, although this matrix has the desired asymptotic behavior, we find in our simulations that its finite sample performance relative to is sub-par.

Our final estimator is therefore given by (41), with given by (43). We show below that this estimator is asymptotically normal. The construction of in (41) has the flavor of a Newton–Raphson one-step correction of (see, [40]), relative to the estimating equation . However, classical general results on the asymptotic distribution of one-step corrected estimators such as Theorem 5.45 of [63] cannot be employed directly, chiefly because, when translated to our context, they are derived for deterministic . In our problem, after determining the appropriate de-biasing quantity and the appropriate , the main remaining difficulty in proving asymptotic normality is in controlling the (scaled) terms in the right hand side of (42). A key challenge is the delicate interplay between and , which are dependent, as they are both estimated via the same sample. This difficulty is further elevated in the General Regime, when not only , but also the entries of and (thereby the quantities , , and , defined in Section 4.1.2), can grow with and . In this case, one needs careful control of their interplay.

We state and prove results pertaining to this general situation in Section B.3.4: Theorem 8 is our most general result, proved for any estimator whose estimation errors, defined by the left hand sides of (13) and (14), can be well controlled, in a sense made precise in the statement of Theorem 8. As an immediate consequence, Theorem 9 in Section B.3.4 establishes the asymptotic normality of under the specific control given by the right hand sides of (13) and (14).

We recall that, for instance, these upper bounds are valid for the estimator given in Section A of [7].

We state below a version of our results, corresponding to an estimator of that satisfies (13) and (14), by making the following simplifying assumptions: the condition numbers , the signal strength , and are bounded. The latter means that we assume that the number of positive entries in that fall below is bounded. We also assume, in this simplified version of our results, that the magnitude of the entries of does not grow with either or . We stress that we do not make any of these assumptions in Section B.3.4, and use them here for clarity of exposition only.

Recall that is defined in (8). Let be the Moore-Penrose inverse of and let be its matrix square root.

Theorem 4.

Then

| (44) |

The proof of Theorem 4 is obtained as a direct consequence of the general Theorem 9, stated and proved in Section B.3.4. Theorem 4 holds under a mild requirement on , the document length, and on a requirement on , the number of documents, that is expected to hold in topic model contexts, when is typically (much) larger than the dictionary size .

Remark 4.

In line with classical theory, joint asymptotic normality of mixture weights estimators, as in Theorem 4, can only be established when does not grow with either or .

In problems in which is expected to grow with the ambient sample sizes, although joint asymptotic results such as Eq. 78 become ill-posed, one can still study the marginal distributions of , over any subset of constant dimension. In particular, a straightforward modification of our analysis yields the limiting distribution of each component of : for any ,

The above result can be used to construct confidence intervals for any entry of , including those with zero values.

Remark 5 (Alternative estimation of the mixture weights).

Another natural estimator of the mixture weights is the weighted least squares estimators, defined as

| (45) |

with The use of the pre-conditioner in the definition of is reminiscent of the definition of the normalized Laplacian in graph theory: it moderates the size of the -th entry of each mixture estimate, across the mixtures, for each .

The estimator can also be viewed as an (asymptotically) de-biased version of a restricted least squares estimator, obtained as in (45), but minimizing only over (see Remark 11 in Appendix H). It therefore has the same flavor as our proposed estimator . In Theorem 14 of Appendix H we further prove that under suitable conditions, as ,

for some matrix that does not equal the covariance matrix given by (8).

The asymptotic normality of together with Proposition 1 shows that inference for the distance between mixture weights can also be conducted relative to a distance estimate (28) based on . Note however that the potential sub-optimality of the limiting covariance matrix of this mixture weight estimator also affects the length of the confidence intervals for the Wasserstein distance, see our simulation results in Section F.3. We therefore recommend the usage of the asymptotically debiased, MLE-type estimators analyzed in this section, with being a second best.

5.2 The limiting distribution of the proposed distance estimator

As a consequence of Proposition 1 and Theorem 9, we derive the limiting distribution of our proposed estimator of the distance in Theorem 5 below.

Let be defined as (28) by using and the de-biased estimators in (41). Let

| (46) |

where we assume that

| (47) |

exists with defined in (8). Here we rely on the fact that is independent of and to define the limit, but allow the model parameters , and to depend on both and .

Theorem 5.

The proof of Theorem 5 immediately follows from Proposition 1 and Theorem 9 together with and (13). The requirement of Proposition 1 in this context reduces to , which holds when: (i) is fixed, and grows, a situation encountered when the dictionary size is not affected by the growing size of the corpus; (ii) is independent of but grows with, and slower than, , as we expect that not many words are added to an initially fixed dictionary as more documents are added to the corpus; (iii) , which reflects the fact that the dictionary size can depend on the length of the document, but again should grow slower than the number of documents.

Our results in Theorem 5 can be readily generalized to the cases where is different from . We refer to Appendix E for the precise statement in this case.

5.3 Fully data driven inference for the Wasserstein distance between mixing measures

To utilize Theorem 5 in practice for inference on , for instance for constructing confidence intervals or conducting hypothesis testing, we provide below consistent quantile estimation for the limiting distribution in Theorem 5.

Other possible data-driven inference strategies are bootstrap-based. The table below summarizes the pros and cons of these procedures, in contrast with our proposal (in green). The symbol means that the procedure is not available in a certain scenario, while means that it is, and \colorgreen means that the procedure has best performance, in our experiments.

| Procedures | Make use of the form of the limiting distribution | Any | Only |

| (1) Classical bootstrap | No | ||

| (2) -out-of- bootstrap | No | ||

| (3) Derivative-based bootstrap | Yes | ||

| (4) Plug-in estimation | Yes | \colorgreen | \colorgreen |

| Performance for constructing confidence intervals ( means better) | (4) ¿ (2) | (3) (4) ¿ (2) | |

5.3.1 Bootstrap

The bootstrap [27] is a powerful tool for estimating a distribution. However, since the Hadamard-directional derivative of w.r.t. () is non-linear (see Eq. 35), [26] shows that the classical bootstrap is not consistent. The same paper also shows that this can be corrected by a version of -out-of- bootstrap, for and . Unfortunately, the optimal choice of is not known, which hampers its practical implementation, as suggested by our simulation result in Section F.7. Moreover, our simulation result in Section F.4 also shows that the -out-of- bootstrap seems to have inferior finite sample performance comparing to other procedures described below.

An alternative to the -out-of- bootstrap is to use a derivative-based bootstrap [28] for estimating the limiting distribution in Theorem 5, by plugging the bootstrap samples into the Hadamard-directional derivative (HDD) on the right hand side of (48). As proved in [28] and pointed out by [57], this procedure is consistent at the null in which case (see (34) and (33)) hence the HDD is a known function. However, this procedure is not directly applicable when since the HDD depends on which needs to be consistently estimated. Nevertheless, we provide below a consistent estimator of which can be readily used in conjunction with the derivative-based bootstrap. For the reader’s convenience, we provide details of both the -out-of- bootstrap and the derivative-based bootstrap in Section F.6.

5.3.2 A plug-in estimator for the limiting distribution of the distance estimate

In view of Theorem 5, we propose to replace the population level quantities that appear in the limit by their consistent estimates. Then, we estimate the cumulative distribution function of the limit via Monte Carlo simulations.

To be concrete, for a specified integer , let be i.i.d. samples from where, for ,

| (49) |

with and . We then propose to estimate the limiting distribution on the right hand side of (48) by the empirical distribution of

| (50) |

where, for some tuning parameter ,

| (51) |

Let be the empirical cumulative density function (c.d.f.) of (50) and write for the c.d.f. of the limiting distribution on the right hand side of (48). The following theorem states that converges to in probability for all . Its proof is deferred to Section B.3.10.

Theorem 6.

Remark 6 (Confidence intervals).

Theorem 6 in conjunction with [63, Lemma 21.2] ensures that any quantile of the limiting distribution on the right hand side of (48) can also be consistently estimated by its empirical counterpart of (50), which, therefore by Slutsky’s theorem, can be used to provide confidence intervals of . This is summarized in the following corollary. Let be the quantile of (50) for any .

Corollary 2.

Grant conditions in Theorem 6. For any , we have

Remark 7 (Hypothesis testing at the null ).

In applications where we are interested in inference for , the above plug-in procedure can be simplified. There is no need for the tuning parameter , since one only needs to compute the empirical distribution of

Remark 8 (The tuning parameter ).

The rate of in Theorem 6 is stated for the MLE-type estimators of and as well as the estimator of satisfying (13).

Recall and from (23) and (24). For a generic estimator and , Lemma 14 of Section B.3.10 requires the choice of to satisfy and as .

To ensure consistent estimation of the limiting c.d.f., we need to first estimate the set given by (33) consistently, for instance, in the Hausdorff distance. Although consistency of the plug-in estimator given by (29) of can be done with relative ease, proving the consistency of the plug-in estimator of the facet requires extra care. We introduce in (51) mainly for technical reasons related to this consistency proof. Our simulations reveal that simply setting yields good performance overall.

Supplement to “Estimation and inference for the Wasserstein distance between mixing measures in topic models” \sdescriptionThe supplement [7] contains all the proofs, additional theoretical results, all numerical results and review of the error bound of the MLE-type estimators of the mixture weights.

References

- [1] {bbook}[author] \bauthor\bsnmAgresti, \bfnmAlan\binitsA. (\byear2013). \btitleCategorical Data Analysis, \beditionthird ed. \bseriesWiley Series in Probability and Statistics. \bpublisherWiley-Interscience [John Wiley & Sons], Hoboken, NJ. \bmrnumber3087436 \endbibitem

- [2] {barticle}[author] \bauthor\bsnmAndrews, \bfnmDonald W. K.\binitsD. W. K. (\byear2002). \btitleGeneralized method of moments estimation when a parameter is on a boundary. \bjournalJ. Bus. Econom. Statist. \bvolume20 \bpages530–544. \bnoteTwentieth anniversary GMM issue. \bdoi10.1198/073500102288618667 \bmrnumber1945607 \endbibitem

- [3] {binproceedings}[author] \bauthor\bsnmArora, \bfnmSanjeev\binitsS., \bauthor\bsnmGe, \bfnmRong\binitsR., \bauthor\bsnmHalpern, \bfnmYonatan\binitsY., \bauthor\bsnmMimno, \bfnmDavid M.\binitsD. M., \bauthor\bsnmMoitra, \bfnmAnkur\binitsA., \bauthor\bsnmSontag, \bfnmDavid A.\binitsD. A., \bauthor\bsnmWu, \bfnmYichen\binitsY. and \bauthor\bsnmZhu, \bfnmMichael\binitsM. (\byear2013). \btitleA practical algorithm for topic modeling with provable guarantees. In \bbooktitleProceedings of the 30th International Conference on Machine Learning, ICML 2013, Atlanta, GA, USA, 16-21 June 2013. \bseriesJMLR Workshop and Conference Proceedings \bvolume28 \bpages280–288. \bpublisherJMLR.org. \endbibitem

- [4] {binproceedings}[author] \bauthor\bsnmArora, \bfnmSanjeev\binitsS., \bauthor\bsnmGe, \bfnmRong\binitsR. and \bauthor\bsnmMoitra, \bfnmAnkur\binitsA. (\byear2012). \btitleLearning topic models - Going beyond SVD. In \bbooktitle53rd Annual IEEE Symposium on Foundations of Computer Science, FOCS 2012, New Brunswick, NJ, USA, October 20-23, 2012 \bpages1–10. \bpublisherIEEE Computer Society. \bdoi10.1109/FOCS.2012.49 \endbibitem

- [5] {barticle}[author] \bauthor\bsnmBa, \bfnmKhanh Do\binitsK. D., \bauthor\bsnmNguyen, \bfnmHuy L.\binitsH. L., \bauthor\bsnmNguyen, \bfnmHuy N.\binitsH. N. and \bauthor\bsnmRubinfeld, \bfnmRonitt\binitsR. (\byear2011). \btitleSublinear time algorithms for earth mover’s distance. \bjournalTheory Comput. Syst. \bvolume48 \bpages428–442. \bdoi10.1007/S00224-010-9265-8 \endbibitem

- [6] {binproceedings}[author] \bauthor\bsnmBansal, \bfnmTrapit\binitsT., \bauthor\bsnmBhattacharyya, \bfnmChiranjib\binitsC. and \bauthor\bsnmKannan, \bfnmRavindran\binitsR. (\byear2014). \btitleA provable SVD-based algorithm for learning topics in dominant admixture corpus. In \bbooktitleAdvances in Neural Information Processing Systems 27: Annual Conference on Neural Information Processing Systems 2014, December 8-13 2014, Montreal, Quebec, Canada (\beditor\bfnmZoubin\binitsZ. \bsnmGhahramani, \beditor\bfnmMax\binitsM. \bsnmWelling, \beditor\bfnmCorinna\binitsC. \bsnmCortes, \beditor\bfnmNeil D.\binitsN. D. \bsnmLawrence and \beditor\bfnmKilian Q.\binitsK. Q. \bsnmWeinberger, eds.) \bpages1997–2005. \endbibitem

- [7] {barticle}[author] \bauthor\bsnmBing, \bfnmXin\binitsX., \bauthor\bsnmBunea, \bfnmFlorentina\binitsF. and \bauthor\bsnmNiles-Weed, \bfnmJonathan\binitsJ. (\byear2024). \btitleSupplement to “Estimation and inference for the Wasserstein distance between mixing measures in topic models”. \endbibitem

- [8] {barticle}[author] \bauthor\bsnmBing, \bfnmXin\binitsX., \bauthor\bsnmBunea, \bfnmFlorentina\binitsF., \bauthor\bsnmStrimas-Mackey, \bfnmSeth\binitsS. and \bauthor\bsnmWegkamp, \bfnmMarten\binitsM. (\byear2022). \btitleLikelihood estimation of sparse topic distributions in topic models and its applications to Wasserstein document distance calculations. \bjournalAnn. Statist. \bvolume50 \bpages3307–3333. \bdoi10.1214/22-AOS2229 \endbibitem

- [9] {barticle}[author] \bauthor\bsnmBing, \bfnmXin\binitsX., \bauthor\bsnmBunea, \bfnmFlorentina\binitsF. and \bauthor\bsnmWegkamp, \bfnmMarten\binitsM. (\byear2020). \btitleA fast algorithm with minimax optimal guarantees for topic models with an unknown number of topics. \bjournalBernoulli \bvolume26 \bpages1765–1796. \bdoi10.3150/19-BEJ1166 \bmrnumber4091091 \endbibitem

- [10] {barticle}[author] \bauthor\bsnmBing, \bfnmXin\binitsX., \bauthor\bsnmBunea, \bfnmFlorentina\binitsF. and \bauthor\bsnmWegkamp, \bfnmMarten\binitsM. (\byear2020). \btitleOptimal estimation of sparse topic models. \bjournalJ. Mach. Learn. Res. \bvolume21 \bpagesPaper No. 177, 45. \bmrnumber4209463 \endbibitem

- [11] {barticle}[author] \bauthor\bsnmBirch, \bfnmM. W.\binitsM. W. (\byear1964). \btitleA new proof of the Pearson-Fisher theorem. \bjournalAnn. Math. Statist. \bvolume35 \bpages817–824. \bdoi10.1214/aoms/1177703581 \bmrnumber169324 \endbibitem

- [12] {barticle}[author] \bauthor\bsnmBlei, \bfnmDavid M.\binitsD. M. (\byear2012). \btitleProbabilistic topic models. \bjournalCommun. ACM \bvolume55 \bpages77–84. \bdoi10.1145/2133806.2133826 \endbibitem

- [13] {barticle}[author] \bauthor\bsnmBlei, \bfnmDavid M.\binitsD. M., \bauthor\bsnmNg, \bfnmAndrew Y.\binitsA. Y. and \bauthor\bsnmJordan, \bfnmMichael I.\binitsM. I. (\byear2003). \btitleLatent dirichlet allocation. \bjournalJ. Mach. Learn. Res. \bvolume3 \bpages993–1022. \endbibitem

- [14] {bbook}[author] \bauthor\bsnmBonnans, \bfnmJ. Frédéric\binitsJ. F. and \bauthor\bsnmShapiro, \bfnmAlexander\binitsA. (\byear2000). \btitlePerturbation Analysis of Optimization Problems. \bseriesSpringer Series in Operations Research. \bpublisherSpringer-Verlag, New York. \bdoi10.1007/978-1-4612-1394-9 \bmrnumber1756264 \endbibitem

- [15] {barticle}[author] \bauthor\bsnmBravo González-Blas, \bfnmCarmen\binitsC., \bauthor\bsnmMinnoye, \bfnmLiesbeth\binitsL., \bauthor\bsnmPapasokrati, \bfnmDafni\binitsD., \bauthor\bsnmAibar, \bfnmSara\binitsS., \bauthor\bsnmHulselmans, \bfnmGert\binitsG., \bauthor\bsnmChristiaens, \bfnmValerie\binitsV., \bauthor\bsnmDavie, \bfnmKristofer\binitsK., \bauthor\bsnmWouters, \bfnmJasper\binitsJ. and \bauthor\bsnmAerts, \bfnmStein\binitsS. (\byear2019). \btitlecisTopic: cis-regulatory topic modeling on single-cell ATAC-seq data. \bjournalNat. Methods \bvolume16 \bpages397–400. \endbibitem

- [16] {barticle}[author] \bauthor\bsnmCai, \bfnmT. Tony\binitsT. T. and \bauthor\bsnmLow, \bfnmMark G.\binitsM. G. (\byear2011). \btitleTesting composite hypotheses, Hermite polynomials and optimal estimation of a nonsmooth functional. \bjournalAnn. Statist. \bvolume39 \bpages1012–1041. \bdoi10.1214/10-AOS849 \bmrnumber2816346 \endbibitem

- [17] {barticle}[author] \bauthor\bsnmChen, \bfnmJia Hua\binitsJ. H. (\byear1995). \btitleOptimal rate of convergence for finite mixture models. \bjournalAnn. Statist. \bvolume23 \bpages221–233. \bdoi10.1214/aos/1176324464 \bmrnumber1331665 \endbibitem

- [18] {barticle}[author] \bauthor\bsnmChen, \bfnmSisi\binitsS., \bauthor\bsnmRivaud, \bfnmPaul\binitsP., \bauthor\bsnmPark, \bfnmJong H.\binitsJ. H., \bauthor\bsnmTsou, \bfnmTiffany\binitsT., \bauthor\bsnmCharles, \bfnmEmeric\binitsE., \bauthor\bsnmHaliburton, \bfnmJohn R.\binitsJ. R., \bauthor\bsnmPichiorri, \bfnmFlavia\binitsF. and \bauthor\bsnmThomson, \bfnmMatt\binitsM. (\byear2020). \btitleDissecting heterogeneous cell populations across drug and disease conditions with PopAlign. \bjournalProc. Natl. Acad. Sci. U.S.A. \bvolume117 \bpages28784–28794. \bdoi10.1073/pnas.2005990117 \endbibitem

- [19] {barticle}[author] \bauthor\bparticledel \bsnmBarrio, \bfnmEustasio\binitsE., \bauthor\bsnmGiné, \bfnmEvarist\binitsE. and \bauthor\bsnmMatrán, \bfnmCarlos\binitsC. (\byear1999). \btitleCentral limit theorems for the Wasserstein distance between the empirical and the true distributions. \bjournalAnn. Probab. \bvolume27 \bpages1009–1071. \bdoi10.1214/aop/1022677394 \bmrnumber1698999 \endbibitem

- [20] {barticle}[author] \bauthor\bparticledel \bsnmBarrio, \bfnmEustasio\binitsE., \bauthor\bsnmGonzález Sanz, \bfnmAlberto\binitsA. and \bauthor\bsnmLoubes, \bfnmJean-Michel\binitsJ.-M. (\byear2024). \btitleCentral limit theorems for semi-discrete Wasserstein distances. \bjournalBernoulli \bvolume30 \bpages554–580. \bdoi10.3150/23-bej1608 \bmrnumber4665589 \endbibitem

- [21] {barticle}[author] \bauthor\bparticledel \bsnmBarrio, \bfnmEustasio\binitsE., \bauthor\bsnmGonzález-Sanz, \bfnmAlberto\binitsA. and \bauthor\bsnmLoubes, \bfnmJean-Michel\binitsJ.-M. (\byear2024). \btitleCentral limit theorems for general transportation costs. \bjournalAnn. Inst. Henri Poincaré Probab. Stat. \bvolume60 \bpages847–873. \bdoi10.1214/22-aihp1356 \bmrnumber4757510 \endbibitem

- [22] {barticle}[author] \bauthor\bparticledel \bsnmBarrio, \bfnmEustasio\binitsE., \bauthor\bsnmGordaliza, \bfnmPaula\binitsP. and \bauthor\bsnmLoubes, \bfnmJean-Michel\binitsJ.-M. (\byear2019). \btitleA central limit theorem for transportation cost on the real line with application to fairness assessment in machine learning. \bjournalInf. Inference \bvolume8 \bpages817–849. \bdoi10.1093/imaiai/iaz016 \bmrnumber4045479 \endbibitem

- [23] {barticle}[author] \bauthor\bparticledel \bsnmBarrio, \bfnmEustasio\binitsE. and \bauthor\bsnmLoubes, \bfnmJean-Michel\binitsJ.-M. (\byear2019). \btitleCentral limit theorems for empirical transportation cost in general dimension. \bjournalAnn. Probab. \bvolume47 \bpages926–951. \bdoi10.1214/18-AOP1275 \bmrnumber3916938 \endbibitem

- [24] {binproceedings}[author] \bauthor\bsnmDing, \bfnmWeicong\binitsW., \bauthor\bsnmIshwar, \bfnmPrakash\binitsP. and \bauthor\bsnmSaligrama, \bfnmVenkatesh\binitsV. (\byear2015). \btitleMost large topic models are approximately separable. In \bbooktitle2015 Information Theory and Applications Workshop (ITA) \bpages199-203. \bdoi10.1109/ITA.2015.7308989 \endbibitem

- [25] {binproceedings}[author] \bauthor\bsnmDonoho, \bfnmDavid L.\binitsD. L. and \bauthor\bsnmStodden, \bfnmVictoria\binitsV. (\byear2003). \btitleWhen does non-negative matrix factorization give a correct decomposition into parts? In \bbooktitleAdvances in Neural Information Processing Systems 16 [Neural Information Processing Systems, NIPS 2003, December 8-13, 2003, Vancouver and Whistler, British Columbia, Canada] (\beditor\bfnmSebastian\binitsS. \bsnmThrun, \beditor\bfnmLawrence K.\binitsL. K. \bsnmSaul and \beditor\bfnmBernhard\binitsB. \bsnmSchölkopf, eds.) \bpages1141–1148. \bpublisherMIT Press. \endbibitem

- [26] {barticle}[author] \bauthor\bsnmDümbgen, \bfnmLutz\binitsL. (\byear1993). \btitleOn nondifferentiable functions and the bootstrap. \bjournalProbab. Theory Related Fields \bvolume95 \bpages125–140. \bdoi10.1007/BF01197342 \bmrnumber1207311 \endbibitem

- [27] {barticle}[author] \bauthor\bsnmEfron, \bfnmB.\binitsB. (\byear1979). \btitleBootstrap methods: another look at the jackknife. \bjournalAnn. Statist. \bvolume7 \bpages1–26. \bmrnumber515681 \endbibitem

- [28] {barticle}[author] \bauthor\bsnmFang, \bfnmZheng\binitsZ. and \bauthor\bsnmSantos, \bfnmAndres\binitsA. (\byear2019). \btitleInference on directionally differentiable functions. \bjournalRev. Econ. Stud. \bvolume86 \bpages377–412. \bdoi10.1093/restud/rdy049 \bmrnumber3936869 \endbibitem

- [29] {barticle}[author] \bauthor\bsnmFu, \bfnmXiao\binitsX., \bauthor\bsnmHuang, \bfnmKejun\binitsK., \bauthor\bsnmSidiropoulos, \bfnmNicholas D.\binitsN. D., \bauthor\bsnmShi, \bfnmQingjiang\binitsQ. and \bauthor\bsnmHong, \bfnmMingyi\binitsM. (\byear2019). \btitleAnchor-free correlated topic modeling. \bjournalIEEE Trans. Pattern Anal. Mach. Intell. \bvolume41 \bpages1056-1071. \bdoi10.1109/TPAMI.2018.2827377 \endbibitem

- [30] {barticle}[author] \bauthor\bsnmHan, \bfnmYanjun\binitsY., \bauthor\bsnmJiao, \bfnmJiantao\binitsJ. and \bauthor\bsnmWeissman, \bfnmTsachy\binitsT. (\byear2020). \btitleMinimax estimation of divergences between discrete distributions. \bjournalIEEE J. Sel. Areas Inf. Theory \bvolume1 \bpages814–823. \bdoi10.1109/JSAIT.2020.3041036 \endbibitem

- [31] {barticle}[author] \bauthor\bsnmHeinrich, \bfnmPhilippe\binitsP. and \bauthor\bsnmKahn, \bfnmJonas\binitsJ. (\byear2018). \btitleStrong identifiability and optimal minimax rates for finite mixture estimation. \bjournalAnn. Statist. \bvolume46 \bpages2844–2870. \bdoi10.1214/17-AOS1641 \bmrnumber3851757 \endbibitem

- [32] {bbook}[author] \bauthor\bsnmHiriart-Urruty, \bfnmJean-Baptiste\binitsJ.-B. and \bauthor\bsnmLemaréchal, \bfnmClaude\binitsC. (\byear1993). \btitleConvex analysis and minimization algorithms. I. \bseriesGrundlehren der mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences] \bvolume305. \bpublisherSpringer-Verlag, Berlin \bnoteFundamentals. \bmrnumber1261420 \endbibitem

- [33] {barticle}[author] \bauthor\bsnmHo, \bfnmNhat\binitsN. and \bauthor\bsnmNguyen, \bfnmXuanLong\binitsX. (\byear2016). \btitleConvergence rates of parameter estimation for some weakly identifiable finite mixtures. \bjournalAnn. Statist. \bvolume44 \bpages2726–2755. \bdoi10.1214/16-AOS1444 \bmrnumber3576559 \endbibitem

- [34] {barticle}[author] \bauthor\bsnmHo, \bfnmNhat\binitsN. and \bauthor\bsnmNguyen, \bfnmXuanLong\binitsX. (\byear2016). \btitleOn strong identifiability and convergence rates of parameter estimation in finite mixtures. \bjournalElectron. J. Stat. \bvolume10 \bpages271–307. \bdoi10.1214/16-EJS1105 \bmrnumber3466183 \endbibitem

- [35] {barticle}[author] \bauthor\bsnmHo, \bfnmNhat\binitsN., \bauthor\bsnmNguyen, \bfnmXuanLong\binitsX. and \bauthor\bsnmRitov, \bfnmYa’acov\binitsY. (\byear2020). \btitleRobust estimation of mixing measures in finite mixture models. \bjournalBernoulli \bvolume26 \bpages828–857. \bdoi10.3150/18-BEJ1087 \bmrnumber4058353 \endbibitem

- [36] {barticle}[author] \bauthor\bsnmJiao, \bfnmJiantao\binitsJ., \bauthor\bsnmHan, \bfnmYanjun\binitsY. and \bauthor\bsnmWeissman, \bfnmTsachy\binitsT. (\byear2018). \btitleMinimax estimation of the distance. \bjournalIEEE Trans. Inf. Theory \bvolume64 \bpages6672–6706. \bdoi10.1109/TIT.2018.2846245 \endbibitem

- [37] {bbook}[author] \bauthor\bsnmKirk, \bfnmWilliam\binitsW. and \bauthor\bsnmShahzad, \bfnmNaseer\binitsN. (\byear2014). \btitleFixed Point Theory in Distance Spaces. \bpublisherSpringer, Cham. \bdoi10.1007/978-3-319-10927-5 \bmrnumber3288309 \endbibitem

- [38] {barticle}[author] \bauthor\bsnmKlopp, \bfnmOlga\binitsO., \bauthor\bsnmPanov, \bfnmMaxim\binitsM., \bauthor\bsnmSigalla, \bfnmSuzanne\binitsS. and \bauthor\bsnmTsybakov, \bfnmAlexandre B.\binitsA. B. (\byear2023). \btitleAssigning topics to documents by successive projections. \bjournalAnn. Statist. \bvolume51 \bpages1989–2014. \bdoi10.1214/23-aos2316 \bmrnumber4678793 \endbibitem

- [39] {bbook}[author] \bauthor\bsnmLe Cam, \bfnmLucien\binitsL. (\byear1986). \btitleAsymptotic Methods in Statistical Decision Theory. \bseriesSpringer Series in Statistics. \bpublisherSpringer-Verlag, New York. \bdoi10.1007/978-1-4612-4946-7 \bmrnumber856411 \endbibitem

- [40] {binbook}[author] \bauthor\bsnmLe Cam, \bfnmLucien\binitsL. and \bauthor\bsnmLo Yang, \bfnmGrace\binitsG. (\byear1990). \btitleLocally Asymptotically Normal Families. In \bbooktitleAsymptotics in Statistics: Some Basic Concepts \bpages52–98. \bpublisherSpringer US, \baddressNew York, NY. \endbibitem

- [41] {barticle}[author] \bauthor\bsnmLepski, \bfnmO.\binitsO., \bauthor\bsnmNemirovski, \bfnmA.\binitsA. and \bauthor\bsnmSpokoiny, \bfnmV.\binitsV. (\byear1999). \btitleOn estimation of the norm of a regression function. \bjournalProbab. Theory Related Fields \bvolume113 \bpages221–253. \bdoi10.1007/s004409970006 \bmrnumber1670867 \endbibitem

- [42] {barticle}[author] \bauthor\bsnmLeroux, \bfnmBrian G.\binitsB. G. (\byear1992). \btitleConsistent estimation of a mixing distribution. \bjournalAnn. Statist. \bvolume20 \bpages1350–1360. \bdoi10.1214/aos/1176348772 \bmrnumber1186253 \endbibitem

- [43] {barticle}[author] \bauthor\bsnmLiang, \bfnmTengyuan\binitsT. (\byear2019). \btitleOn the minimax optimality of estimating the Wasserstein metric. \endbibitem

- [44] {barticle}[author] \bauthor\bsnmLo, \bfnmAlbert Y.\binitsA. Y. (\byear1984). \btitleOn a class of Bayesian nonparametric estimates. I. Density estimates. \bjournalAnn. Statist. \bvolume12 \bpages351–357. \bdoi10.1214/aos/1176346412 \bmrnumber733519 \endbibitem

- [45] {barticle}[author] \bauthor\bsnmMaceachern, \bfnmSteven N.\binitsS. N. and \bauthor\bsnmMüller, \bfnmPeter\binitsP. (\byear1998). \btitleEstimating mixture of dirichlet process models. \bjournalJ. Comput. Graph. Statist. \bvolume7 \bpages223–238. \bdoi10.1080/10618600.1998.10474772 \endbibitem

- [46] {barticle}[author] \bauthor\bsnmManole, \bfnmTudor\binitsT., \bauthor\bsnmBalakrishnan, \bfnmSivaraman\binitsS., \bauthor\bsnmNiles-Weed, \bfnmJonathan\binitsJ. and \bauthor\bsnmWasserman, \bfnmLarry\binitsL. (\byear2024). \btitlePlugin estimation of smooth optimal transport maps. \bjournalAnn. Statist. \bpagesTo appear. \endbibitem

- [47] {barticle}[author] \bauthor\bsnmManole, \bfnmTudor\binitsT. and \bauthor\bsnmKhalili, \bfnmAbbas\binitsA. (\byear2021). \btitleEstimating the number of components in finite mixture models via the group-sort-fuse procedure. \bjournalAnn. Statist. \bvolume49 \bpages3043–3069. \bdoi10.1214/21-aos2072 \bmrnumber4352522 \endbibitem

- [48] {bbook}[author] \bauthor\bsnmMcLachlan, \bfnmGeoffrey\binitsG. and \bauthor\bsnmPeel, \bfnmDavid\binitsD. (\byear2000). \btitleFinite mixture models. \bseriesWiley Series in Probability and Statistics: Applied Probability and Statistics. \bpublisherWiley-Interscience, New York. \bdoi10.1002/0471721182 \bmrnumber1789474 \endbibitem

- [49] {barticle}[author] \bauthor\bsnmNguyen, \bfnmXuanLong\binitsX. (\byear2013). \btitleConvergence of latent mixing measures in finite and infinite mixture models. \bjournalAnn. Statist. \bvolume41 \bpages370–400. \bdoi10.1214/12-AOS1065 \bmrnumber3059422 \endbibitem

- [50] {barticle}[author] \bauthor\bsnmNiles-Weed, \bfnmJonathan\binitsJ. and \bauthor\bsnmRigollet, \bfnmPhilippe\binitsP. (\byear2022). \btitleEstimation of Wasserstein distances in the spiked transport model. \bjournalBernoulli \bvolume28 \bpages2663–2688. \bdoi10.3150/21-bej1433 \bmrnumber4474558 \endbibitem

- [51] {barticle}[author] \bauthor\bsnmPearson, \bfnmKarl\binitsK. (\byear1894). \btitleContributions to the mathematical theory of evolution. \bjournalPhilos. Trans. R. Soc. A \bvolume185 \bpages71–110. \endbibitem

- [52] {barticle}[author] \bauthor\bsnmRao, \bfnmC. Radhakrishna\binitsC. R. (\byear1957). \btitleMaximum likelihood estimation for the multinomial distribution. \bjournalSankhyā \bvolume18 \bpages139–148. \bmrnumber105183 \endbibitem

- [53] {barticle}[author] \bauthor\bsnmRao, \bfnmC. Radhakrishna\binitsC. R. (\byear1958). \btitleMaximum likelihood estimation for the multinomial distribution with infinite number of cells. \bjournalSankhyā \bvolume20 \bpages211–218. \bmrnumber107334 \endbibitem

- [54] {binproceedings}[author] \bauthor\bsnmRecht, \bfnmBen\binitsB., \bauthor\bsnmRé, \bfnmChristopher\binitsC., \bauthor\bsnmTropp, \bfnmJoel A.\binitsJ. A. and \bauthor\bsnmBittorf, \bfnmVictor\binitsV. (\byear2012). \btitleFactoring nonnegative matrices with linear programs. In \bbooktitleAdvances in Neural Information Processing Systems 25: 26th Annual Conference on Neural Information Processing Systems 2012. Proceedings of a meeting held December 3-6, 2012, Lake Tahoe, Nevada, United States (\beditor\bfnmPeter L.\binitsP. L. \bsnmBartlett, \beditor\bfnmFernando C. N.\binitsF. C. N. \bsnmPereira, \beditor\bfnmChristopher J. C.\binitsC. J. C. \bsnmBurges, \beditor\bfnmLéon\binitsL. \bsnmBottou and \beditor\bfnmKilian Q.\binitsK. Q. \bsnmWeinberger, eds.) \bpages1223–1231. \endbibitem

- [55] {binbook}[author] \bauthor\bsnmRömisch, \bfnmWerner\binitsW. (\byear2006). \btitleDelta Method, Infinite Dimensional In \bbooktitleEncyclopedia of Statistical Sciences. \bpublisherJohn Wiley & Sons, Ltd. \bdoihttps://doi.org/10.1002/0471667196.ess3139 \endbibitem

- [56] {barticle}[author] \bauthor\bsnmShapiro, \bfnmAlexander\binitsA. (\byear1991). \btitleAsymptotic analysis of stochastic programs. \bjournalAnn. Oper. Res. \bvolume30 \bpages169–186. \bnoteStochastic programming, Part I (Ann Arbor, MI, 1989). \bdoi10.1007/BF02204815 \bmrnumber1118896 \endbibitem

- [57] {barticle}[author] \bauthor\bsnmSommerfeld, \bfnmMax\binitsM. and \bauthor\bsnmMunk, \bfnmAxel\binitsA. (\byear2018). \btitleInference for empirical Wasserstein distances on finite spaces. \bjournalJ. R. Stat. Soc. Ser. B. Stat. Methodol. \bvolume80 \bpages219–238. \bdoi10.1111/rssb.12236 \bmrnumber3744719 \endbibitem

- [58] {barticle}[author] \bauthor\bsnmTakahashi, \bfnmWataru\binitsW. (\byear1970). \btitleA convexity in metric space and nonexpansive mappings. I. \bjournalKodai Math. Sem. Rep. \bvolume22 \bpages142–149. \bmrnumber267565 \endbibitem

- [59] {barticle}[author] \bauthor\bsnmTameling, \bfnmCarla\binitsC., \bauthor\bsnmSommerfeld, \bfnmMax\binitsM. and \bauthor\bsnmMunk, \bfnmAxel\binitsA. (\byear2019). \btitleEmpirical optimal transport on countable metric spaces: distributional limits and statistical applications. \bjournalAnn. Appl. Probab. \bvolume29 \bpages2744–2781. \bdoi10.1214/19-AAP1463 \bmrnumber4019874 \endbibitem